Identification and Posture Evaluation of Effective Tea Buds Based on Improved YOLOv8n

Abstract

1. Introduction

2. Dataset Construction

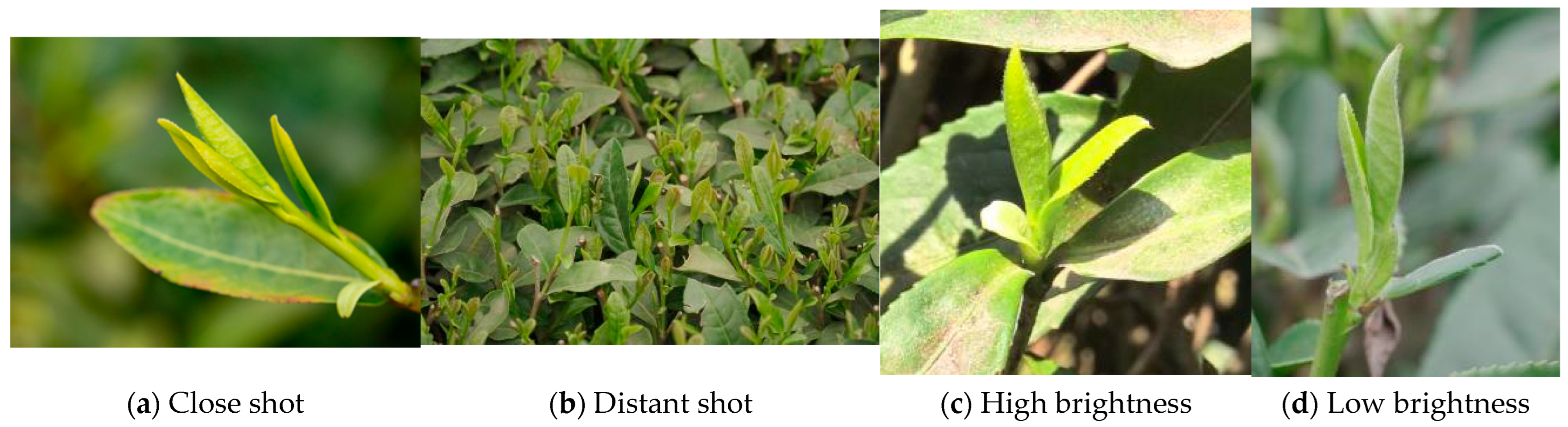

2.1. Data Acquisition

2.2. Dataset Annotation

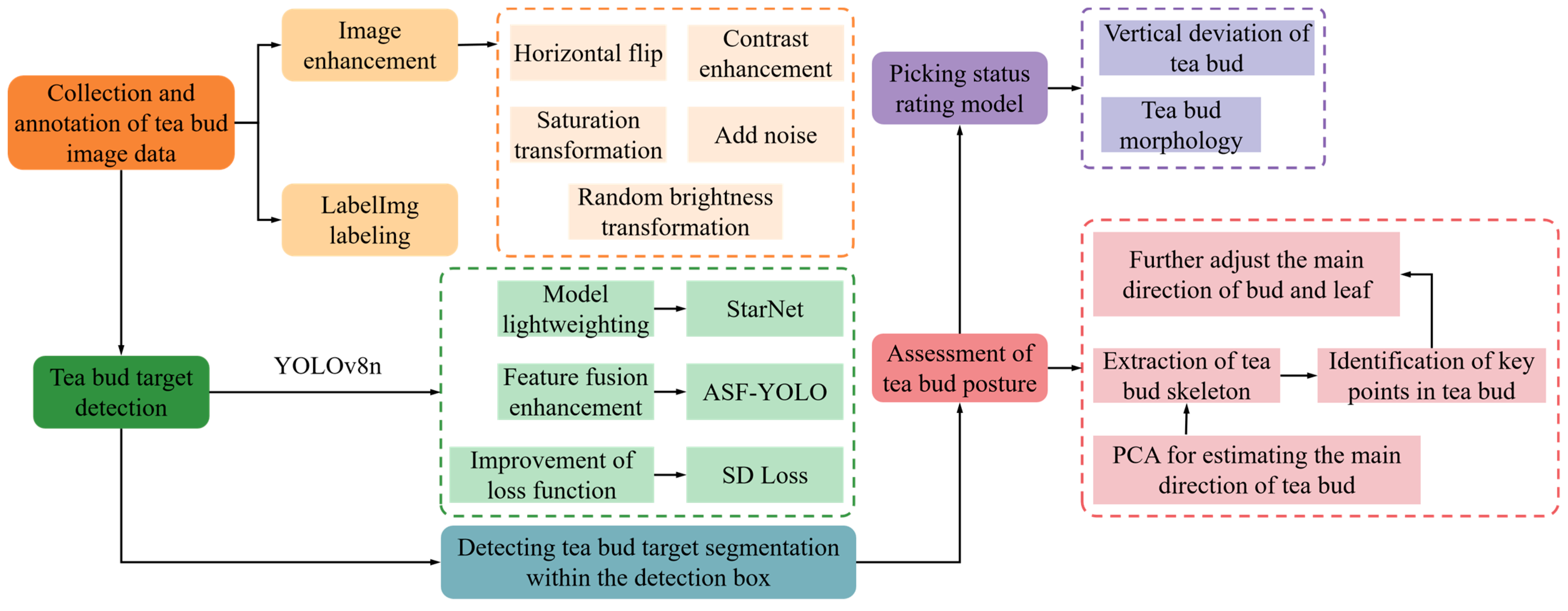

3. Identification and Posture Evaluation of Effective Buds

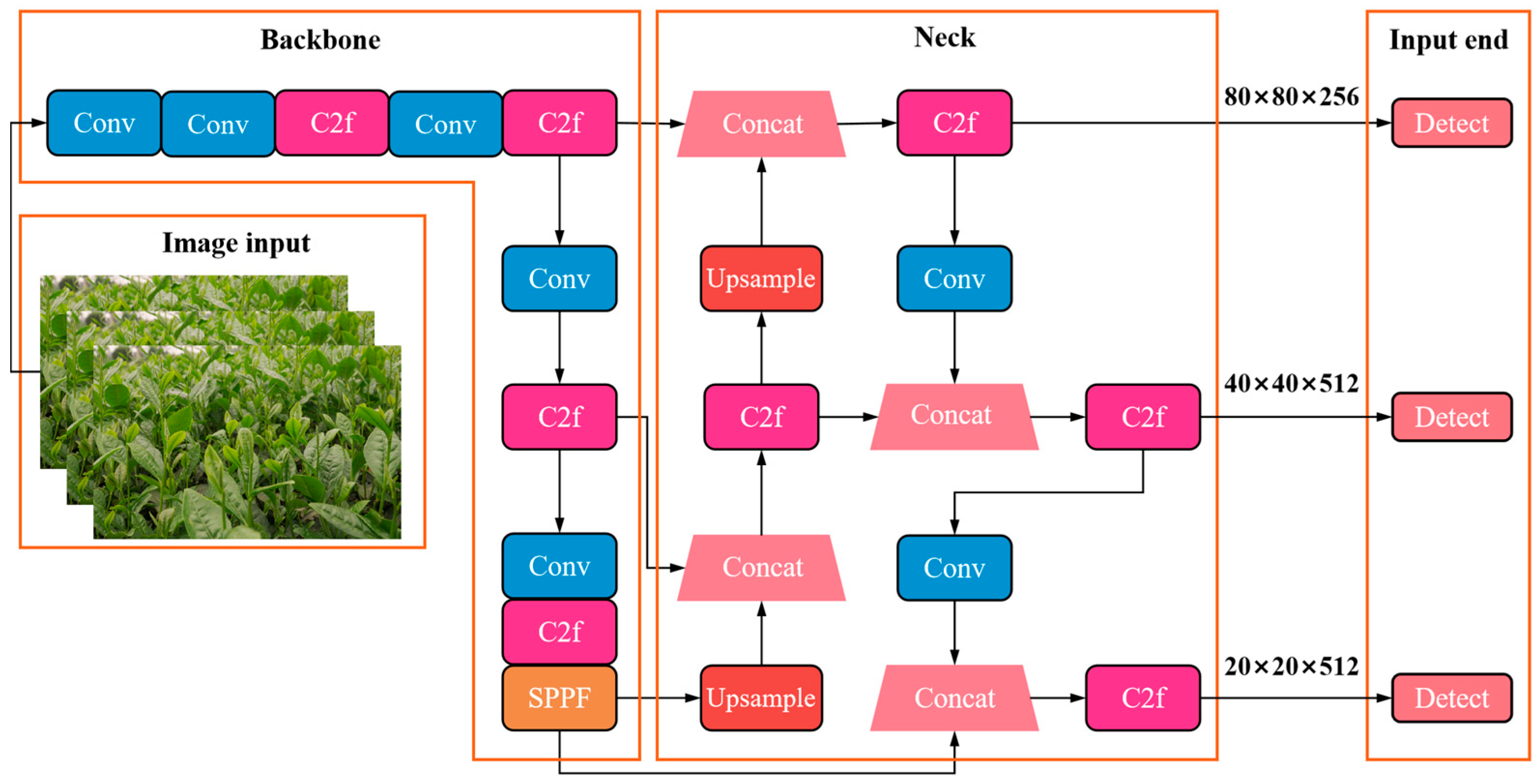

3.1. Identification of Effective Buds Based on Improved YOLOv8n

3.1.1. Model Lightweighting

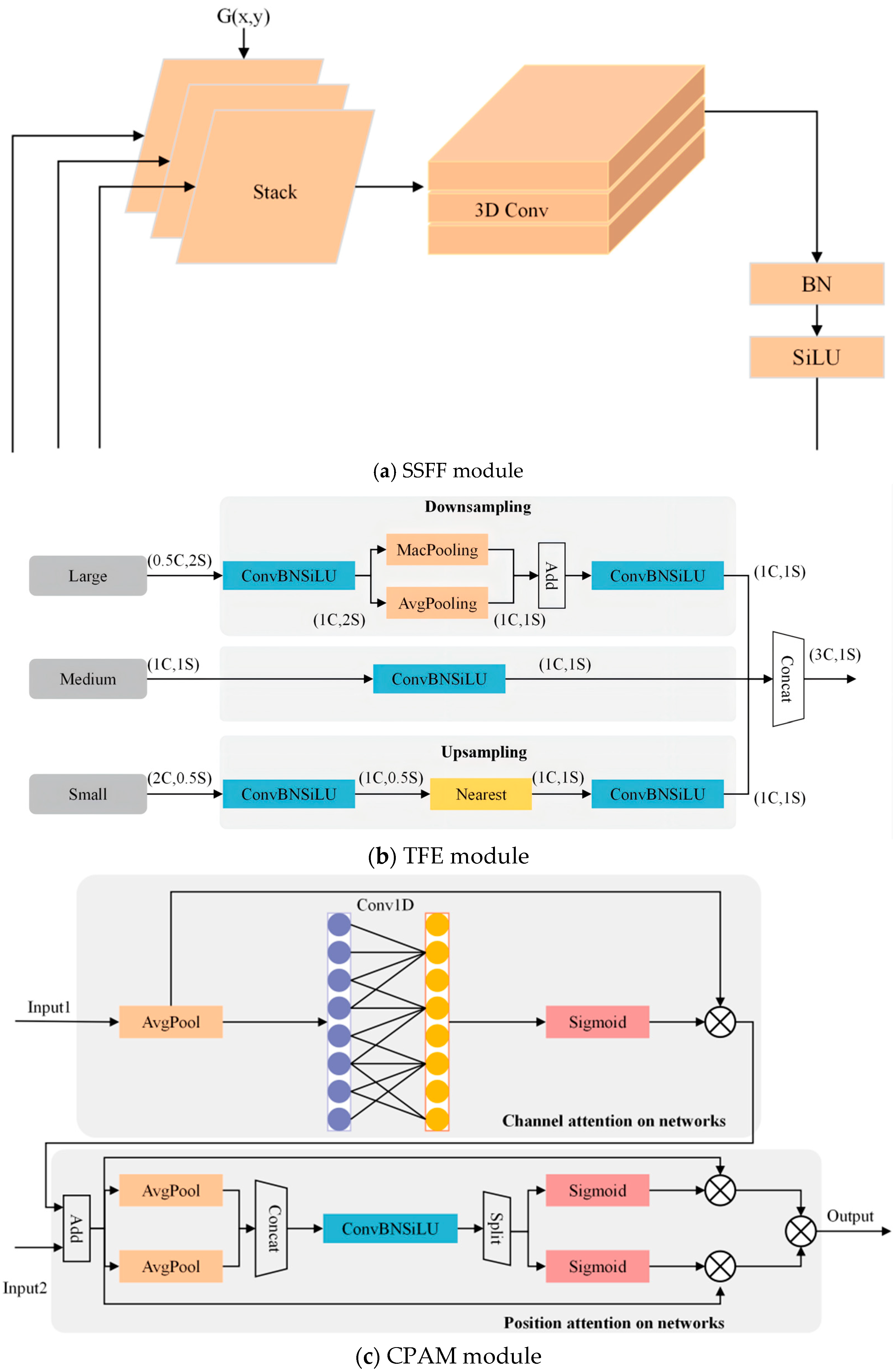

3.1.2. Feature Fusion for Target Detection of Dense Tea Buds

3.1.3. Improvement of the Loss Function

3.2. Evaluation of Tea Bud Position and Posture

3.2.1. Segmentation of Tea Buds

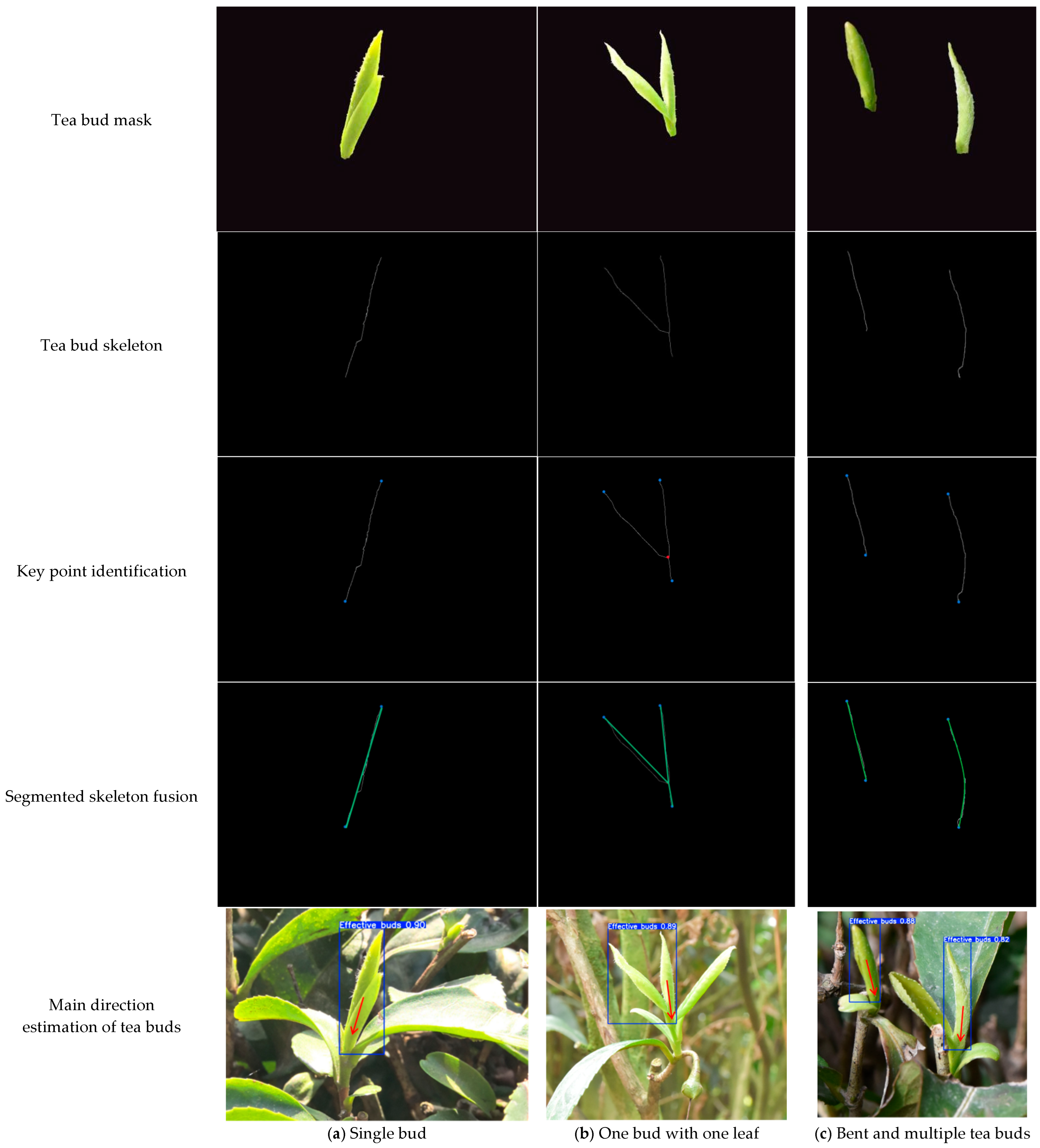

3.2.2. Posture Estimation of Tea Buds

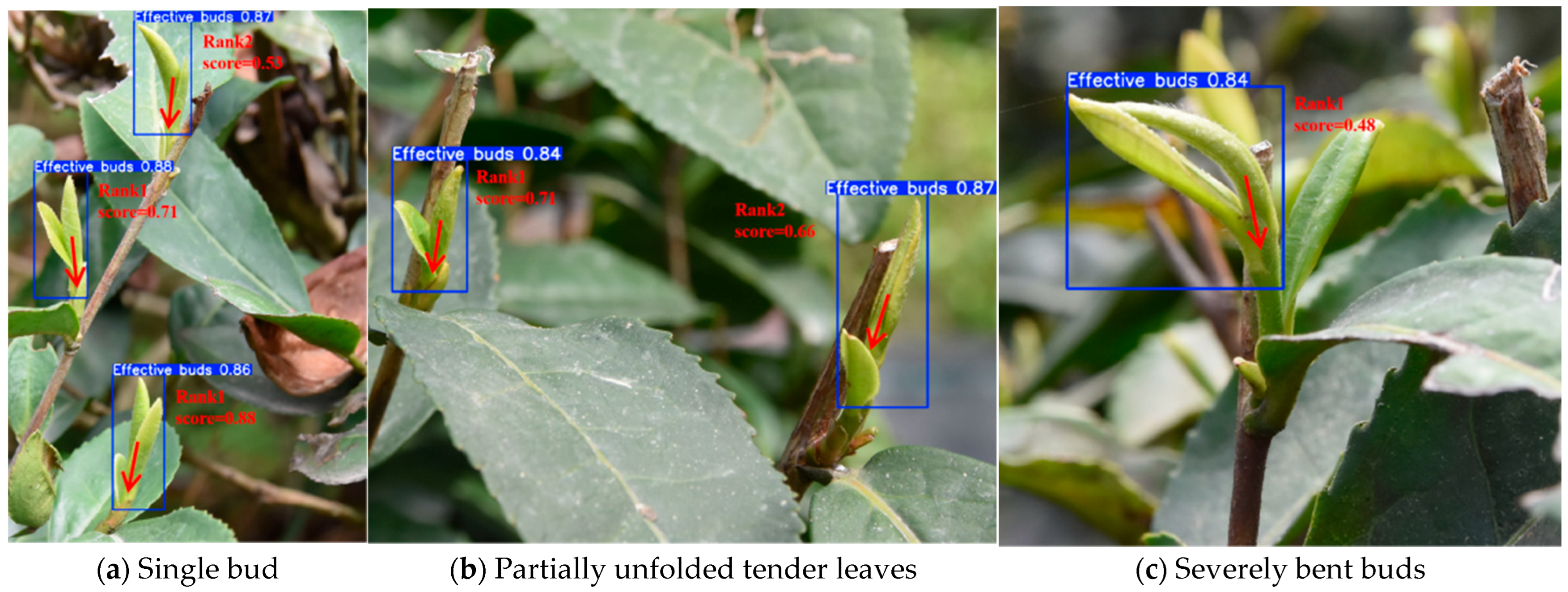

3.2.3. Evaluation of Tea Bud Picking

4. Results and Discussion

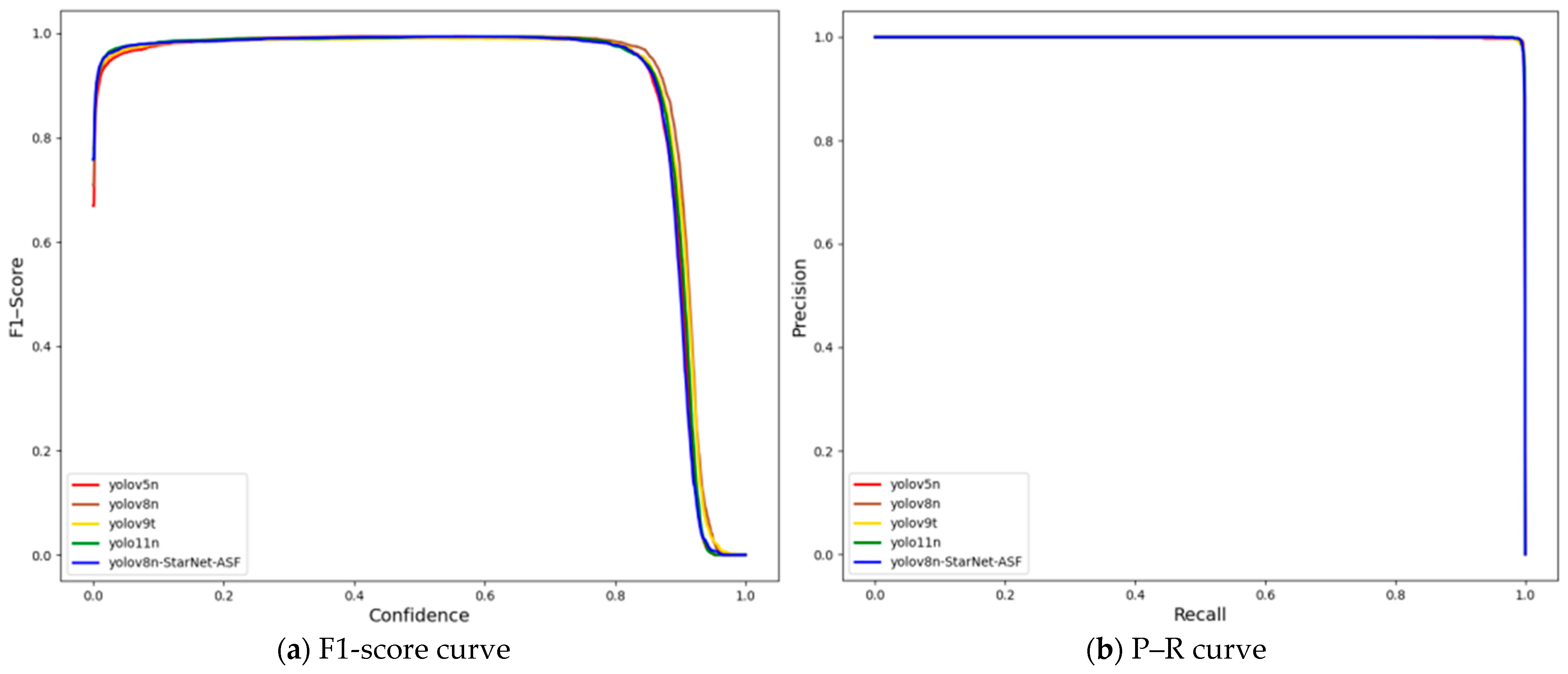

4.1. Identification Results of Effective Buds

4.1.1. Ablation Test

4.1.2. Detection Results of Effective Buds

4.2. Assessment Results of Tea Bud Posture

4.2.1. Target Extraction of Tea Buds

4.2.2. Estimation of the Main Direction of Tea Buds Based on PCA
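Principal component analysis (PCA) gives a bud's main direction as the axis of largest variance of its pixel coordinates. The sketch below is an illustration, not the authors' implementation. It assumes the segmented bud is available as a binary mask stored as a list of rows. The helper names `mask_to_points` and `main_direction` are hypothetical. For a 2×2 covariance matrix, the orientation of the leading eigenvector has the closed form θ = ½·atan2(2σxy, σxx − σyy), so no linear-algebra library is needed.

```python
import math


def mask_to_points(mask):
    """Convert a binary mask (list of rows) into (x, y) = (column, row) pixel coordinates."""
    return [(col, row) for row, line in enumerate(mask) for col, v in enumerate(line) if v]


def main_direction(points):
    """Estimate the main-axis angle of a 2-D point set via PCA.

    Returns degrees in (-90, 90]. In image coordinates the y axis points down,
    so positive angles appear clockwise on screen.
    """
    pts = list(points)
    n = len(pts)
    if n < 2:
        raise ValueError("need at least two points")
    # Centroid of the point set
    mx = sum(x for x, _ in pts) / n
    my = sum(y for _, y in pts) / n
    # Entries of the 2x2 covariance matrix
    sxx = sum((x - mx) ** 2 for x, _ in pts) / n
    syy = sum((y - my) ** 2 for _, y in pts) / n
    sxy = sum((x - mx) * (y - my) for x, y in pts) / n
    # Closed-form orientation of the eigenvector with the largest eigenvalue
    deg = math.degrees(0.5 * math.atan2(2 * sxy, sxx - syy))
    # atan2 may return -pi when sxy is -0.0; fold that case into (-90, 90]
    if deg <= -90.0:
        deg += 180.0
    return deg


if __name__ == "__main__":
    # Toy mask whose foreground runs along the anti-diagonal
    mask = [[0, 0, 0, 1],
            [0, 0, 1, 0],
            [0, 1, 0, 0],
            [1, 0, 0, 0]]
    print(main_direction(mask_to_points(mask)))  # anti-diagonal: about -45 degrees
```

The closed form avoids an explicit eigen-decomposition. The eigenvalue ratio, not computed here, could additionally indicate how elongated the bud region is.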

4.2.3. Estimation of Tea Bud Posture Based on Skeleton Extraction

4.2.4. Evaluation of the Tea Bud Scoring Model

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


Ablation test results (√ = module used, × = module not used).

| Model | StarNet_s050 | ASF-YOLO | BiFPN | SEAM | Precision/% | Recall/% | F1-Score/% | mAP50/% | mAP50-95/% | Computational Load/GFLOPs |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | × | × | × | × | 87.3 | 80.5 | 83.8 | 91.8 | 67.9 | 8.9 |
| 2 | √ | × | × | × | 85.0 | 83.2 | 84.1 | 89.9 | 63.8 | 6.5 |
| 3 | √ | √ | × | × | 85.6 | 81.7 | 83.6 | 90.5 | 65.2 | 6.0 |
| 4 | √ | × | √ | × | 84.9 | 84.4 | 84.65 | 88.9 | 64.6 | 6.5 |
| 5 | √ | × | × | √ | 83.2 | 82.6 | 82.89 | 89.6 | 64.3 | 6.7 |
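The F1-Score column of the ablation table is the harmonic mean of precision P and recall R, F1 = 2PR/(P + R). The short check below recomputes that column from the tabulated precision and recall values. The function name `f1_score` is chosen here for illustration and does not come from the paper.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; inputs and output share the same unit (here %)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision %, recall %) pairs copied from the ablation table, models 1-5
ablation = {
    1: (87.3, 80.5),
    2: (85.0, 83.2),
    3: (85.6, 81.7),
    4: (84.9, 84.4),
    5: (83.2, 82.6),
}

if __name__ == "__main__":
    for model, (p, r) in ablation.items():
        print(f"Model {model}: F1 = {f1_score(p, r):.2f}%")
```

The recomputed values agree with the reported F1-Score column to within rounding. This is a quick consistency check when comparing the trade-off between precision and recall across the ablation variants.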

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, P.; He, T.; Xie, L.; Yi, W.; Zhao, L.; Wang, C.; Wang, J.; Bai, Z.; Mei, S. Identification and Posture Evaluation of Effective Tea Buds Based on Improved YOLOv8n. Processes 2025, 13, 3658. https://doi.org/10.3390/pr13113658
