A Review on the High-Efficiency Detection and Precision Positioning Technology Application of Agricultural Robots

Abstract

1. Introduction

2. Detection of Agricultural Pests in Farmland Based on Image Processing Algorithms

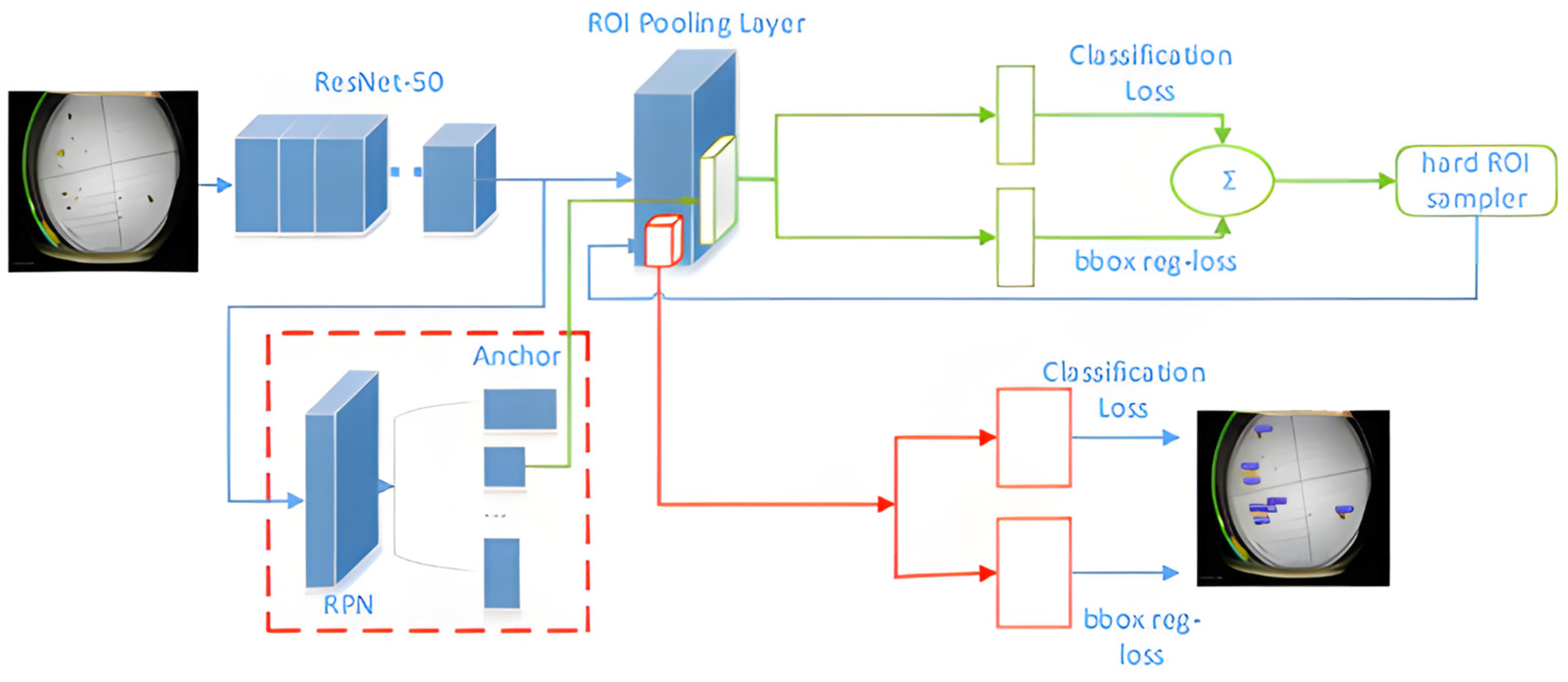

2.1. Pest Detection Based on the Faster R-CNN Algorithm

2.2. Detection for Improvement and Applications of Faster R-CNN and ResNet Algorithms

2.3. Training on a Dataset of Rice Pest and Disease Images and Videos

2.4. Improvements and Applications of Faster R-CNN and ResNet Algorithms

3. Detection of Mature Crops Using Agricultural Robots

3.1. Maturity Detection Based on Image Processing

3.2. Maturity Detection Based on the YOLO Algorithm

3.3. Comprehensive Application of Agricultural Robots in Crop Maturity Detection

4. Leveraging UWB Technology for Localization and Its Various Application Scenarios

4.1. RSSI-Assisted Localization Method Based on Integrated UWB Technology

4.2. The AOA (Angle of Arrival) Positioning Method Based on UWB (Ultra-Wideband) Technology

4.3. TOA and TDOA Localization Methods Incorporating UWB Technology

5. Application of Multi-Sensor Fusion and Integration with Deep Learning Algorithms

5.1. Fusion Localization Leveraging LiDAR and Vision Sensors Enhances Precision in Spatial Positioning

5.2. Integration of Multi-Sensor Fusion Technology with Deep Learning Algorithms for Localization Applications in Agriculture

6. Comprehensive Applications of Agricultural Robots for Detection and Localization

6.1. Detection of Soil and Crop Positioning and Planting Using Deep Learning Algorithms

6.2. Integration of Depth Cameras and Deep Learning Algorithms for Pest and Weed Localization and Detection

6.3. Localization and Harvesting of Crops Based on Depth Images and Deep Learning Algorithm Integration

7. Discussion and Prospects

- (1) Innovate and Revamp the Structure of Agricultural Robots: Explore practical structural innovations that improve the adaptability and flexibility of agricultural robots in complex environments. In keeping with low-carbon environmental protection, develop robots powered by clean energy sources and extend their battery life, so that they can monitor agricultural environments in real time over long periods without harming the farmland environment.

- (2) Enhance Autonomous Pest Management Capabilities: Improving the autonomous handling of pests by robots is crucial for protecting crops from infestations and is a key application of detection and localization. Further research into robotic pest management should aim at eradicating pests promptly, thereby reducing labor input and strengthening crop protection [90], and steering agricultural robot development toward benefiting society.

- (3) Strengthen Infrastructure Development for Agricultural Robots: Effective detection and localization by agricultural robots rely on various sensors, and base station infrastructure is critical for signal strength. Build more base stations around farmland to ensure comprehensive coverage, thereby providing robust support for the robots' detection and localization capabilities.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Han, X.; Xu, L.; Peng, Y.; Wang, Z. Trend of Intelligent Robot Application Based on Intelligent Agriculture System. In Proceedings of the 2021 3rd International Conference on Artificial Intelligence and Advanced Manufacture (AIAM), Manchester, UK, 23–25 October 2021; pp. 205–209. [Google Scholar] [CrossRef]

- Li, W.; Zhang, Z.; Li, C.; Zou, J. Small Target Detection Algorithm Based on Two-Stage Feature Extraction. In Proceedings of the 2023 6th International Conference on Software Engineering and Computer Science (CSECS), Chengdu, China, 22–24 December 2023; pp. 1–5. [Google Scholar] [CrossRef]

- Su, D.; Qiao, Y.; Kong, H.; Sukkarieh, S. Real time detection of inter-row ryegrass in wheat farms using deep learning. Biosyst. Eng. 2021, 204, 198–211. [Google Scholar] [CrossRef]

- Liu, R.; Yu, Z.; Mo, D.; Cai, Y. An Improved Faster-RCNN Algorithm for Object Detection in Remote Sensing Images. In Proceedings of the 2020 39th Chinese Control Conference (CCC), Shenyang, China, 27–29 July 2020; pp. 7188–7192. [Google Scholar] [CrossRef]

- Bajait, V.; Malarvizhi, N. Recognition of suitable Pest for Crops using Image Processing and Deep Learning Techniques. In Proceedings of the 2022 4th International Conference on Advances in Computing, Communication Control and Networking (ICAC3N), Greater Noida, India, 16–17 December 2022; pp. 1042–1046. [Google Scholar] [CrossRef]

- Labbé, M.; Michaud, F. RTAB-Map as an open-source lidar and visual simultaneous localization and mapping library for large-scale and long-term online operation. J. Field Robot. 2019, 36, 416–446. [Google Scholar] [CrossRef]

- Abdelnasser, H.; Mohamed, R.; Elgohary, A.; Alzantot, M.F.; Wang, H.; Sen, S.; Choudhury, R.R.; Youssef, M. Semantic SLAM: Using Environment Landmarks for Unsupervised Indoor Localization. IEEE Trans. Mob. Comput. 2016, 15, 1770–1782. [Google Scholar] [CrossRef]

- Mur-Artal, R.; Tardos, J.D. ORB-SLAM2: An Open-Source SLAM System for Monocular, Stereo, and RGB-D Cameras. IEEE Trans. Robot. 2017, 33, 1255–1262. [Google Scholar] [CrossRef]

- Della Corte, B.; Andreasson, H.; Stoyanov, T.; Grisetti, G. Unified Motion-Based Calibration of Mobile Multi-Sensor Platforms with Time Delay Estimation. IEEE Robot. Autom. Lett. 2019, 4, 902–909. [Google Scholar] [CrossRef]

- He, Y.; Zhou, Z.; Tian, L.; Liu, Y.; Luo, X. Brown rice planthopper (Nilaparvata lugens Stal) detection based on deep learning. Precis. Agric. 2020, 21, 1385–1402. [Google Scholar] [CrossRef]

- Ayan, E.; Erbay, H.; Varçın, F. Crop pest classification with a genetic algorithm-based weighted ensemble of deep convolutional neural networks. Comput. Electron. Agric. 2020, 179, 105809. [Google Scholar] [CrossRef]

- Shi, F.; Liu, Y.; Wang, H. Target Detection in Remote Sensing Images Based on Multi-scale Fusion Faster RCNN. In Proceedings of the 2023 35th Chinese Control and Decision Conference (CCDC), Yichang, China, 20–22 May 2023; pp. 4043–4046. [Google Scholar] [CrossRef]

- Wang, Z.; Qiao, L.; Wang, M. Agricultural pest detection algorithm based on improved faster RCNN. In Proceedings of the International Conference on Computer Vision and Pattern Analysis (ICCPA 2021), Hangzhou, China, 19–21 November 2021; Volume 12158, pp. 104–109. [Google Scholar]

- Patel, D.; Bhatt, N. Improved accuracy of pest detection using augmentation approach with Faster R-CNN. In Proceedings of the IOP Conference Series: Materials Science and Engineering, Sanya, China, 12–14 November 2021; Volume 1042, p. 012020. [Google Scholar]

- Zhang, M.; Chen, Y.; Zhang, B.; Pang, K.; Lv, B. Recognition of pest based on faster rcnn. In Signal and Information Processing, Networking and Computers, Proceedings of the 6th International Conference on Signal and Information Processing, Networking and Computers (ICSINC), Guiyang, China, 13–16 August 2019; Springer: Singapore, 2020; pp. 62–69. [Google Scholar]

- Deng, F.; Mao, W.; Zeng, Z.; Zeng, H.; Wei, B. Multiple diseases and pests detection based on federated learning and improved faster R-CNN. IEEE Trans. Instrum. Meas. 2022, 71, 1–11. [Google Scholar] [CrossRef]

- Sudha, C.; JaganMohan, K.; Arulaalan, M. Real Time Riped Fruit Detection using Faster R-CNN Deep Neural Network Models. In Proceedings of the 2022 International Conference on Smart Technologies and Systems for Next Generation Computing (ICSTSN), Villupuram, India, 25–26 March 2022; pp. 1–4. [Google Scholar] [CrossRef]

- Quach, L.-D.; Quoc, N.P.; Thi, N.H.; Tran, D.C.; Hassan, M.F. Using SURF to Improve ResNet-50 Model for Poultry Disease Recognition Algorithm. In Proceedings of the 2020 International Conference on Computational Intelligence (ICCI), Bandar Seri Iskandar, Malaysia, 8–9 October 2020; pp. 317–321. [Google Scholar] [CrossRef]

- Gui, J.; Xu, H.; Fei, J. Non-destructive detection of soybean pest based on hyperspectral image and attention-resnet meta-learning model. Sensors 2023, 23, 678. [Google Scholar] [CrossRef]

- Dewi, C.; Christanto, H.J.; Dai, G.W. Automated identification of insect pests: A deep transfer learning approach using resnet. Acadlore Trans. Mach. Learn. 2023, 2, 194–203. [Google Scholar] [CrossRef]

- Hassan, S.M.; Maji, A.K. Pest Identification based on fusion of Self-Attention with ResNet. IEEE Access 2024, 12, 6036–6050. [Google Scholar] [CrossRef]

- Wang, P.; Luo, F.; Wang, L.; Li, C.; Niu, Q.; Li, H. S-ResNet: An improved ResNet neural model capable of the identification of small insects. Front. Plant Sci. 2022, 13, 1066115. [Google Scholar] [CrossRef] [PubMed]

- Li, D.; Wang, R.; Xie, C.; Liu, L.; Zhang, J.; Li, R.; Wang, F.; Zhou, M.; Liu, W. A recognition method for rice plant diseases and pests video detection based on deep convolutional neural network. Sensors 2020, 20, 578. [Google Scholar] [CrossRef] [PubMed]

- Chen, M.; Chen, Y.; Guo, M.; Wang, J. Pest Detection and Identification Guided by Feature Maps. In Proceedings of the 2023 Twelfth International Conference on Image Processing Theory, Tools and Applications (IPTA), Paris, France, 16–19 October 2023; pp. 1–6. [Google Scholar] [CrossRef]

- Bari, B.S.; Islam, M.N.; Rashid, M.; Hasan, M.J.; Razman, M.A.M.; Musa, R.M.; Ab Nasir, A.F.; Majeed, A.P.A. A real-time approach of diagnosing rice leaf disease using deep learning-based faster R-CNN framework. PeerJ Comput. Sci. 2021, 7, e432. [Google Scholar] [CrossRef] [PubMed]

- Fan, J.; Lee, J.; Jung, I.; Lee, Y. Improvement of object detection based on faster R-CNN and YOLO. In Proceedings of the 2021 36th International Technical Conference on Circuits/Systems, Computers and Communications (ITC-CSCC), Jeju, Republic of Korea, 27–30 June 2021; pp. 1–4. [Google Scholar]

- Du, B.; Zhao, J.; Cao, M.; Li, M.; Yu, H. Behavior Recognition Based on Improved Faster RCNN. In Proceedings of the 2021 14th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), Shanghai, China, 23–25 October 2021; pp. 1–6. [Google Scholar] [CrossRef]

- Lin, T.L.; Chang, H.Y.; Chen, K.H. The pest and disease identification in the growth of sweet peppers using faster R-CNN and mask R-CNN. J. Internet Technol. 2020, 21, 605–614. [Google Scholar]

- Liu, J.; Zhang, G.; Feng, B.; Hou, Y.; Kang, W.; Shen, B. A Method for Plant Diseases Detection Based on Transfer Learning and Data Enhancement. In Proceedings of the 2022 International Conference on High Performance Big Data and Intelligent Systems (HDIS), Tianjin, China, 10–11 December 2022; pp. 154–158. [Google Scholar] [CrossRef]

- Krishnan, H.; Lakshmi, A.A.; Anamika, L.S.; Athira, C.H.; Alaikha, P.V.; Manikandan, V.M. A Novel Underwater Image Enhancement Technique using ResNet. In Proceedings of the 2020 IEEE 4th Conference on Information & Communication Technology (CICT), Chennai, India, 3–5 December 2020; pp. 1–5. [Google Scholar] [CrossRef]

- Krueangsai, A.; Supratid, S. Effects of Shortcut-Level Amount in Lightweight ResNet of ResNet on Object Recognition with Distinct Number of Categories. In Proceedings of the 2022 International Electrical Engineering Congress (iEECON), Khon Kaen, Thailand, 9–11 March 2022; pp. 1–4. [Google Scholar] [CrossRef]

- Teng, Y.; Zhang, J.; Dong, S.; Zheng, S.; Liu, L. MSR-RCNN: A multi-class crop pest detection network based on a multi-scale super-resolution feature enhancement module. Front. Plant Sci. 2022, 13, 810546. [Google Scholar] [CrossRef] [PubMed]

- Du, L.; Sun, Y.; Chen, S.; Feng, J.; Zhao, Y.; Yan, Z.; Zhang, X.; Bian, Y. A novel object detection model based on faster R-CNN for spodoptera frugiperda according to feeding trace of corn leaves. Agriculture 2022, 12, 248. [Google Scholar] [CrossRef]

- Cao, C.; Wang, B.; Zhang, W.; Zeng, X.; Yan, X.; Feng, Z.; Liu, Y.; Wu, Z. An Improved Faster R-CNN for Small Object Detection. IEEE Access 2019, 7, 106838–106846. [Google Scholar] [CrossRef]

- Yang, L.; Zhong, J.; Zhang, Y.; Bai, S.; Li, G.; Yang, Y.; Zhang, J. An Improving Faster-RCNN with Multi-Attention ResNet for Small Target Detection in Intelligent Autonomous Transport With 6G. IEEE Trans. Intell. Transp. Syst. 2023, 24, 7717–7725. [Google Scholar] [CrossRef]

- Le, V.N.T.; Truong, G.; Alameh, K. Detecting weeds from crops under complex field environments based on Faster RCNN. In Proceedings of the 2020 IEEE Eighth International Conference on Communications and Electronics (ICCE), Phu Quoc Island, Vietnam, 13–15 January 2021; pp. 350–355. [Google Scholar] [CrossRef]

- Shi, P.; Xu, X.; Ni, J.; Xin, Y.; Huang, W.; Han, S. Underwater Biological Detection Algorithm Based on Improved Faster-RCNN. Water 2021, 13, 2420. [Google Scholar] [CrossRef]

- Altun, A.A.; Taghiyev, A. Advanced image processing techniques and applications for biological objects. In Proceedings of the 2017 2nd IEEE International Conference on Computational Intelligence and Applications (ICCIA), Beijing, China, 8–11 September 2017; pp. 340–344. [Google Scholar] [CrossRef]

- Miao, Z.; Yu, X.; Li, N.; Zhang, Z.; He, C.; Li, Z.; Deng, C.; Sun, T. Efficient tomato harvesting robot based on image processing and deep learning. Precis. Agric. 2023, 24, 254–287. [Google Scholar] [CrossRef]

- Al-Mashhadani, Z.; Chandrasekaran, B. Autonomous ripeness detection using image processing for an agricultural robotic system. In Proceedings of the 2020 11th IEEE Annual Ubiquitous Computing, Electronics & Mobile Communication Conference (UEMCON), New York, NY, USA, 28–31 October 2020; pp. 743–748. [Google Scholar]

- Puttemans, S.; Vanbrabant, Y.; Tits, L.; Goedemé, T. Automated visual fruit detection for harvest estimation and robotic harvesting. In Proceedings of the 2016 Sixth International Conference on Image Processing Theory, Tools and Applications (IPTA), Oulu, Finland, 12–15 December 2016; pp. 1–6. [Google Scholar]

- Xiao, F.; Wang, H.; Li, Y.; Cao, Y.; Lv, X.; Xu, G. Object detection and recognition techniques based on digital image processing and traditional machine learning for fruit and vegetable harvesting robots: An overview and review. Agronomy 2023, 13, 639. [Google Scholar] [CrossRef]

- Nguyen, T.T.; Parron, J.; Obidat, O.; Tuininga, A.R.; Wang, W. Ready or Not? A Robot-Assisted Crop Harvest Solution in Smart Agriculture Contexts. In Proceedings of the 2023 IEEE International Conference on Smart Computing (SMARTCOMP), Nashville, TN, USA, 26–30 June 2023; pp. 373–378. [Google Scholar] [CrossRef]

- Irham, A.; Kurniadi; Yuliandari, K.; Fahreza, F.M.A.; Riyadi, D.; Shiddiqi, A.M. AFAR-YOLO: An Adaptive YOLO Object Detection Framework. In Proceedings of the 2024 ASU International Conference in Emerging Technologies for Sustainability and Intelligent Systems (ICETSIS), Manama, Bahrain, 28–29 January 2024; pp. 594–598. [Google Scholar] [CrossRef]

- Gai, R.; Chen, N.; Yuan, H. A detection algorithm for cherry fruits based on the improved YOLO-v4 model. Neural Comput. Appl. 2023, 35, 13895–13906. [Google Scholar] [CrossRef]

- Paul, A.; Machavaram, R.; Kumar, D.; Nagar, H. Smart solutions for capsicum Harvesting: Unleashing the power of YOLO for Detection, Segmentation, growth stage Classification, Counting, and real-time mobile identification. Comput. Electron. Agric. 2024, 219, 108832. [Google Scholar] [CrossRef]

- Selvam, N.A.M.B.; Ahmad, Z.; Mohtar, I.A. Real time ripe palm oil bunch detection using YOLO V3 algorithm. In Proceedings of the 2021 IEEE 19th Student Conference on Research and Development (SCOReD), Kota Kinabalu, Malaysia, 23–25 November 2021; pp. 323–328. [Google Scholar]

- Aljaafreh, A.; Elzagzoug, E.Y.; Abukhait, J.; Soliman, A.-H.; Alja’afreh, S.S.; Sivanathan, A.; Hughes, J. A Real-Time Olive Fruit Detection for Harvesting Robot Based on YOLO Algorithms. Acta Technol. Agric. 2023, 26, 121–132. [Google Scholar] [CrossRef]

- Shi, J.; Bai, Y.; Diao, Z.; Zhou, J.; Yao, X.; Zhang, B. Row detection BASED navigation and guidance for agricultural robots and autonomous vehicles in row-crop fields: Methods and applications. Agronomy 2023, 13, 1780. [Google Scholar] [CrossRef]

- Yang, Q.; Du, X.; Wang, Z.; Meng, Z.; Ma, Z.; Zhang, Q. A review of core agricultural robot technologies for crop productions. Comput. Electron. Agric. 2023, 206, 107701. [Google Scholar] [CrossRef]

- Tian, H.; Wang, T.; Liu, Y.; Qiao, X.; Li, Y. Computer vision technology in agricultural automation—A review. Inf. Process. Agric. 2020, 7, 1–19. [Google Scholar] [CrossRef]

- Zhou, J.; Yungbluth, D.; Vong, C.N.; Scaboo, A.; Zhou, J. Estimation of the Maturity Date of Soybean Breeding Lines Using UAV-Based Multispectral Imagery. Remote Sens. 2019, 11, 2075. [Google Scholar] [CrossRef]

- Chong, A.-M.; Yeo, B.-C.; Lim, W.-S. Integration of UWB RSS to Wi-Fi RSS fingerprinting-based indoor positioning system. Cogent Eng. 2022, 9, 2087364. [Google Scholar] [CrossRef]

- Alsmadi, L.; Kong, X.; Sandrasegaran, K.; Fang, G. An Improved Indoor Positioning Accuracy Using Filtered RSSI and Beacon Weight. IEEE Sens. J. 2021, 21, 18205–18213. [Google Scholar] [CrossRef]

- Wang, J.; Park, J. An Enhanced Indoor Positioning Algorithm Based on Fingerprint Using Fine-Grained CSI and RSSI Measurements of IEEE 802.11n WLAN. Sensors 2021, 21, 2769. [Google Scholar] [CrossRef] [PubMed]

- Lou, X.; Ye, K.; Jiang, R.; Wang, S. Research and Implementation of Mono-Anchor AOA Positioning System Based on UWB. In Proceedings of the International Conference on Natural Computation, Fuzzy Systems and Knowledge Discovery, Xi’an, China, 1–3 August 2020. [Google Scholar]

- Zhang, K.; Shen, C.; Aslam, B.U.; Long, K.; Chen, X.; Li, N. Simulation Optimization of AOA Estimation Algorithm Based on MIMO UWB Communication System. In Proceedings of the 2nd International Conference on Information Technologies and Electrical Engineering, Changsha, China, 6–7 December 2019. [Google Scholar] [CrossRef]

- Wang, T.; Man, Y.; Shen, Y. A Deep Learning Based AoA Estimation Method in NLOS Environments. In Proceedings of the 2021 IEEE Globecom Workshops (GC Wkshps), Madrid, Spain, 7–11 December 2021. [Google Scholar] [CrossRef]

- Sidorenko, J.; Schatz, V.; Scherer-Negenborn, N.; Arens, M.; Hugentobler, U. Error corrections for ultra-wideband ranging. IEEE Trans. Instrum. Meas. 2020, 69, 9037–9047. [Google Scholar] [CrossRef]

- Li, D.; Chen, L.; Hu, J.; Wu, H. Research on UWB Positioning Based on Improved ABC Algorithm. In Proceedings of the 2021 4th International Conference on Pattern Recognition and Artificial Intelligence (PRAI), Yibin, China, 20–22 August 2021. [Google Scholar] [CrossRef]

- Vecchia, D.; Corbalan, P.; Istomin, T.; Picco, G.P. TALLA: Large-scale TDoA Localization with Ultra-wideband Radios. In Proceedings of the IEEE 2019 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Pisa, Italy, 30 September–3 October 2019; pp. 1–8. [Google Scholar] [CrossRef]

- Liu, Z.; Xiao, G.; Liu, H.; Wei, H. Multi-Sensor Measurement and Data Fusion. IEEE Instrum. Meas. Mag. 2022, 25, 28–36. [Google Scholar] [CrossRef]

- de Farias, C.M.; Pirmez, L.; Fortino, G.; Guerrieri, A. A multi-sensor data fusion technique using data correlations among multiple applications. Future Gener. Comput. Syst. 2018, 92, 109–118. [Google Scholar] [CrossRef]

- Senel, N.; Kefferpütz, K.; Doycheva, K.; Elger, G. Multi-Sensor Data Fusion for Real-Time Multi-Object Tracking. Processes 2023, 11, 501. [Google Scholar] [CrossRef]

- Xu, X.; Zhang, L.; Yang, J.; Cao, C.; Wang, W.; Ran, Y.; Tan, Z.; Luo, M. A Review of Multi-Sensor Fusion SLAM Systems Based on 3D LIDAR. Remote Sens. 2022, 14, 2835. [Google Scholar] [CrossRef]

- Shin, Y.-S.; Park, Y.S.; Kim, A. DVL-SLAM: Sparse depth enhanced direct visual-LiDAR SLAM. Auton. Robot. 2019, 44, 115–130. [Google Scholar] [CrossRef]

- Kang, H.; Wang, X.; Chen, C. Accurate fruit localization using high resolution LiDAR-camera fusion and instance segmentation. Comput. Electron. Agric. 2022, 203, 107450. [Google Scholar] [CrossRef]

- Mai, N.A.M.; Duthon, P.; Khoudour, L.; Crouzil, A.; Velastin, S.A. 3D Object Detection with SLS-Fusion Network in Foggy Weather Conditions. Sensors 2021, 21, 6711. [Google Scholar] [CrossRef]

- Mai, N.A.M.; Duthon, P.; Salmane, P.H.; Khoudour, L.; Crouzil, A.; Velastin, S.A. Camera and LiDAR analysis for 3D object detection in foggy weather conditions. In Proceedings of the International Conference on Pattern Recognition Systems (ICPRS), Saint-Etienne, France, 7–10 June 2022. [Google Scholar] [CrossRef]

- Lv, M.; Wei, H.; Fu, X.; Wang, W.; Zhou, D. A Loosely Coupled Extended Kalman Filter Algorithm for Agricultural Scene-Based Multi-Sensor Fusion. Front. Plant Sci. 2022, 13, 849260. [Google Scholar] [CrossRef]

- Gao, P.; Lee, H.; Jeon, C.-W.; Yun, C.; Kim, H.-J.; Wang, W.; Liang, G.; Chen, Y.; Zhang, Z.; Han, X. Improved Position Estimation Algorithm of Agricultural Mobile Robots Based on Multisensor Fusion and Autoencoder Neural Network. Sensors 2022, 22, 1522. [Google Scholar] [CrossRef]

- Tang, B.; Guo, Z.; Huang, C.; Huai, S.; Gai, J. A Fruit-Tree Mapping System for Semi-Structured Orchards based on Multi-Sensor-Fusion SLAM. IEEE Access, 2024; early access. [Google Scholar] [CrossRef]

- Zhang, Y.; Sun, H.; Zhang, F.; Zhang, B.; Tao, S.; Li, H.; Qi, K.; Zhang, S.; Ninomiya, S.; Mu, Y. Real-Time Localization and Colorful Three-Dimensional Mapping of Orchards Based on Multi-Sensor Fusion Using Extended Kalman Filter. Agronomy 2023, 13, 2158. [Google Scholar] [CrossRef]

- Ding, H.; Zhang, B.; Zhou, J.; Yan, Y.; Tian, G.; Gu, B. Recent developments and applications of simultaneous localization and mapping in agriculture. J. Field Robot. 2022, 39, 956–983. [Google Scholar] [CrossRef]

- Xie, B.; Jin, Y.; Faheem, M.; Gao, W.; Liu, J.; Jiang, H.; Cai, L.; Li, Y. Research progress of autonomous navigation technology for multi-agricultural scenes. Comput. Electron. Agric. 2022, 211, 107963. [Google Scholar] [CrossRef]

- Dyson, J.; Mancini, A.; Frontoni, E.; Zingaretti, P. Deep Learning for Soil and Crop Segmentation from Remotely Sensed Data. Remote Sens. 2019, 11, 1859. [Google Scholar] [CrossRef]

- Azadnia, R.; Jahanbakhshi, A.; Rashidi, S.; Khajehzadeh, M.; Bazyar, P. Developing an automated monitoring system for fast and accurate prediction of soil texture using an image-based deep learning network and machine vision system. Measurement 2021, 190, 110669. [Google Scholar] [CrossRef]

- Khanal, S.; Fulton, J.; Klopfenstein, A.; Douridas, N.; Shearer, S. Integration of high resolution remotely sensed data and machine learning techniques for spatial prediction of soil properties and corn yield. Comput. Electron. Agric. 2018, 153, 213–225. [Google Scholar] [CrossRef]

- Du, Y.; Mallajosyula, B.; Sun, D.; Chen, J.; Zhao, Z.; Rahman, M.; Quadir, M.; Jawed, M.K. A Low-cost Robot with Autonomous Recharge and Navigation for Weed Control in Fields with Narrow Row Spacing. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 3263–3270. [Google Scholar] [CrossRef]

- Paul, S.; Jhamb, B.; Mishra, D.; Kumar, M.S. Edge loss functions for deep-learning depth-map. Mach. Learn. Appl. 2022, 7, 100218. [Google Scholar] [CrossRef]

- Chen, C.-J.; Huang, Y.-Y.; Li, Y.-S.; Chen, Y.-C.; Chang, C.-Y.; Huang, Y.-M. Identification of Fruit Tree Pests With Deep Learning on Embedded Drone to Achieve Accurate Pesticide Spraying. IEEE Access 2021, 9, 21986–21997. [Google Scholar] [CrossRef]

- Sorbelli, F.B.; Palazzetti, L.; Pinotti, C.M. YOLO-based detection of Halyomorpha halys in orchards using RGB cameras and drones. Comput. Electron. Agric. 2023, 213, 108228. [Google Scholar] [CrossRef]

- Xu, K.; Zhu, Y.; Cao, W.; Jiang, X.; Jiang, Z.; Li, S.; Ni, J. Multi-Modal Deep Learning for Weeds Detection in Wheat Field Based on RGB-D Images. Front. Plant Sci. 2021, 12, 732968. [Google Scholar] [CrossRef] [PubMed]

- Fu, L.; Majeed, Y.; Zhang, X.; Karkee, M.; Zhang, Q. Faster R–CNN–based apple detection in dense-foliage fruiting-wall trees using RGB and depth features for robotic harvesting. Biosyst. Eng. 2020, 197, 245–256. [Google Scholar] [CrossRef]

- Islam, M.H.; Wadud, M.F.; Rahman, M.R.; Alam, A.S. Greenhouse Monitoring and Harvesting Mobile Robot with 6DOF Manipulator Utilizing ROS, Inverse Kinematics and Deep Learning Models. Ph.D. Dissertation, Brac University, Dhaka, Bangladesh, 2022. Available online: http://hdl.handle.net/10361/21813 (accessed on 20 January 2022).

- Aghi, D.; Cerrato, S.; Mazzia, V.; Chiaberge, M. Deep Semantic Segmentation at the Edge for Autonomous Navigation in Vineyard Rows. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 3421–3428. [Google Scholar] [CrossRef]

- Ji, W.; Gao, X.; Xu, B.; Chen, G.; Zhao, D. Target recognition method of green pepper harvesting robot based on manifold ranking. Comput. Electron. Agric. 2020, 177, 105663. [Google Scholar] [CrossRef]

- Li, J.; Tang, Y.; Zou, X.; Lin, G.; Wang, H. Detection of Fruit-Bearing Branches and Localization of Litchi Clusters for Vision-Based Harvesting Robots. IEEE Access 2020, 8, 117746–117758. [Google Scholar] [CrossRef]

- Adhikari, S.P.; Kim, G.; Kim, H. Deep Neural Network-Based System for Autonomous Navigation in Paddy Field. IEEE Access 2020, 8, 71272–71278. [Google Scholar] [CrossRef]

- Balaska, V.; Adamidou, Z.; Vryzas, Z.; Gasteratos, A. Sustainable Crop Protection via Robotics and Artificial Intelligence Solutions. Machines 2023, 11, 774. [Google Scholar] [CrossRef]

| Model | Research Object | Technical Characteristics and Performance Indicators | References |
|---|---|---|---|
| Faster R-CNN | Farmland in a natural environment | This study utilized an enhanced Faster R-CNN to identify agricultural pests under natural environmental conditions; the mAP increased from 62.88% to 87.7%, and the time consumption increased by 12.29 ms. | [13] |
| Faster R-CNN | White grub, Helicoverpa, and Spodoptera | The Faster R-CNN architecture proposed in this study, built with TensorFlow (v2.6) for multi-class pest detection and classification, achieved an insect detection rate of 91.02%. | [14] |
| Faster R-CNN | Actual field scene | This study utilized an improved Faster R-CNN for pest detection, achieving 84.96% mAP, with a 5% accuracy improvement observed for 10 common pest samples. | [15] |
| Faster R-CNN | Apple | This study employed an enhanced Faster R-CNN to detect pests on apples in orchards, achieving a mean average precision (mAP) of 89.34%. The model showed improved fitting, with training speed increasing by 59%. | [16] |
| Faster R-CNN | Target detection and behavior prediction | This study employed an improved Faster R-CNN for action recognition, effectively detecting actions in images with an mAP of 67.2%, an increase of 14 percentage points. | [27] |
| Faster R-CNN | Plant disease detection | This study compares Faster R-CNN before and after improvement and finds that, with a training epoch count of 20,000, PD-IFRCNN enhances the model's generalization capability without sacrificing detection accuracy, thus mitigating overfitting. | [29] |
| ResNet | Soybean | This study combined the ResNet network with an attention mechanism and used a multi-class Support Vector Machine (SVM) as the classifier. Experimental results show that the model achieved an accuracy of 94.57 ± 0.19%. | [19] |
| ResNet | Injurious insect | This study proposes a method to enhance automation by manually segmenting images or extracting features in the preprocessing stage. When ResNet-50 is applied in a transfer learning paradigm, the model achieves a typical classification accuracy of 99.40% in pest detection. | [20] |
| ResNet | Injurious insect | This study introduces the ResNet50-SA model, which combines the self-attention mechanism with ResNet. The model achieves a detection accuracy of 99.80% for specific pests. | [21] |
| ResNet | Aphid, red spider, locust, etc. | This study utilized the improved S-ResNet for specific pest identification. Compared to meta-models with depths of 18, 30, and 50 layers, this model achieved a 7% improvement in recognition accuracy. | [22] |
| ResNet | Rice plant diseases | This study employed an improved ResNet model for identifying and detecting rice diseases, achieving a positional accuracy of 88.90%. | [23] |

| Model | Research Object | Technical Characteristics and Performance Indicators | References |
|---|---|---|---|
| Image Processing | Tomato | This experiment employed classical image processing methods combined with the YOLOv5 network for tomato harvesting, achieving a harvesting success rate of 90%. | [39] |
| Image Processing | Tomato | This study employed computer vision and image processing techniques to detect the ripeness of tomatoes, enabling accurate differentiation between fully ripe and nearly ripe fruits. | [41] |
| Image Processing | Strawberry and Apple | This experiment utilized color thresholding for fruit detection and classification, achieving a detection accuracy of over 90%. | [42] |
| YOLO | Cherry Fruit | This study employed an optimized YOLO-V4 algorithm for cherry fruit detection, achieving an accuracy of 95.2%. The YOLO-V4-Dense model exhibited a 0.15 increase in mAP compared to the YOLO-V4 model. | [45] |
| YOLO | Capsicum | This study explores various YOLO algorithms to enhance pepper harvesting. Among these, the YOLOv8s model performed best, achieving an mAP of 0.967 at an Intersection over Union (IoU) threshold of 0.5. | [46] |
| YOLO | Oil Palm | This study utilized an enhanced version of the YOLOv3 algorithm for real-time detection and harvesting of oil palm bunches, achieving a 95.18% mAP at the 5000th iteration. | [47] |
| YOLO | Olive Fruit | This study utilized an improved YOLOv5 network model for detecting olives, achieving high-precision detection of olive fruits with mAP_0.5 exceeding 0.75. | [48] |

| Positioning Technology | Positioning Accuracy | Spectral Range | Positioning Strengths and Weaknesses |
|---|---|---|---|
| Wi-Fi | 2~50 m | 2.4 GHz, 5 GHz | Low cost and strong communication capability, but susceptible to environmental interference. |
| Bluetooth | 2~10 m | 2.4~2.4835 GHz | Easy to integrate and popularize, but limited in transmission distance and less stable. |
| ZigBee | 1~2 m | 2.4 GHz, 868/915 MHz | Low power consumption and cost-effective, but poor in stability and susceptible to environmental interference. |
| Infrared | 5~10 m | 0.3~400 THz | High positioning accuracy, but limited by short line-of-sight and transmission distances and susceptible to interference. |
| RFID | 0.05~5 m | LF: 120~150 kHz; HF: 13.56 MHz; UHF: 433 MHz, 800/900 MHz, 2.45 GHz, 5.8 GHz | Economical with high precision, but limited by short positioning distance. |
| UWB | 6~10 cm | 3.1~10.6 GHz | Centimeter-level positioning accuracy, robust anti-interference capability, efficient real-time transmission, stability, and strong signal penetration, but at higher implementation cost. Its accuracy is also susceptible to non-line-of-sight errors, multipath effects, clock synchronization challenges, and variations in the spatial arrangement of base stations. |

| Application Environment (A) | Transmission Loss Coefficient | The Signal Power Value at Distance A (dBm) | Random Variable |

|---|---|---|---|

| Indoor environment with line-of-sight (LOS) | 1.7 | 5.07 | 2.22 |

| Indoor environment with non-line-of-sight (NLOS) | 4.58 | 3.64 | 3.51 |

| Outdoor environment with line-of-sight (LOS) | 1.76 | 4.86 | 0.83 |

| Outdoor environment with non-line-of-sight (NLOS) | 2.5 | 4.23 | 2 |
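
The parameters in the table above are the kind used by a log-distance path-loss model for RSSI ranging. The sketch below is a minimal illustration rather than the reviewed authors' implementation. It assumes that the three numeric columns are the path-loss exponent n, the received power A at a reference distance, and the standard deviation of the random shadowing term, and that the reference distance is 1 m. The dictionary keys and function names are hypothetical.

```python
import math

# Values transcribed from the table above. Reading the columns as
# (path-loss exponent n, reference power A in dBm, shadowing sigma)
# is an assumption of this sketch.
ENVIRONMENTS = {
    "indoor_los": (1.7, 5.07, 2.22),
    "indoor_nlos": (4.58, 3.64, 3.51),
    "outdoor_los": (1.76, 4.86, 0.83),
    "outdoor_nlos": (2.5, 4.23, 2.0),
}

REFERENCE_DISTANCE_M = 1.0  # assumed reference distance d0


def expected_rssi(distance_m, environment):
    """Mean received power (dBm) predicted by the log-distance model."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    n, a_dbm, _sigma = ENVIRONMENTS[environment]
    return a_dbm - 10.0 * n * math.log10(distance_m / REFERENCE_DISTANCE_M)


def estimate_distance(rssi_dbm, environment):
    """Invert the model for distance, ignoring the random shadowing term."""
    n, a_dbm, _sigma = ENVIRONMENTS[environment]
    return REFERENCE_DISTANCE_M * 10.0 ** ((a_dbm - rssi_dbm) / (10.0 * n))


if __name__ == "__main__":
    rssi = expected_rssi(5.0, "outdoor_los")
    print(f"RSSI at 5 m (outdoor LOS): {rssi:.2f} dBm")
    print(f"Recovered distance: {estimate_distance(rssi, 'outdoor_los'):.2f} m")
```

Because the shadowing term is ignored when inverting the model, a single RSSI reading gives only a coarse range estimate. The larger exponent and random term in the NLOS rows suggest why RSSI is described as an aid to UWB ranging rather than a standalone method.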

| Category | Product Name | AOA Positioning Base Station | AOA Asset Tracking Beacon |

|---|---|---|---|

| | Product Model | CL-GA10-P | CL-TA40 |

| Product Parameters | Network Interface | 10/100M RJ45 | Power switch |

| Product Parameters | Power Supply | Standard PoE | Button cell battery |

| Technical Specifications | Installation | Standard Ethernet interface | Standard Ethernet and Bluetooth interface |

| Technical Specifications | Data Interface | The maximum coverage radius extends to twice the installation height of the base station, with an upper limit for installation height set at 10 m. | Within the permissible range, the height typically does not exceed 10 m. |

| General Parameters | Positioning Accuracy | 0.1–1 m | Less than 1 m |

| General Parameters | Operating Temperature | −20~60 °C | −20~60 °C |
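
The coverage rule quoted in the table above (a maximum coverage radius of twice the installation height, with the height capped at 10 m) can be combined with simple angle-of-arrival geometry. The following sketch is illustrative only. It assumes a single base station mounted overhead and facing straight down, a tag at a known height, and measured azimuth and off-vertical angles. None of these details come from the product data, and the function names are hypothetical.

```python
import math

MAX_INSTALL_HEIGHT_M = 10.0  # upper installation limit quoted in the table
COVERAGE_FACTOR = 2.0        # coverage radius = 2 x installation height


def coverage_radius(install_height_m):
    """Maximum horizontal coverage radius for a given mounting height."""
    if not 0.0 < install_height_m <= MAX_INSTALL_HEIGHT_M:
        raise ValueError("installation height must be in (0, 10] m")
    return COVERAGE_FACTOR * install_height_m


def locate_tag(install_height_m, azimuth_rad, off_vertical_rad, tag_height_m=0.0):
    """Project the measured angles onto the tag plane.

    Returns (x, y) relative to the point directly below the station,
    or None when the tag falls outside the coverage radius.
    """
    if not 0.0 <= off_vertical_rad < math.pi / 2:
        raise ValueError("off-vertical angle must be in [0, pi/2)")
    drop = install_height_m - tag_height_m
    horizontal = drop * math.tan(off_vertical_rad)
    if horizontal > coverage_radius(install_height_m):
        return None
    return (horizontal * math.cos(azimuth_rad),
            horizontal * math.sin(azimuth_rad))


if __name__ == "__main__":
    print(coverage_radius(3.0))
    print(locate_tag(3.0, 0.0, math.pi / 4))
```

Under these assumptions, the factor of two corresponds to a maximum usable off-vertical angle of arctan(2), roughly 63°, for a tag at floor level.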

| Type | Mechanical Type | Semi-Solid-State Type | Semi-Solid-State Type | Semi-Solid-State Type | Solid-State Type | Solid-State Type |

|---|---|---|---|---|---|---|

| Architecture | Mechanical Rotation | MEMS | Mirror | Prism | FLASH | OPA |

| Technical Features | Mechanical components drive the emitter to rotate and pitch. | Uses a micro-scanning mirror to reflect the laser beam. | A motor rotates the mirror while the transceiver module remains stationary. | Uses polygonal, irregular mirrors for non-repetitive scanning. | Illuminates a large area with short laser pulses and images the return with high-sensitivity detectors. | Adjusts the phase of each element in the phase-shifter array so that interference steers the emitted light in a specific direction. |

| Advantages | Fast scanning speed, 5–20 revolutions per second; high accuracy. | Reduced moving parts, improved reliability, lower cost. | Low power consumption, high accuracy, lower cost. | High point cloud density, capable of long-range detection. | Small size, large amount of information, simple structure. | Fast scanning speed, high accuracy, lower cost after mass production. |

| Disadvantages | Poor stability, high cost at automotive grade, reliance on manual assembly, low reliability, and short lifespan. | Limited detection angles, significant difficulty in electroplating adjustment links if splicing is required, and a short lifespan. | Low signal-to-noise ratio, limited detection distance, and restricted field of view (FOV). | Complex mechanical structure prone to bearing or bushing wear. | Low detection accuracy and short detection distance. | Stringent material and process requirements currently keep costs high. |

| Cost | Over USD 3000. | From USD 500 to USD 1000. | From USD 500 to USD 1200. | USD 800. | Expected to drop below USD 500 after mass production. | Expected to drop below USD 200 after mass production. |

| Compare | Average Absolute Error, x (m) | Average Absolute Error, y (m) | Average Absolute Error, z (m) | Mean Square Deviation of Error, x (m) | Mean Square Deviation of Error, y (m) | Mean Square Deviation of Error, z (m) |

|---|---|---|---|---|---|---|

| Fusion-groundtruth | 4.5948 | 14.9209 | 4.7763 | 2.6959 | 2.2211 | 2.2949 |

| MSCKF-groundtruth | 20.9995 | 75.3966 | 54.2210 | 35.0502 | 96.6908 | 77.0708 |

| IMU-groundtruth | 45.2532 | 75.0033 | 0.8808 | 25.8619 | 44.9099 | 0.2860 |
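
The two error measures reported per axis in the table above can be computed from an estimated trajectory and its ground truth as sketched below. This is a minimal illustration, not the evaluation code behind the table. It assumes that both trajectories are time-aligned lists of (x, y, z) positions in metres and that "mean square deviation" denotes the population standard deviation of the per-axis error. The function name is hypothetical.

```python
import math


def axis_error_stats(estimated, ground_truth):
    """Return {axis: (mean absolute error, standard deviation of error)}."""
    if not estimated or len(estimated) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and equally long")
    stats = {}
    for index, axis in enumerate("xyz"):
        # Signed error on this axis for every time step.
        errors = [e[index] - g[index] for e, g in zip(estimated, ground_truth)]
        count = len(errors)
        mean_abs = sum(abs(err) for err in errors) / count
        mean_err = sum(errors) / count
        variance = sum((err - mean_err) ** 2 for err in errors) / count
        stats[axis] = (mean_abs, math.sqrt(variance))
    return stats


if __name__ == "__main__":
    fused = [(1.0, 2.0, 3.0), (3.0, 2.0, 1.0)]
    truth = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]
    for axis, (mae, std) in axis_error_stats(fused, truth).items():
        print(f"{axis}: mean abs error = {mae:.3f} m, std = {std:.3f} m")
```

Reporting both values is useful because a large average error with a small deviation points to a systematic offset, whereas a large deviation points to unstable estimates.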

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, R.; Chen, L.; Huang, Z.; Zhang, W.; Wu, S. A Review on the High-Efficiency Detection and Precision Positioning Technology Application of Agricultural Robots. Processes 2024, 12, 1833. https://doi.org/10.3390/pr12091833
