Abstract

Batch processes in the biopharmaceutical and chemical manufacturing industries often develop new products to meet changing market demands. When the dynamic models of these new products are trained, dynamic modeling with limited data for each product can lead to inaccurate results. One solution is to extract useful knowledge from past historical production data that can be applied to the product of a new grade. In this way, the model can be built quickly without having to wait for additional modeling data. In this study, a subspace identification combined common feature learning scheme is proposed to quickly learn a model of a new grade. The proposed modified state-space model contains common and special parameter matrices. Past batch data can be used to train common parameter matrices. Then, the parameters can be directly transferred into a new SID model for a new grade of the product. The new SID model can be quickly well trained even though there is a limited batch of data. The effectiveness of the proposed algorithm is demonstrated in a numerical example and a case of an industrial penicillin process. In these cases, the proposed common feature extraction for the SID learning framework can achieve higher performance in the multi-input and multi-output batch process regression problem.

1. Introduction

The batch process is more flexible than the large-scale continuous production process as the former can better respond to market changes and customer needs. Contrary to the era of big data advocated for in recent years, in many fields, such as chemical process control [1,2] and military electronic product production [3], data are collected with diverse small batch production models employed to cope with rapid product changes [4]. Moreover, the difficulty in collecting data involves many key variables [5], resulting in the problem of small samples in the batch process. Such small samples are called the “low-N problem” [6]. It is difficult to obtain sufficient batches of production data in a certain manufacturing period because of the long operation time, complex operation steps, expensive costs for data collection, and insufficient manpower and material resources. It is not uncommon for the new chemical compounds to run only once or twice in the production equipment, resulting in a low-N scenario in the production of new products [7].

The problem of small samples has been studied in many fields. Some solutions have been used to enhance the robustness of the results obtained from the follow-up training by adding more information to the data or to assist the training of the target by transferring knowledge or data from other aspects [8]. Tulsyan et al. [7] considered using the data sampled repeatedly by hardware to train a GP-based generator and generate a large number of data; then, the authors used the generated data for further process modeling. This method of requiring hardware to repeat sampling may not be implemented in every process; in particular, the measurement data of some key variables are difficult to obtain many times. In addition, the quality of the samples generated by the generator providing auxiliary information is not as good as expected. Zhang et al. [9] combined the physical model and data-driven model, and then a hybrid model framework was proposed to model the process with fewer data in some operating conditions and applied to the dynamic model prediction of algae lutein synthesis. This method is not for practical applications as it is often difficult to obtain the mathematical model in most of the complicated batch processes.

In the industrial batch process, there may be a number of data for other similar products produced in the past. As the current producing product and the past similar product data are measured in the same process, they must share some similar or even the same features. It is reasonable to extract the information from data in these different grades of products that are highly relevant to the current (or target) produced product. Then, the target process model with the transferred data information can be enhanced. Jaeckle et al. [10] proposed extended principal component regression (EPCR), which combines the output data of similar old processes with the output data of the new process for calculation. However, EPCR does not consider the input information of the process, which is unfavorable for a regression problem. Muñoz et al. [11] extended EPCR, considered the input information, and proposed Joint-Y PLS (JY-PLS). In JY-PLS, the variables of the output data are supposed to have the same statistical distributions. Recently, the method based on JY-PLS has been applied to process quality prediction and soft sensing [12,13]. Chu et al. [14] considered the shortage of new data in the initial operation stage of the batch and used the latent variable process migration model (LV-PTM) to transfer similar process data to the new batch of the initial stage. These transfer-based methods require sufficient source data from the same source, which may not be applicable to all kinds of small data scenarios. The above methods based on transfer learning allow the target to transfer knowledge from the data of a single source. However, the data from a single source often does not have enough information as a reliable source of knowledge. The data collected are often diverse, and the number of individual data is small. Therefore, it is necessary to maximize and efficiently use all source data.

Yamaguchi et al. [15] used multi-task learning (MTL) to address the data scarcity of each product in the multi-level batch process. By sharing useful information among multiple related grade products, MTL can improve the accuracy of the model in the case of data shortage. Although MTL makes use of the data of all small sample products for mutual assistance and complementarity, it does not focus on the modeling and analysis of new products. It also does not provide the common information of all collected data. To obtain knowledge transferred from the multiple tasks for the learning of new tasks, Tripuraneni et al. [16] proposed a method to learn common feature representation from multiple tasks, so the data of the new task can be projected into this representation, which can reduce the number of samples required to find the best regressor on the new task. Combining data from multiple products with small samples can help compensate for the limitations of using the data from a single source. However, in the research conducted by Tripuraneni et al. [16], only the common feature space of the input was extracted without considering the dynamic variables. It is impossible to extract shared knowledge of industrial processes in general dynamic MIMO systems.

In this study, a method for batch process modeling with the low-N problem is proposed. The method extracts common features from multi-grade batch process data and incorporates these features into the subspace identification for the new batch process modeling. The problem and novelties considered in this study are summarized as follows:

- The proposed method considers the characteristics of batch processes, including their multi-input and multi-output structures, their dynamic behavior, and the issue of the uneven length of collected data. The dynamic behavior of the batch process is described using a linear time-invariant state-space (LTI-SS) model, and the original data are used for modeling without the need for data warping.

- To extract common parts from the historical data, we propose a modified version of the state-space model, which further divides the original model parameters into common and individual parts.

- When using the proposed model, the input-output equation derived from the proposed LTI-SS model has two coupled common features. We introduce the technique of oblique projection to separate the two parameters so that it can avoid computationally expensive iterative solutions and problems that may not converge.

- Based on the two separate sets of equations, we derive and organize their final solutions, which can be obtained by calculating the eigenvalue decomposition.

- Based on the modified state-space model, we combine it with the subspace identification method, and through the substitution of common knowledge, we can effectively improve the modeling performance in the case of a few samples.

The rest of this article is organized as follows. In Section 2, the input-output equation and the problem to be solved are specifically defined. Section 3 introduces the oblique projection to separate the input-output equation into z-space and u-space, respectively. In Section 4, the common feature parameter matrices in the model are estimated by solving the objective function defined in Section 3. In Section 5, the calculated common feature parameter matrices are applied to model the batch data of a new task. Then, the model of the new task can be quickly identified even though there is a small number of batch data in the new task. In Section 6, two examples, including a numerical case and a case of industrial penicillin production, show the performance of the proposed method. In Section 7, the conclusion is given. Finally, to enable readers to quickly and clearly understand the meaning of each symbol, a table is included in Nomenclature; it allows readers to easily compare and refer to it while reading.

2. Problem Formulation and Description

The chemical batch process often exhibits nonlinear and dynamic behaviors. Measurements of process variables are expected to be strongly serially correlated. There are additional characteristics of the long duration and operation in different operating conditions at different phases. To ensure the process is stirred evenly and the reaction is complete, the process is deliberately operated in a fixed operating condition for a while at each operation phase. Thus, the local behavior at each operation phase tends to be linear. To produce different grades of products, the batch process is operated in different operating conditions at different phases, but there are similar behaviors in the physical or chemical properties in each production process [17]. Thus, very limited batch data are often available for each grade of the operating batch, particularly in the new grade produced at the moment of the initial operation period. Only using the limited data of the new batch is certainly insufficient for establishing reliable models, while the model trained by directly extracting knowledge from all grades of batch data has a bias in favor of the graded products with more batch data.

To obtain common knowledge from different grade sources, consider different types of batch production processes, which are diverse but similar. The dataset of the task (the production grade) is:

where ; is the number of batches in the production grade , and are the input data and the output data at the th sampling time of the th batch data in the production grade , respectively, ; , , and is the operation time of the th batch in the production grade .

Similarly, for the modeling of new tasks, new batches in the production grade are

To properly describe the dynamic characteristics of the operating batch process, the state-space model is appropriate for multi-input and multi-output dynamic processes. Thus, for the production grade of the operating batch process, a linear time-invariant state-space model for each phase can be written by:

where and are -dimensional system output and -dimensional system states of the th batch in the production grade at the sampling time , respectively. is the -dimensional system input of batch in the production at the time point . It is assumed to have Gaussian distribution. is an -dimensional additional noise of batch in the production grade at the time point . It is independent of and follows the Gaussian distribution. , , and are the parameters of batch production grade . Some common features ( and ) in the parameters and are shared in all different grades, expressed as:

where and have orthogonal column vectors and orthogonal row vectors, respectively, and and are the remaining parts for each grade. With the common features ( and ), Equation (3) can be rewritten as

Equation (5) is then expressed in the form of the Hankel matrices.

where all of the Hankel matrices have a dynamic window size of , and the number of moving windows is :

As the state term of the system is unknown, the past input and output data are used to approximate it:

where

Equation (6) can be further rewritten as:

Now (Equation (11)) and (Equation (12)) are substituted into Equation (14), expressed as:

To obtain a more compact expression, some notations shown as follows are defined.

Thus, Equation (15) becomes:

Then, each column in Equation (18) can be written as:

where and are unknown parameter matrices to be estimated. In this work, an oblique projection, which is used to solve and , is discussed in the next subsection.

3. Model Decomposition into the z-Space and the u-Space Using Oblique Projection

Since and in Equation (18) are coupled together, to avoid solving these two parameters with the iterative way, the technique of oblique projection [18] is applied here to separate Equation (18) into two subspaces which lie in and , respectively. The oblique projection represents the projection onto along the direction parallel to the input vector .

where is the projection operator for computing orthogonal complementary spaces of . With Equations (19) and (20), it is projected along the input matrix onto , expressed as:

According to the assumption of data and . Define the notation ; then, the above equation can be further expressed as:

By combining all of the batch data ; ; , one wants to search for the parameter matrices, and . Assume that has the orthogonal structure, and then minimize the sum of squared error defined by:

where is the Frobenius norm, and .

and

Equation (23) is called the z-space model.

Similarly, using the oblique projection method, Equation (19) is projected onto along with the matrix of the past inputs and the outputs .

where is the projection operator for computing orthogonal complementary spaces of . With Equations (19) and (26), it is projected along onto the input matrix , and both sides multiplied by can expressed as:

where , and . Define notation ; then, the above equation can be further expressed as:

Similarly, all batch data ; ; are combined. One wants to search for the parameter matrices, and . First, assume that has the orthogonal structure; then, minimize the sum of squared errors defined by:

where

and

Equation (29) is called the u-space model. Thus, the common parameter matrices ( and ) and the individual parameter matrices ( and ) of the model defined in Equation (19) can be solved by separately minimizing the two objective functions.

4. Common Feature Parameter Matrices ( and ) Estimation

4.1. and Estimation in the z-Space

To solve Equation (23), first consider the loss function for each sub-task parameter, expressed as:

Taking Equation (32) derivative with respect to (w.r.t.) each ; is:

Then, the solution to ; is directly given.

With the expression of the optimal model parameter , Equation (34) is substituted into the loss function (Equation (32)); then, the optimization problem for the common feature parameter becomes:

According to the definition of the Frobenius norm, Equation (35) can be expanded as:

Considering the part related to , the objective function (36) can be transformed to:

The gradient of the objective function at the optimum must be zero. Then, Equation (36) can be written as:

where is Lagrange multiplier, and

Compute the eigen-decomposition of , and let consist of the eigenvectors of that have the largest eigenvalues. Once is obtained, can be directly calculated in Equation (34).

4.2. and Estimation in the u-Space

Similar to the procedure for solving Equation (29), consider the loss function for each sub-task parameter:

where . By taking Equation (40) derivative w.r.t. each and setting it equal to zero, one can obtain:

Then, the solution to ; is directly given.

With the expression of the optimal model parameter matrix , Equation (42) is substituted into the objective function (Equation (29)); then, the optimization problem for the common feature parameter becomes:

With the definition of the Frobenius norm, the loss function in Equation (43) can be expanded as:

Considering the part related to , the objective function (43) can be transformed to:

Solving Equation (44) is a nonlinear optimization problem. To reduce the complexity of nonlinear calculation, is calculated row by row. First, take the first-row vector of in Equation (45) and make it a derivative w.r.t. , which is expressed by:

where , ; . Let , and . Equation (46) can be compactly expressed as

where

Equation (47) is a nonlinear eigenvalue problem associated with the extracted eigenvector. For this problem, an iterative algorithm can be used [19]. In the first iteration, with the guessed , the left-hand side of Equation (47) can be computed and an approximation to the eigenvector associated with the smallest eigenvalue of can be estimated. Then, the left-hand side of Equation (47) with the new computed is updated, and the updated eigenvector associated with the smallest eigenvalue of can be estimated. The above same procedure is repeated until has no significant change. Once the optimal result is found, the deflation technique is used to remove the support and the query sets related to the direction :

Then, the second parameter vector of is solved based on Equation (47). The above procedure is repeated until the desired number of vectors is obtained. The whole iterative procedure originated is applied to solving Equation (47) as shown in Algorithm 1.

| Algorithm 1: Iterative procedure for the of the parameter estimation. |

| Input: , for and |

Process:

|

| Output: |

For clarity, the entire procedure for estimating the parameter matrices () of the model is summarized as shown in Algorithm 2.

| Algorithm 2: Algorithm for the estimation of the common features and . |

| Input: The normalized set data, The dynamic step size The common feature parameter sizes and |

Process:

|

| Output: The common feature parameters and |

5. Refining Parameter Matrices Using Testing Sets

Subspace Identification with the Common Feature Parameter Matrices

Once the common feature parameter matrices and are estimated using the meta-training sets, the pre-trained model structure and the corresponding parameter matrices will be transferred to the new batch . Then, the new batch with limited batch data can be quickly learned. Substituting and into Equation (18), the input-output matrix equation of the th batch process is:

where the batch grade index is omitted as the new batch has only one grade.

The first three terms of the right-hand side of Equation (50) are combined

where , , , , and .

For the solution of Equation (53), the sub-blocks ( and ) of all batches horizontally concatenate as follows:

Then, the objective function is written as:

The solution to Equation (55) can be obtained using the least-squares method, and can be solved uniquely.

To further obtain the state-space model parameters, the estimated parameter is divided into the corresponding matrix:

According to Equations (6) and (18), . Decompose with SVD:

where is the dominant singular value. The observability matrix and the state sequence of the system can be directly determined from the above SVD:

According to the definition of , the parameter matrix () in the new batch production is:

According to the model in Equation (3), the estimated results and are substituted to obtain:

In addition, the parameter matrices and in Equations (61) and (62) can be obtained using the least-squares method.

6. Case Study

Two cases were used to verify the proposed MT-L SID: a numerical example and an industrial penicillin batch production process.

6.1. Numerical Example

Consider a batch system whose model generates input and output data according to Equation (5). Each stage of batch production is regarded as a task (). The input and output numbers are and , respectively. The order of the system state is . The input of the system () is random samples generated from the Gaussian distribution , and the noise of the system is . The parameter matrices and with and shared in all batches are randomly generated through the Gaussian distribution and orthogonalized. The parameters, , , and vary with different tasks (batch grades). They are randomly generated with the Gaussian distributions , , and , respectively. The initial state of each grade of the batch is . Assume that there are different batch grades in the training phase. The data generation of the new batch grade is still based on the same above assumptions of model parameter matrices.

To verify the proposed common feature extraction scheme for identifying the subspace model, four cases are demonstrated, including (1) a varying number of grades, (2) a varying number of data in each batch grade, (3) identifying the subspace model for a new grade, and (4) modeling performance with the different common feature parameters and . The LF-MoM method in [16] was adapted to calculate Equation (28), and the results were used to compare the proposed methods. The principal angle was used to evaluate the difference between the estimated common feature parameter and the actual one. The concept of principal angles between subspaces was first introduced by [20]. Then, Hotelling and Harold [21] defined the form of the canonical relationship of principal angles in the statistical theory. In this paper, the calculation method of the principal angle is based on singular value decomposition (SVD) [22,23].

When using the proposed method to extract common knowledge, the adjustable input parameters are listed in Algorithm 2, including the dynamic window size, , and the dimensions and of the two common parameters.

The selection of parameter is used to extract dynamic features of the process, which theoretically must be greater than or equal to the potential state order of the model. In the case of , the performance of the identification will not significantly improve and may even decrease. The larger is, the more parameters must be calculated, and a higher data volume is required. In the case of fewer samples, the modeling performance will decrease. On the other hand, in the case of , it cannot adequately reflect the dynamic characteristics of the process, and the resulting model cannot effectively describe the process behavior. Therefore, it is necessary to adjust appropriately when identifying batch processes with a few samples:

- (1)

- Varying numbers of grades

Assume that each grade has only two batch data for the common feature extraction stage. Take the number of samples in each training batch. The batch indicator is omitted in this case. The input and output Hankel matrices , , and are arranged by taking and () from the training data. Then, and are obtained by the oblique projection.

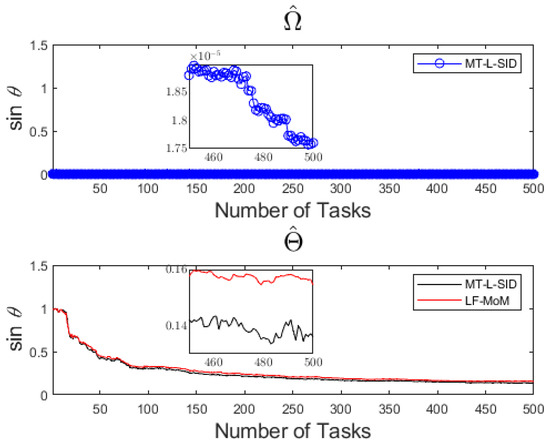

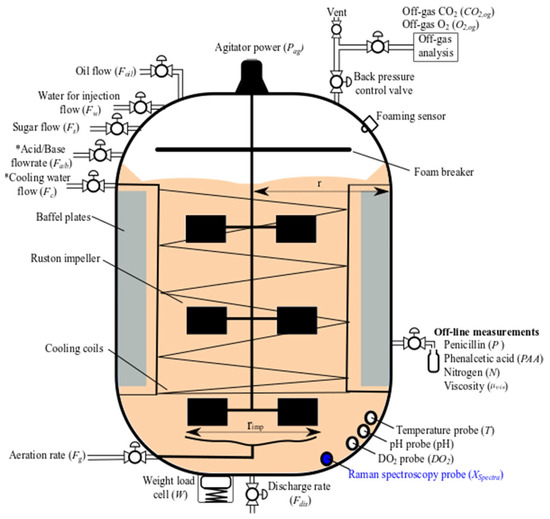

With different grade numbers, , the common feature parameter matrices ( and ) were estimated. The sine values of the principal angles between the estimated common feature parameter matrices and the actual common feature parameter matrices are shown in Figure 1. The x-axis in Figure 1 is the number of tasks used in the common feature extraction stage, and the y-axis is the sine value of the principal angle between the actual and the estimated common feature parameter matrices. The smaller is, the closer the estimated and the actual parameter matrices are. The common characteristic parameters of output variables were not discussed in [17], so only the MT-L SID test results are available. The results show that the accuracy of the parameter matrix can be achieved with fewer data. In the estimation of the parameter matrix , both the results of MT-L SID and LF-MoM are to be improved and need more training grades than the estimated parameter matrix , as the former is for the transmitter model, while the latter is for the emission model. More training grades are to be included for estimating a good parameter matrix . Furthermore, the results show that MT-L SID can achieve a slightly better performance than LF-MoM.

Figure 1.

Sine values of principal angles between estimated and actual , and between estimated and actual , respectively, with a varying number of tasks.

- (2)

- Varying numbers of data in each batch grade

In the second case, the number of grades was fixed at , but different numbers of training samples were applied. With the different samples in each batch, the corresponding dynamic windows of the Hankel matrix were changed from 31 to 500, but the window size remained at . The principal angles between the estimated common feature parameter matrices and the actual common feature parameter matrices are shown in Figure 2. The x-axis of Figure 2 represents the number of dynamic windows contained in each training batch, and the y-axis is the sine value of the principal angle between actual and estimated common feature parameter matrices. Similar to Case 1, the parameter matrix with fewer data samples still can provide a good model performance. At the same grade number, increasing the number of dynamic windows can improve the accuracy of the parameter matrix to a certain extent. In addition, estimated from MT-L SID showed better accuracy than LF-MoM.

Figure 2.

Sine value of the principal angle between estimated and actual , and between the estimated and actual , respectively, with varying numbers of dynamic windows.

- (3)

- System identification of a new grade

A new grade of the product was produced through a single batch. The system model of the new grade was then identified. The new grade in the testing stage had one batch for training and one for testing. The data of one new batch were arranged into the Hankel matrix with . With the common feature parameter matrices and obtained with 500 tasks and , the model parameter matrices and were identified by Equation (56); the corresponding parameter matrices (, , and ) of the state-space model can be calculated using Equations (57)–(60).

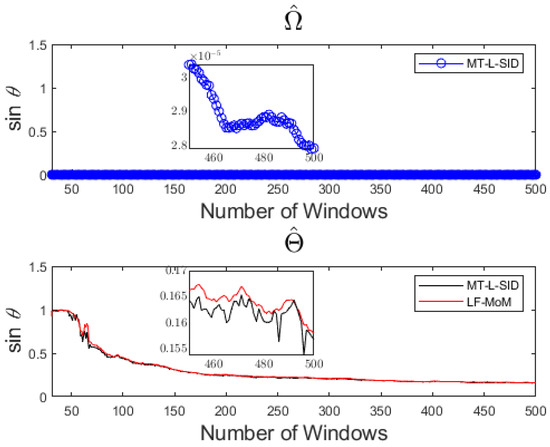

Three models, including the conventional subspace identification (SID), the SID combining the common feature parameters estimated from MT-L SID, and the SID from LF-MoM were, respectively, used for comparisons. Figure 3 shows the poles of the estimated batch parameter matrix with different numbers of samples for the three models. The black asterisk and the blue, cyan, and red circles represent the actual model, SID, MT-L SID, and SID with common feature parameters estimated from LF-MoM, respectively. In Figure 3a, when the data are insufficient (), the proposed MT-L SID outperformed other models. With the increasing number of training samples, all models were very close to the actual ones in Figure 3b with and in Figure 3c with . As for all the case studies, the proposed learning scheme can significantly reduce the training sample requirements in each grade compared to the other modeling schemes.

Figure 3.

Comparison of poles calculated by two different models and the actual model in different training samples: (a) , (b) , and (c) .

In the testing stage, the demand for training data can be reduced by including common feature parameter matrices when the target batch data are insufficient. If the target batch data collected in the test phase are already sufficient, then the model directly using only the target batch data without common feature parameters can achieve accurate estimated parameters or better. In this case, adding the common feature parameters for estimation becomes unnecessary. However, at the stage of modeling a new batch process, the proposed learning scheme plays an important role.

- Modeling performance with the different common feature parameters and

In the fourth case, quantity and batch grade data were considered to compare the support of selecting different common parameter structures in modeling new grades. Assume there are 100 grades of data, each with two batches of data, and each batch has an operating time of . In terms of parameter settings, take ; . Consider the case where and have different dimensions. Substitute the calculated common feature parameters into the modeling of new product grade data, and further calculate the MSE from the trained model parameters on the test data as an indicator to measure the transfer performance. The calculated results are shown in Table 1.

Table 1.

MSE calculation with different structures of common feature parameters.

From Table 1, it can be seen that the best modeling performance can be achieved when the dimensions of the common parameters are the same as the real dimensions. The dimensions and of the common parameters determine the amount of information transferred from historical data to new batch product modeling. If the selected dimension is greater than the potential actual dimension, then there is still information that can be transferred but not used. If it is smaller than the potential actual dimension, then it will compress the size of the structure describing individual parameters, so the description of the process in system identification is probably under-fitting. Therefore, similarly, the dimensions of common parameters also require several attempts to choose a more appropriate size.

6.2. Industrial Process for Penicillin Fermentation

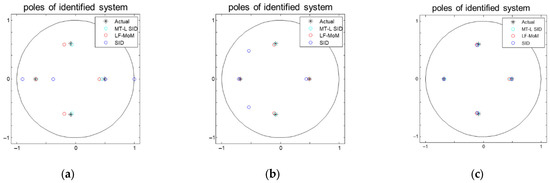

A simulation of industrial-scale penicillin (IndPen) production performed by the authors of [24] was used here to validate the proposed method. The simulation was developed using the historical batch records of a 100,000 L penicillin fermentation using a high-yielding industrial strain of Penicillium chrysogenum, and all the available process inputs and outputs were accurately simulated. At the same time, IndPen also integrated real Raman spectroscopy equipment. In addition to modeling all required online and offline variables, IndPen also considered the growth, morphology, metabolites, and degradation of Penicillium chrysogenum fermentation on a large scale. The process flow sheet from [24] is shown in Figure 4. Further details of the model can be found in [24,25].

Figure 4.

Flowchart of IndPen fermentation process. The * in the figure represents the variable controlled by the PID controller and the highlighted variable in blue indicates that the variable can be freely selected to be recorded and used to control PAA.

The input variables and online collected output variables of the fed-batch penicillin process are listed in Table 2. In the penicillin production process, the sampling time of the variable was 24 min. The variables were divided into two parts for control, namely automatic control variables and manual control variables. The temperature and pH were controlled by automatic control variables and regulated by a proportional integral differential (PID) feedback loop. In the manual control variables, the substrate flow rate and the phenylacetic acid flow rate were controlled by the recipe-driven method following the fixed curve of the whole batch or by the operator operating the fixed curve of the whole batch (depending on the operator). This control mode copied the control actions observed by the operator manually adjusting the whole batch of the substrate flow rate and the phenylacetic acid flow rate.

Table 2.

Input and output variables of the IndPen fermentation process.

In IndPen, the operation mode was adjusted as follows.

- Changing environment temperature.

- Control strategy (recipe-driven (i.e., SBC)).

- Option to include inhibitory effects on the growth rates during DO2, N, and PAA limitation, as well as during excessive PAA and CO2 concentrations and sub-optimal T and pH operation.

- Whether to use Raman spectroscopy to control PAA.

According to the selection of the above modes, 14 different modes were collected. The first 10 modes were operated in ambient temperature condition 1: substrate feed temperature 288 K, substrate feed cold water 288 K, air temperature 290 K, and inlet coolant temperature 285 K. The operation mode setting under these temperature conditions is shown in Table 3. The operation mode setting under temperature condition 2—substrate feed temperature 293 K, substrate supply cold water 293 K, air temperature 298 K, and inlet coolant temperature 288 K—is shown in Table 3.

Table 3.

Batch runs of product of each operation mode at ambient temperature condition 1.

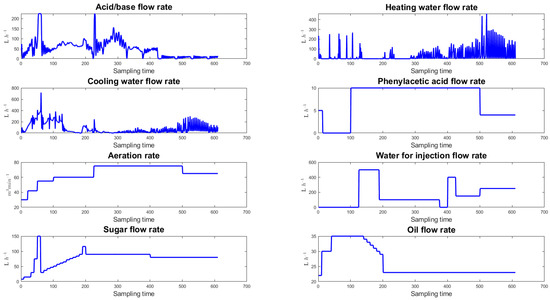

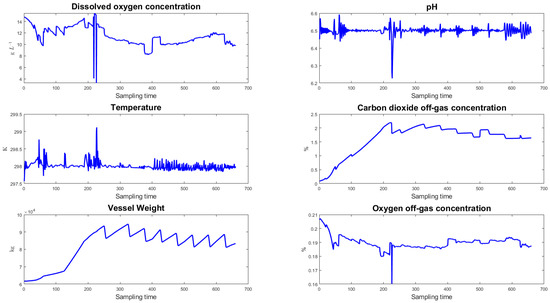

According to the variables in Table 2, the input and output profiles of mode 1 are shown in Figure 5 and Figure 6, respectively.

Figure 5.

The profile of input variables.

Figure 6.

The profile of output variables.

In the case of a small sample of batch data, operation mode 10 was selected for validation. The common knowledge was extracted from the data of other modes excluding the test. The proposed MT-L SID was compared with the combination of LF-MoM and SID and the conventional SID.

- Few-shot learning in mode 10 data

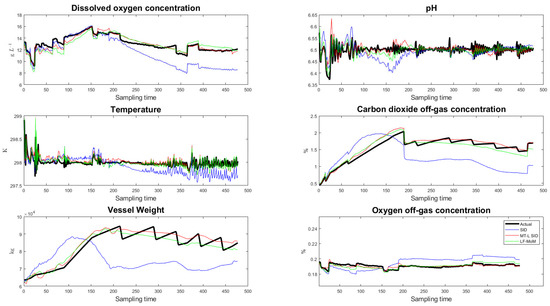

Considering the modeling of fed-batch penicillin process in operation mode 10, the common feature extraction involved using the batch data in other modes, and each of them was five batches. The dimensions of the common feature parameter matrices with were and . In the testing stage, nine batch data from mode 10 were used for training, and the rest of the batch was used for verification. The results are shown in Figure 7.

Figure 7.

Predicted outputs in operation mode 10.

In Figure 7, the black lines are the actual outputs, the blue lines are the predicted outputs without using common feature parameter matrices for modeling, the red lines are the predicted outputs of the MT-L SID, and the green lines are the predicted outputs of the model with LF-MoM. Although the outputs of the red lines do not completely follow the outputs well, the proposed learning model can better describe the process behaviors than the model established without using common feature parameter matrices and SID with LF-MoM. The MSE of these methods with five operation conditions (modes) are shown in Table 4. In Table 4, the process variables are scaled to the same size. The results show that in few-shot batch modeling, the performance of the proposed MT-L SID was helpful and better than LF-MoM and conventional SID.

Table 4.

MSEs of SID, MT-L SID, and LF-MoM models in the IndPen fermentation process case.

7. Conclusions

The data collected from the industrial batch process sometimes encounter the low-N problem. Such a small number of batch-run data will greatly degrade the performance of data-driven models. In this study, multitask-learning SID was proposed for modeling batch processes, which can transfer knowledge from multiple batch process data. The proposed method uses historical batch data with similar multiple batch production to train the state-space model and extract common feature parameter matrices. Then, during modeling, a batch with a new grade, as well as the input and output variables of the historical batch data, is projected into the common feature space to reduce the sample requirements for the training of new model parameters. Thus, the model of a new batch with a few batch data can be effectively and quickly constructed. In addition, the purpose of using oblique projection in our method is to avoid solving the common parameters of two objective functions simultaneously during the solution process. This technique can decouple the two parameters so that the solutions of the two parameters do not affect each other. According to the objective functions we have defined, the derivation result of solving the common parameters corresponds to solving the eigenvalue problem. The common parameters correspond to the maximum eigenvalue of the data matrix after the oblique projection transformation. The contributions of this study are summarized as follows:

- A modified linear time-invariant state-space model was proposed, which separates the common features and individual features corresponding to the input and input parameters so that it can be used to express the extraction of common features in the multi-input, multi-output dynamic system.

- The multi-task learning-based N4SID, called MT-L SID, was developed. The proposed SID model of the common feature parameters was learned from historical batch data. It can be effectively applied to the batch process with new grades. Then, the data requirement of a new batch process modeling can be reduced.

- With the oblique projection, MT-L SID can separate input-output equations to calculate the common feature parameter matrices. The calculations are straightforward without heavy iterations.

Finally, the proposed method was validated based on a numerical example and an industrial penicillin production process. In the generated numerical examples, increasing the number of batches (tasks) or the amount of data in individual batches improved the accuracy of common feature parameters. In the production of penicillin, the proposed method showed better prediction performance in the case of a few samples.

Author Contributions

Conceptualization, S.G., J.C. and L.X.; formal analysis, S.G. and J.C.; investigation, S.G., J.C. and L.X.; methodology, S.G. and J.C.; resources, S.G. and J.C.; software, S.G.; supervision, S.G., J.C. and L.X.; visualization, S.G.; writing—original draft, S.G. and J.C.; writing—review and editing, S.G. and J.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Ministry of Science and Technology, Taiwan (MOST 109-2221-E-033-060-MY3), and National Natural Science Foundation of P.R. China (62073286).

Data Availability Statement

Data are available upon request due to restrictions privacy. The data presented in this study are available upon request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

Nomenclature

| The data set of batch grade | |

| Number of batches in grade | |

| Dynamic window size | |

| System state of batch in grade at time | |

| System input of batch in grade at time | |

| System output of batch in grade at time | |

| Additional noise of batch in grade at time | |

| Parameter of grade | |

| Individual part of parameter | |

| Common part of parameter | |

| Common part of parameter | |

| Extended of parameter | |

| projection onto along the direction parallel to | |

| Total batch data of | |

| Total batch data of | |

| Number of grades | |

| Operation time of batch in grade | |

| Number of moving windows | |

| Number of system states | |

| Number of system inputs | |

| Number of system outputs | |

| Parameter of grade | |

| Parameter of grade | |

| Individual part of parameter | |

| Dimention of parameter | |

| Dimention of parameter | |

| Extended of parameter | |

| projection onto along the direction parallel to | |

| Total batch of | |

| Total batch of |

References

- Song, H.; Xu, R.; Wang, C. Research on statistical process control method for multi-variety and small batch production mode. In Proceedings of the 2020 Chinese Control And Decision Conference (CCDC), Hefei, China, 22–24 August 2020; pp. 2377–2381. [Google Scholar]

- Wang, J.; Zhang, T.; Wang, C.; Shi, X. Optimizing the Uncertainty of PPM on Small Batch of Quality Data. In Proceedings of the 2021 IEEE 6th International Conference on Smart Cloud (SmartCloud), Newark, NJ, USA, 6–8 November 2021; pp. 107–110. [Google Scholar]

- Cao, Y.; Feng, Z.; Jiang, Q. Automatic Data Acquisition Technology for SMT Manufacturing Based on Multi-Variety and Small-Batch. In Proceedings of the Seventh Asia International Symposium on Mechatronics; Springer: Berlin/Heidelberg, Germany, 2020; pp. 528–537. [Google Scholar] [CrossRef]

- Li, Q.; Wei, F.; Zhou, S. Early warning systems for multi-variety and small batch manufacturing based on active learning. J. Intell. Fuzzy Syst. 2017, 33, 2945–2952. [Google Scholar] [CrossRef]

- Cui, L.; Peng, Y.; Ding, L.; Lu, D. An improved batch fluidized drying experimental design based on digital sensors and a minicomputer. Eng. Rep. 2021, 3, e12366. [Google Scholar] [CrossRef]

- Tulsyan, A.; Garvin, C.; Ündey, C. Advances in industrial biopharmaceutical batch process monitoring: Machine-learning methods for small data problems. Biotechnol. Bioeng. 2018, 115, 1915–1924. [Google Scholar] [CrossRef] [PubMed]

- Tulsyan, A.; Garvin, C.; Undey, C. Industrial batch process monitoring with limited data. J. Process Control 2019, 77, 114–133. [Google Scholar] [CrossRef]

- Weiss, K.; Khoshgoftaar, T.M.; Wang, D. A survey of transfer learning. J. Big Data 2016, 3, 9. [Google Scholar] [CrossRef]

- Zhang, D.; Del Rio-Chanona, E.A.; Petsagkourakis, P.; Wagner, J. Hybrid physics-based and data-driven modeling for bioprocess online simulation and optimization. Biotechnol. Bioeng. 2019, 116, 2919–2930. [Google Scholar] [CrossRef] [PubMed]

- Jaeckle, C.M.; MacGregor, J.F. Product transfer between plants using historical process data. AIChE J. 2000, 46, 1989–1997. [Google Scholar] [CrossRef]

- Muñoz, S.G.; MacGregor, J.F.; Kourti, T. Product transfer between sites using Joint-Y PLS. Chemom. Intell. Lab. Syst. 2005, 79, 101–114. [Google Scholar] [CrossRef]

- Rudnitskaya, A.; Costa, A.M.S.; Delgadillo, I. Calibration update strategies for an array of potentiometric chemical sensors. Sens. Actuators B Chem. 2017, 238, 1181–1189. [Google Scholar] [CrossRef]

- Jia, R.; Zhang, S.; You, F. Transfer learning for end-product quality prediction of batch processes using domain-adaption joint-Y PLS. Comput. Chem. Eng. 2020, 140, 106943. [Google Scholar] [CrossRef]

- Chu, F.; Zhao, X.; Yao, Y.; Chen, T.; Wang, F. Transfer learning for batch process optimal control using LV-PTM and adaptive control strategy. J. Process Control 2019, 81, 197–208. [Google Scholar] [CrossRef]

- Yamaguchi, T.; Yamashita, Y. Quality prediction for multi-grade batch process using sparse flexible clustered multi-task learning. Comput. Chem. Eng. 2021, 150, 107320. [Google Scholar] [CrossRef]

- Tripuraneni, N.; Jin, C.; Jordan, M. Provable meta-learning of linear representations. In Proceedings of the International Conference on Machine Learning, Vienna, Austria, 18–24 July 2021; pp. 10434–10443. [Google Scholar]

- Lu, J.; Yao, K.; Gao, F. Process similarity and developing new process models through migration. AIChE J. 2009, 55, 2318–2328. [Google Scholar] [CrossRef]

- Behrens, R.T.; Scharf, L.L. Signal processing applications of oblique projection operators. IEEE Trans. Signal Process. 1994, 42, 1413–1424. [Google Scholar] [CrossRef]

- Li, Z.; Nie, F.; Chang, X.; Yang, Y. Beyond trace ratio: Weighted harmonic mean of trace ratios for multiclass discriminant analysis. IEEE Trans. Knowl. Data Eng. 2017, 29, 2100–2110. [Google Scholar] [CrossRef]

- Jordan, C. Essai sur la géométrie à n dimensions. Bull. Soc. Math. Fr. 1875, 3, 103–174. [Google Scholar] [CrossRef]

- Hotelling, H. Relations between two sets of variates. In Breakthroughs in Statistics; Springer: Berlin/Heidelberg, Germany, 1992; pp. 162–190. [Google Scholar] [CrossRef]

- Björck, Ȧ.; Golub, G.H. Numerical methods for computing angles between linear subspaces. Math. Comput. 1973, 27, 579–594. [Google Scholar] [CrossRef]

- Golub, G.H.; Zha, H. The canonical correlations of matrix pairs and their numerical computation. In Linear Algebra for Signal Processing; Springer: Berlin/Heidelberg, Germany, 1995; pp. 27–49. [Google Scholar] [CrossRef]

- Goldrick, S.; Ştefan, A.; Lovett, D.; Montague, G.; Lennox, B. The development of an industrial-scale fed-batch fermentation simulation. J. Biotechnol. 2015, 193, 70–82. [Google Scholar] [CrossRef] [PubMed]

- Goldrick, S.; Duran-Villalobos, C.A.; Jankauskas, K.; Lovett, D.; Farid, S.S.; Lennox, B. Modern day monitoring and control challenges outlined on an industrial-scale benchmark fermentation process. Comput. Chem. Eng. 2019, 130, 106471. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).