Abstract

Several metaheuristic algorithms have been developed to solve global optimization problems. Nevertheless, these approaches still need improvement to strike a suitable balance between exploration and exploitation. Consequently, this paper proposes AOACS, an improved arithmetic optimization algorithm (AOA) based on the cuckoo search (CS) algorithm, to solve engineering optimization problems. The developed approach uses cuckoo search operators to improve the exploitation ability of AOA, which enhances the convergence rate toward the optimum solution. The performance of AOACS is examined using 23 benchmark functions and the CEC-2019 functions to show its ability to solve different numerical optimization problems. AOACS is also evaluated on four engineering design problems: the welded beam, the three-bar truss, the stepped cantilever beam, and the speed reducer design. Finally, the results of the proposed approach are compared with state-of-the-art approaches. The results show that AOACS outperforms the other methods on the considered performance measures.

1. Introduction

Increasingly complicated optimization problems have arisen due to the rapid expansion of numerous application domains. Traditional optimization techniques take too much time and money to solve these new optimization challenges. Exact and rigorous answers are not required in most situations [1]. That is, because of the significantly reduced time and cost, approximate optimal solutions can be acceptable in practice. To address these non-convex, non-linear, constrained, and otherwise difficult optimization problems, numerous optimization algorithms have been introduced in recent years. These algorithms have proven to be quite successful in solving such real-world problems.

Optimization techniques are commonly used to address real-world problems. These challenging, nonlinear, and multimodal problems often require metaheuristic algorithms, which have proven to be reliable optimization techniques in such circumstances. Metaheuristic algorithms are popular because of their simplicity in design and implementation, their gradient-free nature, and their ability to bypass local optima.

Metaheuristic algorithms efficiently solve a wide range of real-world problems because they do not rely on gradient information. Various metaheuristic techniques based on natural processes, collaborative behavior, or scientific laws have been released recently.

Four general categories can be used to categorize metaheuristic algorithms, as shown in Figure 1:

Figure 1.

Metaheuristic algorithms.

- Human-based algorithms: Fireworks Algorithm (FW) [2], Child Drawing Development Optimization Algorithm (CDD) [3], Teaching-based learning algorithm (TBLA) [4], Socio Evolution & Learning Optimizer (SELO) [5], Genetic algorithm (GA) [6], and Harmony Search (HS) [7].

- Swarm-based algorithms: Particle Swarm Optimization (PSO) [8], Prairie Dog Optimization Algorithm (PDOA) [9], Grasshopper Optimization Algorithm (GOA) [10], Moth Flame Optimization (MFO) [11], Firefly Algorithm (FA) [12], Aquila Optimizer (AO) [13], and Ant Lion Optimizer [14].

- Evolutionary algorithms (EA): Backtracking Search Optimization Algorithm (BTSO) [15], Evolutionary Strategies algorithm (ES) [15], Differential evolution (DE) [16], Genetic Algorithm (GA) [6], and Tree Growth Algorithm (TGA) [17].

- Physics-based algorithms: Multi-verse Optimizer (MVO) [18], Black Hole Algorithm (BHA) [19], Space Gravitational Algorithm (SGA) [20], the arithmetic optimization algorithm (AOA) [21], and Henry Gas Solubility Optimization (HGSO) [22].

An overview of the given methods is presented in Table 1.

Table 1.

An overview of the presented methods.

Still, not all of the issues can be resolved by these techniques [23,24]. Heuristic algorithms are currently used to address optimization problems in many different disciplines, including optimal power flow problems and parameter optimization of photovoltaic models [25,26]. Therefore, in the face of complex difficulties, we must provide algorithms with more efficiency [27].

A population-based metaheuristic method called the arithmetic optimization algorithm (AOA) was recently proposed. The approach is based on the distribution behavior of the main arithmetic operators: addition, subtraction, multiplication, and division [21].

AOA has shown stable and robust performance in different fields, such as data clustering, power systems, power controllers, feature selection, and image processing. In [28], the authors propose an improved AOA based on flow direction for data clustering. The proposed algorithm is validated on different data clustering problems and outperforms the compared algorithms. Elkasem et al. present an approach that uses fuzzy logic and AOA to enhance the performance of power controllers, such as the proportional-integral-derivative (PID) controller; the reported results show the superiority of the PID based on fuzzy logic and AOA [29]. A binary version of AOA has been used to extract and select features from images to detect osteosarcoma, and AOA combined with different algorithms accurately classifies the images [30]. Ewees et al. accelerated AOA by hybridizing it with genetic algorithms to enhance its search method. The proposed algorithm is applied to the Cox proportional hazards method [31].

AOA is widely used for solving optimization problems because of its simplicity, robustness, and effectiveness [32]. However, AOA easily falls into local optima on some complex problems, and its exploration and exploitation capabilities are limited [33].

In the basic AOA, the control parameter C_Iter and the position vectors play the primary role in avoiding local optima and balancing exploitation and exploration. To integrate the advantages of AOA and CS, we present a hybrid approach in this study. AOACS is designed to improve AOA’s search procedure and to find solutions that are close to optimal. Specifically, a new formulation of C_Iter that uses the CS algorithm is introduced.

This paper proposes a novel optimization algorithm based on the cuckoo search algorithm for solving engineering design problems. The algorithm is specifically designed to optimize arithmetic expressions that arise in engineering design problems, such as mathematical models for physical systems, circuits, or mechanical systems. The cuckoo search algorithm is a nature-inspired optimization algorithm that is based on the behavior of cuckoo birds. The algorithm is known for its ability to efficiently search large solution spaces and find optimal or near-optimal solutions. The proposed algorithm in this work enhances the cuckoo search algorithm by introducing an accelerated arithmetic optimization technique that exploits the mathematical structure of the optimization problem. The main objective of the proposed algorithm is to minimize the objective function, which represents the cost or performance of the system being optimized. The algorithm iteratively searches the solution space using a set of cuckoo nests, each of which contains a potential solution. The nests are updated using a set of optimization operators, such as mutation and crossover, which are used to generate new potential solutions. The performance of the proposed algorithm is evaluated using several benchmark functions and compared with other state-of-the-art optimization algorithms. The results show that the proposed algorithm outperforms other algorithms in terms of solution quality and convergence speed. The proposed algorithm has potential applications in various fields, such as aerospace engineering, mechanical engineering, and electrical engineering, where optimization of complex mathematical models is necessary.

The following is a summary of this paper’s significant contributions:

- We propose a new hybrid algorithm called AOACS that combines the arithmetic optimization algorithm (AOA) with the cuckoo search (CS) approach.

- CS aids the suggested algorithm in increasing the diversity of the original population and its capacity to depart from the local optimum.

- Enhanced AOA exploration and exploitation to increase convergence accuracy.

- Twenty-three benchmark functions and the CEC-2019 functions are used to assess the ability of AOACS to solve several numerical optimization problems.

- The performance of AOACS is validated using four engineering optimization problems: the welded beam, the three-bar truss, the stepped cantilever beam, and the speed reducer design.

- The results indicate that AOACS outperforms the basic AOA, CS, and other metaheuristic approaches.

The remainder of the paper is organized as follows: Section 2 presents the AOA algorithm. Section 3 introduces the cuckoo search algorithm. Section 4 describes the proposed AOACS algorithm. Section 5 reports and discusses the benchmark results, Section 6 applies AOACS to real-world engineering problems, and the paper closes with concluding remarks.

2. Arithmetic Optimization Algorithm (AOA)

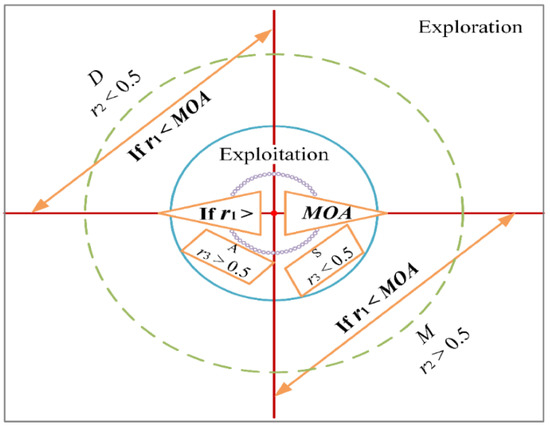

Using several equations and mathematical operators, Abualigah presented this approach in 2020 [21]. AOA mimics the four basic arithmetic operators (i.e., Subtraction (S), Addition (A), Multiplication (M), and Division (D)), which are used to update the positions and search for the global optimal solution. The Multiplication and Division operators are employed for the exploration search, whereas the Addition and Subtraction operators are used for the exploitation search. Figure 2 shows the AOA optimization technique.

Figure 2.

The AOA optimization technique.

The AOA method begins with a population of random solutions, just like other metaheuristics. The objective value of each solution is computed at each iteration. Before the positions of the solutions are updated, two control parameters named MOA and MOP are computed as follows:

where MOA(t) denotes the value of the function at the tth iteration, T is the maximum number of iterations, and t is the current iteration. Min and Max are the minimum and maximum values of the accelerated function, respectively.

where the math optimizer probability (MOP) is a coefficient, MOP(t) is its value at the tth iteration, T is the maximum number of iterations, t is the current iteration, and α is a control parameter. Here, r1 is a randomly generated number, and MOP is a scaling parameter that encourages further exploration.

After MOA and MOP are computed, the random number r1 is used to switch between exploitation and exploration. The following formula is employed for exploration:

Here, t is the current iteration, µ is a control parameter, ϵ is a small number that avoids division by zero, and r3 is a random value in the range [0, 1]. The following formula is used for exploitation:

where xi,j(t) denotes the jth position of the ith solution at the current iteration, best(xj) is the jth position of the best solution obtained so far, and xi(t + 1) denotes the ith solution in the next iteration. UBj and LBj denote the upper and lower bound values of the jth position, respectively. Algorithm 1 shows the pseudocode for the AOA algorithm.
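For reference, the four rules described above can be written out as follows. This is a sketch that assumes the standard AOA formulation of [21]; the labels r_2 and r_3 for the random numbers that choose between the two operators of each phase, and the symbols UB_j and LB_j for the bounds, follow that formulation and may differ from the notation of the typeset equations.

```latex
\begin{align}
MOA(t) &= Min + t \times \frac{Max - Min}{T} \tag{1}\\[4pt]
MOP(t) &= 1 - \frac{t^{1/\alpha}}{T^{1/\alpha}} \tag{2}\\[4pt]
x_{i,j}(t+1) &=
\begin{cases}
best(x_j) \div (MOP + \epsilon) \times \big((UB_j - LB_j) \times \mu + LB_j\big), & r_2 < 0.5\\
best(x_j) \times MOP \times \big((UB_j - LB_j) \times \mu + LB_j\big), & \text{otherwise}
\end{cases} \tag{3}\\[4pt]
x_{i,j}(t+1) &=
\begin{cases}
best(x_j) - MOP \times \big((UB_j - LB_j) \times \mu + LB_j\big), & r_3 < 0.5\\
best(x_j) + MOP \times \big((UB_j - LB_j) \times \mu + LB_j\big), & \text{otherwise}
\end{cases} \tag{4}
\end{align}
```

MOA grows linearly from Min to Max, so exploitation becomes more likely as the iterations proceed, while MOP decays toward zero and shrinks the step taken around the best solution.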

| Algorithm 1 Pseudo-code of the AOA algorithm. |
|---|
| Initialize the population size N and the maximum number of iterations T |
| Initialize the position of each search agent Xi (i = 1, 2, …, N) |
| While t < T |
| Check whether any position goes beyond the search space boundary and adjust it |
| Evaluate the fitness values of all search agents |
| Set Xbest as the position of the current best solution |
| Calculate the MOA value using Equation (1) |
| Calculate the MOP value using Equation (2) |
| For i = 1 to N |
| If r1 > MOA then |
| Update the search agent’s position using Equation (3) |
| Else |
| Update the search agent’s position using Equation (4) |
| End If |
| End For |
| t = t + 1 |
| End While |
| Return Xbest |
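The loop of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation (the experiments in this paper were run in MATLAB). The function name `aoa_minimize`, the default values Min = 0.2, Max = 1, α = 5, and µ = 0.499, and the clamping of out-of-range positions are assumptions taken from common AOA practice.

```python
import random


def aoa_minimize(objective, lower, upper, n_agents=30, max_iter=500,
                 moa_min=0.2, moa_max=1.0, alpha=5.0, mu=0.499,
                 eps=1e-12, seed=0):
    """Minimize `objective` inside the box [lower, upper] with a basic AOA loop."""
    rng = random.Random(seed)
    dim = len(lower)
    # Random initial positions; the best one seeds the search.
    population = [[rng.uniform(lower[j], upper[j]) for j in range(dim)]
                  for _ in range(n_agents)]
    best = list(min(population, key=objective))
    best_fit = objective(best)

    for t in range(1, max_iter + 1):
        moa = moa_min + t * (moa_max - moa_min) / max_iter            # Eq. (1)
        mop = 1.0 - t ** (1.0 / alpha) / max_iter ** (1.0 / alpha)    # Eq. (2)
        for i in range(n_agents):
            candidate = []
            for j in range(dim):
                scale = (upper[j] - lower[j]) * mu + lower[j]
                if rng.random() > moa:
                    # Exploration, Eq. (3): division or multiplication.
                    if rng.random() < 0.5:
                        x = best[j] / (mop + eps) * scale
                    else:
                        x = best[j] * mop * scale
                else:
                    # Exploitation, Eq. (4): subtraction or addition.
                    if rng.random() < 0.5:
                        x = best[j] - mop * scale
                    else:
                        x = best[j] + mop * scale
                # Pull positions that leave the search space back to the bounds.
                candidate.append(min(max(x, lower[j]), upper[j]))
            population[i] = candidate
        # Evaluate all agents and keep the best solution found so far.
        for agent in population:
            fit = objective(agent)
            if fit < best_fit:
                best, best_fit = list(agent), fit
    return best, best_fit
```

A call such as `aoa_minimize(lambda x: sum(v * v for v in x), [-10.0] * 5, [10.0] * 5)` returns the best position found and its objective value. Note that every new position is built from the current best solution only, which is one reason the basic algorithm can stagnate in local optima.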

3. Cuckoo Search Algorithm

To describe the cuckoo search algorithm as simply as possible, we first idealize the main elements of the cuckoo-host strategy as a population of n cuckoos with n nests. In real cuckoo-host systems, host bird nests usually contain three to four eggs or more, and a cuckoo can attack many nests by laying its eggs in them. Here, each cuckoo is assumed to lay one egg at a time in a single host nest, so the numbers of eggs, nests, and cuckoos are the same. Thus, the solution vector x of an optimization problem can be thought of as the location of an egg, and there is no longer a need to distinguish between eggs, cuckoos, and nests. We essentially have the equivalence “egg = cuckoo = nest” as a result [34]. Algorithm 2 shows the pseudocode for the CS algorithm.

The initial population of the CS algorithm is generated by the Lévy flight mechanism. In the 1930s, the French mathematician Paul Pierre Lévy proposed the Lévy flight, a random walk whose step lengths follow a stable heavy-tailed distribution, so that large jumps occur with a comparatively high probability. The probability density distribution of the Lévy flight is characterized by a sharp peak, asymmetry, and heavy tails. The walk alternates between frequent short-distance jumps and sporadic long-distance jumps, which widens the search region of the population and helps it jump out of local optima. In nature, numerous insects and animals, including flies and reindeer, move in a manner resembling Lévy flights.
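A Lévy-distributed step can be drawn in several ways. The sketch below uses Mantegna's algorithm, which is a common choice in cuckoo search implementations but is an assumption here, since the paper does not state how the steps are generated. The function name `levy_step` and the exponent `beta = 1.5` are likewise illustrative.

```python
import math
import random


def levy_step(dim, beta=1.5, rng=random):
    """Draw one heavy-tailed step vector using Mantegna's algorithm."""
    # Scale of the numerator Gaussian that yields a Levy-stable ratio.
    sigma_u = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
               / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))
               ) ** (1 / beta)
    step = []
    for _ in range(dim):
        u = rng.gauss(0.0, sigma_u)
        v = rng.gauss(0.0, 1.0) or 1e-12  # guard against an exact zero
        step.append(u / abs(v) ** (1 / beta))
    return step
```

Most components drawn this way are small, but occasionally a very large one appears, which is the mix of short and long jumps described above.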

| Algorithm 2 Pseudo-code of the cuckoo search algorithm. |
|---|
| Objective function f |
| Generation t = 1 |
| Initialize a population of n host nests |
| While (stop criterion is not met) |
| Get a cuckoo (i) randomly by Lévy flight |
| Evaluate its fitness value Fi |
| Choose a nest among n (such as c) randomly |
| If (Fi is better than Fc) |
| Replace c by the new solution |
| End If |
| Abandon a fraction (pa) of the worst nests and build new ones |
| Keep the optimal solutions |
| Rank the solutions and find the current best |
| Update the generation number t = t + 1 |
| End While |
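Algorithm 2 can be sketched in Python as follows. This is a simplified illustration under several assumptions: one cuckoo is generated per generation, Lévy steps are drawn with Mantegna's algorithm, abandoned nests are rebuilt uniformly at random, and the names `cuckoo_search`, `pa`, and `step_scale` with their default values are hypothetical.

```python
import math
import random


def cuckoo_search(objective, lower, upper, n_nests=25, max_gen=200,
                  pa=0.25, step_scale=0.01, beta=1.5, seed=0):
    """Minimize `objective` with the basic cuckoo search loop of Algorithm 2."""
    rng = random.Random(seed)
    dim = len(lower)
    sigma_u = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
               / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))
               ) ** (1 / beta)

    def levy():
        # One Levy-distributed step length (Mantegna's algorithm).
        v = rng.gauss(0.0, 1.0) or 1e-12
        return rng.gauss(0.0, sigma_u) / abs(v) ** (1 / beta)

    def random_nest():
        return [rng.uniform(lower[j], upper[j]) for j in range(dim)]

    nests = [random_nest() for _ in range(n_nests)]
    fits = [objective(n) for n in nests]
    for _ in range(max_gen):
        # Get a cuckoo by a Levy flight from a randomly chosen nest.
        i = rng.randrange(n_nests)
        cuckoo = [min(max(nests[i][j] + step_scale * (upper[j] - lower[j]) * levy(),
                          lower[j]), upper[j]) for j in range(dim)]
        f_new = objective(cuckoo)
        # Compare with a random nest c and replace it if the cuckoo is better.
        c = rng.randrange(n_nests)
        if f_new < fits[c]:
            nests[c], fits[c] = cuckoo, f_new
        # Abandon a fraction pa of the worst nests and build new ones.
        worst_first = sorted(range(n_nests), key=lambda k: fits[k], reverse=True)
        for k in worst_first[:int(pa * n_nests)]:
            nests[k] = random_nest()
            fits[k] = objective(nests[k])
    k_best = min(range(n_nests), key=lambda k: fits[k])
    return nests[k_best], fits[k_best]
```

Because only the worst nests are abandoned and a nest is replaced only by a better solution, the best fitness in the population never gets worse from one generation to the next.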

4. Hybridization of AOA with CS Algorithm

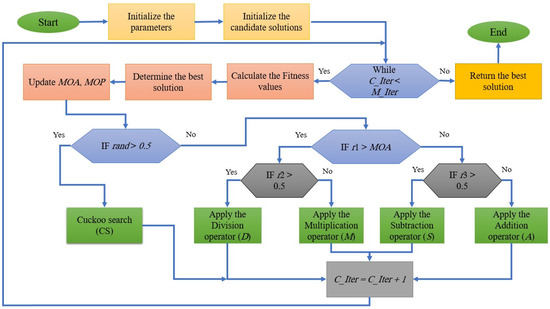

Elements in AOA swarms search more randomly than those in CS swarms, which favors exploration. During the exploitation operation, however, elements in AOA swarms perform worse than those in CS swarms. Thus, although both algorithms perform well on many optimization problems, the exploitation ability of AOA alone is insufficient, while CS is less capable than AOA in exploration. It is therefore preferable to couple the exploitation process of the elements in CS swarms with the exploration process of the elements in AOA swarms. Figure 3 illustrates the proposed AOACS algorithm.

Figure 3.

The proposed AOACS algorithm.
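The coupling idea can be illustrated with the sketch below. It is only one possible reading of the description above and not the authors' exact update rule, which is given in Figure 3. The sketch keeps the AOA exploration operators and replaces the AOA exploitation step with a CS-style Lévy flight around the best solution. The function name `aoacs_candidate`, the parameter `step_scale`, and all default values are hypothetical.

```python
import math
import random


def aoacs_candidate(best, lower, upper, moa, mop, rng,
                    mu=0.499, eps=1e-12, beta=1.5, step_scale=0.01):
    """Build one candidate: AOA exploration or CS-style Levy exploitation."""
    sigma_u = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
               / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))
               ) ** (1 / beta)
    candidate = []
    for j in range(len(best)):
        span = upper[j] - lower[j]
        if rng.random() > moa:
            # Exploration with the AOA division/multiplication operators.
            scale = span * mu + lower[j]
            if rng.random() < 0.5:
                x = best[j] / (mop + eps) * scale
            else:
                x = best[j] * mop * scale
        else:
            # Exploitation with a Levy flight around the best solution (CS).
            v = rng.gauss(0.0, 1.0) or 1e-12
            levy = rng.gauss(0.0, sigma_u) / abs(v) ** (1 / beta)
            x = best[j] + step_scale * span * levy
        candidate.append(min(max(x, lower[j]), upper[j]))
    return candidate
```

In a full optimizer, this function would be called once per agent in each iteration, with `moa` and `mop` computed from the current iteration as in the basic AOA.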

5. Results and Discussion

5.1. Benchmark Functions Description

Table 2 lists the 23 mathematical functions used, their categories, and their mathematical formulations. The unimodal benchmark functions in Table 2 have only one optimal solution, making them a good choice for testing the exploitation ability of the proposed optimizer. Multimodal functions contain several peaks, that is, multiple local optima and only one global optimum, which makes them the best option for assessing the exploration of the optimization process. Further, balancing the exploration and exploitation of any algorithm is a challenging task; thus, the fixed-dimension multimodal functions are used to demonstrate the performance of the proposed algorithm. The minimum value of each function (fmin), the search space limits, and the considered dimensions are given in Table 2.

Table 2.

Benchmark functions.
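To make the unimodal and multimodal categories concrete, two typical members of such suites are sketched below. It is an assumption that Table 2 follows the commonly used 23-function suite, in which F1 is the sphere function and F9 is the Rastrigin function.

```python
import math


def sphere(x):
    """Unimodal: a single optimum at the origin with value 0."""
    return sum(v * v for v in x)


def rastrigin(x):
    """Multimodal: many local optima, global optimum at the origin with value 0."""
    return sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) + 10.0 for v in x)
```

On the sphere function, any descent direction leads to the optimum, so it mainly tests exploitation. The cosine term of the Rastrigin function adds a local minimum near every integer point, so an optimizer must explore to avoid being trapped.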

The performance of the proposed AOACS algorithm is assessed in two steps. First, a challenging set of mathematical benchmark functions is solved. Second, four real-world engineering problems are solved using the proposed method. The results are compared with those of different well-known algorithms, namely the original AOA, the Whale optimization algorithm (WOA), Harris hawks optimization (HHO), the Salp swarm algorithm (SSA), particle swarm optimization (PSO), and the Slime mould algorithm (SMA). The selected methods are recent and closely related to this domain. The worst, best, average, and standard deviation of the fitness values are the four metrics employed in the comparisons. Furthermore, the Wilcoxon rank-sum test is used to show the statistical differences between AOACS and the rest of the algorithms. Table 3 provides the values of the essential parameters of the algorithms used.

Table 3.

Parameter values of the proposed AOACS algorithm and other algorithms.
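The four comparison metrics and the rank-sum test can be computed as in the following sketch. It assumes a minimization setting (so the worst value is the maximum) and uses the normal approximation of the Wilcoxon rank-sum test without a tie correction of the variance. The function names `summarize` and `rank_sum_test` are illustrative, and the paper does not state which implementation was used.

```python
import math
import statistics


def summarize(values):
    """Worst, best, average, and standard deviation of final fitness values."""
    return {"worst": max(values), "best": min(values),
            "average": statistics.mean(values), "std": statistics.stdev(values)}


def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank-sum test (normal approximation)."""
    pooled = sorted((v, g) for g, sample in enumerate((a, b)) for v in sample)
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        # Tied values share the average of the ranks they occupy.
        k = i
        while k + 1 < len(pooled) and pooled[k + 1][0] == pooled[i][0]:
            k += 1
        for m in range(i, k + 1):
            ranks[m] = (i + k) / 2 + 1
        i = k + 1
    w = sum(r for r, (_, g) in zip(ranks, pooled) if g == 0)
    n1, n2 = len(a), len(b)
    mean = n1 * (n1 + n2 + 1) / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p
```

With the final fitness values of two algorithms over 30 runs as `a` and `b`, a returned p-value below 0.05 corresponds to h = 1, that is, a significant difference between the two optimizers.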

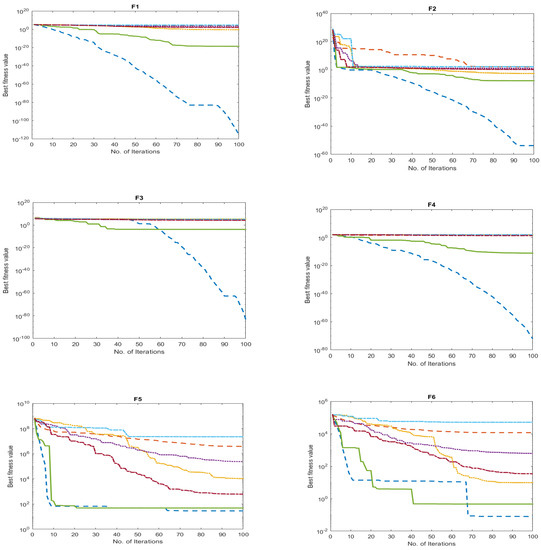

5.2. The Global Optimization Results

The suggested AOACS has been evaluated using 23 widely used mathematical functions. With the help of several statistical analyses, its results have been compared with those of many contemporary state-of-the-art methods to show how well it handles global optimization problems. The original AOA [21], the Whale optimization algorithm (WOA) [35], Harris hawks optimization (HHO) [36], the Salp swarm algorithm (SSA) [37,38], particle swarm optimization (PSO), and the Slime mould algorithm (SMA) [39] are the algorithms that were taken into consideration.

The algorithms were applied with the same settings to ensure the fairness of the experimental results: the population size was set to 30, and the maximum number of iterations was 500, over 30 independent runs. The study and simulations were conducted using Windows 10 and an Intel Core i7 processor running at 2.3 GHz with 16 GB of RAM. For a fair comparison, all competitors were run on the MATLAB 2018 platform.

5.2.1. Achieved Qualitative Results

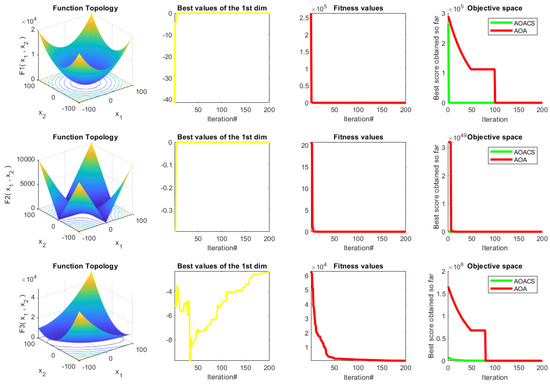

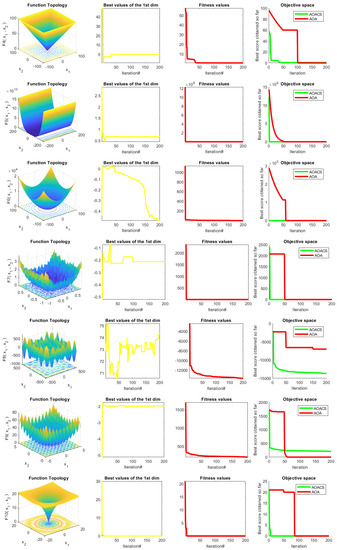

The behavior of AOACS in terms of trajectories and convergence is illustrated in Figure 4 to confirm the effectiveness of the proposed algorithm. The first column shows the functions plotted in 2D. The second column shows the trajectory of the solution, followed by two columns showing the average fitness and the convergence curve, respectively.

Figure 4.

The achieved results of the tested qualitative analysis for 23 benchmark functions.

The second column (trajectory behavior) shows the magnitude and frequency of the solution’s changes in the initial iterations. These changes almost completely disappear in the later iterations. This shows that AOACS has strong exploration capabilities in the early iterations and strong exploitation capabilities in the later iterations. This tendency suggests that AOACS can locate the optimal solution well. The ability of AOACS to converge to high-quality solutions in fewer iterations is demonstrated by the average fitness value of all solutions over the iterations, shown in the third column of Figure 4. The average fitness value for AOACS starts high in the early generations.

5.2.2. Results of Simulation of 23 Benchmark Functions and Discussions

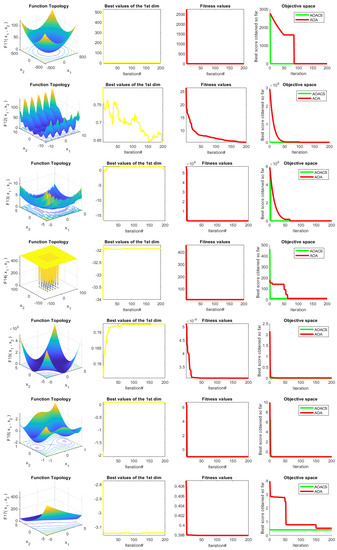

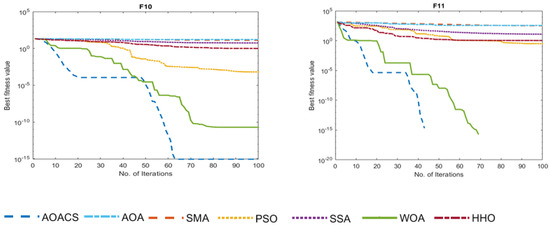

Figure 5 compares the convergence curves of the proposed AOACS with those of the basic AOA and other recent methods to evaluate how well AOACS balances exploitation and exploration. In contrast to SMA, PSO, SSA, WOA, and HHO, which stagnated severely at local optima, AOACS converges smoothly and reaches higher-quality solutions.

Figure 5.

Convergence behavior of the compared approaches on the test functions (F1–F6, F10, and F11), where the dimension is 10.

Table 4 compares the performance of AOACS with that of the standard AOA, PSO, WOA, SSA, SMA, and HHO. AOACS attains the lowest worst, best, average, and standard deviation (STD) values, and thus outperforms all other algorithms, in around 50% of the benchmarks considered (F2, F3, F5, F8, F9, F11, F12, F14, F19, and F22). Furthermore, it performs comparably on the remaining functions. The p-values obtained with the Wilcoxon rank-sum test at a significance level of 0.05 are below 0.05 for 18 functions when AOACS is compared with SSA, so the null hypothesis is rejected (h = 1 indicates a significant difference between the examined optimizers, AOACS and SSA). The p-values for PSO, SMA, HHO, WOA, SSA, and the basic AOA show that AOACS significantly outperforms the other algorithms on about 13 of the 23 functions; as a result, the null hypothesis is rejected (h = 1) for these functions. Additionally, AOACS is compared with its competitors on the 23 functions using the Friedman ranking test to determine where it ranks among them. Table 5 lists the ranks obtained.

Table 4.

Results from methods of comparison on 23 benchmark functions (F1–F23), where the dimension is 10.

Table 5.

Twenty-three benchmark functions are used for the Friedman ranking test for comparative approaches, with a dimension of 10.
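The Friedman ranking used in such tables can be sketched as follows: the algorithms are ranked on every function, and the ranks are averaged over all functions. The function name `mean_ranks` and the convention that lower scores are better are assumptions of this illustration.

```python
def mean_ranks(results):
    """Average Friedman rank per algorithm.

    results[f][a] is the score of algorithm a on function f (lower is better).
    """
    n_alg = len(results[0])
    totals = [0.0] * n_alg
    for row in results:
        order = sorted(range(n_alg), key=lambda a: row[a])
        i = 0
        while i < n_alg:
            # Algorithms with equal scores share the average rank.
            k = i
            while k + 1 < n_alg and row[order[k + 1]] == row[order[i]]:
                k += 1
            for m in range(i, k + 1):
                totals[order[m]] += (i + k) / 2 + 1
            i = k + 1
    return [t / len(results) for t in totals]
```

The algorithm with the smallest average rank takes the first place in the final ranking.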

5.2.3. Scalability Study

In this section, the effectiveness of AOACS is assessed using 13 functions from Table 2 and the 10 CEC2019 benchmark functions.

1. Experiments on 13 benchmark functions:

The performance of AOACS is evaluated using 13 functions from Table 2 with an increased dimension of 50 to determine the optimizer’s robustness as the size of the optimization problems grows.

Table 6 presents the results of the proposed variant and the other techniques (PSO, WOA, SSA, SMA, HHO, and AOA) in terms of the worst, best, average, and STD values. Additionally, Table 6 provides the p-value and the null hypothesis test result for AOACS compared with the other methods using the Wilcoxon rank-sum test at a significance level of 0.05.

Table 6.

Results from methods of comparison on 13 benchmark functions (F1–F13), where the dimension is 50.

The statistics in Table 6 show how stable and effective the suggested AOACS is, because it offers the best solutions for six functions (F1, F2, F3, F4, F9, and F11). In addition, on the other functions (F5, F6, F7, F8, and F10), its results are the closest to the best solutions obtained by the other methods. For 85% of the examined functions, the reported p-values are smaller than 0.05. AOACS is therefore highly stable and well suited to high-dimensional problems.

Table 7 reports the Friedman ranking test to highlight the significance of the suggested AOACS. AOACS has the top rank in nine of the thirteen benchmark functions analyzed; as a result, it occupies the first place among the compared methods for high-dimensional problems. With an average rank almost as good as that of AOACS, the unmodified AOA holds the second place. As a result, AOA offers higher-quality solutions for high-dimensional problems than modern state-of-the-art approaches.

Table 7.

Thirteen benchmark functions are used for the Friedman ranking test for comparative approaches, with a dimension of 50.

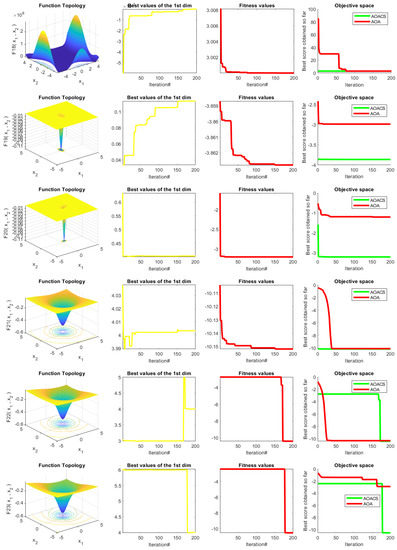

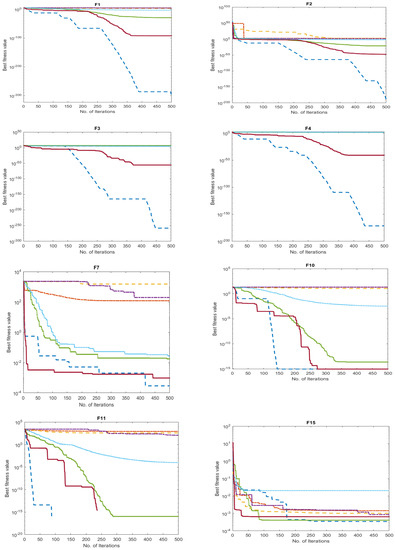

Figure 6 compares the convergence curves of the proposed AOACS with those of the original AOA and other recent methods to evaluate the effectiveness of its exploration and exploitation. In contrast to WOA, PSO, SSA, SMA, and HHO, which stagnated severely at local optima, the curves show that AOACS converges smoothly and reaches higher-quality solutions.

Figure 6.

Convergence behavior of the compared methods on the test functions (F1–F4, F7, F10, F11, F15, F21–F23), where the dimension is 10.

2. Experiments on 10 CEC2019 benchmark functions.

The shifted and rotated function, the shifted and rotated Schwefel function, the shifted and rotated Lunacek bi-Rastrigin function, and the expanded Rosenbrock plus Griewangk function are the CEC1 through CEC4 test functions for CEC2020. The remaining functions are divided into hybrid functions (CEC5–CEC7) and composition functions (CEC8–CEC10). The best value, average value, and standard deviation for each algorithm were determined after 30 independent runs.

The output of each algorithm for each CEC2019 test function is shown in Table 8. The table shows that, in terms of both the best and the average value, the AOACS algorithm produced the best outcomes on seven of the 10 benchmark functions. This demonstrates the strong optimization ability of the AOACS algorithm. The AOACS algorithm was less stable than most other algorithms for CEC3, CEC8, and CEC9, but its STD values were still better in numerical terms.

Table 8.

Results from methods of comparison on the CEC-2019 benchmark functions (F1–F10).

The Friedman ranking test is reported in Table 9 to show the significance of the suggested AOACS. AOACS holds the first place among the compared methods, since it has the top rank in six of the ten benchmark functions analyzed. The unmodified AOA takes the second place with an average rank nearly as good as that of AOACS.

Table 9.

Ten benchmark functions are used for the Friedman ranking test for comparative approaches.

6. AOACS for Solving Real-World Engineering Optimization Problems

This section uses the suggested algorithm to resolve four engineering design issues: the welded beam, the three-bar truss, the stepped cantilever beam, and the speed reducer design. A set of 30 solutions and 500 iterations are employed in each run to solve these issues.

The results of the presented AOACS are compared with those of related recent methods from the literature in the subsections that follow. To assess the effectiveness of the suggested AOACS, bound-constrained and general constrained optimization problems are used in this study. In bound-constrained optimization problems, each design variable is required to satisfy a boundary constraint:

where the total number of design variables is given, and each variable is restricted by its lower and upper bounds. Additionally, a general constrained problem is typically formulated as:

where there are p equality constraints and k inequality constraints. In the performance evaluation of the proposed AOACS, all of the constrained optimization problems in Equation (6) are mapped into the bound-constrained form by using a static penalty function. A penalty term is added to the underlying objective function for any infeasible solution. The static penalty function is chosen for its simplicity; it is appropriate for all kinds of problems and does not call for an auxiliary penalty function. The aforementioned constrained optimization problem can be expressed as follows:

where the penalty coefficients are typically assigned large values, and the tolerance of the equality constraints is set to a fixed value in this paper. In optimization problems, constraints restrict the values that the variables can take. By properly handling constraints, we can find the optimal values of the variables that satisfy the constraints and obtain meaningful solutions to real-world problems [40].
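A static penalty of this kind can be sketched as follows. The squared violation, the single coefficient `weight`, and the tolerance `tol` with their default values are assumptions of this illustration, since the coefficients and the tolerance used in the paper are not reproduced here. The function name `penalized` is hypothetical.

```python
def penalized(objective, inequalities=(), equalities=(), weight=1e6, tol=1e-4):
    """Wrap `objective` so that infeasible points receive a large extra cost.

    Each inequality g must satisfy g(x) <= 0, and each equality h must
    satisfy |h(x)| <= tol.
    """
    def wrapped(x):
        penalty = 0.0
        for g in inequalities:
            penalty += max(0.0, g(x)) ** 2
        for h in equalities:
            penalty += max(0.0, abs(h(x)) - tol) ** 2
        return objective(x) + weight * penalty
    return wrapped
```

For example, `penalized(lambda x: x[0], inequalities=[lambda x: 1.0 - x[0]])` leaves feasible points with x[0] ≥ 1 unchanged and adds a large cost to all others, so a bound-constrained optimizer can be applied to the wrapped function directly.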

Real-world engineering design problems are nonlinear optimization problems with numerous complicated constraints and geometries. The proposed AOACS method is applied to four problems (the welded beam design problem, the three-bar truss design problem, the stepped cantilever beam design, and the speed reducer design) to demonstrate how well it performs on engineering optimization problems. The AOACS simulation is set up as follows: the maximum number of iterations is 200, the population size is 30, and the simulation is run 20 times. These settings are commonly used for this kind of problem.

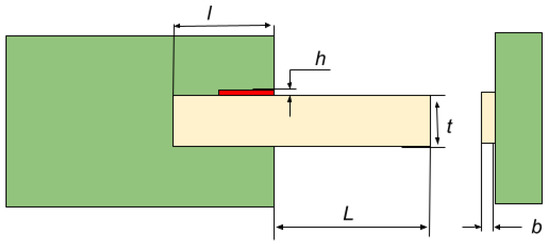

6.1. Welded Beam

The welded beam design problem is one of the best-known case studies for assessing the effectiveness of algorithms. It was first put forth in [41] and seeks to minimize the overall fabrication cost of a welded beam by using four decision variables, as shown in Figure 7. These variables are the weld thickness (h), the joint beam’s length (l), its height (t), and its thickness (b).

Figure 7.

Welded beam.

The problem’s mathematical formulation and constraint functions are as follows:

Consider

Minimize

Subject to

Variable range

where

The proposed AOACS is applied to the welded beam problem, and the result is compared with those of other metaheuristic approaches such as AOA, HHO, SSA, WOA, PSO, and SMA. Table 10 shows the achieved results; the variables h, l, t, and b are set to 1.96 × 10⁻¹, 0.335 × 10⁻¹, 0.904 × 10⁻¹, and 2.06 × 10⁻¹, respectively, and the optimal manufacturing cost of AOACS is 1.96 × 10⁻¹. In this comparison, AOACS produces better outcomes than all the other techniques. This demonstrates that AOACS is competitive in solving the welded beam design problem.

Table 10.

Comparison of the achieved outcomes of different algorithms when solving the welded beam problem.
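For orientation, the objective of this problem can be sketched as below. It assumes the form of the welded beam cost function that is widely used in the literature; the formulation in this paper may differ in its constants. The constraints on shear stress, bending stress, buckling load, and deflection are omitted, and the function name is illustrative.

```python
def welded_beam_cost(h, l, t, b):
    """Fabrication cost of the welded beam: weld material plus bar stock.

    h: weld thickness, l: length of the welded joint,
    t: height of the beam, b: thickness of the beam.
    """
    weld_cost = 1.10471 * h ** 2 * l
    bar_cost = 0.04811 * t * b * (14.0 + l)
    return weld_cost + bar_cost
```

In a constrained run, this function would be combined with the constraint functions through a penalty term before being passed to the optimizer.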

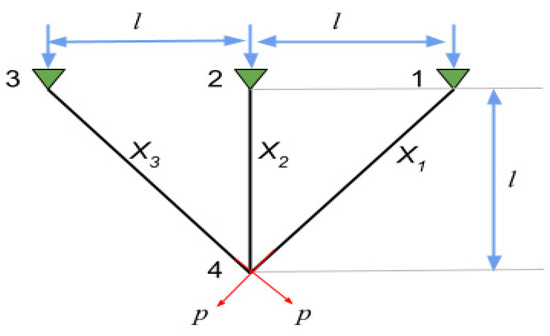

6.2. Three-Bar Truss

The optimization goal of the three-bar truss design is to reduce the overall weight. Figure 8 depicts the three-bar truss structure together with its primary parameters: the cross-sectional area of element 1 (X1) and the cross-sectional area of element 2 (X2). Buckling, deflection, and stress are the constraints of the problem.

Figure 8.

Three-Bar Truss Design.

The mathematical formulation is expressed as follows:

Consider

Minimize

Subject to

Variable range

where

Table 11 shows the performance of several algorithms, namely HHO, AOA, WOA, SSA, PSO, SMA, and the proposed AOACS algorithm, on the three-bar truss design problem. The results demonstrate that the AOACS algorithm outperforms the other algorithms. The minimum weight is 2.6387 × 10², and the optimal values for X1 and X2 are 7.8859 × 10⁻¹ and 4.0825 × 10⁻¹, respectively. Thus, AOACS shows promise for solving such problems with a small search space.

Table 11.

The achieved outcomes of several algorithms when solving the three-bar truss optimization issue.
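The reported optimum can be checked against the formulation of the three-bar truss problem that is common in the literature. This is a sketch that assumes a bar length of 100 cm and a load and allowable stress of 2 kN/cm²; these constants and the function names are not taken from this paper.

```python
import math


def truss_weight(x1, x2, length=100.0):
    """Weight of the three-bar truss for cross-sectional areas x1 and x2."""
    return (2.0 * math.sqrt(2.0) * x1 + x2) * length


def truss_constraints(x1, x2, load=2.0, stress=2.0):
    """Stress constraints; each value must be <= 0 for a feasible design."""
    root2 = math.sqrt(2.0)
    denom = root2 * x1 ** 2 + 2.0 * x1 * x2
    g1 = (root2 * x1 + x2) / denom * load - stress
    g2 = x2 / denom * load - stress
    g3 = 1.0 / (root2 * x2 + x1) * load - stress
    return g1, g2, g3
```

Evaluating `truss_weight(0.78859, 0.40825)` gives a weight of about 263.87, in line with the reported minimum of 2.6387 × 10². At this point the first constraint is approximately active, which is typical for the optimum of this problem.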

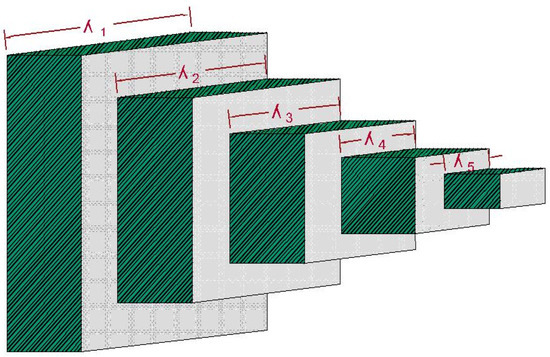

6.3. Stepped Cantilever Beam Design

As depicted in Figure 9, a stepped cantilever beam is fixed at one end and loaded at the other. At a predetermined distance from the support, the beam must be capable of supporting the specified load. The width of each section can be altered by the beam’s designers. We assume that the length of each part of the cantilever is the same. The goal of the cantilever beam design problem is to minimize the cantilever’s weight.

Figure 9.

Stepped Cantilever Beam Design.

The problem’s mathematical formulation and constraint functions are as follows:

Consider:

Minimize:

Subject to:

Variable range:

Table 12 displays the results for the cantilever beam design problem. The table shows that the weight obtained by the AOACS algorithm (0.134 × 10⁻¹) is the best when compared with the HHO, WOA, SSA, PSO, and SMA algorithms, demonstrating the viability and efficiency of the AOACS algorithm in addressing the cantilever beam design problem.

Table 12.

The achieved outcomes of several algorithms when solving the stepped cantilever beam design issue.

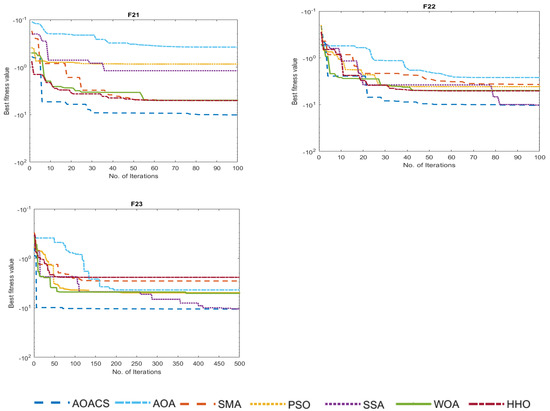

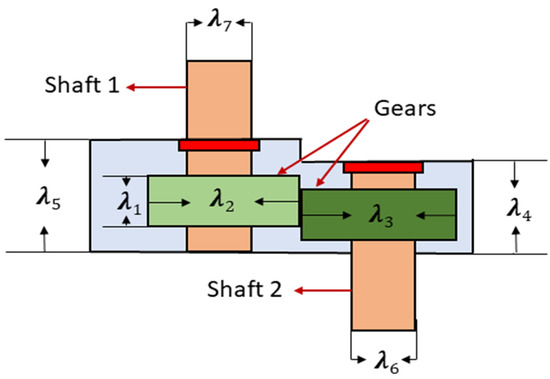

6.4. Speed Reducer Design

The reducer design problem has seven variables: the tooth surface width, the gear module, the number of teeth on the pinion, the length of the first shaft between bearings, the length of the second shaft between bearings, the diameter of the first shaft, and the diameter of the second shaft. Figure 10 shows these variables. The problem aims to minimize the reducer’s weight subject to four kinds of design constraints: bending stress on the gear teeth, surface stress, lateral shaft deflection, and stress in the shafts.

Figure 10.

Speed Reducer Design.

The problem’s mathematical formulation and constraint functions are as follows:

Consider:

Minimize:

Subject to:

Variable range:

The results of the AOACS and the compared methods are listed in Table 13. According to this table, AOACS ranks first and outperforms all compared methods on this problem; SMA ranks second, followed by PSO, AOA, and HHO.

Table 13.

The achieved outcomes of several algorithms when solving the speed reducer issue.
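To make the structure of the speed reducer problem more concrete, the sketch below encodes the weight objective and the eleven inequality constraints of the benchmark as it is commonly stated in the literature. The coefficients, the variable order (face width, module, number of pinion teeth, two shaft lengths, two shaft diameters), and the function names are assumptions for illustration and are not taken from this paper’s formulation or from Table 13. The reference design used in the example is one widely reported in the literature; under these formulas it evaluates to a weight of roughly 2994.5.

```python
import math


def speed_reducer_weight(x):
    """Weight objective of the commonly used speed reducer benchmark (assumed form).

    x = (b, m, z, l1, l2, d1, d2): face width, module, pinion teeth,
    shaft lengths between bearings, and shaft diameters.
    """
    b, m, z, l1, l2, d1, d2 = x
    return (
        0.7854 * b * m ** 2 * (3.3333 * z ** 2 + 14.9334 * z - 43.0934)
        - 1.508 * b * (d1 ** 2 + d2 ** 2)
        + 7.4777 * (d1 ** 3 + d2 ** 3)
        + 0.7854 * (l1 * d1 ** 2 + l2 * d2 ** 2)
    )


def speed_reducer_constraints(x):
    """Return the 11 constraint values; a design is feasible when all are <= 0."""
    b, m, z, l1, l2, d1, d2 = x
    return [
        27.0 / (b * m ** 2 * z) - 1.0,                  # bending stress of gear teeth
        397.5 / (b * m ** 2 * z ** 2) - 1.0,            # surface stress
        1.93 * l1 ** 3 / (m * z * d1 ** 4) - 1.0,       # deflection, shaft 1
        1.93 * l2 ** 3 / (m * z * d2 ** 4) - 1.0,       # deflection, shaft 2
        math.sqrt((745.0 * l1 / (m * z)) ** 2 + 16.9e6) / (110.0 * d1 ** 3) - 1.0,   # stress, shaft 1
        math.sqrt((745.0 * l2 / (m * z)) ** 2 + 157.5e6) / (85.0 * d2 ** 3) - 1.0,   # stress, shaft 2
        m * z / 40.0 - 1.0,                             # geometric limits
        5.0 * m / b - 1.0,
        b / (12.0 * m) - 1.0,
        (1.5 * d1 + 1.9) / l1 - 1.0,
        (1.1 * d2 + 1.9) / l2 - 1.0,
    ]


if __name__ == "__main__":
    # Reference design taken from the general literature (assumption), rounded.
    x_ref = (3.5, 0.7, 17, 7.3, 7.71532, 3.35021, 5.28665)
    print(f"weight = {speed_reducer_weight(x_ref):.3f}")
    print(f"largest constraint value = {max(speed_reducer_constraints(x_ref)):.2e}")
```

Several constraints are active at this reference design, so the rounded values leave residuals of order 10⁻⁶; any feasibility check on tabulated designs would reasonably allow a small tolerance.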

This work presents an optimization algorithm for solving engineering design problems. Its performance is evaluated on several benchmark functions and compared with other state-of-the-art optimization algorithms. The results indicate that the proposed algorithm outperforms the compared algorithms in solution quality and convergence speed: it finds the global optimum more efficiently and converges faster. The study also compares the proposed algorithm with the standard cuckoo search algorithm, and the proposed algorithm again performs better in both solution quality and convergence speed. It achieves a better balance between exploration and exploitation of the solution space, which leads to improved optimization performance. Moreover, the algorithm is robust and effective across different types of engineering design problems, successfully optimizing mathematical models for physical systems, circuits, and mechanical systems.

In summary, the results of the study demonstrate that the proposed algorithm is a powerful optimization tool that can efficiently solve complex engineering design problems. The algorithm offers superior performance in terms of solution quality and convergence speed compared to other state-of-the-art optimization algorithms. Therefore, the proposed algorithm has the potential to be widely applied in different fields, such as aerospace engineering, mechanical engineering, and electrical engineering, where optimization of complex mathematical models is necessary.

7. Conclusions

In this work, we propose an accelerated AOA approach called AOACS, which hybridizes the AOA with the cuckoo search (CS) algorithm. The CS operators are used to enhance the performance of AOA by balancing exploitation and exploration to achieve better convergence accuracy. The proposed algorithm provides significant results in solving numerical optimization problems compared to other algorithms. The performance of the AOACS is examined using 23 benchmark functions and the CEC-2019 functions to show the ability of the proposed work to solve different numerical optimization problems. Further, to verify the performance of AOACS, it is applied to four engineering design problems: the welded beam, the three-bar truss, the stepped cantilever beam, and the speed reducer. AOACS is compared with the original AOA and well-known algorithms such as HHO, SSA, WOA, PSO, and SMA and demonstrates superior performance in terms of minimum manufacturing cost for the welded beam and minimum weight for the three-bar truss, stepped cantilever beam, and speed reducer designs.

This work proposes a novel optimization algorithm that shows promising results in solving complex engineering design problems. The following future research directions could build upon this work:

- Hybridization with other optimization algorithms: The proposed algorithm could be combined with other optimization algorithms, such as the Genetic Algorithm (GA) or Particle Swarm Optimization (PSO), to create hybrid algorithms that leverage the strengths of both approaches. Hybrid algorithms may lead to even better optimization performance for certain types of engineering design problems.

- Parameter tuning: The performance of the proposed algorithm is highly dependent on the values of its parameters, such as the population size and the number of iterations. Future work could focus on finding optimal parameter settings that can improve the performance of the algorithm.

- Application to real-world engineering problems: The proposed algorithm has the potential to be applied to real-world engineering design problems, such as designing efficient aircraft or optimizing the performance of renewable energy systems. Future research could focus on applying the proposed algorithm to such problems and evaluating its performance in comparison to other optimization algorithms.

- Further theoretical analysis: Theoretical analysis of the proposed algorithm, such as convergence analysis or complexity analysis, could provide deeper insights into its behavior and limitations. Such analysis could also guide the development of more efficient optimization algorithms in the future.

- Extension to multi-objective optimization: The proposed algorithm is currently designed to optimize single-objective problems. Future research could focus on extending the algorithm to solve multi-objective optimization problems, where multiple conflicting objectives need to be optimized simultaneously.

In conclusion, this work presents a promising optimization algorithm that can be further developed and applied to real-world engineering problems. Future research can build upon this work to improve the algorithm’s performance and expand its application domain.

Author Contributions

Conceptualization, M.H., M.A. (Mohammad Alshinwan), H.G. and L.A.; methodology, M.H., M.A. (Mohammad Alshinwan), H.G.; validation, M.A. (Marah Alshdaifat) and W.A. (Waref Almanaseer); formal analysis, W.A. (Waleed Alomoush) and O.A.K.; writing—original draft preparation, M.H., M.A. (Mohammad Alshinwan), H.G. and L.A.; writing—review and editing, M.A. (Marah Alshdaifat) and W.A. (Waref Almanaseer), O.A.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Alomari, A.; Idris, N.; Sabri, A.Q.M.; Alsmadi, I. Deep reinforcement and transfer learning for abstractive text summarization: A review. Comput. Speech Lang. 2022, 71, 101276.

- Tan, Y.; Zhu, Y. Fireworks algorithm for optimization. In Proceedings of the International Conference in Swarm Intelligence, Beijing, China, 12–15 June 2010; pp. 355–364.

- Abdulhameed, S.; Rashid, T.A. Child drawing development optimization algorithm based on child’s cognitive development. Arab. J. Sci. Eng. 2022, 47, 1337–1351.

- Rao, R.V.; Savsani, V.J.; Vakharia, D.P. Teaching–learning-based optimization: An optimization method for continuous non-linear large scale problems. Inf. Sci. 2012, 183, 1.

- Kumar, M.; Kulkarni, A.J.; Satapathy, S.C. Socio evolution & learning optimization algorithm: A socio-inspired optimization methodology. Fut. Gener. Comput. Syst. 2018, 81, 252–272.

- Mirjalili, S. Genetic algorithm. In Evolutionary Algorithms and Neural Networks; Springer: Berlin/Heidelberg, Germany, 2019; pp. 43–55.

- Geem, Z.W.; Kim, J.H.; Loganathan, G.V. A new heuristic optimization algorithm: Harmony search. Simulation 2001, 76, 60–68.

- Eberhart, R.; Kennedy, J. A new optimizer using particle swarm theory. In Proceedings of the Sixth International Symposium on Micro Machine and Human Science, Nagoya, Japan, 4–6 October 1995; pp. 39–43.

- Ezugwu, A.E.; Agushaka, J.O.; Abualigah, L.; Mirjalili, S.; Gandomi, A.H. Prairie dog optimization algorithm. Neural Comput. Appl. 2022, 34, 20017–20065.

- Mirjalili, S.Z.; Mirjalili, S.; Saremi, S.; Faris, H.; Aljarah, I. Grasshopper optimization algorithm for multi-objective optimization problems. Appl. Intell. 2018, 48, 805–820.

- Shehab, M.; Abualigah, L.; Al Hamad, H.; Alabool, H.; Alshinwan, M.; Khasawneh, A.M. Moth–flame optimization algorithm: Variants and applications. Neural Comput. Appl. 2020, 32, 9859–9884.

- Yang, X.-S.; Slowik, A. Firefly algorithm. In Swarm Intelligence Algorithms; CRC Press: Boca Raton, FL, USA, 2020; pp. 163–174.

- Abualigah, L.; Yousri, D.; Abd Elaziz, M.; Ewees, A.A.; Al-Qaness, M.A.A.; Gandomi, A.H. Aquila optimizer: A novel meta-heuristic optimization algorithm. Comput. Ind. Eng. 2021, 157, 107250.

- Abualigah, L.; Shehab, M.; Alshinwan, M.; Mirjalili, S.; Abd Elaziz, M. Ant lion optimizer: A comprehensive survey of its variants and applications. Arch. Comput. Methods Eng. 2021, 28, 1397–1416.

- Civicioglu, P. Evolutionary Strategies algorithm (ES) search optimization algorithm for numerical optimization problems. Appl. Math. Comput. 2013, 219, 8121–8144.

- Price, K.V. Differential evolution. In Handbook of Optimization; Springer: Berlin/Heidelberg, Germany, 2013; pp. 187–214.

- Cheraghalipour, A.; Hajiaghaei-Keshteli, M.; Paydar, M.M. Tree Growth Algorithm (TGA): A novel approach for solving optimization problems. Eng. Appl. Artif. Intell. 2018, 72, 393–414.

- Mirjalili, S.; Mirjalili, S.M.; Hatamlou, A. Multi-verse optimizer: A nature-inspired algorithm for global optimization. Neural Comput. Appl. 2016, 27, 495–513.

- Hatamlou, A. Black hole: A new heuristic optimization approach for data clustering. Inf. Sci. 2013, 222, 175–184.

- Hsiao, Y.-T.; Chuang, C.-L.; Jiang, J.-A.; Chien, C.-C. A novel optimization algorithm: Space gravitational optimization. In Proceedings of the 2005 IEEE International Conference on Systems, Man and Cybernetics, Waikoloa, HI, USA, 12 October 2005; Volume 3, pp. 2323–2328.

- Abualigah, L.; Diabat, A.; Mirjalili, S.; Abd Elaziz, M.; Gandomi, A.H. The arithmetic optimization algorithm. Comput. Methods Appl. Mech. Eng. 2021, 376, 113609.

- Hashim, F.A.; Houssein, E.H.; Mabrouk, M.S.; Al-Atabany, W.; Mirjalili, S. Henry gas solubility optimization: A novel physics-based algorithm. Fut. Gener. Comput. Syst. 2019, 101, 646–667.

- Gao, Z.-M.; Zhao, J.; Hu, Y.-R.; Chen, H.-F. The challenge for the nature-inspired global optimization algorithms: Non-symmetric benchmark functions. IEEE Access 2021, 9, 106317–106339.

- Alsarhan, T.; Ali, U.; Lu, H. Enhanced discriminative graph convolutional network with adaptive temporal modelling for skeleton-based action recognition. Comput. Vis. Image Underst. 2022, 216, 103348.

- Zhang, Y.; Wang, Y.; Li, S.; Yao, F.; Tao, L.; Yan, Y.; Zhao, J.; Gao, Z. An enhanced adaptive comprehensive learning hybrid algorithm of Rao-1 and JAYA algorithm for parameter extraction of photovoltaic models. Math. Biosci. Eng. 2022, 19, 5610–5637.

- AlMahmoud, R.H.; Hammo, B.; Faris, H. A modified bond energy algorithm with fuzzy merging and its application to Arabic text document clustering. Expert Syst. Appl. 2020, 159, 113598.

- Li, S.; Gong, W.; Wang, L.; Gu, Q. Multi-objective optimal power flow with stochastic wind and solar power. Appl. Soft Comput. 2022, 114, 108045.

- Abualigah, L.; Almotairi, K.H.; Abd Elaziz, M.; Shehab, M.; Altalhi, M. Enhanced Flow Direction Arithmetic Optimization Algorithm for mathematical optimization problems with applications of data clustering. Eng. Anal. Bound. Elem. 2022, 138, 13–29.

- Elkasem, A.H.A.; Khamies, M.; Magdy, G.; Taha, I.B.M.; Kamel, S. Frequency stability of AC/DC interconnected power systems with wind energy using arithmetic optimization algorithm-based fuzzy-PID controller. Sustainability 2021, 13, 12095.

- Bansal, P.; Gehlot, K.; Singhal, A.; Gupta, A. Automatic detection of osteosarcoma based on integrated features and feature selection using binary arithmetic optimization algorithm. Multimed. Tools Appl. 2022, 81, 8807–8834.

- Ewees, A.A.; Al-qaness, M.A.A.; Abualigah, L.; Oliva, D.; Algamal, Z.Y.; Anter, A.M.; Ali Ibrahim, R.; Ghoniem, R.M.; Abd Elaziz, M. Boosting arithmetic optimization algorithm with genetic algorithm operators for feature selection: Case study on cox proportional hazards model. Mathematics 2021, 9, 2321.

- Zhang, Y.-J.; Yan, Y.-X.; Zhao, J.; Gao, Z.-M. AOAAO: The hybrid algorithm of arithmetic optimization algorithm with aquila optimizer. IEEE Access 2022, 10, 10907–10933.

- Zhang, Y.-J.; Wang, Y.-F.; Yan, Y.-X.; Zhao, J.; Gao, Z.-M. LMRAOA: An improved arithmetic optimization algorithm with multi-leader and high-speed jumping based on opposition-based learning solving engineering and numerical problems. Alex. Eng. J. 2022, 61, 12367–12403.

- Shehab, M.; Daoud, M.S.; AlMimi, H.M.; Abualigah, L.M.; Khader, A.T. Hybridising cuckoo search algorithm for extracting the ODF maxima in spherical harmonic representation. Int. J. Bio-Inspired Comput. 2019, 14, 190–199.

- Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Heidari, A.A.; Mirjalili, S.; Faris, H.; Aljarah, I.; Mafarja, M.; Chen, H. Harris hawks optimization: Algorithm and applications. Fut. Gener. Comput. Syst. 2019, 97, 849–872.

- Mirjalili, S.; Gandomi, A.H.; Mirjalili, S.Z.; Saremi, S.; Faris, H.; Mirjalili, S.M. Salp Swarm Algorithm: A bio-inspired optimizer for engineering design problems. Adv. Eng. Softw. 2017, 114, 163–191.

- Abualigah, L.; Shehab, M.; Alshinwan, M.; Alabool, H. Salp swarm algorithm: A comprehensive survey. Neural Comput. Appl. 2020, 32, 11195–11215.

- Li, S.; Chen, H.; Wang, M.; Heidari, A.A.; Mirjalili, S. Slime mould algorithm: A new method for stochastic optimization. Fut. Gener. Comput. Syst. 2020, 111, 300–323.

- Bertsekas, D.P. Constrained Optimization and Lagrange Multiplier Methods; Academic Press: Cambridge, MA, USA, 2014.

- Coello, C.A.C. Theoretical and numerical constraint-handling techniques used with evolutionary algorithms: A survey of the state of the art. Comput. Methods Appl. Mech. Eng. 2002, 191, 1245–1287.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).