An Empirical Investigation to Understand the Issues of Distributed Software Testing amid COVID-19 Pandemic

Abstract

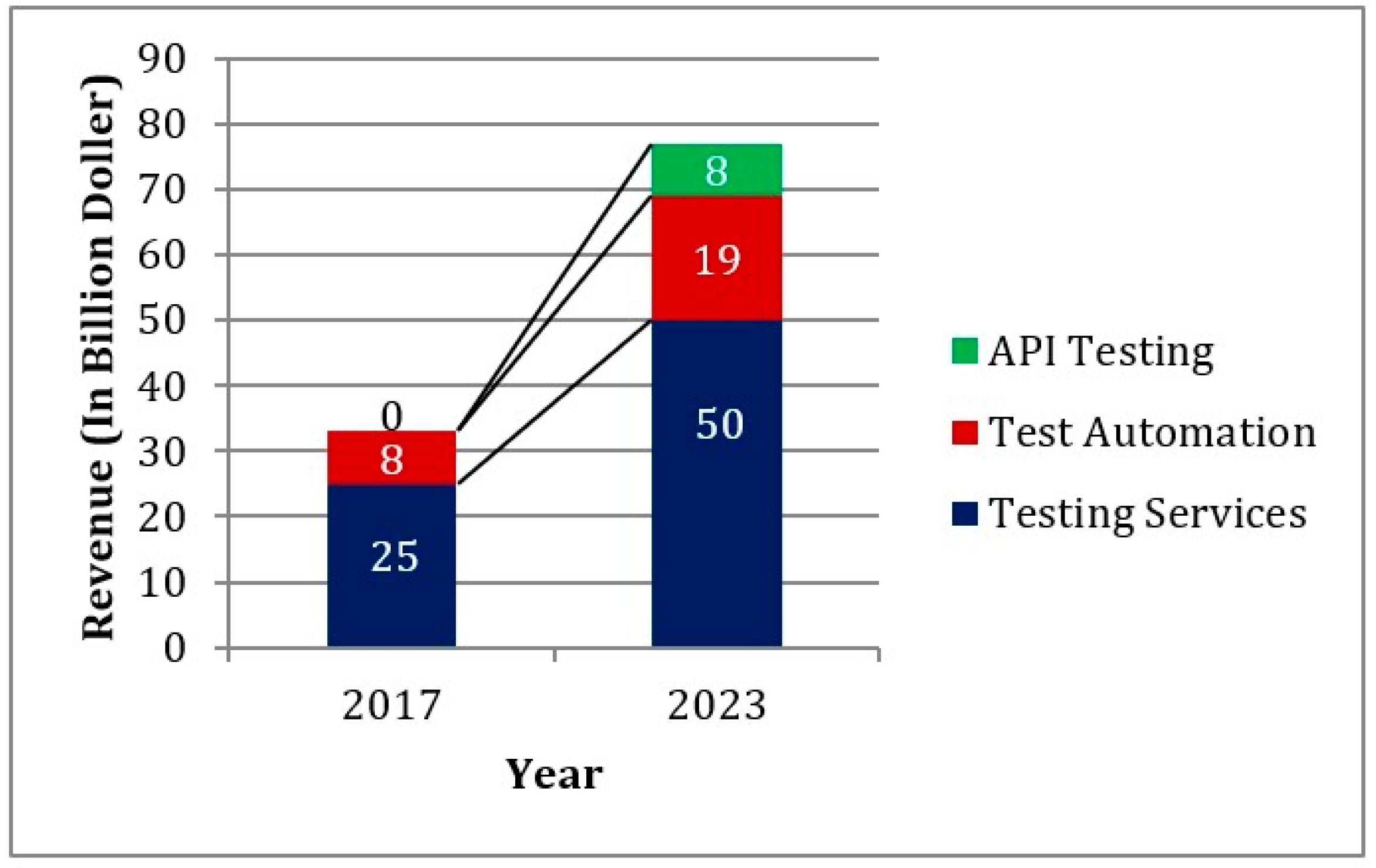

1. Introduction

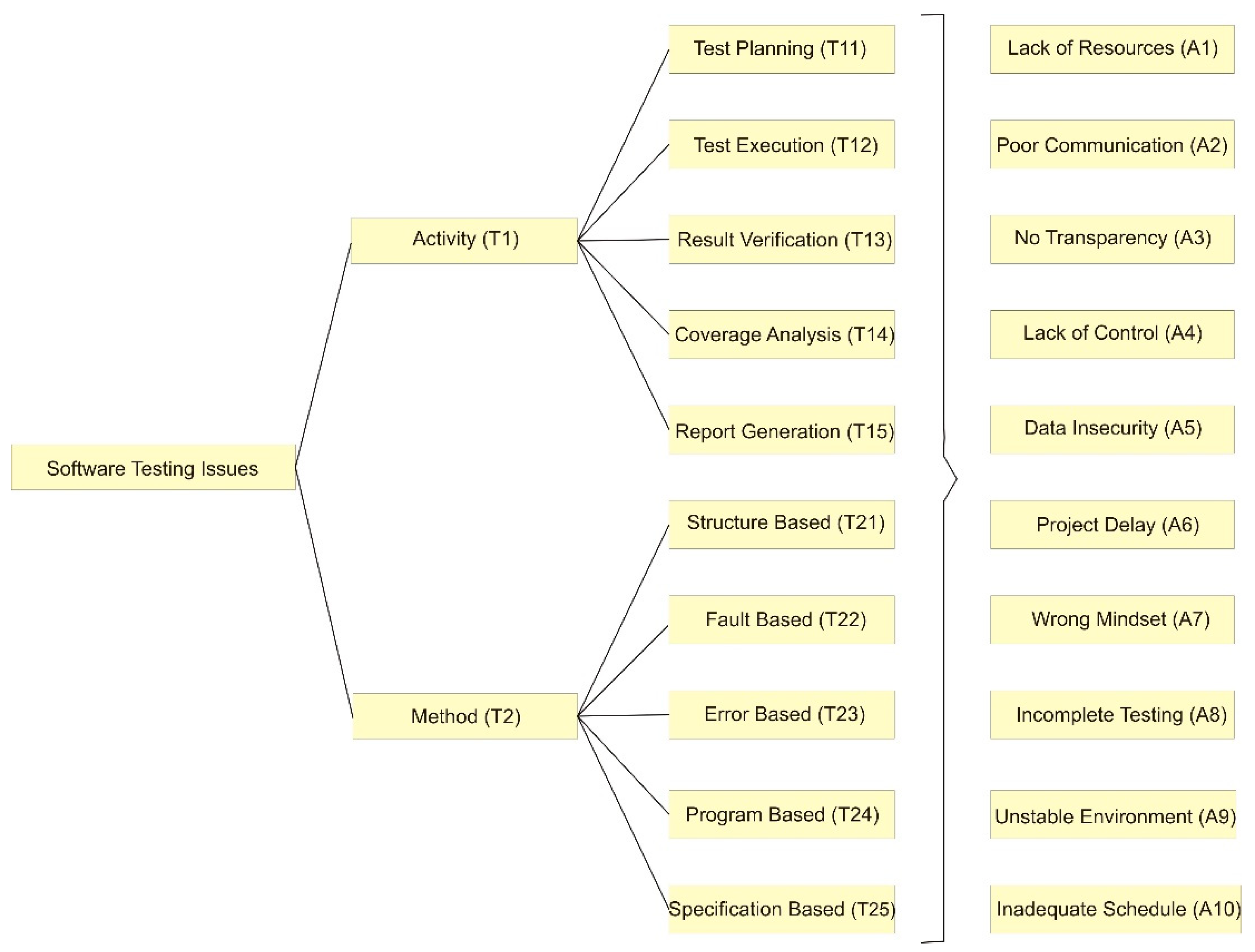

2. Software Testing Challenges amid COVID-19 Pandemic

2.1. Lack of Resources

2.2. Poor Communication

2.3. No Transparency in the Process

2.4. Lack of Control

2.5. Data Insecurity

2.6. Delay in Project Delivery

2.7. Wrong Mindset

2.8. Incomplete Testing

2.9. Unstable Environment

2.10. Inadequate Schedule

3. Evaluation of Distributed Software Testing Challenges

3.1. Hierarchy for the Prioritization

3.2. Fuzzy TOPSIS Method

3.3. Comparative Analysis

3.4. Validation of the Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Characteristics/Alternatives | A1 | A2 | A3 | A4 | A5 | A6 | A7 | A8 | A9 | A10 |
|---|---|---|---|---|---|---|---|---|---|---|
| T11 | 2.450000, 4.270000, 6.270000 | 1.450000, 3.070000, 4.910000 | 0.820000, 2.270000, 4.270000 | 0.910000, 2.450000, 4.450000 | 2.450000, 4.270000, 6.270000 | 2.450000, 4.270000, 6.270000 | 1.450000, 3.070000, 4.910000 | 0.820000, 2.270000, 4.270000 | 0.910000, 2.450000, 4.450000 | 2.450000, 4.270000, 6.270000 |
| T12 | 2.090000, 3.730000, 5.730000 | 2.820000, 4.640000, 6.640000 | 1.910000, 3.730000, 5.730000 | 2.820000, 4.640000, 6.640000 | 1.910000, 3.730000, 5.730000 | 2.090000, 3.730000, 5.730000 | 2.820000, 4.640000, 6.640000 | 1.910000, 3.730000, 5.730000 | 2.820000, 4.640000, 6.640000 | 1.910000, 3.730000, 5.730000 |
| T13 | 3.000000, 4.820000, 6.820000 | 3.000000, 5.000000, 7.140000 | 2.180000, 4.090000, 6.140000 | 1.820000, 3.730000, 5.730000 | 1.640000, 3.550000, 5.550000 | 3.000000, 4.820000, 6.820000 | 3.000000, 5.000000, 7.140000 | 2.180000, 4.090000, 6.140000 | 1.820000, 3.730000, 5.730000 | 1.640000, 3.550000, 5.550000 |
| T14 | 5.120000, 7.140000, 8.720000 | 3.150000, 5.150000, 6.910000 | 2.820000, 4.640000, 6.640000 | 1.550000, 3.180000, 5.180000 | 1.450000, 3.180000, 5.180000 | 5.120000, 7.140000, 8.720000 | 3.150000, 5.150000, 6.910000 | 2.820000, 4.640000, 6.640000 | 1.550000, 3.180000, 5.180000 | 1.450000, 3.180000, 5.180000 |
| T15 | 4.280000, 6.370000, 8.370000 | 2.450000, 4.450000, 6.450000 | 2.910000, 4.640000, 6.550000 | 1.450000, 3.000000, 4.910000 | 1.180000, 2.820000, 4.820000 | 4.280000, 6.370000, 8.370000 | 2.450000, 4.450000, 6.450000 | 2.910000, 4.640000, 6.550000 | 1.450000, 3.000000, 4.910000 | 1.180000, 2.820000, 4.820000 |
| T21 | 4.270000, 6.270000, 8.140000 | 2.820000, 4.820000, 6.820000 | 3.180000, 5.180000, 7.100000 | 1.450000, 3.070000, 4.910000 | 0.820000, 2.270000, 4.270000 | 4.270000, 6.270000, 8.140000 | 2.820000, 4.820000, 6.820000 | 3.180000, 5.180000, 7.100000 | 1.450000, 3.070000, 4.910000 | 0.820000, 2.270000, 4.270000 |
| T22 | 5.360000, 7.360000, 9.120000 | 3.730000, 5.730000, 7.550000 | 2.450000, 4.450000, 6.450000 | 0.910000, 2.450000, 4.450000 | 2.450000, 4.270000, 6.270000 | 5.360000, 7.360000, 9.120000 | 3.730000, 5.730000, 7.550000 | 2.450000, 4.450000, 6.450000 | 0.910000, 2.450000, 4.450000 | 2.450000, 4.270000, 6.270000 |
| T23 | 2.820000, 4.640000, 6.640000 | 1.910000, 3.730000, 5.730000 | 1.180000, 2.820000, 4.820000 | 4.280000, 6.370000, 8.370000 | 1.450000, 3.070000, 4.910000 | 4.640000, 6.640000, 8.550000 | 3.000000, 5.000000, 7.140000 | 2.180000, 4.090000, 6.140000 | 2.820000, 4.640000, 6.640000 | 1.910000, 3.730000, 5.730000 |
| T24 | 3.000000, 5.000000, 7.140000 | 2.180000, 4.090000, 6.140000 | 0.820000, 2.270000, 4.270000 | 4.270000, 6.270000, 8.140000 | 2.820000, 4.640000, 6.640000 | 3.120000, 5.000000, 7.140000 | 2.450000, 4.450000, 6.450000 | 3.550000, 5.550000, 7.450000 | 1.820000, 3.730000, 5.730000 | 1.640000, 3.550000, 5.550000 |
| T25 | 3.550000, 5.550000, 7.450000 | 1.820000, 3.730000, 5.730000 | 2.450000, 4.270000, 6.270000 | 5.360000, 7.360000, 9.120000 | 3.000000, 5.000000, 7.140000 | 5.360000, 7.360000, 9.090000 | 2.640000, 4.640000, 6.640000 | 2.900000, 4.800000, 6.700000 | 2.820000, 4.640000, 6.640000 | 2.550000, 4.450000, 6.450000 |

| Characteristics/Alternatives | A1 | A2 | A3 | A4 | A5 | A6 | A7 | A8 | A9 | A10 |
|---|---|---|---|---|---|---|---|---|---|---|
| T11 | 0.560000, 0.780000, 0.950000 | 0.410000, 0.680000, 0.910000 | 0.370000, 0.620000, 0.890000 | 0.230000, 0.470000, 0.780000 | 0.220000, 0.490000, 0.800000 | 0.300000, 0.530000, 0.790000 | 0.178100, 0.377100, 0.603100 | 0.118600, 0.328500, 0.617900 | 0.210000, 0.460000, 0.730000 | 0.120000, 0.350000, 0.660000 |
| T12 | 0.460000, 0.690000, 0.910000 | 0.320000, 0.580000, 0.850000 | 0.390000, 0.620000, 0.870000 | 0.210000, 0.450000, 0.730000 | 0.180000, 0.430000, 0.740000 | 0.260000, 0.470000, 0.720000 | 0.346400, 0.570000, 0.815700 | 0.276400, 0.539700, 0.829200 | 0.130000, 0.360000, 0.670000 | 0.370000, 0.660000, 0.970000 |
| T13 | 0.460000, 0.680000, 0.890000 | 0.370000, 0.630000, 0.900000 | 0.420000, 0.690000, 0.950000 | 0.210000, 0.460000, 0.730000 | 0.120000, 0.350000, 0.660000 | 0.370000, 0.600000, 0.860000 | 0.368500, 0.614200, 0.877100 | 0.315400, 0.591800, 0.888500 | 0.420000, 0.690000, 1.000000 | 0.290000, 0.570000, 0.880000 |
| T14 | 0.580000, 0.800000, 1.000000 | 0.490000, 0.750000, 1.000000 | 0.320000, 0.590000, 0.860000 | 0.130000, 0.360000, 0.670000 | 0.370000, 0.660000, 0.970000 | 0.490000, 0.740000, 0.980000 | 0.436100, 0.681800, 0.927500 | 0.263300, 0.539700, 0.829200 | 0.270000, 0.560000, 0.860000 | 0.250000, 0.550000, 0.860000 |
| T15 | 0.500000, 0.720000, 0.930000 | 0.390000, 0.660000, 0.940000 | 0.290000, 0.540000, 0.820000 | 0.420000, 0.690000, 1.000000 | 0.290000, 0.570000, 0.880000 | 0.320000, 0.560000, 0.810000 | 0.356200, 0.589600, 0.823000 | 0.408100, 0.671400, 0.960900 | 0.420000, 0.690000, 1.000000 | 0.390000, 0.700000, 1.000000 |
| T21 | 0.340000, 0.540000, 0.780000 | 0.320000, 0.580000, 0.850000 | 0.470000, 0.740000, 1.000000 | 0.270000, 0.560000, 0.860000 | 0.250000, 0.550000, 0.860000 | 0.490000, 0.740000, 1.000000 | 0.346400, 0.570000, 0.815700 | 0.369000, 0.643900, 0.933400 | 0.469200, 0.698400, 0.917700 | 0.158900, 0.337700, 0.538300 |
| T22 | 0.580000, 0.800000, 0.990000 | 0.340000, 0.610000, 0.870000 | 0.380000, 0.640000, 0.890000 | 0.420000, 0.690000, 1.000000 | 0.390000, 0.700000, 1.000000 | 0.400000, 0.650000, 0.890000 | 0.574500, 0.770200, 1.000000 | 0.408100, 0.697500, 0.986900 | 0.468200, 0.687500, 0.892500 | 0.394900, 0.649800, 0.929900 |
| T23 | 0.309000, 0.508000, 0.728000 | 0.209400, 0.408990, 0.628200 | 0.129300, 0.309200, 0.528500 | 0.469200, 0.698400, 0.917700 | 0.158900, 0.337700, 0.538300 | 0.089900, 0.248900, 0.468200 | 0.356200, 0.589600, 0.823000 | 0.455800, 0.745200, 1.000000 | 0.587700, 0.807000, 1.000000 | 0.420100, 0.700200, 1.000000 |
| T24 | 0.328900, 0.548200, 0.782800 | 0.252900, 0.494000, 0.813200 | 0.110000, 0.304600, 0.573100 | 0.468200, 0.687500, 0.892500 | 0.394900, 0.649800, 0.929900 | 0.241400, 0.471500, 0.724300 | 0.346400, 0.570000, 0.815700 | 0.354500, 0.643900, 0.933400 | 0.460000, 0.690000, 0.910000 | 0.320000, 0.580000, 0.850000 |
| T25 | 0.389200, 0.608500, 0.816800 | 0.241000, 0.494000, 0.758900 | 0.328800, 0.573100, 0.841600 | 0.587700, 0.807000, 1.000000 | 0.420100, 0.700200, 1.000000 | 0.275600, 0.517000, 0.776200 | 0.524500, 0.770200, 1.000000 | 0.408100, 0.697500, 0.986900 | 0.460000, 0.680000, 0.890000 | 0.370000, 0.630000, 0.900000 |

| Characteristics/Alternatives | A1 | A2 | A3 | A4 | A5 | A6 | A7 | A8 | A9 | A10 |
|---|---|---|---|---|---|---|---|---|---|---|
| T11 | 0.001310, 0.002060, 0.004790 | 0.000960, 0.001800, 0.004590 | 0.000870, 0.001640, 0.004490 | 0.000540, 0.001240, 0.003940 | 0.000520, 0.001290, 0.004040 | 0.000700, 0.001400, 0.003990 | 0.000420, 0.001000, 0.003040 | 0.000280, 0.000870, 0.003120 | 0.000500, 0.001900, 0.006300 | 0.001500, 0.003600, 0.009100 |
| T12 | 0.000300, 0.000600, 0.001700 | 0.000200, 0.000500, 0.001600 | 0.000300, 0.000600, 0.001600 | 0.000200, 0.000400, 0.001400 | 0.000100, 0.000400, 0.001400 | 0.000200, 0.000400, 0.001300 | 0.000300, 0.000500, 0.001500 | 0.000200, 0.000500, 0.001500 | 0.111900, 0.200200, 0.347400 | 0.077300, 0.165400, 0.305700 |
| T13 | 0.010000, 0.018800, 0.036800 | 0.008000, 0.017400, 0.037200 | 0.009100, 0.019100, 0.039200 | 0.004600, 0.012700, 0.030200 | 0.002600, 0.009700, 0.027300 | 0.008000, 0.016600, 0.035500 | 0.008000, 0.017000, 0.036200 | 0.006800, 0.016400, 0.036700 | 0.003700, 0.008300, 0.013100 | 0.003400, 0.008100, 0.013100 |
| T14 | 0.002300, 0.004300, 0.009400 | 0.002000, 0.004000, 0.009400 | 0.001300, 0.003200, 0.008100 | 0.000500, 0.001900, 0.006300 | 0.001500, 0.003600, 0.009100 | 0.002000, 0.004000, 0.009200 | 0.001800, 0.003700, 0.008700 | 0.001100, 0.002900, 0.007800 | 0.053100, 0.105800, 0.193900 | 0.049300, 0.107300, 0.193900 |
| T15 | 0.133200, 0.209000, 0.323100 | 0.103900, 0.191500, 0.326600 | 0.077300, 0.156700, 0.284900 | 0.111900, 0.200200, 0.347400 | 0.077300, 0.165400, 0.305700 | 0.085200, 0.162500, 0.281400 | 0.094900, 0.171100, 0.285900 | 0.108700, 0.194800, 0.333800 | 0.007800, 0.015600, 0.026100 | 0.002700, 0.007500, 0.015300 |
| T21 | 0.004600, 0.008000, 0.011900 | 0.004300, 0.008600, 0.013000 | 0.006400, 0.010900, 0.015200 | 0.003700, 0.008300, 0.013100 | 0.003400, 0.008100, 0.013100 | 0.006600, 0.010900, 0.015200 | 0.004700, 0.008400, 0.012400 | 0.005000, 0.009500, 0.014200 | 0.044500, 0.082800, 0.148200 | 0.037500, 0.078300, 0.154400 |
| T22 | 0.073400, 0.122600, 0.192000 | 0.043000, 0.093500, 0.168700 | 0.048100, 0.098100, 0.172600 | 0.053100, 0.105800, 0.193900 | 0.049300, 0.107300, 0.193900 | 0.050600, 0.099600, 0.172600 | 0.072700, 0.118100, 0.193900 | 0.051600, 0.106900, 0.191400 | 0.177700, 0.279500, 0.369400 | 0.127000, 0.242500, 0.369400 |
| T23 | 0.005200, 0.011300, 0.020700 | 0.003500, 0.009100, 0.017900 | 0.002200, 0.006900, 0.015000 | 0.007800, 0.015600, 0.026100 | 0.002700, 0.007500, 0.015300 | 0.001500, 0.005600, 0.013300 | 0.005900, 0.013200, 0.023400 | 0.007600, 0.016600, 0.028500 | 0.000300, 0.000600, 0.001700 | 0.000200, 0.000500, 0.001600 |
| T24 | 0.031200, 0.066100, 0.130000 | 0.024000, 0.059500, 0.135000 | 0.010400, 0.036700, 0.095200 | 0.044500, 0.082800, 0.148200 | 0.037500, 0.078300, 0.154400 | 0.022900, 0.056800, 0.120300 | 0.032900, 0.068700, 0.135400 | 0.033700, 0.077600, 0.155000 | 0.010000, 0.018800, 0.036800 | 0.008000, 0.017400, 0.037200 |
| T25 | 0.117700, 0.210700, 0.301700 | 0.072900, 0.171100, 0.280300 | 0.099400, 0.198500, 0.310900 | 0.177700, 0.279500, 0.369400 | 0.127000, 0.242500, 0.369400 | 0.083300, 0.179000, 0.286700 | 0.158600, 0.266700, 0.369400 | 0.123400, 0.241500, 0.364500 | 0.002300, 0.004300, 0.009400 | 0.002000, 0.004000, 0.009400 |

| Alternatives (HS) | di+ | di− | Gap Degree of CCi+ | Satisfaction Degree |
|---|---|---|---|---|
| A1 | 0.838400 | 0.060000 | 0.066700 | 0.933200 |
| A2 | 0.806700 | 0.140000 | 0.147800 | 0.852100 |
| A3 | 0.775100 | 0.180000 | 0.188400 | 0.811500 |
| A4 | 0.916900 | 0.040000 | 0.041000 | 0.963800 |
| A5 | 0.921300 | 0.030000 | 0.031500 | 0.979600 |
| A6 | 0.700400 | 0.240000 | 0.255200 | 0.721800 |
| A7 | 0.860300 | 0.110000 | 0.113300 | 0.885700 |
| A8 | 0.901300 | 0.070000 | 0.072000 | 0.936700 |
| A9 | 0.912200 | 0.050000 | 0.051900 | 0.944600 |
| A10 | 0.915600 | 0.050000 | 0.051700 | 0.956800 |
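As a hedged illustration of how the columns above relate: the ratio d⁻ / (d⁺ + d⁻), computed from the tabulated distances, appears to reproduce the Gap Degree column to within about 0.001. This relationship is inferred from the table values and is not stated in the text; the names `distances` and `closeness_ratio` are illustrative only.

```python
# Distances to the ideal solutions (d_plus, d_minus), copied from the table above.
distances = {
    "A1": (0.8384, 0.06),
    "A2": (0.8067, 0.14),
    "A3": (0.7751, 0.18),
    "A4": (0.9169, 0.04),
    "A5": (0.9213, 0.03),
    "A6": (0.7004, 0.24),
    "A7": (0.8603, 0.11),
    "A8": (0.9013, 0.07),
    "A9": (0.9122, 0.05),
    "A10": (0.9156, 0.05),
}


def closeness_ratio(d_plus: float, d_minus: float) -> float:
    """Share of the total distance that is attributable to d_minus."""
    return d_minus / (d_plus + d_minus)


# Assumption: this ratio is what the "Gap Degree" column reports.
ratios = {alt: closeness_ratio(dp, dm) for alt, (dp, dm) in distances.items()}

for alt, ratio in ratios.items():
    print(f"{alt}: {ratio:.4f}")
```

The Satisfaction Degree column is close to, but not always exactly, one minus this ratio, so the sketch deliberately stops at the gap column.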

| Approaches/Alternatives | A1 | A2 | A3 | A4 | A5 | A6 | A7 | A8 | A9 | A10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Fuzzy TOPSIS | 0.933200 | 0.852100 | 0.811500 | 0.963800 | 0.979600 | 0.721800 | 0.885700 | 0.936700 | 0.944600 | 0.956800 |
| Classical TOPSIS | 0.938800 | 0.853000 | 0.807900 | 0.963500 | 0.978700 | 0.720900 | 0.881500 | 0.925800 | 0.951800 | 0.967900 |
| Delphi-TOPSIS | 0.933400 | 0.852110 | 0.807740 | 0.963490 | 0.978740 | 0.720810 | 0.881480 | 0.925770 | 0.951810 | 0.967890 |
| Fuzzy-Delphi-TOPSIS | 0.932100 | 0.855000 | 0.809500 | 0.964500 | 0.979200 | 0.721400 | 0.885000 | 0.926100 | 0.945800 | 0.966100 |
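One way to read the comparison above is to turn each row into an ordering of the alternatives and check where the approaches agree. The sketch below does that, assuming that a higher score means a higher rank (the table itself does not state the direction); `scores` and `ordering` are illustrative names.

```python
ALTS = [f"A{i}" for i in range(1, 11)]

# Scores per approach, copied from the comparison table above.
scores = {
    "Fuzzy TOPSIS": [0.9332, 0.8521, 0.8115, 0.9638, 0.9796,
                     0.7218, 0.8857, 0.9367, 0.9446, 0.9568],
    "Classical TOPSIS": [0.9388, 0.8530, 0.8079, 0.9635, 0.9787,
                         0.7209, 0.8815, 0.9258, 0.9518, 0.9679],
    "Delphi-TOPSIS": [0.93340, 0.85211, 0.80774, 0.96349, 0.97874,
                      0.72081, 0.88148, 0.92577, 0.95181, 0.96789],
    "Fuzzy-Delphi-TOPSIS": [0.9321, 0.8550, 0.8095, 0.9645, 0.9792,
                            0.7214, 0.8850, 0.9261, 0.9458, 0.9661],
}


def ordering(values):
    """Alternatives sorted from highest to lowest score (assumed direction)."""
    return [alt for alt, _ in sorted(zip(ALTS, values), key=lambda p: -p[1])]


order = {name: ordering(vals) for name, vals in scores.items()}

for name, ranked in order.items():
    print(f"{name}: {' > '.join(ranked)}")
```

Under that assumption, all four approaches place A5 first and A6 last; the orderings differ only in two adjacent pairs (A4/A10 and A1/A8), where Fuzzy TOPSIS reverses the order given by the other three.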

| Experiments | Weights/Alternatives | A1 | A2 | A3 | A4 | A5 | A6 | A7 | A8 | A9 | A10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Original Weights | | 0.933200 | 0.852100 | 0.811500 | 0.963800 | 0.979600 | 0.721800 | 0.885700 | 0.936700 | 0.944600 | 0.956800 |
| Expt-1 | S11 | 0.933524 | 0.852556 | 0.885644 | 0.968567 | 0.979647 | 0.721745 | 0.885777 | 0.936774 | 0.944471 | 0.956779 |
| Expt-2 | S12 | 0.933124 | 0.852745 | 0.811784 | 0.963774 | 0.979789 | 0.721999 | 0.885777 | 0.936852 | 0.944854 | 0.956857 |
| Expt-3 | S21 | 0.933789 | 0.852654 | 0.811456 | 0.963789 | 0.979741 | 0.721857 | 0.885859 | 0.936965 | 0.944745 | 0.956745 |
| Expt-4 | S22 | 0.933745 | 0.852963 | 0.811951 | 0.963957 | 0.979852 | 0.721358 | 0.885854 | 0.936745 | 0.944859 | 0.956958 |
| Expt-5 | S23 | 0.933754 | 0.852748 | 0.811754 | 0.963778 | 0.979785 | 0.721254 | 0.885745 | 0.936875 | 0.944425 | 0.956457 |
| Expt-6 | S31 | 0.963789 | 0.979741 | 0.721857 | 0.963789 | 0.979741 | 0.721857 | 0.885859 | 0.936965 | 0.944745 | 0.956745 |
| Expt-7 | S32 | 0.963957 | 0.979852 | 0.721358 | 0.963957 | 0.979852 | 0.721358 | 0.885854 | 0.936745 | 0.944859 | 0.956958 |
| Expt-8 | S33 | 0.963789 | 0.979741 | 0.721857 | 0.963789 | 0.979741 | 0.721857 | 0.885859 | 0.936965 | 0.944745 | 0.956745 |
| Expt-9 | S41 | 0.963957 | 0.979852 | 0.721358 | 0.963957 | 0.979852 | 0.721358 | 0.885854 | 0.936745 | 0.944859 | 0.956958 |
| Expt-10 | S42 | 0.933747 | 0.852745 | 0.811748 | 0.963778 | 0.979748 | 0.721968 | 0.885885 | 0.936748 | 0.944778 | 0.956758 |
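A simple way to summarize the sensitivity table above is to find, for each experiment, the alternative whose score moves furthest from the original-weights row. The sketch below is one possible reading, not the authors' procedure; `largest_shift` and the 0.001 stability threshold are assumptions chosen for illustration.

```python
ALTS = [f"A{i}" for i in range(1, 11)]

# Rows copied from the sensitivity table above.
original = [0.9332, 0.8521, 0.8115, 0.9638, 0.9796,
            0.7218, 0.8857, 0.9367, 0.9446, 0.9568]
experiments = {
    "Expt-1": [0.933524, 0.852556, 0.885644, 0.968567, 0.979647,
               0.721745, 0.885777, 0.936774, 0.944471, 0.956779],
    "Expt-2": [0.933124, 0.852745, 0.811784, 0.963774, 0.979789,
               0.721999, 0.885777, 0.936852, 0.944854, 0.956857],
    "Expt-3": [0.933789, 0.852654, 0.811456, 0.963789, 0.979741,
               0.721857, 0.885859, 0.936965, 0.944745, 0.956745],
    "Expt-4": [0.933745, 0.852963, 0.811951, 0.963957, 0.979852,
               0.721358, 0.885854, 0.936745, 0.944859, 0.956958],
    "Expt-5": [0.933754, 0.852748, 0.811754, 0.963778, 0.979785,
               0.721254, 0.885745, 0.936875, 0.944425, 0.956457],
    "Expt-6": [0.963789, 0.979741, 0.721857, 0.963789, 0.979741,
               0.721857, 0.885859, 0.936965, 0.944745, 0.956745],
    "Expt-7": [0.963957, 0.979852, 0.721358, 0.963957, 0.979852,
               0.721358, 0.885854, 0.936745, 0.944859, 0.956958],
    "Expt-8": [0.963789, 0.979741, 0.721857, 0.963789, 0.979741,
               0.721857, 0.885859, 0.936965, 0.944745, 0.956745],
    "Expt-9": [0.963957, 0.979852, 0.721358, 0.963957, 0.979852,
               0.721358, 0.885854, 0.936745, 0.944859, 0.956958],
    "Expt-10": [0.933747, 0.852745, 0.811748, 0.963778, 0.979748,
                0.721968, 0.885885, 0.936748, 0.944778, 0.956758],
}


def largest_shift(row):
    """Return (alternative, |shift|) for the biggest move away from `original`."""
    shifts = [abs(new - old) for new, old in zip(row, original)]
    idx = max(range(len(shifts)), key=shifts.__getitem__)
    return ALTS[idx], shifts[idx]


worst = {name: largest_shift(row) for name, row in experiments.items()}
# Hypothetical threshold: treat an experiment as stable if no score moves by 0.001 or more.
stable = [name for name, (_, shift) in worst.items() if shift < 0.001]

for name, (alt, shift) in worst.items():
    print(f"{name}: largest shift {shift:.6f} at {alt}")
```

With the tabulated values, Expt-2 to Expt-5 and Expt-10 stay within the threshold, while Expt-1 (at A3) and Expt-6 to Expt-9 (at A2) show much larger shifts. In Expt-6 to Expt-9 the A1 to A3 entries are identical to the A4 to A6 entries; the sketch only surfaces where the tabulated values move and does not say why.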

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alharbi, A.; Ansari, M.T.J.; Alosaimi, W.; Alyami, H.; Alshammari, M.; Agrawal, A.; Kumar, R.; Pandey, D.; Khan, R.A. An Empirical Investigation to Understand the Issues of Distributed Software Testing amid COVID-19 Pandemic. Processes 2022, 10, 838. https://doi.org/10.3390/pr10050838
