Abstract

In this research, we consider cyber risk in insurance using a quantum approach, with a focus on the differences between reported cyber claims and the number of cyber attacks that caused them. Unlike the traditional probabilistic approach, quantum modeling makes it possible to deal with non-commutative event paths. We investigate the classification of cyber claims according to different cyber risk behaviors to enable more precise analysis and management of cyber risks. Additionally, we examine how historical cyber claims can be utilized through the application of copula functions for dependent insurance claims. We also discuss classification, likelihood estimation, and risk-loss calculation within the context of dependent insurance claim data.

1. Introduction

The cyber insurance market is rapidly growing due to the effects of digital transformation in today’s world. Cyber risk, as both an emerging threat and an opportunity, is gaining prominence in the insurance landscape. Traditional insurance policies, such as those for motor vehicles or property, are increasingly incorporating cyber risks. This change is driven by the advent of connected and autonomous vehicles and the adoption of smart homes equipped with devices connected to servers and satellites. A major concern with traditional insurance policies is the undervaluation of cyber risk and ‘silent cyber’, which refers to previously unknown exposures that have neither been underwritten nor billed. Nevertheless, identifying and mitigating the exposure to silent cyber is possible (Aon 2018).

Cybercrime can manifest in many forms, including ransomware attacks, hacking, phishing, malware, spoofing, purchase fraud, theft of customer data, and tax fraud. This diversity, combined with the short history of available data and a rapidly evolving environment, makes handling cyber insurance claims and developing models significantly more complex than in traditional insurance (see, e.g., Dacorogna and Kratz 2022, 2023; Eling 2020; Tsohou et al. 2023). When evaluating common cyber risk scenarios, it is important to consider potential reputational damage, loss of confidential information, financial losses, regulatory fines, data privacy violations, data availability and integrity issues, contractual violations, and implications for third parties. After a cyber incident, the recovery time is crucial for mitigating business interruption. For example, the average recovery time for ransomware attacks is approximately 19 days (Tsohou et al. 2023).

Cyber insurance losses are generally categorized as ‘first party’ and ‘third party’. First-party losses are those that the insured party directly incurs. Third-party liability covers claims made by individuals or entities who allege they have suffered losses as a result of the insured’s actions (Romanosky et al. 2019; Tsohou et al. 2023). In the current research, we are focusing on first-party losses.

In today’s interconnected world, the perspective on risks is evolving. New emerging risks, technological advancements, and globalization increase the interdependence among risk parameters within the industry. For example, as demonstrated by COVID-19, a virus originating in one location can quickly escalate into a global pandemic, affecting insurance claims across distant regions. As a result, developing dependent risk models plays a crucial role in achieving better pricing in the insurance industry. However, employing dependent risk models is more complex than using independent models in predictive analytics. In actuarial science, copula functions are frequently used to model such dependencies (see, e.g., Constantinescu et al. 2011; Eling and Jung 2018; Embrechts et al. 2003; Lefèvre 2021).

Our goal here is to treat cyber claim amounts as quantum data rather than classical data because of the assumed uncertainty in the number of cyber attacks. In this context, the number of claims does not always correspond to the number of events (cyber attacks), so we analyze the cyber data by assuming that a single cyber claim can result from more than one cyber attack. Quantum methods originate from physics and have since been applied in various other fields. Much of the theory and its applications can be found in the books by Baaquie (2014), Chang (2012), Griffiths (2002), and Parthasarathy (2012). We also mention some recent work related to our subject. The analysis of quantum data in finance and insurance is studied in Hao et al. (2019) and Lefèvre et al. (2018). Copulas and quantum mechanics are explored in Al-Adilee and Nánásiová (2009) and Zhu et al. (2023). Cyber insurance pricing and modeling with copulas are discussed, e.g., in Awiszus et al. (2023), Eling and Jung (2018), and Herath and Herath (2011).

In this paper, we start with a study on quantum theory for non-commutative paths. Next, we analyze synthetic data using a data classification method and risk-error functions. Finally, we use a classical copula function to predict future dependent claims.

2. Quantum Claim Data

Historical claim data from traditional insurance policies can be considered as classical data as there is generally no uncertainty about the number and amounts of claims, except in the cases of fraud and misreporting. Cyber claims, however, differ from traditional insurance claims in several ways, as listed in Table 1. Therefore, they should be handled from a different perspective.

Table 1.

Differences between traditional insurance and cyber insurance.

Cyber damage is often detected much later and may result from multiple cyber attacks originating from diverse sources. According to the Cost of a Data Breach Report (IBM 2023), the average time taken to identify and contain a data breach in 2022 was 277 days. Thus, we assume that the number of claims is 1 when the damage is noticed after 277 days. However, this damage could have originated from multiple sources at various times during those days.

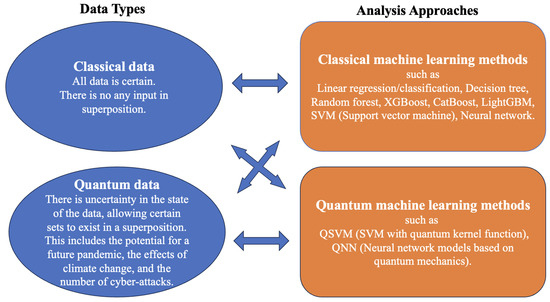

In Figure 1, the data types and analysis approaches are explained and illustrated (IBM 2024). While analyzing classical data using deterministic methods is widespread, the use of quantum algorithms—including quantum kernels and quantum neural networks—is becoming increasingly common. As previously noted, cyber insurance claims are considered under the assumption that they originate from several stochastic processes. Therefore, we will treat them as quantum data and examine them by computing likelihood and risk-loss functions within the context of dynamic classification.

Figure 1.

Data types and analysis methods.

3. Quantum for Non-Commutative Event Paths

Event paths and projectors. In a probabilistic setup, an event path is modeled as a product of indicators as

Such indicators correspond to the projection operators in quantum theory. Recall that an operator $P$ is called a projector if $P$ is self-adjoint and idempotent (i.e., $P^* = P$ and $P^2 = P$). A basic projector is defined via a unit vector $e$ by

$$P_e = |e\rangle\langle e|,$$

where $|e\rangle$ and $\langle e|$ are, respectively, column and row vectors that describe the quantum state of the system. They correspond to the bra-ket notation, also called Dirac notation. A general projector is then given by

where denotes an orthonormal basis.

The probability of the system in the ground state e is measured by the expectation of (1). This expected value is obtained via a usual trace function as

$$\mathbb{E}_\rho(P_e) = \operatorname{tr}(\rho P_e) = \langle e|\rho|e\rangle,$$

where $\rho$ is a density operator, i.e., a quantum state matrix of the system.

In quantum, a non-commutative version of the event path is defined as a product of projectors as

Hereafter, the modeling is initiated by considering paths having the following product form

In our context, is an event stating that the genuine customer has the right to exercise a financial claim if any, and the are bounded self-adjoint operators indicating possible obstacles such as computer malfunctions, cyberattacks, criminal activities of a fraudster …

A self-adjoint operator Q is known to be bounded if it can be expanded as

where and are the eigenvectors and eigenvalues of Q (i.e., ). The probability of the measurement is extended by linearity of the expectation to

after using (2).

Note that the path is not a projector in general. However, when and Q is a projector, then (provided that ) and it is a projector if and only if P and Q are commutative, as follows from Halmos’ two projections theorem (see Böttcher and Spitkovsky 2010).

Quantum Risk Model. Let us first look at a genuine customer model similar to the one introduced in Lefèvre et al. (2018). A customer has the right to exercise financial claims. Any claim amount, if requested, is given by a fixed real number m which corresponds to a small claim for a short period of time. If not requested, the amount is 0.

Recall that an operator $Z$ is observable if it is self-adjoint (i.e., $Z^* = Z$). For this model, the overall observable H is given by the following sum

This includes the actual amount modeled, with the help of the tensor product, as

in agreement with Hao et al. (2019); Lefèvre et al. (2018). The exercise right is then described by the state projection , thus

so that H becomes

Quantum Risk Model with Obstacles (called (O) model). This time, we hypothesize that the projector may be vulnerable to potential cyberattacks. We model the corresponding event as a path of the form (1), using different parameters. Instead of (5), the j-th overall capital in this (O) model is then defined by

We now need to determine the overall observable . This will be conducted using the following result.

Lemma 1.

A general path σ admits the representation

Proof.

Returning to the (O) model, we have for all j by virtue of (9). Since , the formula (8) of becomes

From (10), the observable is thus given by

For clarity, let us assume that all are equal to the same a. In other words, the obstacle activities are considered here to be homogeneous ((OH) case). From (7) and (11), we have proven the following result.

Proposition 1.

Define

In the (OH) case,

More generally, we suppose there is one line of claims for the genuine customer model, yielding the observable , and n separate risk models with homogeneous obstacles, yielding the observables , . This combined claim process is called (CC) model.

Corollary 1.

For the (CC) model, the overall observable is modeled by

In Section 4, we will consider such a (CC) process with three possible obstacles, i.e., three (O) risk models, which leads to model the data using a sum of three stochastic processes. Before that, we present a few simple examples for illustration.

Example 1.

Consider two events A and B such that . Let and be the indicators of these events. Suppose that a sequence of events gives the first eight outcomes . In probability, the associated product of indicators is

because of the commutative property of indicators (see Lefèvre et al. 2017 for classical exchangeable sequences).

In quantum, and are replaced by projectors P and Q, respectively. Since projectors do not commute, in general, the associated product of projectors is as follows:

So, the order in which they are applied can provide different results.
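To make the effect of ordering concrete, the following minimal sketch builds two rank-one projectors in dimension 2 and compares the products taken in both orders. The vectors, the density matrix `rho`, and the helper names (`projector`, `matmul`, `trace`) are illustrative assumptions and do not come from the paper; only the Python standard library is used.

```python
import math

def matmul(a, b):
    """Multiply two square matrices stored as nested lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def projector(e):
    """Rank-one projector |e><e| for a real unit vector e."""
    return [[ei * ej for ej in e] for ei in e]

def trace(a):
    """Sum of the diagonal entries."""
    return sum(a[i][i] for i in range(len(a)))

# Hypothetical directions: P projects on the first axis, Q on the diagonal.
P = projector([1.0, 0.0])
Q = projector([1.0 / math.sqrt(2), 1.0 / math.sqrt(2)])

PQ = matmul(P, Q)
QP = matmul(Q, P)

# Hypothetical density matrix: a pure state aligned with the first axis.
rho = projector([1.0, 0.0])

# Trace expectations tr(rho * path) for two paths that differ only in ordering.
value_PQP = trace(matmul(rho, matmul(P, matmul(Q, P))))
value_QPQ = trace(matmul(rho, matmul(Q, matmul(P, Q))))

print(PQ != QP)               # the two products differ
print(value_PQP, value_QPQ)   # so do the expectations
```

With commuting indicators both orderings would collapse to the same product; here the two trace values differ, which is the point of the example.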

Example 2.

For three events, we introduce the qubits . The outcomes then are as follows:

A basis for a qubit system is given by the eight states

so that for a ψ system,

where the represent amplitudes of the states and satisfy .

Example 3.

In the continuation of Example 1, consider for P and Q the following Jordan block matrices

where p and q take non-null values. We observe that and , so they are not projectors.

Defining the density ρ by

we then obtain

Basically, matrices with eigenvalues 1 cannot be treated as events, as this can lead to nonsensical results. How to deal with non-self-adjoint matrices is an important question; several methods are proposed in quantum modeling.

4. Quantum Approach to Cyber Insurance Claims

The overall compound claim is viewed here as the path resulting from a series of cyber attacks. Specifically, let denote the total cyber insurance claims that occurred during successive small time intervals , over a horizon of length . Each claim is assumed to come from a combination of several stochastic processes, and the corresponding data are treated as quantum data. Let m be the mean of and its frequency rate, so that measures the expected loss amount.

Our approach consists of two main steps: (i) learning patterns from existing data by dividing it into several stochastic processes, and (ii) using copula functions to generate data for estimating future claims. This section addresses point (i), while point (ii) will be discussed in the next section.

For illustration, we examine how to split historical claim data into (at most) three different stochastic processes. Naturally, if security vulnerabilities evolve over time, our current data may become less reliable. However, we can make it usable again by treating data as the result of combined processes and understanding their patterns.

Each claim is here the sum of the claims generated by three processes and can be expressed as

where given any , the claims , , are i.i.d. random variables with means , and they occur independently at rates . This implies that

The total expected claim amount per unit of time is shown in Table 2 when there are at most three generating processes.

Table 2.

Generating processes with claim means and rates for (16).
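As a numerical check of the expected total claim per unit of time, the sketch below sums mean times rate over the generating processes and compares the result with a simple simulation. The means, the rates, the Bernoulli occurrence of at most one claim per process and unit, and the exponential claim amounts are all assumptions made for illustration; the paper does not specify them here.

```python
import random

def expected_claim_per_unit(means, rates):
    """Expected total claim per unit of time: sum of mean * rate over processes."""
    if len(means) != len(rates):
        raise ValueError("means and rates must have the same length")
    return sum(m * lam for m, lam in zip(means, rates))

def simulate_mean_claim(means, rates, n_units, seed=0):
    """Average simulated total claim per unit of time.

    Assumptions (not from the paper): in each unit, process j produces at most
    one claim, with probability rates[j], and the claim amount is exponential
    with mean means[j].
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_units):
        for m, lam in zip(means, rates):
            if rng.random() < lam:
                total += rng.expovariate(1.0 / m)
    return total / n_units

# Hypothetical parameters for three generating processes.
means = [5.0, 12.0, 30.0]
rates = [0.5, 0.3, 0.2]

theoretical = expected_claim_per_unit(means, rates)
empirical = simulate_mean_claim(means, rates, n_units=100_000)
print(theoretical, empirical)
```

The simulated average should settle close to the closed-form value as the number of simulated units grows.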

Additionally, let us assume that is, for example, a combination of at most two claims per unit of time. In this case, (16) simplifies to

where is positive but the ’s can be zero. All possible scenarios for the mean claims are listed in Table 3 in the case . For example, if , it is the 6-th scenario which is appropriate because the values in the classes are then in ascending order; if , this is the 11-th scenario for the same reason.

Table 3.

Mean claims scenarios ranked in ascending order when , for (17).

Moreover, Table 4 gives the corresponding total claims , , and their frequencies , the probabilities of occurrence being .

Table 4.

Total claims and their frequencies for (17).

4.1. Hamiltonian Operator

Observable quantities are represented by self-adjoint operators. In the paper Lefèvre et al. (2018), we showed how to analyze such data using the quantum spectrum. In quantum mechanics, the spectrum of an operator is precisely the set of eigenvalues which correspond to observables for certain Hermitian operators/self-adjoint matrices.

Consider the model (17) with three processes and at most two claims per unit of time. We define the corresponding Hamiltonian observable operator by the following tensorial product

where and are the operators for one and two claims occurrences which are defined by the matrices

B is the operator for the claim amount on a jump which is defined by the matrix

and is the identity operator of dimension 3 (ln is just applied to diagonal elements of the matrices).

The two terms of claim amounts in (18) are the diagonal matrices

Therefore, H is a self-adjoint matrix with eigenvalues

so its spectrum has nine distinct eigenvalues

4.2. Likelihood and Risk Functions

First, we classify the data with respect to the eigenvalues (19) of the operator (18) and label them. The order of two successive claims in a unit of time is ignored. The different classes are listed below:

Using the Maxwell–Boltzmann statistics, the associated likelihood function is given by

where are the numbers of claims in class , and are the normalized frequencies of Table 4 for the three Poisson processes.
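One common reading of Maxwell–Boltzmann counting is a multinomial likelihood, in which the class counts are weighted by the class probabilities. The sketch below evaluates that form on the log scale. It is an assumption about the shape of the likelihood and not a transcription of the paper's formula; the function name and the toy inputs are hypothetical.

```python
import math

def multinomial_log_likelihood(counts, probs):
    """Log of N! / prod(n_k!) * prod(p_k ** n_k) for class counts n_k."""
    if len(counts) != len(probs):
        raise ValueError("counts and probs must have the same length")
    n_total = sum(counts)
    log_l = math.lgamma(n_total + 1)          # log N!
    for n_k, p_k in zip(counts, probs):
        log_l -= math.lgamma(n_k + 1)         # minus log n_k!
        if n_k > 0:
            if p_k <= 0.0:
                return float("-inf")          # observed class with zero probability
            log_l += n_k * math.log(p_k)
    return log_l

# Toy input: two classes, one observation each, equal probabilities.
toy = multinomial_log_likelihood([1, 1], [0.5, 0.5])
print(math.exp(toy))
```

Working on the log scale avoids overflow in the factorials when the number of claims is large.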

Finally, the risk function is calculated via the square distance (), or the Gaussian (squared exponential) distance (, ). For the square distance kernel, the risk function is here

and for the Gaussian distance kernel,

To minimize such a risk value, we perform optimization on all possible values of . Additionally, we determine boundaries using the neighborhood approach.
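The optimization step can be sketched as a brute-force search over candidate means. In this sketch each observation is charged its distance to the nearest of the nine class totals (three single claims and six unordered pairs), using either a squared distance or one plausible Gaussian form. The exact risk expressions of the paper are not reproduced, and the dataset, the grid, and the ordering constraint on the means are hypothetical.

```python
import math
from itertools import combinations, combinations_with_replacement

def class_values(means):
    """Nine class totals: three single claims plus six unordered pairs."""
    pairs = [a + b for a, b in combinations_with_replacement(means, 2)]
    return sorted(list(means) + pairs)

def square_risk(data, means):
    """Sum of squared distances from each observation to its nearest class total."""
    values = class_values(means)
    return sum(min((x - v) ** 2 for v in values) for x in data)

def gaussian_risk(data, means, sigma=1.0):
    """Assumed Gaussian form: sum of 1 - exp(-d^2 / (2 sigma^2)) over observations."""
    values = class_values(means)
    return sum(1.0 - math.exp(-min((x - v) ** 2 for v in values) / (2.0 * sigma ** 2))
               for x in data)

def grid_search(data, grid, risk=square_risk):
    """Return (best_risk, best_means) over increasing triples taken from the grid."""
    best = None
    for candidate in combinations(sorted(grid), 3):
        r = risk(data, candidate)
        if best is None or r < best[0]:
            best = (r, candidate)
    return best

# Hypothetical dataset built from the class totals of means (5, 12, 30).
data = [5, 12, 30, 10, 17, 35, 24, 42, 60]
best_risk, best_means = grid_search(data, range(1, 36))
print(best_risk, best_means)
```

A full grid search is only practical for small grids; it stands in here for whichever optimizer is actually used.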

4.3. Illustration

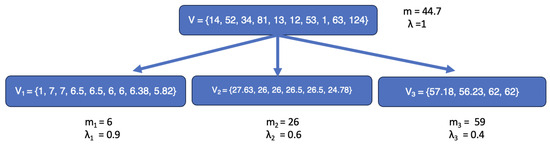

Let us consider the following dataset

A small dataset is used here only to simplify the demonstration. The algorithm can also be applied to large datasets.

Table 5 shows the means and the rates for the three processes, obtained using the two previous distance kernels. Neighborhood optimization is conducted here by perturbing the boundaries between classes with . Of course, it is possible to achieve better results with an advanced optimization technique.

Table 5.

Means and rates obtained with different distance kernels.

As expected, using different kernels with nearest-neighbor approaches influences the results. For the current analysis, we applied the squared distance kernel and thus obtained , , (which corresponds to scenario 6 of Table 3). In addition, the dataset is distributed in the nine classes according to the distribution indicated in Table 6. For this classification, midpoint values are used to define class boundaries. Note that these boundaries are dynamic and change in response to the values of , , .

Table 6.

Classification of the data set for , , .
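The midpoint rule can be illustrated as follows: the nine class totals are sorted, the boundary between two neighbouring classes is placed halfway between their totals, and each claim is labelled by the interval it falls into. The means used below are hypothetical, and the labels (tuples of process indices) are a convention chosen for this sketch.

```python
from bisect import bisect_right
from itertools import combinations_with_replacement

def labelled_classes(means):
    """Sorted list of (total, label); a label lists the contributing processes."""
    idx = range(len(means))
    singles = [(means[i], (i + 1,)) for i in idx]
    pairs = [(means[i] + means[j], (i + 1, j + 1))
             for i, j in combinations_with_replacement(idx, 2)]
    return sorted(singles + pairs)

def midpoint_boundaries(values):
    """Boundaries halfway between consecutive sorted class totals."""
    return [(a + b) / 2.0 for a, b in zip(values, values[1:])]

def classify(x, means):
    """Label of the class whose interval contains the claim amount x."""
    classes = labelled_classes(means)
    values = [v for v, _ in classes]
    k = bisect_right(midpoint_boundaries(values), x)
    return classes[k][1]

# Hypothetical means for the three processes.
means = (5.0, 12.0, 30.0)
boundaries = midpoint_boundaries([v for v, _ in labelled_classes(means)])
print(boundaries)
print(classify(16.0, means))   # nearest total is 17 = 5 + 12
```

Because the boundaries are recomputed from the means, they move whenever the estimated means change, which matches the dynamic behaviour described above.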

The classes containing two claims, i.e., , , , , , , are separated with respect to the weights of , , as follows:

So, the two claims in of sum 14, for example, yield the amounts 7 and 7. For , the sum 34 is subdivided into and ; for , 63 becomes and ; for , 81 yields and .
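A minimal sketch of the splitting step is given below, assuming that a two-claim total is divided in proportion to the means of the two contributing processes. This proportional rule is an interpretation of "with respect to the weights"; the means passed in are hypothetical.

```python
def split_pair(total, mean_i, mean_j):
    """Split a two-claim total in proportion to the two process means."""
    if mean_i <= 0 or mean_j <= 0:
        raise ValueError("means must be positive")
    first = total * mean_i / (mean_i + mean_j)
    return first, total - first

# Two claims from the same process: the total is halved.
same_process = split_pair(14, 5.0, 5.0)

# Two claims from different processes with hypothetical means 5 and 12.
mixed = split_pair(34, 5.0, 12.0)
print(same_process, mixed)
```

When both claims come from the same process the weights are equal, so a total of 14 is split into 7 and 7 whatever the mean of that process is.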

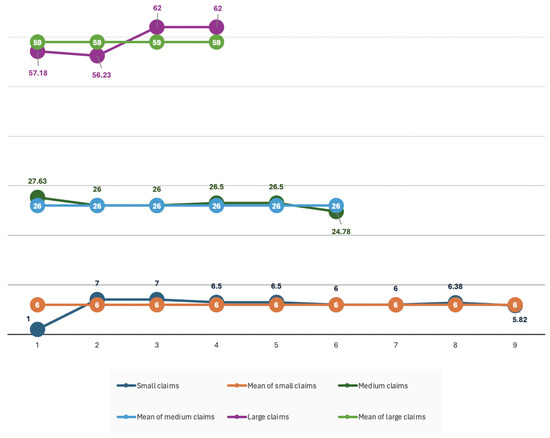

Following this method, the claims in the three processes are listed in Table 7 and displayed with their means and frequencies in Figure 2. According to Table 5, the claim arrival rates for these processes are , , .

Table 7.

Claims for the sub-processes.

Figure 2.

Original claims versus split claims for the three stochastic processes.

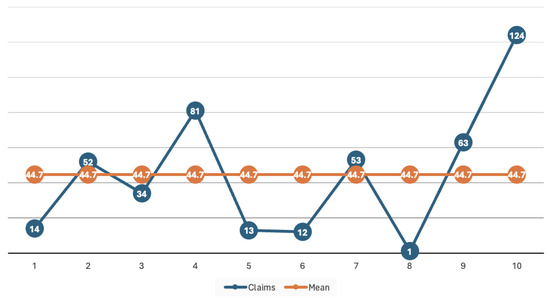

As shown in Figure 3 and Figure 4, the deviation from the mean in the sub-processes is minimized after the claims splitting and categorization process.

Figure 3.

Original claims and mean before analysis.

Figure 4.

Split and categorized claims and means after analysis.

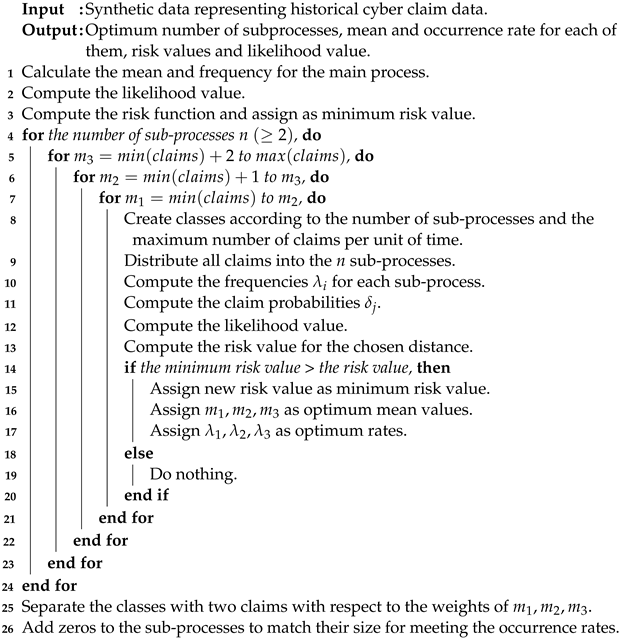

The detailed pseudo-code is provided in Algorithm 1.

Algorithm 1.

Splitting the main claim process and classifying the cyber data.

5. Generating Dependent Cyber Claims with Copulas

In the previous section, we analyzed a synthetic dataset by dividing the main claim process into three subprocesses. We are now able to estimate new claims by working precisely within this framework.

5.1. When the Sub-Processes Are Independent

Let us start by assuming that the behaviors of the subprocesses are always independent. Then, we can directly estimate the spectrum of future cyber claims by

where , , is the set of distinct elements from , which are in number . For example, in Section 4.3, and . So, the expected value of the spectrum is as follows

where is the k-th element of the column vector (of size ), and denotes its probability mass function which is calculated through the vector c given by

Without change, since merging the separate claims should give us the same result. In conclusion, in (20) applies in the independent case.

Of course, if the behaviors of the three processes change due to emerging technologies or risks, we may modify the claims associated with these processes accordingly as follows:

where , , represents the amount of change in each subprocess.

5.2. When the Sub-Processes Can Be Correlated

Consider a scenario involving the presence of dependence effects over time. A practical way to predict future claims is then to use copula functions. We briefly recall the basic points of copula theory. Let $(U_1, \ldots, U_n)$ be a vector of n random variables that are uniformly distributed on $[0,1]$. A copula C is the joint distribution function of such a vector, i.e.,

$$C(u_1, \ldots, u_n) = P(U_1 \le u_1, \ldots, U_n \le u_n), \quad u_1, \ldots, u_n \in [0,1].$$

In the particular degenerate case where the $U_i$ are independent, $C(u_1, \ldots, u_n) = u_1 \cdots u_n$. Sklar’s key theorem states that the joint distribution function F of any continuous vector $(X_1, \ldots, X_n)$ having marginal distribution functions $F_1, \ldots, F_n$ admits a unique copula representation as

$$F(x_1, \ldots, x_n) = C(F_1(x_1), \ldots, F_n(x_n)).$$

Now, a copula is called Archimedean if it has the simplified form

$$C(u_1, \ldots, u_n) = \phi\left(\phi^{-1}(u_1) + \cdots + \phi^{-1}(u_n)\right),$$

for some univariate function $\phi$ which is completely monotonic with $\phi(0) = 1$ and $\lim_{t \to \infty} \phi(t) = 0$. Observe that in this case, the vector $(U_1, \ldots, U_n)$ is automatically exchangeable (in de Finetti's sense). We note, however, that the symmetry of the distribution can be broken by making this vector only partially exchangeable (see Lefèvre 2021). One of the most common Archimedean copulas is the Clayton copula defined by

$$C(u_1, \ldots, u_n) = \left(u_1^{-\theta} + \cdots + u_n^{-\theta} - n + 1\right)^{-1/\theta}, \qquad (21)$$

where $\theta$ represents a positive parameter. Here, we will work precisely with this copula for $n = 2$.
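For the bivariate case, the Clayton copula and a sampler based on the standard conditional-inversion method can be sketched as follows. The parameter value, the seed, and the function names are illustrative assumptions; only the Python standard library is used.

```python
import random

def clayton_cdf(u, v, theta):
    """Bivariate Clayton copula C(u, v) for theta > 0."""
    if theta <= 0:
        raise ValueError("theta must be positive")
    if u <= 0.0 or v <= 0.0:
        return 0.0
    return (u ** -theta + v ** -theta - 1.0) ** (-1.0 / theta)

def clayton_sample(theta, rng):
    """Draw one pair (u, v) by inverting the conditional distribution of v given u."""
    u = 1.0 - rng.random()   # uniform on (0, 1]
    w = 1.0 - rng.random()   # uniform on (0, 1], conditional probability level
    v = (u ** -theta * (w ** (-theta / (1.0 + theta)) - 1.0) + 1.0) ** (-1.0 / theta)
    return u, v

rng = random.Random(42)
pairs = [clayton_sample(2.0, rng) for _ in range(5000)]

# Empirical covariance of the sampled pairs (positive under Clayton dependence).
mean_u = sum(u for u, _ in pairs) / len(pairs)
mean_v = sum(v for _, v in pairs) / len(pairs)
cov_uv = sum((u - mean_u) * (v - mean_v) for u, v in pairs) / len(pairs)
print(clayton_cdf(0.3, 1.0, 2.0), cov_uv)
```

Setting one argument to 1 returns the other argument, as required of any copula, and the sampled pairs show the positive dependence induced by a positive parameter.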

More precisely, we assume that there is dependence between claims coming from the first and second sub-processes, while claims originating from the third one remain independent. Then, we use the bivariate Clayton copula given by (21) to model claims of these first two sub-processes. For a discrete version of the copula, we refer to Trivedi and Zimmer (2017) which provides its probability mass function, denoted , as

where for .
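A discrete joint mass function can be obtained from a copula by taking rectangle differences of the copula evaluated at the marginal cumulative probabilities, which is the usual construction for discrete data. The sketch below applies it to the first two sub-processes with a Clayton copula and then multiplies by an independent third process. The claim values, marginal probabilities, and parameter are hypothetical, and the code is an illustration of the construction under these assumptions and not the paper's Algorithm 2.

```python
def clayton_cdf(u, v, theta):
    """Bivariate Clayton copula C(u, v) for theta > 0."""
    if theta <= 0:
        raise ValueError("theta must be positive")
    if u <= 0.0 or v <= 0.0:
        return 0.0
    return (u ** -theta + v ** -theta - 1.0) ** (-1.0 / theta)

def cumulative(pmf):
    """Cumulative probabilities, with the last value forced to 1."""
    total, out = 0.0, []
    for p in pmf:
        total += p
        out.append(min(total, 1.0))
    out[-1] = 1.0
    return out

def joint_pmf(pmf1, pmf2, theta):
    """Joint mass function from rectangle differences of the copula."""
    f1 = [0.0] + cumulative(pmf1)
    f2 = [0.0] + cumulative(pmf2)
    def c(a, b):
        return clayton_cdf(a, b, theta)
    return [[c(f1[i + 1], f2[j + 1]) - c(f1[i], f2[j + 1])
             - c(f1[i + 1], f2[j]) + c(f1[i], f2[j])
             for j in range(len(pmf2))] for i in range(len(pmf1))]

def expected_total(values, pmfs, theta):
    """Expected total claim: processes 1 and 2 dependent, process 3 independent."""
    (x1, x2, x3), (p1, p2, p3) = values, pmfs
    joint12 = joint_pmf(p1, p2, theta)
    return sum(joint12[i][j] * p3[k] * (x1[i] + x2[j] + x3[k])
               for i in range(len(x1))
               for j in range(len(x2))
               for k in range(len(x3)))

# Hypothetical claim values (0 means no claim) and marginal probabilities.
values = ([0.0, 5.0], [0.0, 12.0], [0.0, 30.0])
pmfs = ([0.5, 0.5], [0.7, 0.3], [0.8, 0.2])

joint12 = joint_pmf(pmfs[0], pmfs[1], theta=2.0)
e_total = expected_total(values, pmfs, theta=2.0)
print(joint12, e_total)
```

The copula changes how probability mass is shared between joint outcomes but leaves the marginals untouched, so the expected total equals the sum of the marginal expectations while the chance of simultaneous claims in the first two sub-processes rises above its independent value.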

Let c be the column vector (of dimension ) representing this mass function. The joint probability distribution for the three sub-processes is then given by

Therefore, the expected spectrum of future cyber claims becomes

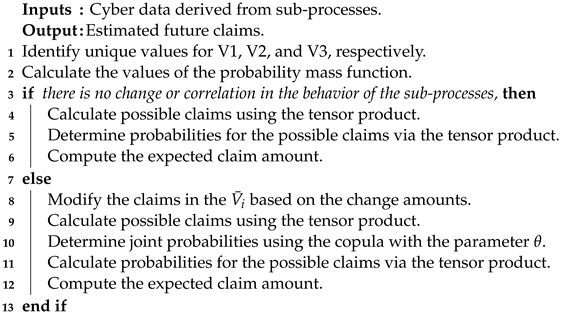

The detailed pseudo-code is provided in Algorithm 2 below.

Algorithm 2.

Merging correlated claims in the sub-processes.

6. Conclusions

With the advent of new technologies, such as generative AI, quantum computing and metaverse platforms, coupled with challenges such as climate change, pandemics and globalization, humanity has entered a period of exponential change. In such a rapidly evolving environment, relying solely on historical data can lead to incorrect predictions. As a solution, we used a stochastic process based on historical data, dividing it into three distinct sub-processes to better discern patterns. To account for parameter changes and correlated cases, we used the Clayton copula, one of the well-known Archimedean copulas, which allows us to predict future claims by updating claims from the sub-processes and considering the magnitude of change. This methodology illustrates how historical data that have become unreliable can be made usable again in a rapidly changing environment.

Analyzing cyber insurance data is far more complex than what has been discussed here. In particular, it would be extremely beneficial to process actual cyber data and test the performance of the non-standard approach we are proposing. Nevertheless, in this work, we have demonstrated how cyber data can be considered as quantum data. We also explained how to segment the dataset using various subprocesses and how to make predictions in an uncertain environment.

In the analysis of cyber insurance data and forecasting, working with researchers in an agile environment and updating current models are essential. This necessity arises because hackers are becoming more innovative, and technology is rapidly evolving. In this paper, we have introduced a different approach from a mathematical perspective. We would like to emphasize that this approach is only applicable if the data are distributed across a wide spectrum, exhibiting high bias relative to the mean, in order to yield more accurate estimates. The approach can be used for large datasets. However, from the industrial perspective, the model should be tested and validated by experts using real cyber insurance data.

Author Contributions

Conceptualization, C.L., M.T., S.U. and M.C.; methodology, C.L., M.T. and S.U.; software, M.T.; validation, C.L., S.U. and M.C.; formal analysis, M.T.; investigation, C.L., S.U. and M.C.; resources, M.T.; data curation, M.T.; writing—original draft preparation, C.L. and M.T.; writing—review and editing, S.U. and M.C.; visualization, M.T.; supervision, C.L., S.U. and M.C.; project administration, M.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Al-Adilee, Ahmed M., and Olga Nánásiová. 2009. Copula and S-Map on a Quantum Logic. Information Sciences 179: 4199–207.

- Awiszus, Kerstin, Thomas Knispel, Irina Penner, Gregor Svindland, Alexander Voß, and Stefan Weber. 2023. Modeling and Pricing Cyber Insurance: Idiosyncratic, Systematic, and Systemic Risks. European Actuarial Journal 13: 1–53.

- Baaquie, Belal E. 2014. Path Integrals and Hamiltonians: Principles and Methods. Cambridge: Cambridge University Press.

- Böttcher, Albrecht, and Ilya M. Spitkovsky. 2010. A Gentle Guide to the Basics of Two Projections Theory. Linear Algebra and its Applications 432: 1412–59.

- Chang, Kow Lung. 2012. Mathematical Structures of Quantum Mechanics. Singapore: World Scientific.

- Constantinescu, Corina, Enkelejd Hashorva, and Lanpeng Ji. 2011. Archimedean Copulas in Finite and Infinite Dimensions-With Application to Ruin Problems. Insurance: Mathematics and Economics 49: 487–95.

- Dacorogna, Michel, and Marie Kratz. 2022. Special issue “Cyber risk and security”. Risks 10: 112.

- Dacorogna, Michel, and Marie Kratz. 2023. Managing cyber risk, a science in the making. Scandinavian Actuarial Journal 10: 1000–21.

- Eling, Martin. 2020. Cyber risk research in business and actuarial science. European Actuarial Journal 10: 303–33.

- Eling, Martin, and Kwangmin Jung. 2018. Copula approaches for modeling cross-sectional dependence of data breach losses. Insurance: Mathematics and Economics 82: 167–80.

- Embrechts, Paul, Filip Lindskog, and Alexander McNeil. 2001. Modelling Dependence with Copulas and Applications to Risk Management. In Handbook of Heavy Tailed Distributions in Finance. Edited by Svetlozar T. Rachev. Amsterdam: Elsevier, chp. 8. pp. 329–84.

- Griffiths, Robert B. 2002. Consistent Quantum Theory. Cambridge: Cambridge University Press.

- Hao, Wenyan, Claude Lefèvre, Muhsin Tamturk, and Sergey Utev. 2019. Quantum Option Pricing and Data Analysis. Quantitative Finance and Economics 3: 490–507.

- Herath, Hemantha S. B., and Tejaswini C. Herath. 2011. Copula-Based Actuarial Model for Pricing Cyber-Insurance Policies. Insurance Markets and Companies 2: 7–20.

- Lefèvre, Claude. 2021. On Partially Schur-Constant Models and their Associated Copulas. Dependence Modeling 9: 225–42.

- Lefèvre, Claude, Stéphane Loisel, and Sergey Utev. 2017. On Finite Exchangeable Sequences and their Dependence. Journal of Multivariate Analysis 162: 93–109.

- Lefèvre, Claude, Stéphane Loisel, Muhsin Tamturk, and Sergey Utev. 2018. A Quantum-Type Approach to Non-Life Insurance Risk Modelling. Risks 6: 99.

- Parthasarathy, Kalyanapuram R. 2012. An Introduction to Quantum Stochastic Calculus. Basel: Birkhäuser.

- Romanosky, Sasha, Lillian Ablon, Andreas Kuehn, and Therese Jones. 2019. Content analysis of cyber insurance policies: How do carriers price cyber risk? Journal of Cybersecurity 5: 1–19.

- Trivedi, Pravin, and David Zimmer. 2017. A note on Identification of Bivariate Copulas for Discrete Count Data. Econometrics 5: 10.

- Tsohou, Aggeliki, Vasiliki Diamantopoulou, Stefanos Gritzalis, and Costas Lambrinoudakis. 2023. Cyber insurance: State of the art, trends and future directions. International Journal of Information Security 22: 737–48.

- Zhu, Daiwei, Weiwei Shen, Annarita Giani, Saikat Ray-Majumder, Bogdan Neculaes, and Sonika Johri. 2023. Copula-Based Risk Aggregation with Trapped Ion Quantum Computers. Scientific Reports 13: 18511.

- IBM. 2023. Cost of a Data Breach. Available online: https://www.ibm.com/reports/data-breach (accessed on 8 May 2024).

- IBM. 2024. Qiskit Textbook on IBM Quantum. Available online: https://learn.qiskit.org/course/machine-learning/introduction (accessed on 8 May 2024).

- Aon. 2018. Managing Silent Cyber. Available online: https://www.aon.com/getmedia/2b1ad492-dcf0-429e-9eda-828d49b1396a/aon-silent-cyber-solution-for-insurers.aspx (accessed on 8 May 2024).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).