1. Introduction

Acute ischemic stroke (AIS) is caused by the occlusion or blockage of small or large blood vessels due to a thrombus or embolism event, resulting in reduced blood flow to a portion of the brain tissue. It accounts for 87% of all strokes and has high morbidity and mortality [1,2]. Once an AIS occurs, a portion of the brain tissue may have already suffered irreversible damage (the Infarct Core, IC), and the surrounding brain tissue is also at risk due to reduced blood flow (the Ischemic Penumbra, IP) and may be salvageable [3,4]. Therefore, the goal of AIS treatment is to reperfuse the blood-deprived area before the salvageable IP transforms into the IC. The treatment methods for AIS patients mainly include intravenous thrombolysis and endovascular therapy [5]. Neuroradiologists usually select the appropriate treatment method for patients based on clinical guidelines, e.g., mechanical thrombectomy being more suitable when the IC volume is less than 70 mL, the IP volume is greater than 15 mL and the IP to IC ratio exceeds 1.8 [5,6,7,8]. Due to the extremely short 4.5 h treatment window, the rapid and accurate assessment of the volume and location of the IP and IC is important for reperfusion therapy decision making for AIS patients.
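The volume criteria quoted above can be written as a small check. This is only an illustrative sketch of the three thresholds named in the text (IC < 70 mL, IP > 15 mL, IP/IC > 1.8); the function name and the handling of a zero IC volume are assumptions, and it is not a clinical decision tool.

```python
def meets_thrombectomy_volume_criteria(ic_ml: float, ip_ml: float) -> bool:
    """Check the volume thresholds quoted in the text (hypothetical helper)."""
    if ic_ml < 0 or ip_ml < 0:
        raise ValueError("volumes must be non-negative")
    if ic_ml >= 70.0 or ip_ml <= 15.0:
        return False
    # Assumption: with no measurable core, the IP/IC ratio is treated as unbounded.
    if ic_ml == 0.0:
        return True
    return ip_ml / ic_ml > 1.8


print(meets_thrombectomy_volume_criteria(ic_ml=20.0, ip_ml=50.0))  # ratio 2.5
print(meets_thrombectomy_volume_criteria(ic_ml=20.0, ip_ml=30.0))  # ratio 1.5
```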

In clinical practice, neuroradiologists typically evaluate the IP and IC through manual delineation on multi-modal images, such as by using diffusion imaging to identify the IC and diffusion–perfusion mismatch to identify the IP. However, these manual segmentations are subject to interobserver and intraobserver variability and fatigue-related errors, and they are time consuming. Moreover, invasive imaging modalities are sometimes unavailable. Therefore, a rapid, objective, accurate and widely applicable method for automated IP and IC segmentation is desired in the computer-aided diagnosis of AIS.

Machine learning and deep learning methods have been extensively used in recent years for fully automatic medical image segmentation. Numerous general 3D medical image segmentation methods are available for the segmentation of the IP and IC, such as [9,10,11,12,13,14]. Additionally, some researchers have developed specialized machine learning and deep learning methods for infarct lesion segmentation. Gupta et al. [15] designed a U-shaped encoder–decoder network named MSNet. They utilized a combination of eight modalities of diffusion and perfusion maps to segment the IP and IC, where the diffusion–perfusion mismatch facilitates the differentiation between the IP and IC. Bhurwani et al. [16] utilized U-Net [17] to segment the IC and IP + IC from CTP scans, but they did not differentiate between the IP and IC. Lee et al. [18] and Vupputuri et al. [19] both adopted Diffusion-Weighted Imaging–Perfusion-Weighted Imaging (DWI-PWI) to quantify and differentiate the IP and IC. Werdiger et al. [20] explored XGBoost, followed by 3D neighborhood analysis, for the concurrent segmentation of the IP and IC on CTP scans. Tomasetti et al. [21] implemented a 4D Convolutional Neural Network (CNN) approach to leverage the spatiotemporal data contained within CTP scans, thereby delineating the IP and IC. Sathish et al. [22] deployed an adversarially trained CNN to segment the IP and IC simultaneously from multi-sequence Magnetic Resonance Imaging (MRI) scans. In summary, the specialized methods mentioned here either do not strictly differentiate between the IP and IC or they rely on multiple advanced imaging modalities such as CTP, PWI and DWI to differentiate the IP and IC. However, these advanced imaging techniques are time consuming and sometimes even unavailable, and fast and cheap Non-Contrast CT (NCCT) has seldom been considered in previous studies.

In this study, we propose a neural network named PC-Net, which relies solely on widely available, cheap and fast baseline NCCT scans to simultaneously segment the IP and IC. PC-Net has a seven-level U-shaped encoder–decoder architecture, relying on pure convolution. In the encoder, to model the varying shapes, sizes and locations of the infarct lesions, we designed the Multi-Scale Convolution (MSC) block. To model the anatomical symmetry and capture the difference between the left and right sides of the brain, we propose the Symmetry Enhancement (SE) block. In the attention-based decoder, we utilized hierarchical deep supervision mechanisms for the entire ischemic region (IP + IC) in the three deep levels and for differentiating the IP and IC at the three low levels. Through the effective strategies proposed above, we hypothesized that the PC-Net can segment the IP and IC from NCCT well. Our contributions are summarized as follows: (1) We propose the MSC block to model the high variability of AIS lesions. (2) We introduce the SE block to capture the differences between the bilateral hemispheres of the brain. (3) An attention-based decoder was employed to better integrate high-level and low-level features. (4) Hierarchical deep supervision was designed to more effectively differentiate between the IP and IC on NCCT.

2. Materials and Methods

2.1. Data Acquisition

We collected multi-modal data including DWI, Fluid-Attenuated Inversion Recovery (FLAIR) and NCCT from 197 AIS patients in a prospective stroke registry at a single academic center. The institutional review board of the Seoul National University Bundang Hospital approved the data analysis, image evaluation and modeling process (B-2102/667-106). The included patients or their next of kin provided written consent for the prospective clinical stroke registry to record and collect their data (B-1401/236-007, B-1706/403-303).

All the modalities were coregistered to NCCT. There were two inclusion criteria for the patient samples: (1) each modality’s data encompass the entire brain without significant artifacts, and (2) expert annotations of the ischemic tissue region are available. In the dataset, the number of slices in the sagittal view is 512 and the ranges of the number of slices in the coronal and axial views are 512–638 and 28–37, respectively. The range of spacing is 0.326–0.429 mm for both the sagittal and coronal views and 4.999–5.015 mm for the axial view. Ground-truth labels for the IC and IP on NCCT were defined by high signal regions on DWI and DWI-FLAIR mismatch areas, respectively. These labels were first annotated by a neuroradiologist (Qiong Chen) with over 5 years of experience using the software ITK-SNAP version 4.2.0 [23] and were then double checked by another neuroradiologist (Beom Joon Kim) with over 10 years of experience to achieve accurate annotations. Finally, we utilized these 197 annotated NCCT scans and divided them in a ratio of 7:2:1 for training, validation and testing, respectively.

2.2. Image Preprocessing

To eliminate the influence of the skull region, we first removed the skull following the method proposed by Najm et al. [24].

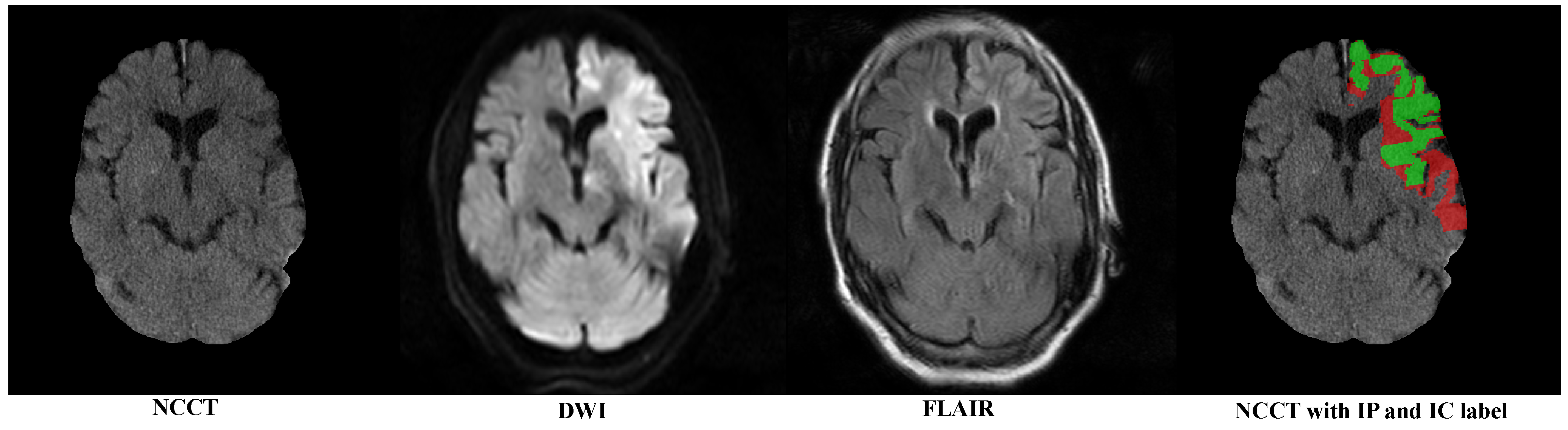

Figure 1 sequentially displays the NCCT after the skull removal, DWI with highlighted infarct signals, FLAIR showing a mismatch with DWI and the category labels for the IP (red) and IC (green).

Considering the robust and powerful performance of nnUNet [9] for medical image segmentation, we followed its preprocessing approach, which depends on the statistical information of a specific dataset (called the dataset fingerprint). Initially, the images were cropped based on the 3D bounding box of the brain tissue to avoid unnecessary computations. Subsequently, all the images were resampled to the dataset’s median voxel spacing: 0.3789 mm × 0.3789 mm × 5.0 mm. This helps CNNs, with their inductive bias, to better learn the typical sizes of brain anatomical structures. Finally, Z-Score normalization was performed based on the mean and variance of the segmentation target, after clipping the intensities to the 0.5th–99.5th percentile range. This is equivalent to considering the window width and window level of the target lesion or anatomical structure, which helps the network learn more effective features and accelerates convergence.
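The clipping and Z-Score step described above can be sketched in a few lines. This is a minimal pure-Python illustration, assuming that the statistics come from a list of foreground (target) intensities and that the clipping bounds are the 0.5th and 99.5th percentiles; the helper names are hypothetical and this is not the nnU-Net implementation itself.

```python
from statistics import mean, pstdev


def percentile(sorted_vals, q):
    """Linearly interpolated percentile (q in [0, 100]) of a pre-sorted list."""
    if not sorted_vals:
        raise ValueError("empty input")
    pos = (len(sorted_vals) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    return sorted_vals[lo] + (sorted_vals[hi] - sorted_vals[lo]) * (pos - lo)


def clip_and_zscore(voxels, foreground):
    """Clip voxels to foreground percentiles, then standardize them."""
    fg = sorted(foreground)
    low, high = percentile(fg, 0.5), percentile(fg, 99.5)
    mu, sigma = mean(fg), pstdev(fg)
    sigma = max(sigma, 1e-8)  # guard against a constant foreground
    return [(min(max(v, low), high) - mu) / sigma for v in voxels]


# Made-up intensities: the extreme values are clipped before standardization.
foreground = list(range(0, 201))
print(clip_and_zscore([-1000, 100, 5000], foreground))
```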

2.3. The Proposed PC-Net

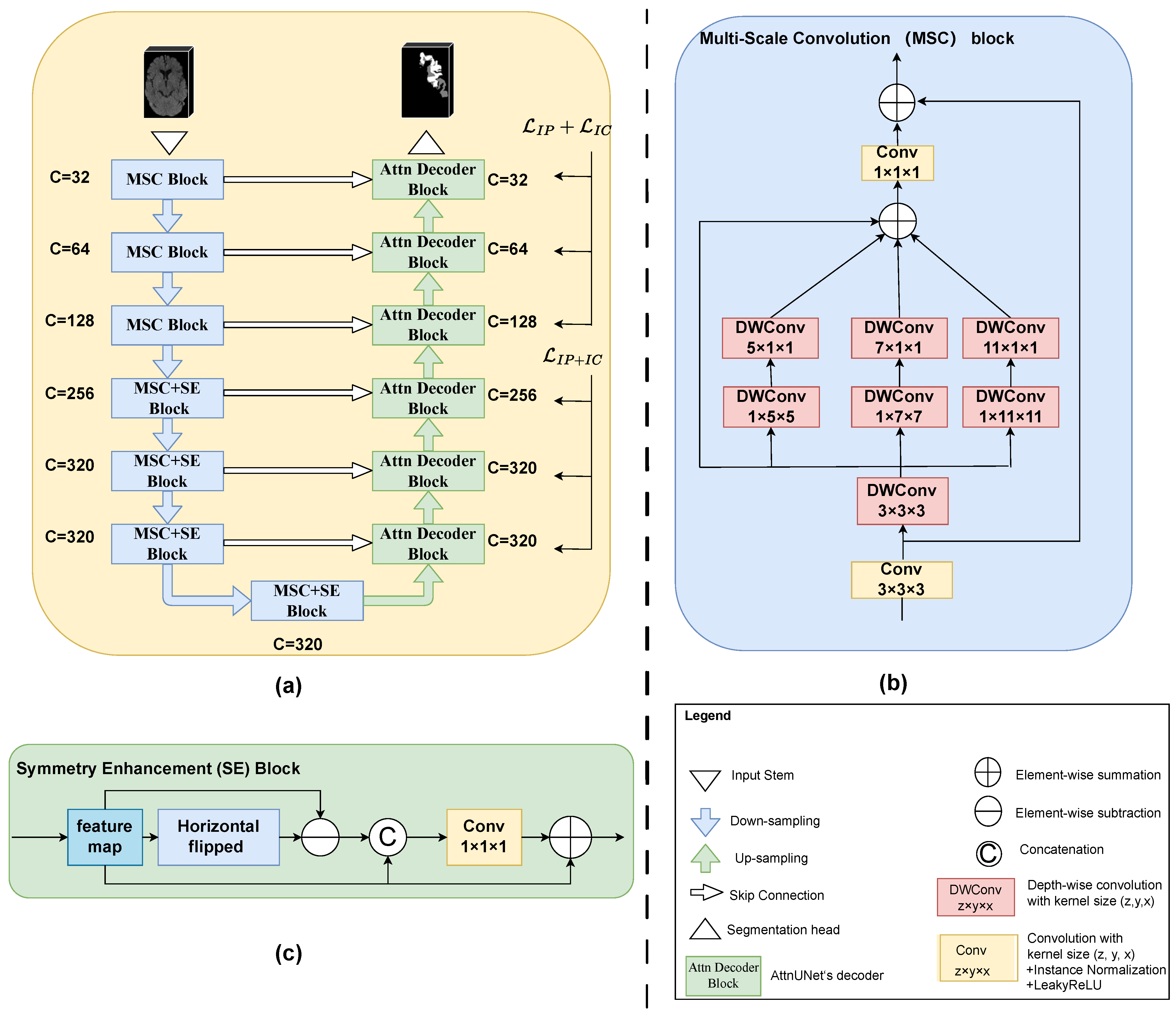

As illustrated in Figure 2a, PC-Net adopts a U-shaped structure with a 7-level encoder and a 6-level decoder. The number of feature channels (i.e., the number of convolution filters) at each encoder and decoder level is also given in Figure 2a. The three low encoder levels were composed of MSC blocks, and the four deep encoder levels added an SE block after the MSC block. Convolution-based downsampling was interleaved between two adjacent encoder levels. In the six-level decoder, we adopted the attention-based decoder of Oktay et al. [25] to better fuse high-level semantic information with low-level fine-grained image details. Transposed convolution-based upsampling was interleaved between two adjacent decoder levels. Note that for the spatial dimension D, downsampling or upsampling by a convolution of stride 2 was performed only twice, i.e., only in the two levels above the bottleneck, whereas for the H and W dimensions, upsampling or downsampling by a convolution of stride 2 occurred at every level. The input stem and segmentation head were, respectively, responsible for initial feature embedding and output generation. Considering the difficulty in differentiating the IP and IC on NCCT, a hierarchical deep supervision decoding mechanism was used for the decoder levels.
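To make the anisotropic striding concrete, the sketch below traces the feature map size through a seven-level encoder for the 20 × 320 × 256 training patch mentioned in the implementation details. It assumes exact halving at each stride-2 step and reads "the two levels above the bottleneck" as the last two downsampling steps; both points are interpretations, not details confirmed by the text.

```python
def encoder_shapes(patch=(20, 320, 256), num_levels=7, depth_strided_steps=2):
    """Return the (D, H, W) size at each encoder level.

    H and W are halved at every downsampling step; D is halved only in the
    last `depth_strided_steps` steps, i.e. those closest to the bottleneck.
    """
    d, h, w = patch
    shapes = [(d, h, w)]
    num_steps = num_levels - 1
    for step in range(num_steps):
        stride_d = 2 if step >= num_steps - depth_strided_steps else 1
        d, h, w = d // stride_d, h // 2, w // 2
        shapes.append((d, h, w))
    return shapes


for level, shape in enumerate(encoder_shapes()):
    print(level, shape)
```

Under these assumptions the bottleneck sees a 5 × 5 × 4 grid, which keeps the thick-slice axis from collapsing too early.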

2.3.1. Multi-Scale Convolution Block

Existing general 3D medical image segmentation methods, such as those targeting abdominal multi-organ segmentation, can rely on single-scale modeling because the organs have similar shapes, sizes and locations. However, the high variability in the location, size and shape of infarct lesions calls for multi-scale modeling. Inspired by Guo et al. [26], we propose the MSC block, as shown in Figure 2b. For the sake of simplicity in the diagram, we omitted the activation functions and normalization operations. Taking the MSC block in the first level of the encoder as an example, the feature map was first passed to a vanilla convolution block (convolution with a kernel size of 3 × 3 × 3 + Instance Normalization + LeakyReLU). The output of this convolution block was also added to the final output as a residual connection. Then, we designed parallel depth-wise convolution branches with kernel sizes of 5, 7 and 11 to obtain the multi-scale features (note that here, all depth-wise convolutions were followed neither by normalization nor by activation functions, and to further enlarge the receptive field, a 3 × 3 × 3 depth-wise convolution was positioned before the other three scales). The four outputs of the depth-wise convolutional branches were added element-wise and then passed through a fusion convolution block (convolution with a kernel size of 1 × 1 × 1 + Instance Normalization + LeakyReLU). Additionally, to reduce the complexity of the model, depth-wise convolutions with a kernel size of 1 × k × k followed by a kernel size of k × 1 × 1 were employed in place of a kernel size of k × k × k, where $k \in \{5, 7, 11\}$. Given an input feature map $X$, the MSC block’s output feature map $Y$ can be formalized as follows:

$$X' = \sigma\left(\mathrm{Norm}\left(\mathrm{Conv}_{3 \times 3 \times 3}(X)\right)\right)$$

$$Z = \mathrm{DWConv}_{3 \times 3 \times 3}(X')$$

$$Y = \sigma\left(\mathrm{Norm}\left(\mathrm{Conv}_{1 \times 1 \times 1}\left(\sum_{i=0}^{3} \mathrm{Scale}_{i}(Z)\right)\right)\right) + X'$$

where $\mathrm{Conv}_{k \times k \times k}$ denotes the convolution with a kernel size of $k \times k \times k$, Norm represents Instance Normalization, $\sigma$ is the LeakyReLU activation function and $\mathrm{DWConv}_{k \times k \times k}$ indicates a depthwise convolution with a kernel of $k \times k \times k$. $\mathrm{Scale}_{i}$ represents the i-th depthwise convolutional branch, where i = 0 indicates the identity connection.
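The saving from replacing a k × k × k depth-wise kernel with a 1 × k × k kernel followed by a k × 1 × 1 kernel can be checked with simple arithmetic. The sketch below counts weights per channel only (biases are ignored, which is an assumption) for the three kernel sizes named in the text.

```python
def depthwise_weights_full(k: int) -> int:
    """Weights per channel of a single k x k x k depth-wise kernel."""
    return k ** 3


def depthwise_weights_factorized(k: int) -> int:
    """Weights per channel of a 1 x k x k kernel followed by a k x 1 x 1 kernel."""
    return k * k + k


for k in (5, 7, 11):
    full = depthwise_weights_full(k)
    fact = depthwise_weights_factorized(k)
    print(f"k={k}: full={full}, factorized={fact}, ratio={full / fact:.1f}x")
```

The reduction grows with the kernel size, which is why the factorization matters most for the largest branch.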

2.3.2. Symmetry Enhancement Block

The left and right hemispheres of the brain exhibit axial symmetry along the mid-sagittal line. Typically, the opposite side of a cerebral infarction is normal brain tissue. Previous studies have utilized this prior clinical knowledge to enhance the model’s ability to locate suspicious ischemic lesions. However, due to variations in patient positioning during imaging, the mid-sagittal line in the image may not be vertical. Previous strategies include the use of alignment neural networks and direct registration [27,28,29,30,31,32,33]. We believe that the influence of slight tilts in brain scans can be mitigated at higher semantic levels, where each pixel represents a larger area of the original image. Therefore, we directly appended an SE block after the MSC block in the four deep levels of the encoder. The structure of the SE block is shown in Figure 2c. The feature map from the MSC block was first horizontally flipped, and the difference between the flipped and the original feature maps was then computed element-wise. The feature map obtained after subtraction was concatenated with the input feature map along the channel dimension. Subsequently, it passed through a convolution block (convolution + Instance Normalization + LeakyReLU) to obtain the fused feature. Finally, the fused feature map was element-wise added to the input feature map to produce the final output. Given the input feature map $X$ from the MSC block, the SE block’s output $Y$ can be formulated as

$$Y = \sigma\left(\mathrm{Norm}\left(\mathrm{Conv}\left(\mathrm{Concat}\left(X,\; X_{f} - X\right)\right)\right)\right) + X$$

where $X_{f}$ represents the feature map after horizontal flipping and Concat denotes concatenation along the channel dimension.
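The flip, subtract and concatenate steps of the SE block can be illustrated with NumPy. This sketch covers only those three steps (the convolution block and residual addition are left out), assumes a channel-first (C, D, H, W) layout with left-right along the last axis, and picks one sign convention for the difference; all of these are assumptions made for illustration.

```python
import numpy as np


def symmetry_difference_features(x: np.ndarray) -> np.ndarray:
    """Return the input stacked with its left-right asymmetry map.

    x has shape (C, D, H, W); the result has shape (2C, D, H, W).
    """
    flipped = x[..., ::-1]   # mirror along the last (left-right) axis
    diff = flipped - x       # zero wherever the two sides match
    return np.concatenate([x, diff], axis=0)


x = np.arange(16, dtype=float).reshape(2, 1, 2, 4)
print(symmetry_difference_features(x).shape)

# A perfectly symmetric input produces an all-zero asymmetry map.
symmetric = x + x[..., ::-1]
print(np.abs(symmetry_difference_features(symmetric)[2:]).max())
```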

2.3.3. Attention-Based Decoder

The rational fusion of coarse-grained and fine-grained features is important for the final segmentation output. AttnUNet [25] introduced gated attention units in skip connections. It used coarse-grained feature maps as queries to weight fine-grained feature maps from the same level encoder, thereby learning which spatial regions to focus on. Because this structure was designed to address anatomical structures with highly variable shapes, we believed that this design was equally applicable to stroke segmentation. In Figure 2, it is denoted as “Attn Decoder Block”. Specifically, for each level of the “Attn Decoder Block”, the features from its subsequent level and the features from the corresponding level of the encoder were each passed through a $1 \times 1 \times 1$ convolution layer (the number of channels was halved) and then added element-wise. This was followed by a ReLU activation function and then another $1 \times 1 \times 1$ convolution layer (where the number of channels was reduced to 1). The output then went through a Sigmoid activation function to obtain the weight (spatial attention score) at each pixel position. These weights were then multiplied element-wise with the features from the skip connections, thereby suppressing irrelevant feature responses in the fine-grained feature maps from the encoder. Lastly, the features from the subsequent level and the gated attention-modified features from the encoder at the same level were concatenated along the channel dimension and fused through a convolution layer. For detailed information, please refer to their publication [25]. We believe that, building upon the precise and more powerful encoding blocks like MSC and SE, those multi-scale, symmetry-enhanced features could better suppress irrelevant feature responses transmitted from skip connections, making the final features more effective.
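The gating arithmetic described above can be sketched with NumPy by treating each pointwise convolution as a per-voxel linear map over channels. The sketch assumes pointwise (1 × 1 × 1) convolutions as in Attention U-Net, omits biases, and assumes the coarse features have already been brought to the resolution of the skip features; the function and weight names are hypothetical.

```python
import numpy as np


def attention_gate(skip, gate, w_skip, w_gate, w_psi):
    """Weight skip features by a spatial attention map.

    skip, gate: arrays of shape (C, D, H, W) at the same resolution.
    w_skip, w_gate: (C // 2, C) channel-mixing weights; w_psi: (1, C // 2).
    Returns the gated skip features and the attention map (1, D, H, W).
    """
    s = np.einsum("oc,cdhw->odhw", w_skip, skip)   # halve the channels
    g = np.einsum("oc,cdhw->odhw", w_gate, gate)
    a = np.maximum(s + g, 0.0)                     # ReLU
    logits = np.einsum("oc,cdhw->odhw", w_psi, a)  # reduce to one channel
    alpha = 1.0 / (1.0 + np.exp(-logits))          # Sigmoid
    return skip * alpha, alpha


rng = np.random.default_rng(0)
skip = rng.normal(size=(4, 2, 3, 3))
gate = rng.normal(size=(4, 2, 3, 3))
gated, alpha = attention_gate(
    skip, gate,
    rng.normal(size=(2, 4)), rng.normal(size=(2, 4)), rng.normal(size=(1, 2)),
)
print(gated.shape, alpha.shape)
```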

2.3.4. Hierarchical Deep Supervision

Owing to the exceedingly subtle differences between the IP and IC on NCCT, differentiating them directly in the deep layers of the network poses a significant challenge. In clinical practice, neuroradiologists initially approximate the location of the ischemic area and subsequently refine the entire ischemic region into the IP and IC. Drawing inspiration from this, we incorporated a hierarchical deep supervision strategy. Firstly, we successively downsampled the ground truth label to match the spatial resolution of each decoder level. For each decoder level, we used a $1 \times 1 \times 1$ convolutional layer to change the number of channels to the number of classification categories to achieve segmentation. That is, for the three deep levels, the number of categories was two (the background and IP + IC), and for the three shallow levels, the number of categories was three (the background, IP and IC). We then calculated the loss by using the outputs of different levels and the corresponding downsampled ground truth. We used a linear combination of the Dice Similarity Coefficient (DSC) loss and Cross-Entropy (CE) loss as the objective function for each decoder level: $\mathcal{L} = \lambda_{1}\mathcal{L}_{DSC} + \lambda_{2}\mathcal{L}_{CE}$, where $\lambda_{1}$ and $\lambda_{2}$ are set to 1 in our practice. The total objective function of hierarchical deep supervision can be formalized as

$$\mathcal{L}_{total} = \sum_{i=0}^{2} w_{i}\,\mathcal{L}^{(i)}_{IP+IC} + \sum_{i=3}^{5} w_{i}\left(\mathcal{L}^{(i)}_{IP} + \mathcal{L}^{(i)}_{IC}\right)$$

where $\mathcal{L}_{IP+IC}$ represents the loss for the total ischemic area, treating the IP and IC as a single category, while $\mathcal{L}_{IP}$ and $\mathcal{L}_{IC}$, respectively, denote the losses for the IP and IC regions and $w_{i}$ represents the weight of the supervision loss at decoder level i. When i ranges from 0 to 5 (six decoder levels from bottom to top), $w_{i}$ takes the respective values of 0.02, 0.08, 0.2, 0.1, 0.2 and 0.4.
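The weighting scheme can be sketched as follows. The level weights and the unit coefficients for the DSC and CE terms come from the text; the function names and the representation of each level's loss as a plain (DSC loss, CE loss) pair are assumptions made for illustration.

```python
# Weights for decoder levels 0..5 (bottom to top), as listed in the text.
LEVEL_WEIGHTS = (0.02, 0.08, 0.2, 0.1, 0.2, 0.4)


def num_classes(level: int) -> int:
    """Two classes (background, IP + IC) in the three deep levels, three above."""
    if not 0 <= level < len(LEVEL_WEIGHTS):
        raise ValueError("level must be in 0..5")
    return 2 if level < 3 else 3


def level_loss(dsc_loss: float, ce_loss: float,
               lam_dsc: float = 1.0, lam_ce: float = 1.0) -> float:
    """Linear combination of the DSC and CE losses for one decoder level."""
    return lam_dsc * dsc_loss + lam_ce * ce_loss


def total_loss(per_level):
    """per_level: six (dsc_loss, ce_loss) pairs ordered from level 0 to level 5."""
    if len(per_level) != len(LEVEL_WEIGHTS):
        raise ValueError("expected one loss pair per decoder level")
    return sum(w * level_loss(d, c) for w, (d, c) in zip(LEVEL_WEIGHTS, per_level))


print([num_classes(i) for i in range(6)])
print(total_loss([(0.5, 0.5)] * 6))
```

Because the six weights sum to 1, the total stays on the same scale as a single-level loss, with the full-resolution output contributing the most.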

2.4. Implementation Details

We randomly sampled 3D patches of size 20 × 320 × 256 from the resampled and normalized data. For each patch, data augmentation includes spatial transformation (random rotation, random scaling and random elastic deformation), mirror transformation, adding white Gaussian noise, Gaussian blurring, low-resolution simulation, Gamma transformation and contrast and brightness adjustments. An initial learning rate of

with a polynomial decay schedule and a batch size of 2 were used. The Stochastic Gradient Descent (SGD) optimizer with a Nesterov Momentum of 0.99 and weight decay of

was used. Gradient clipping was applied during training. We trained for 300 epochs, with each epoch consisting of 250 iterations. The code is available at

https://github.com/GitHub-TXZ/I2PC-Net/, which is accessible to anyone for free, allowing for the validation and utilization of our method.

We adopted the sliding window strategy and Test Time Augmentation (TTA) strategy [9]. The window size is the same as the training patch size and its stride is

the patch size. The overlapping regions are weighted by a prepared Gaussian importance map. TTA is implemented via flipping along all axes. We did not perform any post-processing operations.

We compared several existing generic 2D or 3D segmentation methods, including pure CNN models such as nnUNet [9] and AttnUNet [25], pure Transformer models like nnFormer [12] and D-Former [11] as well as hybrid CNN and Transformer models like CoTr [13] and Swin-UNETR [14]. All the comparison methods were subjected to the same data processing and experimental settings to ensure fairness in the comparison. All the experiments were conducted on an Ubuntu server (version 18.04) equipped with 5 NVIDIA A6000 48GB GPUs. The primary software dependencies include PyTorch version 2.0, nnU-Net version 2.2 (https://github.com/MIC-DKFZ/nnUNet (accessed on 20 December 2023)), MONAI version 1.3 (https://github.com/Project-MONAI/MONAI (accessed on 20 December 2023)) and Python version 3.10.11 (https://www.python.org/ (accessed on 20 January 2023)).

2.5. Statistical Analysis

In evaluating the segmentation performance for the IP, IC and IC + IP, we computed the metrics DSC, 95th percentile Hausdorff Distance (HD95) and Average Symmetric Surface Distance (ASSD) along with their respective means and standard deviations [31,34]. To assess the volume concordance between the manual segmentation made by neuroradiologists and the PC-Net, we calculated Pearson’s correlation coefficients with a 95% confidence interval (CI) and generated regression and Bland–Altman plots. Given a 70 mL cut-off as the volume threshold for binary classification, the volume classification performance was evaluated by using accuracy, Area Under the Curve (AUC), Kappa and their respective 95% CIs. The statistical analyses were conducted by using MedCalc software (version 20.218, MedCalc Software Ltd., Mariakerke, Belgium) and the Python programming language (version 3.10.11, https://www.python.org/ (accessed on 20 January 2023)). The t-test and proportion tests were used, and a two-sided p-value of less than 0.05 was considered to denote statistical significance.
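For readers who want to reproduce the volume concordance analysis without MedCalc, the two core quantities can be computed directly. The sketch below is a plain-Python illustration of Pearson's r and the Bland–Altman bias with 1.96 SD limits of agreement; the volumes are made-up numbers, and confidence intervals are not covered.

```python
from math import sqrt
from statistics import mean, stdev


def pearson_r(x, y):
    """Pearson linear correlation coefficient of two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def bland_altman(x, y):
    """Return (bias, lower limit, upper limit) of agreement for x - y."""
    diffs = [a - b for a, b in zip(x, y)]
    bias = mean(diffs)
    sd = stdev(diffs)
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


# Hypothetical predicted vs. manual volumes in mL (illustrative values only).
predicted = [12.0, 35.5, 60.2, 88.0, 101.3]
manual = [10.5, 38.0, 57.9, 92.4, 99.0]
print(round(pearson_r(predicted, manual), 4))
print(bland_altman(predicted, manual))
```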

3. Results

3.1. Study Participants

In the dataset comprising 197 collected cases, the median age of the research participants was 72 [IQR, 63–80], with 113 male subjects (57.36%). The median Onset-to-CT time was 73 [IQR, 41–180] min, and the median baseline NIHSS was 11 [IQR, 6–17]. The details of patient characteristics for all 197 AIS patients collected are listed in Table 1.

3.2. Results for IP Segmentation and IC Segmentation

We conducted comparisons with several existing 2D and 3D methods. Table 2 presents the results for the IP segmentation and IC segmentation. As shown in Table 2, our proposed PC-Net achieved DSCs of 42.76% ± 21.84% and 33.54% ± 24.13%, HD95s of 13.81 ± 10.39 mm and 21.02 ± 14.81 mm and ASSDs of 3.59 ± 2.25 mm and 5.85 ± 4.28 mm for the IP and IC segmentation, respectively, outperforming all the compared 2D and 3D methods. These results show that our PC-Net, benefiting from the effectiveness of the MSC and SE blocks and the hierarchical deep supervision, achieved the best performance across the various metrics. Overall, we found that (1) the 3D methods were not necessarily superior to the 2D methods, which may be attributed to the large slice thickness, resulting in weaker connections between adjacent slices; (2) pure CNN approaches, such as AttnUNet [25] and nnUNet [9], continued to exhibit a robust performance in this task; (3) methods based solely on Transformers showed a weaker performance, possibly due to the challenges that Transformers face in smaller datasets rather than inherent limitations in the model itself; and (4) hybrid CNN–Transformer methods performed intermediately between pure Transformers and pure CNN methods. In other words, CNNs were more suitable for this task.

Figure 3 illustrates the visual segmentation results for our method and three representative methods: nnUNet [9], AttnUNet [25] and CoTr [13] for the IP and IC segmentation. In the figure, we can see that our PC-Net accurately located the affected regions of the IP and IC and also matched the GT labels (DSC = 47.44% and 74.57% for the IP and IC, respectively) better than the three compared methods, showing its potential to provide affected-region information in clinical applications.

3.3. Results for the Entire Infarct (IP + IC) Segmentation

In clinical practice, the evaluation of the entire ischemic infarct (the IP + IC) is also of paramount importance for diagnosis and prognosis [31,43]. Therefore, we also evaluated the segmentation performance of the entire ischemic infarct. Without any additional training, the IP and IC labels in all the methods’ segmentation results and in the ground-truth segmentations were merged into one category, without distinguishing between the IP and IC. Subsequently, the segmentation metrics were calculated to obtain Table 3. For the entire ischemic infarcts, our method achieved a DSC of 65.67% ± 12.30%, an HD95 of 12.54 ± 7.96 mm and an ASSD of 2.88 ± 1.27 mm, surpassing all comparative methods. In terms of the DSC, our method outperformed the best 2D method 2D nnUNet [9] (65.67% ± 12.30% vs. 49.44% ± 23.63%), the best pure 3D CNN method 3D nnUNet [9] (65.67% ± 12.30% vs. 62.22% ± 11.74%), the best pure 3D Transformer method D-Former [11] (65.67% ± 12.30% vs. 45.18% ± 23.25%) and the best hybrid CNN–Transformer method CoTr [13] (65.67% ± 12.30% vs. 50.09% ± 22.14%).
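The label-merging evaluation described above can be sketched on flat label lists. The example uses a toy encoding (0 = background, 1 = IP, 2 = IC), which is an assumption, and deliberately swaps the two classes in the prediction to show that a perfect whole-infarct DSC can coexist with poor per-class DSCs.

```python
def dice(pred, target):
    """DSC between two binary masks given as flat sequences of 0/1."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same length")
    inter = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    # Convention assumed here: two empty masks count as a perfect match.
    return 1.0 if total == 0 else 2.0 * inter / total


def class_mask(labels, cls):
    """Binary mask of one class in a label map."""
    return [1 if v == cls else 0 for v in labels]


def merged_mask(labels):
    """Binary mask of the whole infarct (IP and IC treated as one category)."""
    return [1 if v > 0 else 0 for v in labels]


gt = [0, 1, 1, 2, 2, 0]
pred = [0, 2, 2, 1, 1, 0]  # right location, but IP and IC are swapped

print("IP:", dice(class_mask(pred, 1), class_mask(gt, 1)))
print("IC:", dice(class_mask(pred, 2), class_mask(gt, 2)))
print("IP + IC:", dice(merged_mask(pred), merged_mask(gt)))
```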

As shown in the sixth subfigure (denoted by PC-Net) in Figure 3, our method could accurately locate the entire ischemic regions and match the GT IP + IC well. Our method shows a similar DSC performance to nnUNet (89.17% vs. 89.77%) for the IP + IC segmentation. However, our method achieved a higher DSC for the IP segmentation and IC segmentation, showing its effectiveness at distinguishing the IP and IC.

Figure 3.

Visual segmentation results of the PC-Net and three state-of-the-art methods: nnUNet, AttnUNet and CoTr. The red and green regions in each subfigure represent manual Groundtruth of IP and IC or IP and IC segmented by each compared algorithm, respectively. The numbers above the figure denote the DSC for IP, IC and IP + IC in this slice, respectively.


3.4. Volumetric Analysis of Segmented Infarcts

In clinical practice, the volume correlation as well as the infarct volume (e.g., 70 mL as the cut-off) are crucial for selecting AIS patients who will obtain good outcomes after different treatments [31,44,45]. Therefore, we also conducted a volume analysis on the ischemic infarcts obtained by our method to illustrate the clinical relevance of the proposed method.

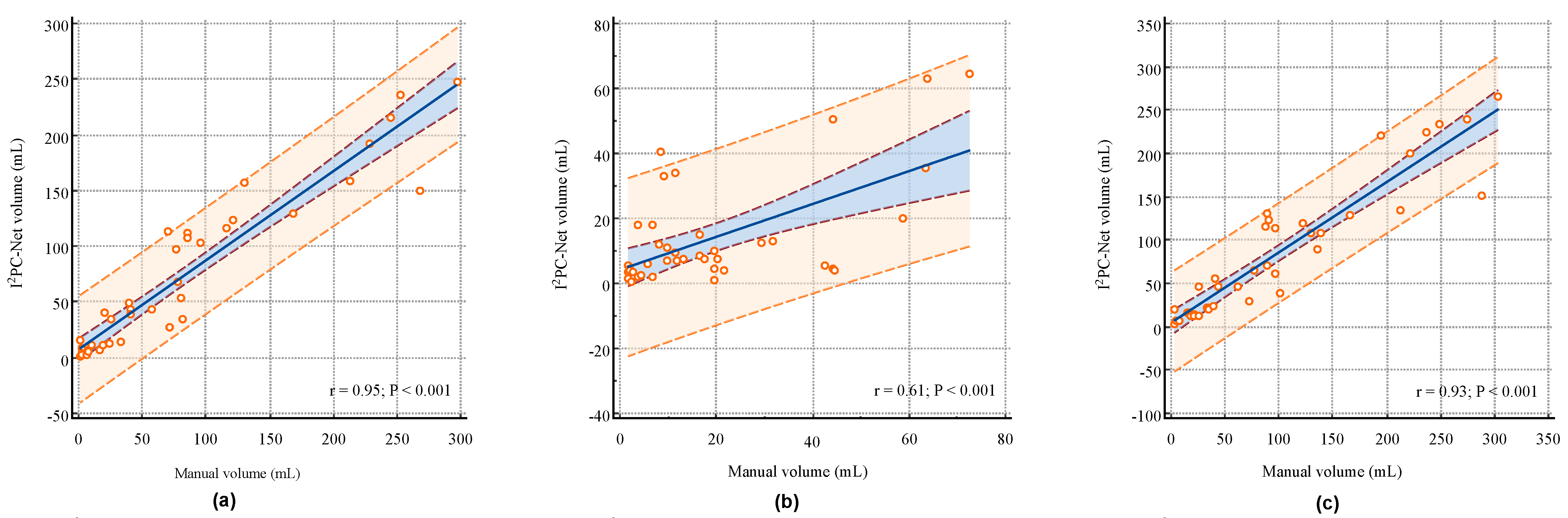

Figure 4a–c illustrate the correlation analysis between the PC-Net segmented volumes and manual segmented volumes for the IP, IC and IP + IC, respectively. The proposed PC-Net achieved Pearson linear correlation coefficients (r) of 0.95 (95% CI: 0.9019–0.9720, p < 0.001), 0.61 (95% CI: 0.3637–0.7721, p < 0.001) and 0.93 (95% CI: 0.8749–0.9639, p < 0.001) for the IP, IC and IP + IC, respectively. These indicate a strong positive volume correlation for the IP and IP + IC, and the more challenging IC also exhibits a moderate volume correlation. Segmenting AIS infarcts in NCCT scans presents significant difficulties. First, compared to other imaging techniques like MRI, NCCT proves harder to analyze because of the lower signal-to-noise and contrast-to-noise ratios in cerebral tissues. Second, distinguishing infarct areas is complicated by normal physiological alterations, with the affected brain regions often exhibiting only slight differences in density and texture [32,33]. In the early stages of stroke, the IC does not appear significantly on NCCT, making it very difficult to distinguish between the IP and IC. Therefore, the correlation of the IC volume is relatively weak.

We also dichotomized the entire ischemic region (IP + IC) volume by using 70 mL as a cut-off and then evaluated the binary volume classification performance. Our developed PC-Net demonstrated the capability to discriminate between patients with lesion volumes of ≤70 mL and >70 mL with a Kappa of 0.7536 [95% CI: 0.5579–0.9494], an AUC of 0.886 [95% CI: 0.746–0.965] and an accuracy of 87.50% [95% CI: 73.19%–95.81%], suggesting reasonable dichotomization volume information for therapy decision making.
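The dichotomization step can be sketched as a voxel-count-to-volume conversion followed by an agreement measure. The spacing below is the resampled spacing reported in the preprocessing section and the 70 mL cut-off is from the text; the helper names and the example labels are hypothetical, and confidence intervals are not computed.

```python
def volume_ml(num_voxels: int, spacing_mm=(0.3789, 0.3789, 5.0)) -> float:
    """Lesion volume in mL from a voxel count and voxel spacing in mm."""
    sx, sy, sz = spacing_mm
    return num_voxels * sx * sy * sz / 1000.0  # 1 mL = 1000 mm^3


def above_cutoff(volumes_ml, cutoff_ml: float = 70.0):
    """Binary labels: 1 if the volume exceeds the cut-off, else 0."""
    return [1 if v > cutoff_ml else 0 for v in volumes_ml]


def cohen_kappa(a, b) -> float:
    """Cohen's kappa for two equal-length sequences of binary labels."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    pa, pb = sum(a) / n, sum(b) / n
    expected = pa * pb + (1 - pa) * (1 - pb)
    return 1.0 if expected == 1.0 else (observed - expected) / (1 - expected)


print(round(volume_ml(100_000), 2))  # voxel count chosen for illustration
manual = above_cutoff([82.0, 75.5, 30.1, 12.4])
model = above_cutoff([90.3, 64.0, 28.7, 15.9])
print(cohen_kappa(manual, model))
```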

4. Discussion

In this study, we explored a fully automatic segmentation approach named PC-Net to simultaneously segment the IP and IC from NCCT scans. By employing MSC blocks, SE blocks and hierarchical deep supervision mechanisms, the proposed PC-Net demonstrated a superior performance compared to some existing methods.

A comparative analysis with other methods revealed that the pure Transformer-based methods exhibited the poorest performance. The hybrid methods showed performance improvements over the pure Transformers, but they did so at the expense of convergence speed and computational cost. Pure convolutional approaches were more suited for this task in terms of convergence speed and final performance. Our method outperformed the powerful nnUNet, attributable to its enhanced capability for multi-scale modeling, locating suspected ischemic areas and differentiating the IP and IC. The experimental results confirm our hypothesis: employing modules like MSC blocks and SE blocks allows for the better handling of the substantial variability in infarct shape, size and location, while a hierarchical deep supervision decoding mechanism more effectively addresses the challenges in distinguishing between the IP and IC. This study demonstrates the feasibility of using only NCCT for simultaneous quantitative assessments of the IP and IC. PC-Net can provide valuable insights for neuroradiologists in making therapeutic decisions, laying the groundwork for future researchers to develop more effective and broadly applicable methods.

From the quantitative segmentation metrics, visual segmentation results and volume analysis results, our approach demonstrated the effective localization of the ischemic region. Moreover, the classification performance using a cut-off volume of 70 mL was also favorable. This implies that in clinical applications, our method, relying solely on NCCT, can furnish valuable information for decision making in AIS treatments. The average time for automatic segmentation using the trained model was 3.49 s per NCCT scan, significantly enhancing the diagnostic efficiency of neuroradiologists for AIS patients.

Furthermore, to explore whether there were relevant clinical factors influencing the volume classification performance using 70 mL as the cut-off, we conducted subgroup analyses based on factors such as gender, age, NIHSS score and Onset-to-CT time. As depicted in Table 4, the classification performance for patients aged over 70 was significantly superior to that for those under 70, and for patients with an Onset-to-CT time exceeding 180 min, the classification performance was significantly better than for those below 180 min. No significant differences were found in the gender and NIHSS subgroups. The subgroup analysis indicates that age and Onset-to-CT time are two clinical factors closely associated with segmentation and classification performance. The reason why segmentation and lesion volume classification are more effective when the Onset-to-CT time is ≥ 180 min is that the longer the Onset-to-CT time, the more stable the lesion changes become, and the more the lesions contrast with the healthy tissues, making them easier to segment. However, given that the golden treatment window for AIS is 4.5 h, it is generally recommended in clinical practice to perform an NCCT scan and decide the appropriate treatment as soon as possible to improve the success rate of the intervention. Therefore, the above results do not imply that we should wait until after 180 min to collect NCCT data for treatment. Future research can incorporate age and Onset-to-CT time into the modeling process to further improve accuracy.

This study also has several limitations. First, the sample size in this paper is limited, and there is no external validation cohort. In the future, we aim to collect more data to train models that are more effective and broadly applicable. Second, from the qualitative results, we can find that even though the model accurately locates the entire ischemic region, the segmentation performance of the IP and IC individually might not be optimal. How to better distinguish the IP and IC while maintaining the accuracy of the entire ischemic IP + IC region remains a topic for future work.

5. Conclusions

This study proposed a pure CNN-based method, termed PC-Net, which relies solely on NCCT to simultaneously and automatically segment the IP and IC. It mitigates the challenges of significant variations in the size, location and shape of infarct lesions through multi-scale modeling and Symmetry Enhancement blocks. We also employed a hierarchical deep supervision decoding mechanism to address the difficulty in distinguishing between the IP and IC in the deep layer. The results indicate that PC-Net can automatically and quantitatively assess the IP and IC with good localization of the affected regions, strong volume correlation and high dichotomized volume classification performance, potentially providing valuable infarct information for diagnosis and patient selection in clinical applications.

Author Contributions

Conceptualization, H.K., X.T., J.W., Z.Q., Y.C., Q.C., B.J.K. and W.Q.; data curation, H.K., Q.C., B.J.K. and W.Q.; formal analysis, H.K., X.T., J.W., Z.Q., Y.C. and W.Q.; funding acquisition, H.K. and W.Q.; investigation, X.T., J.W., Z.Q., Y.C. and W.Q.; methodology, H.K., X.T., J.W., Z.Q., Y.C. and W.Q.; project administration, W.Q.; resources, H.K., Q.C. and B.J.K.; software, H.K. and X.T.; supervision, H.K.; validation, H.K., X.T. and W.Q.; visualization, H.K. and X.T.; writing—original draft, H.K. and X.T.; writing—review and editing, H.K., X.T., J.W., Z.Q., Y.C., Q.C., B.J.K. and W.Q. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China (no. 62102454), the National Key Research and Development Program of China (2021YFF1201200, 2022YFE0209900 and 2023YFC2410802), the Science and Technology Innovation Program of Hunan Province (no. 2022RC1031), the Hubei Provincial Key Research and Development Program (2022BCE042 and 2023BCB007), the High-Performance Computing Center of Central South University, the High-Performance Computing platform of Huazhong University of Science and Technology and the computing resources of Wuhan Seekmore Intelligent Imaging Inc.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and was approved by Seoul National University Bundang Hospital (approval code: B-2102/667-10; approval date: 7 February 2021).

Informed Consent Statement

Informed consent was obtained from all subjects involved in this study.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author.

Conflicts of Interest

All authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| AIS | Acute Ischemic Stroke |
| IP | Ischemic Penumbra |
| IC | Infarct Core |
| NCCT | Non-Contrast Computed Tomography |
| MSC | Multi-Scale Convolution |
| SA | Symmetry Enhancement |
| DSC | Dice Similarity Coefficient |
| HD95 | 95th Percentile Hausdorff Distance |
| ASSD | Average Symmetric Surface Distance |
| CNN | Convolutional Neural Network |
| PC-Net | IP and IC neural Network |
| CI | Confidence Interval |

References

1. Rennert, R.C.; Wali, A.R.; Steinberg, J.A.; Santiago-Dieppa, D.R.; Olson, S.E.; Pannell, J.S.; Khalessi, A.A. Epidemiology, natural history, and clinical presentation of large vessel ischemic stroke. Neurosurgery 2019, 85, S4.

2. Smith, W.S.; Lev, M.H.; English, J.D.; Camargo, E.C.; Chou, M.; Johnston, S.C.; Gonzalez, G.; Schaefer, P.W.; Dillon, W.P.; Koroshetz, W.J.; et al. Significance of large vessel intracranial occlusion causing acute ischemic stroke and TIA. Stroke 2009, 40, 3834–3840.

3. Ginsberg, M.D. Adventures in the pathophysiology of brain ischemia: Penumbra, gene expression, neuroprotection: The 2002 Thomas Willis Lecture. Stroke 2003, 34, 214–223.

4. Paciaroni, M.; Caso, V.; Agnelli, G. The concept of ischemic penumbra in acute stroke and therapeutic opportunities. Eur. Neurol. 2009, 61, 321–330.

5. Jauch, E.C.; Saver, J.L.; Adams, H.P., Jr.; Bruno, A.; Connors, J.; Demaerschalk, B.M.; Khatri, P.; McMullan, P.W., Jr.; Qureshi, A.I.; Rosenfield, K.; et al. Guidelines for the early management of patients with acute ischemic stroke: A guideline for healthcare professionals from the American Heart Association/American Stroke Association. Stroke 2013, 44, 870–947.

6. Bourcier, R.; Goyal, M.; Liebeskind, D.S.; Muir, K.W.; Desal, H.; Siddiqui, A.H.; Dippel, D.W.; Majoie, C.B.; Van Zwam, W.H.; Jovin, T.G.; et al. Association of time from stroke onset to groin puncture with quality of reperfusion after mechanical thrombectomy: A meta-analysis of individual patient data from 7 randomized clinical trials. JAMA Neurol. 2019, 76, 405–411.

7. Evans, M.R.; White, P.; Cowley, P.; Werring, D.J. Revolution in acute ischaemic stroke care: A practical guide to mechanical thrombectomy. Pract. Neurol. 2017, 17, 252–265.

8. Powers, W.J.; Rabinstein, A.A.; Ackerson, T.; Adeoye, O.M.; Bambakidis, N.C.; Becker, K.; Biller, J.; Brown, M.; Demaerschalk, B.M.; Hoh, B.; et al. 2018 guidelines for the early management of patients with acute ischemic stroke: A guideline for healthcare professionals from the American Heart Association/American Stroke Association. Stroke 2018, 49, e46–e99.

9. Isensee, F.; Jaeger, P.F.; Kohl, S.A.; Petersen, J.; Maier-Hein, K.H. nnU-Net: A self-configuring method for deep learning-based biomedical image segmentation. Nat. Methods 2021, 18, 203–211.

10. Lee, H.H.; Bao, S.; Huo, Y.; Landman, B.A. 3d ux-net: A large kernel volumetric convnet modernizing hierarchical transformer for medical image segmentation. arXiv 2022, arXiv:2209.15076.

11. Wu, Y.; Liao, K.; Chen, J.; Wang, J.; Chen, D.Z.; Gao, H.; Wu, J. D-former: A u-shaped dilated transformer for 3D medical image segmentation. Neural Comput. Appl. 2023, 35, 1931–1944.

12. Zhou, H.Y.; Guo, J.; Zhang, Y.; Han, X.; Yu, L.; Wang, L.; Yu, Y. nnFormer: Volumetric medical image segmentation via a 3D transformer. IEEE Trans. Image Process. 2023, 32, 4036–4045.

13. Xie, Y.; Zhang, J.; Shen, C.; Xia, Y. Cotr: Efficiently bridging cnn and transformer for 3d medical image segmentation. In Proceedings of the Medical Image Computing and Computer Assisted Intervention–MICCAI 2021: 24th International Conference, Strasbourg, France, 27 September–1 October 2021; Proceedings, Part III 24; Springer: Berlin/Heidelberg, Germany, 2021; pp. 171–180.

14. Hatamizadeh, A.; Nath, V.; Tang, Y.; Yang, D.; Roth, H.R.; Xu, D. Swin unetr: Swin transformers for semantic segmentation of brain tumors in mri images. In Proceedings of the International MICCAI Brainlesion Workshop, Virtual, 27 September 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 272–284.

15. Gupta, A.; Vupputuri, A.; Ghosh, N. Delineation of ischemic core and penumbra volumes from mri using msnet architecture. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 6730–6733.

16. Bhurwani, M.M.S.; Boutelier, T.; Davis, A.; Gautier, G.; Swetz, D.; Rava, R.A.; Raguenes, D.; Waqas, M.; Snyder, K.V.; Siddiqui, A.H.; et al. Identification of infarct core and ischemic penumbra using computed tomography perfusion and deep learning. J. Med. Imaging 2023, 10, 014001.

17. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

18. Lee, H.; Jung, K.; Kang, D.W.; Kim, N. Fully automated and real-time volumetric measurement of infarct core and penumbra in diffusion-and perfusion-weighted MRI of patients with hyper-acute stroke. J. Digit. Imaging 2020, 33, 262–272.

19. Vupputuri, A.; Ashwal, S.; Tsao, B.; Haddad, E.; Ghosh, N. MRI based objective ischemic core-penumbra quantification in adult clinical stroke. In Proceedings of the 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Jeju, Republic of Korea, 11–15 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 3012–3015.

20. Werdiger, F.; Parsons, M.W.; Visser, M.; Levi, C.; Spratt, N.; Kleinig, T.; Lin, L.; Bivard, A. Machine learning segmentation of core and penumbra from acute stroke CT perfusion data. Front. Neurol. 2023, 14, 1098562.

21. Tomasetti, L.; Engan, K.; Høllesli, L.J.; Kurz, K.D.; Khanmohammadi, M. CT Perfusion is All We Need: 4D CNN Segmentation of Penumbra and Core in Patients with Suspected Acute Ischemic Stroke. IEEE Access 2023, 11, 138936–138953.

22. Sathish, R.; Rajan, R.; Vupputuri, A.; Ghosh, N.; Sheet, D. Adversarially trained convolutional neural networks for semantic segmentation of ischaemic stroke lesion using multisequence magnetic resonance imaging. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1010–1013.

23. Yushkevich, P.A.; Piven, J.; Cody Hazlett, H.; Gimpel Smith, R.; Ho, S.; Gee, J.C.; Gerig, G. User-Guided 3D Active Contour Segmentation of Anatomical Structures: Significantly Improved Efficiency and Reliability. Neuroimage 2006, 31, 1116–1128.

24. Najm, M.; Kuang, H.; Federico, A.; Jogiat, U.; Goyal, M.; Hill, M.D.; Demchuk, A.; Menon, B.K.; Qiu, W. Automated brain extraction from head CT and CTA images using convex optimization with shape propagation. Comput. Methods Programs Biomed. 2019, 176, 1–8.

25. Oktay, O.; Schlemper, J.; Folgoc, L.L.; Lee, M.; Heinrich, M.; Misawa, K.; Mori, K.; McDonagh, S.; Hammerla, N.Y.; Kainz, B.; et al. Attention u-net: Learning where to look for the pancreas. arXiv 2018, arXiv:1804.03999.

26. Guo, M.H.; Lu, C.Z.; Hou, Q.; Liu, Z.; Cheng, M.M.; Hu, S.M. Segnext: Rethinking convolutional attention design for semantic segmentation. Adv. Neural Inf. Process. Syst. 2022, 35, 1140–1156.

27. Barman, A.; Inam, M.E.; Lee, S.; Savitz, S.; Sheth, S.; Giancardo, L. Determining ischemic stroke from CT-angiography imaging using symmetry-sensitive convolutional networks. In Proceedings of the 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019), Venice, Italy, 8–11 April 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1873–1877.

28. Clèrigues, A.; Valverde, S.; Bernal, J.; Freixenet, J.; Oliver, A.; Lladó, X. Acute and sub-acute stroke lesion segmentation from multimodal MRI. Comput. Methods Programs Biomed. 2020, 194, 105521.

29. Cui, L.; Han, S.; Qi, S.; Duan, Y.; Kang, Y.; Luo, Y. Deep symmetric three-dimensional convolutional neural networks for identifying acute ischemic stroke via diffusion-weighted images. J. X-ray Sci. Technol. 2021, 29, 551–566.

30. Fuchigami, T.; Akahori, S.; Okatani, T.; Li, Y. A hyperacute stroke segmentation method using 3D U-Net integrated with physicians’ knowledge for NCCT. In Proceedings of the Medical Imaging 2020: Computer-Aided Diagnosis, SPIE, Houston, TX, USA, 16–19 February 2020; Volume 11314, pp. 96–101.

31. Kuang, H.; Menon, B.K.; Sohn, S.I.; Qiu, W. EIS-Net: Segmenting early infarct and scoring ASPECTS simultaneously on non-contrast CT of patients with acute ischemic stroke. Med. Image Anal. 2021, 70, 101984.

32. Liang, K.; Han, K.; Li, X.; Cheng, X.; Li, Y.; Wang, Y.; Yu, Y. Symmetry-enhanced attention network for acute ischemic infarct segmentation with non-contrast CT images. In Proceedings of the Medical Image Computing and Computer Assisted Intervention–MICCAI 2021: 24th International Conference, Strasbourg, France, 27 September–1 October 2021; Proceedings, Part VII 24; Springer: Berlin/Heidelberg, Germany, 2021; pp. 432–441.

33. Ni, H.; Xue, Y.; Wong, K.; Volpi, J.; Wong, S.T.; Wang, J.Z.; Huang, X. Asymmetry Disentanglement Network for Interpretable Acute Ischemic Stroke Infarct Segmentation in Non-contrast CT Scans. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Vancouver, BC, Canada, 8–12 September 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 416–426.

34. Kuang, H.; Wang, Y.; Liang, Y.; Liu, J.; Wang, J. BEA-Net: Body and Edge Aware Network With Multi-Scale Short-Term Concatenation for Medical Image Segmentation. IEEE J. Biomed. Health Inform. 2023, 27, 4828–4839.

35. Chen, J.; Lu, Y.; Yu, Q.; Luo, X.; Adeli, E.; Wang, Y.; Lu, L.; Yuille, A.L.; Zhou, Y. Transunet: Transformers make strong encoders for medical image segmentation. arXiv 2021, arXiv:2102.04306.

36. Cao, H.; Wang, Y.; Chen, J.; Jiang, D.; Zhang, X.; Tian, Q.; Wang, M. Swin-unet: Unet-like pure transformer for medical image segmentation. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 205–218.

37. Gao, Y.; Zhou, M.; Metaxas, D.N. UTNet: A hybrid transformer architecture for medical image segmentation. In Proceedings of the Medical Image Computing and Computer Assisted Intervention–MICCAI 2021: 24th International Conference, Strasbourg, France, 27 September–1 October 2021; Proceedings, Part III 24; Springer: Berlin/Heidelberg, Germany, 2021; pp. 61–71.

38. Çiçek, Ö.; Abdulkadir, A.; Lienkamp, S.S.; Brox, T.; Ronneberger, O. 3D U-Net: Learning dense volumetric segmentation from sparse annotation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2016: 19th International Conference, Athens, Greece, 17–21 October 2016; Proceedings, Part II 19; Springer: Berlin/Heidelberg, Germany, 2016; pp. 424–432.

39. Milletari, F.; Navab, N.; Ahmadi, S.A. V-net: Fully convolutional neural networks for volumetric medical image segmentation. In Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 565–571.

40. Zhou, Z.; Rahman Siddiquee, M.M.; Tajbakhsh, N.; Liang, J. Unet++: A nested u-net architecture for medical image segmentation. In Proceedings of the Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support: 4th International Workshop, DLMIA 2018, and 8th International Workshop, ML-CDS 2018, Held in Conjunction with MICCAI 2018, Granada, Spain, 20 September 2018; Proceedings 4; Springer: Berlin/Heidelberg, Germany, 2018; pp. 3–11.

41. Wang, W.; Chen, C.; Ding, M.; Yu, H.; Zha, S.; Li, J. Transbts: Multimodal brain tumor segmentation using transformer. In Proceedings of the Medical Image Computing and Computer Assisted Intervention–MICCAI 2021: 24th International Conference, Strasbourg, France, 27 September–1 October 2021; Proceedings, Part I 24; Springer: Berlin/Heidelberg, Germany, 2021; pp. 109–119.

42. Hatamizadeh, A.; Tang, Y.; Nath, V.; Yang, D.; Myronenko, A.; Landman, B.; Roth, H.R.; Xu, D. Unetr: Transformers for 3D medical image segmentation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 574–584.

43. Qiu, W.; Kuang, H.; Teleg, E.; Ospel, J.M.; Sohn, S.I.; Almekhlafi, M.; Goyal, M.; Hill, M.D.; Demchuk, A.M.; Menon, B.K. Machine learning for detecting early infarction in acute stroke with non–contrast-enhanced CT. Radiology 2020, 294, 638–644.

44. Campbell, B.C.; Mitchell, P.J.; Kleinig, T.J.; Dewey, H.M.; Churilov, L.; Yassi, N.; Yan, B.; Dowling, R.J.; Parsons, M.W.; Oxley, T.J.; et al. Endovascular therapy for ischemic stroke with perfusion-imaging selection. N. Engl. J. Med. 2015, 372, 1009–1018.

45. Kuang, H.; Wang, Y.; Liu, J.; Wang, J.; Cao, Q.; Hu, B.; Qiu, W.; Wang, J. Hybrid CNN-Transformer Network with Circular Feature Interaction for Acute Ischemic Stroke Lesion Segmentation on Non-contrast CT Scans. IEEE Trans. Med. Imaging 2024.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).