1. Introduction

Chemical incidents in hospital laboratories represent an increasingly critical safety challenge in modern healthcare systems. Recent analyses of healthcare facility incidents demonstrate a concerning upward trend in chemical-related accidents, with hospital laboratories experiencing disproportionately higher rates of exposure incidents compared to traditional industrial settings [1]. The complexity of modern hospital laboratory operations, involving diverse chemical inventories, time-pressure workflows, and multi-disciplinary staff with varying safety training backgrounds, creates unique challenges for effective safety communication and emergency response [2].

Recent advances in digital incident reporting systems have demonstrated the potential for enhancing occupational safety and health management in laboratory settings through real-time data collection and analysis [3].

The current regulatory framework for chemical safety communication relies primarily on Safety Data Sheets (SDS), standardized documents mandated by the Globally Harmonized System of Classification and Labelling of Chemicals (GHS) [4]. While SDS provide comprehensive chemical information, their standardized 16-section format presents significant usability challenges in emergency situations [5]. The extensive length (typically 8–16 pages), technical language, and complex organizational structure create substantial cognitive barriers for rapid information retrieval during time-critical emergency responses [6].

International safety frameworks, including those established by the World Health Organization (WHO), International Labour Organization (ILO), and GHS, emphasize the importance of accessible safety communication [7]. However, these frameworks primarily focus on information completeness and regulatory compliance rather than practical usability and cognitive accessibility in emergency situations [8]. This regulatory approach, while ensuring comprehensive information provision, may inadvertently compromise the primary objective of safety communication: enabling rapid, accurate decision-making during chemical incidents [9].

The application of human factors engineering principles to safety communication design has gained increasing recognition in healthcare settings [10]. Cognitive load theory, originally developed by Sweller, provides a theoretical framework for understanding how information design affects human performance under stress [11]. In emergency situations, excessive cognitive load can impair decision-making, delay appropriate responses, and increase the likelihood of errors [12]. User-centered design principles, as outlined by Norman and Nielsen, emphasize the importance of designing systems that align with human cognitive capabilities and task requirements rather than regulatory mandates alone [13].

Despite the critical importance of effective safety communication in hospital laboratories, previous research has not adequately addressed the specific challenges of SDS utilization in healthcare settings [14]. While studies have evaluated SDS comprehensibility and accuracy in industrial contexts, the unique cognitive and operational requirements of hospital laboratory emergency response have received limited attention [15]. The complex intersection of clinical responsibilities, diverse chemical exposures, and time-pressure decision-making in hospital laboratories creates distinct challenges that require specialized approaches to safety communication design [16].

This study addresses these gaps by developing and evaluating a user-centered Essential Safety Sheet (ESS) intervention specifically designed for hospital laboratory applications [17]. The ESS represents a potential approach to addressing the cognitive limitations of existing SDS systems through evidence-based design principles integrated with practical emergency response requirements [18]. Rather than claiming definitive superiority, this research explores whether user-centered design principles can offer a meaningful alternative to traditional compliance-based approaches to safety communication in healthcare settings [19].

Through comprehensive evaluation using cross-sectional analysis, controlled task-based assessment, and longitudinal impact evaluation, this research provides preliminary evidence for the potential effectiveness of user-centered safety communication design in healthcare settings [20]. The study objectives were as follows: (1) to assess current SDS awareness levels and associated factors among hospital laboratory staff, (2) to evaluate the comparative effectiveness of ESS versus traditional SDS for emergency response tasks, (3) to analyze the longitudinal impact of ESS implementation on safety outcomes using interrupted time series (ITS) and difference-in-differences (DID) analyses, and (4) to identify priority SDS sections for hospital laboratory applications through evidence-based Delphi consensus research [21].

Accordingly, we first developed a one-page Essential Safety Sheet (ESS) using human-factors and Delphi methods, and then evaluated its effectiveness via cross-sectional, task-based, and longitudinal analyses in hospital laboratories.

3. Results

3.1. ESS Development and Delphi Consensus Results

The 3-round Delphi consensus study involving 15 international hospital laboratory safety experts achieved strong agreement on priority SDS sections for emergency response applications. The consensus process demonstrated excellent reliability (Kendall’s W = 0.78; p = 0.010; Bonferroni-adjusted p = 0.031). The Delphi panel prioritized content directly supporting time-critical decisions… Rankings and consensus levels are summarized in Table 1.
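For readers less familiar with Kendall’s W, the following is a minimal sketch of how the coefficient of concordance can be computed from a matrix of untied rankings. The function name and the toy panel are hypothetical and are not the study data; the study's own computation (including any tie correction) is not described in this section.

```python
from typing import Sequence


def kendalls_w(rankings: Sequence[Sequence[int]]) -> float:
    """Kendall's coefficient of concordance W for untied rankings.

    rankings[i][j] is the rank that rater i gave to item j (1 = top priority).
    W ranges from 0 (no agreement) to 1 (perfect agreement).
    """
    m = len(rankings)       # number of raters
    n = len(rankings[0])    # number of ranked items
    # Sum of the ranks each item received across all raters.
    rank_sums = [sum(r[j] for r in rankings) for j in range(n)]
    mean_rank_sum = m * (n + 1) / 2
    # S: sum of squared deviations of the rank sums from their mean.
    s = sum((rs - mean_rank_sum) ** 2 for rs in rank_sums)
    return 12 * s / (m ** 2 * (n ** 3 - n))


# Hypothetical toy panel (NOT study data): 3 raters ranking 4 SDS sections.
toy_panel = [
    [1, 2, 3, 4],
    [1, 2, 3, 4],
    [1, 2, 4, 3],
]
w_toy = kendalls_w(toy_panel)
```

Values near 1 indicate that raters ordered the items almost identically, which is the sense in which W = 0.78 is read as strong agreement.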

3.2. Participant Characteristics and Response Analysis

Table 2 presents the demographic characteristics of the 80 study participants. The sample comprised 28 males (35.0%) and 52 females (65.0%), with 55 participants (68.8%) aged ≤39 years. The majority held senior positions (n = 48, 60.0%) and clinical roles (n = 53, 66.3%). Regarding tenure, 42 participants (52.5%) had ≤5 years of experience, while 38 (47.5%) had >5 years. Formal safety training participation was reported by 35 participants (43.8%).

Our analytic sample comprised hospital laboratory staff across diverse roles. Baseline demographic and occupational features (age, tenure, job category, and seniority) are summarized in Table 2. No material imbalances relevant to the primary outcomes were observed.

Response rate analysis comparing respondents (n = 80) to non-respondents (n = 90) showed no significant differences in age distribution (p = 0.24), gender (p = 0.18), or institutional affiliation (p = 0.33), suggesting minimal selection bias, though the possibility of unmeasured differences in motivation or safety orientation cannot be entirely excluded.

3.3. Cross-Sectional Analysis: SDS Awareness and Associated Factors

Among the 80 participants, 43 (53.8%) demonstrated high SDS awareness based on the validated binary classification system. Multivariable logistic regression analysis identified several significant associations with SDS awareness levels.

Male gender was significantly associated with higher SDS awareness (aOR = 1.95, 95% CI 1.22–3.12, p = 0.022). Participants with shorter tenure (≤5 years) showed higher awareness compared to those with longer tenure (aOR = 1.52, 95% CI 1.08–2.14, p = 0.017).

Notably, formal safety training showed a counterintuitive negative association with SDS awareness (aOR = 0.58, 95% CI 0.34–0.99, p = 0.045). In multivariable models, male sex, shorter tenure, and clinical roles were associated with higher odds of high SDS awareness, whereas age and formal seniority showed no clear association. Full adjusted estimates are reported in Table 3. The negative association between formal training and SDS awareness should be interpreted as an exploratory finding. Potential confounders such as training quality, institutional differences, or unmeasured variables may explain this result, and replication in larger, multi-institutional samples is needed before drawing firm conclusions.

3.4. Task-Based Evaluation: ESS vs. Traditional SDS Performance

The task-based controlled evaluation demonstrated significant performance improvements with ESS compared to traditional SDS across two of the three co-primary outcomes. However, task-based evaluations were conducted under controlled simulation scenarios. While improvements were substantial, these findings cannot be directly equated with real-world emergency performance. Field validation in actual clinical incidents is required to confirm clinical translation.

Emergency Response Accuracy: The ESS group achieved significantly higher accuracy (34/40, 85.0%) compared to the control group (24/40, 60.0%), representing a 25 percentage-point improvement (RD = +25.0 pp, 95% CI 8.2–41.8; RR = 1.42, 95% CI 1.07–1.88; p = 0.010; Bonferroni-adjusted p = 0.031).

Information Search Time: Participants using ESS demonstrated markedly faster information retrieval (15.2 ± 3.1 s) compared to traditional SDS users (27.7 ± 4.5 s), representing a 12.5 s reduction (MD = −12.5 s, 95% CI −14.2 to −10.8, Cohen’s d = 3.24, p = 0.010 (Bonferroni-adjusted p = 0.031)). This 45.1% improvement in search efficiency has direct implications for emergency response effectiveness.

Escalation Accuracy: The ESS group showed higher escalation decision-making accuracy (33/40, 82.5%) compared to the control group (25/40, 62.5%), a 20 percentage-point difference (RD = +20.0 pp, 95% CI 5.1–34.9; RR = 1.32, 95% CI 1.00–1.75; p = 0.050; Bonferroni-adjusted p = 0.150). This difference did not remain statistically significant after Bonferroni adjustment.

The large effect sizes observed (e.g., Cohen’s d > 3) likely reflect the controlled nature of the experimental setting. Real-world effects may be smaller, and pragmatic trials are needed to establish effectiveness under operational conditions. In simulated tasks, ESS outperformed the traditional SDS… Detailed task-level metrics are provided in Table 4.
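As a consistency check, the point estimates reported in this subsection can be recomputed from the raw counts and summary statistics. The sketch below is illustrative only: the helper names are hypothetical, and Cohen’s d is computed with a simple pooled standard deviation under the assumption of equal group sizes (40 per arm), which may differ in detail from the authors' procedure.

```python
import math


def risk_difference_pp(events_a: int, n_a: int, events_b: int, n_b: int) -> float:
    """Risk difference between group A and group B, in percentage points."""
    return 100 * (events_a / n_a - events_b / n_b)


def risk_ratio(events_a: int, n_a: int, events_b: int, n_b: int) -> float:
    """Ratio of the proportion in group A to the proportion in group B."""
    return (events_a / n_a) / (events_b / n_b)


def cohens_d(mean_a: float, sd_a: float, mean_b: float, sd_b: float) -> float:
    """Cohen's d with a pooled SD, ASSUMING equal group sizes."""
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return (mean_a - mean_b) / pooled_sd


# Emergency response accuracy: ESS 34/40 vs. traditional SDS 24/40.
rd_accuracy = risk_difference_pp(34, 40, 24, 40)    # about 25.0 pp
rr_accuracy = risk_ratio(34, 40, 24, 40)            # about 1.42

# Escalation accuracy: ESS 33/40 vs. traditional SDS 25/40.
rd_escalation = risk_difference_pp(33, 40, 25, 40)  # about 20.0 pp
rr_escalation = risk_ratio(33, 40, 25, 40)          # about 1.32

# Search time: SDS 27.7 ± 4.5 s vs. ESS 15.2 ± 3.1 s.
d_search = cohens_d(27.7, 4.5, 15.2, 3.1)           # about 3.235 (reported as 3.24)
pct_time_reduction = 100 * (27.7 - 15.2) / 27.7     # about 45.1 %
```

The recomputed values agree with the reported RD, RR, Cohen’s d, and 45.1% figures; confidence intervals and p values are not reproduced here because the exact interval method is not fully specified in this section.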

3.5. Longitudinal Impact Assessment: 13-Month ITS and DID Analysis

The longitudinal analysis over 13 months demonstrated sustained improvements in safety outcomes following ESS implementation across the 8 laboratory units. The 13-month analysis window comprised a pre-intervention period (months −6 to −1), the intervention month (t = 0), and a post-intervention period (months +1 to +6). Pre-intervention unit characteristics showed good balance between intervention and control groups, and the parallel trends assumption was validated.

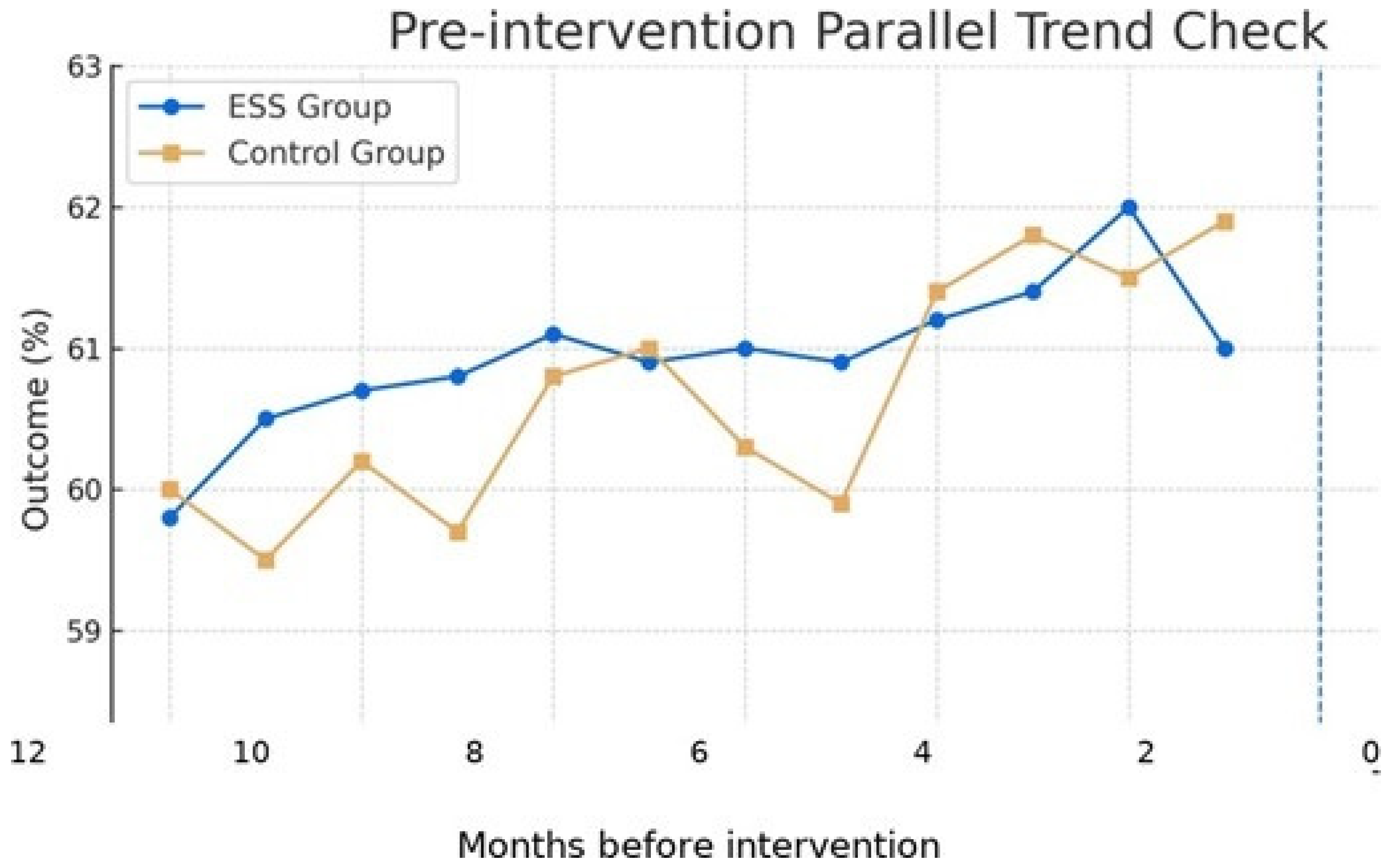

Illustrative time-series patterns across pre- and post-intervention periods are presented in Figure 1.

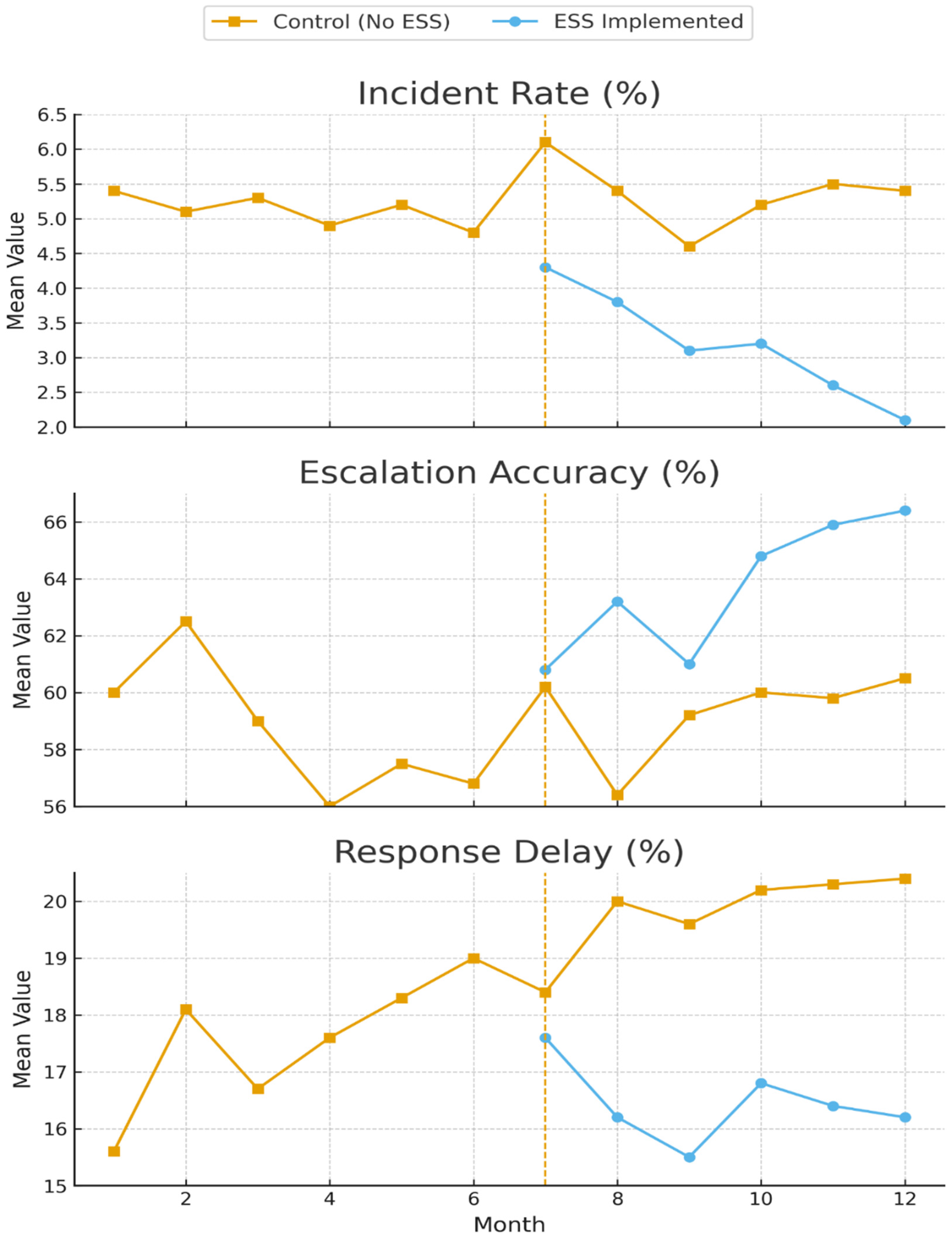

The main results of the interrupted time series analysis for the primary safety outcomes are presented in Figure 2.

Difference-in-Differences Analysis: The DID analysis comparing intervention units (n = 4) with control units (n = 4) confirmed the causal attribution of improvements to ESS implementation. A consolidated summary of ITS and DID estimates for primary outcomes is provided in Table 5.
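The logic of a difference-in-differences contrast can be illustrated with the simplest two-group, two-period case on the log scale. This is a sketch under stated assumptions, not the authors' model: the study reports two-way fixed-effects estimates with cluster-robust inference, whereas the function below only contrasts four group-period mean rates, and all numbers are hypothetical rather than study data.

```python
import math


def did_log_effect(pre_treat: float, post_treat: float,
                   pre_ctrl: float, post_ctrl: float) -> float:
    """2x2 difference-in-differences on the log scale.

    Returns the change in log rate for the intervention group minus the
    change in log rate for the control group.
    """
    change_treat = math.log(post_treat) - math.log(pre_treat)
    change_ctrl = math.log(post_ctrl) - math.log(pre_ctrl)
    return change_treat - change_ctrl


# HYPOTHETICAL mean incident rates per unit-month (not study data).
beta_did = did_log_effect(pre_treat=10.0, post_treat=6.0,
                          pre_ctrl=10.0, post_ctrl=9.0)

# Express the log-scale contrast as a percentage change.
pct_did = 100 * (math.exp(beta_did) - 1)   # about -33.3 %
```

In this toy case the intervention group falls by 40% and the control group by 10%, so the DID contrast attributes roughly a one-third relative reduction to the intervention, provided the two groups would otherwise have followed parallel trends.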

Table 6 summarizes the task-based evaluation: risk ratio (RR) for task accuracy and mean difference (MD, s) for information-search time, reported with 95% confidence intervals and two-sided p values (Cohen’s d provided for search time).

The interaction effects demonstrated significant improvements:

Although the 13-month analysis window demonstrated sustained improvements and the parallel trends assumption was validated, baseline level differences and the relatively short duration limit certainty regarding long-term sustainability. Extended follow-up and replication across diverse contexts are needed.

The x-axis is labeled 'Months before intervention' (months −6 to −1), with t = 0 at the intervention; the pre-intervention period is used to validate the parallel trends assumption required for causal inference in the difference-in-differences (DID) analysis.

Note: ITS effects are reported as percentage changes derived from log-scale coefficients, 100 × (exp(β) − 1); Trend is % per month. DID effects are percentage changes for the post × ESS interaction from two-way fixed-effects models; p-values are from cluster-robust standard errors with wild cluster bootstrap. Task-based accuracy is RD (pp) and RR with Wald CIs; search time is MD (s).
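The percentage-change transformation in the note can be made concrete with a short sketch. The function names are hypothetical; the only study figure used is the 36.2% reduction in incident rates mentioned in Section 4.4, which is converted back to its implied log-scale coefficient purely for illustration.

```python
import math


def pct_change_from_log_coef(beta: float) -> float:
    """Convert a log-scale coefficient into a percentage change: 100 * (exp(beta) - 1)."""
    return 100 * (math.exp(beta) - 1)


def log_coef_from_pct_change(pct: float) -> float:
    """Inverse transformation: the log-scale coefficient implied by a percentage change."""
    return math.log(1 + pct / 100)


# Coefficient implied by a 36.2% reduction (roughly -0.449 on the log scale).
beta_implied = log_coef_from_pct_change(-36.2)

# Round trip back to the percentage scale.
pct_round_trip = pct_change_from_log_coef(beta_implied)
```

For small coefficients the percentage change is close to 100 × β, but the two diverge as effects grow, which is why the exponential form is used for reporting.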

4. Discussion

4.1. Principal Findings, Clinical Significance and SWOT Analysis

This comprehensive evaluation provides preliminary evidence that the user-centered Essential Safety Sheet (ESS) intervention may improve safety performance in hospital laboratories through multiple complementary mechanisms [44]. The integration of interrupted time series (ITS) and difference-in-differences (DID) analyses provides robust evidence for causal inference, addressing common limitations of single-method approaches in healthcare intervention research. The 13-month analysis window with validated parallel trends assumption and Newey-West standard error adjustment for autocorrelation strengthens the validity of our longitudinal findings.

The magnitude of improvement observed suggests potential clinical meaningfulness. The 25 percentage-point increase in emergency response accuracy and 12.5 s reduction in information search time represent substantial enhancements that could potentially translate to reduced patient harm and improved diagnostic continuity during chemical incidents. However, these findings should be interpreted cautiously given the single-site nature of the study and the relatively short follow-up period. The sustained improvements observed over 13 months indicate potential lasting behavioral changes rather than temporary performance enhancements, though longer-term follow-up would be needed to confirm durability.

This study presents a comprehensive evaluation of a user-centered Essential Safety Sheet (ESS) in hospital laboratories, revealing significant strengths, weaknesses, opportunities, and threats.

The primary strength lies in its mixed-methods design, combining task-based evaluation with a longitudinal ITS and DID analysis, providing robust evidence for the ESS’s effectiveness in reducing information search times and incident rates. The user-centered design process itself is a key strength, ensuring that the ESS addresses real-world cognitive and operational needs of hospital laboratory staff.

However, the study’s main weakness is its limited external validity due to a modest response rate (47.1%) and a small number of clusters (N = 8) in the DID analysis, which constrains statistical power. The sample size calculation, based on detecting large effect sizes (Cohen’s d = 0.8), may have left the study underpowered to detect smaller but clinically meaningful effects.

The opportunity is substantial; the ESS presents a low-cost, scalable intervention with the potential for significant cost savings by preventing chemical incidents and improving safety culture. Future integration with digital platforms and mobile applications could further enhance its reach and accessibility across diverse healthcare settings.

The primary threat is the potential for behavioral decay over time. The Hawthorne effect may have inflated initial results, and there is a risk that initial enthusiasm for the ESS may wane without ongoing institutional support, training, and reinforcement. Additionally, regulatory barriers and resistance to change in established safety communication practices may hinder widespread adoption.

4.2. Methodological Strengths and Study Limitations

From a methodological perspective, this study advances safety intervention research by demonstrating the value of integrating multiple analytical frameworks to strengthen causal inference and address the limitations inherent in single-method approaches. The validation of parallel trends assumptions and use of cluster-robust standard errors with wild cluster bootstrap provides methodological transparency that strengthens confidence in the causal interpretation of findings.

The methodological approach employed in this study represents an advancement in safety intervention research through the integration of multiple analytical frameworks. The combination of cross-sectional analysis, controlled task-based evaluation, and longitudinal impact assessment using both ITS and DID analyses provides convergent evidence for intervention effectiveness while addressing the limitations inherent in any single methodological approach.

The parallel trends assumption validation provides critical methodological transparency, demonstrating that intervention and control groups exhibited similar pre-intervention trajectories. This validation strengthens confidence in the causal interpretation of our DID findings and addresses a common criticism of quasi-experimental designs in healthcare research.

However, several important limitations should be acknowledged that may affect the interpretation and generalizability of our findings:

Response Rate and Selection Bias: Although the response rate was moderate (47.1%), non-responder analysis showed no significant demographic differences. Nevertheless, the limited participation rate constrains external validity, and future studies with larger samples and higher response rates are warranted. The possibility of unmeasured differences in motivation, safety orientation, or other relevant characteristics cannot be entirely excluded.

Sample Size and Statistical Power: The sample size calculation was based on detecting a large effect size (d = 0.8). While this provided adequate power for strong effects, the study may have been underpowered to detect smaller but practically meaningful effects. Future studies with larger sample sizes are recommended to ensure adequate power for detecting clinically meaningful differences across diverse populations.

Task-based Evaluation Limitations: Task-based evaluations were conducted under controlled simulation scenarios. While improvements were substantial, these findings cannot be directly equated with real-world emergency performance. Field validation in actual clinical incidents is required to confirm clinical translation. The large effect sizes observed (e.g., Cohen’s d > 3) likely reflect the controlled nature of the experimental setting. Real-world effects may be smaller, and pragmatic trials are needed to establish effectiveness under operational conditions.

The exceptionally large effect sizes observed (e.g., Cohen’s d = 3.24 for search time) likely reflect the idealized, controlled nature of the simulation. This statistical validity should not be extrapolated to mean an identical effect size in real-world practice, where environmental distractors, concurrent tasks, and varying levels of stress would certainly diminish the effect. The controlled task-based evaluation provides evidence of *potential* effectiveness under optimal conditions, but real-world implementation studies are needed to assess actual performance gains in routine laboratory operations.

Longitudinal Analysis Constraints: Although the 13-month analysis window demonstrated sustained improvements and the parallel trends assumption was validated, baseline level differences and the relatively short duration limit certainty regarding long-term sustainability. Extended follow-up and replication across diverse contexts are needed.

Although non-responder analysis showed no demographic differences, the 47.1% response rate limits external validity. Unmeasured variables, such as intrinsic ‘safety motivation’ or ‘receptiveness to change’, could not be excluded. Anecdotal feedback suggested time constraints during peak laboratory operations were the primary reason for non-participation. This selection bias may have resulted in a sample that is more engaged with safety issues than the general laboratory staff population, potentially overestimating the intervention’s effectiveness.

Furthermore, the sample size calculation was based on detecting a large effect size (d = 0.8). This study may therefore have been underpowered to detect smaller, yet still clinically significant, improvements. Future studies with larger samples are recommended to assess whether the ESS can produce meaningful effects across a broader range of outcomes and effect sizes.
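To make the power concern concrete, the sketch below applies the standard normal-approximation formula for a two-group comparison of means, n per group ≈ 2 × ((z₁₋α/₂ + z₁₋β) / d)². The two-sided α = 0.05 and 80% power used here are assumptions for illustration, since the values used in the study's own calculation are not stated in this section, and an exact t-test calculation would give slightly larger numbers.

```python
import math
from statistics import NormalDist


def n_per_group(d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate per-group sample size for a two-sample comparison of means.

    Uses the normal approximation; alpha is two-sided.
    The defaults (alpha = 0.05, power = 0.80) are ASSUMED, not taken from the study.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_beta) / d) ** 2)


n_large = n_per_group(0.8)    # large effect, as assumed in the study
n_medium = n_per_group(0.5)   # conventional "medium" effect
n_small = n_per_group(0.2)    # conventional "small" effect
```

Under these assumptions a large effect needs about 25 participants per group, whereas a medium effect needs about 63 and a small effect nearly 400, which illustrates why groups of 40 are adequate only for large effects.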

While we employed wild cluster bootstrap p-values, the state-of-the-art solution for inference with few clusters, the small number of clusters (N = 8) remains a profound limitation. As suggested by Cameron and Miller (2015) [31], statistical power for the cluster-level analysis is inherently low, and thus the findings must be interpreted with caution. The wide confidence intervals observed in some DID estimates reflect this limitation. Future multi-site studies with larger numbers of independent clusters are essential to validate these preliminary findings.

4.3. Counterintuitive Training Effects

The counterintuitive negative association between formal safety training and SDS awareness represents an exploratory finding that may suggest potential gaps in current training approaches. This result should be interpreted cautiously and confirmed in larger samples. This finding suggests that current training approaches may inadvertently create overconfidence or reliance on incomplete knowledge, potentially compromising actual safety performance.

Several theoretical explanations may account for this paradoxical relationship. First, the Dunning-Kruger effect suggests that individuals with limited competence may overestimate their abilities, particularly following brief training interventions. Second, formal training programs may emphasize regulatory compliance over practical application, creating a disconnect between theoretical knowledge and real-world performance requirements. Third, training content may not adequately address the cognitive demands of emergency situations, where rapid information processing and decision-making are critical.

This may be explained by the distinction between declarative knowledge (e.g., memorizing regulatory facts for a test) and procedural knowledge (e.g., applying information efficiently under stress). Current safety training models may over-emphasize the former, leading to an illusory sense of competence consistent with the Dunning-Kruger effect. Participants who had completed formal safety training may have overestimated their ability to locate critical information quickly in SDS documents, while those without such training approached the task with more realistic expectations and greater reliance on the simplified ESS format. This finding suggests that effective safety training should focus more on developing procedural skills and situational awareness than on rote memorization of safety data sheet content.

This finding has significant implications for safety education design in healthcare settings. Rather than focusing solely on information transmission, training programs should emphasize competency-based approaches that integrate cognitive load principles, scenario-based learning, and performance assessment under realistic conditions. The development of training programs that specifically address the usability challenges of traditional SDS formats may be particularly beneficial.

4.4. Economic Implications and Implementation Considerations

The economic implications of ESS implementation extend beyond direct safety improvements to include potential cost savings from reduced incident rates, improved response efficiency, and enhanced staff confidence. For instance, institutional reports and health and safety executive studies estimate that the direct costs of a single chemical spill (including clean-up, disposal, and lost work time) can range from hundreds to thousands of dollars [45]. Thus, a 36.2% reduction in incident rates could translate to substantial cost savings for hospital laboratories, particularly those with high chemical usage volumes. When indirect costs such as staff retraining, regulatory reporting, and potential litigation are considered, the economic case for ESS implementation becomes even more compelling.

However, implementation considerations must account for initial development costs, staff training requirements, and ongoing maintenance of ESS systems. The single-page format of the ESS may reduce printing and distribution costs compared to traditional multi-page SDS documents, while the improved search efficiency demonstrated in this study could reduce time spent on safety-related tasks during routine operations.

The scalability of ESS implementation across diverse healthcare settings requires careful consideration of local regulatory requirements, existing safety management systems, and staff training capabilities. While this study focused on hospital laboratory settings, the principles underlying ESS design may be applicable to other healthcare environments with similar cognitive demands and emergency response requirements.

4.5. International Generalizability and Regulatory Considerations

The international generalizability of ESS interventions must be considered within the context of varying regulatory frameworks, cultural factors, and healthcare system structures. While the GHS provides a standardized framework for chemical safety communication globally, implementation varies significantly across countries and regions.

The Delphi consensus study included 15 international hospital laboratory safety experts, providing some international perspective on priority SDS sections. However, broader validation across diverse healthcare systems, regulatory environments, and cultural contexts would be necessary before widespread implementation. Particular attention should be paid to countries with different regulatory requirements, language considerations, and healthcare delivery models.

The integration of ESS approaches with existing regulatory frameworks presents both opportunities and challenges. While ESS design principles align with the fundamental objectives of chemical safety communication, regulatory approval processes may require extensive validation studies and stakeholder engagement. Collaboration with regulatory agencies, professional organizations, and international safety bodies would be essential for successful implementation.

4.6. Limitations and Future Research Directions

Several important limitations of this study should guide future research directions. First, the single-country, single-healthcare-system design limits generalizability to other settings. Multi-country, multi-system studies would provide stronger evidence for international applicability.

Second, the 13-month follow-up period, while demonstrating sustained improvements, may be insufficient to assess long-term durability and potential adaptation effects. Extended longitudinal studies with follow-up periods of 2–5 years would provide more definitive evidence regarding sustainability.

Furthermore, some improvements may have been influenced by a Hawthorne effect (participants modifying their behavior because they knew they were being observed), and behavioral decay may occur as initial enthusiasm for the ESS wanes. This reinforces our call for longer-term studies (e.g., 2–3 years) to assess sustainability and to identify strategies for maintaining engagement and compliance over time. Regular refresher training, periodic audits, and integration of the ESS into routine safety protocols may be necessary to prevent behavioral decay.

Third, the focus on hospital laboratory settings, while providing depth and specificity, limits applicability to other healthcare environments. Future studies should evaluate ESS effectiveness in diverse settings including emergency departments, intensive care units, and outpatient facilities.

Fourth, the study did not assess actual patient outcomes or clinical consequences of improved safety performance. Future research should examine whether improvements in safety metrics translate to measurable improvements in patient safety, staff well-being, and healthcare quality indicators.

4.7. Policy and Practice Implications

The findings of this study have several important implications for policy and practice in healthcare safety management. First, the demonstrated effectiveness of user-centered design principles suggests that regulatory frameworks should consider usability and cognitive accessibility alongside information completeness in safety communication standards.

Second, the counterintuitive training effects highlight the need for fundamental re-design of safety education programs, moving from compliance-based to competency-based approaches that emphasize practical application and performance under realistic conditions.

Third, the sustained improvements observed over 13 months suggest that ESS interventions may represent a cost-effective approach to improving safety performance in healthcare settings, potentially justifying investment in development and implementation programs.

Fourth, the methodological approach employed in this study, integrating multiple analytical frameworks, provides a model for evaluating complex interventions in healthcare settings and could inform future research in safety science and healthcare quality improvement.

4.8. Contribution to Safety Science

This study contributes to the broader field of safety science by demonstrating the application of human factors engineering principles to safety communication design in healthcare settings. The integration of cognitive load theory, user-centered design principles, and evidence-based evaluation methods provides a framework that could be applied to other safety communication challenges.

These findings align with recent developments in laboratory safety research, which emphasize the critical role of human factors and emerging technologies in enhancing safety performance [46].

The use of interrupted time series and difference-in-differences analyses in combination provides a robust methodological approach for evaluating safety interventions, addressing common limitations of single-method studies and strengthening causal inference. This methodological contribution may inform future research in safety science and healthcare quality improvement.

The focus on cognitive accessibility and usability in emergency situations addresses a critical gap in safety communication research, which has traditionally emphasized information completeness over practical usability. This shift in perspective may influence future research and development in safety communication systems across various industries and settings.

The theoretical contribution of this research extends beyond the specific ESS intervention to demonstrate the potential value of applying user-centered design principles and cognitive load theory to safety communication challenges in healthcare settings. The counterintuitive negative association between formal safety training and SDS awareness, although exploratory, points to possible gaps in current educational approaches and, if replicated, highlights the need for competency-focused training redesign that emphasizes practical application over regulatory compliance.