Abstract

Objective: To evaluate the psychometric properties of the short version of the Token Test (SVTT) and the Rey–Osterrieth Complex Figure (ROCF) using an item response theory (IRT) framework and to establish normative data for Colombian children and adolescents based on ability scores. Methods: A total of 668 healthy participants aged 6–17 years took part in this study. Factorial structure was assessed through confirmatory factor analysis (CFA). Item parameters were estimated using a two-parameter logistic (2PL) model for the SVTT, which accounts for both item difficulty and discrimination in dichotomous responses, and a graded response model (GRM) for the ROCF, suitable for items scored on ordered categories reflecting increasing levels of performance accuracy and Differential Item Functioning (DIF) analysis was conducted to assess potential bias related to sex. Reliability was examined using the Test Information Function (TIF), internal consistency throughout Cronbach’s alpha, and the influence of sociodemographic variables was analyzed through regression models. Results: CFA confirmed unidimensionality for all measures. For most items, moderate-to-low ability was sufficient to achieve the highest scores in the ROCF, and low ability in the SVTT. DIF analysis indicated no meaningful sex-related bias in any of the subtests. Both tests showed excellent reliability and internal consistency. Copy scores were influenced by polynomial age and parents’ mean years of education (MPE), while both immediate recall in the ROCF and SVTT were affected by MPE and the interaction of logarithmic age. Conclusions: This study provides strong psychometric evidence and, together with the integration of digital tools for generating normative data, represents a meaningful advancement in neuropsychological assessment.

1. Introduction

Neuropsychological assessment is a comprehensive, structured process for evaluating cognitive and psychological functioning, which encompasses data from multiple sources, including standardized tests, to support clinical decision making. These tests allow clinicians and researchers to compare patients’ performance across diverse populations [1]. Due to their central role, their utility depends on robust psychometric properties, a requisite for accurate assessments, diagnosis and clinical decision making [2].

Neuropsychological tests are intended to be reliable, valid, and adapted to the cultural, linguistic, and demographic contexts of the population to ensure representativeness and minimize measurement bias [2,3]. Instruments developed, validated and normed in different cultural and linguistic settings may fail to capture cognitive performance in other populations, leading to misdiagnosis and limited generalizability of findings [3,4,5]. Despite this, validation and normative studies in diverse populations remain insufficient, and it is still necessary to validate commonly used cognitive function tools for clinical practice and research.

The Rey–Osterrieth Complex Figure [6] and the Token Test [7] are two of the most used neuropsychological measures that were developed and normed on relatively homogeneous samples, lacking adequate representation of specific populations [5]. The ROCF assesses the visuo-constructional ability and non-verbal memory, including copying and recall tests, and has been used for brain injury or cognitive disorders and the general population with adequate reliability and validity [8,9]. On the other hand, TT evaluates verbal comprehension through the execution of spoken commands and serves as a diagnostic tool for certain language disorders, such as aphasia or dementia [10,11]. The TT has demonstrated good reliability, validity, and adequate classification power [10,12]. More efficient adaptations of the TT have been developed, such as the Shortened Version of the Token Test [13], which has also shown adequate psychometric properties for assessing language difficulties in both adults and children [11,14].

However, most neuropsychological tests have been developed and validated within the framework of Classical Test Theory (CTT), including ROCF and TT [5]. The CTT approach estimates an individual’s observed score as the sum of their true ability and measurement error and provides metrics of reliability and validity that are useful for both clinical and research purposes [15]. However, CTT presents some limitations that include relying on test-level statistics, assuming equal item contribution, and being sample-dependent. These constraints can limit the interpretability, precision, and generalizability of scores, particularly when applied across diverse cultural or linguistic groups [15].

In contrast, Item Response Theory (IRT) emerged as an alternative to address some of the CTT limitations [16,17]. The IRT focuses on item-level analysis, modeling the probability of a given response as a function of an individual’s latent ability (θ), and specific item parameters, such as difficulty and discrimination, and how it distinguishes between individuals with different ability levels [17]. This approach provides a more accurate estimation of individuals’ ability levels in specific cognitive functioning, regardless of individuals’ differences. Also, IRT incorporates Differential Item Functioning (DIF) to detect and reduce measurement bias across demographic groups [17] and it has gained popularity in neuropsychological assessment due to the limitations of CTT, which relies on total scores and fails to capture the information provided by individual items. Prior studies have already provided empirical support for the superior performance of IRT-based scoring compared with CTT approaches. Specifically, IRT models have been shown to produce lower Type I error rates, greater statistical power, and more accurate effect-size estimation [18,19], as well as higher sensitivity to true individual change, as reflected by the Magnitude of True Change index [20].

In the ROCF, the total score is commonly used to determine an individual’s performance in copy or memory conditions. However, this limits the interpretation of item-level performance. Two individuals may obtain the same total score but differ on specific items, reflecting different underlying cognitive difficulties [15]. IRT allows us to identify which of the ROCF 18 scoring units are most difficult or discriminative, and therefore, estimate a more precise and person-specific ability score. A similar limitation exists for TT, where clinicians often rely solely on the total score. Moreover, this test was originally designed with increasing items of difficulty, making IRT ideal for evaluating whether the original intended item order remains for a particular population.

IRT has gained increasing attention in neuropsychological research for its ability to identify redundant items and effective items in distinguishing abilities, optimizing test efficiency and improving interpretation guidelines in neuropsychological assessment [8,17,21,22]. Despite its advantages, IRT-based research remains limited, especially in low- and middle-income countries. Few IRT studies focused on pediatric populations in Latin America, thereby creating a major gap in culturally appropriate evidence [23].

Although normative data available for the ROCF and the SVTT exist for various populations, including Spanish-speaking adults and pediatric populations from Spain and several Latin American countries [11,14], most are based on the CTT framework and lack of psychometric analyses of the neuropsychological test. Norms based on raw scores under the CTT may therefore lack the precision required for sensitive clinical decision making [15]. Therefore, it is necessary to conduct validation studies based on IRT that also provide normative data to determine whether an individual’s performance within a specific population is ‘normative’ or indicative of impairments [1].

Improving measurement precision is critical for the early detection of cognitive impairment and for preventing developmental decline, including behavioral difficulties and declines in attention, language, and cognitive performance, even among initially high-functioning children [24]. In this regard, there is a need for valid and culturally sensitive appropriate tools to detect and monitor cognitive changes over time, particularly during childhood, when declines may go unnoticed, reducing the chances for early intervention.

Based on previous scientific literature, there is a growing need to improve the use and adaptation of neuropsychological instruments, particularly those applied across different cultural and linguistic contexts. The inappropriate use of these instruments may compromise the validity of clinical practice and increase the risk of biased interpretations [3,4,5], especially when transporting tests and norms from other cultural-linguistic contexts to Spanish-speaking populations, where validation studies are still scarce [23]. In addition, norms based solely on the CTT framework provide limited assessment precision [15] and may not be sufficiently sensitive to guide decision making in pediatric settings, where applications of IRT remain uncommon [23]. In this regard, children and adolescents require assessment tools that can deliver individualized evaluations and accurately differentiate among varying levels of ability, enabling clinicians to make better-informed and fairer diagnostic judgments [17].

To fill this gap, the present study provides IRT-based psychometric analyses and regression-adjusted normative data for two widely used neuropsychological tests (ROCF and SVTT) in a pediatric, Spanish-speaking, Latin American population. The aims of this study were: (1) to examine the psychometric properties of the ROCF and SVTT, including validity, reliability, item difficulty and discrimination parameters, and to evaluate Differential Item Functioning [DIF] by sex; and (2) to generate normative data for Colombian children using IRT and regression models. This study aims to enhance the use of neuropsychological assessment tools in specific populations and to minimize the risk of bias in clinical practice among pediatric patients.

2. Materials and Methods

2.1. Participants

The initial sample included 690 children and adolescents from four Colombian cities Medellín, Cali, Ibagué and Bogotá. To be included in the study, participants had to meet the following criteria: (a) be between 6 and 17 years old, (b) be born and residing in Colombia, (c) have an IQ ≥ 80 on the Test of Nonverbal Intelligence [25], and (d) score < 19 on the Children’s Depression Inventory [26]. Exclusion criteria included: (a) reported learning difficulties, (b) a history of psychoactive substance use (for adolescents), and (c) a score < 5 on the Alcohol Use Disorder Identification Test (AUDIT-C) [27]. Verification of inclusion and exclusion criteria was conducted through an interview with the participants’ parents or caregivers. Due to incomplete demographic data, 22 participants were excluded, resulting in a final sample of 668 individuals. Of the total participants, 54.19% were female (n = 374). The average age was 11.34 years (SD = 3.28), and the Mean Years of Parental Education (MPE) was 12.15 years (SD = 3.62). Half of the sample attended public schools, while the other half attended private schools. The distribution across cities were: Medellín (n = 181), Cali (n = 141), Ibagué (n = 190), and Bogotá (n = 156).

2.2. Instruments

2.2.1. Rey-Osterrieth Complex Figure (ROCF)

The ROCF assesses visual–spatial construction and immediate visual memory. In this study, the A-version of the figure, which composed of 18 elements was used. The test is administered in two phases: first, the participant is asked to copy the figure; then, after a delay of three minutes, the figure is removed and the participant is instructed to reproduce it from memory. Scoring is based on the accuracy and placement of each element: 2 points are awarded for accurate and correctly placed reproductions, 1 point for elements that are distorted or incomplete but correctly located, or for complete elements that are poorly positioned. If the element is both distorted or incomplete and misplaced, 0.5 points are assigned. A score of 0 is given when the element is omitted or unrecognizable [6].

2.2.2. Shortened Version of the Token Test (SVTT)

The SVTT is designed to measure verbal comprehension through the execution of verbal commands involving tokens of varying shapes, sizes, and colors. The task consists of six parts (36 items) with increasing complexity, where participants are instructed to manipulate 20 tokens according to specific verbal directions. Each correct response receives 1 point; if the correct response is given only after repeating the instruction, 0.5 points are awarded [13]. However, in line with this study’s criteria, all 0.5-point responses were recoded as 0. No points are awarded if the participant fails to respond correctly, even after the instruction is repeated.

2.3. Procedure

This study is part of a larger multicenter project aimed at generating normative data for Spanish-speaking populations. Participants completed a battery of neuropsychological tests, including the ROCF and SVTT, which were the only tests suitable for item-level analysis. To ensure consistency and minimize potential bias, the order of test administration was randomized for all participants. Additionally, a Microsoft Excel template was used to minimize data entry errors. The study was approved by the Ethics Committee of the University of the North and Pedagogical and Technological University of Colombia and conducted in accordance with the ethical principles of the Declaration of Helsinki. Parents or legal guardians who agreed to participate signed an informed consent form and completed a questionnaire gathering sociodemographic data, medical history, and the child’s health status. All assessments were conducted individually at participating schools. Data collection took place between July and December 2017. Participation was entirely voluntary, and no monetary compensation was offered.

2.4. Statistical Analysis

2.4.1. Psychometric Properties

To assess the construct validity of each instrument, we conducted separate Confirmatory Factor Analyses (CFAs) using the Diagonally Weighted Least Squares (DWLS) estimator, which is well-suited for ordinal data. These analyses aimed to evaluate whether the items from each test reflected a single underlying factor, that is, whether the assumption of unidimensionality was supported. CFAs model fit was evaluated using several standard indices like the chi-square to degrees of freedom ratio , where values of ≤3 indicate adequate fit [28], Comparative Fit Index (CFI) and Tucker–Lewis Index (TLI), for which values ≥ 0.95 reflect excellent fit; and the Root Mean Square Error of Approximation (RMSEA), considered optimal when ≤0.06, accompanied by its 90% confidence interval. The Standardized Root Mean Square Residual (SRMR), with values < 0.08, was also used to determine the acceptability of the models [29].

To further investigate item functioning, IRT models were employed to estimate item-level parameters. Dichotomous SVTT items were modeled using a two-parameter logistic (2PL) model, which allows both item difficulty (b) and discrimination (a) to vary across items, offering greater flexibility than the Rasch model [30]. In contrast, the ROCF copy and immediate recall tasks, which consist of polytomous items, were analyzed using the Graded Response Model (GRM). This model estimates the probability of responding in each category as a function of the latent trait level (θ) and evaluates how well each item discriminates across different levels of the trait [31].

The estimated ability parameter (θ) obtained from the IRT models was used as a refined measure of individual performance. This parameter represents each person’s standing on the underlying trait (e.g., visuoconstructive ability or verbal comprehension) and is derived by modeling their pattern of responses in relation to the psychometric characteristics of each item (such as how difficult or discriminative the items are). Unlike raw scores, θ-score provides a standardized, interpretable metric that enables more accurate comparisons across individuals and across different sets of items. Differential Item Functioning (DIF) was analyzed to identify potential bias across specific groups, focusing on sex as the variable of interest [32]. Logistic regression was employed for DIF detection, leveraging trait scores derived from IRT, and this method iteratively adjusts item parameters based on group differences (in this case, boys and girls), as modeled through a series of regressions and evaluates the magnitude of explained variance through the incremental ΔR2 [33].

Measurement precision (reliability) was evaluated for both the ROCF and SVTT using IRT methods. Test information functions (TIF) were computed to assess the precision of measurement across ability score (θ), with higher information values indicating greater reliability at specific trait levels. The relationship between information and measurement error is given by , where SEM is the standard error of measurement.

2.4.2. The Effects of Age, Gender, and MPE on the θ-Scores

To explore the influence of demographic variables on test performance, multiple linear regression analyses were conducted using the ability estimates (θ) as dependent variables for ROCF copy, ROCF immediate recall, and SVTT. To examine the predictive value of age, MPE, and sex, we compared two families of models differing in the transformation applied to the age variable: one included a logarithmic transformation ln(Age), and the other a quadratic orthogonal polynomial effect (Age and Age2). The quadratic formulation was motivated by the previous literature reporting nonlinear age effects in neuropsychological tasks [34,35], and was expressed .

However, from a clinical perspective, a monotonic increase in performance during childhood and adolescence is typically expected, making the logarithmic transformation more plausible in this context, and was expressed as . Where residuals were assumed to follow a normal distribution with a mean of 0 and constant variance , that is . To select the best-fitting model, for both formulations, an exhaustive variable selection approach was implemented, evaluating all possible combinations of the predictor variables ( models) and selecting the optimal one based on the Mallows’ Cp and Bayesian Information Criterion (BIC), selecting the model with the lowest value in each case.

The primary objective of the modeling strategy was predictive rather than purely explanatory. Model parameters were estimated to compute expected scores for new individuals based on demographic variables, thereby forming the foundation for regression-based normative data. To rigorously assess model stability and generalizability, repeated 10-fold cross-validation (CV) was performed, iterated 100 times using 80% (randomly partitioning) of the sample for training. This resampling procedure allowed for the estimation of average out-of-sample prediction error (mean squared error, MSE) across folds, providing a robust internal measure of predictive performance for both the log-transformed and quadratic age models selected according to Cp and BIC criteria.

External validation was further conducted using the independent sample (20%). Each model was fitted on the training data, and its predictive accuracy was quantified on the test data using root mean squared error (RMSE), thereby providing an unbiased estimate of generalization error. The combination of repeated CV and out-of-sample testing enabled a comprehensive evaluation of model performance and supported the reliability of the normative equations when applied to new clinical cases [36]. Finally, assumptions in the final linear regression models were tested. Multicollinearity was evaluated using the Variance Inflation Factor (VIF), with values ≤ 10 considered acceptable. Homoscedasticity was assessed via Levene’s test, and normality of residuals through the Kolmogorov–Smirnov test. To identify potential influential data points, we examined Cook’s distance (Dᵢ), with values exceeding 1 flagged for further inspection [37]. All analyses were conducted in R (version 4.5.1) using the following packages: lavaan for structural equation modeling [38], mIRT for multidimensional item response theory modeling [39], and ltm for latent trait analysis [40].

2.4.3. Normative Data Procedure

Following the procedure described by Rivera et al. (2024) [41], demographically adjusted normative conversions were derived for the estimated ability scores from the ROCF copy, ROCF immediate recall, and SVTT . These conversions transformed latent trait estimates (θ) into percentile ranks that account for individual demographic profiles. First, the expected ability score for each participant was computed using the regression model coefficients based on their demographic variables:

where represents the individual’s demographic profile. Second, the cumulative probability of the observed ability estimates for participant in task was obtained from the standard normal cumulative distribution function, centered on the expected value and the model’s residual standard error ():

where denotes the standard normal cumulative distribution function. Finally, this probability was multiplied by 100 to obtain the corresponding percentile rank :

3. Results

3.1. Psychometric Properties

For the ROCF copy, CFA supported the assumption of unidimensionality (; CFI = 0.988; TLI = 0.986; RMSEA = 0.060 [0.053, 0.068]; SRMR = 0.069). Item parameters derived from the IRT analysis (see Table 1) revealed discrimination values ranging from 1.29 (item 16: line) to 3.95 (item 3: diagonal cross). For example, threshold parameters for Item 1 indicate the latent-trait (θ) locations at which the probability of scoring at least category k (vs. ≤ k − 1) equals 50%. Boundary 1 (−2.506) marks P(score ≥ 1) = 0.50; boundary 2 (−1.542) marks P(score ≥ 2) = 0.50; and boundary 3 (2.292) marks P(score ≥ 3) = 0.50.

Table 1.

ROCF item parameters, based on item response theory.

The DIF analysis identified item 6 due to minor differences in location parameters between boys and girls. These discrepancies reflect minimal variations in the perceived ease of the item across groups; however, the ΔR2 of 0.012 indicates that the overall impact on the probability of a correct response is minimal [33]. Therefore, these differences are not substantial enough to affect the interpretation of the subtest, confirming that ROCF copy functions fairly for both sexes (see Table 2).

Table 2.

Differential item functioning for ROCF and SVTT.

The measurement precision though TIF for the ROCF copy subtest under the 2PL model exhibits a bimodal pattern, with two distinct peaks of precision across the ability continuum. The highest precision is achieved around θ = 0.21, with a SEM of 0.15 indicating excellent reliability (reliability = 0.98) for individuals with higher ability levels. A second, smaller peak appears around , suggesting that the test also provides meaningful information for individuals with lower levels of the latent trait. The instrument demonstrated excellent internal consistency, with an ordinal alpha of 0.94, indicating highly reliable measurement of the underlying construct. The Category Response Curves (CRCs) for all ROCF copy items are presented in Supplementary Material Figure S1.

Regarding the ROCF immediate recall, CFA also supported unidimensionality (; CFI = 0.978; TLI = 0.975; RMSEA = 0.057 [0.049, 0.065]; SRMR = 0.076). Discrimination parameters ranged from 0.708 (item 15: line) to 1.853 (item 3: diagonal cross). Boundary 1 parameters reflect the level of the latent trait at which there is a 50% chance of obtaining a score of 0.5. The CRCs for items 1 to 6 suggested a limited capacity to differentiate individuals at the highest levels of ability. In contrast, items 8 to 10 required a higher level of proficiency for accurate responses, indicating better performance at the upper end of the trait continuum. For this subtest, items 2 and 4 exhibited small shifts in location parameters between boys and girls, suggesting marginal group-level differences in response probability at certain ability levels. However, the extremely low ΔR2 values (0.002 and 0.011, respectively) indicate that the impact of these differences on responses is not significant. This confirms that ROCF immediate recall items can be reliably compared across sexes (see Table 2). The remaining items showed more balanced functioning. The TIF indicated that the instrument achieves its highest precision at the ability level , with a SEM of 0.315 (reliability of 0.90). These findings suggest that the test is best suited to discriminate among individuals with moderate levels of the latent trait, reaching this range. However, the average information across the ability ranges from −2 to 0 was relatively low (4.20), implying reduced precision for individuals with lower trait levels. Ordinal alpha coefficient of 0.90 reflects a good level of internal consistency for this subtest. Detailed CRCs for all items are available in Supplementary Material Figure S2.

For the SVTT, CFA supported the assumption of unidimensionality (χ2/df = 1.32; CFI = 0.959; TLI = 0.956; RMSEA = 0.022 [0.017, 0.027]; SRMR = 0.131). The TIF revealed that the instrument reaches its highest precision at the ability level , with a SEM of 0.076. These results indicate that the test is optimized to discriminate among individuals with low levels of the latent trait, showing a reliability of 0.99 in this range. However, the information decreases rapidly as ability increases, suggesting lower precision for individuals with average or high levels of the trait. The ordinal alpha of α = 0.90, indicating an adequate level of internal consistency.

The IRT analysis (see Table 3) revealed that item difficulty parameters ranged from −4.799 (item 3: Touch a yellow token) to 0.131 (item 25: Touch the black circle with the red square), indicating that the test is better suited for assessing individuals with lower levels of ability. Discrimination parameters varied widely, from 0.575 (item 25) to 14.664, with the highest values observed for items 5 (Touch a black one), 6 (Touch a green one), and 7 (Touch a white one), suggesting these items are particularly effective at distinguishing between participants across the latent trait continuum. The ICCs for all SVTT items are available in Supplementary Material Figure S3.

Table 3.

Shortened Version of the Token Test item parameters, based on item response theory.

In the SVTT subtest, several items showed slight group-related variations in difficulty and discrimination parameters between boys and girls. Nevertheless, the very low ΔR2 values indicate that these differences have minimal impact on the probability of a correct response and therefore do not represent meaningful bias. Consequently, the SVTT items can be considered appropriate for comparing cognitive abilities across sexes (see Table 2).

3.2. Demographic Variables’ Effect on Neuropsychological Performance

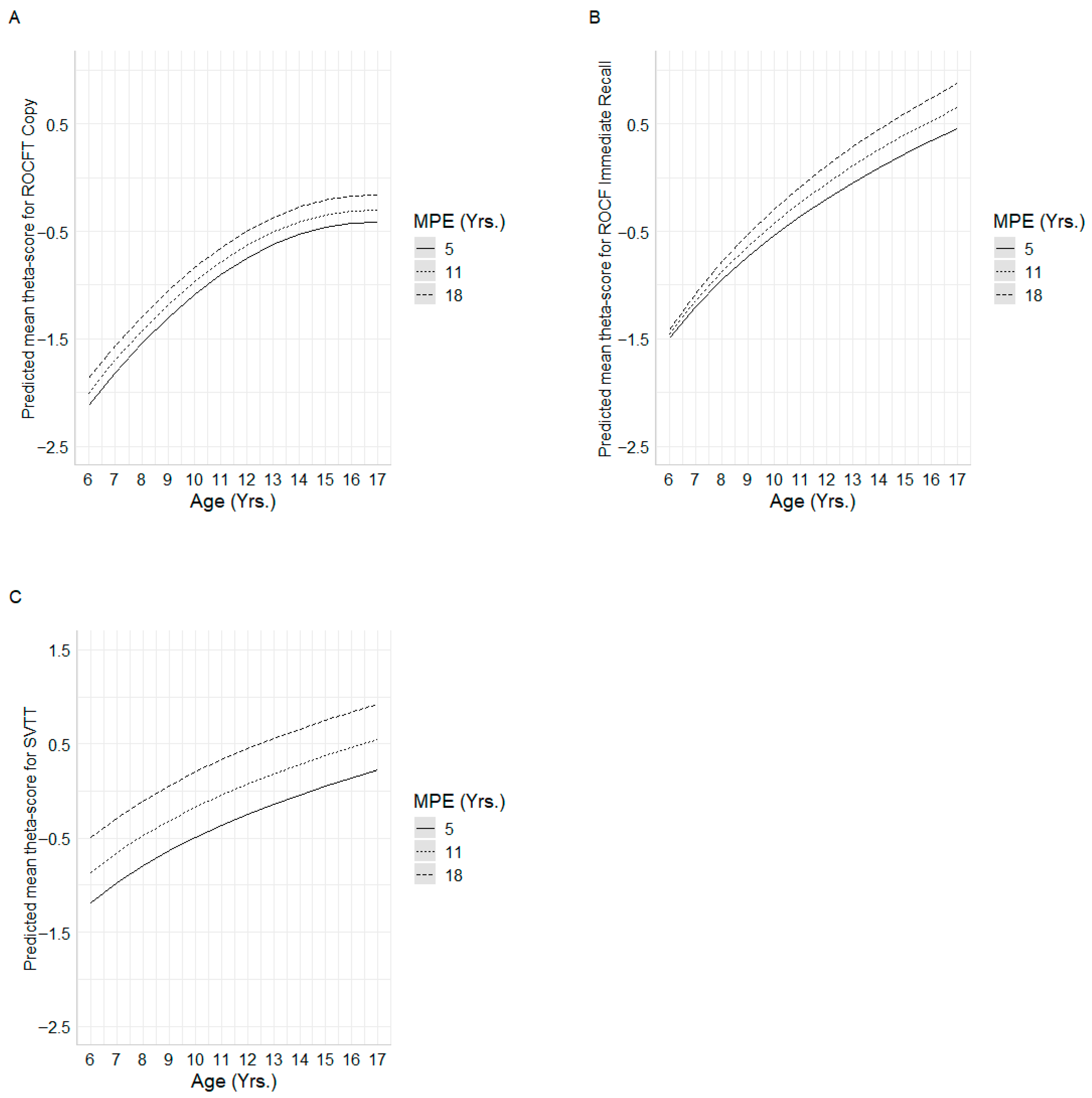

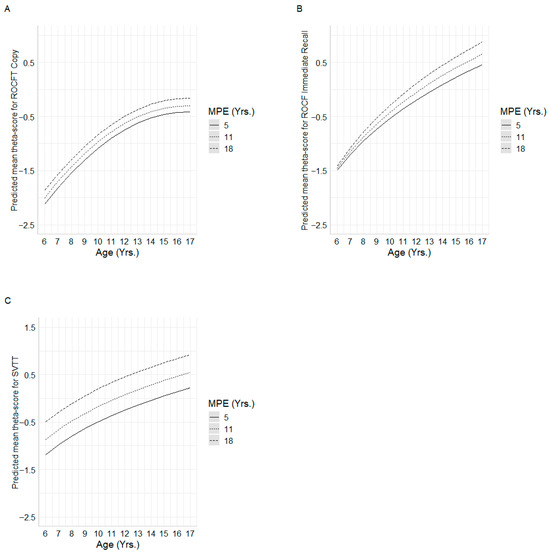

For the ROCF copy, four multiple linear regression models based on ability estimates were compared. Repeated cross-validation revealed that the quadratic age model selected by Mallows’ Cp delivered the best performance, with an average of root mean squared error (Mean RMSE) of 0.34 and the lowest MSE in 91% of iterations. In the external test set (20%), differences among models were minimal, with the quadratic Cp model again producing the lowest root mean squared error (RMSE = 0.576). Given its superior predictive accuracy and theoretical interpretability, the model included age, age2 and MPE as predictors, accounting for 49% of the variance in copy performance (R2 = 0.48). The quadratic age predictor showed accelerated gains at younger ages that stabilized in adolescence. In addition, MPE had a positive and significant effect, suggesting that higher parental education years were associated with better performance on the copy task, with this advantage consistently observed across the entire age range (see Table 4, Figure 1A).

Table 4.

Final multiple linear regression models.

Figure 1.

Mean predicted ability scores for each regression model. Subfigures (A–C) show the interactions between age and MPE for (A) = ROCF Copy, (B) = ROCF Immediate recall, and (C) = SVTT.

Model assumptions were adequately accomplished. No multicollinearity was observed (VIF ≤ 1.001), and no highly influential observations were identified (maximum Cook’s distance = 0.041). Homoscedasticity was confirmed (Levene’s test = 1.9867; p-value = 0.116), and residuals did not significantly deviate from normality (p-value = 0.732).

Among competing models predicting ROCF immediate-recall θ-scores, cross-validation identified the ln(age) × MPE interaction model as superior, yielding the lowest mean RMSE (0.630). Model-based trajectories indicated that higher MPE was associated with enhanced recall performance, especially in early childhood, with MPE-related differences converging modestly in late adolescence (see Figure 1B). This specification explained 41.4% of the variance in recall θ-scores (R2 = 0.414).

For the SVTT, four multiple linear regression models were compared. In the repeated 10-fold, performance was similar across models, with mean RMSE ranging from 0.495 to 0.500. The log-Cp and log-BIC models obtained the lowest RMSE (0.495 and 0.495, respectively), each ranking first in 34% of the iterations, followed closely by the quadratic Cp model (32%). In the external test set, RMSE values were likewise close: 0.715 for both log-Cp and log-BIC, 0.717 for the quadratic Cp model, and 0.720 for the quadratic BIC model. Given its parsimony and robust predictive performance, the final model retained ln(age) and MPE as predictors, jointly accounting for 29% of the variance in SVTT performance (adjusted R2 = 0.297). Both ln(Age) and MPE exerted significant effects: predicted trajectories demonstrate that higher parental education consistently confers superior SVTT performance across the entire age span, with the greatest MPE-related gains occurring in younger children (see Figure 1C).

Diagnostic tests confirmed that the final model met all assumptions: multicollinearity was negligible (VIF = 1.001), no observations exerted undue influence (maximum Cook’s distance = 0.032), homoscedasticity held (p-value = 0.260), and residuals did not depart significantly from normality (p-value = 0.180).

3.3. Normative Data Application

An online calculator was developed using the Shiny platform to facilitate probability estimation and score interpretation. Clinicians simply need to input the required individual information like namely, test-specific scores, age, MPE, and sex, into the calculator. The tool automatically converts raw item-by-item scores into ability estimates (theta scores), which are then adjusted for sociodemographic variables following the procedure described in Section Normative Data Procedure. As a result, the clinician receives the corresponding percentile for the patient. This tool is freely available to all users at https://elianafuentes.shinyapps.io/itr_col_app/ and will be accessible starting September 2025.

4. Discussion

The objectives of this study were to evaluate the validity, reliability, and psychometric properties of both the ROCF and the SVTT using IRT, and to construct normative data for Colombian children, considering the influence of age, educational level, and cultural background. The section is organized according to its aims.

4.1. Psychometric Properties

Results supported the unidimensional structure of the ROCF and SVTT, their high reliability and adequate internal consistency. For the SVTT, these findings are in line with previous applications of IRT, such as those reported for the Revised Token Test [42] and the German Token Test [43]. Notably, only Fierro Bósquez et al. [8], working with children from the Waranka Indigenous community, specifically examined the SVTT and also reported evidence of unidimensionality and good reliability.

Regarding the ROCF, in prior research applying the Rasch model, Prieto et al. [44] has provided partial support for its psychometric robustness. However, to our knowledge, the present study is the first to employ the TIF to evaluate the reliability of this instrument, offering a more detailed picture of measurement precision across the ability spectrum.

Regarding the IRT analysis, results for ROCF indicated that all items were able to effectively distinguish between individuals who have adequate visuoconstructional (Copy) and visual memory (Immediate Recall) abilities and those who do not. Additionally, both tasks are suitable for assessing performance across a wide range of ability levels, particularly in those with low levels of skills (e.g., very young children). However, for individuals with high levels of skills, the tasks may not present sufficient difficulty to adequately challenge them. This ceiling effect suggests that, in such cases, the test may be less sensitive to differences between people with higher levels of ability. This is consistent with the observation by [9] that the ROCF copy task is relatively easy. As a result, the measure may not fully capture performance variability among the most capable participants, thereby limiting its diagnostic precision within this subgroup.

Regarding SVTT, the IRT model revealed discrimination parameters within acceptable ranges, indicating an adequate ability to differentiate individuals along the latent trait (auditory language comprehension). However, several items exhibited signs of overfitting or misfit, and should therefore be interpreted with caution, as such deviations may affect the classification accuracy of examinees [45]. A ceiling effect was also observed, reducing the test’s sensitivity to discriminate among high-ability individuals. This pattern is consistent with the TIF results, which indicate that the instrument provides its highest measurement precision at lower ability levels. Similar findings were reported by [8,14], where no significant performance gains were observed in children aged 14 and 11, respectively, further supporting the presence of a ceiling effect. This does not indicate a flaw in the instrument, as it aligns with its original purpose of detecting comprehension deficits in individuals with aphasia. Accordingly, the items were calibrated so that individuals without language disorders would solve them with high probability, thereby reflecting the test’s clinical design.

In contrast, several items displayed optimal psychometric properties and contributed meaningful information across the full range of ability. These results suggest that, although the SVTT performs adequately, item-level refinements, such as removing or replacing overly easy items, could enhance measurement precision and reduce model distortion. Nonetheless, such refinements should be considered with caution, as modifications aimed at improving psychometric properties in healthy samples may not necessarily align with the test’s original clinical purpose.

The DIF analysis conducted revealed that none of the items in the ROCF-Copy, ROCF-Immediate recall, and SVTT subtests exhibited significant sex-related effects. These findings are consistent with previous research showing that, even when DIF is detected, the effects are often clinically irrelevant when ΔR2 values are small [46]. Therefore, instruments can be considered equitable and appropriate for comparing cognitive abilities between boys and girls.

4.2. Demographic Variables and Normative Data

Regarding the influence of sociodemographic variables on test performance, the most robust predictors were age2, ln(age) and, in some cases, MPE and its interaction with age. The use of different age transformations reflects the nonlinear nature of cognitive development: age2 captures the rapid gains observed in early childhood followed by a plateau in adolescence, whereas ln(age) better models the decelerating growth rate across the developmental span. These patterns highlight the complexity of modeling developmental trajectories, where cognitive growth is substantial at younger ages but tends to slow down as children approach adolescence [3].

Parental education emerged as a key variable, highlighting the important role of the family’s educational environment in shaping cognitive performance. Children whose parents had more years of formal schooling consistently outperformed their peers, in line with findings from previous Latin American pediatric samples [8,14,34]. Beyond a main effect, the interaction between age and MPE suggests that parental education may modulate the pace of cognitive growth; it means that in some cases accelerating developmental gains, while in others buffering against a slower trajectory. This interaction underscores the broader impact of the sociocultural environment on child cognition, particularly in domains central to language [47], visuoconstructive abilities [48], and visual memory [49]. This pattern is consistent with evidence that parental education often reflects broader socioeconomic advantages, including access to adequate nutrition and healthcare (Ross & Mirowsky, 2011) [50], higher-quality educational opportunities (e.g., access to material and educational resources), and cognitively enriched home environments (e.g., shared reading, elaborated conversations, exposure to complex vocabulary, offer opportunities for bilingual or second-language experiences [51]). Indeed, previous evidence shows that parental education may operate indirectly through the quality of the home environment and the development of children’s self-regulatory and attentional capacities [52] as well as through access to cognitively enriching school contexts and family learning practices that mediate socioeconomic effects on cognitive and language outcomes [53].

These findings emphasize that performance on the SVTT and ROCF should be interpreted in the context of parental education and its broader socioeconomic correlates discussed above, especially in Latin American settings where structural inequalities in access to quality education persist [54]. Without such contextualization, children from less advantaged educational environments may be at risk of misinterpretation, with typical variability being mistaken for cognitive impairment. This perspective aligns with calls to interpret neuropsychological results within their ecological and social context to avoid pathologizing expected variability.

Also, these sociodemographic influences highlight the need for precise methods of capturing individual differences. In this regard, a key contribution of this study is the establishment of normative data based on ability scores derived from IRT, rather than traditional total scores. While prior studies relied primarily on raw totals, the use of ability scores provides a more precise and psychometrically grounded representation of performance, offering finer discrimination across different ability levels [55]. This approach addresses some of the limitations of ceiling effects and enhances the interpretability of individual differences across the developmental spectrum.

Finally, the exhaustive selection of predictor variables combined with repeated cross-validation strengthens the robustness and generalizability of the models. By minimizing overfitting and ensuring stable parameter estimates, this methodology provides a more reliable framework for understanding the determinants of cognitive performance and for developing normative references that can inform both clinical practice and research [56].

The calculator developed in this study is intended as a supportive tool to facilitate the interpretation of test scores, particularly in settings where rapid or standardized evaluation is needed. Importantly, it is not designed to replace professional clinical judgment. Clinicians should integrate its outputs with their broader assessment of the child, considering contextual factors such as educational environment, language exposure, and socioeconomic background, as well as qualitative observations from testing.

4.3. Limitations, Strengths, and Clinical Implications

The lack of appropriate normative data has tangible implications. Clinicians serving underrepresented populations may not have reliable normative references to interpret performance accurately. In such cases, professionals might inadvertently pathologize normal variability or, conversely, fail to detect true cognitive difficulties. In the absence of appropriate data, such as those provided in this study, there is a risk of relying on norms that are not representative of the populations they serve, which may lead to diagnostic inaccuracies and inequitable clinical decisions.

This study has some limitations that should be considered. First, only variables traditionally identified in the literature as relevant were examined. For future research, it will be important to consider valuable external predictors or moderators of cognitive and behavioral performance such as socioeconomic status, screen exposure or nutrition. Socioeconomic status may be operationalized through parental education, household income, occupation, or composite indices. Screen exposure can be measured in terms of duration (hours per day), type (educational vs. recreational), and timing (e.g., before or after bedtime). Nutritional status can be assessed using indicators, such as Body Mass Index (BMI) normalized for age and sex (WHO BMI-for-age percentiles or z-scores), low height-for-age (WHO z-scores), low weight-for-height (WHO z-scores), or micronutrient deficiencies.

Although the empirical superiority of IRT-based scoring has been demonstrated in terms of lower Type I error, greater statistical power, and more accurate effect-size estimation, future external validation should be conducted to establish its predictive utility using independent criteria such as teacher or parent ratings, academic performance, and daily functioning measures. Moreover, metrics like Area Under Curve for discrimination, and Root Mean Square Error and Cross-validation systems for predictions should be implemented.

Also, even though the sample was demographically diverse and the IRT framework minimizes sample dependence in parameter estimation, it did not include children from rural or indigenous communities. This limitation constrains the generalizability of the findings to the broader Colombian population. Moreover, bilingual status was not systematically characterized, which may be relevant given that language exposure and use can influence cognitive and linguistic performance. However, bilingualism is relatively uncommon in the urban regions represented in this study and is more prevalent in indigenous populations that were not included in the sample.

Finally, while regression-based norms offer greater flexibility and precision, they assume that the relationship between predictors and test performance remains stable across the entire ability spectrum. In other words, the effect of a predictor on performance is assumed to be the same for both low-ability and high-ability individuals. But, from a clinical perspective, these limitations underscore the importance of critically examining the impact of cultural, linguistic, and socioeconomic diversity when interpreting test results. This reflective stance is particularly relevant in Latin American contexts, where variability in educational quality, language exposure, and access to resources can substantially shape cognitive performance. Such sources of heterogeneity, together with potential ceiling effects in higher-ability groups, may reduce the sensitivity of the models to detect meaningful differences. When test scores approach ceiling levels, clinicians are advised to incorporate complementary and more demanding measures, such as design fluency tasks, delayed recall, or complex language assessments. At the same time, the presence of ceiling effects provides valuable insight for future test development: refining or adding items that target higher-order cognitive abilities could enhance the discriminatory power of the instruments for high-performing children.

Despite this, this study offers several important strengths. First, the development of regression-based normative data, accompanied by an automated online calculator, significantly improves the clinical utility of the ROCF and SVTT. This tool allows professionals to derive demographically adjusted ability estimates (θ-scores) and percentiles with greater diagnostic precision, surpassing traditional norm table approaches.

Second, the application of IRT, still underutilized in Latin American pediatric contexts, offers substantial methodological advantages. IRT allows finer-grained estimation of individual ability, identifies items with poor psychometric performance (misfit, ceiling effects), and provides the foundation for future development of culturally adapted, shortened, or computerized versions of the tests [57].

Third, the study focuses on a large, diverse sample of Colombian children and adolescents, representing multiple cities and sociodemographic backgrounds. Given the scarcity of regionally specific data in Latin American populations, particularly among children, this sample adds substantial value and addresses gaps noted in international reviews [5]. Most IRT-based neuropsychological studies to date have focused on adult samples or clinical populations, making this study particularly valuable due to its focus on a pediatric population and its broader geographical scope.

The IRT framework allows the examination of group differences in item parameters (e.g., children with low vs. high SES). Future studies could incorporate SES, screen exposure, and nutrition into IRT models in several ways: (1) As grouping variables in DIF analyses, for example, using Multiple-Group IRT to determine whether items function differently across low- and high-SES groups; however, there is a risk of losing information while dichotomizing the variables. (2) As covariates in explanatory IRT models (regression within IRT), allowing SES, screen exposure, and nutrition to predict the latent trait (θ) and modeling children’s probability of correct or endorsed responses as a function of both their trait level and these covariates.

5. Conclusions

This study offers a robust psychometric and normative foundation for clinicians working with Colombian Spanish-speaking children and adolescents. The integration of IRT, regression-based normative modeling, and user-friendly digital tools represents a meaningful advancement in neuropsychological assessment, particularly in culturally and socioeconomically diverse settings. Together, these innovations provide health professionals with a highly accurate and accessible resource for evaluating visual–spatial construction, immediate visual memory and verbal comprehension functioning in pediatric populations.

Supplementary Materials

The following supporting information can be downloaded at https://www.mdpi.com/article/10.3390/healthcare13212683/s1. Figure S1 presents the category response curves for the ROCF-Copy task (Line 1 = response category 2; Line 2 = response category 1; Line 3 = response category 0.5; Line 4 = response category 0). Figure S2 shows the category response curves for the ROCF-Immediate Recall task (Line 1 = response category 2; Line 2 = response category 1; Line 3 = response category 0.5; Line 4 = response category 0). Figure S3 displays the item characteristic curves for the Shortened Version Token Test.

Author Contributions

D.R. is responsible for conceptualization, investigation, methodology, project administration, software, supervision, validation, visualization, and writing—original draft; L.O.-L. is responsible for conceptualization, validation, visualization, and writing—original draft; E.M.F.M. are responsible for formal analysis, software, visualization, writing—original draft; C.S.-D., O.T. and J.C.A.-L. are responsible for writing the original draft. All authors have read and agreed to the published version of the manuscript.

Funding

The authors received no financial support for the research, authorship, and/or publication of this article.

Institutional Review Board Statement

This study was approved by the Ethics Committee for Research in the Health Sciences Division of the Universidad del Norte Evaluation Record: No. 138 (25 February 2016), and Ethics Committee for Research of Universidad Pedagógica y Tecnológica de Colombia (27 October 2015), considering the declaration of Helsinki.

Informed Consent Statement

Informed consent for participation was obtained from all subjects involved in the study.

Data Availability Statement

The data and code can be provided upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Mitrushina, M.; Boone, K.B.; Razani, J.; D’Elia, L.F. Handbook of Normative Data for Neuropsychological Assessment, 2nd ed.; Mitrushina, M.N., Ed.; Oxford University Press: New York, NY, USA, 2005; ISBN 978-0-19-516930-0. [Google Scholar]

- Casaletto, K.B.; Heaton, R.K. Neuropsychological Assessment: Past and Future. J. Int. Neuropsychol. Soc. 2017, 23, 778–790. [Google Scholar] [CrossRef]

- Rivera, D.; Arango-Lasprilla, J.C. Methodology for the Development of Normative Data for Spanish-Speaking Pediatric Populations. NeuroRehabilitation 2017, 41, 581–592. [Google Scholar] [CrossRef] [PubMed]

- Iñesta, C.; Oltra-Cucarella, J.; Bonete-López, B.; Calderón-Rubio, E.; Sitges-Maciá, E. Regression-Based Normative Data for Independent and Cognitively Active Spanish Older Adults: Digit Span, Letters and Numbers, Trail Making Test and Symbol Digit Modalities Test. Int. J. Environ. Res. Public Health 2021, 18, 9958. [Google Scholar] [CrossRef]

- Kusi-Mensah, K.; Nuamah, N.D.; Wemakor, S.; Agorinya, J.; Seidu, R.; Martyn-Dickens, C.; Bateman, A. A Systematic Review of the Validity and Reliability of Assessment Tools for Executive Function and Adaptive Function Following Brain Pathology among Children and Adolescents in Low- and Middle-Income Countries. Neuropsychol. Rev. 2022, 32, 974–1016. [Google Scholar] [CrossRef] [PubMed]

- Rey, A. Test de Copia y de Reproducción de Memoria de Figuras Geométricas Complejas: Manual, 8th ed.; revisada y ampliada; TEA: Madrid, Spain, 2003; ISBN 978-84-7174-750-1. [Google Scholar]

- De Renzi, E.; Vignolo, L.A. The Token Test: A Sensitive Test to Detect Receptive Disturbances in Aphasics. Brain 1962, 85, 665–678. [Google Scholar] [CrossRef]

- Fierro Bósquez, M.J.; Fuentes Mendoza, E.M.; Olabarrieta-Landa, L.; Abiuso Lillo, T.; Orozco-Acosta, E.; Mascialino, G.; Arango-Lasprilla, J.C.; Rivera, D. Psychometric Properties and Normative Data Using Item Response Theory Approach for Three Neuropsychological Tests in Waranka Children Population. Healthcare 2025, 13, 423. [Google Scholar] [CrossRef]

- Zhang, X.; Lv, L.; Min, G.; Wang, Q.; Zhao, Y.; Li, Y. Overview of the Complex Figure Test and Its Clinical Application in Neuropsychiatric Disorders, Including Copying and Recall. Front. Neurol. 2021, 12, 1–10. [Google Scholar] [CrossRef] [PubMed]

- De Paula, J.J.; Bertola, L.; Ávila, R.T.; Moreira, L.; Coutinho, G.; De Moraes, E.N.; Bicalho, M.A.C.; Nicolato, R.; Diniz, B.S.; Malloy-Diniz, L.F. Clinical Applicability and Cutoff Values for an Unstructured Neuropsychological Assessment Protocol for Older Adults with Low Formal Education. PLoS ONE 2013, 8, e73167. [Google Scholar] [CrossRef]

- Julio-Ramos, T.; Mora-Castelletto, V.; Conejeros-Pavez, J.; Saez-Martínez, J.; Solinas-Ivys, P.; Donoso, P.; Soler-León, B.; Martínez-Ferreiro, S.; Quezada, C.; Méndez-Orellana, C. Validation of the Abbreviated Version of the Token Test in Latin American Spanish Stroke Patients. Int. J. Lang. Commun. Disord. 2024, 59, 2815–2827. [Google Scholar] [CrossRef]

- Panuccio, F.; Rossi, G.; Nuzzo, A.; Ruotolo, I.; Cianfriglia, G.; Simeon, R.; Sellitto, G.; Berardi, A.; Galeoto, G. Quality of Assessment Tools for Aphasia: A Systematic Review. Brain Sci. 2025, 15, 271. [Google Scholar] [CrossRef]

- De Renzi, E.; Faglioni, P. Normative Data and Screening Power of a Shortened Version of the Token Test. Cortex 1978, 14, 41–49. [Google Scholar] [CrossRef]

- Olabarrieta-Landa, L.; Rivera, D.; Rodríguez-Lorenzana, A.; Pohlenz Amador, S.; García-Guerrero, C.E.; Padilla-López, A.; Sánchez-SanSegundo, M.; Velázquez-Cardoso, J.; Díaz Marante, J.P.; Caparros-Gonzalez, R.A.; et al. Shortened Version of the Token Test: Normative data for Spanish-speaking pediatric population. NeuroRehabilitation 2017, 41, 649–659. [Google Scholar] [CrossRef]

- Ayanwale, M.A.; Chere-Masopha, J.; Morena, M.C. The Classical Test or Item Response Measurement Theory: The Status of the Framework at the Examination Council of Lesotho. Int. J. Learn. Teach. Educ. Res. 2022, 21, 384–406. [Google Scholar] [CrossRef]

- Reise, S.P.; Moore, T.M. Item Response Theory. In APA Handbook of Research Methods in Psychology: Foundations, Planning, Measures, and Psychometrics, 2nd ed.; American Psychological Association: Washington, DC, USA, 2023; Volume 1. [Google Scholar]

- Thomas, M.L. Advances in Applications of Item Response Theory to Clinical Assessment. Psychol. Assess. 2019, 31, 1442–1455. [Google Scholar] [CrossRef]

- Wang, M.; Reeve, B.B. Evaluations of the Sum-Score-Based and Item Response Theory-Based Tests of Group Mean Differences under Various Simulation Conditions. Stat. Methods Med. Res. 2021, 30, 2604–2618. [Google Scholar] [CrossRef] [PubMed]

- Sébille, V.; Hardouin, J.-B.; Le Néel, T.; Kubis, G.; Boyer, F.; Guillemin, F.; Falissard, B. Methodological Issues Regarding Power of Classical Test Theory (CTT) and Item Response Theory (IRT)-Based Approaches for the Comparison of Patient-Reported Outcomes in Two Groups of Patients—A Simulation Study. BMC Med. Res. Methodol. 2010, 10, 24. [Google Scholar] [CrossRef] [PubMed]

- Jabrayilov, R.; Emons, W.H.M.; Sijtsma, K. Comparison of Classical Test Theory and Item Response Theory in Individual Change Assessment. Appl. Psychol. Meas. 2016, 40, 559–572. [Google Scholar] [CrossRef]

- Li, D.; Liu, X.; Yu, J.; Zhang, Y.; Hu, N.; Lu, Y.; Sun, F.; Zhang, M.; Ma, X.; Wang, F. Item Response Theory for the Color-Picture Version of Boston Naming Test in a Chinese Sample with Neurodegenerative Diseases. J. Alzheimer’s Dis. 2025, 103, 775–784. [Google Scholar] [CrossRef]

- Martins, P.S.R.; Barbosa-Pereira, D.; Valgas-Costa, M.; Mansur-Alves, M. Item Analysis of the Child Neuropsychological Assessment Test (TENI): Classical Test Theory and Item Response Theory. Appl. Neuropsychol. Child 2022, 11, 339–349. [Google Scholar] [CrossRef]

- Kusi-Mensah, K.; Nuamah, N.D.; Wemakor, S.; Agorinya, J.; Seidu, R.; Martyn-Dickens, C.; Bateman, A. Assessment Tools for Executive Function and Adaptive Function Following Brain Pathology Among Children in Developing Country Contexts: A Scoping Review of Current Tools. Neuropsychol. Rev. 2022, 32, 459–482. [Google Scholar] [CrossRef]

- Van Noort-van Der Spek, I.L.; Stipdonk, L.W.; Goedegebure, A.; Dudink, J.; Willemsen, S.; Reiss, I.K.M.; Franken, M.-C.J.P. Are Multidisciplinary Neurodevelopmental Profiles of Children Born Very Preterm at Age 2 Relevant to Their Long-Term Development? A Preliminary Study. Child Neuropsychol. 2022, 28, 437–457. [Google Scholar] [CrossRef]

- Brown, L.; Sherbenou, R.J.; Johnsen, S.K. Toni 2 Test de Inteligencia no Verbal: Apreciación de la Habilidad Cognitiva Sin Influencia del Lenguaje: Manual, Tercera Edición; TEA Ediciones: Madrid, Spain, 2009; ISBN 978-84-7174-980-2. [Google Scholar]

- Kovacs, M. Children’s Depression Inventory (CDI) Manual; Multi-Health Systems: Toronto, ON, Canada, 1992. [Google Scholar]

- Bush, K. The AUDIT Alcohol Consumption Questions (AUDIT-C): An Effective Brief Screening Test for Problem Drinking. Arch. Intern. Med. 1998, 158, 1789. [Google Scholar] [CrossRef] [PubMed]

- Kline, R.B. Principles and Practice of Structural Equation Modeling. In Methodology in the Social Sciences, 3rd ed.; Guilford Press: New York, NY, USA, 2011; ISBN 978-1-60623-877-6. [Google Scholar]

- Schreiber, J.B.; Nora, A.; Stage, F.K.; Barlow, E.A.; King, J. Reporting Structural Equation Modeling and Confirmatory Factor Analysis Results: A Review. J. Educ. Res. 2006, 99, 323–338. [Google Scholar] [CrossRef]

- Mair, P. Modern Psychometrics with R. In Use R! Springer International Publishing: Cham, Switzerland, 2018; ISBN 978-3-319-93175-3. [Google Scholar]

- Sethar, W.A.; Pitafi, A.; Bhutto, A.; Nassani, A.A.; Haffar, M.; Kamran, S.M. Application of Item Response Theory (IRT)-Graded Response Model (GRM) to Entrepreneurial Ecosystem Scale. Sustainability 2022, 14, 5532. [Google Scholar] [CrossRef]

- Abad, F.J.; Olea, J.; Ponsoda, V. García Medición en Ciencias Sociales y de la Salud; Síntesis: Madrid, Spain, 2014; ISBN 978-84-9958-614-4. [Google Scholar]

- Choi, S.W.; Gibbons, L.E.; Crane, P.K. Lordif: An R Package for Detecting Differential Item Functioning Using Iterative Hybrid Ordinal Logistic Regression/Item Response Theory and Monte Carlo Simulations. J. Stat. Soft. 2011, 39, 1–30. [Google Scholar] [CrossRef]

- Arango-Lasprilla, J.C.; Rivera, D.; Ertl, M.M.; Muñoz Mancilla, J.M.; García-Guerrero, C.E.; Rodriguez-Irizarry, W.; Aguayo Arelis, A.; Rodríguez-Agudelo, Y.; Barrios Nevado, M.D.; Vélez-Coto, M.; et al. Rey–Osterrieth Complex Figure—Copy and Immediate Recall (3 Minutes): Normative Data for Spanish-Speaking Pediatric Populations. NeuroRehabilitation 2017, 41, 593–603. [Google Scholar] [CrossRef] [PubMed]

- Van Der Elst, W.; Hurks, P.; Wassenberg, R.; Meijs, C.; Jolles, J. Animal Verbal Fluency and Design Fluency in School-Aged Children: Effects of Age, Sex, and Mean Level of Parental Education, and Regression-Based Normative Data. J. Clin. Exp. Neuropsychol. 2011, 33, 1005–1015. [Google Scholar] [CrossRef] [PubMed]

- James, G.; Witten, D.; Hastie, T.; Tibshirani, R. An Introduction to Statistical Learning; Springer Texts in Statistics; Springer New York: New York, NY, USA, 2013; Volume 103, ISBN 978-1-4614-7137-0. [Google Scholar]

- Cook, R.D. Detection of Influential Observation in Linear Regression. Technometrics 1977, 19, 15. [Google Scholar] [CrossRef]

- Rosseel, Y. Lavaan: An R Package for Structural Equation Modeling. J. Stat. Soft. 2012, 48, 1–36. [Google Scholar] [CrossRef]

- Chalmers, R.P. Mirt: A Multidimensional Item Response Theory Package for the R Environment. J. Stat. Soft. 2012, 48, 1–29. [Google Scholar] [CrossRef]

- Rizopoulos, D. Ltm: An R Package for Latent Variable Modeling and Item Response Theory Analyses. J. Stat. Soft. 2006, 17, 1–25. [Google Scholar] [CrossRef]

- Rivera, D.; Forte, A.; Olabarrieta-Landa, L.; Perrin, P.B.; Arango-Lasprilla, J.C. Methodology for the Generation of Normative Data for the U.S. Adult Spanish-Speaking Population: A Bayesian Approach. NeuroRehabilitation 2024, 55, 155–167. [Google Scholar] [CrossRef] [PubMed]

- Quintana, M.; Salinas González, I.; Gallardo, G.; McNeil, M.R. An Item Response Theory Analysis of the Revised Token Test in Normally Developing Native Spanish Speaking Children. Anu. Psicol. UB J. Psychol. 2015, 45, 147–160. [Google Scholar]

- Willmes, K. A New Look at the Token Test Using Probabilistic Test Models. Neuropsychologia 1981, 19, 631–646. [Google Scholar] [CrossRef] [PubMed]

- Prieto, G.; Delgado, A.R.; Perea, M.V.; Ladera, V. Scoring Neuropsychological Tests Using the Rasch Model: An Illustrative Example with the Rey-Osterrieth Complex Figure. Clin. Neuropsychol. 2010, 24, 45–56. [Google Scholar] [CrossRef] [PubMed]

- Zhao, Y.; Hambleton, R.K. Practical Consequences of Item Response Theory Model Misfit in the Context of Test Equating with Mixed-Format Test Data. Front. Psychol. 2017, 8, 484. [Google Scholar] [CrossRef]

- Scott, N.W.; Fayers, P.M.; Aaronson, N.K.; Bottomley, A.; De Graeff, A.; Groenvold, M.; Gundy, C.; Koller, M.; Petersen, M.A.; Sprangers, M.A. Differential Item Functioning (DIF) Analyses of Health-Related Quality of Life Instruments Using Logistic Regression. Health Qual Life Outcomes 2010, 8, 81. [Google Scholar] [CrossRef]

- Sultana, N.; Purdy, S.C. Supporting Children’s Communication Development through Parental Education. In Education and Human Development; Cristina Richaud, M., Vargas Rubilar, J., Noemí Lemos, V., Eds.; IntechOpen: London, UK, 2024; Volume 32, ISBN 978-1-83769-425-9. [Google Scholar]

- Zappullo, I.; Senese, V.P.; Trojano, L.; Cecere, R.; Conson, M. Specific and Shared Cognitive Predictors of Drawing and Block Building in Typically Developing Children. Front. Hum. Neurosci. 2024, 18, 1436362. [Google Scholar] [CrossRef]

- Li, Y.; Geary, D.C. Children’s Visuospatial Memory Predicts Mathematics Achievement through Early Adolescence. PLoS ONE 2017, 12, e0172046. [Google Scholar] [CrossRef]

- Ross, C.E.; Mirowsky, J. The interaction of personal and parental education on health. Soc. Sci. Med. 2011, 72, 591–599. [Google Scholar] [CrossRef]

- Tamayo Martinez, N.; Xerxa, Y.; Law, J.; Serdarevic, F.; Jansen, P.W.; Tiemeier, H. Double Advantage of Parental Education for Child Educational Achievement: The Role of Parenting and Child Intelligence. Eur. J. Public Health 2022, 32, 690–695. [Google Scholar] [CrossRef] [PubMed]

- Merz, E.C.; Landry, S.H.; Williams, J.M.; Barnes, M.A.; Eisenberg, N.; Spinrad, T.L.; Valiente, C.; Assel, M.; Taylor, H.B.; Lonigan, C.J.; et al. Associations among Parental Education, Home Environment Quality, Effortful Control, and Preacademic Knowledge. J. Appl. Dev. Psychol. 2014, 35, 304–315. [Google Scholar] [CrossRef] [PubMed]

- Rakesh, D.; Lee, P.A.; Gaikwad, A.; McLaughlin, K.A. Annual Research Review: Associations of Socioeconomic Status with Cognitive Function, Language Ability, and Academic Achievement in Youth: A Systematic Review of Mechanisms and Protective Factors. J. Child Psychol. Psychiatry 2025, 66, 417–439. [Google Scholar] [CrossRef] [PubMed]

- Borchers, J.; da Cunha, M.S. School Performance and Inequality of Opportunities in Latin America. Stud. Educ. Eval. 2025, 86, 101497. [Google Scholar] [CrossRef]

- Van Der Elst, W.; Reed, H.; Jolles, J. The Logical Grammatical Structures Test: Psychometric Properties and Normative Data in Dutch-Speaking Children and Adolescents. Clin. Neuropsychol. 2013, 27, 396–409. [Google Scholar] [CrossRef]

- Little, M.A.; Varoquaux, G.; Saeb, S.; Lonini, L.; Jayaraman, A.; Mohr, D.C.; Kording, K.P. Using and Understanding Cross-Validation Strategies. Perspectives on Saeb et Al. GigaScience 2017, 6, gix020. [Google Scholar] [CrossRef]

- Embretson, S.E.; Reise, S.P. Item Response Theory; Psychology Press: London, UK, 2013; ISBN 978-1-4106-0526-9. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).