Artificial Intelligence and Digital Technologies Against Health Misinformation: A Scoping Review of Public Health Responses

Abstract

1. Introduction

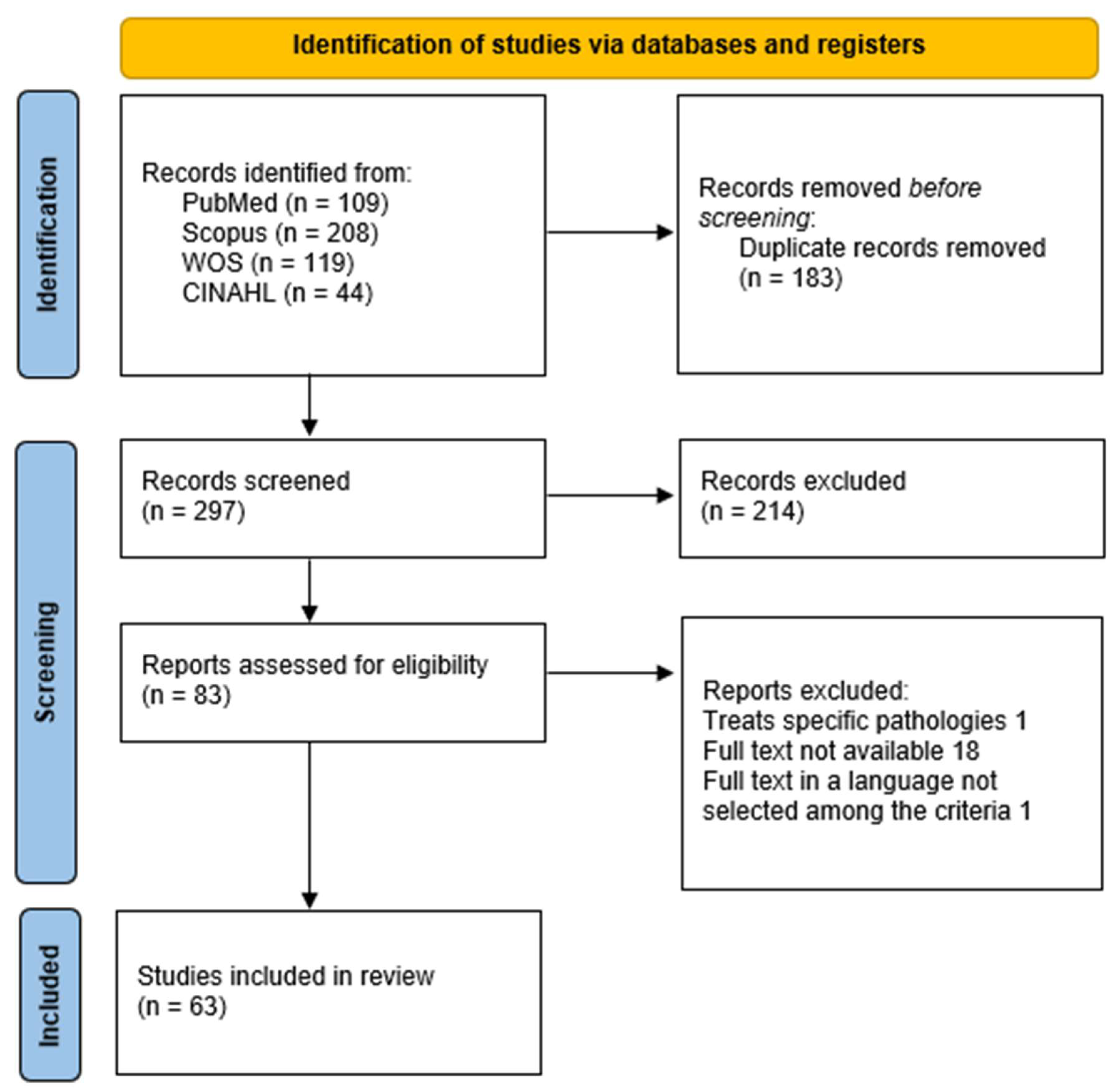

2. Materials and Methods

2.1. Study Design and Setting

2.2. Participants and Eligibility Criteria

- Population: general population, patients, healthcare workers, or policymakers exposed to health-related misinformation.

- Concept: application of AI/ML, social media analytics, or digital communication strategies for monitoring, detection, prevention, education, or mitigation of misinformation.

- Context: public health and health communication at global, regional, or local levels.
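The Population, Concept and Context criteria above can be encoded as a structured checklist, so that every candidate record is tested against all three elements in the same way. The sketch below is illustrative only: the `Record` fields, the keyword sets and the `meets_pcc` helper are hypothetical paraphrases of the criteria, not the authors' screening tool. In an actual review, eligibility is judged by reviewers and is not decided by keyword matching.

```python
from dataclasses import dataclass

# Hypothetical keyword sets paraphrasing the PCC criteria listed above.
POPULATIONS = {"general population", "patients", "healthcare workers", "policymakers"}
CONCEPTS = {"ai", "machine learning", "social media analytics", "digital communication"}
AIMS = {"monitoring", "detection", "prevention", "education", "mitigation"}
CONTEXT_LEVELS = {"global", "regional", "local"}


@dataclass
class Record:
    """Hypothetical charted attributes of one candidate study."""
    title: str
    population: set[str]
    technologies: set[str]
    aims: set[str]
    context_level: str
    about_health_misinformation: bool


def meets_pcc(rec: Record) -> bool:
    """Return True only if Population, Concept and Context are all satisfied."""
    population_ok = rec.about_health_misinformation and bool(rec.population & POPULATIONS)
    concept_ok = bool(rec.technologies & CONCEPTS) and bool(rec.aims & AIMS)
    context_ok = rec.context_level in CONTEXT_LEVELS
    return population_ok and concept_ok and context_ok


# Usage with an invented record (not a study from the review).
example = Record(
    title="ML detection of vaccine misinformation",
    population={"general population"},
    technologies={"machine learning"},
    aims={"detection"},
    context_level="global",
    about_health_misinformation=True,
)
print(meets_pcc(example))
```

Because the three checks are combined with `and`, a study that uses AI but addresses no eligible population, or that has no public health context, is excluded. This mirrors the requirement that all three PCC elements must hold.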

2.3. Information Sources and Search Strategy

2.4. Selection Process

- Title/abstract screening against eligibility criteria.

- Full-text assessment of potentially eligible studies.
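The two screening stages above can be expressed as a small pipeline that also records how many records drop out at each step. These are the counts a PRISMA-style flow diagram reports. This is a minimal sketch under stated assumptions. The deduplication step (matching on normalized titles) and the two predicate functions are hypothetical stand-ins for reference-manager deduplication and reviewer judgement. They are not the procedure used in this review.

```python
from typing import Callable, Iterable


def normalize(title: str) -> str:
    """Lower-case a title and collapse internal whitespace so near-duplicates match."""
    return " ".join(title.lower().split())


def select_studies(
    records: Iterable[dict],
    screen_title_abstract: Callable[[dict], bool],
    assess_full_text: Callable[[dict], bool],
) -> tuple[list[dict], dict[str, int]]:
    """Deduplicate, then apply the two screening stages, counting exclusions at each."""
    records = list(records)
    seen: set[str] = set()
    unique: list[dict] = []
    for rec in records:
        key = normalize(rec["title"])
        if key not in seen:  # keep only the first copy of each title
            seen.add(key)
            unique.append(rec)
    # Stage 1: title/abstract screening against the eligibility criteria.
    passed_screening = [r for r in unique if screen_title_abstract(r)]
    # Stage 2: full-text assessment of potentially eligible studies.
    included = [r for r in passed_screening if assess_full_text(r)]
    counts = {
        "identified": len(records),
        "after_deduplication": len(unique),
        "excluded_title_abstract": len(unique) - len(passed_screening),
        "excluded_full_text": len(passed_screening) - len(included),
        "included": len(included),
    }
    return included, counts


# Usage with invented records; the keyword lambdas stand in for reviewer decisions.
records = [
    {"title": "AI for infodemic monitoring", "abstract": "misinformation surveillance",
     "full_text": "eligible outcomes reported"},
    {"title": "AI for  Infodemic Monitoring", "abstract": "misinformation surveillance",
     "full_text": "eligible outcomes reported"},
    {"title": "Deep learning on radiographs", "abstract": "covid imaging", "full_text": ""},
    {"title": "Chatbot against fake news", "abstract": "misinformation chatbot",
     "full_text": "no outcome data"},
]
included, counts = select_studies(
    records,
    screen_title_abstract=lambda r: "misinformation" in r["abstract"],
    assess_full_text=lambda r: "eligible" in r["full_text"],
)
print(counts)
```

Keeping the counts next to the included list makes every exclusion traceable to a stage. Transparent reporting of study selection depends on that traceability.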

2.5. Data Extraction and Charting

2.6. Outcomes of Interest

- Applications: operational uses of AI and digital tools in infodemic management, including detection, classification, and surveillance of health misinformation.

- Responsiveness: capacity of interventions to support timely and adaptive public health responses, such as early warning systems, crisis communication, and real-time monitoring.

- Ethical concerns: issues related to algorithmic bias, transparency, accountability, data protection, and the risk of exacerbating misinformation or inequities.

- Equity and accessibility: attention to vulnerable populations, digital divides, multilingual contexts, inclusivity of tools, and accessibility features.

- Policies and strategic frameworks: implications for governance, regulatory initiatives, institutional guidelines, and integration of digital tools into public health systems.
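Charting each included study against these outcome domains produces a study-by-domain mapping. Descriptive frequencies can then be tallied from that mapping. The sketch below assumes that a single study may map to several domains, so percentages need not sum to 100. It uses the domain labels of the Results subsections. The study identifiers and assignments are invented placeholders and are not data from the review.

```python
from collections import Counter

# Domain labels as used in the Results subsections (3.2.1 to 3.2.5).
DOMAINS = (
    "Applications",
    "Responsiveness",
    "Ethical Concerns",
    "Equity and Accessibility",
    "Policies/Strategic Frameworks",
)


def tally_domains(charted: dict[str, set[str]]) -> dict[str, tuple[int, float]]:
    """Return (number of studies, % of charted studies) for each domain."""
    unknown = {d for ds in charted.values() for d in ds} - set(DOMAINS)
    if unknown:  # reject labels outside the charting form
        raise ValueError(f"Unknown domain label(s): {sorted(unknown)}")
    counts = Counter(d for ds in charted.values() for d in ds)
    n = len(charted)
    return {d: (counts[d], round(100 * counts[d] / n, 1) if n else 0.0) for d in DOMAINS}


# Hypothetical charting of three placeholder studies.
charted = {
    "study_A": {"Applications", "Responsiveness"},
    "study_B": {"Applications", "Ethical Concerns"},
    "study_C": {"Equity and Accessibility"},
}
result = tally_domains(charted)
for domain, (k, pct) in result.items():
    print(f"{domain}: {k} ({pct}%)")
```

Validating labels against a fixed tuple catches typos in the charting spreadsheet before they silently create an extra category.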

2.7. Data Management and Synthesis

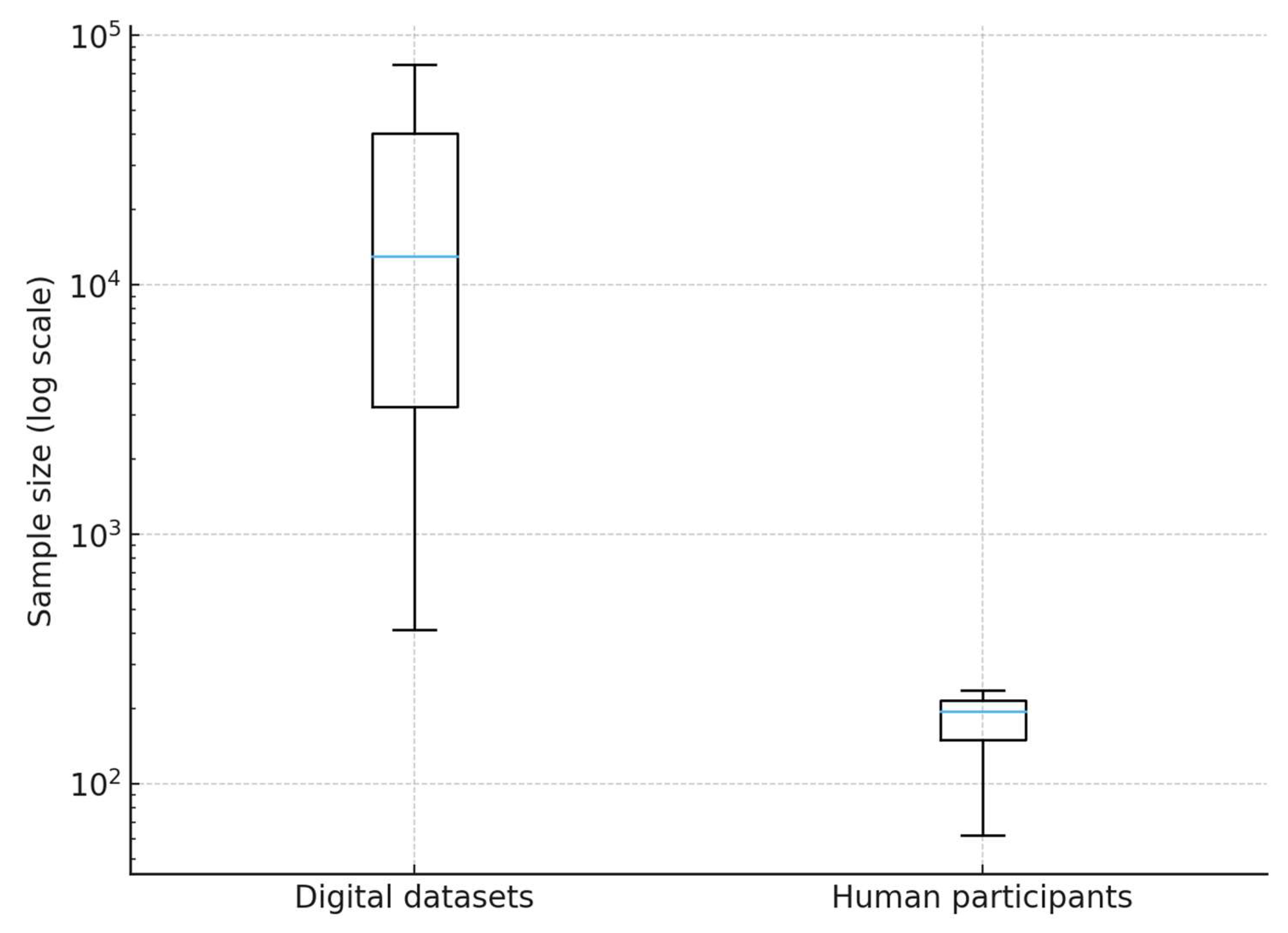

2.8. Statistical Analysis

3. Results

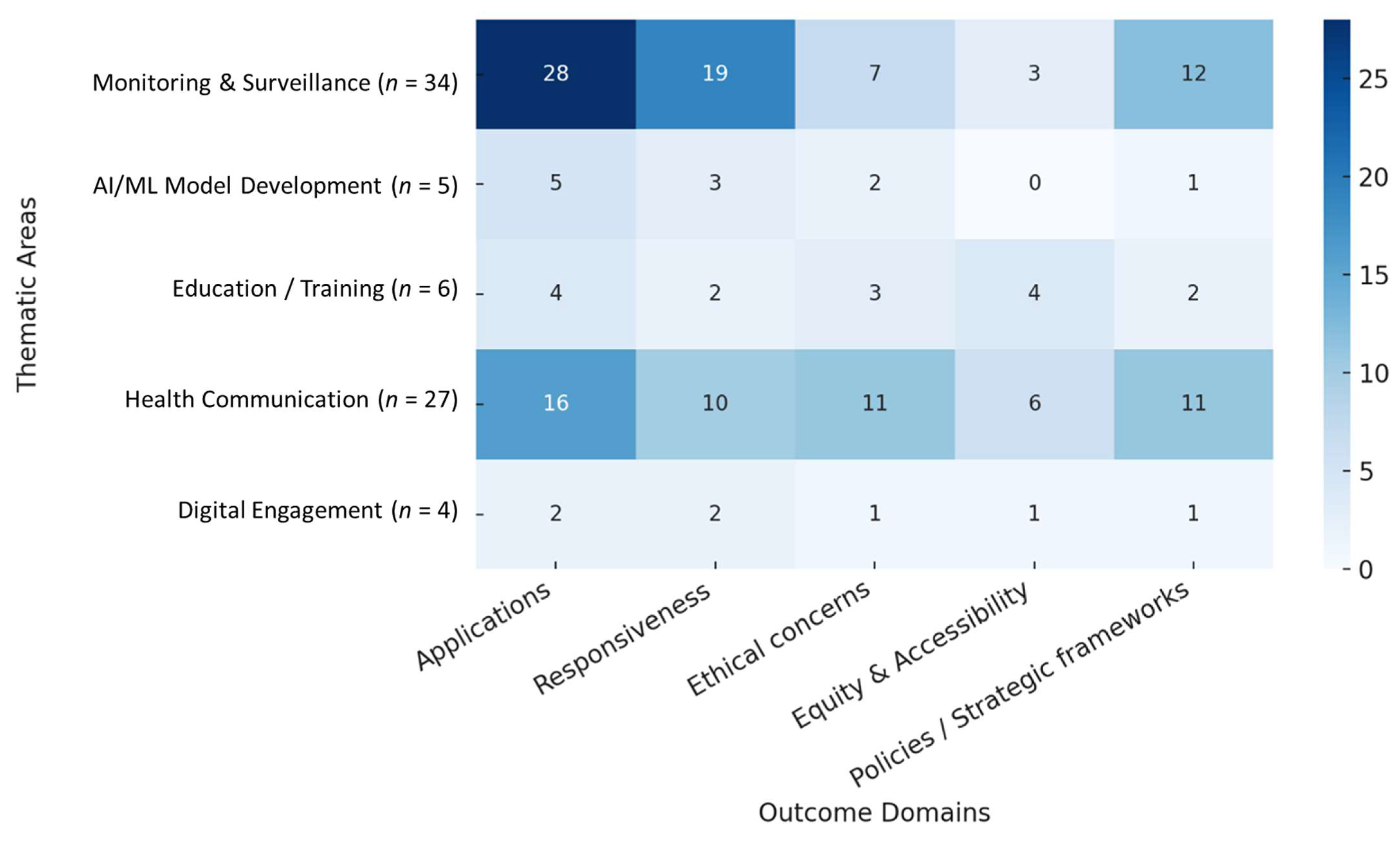

3.1. Main Thematic Areas

3.1.1. Monitoring and Surveillance

3.1.2. AI/ML Model Development

3.1.3. Education and Training

3.1.4. Health Communication

3.1.5. Digital Engagement

3.2. Cross-Cutting Domains

3.2.1. Applications

3.2.2. Responsiveness

3.2.3. Ethical Concerns

3.2.4. Equity and Accessibility

3.2.5. Policies/Strategic Frameworks

3.3. Outcomes

3.4. Synthesis of Findings

4. Discussion

- Informational epidemiology: digital signals can anticipate epidemic trends and behaviors, serving as proxies for public health preparedness.

- Technology and literacy: AI models, when integrated with educational and communication strategies, can enhance health literacy and resilience against misinformation.

- Governance and trust: the legitimacy and adoption of digital tools depend on transparency, independent audits, and the inclusion of vulnerable groups.

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- World Health Organization. Managing the COVID-19 Infodemic: Promoting Healthy Behaviours and Mitigating the Harm from Misinformation and Disinformation; WHO: Geneva, Switzerland, 2020; Available online: https://www.who.int/news/item/23-09-2020-managing-the-covid-19-infodemic-promoting-healthy-behaviours-and-mitigating-the-harm-from-misinformation-and-disinformation (accessed on 6 September 2025).

- Islam, S.; Sarkar, T.; Khan, S.H.; Kamal, A.-H.M.; Hasan, S.M.M.; Kabir, A.; Yeasmin, D.; Islam, M.A.; Chowdhury, K.I.A.; Anwar, K.S.; et al. COVID-19–Related Infodemic and Its Impact on Public Health: A Global Social Media Analysis. Am. J. Trop. Med. Hyg. 2020, 103, 1621–1629.

- Roozenbeek, J.; Schneider, C.R.; Dryhurst, S.; Kerr, J.; Freeman, A.L.J.; Recchia, G.; van der Bles, A.M.; van der Linden, S. Susceptibility to misinformation about COVID-19 around the world. R. Soc. Open Sci. 2020, 7, 201199.

- Su, Z.; McDonnell, D.; Wen, J.; Kozak, M.; Abbas, J.; Šegalo, S.; Li, X.; Ahmad, J.; Cheshmehzangi, A.; Cai, Y.; et al. Mental health consequences of COVID-19 media coverage: The need for effective crisis communication practices. Glob. Health 2021, 17, 1–8.

- Eysenbach, G. How to Fight an Infodemic: The Four Pillars of Infodemic Management. J. Med. Internet Res. 2020, 22, e21820.

- Cinelli, M.; Quattrociocchi, W.; Galeazzi, A.; Valensise, C.M.; Brugnoli, E.; Schmidt, A.L.; Zola, P.; Zollo, F.; Scala, A. The COVID-19 social media infodemic. Sci. Rep. 2020, 10, 16598.

- Gallotti, R.; Valle, F.; Castaldo, N.; Sacco, P.; De Domenico, M. Assessing the risks of ‘infodemics’ in response to COVID-19 epidemics. Nat. Hum. Behav. 2020, 4, 1285–1293.

- Sharma, K.; Seo, S.; Meng, C.; Rambhatla, S.; Dua, A.; Liu, Y. Coronavirus on Social Media: Analyzing Misinformation in Twitter Conversations [preprint]. arXiv 2020.

- Zhou, C.; Xiu, H.; Wang, Y.; Yu, X. Characterizing the dissemination of misinformation on social media in health emergencies: An empirical study based on COVID-19. Inf. Process. Manag. 2021, 58, 102554.

- Cinelli, M.; Morales, G.D.F.; Galeazzi, A.; Quattrociocchi, W.; Starnini, M. The echo chamber effect on social media. Proc. Natl. Acad. Sci. USA 2021, 118, e2023301118.

- Broniatowski, D.A.; Kerchner, D.; Farooq, F.; Huang, X.; Jamison, A.M.; Dredze, M.; Quinn, S.C. The COVID-19 Social Media Infodemic Reflects Uncertainty and State-Sponsored Propaganda. arXiv 2020.

- Morley, J.; Cowls, J.; Taddeo, M.; Floridi, L. Ethical guidelines for COVID-19 tracing apps. Nature 2020, 582, 29–31.

- Leslie, D. Tackling COVID-19 through responsible AI innovation: Five steps in the right direction. arXiv 2020, arXiv:2008.06755.

- Aromataris, E.; Munn, Z. (Eds.) JBI Reviewer’s Manual: Methodology for JBI Scoping Reviews; JBI: Adelaide, Australia, 2020; Available online: https://jbi-global-wiki.refined.site/download/attachments/355863557/JBI_Reviewers_Manual_2020June.pdf?download=true (accessed on 6 September 2025).

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

- Wang, H.; Fu, T.; Du, Y.; Gao, W.; Huang, K.; Liu, Z.; Chandak, P.; Liu, S.; Van Katwyk, P.; Deac, A.; et al. Scientific discovery in the age of artificial intelligence. Nature 2023, 620, 47–60, Erratum in Nature 2023, 621, E33.

- Roy Rosenzweig Center for History and New Media. Zotero; George Mason University: Fairfax, VA, USA, 2006; Available online: http://www.zotero.org (accessed on 29 August 2025).

- Chen, S.; Zhou, L.; Song, Y.; Xu, Q.; Wang, P.; Wang, K.; Ge, Y.; Janies, D. A Novel Machine Learning Framework for Comparison of Viral COVID-19–Related Sina Weibo and Twitter Posts: Workflow Development and Content Analysis. J. Med. Internet Res. 2021, 23, e24889.

- Chen, Y.P.; Chen, Y.Y.; Yang, K.C.; Lai, F.; Huang, C.H.; Chen, Y.N.; Tu, Y.C. Misinformation About COVID-19 Vaccines on Social Media: Rapid Review. J. Med. Internet Res. 2022, 24, e37367.

- Abonizio, H.Q.; Barbon, A.P.A.d.C.; Rodrigues, R.; Santos, M.; Martínez-Vizcaíno, V.; Mesas, A.E.; Barbon, S., Jr. How people interact with a chatbot against disinformation and fake news in COVID-19 in Brazil: The CoronaAI case. Int. J. Med. Inform. 2023, 177, 105134.

- Algarni, M.; Ben Ismail, M.M. Applications of Artificial Intelligence for Information Diffusion Prediction: Regression-based Key Features Models. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 1191–1201.

- Boatman, D.; Starkey, A.; Acciavatti, L.; Jarrett, Z.; Allen, A.; Kennedy-Rea, S. Using Social Listening for Digital Public Health Surveillance of Human Papillomavirus Vaccine Misinformation Online: Exploratory Study. JMIR Infodemiology 2024, 4, e54000.

- Du, J.; Preston, S.; Sun, H.; Shegog, R.; Cunningham, R.; Boom, J.; Savas, L.; Amith, M.; Tao, C. Using Machine Learning–Based Approaches for the Detection and Classification of Human Papillomavirus Vaccine Misinformation: Infodemiology Study of Reddit Discussions. J. Med. Internet Res. 2021, 23, e26478.

- Edinger, A.; Valdez, D.; Walsh-Buhi, E.; Trueblood, J.S.; Lorenzo-Luaces, L.; Rutter, L.A.; Bollen, J. Misinformation and Public Health Messaging in the Early Stages of the Mpox Outbreak: Mapping the Twitter Narrative With Deep Learning. J. Med. Internet Res. 2023, 25, e43841.

- Fernandez-Luque, L.; Imran, M. Predicting post-stroke activities of daily living through a machine learning-based approach on initiating rehabilitation. Int. J. Med. Inform. 2018, 111, 159–164.

- Gao, S.; Wang, Y. Can User Characteristics Predict Norm Adherence on Social Media? Exploring User-Centric Misinformation Interventions. In Proceedings of the 22nd ISCRAM Conference, Halifax, Canada, 18–21 May 2025; ISCRAM: Halifax, Canada, 2025; p. 21.

- Wang, Y.; O’Connor, K.; Flores, I.; Berdahl, C.T.; Urbanowicz, R.J.; Stevens, R.; Bauermeister, J.A.; Gonzalez-Hernandez, G. Mpox Discourse on Twitter by Sexual Minority Men and Gender-Diverse Individuals: Infodemiological Study Using BERTopic. JMIR Public Health Surveill. 2024, 10, e59193.

- Gentili, A.; Villani, L.; Osti, T.; Corona, V.F.; Gris, A.V.; Zaino, A.; Bonacquisti, M.; De Maio, L.; Solimene, V.; Gualano, M.R.; et al. Strategies and bottlenecks to tackle infodemic in public health: A scoping review. Front. Public Health 2024, 12, 1438981.

- Ghenai, A.; Mejova, Y. Catching Zika Fever: Application of Crowdsourcing and Machine Learning for Tracking Health Misinformation on Twitter. In Proceedings of the 2017 IEEE International Conference on Healthcare Informatics (ICHI), Park City, UT, USA, 23–26 August 2017; p. 518.

- Guo, Y.; Liu, X.; Susarla, A.; Padman, R. YouTube Videos for Public Health Literacy? A Machine Learning Pipeline to Curate Covid-19 Videos. Stud. Health Technol. Inform. 2024, 310, 760–764.

- He, Y.; Liang, J.; Fu, W.; Liu, Y.; Yang, F.; Ding, S.; Lei, J. Mapping Knowledge Landscapes and Emerging Trends for the Spread of Health-Related Misinformation During the COVID-19 on Chinese and English Social Media: A Comparative Bibliometric and Visualization Analysis. J. Multidiscip. Health 2024, 17, 6043–6057.

- Hussna, A.U.; Alam, G.R.; Islam, R.; Alkhamees, B.F.; Hassan, M.M.; Uddin, Z. Dissecting the infodemic: An in-depth analysis of COVID-19 misinformation detection on X (formerly Twitter) utilizing machine learning and deep learning techniques. Heliyon 2024, 10, e37760.

- Ismail, H.; Hussein, N.; Elabyad, R.; Abdelhalim, S.; Elhadef, M. Aspect-based classification of vaccine misinformation: A spatiotemporal analysis using Twitter chatter. BMC Public Health 2023, 23, 1–14.

- Lanyi, K.; Green, R.; Craig, D.; Marshall, C. COVID-19 Vaccine Hesitancy: Analysing Twitter to Identify Barriers to Vaccination in a Low Uptake Region of the UK. Front. Digit. Health 2022, 3, 804855.

- Liu, J.; Gong, R.; Zhou, W. SmartEye: Detecting COVID-19 misinformation on Twitter for Mitigating Public Health Risk. In Proceedings of the 2023 IEEE International Conference on Big Data and Smart Computing (BigComp), Jeju, Republic of Korea, 13–16 February 2023; IEEE: New York City, NY, USA, 2023; pp. 330–337.

- Lokhande, S.; Pandey, D.S. Harnessing Deep Learning to Combat Misinformation and Detect Depression on Social Media: Challenges and Interventions. Nanotechnol. Percept. 2024, 20, 1141–1150.

- Lu, J.; Zhang, H.; Xiao, Y.; Wang, Y. An Environmental Uncertainty Perception Framework for Misinformation Detection and Spread Prediction in the COVID-19 Pandemic: Artificial Intelligence Approach. JMIR AI 2024, 3, e47240.

- Papanikou, V.; Papadakos, P.; Karamanidou, T.; Stavropoulos, T.G.; Pitoura, E.; Tsaparas, P. Health Misinformation in Social Networks: A Survey of Information Technology Approaches. Futur. Internet 2025, 17, 129.

- Patel, M.; Surti, M.; Adnan, M. Artificial intelligence (AI) in Monkeypox infection prevention. J. Biomol. Struct. Dyn. 2023, 41, 8629–8633.

- Pobiruchin, M.; Zowalla, R.; Wiesner, M. Temporal and Location Variations, and Link Categories for the Dissemination of COVID-19–Related Information on Twitter During the SARS-CoV-2 Outbreak in Europe: Infoveillance Study. J. Med. Internet Res. 2020, 22, e19629.

- Purnat, T.D.; Vacca, P.; Czerniak, C.; Ball, S.; Burzo, S.; Zecchin, T.; Wright, A.; Bezbaruah, S.; Tanggol, F.; Dubé, È.; et al. Infodemic Signal Detection During the COVID-19 Pandemic: Development of a Methodology for Identifying Potential Information Voids in Online Conversations. JMIR Infodemiology 2021, 1, e30971.

- Rees, E.; Ng, V.; Gachon, P.; Mawudeku, A.; McKenney, D.; Pedlar, J.; Yemshanov, D.; Parmely, J.; Knox, J. Risk assessment strategies for early detection and prediction of infectious disease outbreaks associated with climate change. Can. Commun. Dis. Rep. 2019, 45, 119–126.

- Röchert, D.; Shahi, G.K.; Neubaum, G.; Ross, B.; Stieglitz, S. The Networked Context of COVID-19 Misinformation: Informational Homogeneity on YouTube at the Beginning of the Pandemic. Online Soc. Netw. Media 2021, 26, 100164.

- Rodrigues, F.; Newell, R.; Babu, G.R.; Chatterjee, T.; Sandhu, N.K.; Gupta, L. The social media Infodemic of health-related misinformation and technical solutions. Health Policy Technol. 2024, 13, 100846.

- Temiz, H.; Temizel, T.T. A multi-feature fusion-based approach for automatic fake news detection on social media. Appl. Sci. 2023, 13, 5572.

- Thomas, M.J.; Lal, V.; Baby, A.K.; Rabeeh, V.P.; James, A.; Raj, A.K. Can technological advancements help to alleviate COVID-19 pandemic? A review. J. Biomed. Inform. 2021, 117, 103787.

- Tsao, S.-F.; Chen, H.; Tisseverasinghe, T.; Yang, Y.; Li, L.; Butt, Z.A. What social media told us in the time of COVID-19: A scoping review. Lancet Digit. Health 2021, 3, e175–e194.

- Wang, J.; Wang, X.; Yu, A. Tackling misinformation in mobile social networks a BERT-LSTM approach for enhancing digital literacy. Sci. Rep. 2025, 15, 1118.

- Wang, Z.; Yin, Z.; Argyris, Y.A. Detecting Medical Misinformation on Social Media Using Multimodal Deep Learning. IEEE Trans. Neural. Netw. Learn. Syst. 2021, 32, 2569–2582.

- White, B.; Cabalero, I.; Nguyen, T.; Briand, S.; Pastorino, A.; Purnat, T.D. An adaptive digital intelligence system to support infodemic management: The WHO EARS platform. Stud. Health Technol. Inform. 2023, 302, 891–898.

- Yan, Q.; Shan, S.; Sun, M.; Zhao, F.; Yang, Y.; Li, Y. A Social Media Infodemic-Based Prediction Model for the Number of Severe and Critical COVID-19 Patients in the Lockdown Area. Int. J. Environ. Res. Public Health 2022, 19, 8109.

- Zhang, Y.; Zong, R.; Shang, L.; Yue, Z.; Zeng, H.; Liu, Y.; Wang, D. Tripartite Intelligence: Synergizing Deep Neural Network, Large Language Model, and Human Intelligence for Public Health Misinformation Detection (Archival Full Paper). In Proceedings of the CI ’24: ACM Collective Intelligence Conference, Boston, MA, USA, 27–28 June 2024; pp. 63–75.

- Di Sotto, S.; Viviani, M. Health Misinformation Detection in the Social Web: An Overview and a Data Science Approach. Int. J. Environ. Res. Public Health 2022, 19, 2173.

- Sharifpoor, E.; Okhovati, M.; Ghazizadeh-Ahsaee, M.; Beigi, M.A. Classifying and fact-checking health-related information about COVID-19 on Twitter/X using machine learning and deep learning models. BMC Med. Inform. Decis. Mak. 2025, 25, 73.

- Antoliš, K. Education against Disinformation. Interdiscip. Descr. Complex Syst. 2024, 22, 71–83.

- Brown, D.; Uddin, M.; Al-Hossami, E.; Janies, D.; Shaikh, S.; Cheng, Z. Multidisciplinary Engagement of Diverse Students in Computer Science Education through Research Focused on Social Media COVID-19 Misinformation. In Proceedings of the ASEE Annual Conference & Exposition, Minneapolis, MN, USA, 26–29 June 2022; American Society for Engineering Education: Minneapolis, MN, USA, 2022; pp. 1–17.

- Yang, C. Navigating vaccine misinformation: A study on users’ motivations, active communicative action and anti-misinformation behavior via chatbots. Online Inf. Rev. 2025, 49, 643–663.

- Liu, T.; Xiao, X. A Framework of AI-Based Approaches to Improving eHealth Literacy and Combating Infodemic. Front. Public Health 2021, 9, 755808.

- Olusanya, O.A.; Ammar, N.; Davis, R.L.; Bednarczyk, R.A.; Shaban-Nejad, A. A Digital Personal Health Library for Enabling Precision Health Promotion to Prevent Human Papilloma Virus-Associated Cancers. Front. Digit. Health 2021, 3, 683161.

- Albrecht, S.S.; Aronowitz, S.V.; Buttenheim, A.M.; Coles, S.; Dowd, J.B.; Hale, L.; Kumar, A.; Leininger, L.; Ritter, A.Z.; Simanek, A.M.; et al. Lessons Learned From Dear Pandemic, a Social Media–Based Science Communication Project Targeting the COVID-19 Infodemic. Public Health Rep. 2022, 137, 449–456.

- Bin Naeem, S.; Boulos, M.N.K. COVID-19 Misinformation Online and Health Literacy: A Brief Overview. Int. J. Environ. Res. Public Health 2021, 18, 8091.

- Burke-Garcia, A.; Hicks, R.S. Scaling the Idea of Opinion Leadership to Address Health Misinformation: The Case for “Health Communication AI”. J. Health Commun. 2024, 29, 396–399.

- ElSherief, M.; Sumner, S.; Krishnasamy, V.; Jones, C.; Law, R.; Kacha-Ochana, A.; Schieber, L.; De Choudhury, M. Identification of Myths and Misinformation About Treatment for Opioid Use Disorder on Social Media: Infodemiology Study. JMIR Form. Res. 2024, 8, e44726.

- Fabrizzio, G.C.; de Oliveira, L.M.; da Costa, D.G.; Erdmann, A.L.; dos Santos, J.L.G. Virtual assistant: A tool for health co-production in coping with COVID-19. Texto Context. Enferm. 2023, 32, e20220136.

- Garett, R.; Young, S.D. Online misinformation and vaccine hesitancy. Transl. Behav. Med. 2021, 11, 2194–2199.

- Malecki, K.M.C.; Keating, J.A.; Safdar, N. Crisis Communication and Public Perception of COVID-19 Risk in the Era of Social Media. Clin. Infect. Dis. 2021, 72, 699–704.

- Sallam, M.; Salim, N.A.; Al-Tammemi, A.B.; Barakat, M.; Fayyad, D.; Hallit, S.; Harapan, H.; Hallit, R.; Mahafzah, A. ChatGPT Output Regarding Compulsory Vaccination and COVID-19 Vaccine Conspiracy: A Descriptive Study at the Outset of a Paradigm Shift in Online Search for Information. Cureus 2023, 15, e35029.

- Sathianathan, S.; Ali, A.M.; Chong, W.W. How the General Public Navigates Health Misinformation on Social Media: Qualitative Study of Identification and Response Approaches. JMIR Infodemiology 2025, 5, e67464.

- Singhal, A.; Mago, V. Exploring How Healthcare Organizations Use Twitter: A Discourse Analysis. Informatics 2023, 10, 65.

- Tasnim, S.; Hossain, M.; Mazumder, H. Impact of Rumors and Misinformation on COVID-19 in Social Media. J. Prev. Med. Public Health 2020, 53, 171–174.

- Tsao, S.-F.; Chen, H.H.; Meyer, S.B.; Butt, Z.A. Proposing a Conceptual Framework: Social Media Infodemic Listening for Public Health Behaviors. Int. J. Public Health 2024, 69, 1607394.

- Tuccori, M.; Convertino, I.; Ferraro, S.; Cappello, E.; Valdiserra, G.; Focosi, D.; Blandizzi, C. The Impact of the COVID-19 “Infodemic” on Drug-Utilization Behaviors: Implications for Pharmacovigilance. Drug Saf. 2020, 43, 699–709.

- Unlu, A.; Truong, S.; Tammi, T.; Lohiniva, A.-L. Exploring Political Mistrust in Pandemic Risk Communication: Mixed-Method Study Using Social Media Data Analysis. J. Med. Internet Res. 2023, 25, e50199.

- Valdez, D.; Soto-Vásquez, A.D.; Montenegro, M.S. Geospatial vaccine misinformation risk on social media: Online insights from an English/Spanish natural language processing (NLP) analysis of vaccine-related tweets. Soc. Sci. Med. 2023, 339, 116365.

- White, B.K.; Gombert, A.; Nguyen, T.; Yau, B.; Ishizumi, A.; Kirchner, L.; León, A.; Wilson, H.; Jaramillo-Gutierrez, G.; Cerquides, J.; et al. Using Machine Learning Technology (Early Artificial Intelligence–Supported Response With Social Listening Platform) to Enhance Digital Social Understanding for the COVID-19 Infodemic: Development and Implementation Study. JMIR Infodemiology 2023, 3, e47317.

- Xue, H.; Gong, X.; Stevens, H. COVID-19 Vaccine Fact-Checking Posts on Facebook: Observational Study. J. Med. Internet Res. 2022, 24, e38423.

- Wehrli, S.; Irrgang, C.; Scott, M.; Arnrich, B.; Boender, T.S. The role of the (in)accessibility of social media data for infodemic management: A public health perspective on the situation in the European Union in March 2024. Front. Public Health 2024, 12, 1378412.

- Zenone, M.; Snyder, J.; Bélisle-Pipon, J.-C.; Caulfield, T.; van Schalkwyk, M.; Maani, N. Advertising Alternative Cancer Treatments and Approaches on Meta Social Media Platforms: Content Analysis. JMIR Infodemiology 2023, 3, e43548.

- Zang, S.; Zhang, X.; Xing, Y.; Chen, J.; Lin, L.; Hou, Z. Applications of Social Media and Digital Technologies in COVID-19 Vaccination: Scoping Review. J. Med. Internet Res. 2023, 25, e40057.

- Crowley, T.; Tokwe, L.; Weyers, L.; Francis, R.; Petinger, C. Digital Health Interventions for Adolescents with Long-Term Health Conditions in South Africa: A Scoping Review. Int. J. Environ. Res. Public Health 2024, 22, 2.

- Gomez-Cabello, C.A.; Borna, S.; Pressman, S.; Haider, S.A.; Haider, C.R.; Forte, A.J. Artificial-Intelligence-Based Clinical Decision Support Systems in Primary Care: A Scoping Review of Current Clinical Implementations. Eur. J. Investig. Health Psychol. Educ. 2024, 14, 685–698.

- Rahman, S.; Sarker, S.; Al Miraj, A.; Nihal, R.A.; Haque, A.K.M.N.; Al Noman, A. Deep Learning–Driven Automated Detection of COVID-19 from Radiography Images: A Comparative Analysis. Cogn. Comput. 2021, 16, 1735–1764.

- Palanisamy, P.; Padmanabhan, A.; Ramasamy, A.; Subramaniam, S. Remote Patient Activity Monitoring System by Integrating IoT Sensors and Artificial Intelligence Techniques. Sensors 2023, 23, 5869.

- World Health Organization. WHO Public Health Research Agenda for Managing Infodemics; WHO: Geneva, Switzerland, 2021; Available online: https://www.who.int/publications/i/item/9789240019508 (accessed on 14 October 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cianciulli, A.; Santoro, E.; Manente, R.; Pacifico, A.; Quagliarella, S.; Bruno, N.; Schettino, V.; Boccia, G. Artificial Intelligence and Digital Technologies Against Health Misinformation: A Scoping Review of Public Health Responses. Healthcare 2025, 13, 2623. https://doi.org/10.3390/healthcare13202623

Cianciulli A, Santoro E, Manente R, Pacifico A, Quagliarella S, Bruno N, Schettino V, Boccia G. Artificial Intelligence and Digital Technologies Against Health Misinformation: A Scoping Review of Public Health Responses. Healthcare. 2025; 13(20):2623. https://doi.org/10.3390/healthcare13202623

Chicago/Turabian Style: Cianciulli, Angelo, Emanuela Santoro, Roberta Manente, Antonietta Pacifico, Savino Quagliarella, Nicole Bruno, Valentina Schettino, and Giovanni Boccia. 2025. "Artificial Intelligence and Digital Technologies Against Health Misinformation: A Scoping Review of Public Health Responses" Healthcare 13, no. 20: 2623. https://doi.org/10.3390/healthcare13202623

APA Style: Cianciulli, A., Santoro, E., Manente, R., Pacifico, A., Quagliarella, S., Bruno, N., Schettino, V., & Boccia, G. (2025). Artificial Intelligence and Digital Technologies Against Health Misinformation: A Scoping Review of Public Health Responses. Healthcare, 13(20), 2623. https://doi.org/10.3390/healthcare13202623