1. Introduction

Approximately 18.1 million new cancer (CA) cases are diagnosed worldwide each year, and 9.6 million people die of cancer [1]. The incidence of cancer is increasing worldwide because of population aging and unfavorable lifestyle changes [2]. Various treatment options are available for cancer patients, and the choice among them depends on many factors, such as the patient’s sociodemographic characteristics, comorbidities, lifestyle, and tumor status. In addition, technological developments continually update diagnostic and treatment options. Careful management of cancer patients is therefore essential [3,4]. Treatment planning is becoming increasingly difficult because of new drug discoveries, updated scientific guidelines, and rapidly changing evidence. To overcome these difficulties, meetings of physicians from different specialties, called Multidisciplinary Tumor Councils (MDTs), have been established [5]. Medical and radiation oncologists, radiologists, surgeons, and pathologists usually participate in MDTs and decide on the most appropriate and effective treatment option for each patient [6]. For this reason, MDTs play an essential role in providing a comprehensive, multidisciplinary approach to each patient and in tailoring treatment plans to individual needs [5,7].

Artificial intelligence (AI) refers to computer programs that mimic human cognitive functions in order to create systems that learn and think like humans [8]. AI can perform cognitive functions such as perception, reasoning, decision making, problem solving, learning, and interacting with the environment [9]. AI is also frequently used in medicine, particularly in the diagnosis and treatment of diseases [10].

Many studies have investigated artificial intelligence as a decision-support tool in MDTs. Studies of breast, lung, stomach, brain, and cervical cancers have reported high rates of concordance [6,11,12,13,14,15]. Recent advances in AI have introduced several large language models (LLMs), such as GPT-3.5, GPT-4.0, Bard, and Med-PaLM, which differ in their architectures, data sources, and clinical applicability. However, despite these developments, few studies have evaluated the real-time performance of such models in MDT decision making. To our knowledge, this is the first prospective study to assess the alignment between ChatGPT-4.0 and MDT treatment decisions in oncology patients, and it therefore addresses the potential role of ChatGPT-4.0 as a decision-support tool, particularly in resource-limited clinical settings.

In this study, we aimed to determine the concordance between artificial intelligence recommendations and the decisions made for patients presented to the multidisciplinary tumor council. We also sought to answer whether artificial intelligence can be accepted as a new, non-human member of the council, serving as a decision-support tool.

2. Method

2.1. Study Design

This prospective study was conducted on 100 patients whose cases were presented to the tumor council at Van Regional Education and Research Hospital between November 2024 and January 2025. All procedures followed were in accordance with the ethical standards of the responsible committee on human experimentation (institutional and national) and with the Helsinki Declaration of 1975, as revised in 2008. The institutional ethics committee granted approval on 4 November 2024 (protocol number B.30.2.YYU.0.01.00.00/95). Informed consent was obtained from all participants. This trial was registered at ClinicalTrials.gov under registration number NCT069866564.

The multidisciplinary tumor board comprises specialists in medical oncology, radiation oncology, nuclear medicine, radiology, pathology, and the surgical disciplines. The primary physician shares the information on each patient to be presented with all board members one week in advance. During the meeting, participants review the patient’s demographic data, clinical complaints, physical examination findings, comorbidities, performance status, laboratory results, pathology reports, and radiological findings. Radiology and nuclear medicine specialists present and re-evaluate imaging studies in real time on a projector. After the discussion, a consensus decision is reached based on the opinions of all attending specialists.

During the planning phase of the study, the structure and operating principles of the multidisciplinary tumor board were first introduced to ChatGPT-4o. It was clearly stated that the AI would be queried only after the patients had been discussed in the tumor board and their treatment plans determined, that its decision would be used solely for comparison, and that it would not influence actual treatment planning.

The data of each patient presented to the board were compiled in a Microsoft Word document by a physician who did not attend the board meeting and was blinded to the board’s decision. An example patient file is provided as supplementary material. The file included detailed information such as the patient’s sex, age, comorbidities, performance status, clinical complaints, physical examination findings, radiology and pathology reports, and laboratory results (Table 1).

This document was uploaded to ChatGPT-4o, and the model was asked to evaluate the patient and propose a treatment plan based solely on the provided data. No additional prompts or questions were given, to avoid any guidance beyond the shared information.

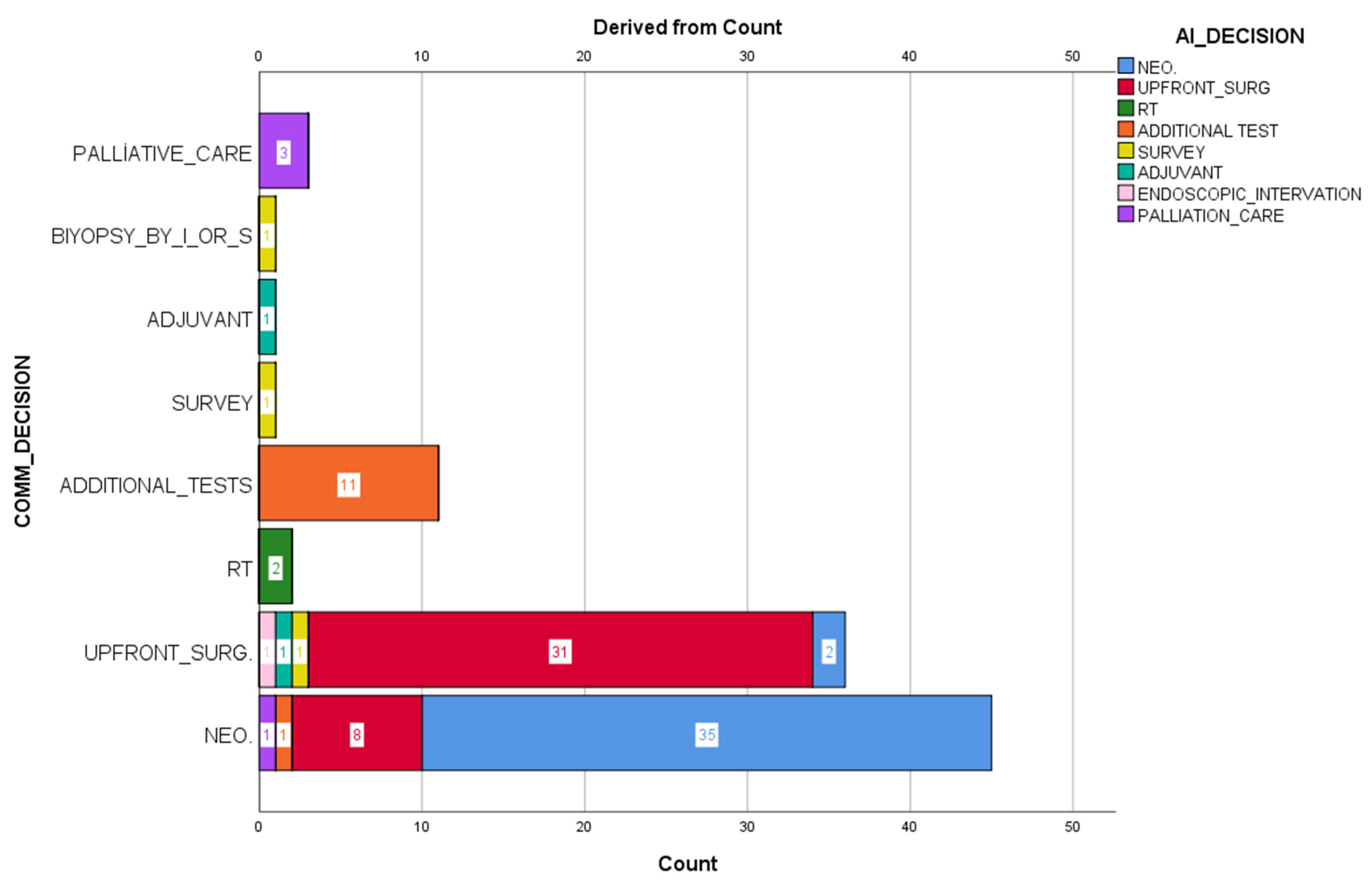

The decisions of ChatGPT-4o and of the council were coded into the following categories: neoadjuvant treatment, surgery, radiotherapy, additional examination request, follow-up, adjuvant therapy, interventional-surgical sampling, endoscopic intervention, and palliative. The artificial intelligence decisions were not disclosed to council members. An independent statistician analyzed all results.
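The coding step can be sketched as a small data structure. This is a minimal illustration, assuming that each source (MDT and ChatGPT-4o) yields exactly one coded category per patient; the names `Decision` and `compare_decisions` and the patient IDs are hypothetical and do not come from the study. Percent agreement is the share of patients with identical codes, and the returned IDs are the discordant cases whose reasons would then be examined qualitatively.

```python
from enum import Enum


class Decision(Enum):
    """The nine categories used to code both MDT and AI decisions (hypothetical names)."""
    NEOADJUVANT = "neoadjuvant treatment"
    SURGERY = "surgery"
    RADIOTHERAPY = "radiotherapy"
    ADDITIONAL_EXAMINATION = "additional examination request"
    FOLLOW_UP = "follow-up"
    ADJUVANT = "adjuvant therapy"
    SAMPLING = "interventional-surgical sampling"
    ENDOSCOPIC = "endoscopic intervention"
    PALLIATIVE = "palliative"


def compare_decisions(
    mdt: dict[str, Decision], ai: dict[str, Decision]
) -> tuple[float, list[str]]:
    """Return percent agreement and the sorted IDs of discordant patients.

    Assumes exactly one coded decision per patient from each source.
    """
    # dict_keys compare like sets, so this checks both sources cover the same patients.
    if mdt.keys() != ai.keys() or not mdt:
        raise ValueError("MDT and AI must cover the same non-empty set of patients")
    discordant = sorted(pid for pid in mdt if mdt[pid] != ai[pid])
    agreement = 100.0 * (len(mdt) - len(discordant)) / len(mdt)
    return agreement, discordant


# Hypothetical toy data for illustration only; these are not study results.
mdt_codes = {
    "P01": Decision.SURGERY,
    "P02": Decision.NEOADJUVANT,
    "P03": Decision.PALLIATIVE,
    "P04": Decision.FOLLOW_UP,
}
ai_codes = {
    "P01": Decision.SURGERY,
    "P02": Decision.NEOADJUVANT,
    "P03": Decision.RADIOTHERAPY,
    "P04": Decision.FOLLOW_UP,
}
print(compare_decisions(mdt_codes, ai_codes))  # (75.0, ['P03'])
```

If the study allowed more than one category per decision, a set-valued comparison would be needed instead of simple equality.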

The primary aim of the study was to assess the concordance between the decisions of the tumor council and those of artificial intelligence. The secondary aim was to determine the reasons for discordant decisions.

2.2. Participants

Patients older than 18 years with a pathologically confirmed cancer diagnosis, regardless of tumor location, who were presented to the tumor council were included in the study. All included patients were being presented to the council for the first time. Patients with incomplete information, those without a pathological cancer diagnosis, those presented only for a radiological opinion, and those presented to the council two or more times were excluded.

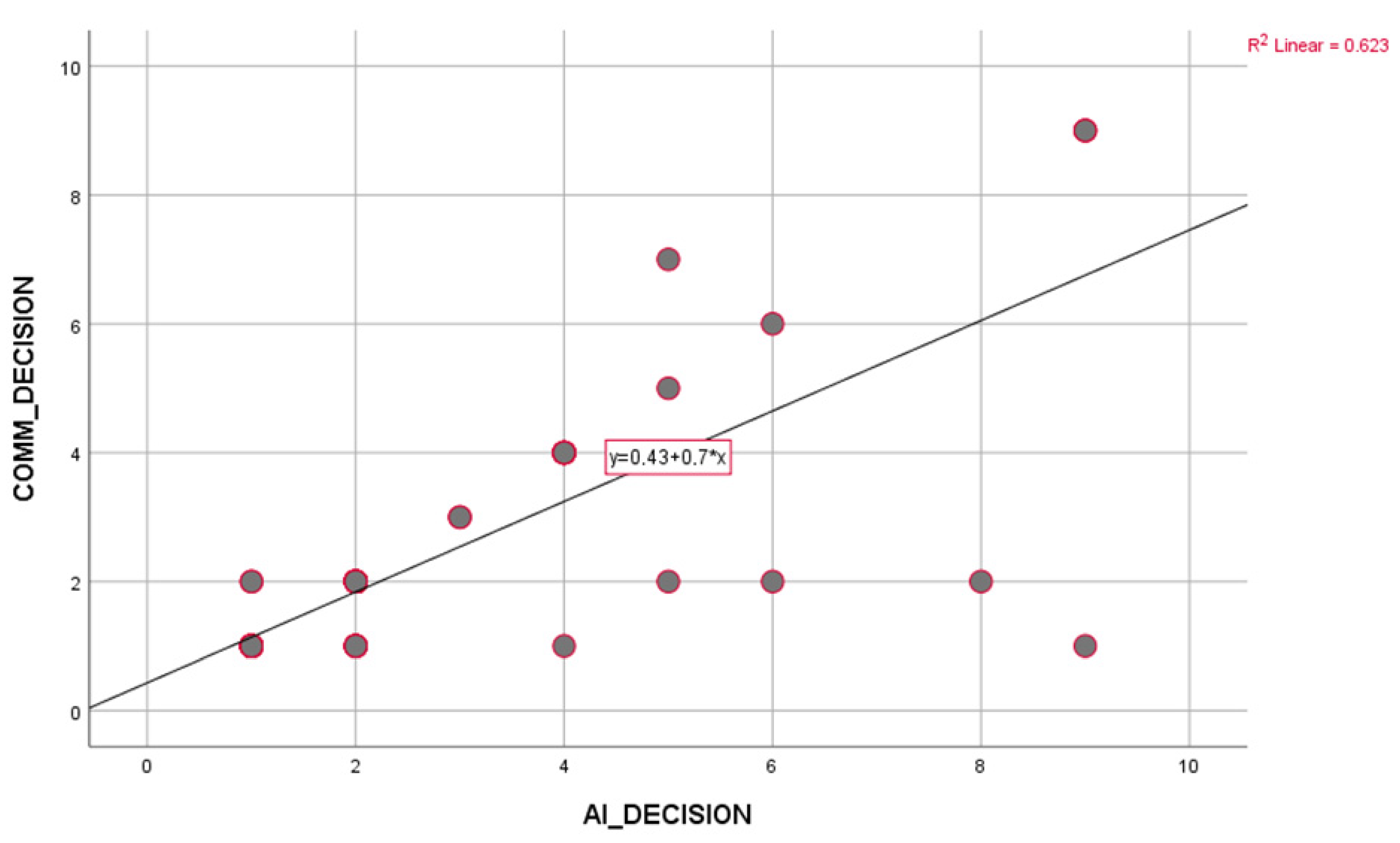

2.3. Statistical Analysis

Patient data collected in the study were analyzed using IBM SPSS Statistics for Windows, version 26.0 (IBM Corp., Armonk, NY, USA). The Kolmogorov–Smirnov test was used to assess whether continuous variables were normally distributed. Categorical data are presented as frequencies and percentages, and continuous data as means and standard deviations. Agreement between MDT and AI decisions was measured using Cohen’s kappa coefficient. Correlations between variables were analyzed using Pearson or Spearman correlation coefficients, as appropriate. For between-group comparisons, the independent-samples t-test was used for two groups, and Pearson’s chi-square test was used for categorical variables. A p-value less than 0.05 was considered statistically significant.
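Cohen’s kappa corrects observed agreement for the agreement expected by chance: κ = (p_o − p_e) / (1 − p_e), where p_o is the proportion of patients with identical MDT and AI codes and p_e is the sum, over all decision categories, of the product of the two sources’ marginal proportions for that category. The sketch below is a minimal, unweighted implementation for illustration, assuming one nominal category per patient from each source; it is not the SPSS procedure used in the study, and the function name and toy labels are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Unweighted Cohen's kappa for two raters who each assign one nominal label per item."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(rater_a)
    # Observed agreement p_o: fraction of items given identical labels.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement p_e: for each category, multiply the two raters'
    # marginal proportions and sum; Counter returns 0 for unseen labels.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if p_e == 1.0:
        # Both raters used one and the same category for every item.
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_o - p_e) / (1.0 - p_e)


# Hypothetical toy labels (MDT vs. AI), not study data.
mdt = ["surgery", "surgery", "palliative", "follow-up"]
ai = ["surgery", "radiotherapy", "palliative", "follow-up"]
print(round(cohens_kappa(mdt, ai), 3))  # 0.667
```

For the toy labels, p_o = 0.75 and p_e = 0.25, so κ = 0.5 / 0.75 ≈ 0.667, noticeably lower than the raw 75% agreement. scikit-learn’s `sklearn.metrics.cohen_kappa_score` computes the same unweighted statistic and can serve as a cross-check.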

4. Discussion

In this prospective study, the case-based treatment decisions of the multidisciplinary tumor council (MDT) for 100 patients were compared with the recommendations of the large language model ChatGPT, and a concordance of 76.4% was found. Unlike most studies in this field, which are retrospective, the prospective design allowed direct observation of real-time decision processes and a more objective assessment of how closely the model’s recommendations match clinical practice. In this respect, the study contributes to the literature evaluating the practical potential of artificial intelligence as a clinical decision-support system.

The 76.4% concordance indicates that ChatGPT is highly familiar with oncological clinical guidelines and standard protocols. The model’s recommendations resembled those of the MDT particularly in cases with clear staging systems, classical treatment algorithms, and a low comorbidity burden. Recent studies have similarly reported that large language models can perform strongly in answering medical questions and defining clinical protocols [16,17].

However, a detailed examination of the remaining 23.6% of discordant decisions revealed the limitations of ChatGPT. Discordance occurred mainly in cases where individual, patient-specific factors were not considered. In MDT meetings, clinicians’ decisions are not limited to medical guidelines; they also take into account many variables, such as the patient’s general condition, quality of life, comorbidities, likelihood of treatment adherence, psychosocial factors, and preferences. Language models such as ChatGPT, by contrast, remain inadequate at evaluating such contextual information. This indicates that artificial intelligence cannot yet be considered a decision maker with genuine clinical intuition and ethical values. Another limitation of the model’s recommendations is their lack of transparency: ChatGPT does not show the user which data or information its recommendations are based on. This can create a safety gap for clinicians, especially in sensitive, patient-specific decisions. This opacity, referred to in the literature as the “black box” problem, raises the question of who assumes clinical responsibility for the output of artificial intelligence systems [18]. As long as ethical and legal responsibility for clinical decisions lies with human physicians, such support systems should remain suggestion providers rather than decision makers [19,20].

Nevertheless, large language models such as ChatGPT have significant advantages. They can help physicians by providing rapid access to the literature, summarizing existing treatment guidelines, evaluating possible alternative treatment options, and offering reference points for treatment planning. In addition, in healthcare systems under time pressure, especially in low-resource regions, such AI-supported systems may play a supporting role in clinical decision-making by facilitating access to information.

The prospective design of our study allowed differences between AI and human decisions to be observed as they occurred, making it possible to compare decisions not only in theory but also under actual practice conditions. For example, in some cases the MDT made individualized decisions that deviated from established guidelines, based on factors such as the patient’s likelihood of adhering to treatment or recent concerns regarding quality of life. In contrast, ChatGPT generated recommendations without taking these nuanced, patient-specific considerations into account.

Valerio et al. [21] examined the perspectives of surgeons and medical and radiation oncologists on AI during the diagnosis, treatment, and follow-up of cancer patients. According to this study, AI improved surgeons’ performance and training by assisting them before, during, and after surgery. Medical oncologists were reported to benefit from AI in molecular profiling and treatment selection, in predictive modeling of drug response and personalized therapy, and through the integration of AI into clinical trial design and patient records. Radiation oncologists were reported to receive support from AI-supported radiotherapy workflows and from the prediction of radiotherapy outcomes and toxicity, and the authors emphasized that this support reduces workload and improves the quality of work.

In a 2018 study of 405 lung cancer patients, Kim et al. [11] found 92.4% concordance between the MDT and artificial intelligence, reaching 100% in advanced metastatic lung cancer. Benedick et al. [22] found 96% concordance in metastatic patients and 86% concordance in patients with recurrent or metastatic head and neck cancer. They concluded that artificial intelligence serves mostly as an auxiliary tool, that its output must be approved by an experienced clinician because of its lack of transparency, and that it sometimes suggests treatments not included in current guidelines. In another study, Benedick et al. [13] compared two versions of ChatGPT with MDT decisions in patients with head and neck cancer. Both ChatGPT-3.5 and ChatGPT-4.0 showed a high level of agreement with the MDT. However, whereas the MDT offered at most two treatment options, ChatGPT-3.5 offered more options than the MDT, and ChatGPT-4.0 offered more than ChatGPT-3.5. ChatGPT-4.0 was also reported to provide better summaries, explanations, and clinical recommendations, along with references from the current literature. On the other hand, it avoided making definitive recommendations and stated that it did not intend to give medical advice or replace a physician.

In a study of 322 patients with gastric cancer, Park et al. [12] found 86% agreement between the MDT and artificial intelligence. By stage, agreement was 96.93% for stage 1, 88.89% for stage 2, 90.91% for stage 3, and 45.83% for stage 4. They concluded that artificial intelligence plays an effective role in managing patients with gastric cancer in MDTs and could even participate as an MDT member.

In a retrospective study of breast cancer patients, Somashekhar et al. [15] found high concordance in stage 2 and stage 3 disease (97% and 95%, respectively) and lower concordance in stage 1 and stage 4 disease (80% and 86%, respectively). When the time difference between the MDT review of the patients (2014–2016) and the AI analysis of the same patient information (2016) was not taken into account, concordance was 73%. After the MDT conducted a blinded secondary review in 2016 of the cases with differing results, concordance increased from 73% to 93%. By age, concordance decreased with increasing age, except in patients younger than 45 and those aged 55–64, and it decreased much more in patients older than 75.

Unlike the studies above, our study evaluated all cancer types presented to the council. The higher agreement reported in studies of specific cancer types may reflect their lower case heterogeneity; in this respect, the present study may better reflect real-world MDT practice.

A comparative summary of these studies and their reported limitations is presented in Table 7.

4.1. Limitations

This study has certain limitations. First, because ChatGPT was trained on historical data up to a fixed point, it may not have access to the most up-to-date versions of clinical protocols. Second, because the model cannot directly analyze the patient’s own clinical data, its recommendations remain more general. Third, MDT decisions themselves carry a certain degree of subjectivity. Fourth, the study was conducted at a single center, and different results may be obtained in institutions with different hospital structures. Fifth, long-term outcomes were not assessed, so it is unknown which decisions are more effective; high concordance does not necessarily mean good outcomes, and following the clinical course, especially in discordant cases, may be more informative. Finally, the relatively small sample size (n = 100) may limit the generalizability of our findings. However, as this is the first feasibility study evaluating AI-assisted decision making in oncology MDTs, the dataset provides valuable initial insights and can guide future multicenter studies with larger cohorts.

4.2. Recommendations for Future Studies

Future studies should include similar prospective comparisons of different AI models (e.g., Med-PaLM, BioGPT, Claude Medical). In addition, technical approaches for integrating models with real patient data (laboratory results, radiology findings, genomic data) should be evaluated so that the models can provide context-aware recommendations. Pilot implementations in which clinical decisions are made with the support of real-time AI platforms would also contribute substantially to this literature.

5. Conclusions

This prospective study evaluated how closely the recommendations of the large language model ChatGPT matched the treatment decisions made by multidisciplinary tumor councils (MDTs) in 100 oncology patients. The 76.4% agreement rate shows that AI can successfully reproduce guideline-based clinical reasoning in standard oncological scenarios. ChatGPT’s decisions largely overlapped with those of the MDT, especially in patients with clear staging and protocol-based treatment. AI can be a powerful decision-support tool for providing guideline information, summarizing alternative treatment options, and facilitating access to the literature, and it may play a significant supporting role by providing rapid access to information, especially in clinical settings with limited resources.

Qualitative evaluation of discordant cases revealed that most differences arose in situations requiring individualized clinical decisions. While MDT decisions considered patient-specific contextual factors such as comorbidities, surgical risks, and quality of life, ChatGPT-4.0 relied primarily on standard guideline-based approaches. This highlights the model’s current limitations in interpreting patient-specific nuances. AI can be integrated into MDT decision-making processes only when it can analyze real-time patient data, transparently justify its recommendations, and align with a human-centered medical approach. Therefore, AI technologies must be further evaluated from ethical, legal, and clinical perspectives and validated in comprehensive multicenter studies.