Abstract

Background/Objectives: Artificial intelligence (AI)-enabled digital interventions are increasingly used to expand access to mental health care. This PRISMA-ScR scoping review maps how AI technologies support mental health care across five phases: pre-treatment (screening), treatment (therapeutic support), post-treatment (monitoring), clinical education, and population-level prevention. Methods: We synthesized findings from 36 empirical studies published through January 2024 that implemented AI-driven digital tools, including large language models (LLMs), machine learning (ML) models, and conversational agents. Use cases include referral triage, remote patient monitoring, empathic communication enhancement, and AI-assisted psychotherapy delivered via chatbots and voice agents. Results: Across the 36 included studies, the most common AI modalities included chatbots, natural language processing tools, machine learning and deep learning models, and large language model-based agents. These technologies were predominantly used for support, monitoring, and self-management purposes rather than as standalone treatments. Reported benefits included reduced wait times, increased engagement, and improved symptom tracking. However, recurring challenges such as algorithmic bias, data privacy risks, and workflow integration barriers highlight the need for ethical design and human oversight. Conclusion: By introducing a four-pillar framework, this review offers a comprehensive overview of current applications and future directions in AI-augmented mental health care. It aims to guide researchers, clinicians, and policymakers in developing safe, effective, and equitable digital mental health interventions.

1. Introduction

In recent decades, mental health disorders have surged despite economic and technological progress, with barriers such as stigma, cost, and professional shortages continuing to hinder treatment access [1,2,3]. Digital health technologies offer promise in addressing these challenges [4], with teletherapy, mental health apps, and computerized cognitive behavioral therapy showing effectiveness [5]. The COVID-19 pandemic further accelerated the use of digital mental health tools, revealing unmet needs at the intersection of technology and psychotherapy [6,7]. As the mental health sector grapples with rising demand and the need for innovative solutions, interest in conversational artificial intelligence for mental health applications has grown [8,9], and researchers have increasingly explored its potential to enhance psychotherapy effectiveness and improve care delivery [10,11,12,13,14,15,16]. Since the public release of ChatGPT (Version GPT-4, United States of America) in early 2023, the tool has become the first conversational artificial intelligence system to achieve global mainstream use, reshaping approaches to learning, communication, and problem solving [8,17]. Research on ChatGPT has expanded rapidly across disciplines, especially in education, medicine, and psychology, highlighting its growing potential to advance mental health services [10,11,12,13].

Artificial intelligence-driven digital interventions in mental health refer to software systems or mobile applications that embed artificial intelligence techniques to deliver, support, or evaluate mental health services [6,12]. These include conversational artificial intelligence agents that interact with users through natural language, ranging from simple FAQ-style or rule-based chatbots to more advanced multi-turn dialogue systems capable of handling complex communication tasks [18,19]. Natural language processing techniques enable these agents to parse user input, detect sentiment, and extract key emotional cues [20]. Machine learning models, such as classification and regression algorithms, and deep learning networks, such as convolutional and recurrent neural networks, power predictive and monitoring tools that classify diagnoses, forecast risks, and tailor treatment recommendations based on user data [20]. Large language models such as GPT and BERT, which belong to a subclass of deep learning models built on transformer architectures with self-attention mechanisms, expand these capabilities by generating and comprehending coherent and context-rich text, opening new possibilities for nuanced therapeutic dialogue and personalized content creation [18,19]. Artificial intelligence has emerged as a promising tool to augment human therapists [12], although its adoption challenges traditional care models and raises concerns regarding efficacy, ethics, privacy, and the interpretation of human mental health experiences [21]. This review aims to provide a comprehensive analysis of conversational artificial intelligence in mental health care by mapping empirical evidence across different clinical phases. It offers insights into current applications, challenges, and future opportunities.
Although previous reviews have focused narrowly on large language model capabilities, ethical concerns, or specific generative artificial intelligence applications, this scoping review provides a broader synthesis by mapping artificial intelligence-driven digital interventions across five clinical phases of mental health care: (1) pre-treatment, (2) treatment, (3) post-treatment, (4) clinical education, and (5) general improvement and prevention. We organize this landscape into a unified life cycle framework grounded in empirical evidence. Four research questions guide our analysis:

Research Question 1 (RQ1): Within each clinical phase, which artificial intelligence modalities (rule-based chatbots, natural language processing, machine learning or deep learning models, and large language models) power digital interventions, and what evidence exists regarding their efficacy and limitations?

Research Question 2 (RQ2): For each phase, which artificial intelligence-driven tools demonstrate the greatest impact, and what performance metrics and barriers have been reported?

Research Question 3 (RQ3): What are the general strengths, weaknesses, opportunities, and threats of utilizing artificial intelligence-driven interventions in mental health care both overall and within each clinical phase?

Research Question 4 (RQ4): Which artificial intelligence technologies and applications appear most mature today, which emerging trends warrant priority research, and which technical, clinical, and policy challenges must be addressed to advance artificial intelligence-driven digital interventions?

By linking conceptual insights with empirical outcomes, this review complements and extends prior work to provide an integrated reference for research, practice, and policy. Finally, it aims to equip future researchers and practitioners to identify promising development pathways and to serve as a key reference for understanding the full spectrum of artificial intelligence applications in mental health care.

2. Methods

2.1. Research Aims

Various types of AI technologies are used within the broad context of mental health care. Many are linked to a conversational AI interface, which engages users to provide a wide range of support. Technologies including AI chatbots, language models, prediction modeling, sentiment analysis, and recommender systems have been implemented in health care settings [22,23,24,25]. These technologies are advancing mental health care by improving diagnostic accuracy, enhancing personalized treatment, providing insights and recommendations to clinicians, tailoring services to individual needs, and offering accessible and cost-effective mental health support [26,27,28,29]. As the field of computer science continues to progress, there is a transformative opportunity for the mental health care field to understand these technologies and apply them effectively to its services.

However, it is crucial to approach this integration thoughtfully, maintaining standards of care and prioritizing patient-centric approaches. There is a need for a scoping review examining how different AI technologies are being used in mental health, their impacts, ethical considerations, and practical aspects of combining AI with human care across various settings. This review aims to present a framework describing the current state of AI integration into mental health services in a manner that maximizes benefits and minimizes risks. This summary can serve as an overview for those interested in researching and developing solutions for these issues.

To realize their full promise, integration must be guided by evidence on efficacy, ethical safeguards, and alignment with clinical workflows. Accordingly, this scoping review maps the current landscape of AI-driven digital interventions in mental health, proposes a four-pillar mapping to organize empirical findings, and identifies practical barriers and enablers to maximize benefits and minimize risks. Our primary goals are to chart which AI modalities are deployed in each care phase, summarize their proven outcomes and reported limitations, and outline strategic directions for future research and policy.

2.2. Design and Scope of the Study

This scoping review adhered to the PRISMA-ScR guidelines [30] to ensure a rigorous, transparent, and reproducible methodology for mapping the use of AI-driven digital interventions in mental health care. We define our scope as all conversational AI agents (from rule-based/FAQ chatbots to ML-powered multi-turn systems and transformer-based LLMs) and related predictive/monitoring models (NLP and ML/DL algorithms) deployed across five phases: (1) pre-treatment (screening and triage), (2) treatment (therapeutic support), (3) post-treatment (follow-up and monitoring), (4) clinical education, and (5) general improvement and prevention. In conducting this review, we examined how each technology is applied, its demonstrated outcomes (e.g., accuracy, engagement, and health gains), and its limitations, thereby offering a unified life cycle framework for AI in mental health.

2.3. Identification and Selection of Studies

Our search strategy encompassed empirical research reports and publications up to January 2024, focusing on the application and efficacy of artificial intelligence technologies in mental health care. The search included multiple databases using keywords and phrases related to conversational artificial intelligence, machine learning, and mental health, such as “conversational AI and mental health”, “ChatGPT psychotherapy”, “AI counseling”, “AI psychotherapy”, “AI counselor”, and “machine learning mental health”.

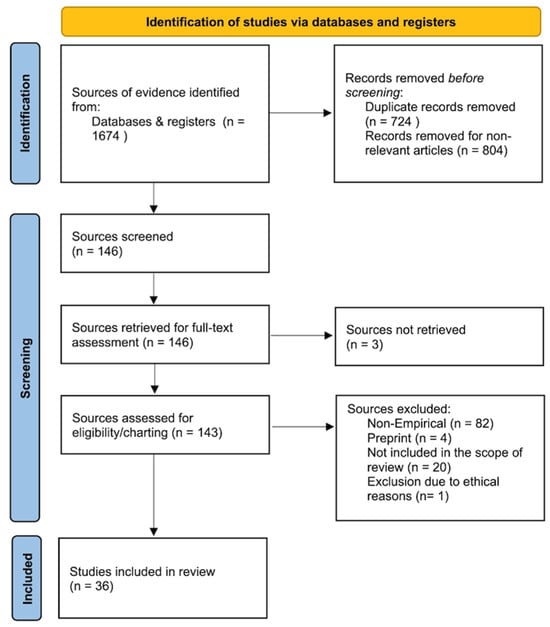

Initially, the comprehensive search yielded 1674 records. After removing 724 duplicates and excluding 804 articles that did not meet predefined criteria, 146 records remained for further evaluation. We retrieved 143 full-text reports for in-depth assessment.

Inclusion criteria targeted empirical research reports and publications published in English that examined the application of artificial intelligence technologies in mental health contexts. We excluded non-empirical works, such as literature reviews, editorials, and opinion pieces, as well as studies focusing on non-AI technologies or those outside the scope of mental health. Following screening, we excluded 82 non-empirical studies, 4 preprints that did not meet inclusion criteria, and 20 articles that fell outside our scope.

The first author independently conducted the search and data extraction using a custom-designed template to systematically capture key information from each study, including objectives, AI technologies used, main findings, and conclusions. Discrepancies were resolved through discussion to ensure consensus with the second author. Data were organized by clinical phase, AI modality, reported outcomes, and identified limitations. In total, 36 studies met the inclusion criteria and formed the core sample for this scoping review (Figure 1). These studies represent the most relevant and methodologically sound research currently available on conversational AI in mental health care. Owing to the heterogeneity of study designs and outcomes, we did not conduct a risk-of-bias appraisal or quantitative synthesis.
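The screening counts reported in this section can be re-tallied in a few lines. The sketch below performs only that arithmetic; the variable names are illustrative and no data beyond the figures stated in the text are introduced. Note that the three itemized full-text exclusion categories sum to 106, which leaves 37 reports, one more than the 36 included studies; the text does not itemize that remaining report.

```python
# Counts as reported in Section 2.3; variable names are illustrative.
identified = 1674
duplicates_removed = 724
excluded_at_screening = 804

# Records remaining after de-duplication and title/abstract screening.
sought_for_retrieval = identified - duplicates_removed - excluded_at_screening
retrieved_full_text = 143
not_retrieved = sought_for_retrieval - retrieved_full_text

# Full-text exclusions itemized in the text, keyed by the stated reason.
full_text_exclusions = {
    "non-empirical": 82,
    "preprint not meeting criteria": 4,
    "out of scope": 20,
}
itemized_excluded = sum(full_text_exclusions.values())
remaining_after_itemized = retrieved_full_text - itemized_excluded

included_reported = 36
unitemized_gap = remaining_after_itemized - included_reported

print(f"sought for retrieval:      {sought_for_retrieval}")
print(f"not retrieved:             {not_retrieved}")
print(f"itemized exclusions:       {itemized_excluded}")
print(f"remaining after itemized:  {remaining_after_itemized}")
print(f"included (as reported):    {included_reported}")
print(f"not itemized in the text:  {unitemized_gap}")
```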

Figure 1. PRISMA-ScR flow chart.

2.4. Search Strategy and Data Extraction

A customized data-charting form was developed to capture key information from each included article: study phase (application scenario), objectives, AI technology, setting, main findings and outcomes, limitations, and conclusions. This structured approach allowed for a thorough and organized analysis of the empirical evidence on conversational AI in mental health care. Two reviewers independently extracted all study-level data; any discrepancies were discussed and resolved by consensus adjudication prior to synthesis.
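To make the charting form concrete, it can be represented as a simple record type. The sketch below is a hypothetical illustration, not the template used in this review: the class name, field names, and the grouping helper are assumptions, and the example rows are placeholders rather than included studies.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class ChartingRecord:
    """One row of a data-charting form (field names are illustrative)."""
    study_id: str
    phase: str           # e.g. "pre-treatment", "treatment"
    ai_technology: str   # e.g. "rule-based chatbot", "LLM"
    setting: str
    objectives: str
    main_findings: str
    outcomes: list[str] = field(default_factory=list)
    limitations: list[str] = field(default_factory=list)
    conclusions: str = ""


def group_by_phase(records):
    """Index charted records by clinical phase for narrative synthesis."""
    grouped = defaultdict(list)
    for record in records:
        grouped[record.phase].append(record)
    return dict(grouped)


# Placeholder rows only; these are NOT studies from the review.
records = [
    ChartingRecord("example-A", "pre-treatment", "rule-based chatbot",
                   "placeholder setting", "placeholder objective",
                   "placeholder finding", ["wait time"], ["single site"]),
    ChartingRecord("example-B", "treatment", "LLM",
                   "placeholder setting", "placeholder objective",
                   "placeholder finding", ["symptom change"], ["small sample"]),
    ChartingRecord("example-C", "pre-treatment", "ML model",
                   "placeholder setting", "placeholder objective",
                   "placeholder finding"),
]

by_phase = group_by_phase(records)
for phase, rows in by_phase.items():
    print(phase, [row.study_id for row in rows])
```

Keeping outcomes and limitations as lists lets a single study contribute several entries to each, which suits the overlapping categories described in the Results.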

3. Results

3.1. Summary of Reviewed Results

The review included 36 articles that reported the perceptions of users, clinical students, and mental health professionals toward AI applications. All 36 articles also evaluated the efficacy of specific aspects of AI applications. To organize the reviewed studies, we classified them by the primary AI technologies employed. The technologies identified across the studies include AI chatbots, conversational agents, natural language processing (NLP) tools, large language models (LLMs), machine learning (ML) models, deep learning (DL) models, and AI-based prediction systems. Because these categories overlap considerably and many studies employed hybrid approaches or combinations of technologies, we report general trends and key examples rather than the exact number of studies per category. Table 1 lists the technologies mentioned in the articles.

Table 1. AI modalities categories.

This classification directly supports RQ1, helping us map which AI modalities power interventions across different mental health phases. In the following Results sections, we unpack these mappings phase by phase, for example, noting the prominence of rule-based chatbots in screening, NLP agents in empathic support, ML/DL models in post-treatment risk assessment, and emerging LLM agents in multi-turn counseling.

The reviewed studies reported applications across multiple clinical phases (RQ2): pre-treatment (screening and triage), treatment (therapeutic support), post-treatment (monitoring and follow-up), general mental health support and prevention, and clinical education. Functions included assessment, diagnosis, patient monitoring, treatment outcome prediction, mental health counseling or therapy, clinical decision support, and general mental health assistance. Many studies covered multiple functions, which we discuss in detail in the Results subsection “Key Findings in the Applications in Mental Health Care” by linking these functions to observed clinical outcomes and implementation challenges (see Table 2).

Table 2. Clinical phases of AI deployment in mental health care. Functional roles of AI in mental health interventions.

3.2. Analysis and Synthesis of the Results

The results of the included studies were synthesized using a narrative synthesis approach. Given the expected heterogeneity in study designs and objectives, this method allows for the identification of overarching themes, discussion of patterns and discrepancies, and a nuanced understanding of the current landscape and potential future directions of conversational AI applications in mental health care (see Table 3).

Table 3. Descriptive table of scoping reviews.

Key Findings in AI Technologies in Mental Health Care

This section directly addresses RQ1 by cataloguing the primary AI modalities (rule-based chatbots, traditional NLP agents, ML/DL predictive models, and LLM-based agents) and summarizing the key empirical outcomes and limitations reported for each class.

3.3. Applying Natural Language Processing

Applications built on natural language processing (NLP), machine learning (ML), and deep learning (DL) strengthen clinical services and treatments and make mental health services more accessible [25,35]. NLP, applied mainly through chatbots and AI agents, is extensively employed to enhance the interactivity and efficacy of conversational agents that help deliver mental health care [25,37,40]. Many research-oriented AI-driven chatbots or agents, such as “Hailey”, “MYLO”, and “Limbic Access”, use NLP algorithms to interpret human language and to initiate, respond to, and sustain meaningful conversations about users’ well-being. Conversations supported by NLP techniques, such as emotion detection and sentiment analysis, can help provide mental health support and deliver computerized therapies [29,36].

Because NLP can engage users through text and voice, mental health interventions can be delivered without constraints of time or place. Many AI-based chatbots leveraged NLP to provide mental health support through conversations [23,40]. For example, an AI-enhanced cognitive behavioral therapy (CBT) chatbot named Hailey used NLP to analyze user text inputs, detect emotions, and train users to give empathic responses that facilitate peer-to-peer communication, and an agent called TEO used similar techniques to support stress reduction [34,48].

Moreover, preliminary research on ChatGPT highlighted the potential of LLM-based conversational AI to expand access to mental health services, owing to its strong capabilities in understanding human language. Reported strengths include identifying emotions, tailoring interventions for conditions such as borderline personality disorder (BPD) and sensory processing disorder (SPD), and offering advice that aligns with primary health care guidelines for depression. Despite these opportunities, some NLP models in psychiatry still demonstrated significant biases related to religion, race, gender, nationality, sexuality, and age, which highlights the need for further refinement of the technology [56].

3.4. Applying Machine Learning

Machine learning (ML) has proven to be a powerful tool in predicting mental health outcomes and enhancing diagnostic accuracy, ultimately aiming to improve treatment efficiency and recovery outcomes. By leveraging predictive analytics and classification models, ML provides clinicians with valuable decision-support systems and enables the creation of personalized treatment plans for clients [24,57]. For example, machine learning models, including logistic regression, ridge regression, and LASSO regression, have been used to develop AI-based assessment tools that can accurately predict mental disorders based on responses to a mental health assessment tool like the SCL-90-R [32]. This tool can diagnose mental disorders with an impressive accuracy of 89% using just 28 questions. Regarding remote patient monitoring, ML algorithms analyze data from devices monitoring vital signs and physical activity to identify subtle changes indicative of worsening mental health conditions, such as depression or anxiety [24,36]. This capability is crucial for timely intervention in these conditions, where even minor changes can be serious indicators of patient distress.
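For readers less familiar with the three model families named above, they can be written as a single penalized objective. This is the standard textbook formulation, given here on the assumption that the ridge and LASSO penalties are applied to a logistic (classification) loss; the cited study [32] may differ in detail. The symbols are introduced for illustration only: $x_i$ is the vector of item responses for respondent $i$, $y_i \in \{0,1\}$ is the diagnostic label, $\beta_0$ and $\beta$ are the intercept and item weights, and $\lambda \ge 0$ is the penalty strength.

$$
(\hat{\beta}_0, \hat{\beta}) = \arg\min_{\beta_0,\,\beta}\; -\frac{1}{n}\sum_{i=1}^{n}\Big[\, y_i \log p_i + (1-y_i)\log(1-p_i) \Big] + \lambda\, P(\beta),
\qquad
p_i = \frac{1}{1+e^{-(\beta_0 + x_i^{\top}\beta)}}
$$

$$
P(\beta) = 0 \;\;\text{(logistic regression)}, \qquad
P(\beta) = \lVert \beta \rVert_2^2 \;\;\text{(ridge)}, \qquad
P(\beta) = \lVert \beta \rVert_1 \;\;\text{(LASSO)}
$$

Because the $\ell_1$ penalty can set individual weights exactly to zero, LASSO-type models are one common way to shorten a long questionnaire to a smaller item set. That property is consistent with the 28-question tool described above, although the text does not state how its items were selected.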

ML has also been applied to optimize operational efficiency in mental health services. For instance, it has been used to improve caller-counselor matching in a mental health contact center, leading to more efficient use of resources and higher quality of service [51]. Furthermore, in larger health care organizations, ML algorithms have been used to streamline patient flow, which indirectly contributes to the improvement of mental health care [57]. In therapeutic settings, ML offers valuable insights into the efficacy of interventions on an individual basis. By analyzing transcripts of therapy sessions, patient feedback, and progress over time, ML models can assist therapists in personalizing their approaches to meet the needs of each patient [22,31]. This ability to tailor treatment plans based on data-driven insights has the potential to enhance the effectiveness of mental health interventions significantly.

3.5. Applying Deep Learning

Deep learning (DL) algorithms, a subset of machine learning, learn complex patterns directly from data, enabling applications such as real-time emotional state monitoring and predictive analytics for treatment outcomes. DL contributes to improving internet-delivered cognitive behavioral therapy (iCBT) and art psychotherapy by customizing mental health treatments to individual needs [31,43]. In iCBT, DL algorithms, including recurrent neural networks (RNNs), are employed to analyze anonymous patient data [31]. They detect patterns that forecast treatment outcomes, assisting in the identification of mental health issues and the customization of therapy for more effective and personalized interventions [31,35].

Similarly, in art psychotherapy, DL models with co-attention mechanisms are changing how art therapy is evaluated [43]. These models assess stress and mood levels based on multiple data points, reflecting DL’s capacity to interpret complex emotional expressions and provide insights that align closely with therapeutic goals [42,43]. Through these advancements, DL serves not only as a technological tool but also as a bridge to compassionate, precise, and personalized mental health care. Having mapped the core AI modalities above, we now turn to how these tools (chatbots, predictive models, and LLMs) are deployed at each phase of mental health care.

Key Findings in the Applications in Mental Health Care

Here, we answer RQ2 by showing how those same AI modalities are deployed across the five clinical phases (pre-treatment, treatment, post-treatment monitoring, clinical education, and prevention), highlighting which technologies are most prevalent in each phase and where critical gaps remain.

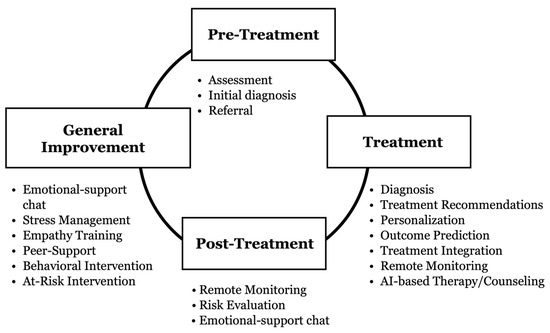

The review identified various applications of AI in mental health care that cater to diverse users, ranging from patients and clinicians to the general public and psychology students. These applications serve different purposes, including providing computerized therapies to patients, offering early-stage mental health support, assisting clinicians with diagnosis and treatment, and enhancing learning for psychology students. Overall, the studies showcased the positive impact of AI technology in improving mental health care and emphasized the significant potential of these applications to revolutionize the industry. Interestingly, most of the studies focused on how these AI-powered applications can complement and enhance the existing services provided by clinicians rather than replacing them. Figure 2 illustrates the four pillars of AI applications in mental health care, demonstrating their extensive utilization across various stages of support and treatment. The four pillars encompass four key stages: pre-treatment, treatment, post-treatment, and general improvement and prevention. Artificial intelligence is leveraged throughout these stages to enhance various aspects of mental health services, creating a continuous source for optimized care and support. In the pre-treatment phase, AI expedites assessment, facilitates initial diagnosis, and aids in referral. During treatment, AI refines diagnoses, personalizes treatment plans, predicts outcomes, and delivers AI-based therapeutic interventions. Post-treatment involves leveraging AI for remote monitoring and risk evaluation. The general improvement and prevention stage focuses on providing proactive mental health support to the broader population through AI-enabled resources.

Figure 2. Four pillars framework where AI-driven interventions are deployed.

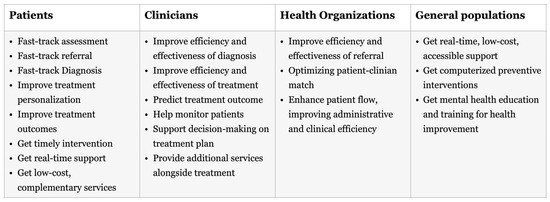

Throughout the four pillars framework, AI technologies benefit multiple stakeholders (see Figure 3). Patients gain access to fast-track services and more effective, personalized interventions. Clinicians can make enhanced, data-driven decisions. Health organizations improve efficiency and treatment efficacy. The general public benefits from increased access to low-cost, AI-powered mental health resources. This integrated model showcases the vast potential of AI in revolutionizing mental health care. In the following sections, we turn to the existing literature to explore examples of AI implementation within each stage of the model.

Figure 3. Benefits of AI technologies for multiple stakeholders in health care.

3.6. Applications in the Pre-Treatment Stage

First, the AI chatbot “Limbic Access” has shown great promise in the pre-treatment stage of mental health care. This tool, developed by Shaik et al. [24] and further refined by Rollwage et al. [39], helps clinicians assess patients and refer them to appropriate services.

One key feature of Limbic Access is its AI self-referral tool. This user-friendly tool collects important information from patients applying for the UK’s National Health Service (NHS) Talking Therapy program. It asks about the patient’s symptoms using standardized questionnaires like the Patient Health Questionnaire-9 (PHQ-9) and the Generalized Anxiety Disorder Assessment-7 (GAD-7). The tool also gathers demographic details and other clinical information. By automating this intake process, Limbic Access makes it faster and easier for clinicians to assess patients and get them started on the right track.
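As an illustration of what automated intake scoring involves, the sketch below sums PHQ-9 and GAD-7 item responses and maps each total to its conventional published severity band. It is a minimal example under stated assumptions, not a description of how Limbic Access works internally: the function names are hypothetical, the example responses are invented, and any service-specific clinical thresholds are outside its scope.

```python
# Conventional severity bands: (upper bound of total score, label).
PHQ9_BANDS = [(4, "minimal"), (9, "mild"), (14, "moderate"),
              (19, "moderately severe"), (27, "severe")]
GAD7_BANDS = [(4, "minimal"), (9, "mild"), (14, "moderate"), (21, "severe")]


def score_questionnaire(responses, n_items, bands):
    """Sum item responses (each scored 0 to 3) and map the total to a band."""
    if len(responses) != n_items:
        raise ValueError(f"expected {n_items} responses, got {len(responses)}")
    if any(r not in (0, 1, 2, 3) for r in responses):
        raise ValueError("each response must be 0, 1, 2, or 3")
    total = sum(responses)
    for upper, label in bands:
        if total <= upper:
            return total, label
    raise AssertionError("unreachable: total exceeds the maximum score")


def score_phq9(responses):
    """PHQ-9: nine items, total score 0 to 27."""
    return score_questionnaire(responses, 9, PHQ9_BANDS)


def score_gad7(responses):
    """GAD-7: seven items, total score 0 to 21."""
    return score_questionnaire(responses, 7, GAD7_BANDS)


# Hypothetical responses, for illustration only.
print(score_phq9([1, 2, 1, 2, 1, 1, 2, 1, 0]))  # (11, 'moderate')
print(score_gad7([1, 1, 0, 1, 1, 0, 1]))        # (5, 'mild')
```

Validating the number and range of responses before scoring matters in a self-referral setting, where incomplete submissions would otherwise produce misleadingly low totals.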

Rollwage et al. evaluated the impact of Limbic Access by comparing outcomes from patients who used the AI tool with those who followed traditional assessment methods [39]. The results were promising. Patients who used Limbic Access waited less time for both clinical assessment and treatment. They were also less likely to drop out of the program and more likely to recover overall. As a result, Limbic Access has proven to be a valuable asset in the pre-treatment stage, streamlining the assessment process and improving patient outcomes.

3.7. Applications in the Treatment and the Post-Treatment Stage

The effectiveness of AI in assisting the treatment process is highlighted by its ability to aid in the detection and diagnosis of conditions, predict treatment outcomes, and monitor patient progress [32,36,41,43,53]. AI systems have achieved high accuracy in diagnosing mental health disorders and predicting stress levels from art therapy tests [32,43,53].

AI also enhances treatment outcome predictions, leading to better personalization and planning [24,31]. By analyzing non-invasive digital data, identifying patterns in mental health symptoms, and considering past preferences and ratings, AI provides strong support for clinicians to deliver timely and personalized interventions [22,24,31]. The accuracy of AI in predicting outcomes and the continuous improvement of algorithms demonstrate its effectiveness in personalizing treatment and improving outcomes [22,26,35,47]. In terms of clinical knowledge, ChatGPT exhibits high capabilities in providing treatment recommendations and aligning well with clinical guidelines [47]. AI-enabled Remote Patient Monitoring (RPM) systems support clinicians in delivering timely interventions, enhancing patient safety, and preventing incidents like self-harm [24].
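The idea of flagging change from passively collected data can be illustrated with a deliberately simple baseline comparison. The sketch below is a statistical stand-in, not one of the ML models evaluated in the cited studies: the function name, the 14-day window, the z-score threshold of 2.0, and the step-count series are all assumptions chosen for illustration.

```python
from statistics import mean, stdev


def flag_deviations(values, baseline_days=14, z_threshold=2.0):
    """Return indices of days that deviate from the preceding baseline window.

    Each day is compared with the mean and standard deviation of the
    `baseline_days` observations immediately before it.
    """
    flagged = []
    for day in range(baseline_days, len(values)):
        window = values[day - baseline_days:day]
        mu, sigma = mean(window), stdev(window)
        if sigma == 0:
            continue  # no variability in the baseline; z-score is undefined
        z = (values[day] - mu) / sigma
        if abs(z) >= z_threshold:
            flagged.append(day)
    return flagged


# Synthetic daily step counts: a stable fortnight, then a sharp drop.
steps = [8000, 8200, 7900, 8100, 8050, 7950, 8150,
         8000, 8100, 7900, 8200, 8050, 7950, 8100,
         8000, 3000, 2800]

print(flag_deviations(steps))  # [15, 16]
```

In practice, a flag of this kind would prompt clinician review rather than trigger an automated response, in line with the emphasis on human oversight elsewhere in this review.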

AI agents such as Tess and MYLO have been reported to deliver therapy and counseling services comparable to those of human clinicians, showing moderate to high efficacy in reducing symptoms, improving health, and increasing user satisfaction [25,31,33,36,40,48,50,55]. These agents are trained to use emotions and empathy to provide high-quality services, and users report increased satisfaction when these agents disclose emotions [33,44,50,55]. However, users still prefer human responses over AI empathy [33,44].

Research has explored how AI agents’ emotions, empathy, and personalities impact intervention outcomes and satisfaction. While some aspects have positive effects, such as driving user engagement, there is room for improvement in advancing models and algorithms to better meet the high demand for counseling [33,44]. AI agents demonstrate flexibility in format, offering services through text, chat, videos, and even virtual reality [25,36,51]. They can also support specific mental health therapies such as CBT, behavioral activation (BA), problem-solving therapy, and problem-solving treatment [31,36,48,54]. Implementing BA in a chatbot has shown improvements in mood levels [36].

Since therapies can be standardized, AI offers opportunities to automate services and make them widely accessible through digital applications. However, challenges exist, such as risk assessment and ensuring timely human intervention for individuals in crisis [22]. Additionally, some AI systems, like ChatGPT, discontinue interventions for high-risk conditions and cannot provide effective referrals [52]. This highlights the need for human monitoring of AI-based interventions to identify high risks and establish systems for timely referrals and crisis interventions [22].

3.8. Applications in General Support, Improvement, and Prevention

Beyond the treatment period, AI provides ongoing support to clients and offers general mental health resources for improvement and prevention [23,37]. Numerous AI chatbots and platforms are designed to provide accessible, cost-effective, and scalable mental health support outside of the clinical setting [23,34,36,37,40,41,44,50].

Chatbots can identify human emotions and sentiments and assess psychological conditions to generate individualized support, with studies showing high accuracy in measuring users’ psychological states [29,35]. AI agents have demonstrated significantly higher emotional awareness than human norms and can provide useful interventions for low- and middle-risk conditions while maintaining safety [46,52]. For example, use of Tess, a mental health support chatbot, was associated with significant reductions in symptoms of depression and anxiety among university students, along with higher engagement and satisfaction than in a control group [40]. Another chatbot, HAILEY, focused on improving users’ empathetic abilities and produced substantial increases in users’ empathetic responses [25].

Research is ongoing to enhance AI’s models, algorithms, and product design to deliver mental health services better [23,29]. For instance, ChatCounselor developed a fine-tuned model by analyzing real counseling data and established quality benchmarks, outperforming all open-source models [23]. These advancements present exciting opportunities to support the mental health of many populations, particularly those without access to traditional clinical care. For example, an Israeli mental health hotline applied AI to provide daily support to various populations [51].

3.9. Clinical Education

AI has proven its potential to support mental health care by aiding in the education of professionals. A study surveyed clinical psychology students from a Swiss university and found that students recognized the growing importance of AI in mental health care and expressed a significant interest in and need for instruction on AI in their clinical training [38].

A descriptive study of mental health professionals emphasized the need to adopt AI in practice and highlighted the importance of educational initiatives for broader adoption [40]. Another study explored the feasibility of using ChatGPT to simulate client scenarios and help clinical students practice their counseling skills [45]. While ChatGPT has limitations, such as a lack of non-verbal cues or overly idealized situations, it demonstrated capabilities in displaying authenticity, consistency, appropriate emotional expression, cultural sensitivity, and empathy in simulations, presenting its effectiveness for clinical training [45].

ChatGPT’s emotional awareness in relation to psychotic symptoms suggested that it is feasible as a research tool for understanding emotional experiences in psychopathology [26]. Furthermore, ChatGPT’s reported ability to provide unbiased treatment recommendations consistent with clinical guidelines could support clinical students in their education [46].

4. Discussion

Unlike recent systematic reviews focused exclusively on generative AI or ethical risks, our mapping spans rule-based chatbots through LLMs and charts empirical outcomes across five clinical phases. The review shows that AI applications differ in efficacy and potential, and it lays out their varying levels of effectiveness and applicability.

4.1. AI Modalities Applied Across Phases

First, AI is widely used as an emotional support agent and clinical assistant, most often delivered through chatbots [14,25,36]. Many applications offering baseline emotional support and clinical assistance reported high efficacy and involved the lowest level of risk; thus, they can be deployed most quickly and widely [58]. Emotional support chatbots are therefore likely to remain a core focus for researchers in the short term, and AI adoption can begin by assisting clinical work before the treatment stage. Second, applications with relatively high efficacy and potential mainly support clinical work in real clinical settings. Here, AI showed high potential to improve treatment outcomes by personalizing treatment, providing treatment recommendations and diagnoses, and predicting treatment outcomes [21,22,29]. Third, AI can deliver parts of therapy or counselling sessions, automating elements of treatment. Using AI to offer therapy or counselling directly could help address the limited accessibility of mental health care [43,59,60]. However, significant risks and ethical concerns make this layer hard to realize in the short term [34,40,41,42,52]. Models and algorithms will need to advance to improve accuracy and reduce bias, and pilot tests and clinical trials should accompany the development and iteration processes.

4.2. AI-Clinician Collaboration

AI applications are perceived as supporters of human clinicians rather than replacements, and studies report that they can effectively support clinicians’ work [32,42,43,47,57]. A pivotal question in deploying AI in mental health care concerns how work is divided between AI and human clinicians and how the two are integrated, which could largely shape the field’s future direction. This issue raises three main questions for debate. The first concerns the respective responsibilities of AI and human clinicians. The second addresses how human clinicians integrate AI into their existing workflow and the potential impact of doing so. The third concerns the ethical and regulatory obligations of humans to oversee AI’s activities. Regarding the first question, AI is currently viewed as a complement to human clinicians’ existing work, although it may eventually take over parts of that work, including diagnosis and the delivery of therapy [24,29]. At the current stage, few view AI as a standalone method because of the risks associated with its limited accuracy; instead, AI serves as a tool that boosts clinicians’ efficiency [21,24,27,31,32,34,36].

The second question focuses on the integration of AI into clinical services. Integration will depend on organizational adaptability and will face concerns from various stakeholders, such as clinicians, management, board members, shareholders, and patients [61,62]. For example, if human clinicians strongly resist adopting specific AI applications, integration will not go smoothly. Management and boards might reject integration if they see the AI transformation as highly risky and likely to cause public concern. To establish organizational readiness for AI adoption, it is crucial to provide AI literacy and application training, conduct pilot tests to demonstrate benefits, and hold organizational meetings to discuss concerns. At this stage, there is no universally recognized way to integrate AI into clinical settings, and progress will largely depend on how proactively organizations lead change. Detailed standards are likely to develop as industry organizations, academic associations, and policy authorities provide compliance guidelines.

The third question addresses the responsibility for overseeing AI’s risks and problems. Currently, AI alone cannot be held accountable, so human clinicians and organizations are responsible for overseeing any risks and problems caused by AI. This increases clinicians’ workload and requires a higher level of technological literacy. When organizations deploy AI applications in clinical settings, they should provide comprehensive training to clinicians and develop a scheme for monitoring and accountability management [34,40,41,42,52]. As AI becomes more accurate and reliable, the oversight burden may decrease. In the short term, however, clear definitions of accountability will be needed.

4.3. Strengths, Weaknesses, Opportunities, and Threats

This section directly addresses RQ3 by synthesizing the key strengths, weaknesses, opportunities, and threats of AI-driven digital interventions as reported across our 36 included studies. AI technologies have several key strengths that hold promise for mental health care. First, they can increase access to services by overcoming economic, geographical, and logistical barriers. Chatbots like Limbic Access can provide first-line support to anyone with an internet connection, democratizing access to mental health resources [21]. AI-powered chatbots also offer personalized experiences, elevating user satisfaction in counseling [33]. Some conversational agents, like HAILEY, are designed to foster empathy in peer support, potentially creating meaningful connections for users [34]. From an analytical standpoint, machine learning (ML) can uncover insights from large mental health datasets, and ML models are being used to predict treatment outcomes in internet-based therapies [31]. Finally, large language models like ChatCounselor, fine-tuned with actual counseling data, present a scalable path for providing support to a large number of users simultaneously [23].

Despite these strengths, AI technologies also have several weaknesses. One of the most pressing concerns is privacy and security. AI chatbots hold sensitive patient information, and their implementation poses data security challenges [39]. There is also a risk of misinterpretation by AI models, highlighting the need for human oversight to prevent errors [63]. Questions remain about whether AI tools truly enhance assessment efficiency without compromising diagnostic precision [64]. Similarly, whether AI agents like HAILEY can genuinely foster empathy, and whether chatbots with certain personalities can effectively engage users, is still under debate [28,34]. Technically, current AI is still far from effectively recognizing mental disorders, which significantly limits its implementation [63].

The field of AI in mental health is rapidly evolving, presenting several opportunities for growth and improvement. AI prediction modelling could refine patient monitoring, enabling earlier interventions and better health outcomes [63]. There is optimism about large language models creating novel, large-scale mental health solutions [65]. For instance, researchers are developing interpretable mental health analysis tasks using generative models like LLMs [63]. Collaboration between stakeholders, including patients and AI researchers, is crucial for human-centered mental health AI research, presenting an opportunity to develop tools that meet real user needs [65].

Despite these opportunities, several threats must be addressed to realize the potential of AI in mental health. Algorithmic bias could exacerbate existing health disparities, undermining the equity of AI-powered mental health tools [66]. The need to regulate and monitor AI-based mental health apps is pressing, and navigating these challenges while harnessing AI’s strengths is crucial for its successful integration into care. From a technical standpoint, the need for ongoing model enhancement to prevent accuracy decline is clear [66]. The field of ML in mental health is rapidly evolving and requires ongoing assessment to ensure tools remain effective and safe [63,66]. Failure to address these threats could hinder the adoption of AI technologies in mental health care.

4.4. Advancing Mental Health Prevention and Improvement

In responding to RQ4, we outline which AI modalities and applications show the greatest maturity for population-level prevention and ongoing support, and we set the stage for the following section, Policy Implications, which further elaborates the strategic priorities and barriers identified.

Many AI applications have high potential to advance mental health prevention and improvement. Prevention is one of the biggest opportunities AI brings because AI can deliver interventional services to very large populations through emotional support chatbots, behavioral intervention apps, and peer support platforms [23,36,65]. These services have been reported to improve mood and reduce stress [23,34,36,37,40,41,44,50]. AI’s capacity to analyze large datasets can uncover previously hidden patterns and risk factors, allowing for more individualized and efficient early intervention strategies [24,32]. In addition, AI-based predictive analytics can make screening more effective and less invasive, thus increasing the number of people who take part in preventive measures [39,57]. Combining AI and mobile health apps with wearable devices provides opportunities for continuous monitoring and real-time personalized feedback, thus improving preventive care [67]. With these low-cost, widely deployable AI applications, mental health prevention can be conducted at a population level and adapted to a specific population’s needs. If AI applications can reduce the number of patients with severe symptoms, the demand on traditional mental health facilities could fall, benefiting mental health care systems globally.

Government agencies could deploy AI applications for prevention and improvement work among at-risk populations, such as students, the elderly, and people with substance use problems, to reduce the number of people at risk [68,69]. Organizations like schools and local communities can develop and implement AI-enabled intervention programs that meet the specific challenges of each demographic. For example, AI can provide personalized learning and coping mechanisms for students under academic and social pressure, support programs that help the elderly counter loneliness and cognitive decline, and relapse prevention tools for people with substance use problems. Integrating AI tools into current health, educational, and social welfare systems enables these programs to provide adaptive support and interventions in real time [70]. This approach is directed not only at immediate risk reduction but also at building long-term mental resilience, which could reduce general mental health problems and support a move toward a more preventive mental health care paradigm. The following policy section builds on these findings to outline how regulatory frameworks can support the safe and equitable implementation of AI innovations.

4.5. Policy Implications

A contemporary policy architecture for AI in mental health care must protect service users while still allowing technology to evolve at pace. This balance can be struck by weaving together three strands (patient safety, innovation incentives, and bias mitigation) into a single, adaptive regulatory fabric. First, regulators must insist on secure data handling, algorithmic transparency, routine clinical validation, and clear lines of human oversight so that new tools do not erode public trust or amplify harm. Second, they should create pro-innovation pathways, such as regulatory sandboxes, adaptive licensing, and rapid ethics review, that give researchers and companies room to experiment without waiting for blanket legislation to catch up. Third, every approval process must include explicit checks for demographic performance and inclusive training data so that diagnostic or triage models do not systematically disadvantage minority groups. Recent national road maps show what this regulation-for-innovation ecosystem looks like in practice. New Zealand’s Digital Mental Health and Addiction Service Evaluation Framework (DMHAS) advocates a co-regulatory model in which authorities define outcome standards while innovators choose the technical means to meet them, employing instruments that range from voluntary codes and accreditation seals to binding legislation, each calibrated to the level of risk and the speed of technological change [71]. In a similar vein, the Mental Health Commission of Canada urges policymakers to evaluate AI systems at three translational checkpoints: whether an algorithm that performs well in silico retains its accuracy with real patients, whether it integrates into clinical workflows without adding cognitive burden for clinicians, and whether it scales equitably across regions and demographic groups [72]. Together, these examples suggest a constructive path forward.

Policies should facilitate the rapid yet evidence-based migration of laboratory prototypes to bedside tools, provide clinicians with clear operational guidance so that AI augments rather than complicates practice, and embed continuous outcome monitoring so that accountability keeps pace with innovation. A progressive, layered framework, neither technology-neutral nor technology-prescriptive, can therefore foster creativity, safeguard patients, and ensure that AI becomes a feasible, trustworthy ally in mental health services.
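
As a purely illustrative aid, the demographic performance check described above could be sketched as follows. This is a minimal example under stated assumptions, not a method drawn from any included study or regulatory framework: the function names (recall_by_group, flag_disparities), the group labels, the toy records, and the 0.10 gap threshold are all hypothetical. It compares the sensitivity (recall) of a binary triage model across demographic groups and flags groups that fall well below the best-performing group.

```python
from collections import defaultdict


def recall_by_group(records):
    """Compute sensitivity (recall) per demographic group.

    records: iterable of (group, true_label, predicted_label) with 0/1 labels.
    Groups with no positive cases are omitted from the result.
    """
    true_positives = defaultdict(int)
    positives = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                true_positives[group] += 1
    return {g: true_positives[g] / positives[g] for g in positives}


def flag_disparities(recalls, max_gap=0.10):
    """Return groups whose recall trails the best group by more than max_gap.

    The 0.10 default is an arbitrary illustrative threshold, not a standard.
    """
    best = max(recalls.values())
    return sorted(g for g, r in recalls.items() if best - r > max_gap)


# Hypothetical toy data: (group, true need for referral, model prediction).
records = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 1),
    ("group_a", 1, 0), ("group_a", 0, 0),
    ("group_b", 1, 1), ("group_b", 1, 0), ("group_b", 1, 0),
    ("group_b", 1, 0), ("group_b", 0, 1),
]

recalls = recall_by_group(records)
flagged = flag_disparities(recalls)
print(recalls)
print(flagged)
```

In practice, an approval process would likely examine several metrics (for example, false-positive rates and calibration) on much larger, representative samples; sensitivity alone is used here only to keep the sketch short.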

4.6. Limitations

This review is subject to several constraints, and its findings should be interpreted with caution. First, AI for mental health is a fast-moving field; studies released after our January 2024 cutoff, including work on the latest large language model generations, are not reflected here. Additionally, the search strategy may not have captured all relevant literature. We limited our search to English-language publications, so relevant studies in other databases or non-English sources may have been overlooked.

Second, the heterogeneity of study designs, intervention types, outcome measures, and reporting practices precluded a formal risk-of-bias appraisal and any quantitative synthesis. We opted for a narrative, chart-based approach to preserve breadth, but this means we cannot provide pooled effect sizes or graded levels of evidence. The first author screened and extracted the data, and the assignment of each paper to one of the clinical phases required subjective judgment, introducing a possibility of selection or interpretation bias.

Third, many included studies examined prototypes or pilot deployments in controlled or single-site settings, often with convenience samples. Real-world implementation factors, such as integration into existing clinical workflows, sustainability, and user diversity, were rarely reported in depth. Consequently, the generalizability of reported benefits to routine practice remains uncertain, and future research should prioritize multi-site evaluations, transparent reporting of model development, and rigorous assessments of ethical, privacy, and equity impacts.

Finally, as a scoping review, this study aimed to map the landscape rather than critically appraise study quality. Some included studies may have been preliminary investigations, used correlational designs, or relied on small sample sizes, and no formal quality assessment or risk-of-bias evaluation was conducted. Therefore, findings should be interpreted with caution, and future systematic reviews should incorporate structured quality appraisal to evaluate the robustness and reliability of the evidence base.

5. Conclusions

This scoping review synthesized findings from 36 studies on artificial intelligence-driven digital interventions across screening, treatment, monitoring, clinical education, and prevention in mental health care. Our mapping of chatbots, natural language processing, machine learning, deep learning, and large language models highlighted growing evidence of artificial intelligence’s contributions to expanding access, enhancing symptom monitoring, and supporting personalized interventions. At the same time, challenges such as algorithmic bias, data privacy risks, and integration barriers underscore the need for ethical design, transparent model development, and human oversight.

While previous review studies have provided valuable insights into specific aspects of AI in mental health, such as diagnostic applications of ChatGPT, ethical and regulatory discussions, or evaluations of chatbots for anxiety treatment [73,74,75,76,77,78,79,80], these have often focused on single technologies, clinical phases, or conditions. In contrast, the current scoping review offers a broader synthesis by mapping multiple AI modalities across five clinical phases. By linking conceptual insights with empirical outcomes, this review complements and extends prior work, providing an integrated reference for research, practice, and policy.

Future research should prioritize multi-site evaluations, longitudinal studies in diverse populations, and rigorous assessments of safety, privacy, and equity impacts. Collaboration among clinicians, artificial intelligence developers, policymakers, and patients will be essential to ensure artificial intelligence systems are clinically effective, ethically sound, and socially equitable. By offering an empirical map of artificial intelligence applications across the mental health care continuum, this review provides a foundation for guiding research, practice, and policy toward responsible integration of artificial intelligence in mental health services.

Author Contributions

Conceptualization, Y.N. and F.J.; Methodology, Y.N.; Formal analysis, Y.N.; Data curation, Y.N.; Writing—original draft preparation, Y.N.; Writing—review and editing, Y.N. and F.J.; Visualization, Y.N.; Resources, F.J.; Supervision, F.J. All authors have read and agreed to the published version of the manuscript.

Funding

Support for this project was provided to the first author by Symbiotic Future AI (Shanghai) and to the second author by the AI Academy at Seton Hall University.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing is not applicable to this article as no datasets were generated or analyzed during the current study.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Coombs, N.C.; Meriwether, W.E.; Caringi, J.; Newcomer, S.R. Barriers to healthcare access among U.S. adults with mental health challenges: A population-based study. SSM Popul. Health 2021, 15, 100847. [Google Scholar] [CrossRef] [PubMed]

- Hidaka, B.H. Depression as a disease of modernity: Explanations for increasing prevalence. J. Affect. Disord. 2012, 140, 205–214. [Google Scholar] [CrossRef]

- Uutela, A. Economic crisis and mental health. Curr. Opin. Psychiatry 2010, 23, 127–130. [Google Scholar] [CrossRef]

- Torous, J.; Myrick, K.J.; Rauseo-Ricupero, N.; Firth, J. Digital mental health and COVID-19: Using technology today to accelerate the curve on access and quality tomorrow. JMIR Ment. Health 2020, 7, e18848. [Google Scholar] [CrossRef]

- Arean, P.A. Here to stay: Digital mental health in a post-pandemic world—Looking at the past, present, and future of teletherapy and telepsychiatry. Technol. Mind Behav. 2021, 2, e00073. [Google Scholar]

- Friis-Healy, E.A.; Nagy, G.A.; Kollins, S.H. It is time to REACT: Opportunities for digital mental-health apps to reduce mental-health disparities in racially and ethnically minoritized groups. JMIR Ment. Health 2021, 8, e25456. [Google Scholar] [CrossRef]

- Prescott, M.R.; Sagui-Henson, S.J.; Chamberlain, C.E.W.; Sweet, C.C.; Altman, M. Real-world effectiveness of digital mental-health services during the COVID-19 pandemic. PLoS ONE 2022, 17, e0272162. [Google Scholar] [CrossRef]

- Wu, T.; He, S.; Liu, J.; Sun, S.; Liu, K.; Han, Q.L.; Tang, Y. A brief overview of ChatGPT: History, status-quo and potential future development. IEEE/CAA J. Autom. Sin. 2023, 10, 1122–1136. [Google Scholar] [CrossRef]

- Lattie, E.G.; Stiles-Shields, C.; Graham, A.K. An overview of and recommendations for more accessible digital mental-health services. Nat. Rev. Psychol. 2022, 1, 87–100. [Google Scholar] [CrossRef]

- Adeshola, I.; Adepoju, A.P. The opportunities and challenges of ChatGPT in education. Interact. Learn. Environ. 2023, 32, 6159–6172. [Google Scholar] [CrossRef]

- Biswas, S.S. Role of ChatGPT in public health. Ann. Biomed. Eng. 2023, 51, 868–869. [Google Scholar] [CrossRef] [PubMed]

- D’Alfonso, S. AI in mental health. Curr. Opin. Psychol. 2020, 36, 112–117. [Google Scholar] [CrossRef] [PubMed]

- Su, S.; Wang, Y.; Jiang, W.; Zhao, W.; Gao, R.; Wu, Y.; Tao, J.; Su, Y.; Zhang, J.; Li, K.; et al. Efficacy of Artificial Intelligence-assisted psychotherapy in patients with anxiety disorders: A prospective, national multicentre randomized controlled trial protocol. Front. Psychiatry 2022, 12, 799917. [Google Scholar] [CrossRef] [PubMed]

- Sedlakova, J.; Trachsel, M. Conversational artificial intelligence in psychotherapy: A new therapeutic tool or agent? Am. J. Bioeth. 2022, 23, 4–13. [Google Scholar] [CrossRef]

- Henson, P.; Wisniewski, H.; Hollis, C.; Keshavan, M.; Torous, J. Digital mental-health apps and the therapeutic alliance: Initial review. BJPsych Open 2019, 5, e15. [Google Scholar] [CrossRef]

- Vilaza, G.N.; McCashin, D. Is the automation of digital mental health ethical? Front. Digit. Health 2021, 3, 689736. [Google Scholar] [CrossRef]

- Roumeliotis, K.I.; Tselikas, N.D. ChatGPT and Open-AI models: A preliminary review. Future Internet 2023, 15, 192. [Google Scholar] [CrossRef]

- Adamopoulou, E.; Moussiades, L. An overview of chatbot technology. In Artificial Intelligence Applications and Innovations: AIAI 2019; Springer: Cham, Switzerland, 2020; pp. 261–280. [Google Scholar]

- Bayani, A.; Ayotte, A.; Nikiema, J.N. Transformer-based tool for automated fact-checking of online health information: Development study. JMIR Infodemiol. 2025, 5, e56831. [Google Scholar] [CrossRef]

- Hang, C.N.; Yu, P.D.; Chen, S.; Tan, C.W.; Chen, G. MEGA: Machine-learning-enhanced graph analytics for infodemic-risk management. IEEE J. Biomed. Health Inform. 2023, 27, 6100–6111. [Google Scholar] [CrossRef]

- Rollwage, M.; Juchems, K.; Habicht, J.; Carrington, B.; Hauser, T.; Harper, R. Conversational AI facilitates mental-health assessments and is associated with improved recovery rates. medRxiv 2022. medRxiv:2022.11.03.22281887. [Google Scholar] [CrossRef]

- Lewis, R.; Ferguson, C.; Wilks, C.; Jones, N.; Picard, R.W. Can a recommender system support treatment personalisation in digital mental-health therapy? In CHI ’22 Extended Abstracts; ACM: New York, NY, USA, 2022; p. 3519840. [Google Scholar]

- Liu, J.M.; Li, D.; Cao, H.; Ren, T.; Liao, Z.; Wu, J. ChatCounselor: A large-language-model-based system for mental-health support. arXiv 2023, arXiv:2309.15461. [Google Scholar]

- Shaik, T.; Tao, X.; Higgins, N.; Li, L.; Gururajan, R.; Zhou, X.; Acharya, U.R. Remote patient monitoring using AI: Current state, applications and challenges. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2023, 13, e1485. [Google Scholar] [CrossRef]

- Trappey, A.; Lin, A.P.C.; Hsu, K.K.; Trappey, C.; Tu, K.L.K. Development of an empathy-centric counselling chatbot system capable of sentimental-dialogue analysis. Processes 2022, 10, 930. [Google Scholar] [CrossRef]

- Hadar-Shoval, D.; Elyoseph, Z.; Lvovsky, M. The plasticity of ChatGPT’s mentalizing abilities. Front. Psychiatry 2023, 14, 1234397. [Google Scholar] [CrossRef] [PubMed]

- Kapoor, A.; Goel, S. Applications of conversational AI in mental health: A survey. In Proceedings of the ICOEI 2022, Tirunelveli, India, 8–30 April 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1013–1016. [Google Scholar]

- Moilanen, J.; Visuri, A.; Suryanarayana, S.A.; Alorwu, A.; Yatani, K.; Hosio, S. Measuring the effect of mental-health-chatbot personality on user engagement. In Proceedings of the MUM 2022, Lisbon, Portugal, 27–30 November 2022; ACM: New York, NY, USA, 2022; pp. 138–150. [Google Scholar]

- Moulya, S.; Pragathi, T.R. Mental-health assist and diagnosis conversational interface using logistic-regression model. J. Phys. Conf. Ser. 2022, 2161, 012039. [Google Scholar] [CrossRef]

- Tricco, A.C.; Lillie, E.; Zarin, W.; O’Brien, K.K.; Colquhoun, H.; Levac, D.; Moher, D.; Peters, M.D.J.; Horsley, T.; Weeks, L.; et al. PRISMA extension for scoping reviews (PRISMA-ScR): Checklist and explanation. Ann. Intern. Med. 2018, 169, 467–473. [Google Scholar] [CrossRef]

- Thieme, A.; Hanratty, M.; Lyons, M.; Palacios, J.; Marques, R.F.; Morrison, C.; Doherty, G. Designing human-centred AI for mental health. ACM Trans. Comput. Hum. Interact. 2023, 30, 15. [Google Scholar] [CrossRef]

- Tutun, S.; Johnson, M.E.; Ahmed, A.; Albizri, A.; Irgil, S.; Yesilkaya, I.; Ucar, E.N.; Sengun, T.; Harfouche, A. An AI-based decision-support system for predicting mental-health disorders. Inf. Syst. Front. 2023, 25, 1261–1276. [Google Scholar] [CrossRef]

- Park, G.; Chung, J.; Lee, S. Effect of AI-chatbot emotional disclosure on user satisfaction. Curr. Psychol. 2023, 42, 28663–28673. [Google Scholar] [CrossRef]

- Sharma, A.; Lin, I.W.; Miner, A.S.; Atkins, D.C.; Althoff, T. Human–AI collaboration enables empathic conversations. arXiv 2022, arXiv:2203.15144. [Google Scholar]

- Amanat, A.; Rizwan, M.; Javed, A.R.; Abdelhaq, M.; Alsaqour, R.; Pandya, S.; Uddin, M. Deep Learning for Depression Detection from Textual Data. Electronics 2022, 11, 676. [Google Scholar] [CrossRef]

- Rathnayaka, P.; Mills, N.; Burnett, D.; De Silva, D.; Alahakoon, D.; Gray, R. A mental-health chatbot with cognitive skills. Sensors 2022, 22, 3653. [Google Scholar] [CrossRef]

- Siemon, D.; Ahmad, R.; Harms, H.; De Vreede, T. Requirements and solution approaches to personality-adaptive conversational agents in mental health care. Sustainability 2022, 14, 3832. [Google Scholar] [CrossRef]

- Blease, C.; Kharko, A.; Annoni, M.; Gaab, J.; Locher, C. Machine learning in clinical psychology education. Front. Public Health 2021, 9, 623088. [Google Scholar]

- Rollwage, M.; Habicht, J.; Juechems, K.; Carrington, B.; Stylianou, M.; Hauser, T.U.; Harper, R. Using conversational AI to facilitate mental-health assessments. JMIR AI 2023, 2, e44358. [Google Scholar] [CrossRef]

- Fulmer, R.; Joerin, A.; Gentile, B.; Lakerink, L.; Rauws, M. Using psychological AI (Tess) to relieve symptoms of depression and anxiety. JMIR Ment. Health 2018, 5, e64. [Google Scholar] [CrossRef] [PubMed]

- Nichele, E.; Lavorgna, A.; Middleton, S.E. Challenges in digital mental-health moderation. SN Soc. Sci. 2022, 2, 217. [Google Scholar] [CrossRef]

- Chen, M.; Shen, K.; Wang, R.; Miao, Y.; Jiang, Y.; Hwang, K.; Hao, Y.; Tao, G.; Hu, L.; Liu, Z. Negative information measurement at AI edge: A new perspective for mental health monitoring. ACM Trans. Internet Technol. 2022, 22, 1–16. [Google Scholar] [CrossRef]

- Jin, S.; Choi, H.; Han, K. AI-augmented art psychotherapy. In Proceedings of the CIKM 2022, Atlanta, GA, USA, 17–21 October 2022; ACM: New York, NY, USA, 2022; pp. 4089–4093. [Google Scholar]

- Shao, R. An empathetic AI for mental-health intervention: Conceptualizing and examining artificial empathy. In Empathy-Centric Design Workshop 2023; ACM: New York, NY, USA, 2023; pp. 1–6. [Google Scholar] [CrossRef]

- Maurya, R.K. Qualitative content analysis of ChatGPT’s client simulation role-play for practicing counselling skills. Couns. Psychother. Res. 2023, 24, 614–630. [Google Scholar] [CrossRef]

- Elyoseph, Z.; Hadar-Shoval, D.; Asraf, K.; Lvovsky, M. ChatGPT outperforms humans in emotional-awareness evaluations. Front. Psychol. 2023, 14, 1199058. [Google Scholar] [CrossRef] [PubMed]

- Levkovich, I.; Elyoseph, Z. Identifying depression and its determinants with ChatGPT. Fam. Med. Community Health 2023, 11, e002391. [Google Scholar] [CrossRef]

- Danieli, M.; Ciulli, T.; Mousavi, S.M.; Riccardi, G. Conversational AI agent for a mental-health-care app. JMIR Form. Res. 2021, 5, e30053. [Google Scholar] [CrossRef] [PubMed]

- Zhang, M.; Scandiffio, J.; Younus, S.; Jeyakumar, T.; Karsan, I.; Charow, R.; Salhia, M.; Wiljer, D. Adoption of AI in mental-health care—Perspectives from professionals. JMIR Form. Res. 2023, 7, e47847. [Google Scholar] [CrossRef]

- Wrightson-Hester, A.R.; Anderson, G.; Dunstan, J.; McEvoy, P.M.; Sutton, C.J.; Myers, B.; Egan, S.; Tai, S.; Johnston-Hollitt, M.; Chen, W. An artificial therapist to support youth mental health. JMIR Hum. Factors 2023, 10, e46849. [Google Scholar] [CrossRef]

- Kleinerman, A.; Rosenfeld, A.; Rosemarin, H. ML-based routing of callers in a mental-health hotline. Isr. J. Health Policy Res. 2022, 11, 25. [Google Scholar] [CrossRef] [PubMed]

- Heston, T.F. Safety of large-language models in addressing depression. Cureus 2023, 15, e50729. [Google Scholar] [CrossRef]

- Kaywan, P.; Ahmed, K.; Ibaida, A.; Miao, Y.; Gu, B. Early detection of depression using a conversational AI bot. PLoS ONE 2023, 18, e0279743. [Google Scholar] [CrossRef]

- Kannampallil, T.; Ronneberg, C.R.; Wittels, N.E.; Kumar, V.; Lv, N.; Smyth, J.M.; Gerber, B.S.; A Kringle, E.; A Johnson, J.; Yu, P.; et al. Virtual voice-based coach for problem-solving treatment. JMIR Form. Res. 2022, 6, e38092. [Google Scholar] [CrossRef]

- Danieli, M.; Ciulli, T.; Mousavi, S.M.; Silvestri, G.; Barbato, S.; Di Natale, L.; Riccardi, G. Assessing the impact of conversational AI for stress and anxiety in aging adults: Randomized controlled trial. JMIR Ment. Health 2022, 9, e38067. [Google Scholar] [CrossRef]

- Straw, I.; Callison-Burch, C. AI in mental health and the biases of language-based models. PLoS ONE 2020, 15, e0240376. [Google Scholar] [CrossRef] [PubMed]

- Dawoodbhoy, F.M.; Delaney, J.; Cecula, P.; Yu, J.; Peacock, I.; Tan, J.; Cox, B. AI in patient-flow management in mental-health units. Heliyon 2021, 7, e06993. [Google Scholar] [CrossRef]

- Alowais, S.A.; Alghamdi, S.S.; Alsuhebany, N.; Alqahtani, T.; Alshaya, A.I.; Almohareb, S.N.; Aldairem, A.; Alrashed, M.; Saleh, K.B.; Badreldin, H.A.; et al. Revolutionizing healthcare: AI in clinical practice. BMC Med. Educ. 2023, 23, 689. [Google Scholar] [CrossRef]

- Rahman, S.M.A.; Ibtisum, S.; Bazgir, E.; Barai, T. The significance of machine learning in clinical-disease diagnosis: A review. Int. J. Comput. Appl. 2023, 185, 10–17. [Google Scholar] [CrossRef]

- Lejeune, A.; Le Glaz, A.; Perron, P.A.; Sebti, J.; Walter, M.; Lemey, C.; Berrouiguet, S. Artificial intelligence and suicide prevention: A systematic review. Eur. Psychiatry 2022, 65, e19. [Google Scholar] [CrossRef] [PubMed]

- Kruszyńska-Fischbach, A.; Sysko-Romańczuk, S.; Napiórkowski, T.M.; Napiórkowska, A.; Kozakiewicz, D. Organizational e-health readiness: How to prepare the primary healthcare providers’ services for digital transformation. Int. J. Environ. Res. Public Health 2022, 19, 3973. [Google Scholar] [CrossRef]

- Hunter, A.; Riger, S. Meaning of community in community mental health. J. Community Psychol. 1986, 14, 55–71.

- Lawrence, H.R.; Schneider, R.A.; Rubin, S.B.; Mataric, M.J.; McDuff, D.J.; Jones Bell, M. Opportunities and risks of LLMs in mental health. arXiv 2024, arXiv:2403.14814.

- Timmons, A.C.; Duong, J.B.; Simo Fiallo, N.; Lee, T.; Vo, H.P.Q.; Ahle, M.W.; Comer, J.S.; Brewer, L.C.; Frazier, S.L.; Chaspari, T.; et al. A call to action on assessing and mitigating bias in AI for mental health. Perspect. Psychol. Sci. 2023, 18, 1062–1096.

- Holohan, M.; Fiske, A. “Like I’m talking to a real person”: Exploring transference in AI-based psychotherapy applications. Front. Psychol. 2021, 12, 720476.

- Lin, B.; Cecchi, G.; Bouneffouf, D. Psychotherapy AI companion with reinforcement-learning recommendations. In WebConf 2023 Companion; ACM: New York, NY, USA, 2023; p. 3587623.

- Knight, A.; Bidargaddi, N. Commonly available activity tracker apps and wearables as a mental health outcome indicator: A prospective observational cohort study among young adults with psychological distress. J. Affect. Disord. 2018, 236, 31–36.

- Beardslee, W.R.; Chien, P.L.; Bell, C.C. Prevention of mental disorders: A developmental perspective. Psychiatr. Serv. 2011, 62, 247–254.

- Kazdin, A.E. Adolescent mental health: Prevention and treatment programs. Am. Psychol. 1993, 48, 127–141.

- Garrido, S.; Millington, C.; Cheers, D.; Boydell, K.; Schubert, E.; Meade, T.; Nguyen, Q.V. What works and what doesn’t work? A systematic review of digital mental-health interventions for depression and anxiety in young people. Front. Psychiatry 2019, 10, 759.

- World Economic Forum. Global Governance Toolkit for Digital Mental Health. Available online: https://www3.weforum.org/docs/WEF_Global_Governance_Toolkit_for_Digital_Mental_Health_2021.pdf (accessed on 10 April 2025).

- Mental Health Commission of Canada. Artificial Intelligence in Mental Health Services: An Environmental Scan. Available online: https://mentalhealthcommission.ca (accessed on 10 April 2025).

- Volkmer, S.; Meyer-Lindenberg, A.; Schwarz, E. Large language models in psychiatry: Opportunities and challenges. Psychiatry Res. 2024, 339, 116026.

- Xian, X.; Chang, A.; Xiang, Y.T.; Liu, M.T. Debate and dilemmas regarding generative AI in mental health care: Scoping review. Interact. J. Med. Res. 2024, 13, e53672.

- Guo, Z.; Lai, A.; Thygesen, J.; Farrington, J.; Keen, T.; Li, K. Large language models for mental health applications: Systematic review. JMIR Ment. Health 2024, 11, e57400.

- Liu, Z.; Bao, Y.; Zeng, S.; Yang, L.; Zhang, X.; Wang, Y. Large language models in psychiatry: Current applications, limitations, and future scope. Big Data Min. Anal. 2024, 7, 1148–1168.

- Obradovich, N.; Khalsa, S.S.; Khan, W.U.; Suh, J.; Perlis, R.H.; Ajilore, O.; Paulus, M.P. Opportunities and risks of large language models in psychiatry. NPP Digit. Psychiatry Neurosci. 2024, 2, 8.

- Kolding, S.; Lundin, R.M.; Hansen, L.; Østergaard, S.D. Use of generative artificial intelligence (AI) in psychiatry and mental health care: A systematic review. Acta Neuropsychiatr. 2025, 37, e37.

- Holmes, G.; Tang, B.; Gupta, S.; Venkatesh, S.; Christensen, H.; Whitton, A. Applications of large language models in the field of suicide prevention: Scoping review. J. Med. Internet Res. 2025, 27, e63126.

- Blease, C.; Rodman, A. Generative artificial intelligence in mental healthcare: An ethical evaluation. Curr. Treat. Options Psychiatry 2025, 12, 5.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).