Gynaecological Artificial Intelligence Diagnostics (GAID) GAID and Its Performance as a Tool for the Specialist Doctor

Abstract

1. Introduction

2. Materials and Methods

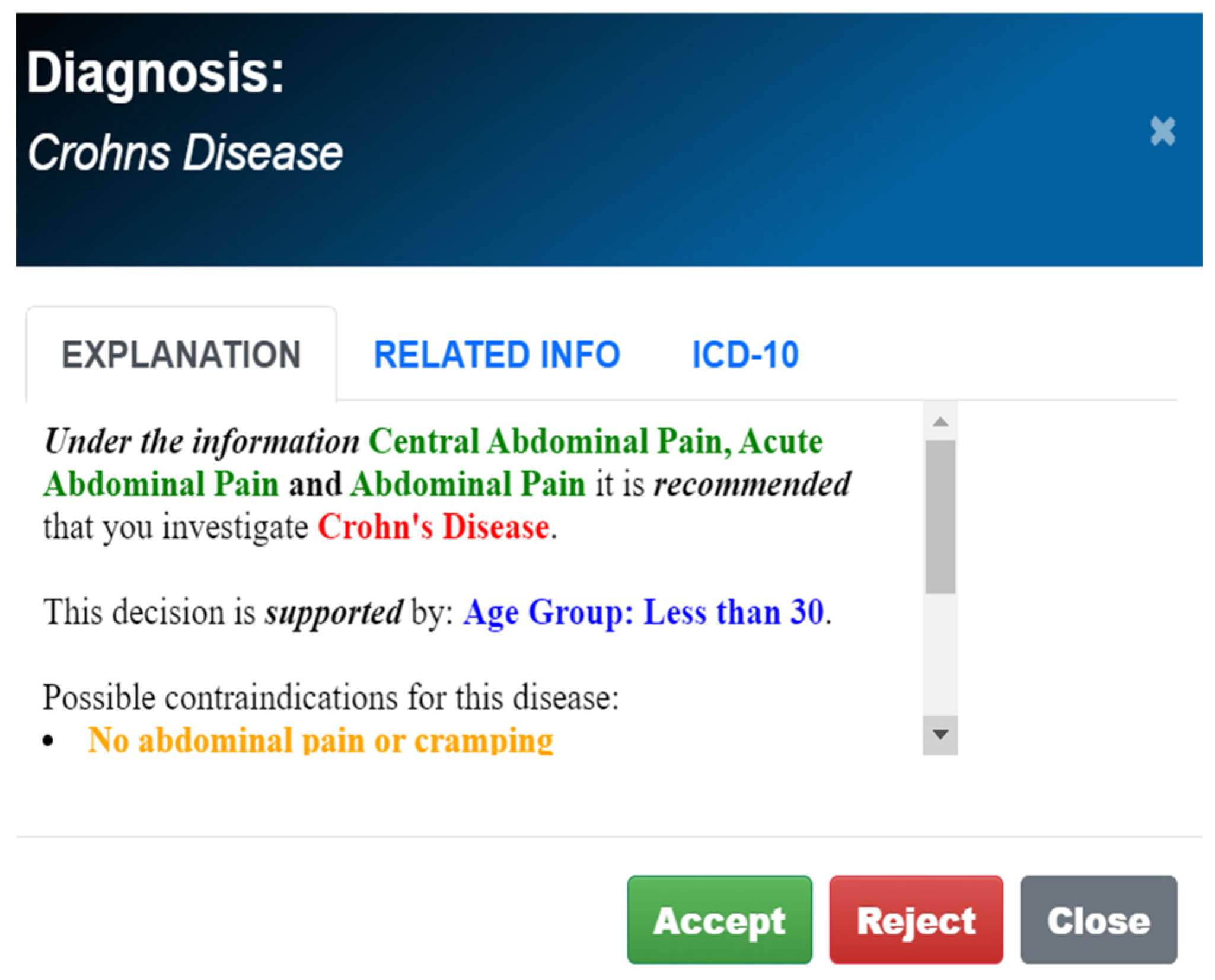

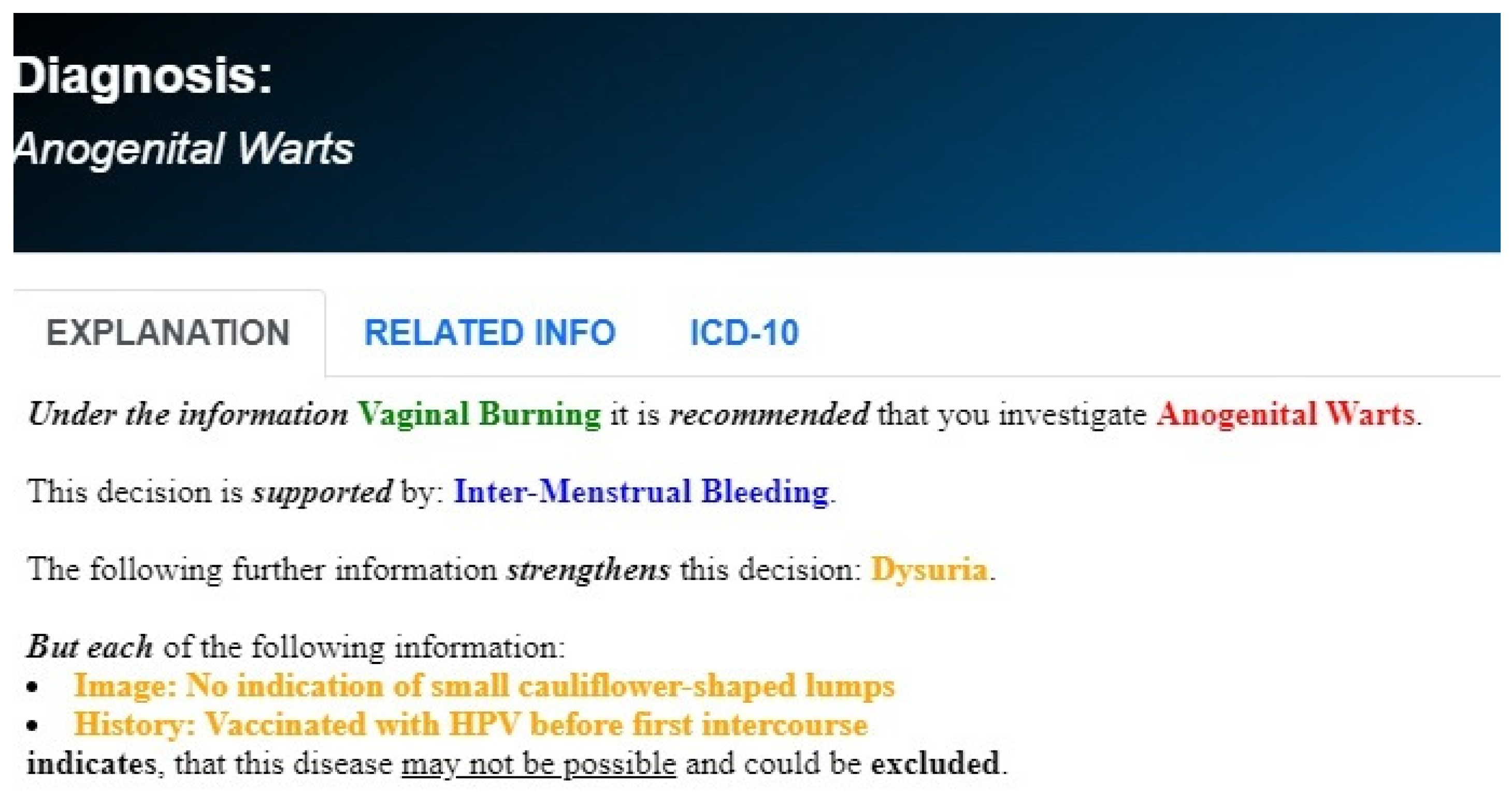

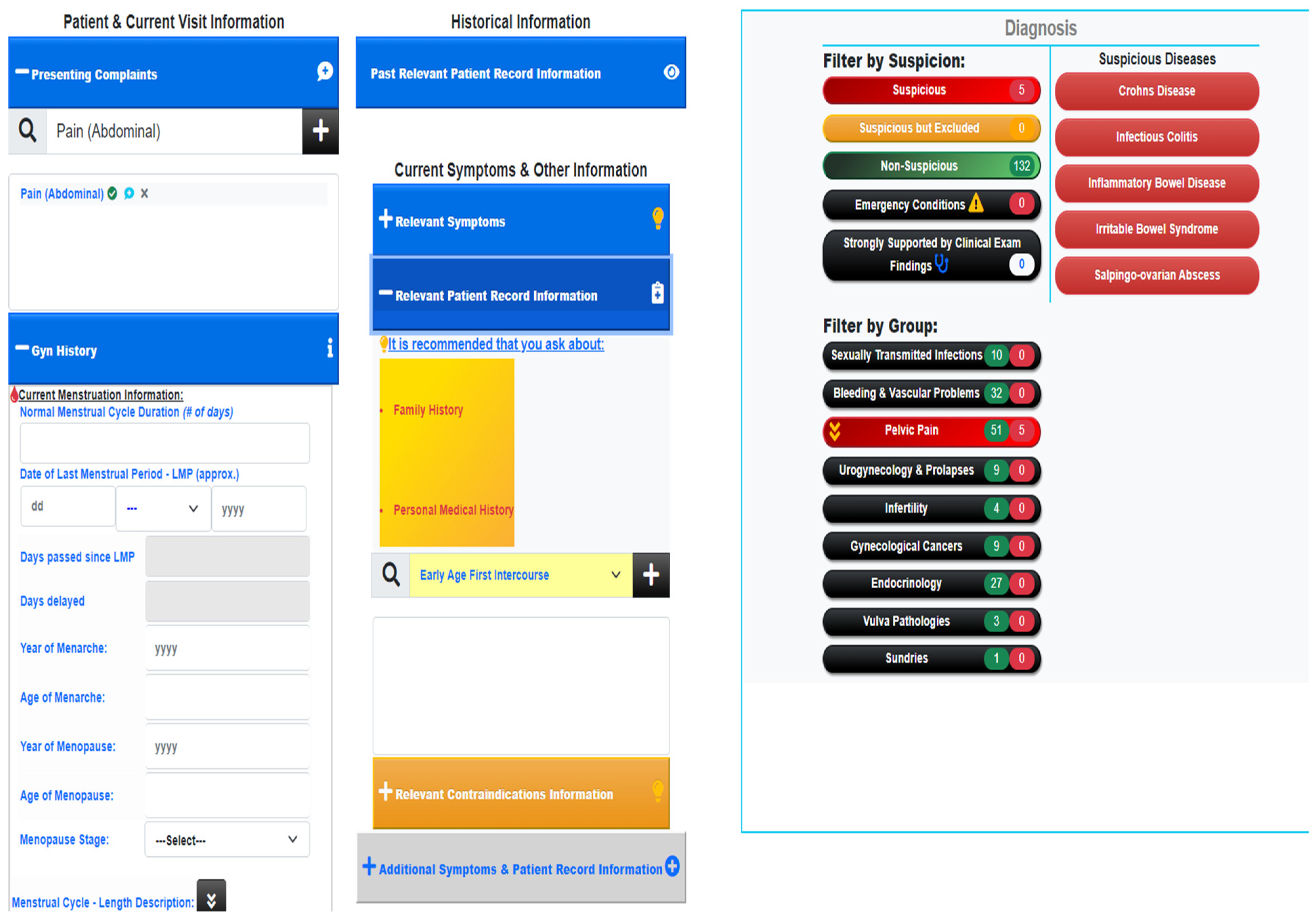

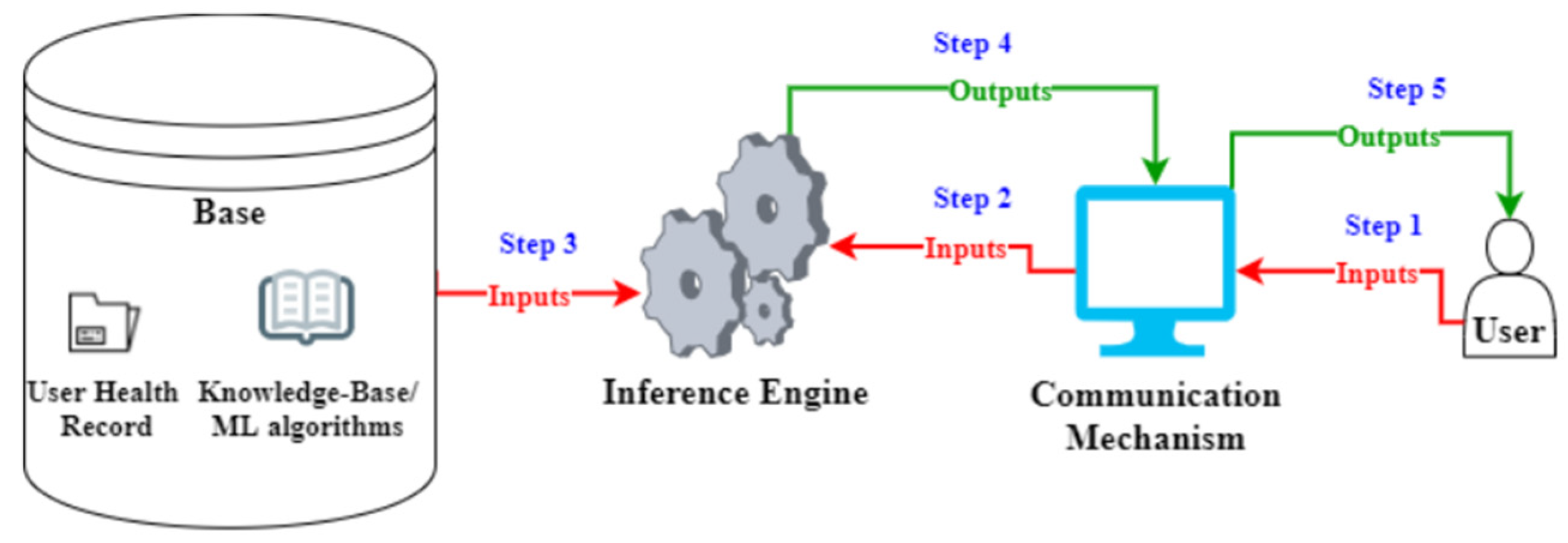

2.1. AI Technology

2.1.1. Knowledge Acquisition Methodology and Algorithmic Reasoning

2.1.2. Dependence on Existing Medical Knowledge

2.2. Evaluation Methods

- A patient file is selected at random from a pool of patient records. It is used to create an annotated file that highlights the history and the initial suspicions before clinical examination.

- A demo file is prepared for testing.

- The test case is executed on GAID:

  - GAID collects clinical symptoms;

  - GAID collects more detailed clinical symptoms;

  - GAID collects the results of clinical examinations and laboratory investigations;

  - All these details feed into the knowledge acquisition.

- Metrics are computed and recorded.

- The doctor checks the test results and gives feedback on the diseases GAID reported and those it missed. This feedback is further validated against resources such as BMJ, PubMed, NICE Guidelines, CDC and FIGO, to ensure there is no discrepancy in knowledge between the expert and the online literature.
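The comparison step in the procedure above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the `TestCase` structure, the `evaluate` function, and the choice of precision and recall as metrics are all assumptions made for illustration, since the steps only state that metrics are computed and that the doctor reviews missing and existing diseases. The disease labels and the suggested set are placeholders, not GAID output.

```python
from dataclasses import dataclass, field


@dataclass
class TestCase:
    """Hypothetical demo file built from an annotated patient record."""
    symptoms: list[str]
    detailed_symptoms: list[str]
    exam_and_lab_results: list[str]
    # Diagnoses the reviewing doctor considers correct for this case.
    expected: set[str] = field(default_factory=set)


def evaluate(suggested: set[str], expected: set[str]) -> dict:
    """Compare a suggested differential with the doctor's reference set.

    Precision and recall are assumed metrics, chosen for illustration only.
    """
    correct = suggested & expected
    missing = expected - suggested  # diseases the tool did not list
    extra = suggested - expected    # diseases listed but not confirmed
    precision = len(correct) / len(suggested) if suggested else 0.0
    recall = len(correct) / len(expected) if expected else 0.0
    return {
        "correct": correct,
        "missing": missing,
        "extra": extra,
        "precision": precision,
        "recall": recall,
    }


# Placeholder case: labels are illustrative, not clinical data.
case = TestCase(
    symptoms=["vaginal discharge"],
    detailed_symptoms=["profuse", "thin", "green"],
    exam_and_lab_results=[],
    expected={"BV", "TM"},
)
suggested = {"BV", "TM", "VC"}  # stand-in for the tool's output
report = evaluate(suggested, case.expected)
print(report)
```

Keeping the `missing` and `extra` sets alongside the numeric scores mirrors the doctor's feedback step, where both omitted and reported diseases are reviewed before the result is checked against the literature.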

2.3. Performance Parameters and Calculations

2.4. Patient Cohort

2.5. Ethical Guidelines

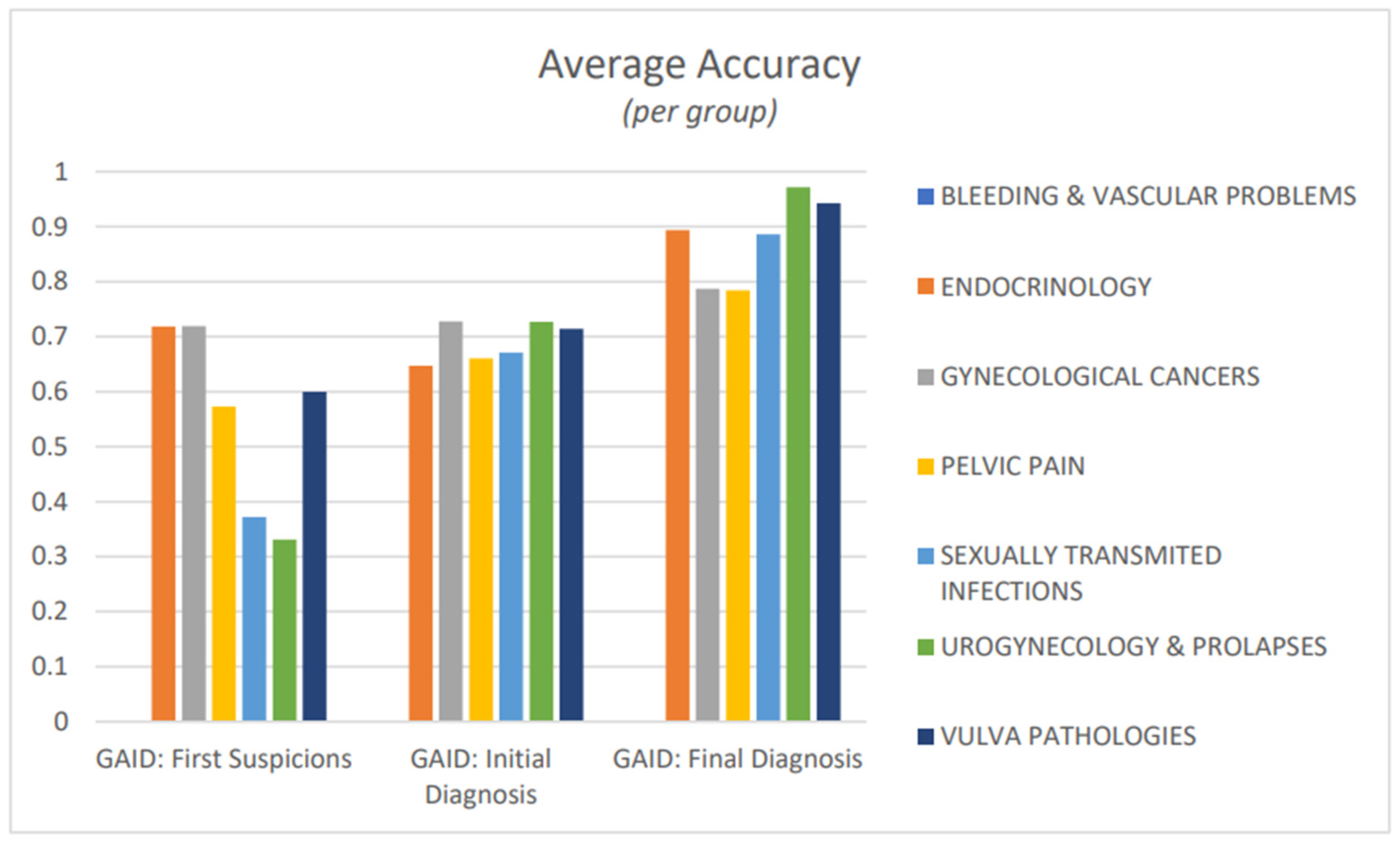

3. Results

4. Discussion

4.1. Artificial Intelligence in Gynaecology

4.2. Advantages and Disadvantages

4.3. GAID

4.4. Knowledge Revision and Refinement

4.5. Data Privacy and Security

4.6. Limitations

4.7. The Future

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A


| Scenario | BV | TM | VC | SP | NG | CM | HSV | AW |
|---|---|---|---|---|---|---|---|---|
| Vaginal discharge (VD) | √ | √ | √ | √ | √ | | | |
| Vaginal discharge (VD) ++ (quantity (VD, profuse), Texture (VD, thin), Colour (VD, green)) | √ | √ | √ | | | | | |
| Vaginal discharge (VD) ++ (quantity (VD, profuse), Texture (VD, thin), Colour (VD, green)) ++ Texture (VD, frothy) | √ | √ | | | | | | |

| Scenario | BV | TM | VC | SP | NG | CM | HSV | AW | HIV |
|---|---|---|---|---|---|---|---|---|---|
| Burning +/ itching | √ | √ | √ | √ | √ | √ | √ | | |
| Burning +/ itching ++ Intermenstrual_bleeding +/ postcoital_bleeding | √ | √ | √ | √ | | | | | |
| Burning +/ itching ++ Intermenstrual_bleeding +/ postcoital_bleeding ++ (lumps(small_cauliflower) +/ image (2,condyloma)) | √ | | | | | | | | |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tanos, P.; Yiangou, I.; Prokopiou, G.; Kakas, A.; Tanos, V. Gynaecological Artificial Intelligence Diagnostics (GAID) GAID and Its Performance as a Tool for the Specialist Doctor. Healthcare 2024, 12, 223. https://doi.org/10.3390/healthcare12020223
