Continual Learning with Deep Neural Networks in Physiological Signal Data: A Survey

Abstract

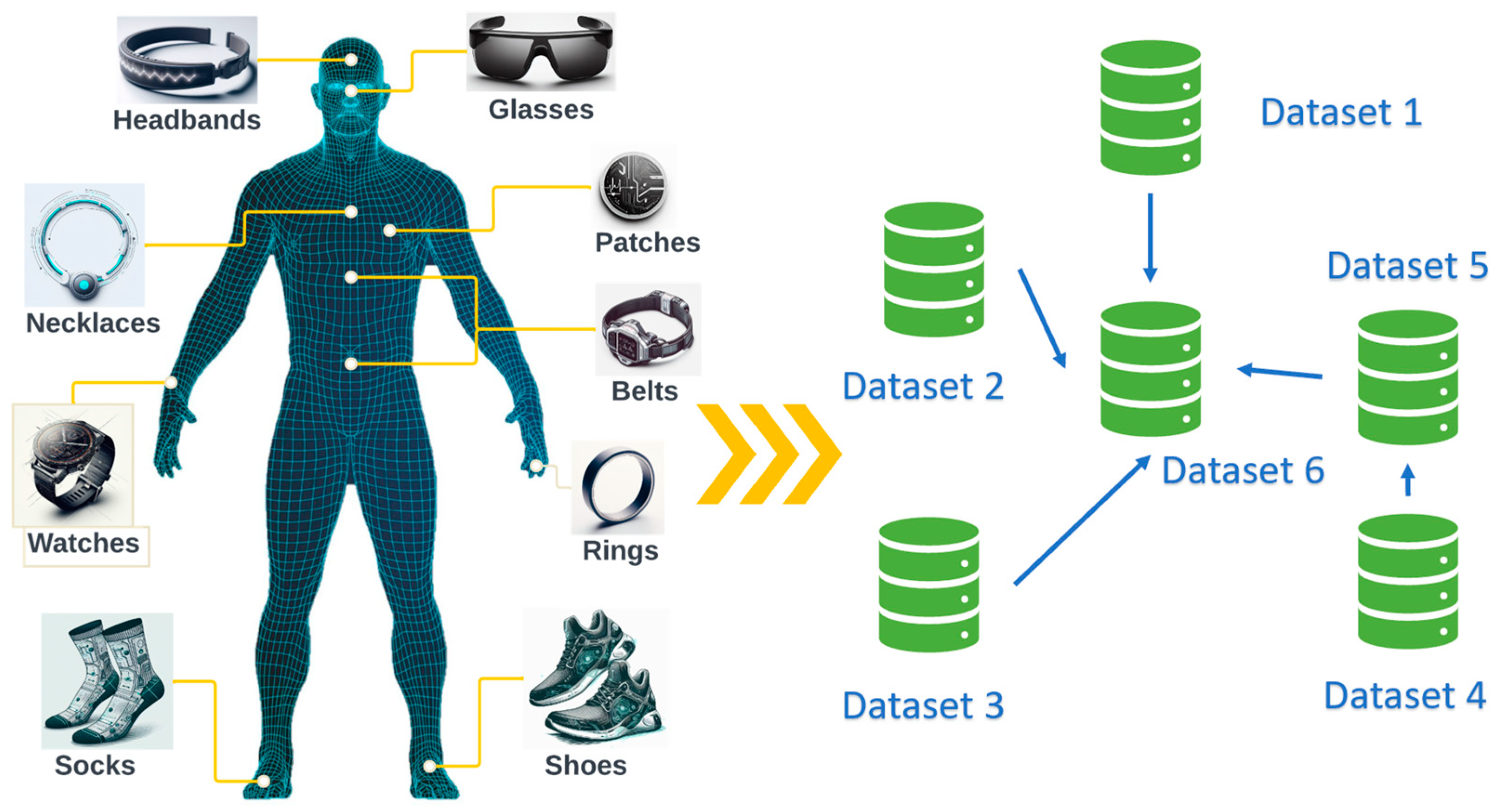

1. Introduction

2. Continual Learning

2.1. From Traditional Approaches to Continual Learning

2.2. Continual-Learning Approaches

2.2.1. Replay-Based Approaches

2.2.2. Regularization-Based Approaches

2.2.3. Architecture-Based Approaches

3. Reviews

3.1. Continual Learning for ECG Signals

3.2. Continual Learning for Other Physiological Signals

| Authors | Objectives | Signals | Datasets | Incremental Learning Scenarios |
|---|---|---|---|---|
| Ammour et al. [31] | A learning-without-forgetting approach for ECG heartbeat classification | ECG | MIT-BIH Arrhythmia Database, St Petersburg INCART 12-Lead Arrhythmia Database, MIT-BIH Supraventricular Arrhythmia Database | Task-incremental |
| Kiyasseh et al. [35] | A replay-based approach (CLOPS) for cardiac arrhythmia classification | ECG | Cardiology Dataset, Chapman Dataset, PhysioNet 2017 Challenge Dataset, PhysioNet 2020 Challenge Dataset | Class-incremental, Domain-incremental |
| Sun et al. [40] | A meta self-attention prototype incrementor framework for medical time series classification | ECG | MIT-BIH Arrhythmia Database, MIT-BIH Long-Term ECG, FaceAll, UWave | Class-incremental |
| Gao et al. [42] | A parameter-isolation-based ECG continual-learning (ECG-CL) approach | ECG | CPSC 2019, 12-Lead QRS, ICBEB 2018, PTB-XL | Class-incremental, Domain-incremental, Task-incremental |
| Hua et al. [47] | A framework combining ELFCNN with hybrid data over/down-sampling (HDOD) for sEMG-based gesture classification | EMG | Ninapro DB2 | Task-incremental |
| Armstrong et al. [5] | Evaluation of a variety of continual-learning methods on longitudinal ICU data | Multivariate time series | eICU-CRD, MIMIC-III | Domain-incremental |
| Sun et al. [51] | A federated learning and blockchain framework tailored for physiological signal classification | EEG, ECG, PPG | MIT-BIH Arrhythmia Database, Wrist PPG During Exercise, St Petersburg INCART 12-Lead Arrhythmia Database, Sleep-EDF Expanded | Task-incremental, Domain-incremental |
| Sun et al. [54] | An algorithm for online continual learning in sensor time series classification for nanorobot-based smart health | ECG, PPG | MIT-BIH Arrhythmia Database, MIT-BIH Supraventricular Arrhythmia Database, St Petersburg INCART 12-Lead Arrhythmia Database, MIT-BIH Long-Term ECG, Wrist PPG During Exercise | Class-incremental |
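The last column uses the three scenarios defined by van de Ven et al. [10]. As a rough illustration only, the Python sketch below contrasts what a model is asked to predict in each scenario. It assumes a hypothetical split protocol in which every task introduces two new classes (task 0 covers classes 0 and 1, task 1 covers classes 2 and 3, and so on); this protocol, the `Sample` type, and the function names are illustrative and are not taken from any of the surveyed studies.

```python
from dataclasses import dataclass

# Hypothetical split protocol (an assumption, not from the surveyed papers):
# every task introduces two new classes, e.g. task 0 -> {0, 1}, task 1 -> {2, 3}.
CLASSES_PER_TASK = 2


@dataclass(frozen=True)
class Sample:
    task_id: int       # index of the task (experience) the sample comes from
    global_label: int  # class index across the whole stream of tasks


def prediction_target(sample: Sample, scenario: str):
    """Return what the model must output for `sample` under a scenario."""
    within_task = sample.global_label % CLASSES_PER_TASK
    if scenario == "task":
        # Task ID is given at test time, so the model only chooses among
        # the classes of that task (e.g. with a task-specific head).
        return sample.task_id, within_task
    if scenario == "domain":
        # No task ID, but all tasks share one label space; only the
        # input distribution changes from task to task.
        return within_task
    if scenario == "class":
        # No task ID and a growing label space: the model must pick the
        # correct class among all classes seen so far.
        return sample.global_label
    raise ValueError(f"unknown scenario: {scenario!r}")


def candidate_outputs(tasks_seen: int, scenario: str) -> list[int]:
    """Output indices the model chooses from after training on `tasks_seen` tasks."""
    if scenario in ("task", "domain"):
        return list(range(CLASSES_PER_TASK))
    if scenario == "class":
        return list(range(tasks_seen * CLASSES_PER_TASK))
    raise ValueError(f"unknown scenario: {scenario!r}")


if __name__ == "__main__":
    beat = Sample(task_id=2, global_label=5)  # a class introduced in the third task
    for scenario in ("task", "domain", "class"):
        print(scenario, prediction_target(beat, scenario), candidate_outputs(3, scenario))
    # task (2, 1) [0, 1]
    # domain 1 [0, 1]
    # class 5 [0, 1, 2, 3, 4, 5]
```

Under these assumptions, task-incremental learning can rely on a task-specific output head, domain-incremental learning reuses one shared label space, and class-incremental learning must discriminate among every class seen so far, which is why van de Ven et al. [10] describe it as generally the most challenging of the three.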

3.3. Continual-Learning Datasets

4. Discussion, Challenges, and Future Exploration

4.1. Benchmarks and Performance Assessment

4.2. Energy Efficiency and Computation Capability

4.3. Future Directions

4.4. Limitations

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Escabí, M.A. Biosignal Processing. In Introduction to Biomedical Engineering; Academic Press: Cambridge, MA, USA, 2005; pp. 549–625. ISBN 9780122386626.

- Esteva, A.; Robicquet, A.; Ramsundar, B.; Kuleshov, V.; DePristo, M.; Chou, K.; Cui, C.; Corrado, G.; Thrun, S.; Dean, J. A Guide to Deep Learning in Healthcare. Nat. Med. 2019, 25, 24–29.

- Rim, B.; Sung, N.J.; Min, S.; Hong, M. Deep Learning in Physiological Signal Data: A Survey. Sensors 2020, 20, 969.

- Buongiorno, D.; Cascarano, G.D.; De Feudis, I.; Brunetti, A.; Carnimeo, L.; Dimauro, G.; Bevilacqua, V. Deep Learning for Processing Electromyographic Signals: A Taxonomy-Based Survey. Neurocomputing 2021, 452, 549–565.

- Armstrong, J.; Clifton, D.A. Continual Learning of Longitudinal Health Records. In Proceedings of the BHI-BSN 2022—IEEE-EMBS International Conference on Biomedical and Health Informatics and IEEE-EMBS International Conference on Wearable and Implantable Body Sensor Networks, Symposium Proceedings, Ioannina, Greece, 27–30 September 2022.

- Hadsell, R.; Rao, D.; Rusu, A.A.; Pascanu, R. Embracing Change: Continual Learning in Deep Neural Networks. Trends Cogn. Sci. 2020, 24, 1028–1040.

- De Lange, M.; Aljundi, R.; Masana, M.; Parisot, S.; Jia, X.; Leonardis, A.; Slabaugh, G.; Tuytelaars, T. A Continual Learning Survey: Defying Forgetting in Classification Tasks. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 3366–3385.

- Lesort, T.; Lomonaco, V.; Stoian, A.; Maltoni, D.; Filliat, D.; Díaz-Rodríguez, N. Continual Learning for Robotics: Definition, Framework, Learning Strategies, Opportunities and Challenges. Inf. Fusion 2020, 58, 52–68.

- Ke, Z.; Liu, B. Continual Learning of Natural Language Processing Tasks: A Survey. arXiv 2022, arXiv:2211.12701.

- van de Ven, G.M.; Tuytelaars, T.; Tolias, A.S. Three Types of Incremental Learning. Nat. Mach. Intell. 2022, 4, 1185–1197.

- Parisi, G.I.; Kemker, R.; Part, J.L.; Kanan, C.; Wermter, S. Continual Lifelong Learning with Neural Networks: A Review. Neural Netw. 2019, 113, 54–71.

- Faust, O.; Hagiwara, Y.; Hong, T.J.; Lih, O.S.; Acharya, U.R. Deep Learning for Healthcare Applications Based on Physiological Signals: A Review. Comput. Methods Programs Biomed. 2018, 161, 1–13.

- Sannino, G.; De Pietro, G. A Deep Learning Approach for ECG-Based Heartbeat Classification for Arrhythmia Detection. Future Gener. Comput. Syst. 2018, 86, 446–455.

- Lee, C.S.; Lee, A.Y. Clinical Applications of Continual Learning Machine Learning. Lancet Digit. Health 2020, 2, e279–e281.

- New, A.; Baker, M.; Nguyen, E.; Vallabha, G. Lifelong Learning Metrics. arXiv 2022, arXiv:2201.08278.

- Rebuffi, S.A.; Kolesnikov, A.; Sperl, G.; Lampert, C.H. iCaRL: Incremental Classifier and Representation Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 2001–2010.

- Lopez-Paz, D.; Ranzato, M.A. Gradient Episodic Memory for Continual Learning. Adv. Neural Inf. Process. Syst. 2017, 30.

- Schaul, T.; Quan, J.; Antonoglou, I.; Silver, D. Prioritized Experience Replay. In Proceedings of the 4th International Conference on Learning Representations, ICLR 2016—Conference Track Proceedings, San Juan, Puerto Rico, 2–4 May 2016.

- Arani, E.; Sarfraz, F.; Zonooz, B. Learning Fast, Learning Slow: A General Continual Learning Method Based on Complementary Learning System. In Proceedings of the ICLR 2022—10th International Conference on Learning Representations 2022, Virtual, 25–29 April 2022.

- Shin, H.; Lee, J.K.; Kim, J.; Kim, J. Continual Learning with Deep Generative Replay. Adv. Neural Inf. Process. Syst. 2017, 30, 2990–2999.

- van de Ven, G.M.; Tolias, A.S. Generative Replay with Feedback Connections as a General Strategy for Continual Learning. arXiv 2018, arXiv:1809.10635.

- Li, Z.; Hoiem, D. Learning without Forgetting. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 2935–2947.

- Kirkpatrick, J.; Pascanu, R.; Rabinowitz, N.; Veness, J.; Desjardins, G.; Rusu, A.A.; Milan, K.; Quan, J.; Ramalho, T.; Grabska-Barwinska, A.; et al. Overcoming Catastrophic Forgetting in Neural Networks. Proc. Natl. Acad. Sci. USA 2017, 114, 3521–3526.

- Han, X.; Guo, Y. Continual Learning with Dual Regularizations. In Proceedings of the European Conference, ECML PKDD 2021, Bilbao, Spain, 13–17 September 2021; Lecture Notes in Computer Science; pp. 619–634.

- Akyürek, A.F.; Akyürek, E.; Wijaya, D.T.; Andreas, J. Subspace Regularizers for Few-Shot Class Incremental Learning. In Proceedings of the ICLR 2022—10th International Conference on Learning Representations, Virtual, 25–29 April 2022.

- Rusu, A.A.; Rabinowitz, N.C.; Desjardins, G.; Soyer, H.; Kirkpatrick, J.; Kavukcuoglu, K.; Pascanu, R.; Hadsell, R. Progressive Neural Networks. arXiv 2016, arXiv:1606.04671.

- Aljundi, R.; Chakravarty, P.; Tuytelaars, T. Expert Gate: Lifelong Learning with a Network of Experts. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR, Honolulu, HI, USA, 21–26 July 2017; pp. 7120–7129.

- Gao, Q.; Luo, Z.; Klabjan, D.; Zhang, F. Efficient Architecture Search for Continual Learning. IEEE Trans. Neural Netw. Learn. Syst. 2022, 34, 8555–8565.

- Graffieti, G.; Borghi, G.; Maltoni, D. Continual Learning in Real-Life Applications. IEEE Robot. Autom. Lett. 2022, 7, 6195–6202.

- Mirzadeh, S.I.; Chaudhry, A.; Yin, D.; Nguyen, T.; Pascanu, R.; Gorur, D.; Farajtabar, M. Architecture Matters in Continual Learning. arXiv 2022, arXiv:2202.00275.

- Ammour, N.; Alhichri, H.; Bazi, Y.; Alajlan, N. LwF-ECG: Learning-without-Forgetting Approach for Electrocardiogram Heartbeat Classification Based on Memory with Task Selector. Comput. Biol. Med. 2021, 137, 104807.

- Moody, G.B.; Mark, R.G. The Impact of the MIT-BIH Arrhythmia Database. IEEE Eng. Med. Biol. Mag. 2001, 20, 45–50.

- Goldberger, A.L.; Amaral, L.A.N.; Glass, L.; Hausdorff, J.M.; Ivanov, P.C.; Mark, R.G.; Mietus, J.E.; Moody, G.B.; Peng, C.-K.; Stanley, H.E. PhysioBank, PhysioToolkit, and PhysioNet: Components of a New Research Resource for Complex Physiologic Signals. Circulation 2000, 101, e215–e220.

- Greenwald, S.D.; Patil, R.S.; Mark, R.G. Improved Detection and Classification of Arrhythmias in Noise-Corrupted Electrocardiograms Using Contextual Information. In Proceedings of Computers in Cardiology 1990, Chicago, IL, USA, 23–26 September 1990; pp. 461–464.

- Kiyasseh, D.; Zhu, T.; Clifton, D. A Clinical Deep Learning Framework for Continually Learning from Cardiac Signals across Diseases, Time, Modalities, and Institutions. Nat. Commun. 2021, 12, 4221.

- iRhythm Deep Neural Networks for ECG Rhythm Classification. Available online: https://irhythm.github.io/cardiol_test_set/ (accessed on 11 October 2023).

- Zheng, J.; Zhang, J.; Danioko, S.; Yao, H.; Guo, H.; Rakovski, C. A 12-Lead Electrocardiogram Database for Arrhythmia Research Covering More than 10,000 Patients. Sci. Data 2020, 7, 48.

- Clifford, G.D.; Liu, C.; Moody, B.; Lehman, L.H.; Silva, I.; Li, Q.; Johnson, A.E.; Mark, R.G. AF Classification from a Short Single Lead ECG Recording: The PhysioNet/Computing in Cardiology Challenge 2017. Comput. Cardiol. 2017, 44, 1–4.

- Alday, E.A.P.; Gu, A.; Shah, A.J.; Robichaux, C.; Wong, A.K.I.; Liu, C.; Liu, F.; Rad, A.B.; Elola, A.; Seyedi, S.; et al. Classification of 12-Lead ECGs: The PhysioNet/Computing in Cardiology Challenge 2020. Physiol. Meas. 2020, 41, 124003.

- Sun, L.; Zhang, M.; Wang, B.; Tiwari, P. Few-Shot Class-Incremental Learning for Medical Time Series Classification. IEEE J. Biomed. Health Inf. 2023.

- Chen, Y.; Keogh, E.; Hu, B.; Begum, N.; Bagnall, A.; Mueen, A.; Batista, G. The UCR Time Series Classification Archive. IEEE/CAA J. Autom. Sin. 2015, 6, 1293–1305.

- Gao, H.; Wang, X.; Chen, Z.; Wu, M.; Li, J.; Liu, C.; Liu, C. ECG-CL: A Comprehensive Electrocardiogram Interpretation Method Based on Continual Learning. IEEE J. Biomed. Health Inf. 2023, 27, 5225–5236.

- Gao, H.; Liu, C.; Wang, X.; Zhao, L.; Shen, Q.; Ng, E.Y.K.; Li, J. An Open-Access ECG Database for Algorithm Evaluation of QRS Detection and Heart Rate Estimation. J. Med. Imaging Health Inf. 2019, 9, 1853–1858.

- Gao, H.; Liu, C.; Shen, Q.; Li, J. Representative Databases for Feature Engineering and Computational Intelligence in ECG Processing. In Feature Engineering and Computational Intelligence in ECG Monitoring; Springer: Berlin/Heidelberg, Germany, 2020; pp. 13–29.

- Liu, F.; Liu, C.; Zhao, L.; Zhang, X.; Wu, X.; Xu, X.; Liu, Y.; Ma, C.; Wei, S.; He, Z.; et al. An Open Access Database for Evaluating the Algorithms of Electrocardiogram Rhythm and Morphology Abnormality Detection. J. Med. Imaging Health Inf. 2018, 8, 1368–1373.

- Wagner, P.; Strodthoff, N.; Bousseljot, R.D.; Kreiseler, D.; Lunze, F.I.; Samek, W.; Schaeffter, T. PTB-XL, a Large Publicly Available Electrocardiography Dataset. Sci. Data 2020, 7, 154.

- Hua, S.; Wang, C.; Lam, H.K.; Wen, S. An Incremental Learning Method with Hybrid Data over/down-Sampling for sEMG-Based Gesture Classification. Biomed. Signal Process. Control 2023, 83, 104613.

- Atzori, M.; Gijsberts, A.; Castellini, C.; Caputo, B.; Hager, A.G.M.; Elsig, S.; Giatsidis, G.; Bassetto, F.; Müller, H. Electromyography Data for Non-Invasive Naturally-Controlled Robotic Hand Prostheses. Sci. Data 2014, 1, 140053.

- Pollard, T.J.; Johnson, A.E.W.; Raffa, J.D.; Celi, L.A.; Mark, R.G.; Badawi, O. The eICU Collaborative Research Database, a Freely Available Multi-Center Database for Critical Care Research. Sci. Data 2018, 5, 180178.

- Johnson, A.E.W.; Pollard, T.J.; Shen, L.; Lehman, L.W.H.; Feng, M.; Ghassemi, M.; Moody, B.; Szolovits, P.; Celi, L.A.; Mark, R.G. MIMIC-III, a Freely Accessible Critical Care Database. Sci. Data 2016, 3, 160035.

- Sun, L.; Wu, J.; Xu, Y.; Zhang, Y. A Federated Learning and Blockchain Framework for Physiological Signal Classification Based on Continual Learning. Inf. Sci. 2023, 630, 586–598.

- Jarchi, D.; Casson, A.J. Description of a Database Containing Wrist PPG Signals Recorded during Physical Exercise with Both Accelerometer and Gyroscope Measures of Motion. Data 2017, 2, 1.

- Kemp, B.; Zwinderman, A.H.; Tuk, B.; Kamphuisen, H.A.C.; Oberyé, J.J.L. Analysis of a Sleep-Dependent Neuronal Feedback Loop: The Slow-Wave Microcontinuity of the EEG. IEEE Trans. Biomed. Eng. 2000, 47, 1185–1194.

- Sun, L.; Chen, Q.; Zheng, M.; Ning, X.; Gupta, D.; Tiwari, P. Energy-Efficient Online Continual Learning for Time Series Classification in Nanorobot-Based Smart Health. IEEE J. Biomed. Health Inf. 2023.

- Lal, B.; Gravina, R.; Spagnolo, F.; Corsonello, P. Compressed Sensing Approach for Physiological Signals: A Review. IEEE Sens. J. 2023, 23, 5513–5534.

- Kumari, A.; Tanwar, S.; Tyagi, S.; Kumar, N. Fog Computing for Healthcare 4.0 Environment: Opportunities and Challenges. Comput. Electr. Eng. 2018, 72, 1–13.

- Karunarathne, G.; Kulawansa, K.; Firdhous, M.F.M. Wireless Communication Technologies in Internet of Things: A Critical Evaluation. In Proceedings of the 2018 International Conference on Intelligent and Innovative Computing Applications (ICONIC), Mon Tresor, Mauritius, 6–7 December 2018; pp. 1–5.

- Pandey, A.K. Introduction to Healthcare Information Privacy and Security Concerns. In Security and Privacy of Electronic Healthcare Records: Concepts, Paradigms and Solutions; Institution of Engineering and Technology; IET: London, UK, 2019; pp. 17–42.

- Guo, S.; Wang, Y.; Li, Q.; Yan, J. DMCP: Differentiable Markov Channel Pruning for Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

- Yuan, G.; Behnam, P.; Cai, Y.; Shafiee, A.; Fu, J.; Liao, Z.; Li, Z.; Ma, X.; Deng, J.; Wang, J.; et al. TinyADC: Peripheral Circuit-Aware Weight Pruning Framework for Mixed-Signal DNN Accelerators. In Proceedings of the 2021 Design, Automation & Test in Europe Conference & Exhibition (DATE), Grenoble, France, 1–5 February 2021; pp. 926–931.

- Yang, Y.; Deng, L.; Wu, S.; Yan, T.; Xie, Y.; Li, G. Training High-Performance and Large-Scale Deep Neural Networks with Full 8-Bit Integers. Neural Netw. 2020, 125, 70–82.

- Yuan, G.; Chang, S.-E.; Jin, Q.; Lu, A.; Li, Y.; Wu, Y.; Kong, Z.; Xie, Y.; Dong, P.; Qin, M.; et al. You Already Have It: A Generator-Free Low-Precision DNN Training Framework Using Stochastic Rounding. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 34–51.

- Chen, T.; Moreau, T.; Jiang, Z.; Zheng, L.; Yan, E.; Shen, H.; Cowan, M.; Wang, L.; Hu, Y.; Ceze, L.; et al. TVM: An Automated End-to-End Optimizing Compiler for Deep Learning. In Proceedings of the 13th USENIX Symposium on Operating Systems Design and Implementation (OSDI 18), Carlsbad, CA, USA, 8–9 October 2018; pp. 578–594.

- Niu, W.; Ma, X.; Lin, S.; Wang, S.; Qian, X.; Lin, X.; Wang, Y.; Ren, B. PatDNN: Achieving Real-Time DNN Execution on Mobile Devices with Pattern-Based Weight Pruning. In Proceedings of the Twenty-Fifth International Conference on Architectural Support for Programming Languages and Operating Systems, Lausanne, Switzerland, 16–20 March 2020; pp. 907–922.

- Wang, Z.; Zhan, Z.; Gong, Y.; Yuan, G.; Niu, W.; Jian, T.; Ren, B.; Ioannidis, S.; Wang, Y.; Dy, J. SparCL: Sparse Continual Learning on the Edge. Adv. Neural Inf. Process. Syst. 2022, 35, 20366–20380.

- Yuan, G.; Ma, X.; Niu, W.; Li, Z.; Kong, Z.; Liu, N.; Gong, Y.; Zhan, Z.; He, C.; Jin, Q.; et al. MEST: Accurate and Fast Memory-Economic Sparse Training Framework on the Edge. Adv. Neural Inf. Process. Syst. 2021, 34, 20838–20850.

- Xiao, Q.; Wu, B.; Zhang, Y.; Liu, S.; Pechenizkiy, M.; Mocanu, E.; Mocanu, D.C. Dynamic Sparse Network for Time Series Classification: Learning What to “See”. Adv. Neural Inf. Process. Syst. 2022, 35, 16849–16862.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, A.; Li, H.; Yuan, G. Continual Learning with Deep Neural Networks in Physiological Signal Data: A Survey. Healthcare 2024, 12, 155. https://doi.org/10.3390/healthcare12020155
