Mobile Computer Vision-Based Applications for Food Recognition and Volume and Calorific Estimation: A Systematic Review

Abstract

1. Introduction

- To the best of our knowledge, this is the first systematic review of mobile computer vision-based algorithms for food recognition, volume estimation and dietary assessment that examines the extent to which existing applications explain to users how their algorithms make decisions.

- The analysis critically compares mobile-based techniques for automatic food recognition and nutritional-value estimation.

- This study analyses gaps and proposes possible solutions for building trustworthy image-based food recognition and calorie estimation applications for nutritional monitoring.

2. Materials and Methods

- Only articles available in English.

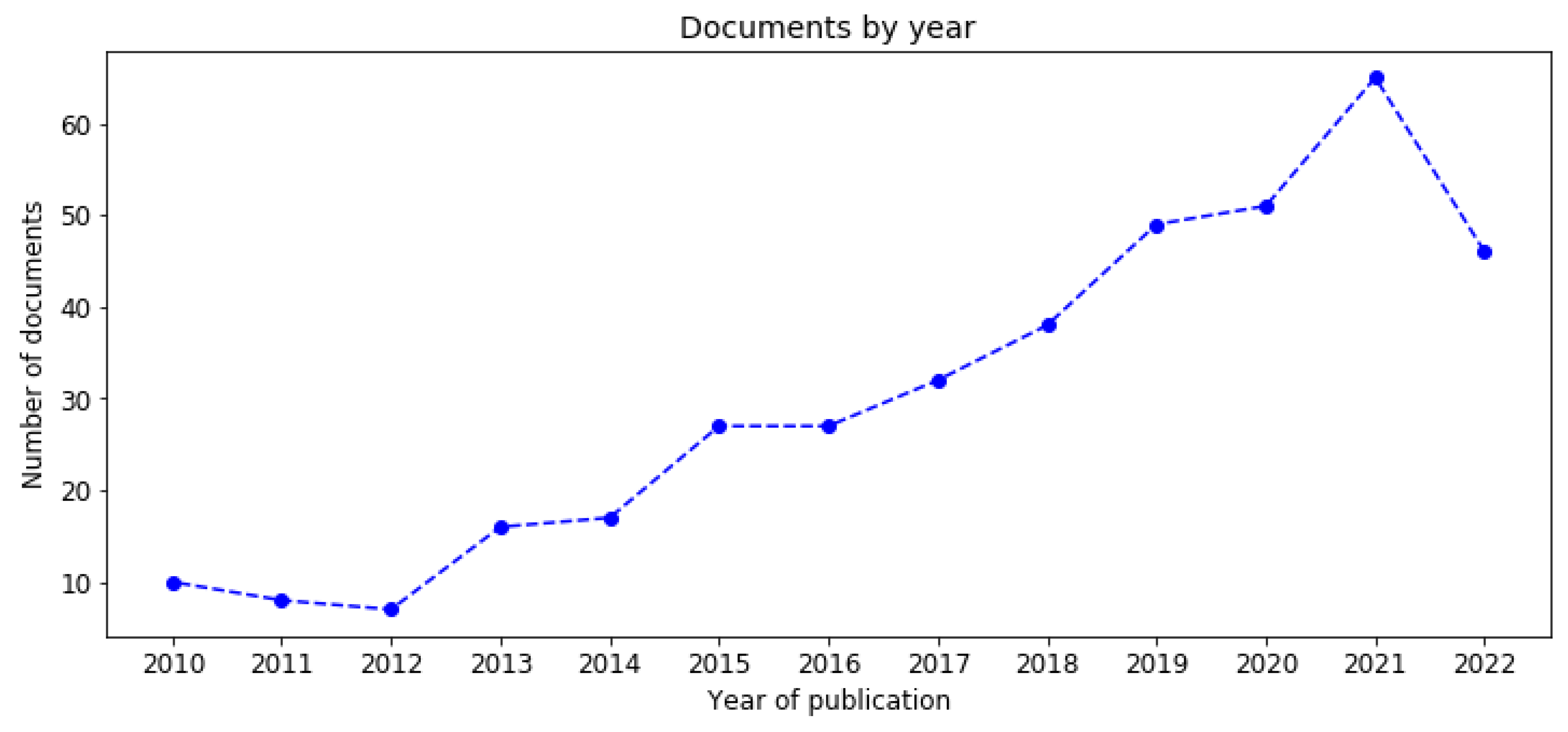

- Only articles published between January 2010 and October 2022.

- Only papers that discuss computer vision systems on mobile phones for food recognition, volume estimation and calorie estimation.

- (1) Short conference papers;

- (2) Review articles;

- (3) Full-text not available.
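The inclusion and exclusion criteria listed above can be read as a single screening predicate. The following is a minimal sketch under stated assumptions, not the procedure the authors used: the `Record` fields, the document-type labels and the exact day boundaries of the publication window are hypothetical, since the text gives months only.

```python
from dataclasses import dataclass
from datetime import date

# Publication window from the criteria; the day boundaries are an assumption.
WINDOW_START = date(2010, 1, 1)
WINDOW_END = date(2022, 10, 31)

# Exclusion labels (1) and (2); the label strings themselves are hypothetical.
EXCLUDED_TYPES = {"short_conference_paper", "review"}


@dataclass
class Record:
    """Hypothetical bibliographic record; field names are illustrative only."""
    title: str
    language: str
    published: date
    doc_type: str              # e.g. "article", "review", "short_conference_paper"
    full_text_available: bool
    on_topic: bool             # mobile computer vision for food recognition, volume or calories


def is_eligible(record: Record) -> bool:
    """Return True when a record meets every inclusion rule and no exclusion rule."""
    return (
        record.language.lower() == "english"
        and WINDOW_START <= record.published <= WINDOW_END
        and record.on_topic
        and record.doc_type not in EXCLUDED_TYPES
        and record.full_text_available
    )
```

In practice the `on_topic` judgement is made by a human reviewer during screening; the sketch only shows how the stated rules combine.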

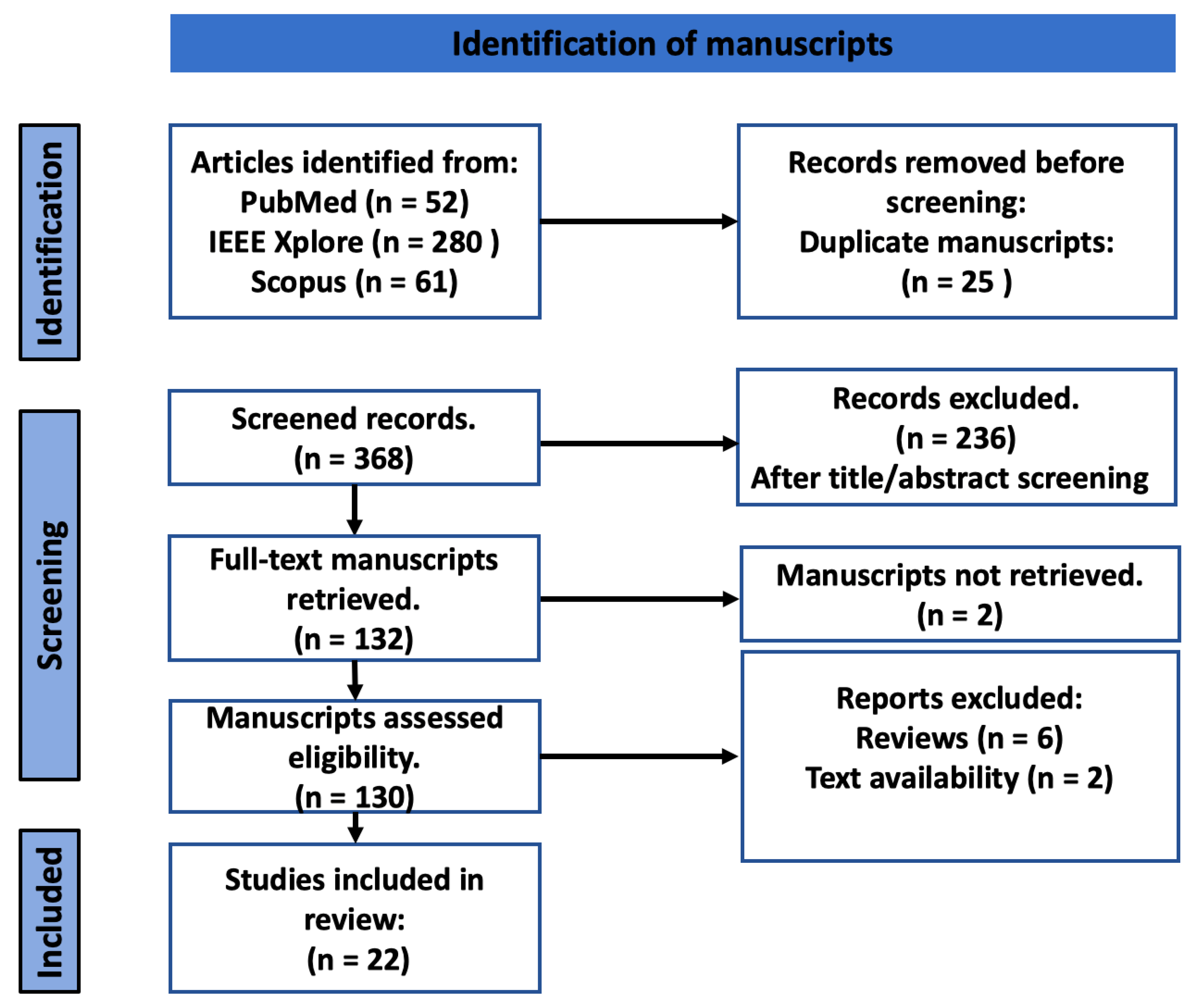

2.1. Search Methods

2.2. Selection of Studies

- Assessment of the title;

- Assessment of the abstract;

- Assessment of the full article.

2.3. Data Extraction

3. Results

3.1. Study Selection

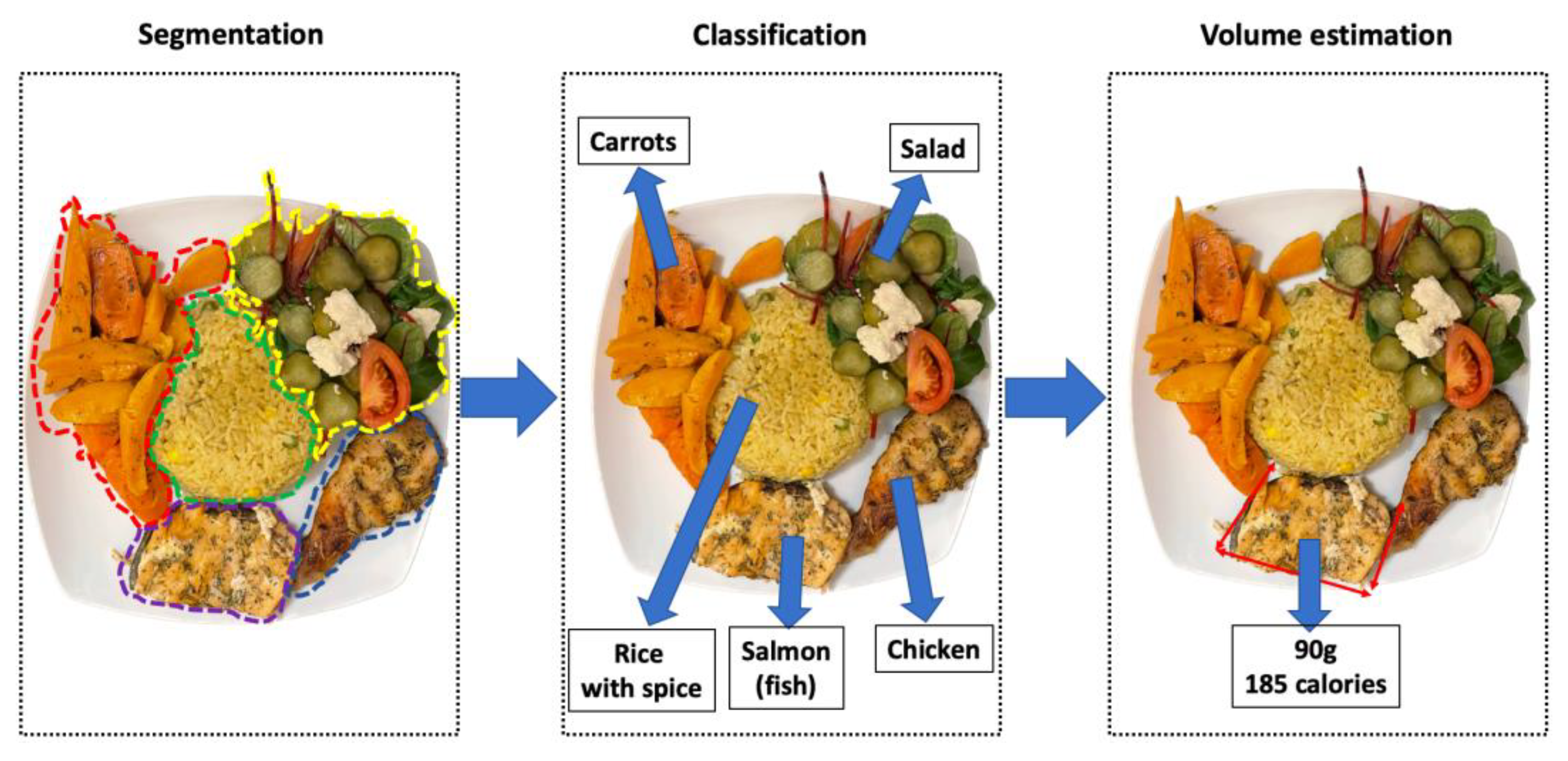

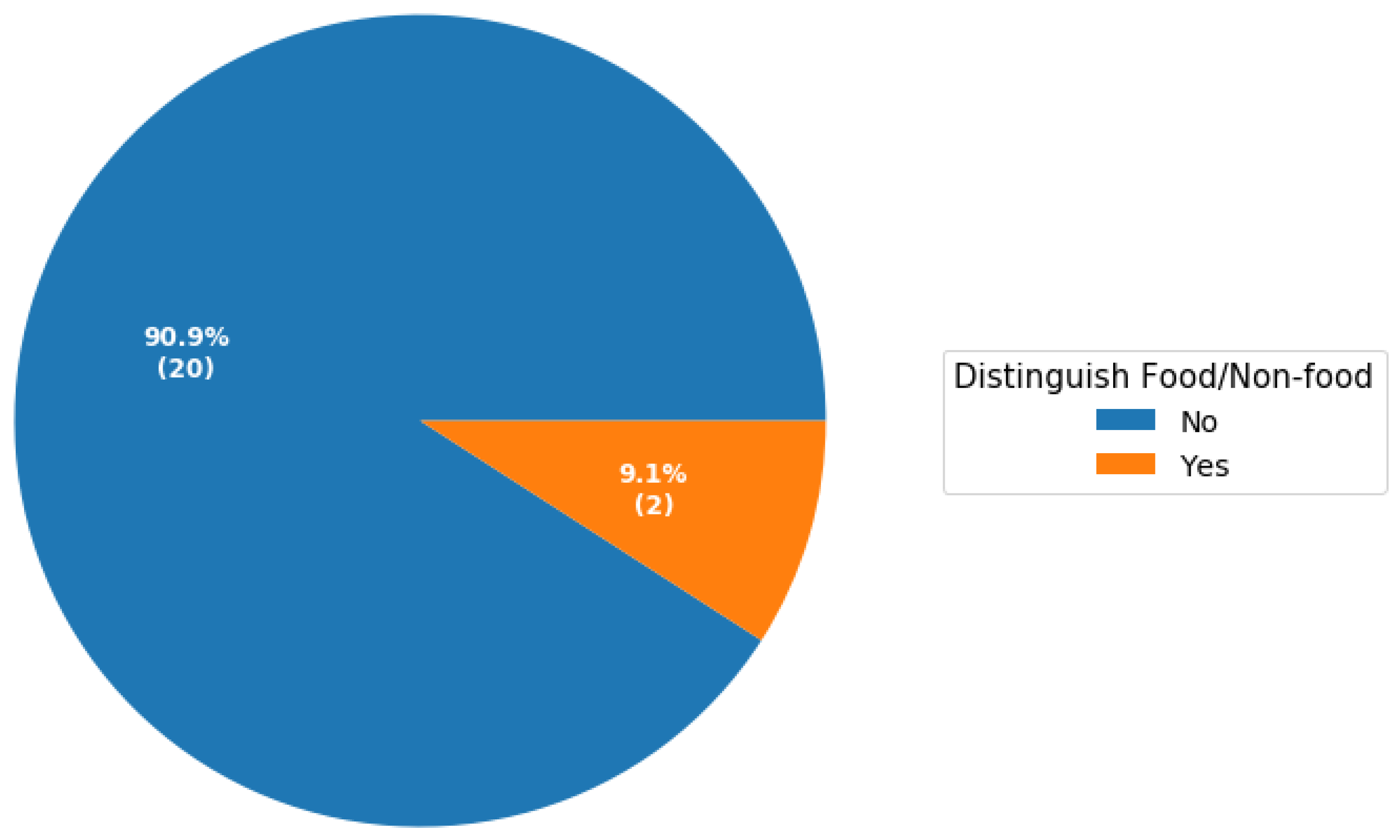

3.2. Food Recognition

| Author | Focus | App Name | Dataset (Categories) | Algorithm | Features | Accuracy (Top 5) | Distinguish Food/Non-food | Explainability | Mobile Platform |

|---|---|---|---|---|---|---|---|---|---|

| Kawano and Yanai [33] 2013 | Food recognition | FoodCam | 6781 images (50) | Linear SVM | histogram, Bag-of-SURF. | (81.55%) | No | No | Android |

| Zhang et al. [21] 2015 | Food recognition | Snap-n-Eat | (15) | Linear SVM | Colour, HOG, SIFT, gradient. | 85% | No | No | Android |

| Mezgec and Seljak [25] 2017 | Food and drink recognition | - | 225,953 images (520) | Deep CNN adapted from AlexNet | CNN-based features | 86.39% (55%) | No | No | Mobile-web |

| Silva et al. [22] 2018 | Food recognition | - | Extended Food-101 | Quadratic SVM | Gabor and SURF features. | - | No | No | Android |

| Temdee and Uttama [28] 2017 | Food recognition | - | 2500 images (40) | CNN | Filter based on three RGB colour channels. | 75.2% | No | No | Mobile-web |

| Termritthikun, Muneesawang, and Kanprachar [34] 2017 | Food recognition | - | THFOOD-50 | CNN | CNN-based features | 69.8% (92.3%) | No | No | Android |

| Tiankaew et al. [29] 2018 | Food recognition | Calpal | 7632 images (13) | CNN and adapting VGG19 | CNN-based features | 82% | No | No | Cross-platform (Android and iOS) |

| Qayyum and Şah [23] 2018 | Food image recognition | - | 5000 images | Modified CNN | CNN-based features | 86.97% (97.42%) | No | No | iOS |

| Sahoo et al. [35] 2019 | Food image recognition | FoodAI | FoodAI-756 | Transfer learning, CNN | CNN-based features | 80.09% | No | No | Mobile-web |

| Park et al. [30] 2019 | Food recognition | - | 92,000 images (23) | DCNN | CNN-based features | 91.3% | No | No | Mobile-web |

| Kayikci, Basol and Dörter [36] 2019 | Food classification | Türk Mutfağı | Food24 | CNN | CNN-based features | 93% | No | No | iOS |

| Freitas, Cordeiro and Macario [31] 2020 | Food segmentation and classification | MyFood | 1250 images (9) | Mask RCNN | CNN-based features | IoU = 0.70 | No | No | Cross-platform (Android and iOS) |

| Cornejo et al. [26] 2021 | Food recognition | NutriCAM | 3600 (36) | CNN | CNN-based features | 85% | No | No | Cross-platform (Android and iOS) |

| Tahir and Loo [27] 2021 | Food image analysis | - | Food/Non-Food, Food101, UECFood100, UECFood256, Malaysian Food | MobileNetV3 | CNN-based features with fine-tuning. | Food/Non-Food: 99.12%; Food101: 80.80%; UECFood100: 80.40%; UECFood256: 68.50%; Malaysian Food: 71.2% | Yes | Yes | Android |
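The recognition results above are reported as top-1 accuracy, top-5 accuracy (in parentheses) and, for the segmentation-based MyFood system, intersection over union (IoU). As a minimal sketch of how these metrics are conventionally computed (the function names and the plain-list inputs are illustrative assumptions, not taken from any of the reviewed systems):

```python
def top_k_accuracy(scores, labels, k=5):
    """Fraction of samples whose true label is among the k highest-scoring classes.

    scores: list of per-class score lists, one per sample.
    labels: list of true class indices, one per sample.
    """
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices ordered from highest to lowest score.
        ranked = sorted(range(len(row)), key=lambda i: row[i], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return hits / len(labels)


def mask_iou(pred, truth):
    """Intersection over union of two same-sized binary masks (nested lists of 0/1)."""
    intersection = 0
    union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # Two empty masks are treated as a perfect match by convention here.
    return intersection / union if union else 1.0
```

Top-1 accuracy is the special case `k=1`. Because the studies use different datasets and numbers of categories, the figures in the table are not directly comparable even when the same metric is reported.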

3.3. Volume Estimate

| Author | Focus | Dataset (Categories) | Method | Features | Result (Error) | Explainability | Application Name |

|---|---|---|---|---|---|---|---|

| Zhu et al. [41] 2010 | Volume estimation | 3000 images | Step 1: camera calibration. Step 2: 3D volume reconstruction. (multi-view) | Fiducial markers | Error: 1% | No | - |

| Zhang et al. [21] 2015 | Calorie estimation | (15) | Counting pixels in each segmented item. Additionally, using the depth of the image. (single-view) | SIFT features and HOG features. | 85% | No | Snap-n-Eat |

| Akpa et al. [42] 2016 | Volume estimation | 119 images | Image processing with chopstick | - | Error: 6.8% | No | - |

| Rhyner et al. [37] 2016 | Carbohydrate estimation | 19 adults (n = 60 dishes; 6 dishes a day) | 3D model and segmentation. (multi-view) | Colour and texture. | (Error: 18.7%) | No | GoCarb |

| Okamoto and Yanai [43] 2016 | Calorie estimation | 60 test images (20) | Quadratic curve estimation. (single-view) | 2D size of food | Error: 21.3% | No | - |

| Silva et al. [22] 2018 | Estimate weight and calories | Food-101 | Estimated food volume from segmented food, with fingers as reference. (single-view) | CNN-based features | (Error: ±5% and 8% of ground truth) | No | - |

| Tiankaew et al. [29] 2018 | Calorie estimation | (13) | Compute calories. (single-view) | User information and calorie table. | - | No | Calpal |

| Gao et al. [44] 2019 | Volume estimation | SUEC Food | Multi-task CNN (single-view) | Deep CNN | Error: Chicken: 2.7%; Fried pork: 12.3%; Congee: −0.27% | No | MUSEFood |

| Sowah et al. [45] 2020 | Calorie estimation and recommendations | 300 (25) | Use the Harris–Benedict equation to determine calorie requirements. (single-view) | Patient data | - | No | - |

| Tomescu [24] 2020 | Volume estimation | 80,000 (382) | CNN EfficientNet (multi-view) | Depth maps, shape. | 10% volume overestimation. | No | - |

| Herzig et al. [46] 2020 | Volume estimation | 48 meals (128 items) | CNN for segmentation (single-view) | Depth sensing | Absolute error (SD): 35.1 g (42.8 g; 14% [12.2%]) | No | - |
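One entry in the table above derives calorie requirements from patient data using the Harris–Benedict equation. As a minimal sketch, the original 1919 form of the equation can be written as below. Which variant and which activity multipliers Sowah et al. used is not stated here, so the rounded coefficients and the caller-supplied `activity_factor` are assumptions for illustration.

```python
def harris_benedict_bmr(sex, weight_kg, height_cm, age_years):
    """Basal metabolic rate in kcal/day (original 1919 coefficients, rounded).

    Assumption: the reviewed system may use a revised variant of the equation.
    """
    if sex == "male":
        return 66.47 + 13.75 * weight_kg + 5.003 * height_cm - 6.755 * age_years
    if sex == "female":
        return 655.1 + 9.563 * weight_kg + 1.850 * height_cm - 4.676 * age_years
    raise ValueError("sex must be 'male' or 'female'")


def daily_calorie_requirement(bmr, activity_factor):
    """Scale BMR by an activity multiplier chosen by the caller (hypothetical input)."""
    if activity_factor <= 0:
        raise ValueError("activity_factor must be positive")
    return bmr * activity_factor


# Example call: a 30-year-old male, 70 kg, 175 cm, with an illustrative multiplier.
bmr = harris_benedict_bmr("male", 70, 175, 30)
requirement = daily_calorie_requirement(bmr, 1.2)
```

Note that this estimates how much energy a person needs, not how much a pictured meal contains. An application of this kind still has to recognise the food and estimate its portion before the two figures can be compared.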

3.4. Strengths and Weaknesses of Computer Vision Applications for Dietary Assessment

3.5. Explainability

3.6. Statistical Analyses

4. Discussion

4.1. Findings

4.2. Challenges and Outlook

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. WHO Regional Office for Europe. WHO European Regional Obesity Report. 2022. Available online: https://apps.who.int/iris/bitstream/handle/10665/353747/9789289057738-eng.pdf (accessed on 28 October 2022).

2. Bray, G.A.; Popkin, B.M. Dietary fat intake does affect obesity! Am. J. Clin. Nutr. 1998, 68, 1157–1173.

3. Munger, R.G.; Folsom, A.R.; Kushi, L.; Kaye, S.A.; Sellers, T.A. Dietary Assessment of Older Iowa Women with a Food Frequency Questionnaire: Nutrient Intake, Reproducibility, and Comparison with 24-Hour Dietary Recall Interviews. Am. J. Epidemiol. 1992, 136, 192–200.

4. Sen, S.; Subbaraju, V.; Misra, A.; Balan, R.K.; Lee, Y. The case for smartwatch-based diet monitoring. In Proceedings of the 2015 IEEE International Conference on Pervasive Computing and Communication Workshops (PerCom Workshops), St. Louis, MO, USA, 23–27 March 2015; pp. 585–590.

5. Jiang, L.; Qiu, B.; Liu, X.; Huang, C.; Lin, K. DeepFood: Food Image Analysis and Dietary Assessment via Deep Model. IEEE Access 2020, 8, 47477–47489.

6. Yu, K.-H.; Beam, A.L.; Kohane, I.S. Artificial intelligence in healthcare. Nat. Biomed. Eng. 2018, 2, 719–731.

7. Gemming, L.; Utter, J.; Ni Mhurchu, C. Image-Assisted Dietary Assessment: A Systematic Review of the Evidence. J. Acad. Nutr. Diet. 2015, 115, 64–77.

8. Vu, T.; Lin, F.; Alshurafa, N.; Xu, W. Wearable Food Intake Monitoring Technologies: A Comprehensive Review. Computers 2017, 6, 4.

9. Boushey, C.J.; Spoden, M.; Zhu, F.M.; Delp, E.J.; Kerr, D.A. New Mobile Methods for Dietary Assessment: Review of Image-Assisted and Image-Based Dietary Assessment Methods. Proc. Nutr. Soc. 2017, 76, 283–294.

10. Lo, F.P.W.; Sun, Y.; Qiu, J.; Lo, B. Image-Based Food Classification and Volume Estimation for Dietary Assessment: A Review. IEEE J. Biomed. Health Inform. 2020, 24, 1926–1939.

11. Tahir, G.A.; Loo, C.K. A Comprehensive Survey of Image-Based Food Recognition and Volume Estimation Methods for Dietary Assessment. Healthcare 2021, 9, 1676.

12. Mezgec, S.; Seljak, B.K. Deep Neural Networks for Image-Based Dietary Assessment. J. Vis. Exp. 2021, 169, e61906.

13. Tucci, V.; Saary, J.; Doyle, T.E. Factors influencing trust in medical artificial intelligence for healthcare professionals: A narrative review. J. Med. Artif. Intell. 2022, 5, 4.

14. Floridi, L.; Cowls, J.; Beltrametti, M.; Chatila, R.; Chazerand, P.; Dignum, V.; Luetge, C.; Madelin, R.; Pagallo, U.; Rossi, F.; et al. AI4People—An Ethical Framework for a Good AI Society: Opportunities, Risks, Principles, and Recommendations. Minds Mach. 2018, 28, 689–707.

15. Chazette, L.; Brunotte, W.; Speith, T. Exploring Explainability: A Definition, a Model, and a Knowledge Catalogue. In Proceedings of the 2021 IEEE 29th International Requirements Engineering Conference (RE), Notre Dame, IN, USA, 20–24 September 2021.

16. Stefana, E.; Marciano, F.; Rossi, D.; Cocca, P.; Tomasoni, G. Wearable Devices for Ergonomics: A Systematic Literature Review. Sensors 2021, 21, 777.

17. Shamseer, L.; Moher, D.; Clarke, M.; Ghersi, D.; Liberati, A.; Petticrew, M.; Shekelle, P.; Stewart, L.A.; PRISMA-P Group. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015: Elaboration and explanation. BMJ 2015, 349, g7647.

18. Liberati, A.; Altman, D.G.; Tetzlaff, J.; Mulrow, C.; Gotzsche, P.C.; Ioannidis, J.P.A.; Clarke, M.; Devereaux, P.J.; Kleijnen, J.; Moher, D. The PRISMA Statement for Reporting Systematic Reviews and Meta-Analyses of Studies That Evaluate Healthcare Interventions: Explanation and Elaboration. BMJ (Clin. Res. Ed.) 2009, 339, b2700.

19. Stefana, E.; Marciano, F.; Cocca, P.; Alberti, M. Predictive models to assess Oxygen Deficiency Hazard (ODH): A systematic review. Saf. Sci. 2015, 75, 1–14.

20. Kawano, Y.; Yanai, K. Real-Time Mobile Food Recognition System. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition Workshops, Portland, OR, USA, 23–28 June 2013; pp. 1–7.

21. Zhang, W.; Yu, Q.; Siddiquie, B.; Divakaran, A.; Sawhney, H. “Snap-n-Eat”. J. Diabetes Sci. Technol. 2015, 9, 525–533.

22. E Silva, B.V.R.; Rad, M.G.; Cui, J.; McCabe, M.; Pan, K. A Mobile-Based Diet Monitoring System for Obesity Management. J. Health Med. Inform. 2018, 9.

23. Qayyum, O.; Sah, M. IOS Mobile Application for Food and Location Image Prediction using Convolutional Neural Networks. In Proceedings of the 2018 IEEE 5th International Conference on Engineering Technologies and Applied Sciences (ICETAS), Bangkok, Thailand, 22–23 November 2018; pp. 1–6.

24. Tomescu, V.-I. FoRConvD: An approach for food recognition on mobile devices using convolutional neural networks and depth maps. In Proceedings of the 2020 IEEE 14th International Symposium on Applied Computational Intelligence and Informatics (SACI), Timisoara, Romania, 21–23 May 2020; pp. 000129–000134.

25. Mezgec, S.; Seljak, B.K. NutriNet: A Deep Learning Food and Drink Image Recognition System for Dietary Assessment. Nutrients 2017, 9, 657.

26. Cornejo, L.; Urbano, R.; Ugarte, W. Mobile Application for Controlling a Healthy Diet in Peru Using Image Recognition. In Proceedings of the 2021 30th Conference of Open Innovations Association FRUCT, Oulu, Finland, 27–29 October 2021; pp. 32–41.

27. Tahir, G.A.; Loo, C.K. Explainable deep learning ensemble for food image analysis on edge devices. Comput. Biol. Med. 2021, 139, 104972.

28. Temdee, P.; Uttama, S. Food recognition on smartphone using transfer learning of convolution neural network. In Proceedings of the 2017 Global Wireless Summit (GWS), Cape Town, South Africa, 15–18 October 2017; pp. 132–135.

29. Tiankaew, U.; Chunpongthong, P.; Mettanant, V. A Food Photography App with Image Recognition for Thai Food. In Proceedings of the 2018 Seventh ICT International Student Project Conference (ICT-ISPC), Nakhonpathom, Thailand, 11–13 July 2018; pp. 1–6.

30. Park, S.-J.; Palvanov, A.; Lee, C.-H.; Jeong, N.; Cho, Y.-I.; Lee, H.-J. The development of food image detection and recognition model of Korean food for mobile dietary management. Nutr. Res. Pract. 2019, 13, 521–528.

31. Freitas, C.N.C.; Cordeiro, F.R.; Macario, V. MyFood: A Food Segmentation and Classification System to Aid Nutritional Monitoring. In Proceedings of the 2020 33rd SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), Porto de Galinhas, Brazil, 7–10 November 2020; pp. 234–239.

32. Zheng, J.; Zou, L.; Wang, Z.J. Mid-level deep Food Part mining for food image recognition. IET Comput. Vis. 2018, 12, 298–304.

33. Kawano, Y.; Yanai, K. FoodCam: A real-time food recognition system on a smartphone. Multimedia Tools Appl. 2014, 74, 5263–5287.

34. Termritthikun, C.; Muneesawang, P.; Kanprachar, S. NU-InNet: Thai food image recognition using Convolutional Neural Networks on smartphone. J. Telecommun. Electron. Comput. Eng. 2017, 9, 63–67.

35. Sahoo, D.; Hao, W.; Ke, S.; Xiongwei, W.; Le, H.; Achananuparp, P.; Lim, E.-P.; Hoi, S.C.H. FoodAI: Food image recognition via deep learning for smart food logging. In Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery and Data Mining; Association for Computing Machinery: New York, NY, USA, 2019; pp. 2260–2268.

36. Kayikci, S.; Basol, Y.; Dorter, E. Classification of Turkish Cuisine With Deep Learning on Mobile Platform. In Proceedings of the 2019 4th International Conference on Computer Science and Engineering (UBMK), Samsun, Turkey, 11–15 September 2019; pp. 1–5.

37. Rhyner, D.K.; Loher, H.; Dehais, J.B.; Anthimopoulos, M.; Shevchik, S.; Botwey, R.H.; Duke, D.; Stettler, C.; Diem, P.; Mougiakakou, S. Carbohydrate Estimation by a Mobile Phone-Based System Versus Self-Estimations of Individuals With Type 1 Diabetes Mellitus: A Comparative Study. J. Med. Internet Res. 2016, 18, e101.

38. Morley-John, J.; Swinburn, B.A.; Metcalf, P.A.; Raza, F.; Wright, H. The Risks of Everyday Life: Fat content of chips, quality of frying fat and deep-frying practices in New Zealand fast food outlets. Aust. New Zealand J. Public Health 2002, 26, 101–107.

39. Lo, F.P.-W.; Sun, Y.; Qiu, J.; Lo, B.P.L. Point2Volume: A Vision-Based Dietary Assessment Approach Using View Synthesis. IEEE Trans. Ind. Inform. 2019, 16, 577–586.

40. Lu, Y.; Stathopoulou, T.; Mougiakakou, S. Partially Supervised Multi-Task Network for Single-View Dietary Assessment. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR); IEEE: Piscataway, NJ, USA, 2021; pp. 8156–8163.

41. Zhu, F.; Bosch, M.; Boushey, C.J.; Delp, E.J. An image analysis system for dietary assessment and evaluation. In Proceedings of the International Conference on Image Processing, ICIP, Hong Kong, China, 26–29 September 2010; pp. 1853–1856.

42. Akpa, E.A.H.; Suwa, H.; Arakawa, Y.; Yasumoto, K. Food weight estimation using smartphone and cutlery. In IoTofHealth 2016, Proceedings of the 1st Workshop on IoT-Enabled Healthcare and Wellness Technologies and Systems, Co-Located with MobiSys; Association for Computing Machinery: New York, NY, USA, 2016; pp. 9–14.

43. Okamoto, K.; Yanai, K. An automatic calorie estimation system of food images on a smartphone. In MADiMa 2016, Proceedings of the 2nd International Workshop on Multimedia Assisted Dietary Management, Co-Located with ACM Multimedia; Association for Computing Machinery: New York, NY, USA, 2016; pp. 63–70.

44. Gao, J.; Tan, W.; Ma, L.; Wang, Y.; Tang, W. MUSEFood: Multi-sensor-based food volume estimation on smartphones. In Proceedings of the 2019 IEEE SmartWorld, Ubiquitous Intelligence and Computing, Advanced and Trusted Computing, Scalable Computing and Communications, Internet of People and Smart City Innovation, SmartWorld/UIC/ATC/SCALCOM/IOP/SCI, Leicester, UK, 19–23 August 2019; pp. 899–906.

45. Sowah, R.A.; Bampoe-Addo, A.A.; Armoo, S.K.; Saalia, F.K.; Gatsi, F.; Sarkodie-Mensah, B. Design and Development of Diabetes Management System Using Machine Learning. Int. J. Telemed. Appl. 2020, 2020, 1–17.

46. Herzig, D.; Nakas, C.T.; Stalder, J.; Kosinski, C.; Laesser, C.; Dehais, J.; Jaeggi, R.; Leichtle, A.B.; Dahlweid, F.-M.; Stettler, C.; et al. Volumetric Food Quantification Using Computer Vision on a Depth-Sensing Smartphone: Preclinical Study. JMIR mHealth uHealth 2020, 8, e15294.

47. Dolui, K.; Michiels, S.; Hughes, D.; Hallez, H. Context Aware Adaptive ML Inference in Mobile-Cloud Applications. In Proceedings of the 2022 IEEE 23rd International Symposium on a World of Wireless, Mobile and Multimedia Networks (WoWMoM), Belfast, UK, 14–17 June 2022; pp. 90–99.

48. Termritthikun, C.; Kanprachar, S. NU-ResNet: Deep residual networks for Thai food image recognition. J. Telecommun. Electron. Comput. Eng. 2018, 10, 29–33.

49. Lundberg, S.M.; Lee, S.I. A unified approach to interpreting model predictions. Adv. Neural Inf. Process. Syst. 2017, 30, 4765–4774.

50. Kumar, I.E.; Venkatasubramanian, S.; Scheidegger, C.; Friedler, S. Problems with Shapley-value-based explanations as feature importance measures. arXiv 2020, arXiv:2002.11097.

51. Frye, C.; Rowat, C.; Feige, I. Asymmetric Shapley values: Incorporating causal knowledge into model-agnostic explainability. arXiv 2019, arXiv:1910.06358.

52. Islam, S.R.; Eberle, W.; Bundy, S.; Ghafoor, S.K. Infusing domain knowledge in AI-based ‘black box’ models for better explainability with application in bankruptcy prediction. arXiv 2019, arXiv:1905.11474.

53. Min, W.; Jiang, S.; Liu, L.; Rui, Y.; Jain, R. A Survey on Food Computing. ACM Comput. Surv. 2020, 52, 92.

54. Subhi, M.A.; Ali, S.H.; Mohammed, M.A. Vision-Based Approaches for Automatic Food Recognition and Dietary Assessment: A Survey. IEEE Access 2019, 7, 35370–35381.

55. Yanai, K.; Kawano, Y. Food Image Recognition Using Deep Convolutional Network with Pre-Training and Fine-Tuning. In Proceedings of the 2015 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Turin, Italy, 29 June–3 July 2015.

56. Park, H.; Bharadhwaj, H.; Lim, B.Y. Hierarchical Multi-Task Learning for Healthy Drink Classification. In Proceedings of the 2019 International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 14–19 July 2019; pp. 1–8.

| Criteria | Definition |

|---|---|

| Language of manuscript: | English |

| Years of publication: | 2010–2022 |

| Fields: | |

| The type of solutions considered: | Computer vision |

| Types of device(s): | Mobile applications |

| Search Database | Search Keywords |

|---|---|

| PubMed | (Nutritional monitoring [Title/Abstract]) AND (computer vision [Title/Abstract]) AND (artificial intelligence [Title/Abstract]) AND (smartphone [Title/Abstract]) AND (mobile [Title/Abstract]) OR (food recognition [Title/Abstract]) OR (Food images recognition [Title/Abstract]) |

| IEEE Xplore | (“Abstract”: Nutritional monitoring) AND (“Abstract”: computer vision) OR (“Abstract”: Food images recognition) OR (“Abstract”: food image recognition) AND (“Abstract”: artificial intelligence) AND (“Abstract”: smartphone) AND (“Abstract”: Mobile) |

| Scopus | TITLE-ABS-KEY(“food image recognition” OR “food images recognition” OR “food volume estimation” OR “volume estimation” OR “nutritional monitoring”) AND TITLE-ABS-KEY-AUTH (“mobile device” OR “Mobile devices” OR “Smartphone” OR “Edge device”) |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Amugongo, L.M.; Kriebitz, A.; Boch, A.; Lütge, C. Mobile Computer Vision-Based Applications for Food Recognition and Volume and Calorific Estimation: A Systematic Review. Healthcare 2023, 11, 59. https://doi.org/10.3390/healthcare11010059
