1. Introduction

The role of radiotherapy in oncology has grown over the last decade as technological advances have continued [1,2]. Providing access to timely and appropriate radiotherapy services is crucial to minimize radiotoxicity and optimize patient outcomes [3,4,5].

In Ontario, Canada, 15 regional cancer centers (RCCs) provide radiotherapy services to the province's 14.5 million residents. To promote access, RCCs are distributed across the province and vary in size, availability of specialized equipment, and extent of clinical expertise [6]. Although the provincial health authority devised a plan in 2015 to improve the performance of its cancer treatment centers through continuous improvement cycles, the challenge became how to compare centers, identify improvement opportunities and best practices, and subsequently implement them across all its centers [7]. Given the heterogeneity of center attributes and available resources, measuring the centers' performances against the same benchmarks may not be a fair assessment. For RCCs with a similar set of resources (inputs), one would expect similar levels of performance, for example, in the number of patients completing treatment and the percentage of patients starting treatment within pre-specified wait-time targets (outputs). Similarly, one may expect an RCC with fewer inputs to yield lower performance. In reality, however, some RCCs may be more (or less) efficient, producing more (or fewer) outputs with the same or fewer inputs, for a variety of reasons such as patient composition, types of services provided, and whether or not the center is a teaching hospital.

Data envelopment analysis (DEA) is a linear programming technique that is widely used to compare decision-making units (DMUs) that provide similar services but operate with differing levels of resources. DEA measures the relative efficiency of each DMU, that is, how well each DMU transforms its set of inputs into desired outputs compared with its peers. Following DEA, regression analysis can be used to identify factors associated with inefficiency and to indicate how a less-efficient DMU might improve its performance [8,9,10,11,12]. (We use the terms DMU and RCC interchangeably.)

This study applies DEA and regression analysis to data from 2013 to 2016 obtained from two provincial databases that report cancer-related patient-level activity and diagnoses. DEA is used to measure the relative efficiency of Ontario RCCs in planning and delivering radiation therapy to patients treated for cancer, and regression analysis is used to identify factors associated with the resulting efficiency scores. We show how this analysis can inform the development of continuous improvement initiatives, a topic not typically discussed in DEA research. We thereby address gaps in the literature by contributing a DEA study focused on cancer care in a Canadian province, with novel managerial insights on how to interpret and act upon efficiency scores. To the best of our knowledge, this is the first study to use DEA to evaluate radiation treatment center performance, and we pair it with interpretations of model results that can initiate improvement efforts. This study also draws attention to challenges faced by provincial healthcare providers. Finally, we demonstrate how integrated benchmarks can guide decision-makers and lead to potentially beneficial collaborations between RCCs.

2. Literature Review

Özcan [13], Cantor and Poh [8], and Kohl et al. [14] explored DEA in healthcare settings, providing several examples of applications to hospitals, nursing homes, and international health studies. In particular, Cantor and Poh [8] reviewed articles that use DEA in combination with other technical approaches, such as regression or factor analysis, to measure healthcare system efficiency, and Kohl et al. [14] reviewed DEA applications specifically in hospital settings. These authors note an important disconnect between the findings of DEA and the actions taken to improve efficiency: identifying low-performing DMUs and quantifying the inputs (or outputs) that would yield 100% relative efficiency are important first steps; however, DEA does not prescribe a process for using that knowledge to achieve those targets.

Efficiency in hospitals has been studied before [10,15,16], as has the effectiveness of cancer screening programs, measured through detection rates [17,18] and cost [19,20]. However, few studies relate directly to our context, i.e., efficiency analysis of radiation centers. Langabeer and Özcan [21] applied DEA with the Malmquist index in a longitudinal study of inpatient cancer centers in the United States over a five-year period and found that greater specialization of treatment does not necessarily lead to higher efficiency or lower costs; theirs is one of the few DEA studies dedicated to cancer care. Meanwhile, Allin et al. [11] compared 89 health regions in Canada, examining the potential years of life lost that could be attributed to system inefficiencies, though their focus was not on cancer care.

Expanding on seminal work in DEA [22,23], Simar and Wilson [24] and Lothgren and Tambour [25] established bootstrapping frameworks for approximating the sampling distribution of the relative scale efficiencies, thereby enabling the construction of confidence intervals for these estimates. Bootstrap DEA can be applied in any sector, from banking [26] to education [27] to healthcare supply chains [28]. In each of these settings, regression analysis follows DEA to identify potential causes of inefficiency among the DMUs.

3. Problem Description

Each of the 15 cancer centers in Ontario has its own level of available resources and expertise (Table 1). For example, some programs are located in teaching hospitals and others are not, and each center has its own level of the specialized equipment required for radiotherapy, or medical resource level. Centers can be further distinguished by their treatment capabilities, or their “diversification”. For every center, the majority of treatments were delivered to the pelvis and chest, but certain centers had wider, more diversified “portfolios” of body regions treated (e.g., brain). This contrasts with specialization, which implies that a center treats only certain body regions and not others. We express diversification as the proportion of radiation treatments delivered to body regions other than the pelvis or chest. Finally, centers are situated across the province, where local populations vary. Catchment population refers to the census population within a 50-kilometer radius of the RCC, as determined from population counts reported in the 2011 and 2016 census reports [29,30]. Annual population growth rates for Canada [31] were used to estimate populations for 2012 to 2015, inclusive.
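The two center attributes defined above reduce to simple arithmetic, sketched below. Diversification is computed exactly as described (share of treatments outside the pelvis and chest); for the intercensal populations, the sketch assumes the 2011 census count is carried forward by compounding annual growth rates, which is our assumption, since the study does not state its exact interpolation scheme. The body-region labels, function names, and numbers are hypothetical.

```python
from collections import Counter


def diversification(body_regions):
    """Share of treatments delivered to body regions other than pelvis or chest."""
    counts = Counter(body_regions)  # missing labels count as 0
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no treatments recorded")
    return (total - counts["pelvis"] - counts["chest"]) / total


def intercensal_estimates(census_pop, growth_rates):
    """Carry a census count forward with annual growth rates (assumed scheme).

    growth_rates maps each year to the growth rate applied to reach that year.
    """
    estimates, pop = {}, census_pop
    for year in sorted(growth_rates):
        pop *= 1.0 + growth_rates[year]
        estimates[year] = pop
    return estimates


# Hypothetical inputs for illustration only (not study data).
share = diversification(["pelvis", "chest", "brain", "pelvis"])
pops = intercensal_estimates(100_000, {2012: 0.01, 2013: 0.01})
```

Here one of four treatments is outside the pelvis and chest, so the diversification share is 0.25.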

Measuring the performances of these distinct centers against the same benchmarks may not be the best assessment. Rather, their performances relative to their available inputs and respective outputs would provide a clearer picture of how efficiently they are operating compared to one another. The scope of our study is limited to cancer treatments delivered by linear accelerators, or LINACs.

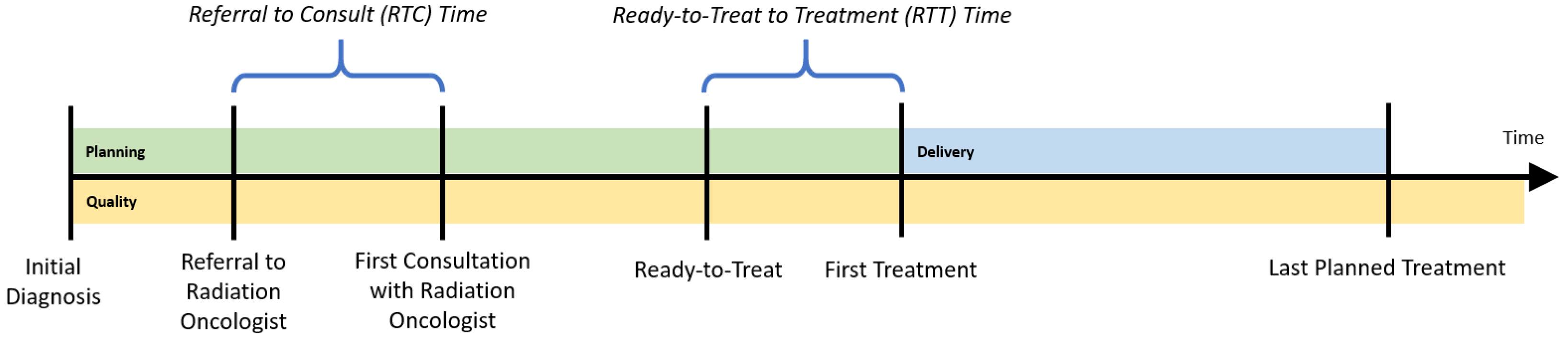

To determine the appropriate inputs and outputs for the RCCs, we follow a patient’s radiation treatment journey while considering three dimensions of treatment: planning, delivery, and quality. The planning dimension begins with the patient’s initial diagnosis date. The patient is then referred for a consultation with a radiation oncologist. The time between referral and the first consultation visit with a radiation oncologist is an important performance indicator, called referral-to-consult (RTC). If radiotherapy is indicated, the radiation oncologist develops a course of treatment and determines the date on which treatment could begin (the ready-to-treat date). The time between the ready-to-treat date and the first treatment is another important indicator, the ready-to-treat to first treatment (RTT) time. To monitor wait times for the radiation treatment program, the provincial health authority established a 14-day benchmark for both RTC and RTT wait times for all RCCs; the proportion of patients whose wait time is within that target is tracked yearly. While each patient’s course of treatment (e.g., dosages and timing of treatments) may differ, this planning phase should be consistent for all patients (Figure 1).
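The yearly RTC and RTT indicators can be computed as in the sketch below, which assumes each patient record provides a start date (referral date for RTC, ready-to-treat date for RTT) and an end date (first consultation or first treatment). The function name and record layout are illustrative, not part of the provincial reporting system. A wait of exactly 14 days counts as within target, matching the ≤14 days definition used for the DEA outputs.

```python
from datetime import date

TARGET_DAYS = 14  # provincial benchmark for both RTC and RTT waits


def share_within_target(start_dates, end_dates, target_days=TARGET_DAYS):
    """Proportion of patients whose wait (end - start, in days) is <= target_days."""
    waits = [(end - start).days for start, end in zip(start_dates, end_dates)]
    if not waits:
        raise ValueError("no patients")
    return sum(w <= target_days for w in waits) / len(waits)


# Hypothetical RTC example: referral dates and first-consultation dates.
referrals = [date(2016, 1, 1)] * 3
consults = [date(2016, 1, 10), date(2016, 1, 15), date(2016, 1, 20)]
rtc_share = share_within_target(referrals, consults)
```

The waits here are 9, 14, and 19 days, so two of the three patients meet the target.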

Once a plan is in place, radiation treatments are administered according to that plan (the delivery dimension of treatment). This requires patients to visit their respective regional cancer centers on specific dates and times (e.g., radiation is applied Monday through Friday for 3 weeks). The number and timing of treatments that can be delivered at a center depend upon that center’s availability and utilization of its resources, which comprise medical equipment such as LINACs.

Patient support visits and quality assurance visits may also be booked around these treatment visits to minimize the number of center visits required of the patient. Patient support visits consist of patient education and the coordination and scheduling of radiation-related visits. Quality assurance visits include the use of thermoluminescent dosimeters on the patient, acquisition of portal or volumetric images, use of active breathing control or respiratory gating equipment, manual calculations, fluence/dosimetry checks, and peer review. These activities are captured in the quality dimension of treatment planning and delivery.

Figure 1 illustrates the approximate timing of each treatment dimension. Although several visits may occur simultaneously without requiring the patient to change locations within the center, we consider them to be distinct. This allows resources such as the number of staff, technicians, and physicians to be incorporated indirectly in the DEA model when information on full-time equivalent staffing levels is not readily available.

3.1. Data

Data from 2013 to 2016 were examined from two databases: Activity Level Reporting (ALR), which reports patient-level activity within the cancer system, and the Ontario Cancer Registry (OCR), a database of Ontario residents who have been diagnosed with cancer or have died of cancer. From ALR, the total number of visits by type (e.g., planning and simulation) and the number of incident cases (i.e., newly diagnosed cancer cases) were gathered. Each patient was assigned to an RCC based on the location of their first treatment. The number of deaths by year and by RCC was calculated by linking to the OCR and the Registered Persons Database, which hold information on Ontario residents’ access to public health services. The proportion of patients whose RTC and RTT wait times were within the provincial target of 14 days was calculated, along with the total number of radiation treatments delivered to specific body regions. From both ALR and OCR, the number of new cancer diagnoses following a patient’s first diagnosis was identified as a surrogate for the subsequent cancer diagnosis rate. Publicly available sources were consulted for teaching hospital designations [32] and for data on medical resource (MR) capacity and utilization [33,34].

3.2. Privacy and Software

This study was approved by the Western University Health Sciences Research Ethics Board. DEA was performed with the CPLEX 12.10 optimization solver via its Python API (Python version 3.6), and regression analysis was performed with the statistical software R (version 3.6.0).

4. Computing Relative Efficiencies

To compare the performances of RCCs between 2013 and 2016, we constructed a DEA model that computes a relative efficiency score for each center in each of the three treatment dimensions and each year. We posit that the provincial health authority has more control over its inputs than its outputs and that resources are limited in our problem setting (as is typical in healthcare environments), so the DEA was formulated as an input-oriented, variable-returns-to-scale (VRS) model, in which economies of scale may exist (i.e., we do not assume constant returns to scale). In other words, the VRS assumption is more general and does not require that a change in the inputs produce a proportional change in the outputs. Details of the mathematical formulation of the input-oriented VRS DEA model can be found in Appendix A.1; we note once again that RCCs are analogous to decision-making units (DMUs).

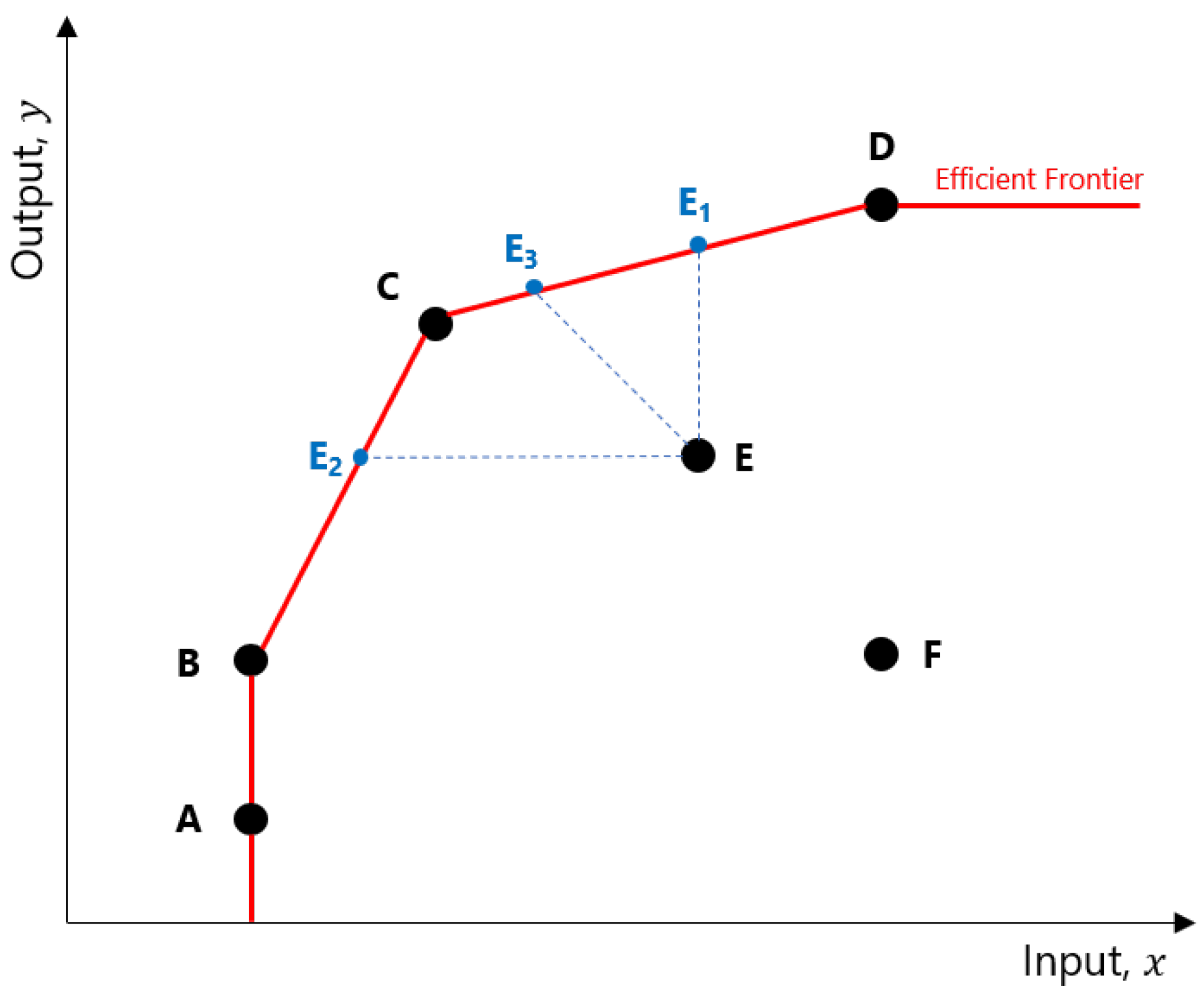

The relative efficiencies computed in a DEA model indicate how well an RCC transforms its inputs into outputs, relative to other RCCs. Consider Figure 2. Points A through F represent different RCCs and the levels of output they achieve given their inputs. In this example, DEA identifies RCCs A, B, C, and D as efficient, and joining their coordinates forms the efficient frontier. An inefficient RCC such as E can improve its relative efficiency in several ways: increase its output without changing its input (move to $E_1$), use fewer inputs to produce the same level of output (move to $E_2$), or adjust both inputs and outputs so as to reach the frontier (move to $E_3$).
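In the single-input, single-output case sketched in Figure 2, the input-oriented VRS score of an inefficient RCC such as E can be read off the piecewise-linear frontier: it is the smallest frontier input that still produces E's output, divided by E's actual input. The sketch below assumes hypothetical coordinates for A to D and for E (they are not read from the figure) and assumes A to D are already known to be efficient; in general the frontier is not known in advance, which is why a linear program is solved for each RCC.

```python
def frontier_input(frontier, y):
    """Smallest input on a one-input, one-output VRS frontier that yields output y."""
    pts = sorted(frontier, key=lambda p: p[1])  # efficient points ordered by output
    if y <= pts[0][1]:
        return pts[0][0]  # the smallest efficient unit already produces y
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        if y0 <= y <= y1:
            if y1 == y0:
                return x0
            return x0 + (y - y0) * (x1 - x0) / (y1 - y0)  # interpolate along the facet
    raise ValueError("no efficient unit produces this much output")


def frontier_output(frontier, x):
    """Largest output on the same frontier attainable with input x."""
    pts = sorted(frontier)  # efficient points ordered by input
    if x < pts[0][0]:
        raise ValueError("input below every efficient unit")
    if x >= pts[-1][0]:
        return pts[-1][1]
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        if x0 <= x <= x1:
            if x1 == x0:
                return max(y0, y1)
            return y0 + (x - x0) * (y1 - y0) / (x1 - x0)


# Hypothetical (input, output) coordinates for efficient RCCs A, B, C, D and inefficient E.
frontier = [(1, 1), (2, 3), (4, 5), (7, 6)]
x_e, y_e = 6, 4
theta_e = frontier_input(frontier, y_e) / x_e  # input-oriented VRS score of E
e2 = (theta_e * x_e, y_e)                      # E_2: same output, less input
e1 = (x_e, frontier_output(frontier, x_e))     # E_1: same input, more output
```

With these made-up points, E could produce its output with half its input, so its input-oriented score is 0.5.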

Efficiency scores for the planning dimension were generated using the numbers of clinic visits, planning visits, and simulation visits as inputs, and the percentages of patients with RTC and RTT times ≤14 days as outputs (Table 2). Efficiency scores for the delivery dimension used medical resource (MR) capacity and the inverse of the percentage of patients with RTT times ≤14 days as inputs, and MR utilization and the number of treatment visits as outputs. The inverse of the RTT percentage was used because a smaller input value is desirable in an input-oriented DEA model (i.e., a lower inverse implies that a higher proportion of patients are within the target wait-time window). Likewise, a larger output value is desirable in this type of analysis.

Lastly, efficiency in the quality dimension used the numbers of patient support visits, quality assurance visits, and treatment visits as inputs, and the survival rate and the inverse of the subsequent diagnosis rate as outputs. The subsequent diagnosis rate was measured by first identifying, for each patient in the population over all years of our study, whether each incident (i.e., cancer diagnosis) was the patient’s first or a subsequent cancer diagnosis. Then, in a given year, the total number of subsequent cancer diagnoses across the patient population was divided by the total number of cancer incidents to obtain the subsequent diagnosis rate. The complement of this rate (i.e., one minus the rate) is used in the analysis, since larger outputs are more desirable in an input-oriented VRS model.
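The procedure just described can be sketched as follows. The sketch assumes one record per cancer incident containing a patient identifier and a diagnosis date, and it treats a patient's earliest record in the extract as the first diagnosis; this record layout is hypothetical and is not the ALR or OCR schema. It returns the complement used as the DEA output.

```python
from collections import defaultdict
from datetime import date


def subsequent_diagnosis_complement(records):
    """records: (patient_id, diagnosis_date) pairs, one per cancer incident.

    Returns {year: 1 - subsequent diagnosis rate}, where the rate is the number of
    incidents that were not the patient's first diagnosis divided by all incidents.
    """
    by_patient = defaultdict(list)
    for patient_id, diagnosed in records:
        by_patient[patient_id].append(diagnosed)
    totals = defaultdict(int)      # all incidents per year
    subsequent = defaultdict(int)  # incidents after a patient's first diagnosis
    for dates in by_patient.values():
        for rank, diagnosed in enumerate(sorted(dates)):
            totals[diagnosed.year] += 1
            if rank > 0:
                subsequent[diagnosed.year] += 1
    return {year: 1.0 - subsequent[year] / totals[year] for year in sorted(totals)}


# Hypothetical records for three patients (not study data).
records = [
    ("p1", date(2013, 3, 1)), ("p1", date(2015, 6, 1)),
    ("p2", date(2015, 2, 1)),
    ("p3", date(2015, 9, 1)), ("p3", date(2016, 1, 5)),
]
complement = subsequent_diagnosis_complement(records)
```

In 2015, one of the three incidents is a second diagnosis, so the rate is 1/3 and the output value is 2/3.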

Solving the VRS DEA model once provides only a snapshot of relative efficiency scores, based on single measures of inputs and outputs at a given point in time. To better capture the variability in these efficiencies, a bootstrap DEA [24,35] computes an average efficiency and a confidence interval through sampling with replacement. This bootstrap approach (presented in Appendix A.2) repeatedly resamples efficiencies to generate fictional reference inputs and, from them, a range of efficiencies, ultimately providing an average efficiency score that better distinguishes efficient RCCs from one another, even if the differences are small.

Table 3, Table 4 and Table 5 show the efficiency scores obtained from a single solution of the VRS DEA model, along with the bias-corrected mean efficiencies and their 5th and 95th quantiles. The ranking of RCCs is, in general, consistent with that obtained from solving the VRS DEA model just once, which suggests that the results are robust.
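Given one RCC's original score and its bootstrap replicates, the bias-corrected estimate and percentile bounds reported in the tables can be summarized as in this sketch. It uses the standard bootstrap bias estimate (mean of replicates minus the original score) and takes the 5th and 95th percentiles directly from the replicates; the exact interval construction in the cited approach may differ, and the numbers below are hypothetical.

```python
import statistics


def bias_corrected_summary(estimate, replicates):
    """Return (bias-corrected estimate, 5th percentile, 95th percentile).

    estimate: the score from solving the DEA model once;
    replicates: the same DMU's scores from B bootstrap replicates.
    """
    # Bootstrap bias estimate: mean of replicates minus the original estimate,
    # so the corrected value is 2 * estimate - mean(replicates).
    bias = statistics.mean(replicates) - estimate
    # 19 cut points at 5%, 10%, ..., 95%; keep the first and the last.
    cuts = statistics.quantiles(replicates, n=20, method="inclusive")
    return estimate - bias, cuts[0], cuts[-1]


# Hypothetical replicates for one RCC (not study results).
bc, q05, q95 = bias_corrected_summary(0.80, [0.82, 0.83, 0.84, 0.85, 0.86])
```

Because DEA replicates tend to sit above the original score, the correction pulls the estimate down (here from 0.80 to 0.76). The same arithmetic applies on whichever scale the replicates are expressed, such as the Shephard distances used in the appendix.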

5. Explaining Relative Efficiencies

We want to understand the differences in efficiencies to gain insights on how to interpret them. External factors that may be determinants of relative efficiency include:

- (1) Center size;
- (2) Center catchment population;
- (3) Radiation treatment diversification;
- (4) Teaching hospital designation.

Center size was estimated using the medical resource level (MRL) as a proxy (Table 1). Catchment population and radiation treatment diversification were defined in Section 3.

Table 6 presents the variables in our regression model, and shows mean and standard deviation values for these regression variables over the four years of study.

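The regression model takes the following form:

$$\tilde{\theta}_{jt} = \beta_0 + \boldsymbol{\beta}^{\top}\mathbf{z}_{jt} + \varepsilon_{jt},$$

where $\tilde{\theta}_{jt}$ denotes the transformed bias-corrected efficiency score of RCC $j$ in year $t$, $\mathbf{z}_{jt}$ is the vector of determinants listed in Table 6, and $\varepsilon_{jt}$ is an error term. As the bias-corrected efficiency scores $\hat{\theta}^{\mathrm{bc}}_{jt}$ are censored at 1 (i.e., $0 < \hat{\theta}^{\mathrm{bc}}_{jt} \le 1$), regressing them directly would require various cut-off points (and thus regression models) to keep the estimated efficiencies meaningful, i.e., between 0 and 1. However, by transforming the scores so that they are left-censored at 0 only, a censored (or Tobit) regression can be applied and interpreted similarly to an ordinary least squares regression [13]. We apply the following transformation to compute $\tilde{\theta}_{jt}$:

$$\tilde{\theta}_{jt} = \frac{1}{\hat{\theta}^{\mathrm{bc}}_{jt}} - 1.$$

Note that with this transformation, $\tilde{\theta}_{jt} \ge 0$, and it can be interpreted as an “inefficiency” score; i.e., $\tilde{\theta}_{jt} = 0$ indicates that RCC $j$ is 100% efficient.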
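This sketch assumes the transformation takes the form 1/θ − 1, a common choice in two-stage DEA studies that maps an efficiency score in (0, 1] to a non-negative inefficiency score. The function names are ours.

```python
def to_inefficiency(theta):
    """Map an efficiency score in (0, 1] to 1/theta - 1 (0 means fully efficient)."""
    if not 0.0 < theta <= 1.0:
        raise ValueError("efficiency score must lie in (0, 1]")
    return 1.0 / theta - 1.0


def to_efficiency(z):
    """Inverse map from a transformed score z >= 0 back to (0, 1]."""
    if z < 0.0:
        raise ValueError("transformed score must be non-negative")
    return 1.0 / (1.0 + z)
```

The map is decreasing in θ, which is why a positive regression coefficient on the transformed scale corresponds to lower efficiency.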

For each model dimension, a left-censored (Tobit) regression analysis was performed with the vglm function from the VGAM library in R, using the transformed efficiencies over all four years of our study. From the results in Table 6, this combination of determinants explains roughly 64% and 38% of the variation in the planning and quality efficiency scores, respectively, but does not adequately account for variation in delivery efficiency.
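For reference, the quantity a left-censored Tobit fit maximizes can be written in plain Python. The sketch below only evaluates the log-likelihood for given fitted means and σ. It omits the optimization over the coefficients, which the study delegated to vglm, and the paper does not report the exact call. The function name is ours.

```python
import math


def tobit_loglik(y, mu, sigma):
    """Log-likelihood of a Tobit model left-censored at 0.

    y: observed transformed scores (0 for efficient RCCs); mu: fitted means
    beta_0 + beta' z for the same observations; sigma: latent error s.d.
    """
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    ll = 0.0
    for yi, mi in zip(y, mu):
        if yi <= 0.0:
            # Censored at 0: log P(latent <= 0) = log Phi(-mi / sigma).
            ll += math.log(0.5 * math.erfc(mi / (sigma * math.sqrt(2.0))))
        else:
            # Uncensored: log of the normal density of yi with mean mi, s.d. sigma.
            z = (yi - mi) / sigma
            ll += -0.5 * z * z - math.log(sigma) - 0.5 * math.log(2.0 * math.pi)
    return ll
```

Censored observations (fully efficient RCCs) contribute a probability mass rather than a density. This is what distinguishes the Tobit model from ordinary least squares on the same data.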

Due to the transformation applied to the efficiency scores, coefficients must be interpreted carefully: a positive coefficient indicates that efficiency worsens as the corresponding independent variable increases, and vice versa. For the planning dimension, higher efficiency scores were associated with smaller centers (lower levels of medical resources) and with centers that have less diverse radiation treatment portfolios, but not with catchment population or teaching designation. Smaller RCCs were also more efficient in the quality dimension.

6. From Rankings to Continuous Improvement

Treatment center policies can be informed by relative efficiency scores. Rather than focusing solely on the rankings that a DEA provides, we want to work towards actionable plans that contribute to the continuous improvement of RCCs. We developed several visualizations to identify potential courses of action for an RCC to improve upon its performance in a given dimension. In the following subsections, visualizations for a selection of computed results are presented; similar analysis can be performed for all dimensions, years, and combinations of RCCs.

6.1. Comparing Results by Dimension

For each of the three treatment dimensions, relative efficiency scores can be compared to identify whether any trends in performance are apparent.

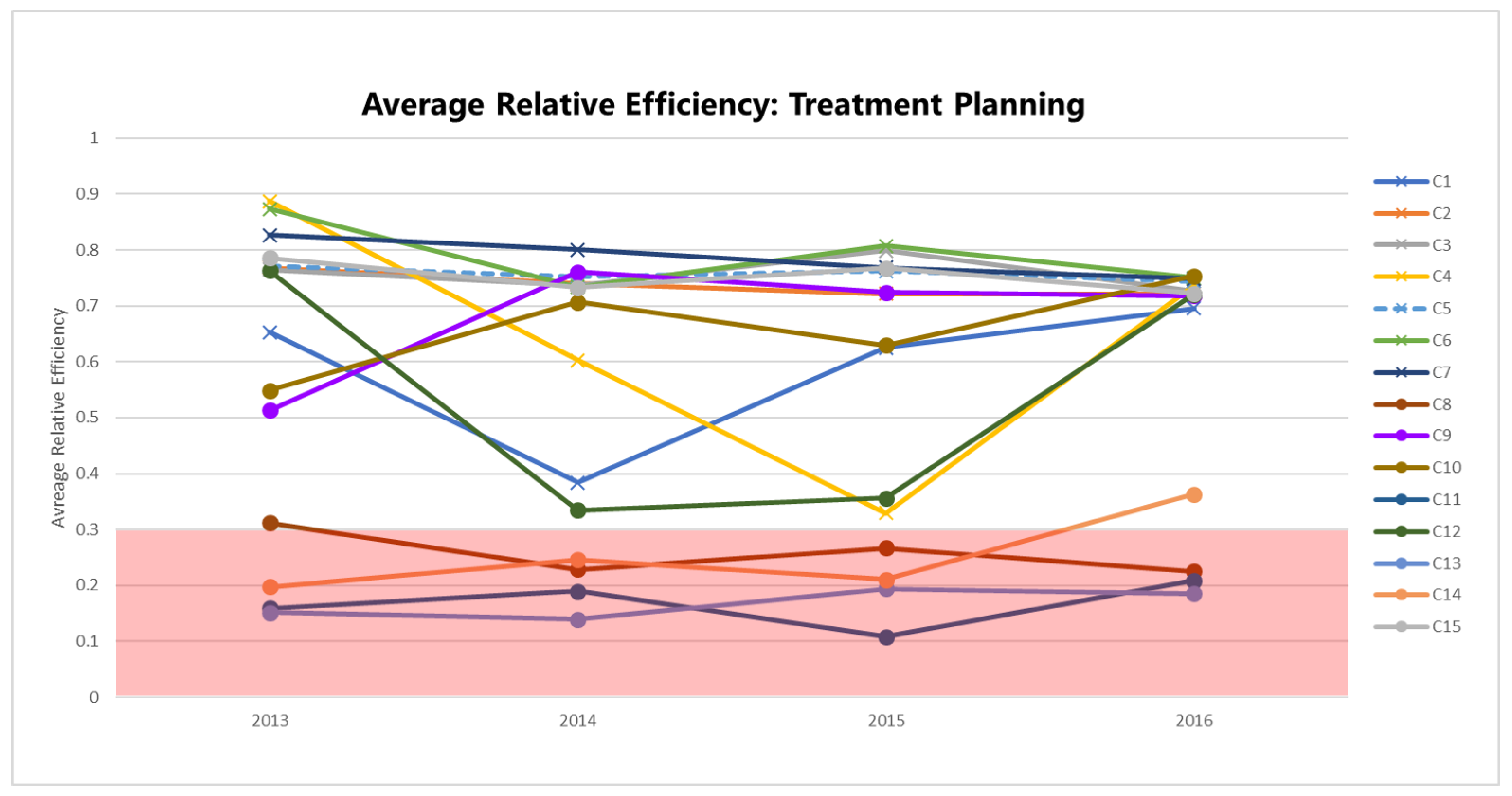

Figure 3 plots the bias-corrected mean efficiencies for the planning dimension by year and RCC, computed according to the method in Appendix A.2 and shown in Table 3.

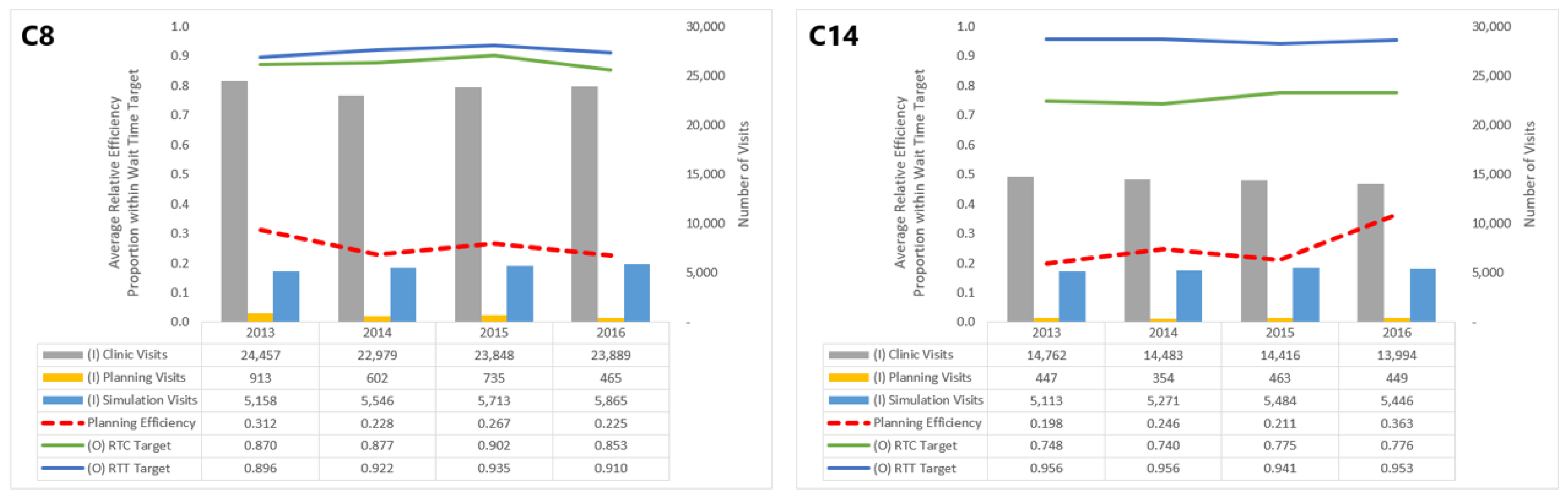

The efficiencies of some centers are distinctly lower than others: C8, C11, C13, and C14 consistently perform in this lower range. Focusing on a specific center or group of centers within this range, say C8 and C14, allows us to understand which inputs and outputs drive their planning efficiency scores (Figure 4).

Although both are in the low-efficiency band, C14’s performance in the planning dimension has improved since 2013, whereas C8’s has declined. These trends can be attributed to numerous changes in the inputs and outputs of C14 and C8. In particular, C14 saw a general decline in clinic visits and an improvement in the proportion of patients meeting the RTC wait-time target, which together outweigh its increase in simulation visits. For C8, however, the decrease in planning visits over this period did not offset the increases in clinic and simulation visits or the worsening performance against the RTC target.

We do not recommend exhaustive pairwise comparisons of centers; rather, decision-makers should identify “peer centers” (i.e., RCCs with similar performances in a treatment dimension) and scrutinize why those RCCs perform differently. In what specific measures does one RCC outperform the other, and how can each RCC strive for improvement?

6.2. Relative Comparison of RCCs

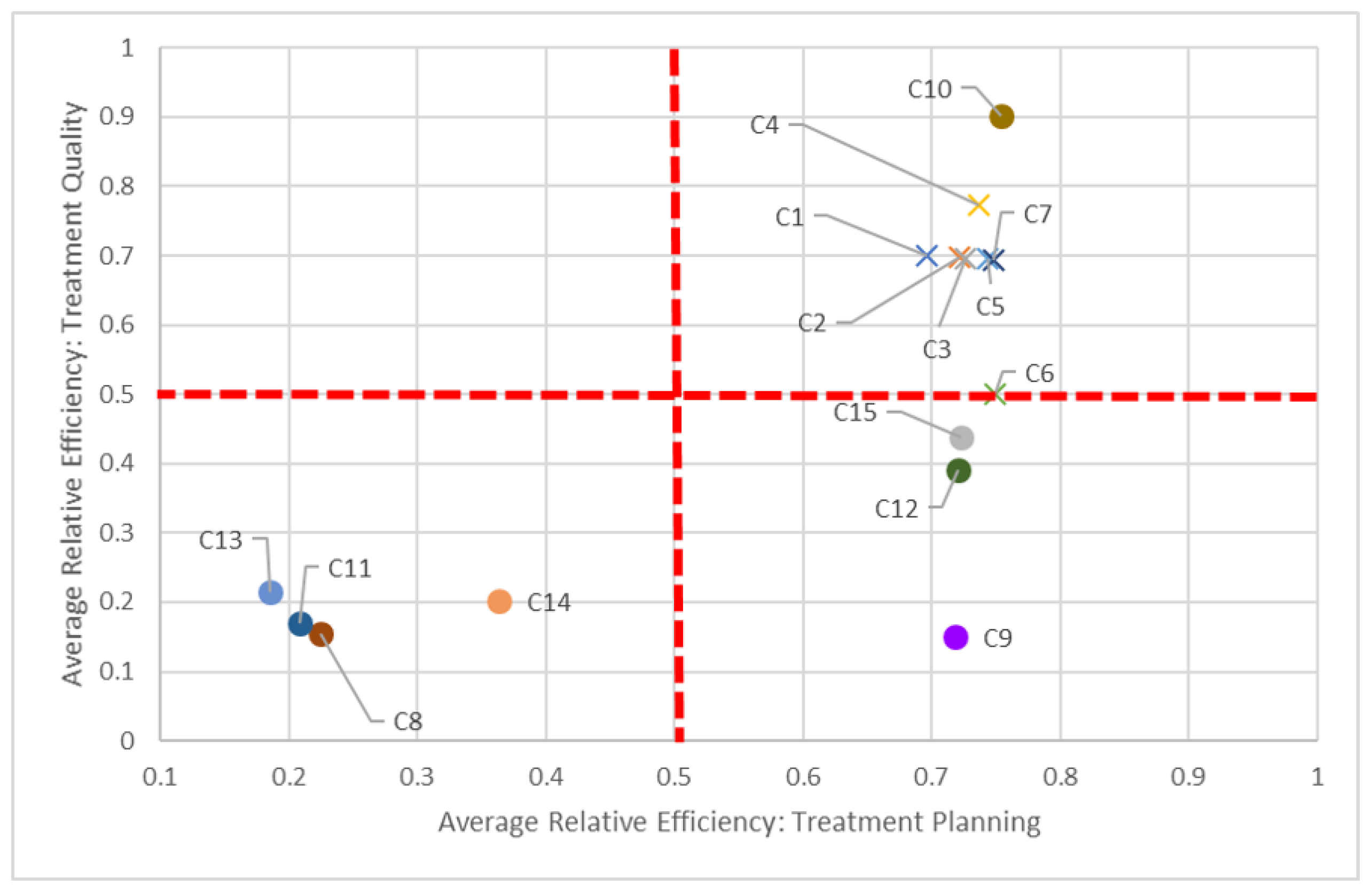

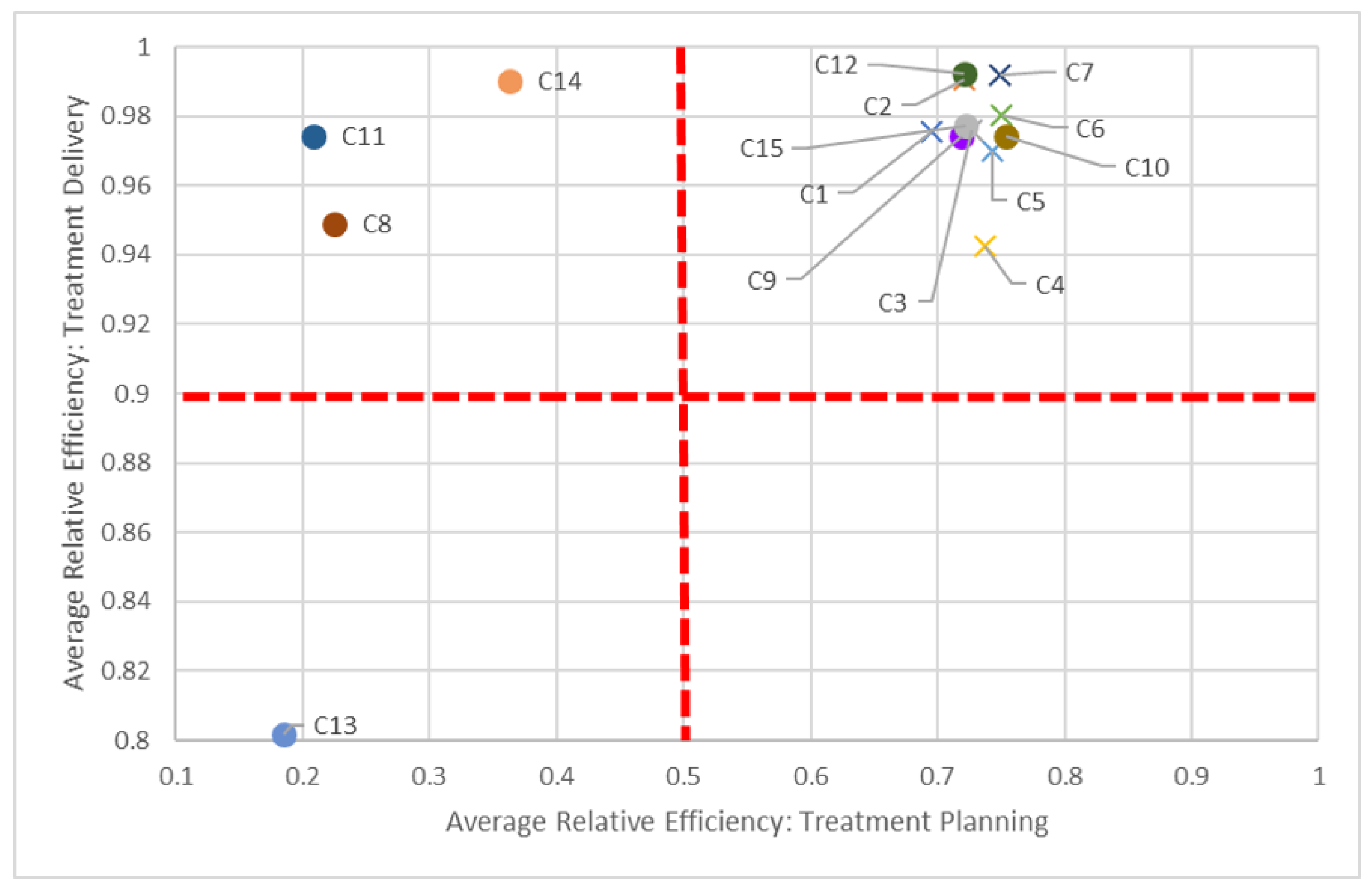

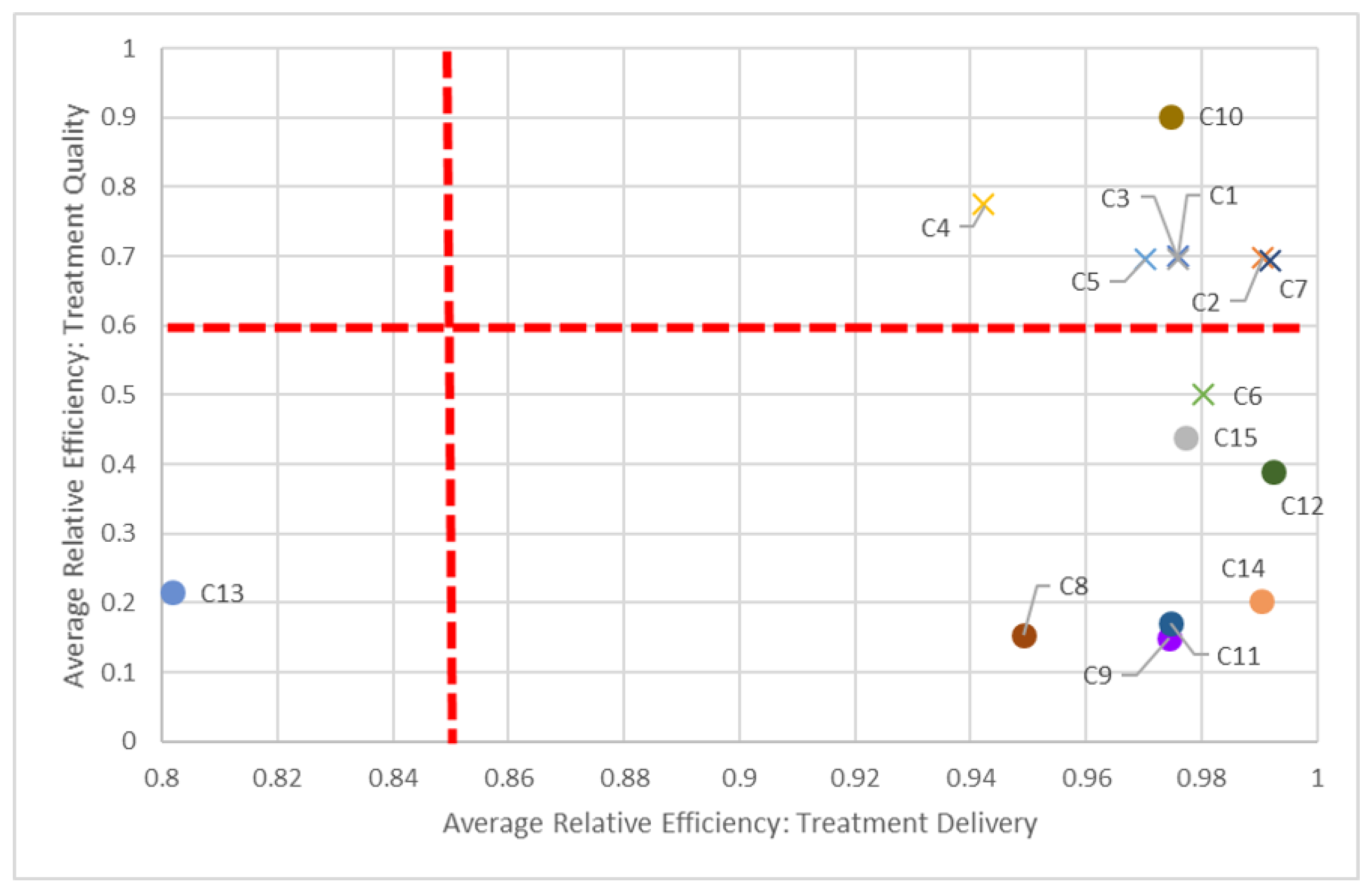

To assist in identifying these peer centers, we propose the visualizations in Figure 5, Figure 6 and Figure 7, which compare two dimensions in a given year (2016).

Suppose we compare centers on both the planning and quality dimensions (Figure 5). Continuing with our C8 and C14 pairing, we can see how “far” C8 is from C14, but also how close it is in performance to other centers, such as C11. While all centers should strive to be in the top-right quadrant (i.e., 100% efficient in both dimensions), in the short term it may be better to compare certain groups of centers, quadrant by quadrant. This visualization also quickly identifies clusters of centers: in this study, non-teaching hospitals (denoted by “×”) generally performed better in both planning and quality in 2016 than their teaching counterparts. This clustering is also apparent when comparing the planning and delivery dimensions (Figure 6) and the delivery and quality dimensions (Figure 7) in 2016, as most non-teaching hospitals performed better than teaching hospitals in all three dimensions.

The dotted red lines in each of these quadrant charts represent arbitrarily chosen performance thresholds and should be adjusted by decision-makers as appropriate. For example, in Figure 7, if a 60% efficiency threshold in the quality dimension is considered too high to strive for, it can be lowered to another value, say 30%. By focusing on the fewer centers that have not met this 30% target, decision-makers can tailor strategic plans that help those centers pass this milestone. Alternatively, centers scoring very high on quality (given managerial targets) may reduce efforts in this dimension to focus on under-performing aspects in other dimensions. Through such positive incremental changes, centers may be encouraged to adopt more continuous improvement initiatives.
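The threshold logic behind these quadrant charts can be sketched as below. The dimension names follow the text, but the scores, center labels, and function names are hypothetical and do not reproduce Figure 7.

```python
def quadrant(scores, dim_x, dim_y, tx, ty):
    """Quadrant label of one center given per-dimension thresholds tx and ty."""
    horizontal = "right" if scores[dim_x] >= tx else "left"
    vertical = "top" if scores[dim_y] >= ty else "bottom"
    return f"{vertical}-{horizontal}"


def below_threshold(all_scores, dim, threshold):
    """Centers whose efficiency in `dim` is below `threshold`, sorted by name."""
    return sorted(c for c, s in all_scores.items() if s[dim] < threshold)


# Hypothetical efficiency scores (not study results).
scores = {
    "C1": {"delivery": 0.90, "quality": 0.70},
    "C2": {"delivery": 0.50, "quality": 0.40},
    "C3": {"delivery": 0.80, "quality": 0.20},
}
strict = below_threshold(scores, "quality", 0.60)   # centers below a 60% target
relaxed = below_threshold(scores, "quality", 0.30)  # fewer centers below 30%
```

Lowering the quality threshold from 60% to 30% shrinks the list of centers to target, which is the mechanism the text describes for setting incremental milestones.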

7. Discussion

As part of measuring the performances of RCCs, the provincial health authority measures and reports various indicators, including RTC and RTT. Although this is an important and effective means of assessing improvement opportunities, indicators measured in isolation do not speak to the center’s efficiency. DEA, in contrast, provides a single numeric value that signifies each center’s relative efficiency and can identify system-level strategies towards performance improvement (e.g., increase medical resource levels).

We recognize that computing these scores is not enough; we must also consider how to translate these scholarly insights into managerial action [14]. Typically, inefficient RCCs would seek to reduce their inputs while maintaining or increasing their outputs, with specific targets obtained from the slack values of the dual DEA model (Appendix A.1). These RCCs may also consider emulating a “weighted” combination of efficient RCCs based on the optimal weights of the same model. While knowing these target values is useful, how to achieve them operationally is a separate question. We present a variation of this benchmarking information to assist in identifying potential partnerships between centers. Visualizing DEA inputs, outputs, and the resulting efficiency scores allows policy makers to quickly see how centers are performing across several radiation treatment dimensions and against other centers over time. By considering three separate treatment dimensions, we show that an RCC that is inefficient in one dimension can be a leader in another. The parameters listed in Table 2 were used as inputs and outputs. While other measures could be incorporated, the number of RCCs we studied was a limiting factor: Özcan [36] suggests that the numbers of inputs and outputs considered in a DEA model should satisfy $n \ge \max\{m \times s,\ 3(m+s)\}$, where $n$, $m$, and $s$ denote the numbers of RCCs, inputs, and outputs, respectively. With $n = 15$ RCCs, we used $m \le 3$ and $s = 2$ for any one DEA model.
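A quick check of the model size against the number of centers is sketched below. It assumes the rule of thumb takes the commonly cited form n ≥ max{m × s, 3(m + s)}; the function name is ours.

```python
def enough_dmus(n, m, s):
    """Rule of thumb: n >= max(m * s, 3 * (m + s)) for n DMUs, m inputs, s outputs."""
    return n >= max(m * s, 3 * (m + s))


# With 15 RCCs: three inputs and two outputs pass; a third output would not.
ok_planning = enough_dmus(15, 3, 2)
too_many = enough_dmus(15, 3, 3)
```

With three inputs and two outputs the bound is exactly 15, so the planning and quality models sit at the limit. Any additional measure would require either more centers or dropping another input or output.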

Some determinants of relative efficiencies discussed in Section 5 are not within the decision-making jurisdiction of an individual radiation treatment center. For example, center catchment population and teaching hospital designation cannot be controlled or changed by an RCC. Medical resource levels and treatment diversification, however, can be influenced by the specific needs of the population served by the RCC and the policies developed by radiation treatment center administrators.

Furthermore, the inputs and outputs used in our analysis were limited to values that are currently available. Other center-specific information that could have been more informative would include full-time equivalents (FTEs) for radiation treatment professionals, such as oncologists, dosimetrists, technicians, and administrators. Provincial funding also differs by center and is partly based on the center’s case mix, neither of which was provided by the health authority for use in our study. A center’s case mix would influence the complexity of radiation treatments planned and delivered, which could impact RTC and RTT wait times, medical resource utilization, and patient survival rates, but also the specialization of FTEs and types of equipment required to deliver specialized treatments. Without this more granular information, insights from DEA models are limited to high-level interpretations.

Finally, it is important to be mindful of the metrics deemed important by provincial authorities to ensure that the insights developed by DEA modeling are meaningful. For example, during the analysis period (2013 to 2016), RTC and RTT wait times were considered important indicators of how well RCCs were meeting provincial wait-time targets. However, in its most recent plan, the provincial authority replaced these indicators with the wait time from diagnosis to first treatment and the radiation integrated wait time [37]. The DEA model should be revised to reflect these updates in provincial measurements. Regardless, the analytical approach presented here can be followed with the appropriate value substitutions [10,11,21].

8. Conclusions

Using data envelopment analysis, multiple and varying inputs and outputs were considered together by separating the patient’s radiation treatment journey into three phases: planning, delivery, and quality. With bias-corrected DEA scores computed from the bootstrap model, efficient centers are better distinguished from one another based on their mean efficiencies than when VRS DEA results are used directly. The censored (Tobit) regression analysis examines external determinants of efficiency, namely center size (measured by medical resource level), diversification of radiation treatment, center catchment population, and teaching hospital designation. These determinants account for roughly 64% and 38% of the variation in efficiencies in the planning and quality dimensions, respectively (but they did not significantly explain variation in the delivery dimension).

We highlight that this analysis is not prescriptive: while it can identify problem areas, it does not actually prescribe the specific actions for centers to take to reach their targets. Rather, with a larger number of RCCs for comparison, it can point decision-makers in directions that could lead to learning opportunities and beneficial collaborations between regional cancer centers for meeting provincial goals.

There are interesting empirical and theoretical future research directions for our work. For example, how can the three efficiency scores be combined into a single measure with which all centers are evaluated? A single score would be more practical and easier to understand and act upon; however, building such a theoretical and empirical framework is an open research question. Another option would be to compare centers within a province to those in another province while accounting for provincial differences, which could reveal further improvement opportunities for policy makers.

Author Contributions

Conceptualization, M.A.B. and F.F.R.; methodology, T.B., M.A.B. and F.F.R.; software, T.B.; formal analysis, T.B.; investigation, T.B., M.A.B. and F.F.R.; writing—original draft preparation, T.B. and M.A.B.; writing—review and editing, T.B., M.A.B., F.F.R. and D.B.; visualization, T.B.; funding acquisition, M.A.B., F.F.R. and D.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Cancer Care Ontario, Planning and Regional Programs.

Institutional Review Board Statement

This study was approved by the Western University Health Sciences Research Ethics Board. The project ID is 112072 and the approval date was 7 August 2018.

Informed Consent Statement

Not applicable.

Data Availability Statement

Ontario Health is prohibited from making the data used in this research publicly accessible if it includes potentially identifiable personal health information and/or personal information as defined in Ontario law, specifically the Personal Health Information Protection Act (PHIPA) and the Freedom of Information and Protection of Privacy Act (FIPPA). Upon request, data de-identified to a level suitable for public release may be provided by Ontario Health.

Acknowledgments

Parts of this material are based on data and information provided by Ontario Health (Cancer Care Ontario) and include data received by Ontario Health (Cancer Care Ontario) from the Canadian Institute for Health Information (CIHI) and the Institute for Clinical Evaluative Sciences (ICES). The opinions, results, views, and conclusions reported in this publication are those of the authors and do not reflect those of Ontario Health (Cancer Care Ontario), CIHI, and/or ICES. No endorsement by Ontario Health (Cancer Care Ontario), CIHI, and/or ICES is intended or should be inferred. We thank Alexander Smith for his support in this project.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Bootstrap DEA Model Solution Approach

Appendix A.1. Input-Oriented VRS DEA Model

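We follow the DEA frameworks established by [22,23], which are presented in [13]. We begin by detailing the primal linear program from which we construct the dual model used in the bootstrap DEA. Let $c \in \{P, D, Q\}$ denote the planning, delivery, and quality dimensions of the radiation treatment program, respectively. Let $j = 1, \dots, n$ denote the regional cancer centers (DMUs), $i = 1, \dots, m$ the inputs, and $r = 1, \dots, s$ the outputs of each DMU. We use the following notation for every treatment dimension $c$ and year $t$:

- $\theta_j^{ct}$: relative efficiency of DMU $j$;
- $x_{ij}^{ct}$: input $i$ of DMU $j$;
- $y_{rj}^{ct}$: output $r$ of DMU $j$;
- $v_i^{ct}$: implicit price of input $i$;
- $u_r^{ct}$: implicit price of output $r$;
- $u_0^{ct}$: a variable unrestricted in sign (urs).

For every DMU $k \in \{1, \dots, n\}$, treatment dimension $c$, and year $t$, we have:

$$
\begin{aligned}
\max\quad & \theta_k^{ct} = \sum_{r=1}^{s} u_r^{ct} y_{rk}^{ct} - u_0^{ct} && \text{(A1)}\\
\text{s.t.}\quad & \sum_{r=1}^{s} u_r^{ct} y_{rj}^{ct} - u_0^{ct} \le \sum_{i=1}^{m} v_i^{ct} x_{ij}^{ct}, \quad j = 1, \dots, n && \text{(A2)}\\
& \sum_{i=1}^{m} v_i^{ct} x_{ik}^{ct} = 1 && \text{(A3)}\\
& u_r^{ct} \ge 0, \quad r = 1, \dots, s && \text{(A4)}\\
& v_i^{ct} \ge 0, \quad i = 1, \dots, m && \text{(A5)}\\
& u_0^{ct} \ \text{urs} && \text{(A6)}
\end{aligned}
$$

Constraint (A2) ensures that the weighted sum of outputs of each DMU (adjusted by $u_0^{ct}$) is less than or equal to the weighted sum of its inputs, while (A3) normalizes the weighted inputs of DMU $k$ to equal 1. Constraints (A4)–(A6) define the domains of the variables. The objective function maximizes the weighted outputs of DMU $k$: if the optimal value equals 1, DMU $k$ is considered efficient; otherwise, it is deemed inefficient. (Note that we omit superscripts $c$ and $t$ from here on to streamline our notation, but all models are solved for every treatment dimension $c$ and year $t$.)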

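An inefficient DMU need not despair: information regarding how it might reach the efficient frontier can be obtained from solving the dual of (A1)–(A6). After introducing weights $\lambda_j$ for DMU $j$ and the dual efficiency of DMU $k$, $\theta_k$, the dual of (A1)–(A6) is formulated as follows:

$$
\begin{aligned}
\min \quad & \theta_k && \text{(A7)}\\
\text{s.t.} \quad & \sum_{j=1}^{n} \lambda_j x_{ij} \le \theta_k x_{ik}, \quad i = 1, \ldots, m && \text{(A8)}\\
& \sum_{j=1}^{n} \lambda_j y_{rj} \ge y_{rk}, \quad r = 1, \ldots, s && \text{(A9)}\\
& \lambda_j \ge 0, \quad j = 1, \ldots, n && \text{(A10)}\\
& \sum_{j=1}^{n} \lambda_j = 1 && \text{(A11)}
\end{aligned}
$$

Here, we minimize the dual efficiency of DMU $k$, where Constraint (A8) ensures the weighted sum of reference inputs $i$ is less than or equal to input $i$ of DMU $k$ scaled by $\theta_k$, and Constraint (A9) ensures the weighted sum of reference outputs $r$ is greater than or equal to output $r$ of DMU $k$. Inequalities (A10) ensure the weights are non-negative, and (A11), the dual counterpart of the unrestricted (urs) variable in the primal, imposes variable returns to scale.

An efficient DMU $k$ yields $\theta_k^* = 1$. For an inefficient DMU $k$, solving (A7)–(A11) gives $\theta_k^* < 1$. The reference set (or benchmarks) for this inefficient center is identified by observing the efficient DMUs whose $\lambda_j^* > 0$.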
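The envelopment (dual) form can be sketched in the same way. The hypothetical helper `vrs_envelopment` below solves the input-oriented VRS dual for DMU $k$, including the convexity constraint that VRS requires, and reads off the reference set as the DMUs with $\lambda_j^* > 0$ (up to a numerical tolerance that we chose arbitrarily). The toy data are illustrative only and are not taken from the study.

```python
import numpy as np
from scipy.optimize import linprog


def vrs_envelopment(X, Y, k, tol=1e-9):
    """Solve the input-oriented VRS envelopment (dual) model for DMU k.

    Hypothetical helper. X is (m, n) inputs and Y is (s, n) outputs, one
    column per DMU. The decision vector is z = [theta_k, lambda_1..lambda_n].
    Returns theta_k, the lambda weights, and the reference set.
    """
    m, n = X.shape
    s = Y.shape[0]
    c = np.concatenate([[1.0], np.zeros(n)])  # minimize theta_k
    # Inputs: sum_j lambda_j*x_ij - theta_k*x_ik <= 0
    A_in = np.hstack([-X[:, [k]], X])
    # Outputs: -sum_j lambda_j*y_rj <= -y_rk
    A_out = np.hstack([np.zeros((s, 1)), -Y])
    A_ub = np.vstack([A_in, A_out])
    b_ub = np.concatenate([np.zeros(m), -Y[:, k]])
    # Convexity (VRS): sum_j lambda_j = 1
    A_eq = np.concatenate([[0.0], np.ones(n)]).reshape(1, -1)
    # theta_k is free; lambda_j >= 0
    bounds = [(None, None)] + [(0, None)] * n
    res = linprog(c, A_ub=A_ub, b_ub=b_ub, A_eq=A_eq, b_eq=[1.0],
                  bounds=bounds, method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    theta, lam = res.x[0], res.x[1:]
    peers = [j for j in range(n) if lam[j] > tol]
    return theta, lam, peers


# Hypothetical toy data: one input, one output, three DMUs
X = np.array([[2.0, 4.0, 6.0]])
Y = np.array([[1.0, 3.0, 3.0]])
for k in range(X.shape[1]):
    theta, lam, peers = vrs_envelopment(X, Y, k)
    print(f"DMU {k}: theta = {theta:.3f}, reference set = {peers}")
```

For the third toy DMU, the sketch should report $\theta^* \approx 0.667$ with the second DMU (index 1) as its only peer; by LP duality this equals the optimal value of (A1)–(A6) for the same DMU.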

Appendix A.2. Bootstrap DEA

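We follow the approach outlined in [38], which uses the Shephard input distance $\hat{\delta}_j$ rather than the Farrell efficiency score $\hat{\theta}_j$ (where $\hat{\delta}_j = 1/\hat{\theta}_j$, so that $\hat{\theta}_j \in (0, 1]$ and $\hat{\delta}_j \ge 1$). For each year $t$ and treatment dimension $c$:

- (1) Solve an input-oriented VRS DEA and calculate $\hat{\delta}_j$ for every DMU $j$.
- (2) Let the mirrored efficiencies be the list containing every $\hat{\delta}_j$ together with its reflection $2 - \hat{\delta}_j$ about 1.
- (3) For $b = 1, \ldots, B$, where $B$ is the number of bootstrap replications:
  - (a) Generate a standardized realization of efficiencies, $\delta_j^{*b}$, through sampling with replacement from the mirrored efficiencies;
  - (b) Using this sample, generate fictional reference inputs, $x_{ij}^{*b} = (\delta_j^{*b} / \hat{\delta}_j)\, x_{ij}$;
  - (c) Solve the VRS DEA using the original outputs and inputs, and use $x_{ij}^{*b}$ as reference inputs for all DMUs $j$, which yields the bootstrap distances $\hat{\delta}_j^{*b}$.
- (4) Calculate the bias-corrected mean efficiency, $\tilde{\delta}_j = 2\hat{\delta}_j - \frac{1}{B}\sum_{b=1}^{B} \hat{\delta}_j^{*b}$, and the quantiles of $\hat{\delta}_j^{*b}$ for each DMU $j$.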
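A compact sketch of steps (1)–(4) follows. It implements a smoothed, reflected bootstrap in the style of Simar and Wilson [24]; the normal-reference bandwidth $h$, the Gaussian kernel, the variance rescaling that produces the "standardized" draws, and the number of replications $B$ are our assumptions and may differ from the settings in [38] or those used in this study. The helper names (`input_distance`, `bootstrap_dea`) and the randomly generated data are hypothetical.

```python
import numpy as np
from scipy.optimize import linprog


def input_distance(x0, y0, X_ref, Y_ref):
    """Shephard input distance (1/theta) of (x0, y0) w.r.t. the VRS frontier of (X_ref, Y_ref)."""
    m, n = X_ref.shape
    s = Y_ref.shape[0]
    c = np.concatenate([[1.0], np.zeros(n)])  # minimize theta
    A_ub = np.vstack([np.hstack([-x0.reshape(-1, 1), X_ref]),  # inputs
                      np.hstack([np.zeros((s, 1)), -Y_ref])])  # outputs
    b_ub = np.concatenate([np.zeros(m), -y0])
    A_eq = np.concatenate([[0.0], np.ones(n)]).reshape(1, -1)  # sum lambda = 1
    bounds = [(None, None)] + [(0, None)] * n
    res = linprog(c, A_ub=A_ub, b_ub=b_ub, A_eq=A_eq, b_eq=[1.0],
                  bounds=bounds, method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return 1.0 / res.x[0]


def bootstrap_dea(X, Y, B=200, seed=0):
    rng = np.random.default_rng(seed)
    n = X.shape[1]
    # Step (1): Shephard input distances of the original data (all >= 1)
    d_hat = np.array([input_distance(X[:, j], Y[:, j], X, Y) for j in range(n)])
    # Step (2): mirror the distances about 1
    mirrored = np.concatenate([d_hat, 2.0 - d_hat])
    # Assumed normal-reference bandwidth; variance guarded against zero
    h = 1.06 * mirrored.std(ddof=1) * len(mirrored) ** (-1 / 5)
    var_m = max(mirrored.var(ddof=1), 1e-12)
    d_boot = np.empty((B, n))
    for b in range(B):
        # Step (3a): resample, smooth with a Gaussian kernel, rescale the
        # variance ("standardize"), and reflect values below 1 back above 1
        beta = rng.choice(mirrored, size=n, replace=True)
        beta_bar = beta.mean()
        smoothed = beta + h * rng.standard_normal(n)
        d_star = beta_bar + (smoothed - beta_bar) / np.sqrt(1.0 + h**2 / var_m)
        d_star = np.where(d_star < 1.0, 2.0 - d_star, d_star)
        # Step (3b): fictional reference inputs x*_ij = (d*_j / d_hat_j) x_ij
        X_star = X * (d_star / d_hat)
        # Step (3c): evaluate each original DMU against the pseudo frontier
        d_boot[b] = [input_distance(X[:, j], Y[:, j], X_star, Y)
                     for j in range(n)]
    # Step (4): bias-corrected mean and 5th/95th percentiles per DMU
    d_bc = 2.0 * d_hat - d_boot.mean(axis=0)
    q05, q95 = np.quantile(d_boot, [0.05, 0.95], axis=0)
    return d_hat, d_bc, q05, q95


# Hypothetical data: 2 inputs, 1 output, 8 DMUs (one column per DMU)
gen = np.random.default_rng(42)
X = gen.uniform(1.0, 10.0, size=(2, 8))
Y = gen.uniform(1.0, 5.0, size=(1, 8))
d_hat, d_bc, q05, q95 = bootstrap_dea(X, Y, B=50, seed=1)
print(np.round(1.0 / d_hat, 3))  # Farrell scores theta = 1 / delta
print(np.round(d_bc, 3))         # bias-corrected Shephard distances
```

Farrell-scale scores are recovered as $1/\hat{\delta}_j$. The small $B$ here only keeps the example fast; stable quantiles would normally call for many more replications, at a proportionally higher cost in linear programs.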

This bootstrap approach repeatedly resamples the efficiency scores to generate fictional reference inputs and hence a range of efficiencies for each DMU, ultimately providing us with an average (bias-corrected) efficiency score that better distinguishes efficient DMUs from one another, even if the difference is small.

Table 3, Table 4 and Table 5 show the efficiency scores derived from the input-oriented VRS DEA model, along with the bias-corrected mean efficiencies and their 5th and 95th quantiles. The ranking of DMUs remains, in general, consistent with the results from solving the DEA model just once.
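One simple way to quantify whether the ranking is preserved, offered here as an illustration rather than as the check used in this study, is Spearman's rank correlation between the single-run scores and the bias-corrected means. The sketch below applies `scipy.stats.spearmanr` to hypothetical Shephard distances for five DMUs; the numbers are invented for illustration.

```python
import numpy as np
from scipy.stats import spearmanr

# Hypothetical Shephard input distances for five DMUs (not study results)
single_run = np.array([1.00, 1.00, 1.25, 1.60, 1.10])
bias_corrected = np.array([1.08, 1.12, 1.31, 1.70, 1.15])

# spearmanr ranks both vectors (ties get average ranks) and correlates the ranks
rho, p_value = spearmanr(single_run, bias_corrected)
print(f"Spearman rho = {rho:.3f}")
```

For these numbers the coefficient is about 0.975; values close to 1 indicate that bootstrapping reorders the DMUs only slightly.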

References

1. Jaffray, D.A.; Gospodarowicz, M.K. Radiation Therapy for Cancer. In Cancer: Disease Control Priorities, 3rd ed.; Gelband, H., Jha, P., Sankaranarayanan, R., Horton, S., Eds.; The International Bank for Reconstruction and Development: Washington, DC, USA, 2015; Volume 3, Chapter 14.
2. Citrin, D.E. Recent developments in radiotherapy. N. Engl. J. Med. 2017, 377, 1065–1075.
3. Cohen, J.; Harper, A.; Nichols, E.M.; Rao, G.G.; Mohindra, P.; Roque, D.M. Barriers to timely completion of radiation therapy in patients with cervical cancer in an urban tertiary care center. Cureus 2017, 9, e1681.
4. Lin, S.M.; Ku, H.Y.; Chang, T.C.; Liu, T.W.; Hong, J.H. The prognostic impact of overall treatment time on disease outcome in uterine cervical cancer patients treated primarily with concomitant chemoradiotherapy: A nationwide Taiwanese cohort study. Oncotarget 2017, 8, 85203.
5. Olivotto, I.A.; Lesperance, M.L.; Truong, P.T.; Nichol, A.; Berrang, T.; Tyldesley, S.; Germain, F.; Speers, C.; Wai, E.; Holloway, C.; et al. Intervals longer than 20 weeks from breast-conserving surgery to radiation therapy are associated with inferior outcome for women with early-stage breast cancer who are not receiving chemotherapy. J. Clin. Oncol. 2009, 27, 16–23.
6. Harnett, N.; Bak, K.; Lockhart, E.; Ang, M.; Zychla, L.; Gutierrez, E.; Warde, P. The Clinical Specialist Radiation Therapist (CSRT): A case study exploring the effectiveness of a new advanced practice role in Canada. J. Med. Radiat. Sci. 2018, 65, 86–96.
7. Cancer Care Ontario. Ontario Cancer Plan 4: 2015–2019; Cancer Care Ontario: Toronto, ON, Canada, 2015.
8. Cantor, V.J.M.; Poh, K.L. Integrated Analysis of Healthcare Efficiency: A Systematic Review. J. Med. Syst. 2018, 42, 8.
9. Dexter, F.; O’Neill, L. Data envelopment analysis to determine by how much hospitals can increase elective inpatient surgical workload for each specialty. Anesth. Analg. 2004, 99, 1492–1500.
10. Aletras, V.; Kontodimopoulos, N.; Zagouldoudis, A.; Niakas, D. The short-term effect on technical and scale efficiency of establishing regional health systems and general management in Greek NHS hospitals. Health Policy 2007, 83, 236–245.
11. Allin, S.; Veillard, J.; Wang, L.; Grignon, M. How can health system efficiency be improved in Canada? Healthc. Policy 2015, 11, 33.
12. Zakowska, I.; Godycki-Cwirko, M. Data envelopment analysis applications in primary health care: A systematic review. Fam. Pract. 2020, 37, 147–153.
13. Özcan, Y.A. Health Care Benchmarking and Performance Evaluation: An Assessment Using Data Envelopment Analysis, 2nd ed.; Springer Science + Business Media: New York, NY, USA, 2014.
14. Kohl, S.; Schoenfelder, J.; Fügener, A.; Brunner, J.O. The use of Data Envelopment Analysis (DEA) in healthcare with a focus on hospitals. Health Care Manag. Sci. 2019, 22, 245–286.
15. Araújo, C.; Barros, C.P.; Wanke, P. Efficiency determinants and capacity issues in Brazilian for-profit hospitals. Health Care Manag. Sci. 2014, 17, 126–138.
16. Büchner, V.A.; Hinz, V.; Schreyögg, J. Health systems: Changes in hospital efficiency and profitability. Health Care Manag. Sci. 2016, 19, 130–143.
17. Sherlaw-Johnson, C.; Gallivan, S.; Jenkins, D. Evaluating cervical cancer screening programmes for developing countries. Int. J. Cancer 1997, 72, 210–216.
18. Jensen, A.; Vejborg, I.; Severinsen, N.; Nielsen, S.; Rank, F.; Mikkelsen, G.J.; Hilden, J.; Vistisen, D.; Dyreborg, U.; Lynge, E. Performance of clinical mammography: A nationwide study from Denmark. Int. J. Cancer 2006, 119, 183–191.
19. Van Luijt, P.; Heijnsdijk, E.; de Koning, H. Cost-effectiveness of the Norwegian breast cancer screening program. Int. J. Cancer 2017, 140, 833–840.
20. Cheng, C.Y.; Datzmann, T.; Hernandez, D.; Schmitt, J.; Schlander, M. Do certified cancer centers provide more cost-effective care? A health economic analysis of colon cancer care in Germany using administrative data. Int. J. Cancer 2021, 149, 1744–1754.
21. Langabeer, J.R.; Özcan, Y.A. The economics of cancer care: Longitudinal changes in provider efficiency. Health Care Manag. Sci. 2009, 12, 192–200.
22. Charnes, A.; Cooper, W.W.; Rhodes, E. Measuring the efficiency of decision making units. Eur. J. Oper. Res. 1978, 2, 429–444.
23. Banker, R.D.; Charnes, A.; Cooper, W.W. Some models for estimating technical and scale inefficiencies in data envelopment analysis. Manag. Sci. 1984, 30, 1078–1092.
24. Simar, L.; Wilson, P.W. Sensitivity analysis of efficiency scores: How to bootstrap in nonparametric frontier models. Manag. Sci. 1998, 44, 49–61.
25. Lothgren, M.; Tambour, M. Testing scale efficiency in DEA models: A bootstrapping approach. Appl. Econ. 1999, 31, 1231–1237.
26. Périco, A.E.; Santana, N.B.; Rebelatto, D.A.N. Estimating the efficiency from Brazilian banks: A bootstrapped Data Envelopment Analysis (DEA). Production 2016, 26, 551–561.
27. Lee, B.L.; Worthington, A.; Wilson, C. Learning environment and primary school efficiency: A DEA bootstrap truncated regression analysis. Int. J. Educ. Res. 2019, 33, 678–697.
28. Kim, C.; Kim, H.J. A study on healthcare supply chain management efficiency: Using bootstrap data envelopment analysis. Health Care Manag. Sci. 2019, 22, 534–548.
29. Statistics Canada. Population and dwelling counts, for Canada and forward sortation areas as reported by the respondents, 2011 Census (table). Population and Dwelling Count Highlight Tables. 2011 Census. In Statistics Canada Catalogue No. 98-310-XWE2011002; Statistics Canada: Ottawa, ON, Canada, 2012.
30. Statistics Canada. Population and dwelling counts, for Canada and forward sortation areas© as reported by the respondents, 2016 Census (table). Population and Dwelling Count Highlight Tables. 2016 Census. In Statistics Canada Catalogue No. 98-402-X2016001; Statistics Canada: Ottawa, ON, Canada, 2017.
31. World Bank. World Development Indicators; World Bank: Washington, DC, USA, 2021.
32. Ontario Ministry of Health and Long-Term Care. Group A: Classification of Hospitals. Available online: https://www.health.gov.on.ca/en/common/system/services/hosp/group_a.aspx (accessed on 4 May 2021).
33. Cancer Care Ontario. Radiation Treatment Capital Investment Strategy April 2012; Cancer Care Ontario: Toronto, ON, Canada, 2012.
34. Cancer Care Ontario. Radiation Treatment Capital Investment Strategy 2018; Cancer Care Ontario: Toronto, ON, Canada, 2018.
35. Simar, L.; Wilson, P.W. A general methodology for bootstrapping in non-parametric frontier models. J. Appl. Stat. 2000, 27, 779–802.
36. Ozcan, Y.A.; Legg, J.S. Performance measurement for radiology providers: A national study. Int. J. Healthc. Tech. Manag. 2014, 14, 209–221.
37. Cancer Care Ontario. Radiation Treatment Program: Implementation Plan 2019–2023; Cancer Care Ontario: Toronto, ON, Canada, 2019.
38. Behr, A. Production and Efficiency Analysis with R; Springer: Berlin/Heidelberg, Germany, 2015.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).