Abstract

Mitochondrial dysfunction is considered to be a major cause of primary mitochondrial myopathy in children and adults, as reduced mitochondrial respiration and morphological changes such as ragged red fibers (RRFs) are observed in muscle biopsies. However, it is also possible to hypothesize the role of mitochondrial dysfunction in aging muscle or in secondary mitochondrial dysfunctions. The recognition of true histological patterns of mitochondrial myopathy can avoid unnecessary genetic investigations. The aim of our study was to develop and validate machine-learning methods for RRF detection in light microscopy images of skeletal muscle tissue. We used image sets of 489 color images captured from representative areas of Gomori’s trichrome-stained tissue retrieved from light microscopy images at a 20× magnification. We compared the performance of random forest, gradient boosting machine, and support vector machine classifiers. Our results suggested that the advent of scanning technologies, combined with the development of machine-learning models for image classification, make neuromuscular disorders’ automated diagnostic systems a concrete possibility.

1. Introduction

Mitochondrial diseases represent a group of metabolic disorders with a common link of impaired mitochondrial function producing a chronic state of energy failure.

With a prevalence of 1/12,000 individuals, mitochondrial diseases have an extremely variable phenotype and can present at any age [1]. The most involved tissues are those with a high metabolic demand, such as the central nervous system, skeletal muscle, and heart.

Further complexity arises as a result of the dual genomic expression of mitochondrial proteins from both nuclear and mitochondrial DNAs [2].

A multidisciplinary approach to the diagnosis of mitochondrial disease is to integrate information from clinical, histochemical and biochemical tests in order to target molecular genetic screening [1]. Recently, the traditional diagnostic approach requiring histopathological investigations in muscle biopsy and the study of the oxidative phosphorylation (OxPhos) enzyme in muscle samples (“biopsy first”) has been replaced by massive gene testing-adopting methodologies of next-generation sequencing which target a few hundred genes or the whole exome (“genetics first”) [3,4,5,6]. The latter approach attempts to disentangle the associated clinical phenotypes based on the genotype (“reverse phenotyping”) [7] but often lacks the direct view of the consequences in disease tissues necessary to clarify uncertain cases [8].

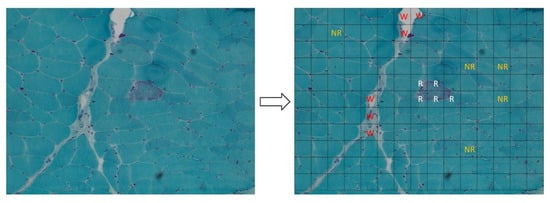

In fact, many patients with mitochondrial disorders show histological and histochemical changes indicative of OxPhos dysfunction such as cytochrome c oxidase-negative fibers or increased Succinic dehydrogenase stain and the presence of so-called “ragged red fibers” (RRFs) with Gomori’s trichrome stain (Figure 1). RRFs represent the accumulation of abnormal mitochondria below the plasma membrane of the muscle fiber and are considered a sort of “hallmark” of mitochondrial dysfunction. Their tissue recognition might facilitate gene prioritization in primary mitochondrial disorders.

Figure 1.

Left panel: ragged red fiber (RRF), hallmark of mitochondrial disorders, in Gomori’s trichrome stain (magnification: 20×; image acquired from the muscle of patient 8). Right panel: it shows the method used to create the dataset for our analysis: each acquired image was subdivided in 165 sub-images (15 × 11). All the sub-images that contain a portion of an RRF were added to the dataset and labeled as “ragged” (R label in the figure, written in white). After that, we arbitrarily collected a similar number of sub-images that do not contain RRFs, labeling them as “not ragged” (NR label, written in orange) and some “waste” sub-images (W label, written in red). The rest of the sub-images were discarded to avoid the unbalancing of the dataset.

With the introduction of slide scanners in the histology work-flow, the microscopic examination of many kinds of tissue can now be performed on specialized software [9]. In cancer research, for example, the use of digital slides to examine disease-specific biological characteristics and quantitatively assess tumor progression is a common practice [10].

Moreover, the lack of uniformity in the diagnosis is another important issue in the management of mitochondrial diseases. Artificial intelligence techniques in digital slides could contribute to the achievement of agreed and shared criteria, which are often missing especially in the diagnosis of ultra-rare disorders.

The aim of this study was to develop and validate machine-learning methods to detect RRFs in digital light microscopy images of skeletal muscle sections. We designed and tested a classification system based on color, texture, and wavelet transform features able to predict the presence of RRFs in the images.

2. Materials and Methods

Histological images data were collected from 9 patients affected by mitochondrial disease (Table S1). Each image contained at least one RRF. For light microscopy acquisitions, we used a Zeiss AxioCam MRc5 camera mounted on a Zeiss Imager.M2, and we used the acquisition software AxioVision SE64 rel 4.9.1. All images were acquired at a 20× magnification with a dimension of 1653 × 1239 pixels (format: JPEG; resolution: 96 DPI).

From each image acquired, we generated 165 sub-images with a size of 110 × 110 pixels (15 × 11 sub-images; Figure 1). We then collected all the sub-images that contained an RRF, and we manually annotated them as “ragged” (100 sub-images). After that, we arbitrarily collected a similar number of sub-images that did not contain RRFs, and we manually annotated them as “not ragged” (138 sub-images). Finally, we also arbitrarily collected some images manually annotated as “waste” (250 sub-mages). These sub-images included the areas of the image where:

- There were artifacts (bubbles, slice folding, staining artifacts, etc.) that did not allow a clear view;

- The percentage of the connective tissue was higher than in the muscle tissue.

With this method, we composed the dataset for machine-learning models, and it resulted in a total of 488 sub-images.

All the sub-images that comprised the dataset were used as inputs for generating features. The generated features were grouped into color, texture, and wavelet transform features.

Color features: the HSV (which stands for Hue, Saturation, Value) color model is a non-linear transformation from the RGB (red, green, blue) color space that can describe a perceptual color relationship more accurately. In this paper, the HSV color space was adopted for color feature extraction. All RGB color sub-images were transformed to HSV color sub-images. Thirteen first-order statistical features were extracted from each channel’s histogram, for a total of 39 color features.

Texture features: color sub-images were converted to grayscale images. A total of 43 second-order texture features were derived from three matrices:

- The grey-level co-occurrence matrix (GLCM) of size Ng × Ng is defined as P(i,j|δ,θ). The (i,j) element of this matrix represents the number of times. The combination of levels i and j occurs in two pixels in the image, which are separated by a distance of δ pixels along angle θ [11].Twenty-one second-order GLCM features were calculated.

- The grey-level run length matrix (GLRLM) quantifies gray-level runs, which are defined as the length in the number of pixels, of consecutive pixels that have the same gray-level value. In a gray-level run length matrix P(i,j|θ), the (i,j)th element describes the number of runs with gray level i and length j occur in the image along angle θ [12].Eleven second-order GLRLM features were calculated.

- The grey-level size zone matrix (GLSZM) quantifies gray-level zones in an image. A gray level zone is defined as the number of connected pixels that share the same gray level intensity. A pixel is considered connected, if the distance is 1, according to the infinity norm (8-connected region in two dimensions). In the gray-level size zone matrix P(i,j), the (i,j)th element equals the number of zones with gray level i and size j appears in the image. Contrary to the GLCM and the GLRLM, the GLSZM is rotation-independent, with only one matrix calculated for all directions in the image [13].Eleven second-order GLSZM features were calculated.

Wavelet transform features: the wavelet transform uses different basis functions to split images into multiple frequency scales described as high and low frequency details. In a two-dimensional (2D) wavelet, four quarter-sized images were generated through the combined action of low-pass and high-pass filters in each of the columns and rows of the image. The wavelet filter used in the processing of the wavelet transform is a least asymmetric Daubechies basis function with a length of 8. Thirteen first-order statistical features for each resulting 4 sub-images were computed.

The dataset was composed of 250 “waste” images, 100 “ragged” images and 138 “not ragged” images. A total of 134 features (39 color features, 43 texture features, and 52 wavelet transform features) were extracted from each sub-image. The features were normalized to a zero mean and unit variance.

We performed a double-step binary classification. The first step aimed to distinguish muscular tissue from the non-relevant zones of the images. For the first step, we used the whole dataset: the waste sub-images were labeled as “waste”, and the “ragged” and the “not ragged” sub-images were labeled as “tissue”. The second step aimed to distinguish RRFs from “healthy” muscle fibers. For the second step we used the “tissue” sub-images only: the ragged sub-images were labeled as “ragged”, and the “not ragged” sub-images were labeled as “not-ragged”.

In both steps, the dataset was split into a training set (80%) and a test set (20%) to train and test the machine-learning models. The training set contains a known output, and the model learns with these data in order to be generalized to new data.

Both classifiers were built choosing the best model between 3 supervised machine-learning algorithms:

- Random forest (RF): This is a widely used shallow machine-learning method that combines decision tree predictors following the bagging technique [14,15]. In this model, the class that receives the majority of votes from trees in the forest is considered the output result. This protocol relies on creating the number of models (n) and averaging predictions of all models for a finale prediction.

- Gradient boosting machine (GBM): This is an ensemble algorithm of decision trees [16,17]. The ensemble works by combining a set of weaker machine-learning algorithms to get an improved machine-learning algorithm in overall. The main difference between GBM and RF is the way of sampling. RF is based on uniform sampling with return. Instead, GBM gives higher weights to the wrongly predicted samples in the current weaker leaner, and then these samples will be paid more attention to when training the next weaker leaner.

- Support vector machine (SVM): This system divides the training set into two parts by constructing a hyper-plane in the feature space. Features in non-linear separation may be changed into linear separation, using kernel functions to map the original data to a feature space with a higher dimension [18,19].

In an attempt to prevent overfitting, we used 10-fold cross-validation to train classifiers. The training dataset was randomly divided into 10 roughly equally numbered, non-overlapping subsets, each called “a fold”. Then, nine folds were used as the training set, and the last one as the validation set. Using each of the 10 folds as the validation set, the above process was repeated 10 times.

The generations of each model for predictions required tuning of key hyperparameters. The value of each parameter was chosen by using random search at each step of the cross-validation.

For each model, the sensitivity (SEN), specificity (SPE), false positive rate (FPR), false negative rate (FNR), positive predicted value (PPV), negative predicted value (NPV), accuracy (ACC), and F1 measure (F1) were calculated to choose the best hyperparameters values. After retraining, the performance of the tuned models was further assessed on the test set by constructing the corresponding receiver-operating characteristics (ROCs). The ROC curve illustrates the tradeoff between the SEN and the SPE as a cut-off point for decision making. Finally, the area under the ROC curve (AUC) was estimated for each model. Figure S1 shows a summary outline that well explains the entire process.

All the aforementioned methods were performed using the programming language. R. radiomics package was used to extract color features and texture features. waveslim package was used to perform wavelet transforms on the images. Machine-learning models were built using the package mlr. ROC curve calculations were performed using the package ROSE.

3. Results

We designed an experimental setting to demonstrate automated learners’ ability to identify the following:

- The wasted zones in Gomori’s trichrome-stained muscle images;

- RRFs in a field of normal muscular fibers.

We benchmarked 3 machine-learning models in order to select the one with the best overall fit for both classification steps. Eight classification metrics were estimated using 10-fold cross-validation for the training set. Table 1 shows performance metrics. Each model was fine-tuned optimizing the hyperparameters in order to achieve the highest scores for each model (priority to F1 metrics). Such models were used to estimate the ROC curve and the AUC of the test set.

Table 1.

Performance metrics of each model: (a) waste-tissue classification results; (b) ragged–not ragged classification results.

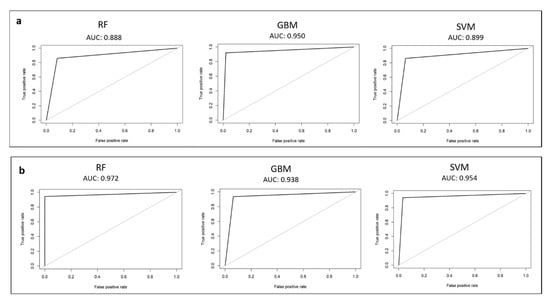

3.1. Waste-Tissue Classification

The F1 scores for RF, GBM, and SVM for the waste-tissue classification step were 0.87, 0.91, and 0.89, respectively. GBM exhibited the best F1 score. In terms of other metrics, the accuracies of RF, GBM, and SVM were 0.87, 0.91, and 0.90, respectively (Table 1a). The tuned values of the hyperparameters for each model are shown in Table S2. For each tuned and retrained model, the ROC curve and the AUC value were calculated on the test set. Figure 2a shows ROC curves. The AUCs of the tuned RF, GBM, and SVM were 0.89, 0.95, and 0.90, respectively. The tuned GBM model exhibited the higher AUC value of the test set, and the performance was significantly better than those of the rest models. We selected this model as the best waste-tissue classifier. Table 2a shows the confusion matrix of the test set.

Figure 2.

Receiver-operating characteristic (ROC) curve calculated on the test set for each model: (a) waste-tissue classification results; (b) ragged–not ragged classification results.

Table 2.

Confusion matrix of the test set for each model: (a) waste-tissue classification results; (b) ragged–not-ragged classification results.

3.2. Ragged–Not Ragged Classification

The F1 scores for RF, GBM, and SVM for the ragged–not ragged classification step are 0.96, 0.95, and 0.95, respectively. RF exhibited the best F1 measure score. In terms of other metrics, the accuracies of RF, GBM, and SVM were 0.96, 0.95, and 0.94, respectively (Table 1b). Supplementary Table S2 indicates the tuned values of the hyperparameters for each model. For each tuned and retrained model, the ROC curve and the AUC value were calculated on the test set. The ROC curve for each model is shown in Figure 2b. The AUC values for ragged–not ragged graphs are ≥0.93. This indicated that RF, GBM, and SVM were able to separate ragged from “not ragged” fibers with a high confidence. The tuned RF model exhibited the higher AUC value of the test set. We selected this model as the best ragged–not ragged classifier. The confusion matrix of the test set is shown in Table 2b.

4. Discussion

Taken together, in our system, GBM and RF were selected as the best performing algorithms for the first and the second steps, respectively. Through the aforementioned model, we were able to obtain AUC values of >0.95 for both classification steps, and it is therefore possible to recognize, with a good reliability, the presence of RRFs on a tile that was generated from a 20× magnification image of Gomori’s trichrome-stained muscle tissue. We are aware that our sample size was relatively small for a machine learning analysis. The application of machine-learning models in rare diseases data is challenging because of the limited availability of training datasets.

Machine learning has been implemented in many areas of medicine with different uses in clinical practice [20,21]. Automated learning algorithms have been successfully evaluated in several types of cancer [22,23,24] and in microbiology [25], adopting pathological studies of tissues. As regards the muscle tissue, automated learning algorithms have been successfully evaluated in immunofluorescence images in several studies [26,27], and there are very few papers regarding histological images [28,29]. Each of these reports utilized algorithms able to recognize and segment cellular and environmental details and achieved good accuracy and performance. It must be said that convolutional neural networks (CNNs) were used as automated classification algorithms instead of machine-learning models in the majority of these works. CNNs remain the state of the art for image classification tasks, although automated learning is also used in biomedical images [30].

There are innovative elements in this paper. First, we have presented the first proof-of-concept study, where a mitochondrial histological “hallmark” of the disease can be automatically assessed in digital images. Beside RRF mitochondrial myopathies may present other pathological patterns such as cytochrome c oxidase-negative fiber, and this approach could be used to detect many morphological features in digital light microscopy images. Second, we have generated digital histology properties based on color, texture, and wavelet transform features from the input sub-images, in order to discriminate three subcategories to improve our definition of an RRF. Last, we have defined GBM as the best machine-learning model to achieve the classification of the wasted zones in Gomori’s trichrome-stained muscle images. In addition, the same machine-learning models can differentiate more properly RRF from normal fibers.

5. Conclusions

The goal of this paper was to take the first step in the automated recognition of histopathological patterns in muscle images in patients affected by mitochondrial diseases. We defined GBM as the best performing model for the waste-tissue classification step and RF as the best performing model for the ragged–not ragged classification step. The accuracies and the F1 measures performed by the three models (RF, GBM, and SVM) are comparable and very high, even if they adopted different approaches, further validating our results.

We are well aware that the fact that we did not use CNNs is a limitation of our article. In perspective, we should find the best CNN architecture for our classification tasks. This could produce an even better accuracy.

In order to set up a computer-aided diagnostic system in neuromuscular disorders, future works will require an automated recognition of other histopathological patterns beside RRFs. As a future work, we are going to try the application of this protocol in other additional morphological features of mitochondrial diseases and also in patterns of other neuromuscular disorders. Recent technology advances allow digital histopathology by scanning stained microscopic slides through the use of a commercially available scanner [31]. This automated system converts a glass slide to a digital whole slide image (WSI) preserving image resolution up to a 40× magnification. Although each WSI represents “big data”, interpretation is now made feasible using new image processing algorithms [32]. The advent of scanning technologies, combined with the development of machine-learning models for image classification, makes neuromuscular disorders’ automated diagnostic systems a concrete possibility.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/healthcare10030574/s1, Table S1: Clinical, histological, and molecular features of the nine patients, Table S2: Hyperparameters values for each model after the tuning operation, Figure S1: protocol used to select the model with the best overall fit for both classification steps.

Author Contributions

Conceptualization, J.B., M.C. and A.R.; methodology, C.F.; writing—original draft, J.B.; writing—review and editing, F.M.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by Ricerca Corrente 2021 e RC 5X1000 voluntary contributions, Italian Ministry of Health. This work was in part supported by a grant from the research project InGene 2.0. InGene 2.0 is funded by Tuscany Region under the Bando Ricerca e Salute 2018 Programme.

Institutional Review Board Statement

This study was approved by the Tuscany Regional Pediatric Ethics committee. Protocol code: InGene 2.0. Date of approval: 30 June 2020. The study was conducted in accordance with the Declaration of Helsinki.

Informed Consent Statement

Informed consent for muscle biopsy was obtained from all subjects or parents involved in the study.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- McFarland, R.; Taylor, R.W.; Turnbull, D.M. A neurological perspective on mitochondrial disease. Lancet Neurol. 2010, 9, 829–840. [Google Scholar] [CrossRef]

- Calvo, S.E.; Mootha, V.K. The mitochondrial proteome and human disease. Annu. Rev. Genom. Hum. Genet. 2010, 11, 25–44. [Google Scholar] [CrossRef] [Green Version]

- Wortmann, S.B.; Koolen, D.A.; Smeitink, J.A.; van den Heuvel, L.; Rodenburg, R.J. Whole exome sequencing of suspected mitochondrial patients in clinical practice. J. Inherit. Metab. Dis. 2015, 38, 437–443. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Neveling, K.; Feenstra, I.; Gilissen, C.; Hoefsloot, L.H.; Kamsteeg, E.-J.; Mensenkamp, A.; Rodenburg, R.; Yntema, H.G.; Spruijt, L.; Vermeer, S.; et al. A post-hoc comparison of the utility of sanger sequencing and exome sequencing for the diagnosis of heterogeneous diseases. Hum. Mutat. 2013, 34, 1721–1726. [Google Scholar] [CrossRef] [PubMed]

- Haack, T.B.; Haberberger, B.; Frisch, E.M.; Wieland, T.; Iuso, A.; Gorza, M.; Strecker, V.; Graf, E.; Mayr, J.A.; Herberg, U.; et al. Molecular diagnosis in mitochondrial complex I deficiency using exome sequencing. J. Med. Genet. 2012, 49, 277–283. [Google Scholar] [CrossRef] [Green Version]

- Kohda, M.; Tokuzawa, Y.; Kishita, Y.; Nyuzuki, H.; Moriyama, Y.; Mizuno, Y.; Hirata, T.; Yatsuka, Y.; Yamashita-Sugahara, Y.; Nakachi, Y.; et al. A comprehensive genomic analysis reveals the genetic landscape of mitochondrial respiratory chain complex deficiencies. PLoS Genet. 2016, 12, e1005679. [Google Scholar] [CrossRef]

- Lek, M.; Karczewski, K.J.; Minikel, E.V.; Samocha, K.E.; Banks, E.; Fennell, T.; O’Donnell-Luria, A.H.; Ware, J.S.; Hill, A.J.; Cummings, B.B.; et al. Analysis of protein-coding genetic variation in 60,706 humans. Nature 2016, 536, 285–291. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Wortmann, S.B.; Mayr, J.; Nuoffer, J.M.; Prokisch, H.; Sperl, W. A Guideline for the Diagnosis of Pediatric Mitochondrial Disease: The Value of Muscle and Skin Biopsies in the Genetics Era. Neuropediatrics 2017, 48, 309–314. [Google Scholar] [CrossRef]

- Martel, A.L.; Hosseinzadeh, D.; Senaras, C.; Zhou, Y.; Yazdanpanah, A.; Shojaii, R.; Patterson, E.S.; Madabhushi, A.; Gurcan, M.N. An image analysis resource for cancer research: PIIP-pathology image informatics platform for visualization, analysis, and management. Cancer Res. 2017, 77, e83–e86. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Gutman, D.; Cobb, J.; Somanna, D.; Park, Y.; Wang, F.; Kurc, T.; Saltz, J.H.; Brat, D.J.; Cooper, L.A.D.; Kong, J. Cancer digital slide archive: An informatics resource to support integrated in silico analysis of TCGA pathology data. J. Am. Med. Inform. Assoc. 2013, 20, 1091–1098. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Haralick, R.; Shanmugan, K.; Dinstein, I. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, 3, 610–621. [Google Scholar] [CrossRef] [Green Version]

- Galloway, M.M. Texture analysis using gray level run lengths. Comput. Graph. Image Processing 1975, 4, 172–179. [Google Scholar] [CrossRef]

- Thibault, G.; Fertil, B.; Navarro, C.; Pereira, S.; Cau, P.; Levy, N.; Sequeira, J.; Mari, J. Texture Indexes and Gray Level Size Zone Matrix. Application to Cell Nuclei Classification. In Proceedings of the Pattern Recognition and Information Processing (PRIP), Minsk, Belarus, 19–21 May 2009; pp. 140–145. [Google Scholar]

- Xiao, Y.; Wu, J.; Lin, Z.; Zhao, X. A deep learning-based multi-model ensemble method for cancer prediction. Comput. Methods Programs Biomed. 2018, 153, 1–9. [Google Scholar] [CrossRef] [PubMed]

- Li, Y. Study of cardiovascular disease prediction model based on random forest in eastern China. Sci. Rep. 2020, 10, 5245. [Google Scholar] [CrossRef] [Green Version]

- Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; ACM: San Francisco, CA, USA, 2016; pp. 785–794. [Google Scholar]

- Derara, D.R. Diagnosis of Diabetes Mellitus Using Gradient Boosting Machine (LightGBM). Diagnostics 2021, 11, 1714. [Google Scholar] [CrossRef]

- Duda, R.O.; Peter, E.H.; David, G.S. Pattern Classification; Wiley-Interscience: Hoboken, NJ, USA, 2012. [Google Scholar]

- Huang, S. Applications of Support Vector Machine (SVM) Learning in Cancer Genomics. Cancer Genom. Proteom. 2018, 15, 41–51. [Google Scholar] [CrossRef] [Green Version]

- Chen, J.H.; Asch, S.M. Machine learning and prediction in medicine-beyond the peak of inflated expectations. N. Engl. J. Med. 2017, 376, 2507–2509. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Cabitza, F.; Rasoini, R.; Gensini, G.F. Unintended consequences of machine learning in medicine. JAMA 2017, 318, 517–518. [Google Scholar] [CrossRef] [PubMed]

- Fuchs, T.J.; Wild, P.J.; Moch, H.; Buhmann, J.M. Computational pathology analysis of tissue microarrays predicts survivalof renal clear cell carcinoma patients. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, New York, NY, USA, 6–10 September 2008; Springer: Berlin/Heidelberg, Germany, 2008; pp. 1–8. [Google Scholar]

- Yu, K.-H.; Zhang, C.; Berry, G.J.; Altman, R.; Ré, C.; Rubin, D.L.; Snyder, M. Predicting non-small cell lung cancer prognosis by fully automated microscopic pathology image features. Nat. Commun. 2016, 7, 12474. [Google Scholar] [CrossRef] [Green Version]

- Ertosun, M.G.; Rubin, D.L. Automated grading of gliomas using deep learning in digital pathology images: A modular approach with ensemble of convolutional neural networks. AMIA Annu. Symp. Proc. 2015, 2015, 1899–1908. [Google Scholar]

- Poostchi, M.; Silamut, K.; Maude, R.J.; Jaeger, S.; Thoma, G. Image analysis and machine learning for detecting malaria. Transl. Res. 2018, 194, 36–55. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Encarnacion-Rivera, L.; Foltz, S.; Hartzell, H.C.; Choo, H. Myosoft: An automated muscle histology analysis tool using machine learning algorithm utilizing FIJI/ImageJ software. PLoS ONE 2020, 15, e0229041. [Google Scholar] [CrossRef] [PubMed]

- Kastenschmidt, J.M.; Ellefsen, K.L.; Mannaa, A.H.; Giebel, J.J.; Yahia, R.; Ayer, R.E.; Pham, P.; Rios, R.; Vetrone, S.A.; Mozaffar, T.; et al. QuantiMus: A Machine Learning-Based Approach for High Precision Analysis of Skeletal Muscle Morphology. Front. Physiol. 2019, 10, 2019. [Google Scholar] [CrossRef] [PubMed]

- Kabeya, Y.; Okubo, M.; Yonezawa, S.; Nakano, H.; Inoue, M.; Ogasawara, M.; Saito, Y.; Tanboon, J.; Indrawati, L.A.; Kumutpongpanich, T.; et al. Deep convolutional neural network-based algorithm for muscle biopsy diagnosis. Lab Investig. 2021, 102, 220–226. [Google Scholar] [CrossRef]

- Xie, Y.; Liu, F.; Xing, F.; Yang, L. Deep Learning for Muscle Pathology Image Analysis. In Deep Learning and Convolutional Neural Networks for Medical Imaging and Clinical Informatics. Advances in Computer Vision and Pattern Recognition; Lu, L., Wang, X., Carneiro, G., Yang, L., Eds.; Springer: Cham, Switzerland, 2019. [Google Scholar] [CrossRef]

- Sarvamangala, D.R.; Kulkarni, R.V. Convolutional neural networks in medical image understanding: A survey. Evol. Intell. 2021, 3, 1–22. [Google Scholar] [CrossRef] [PubMed]

- Fischer, A.H.; Jacobson, K.A.; Rose, J.; Zeller, R. Hematoxylin and eosin staining of tissue and cell sections. Cold Spring Harb. Protoc. 2008, 2008, pdb-prot4986. [Google Scholar] [CrossRef]

- Madabhushi, A.; Lee, G. Image analysis and machine learning in digital pathology: Challenges and opportunities. Med. Image Anal. 2016, 33, 170–175. [Google Scholar] [CrossRef] [Green Version]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).