White Blood Cell Classification Using Texture and RGB Features of Oversampled Microscopic Images

Abstract

1. Introduction

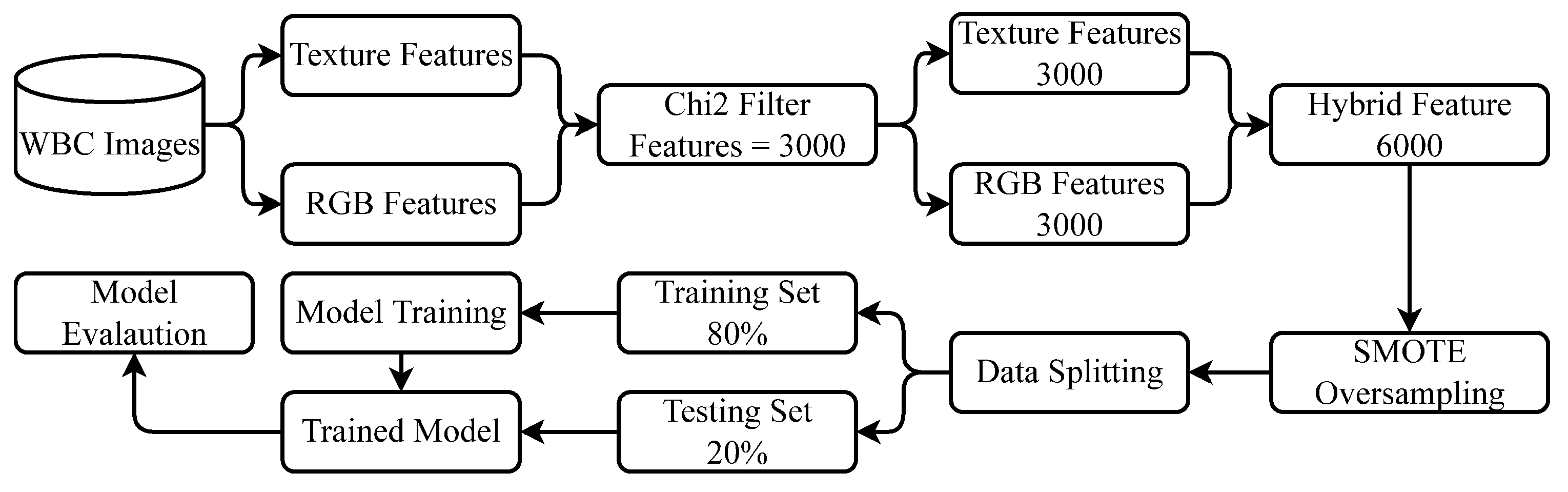

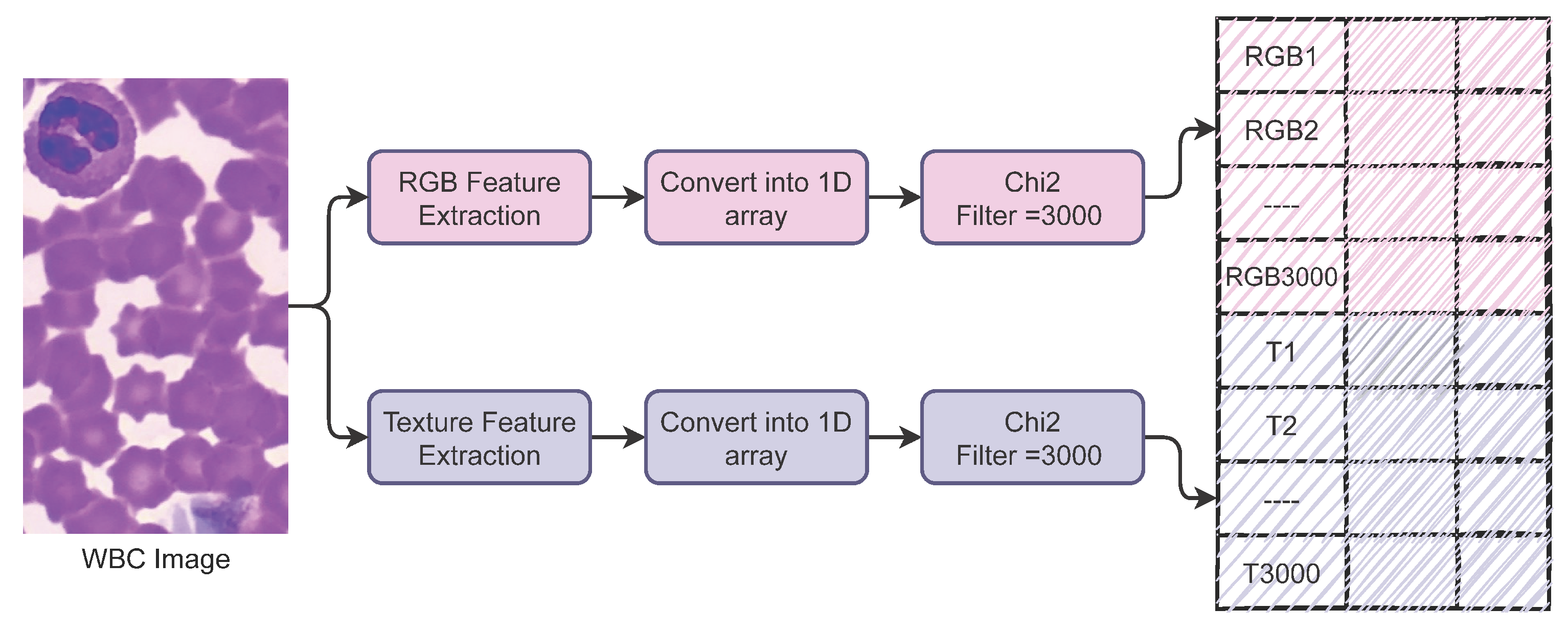

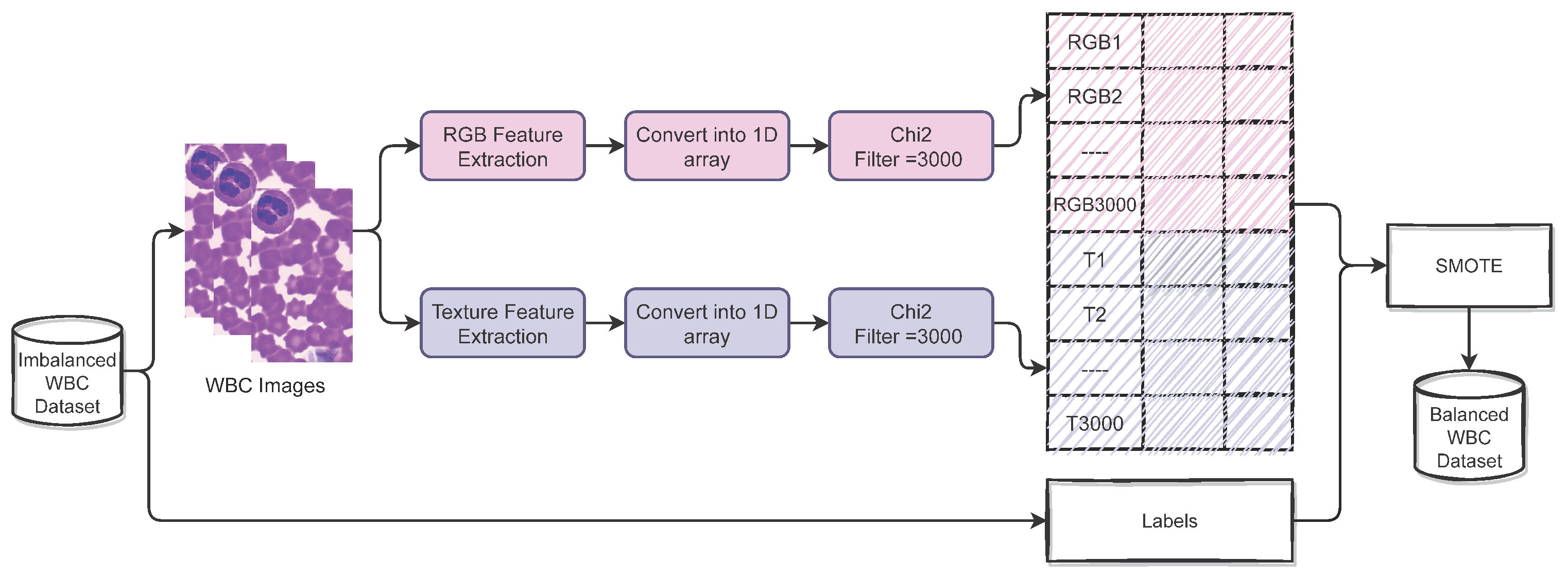

- An improved feature set is obtained by combining texture features and RGB features, yielding a hybrid feature set that correlates more strongly with the target classes and supports higher accuracy. Chi-squared (Chi2) is then used to select an equal number of important features from each feature type for model training. The models are evaluated using texture features and RGB features separately, in comparison with the proposed hybrid features.

- The imbalanced distribution of the WBC classes is tackled using data resampling, which helps to reduce model over-fitting. The synthetic minority oversampling technique (SMOTE) is applied in this study, and its influence on the performance of the machine learning models is evaluated.

- Besides various machine learning models such as decision tree (DT), random forest (RF), k-nearest neighbor (KNN), and support vector machine (SVM), state-of-the-art pre-trained deep learning models are also employed, including ResNet50 and VGG16. In addition, a custom-designed CNN is used. Experiments are performed using the original dataset, the augmented dataset, and the oversampled dataset.

- The performance of all models is analyzed using several evaluation metrics. Furthermore, k-fold cross-validation and statistical t-tests are carried out. Additionally, the proposed approach is compared with recent state-of-the-art approaches in terms of accuracy and response time.

2. Related Work

3. Materials and Methods

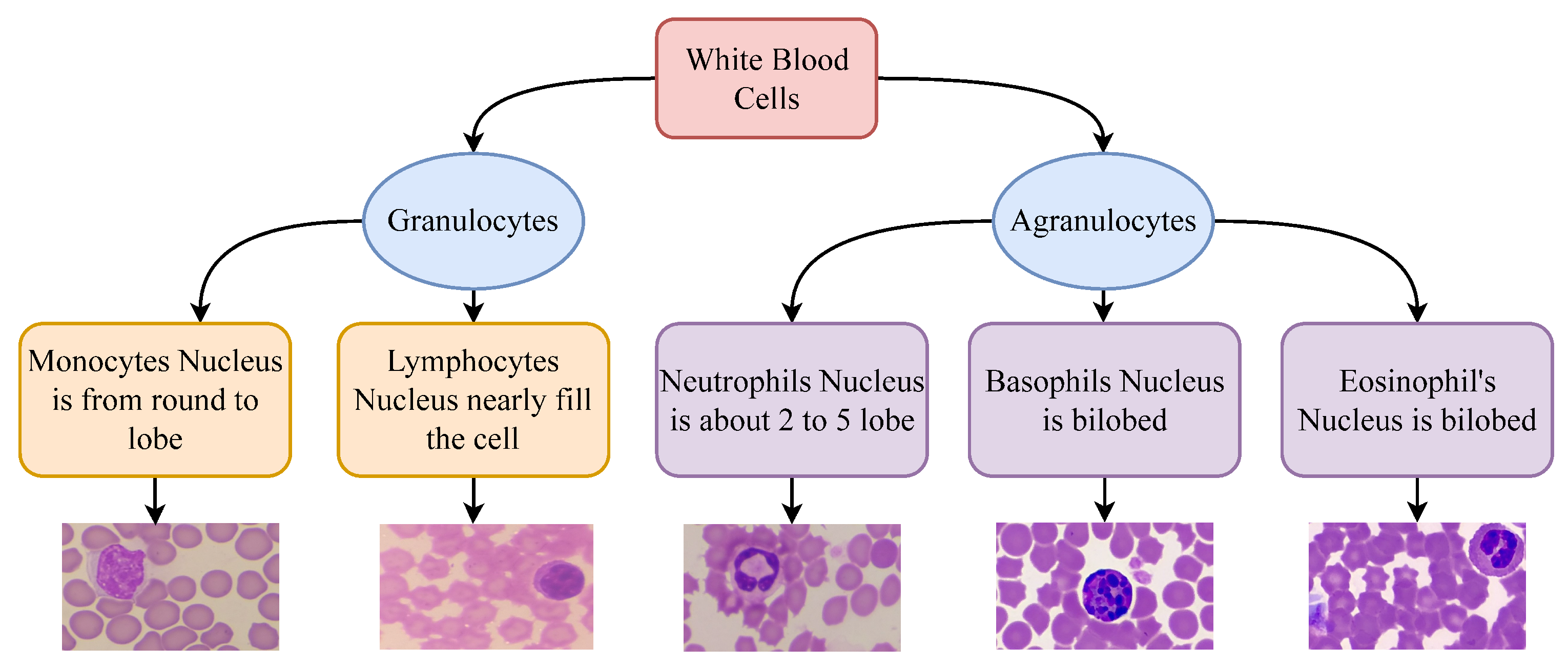

3.1. White Blood Cells

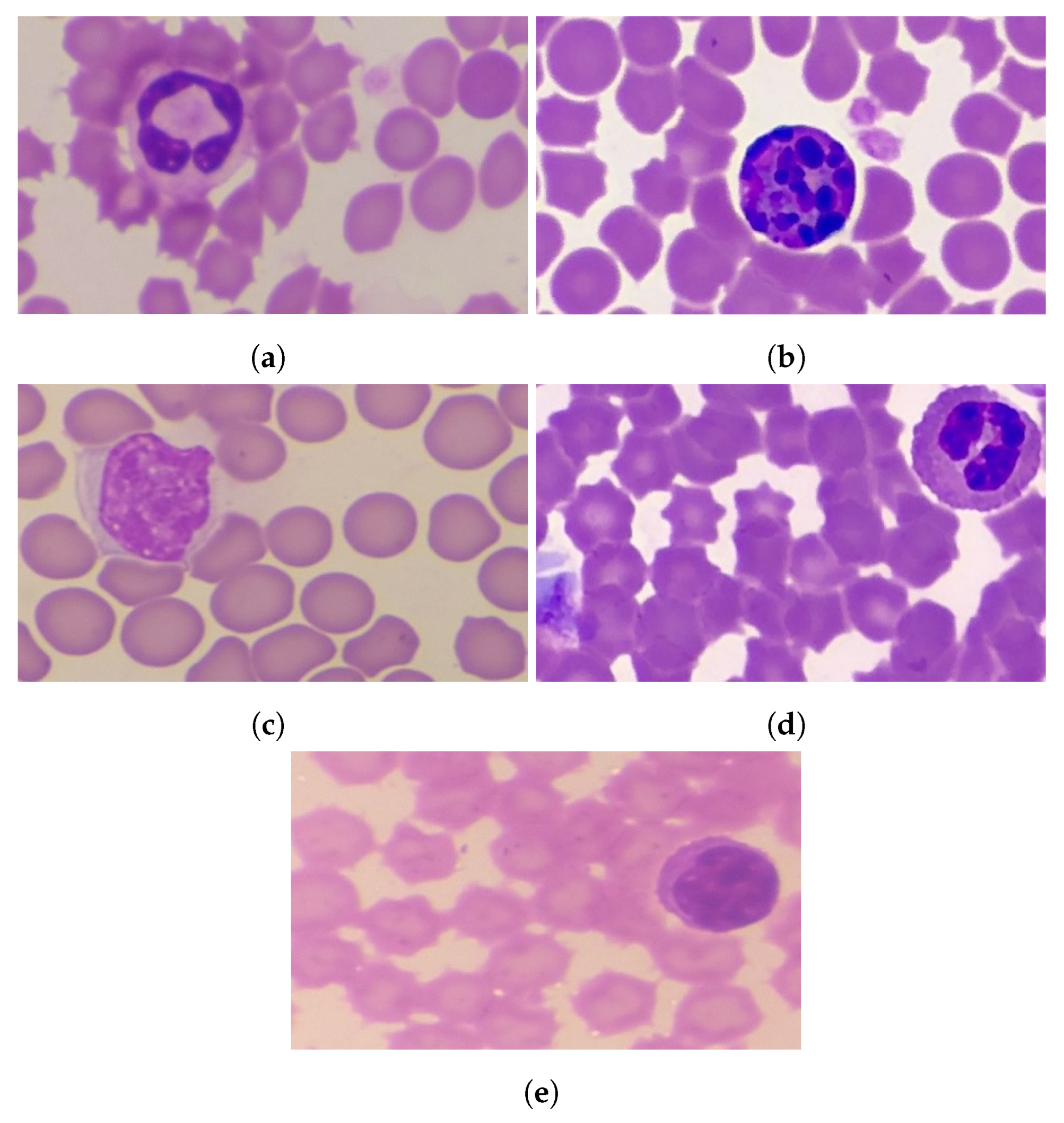

- Basophils comprise less than 1% of WBCs. They are about twice the size of a red blood cell, with a bilobed nucleus. These cells release heparin, which prevents blood clotting, and histamine, which causes inflammation. They release antibodies and antibiotics, which protect the body from the effects of any foreign objects. Figure 1b shows a sample image of the basophil type of WBC.

- Monocytes form approximately 3% of the total WBCs. They are about two to three times larger than red blood cells, and the nucleus is almost round to lobed in shape. They produce macrophages, which can destroy larger particles via phagocytosis. Monocytes stay in the blood for about 8 to 10 h, after which they move toward the lymphoid tissue and become macrophages. The monocyte type of WBC is shown in Figure 1c.

- Eosinophils comprise about 2% of WBCs. They are about twice the size of a red blood cell, and their nucleus is bilobed. They inactivate inflammation-producing substances and attack parasites and worms. Figure 1d shows a sample image of the eosinophil type of WBC.

3.2. Flow of Implemented Methodology

3.3. WBC Dataset

3.4. Feature Engineering

3.4.1. RGB Features

3.4.2. Texture Features

3.4.3. Chi2

3.5. Synthetic Minority Oversampling Technique

3.6. Data Splitting

3.7. Machine Learning Models

3.8. WBC Classification

| Algorithm 1: WBC classification algorithm |
|---|
| Input: WBC Images |
| Result: WBC type detection ⟶ (Neutrophil, Lymphocyte, Monocyte, Eosinophil, and Basophil) |
| initialization; |
| loop (Img in Images) |
| RGB (Img) |
| Txt (Img) |
| loop end |
| HF |
| PFS |
| Prediction Scores |
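
Algorithm 1 can be read as a small pipeline: extract RGB and texture features for each image, join them into hybrid features (HF), and pass the selected features to a classifier. The following is a minimal sketch under stated assumptions, not the authors' implementation. The helper names (`rgb_features`, `texture_features`, `classify_images`), the gray-level stand-in for the texture descriptor, and the `select` and `predict` callables are all hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]  # rows of (R, G, B) pixels

CLASSES = ["Neutrophil", "Lymphocyte", "Monocyte", "Eosinophil", "Basophil"]


def rgb_features(img: Image) -> List[float]:
    """RGB(Img): flatten every channel value of every pixel into one vector."""
    return [float(channel) for row in img for pixel in row for channel in pixel]


def texture_features(img: Image) -> List[float]:
    """Txt(Img): stand-in texture descriptor (mean gray level per pixel).

    Assumption: the actual texture extractor is not specified in this section.
    """
    return [sum(pixel) / 3.0 for row in img for pixel in row]


def classify_images(
    images: Sequence[Image],
    select: Callable[[List[float]], List[float]],
    predict: Callable[[List[float]], str],
) -> List[str]:
    """Extract both feature types per image, then build HF and predict."""
    rgb_all, txt_all = [], []
    for img in images:  # loop (Img in Images)
        rgb_all.append(rgb_features(img))
        txt_all.append(texture_features(img))
    hybrid = [rgb + txt for rgb, txt in zip(rgb_all, txt_all)]  # HF
    return [predict(select(features)) for features in hybrid]


# Toy usage: a 2x2 image, an identity selector, and a dummy rule-based model.
toy_image: Image = [[(200, 10, 10), (190, 20, 15)], [(180, 30, 20), (170, 40, 25)]]
toy_predict = lambda feats: CLASSES[0] if feats[0] > 128 else CLASSES[1]
labels = classify_images([toy_image], select=lambda f: f, predict=toy_predict)
```

In a real run, `select` would apply the Chi2-selected feature indices and `predict` would wrap a trained model such as RF.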

4. Results

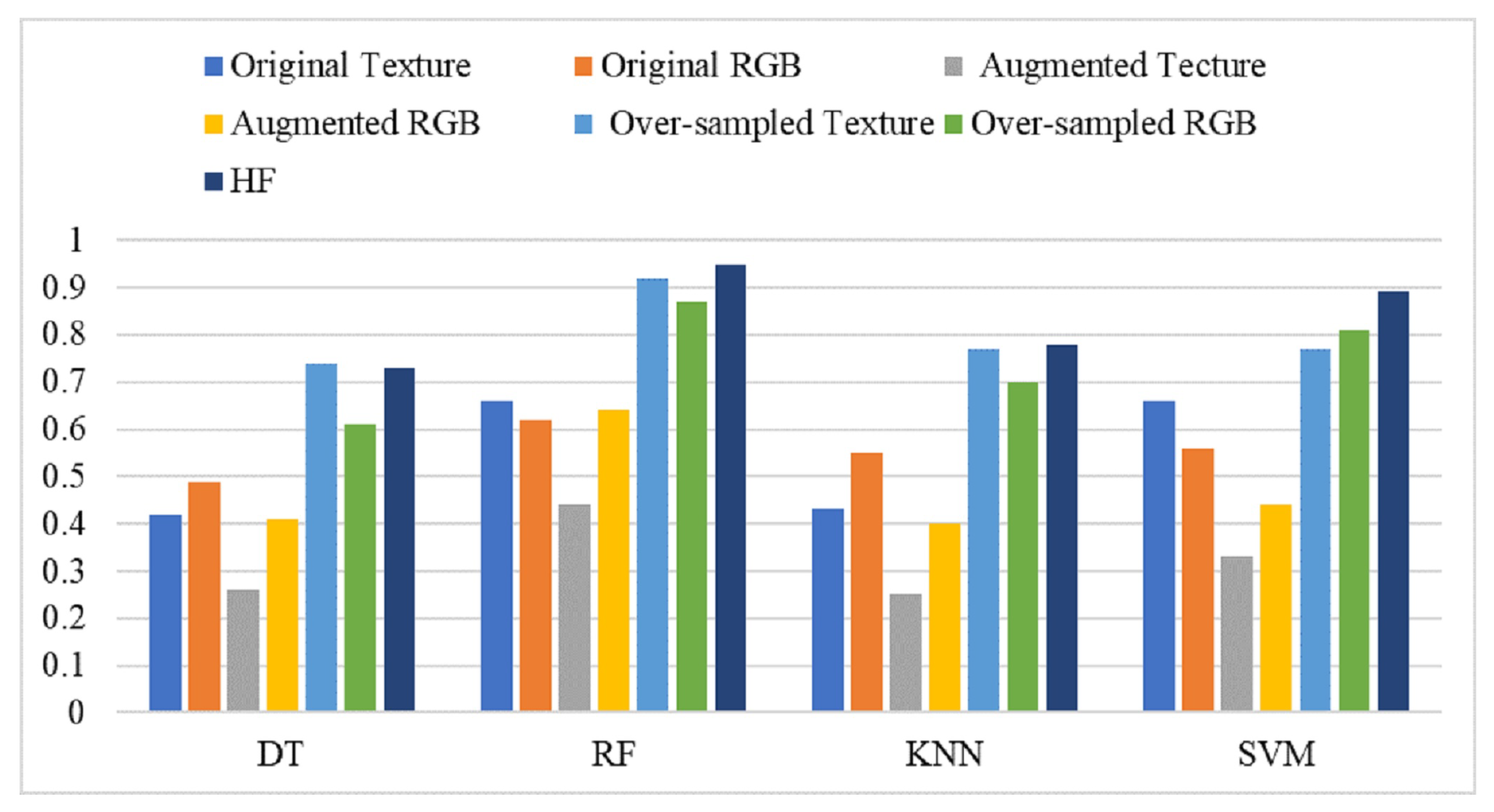

4.1. Results Using the Original Dataset

4.2. Results Using the Augmented Dataset

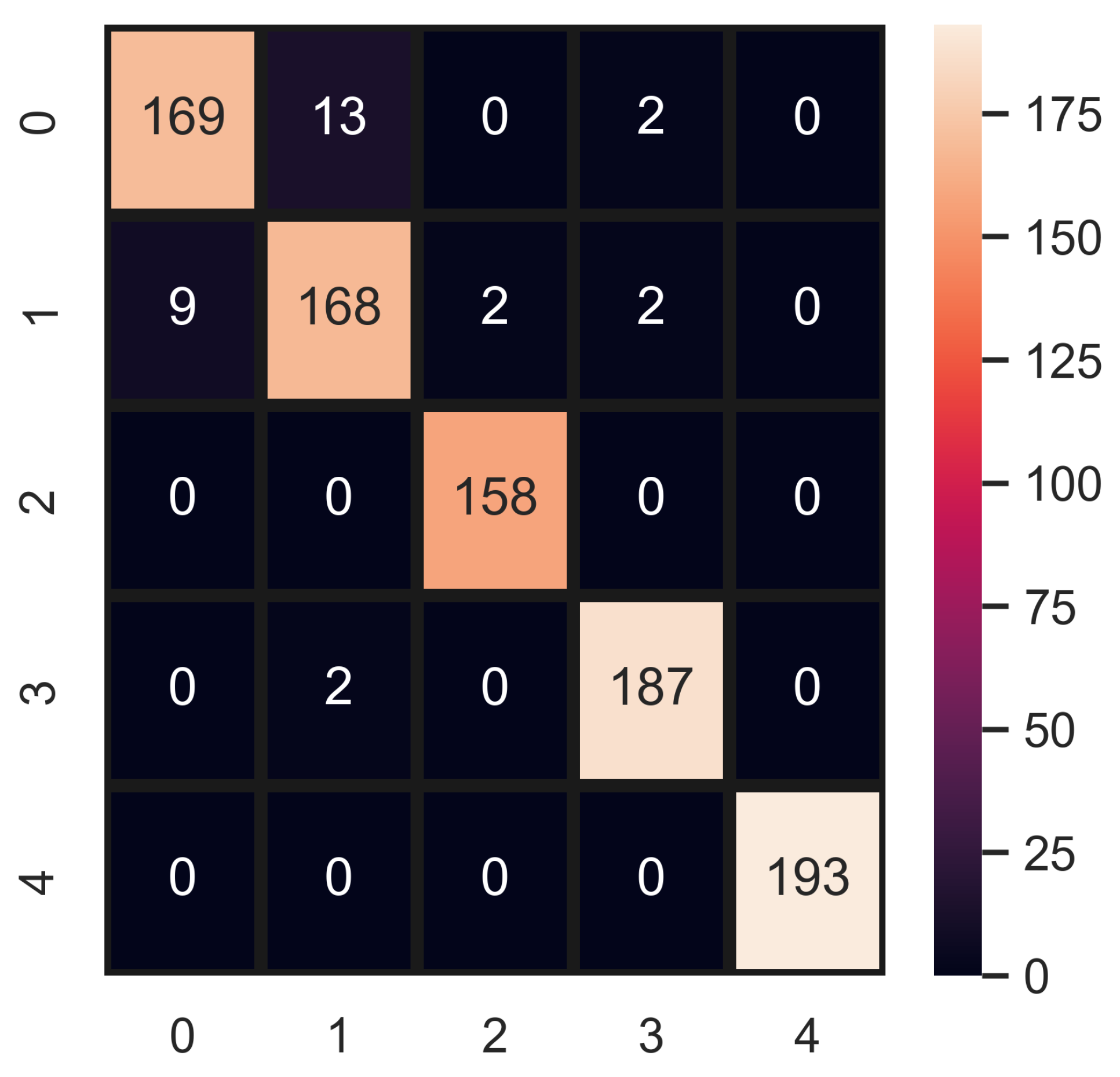

4.3. Performance of Models on Hybrid Features

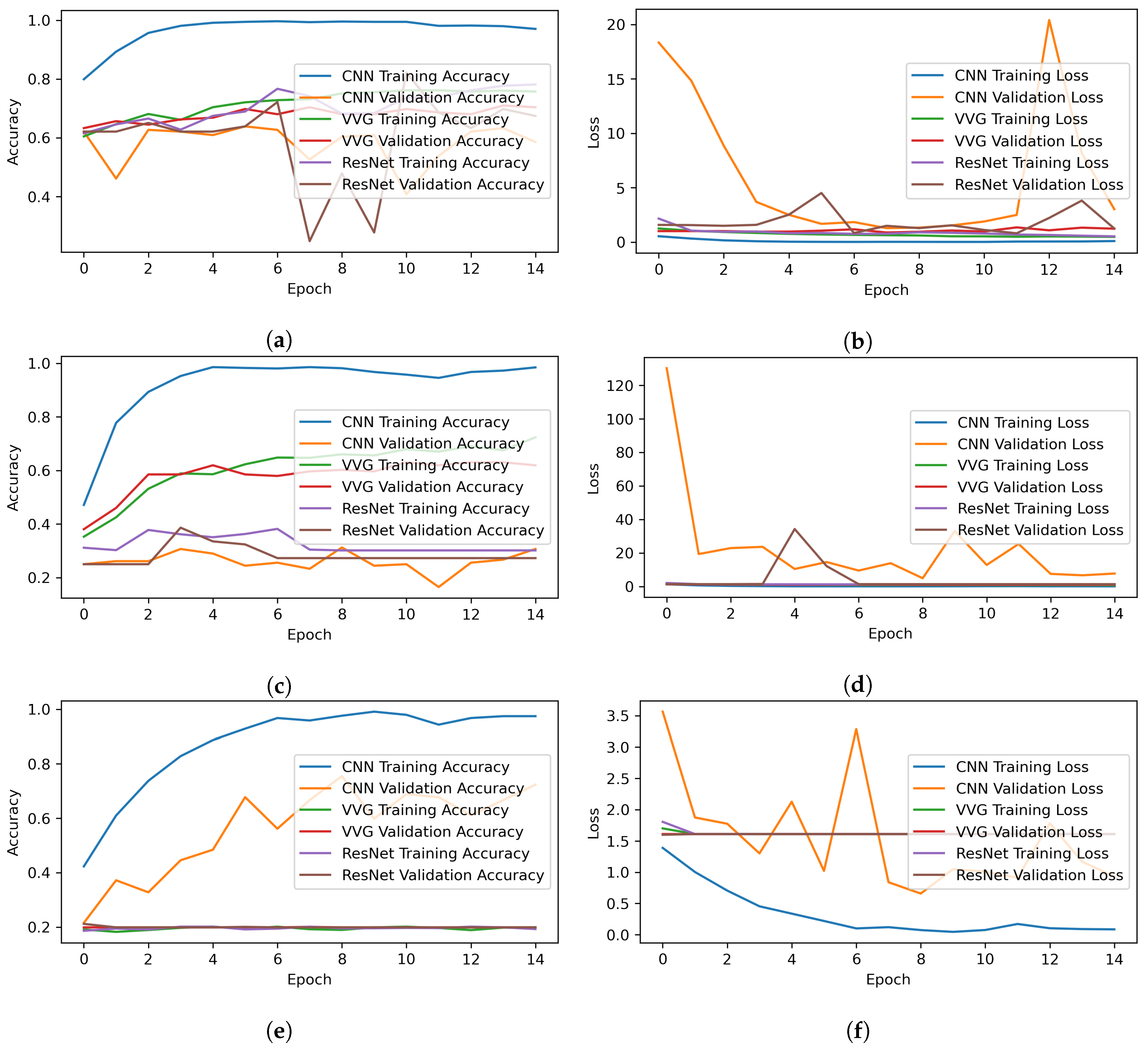

4.4. Performance Analysis of State-of-the-Art Deep Learning Models

4.5. Validation of the Proposed Approach

4.6. Best Performer Optimization Using Particle Swarm Optimization

4.7. Performance Comparison with State-of-the-Art Approaches

4.8. Statistical t-test

- Null hypothesis ($H_0$): the two compared results are statistically equal, i.e., there is no significant difference between them. If this holds, the null hypothesis is accepted.

- Alternative hypothesis ($H_a$): the two compared results are not statistically equal, i.e., there is a significant difference between them. In this case, the null hypothesis is rejected and the alternative hypothesis is accepted.
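
The decision between the two hypotheses can be illustrated with a small computation. The sketch below assumes a paired t-test over per-fold accuracies, which this section does not confirm. The function name `paired_t_statistic` and the accuracy values are hypothetical. The p-value is not computed here, since it needs the t-distribution, which a statistics library would supply (for example, `scipy.stats.ttest_rel`).

```python
import math
from statistics import mean, stdev


def paired_t_statistic(a, b):
    """Return the t statistic for paired samples a and b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equally sized samples with at least 2 values")
    diffs = [x - y for x, y in zip(a, b)]
    spread = stdev(diffs)  # sample standard deviation of the differences
    if spread == 0:
        raise ValueError("differences have zero variance")
    return mean(diffs) / (spread / math.sqrt(len(diffs)))


# Hypothetical per-fold accuracies, for illustration only.
proposed = [0.96, 0.97, 0.95, 0.98, 0.97]
baseline = [0.64, 0.66, 0.62, 0.67, 0.65]

t_value = paired_t_statistic(proposed, baseline)
T_CRITICAL = 2.776  # two-sided critical value, alpha = 0.05, 4 degrees of freedom
reject_null = abs(t_value) > T_CRITICAL
```

When `reject_null` is true, the difference between the two result sets is treated as statistically significant.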

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Phosri, S.; Jangpromma, N.; Chang, L.C.; Tan, G.T.; Wongwiwatthananukit, S.; Maijaroen, S.; Anwised, P.; Payoungkiattikun, W.; Klaynongsruang, S. Siamese crocodile white blood cell extract inhibits cell proliferation and promotes autophagy in multiple cancer cell lines. J. Microbiol. Biotechnol. 2018, 28, 1007–1021.

2. McKenzie, F.E.; Prudhomme, W.A.; Magill, A.J.; Forney, J.R.; Permpanich, B.; Lucas, C.; Gasser, R.A., Jr.; Wongsrichanalai, C. White blood cell counts and malaria. J. Infect. Dis. 2005, 192, 323–330.

3. Monteiro, A.C.B.; França, R.P.; Arthur, R.; Iano, Y. AI Approach Based on Deep Learning for Classification of White Blood Cells as a for e-Healthcare Solution. In Intelligent Interactive Multimedia Systems for e-Healthcare Applications; Springer: Berlin/Heidelberg, Germany, 2022; pp. 351–373.

4. White Blood Cell. Available online: https://www.britannica.com/science/white-blood-cell (accessed on 10 April 2022).

5. Zhu, B.; Feng, X.; Jiang, C.; Mi, S.; Yang, L.; Zhao, Z.; Zhang, Y.; Zhang, L. Correlation between white blood cell count at admission and mortality in COVID-19 patients: A retrospective study. BMC Infect. Dis. 2021, 21, 574.

6. Özyurt, F. A fused CNN model for WBC detection with MRMR feature selection and extreme learning machine. Soft Comput. 2020, 24, 8163–8172.

7. Hegde, R.B.; Prasad, K.; Hebbar, H.; Singh, B.M.K. Comparison of traditional image processing and deep learning approaches for classification of white blood cells in peripheral blood smear images. Biocybern. Biomed. Eng. 2019, 39, 382–392.

8. Bandyopadhyay, S.; Maulik, U.; Roy, D. Gene identification: Classical and computational intelligence approaches. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2007, 38, 55–68.

9. Min, S.; Lee, B.; Yoon, S. Deep learning in bioinformatics. Briefings Bioinform. 2017, 18, 851–869.

10. Bergeron, B.P. Bioinformatics Computing; Prentice Hall Professional: Hoboken, NJ, USA, 2003.

11. Khouani, A.; El Habib Daho, M.; Mahmoudi, S.A.; Chikh, M.A.; Benzineb, B. Automated recognition of white blood cells using deep learning. Biomed. Eng. Lett. 2020, 10, 359–367.

12. Patil, A.; Patil, M.; Birajdar, G. White blood cells image classification using deep learning with canonical correlation analysis. IRBM 2021, 42, 378–389.

13. Wang, Q.; Wang, J.; Zhou, M.; Li, Q.; Wen, Y.; Chu, J. A 3D attention networks for classification of white blood cells from microscopy hyperspectral images. Opt. Laser Technol. 2021, 139, 106931.

14. Manthouri, M.; Aghajari, Z.; Safary, S. Computational Intelligence Method for Detection of White Blood Cells Using Hybrid of Convolutional Deep Learning and SIFT. Comput. Math. Methods Med. 2022, 2022, 9934144.

15. Girdhar, A.; Kapur, H.; Kumar, V. Classification of White blood cell using Convolution Neural Network. Biomed. Signal Process. Control 2022, 71, 103156.

16. Cheuque, C.; Querales, M.; León, R.; Salas, R.; Torres, R. An Efficient Multi-Level Convolutional Neural Network Approach for White Blood Cells Classification. Diagnostics 2022, 12, 248.

17. Habibzadeh, M.; Jannesari, M.; Rezaei, Z.; Baharvand, H.; Totonchi, M. Automatic white blood cell classification using pre-trained deep learning models: ResNet and Inception. In Proceedings of the Tenth International Conference on Machine Vision (ICMV 2017), Vienna, Austria, 13–15 November 2017; International Society for Optics and Photonics: Bellingham, WA, USA, 2018; Volume 10696, p. 1069612.

18. Sharma, S.; Gupta, S.; Gupta, D.; Juneja, S.; Gupta, P.; Dhiman, G.; Kautish, S. Deep Learning Model for the Automatic Classification of White Blood Cells. Comput. Intell. Neurosci. 2022, 2022, 7384131.

19. WBC Image Dataset. Available online: https://github.com/zxaoyou/segmentation_WBC (accessed on 23 October 2022).

20. BCCD Dataset. Available online: https://www.kaggle.com/datasets/paultimothymooney/blood-cells (accessed on 23 October 2022).

21. What Are White Blood Cells? Available online: https://www.urmc.rochester.edu/encyclopedia/content.aspx?ContentID=35&ContentTypeID=160 (accessed on 23 October 2022).

22. Van der Walt, S.; Schönberger, J.L.; Nunez-Iglesias, J.; Boulogne, F.; Warner, J.D.; Yager, N.; Gouillart, E.; Yu, T. Scikit-image: Image processing in Python. PeerJ 2014, 2, e453.

23. Alipo-on, J.R.; Escobar, F.I.; Novia, J.L.; Atienza, M.M.; Mana-ay, S.; Tan, M.J.; AlDahoul, N.; Yu, E. Dataset for Machine Learning-Based Classification of White Blood Cells of the Juvenile Visayan Warty Pig. 2022. Available online: https://ieee-dataport.org/documents/dataset-machine-learning-based-classification-white-blood-cells-juvenile-visayan-warty-pig (accessed on 15 July 2022).

24. Álvarez, M.J.; González, E.; Bianconi, F.; Armesto, J.; Fernández, A. Colour and texture features for image retrieval in granite industry. Dyna 2010, 77, 121–130.

25. Gosain, M.; Kaur, S.; Gupta, S. Review of Rotation Invariant Classification Methods for Color Texture Analysis. 2021. Available online: https://www.academia.edu/45598787/IRJET_Review_of_Rotation_Invariant_Classification_Methods_for_Color_Texture_Analysis?from=cover_page (accessed on 15 July 2022).

26. Liu, H.; Setiono, R. Chi2: Feature selection and discretization of numeric attributes. In Proceedings of the 7th IEEE International Conference on Tools with Artificial Intelligence, Herndon, VA, USA, 5–8 November 1995; pp. 388–391.

27. Rupapara, V.; Rustam, F.; Shahzad, H.F.; Mehmood, A.; Ashraf, I.; Choi, G.S. Impact of SMOTE on imbalanced text features for toxic comments classification using RVVC model. IEEE Access 2021, 9, 78621–78634.

28. Rustam, F.; Ashraf, I.; Mehmood, A.; Ullah, S.; Choi, G.S. Tweets classification on the base of sentiments for US airline companies. Entropy 2019, 21, 1078.

29. Mendieta, M.; Neff, C.; Lingerfelt, D.; Beam, C.; George, A.; Rogers, S.; Ravindran, A.; Tabkhi, H. A Novel Application/Infrastructure Co-design Approach for Real-time Edge Video Analytics. In Proceedings of the 2019 SoutheastCon, Raleigh, NC, USA, 10–14 April 2019; pp. 1–7.

30. George, A.; Ravindran, A. Distributed middleware for edge vision systems. In Proceedings of the 2019 IEEE 16th International Conference on Smart Cities: Improving Quality of Life Using ICT & IoT and AI (HONET-ICT), Charlotte, NC, USA, 6–9 October 2019; pp. 193–194.

31. Shafique, R.; Siddiqui, H.U.R.; Rustam, F.; Ullah, S.; Siddique, M.A.; Lee, E.; Ashraf, I.; Dudley, S. A novel approach to railway track faults detection using acoustic analysis. Sensors 2021, 21, 6221.

32. George, A.; Ravindran, A.; Mendieta, M.; Tabkhi, H. Mez: An adaptive messaging system for latency-sensitive multi-camera machine vision at the iot edge. IEEE Access 2021, 9, 21457–21473.

33. Rustam, F.; Reshi, A.A.; Aljedaani, W.; Alhossan, A.; Ishaq, A.; Shafi, S.; Lee, E.; Alrabiah, Z.; Alsuwailem, H.; Ahmad, A.; et al. Vector mosquito image classification using novel RIFS feature selection and machine learning models for disease epidemiology. Saudi J. Biol. Sci. 2022, 29, 583–594.

34. Reshi, A.A.; Rustam, F.; Mehmood, A.; Alhossan, A.; Alrabiah, Z.; Ahmad, A.; Alsuwailem, H.; Choi, G.S. An efficient CNN model for COVID-19 disease detection based on X-ray image classification. Complexity 2021, 2021, 6621607.

35. Brijain, M.; Patel, R.; Kushik, M.; Rana, K. A survey on decision tree algorithm for classification. Int. J. Eng. Dev. Res. 2014, 1, 5.

36. Patange, A.; Jegadeeshwaran, R.; Bajaj, N.; Khairnar, A.; Gavade, N. Application of Machine Learning for Tool Condition Monitoring in Turning. Sound Vib. 2022, 56, 127–145.

37. Kotsiantis, S.B. Decision trees: A recent overview. Artif. Intell. Rev. 2013, 39, 261–283.

38. Qi, Y. Random forest for bioinformatics. In Ensemble Machine Learning; Springer: Berlin/Heidelberg, Germany, 2012; pp. 307–323.

39. Sarkar, M.; Leong, T.Y. Application of K-nearest neighbors algorithm on breast cancer diagnosis problem. In Proceedings of the AMIA Symposium, American Medical Informatics Association, Los Angeles, CA, USA, 4–8 November 2000; p. 759.

40. Suthaharan, S. Support vector machine. In Machine Learning Models and Algorithms for Big Data Classification; Springer: Berlin/Heidelberg, Germany, 2016; pp. 207–235.

41. Ji, Q.; Huang, J.; He, W.; Sun, Y. Optimized deep convolutional neural networks for identification of macular diseases from optical coherence tomography images. Algorithms 2019, 12, 51.

42. Thieu, N.V.; Mirjalili, S. MEALPY: A Framework of The State-of-the-Art Meta-Heuristic Algorithms in Python. Available online: https://pypi.org/project/mealpy/1.0.2/ (accessed on 15 July 2022).

43. Deo, T.Y.; Patange, A.D.; Pardeshi, S.S.; Jegadeeshwaran, R.; Khairnar, A.N.; Khade, H.S. A white-box SVM framework and its swarm-based optimization for supervision of toothed milling cutter through characterization of spindle vibrations. arXiv 2021, arXiv:2112.08421.

44. Rustam, F.; Siddique, M.A.; Siddiqui, H.U.R.; Ullah, S.; Mehmood, A.; Ashraf, I.; Choi, G.S. Wireless capsule endoscopy bleeding images classification using CNN based model. IEEE Access 2021, 9, 33675–33688.

45. Omar, B.; Rustam, F.; Mehmood, A.; Choi, G.S. Minimizing the overlapping degree to improve class-imbalanced learning under sparse feature selection: Application to fraud detection. IEEE Access 2021, 9, 28101–28110.

| Ref. | Year | Approach | Aim and Results | Dataset |

|---|---|---|---|---|

| [17] | 2018 | Pre-trained models ResNet and Inception | WBC detection, Achieved 99.84% and 99.46% accuracy rates with ResNet V1 152 and ResNet 101 | WBC microscopic image dataset [19]. |

| [7] | 2019 | CNN with handcrafted features | WBC detection: CNN achieved 99% accuracy | RBCs, WBCs images dataset [7]. The augmented dataset consists of 2697 cropped images in the 'training set' and 816 cropped images in the 'test set'. |

| [6] | 2020 | CNN-based models features and ELM model | WBC detection, ELM achieved 96.03% accuracy. | BCCD dataset, Microscopic WBC dataset consists of 12,494 images, including 3120 eosinophils, 3108 lymphocytes, 3095 monocytes, and 3171 neutrophils [20]. |

| [12] | 2021 | Canonical Correlation Analysis (CCA) applied to (CNN + RNN) | WBC types classification, Model achieved 95.89% accuracy | BCCD dataset [20] and Kaggle dataset |

| [13] | 2021 | 3D CNN and 3D CNN with attention module | WBC types classification, Model achieved 97.72% accuracy score. | Three independent datasets with 215 patient samples. |

| [14] | 2022 | SIFT features with CNN model | WBC detection, Models achieved LISC = 95.84% and WBCis = 97.33% accuracies. | LISC and WBCis datasets [19]. |

| [15] | 2022 | CNN model | WBC types classification, Model achieved 98.55% accuracy score. | WBC Kaggle dataset [20]. |

| [16] | 2022 | Multi-level CNN model | WBC types classification, Model achieved 98.4% accuracy score. | Blood Cell Detection (BCD) dataset, Complete Blood Count (CBC) dataset, White Blood Cells (WBC) dataset, Kaggle Blood Cell Images (KBC) dataset, Leukocyte Images for Segmentation and Classification (LISC) dataset |

| [18] | 2022 | DenseNet121 model | WBC types classification, Model achieved 98.84% accuracy score. | KBC dataset |

| WBC Type | Original | Augmented |

|---|---|---|

| Neutrophil | 319 | 194 |

| Lymphocyte | 905 | - |

| Monocyte | 82 | 418 |

| Eosinophil | 82 | 405 |

| Basophil | 20 | 447 |

| Feature Type | No. of Features |

|---|---|

| RGB | 67,500 |

| Texture | 22,500 |
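
The two counts above stand in a 3:1 ratio, which is what per-pixel features would give for a three-channel color image versus a single-channel texture map. The short check below assumes a 150 × 150 input image; that size is inferred from the counts and is not stated in this section. The function name `feature_counts` is hypothetical.

```python
def feature_counts(height: int, width: int, channels: int = 3):
    """Return (RGB feature count, texture feature count) for per-pixel features."""
    rgb = height * width * channels  # one value per pixel per color channel
    texture = height * width         # one value per pixel
    return rgb, texture


# Assumed image size of 150 x 150 pixels (inferred, not given in the source).
rgb_count, texture_count = feature_counts(150, 150)
```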

| Feature | Total No. of Features | Chi2 |

|---|---|---|

| RGB | 67,500 | 3000 |

| Texture | 22,500 | 3000 |

| HF | 6000 | |
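
The table above shows each feature type reduced to the same number of features before the two are joined into the hybrid features (3000 + 3000 = 6000). A minimal sketch of that step follows. It scores non-negative features with an observed-versus-expected chi-squared statistic, the formulation commonly used for this purpose, and keeps the top `k` per feature type. The function names and the toy data are hypothetical, and the authors' exact procedure may differ.

```python
from typing import List, Sequence


def chi2_scores(X: Sequence[Sequence[float]], y: Sequence[int]) -> List[float]:
    """Chi-squared score per feature: sum over classes of (O - E)^2 / E."""
    n_samples, n_features = len(X), len(X[0])
    totals = [sum(row[j] for row in X) for j in range(n_features)]
    scores = [0.0] * n_features
    for label in sorted(set(y)):
        rows = [row for row, lab in zip(X, y) if lab == label]
        prior = len(rows) / n_samples  # class frequency
        for j in range(n_features):
            observed = sum(row[j] for row in rows)
            expected = prior * totals[j]
            if expected > 0:
                scores[j] += (observed - expected) ** 2 / expected
    return scores


def select_top_k(X, scores: List[float], k: int):
    """Keep the k highest-scoring columns, preserving their original order."""
    ranked = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)
    keep = sorted(ranked[:k])
    return [[row[j] for j in keep] for row in X]


def hybrid_features(rgb, texture, y, k: int):
    """Select k features from each type and concatenate them per sample."""
    rgb_sel = select_top_k(rgb, chi2_scores(rgb, y), k)
    txt_sel = select_top_k(texture, chi2_scores(texture, y), k)
    return [r + t for r, t in zip(rgb_sel, txt_sel)]


# Toy data: 4 samples, 2 classes; only one column per feature type is informative.
toy_rgb = [[9, 1, 5], [8, 1, 5], [1, 1, 5], [0, 1, 5]]
toy_texture = [[2, 7], [2, 9], [2, 0], [2, 1]]
toy_labels = [0, 0, 1, 1]
toy_hf = hybrid_features(toy_rgb, toy_texture, toy_labels, k=1)
```

With the counts in the table, the same call would use `k=3000` for each feature type.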

| WBC Type | Original | Augmented | Oversampled |

|---|---|---|---|

| Neutrophil | 319 | 194 | 905 |

| Lymphocyte | 905 | - | 905 |

| Monocyte | 82 | 418 | 905 |

| Eosinophil | 82 | 405 | 905 |

| Basophil | 20 | 447 | 905 |
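
In the Oversampled column every class is raised to the size of the largest class (905). SMOTE does this by creating synthetic minority samples on the line segment between a minority sample and one of its nearest same-class neighbors. The sketch below is a simplified, standard-library illustration of that idea, not the implementation used in the study; the function name, the toy data, and the seed are hypothetical. In practice a library such as imbalanced-learn (`imblearn.over_sampling.SMOTE`) would normally be used.

```python
import math
import random
from collections import Counter


def smote_oversample(X, y, k=5, seed=0):
    """Balance classes by interpolating between same-class nearest neighbors."""
    rng = random.Random(seed)
    counts = Counter(y)
    target = max(counts.values())  # size of the largest class
    X_out, y_out = [list(row) for row in X], list(y)
    for label, count in counts.items():
        members = [row for row, lab in zip(X, y) if lab == label]
        for _ in range(target - count):
            base = rng.choice(members)
            others = [m for m in members if m is not base]
            if not others:  # a single-sample class can only be duplicated
                X_out.append(list(base))
                y_out.append(label)
                continue
            neighbors = sorted(others, key=lambda m: math.dist(base, m))[:k]
            neighbor = rng.choice(neighbors)
            gap = rng.random()  # position along the segment base -> neighbor
            X_out.append([b + gap * (n - b) for b, n in zip(base, neighbor)])
            y_out.append(label)
    return X_out, y_out


# Toy data: 6 majority samples and 2 minority samples in two dimensions.
toy_X = [[5.0, 5.0], [5.5, 5.0], [6.0, 5.5], [5.0, 6.0], [6.5, 6.0], [6.0, 6.5],
         [0.0, 0.0], [2.0, 2.0]]
toy_y = ["Lymphocyte"] * 6 + ["Basophil"] * 2
X_bal, y_bal = smote_oversample(toy_X, toy_y)
```

Oversampling is usually applied to the training portion only, so that synthetic samples do not leak into the test set; whether the study did so is not stated in this section.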

| Class | Training Set | Testing Set | Total |
|---|---|---|---|
| Oversampled Data | | | |
| Neutrophil | 709 | 196 | 905 |
| Lymphocyte | 728 | 177 | 905 |
| Monocyte | 719 | 186 | 905 |
| Eosinophil | 726 | 179 | 905 |
| Basophil | 738 | 167 | 905 |
| Original Data | | | |
| Neutrophil | 249 | 70 | 319 |
| Lymphocyte | 732 | 173 | 905 |
| Monocyte | 69 | 13 | 82 |
| Eosinophil | 59 | 23 | 82 |
| Basophil | 17 | 3 | 20 |

| Model | Hyperparameters | Hyperparameters Tuning |

|---|---|---|

| DT | max_depth = 200 | max_depth = {2 to 500} |

| RF | max_depth = 200, n_estimators = 300 | max_depth = {2 to 500}, n_estimators = {50 to 500} |

| KNN | n_neighbors = 5 | n_neighbors = {2 to 10} |

| SVM | Kernel = poly, C = 4.0 | Kernel = {poly, linear, sigmoid} C = {1.0 to 5.0} |

| Feature | Model | Accuracy | Precision | Recall | F1 |
|---|---|---|---|---|---|
| Texture | DT | 0.42 | 0.19 | 0.19 | 0.19 |
| Texture | RF | 0.66 | 0.13 | 0.20 | 0.16 |
| Texture | KNN | 0.43 | 0.19 | 0.23 | 0.19 |
| Texture | SVM | 0.66 | 0.13 | 0.20 | 0.16 |
| RGB | DT | 0.49 | 0.22 | 0.22 | 0.22 |
| RGB | RF | 0.62 | 0.36 | 0.21 | 0.19 |
| RGB | KNN | 0.55 | 0.23 | 0.21 | 0.20 |
| RGB | SVM | 0.56 | 0.25 | 0.23 | 0.23 |
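
The gap between accuracy and the other metrics in the table above is typical of imbalanced data. For example, accuracy 0.66 with precision 0.13, recall 0.20, and F1 0.16 is exactly what macro-averaged metrics give when a five-class model predicts one class for every sample. The sketch below reproduces those numbers. It assumes macro averaging and a hypothetical test set in which 66 of 100 samples belong to the majority class; neither assumption is confirmed by this section.

```python
def macro_metrics(y_true, y_pred, labels):
    """Return accuracy and macro-averaged precision, recall, and F1."""
    precisions, recalls, f1s = [], [], []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0  # undefined cases count as 0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    n = len(labels)
    return accuracy, sum(precisions) / n, sum(recalls) / n, sum(f1s) / n


LABELS = ["Neutrophil", "Lymphocyte", "Monocyte", "Eosinophil", "Basophil"]

# Hypothetical imbalanced test set: 66% of the samples are lymphocytes.
y_true = (["Lymphocyte"] * 66 + ["Neutrophil"] * 14 + ["Monocyte"] * 8
          + ["Eosinophil"] * 8 + ["Basophil"] * 4)
y_pred = ["Lymphocyte"] * len(y_true)  # a model that always predicts the majority class

rounded = tuple(round(value, 2) for value in macro_metrics(y_true, y_pred, LABELS))
```

This is why accuracy alone is a weak indicator on the original dataset and why precision, recall, and F1 are reported alongside it.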

| Feature | Model | Accuracy | Precision | Recall | F1 |
|---|---|---|---|---|---|
| Texture | DT | 0.26 | 0.24 | 0.24 | 0.24 |
| Texture | RF | 0.44 | 0.33 | 0.37 | 0.35 |
| Texture | KNN | 0.25 | 0.06 | 0.25 | 0.10 |
| Texture | SVM | 0.33 | 0.08 | 0.25 | 0.12 |
| RGB | DT | 0.41 | 0.39 | 0.38 | 0.38 |
| RGB | RF | 0.64 | 0.63 | 0.57 | 0.54 |
| RGB | KNN | 0.40 | 0.29 | 0.34 | 0.31 |
| RGB | SVM | 0.44 | 0.41 | 0.41 | 0.41 |

| Feature | Model | Accuracy | Precision | Recall | F1 |
|---|---|---|---|---|---|
| Texture | DT | 0.74 | 0.74 | 0.74 | 0.74 |
| Texture | RF | 0.92 | 0.92 | 0.92 | 0.92 |
| Texture | KNN | 0.77 | 0.65 | 0.75 | 0.68 |
| Texture | SVM | 0.77 | 0.69 | 0.76 | 0.70 |
| RGB | DT | 0.61 | 0.61 | 0.62 | 0.61 |
| RGB | RF | 0.87 | 0.87 | 0.87 | 0.87 |
| RGB | KNN | 0.70 | 0.69 | 0.70 | 0.69 |
| RGB | SVM | 0.81 | 0.81 | 0.81 | 0.81 |

| Feature | Model | Accuracy | Precision | Recall | F1 |
|---|---|---|---|---|---|
| HF | DT | 0.73 | 0.73 | 0.73 | 0.73 |
| HF | RF | 0.97 | 0.97 | 0.97 | 0.97 |
| HF | KNN | 0.78 | 0.80 | 0.78 | 0.72 |
| HF | SVM | 0.89 | 0.89 | 0.89 | 0.89 |

| Sampling | Model | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|---|
| Original | CNN | 0.58 | 0.19 | 0.22 | 0.20 |
| Original | VGG16 | 0.74 | 0.29 | 0.31 | 0.30 |
| Original | ResNet-50 | 0.68 | 0.25 | 0.29 | 0.27 |
| Augmentation | CNN | 0.58 | 0.19 | 0.22 | 0.20 |
| Augmentation | VGG16 | 0.74 | 0.29 | 0.31 | 0.30 |
| Augmentation | ResNet-50 | 0.68 | 0.25 | 0.29 | 0.27 |
| Oversampling | CNN | 0.73 | 0.74 | 0.73 | 0.71 |
| Oversampling | VGG16 | 0.20 | 0.06 | 0.20 | 0.07 |
| Oversampling | ResNet-50 | 0.20 | 0.04 | 0.20 | 0.06 |

| Model | Accuracy | SD |

|---|---|---|

| DT | 0.76 | ±0.03 |

| KNN | 0.79 | ±0.03 |

| RF | 0.95 | ±0.03 |

| SVM | 0.90 | ±0.02 |
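
The mean accuracy and SD values above come from k-fold cross-validation: the data are split into k folds, each fold serves once as the test set, and the per-fold scores are summarized. The sketch below shows the mechanics only. The value of k, the `evaluate` callable, the per-fold scores, and the use of the population standard deviation are assumptions, since this section does not specify them.

```python
from statistics import mean, pstdev


def k_fold_indices(n_samples: int, k: int):
    """Yield (train_idx, test_idx) pairs for k contiguous, near-equal folds."""
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size


def cross_validate(n_samples: int, k: int, evaluate):
    """Run evaluate(train_idx, test_idx) on each fold; return (mean, SD)."""
    scores = [evaluate(tr, te) for tr, te in k_fold_indices(n_samples, k)]
    return mean(scores), pstdev(scores)


# Hypothetical per-fold accuracies standing in for a real train-and-score step.
fake_scores = iter([0.94, 0.96, 0.95])
mean_acc, sd_acc = cross_validate(10, 3, lambda train, test: next(fake_scores))
```

A real run would shuffle (and usually stratify) the samples before forming folds, and `evaluate` would train a model on `train_idx` and score it on `test_idx`.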

| Epoch | Current Best | Global Best | Runtime | N_estimators | Max_Depth |

|---|---|---|---|---|---|

| Epoch 1 | 0.94 | 0.94 | 691 | 169 | 253 |

| Epoch 2 | 0.94 | 0.94 | 655 | 273 | 110 |

| Best Fitness | 0.94 | | | | |
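
The table above tracks particle swarm optimization (PSO) searching over `n_estimators` and `max_depth`. A minimal PSO loop over the same two hyperparameters is sketched below, as an illustration only and not the setup used in the study. The fitness function is a hypothetical smooth stand-in for validation accuracy, and the swarm size, epoch count, inertia weight, and acceleration coefficients are illustrative defaults. The bounds follow the tuning ranges listed for RF in the hyperparameter table.

```python
import random

BOUNDS = [(50, 500), (2, 500)]  # (n_estimators, max_depth) search ranges


def toy_fitness(position):
    """Hypothetical stand-in for validation accuracy; peaks at (300, 200)."""
    n_estimators, max_depth = position
    return 1.0 - ((n_estimators - 300) / 450) ** 2 - ((max_depth - 200) / 498) ** 2


def pso(fitness, bounds, n_particles=10, epochs=20, w=0.7, c1=1.5, c2=1.5, seed=0):
    """Maximize fitness; return (best position, best fitness, best-per-epoch)."""
    rng = random.Random(seed)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * len(bounds) for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]              # personal best positions
    best_fit = [fitness(p) for p in pos]        # personal best fitness values
    g = max(range(n_particles), key=lambda i: best_fit[i])
    global_pos, global_fit = best_pos[g][:], best_fit[g]
    history = []
    for _ in range(epochs):
        for i in range(n_particles):
            for d, (lo, hi) in enumerate(bounds):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (best_pos[i][d] - pos[i][d])
                             + c2 * r2 * (global_pos[d] - pos[i][d]))
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))  # clamp to bounds
            fit = fitness(pos[i])
            if fit > best_fit[i]:
                best_fit[i], best_pos[i] = fit, pos[i][:]
                if fit > global_fit:
                    global_fit, global_pos = fit, pos[i][:]
        history.append(global_fit)              # global best after each epoch
    return global_pos, global_fit, history


best_position, best_score, per_epoch = pso(toy_fitness, BOUNDS)
```

In a real search the fitness call would train an RF with the rounded position values and return its validation accuracy, which is what makes each epoch expensive.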

| Ref. | Year | Model | Acc. | T (s) |

|---|---|---|---|---|

| [34] | 2021 | CNN | 46% | 1244 |

| [44] | 2021 | MobileNet+CNN | 57% | 601 |

| [12] | 2021 | CNN+RNN | 51% | 9612 |

| [15] | 2022 | CNN | 72% | 1109 |

| This study | 2022 | RF | 97% | 34 |

| Case | t-statistic | p-value | Null hypothesis |
|---|---|---|---|
| HF + SMOTE vs. Original | 12.125 | 0.000 | Rejected |
| HF + SMOTE vs. Augmented | 25.698 | 0.000 | Rejected |
| HF + SMOTE vs. Over-sampled | 16.187 | 0.000 | Rejected |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Rustam, F.; Aslam, N.; De La Torre Díez, I.; Khan, Y.D.; Mazón, J.L.V.; Rodríguez, C.L.; Ashraf, I. White Blood Cell Classification Using Texture and RGB Features of Oversampled Microscopic Images. Healthcare 2022, 10, 2230. https://doi.org/10.3390/healthcare10112230
