Evaluating Measurement Properties of the Adapted Interprofessional Collaboration Scale through Rasch Analysis

Abstract

1. Introduction

- How well do the single items of the IPC scale measure the perception of interprofessional collaboration? (Model fit/misfit)

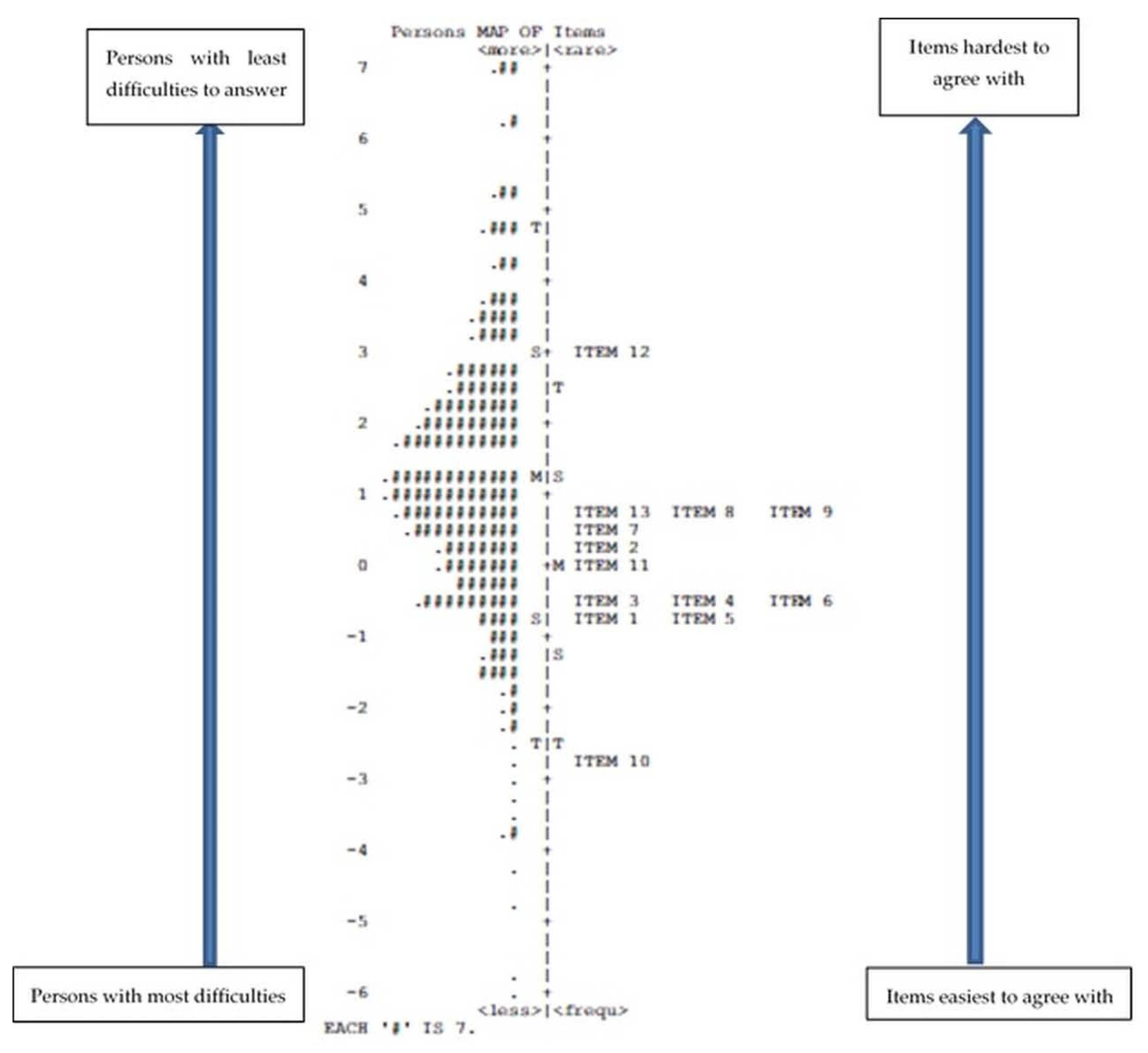

- How is the person–item difficulty for the single items of the IPC scale distributed? (Person–item map or Wright map)

- Are there items particularly critical to measure the construct? (Item polarity)

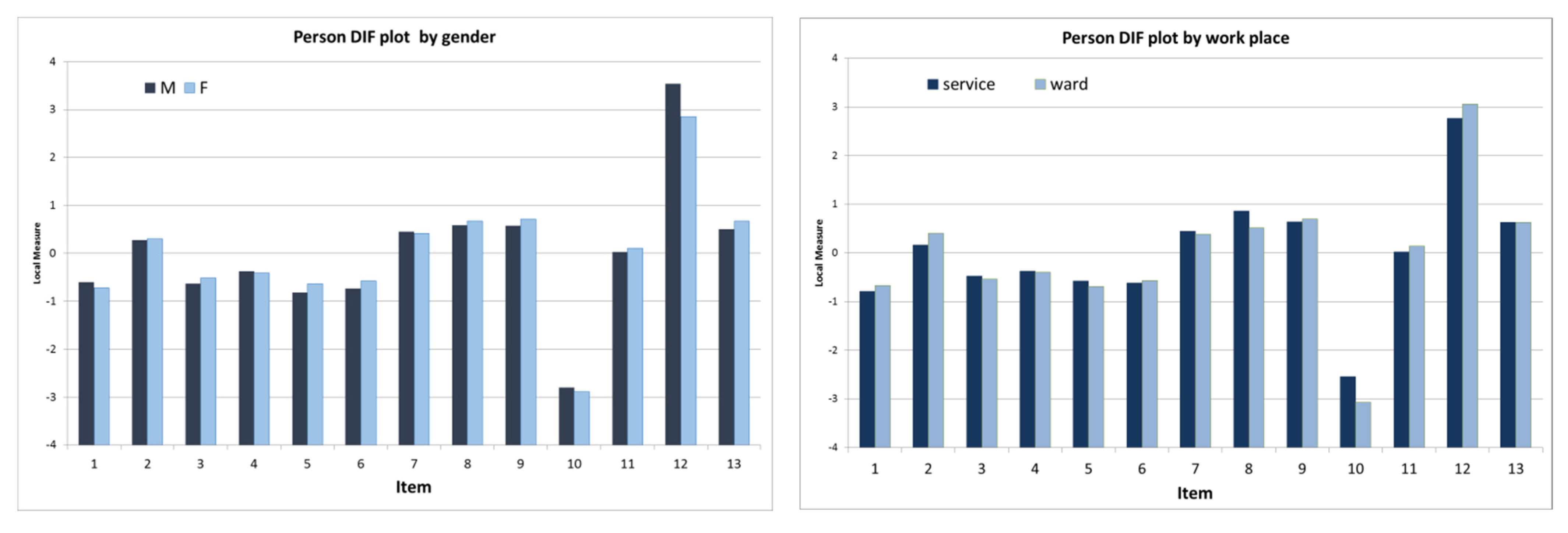

- Does the single-item difficulty relate to the main characteristics of the sample (language version, gender, work experience, age class, and workplace)? (Differential item functioning, hereafter DIF)

- Is the IPC scale able to distinguish between persons with lower and greater collaborative behavior? (Person separation index)

2. Materials and Methods

2.1. Study Design and Sample

2.2. Instrument and Data Collection

2.3. Data Analysis

- INFIT and OUTFIT are the most widely used diagnostic Rasch fit statistics. Both compare the observed responses with the values expected under the model. INFIT is information-weighted and is more diagnostic when item measures are close to the person measures. OUTFIT is unweighted and is more diagnostic when item measures are far from the person measures.

- Observed average and outfit mean square values (MNSQ) were used to identify the compatibility of the data with the Rasch model.

- Uniform differential item functioning (DIF) was used to explore the stability of item difficulty across subgroups of the sample, i.e., item invariance (absence of item bias).

- The reliability of the scale was evaluated by calculating Cronbach’s alpha coefficient and a separation index for persons and for items. The reliability indices, which report how reproducible the person and item measure orders are (i.e., their locations on the continuum), are shown in Table 1, together with the reference values for the previous indices. Item separation index and item reliability are interpreted using the same criteria. According to Rasch guidelines, if the item reliability and separation are below the required values, a larger sample is needed; if the person reliability and separation are below the required values, the test requires more items [20,22].
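To make the difference between the two fit statistics concrete, the following minimal sketch computes INFIT and OUTFIT mean squares for a single item. It assumes the dichotomous Rasch model for simplicity (the IPC scale itself uses polytomous response categories, and the analysis in this study was run in dedicated Rasch software), and the function names `rasch_prob` and `item_fit` are illustrative only.

```python
import math


def rasch_prob(theta, b):
    """Probability of endorsing a dichotomous item under the Rasch model."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def item_fit(responses, thetas, b):
    """Return (infit_mnsq, outfit_mnsq) for one item.

    responses: observed 0/1 answers, one per person
    thetas:    person measures in logits
    b:         item difficulty in logits
    """
    sq_resid_sum = 0.0  # sum of squared raw residuals
    var_sum = 0.0       # sum of model variances (statistical information)
    z2_sum = 0.0        # sum of squared standardized residuals
    for x, theta in zip(responses, thetas):
        p = rasch_prob(theta, b)
        var = p * (1.0 - p)
        resid = x - p
        sq_resid_sum += resid ** 2
        var_sum += var
        z2_sum += resid ** 2 / var
    infit = sq_resid_sum / var_sum     # information-weighted mean square
    outfit = z2_sum / len(responses)   # unweighted mean square
    return infit, outfit


# Hypothetical data: five persons, one item located at 0 logits.
thetas = [-2.0, -1.0, 0.0, 1.0, 2.0]
expected_pattern = [0, 0, 1, 1, 1]    # responses in line with the model
surprising_pattern = [1, 0, 1, 1, 1]  # the lowest person unexpectedly endorses

print(item_fit(expected_pattern, thetas, 0.0))
print(item_fit(surprising_pattern, thetas, 0.0))
```

In the second pattern, the unexpected response comes from a person located far from the item, so OUTFIT rises more sharply than INFIT, which is the behavior described above.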

3. Results

3.1. Sample Characteristics

3.2. Reliability Measures and Separation Indexes

3.3. Item Polarity

3.4. Item Fit

3.5. Wright Map

3.6. Item Difference (DIF)

4. Discussion

Strengths and Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. World Health Organization. Framework for Action on Interprofessional Education & Collaborative Practice; WHO: Geneva, Switzerland, 2010; pp. 1–64.

2. Reeves, S.; Lewin, S.; Espin, S.; Zwarenstein, M. Interprofessional Teamwork in Health and Social Care; Wiley-Blackwell: Oxford, UK, 2010.

3. Schot, E.; Tummers, L.; Noordegraaf, M. Working on working together. A systematic review on how healthcare professionals contribute to interprofessional collaboration. J. Interprof. Care 2019, 34, 332–342.

4. Xyrichis, A.; Reeves, S.; Zwarenstein, M. Examining the nature of interprofessional practice: An initial framework validation and creation of the InterProfessional Activity Classification Tool (InterPACT). J. Interprof. Care 2018, 32, 416–425.

5. Reeves, S.; Xyrichis, A.; Zwarenstein, M. Teamwork, collaboration, coordination, and networking: Why we need to distinguish between different types of interprofessional practice. J. Interprof. Care 2018, 32, 1–3.

6. Walters, S.J.; Stern, C.; Robertson-Malt, S. The measurement of collaboration within healthcare settings: A systematic review of measurement properties of instruments. JBI Database Syst. Rev. Implement. Rep. 2016, 14, 138–197.

7. Kenaszchuk, C.; Reeves, S.; Nicholas, D.; Zwarenstein, M. Validity and reliability of a multiple-group measurement scale for interprofessional collaboration. BMC Health Serv. Res. 2010, 10, 2–15.

8. Peltonen, J.; Leino-Kilpi, H.; Heikkila, H.; Rautava, P.; Tuomela, K.; Siekkinen, M.; Sulosaari, V.; Stolt, M. Instruments measuring interprofessional collaboration in healthcare – a scoping review. J. Interprof. Care 2020, 34, 147–161.

9. Mischo-Kelling, M.; Wieser, H.; Cavada, L.; Lochner, L.; Vittadello, F.; Fink, V.; Reeves, S. The state of interprofessional collaboration in Northern Italy: A mixed methods study. J. Interprof. Care 2015, 29, 79–81.

10. Vittadello, F.; Mischo-Kelling, M.; Wieser, H.; Cavada, L.; Lochner, L.; Naletto, C.; Fink, V.; Reeves, S. A multiple-group measurement scale for interprofessional collaboration: Adaptation and validation into Italian and German languages. J. Interprof. Care 2018, 32, 266–273.

11. Axelsson, M.; Kottorp, A.; Carlson, E.; Gudmundsson, P.; Kumlien, C.; Jakobsson, J. Translation and validation of the Swedish version of the IPECC-SET 9 item version. J. Interprof. Care 2022, 1–9.

12. Kottorp, A.; Keehn, M.; Hasnain, M.; Gruss, V.; Peterson, E. Instrument Refinement for Measuring Self-Efficacy for Competence in Interprofessional Collaborative Practice: Development and Psychometric Analysis of IPECC-SET 27 and IPECC-SET 9. J. Interprof. Care 2019, 33, 47–56.

13. Hasnain, M.; Gruss, V.; Keehn, M.; Peterson, E.; Valenta, A.L.; Kottorp, A. Development and validation of a tool to assess self-efficacy for competence in interprofessional collaborative practice. J. Interprof. Care 2017, 31, 255–262.

14. Wright, B.D.; Linacre, J.M. Observations Are Always Ordinal; Measurements, However, Must Be Interval. Arch. Phys. Med. Rehabil. 1989, 70, 857–860.

15. Granger, C. Rasch Analysis is Important to Understand and Use for Measurement. Rasch Meas. Trans. 2008, 21, 1122–1123.

16. Wright, B. Logits? Rasch Meas. Trans. 1993, 7, 288.

17. Wieser, H.; Mischo-Kelling, M.; Vittadello, F.; Cavada, L.; Lochner, L.; Fink, V.; Naletto, C.; Reeves, S. Perceptions of collaborative relationships between seven different health care professions in Northern Italy. J. Interprof. Care 2019, 33, 133–142.

18. Bond, T.G.; Fox, C.M. Applying the Rasch Model. Fundamental Measurement in the Human Sciences, 3rd ed.; Taylor & Francis: New York, NY, USA, 2015.

19. Rasch, G. Probabilistic Models for Some Intelligence and Attainments Tests; Danish Institute for Educational Research: Copenhagen, Denmark, 1960; Volume I.

20. Wright, B. Solving measurement problems with the Rasch model. J. Educ. Meas. 1977, 14, 97–116.

21. Royal, K.D. Making Meaningful Measurement in Survey: A Demonstration of the Utility of the Rasch Model. IR Appl. 2010, 28, 1–16.

22. Linacre, J. Winsteps Rasch Measurement Computer Program User’s Guide; Winsteps.com: Beaverton, OR, USA, 2012.

23. Linacre, J.M. Bond & FoxSteps; Winsteps.com: Chicago, IL, USA, 2007.

24. George, D.; Mallery, P. SPSS for Windows Step by Step: A Simple Guide and Reference. 11.0 Update; Allyn & Bacon: Boston, MA, USA, 2003.

25. Wright, B.D.; Linacre, J.M.; Gustafson, J.E. Reasonable mean-square fit values. Rasch Meas. Trans. 1994, 8, 370.

26. Boone, W.J. Rasch Analysis for Instrument Development: Why, When, and How? CBE Life Sci. Educ. 2016, 15, 1–7.

27. Umberfield, E.; Ghaferi, A.A.; Krein, S.L.; Manojlovich, M. Using Incident Reports to Assess Communication Failures and Patient Outcomes. Jt. Comm. J. Qual. Patient Saf. 2019, 45, 406–413.

28. Rosenstein, A.H. Disruptive and Unprofessional Behaviors. In Physician Mental Health and Well-Being; Brower, K.J., Riba, M.B., Eds.; Springer International Publishing AG: Berlin/Heidelberg, Germany, 2017; pp. 61–85.

29. Mallinson, T.; Lotrecchiano, G.R.; Schwartz, L.S.; Furniss, J.; Leblanc-Beaudoin, T.; Lazar, D.; Falk-Krzesinski, H.J. Pilot analysis of the Motivation Assessment for Team Readiness, Integration, and Collaboration (MATRICx) using Rasch analysis. J. Investig. Med. 2016, 64, 1186–1193.

30. Anthoine, E.; Delmas, C.; Coutherut, J.; Moret, L. Development and psychometric testing of a scale assessing the sharing of medical information and interprofessional communication: The CSI scale. BMC Health Serv. Res. 2014, 14, 126.

| Criteria | Statistical Index | Reference Values |
|---|---|---|
| Person and item reliability | Person and item separation index (SE) | Separation index (SE) of ≥2.0 and reliability value ≥0.8 [18,22] |
| Reliability of the scale | Cronbach’s alpha coefficient | Internal consistency by Cronbach’s alpha: α ≥ 0.9, excellent; 0.9 > α ≥ 0.8, good; 0.8 > α ≥ 0.7, acceptable; 0.7 > α ≥ 0.6, questionable; 0.6 > α ≥ 0.5, poor; 0.5 > α, unacceptable [24] |
| Item validity | Item polarity | Point measure correlation (PTMea Corr.) >0 [18] |
| Item fit | Item dimensionality | Total mean square INFIT and OUTFIT within 0.5 to 1.5 [25] |
| Item misfit | INFIT and OUTFIT mean square (MNSQ) | All items value ≥2.0 [18] |
| Stability of item difficulty | Uniform differential item functioning (DIF) | (1) DIF contrast >0.5 logits, and (2) significance of the difference (t > 2.0) [22] |
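The reliability criteria in Table 1 can be illustrated with a short sketch. It computes Cronbach’s alpha from a persons-by-items score matrix, labels the result with the bands from [24], and converts a reliability value into a separation index using the standard Rasch relation G = sqrt(R / (1 − R)). That relation is not stated in this article and is included here as background; it shows why a separation of 2.0 is paired with a reliability of 0.8. All function names and the example scores are hypothetical.

```python
import math
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of persons, each a list of item scores."""
    k = len(scores[0])
    item_vars = [variance([row[i] for row in scores]) for i in range(k)]
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1.0 - sum(item_vars) / total_var)


def alpha_label(alpha):
    """Map alpha to the internal-consistency bands listed in Table 1."""
    bands = [
        (0.9, "Excellent"),
        (0.8, "Good"),
        (0.7, "Acceptable"),
        (0.6, "Questionable"),
        (0.5, "Poor"),
    ]
    for cutoff, label in bands:
        if alpha >= cutoff:
            return label
    return "Unacceptable"


def separation_from_reliability(reliability):
    """Separation index G implied by a reliability R: G = sqrt(R / (1 - R))."""
    return math.sqrt(reliability / (1.0 - reliability))


# Hypothetical scores: four persons answering three items on a 1-4 scale.
scores = [[1, 2, 1], [2, 2, 3], [3, 4, 3], [4, 4, 4]]
alpha = cronbach_alpha(scores)
print(round(alpha, 2), alpha_label(alpha))
print(round(separation_from_reliability(0.8), 2))
```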

| Characteristics | Number (n = 1182) | Percentage (%) |
|---|---|---|
| Language | | |
| German | 875 | 74.0 |
| Italian | 307 | 26.0 |
| Gender | | |
| Female | 1030 | 87.1 |
| Male | 138 | 11.7 |
| Age (years) | | |
| 20–29 | 173 | 14.6 |
| 30–39 | 373 | 31.6 |
| 40–49 | 435 | 36.8 |
| 50–59 | 188 | 15.9 |
| >60 | 6 | 0.5 |
| Not answered | 7 | 0.6 |
| Workplace | | |
| Ward | 599 | 50.7 |
| Service | 390 | 33.0 |
| Not answered | 193 | 16.3 |
| Work experience in the profession (years) | Mean: 18.0 | S.D. 9.8 |
| Work experience in the current ward/service (years) | Mean: 10.9 | S.D. 8.5 |

| Item Number | Item Description | PTMea Corr. |
|---|---|---|
| ITEM 10 | Important information is always passed on from us to the other profession. | 0.39 |
| ITEM 12 | The other profession thinks their work is more important than ours. | 0.54 |
| ITEM 1 | We have a good understanding with the other profession about our respective responsibilities. | 0.66 |
| ITEM 4 | The other profession and us share similar ideas about how to care for patients. | 0.73 |
| ITEM 13 | The other profession is willing to discuss their new practices with us. | 0.73 |
| ITEM 9 | The other profession is anticipating when we will need their help. | 0.74 |
| ITEM 3 | I feel that patient care is adequately discussed between us and the other profession. | 0.74 |
| ITEM 11 | Disagreement with the other profession is often resolved. | 0.74 |
| ITEM 2 | The other profession is usually willing to take into account the convenience for us when planning their work. | 0.75 |
| ITEM 8 | The other profession does usually ask for our opinions. | 0.76 |
| ITEM 5 | The other profession is willing to discuss clinical issues with us. | 0.76 |
| ITEM 6 | The other profession cooperates with the way we organize patient care. | 0.76 |
| ITEM 7 | The other profession is willing to cooperate with us concerning new practices. | 0.78 |

| Entry Number | Item Logit Measure | Model S.E.M. | INFIT MNSQ | INFIT ZSTD | OUTFIT MNSQ | OUTFIT ZSTD | EXACT MATCH OBS% | EXACT MATCH EXP% |
|---|---|---|---|---|---|---|---|---|
| ITEM 10 | −2.87 | 0.07 | 1.83 | 9.9 | 2.40 | 9.9 | 60.5 | 74.7 |
| ITEM 12 | 2.93 | 0.05 | 1.80 | 9.9 | 3.21 | 9.9 | 52.4 | 61.4 |
| ITEM 1 | −0.70 | 0.06 | 0.93 | −1.5 | 1.02 | 0.4 | 71.6 | 68.4 |
| ITEM 4 | −0.41 | 0.06 | 0.75 | −6.3 | 0.76 | −5.2 | 74.2 | 67.7 |
| ITEM 13 | 0.64 | 0.05 | 0.94 | −1.5 | 0.92 | −1.7 | 70.0 | 64.7 |
| ITEM 9 | 0.69 | 0.05 | 0.90 | −2.3 | 0.91 | −2.0 | 65.8 | 64.6 |
| ITEM 3 | −0.51 | 0.06 | 0.90 | −2.4 | 0.88 | −2.5 | 69.8 | 68.1 |
| ITEM 11 | 0.09 | 0.05 | 0.88 | −2.7 | 0.88 | −2.6 | 68.9 | 66.6 |
| ITEM 2 | 0.30 | 0.05 | 0.81 | −4.6 | 0.81 | −4.4 | 73.1 | 65.8 |
| ITEM 8 | 0.67 | 0.05 | 0.91 | −2.1 | 0.90 | −2.2 | 64.5 | 64.7 |
| ITEM 5 | −0.66 | 0.06 | 0.95 | −1.1 | 0.89 | −2.3 | 68.6 | 68.3 |
| ITEM 6 | −0.59 | 0.06 | 0.71 | −7.4 | 0.67 | −7.4 | 76.8 | 68.1 |
| ITEM 7 | 0.41 | 0.05 | 0.68 | −8.4 | 0.66 | −8.3 | 74.3 | 65.7 |
| MEAN | 0.00 | 0.06 | 1.00 | −1.6 | 1.15 | −1.4 | 68.5 | 66.8 |
| S.D. | 1.24 | 0.00 | 0.36 | 5.4 | 0.73 | 5.3 | 6.3 | 3.0 |
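As a worked illustration of the item fit criterion (INFIT and OUTFIT MNSQ within 0.5 to 1.5 [25]), the sketch below applies that range to the mean-square values reported in the item fit table above. The dictionary `ITEM_FIT` and the helper `flag_misfit` are hypothetical names introduced only for this example; the numbers are copied from the table.

```python
# (INFIT MNSQ, OUTFIT MNSQ) per item, copied from the item fit table above.
ITEM_FIT = {
    "ITEM 10": (1.83, 2.40),
    "ITEM 12": (1.80, 3.21),
    "ITEM 1": (0.93, 1.02),
    "ITEM 4": (0.75, 0.76),
    "ITEM 13": (0.94, 0.92),
    "ITEM 9": (0.90, 0.91),
    "ITEM 3": (0.90, 0.88),
    "ITEM 11": (0.88, 0.88),
    "ITEM 2": (0.81, 0.81),
    "ITEM 8": (0.91, 0.90),
    "ITEM 5": (0.95, 0.89),
    "ITEM 6": (0.71, 0.67),
    "ITEM 7": (0.68, 0.66),
}


def flag_misfit(item_fit, low=0.5, high=1.5):
    """Return the items whose INFIT or OUTFIT MNSQ lies outside [low, high]."""
    return [
        name
        for name, (infit, outfit) in item_fit.items()
        if not (low <= infit <= high and low <= outfit <= high)
    ]


flagged = flag_misfit(ITEM_FIT)
print(flagged)
```

With the tabulated values, only ITEM 10 and ITEM 12 fall outside the 0.5 to 1.5 range; these are also the only two items with an OUTFIT MNSQ of 2.0 or more.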

| Differential Item Functioning | IPC Scale |
|---|---|
| Gender | Item 12: higher agreement for males (0.69, p = 0.0015) |
| Language | No DIF |
| Work experience | No DIF |
| Workplace | No DIF |
| Age range | Item 8: higher agreement for nurses >50 vs. nurses 20–29 (0.56, p = 0.0151) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wieser, H.; Mischo-Kelling, M.; Cavada, L.; Lochner, L.; Fink, V.; Naletto, C.; Vittadello, F. Evaluating Measurement Properties of the Adapted Interprofessional Collaboration Scale through Rasch Analysis. Healthcare 2022, 10, 2007. https://doi.org/10.3390/healthcare10102007
