Multi-Task Learning-Based Traffic Flow Prediction Through Highway Toll Stations During Holidays

Abstract

1. Introduction

- (1) This study employs a multi-task learning method to jointly predict inbound and outbound highway traffic flow. Compared with forecasting inbound and outbound flow at highway toll stations separately, the multi-task learning framework lets the two tasks share their underlying spatiotemporal patterns, which helps the model capture their latent feature representations and improves overall prediction performance.

- (2)

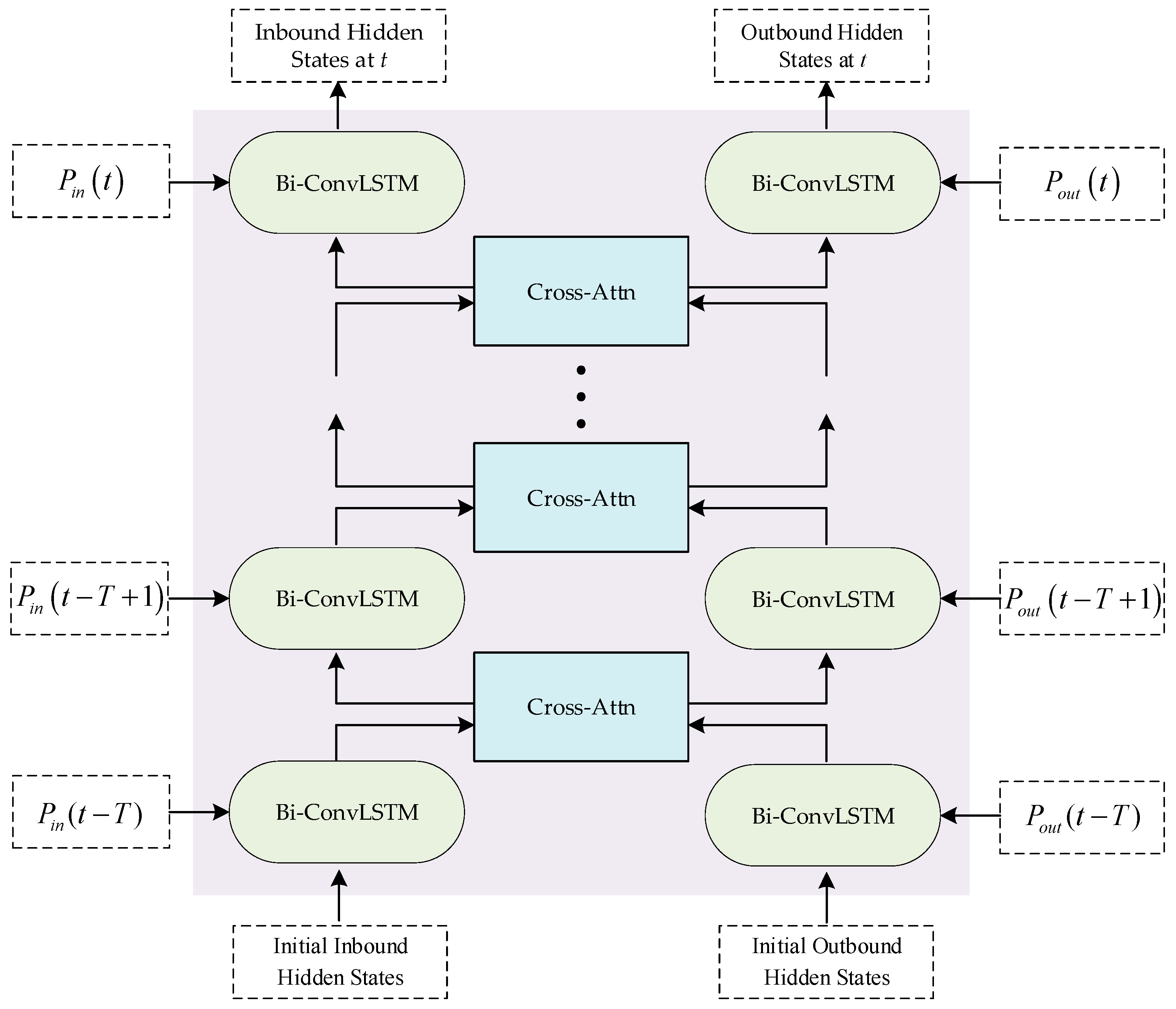

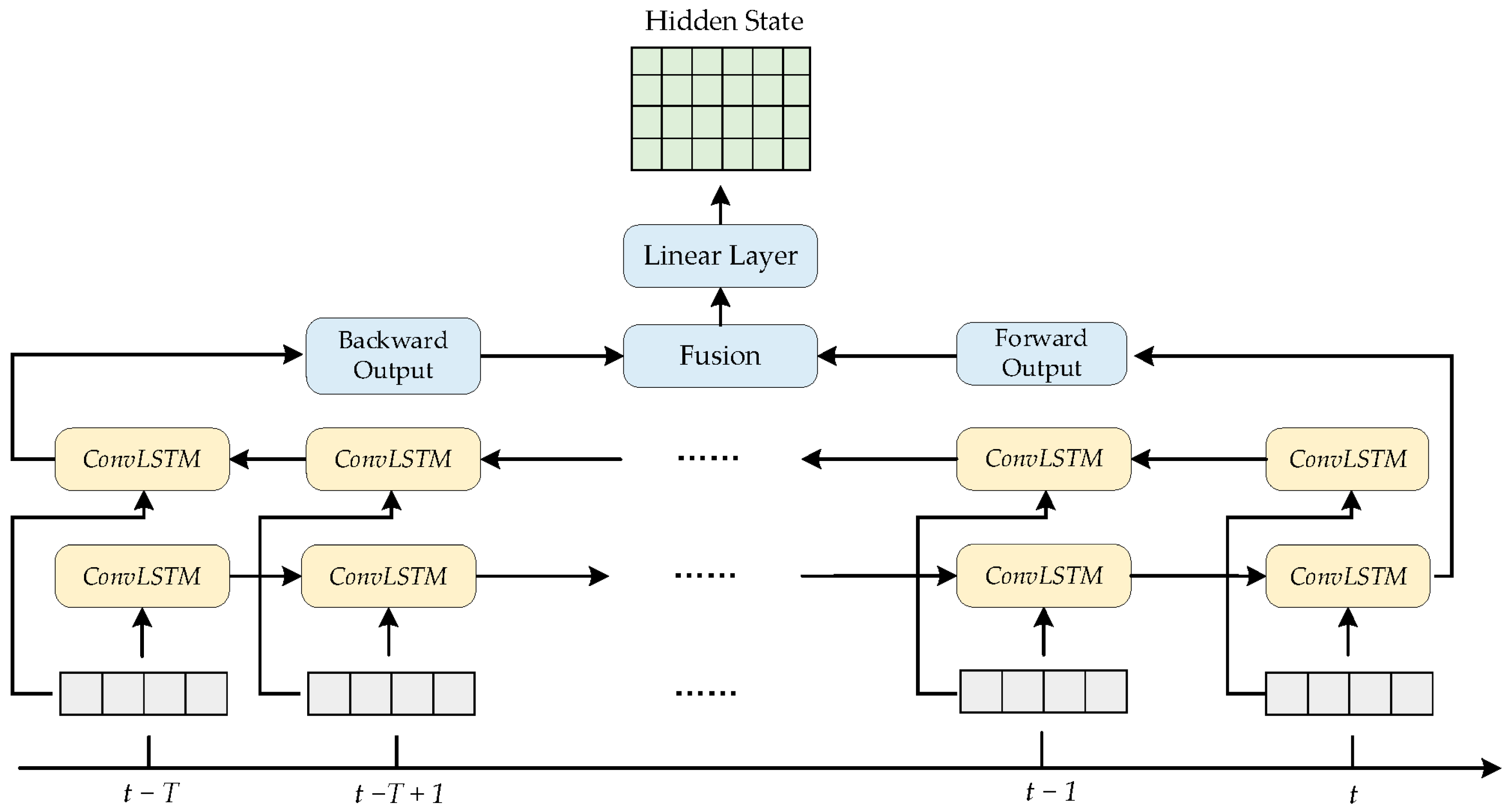

- This study proposes a novel spatial–temporal cross-attention network (ST-Cross-Attn) based on the bidirectional ConvLSTM (Bi-ConvLSTM) and the cross-attention mechanism (Cross-Attn). The proposed model not only captures the spatial–temporal dependencies of inbound and outbound flow, but also facilitates the interaction between their underlying spatial–temporal features.

- (3)

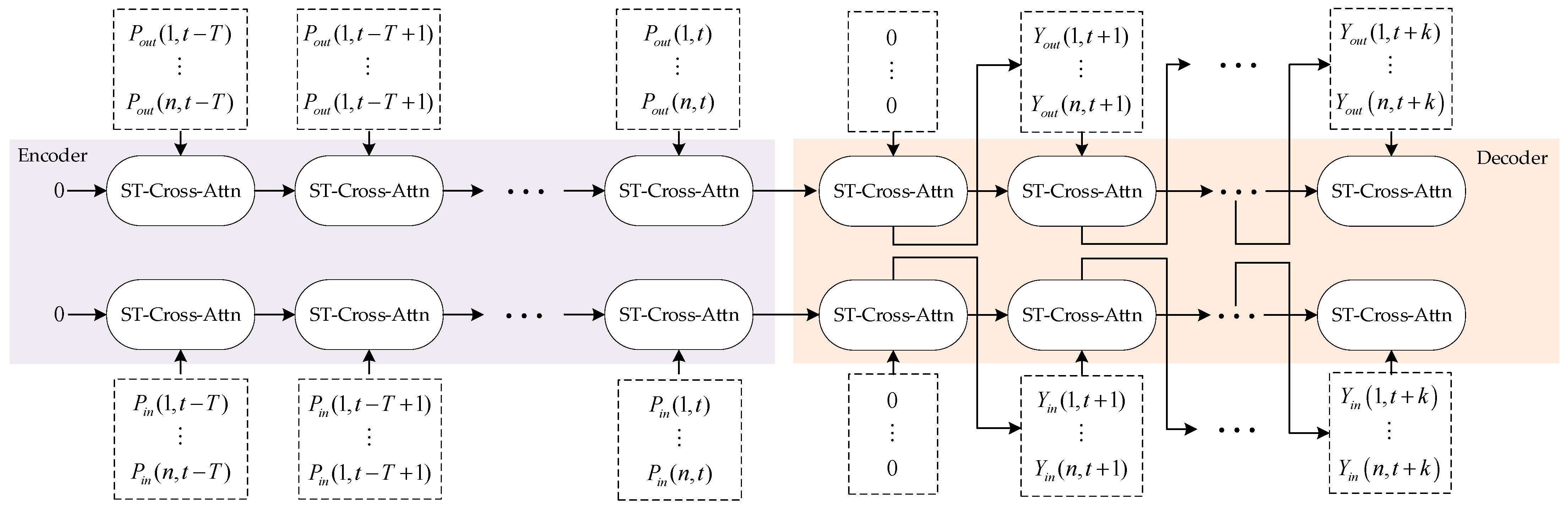

- This study employs a sequence-to-sequence (Seq2Seq) multi-step prediction framework, in which the encoder progressively captures the hidden spatiotemporal features of inbound and outbound flow at the current time step, while the decoder iteratively generates multi-step prediction results. Extensive experiments on a real-world highway traffic flow dataset demonstrate that the proposed model outperforms state-of-the-art baselines in multi-step prediction of inbound and outbound flows at toll stations during holidays. The results of the ablation studies further demonstrate the effectiveness of the multi-task learning framework in predicting highway inbound and outbound traffic flows.
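Contribution (1) rests on training the inbound and outbound tasks with a single objective. The section does not give the loss, so the sketch below assumes a common choice: a weighted sum of per-task mean squared errors. The weight pairs [0.5, 0.5], [0.4, 0.6] and [0.3, 0.7] in the weight-coefficient table fit this form, and [0.4, 0.6] gives the lowest errors there. The use of MSE and the function names `mse` and `multitask_loss` are hypothetical.

```python
from typing import Sequence


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error between two equal-length, non-empty sequences."""
    if len(pred) != len(target) or len(pred) == 0:
        raise ValueError("pred and target must be non-empty and of equal length")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def multitask_loss(
    pred_in: Sequence[float],
    true_in: Sequence[float],
    pred_out: Sequence[float],
    true_out: Sequence[float],
    w_in: float = 0.4,
    w_out: float = 0.6,
) -> float:
    """Assumed joint objective: w_in * L_inbound + w_out * L_outbound."""
    return w_in * mse(pred_in, true_in) + w_out * mse(pred_out, true_out)


# Toy example: inbound MSE = 2.0, outbound MSE = 1.0.
loss = multitask_loss([1.0, 2.0], [1.0, 4.0], [3.0, 3.0], [2.0, 4.0])
print(round(loss, 6))  # 1.4
```

Because one backward pass flows through both terms, the shared layers receive gradients from both tasks. This is the mechanism by which such a setup lets the inbound and outbound predictions share patterns.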

2. Preliminaries

3. Methodology

3.1. The Inbound/Outbound Flow Branch
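Contribution (2) names bidirectional ConvLSTM (Bi-ConvLSTM) as a building block of ST-Cross-Attn. Apart from its convolutional gates, the bidirectional part of this design unrolls one recurrent cell forward in time and another backward, then pairs their states at each step. The sketch below shows only that idea. It replaces the ConvLSTM cell with a hypothetical scalar tanh cell (`make_toy_cell`), so it is not the paper's branch.

```python
import math
from typing import Callable, List, Sequence, Tuple

# A recurrent cell maps (input x_t, previous state h_{t-1}) to the new state h_t.
Cell = Callable[[float, float], float]


def make_toy_cell(w_x: float, w_h: float) -> Cell:
    """Hypothetical scalar cell h_t = tanh(w_x * x_t + w_h * h_{t-1}); a ConvLSTM
    cell would instead apply gated convolutions to 2-D feature maps."""
    def cell(x: float, h: float) -> float:
        return math.tanh(w_x * x + w_h * h)
    return cell


def unroll(cell: Cell, xs: Sequence[float]) -> List[float]:
    """Run the cell over xs from a zero initial state, returning every state."""
    h, states = 0.0, []
    for x in xs:
        h = cell(x, h)
        states.append(h)
    return states


def bidirectional(fwd: Cell, bwd: Cell, xs: Sequence[float]) -> List[Tuple[float, float]]:
    """Pair the forward state at step t with the backward state at step t."""
    forward = unroll(fwd, xs)
    # Run over the reversed sequence, then flip back so index t lines up again.
    backward = unroll(bwd, list(reversed(xs)))[::-1]
    return list(zip(forward, backward))


states = bidirectional(make_toy_cell(1.0, 1.0), make_toy_cell(1.0, 1.0), [1.0, 0.0, 0.0])
# At t = 0 the backward state has already seen the later inputs (here all zeros),
# while at t = 2 only the forward state still carries information about x_0 = 1.
```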

3.2. The Cross-Attention Branch
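Contribution (2) describes a cross-attention mechanism that lets inbound and outbound features interact. A standard formulation, assumed here, is scaled dot-product attention in which the queries come from one stream and the keys and values come from the other. Running it in both directions gives each stream a view of the other. This pure-Python sketch omits the learned projection matrices and multiple heads, and its function and variable names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]  # rows = time steps (or locations), columns = features


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    top = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - top) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: Matrix, context: Matrix) -> Matrix:
    """softmax(Q K^T / sqrt(d)) V, with Q taken from one stream and K = V from the other.

    Learned projections W_Q, W_K, W_V and multiple heads are omitted for brevity.
    """
    d = len(queries[0])
    scores = matmul(queries, transpose(context))  # shape: len(queries) x len(context)
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return matmul(weights, context)  # each output row is a convex mix of context rows


# Hypothetical features: 2 inbound time steps attend over 2 outbound time steps.
inbound = [[4.0, 0.0], [0.0, 4.0]]
outbound = [[1.0, 0.0], [0.0, 1.0]]
in_attends_out = cross_attention(inbound, outbound)   # inbound queries outbound
out_attends_in = cross_attention(outbound, inbound)   # and vice versa
```

The attention weights (`weights` inside `cross_attention`) are the quantities one would inspect when analysing how strongly each inbound step relies on each outbound step.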

3.3. Sequence-to-Sequence (Seq2Seq) Prediction Framework
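Contribution (3) states that the encoder summarizes the observed flow and the decoder generates the multi-step forecast iteratively. The control flow of that loop, in which each prediction is fed back as the next decoder input until the horizon (24, 48 or 72 steps in the result tables) is reached, can be sketched as follows. The exponential-smoothing encoder and the `toy_step` decoder are hypothetical stand-ins for the learned networks.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical one-step decoder: (state, previous prediction) -> (new state, next prediction).
Step = Callable[[float, float], Tuple[float, float]]


def encode(history: Sequence[float], alpha: float = 0.5) -> float:
    """Toy encoder: exponentially smooth the observed history into a single state."""
    state = history[0]
    for x in history[1:]:
        state = alpha * x + (1.0 - alpha) * state
    return state


def decode(state: float, last_obs: float, step: Step, horizon: int) -> List[float]:
    """Iterative multi-step decoding: each prediction is fed back as the next input."""
    preds: List[float] = []
    prev = last_obs
    for _ in range(horizon):
        state, prev = step(state, prev)
        preds.append(prev)
    return preds


def toy_step(state: float, prev: float) -> Tuple[float, float]:
    """Hypothetical decoder step: keep the state, predict the mean of state and last output."""
    return state, 0.5 * state + 0.5 * prev


history = [0.0, 4.0]
forecast = decode(encode(history), history[-1], toy_step, horizon=3)
print(forecast)  # [3.0, 2.5, 2.25]
```

Because later steps consume earlier predictions, errors can accumulate along the horizon. This is one plausible reason why errors in the result tables generally grow from the 1-day to the 3-day horizon.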

4. Evaluation

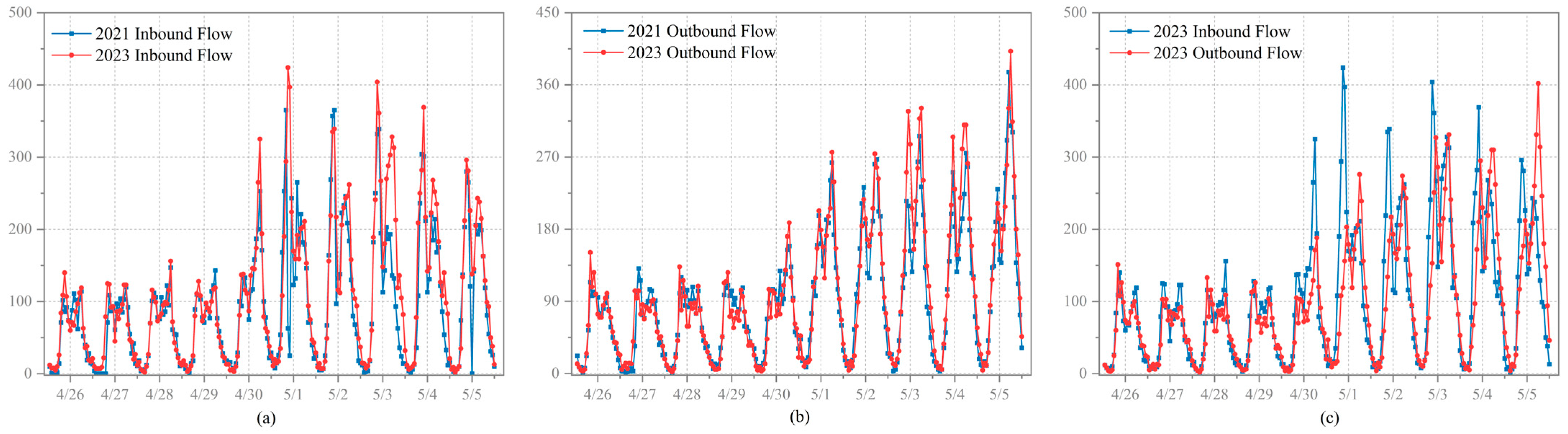

4.1. Dataset

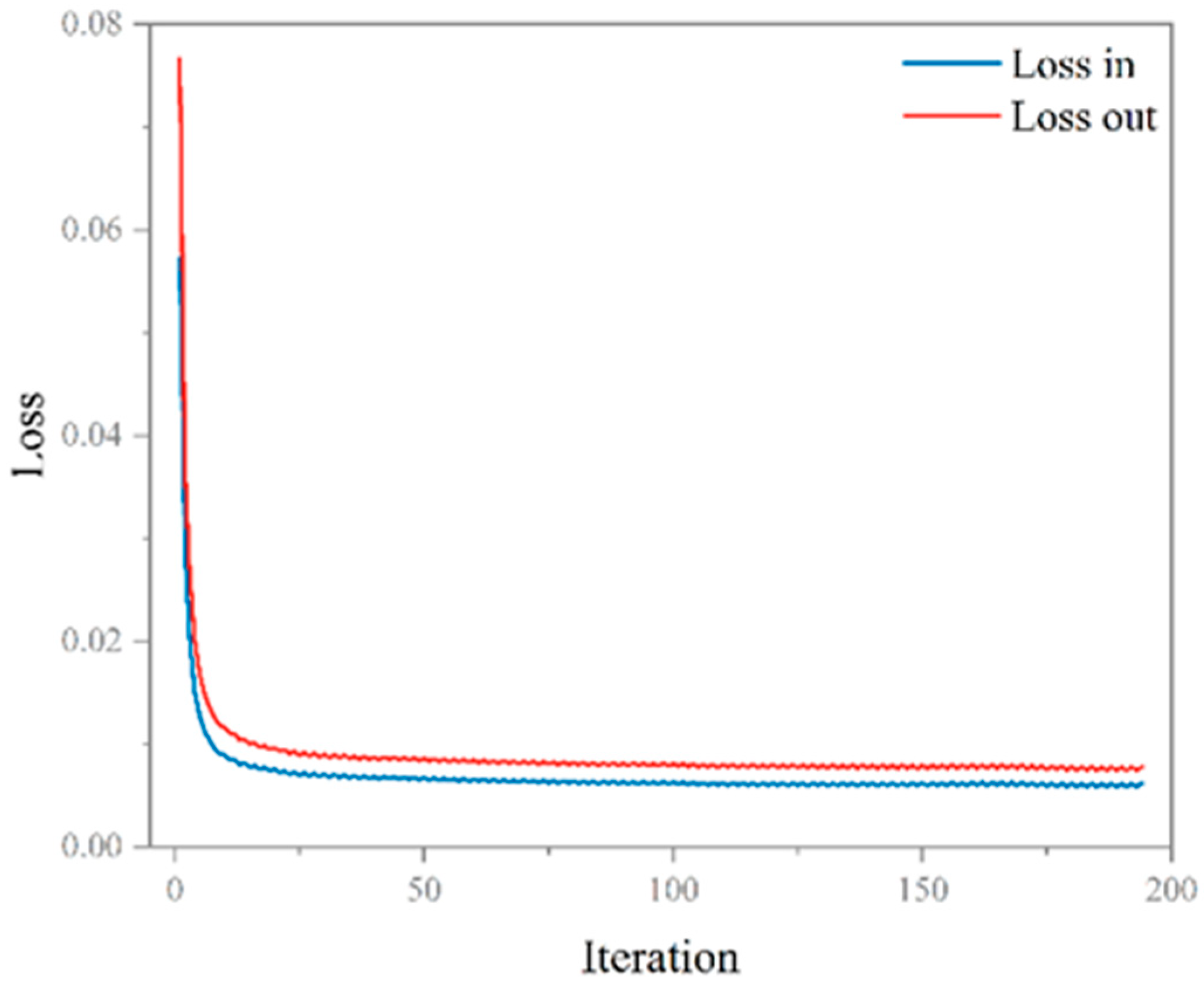

4.2. Model Settings and Evaluation Metrics
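All result tables report RMSE, MAE and WMAPE. Their formulas are not reproduced in this section, so the sketch below uses the standard definitions, assumed rather than taken from the source: WMAPE is the total absolute error divided by the total absolute observed flow. The weight-coefficient table reports WMAPE as a fraction (e.g. 0.2796), while the comparison tables report it as a percentage (27.96%). The sample counts are hypothetical.

```python
import math
from typing import Sequence


def _validate(y_true: Sequence[float], y_pred: Sequence[float]) -> None:
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    _validate(y_true, y_pred)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    _validate(y_true, y_pred)
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def wmape(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Total absolute error divided by total absolute flow (a fraction; x100 for %)."""
    _validate(y_true, y_pred)
    denom = sum(abs(t) for t in y_true)
    if denom == 0:
        raise ValueError("WMAPE is undefined when all true values are zero")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / denom


flows = [100.0, 200.0, 300.0]  # hypothetical toll-station counts
preds = [110.0, 190.0, 330.0]
print(round(rmse(flows, preds), 2), round(mae(flows, preds), 2), round(wmape(flows, preds), 4))
# 19.15 16.67 0.0833
```

Unlike per-sample MAPE, WMAPE does not blow up on near-zero counts (e.g. night hours), because it normalizes by the total flow rather than by each observation.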

4.3. Baselines

- (1) SVR (Support Vector Regression): As a classical machine learning model, SVR has been widely applied in traffic forecasting [35]; it applies support vector machine techniques to regression on time series data. In this experiment, the radial basis function (RBF) kernel is used, with the error tolerance set to ε = 0.005 and the regularization parameter set to C = 3.

- (2) XGBoost (eXtreme Gradient Boosting) [36]: XGBoost is an efficient implementation of the gradient boosting decision tree algorithm, which combines multiple weak learners into a strong learner to improve overall performance. In recent years, it has been widely applied in traffic forecasting. In this study, the 'gbtree' booster is used, with a maximum tree depth of 6 and a learning rate of 0.1.

- (3) BPNN (Back Propagation Neural Network): As one of the most classic neural networks, BPNN has been shown to effectively capture the nonlinear characteristics of traffic flow [37]. The BPNN model in this study consists of two fully connected layers with 1024 and 512 neurons, respectively, and is trained with the Adam optimizer at a learning rate of 0.0005.

- (4) CNN (Convolutional Neural Network): CNNs perform well at capturing spatial correlations within data [38], and their convolutional kernels can capture the spatial dependencies between features in time series data. In this study, a convolutional layer with a 3 × 3 kernel first models the spatial features, followed by a fully connected layer for prediction, with ReLU as the activation function.

- (5) LSTM (Long Short-Term Memory): LSTM networks address the long-term dependency problem of conventional recurrent neural networks (RNNs), enabling them to capture longer-term trends in time series data, which gives them certain advantages in traffic flow forecasting [39]. In this study, the model consists of one LSTM layer with four hidden layers and one fully connected layer, with ReLU as the activation function.

- (6) GRU (Gated Recurrent Unit): GRUs are a type of RNN that mitigates the long-term dependency problem and the vanishing gradient problem during backpropagation, and they have been widely applied in time series processing [40]. Compared with LSTMs, GRUs have a simpler structure, which speeds up training while maintaining good performance. The model consists of three hidden layers with 128 neurons each, followed by one fully connected layer.

- (7) ST-ResNet (Spatial–Temporal Residual Network) [7]: This network uses residual convolutional units to model the spatiotemporal dependencies of traffic flow, with three branches that learn the recent, periodic, and trend-related temporal features of traffic flow. In this study, a residual convolutional layer with a 3 × 3 kernel learns the spatiotemporal features, and a fully connected layer predicts future traffic flow.

- (8) Transformer: Proposed in 2017 for natural language processing (NLP) [13], the Transformer captures correlations between any two positions in a sequence regardless of their distance, and it has since been widely applied to time series processing. In this study, the Transformer has two encoder layers and two decoder layers, four attention heads, and a feature size of d_model = 256. Its output is passed to a fully connected layer for prediction.

- (9) STMTL (Spatial–Temporal Multi-Task Learning) [25]: This model uses a multi-graph channel attention network to capture both dynamic and static topological relationships in traffic flow, a time-encoding gated recurrent unit (TE-GRU) to model the distinct fluctuations during holidays, and an attention block to capture the interaction between inflow and outflow. In this study, a dynamic similarity graph is constructed from the inflow and outflow data, and the static adjacency graph is not used.
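The SVR baseline above is specified only by its RBF kernel, ε = 0.005 and C = 3. The sketch below shows what these settings control in the standard ε-SVR primal formulation. ε sets the width of a tube in which errors cost nothing. C trades that loss off against the norm of the weight vector. The RBF kernel measures similarity between input windows. The functions are illustrative and do not train a model, and the kernel width `gamma` is not given in the section, so it is left as a free parameter.

```python
import math
from typing import Sequence, Tuple


def rbf_kernel(x: Sequence[float], z: Sequence[float], gamma: float) -> float:
    """Radial basis function kernel K(x, z) = exp(-gamma * ||x - z||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))


def eps_insensitive(y_true: float, y_pred: float, epsilon: float = 0.005) -> float:
    """Errors inside the epsilon tube cost nothing; larger errors cost |error| - epsilon."""
    return max(0.0, abs(y_true - y_pred) - epsilon)


def svr_primal_objective(
    w_norm_sq: float,
    pairs: Sequence[Tuple[float, float]],
    C: float = 3.0,
    epsilon: float = 0.005,
) -> float:
    """0.5 * ||w||^2 + C * sum of epsilon-insensitive losses over (y_true, y_pred) pairs."""
    return 0.5 * w_norm_sq + C * sum(eps_insensitive(t, p, epsilon) for t, p in pairs)
```

For reference, scikit-learn's `sklearn.svm.SVR(kernel="rbf", C=3, epsilon=0.005)` expresses the same configuration. The section does not state which library was used. A tolerance as small as 0.005 only matters relative to the scale of the targets, so the effective tube width depends on how the flow data were normalized.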

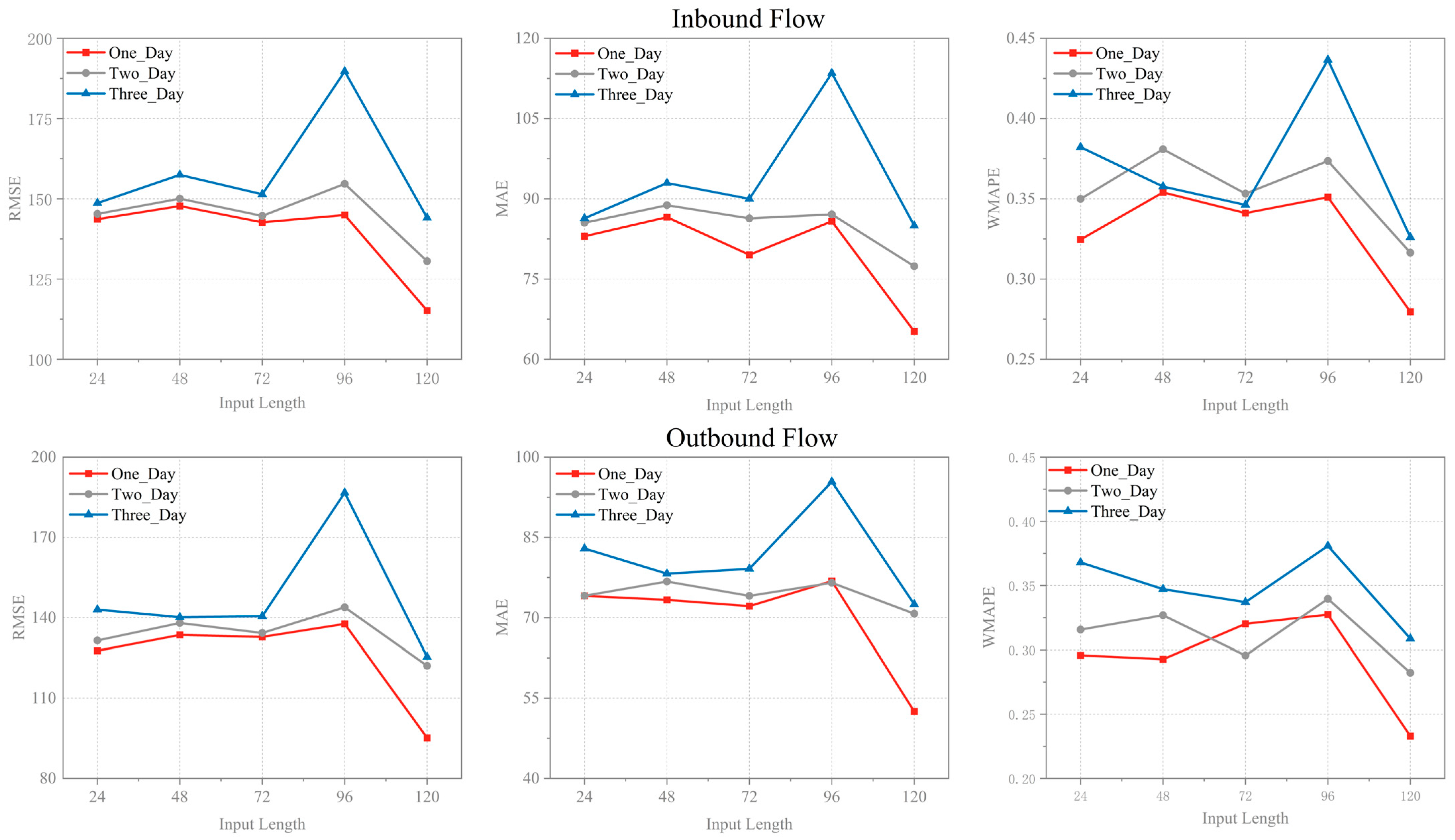

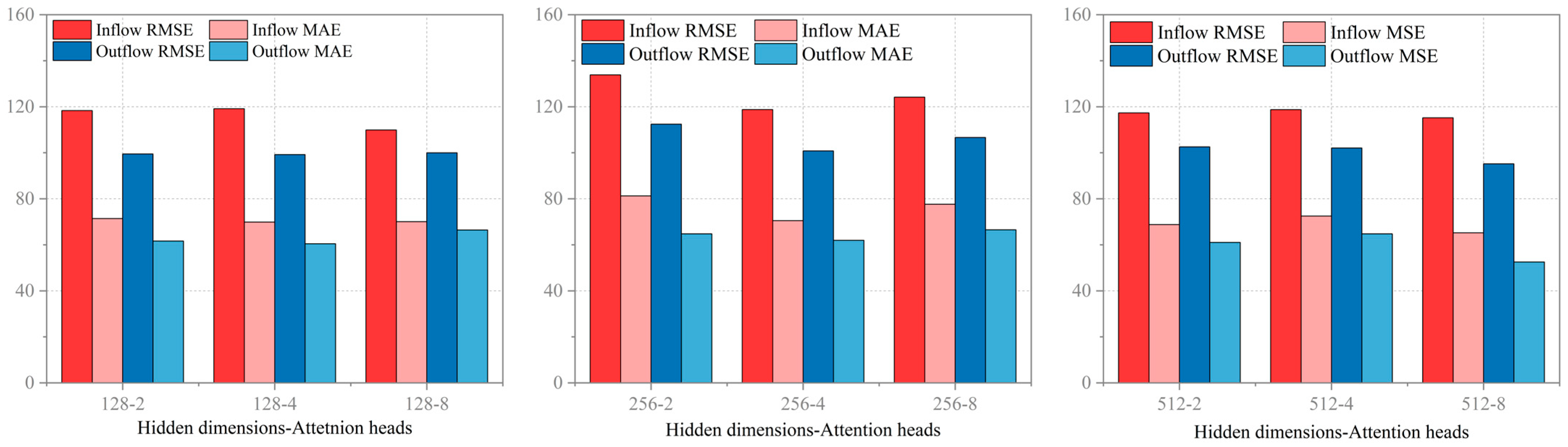

4.4. Study of Hyperparameters

4.5. Network-Wide Performance Comparison

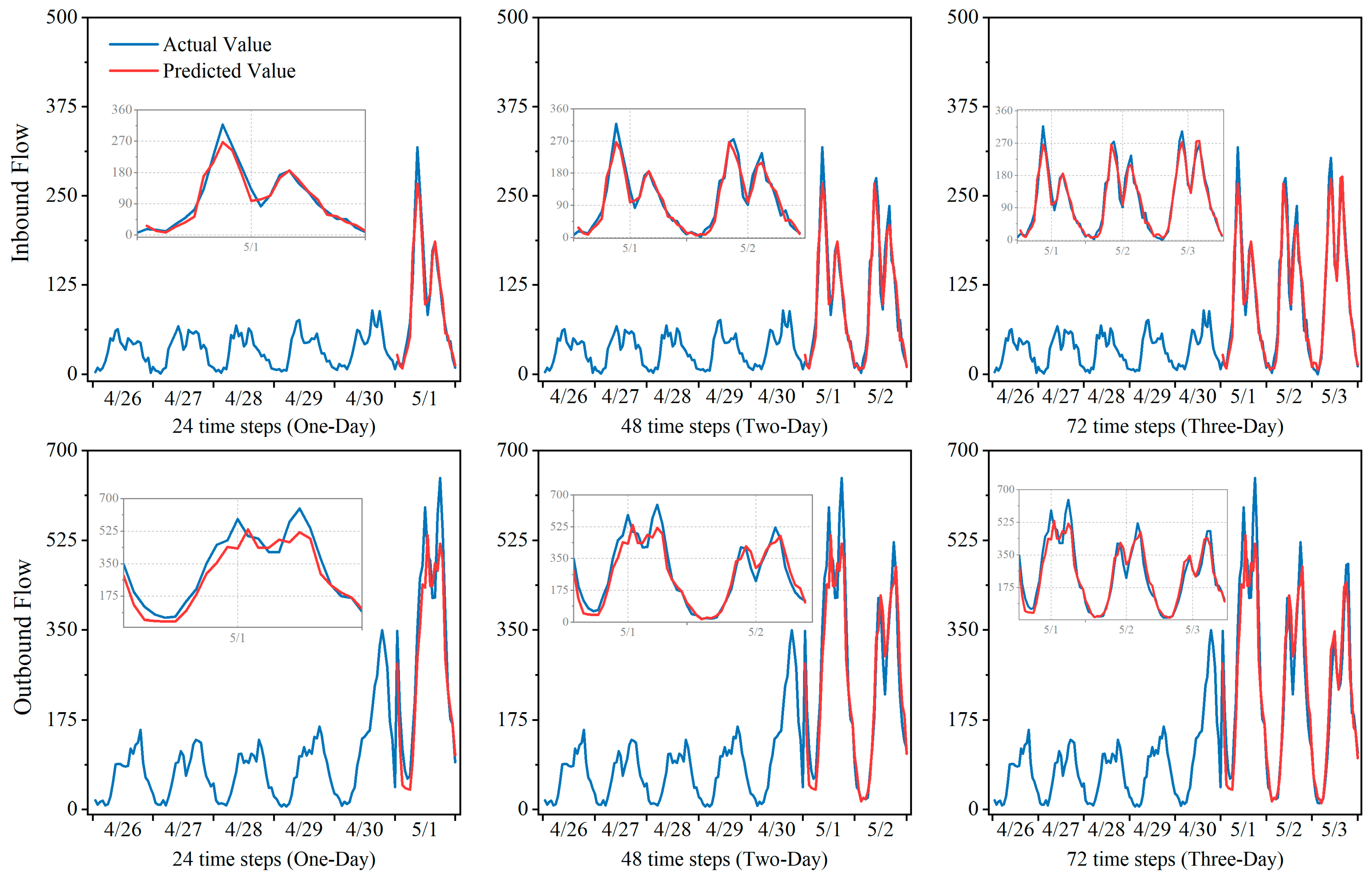

4.6. Prediction Performance of Individual Toll Stations

4.7. Prediction Performance of Ablation Studies

4.8. Prediction Performance of Multi-Task Learning vs. Single-Task Learning

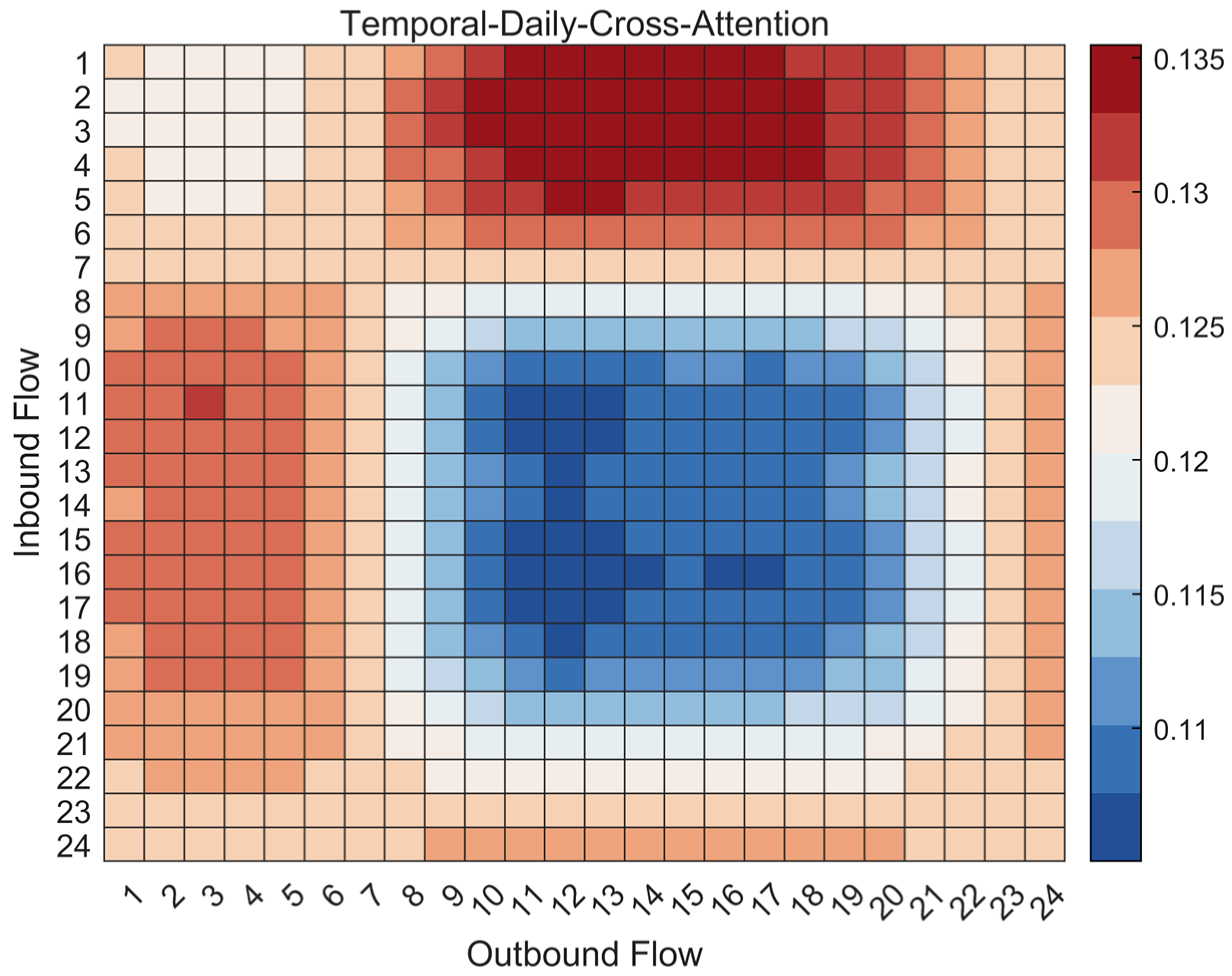

4.9. Analysis of Interactive Attention Between Inbound and Outbound Traffic Flows

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Tian, J.; Zeng, J.; Ding, F.; Xu, J.; Jiang, Y.; Zhou, C.; Li, Y.; Wang, X. Highway traffic flow forecasting based on spatiotemporal relationship. Chin. J. Eng. 2024, 46, 1623–1629.

- Feng, T. Research on Prediction of Freeway Traffic Flow in Holidays Based on EMD and GS-SVM. Master’s Thesis, Chang’an University, Xi’an, China, 2016.

- Zhang, J.; Mao, S.; Yang, L.; Ma, W.; Li, S.; Gao, Z. Physics-informed deep learning for traffic state estimation based on the traffic flow model and computational graph method. Inf. Fusion 2024, 101, 101971.

- Zhang, J.; Zhang, S.; Zhao, H.; Yang, Y.; Liang, M. Multi-frequency spatial-temporal graph neural network for short-term metro OD demand prediction during public health emergencies. Transportation 2025, 1–23.

- Zhou, Z.; Huang, Q.; Wang, B.; Hou, J.; Yang, K.; Liang, Y.; Zheng, Y.; Wang, Y. Coms2t: A complementary spatiotemporal learning system for data-adaptive model evolution. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 1–18.

- Ma, Z.; Xing, J.; Mesbah, M.; Ferreira, L. Predicting short-term bus passenger demand using a pattern hybrid approach. Transp. Res. Part C Emerg. Technol. 2014, 39, 148–163.

- Zhang, J.; Zheng, Y.; Qi, D. Deep spatio-temporal residual networks for citywide crowd flows prediction. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Volume 31.

- Li, Y.; Yu, R.; Shahabi, C.; Liu, Y. Diffusion convolutional recurrent neural network: Data-driven traffic forecasting. In Proceedings of the International Conference on Learning Representations (ICLR), Vancouver, BC, Canada, 30 April–3 May 2018.

- Zhao, L.; Song, Y.; Zhang, C.; Liu, Y.; Wang, P.; Lin, T.; Deng, M.; Li, H. T-GCN: A temporal graph convolutional network for traffic prediction. IEEE Trans. Intell. Transp. Syst. 2020, 21, 3848–3858.

- Zhang, J.; Chen, F.; Guo, Y.; Li, X. Multi-graph convolutional network for short-term passenger flow forecasting in urban rail transit. IET Intell. Transp. Syst. 2020, 14, 1210–1217.

- Wu, Z.; Pan, S.; Long, G.; Jiang, J.; Zhang, C. Graph wavenet for deep spatial-temporal graph modeling. In Proceedings of the 28th International Joint Conference on Artificial Intelligence, Macao, China, 10–16 August 2019; pp. 1907–1913.

- Zhang, S.; Zhang, J.; Yang, L.; Wang, C.; Gao, Z. COV-STFormer for short-term passenger flow prediction during COVID-19 in urban rail transit systems. IEEE Trans. Intell. Transp. Syst. 2024, 25, 3793–3811.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.

- Jiang, J.; Han, C.; Zhao, W.; Wang, J. Pdformer: Propagation delay-aware dynamic long-range Transformer for traffic flow prediction. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 4365–4373.

- Reza, S.; Ferreira, M.; Machado, J.; Tavares, J. A multi-head attention-based Transformer model for traffic flow forecasting with a comparative analysis to recurrent neural networks. Expert Syst. Appl. 2022, 202, 117275.

- Zhang, J.; Mao, S.; Zhang, S.; Yin, J.; Yang, L.; Gao, Z. EF-former for short-term passenger flow prediction during large-scale events in urban rail transit systems. Inf. Fusion 2025, 117, 102916.

- Yan, H.; Ma, X.; Pu, Z. Learning dynamic and hierarchical traffic spatiotemporal features with Transformer. IEEE Trans. Intell. Transp. Syst. 2021, 23, 22386–22399.

- Xu, M.; Dai, W.; Liu, C.; Gao, X.; Lin, W.; Qi, G.; Xiong, H. Spatial-temporal transformer networks for traffic flow forecasting. arXiv 2020, arXiv:2001.02908.

- Zhang, S.; Zhang, J.; Yang, L.; Chen, F.; Li, S.; Gao, Z. Physics guided deep learning-based model for short-term origin-destination demand prediction in urban rail transit systems under pandemic. Engineering 2024, 41, 276–296.

- Zang, D.; Ling, J.; Wei, Z.; Tang, K.; Cheng, J. Long-term traffic speed prediction based on multiscale spatio-temporal feature learning network. IEEE Trans. Intell. Transp. Syst. 2018, 20, 3700–3709.

- Qu, L.; Li, W.; Li, W.; Ma, D.; Wang, Y. Daily long-term traffic flow forecasting based on a deep neural network. Expert Syst. Appl. 2019, 121, 304–312.

- Wang, Z.; Su, X.; Ding, Z. Long-term traffic prediction based on lstm encoder-decoder architecture. IEEE Trans. Intell. Transp. Syst. 2020, 22, 6561–6571.

- Li, Y.; Chai, S.; Wang, G.; Zhang, X.; Qiu, J. Quantifying the uncertainty in long-term traffic prediction based on PI-ConvLSTM network. IEEE Trans. Intell. Transp. Syst. 2022, 23, 20429–20441.

- Zhang, S.; Zhang, J.; Yang, L.; Yin, J. Spatiotemporal attention fusion network for short-term passenger flow prediction on New Year’s Day holiday in urban rail transit system. IEEE Intell. Transp. Syst. Mag. 2023, 15, 59–77.

- Qiu, H.; Zhang, J.; Yang, L.; Han, K. Spatial–temporal multi-task learning for short-term passenger inbound flow and outbound flow prediction on holidays in urban rail transit systems. Transportation 2025, 1–30.

- Wang, A.; Ye, Y.; Song, X.; Zhang, S.; Yu, J.J. Traffic prediction with missing data: A multi-task learning approach. IEEE Trans. Intell. Transp. Syst. 2023, 24, 4189–4202.

- Zhang, K.; Liu, Z.; Zheng, L. Short-term prediction of passenger demand in multi-zone level: Temporal convolutional neural network with multi-task learning. IEEE Trans. Intell. Transp. Syst. 2019, 21, 1480–1490.

- Yi, Z.; Zhou, Z.; Huang, Q.; Chen, Y.; Yu, L.; Wang, X.; Wang, Y. Get Rid of Isolation: A Continuous Multi-task Spatio-Temporal Learning Framework. In Proceedings of the Thirty-Eighth Annual Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 10–15 December 2024.

- Yang, Y.; Zhang, J.; Yang, L.; Gao, Z. Network-wide short-term inflow prediction of the multi-traffic modes system: An adaptive multi-graph convolution and attention mechanism based multitask-learning model. Transp. Res. Part C Emerg. Technol. 2024, 158, 104428.

- Zou, G.; Lai, Z.; Wang, T.; Liu, Z.; Li, Y. Mt-stnet: A novel multi-task spatiotemporal network for highway traffic flow prediction. IEEE Trans. Intell. Transp. Syst. 2024, 25, 8221–8236.

- Azad, R.; Asadi-Aghbolaghi, M.; Fathy, M.; Escalera, S. Bi-directional ConvLSTM U-Net with densley connected convolutions. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27–28 October 2019.

- Lin, Z.; Li, M.; Zheng, Z.; Cheng, Y.; Yuan, C. Self-attention ConvLSTM for spatiotemporal prediction. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34.

- Shi, X.; Chen, Z.; Wang, H.; Yeung, D.; Wong, W.; Woo, W. Convolutional LSTM network: A machine learning approach for precipitation nowcasting. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; Volume 28.

- Liu, L.; Zhu, Y.; Li, G.; Wu, Z. Online metro origin-destination prediction via heterogeneous information aggregation. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 3574–3589.

- Sapankevych, N.; Sankar, R. Time series prediction using support vector machines: A survey. IEEE Comput. Intell. Mag. 2009, 4, 24–38.

- Zhang, Y.; Shi, X.; Zhang, S.; Abraham, A. A XGBoost-based lane change prediction on time series data using feature engineering for autopilot vehicles. IEEE Trans. Intell. Transp. Syst. 2022, 23, 19187–19200.

- Wang, L.; Zeng, Y.; Chen, T. Back propagation neural network with adaptive differential evolution algorithm for time series forecasting. Expert Syst. Appl. 2015, 42, 855–863.

- Wang, K.; Li, K.; Zhou, L.; Hu, Y. Multiple convolutional neural networks for multivariate time series prediction. Neurocomputing 2019, 360, 107–119.

- Siami-Namini, S.; Tavakoli, N.; Namin, A.S. The performance of LSTM and BiLSTM in forecasting time series. In Proceedings of the 2019 IEEE International Conference on Big Data (Big Data), Los Angeles, CA, USA, 9–12 December 2019.

- Yamak, P.; Li, Y.; Gadosey, P. A comparison between ARIMA, LSTM, and GRU for time series forecasting. In Proceedings of the 2019 2nd International Conference on Algorithms, Computing and Artificial Intelligence, Sanya, China, 20–22 December 2019.

| Weight Coefficients [Inbound, Outbound] | Inbound RMSE | Inbound MAE | Inbound WMAPE | Outbound RMSE | Outbound MAE | Outbound WMAPE |
|---|---|---|---|---|---|---|
| [0.5, 0.5] | 139.3598 | 79.6379 | 0.3416 | 129.236 | 67.9112 | 0.3013 |
| [0.4, 0.6] | 115.167 | 65.2043 | 0.2796 | 95.164 | 52.5209 | 0.233 |
| [0.3, 0.7] | 119.34 | 70.7406 | 0.3033 | 102.788 | 62.0568 | 0.2753 |

| Model (inbound flow) | RMSE (1 Day, 24 Steps) | MAE (1 Day, 24 Steps) | WMAPE % (1 Day, 24 Steps) | RMSE (2 Days, 48 Steps) | MAE (2 Days, 48 Steps) | WMAPE % (2 Days, 48 Steps) | RMSE (3 Days, 72 Steps) | MAE (3 Days, 72 Steps) | WMAPE % (3 Days, 72 Steps) |
|---|---|---|---|---|---|---|---|---|---|
| SVR | 205.53 | 129.47 | 41.93 | 204.09 | 128.69 | 41.31 | 201.46 | 127.33 | 40.71 |
| XGBoost | 184.70 | 100.13 | 39.28 | 184.64 | 101.93 | 38.56 | 184.44 | 102.43 | 42.74 |
| BPNN | 161.26 ± 1.23 | 85.82 ± 0.83 | 33.06 ± 1.63 | 191.70 ± 2.12 | 103.15 ± 1.24 | 39.35 ± 2.03 | 187.66 ± 2.97 | 96.23 ± 1.01 | 34.56 ± .89 |
| CNN | 158.84 ± 1.14 | 84.72 ± 0.85 | 30.77 ± 1.34 | 166.27 ± 2.34 | 87.72 ± 0.99 | 31.51 ± 1.87 | 184.91 ± 3.01 | 99.41 ± 1.25 | 35.73 ± 2.01 |
| LSTM | 163.29 ± 1.11 | 84.88 ± 0.79 | 30.66 ± 1.45 | 159.66 ± 2.01 | 83.48 ± 1.02 | 29.82 | 161.41 ± 3.32 | 88.69 ± 1.45 | 34.16 ± 2.54 |
| GRU | 180.55 ± 1.05 | 94.12 ± 0.94 | 34.06 ± 1.22 | 175.07 ± 2.44 | 90.44 ± 0.95 | 32.36 ± 1.94 | 159.82 ± 3.43 | 87.30 ± 1.36 | 34.14 ± 2.28 |
| ST-ResNet | 151.07 ± 0.99 | 79.28 ± 0.88 | 28.78 ± 1.56 | 161.18 ± 1.90 | 85.18 ± 1.16 | 30.58 ± 2.05 | 180.75 ± 3.22 | 99.57 ± 1.39 | 35.76 ± 2.06 |
| Transformer | 145.81 ± 1.09 | 87.24 ± 0.76 | 31.68 ± 1.44 | 148.63 ± 1.98 | 86.34 ± 1.21 | 30.98 ± 1.99 | 153.32 ± 3.37 | 86.37 ± 1.28 | 33.86 ± 2.41 |
| STMTL | 151.70 ± 1.17 | 78.66 ± 0.97 | 28.94 ± 1.33 | 163.92 ± 1.90 | 84.10 ± 1.09 | 30.20 ± 1.89 | 184.12 ± 3.01 | 93.72 ± 1.18 | 33.67 ± 2.21 |
| Our Model | 115.17 ± 1.01 | 65.20 ± 0.81 | 27.96 ± 1.39 | 130.56 ± 2.01 | 77.39 ± 1.13 | 30.11 ± 1.91 | 144.08 ± 3.21 | 84.95 ± 1.30 | 32.47 ± 2.25 |

| Model (outbound flow) | RMSE (1 Day, 24 Steps) | MAE (1 Day, 24 Steps) | WMAPE % (1 Day, 24 Steps) | RMSE (2 Days, 48 Steps) | MAE (2 Days, 48 Steps) | WMAPE % (2 Days, 48 Steps) | RMSE (3 Days, 72 Steps) | MAE (3 Days, 72 Steps) | WMAPE % (3 Days, 72 Steps) |
|---|---|---|---|---|---|---|---|---|---|
| SVR | 177.89 | 114.13 | 37.42 | 232.94 | 150.77 | 44.32 | 175.65 | 111.58 | 36.16 |
| XGBoost | 161.32 | 89.46 | 37.51 | 194.20 | 110.49 | 40.12 | 164.31 | 91.28 | 37.76 |
| BPNN | 142.34 ± 1.31 | 75.65 ± 0.99 | 28.41 ± 1.34 | 158.47 ± 2.17 | 86.28 ± 1.31 | 33.53 ± 2.15 | 152.34 ± 3.34 | 81.43 ± 1.66 | 29.87 ± 1.98 |
| CNN | 126.68 ± 1.24 | 69.58 ± 0.93 | 25.69 ± 1.28 | 145.30 ± 2.09 | 81.07 ± 1.22 | 29.74 ± 2.07 | 161.56 ± 3.58 | 94.59 ± 1.82 | 34.69 ± 2.33 |
| LSTM | 133.85 ± 1.29 | 69.04 ± 0.88 | 25.49 ± 1.30 | 132.42 ± 2.01 | 75.41 ± 1.36 | 25.45 ± 2.33 | 139.51 ± 3.49 | 72.53 ± 1.69 | 29.61 ± 2.03 |
| GRU | 140.69 ± 1.14 | 73.59 ± 0.92 | 27.18 ± 1.27 | 136.94 ± 1.99 | 73.15 ± 1.30 | 26.47 ± 2.18 | 130.81 ± 3.62 | 73.20 ± 1.89 | 29.85 ± 2.01 |
| ST-ResNet | 132.01 ± 1.25 | 71.03 ± 0.86 | 26.23 ± 1.37 | 146.56 ± 2.10 | 82.07 ± 1.25 | 30.10 ± 2.31 | 162.27 ± 3.44 | 92.54 ± 2.01 | 33.94 ± 2.16 |
| Transformer | 117.49 ± 1.22 | 75.56 ± 0.83 | 27.89 ± 1.19 | 127.39 ± 2.20 | 73.71 ± 1.33 | 25.94 ± 2.14 | 132.44 ± 3.28 | 76.64 ± 1.92 | 28.38 ± 2.28 |
| STMTL | 128.07 ± 1.12 | 70.09 ± 0.82 | 25.88 ± 1.03 | 138.95 ± 2.00 | 75.16 ± 1.25 | 27.57 ± 1.98 | 160.80 ± 3.15 | 84.59 ± 1.78 | 31.03 ± 2.01 |
| Our Model | 95.16 ± 1.19 | 52.52 ± 0.90 | 23.33 ± 1.25 | 125.39 ± 2.04 | 72.48 ± 1.29 | 25.37 ± 2.10 | 122.07 ± 3.31 | 70.74 ± 1.74 | 28.22 ± 2.19 |

| Variant (inbound flow) | RMSE (1 Day, 24 Steps) | MAE (1 Day, 24 Steps) | WMAPE % (1 Day, 24 Steps) | RMSE (2 Days, 48 Steps) | MAE (2 Days, 48 Steps) | WMAPE % (2 Days, 48 Steps) | RMSE (3 Days, 72 Steps) | MAE (3 Days, 72 Steps) | WMAPE % (3 Days, 72 Steps) |
|---|---|---|---|---|---|---|---|---|---|
| Variant 1 (No Bi) | 137.55 | 68.56 | 29.25 | 143.21 | 72.04 | 29.19 | 155.94 | 87.61 | 33.42 |
| Variant 2 (LSTM) | 152.92 | 83.85 | 35.87 | 158.29 | 86.19 | 34.96 | 160.47 | 85.26 | 32.53 |
| Variant 3 (No Attn) | 139.84 | 74.35 | 31.71 | 145.76 | 77.67 | 31.47 | 169.76 | 83.35 | 31.80 |
| Variant 4 (No holidays) | 165.83 | 76.84 | 32.10 | 160.47 | 75.03 | 32.08 | 153.39 | 74.12 | 30.01 |
| ST-Cross-Attn | 136.89 | 66.91 | 28.55 | 142.32 | 70.27 | 28.47 | 148.34 | 72.08 | 27.47 |

| Variant (outbound flow) | RMSE (1 Day, 24 Steps) | MAE (1 Day, 24 Steps) | WMAPE % (1 Day, 24 Steps) | RMSE (2 Days, 48 Steps) | MAE (2 Days, 48 Steps) | WMAPE % (2 Days, 48 Steps) | RMSE (3 Days, 72 Steps) | MAE (3 Days, 72 Steps) | WMAPE % (3 Days, 72 Steps) |
|---|---|---|---|---|---|---|---|---|---|
| Variant 1 (No Bi) | 117.23 | 55.88 | 24.79 | 124.22 | 61.72 | 26.87 | 132.51 | 72.22 | 28.82 |
| Variant 2 (LSTM) | 135.35 | 71.09 | 31.55 | 139.64 | 73.63 | 31.36 | 142.27 | 73.24 | 29.24 |
| Variant 3 (No Attn) | 119.10 | 60.01 | 26.62 | 125.71 | 64.83 | 27.61 | 162.37 | 79.87 | 31.89 |
| Variant 4 (No holidays) | 167.52 | 65.37 | 29.02 | 154.16 | 72.71 | 30.83 | 138.08 | 71.69 | 28.63 |
| ST-Cross-Attn | 116.63 | 54.70 | 24.28 | 122.86 | 59.54 | 25.37 | 127.39 | 61.99 | 24.76 |

| Setting | RMSE (1 Day, 24 Steps) | MAE (1 Day, 24 Steps) | WMAPE % (1 Day, 24 Steps) | RMSE (2 Days, 48 Steps) | MAE (2 Days, 48 Steps) | WMAPE % (2 Days, 48 Steps) | RMSE (3 Days, 72 Steps) | MAE (3 Days, 72 Steps) | WMAPE % (3 Days, 72 Steps) |
|---|---|---|---|---|---|---|---|---|---|
| MTL (inbound flow) | 136.89 | 66.91 | 28.55 | 142.32 | 70.27 | 28.47 | 148.34 | 72.08 | 27.47 |
| STL (inbound flow) | 138.44 | 71.10 | 30.32 | 144.04 | 73.90 | 29.94 | 149.66 | 75.03 | 28.59 |
| MTL (outbound flow) | 116.63 | 54.70 | 24.28 | 122.86 | 59.54 | 25.37 | 127.39 | 61.99 | 24.76 |
| STL (outbound flow) | 139.93 | 74.74 | 31.87 | 145.42 | 76.95 | 31.18 | 152.67 | 80.72 | 30.78 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, X.; Zhang, Y.; Han, Z.; Qiu, H.; Zhang, S.; Zhang, J. Multi-Task Learning-Based Traffic Flow Prediction Through Highway Toll Stations During Holidays. Technologies 2025, 13, 287. https://doi.org/10.3390/technologies13070287


Liu, Xiaowei, Yunfan Zhang, Zhongyi Han, Hao Qiu, Shuxin Zhang, and Jinlei Zhang. 2025. "Multi-Task Learning-Based Traffic Flow Prediction Through Highway Toll Stations During Holidays" Technologies 13, no. 7: 287. https://doi.org/10.3390/technologies13070287
