Vase-Life Monitoring System for Cut Flowers Using Deep Learning and Multiple Cameras

Abstract

1. Introduction

2. Results

2.1. Changes in Vase Life and Senescence Patterns According to Transport Methods

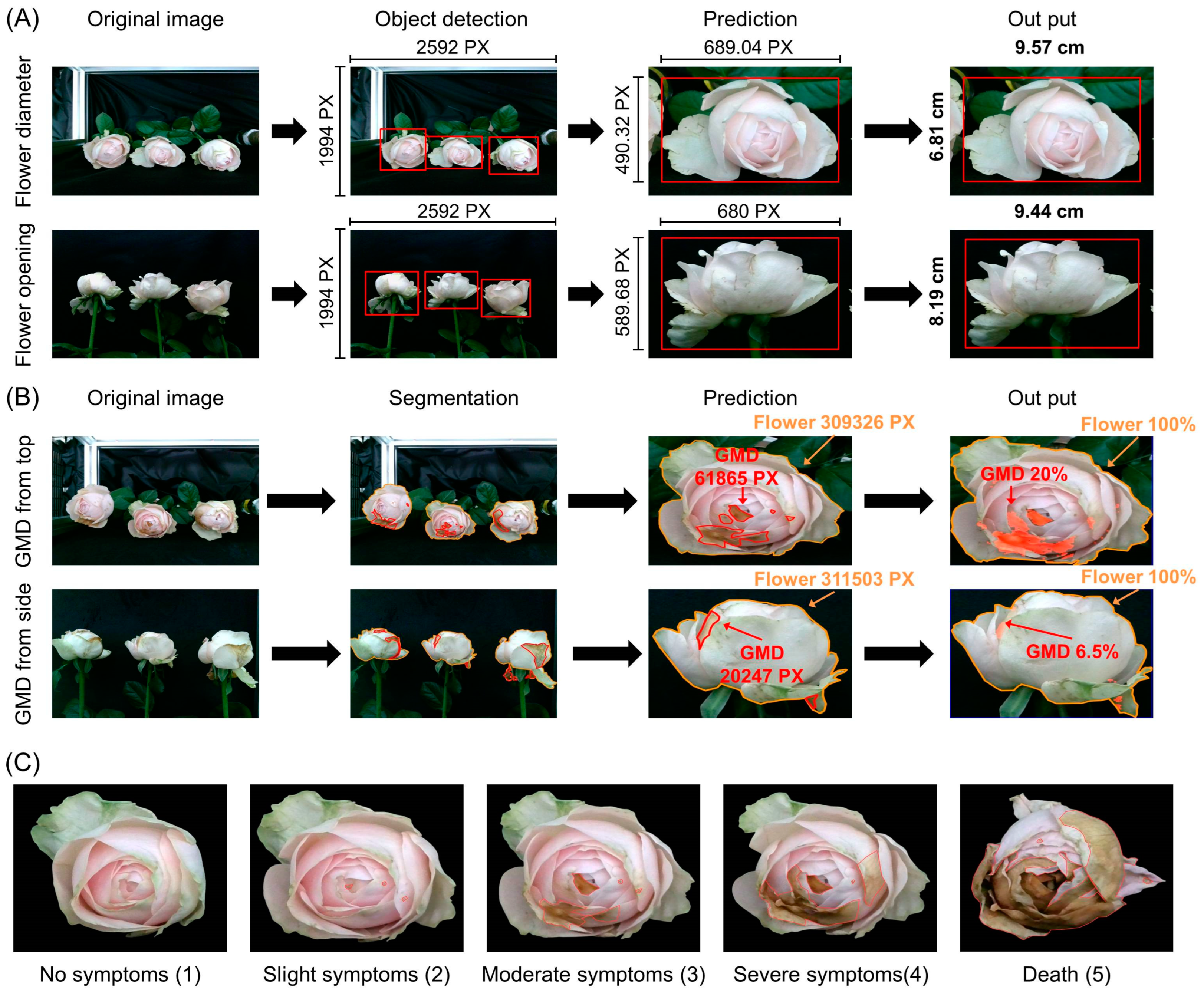

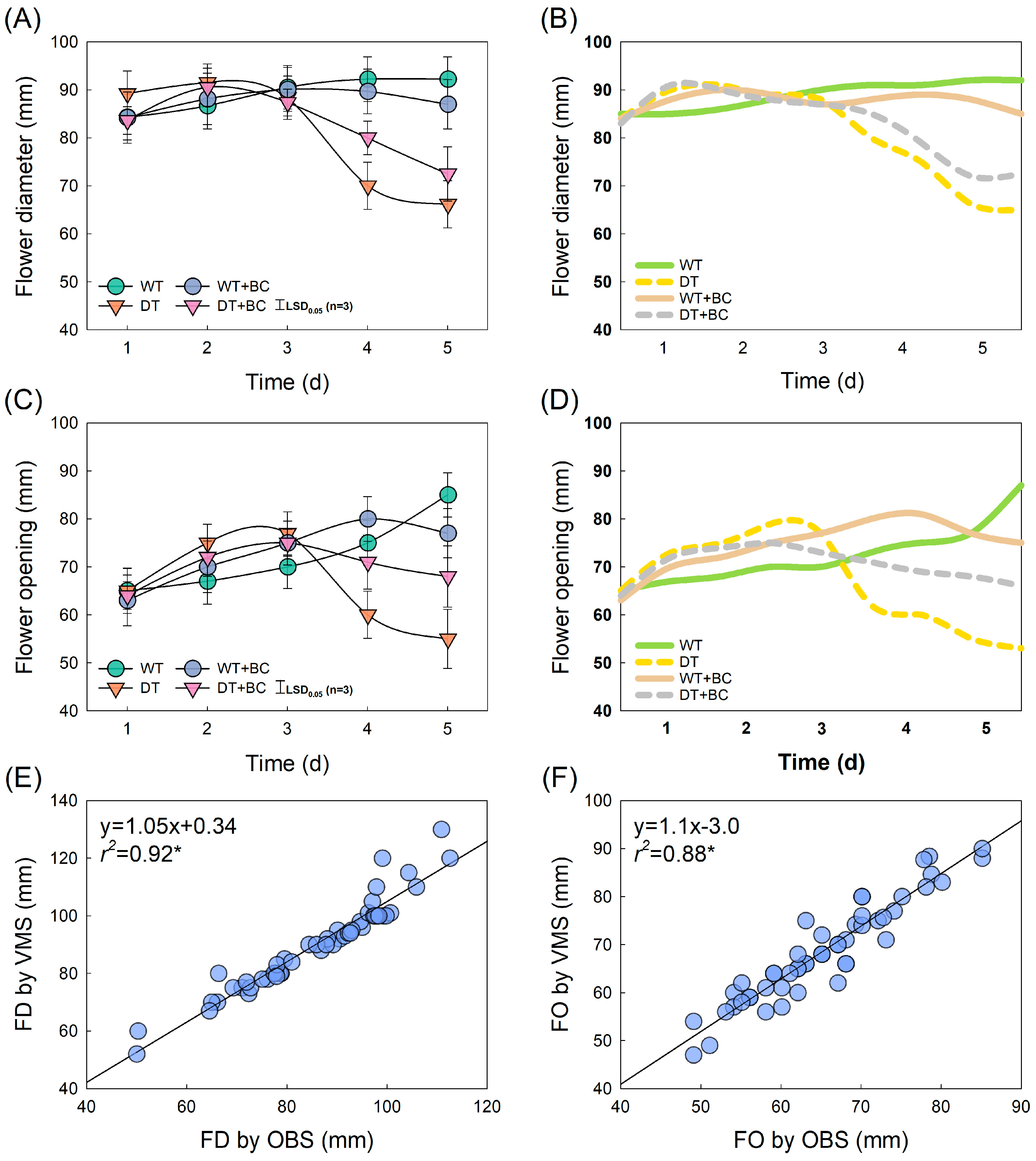

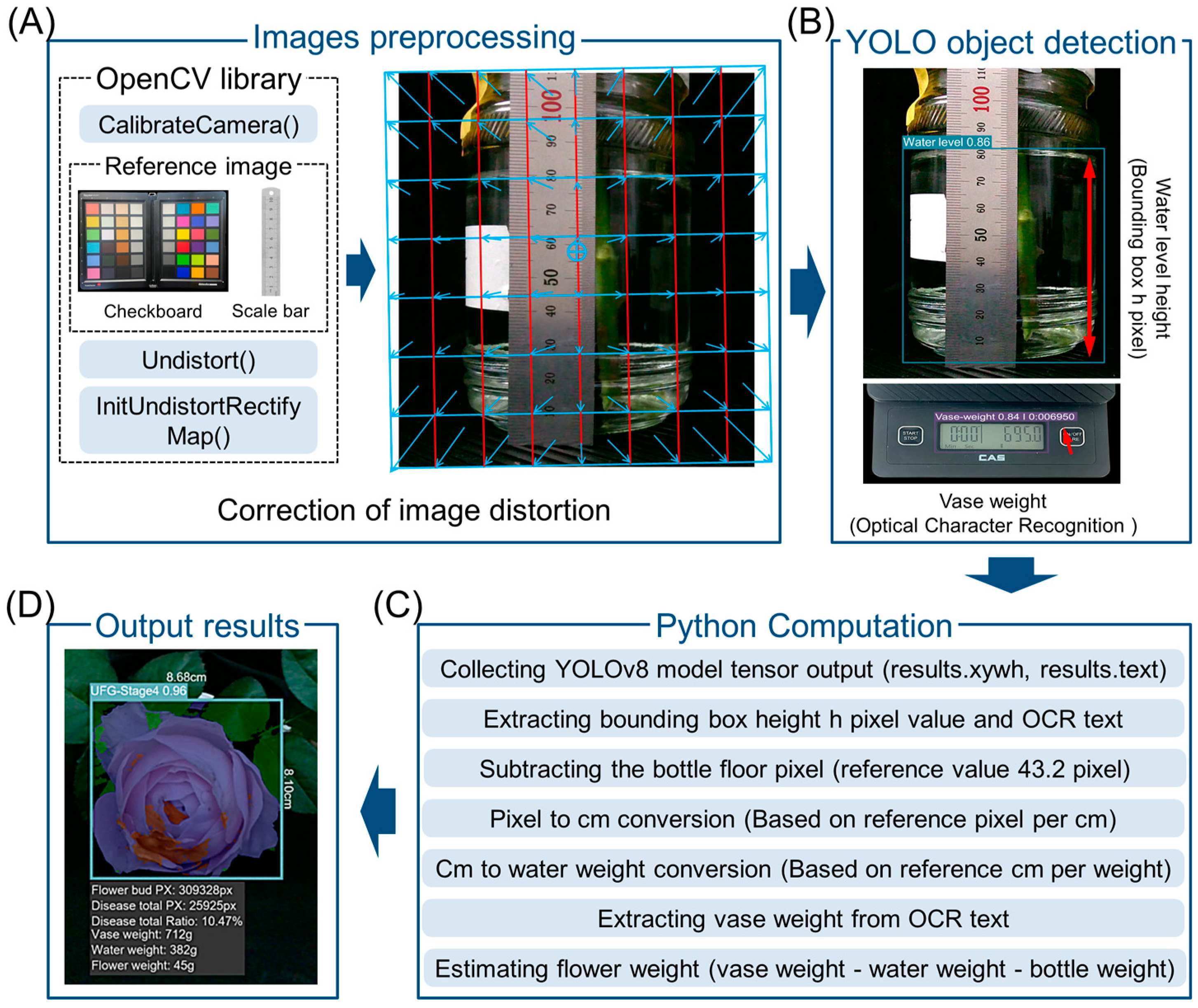

2.2. Development of Prediction Models for VMS

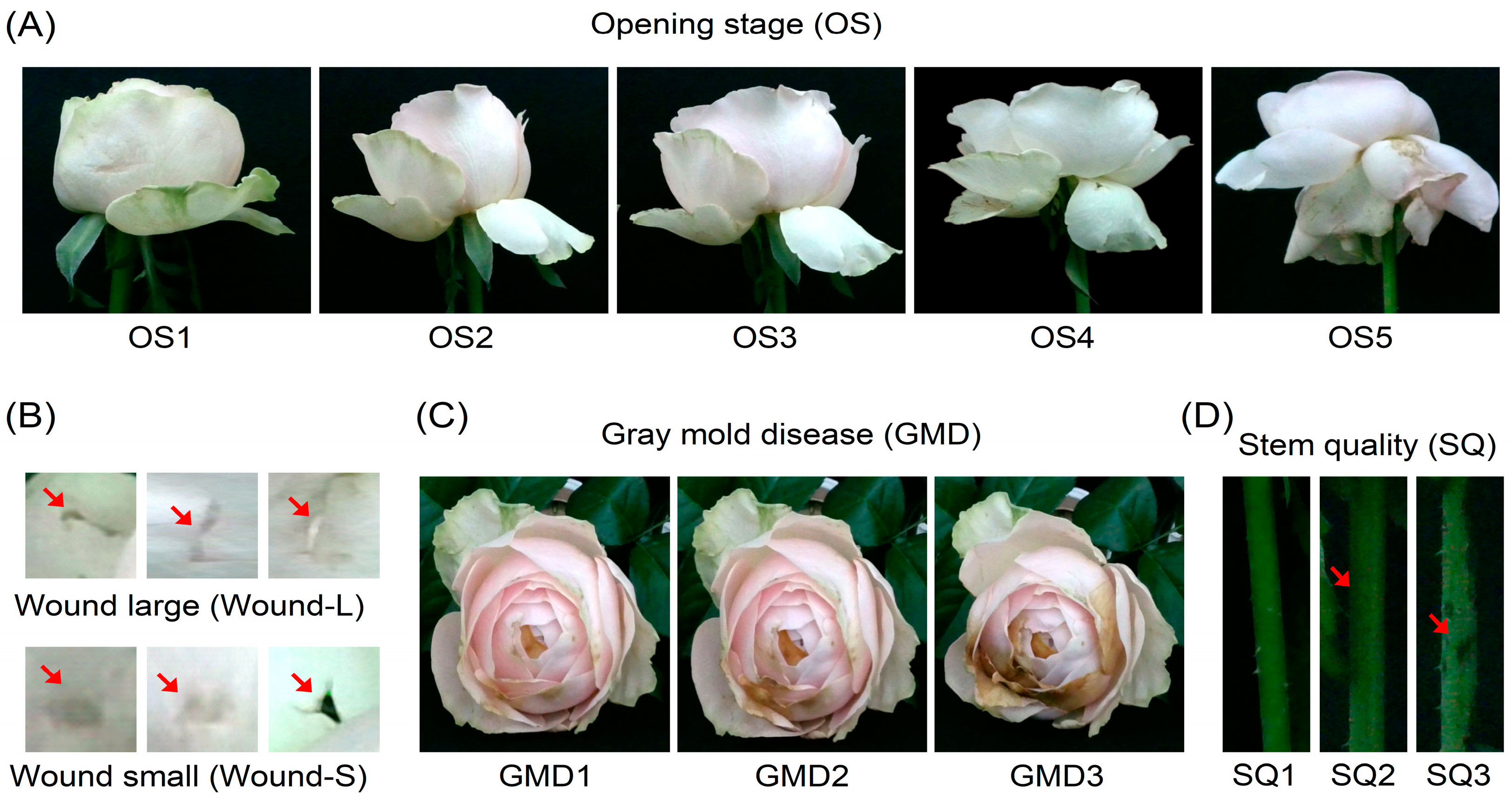

2.3. Prediction of Flower Opening and GMD Infection Using VMS

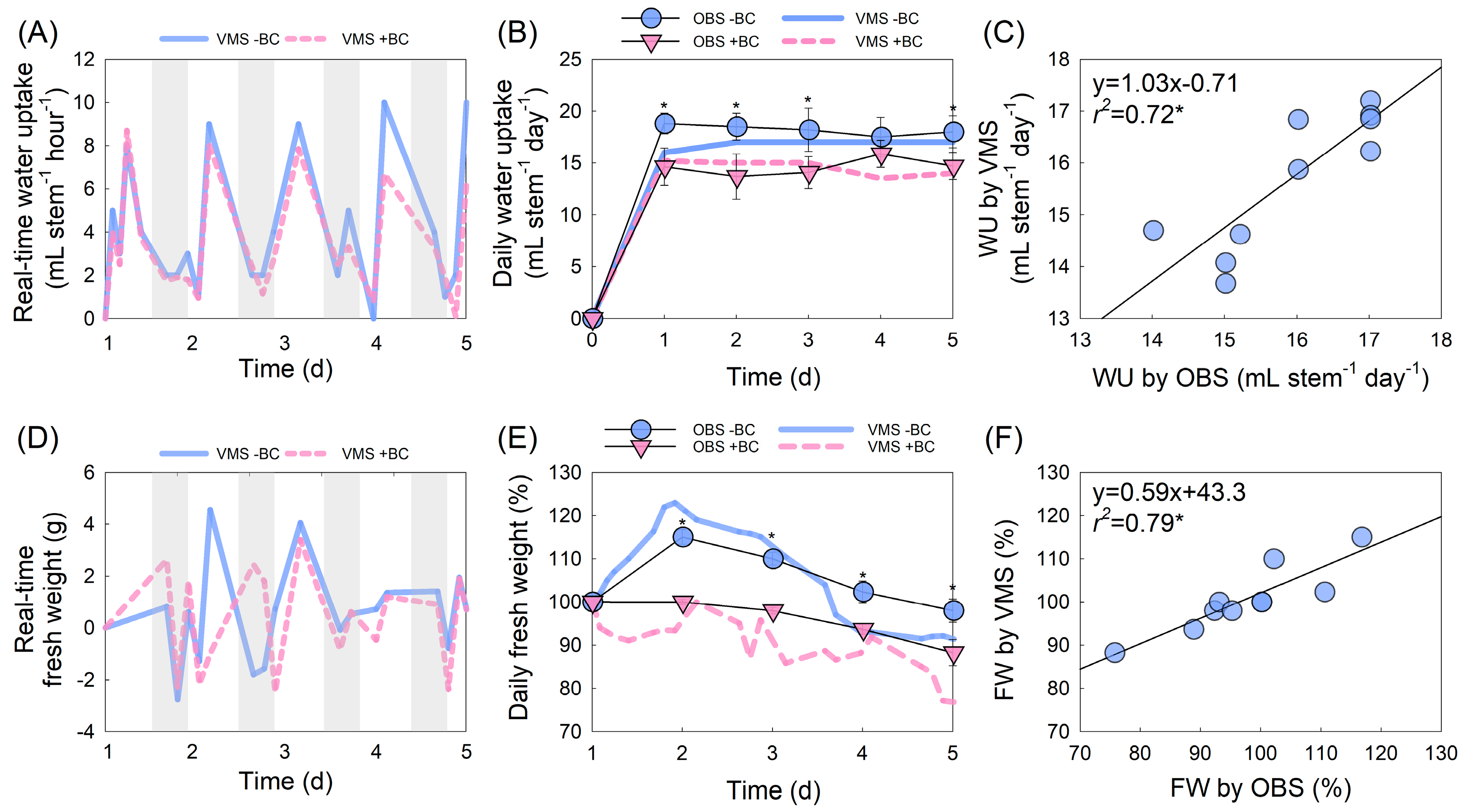

2.4. Evaluation of Fresh Weight and Water Uptake Changes Using VMS

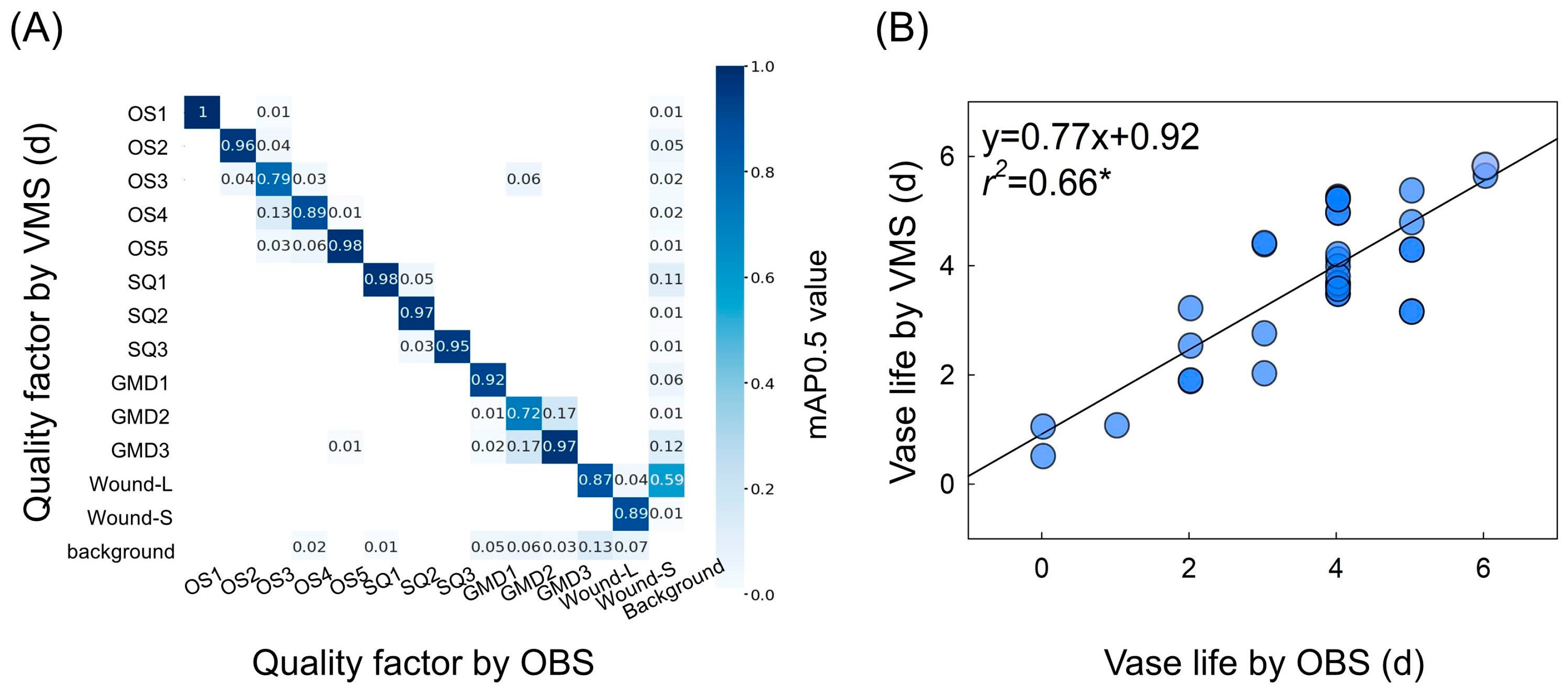

2.5. Prediction of Flower Quality and Vase Life Using VMS

3. Discussion

4. Materials and Methods

4.1. Plant Materials

4.2. Botrytis cinerea Growth and Fungal Suspension Preparation

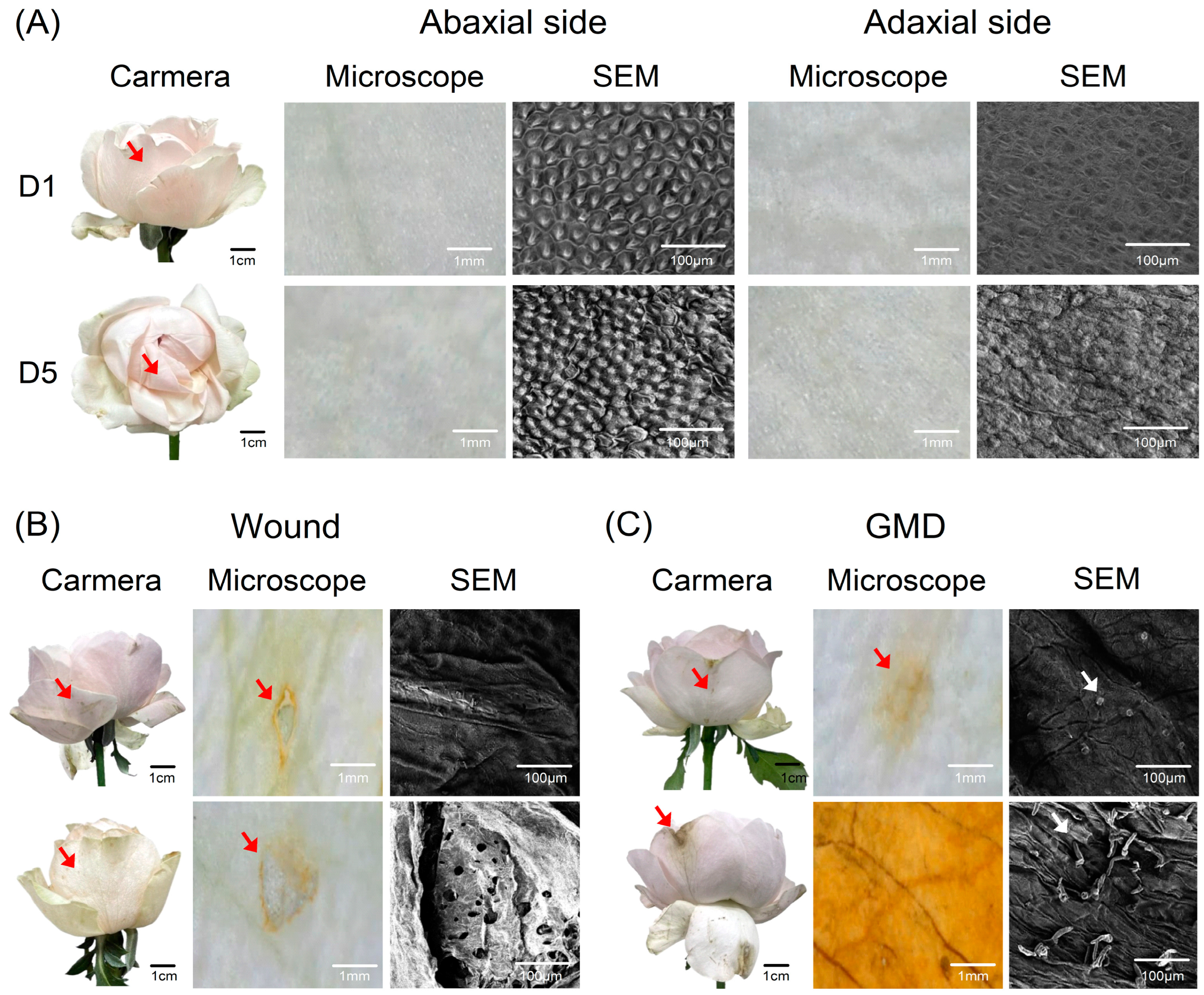

4.3. Experiment 1: Real-Time Monitoring of Changes in Physiology and Morphology

4.4. Experiment 2: Measurement of VL and GMD Using VMS and Microscopy Cameras

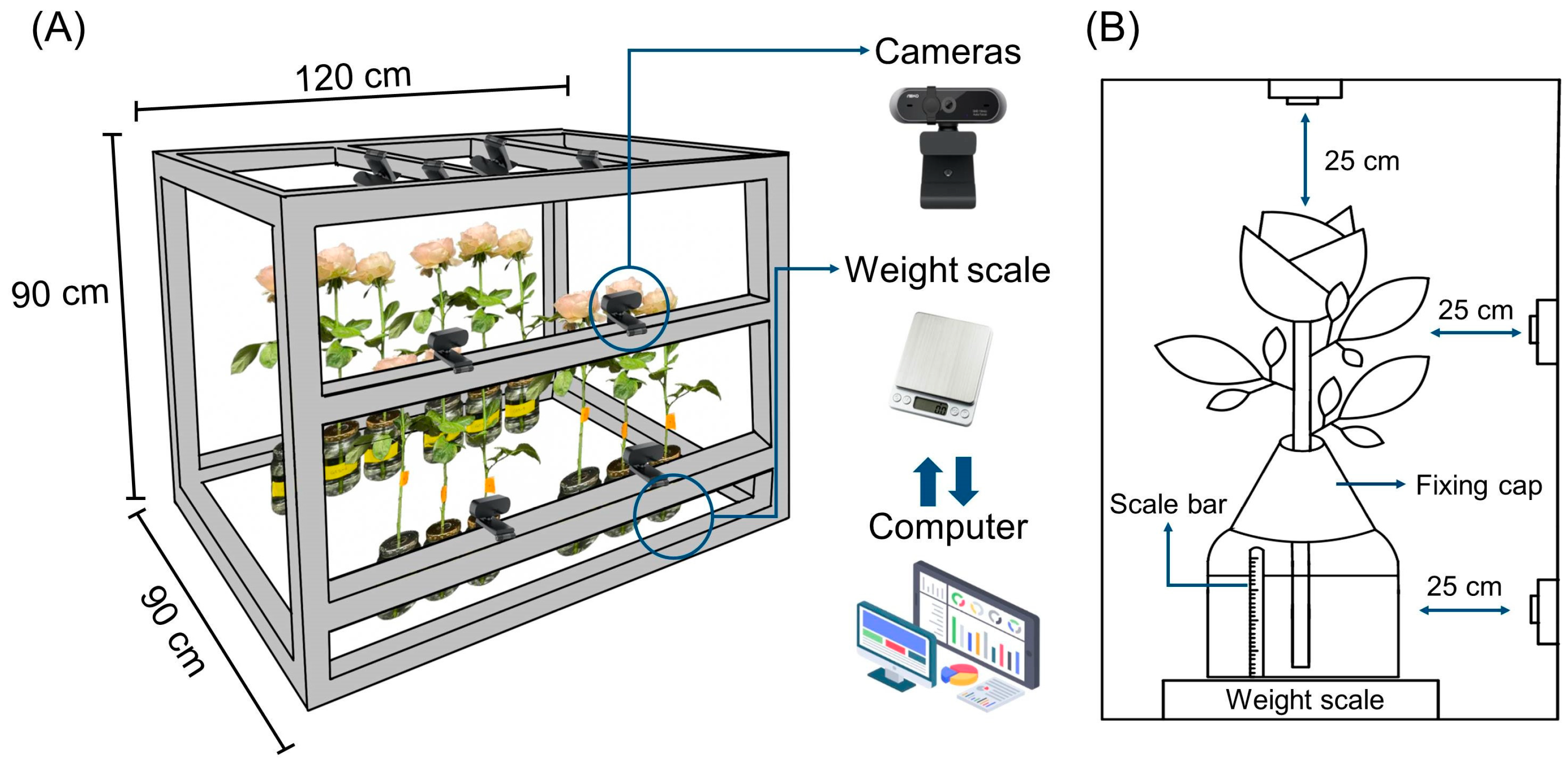

4.5. Installation of VMS

4.6. Assessment of Quality and Vase Life Using VMS

4.7. Image Acquisition and Processing

4.8. Microscope and SEM

4.9. Evaluation of Flower Quality, Vase Life, and GMD Infection

4.10. Experimental Design and Data Analysis

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Image | Precision (%) | Recall (%) | mAP0.5 (%) | mAP0.5–0.9 (%) |
|---|---|---|---|---|
| Top | 90.85 | 88.53 | 91.41 | 74.01 |
| Side | 86.46 | 91.94 | 91.45 | 70.60 |
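The table reports standard object-detection metrics for the top and side camera images. As a reading aid, the sketch below shows how such metrics are conventionally computed for a single class, assuming the usual convention that mAP0.5 is average precision (AP) at an intersection-over-union (IoU) threshold of 0.5 and that mAP0.5–0.9 averages AP over a range of stricter IoU thresholds. The box format, the greedy matching rule and the function names (`iou`, `match`, `precision_recall`, `average_precision`) are hypothetical illustrations, not the authors' evaluation code; detection frameworks differ in matching and interpolation details. Precision and recall here are computed over whatever predictions are passed in, i.e. after any confidence cut-off.

```python
from typing import List, Sequence, Tuple

# Hypothetical box format: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]
# A prediction is a box plus its confidence score.
Pred = Tuple[Box, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match(preds: Sequence[Pred], gts: Sequence[Box], iou_thr: float) -> List[int]:
    """Flag each prediction, ranked by confidence, as TP (1) or FP (0).

    Assumed greedy rule: each prediction claims the still-unmatched
    ground-truth box with the highest IoU, provided that IoU >= iou_thr.
    """
    ranked = sorted(preds, key=lambda p: p[1], reverse=True)
    matched = set()
    flags: List[int] = []
    for box, _score in ranked:
        best_j, best_v = -1, iou_thr
        for j, gt in enumerate(gts):
            if j in matched:
                continue
            v = iou(box, gt)
            if v >= best_v:
                best_j, best_v = j, v
        if best_j >= 0:
            matched.add(best_j)
        flags.append(1 if best_j >= 0 else 0)
    return flags


def precision_recall(preds: Sequence[Pred], gts: Sequence[Box],
                     iou_thr: float = 0.5) -> Tuple[float, float]:
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    flags = match(preds, gts, iou_thr)
    tp = sum(flags)
    precision = tp / len(flags) if flags else 0.0
    recall = tp / len(gts) if gts else 0.0
    return precision, recall


def average_precision(preds: Sequence[Pred], gts: Sequence[Box],
                      iou_thr: float = 0.5) -> float:
    """All-point interpolated area under the precision-recall curve."""
    if not gts:
        return 0.0
    flags = match(preds, gts, iou_thr)
    precisions: List[float] = []
    recalls: List[float] = []
    tp = 0
    for k, flag in enumerate(flags, start=1):
        tp += flag
        precisions.append(tp / k)          # precision after the top-k predictions
        recalls.append(tp / len(gts))      # recall after the top-k predictions
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_r = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_r) * p             # only recall increments add area
        prev_r = r
    return ap
```

Averaging `average_precision` over all classes at `iou_thr=0.5` gives an mAP0.5-style score; repeating the calculation at a series of stricter thresholds and averaging the results gives a range-style score such as mAP0.5–0.9. The threshold grid is an assumption left to the caller.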

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ham, J.Y.; Kim, Y.-T.; Ha, S.T.T.; In, B.-C. Vase-Life Monitoring System for Cut Flowers Using Deep Learning and Multiple Cameras. Plants 2025, 14, 1076. https://doi.org/10.3390/plants14071076


Chicago/Turabian style: Ham, Ji Yeong, Yong-Tae Kim, Suong Tuyet Thi Ha, and Byung-Chun In. 2025. "Vase-Life Monitoring System for Cut Flowers Using Deep Learning and Multiple Cameras." Plants 14, no. 7: 1076. https://doi.org/10.3390/plants14071076
