Lightweight Detection System with Global Attention Network (GloAN) for Rice Lodging

Abstract

1. Introduction

- We propose a novel attention module, the global attention network (GloAN), which can be seamlessly integrated into CNN architectures and brings notable performance gains in rice lodging detection at minimal computational cost.

- Our proposed methods enable accurate rice lodging detection that can be deployed where computational resources are limited, making them practical for real-world applications.

- Our proposed methods offer a new way to improve the performance of semantic segmentation models in rice lodging detection: instead of directly modifying the segmentation models or their backbone networks, one can improve segmentation performance more effectively by adopting GloAN.
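GloAN's own structure (Section 3.2) is not reproduced here. To illustrate what it means for an attention module to plug into a CNN without changing tensor shapes and at a small parameter cost, the sketch below implements a squeeze-and-excitation (SE) block [11], a different and well-known attention module, in NumPy. It is not the GloAN architecture; the function names, the reduction ratio r = 16 and the random weights are illustrative assumptions.

```python
import numpy as np


def se_channel_attention(x, w1, b1, w2, b2):
    """Squeeze-and-excitation style channel attention. This is NOT GloAN.

    x:  feature map of shape (C, H, W)
    w1: (C // r, C), b1: (C // r,)   reduction layer
    w2: (C, C // r), b2: (C,)        expansion layer
    Returns an array with the same shape as x, so the block can be
    inserted between existing convolutional layers.
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    z = x.mean(axis=(1, 2))                      # (C,)
    # Excitation: bottleneck MLP with ReLU, then sigmoid gates in (0, 1).
    h = np.maximum(w1 @ z + b1, 0.0)             # (C // r,)
    s = 1.0 / (1.0 + np.exp(-(w2 @ h + b2)))     # (C,)
    # Rescale each channel of the input by its gate.
    return x * s[:, None, None]


def se_param_count(channels, reduction=16):
    """Learnable parameters added by one SE block (weights plus biases)."""
    hidden = channels // reduction
    return channels * hidden + hidden + hidden * channels + channels


rng = np.random.default_rng(0)
C, H, W, r = 64, 32, 32, 16
x = rng.standard_normal((C, H, W))
w1, b1 = rng.standard_normal((C // r, C)) * 0.1, np.zeros(C // r)
w2, b2 = rng.standard_normal((C, C // r)) * 0.1, np.zeros(C)
y = se_channel_attention(x, w1, b1, w2, b2)
print(y.shape, se_param_count(C, r))  # (64, 32, 32) 580
```

For a 512-channel stage with r = 16, one such block adds 33,312 parameters (about 0.03M), which is small compared with the 20M to 28M parameters of the segmentation models in the results tables.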

2. Related Work

2.1. UAV Remote Sensing for Rice Lodging Detection

2.2. Attention Mechanism and Knowledge Distillation

3. Materials and Methods

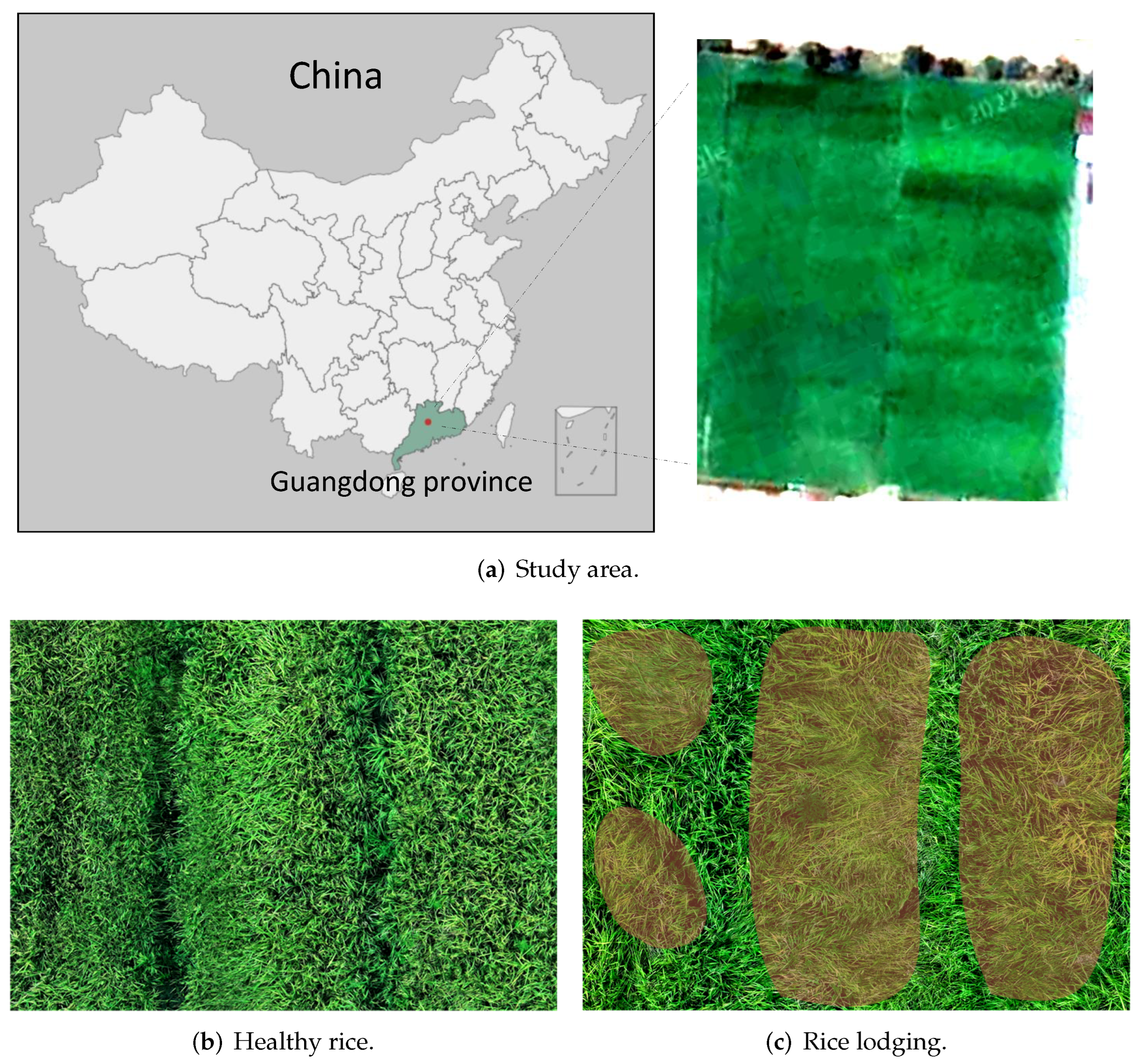

3.1. Dataset Overview

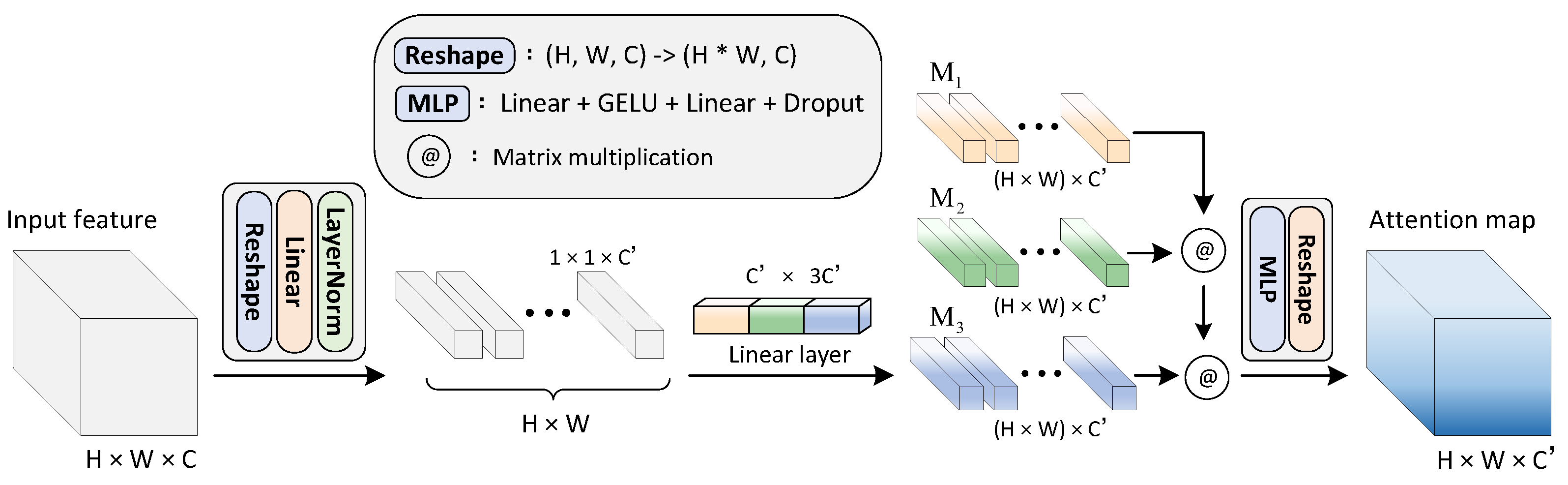

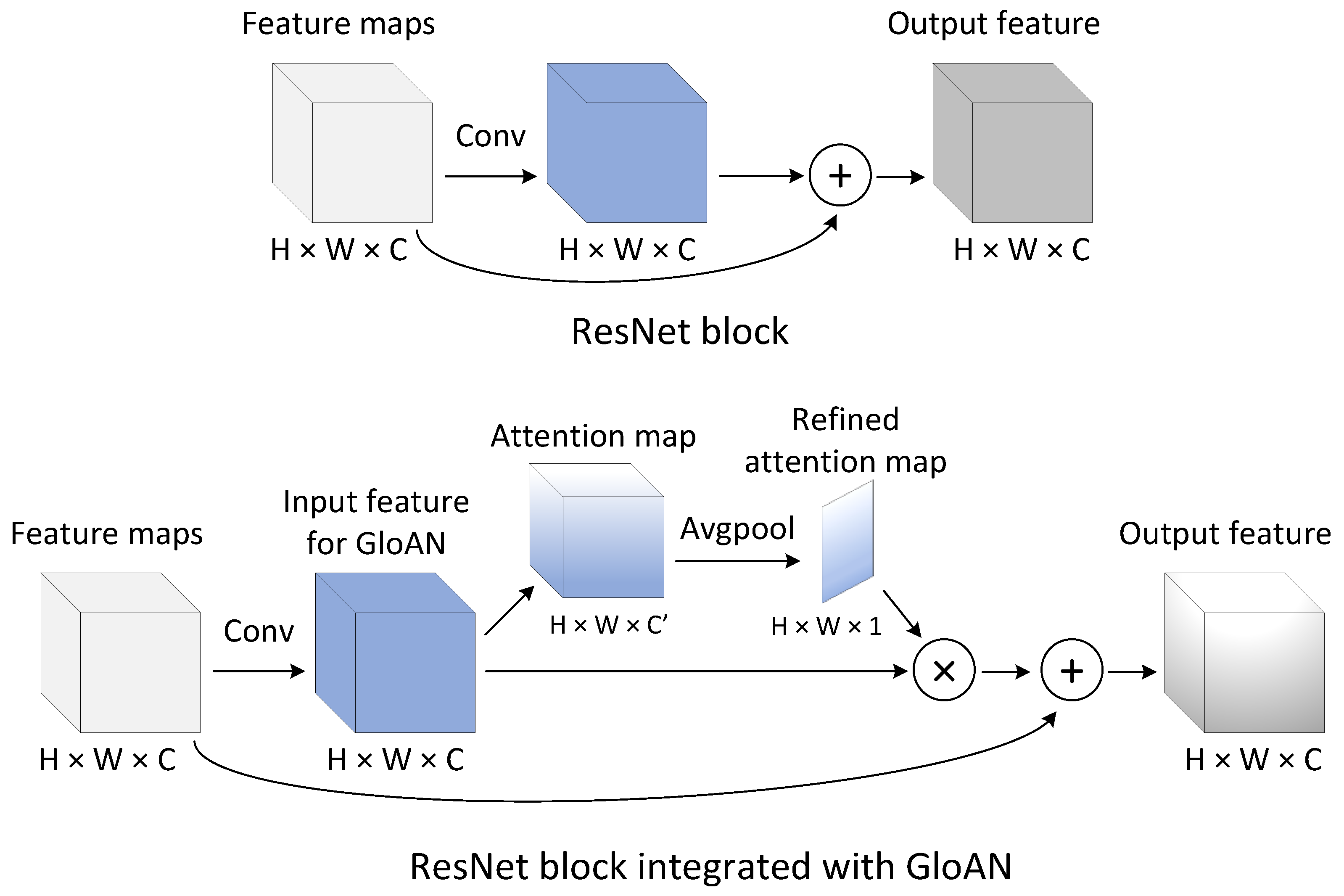

3.2. Global Attention Network

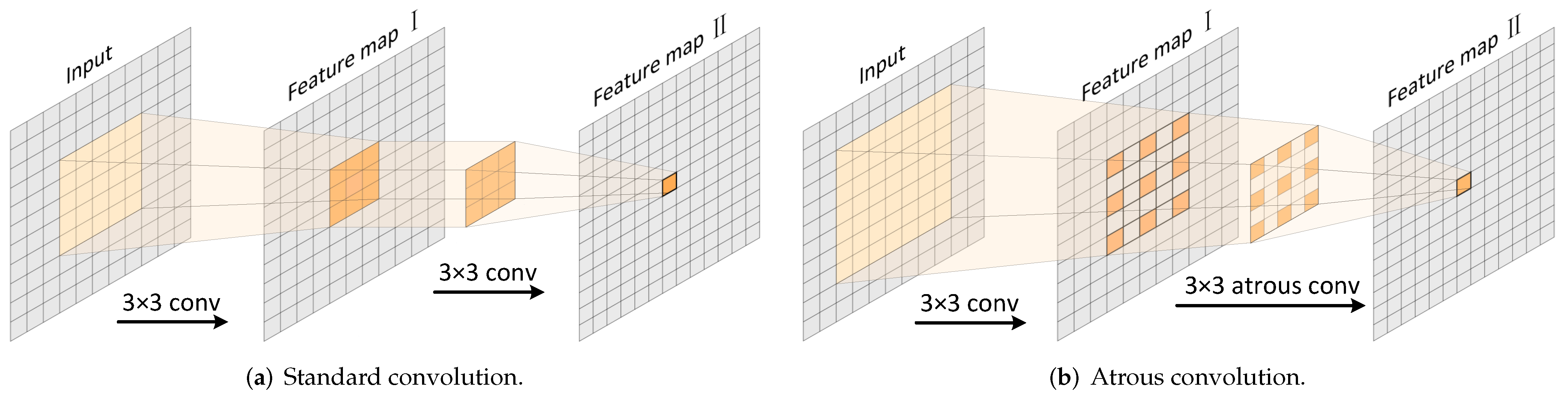

3.3. Semantic Segmentation Models

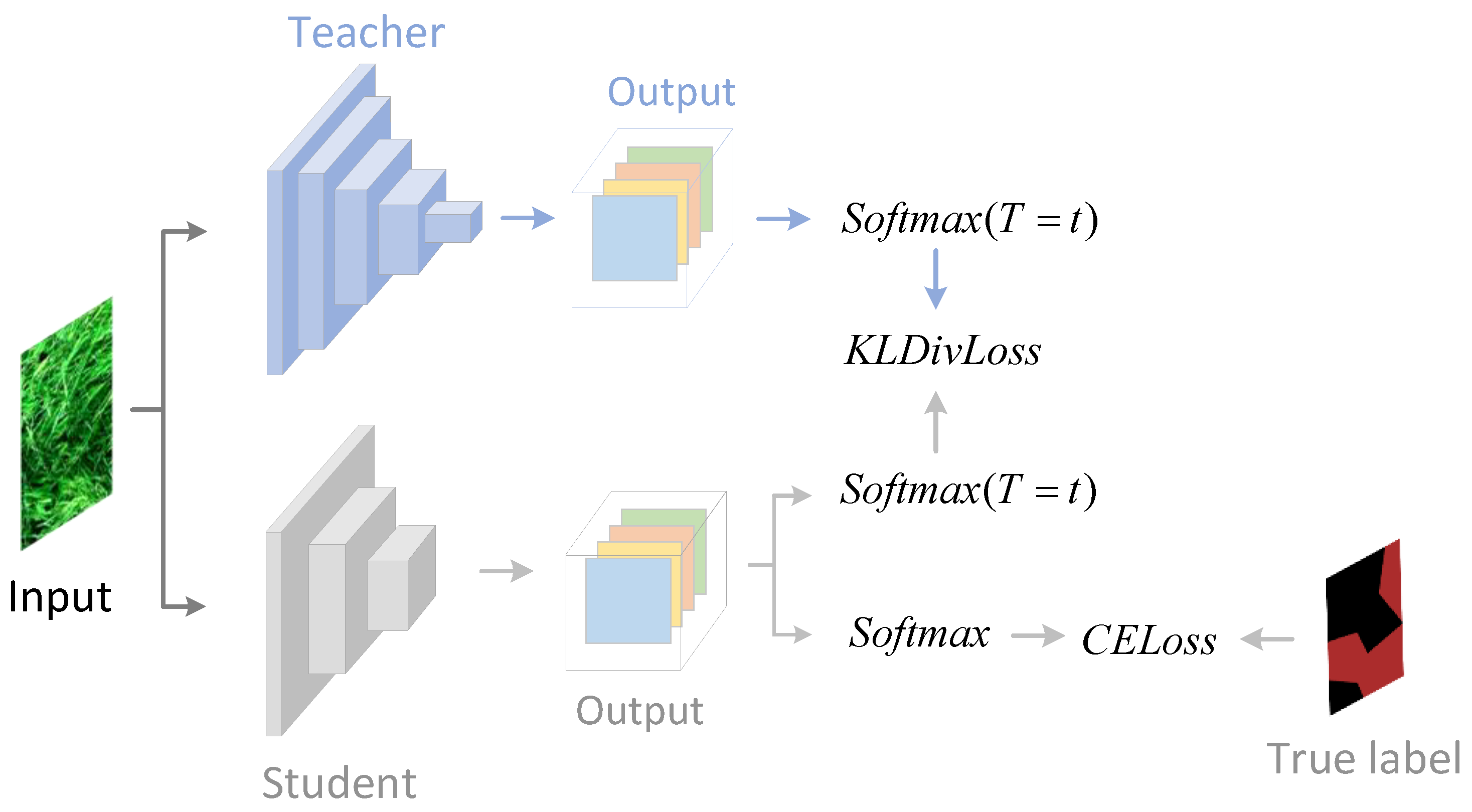

3.4. Knowledge Distillation

3.5. Transfer Learning Strategy

4. Results

4.1. Configuration of Experiment

4.2. GloAN Integrated into Semantic Segmentation Models

4.3. GloAN Integrated into Different Backbones

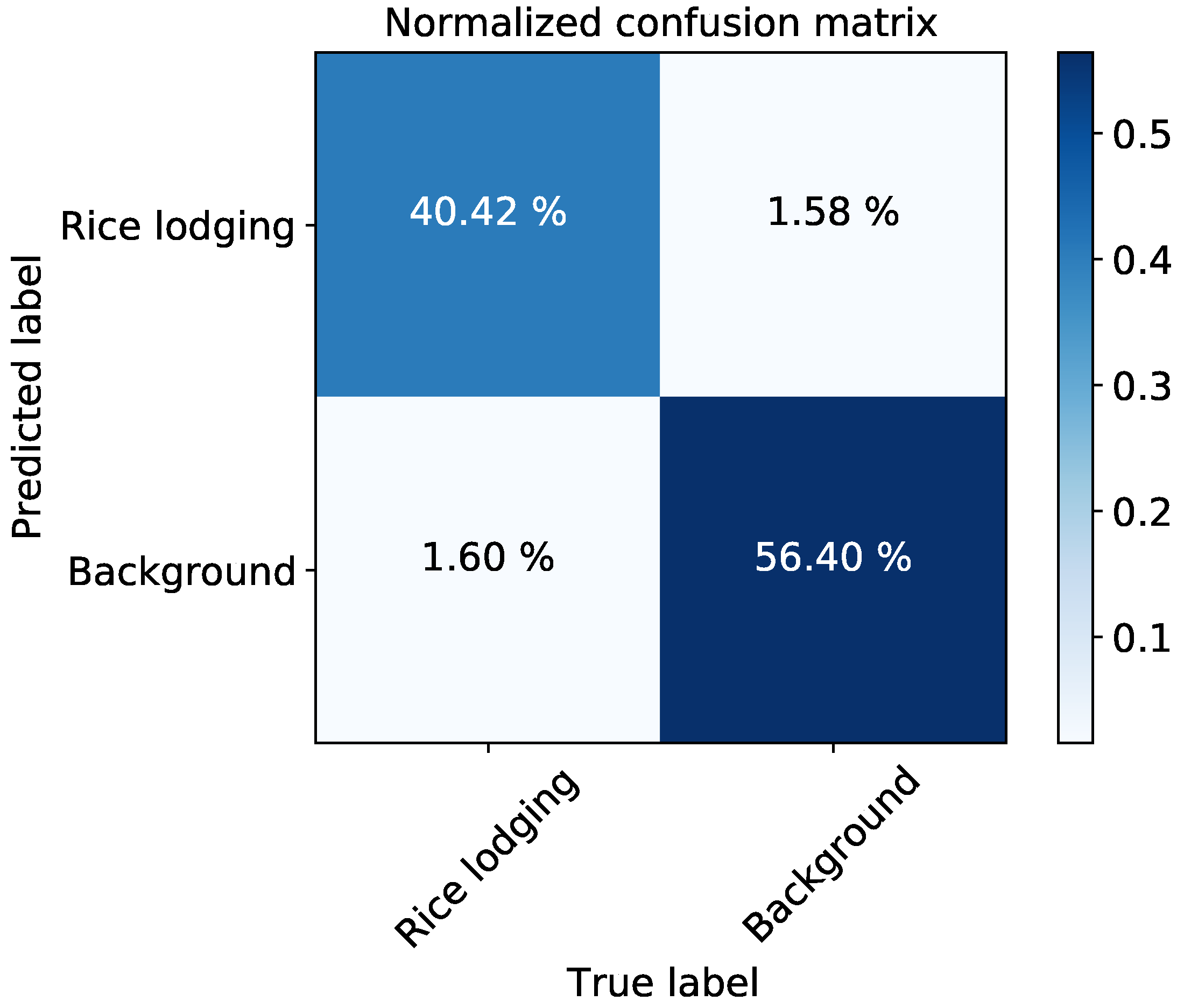

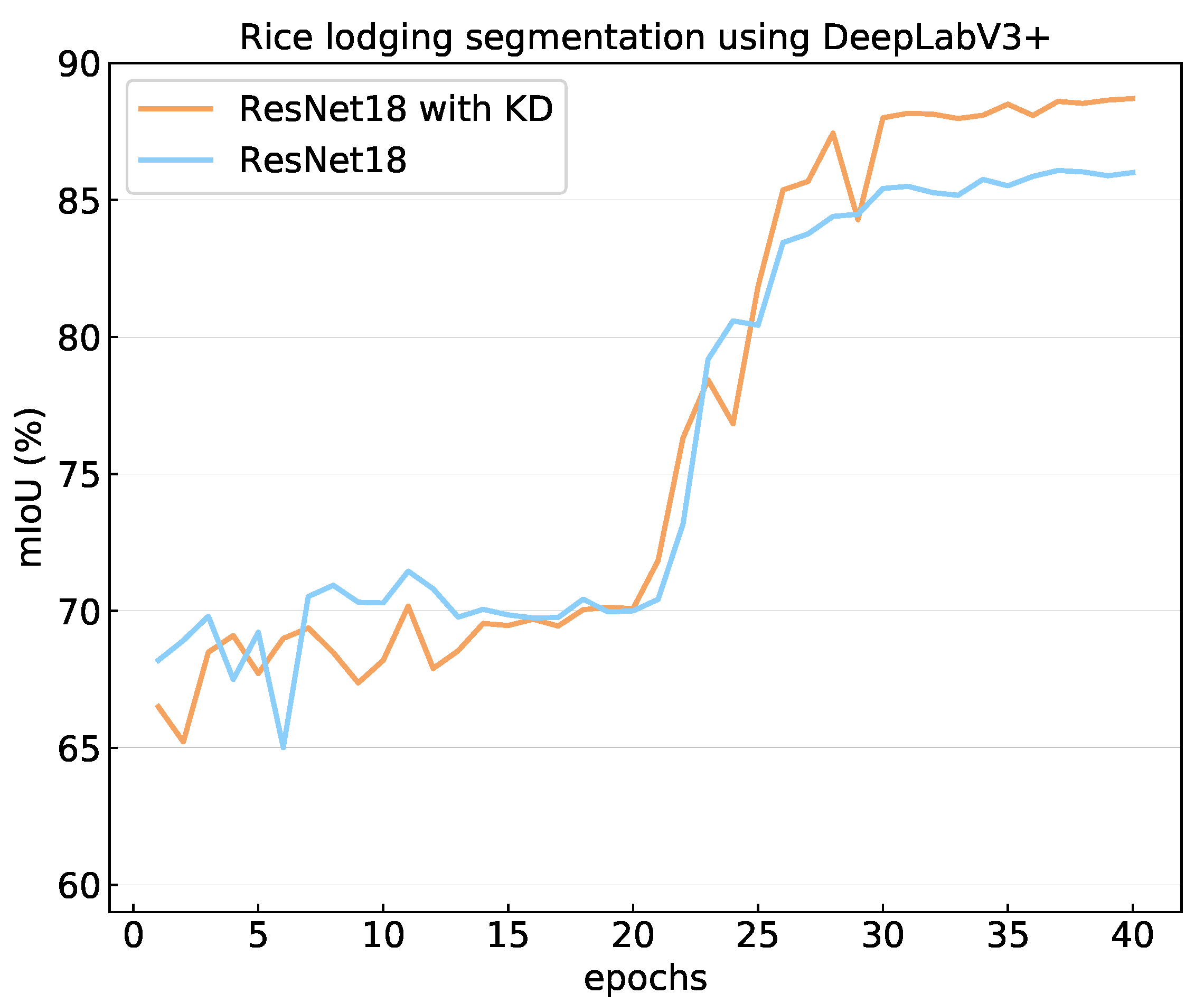

4.4. GloAN Combined with Knowledge Distillation

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Lang, Y.Z.; Yang, X.D.; Wang, M.E.; Zhu, Q.S. Effects of lodging at different filling stages on rice yield and grain quality. Rice Sci. 2012, 19, 315–319.

2. Setter, T.L.; Laureles, E.V.; Mazaredo, A.M. Lodging reduces yield of rice by self-shading and reductions in canopy photosynthesis. Field Crops Res. 1997, 49, 95–106.

3. Yang, M.D.; Boubin, J.G.; Tsai, H.P.; Tseng, H.H.; Hsu, Y.C.; Stewart, C.C. Adaptive autonomous UAV scouting for rice lodging assessment using edge computing with deep learning EDANet. Comput. Electron. Agric. 2020, 179, 105817.

4. Liu, T.; Li, R.; Zhong, X.; Jiang, M.; Jin, X.; Zhou, P.; Liu, S.; Sun, C.; Guo, W. Estimates of rice lodging using indices derived from UAV visible and thermal infrared images. Agric. For. Meteorol. 2018, 252, 144–154.

5. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

6. Yang, M.-D.; Tseng, H.-H.; Hsu, Y.-C.; Tsai, H.P. Semantic segmentation using deep learning with vegetation indices for rice lodging identification in multi-date UAV visible images. Remote Sens. 2020, 12, 633.

7. Zhang, D.; Ding, Y.; Chen, P.; Zhang, X.; Pan, Z.; Liang, D. Automatic extraction of wheat lodging area based on transfer learning method and deeplabv3+ network. Comput. Electron. Agric. 2020, 179, 105845.

8. Zhang, Z.; Flores, P.; Igathinathane, C.; Naik, D.L.; Kiran, R.; Ransom, J.K. Wheat lodging detection from UAS imagery using machine learning algorithms. Remote Sens. 2020, 12, 1838.

9. Hinton, G.; Vinyals, O.; Dean, J. Distilling the knowledge in a neural network. arXiv 2015, arXiv:1503.02531.

10. Wang, F.; Jiang, M.; Qian, C.; Yang, S.; Li, C.; Zhang, H.; Wang, X.; Tang, X. Residual attention network for image classification. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 3156–3164.

11. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7131–7141.

12. Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

13. Li, X.; Pan, J.; Xie, F.; Zeng, J.; Li, Q.; Huang, X.; Liu, D.; Wang, X. Fast and accurate green pepper detection in complex backgrounds via an improved Yolov4-tiny model. Comput. Electron. Agric. 2021, 191, 106503.

14. Olivas, S.E.; Torrey, L.; Shavlik, J. Transfer learning. In Handbook of Research on Machine Learning Applications and Trends: Algorithms, Methods and Techniques; Information Science Reference: Hershey, PA, USA; IGI Global: Hershey, PA, USA, 2010; pp. 242–264.

15. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

16. Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

17. Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

18. Islam, M.S.; Peng, S.; Visperas, R.M.; Ereful, N.; Bhuiya, M.S.; Julfiquar, A.W. Lodging-related morphological traits of hybrid rice in a tropical irrigated ecosystem. Field Crops Res. 2007, 101, 240–248.

19. Tomar, V.; Mandal, V.P.; Srivastava, P.; Patairiya, S.; Singh, K.; Ravisankar, N.; Subash, N.; Kumar, P. Rice Equivalent Crop Yield Assessment Using MODIS Sensors’ Based MOD13A1-NDVI Data. IEEE Sens. J. 2014, 14, 3599–3605.

20. Keller, M.; Karutz, C.; Schmid, J.E.; Stamp, P.; Winzeler, M.; Keller, B.; Messmer, M.M. Quantitative trait loci for lodging resistance in a segregating wheat × spelt population. Theor. Appl. Genet. 1999, 98, 1171–1182.

21. Wen, D.; Xianguo, Q.; Yu, Y. Effects of applying organic fertilizer on rice lodging resistance and yield. Hunan Acad. Agric. Sci. 2010, 11, 98–101.

22. Jie, L.; Hongcheng, Z.; Jinlong, G.; Yong, C.; Qigen, D.; Zhongyang, H.; Ke, X.; Haiyan, W. Effects of different planting methods on the culm lodging resistance of super rice. Sci. Agric. Sin. 2011, 44, 2234–2243.

23. Okuno, A.; Hirano, K.; Asano, K.; Takase, W.; Masuda, R.; Morinaka, Y.; Ueguchi-Tanaka, M.; Kitano, H.; Matsuoka, M. New approach to increasing rice lodging resistance and biomass yield through the use of high gibberellin producing varieties. PLoS ONE 2014, 9, e86870.

24. Chen, J.; Chen, W.; Zeb, A.; Yang, S.; Zhang, D. Lightweight Inception Networks for the Recognition and Detection of Rice Plant Diseases. IEEE Sens. J. 2022, 22, 14628–14638.

25. Ampatzidis, Y.; Partel, V.; Meyering, B.; Albrecht, U. Citrus rootstock evaluation utilizing UAV-based Remote Sensing and artificial intelligence. Comput. Electron. Agric. 2019, 164, 104900.

26. Radoglou-Grammatikis, P.; Sarigiannidis, P.; Lagkas, T.; Moscholios, I. A compilation of UAV applications for Precision Agriculture. Comput. Netw. 2020, 172, 107148.

27. Song, Z.; Zhang, Z.; Yang, S.; Ding, D.; Ning, J. Identifying Sunflower Lodging based on image fusion and deep semantic segmentation with UAV Remote Sensing Imaging. Comput. Electron. Agric. 2020, 179, 105812.

28. Wilke, N.; Siegmann, B.; Klingbeil, L.; Burkart, A.; Kraska, T.; Muller, O.; van Doorn, A.; Heinemann, S.; Rascher, U. Quantifying lodging percentage and lodging severity using a UAV-based canopy height model combined with an objective threshold approach. Remote Sens. 2019, 11, 515.

29. Zhao, X.; Yuan, Y.; Song, M.; Ding, Y.; Lin, F.; Liang, D.; Zhang, D. Use of unmanned aerial vehicle imagery and deep learning unet to extract rice lodging. Sensors 2019, 19, 3859.

30. Apolo-Apolo, O.E.; Martínez-Guanter, J.; Egea, G.; Raja, P.; Pérez-Ruiz, M. Deep learning techniques for estimation of the yield and size of citrus fruits using a UAV. Eur. J. Agron. 2020, 115, 126030.

31. Kitano, B.T.; Mendes, C.C.; Geus, A.R.; Oliveira, H.C.; Souza, J.R. Corn plant counting using deep learning and UAV images. IEEE Geosci. Remote Sens. Lett. 2019, 1–5.

32. Rensink, R.A. The dynamic representation of scenes. Visual Cognit. 2000, 7, 17–42.

33. Zagoruyko, S.; Komodakis, N. Paying more attention to attention: Improving the performance of convolutional neural networks via attention transfer. arXiv 2016, arXiv:1612.03928.

34. Zhang, X.; Wang, T.; Qi, J.; Lu, H.; Wang, G. Progressive Attention Guided Recurrent Network for Salient Object Detection. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, Salt Lake City, UT, USA, 18–22 June 2018; pp. 714–722.

35. Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual Attention Network for Scene Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2019, Long Beach, CA, USA, 16–20 June 2019; pp. 3146–3154.

36. Li, X.; Wang, W.; Hu, X.; Yang, J. Selective kernel networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2019, Long Beach, CA, USA, 16–20 June 2019; pp. 510–519.

37. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

38. Xie, S.; Girshick, R.; Dollar, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 1492–1500.

39. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

40. Xie, J.; Shuai, B.; Hu, J.-F.; Lin, J.; Zheng, W.-S. Improving Fast Segmentation with Teacher-student Learning. arXiv 2018, arXiv:1810.08476.

41. Shu, C.; Liu, Y.; Gao, J.; Yan, Z.; Shen, C. Channel-Wise Knowledge Distillation for Dense Prediction. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision, ICCV 2021, Montreal, QC, Canada, 10–17 October 2021; pp. 5311–5320.

42. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is All you Need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.

43. Wang, W.; Xie, E.; Li, X.; Fan, D.-P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. Pyramid vision transformer: A versatile backbone for dense prediction without convolutions. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision, ICCV 2021, Montreal, QC, Canada, 10–17 October 2021; pp. 568–578.

44. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision, ICCV 2021, Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

45. Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient estimation of word representations in vector space. arXiv 2013, arXiv:1301.3781.

46. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

47. Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

48. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

49. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention, MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; pp. 234–241.

50. Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

51. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. Imagenet Large Scale Visual Recognition Challenge. Int. J. Comput. Vision 2015, 115, 211–252.

52. Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollar, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the 13th European Conference on Computer Vision, ECCV 2014, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

53. Hariharan, B.; Arbeláez, P.; Bourdev, L.; Maji, S.; Malik, J. Semantic contours from inverse detectors. In Proceedings of the IEEE International Conference on Computer Vision, ICCV 2011, Barcelona, Spain, 6–13 November 2011; pp. 991–998.

54. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. Pytorch: An imperative style, high-performance deep learning library. In Advances in Neural Information Processing Systems 32: Annual Conference on Neural Information Processing Systems 2019, NeurIPS 2019, Vancouver, BC, Canada, 8–14 December 2019.

| Model | Parameters | GFLOPs | mIoU |
|---|---|---|---|
| SegNet * [17] | 25.63M | 135.36 | 52.29% |
| U-Net * [49] | 28.04M | 196.89 | 56.92% |
| DeepLabV3+ * [16] | 20.16M | 105.98 | 30.41% |
| SegNet [17] | 25.63M | 135.36 | 83.91% |
| U-Net [49] | 28.04M | 196.89 | 86.61% |
| DeepLabV3+ [16] | 20.16M | 105.98 | 91.16% |
| SegNet [17] + GloAN | 25.82M | 136.18 | 87.71% |
| U-Net [49] + GloAN | 28.23M | 197.72 | 90.41% |
| DeepLabV3+ [16] + GloAN | 20.35M | 106.70 | 92.85% |
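The tables report mean intersection-over-union (mIoU). Under the standard definition, each class c has IoU_c = TP / (TP + FP + FN), and mIoU is the average over classes. The sketch below computes it from a confusion matrix in plain Python; the paper's exact evaluation code, class set and averaging conventions are not given in this section, so the function name `mean_iou` and the two-class example are assumptions.

```python
def mean_iou(conf):
    """Mean intersection-over-union (mIoU) from a square confusion matrix.

    conf[i][j] counts pixels whose ground-truth class is i and whose
    predicted class is j. For each class c, IoU_c = TP / (TP + FP + FN).
    """
    n = len(conf)
    ious = []
    for c in range(n):
        tp = conf[c][c]
        fn = sum(conf[c]) - tp                        # row c: class-c pixels predicted as another class
        fp = sum(conf[r][c] for r in range(n)) - tp   # column c: other pixels predicted as class c
        denom = tp + fp + fn
        if denom > 0:  # skip classes absent from both ground truth and prediction
            ious.append(tp / denom)
    return sum(ious) / len(ious) if ious else float("nan")


# Hypothetical 2-class example: 0 = background, 1 = lodged rice.
conf = [[900, 50],
        [30, 20]]
print(round(mean_iou(conf), 4))  # 0.5592
```

Pixel accuracy on this toy matrix is (900 + 20) / 1000 = 92%, yet mIoU is only about 56%, because the small lodged class is segmented poorly; mIoU therefore penalizes errors on minority classes more heavily than pixel accuracy does.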

| Backbone | Parameters | GFLOPs | mIoU |
|---|---|---|---|
| ResNeXt-101 [38] | 105.63M | 111.36 | 92.66% |
| Xception [47] | 37.64M | 65.06 | 87.34% |
| VGG-16 [46] | 20.16M | 105.98 | 91.16% |
| ResNet-18 [39] | 17.12M | 31.77 | 86.07% |
| MobileNetV2 [48] | 5.81M | 26.39 | 83.92% |
| Xception [47] + GloAN | 38.61M | 66.67 | 90.90% |
| VGG-16 [46] + GloAN | 20.35M | 106.70 | 92.85% |
| ResNet-18 [39] + GloAN | 17.50M | 33.09 | 89.30% |
| MobileNetV2 [48] + GloAN | 5.94M | 27.00 | 85.66% |
| ResNet-18 * | 17.12M | 31.77 | 88.70% |
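The cost of adding GloAN can be read directly off the backbone table. The short script below is a convenience sketch (the names `baseline`, `with_gloan` and `gloan_overhead` are illustrative) that recomputes, from the table values only, the extra parameters, their relative increase, the extra GFLOPs and the mIoU gain for each backbone.

```python
# (parameters in M, GFLOPs, mIoU in %) copied from the backbone table
baseline = {
    "Xception": (37.64, 65.06, 87.34),
    "VGG-16": (20.16, 105.98, 91.16),
    "ResNet-18": (17.12, 31.77, 86.07),
    "MobileNetV2": (5.81, 26.39, 83.92),
}
with_gloan = {
    "Xception": (38.61, 66.67, 90.90),
    "VGG-16": (20.35, 106.70, 92.85),
    "ResNet-18": (17.50, 33.09, 89.30),
    "MobileNetV2": (5.94, 27.00, 85.66),
}


def gloan_overhead(name):
    """Return (extra params in M, param increase in %, extra GFLOPs, mIoU gain in points)."""
    p0, f0, m0 = baseline[name]
    p1, f1, m1 = with_gloan[name]
    return (round(p1 - p0, 2), round(100 * (p1 - p0) / p0, 2),
            round(f1 - f0, 2), round(m1 - m0, 2))


for name in baseline:
    print(name, gloan_overhead(name))
```

For VGG-16, for instance, the overhead is 0.19M parameters (about 0.94%) and 0.72 GFLOPs for a 1.69-point mIoU gain. The same table also shows that ResNet-18 + GloAN uses about 17% of the parameters of ResNeXt-101 (17.50M vs. 105.63M) and about 30% of its GFLOPs (33.09 vs. 111.36), at an mIoU 3.36 points lower.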


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kang, G.; Wang, J.; Zeng, F.; Cai, Y.; Kang, G.; Yue, X. Lightweight Detection System with Global Attention Network (GloAN) for Rice Lodging. Plants 2023, 12, 1595. https://doi.org/10.3390/plants12081595
