Metrological Characterization of a Vision-Based System for Relative Pose Measurements with Fiducial Marker Mapping for Spacecrafts

Abstract

1. Introduction

- The effect of several potentially influencing parameters on the measurement errors and uncertainties of the satellite poses is analyzed, to highlight which parameters show the strongest numerical correlation with the obtained errors and/or uncertainties. Since these parameters can be adjusted through a proper selection and positioning of the fiducial markers on the satellite surface, the analysis aims to provide useful guidance for the design of future relative pose measurement systems.

- The bundle adjustment (BA) approach is well known and widely employed in robotics and computer vision. In the experimental verification of the BA approach applied to pose measurement with fiducial markers, the evaluated errors and uncertainties demonstrate the superior performance of the BA approach, also when compared with the numerical results reported in References [4,5,6,7,8].

- A more detailed uncertainty analysis of the 2D positions of the markers in the images is performed, taking into account more uncertainty sources than in Reference [8], such as the possible inaccuracy of the corner detection algorithm.

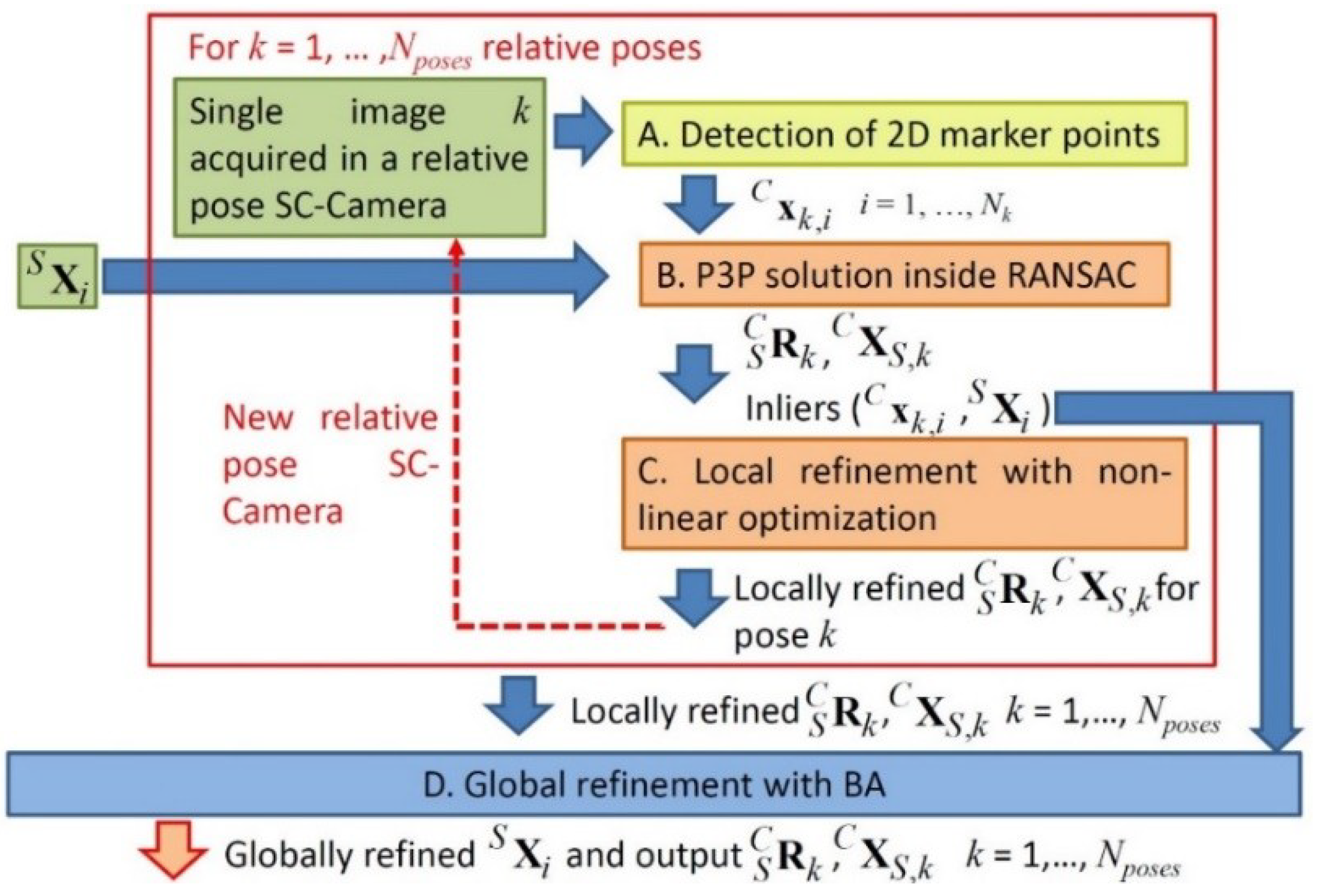

2. Measurement Algorithm

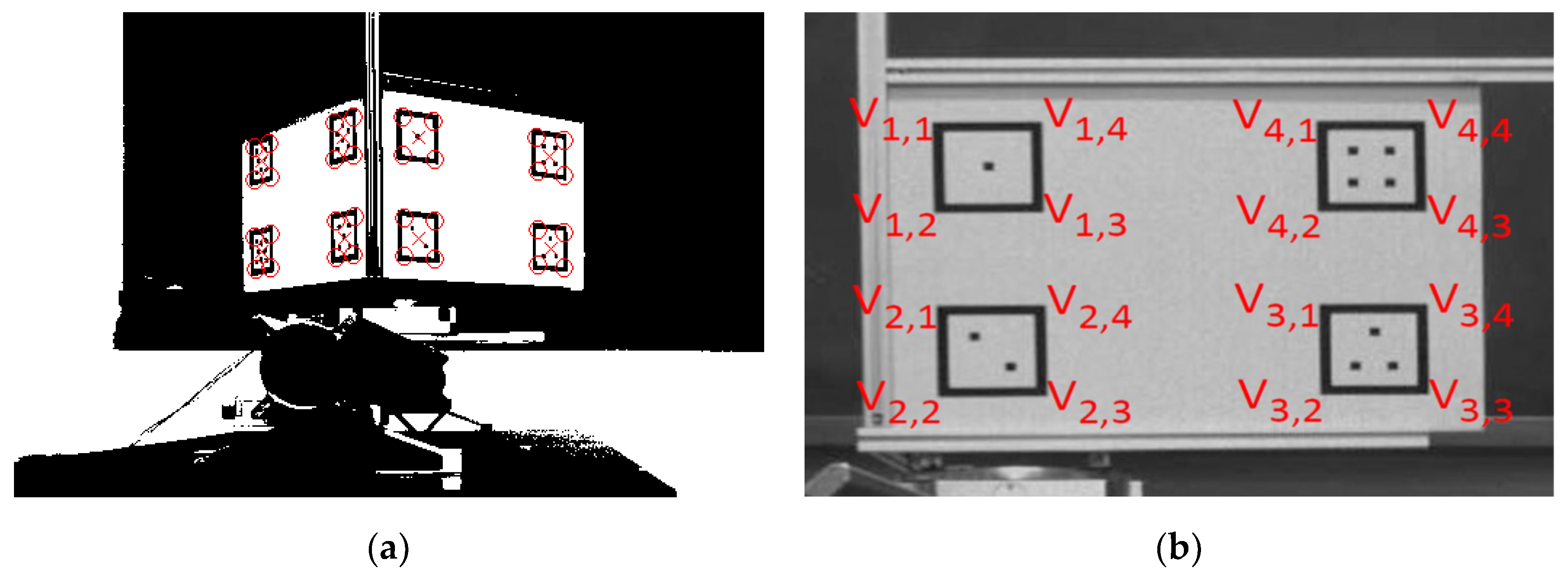

2.1. Step A: Detection of the 2D Marker Points

- the image binarization with a selected threshold, followed by the removal of all small connected regions having fewer than a set number of pixels; this number is set to 4 and is chosen according to the maximum distance of the observed markers and the noise level of the images. This removal reduces image clutter and the effect of noise, but could make the detection of the internal dots less reliable.

- the extraction of the internal contour of each square marker using the Moore-Neighbor tracing algorithm described in Reference [26]; for each detected boundary, its perimeter and the number of internal boundaries can be evaluated, and only the objects whose number of internal boundaries is lower than the maximum number of internal dots and whose dimensions are compatible with the markers are analyzed. In this way, each observed marker can be identified.

- polygonal simplification using the Douglas–Peucker algorithm, which Reference [27] calls “the most widely used high-quality curve simplification algorithm”. Each contour extracted in the previous step is a closed polygon with many vertices, and the Douglas–Peucker algorithm reduces it to a four-vertex polygon, allowing the detection of the four internal vertices of each square fiducial marker. The marker centroid is then calculated from these four vertices.
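The binarization and small-region removal of the first step of the list above can be sketched in Python with NumPy. This is a minimal illustration, not the authors' implementation: `binarize_and_clean` is a hypothetical name, the sketch assumes that the markers appear brighter than the threshold (the comparison would be inverted for dark markers), and it assumes 8-connected regions, as in the default of MATLAB's `bwareaopen` for 2D images.

```python
from collections import deque

import numpy as np

# 8-connected neighbourhood (assumption, as in MATLAB's bwareaopen default for 2-D images)
NEIGHBOURS = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]


def binarize_and_clean(image, threshold, min_pixels=4):
    """Binarize a grayscale image and drop connected regions with fewer than min_pixels pixels."""
    mask = np.asarray(image) > threshold          # assumes markers brighter than threshold
    keep = np.zeros_like(mask)
    seen = np.zeros_like(mask)
    rows, cols = mask.shape
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0, c0] or seen[r0, c0]:
                continue
            # breadth-first flood fill of one connected region
            region, queue = [], deque([(r0, c0)])
            seen[r0, c0] = True
            while queue:
                r, c = queue.popleft()
                region.append((r, c))
                for dr, dc in NEIGHBOURS:
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols and mask[rr, cc] and not seen[rr, cc]:
                        seen[rr, cc] = True
                        queue.append((rr, cc))
            if len(region) >= min_pixels:         # smaller regions are treated as noise
                for r, c in region:
                    keep[r, c] = True
    return keep
```

The explicit flood fill keeps the sketch dependency-free; for full-size images, a library labelling routine such as `scipy.ndimage.label` would be considerably faster.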
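The Moore-Neighbor boundary tracing of the second step of the list above can be sketched as follows. The function names are hypothetical, and the sketch makes simplifying assumptions: it traces the outer boundary of the first foreground region found in raster order, and it stops with a rule in the spirit of Jacob's stopping criterion (the start pixel is re-entered and the next move repeats the first one). To obtain the internal contour of a marker frame, it could be applied to the region enclosed by the frame. The perimeter is estimated as the length of the closed chain of boundary pixels, which is only one of several possible definitions.

```python
import numpy as np

# 8-neighbour offsets (row, col), clockwise on screen, starting from the west neighbour
OFFSETS = [(0, -1), (-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1)]


def _is_fg(mask, r, c):
    """True if (r, c) lies inside the image and is a foreground pixel."""
    return 0 <= r < mask.shape[0] and 0 <= c < mask.shape[1] and bool(mask[r, c])


def _moore_step(mask, p, b):
    """Scan the neighbours of p clockwise, starting after the backtrack pixel b.

    Returns the next boundary pixel and the new backtrack pixel
    (the background neighbour visited just before it)."""
    start = OFFSETS.index((b[0] - p[0], b[1] - p[1]))
    for k in range(1, 9):
        dr, dc = OFFSETS[(start + k) % 8]
        q = (p[0] + dr, p[1] + dc)
        if _is_fg(mask, *q):
            br, bc = OFFSETS[(start + k - 1) % 8]
            return q, (p[0] + br, p[1] + bc)
    return p, b  # no foreground neighbour: isolated pixel


def moore_trace(mask):
    """Trace the outer boundary of the first region found in raster order."""
    mask = np.asarray(mask, dtype=bool)
    fg = np.argwhere(mask)
    if fg.size == 0:
        return []
    s = (int(fg[0][0]), int(fg[0][1]))  # top-most, then left-most foreground pixel
    p, b = s, (s[0], s[1] - 1)          # the west neighbour of s is background
    boundary = [s]
    while True:
        q, b = _moore_step(mask, p, b)
        if q == p:
            return boundary            # single isolated pixel
        if p == s and len(boundary) > 1 and q == boundary[1]:
            return boundary[:-1]       # contour closed: drop the repeated start pixel
        boundary.append(q)
        p = q


def chain_perimeter(boundary):
    """Length of the closed chain through the boundary pixels (1 or sqrt(2) per step)."""
    if len(boundary) < 2:
        return 0.0
    pts = np.asarray(boundary + boundary[:1], dtype=float)
    steps = np.diff(pts, axis=0)
    return float(np.sum(np.hypot(steps[:, 0], steps[:, 1])))
```

For a filled 3×3 block, the trace returns the eight border pixels in clockwise order and a chain perimeter of 8 pixels.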
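The polygonal simplification and centroid computation of the third step of the list above can be sketched as follows. The closed contour is first split at two far-apart points, and the classical recursive Douglas–Peucker algorithm is then applied to each open chain; the split strategy, the tolerance `eps`, and the function names are assumptions. The text does not specify how the centroid is obtained from the four vertices. Here it is taken as the intersection of the diagonals, which, under a pinhole camera model, is the image of the center of the physical square.

```python
import numpy as np


def _dp_open(points, eps):
    """Recursive Douglas-Peucker on an open polyline; the endpoints are always kept."""
    if len(points) < 3:
        return [points[0], points[-1]]
    start, end = points[0], points[-1]
    seg = end - start
    seg_len = np.hypot(seg[0], seg[1])
    rel = points[1:-1] - start
    if seg_len == 0.0:
        dists = np.hypot(rel[:, 0], rel[:, 1])
    else:
        # perpendicular distance from the chord, via the 2D cross product
        dists = np.abs(seg[0] * rel[:, 1] - seg[1] * rel[:, 0]) / seg_len
    i = int(np.argmax(dists)) + 1          # index of the farthest point
    if dists[i - 1] <= eps:
        return [start, end]
    left = _dp_open(points[: i + 1], eps)
    right = _dp_open(points[i:], eps)
    return left[:-1] + right               # do not duplicate the split point


def simplify_closed(contour, eps=1.0):
    """Douglas-Peucker on a closed contour given as an (N, 2) array of ordered points."""
    pts = np.asarray(contour, dtype=float)
    centre = pts.mean(axis=0)
    a = int(np.argmax(np.hypot(*(pts - centre).T)))  # a point far from the centre
    pts = np.roll(pts, -a, axis=0)                    # start the chain at that point
    b = int(np.argmax(np.hypot(*(pts - pts[0]).T)))   # the point farthest from it
    chain1 = pts[: b + 1]
    chain2 = np.vstack([pts[b:], pts[:1]])
    verts = _dp_open(chain1, eps)[:-1] + _dp_open(chain2, eps)[:-1]
    return np.array(verts)


def diagonal_centroid(quad):
    """Intersection of the diagonals v0-v2 and v1-v3 of a quadrilateral."""
    v0, v1, v2, v3 = np.asarray(quad, dtype=float)
    d1, d2 = v2 - v0, v3 - v1
    # solve v0 + t*d1 = v1 + s*d2 for (t, s)
    t, _ = np.linalg.solve(np.column_stack([d1, -d2]), v1 - v0)
    return v0 + t * d1
```

With a pixel contour of an axis-aligned 10×10 square and `eps=1.0`, the sketch returns the four corners in contour order and a centroid at (5, 5).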

2.2. Step B: P3P Solution inside RANSAC

- two adjacent vertices v1,x and v1,y are considered;

- the algorithm verifies whether they are aligned with two vertices of marker 4 or of marker 2; suppose they are aligned with two vertices of marker 4;

- the algorithm evaluates which of the two is farther from the vertices of marker 4; suppose v1,x is farther; this means that v1,x could be v1,1 or v1,2, while v1,y could be v1,3 or v1,4;

- the other vertex v1,z adjacent to v1,x is considered;

- the algorithm evaluates whether v1,x or v1,z is nearer to the vertices of marker 2; suppose v1,x is nearer;

- in this case v1,x = v1,2, v1,y = v1,3, v1,z = v1,1, and the remaining vertex is identified as v1,4.
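The vertex-labelling procedure in the list above can be sketched for the branch in which an edge of marker 1 is aligned with marker 4. Several details are assumptions rather than the authors' choices: the function names, the collinearity tolerance `tol`, the use of the minimum vertex-to-vertex distance as the "farther/nearer" criterion, and the handling of the case in which v1,z is nearer to marker 2, which assumes the vertices are numbered cyclically, consistently with the outcome described in the text. The branch in which the edge is aligned with marker 2 is not described and is not handled.

```python
import numpy as np


def _min_dist(p, pts):
    """Smallest distance from image point p to a set of image points."""
    d = np.asarray(pts, dtype=float) - p
    return float(np.min(np.hypot(d[:, 0], d[:, 1])))


def _aligned(p, q, pts, tol=1.0):
    """True if at least two of pts lie within tol pixels of the line through p and q."""
    u = (q - p) / np.linalg.norm(q - p)
    d = np.asarray(pts, dtype=float) - p
    dist = np.abs(u[0] * d[:, 1] - u[1] * d[:, 0])
    return int(np.sum(dist < tol)) >= 2


def label_marker1(quad, marker2, marker4, tol=1.0):
    """Hypothetical helper: label the vertices of marker 1 as 1..4.

    quad: the four image vertices of marker 1 in cyclic order.
    Only the case of an edge aligned with marker 4 is handled."""
    quad = [np.asarray(v, dtype=float) for v in quad]
    for i in range(4):
        j = (i + 1) % 4                                # v_x, v_y: two adjacent vertices
        if not _aligned(quad[i], quad[j], marker4, tol):
            continue
        # v_x is the vertex of the edge farther from marker 4
        if _min_dist(quad[i], marker4) >= _min_dist(quad[j], marker4):
            ix, iy = i, j
        else:
            ix, iy = j, i
        iz = (2 * ix - iy) % 4                         # the other vertex adjacent to v_x
        iw = (ix + 2) % 4                              # the vertex opposite to v_x
        if _min_dist(quad[ix], marker2) <= _min_dist(quad[iz], marker2):
            # case described in the text: v_x = v1,2, v_y = v1,3, v_z = v1,1
            order = {1: iz, 2: ix, 3: iy, 4: iw}
        else:
            # assumed symmetric case (cyclic numbering): v_z = v1,2, v_x = v1,1
            order = {1: ix, 2: iz, 3: iw, 4: iy}
        return {label: quad[k] for label, k in order.items()}
    return None
```

For a unit layout with marker 4 to the right of marker 1 and marker 2 below it, the sketch reproduces the described assignment v1,x = v1,2, v1,y = v1,3, v1,z = v1,1.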

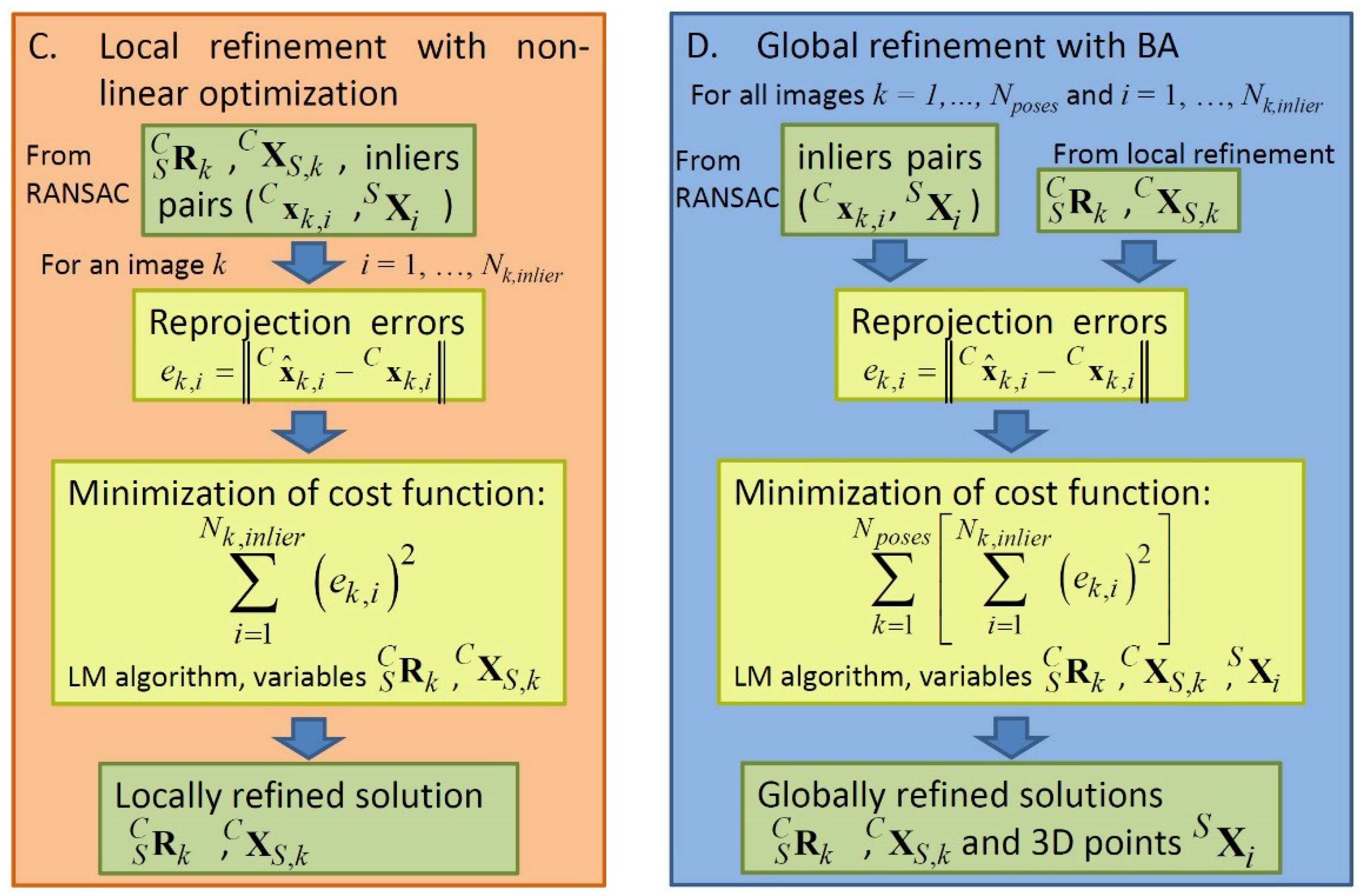

2.3. Step C: Local Refinement with Non-Linear Optimization

2.4. Step D: Global Refinement with BA

3. Uncertainty Evaluation

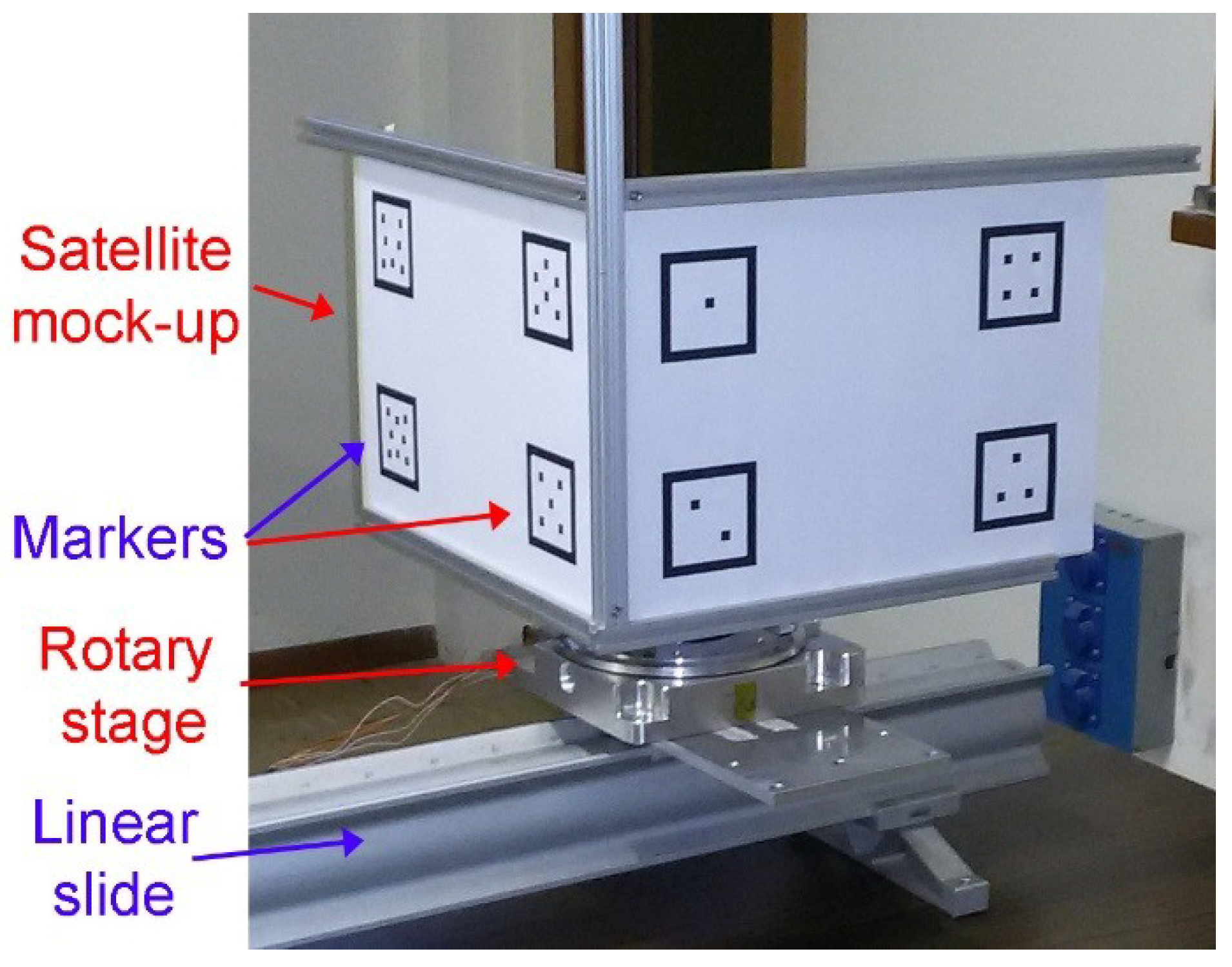

4. Experimental Set-Up

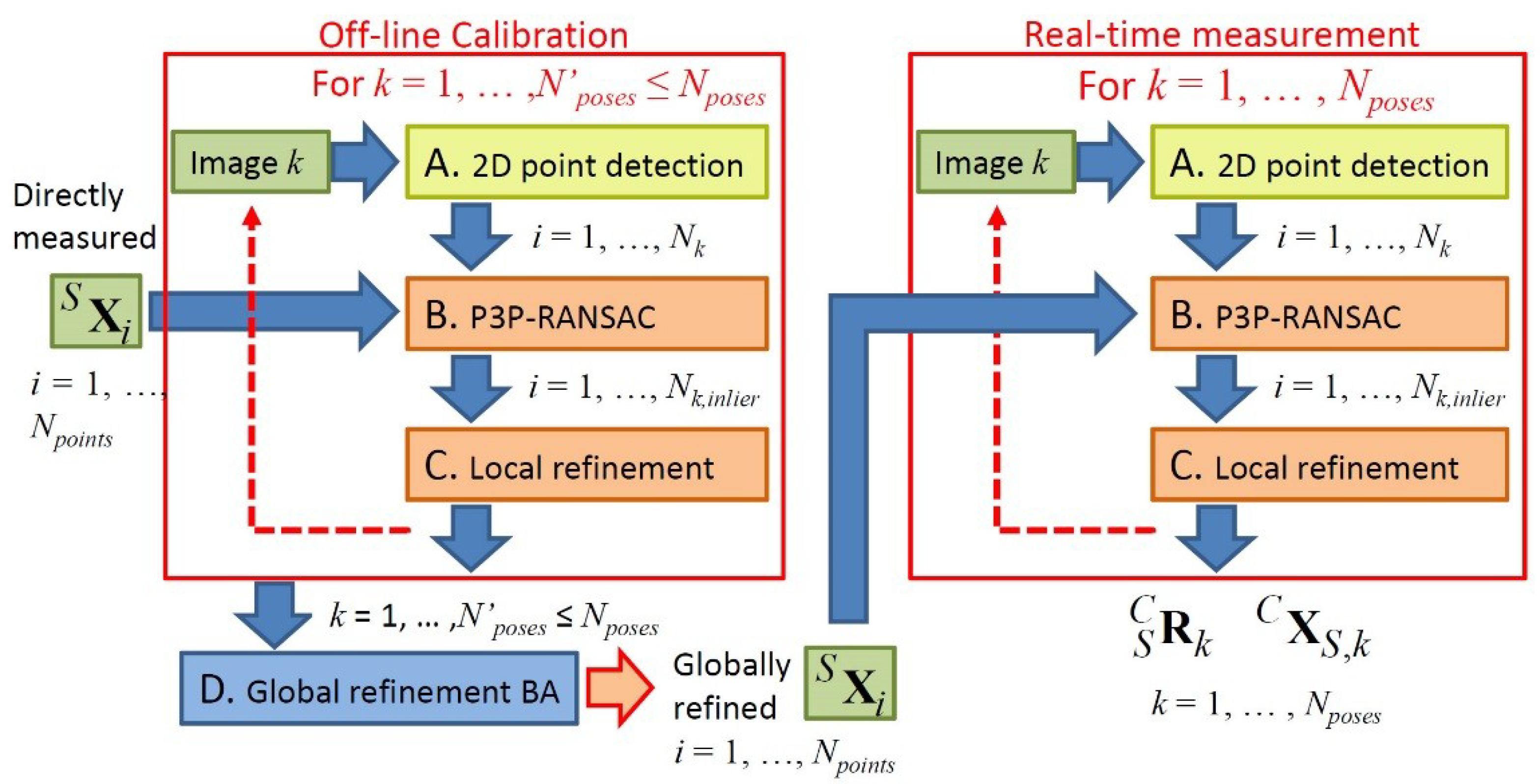

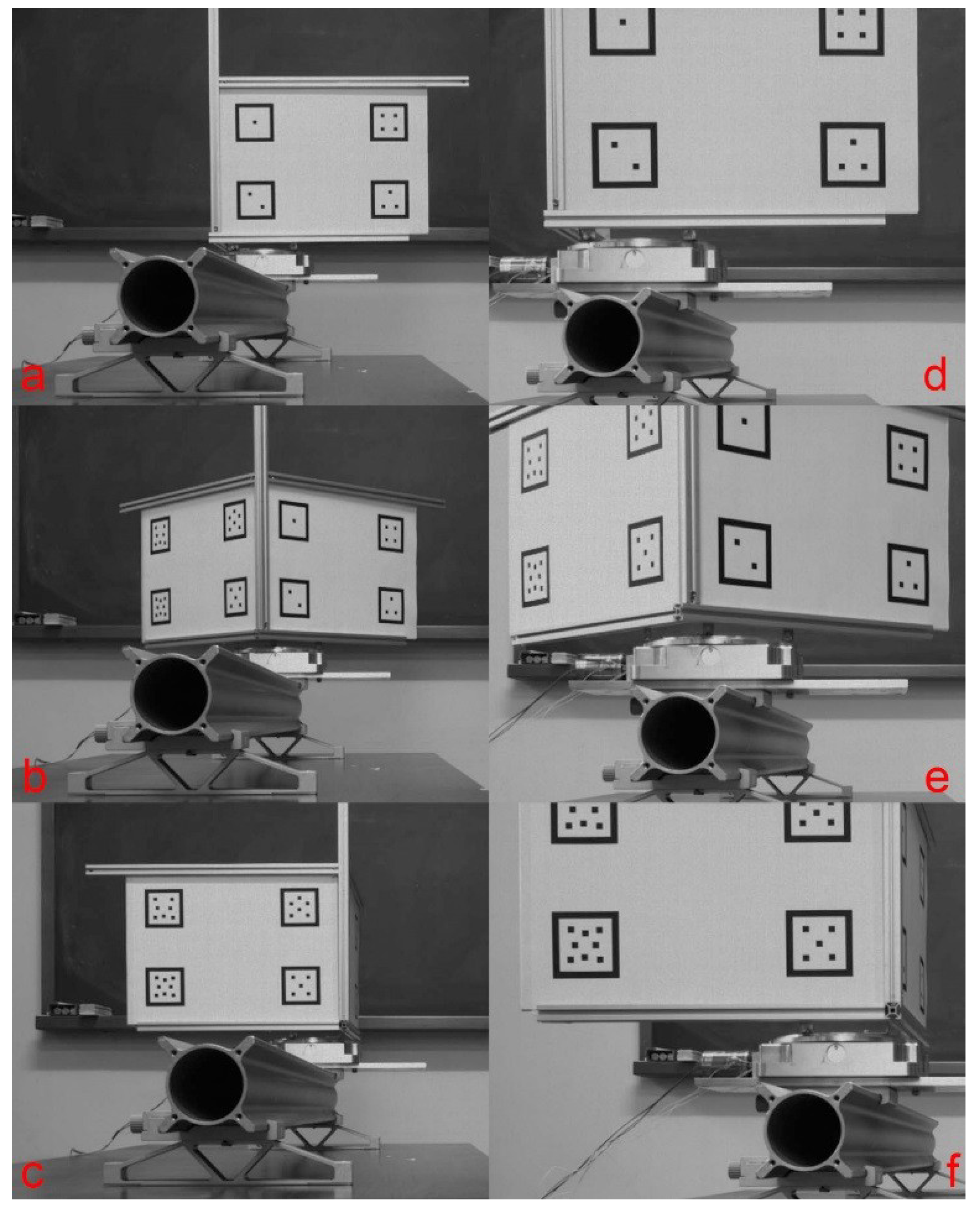

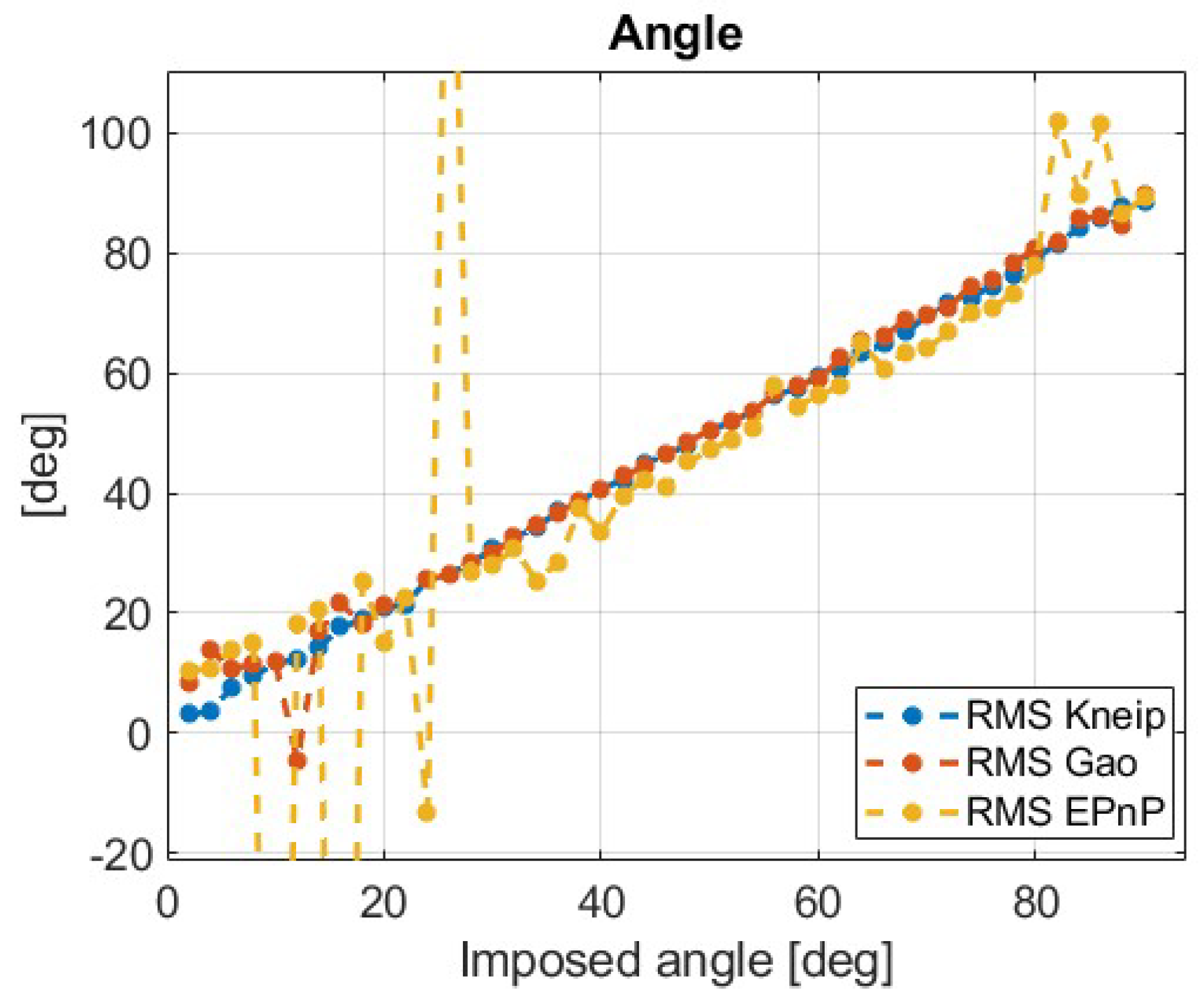

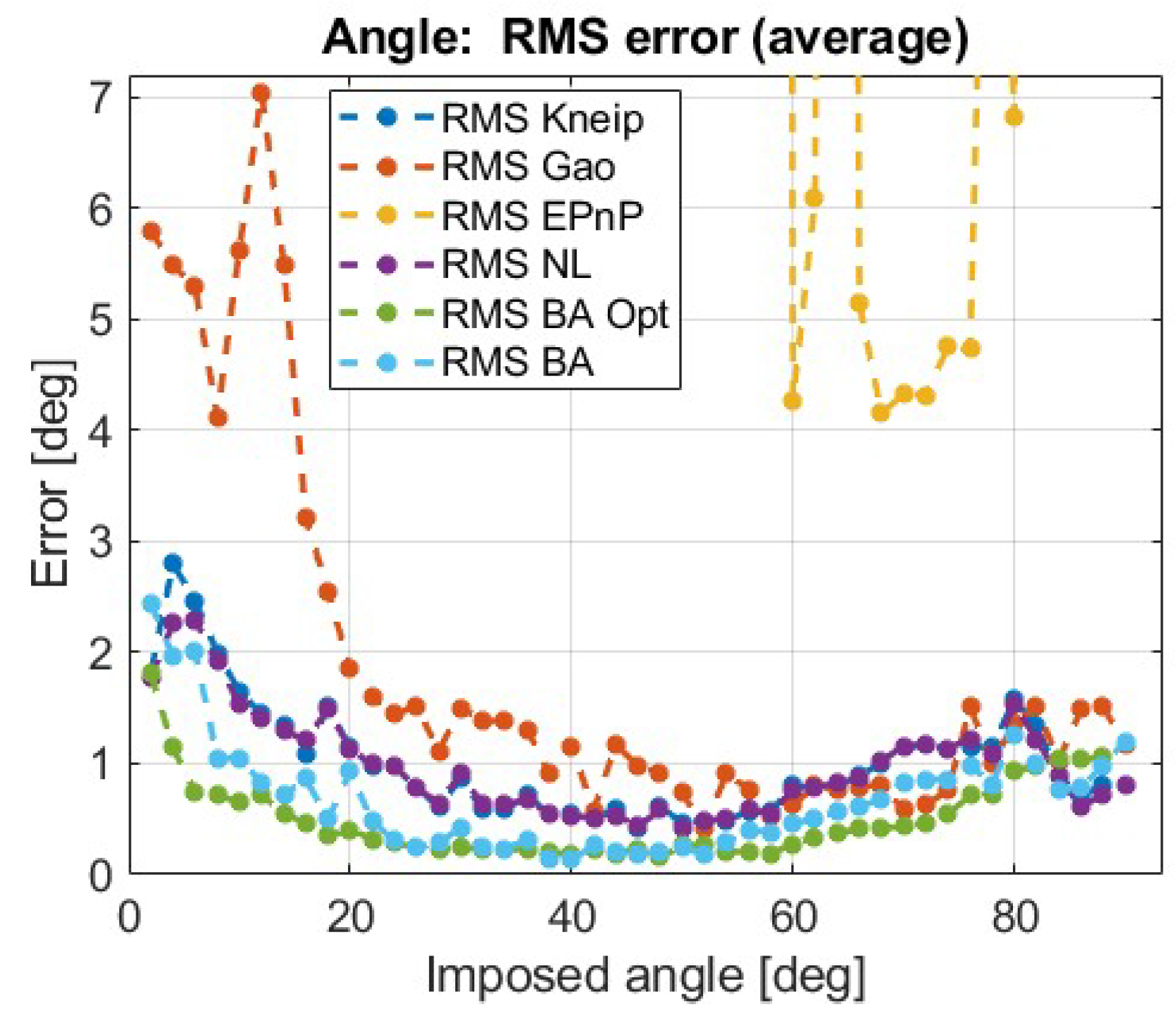

5. Results: Preliminary Method Comparison

- after the steps A–C, i.e., after the local refinement with the non-linear minimization (purple line);

- after the steps A–D, i.e., after the global refinement with the BA approach according to the first implementation (light blue line); in this case, the BA approach is applied considering all the available relative poses Nposes;

- after the global refinement according to the second implementation of the BA approach (green line).

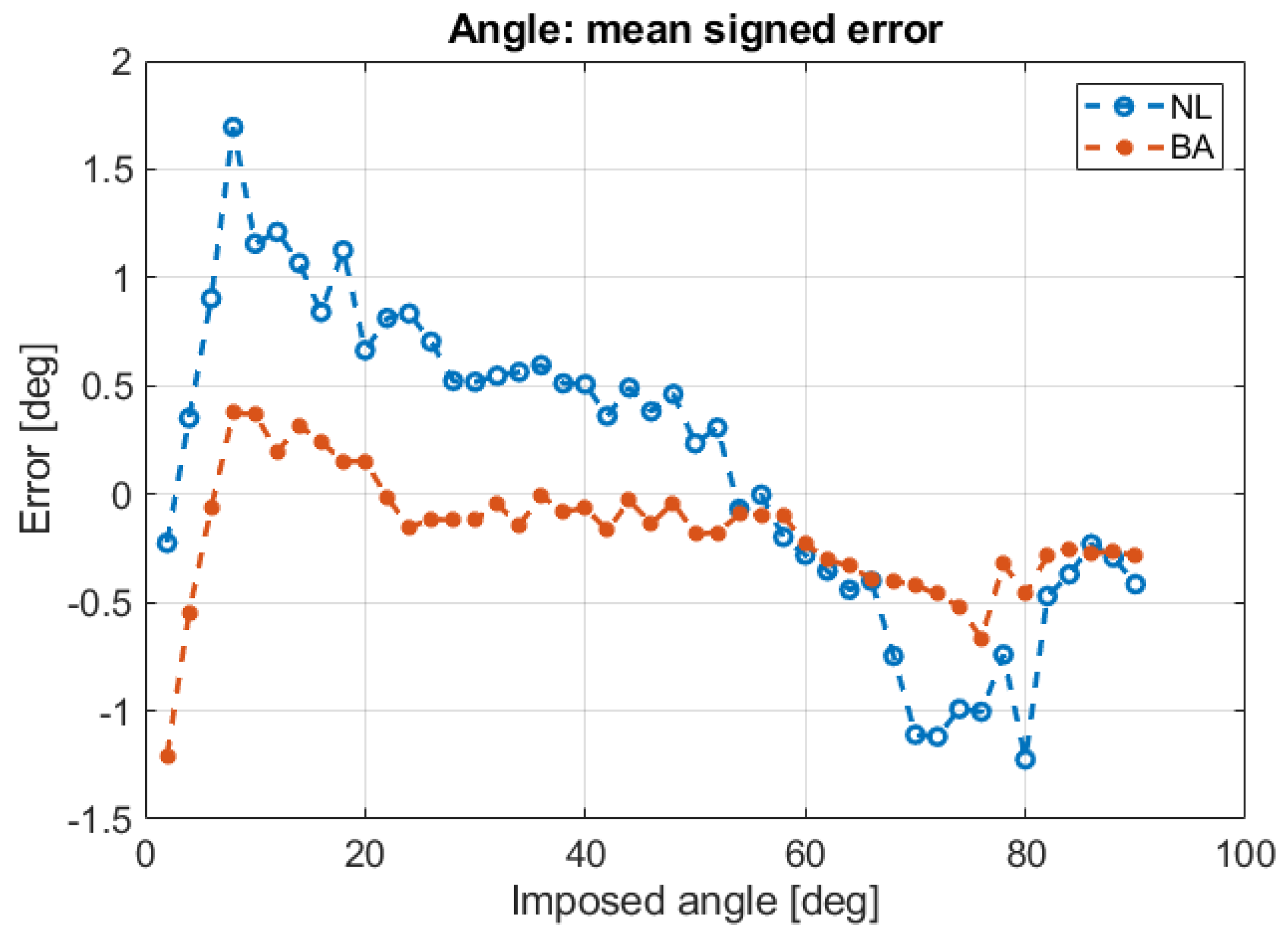

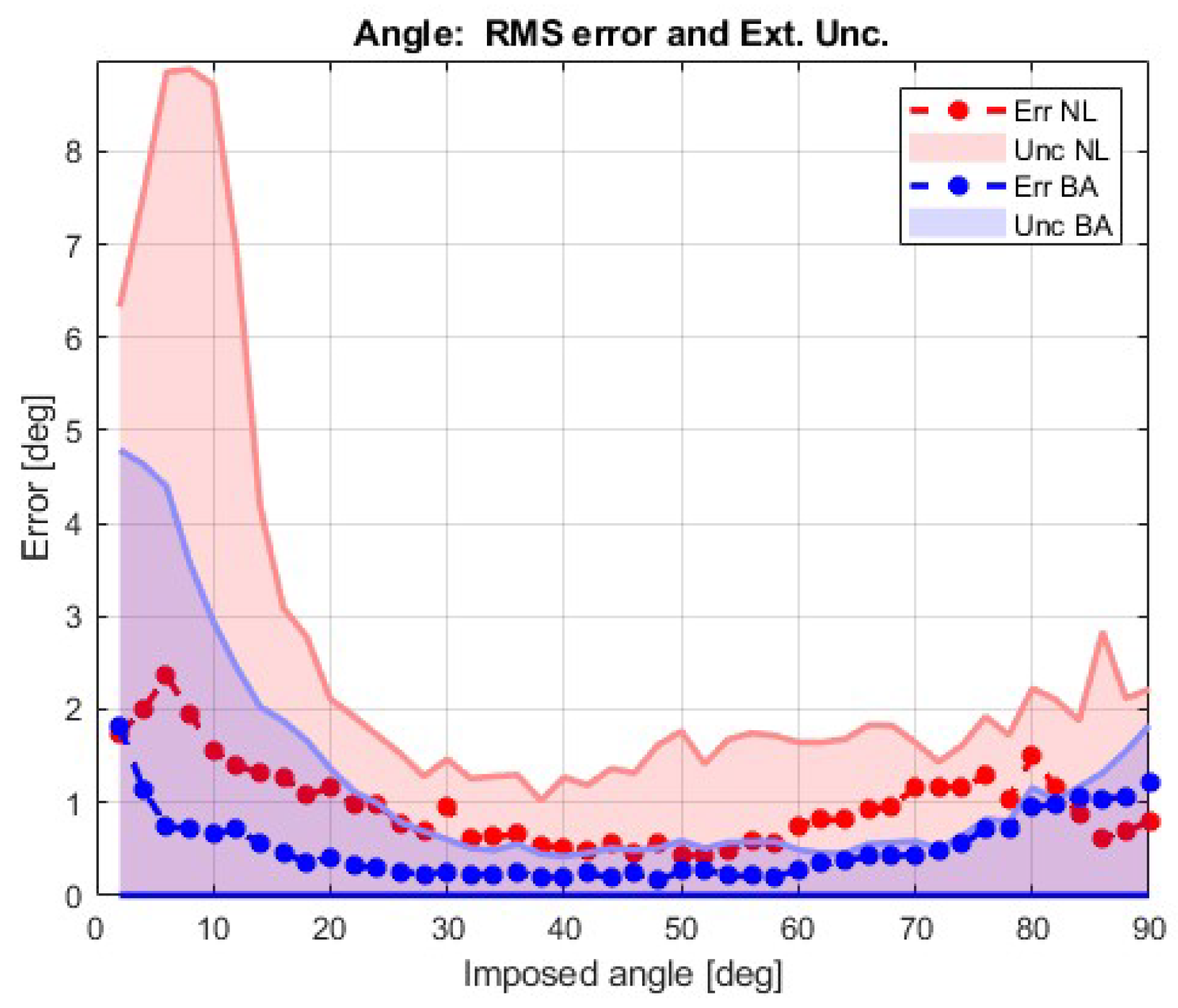

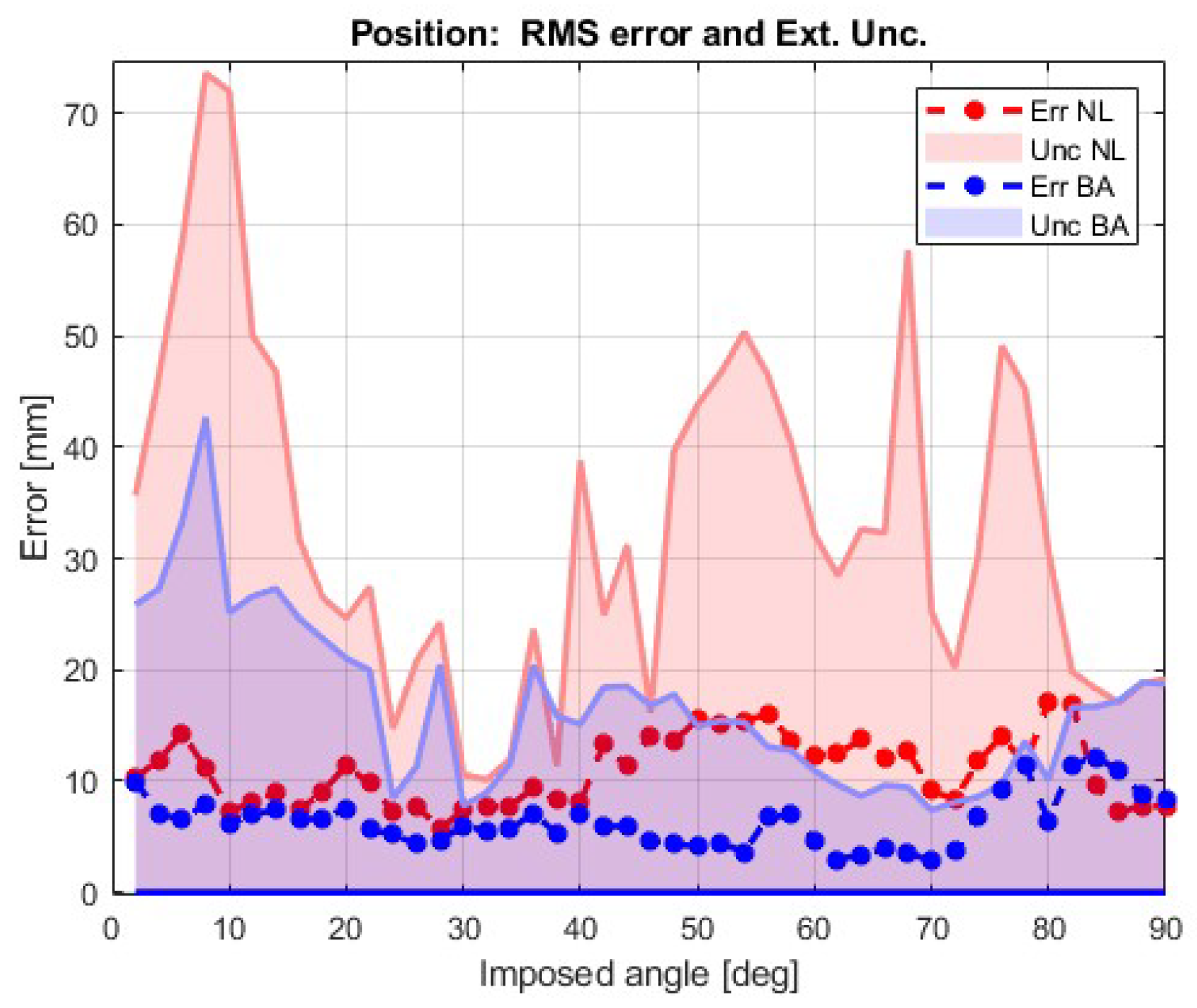

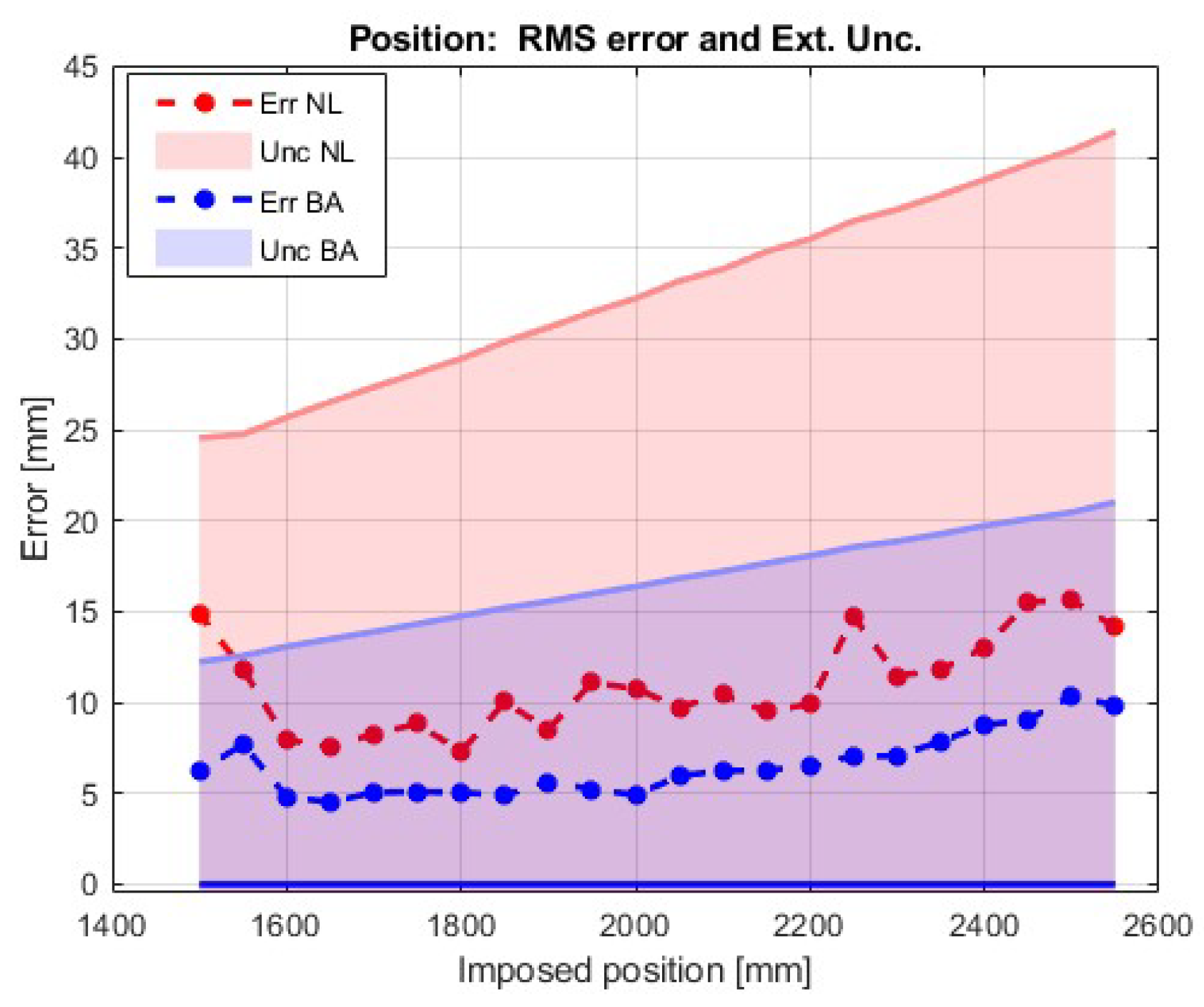

6. Results: Effect of the Bundle Adjustment (BA)

7. Results: Influencing Parameters

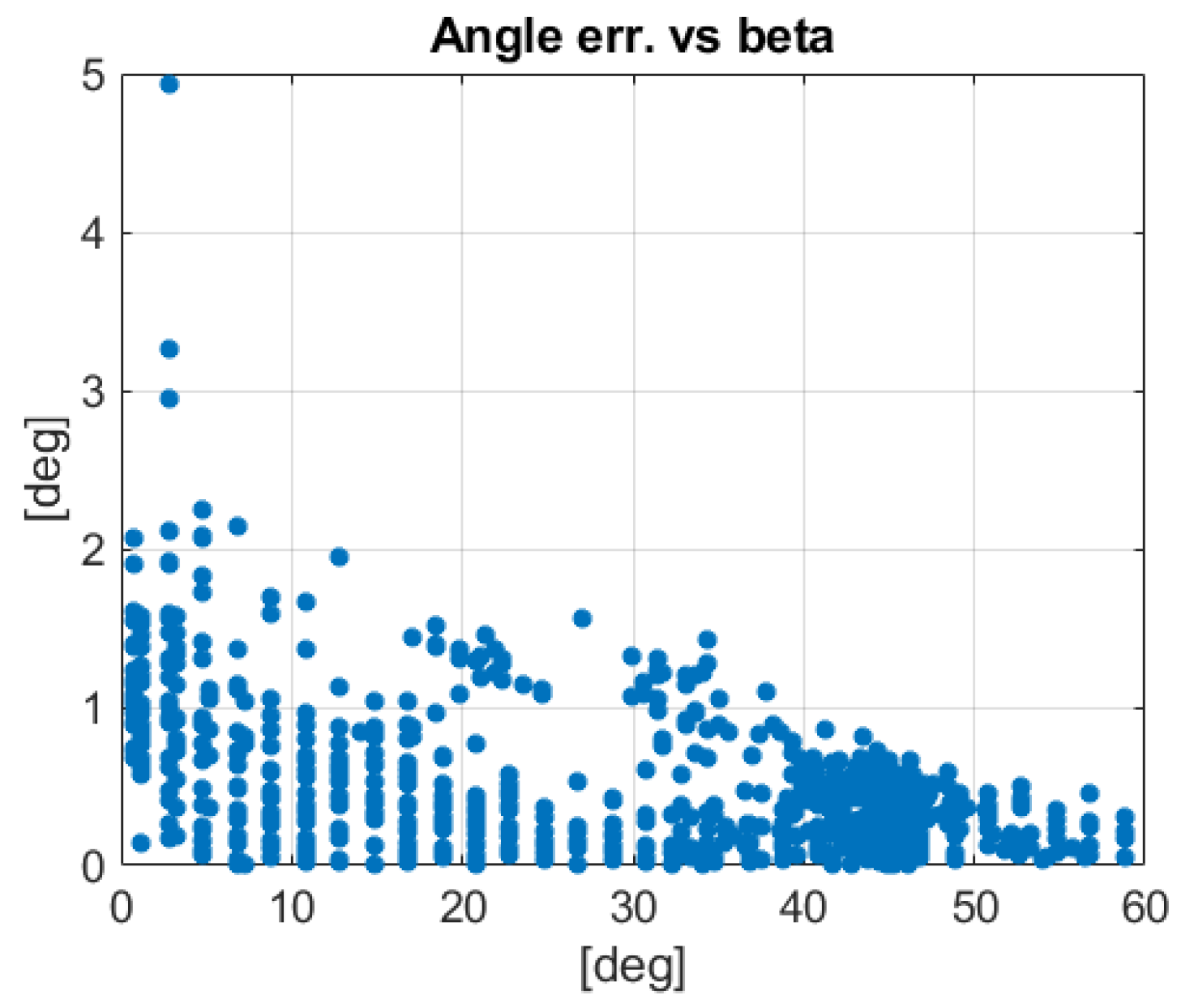

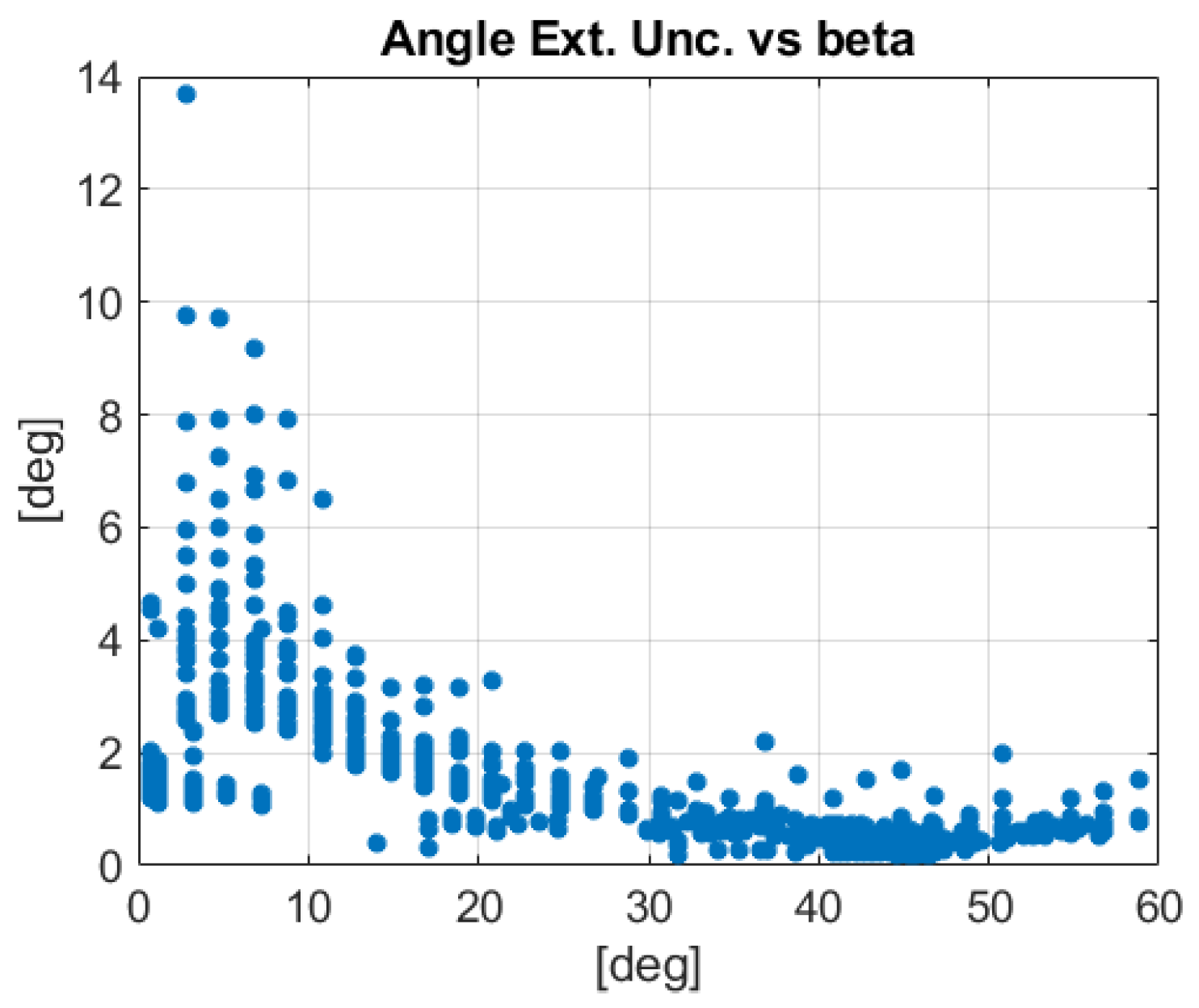

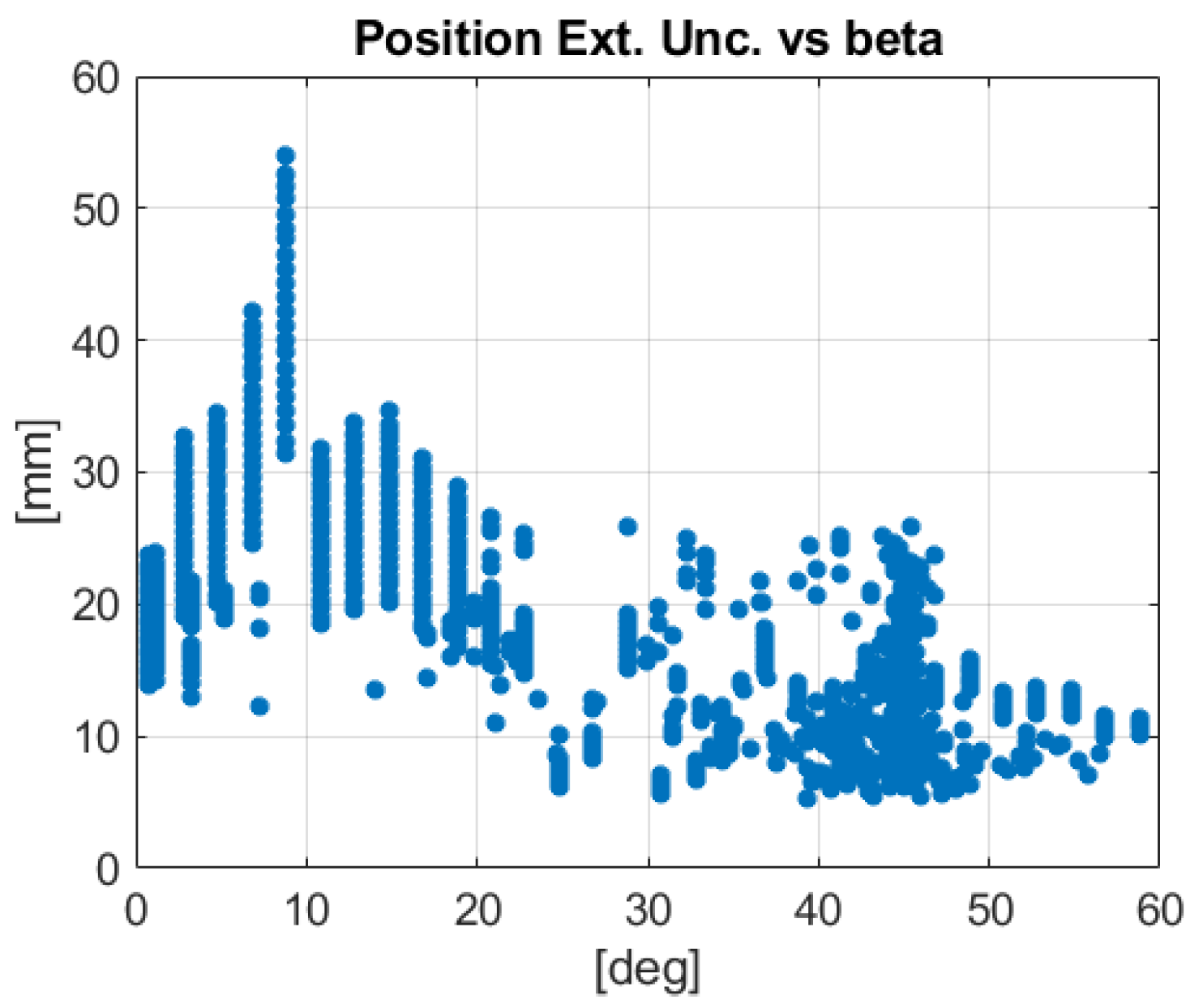

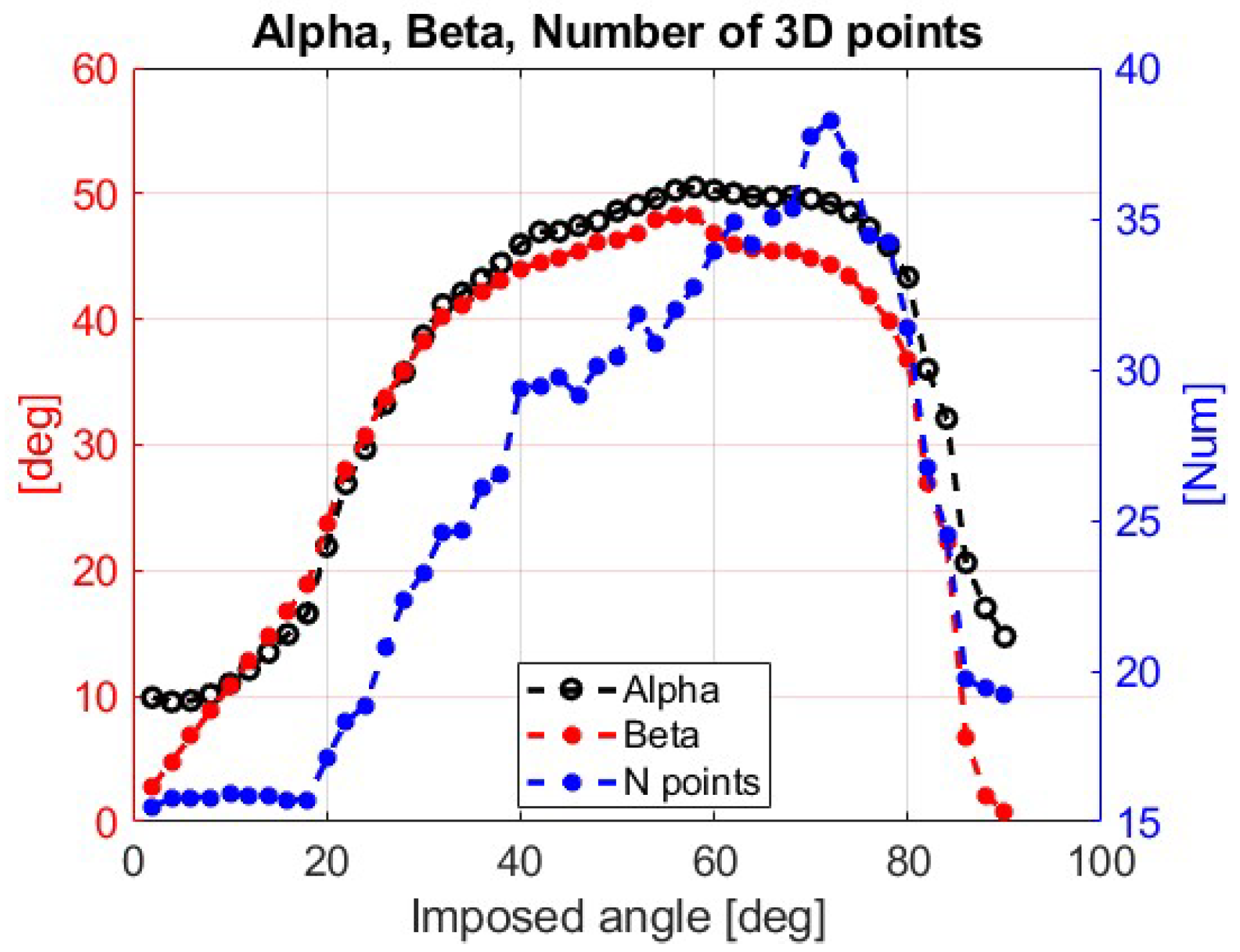

- The mean angle β between the optical axis and the normal to the marker plane, averaged over the points that pass the RANSAC selection, see Figure 15a.

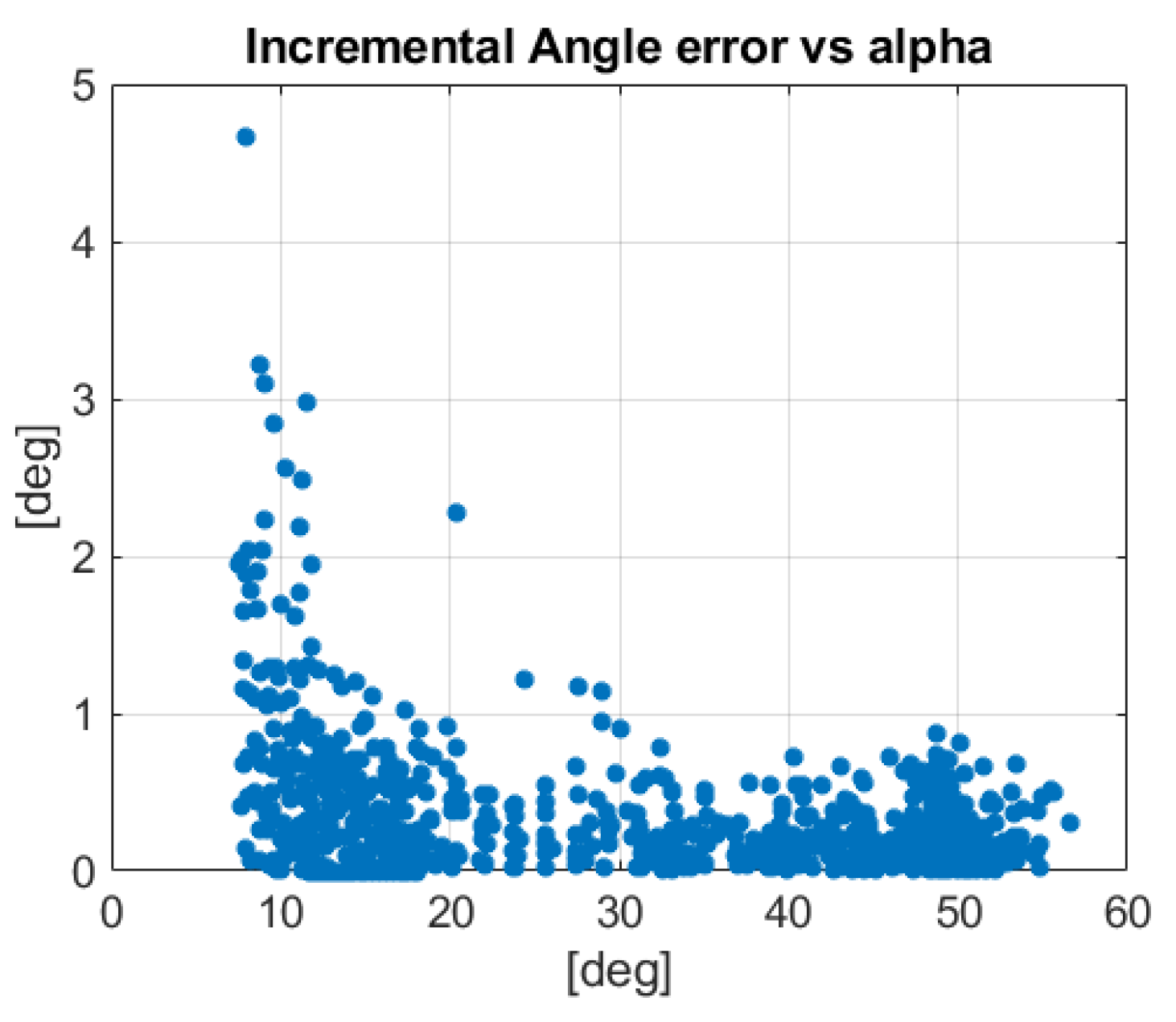

- The mean angle α between the camera projection line and the normal to the marker plane, averaged over the points that pass the RANSAC selection, see Figure 15b.

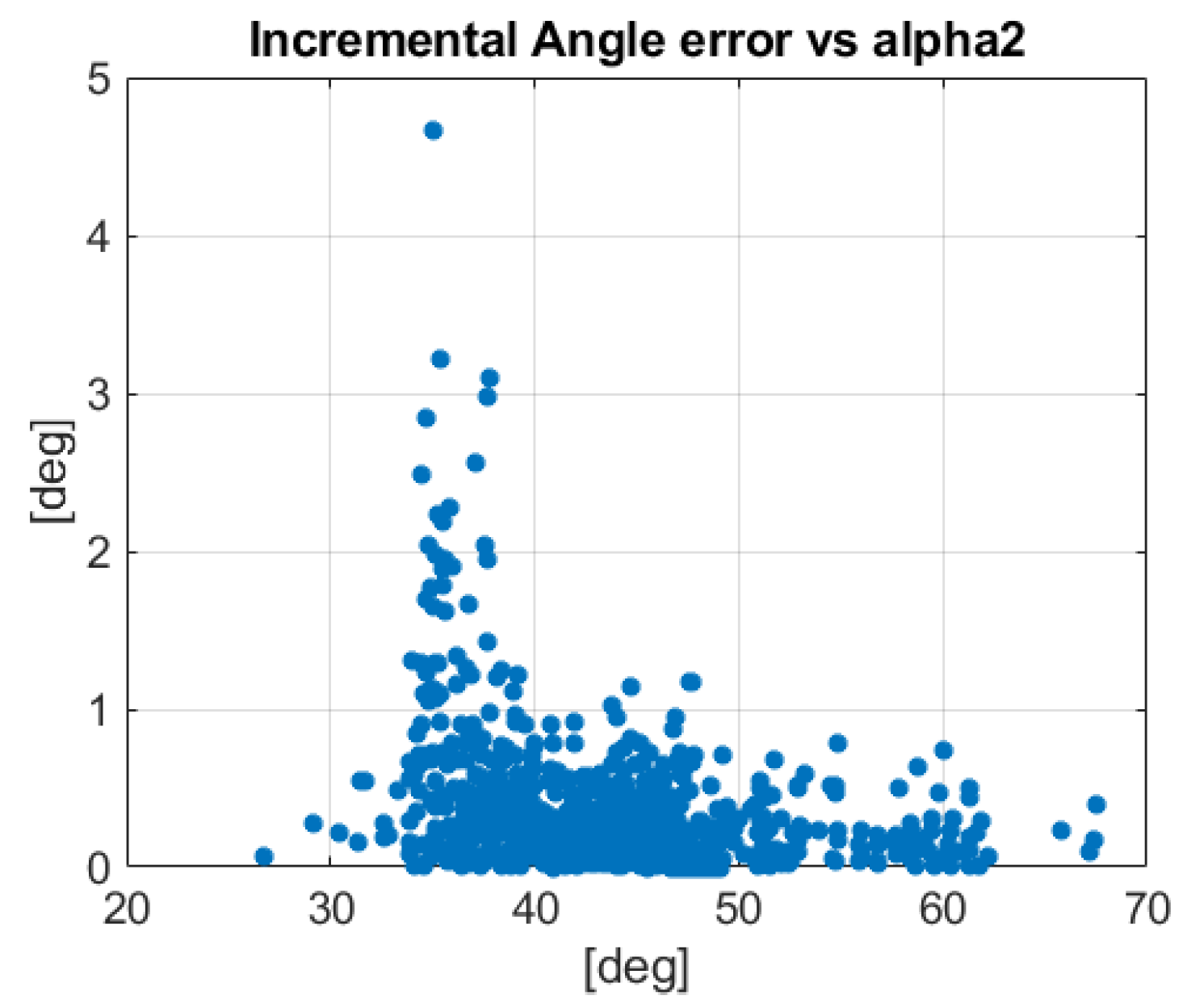

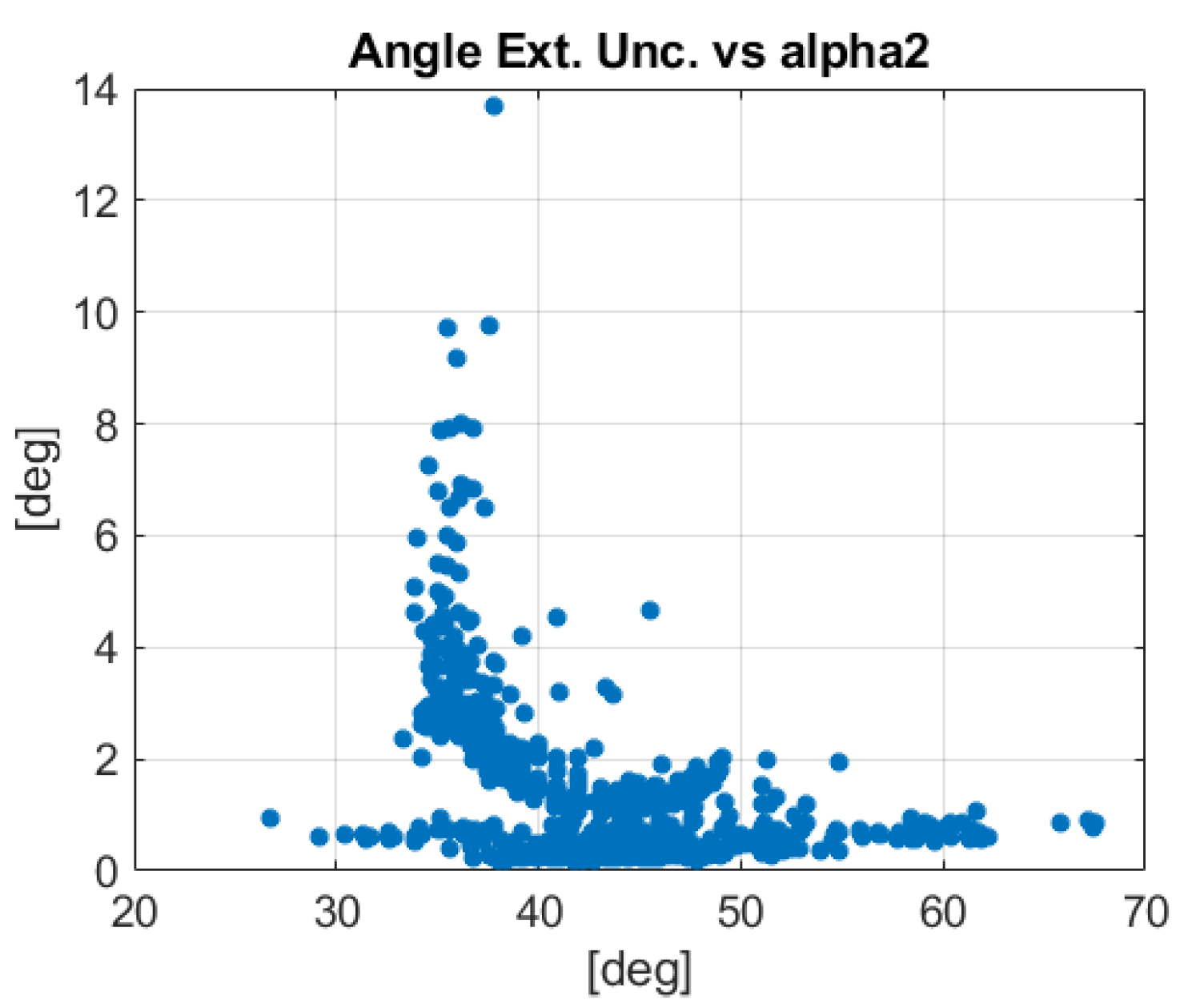

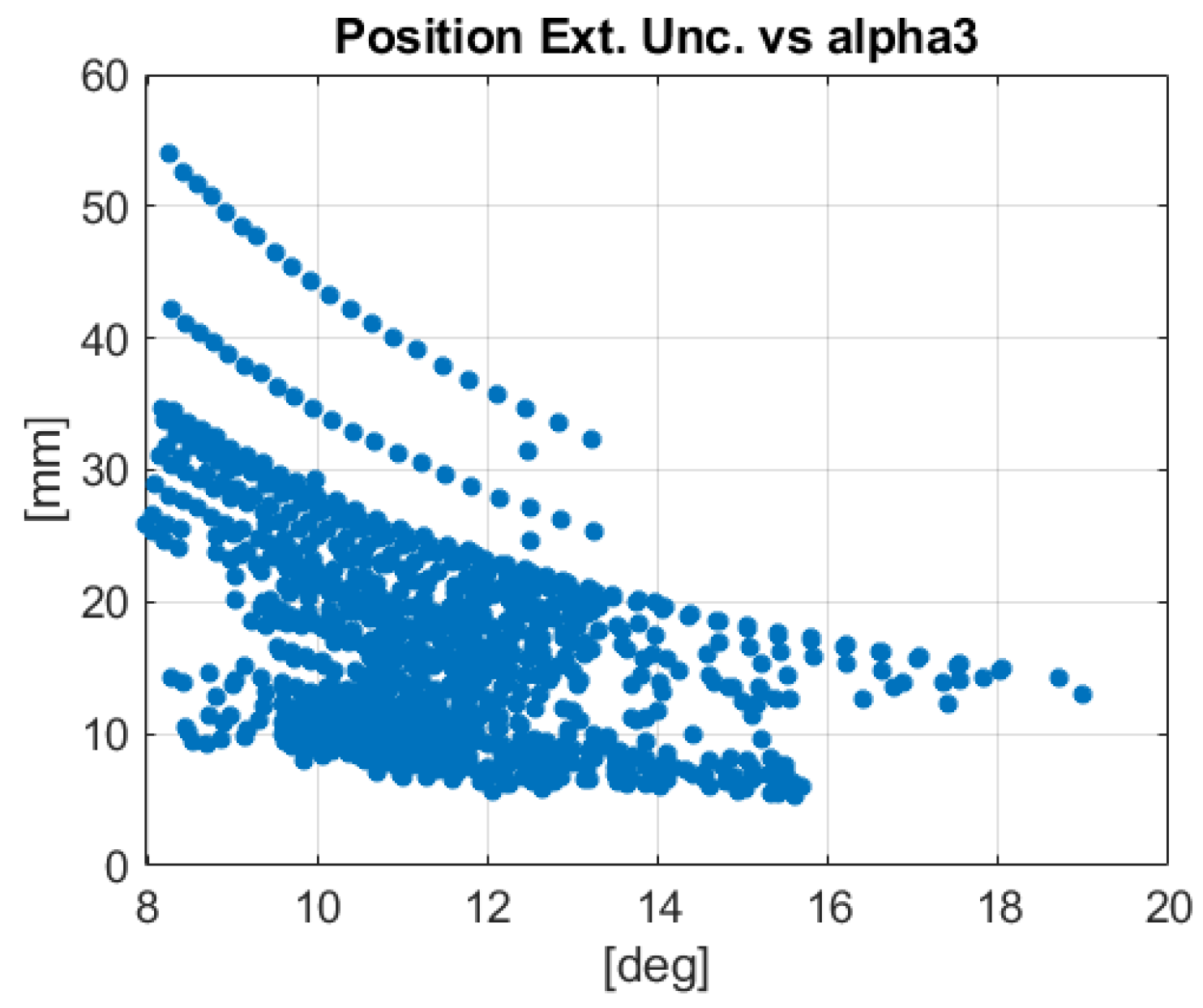

- The mean angle α2 (or α3) between the tangential (or local) velocity and the camera projection line, averaged over the points that pass the RANSAC selection. When rotations are examined, α2 is calculated using the tangential velocity with respect to the rotation center, as depicted in Figure 15c. When positions are analyzed, the local velocity of the translational motion of each marker is considered; since the motion is a linear displacement, this velocity has the same direction for all the markers and is taken as parallel to the longitudinal slide of the set-up for the calculation of the mean angle α3.

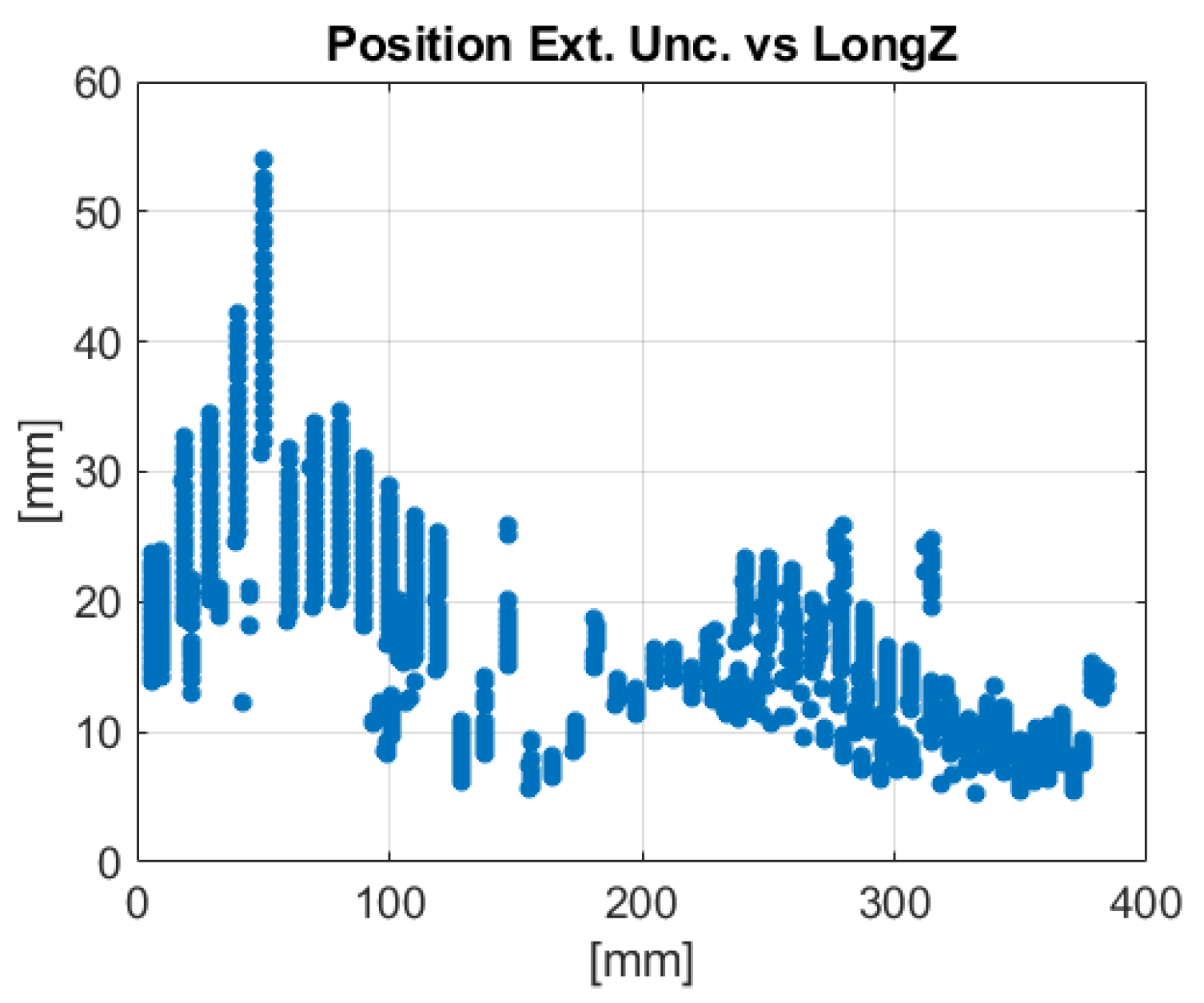

- The maximum longitudinal distance LongZ of the 3D points, see Figure 15d.

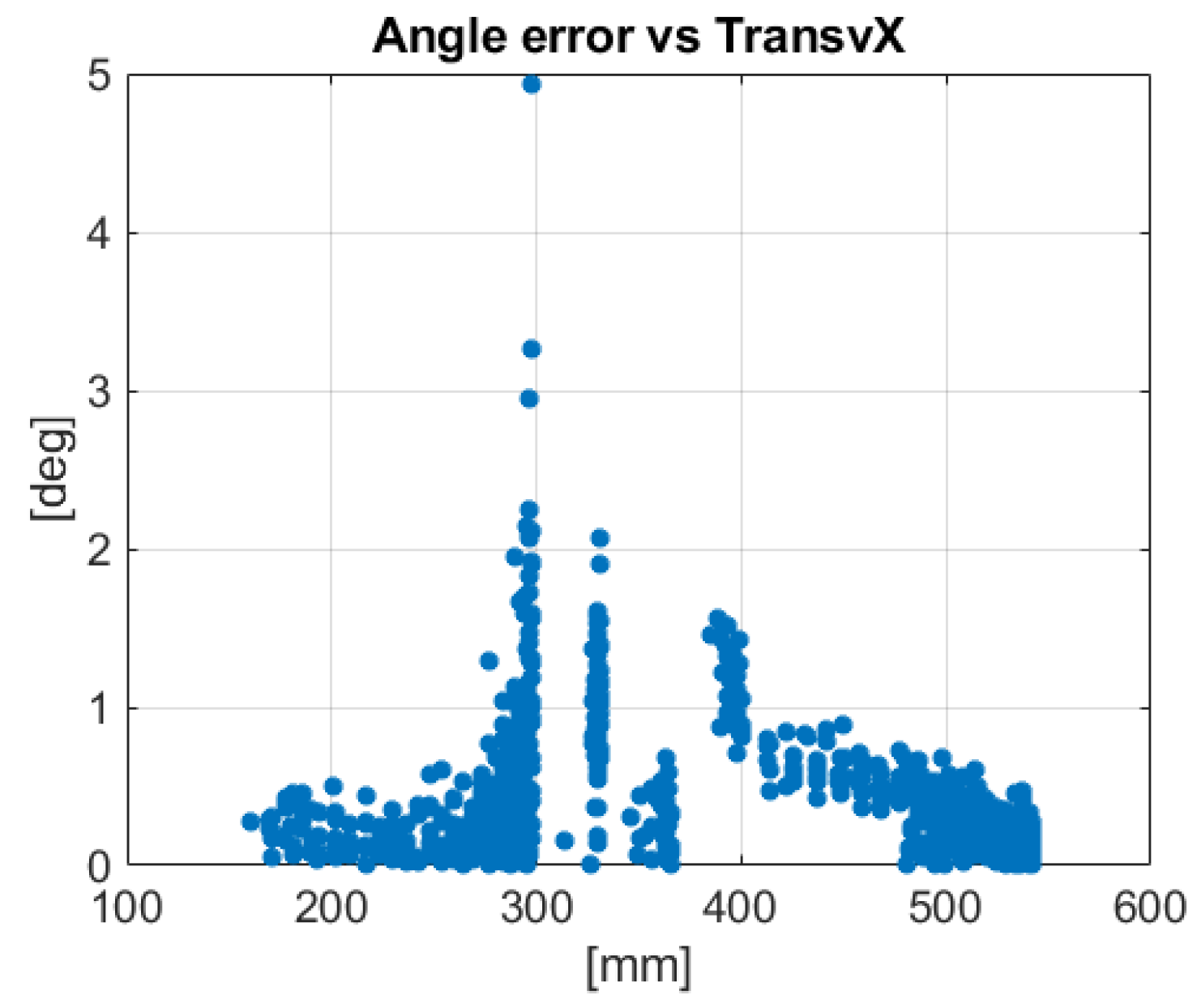

- The maximum transverse distance TransvX of the 3D points, see Figure 15e.

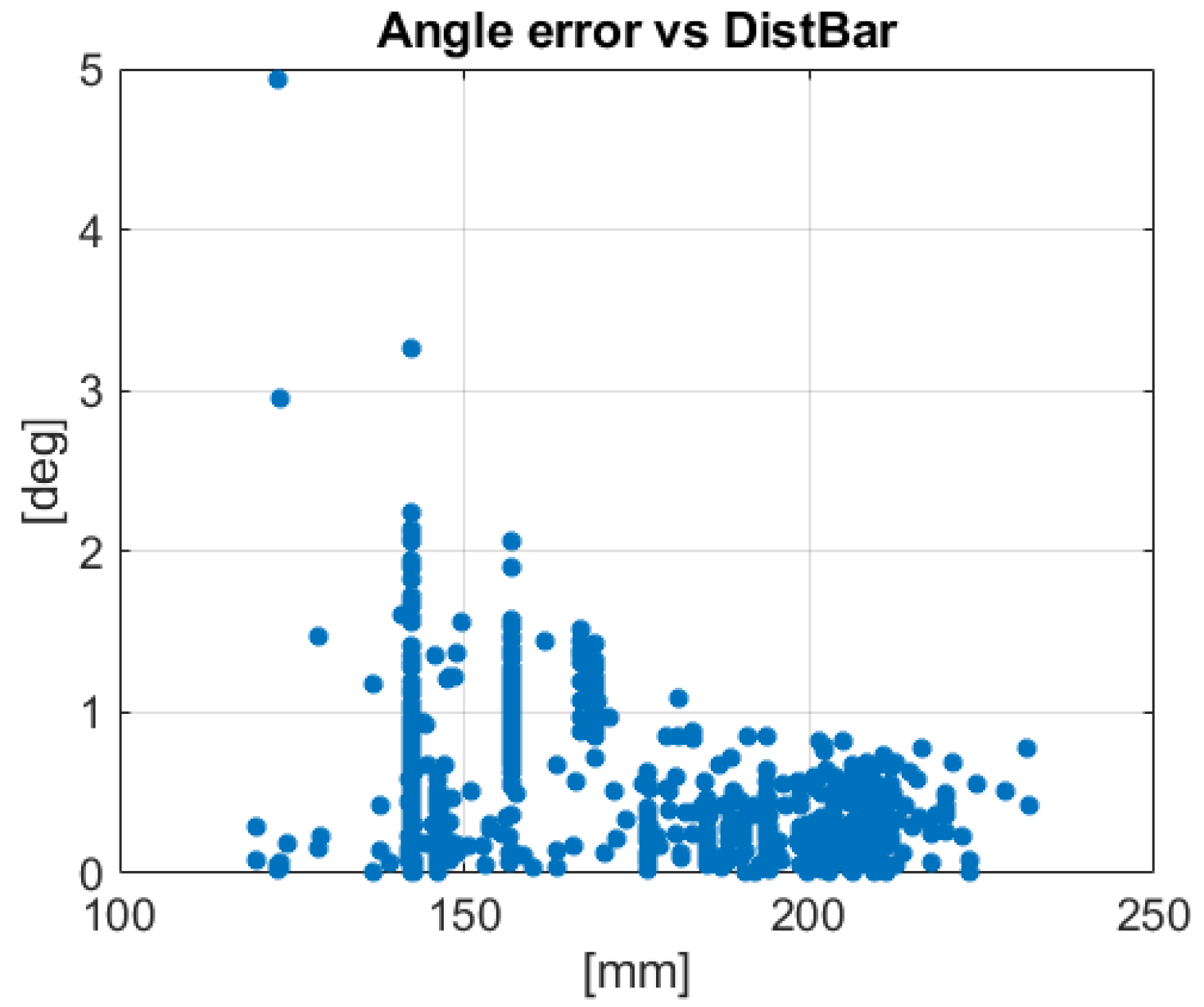

- The mean distance DistBar of the 3D points from their barycenter, see Figure 15f.

- The number Npoints of 2D points observed in each image that pass the RANSAC selection. Npoints reflects both the observability of the markers, which is generally lower at short distances (see Figure 7d–f) or when only one face of the mock-up is visible (see Figure 7a,c,d,f), and the size of the consensus set yielded by the RANSAC procedure.

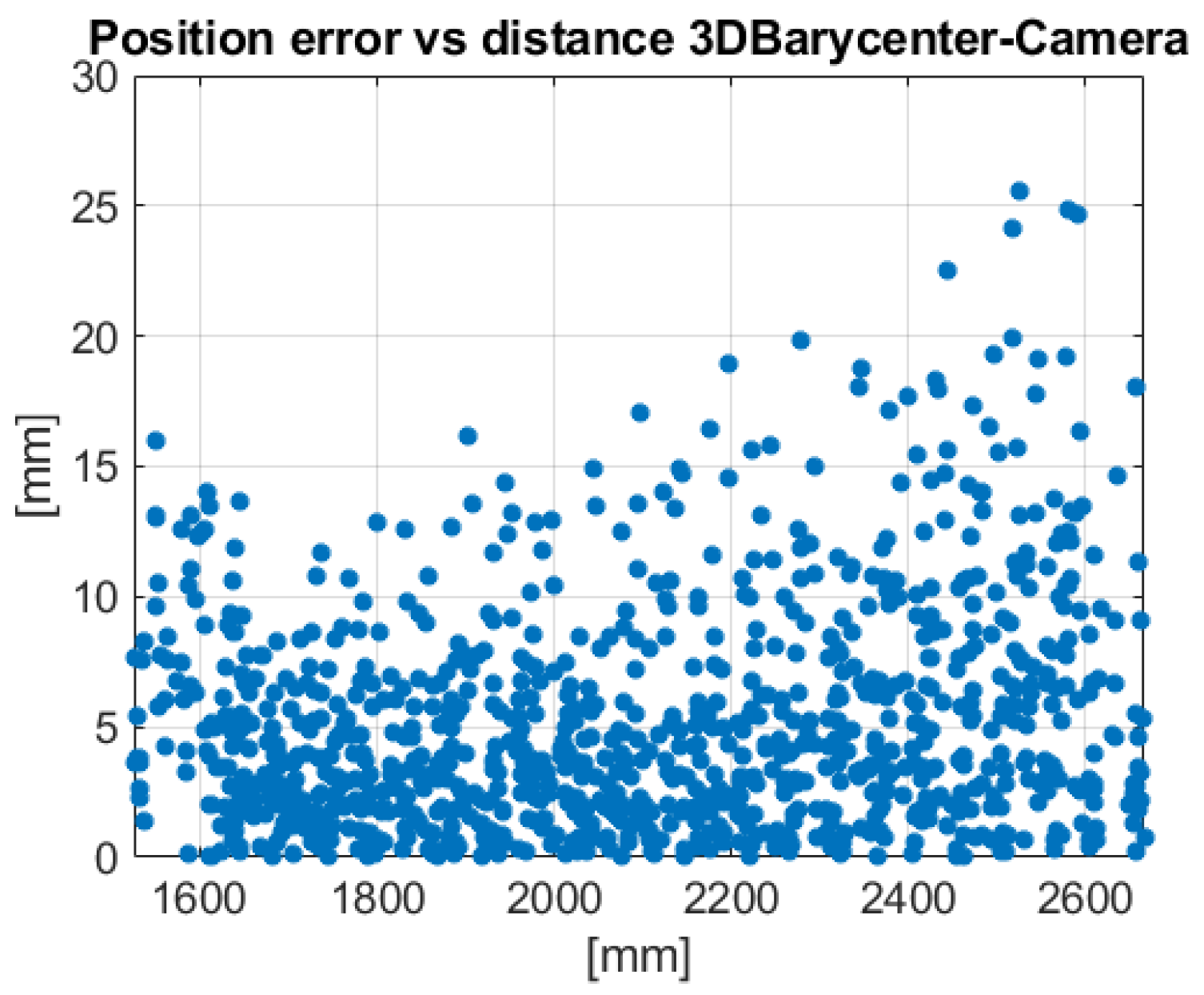

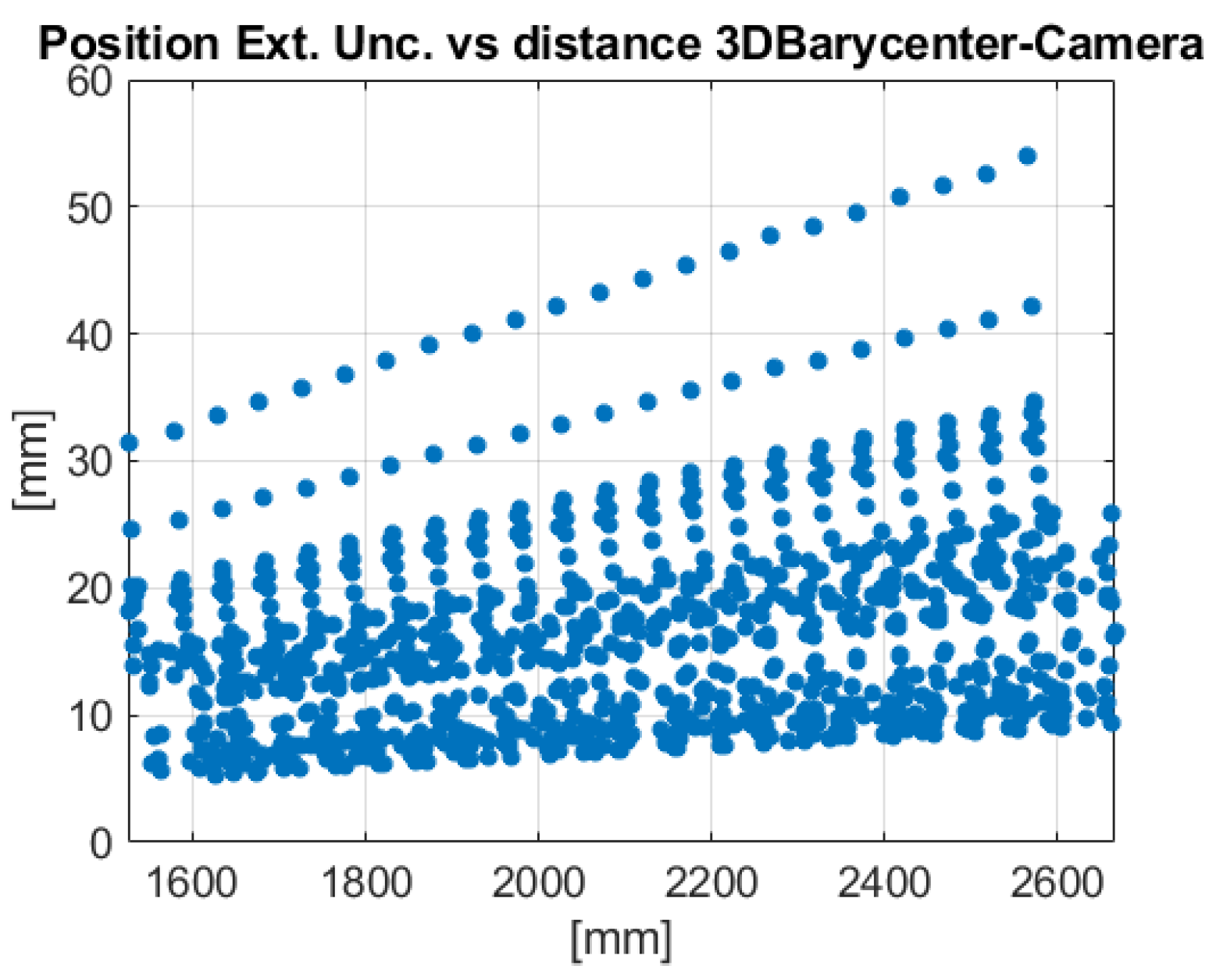

- The distance Dist_BC between the camera (the origin of the C frame) and the barycenter of the 3D points that pass the RANSAC selection.
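The geometric parameters listed above can be computed from the inlier 3D points once they are expressed in the camera frame C. The sketch below is hypothetical and rests on stated assumptions: the points and marker-plane normals are already expressed in C, with the camera at the origin and the optical axis along +Z; angles are measured between lines (direction sign ignored), so they lie in [0°, 90°]; and LongZ and TransvX are interpreted as the extents of the points along the Z and X axes of C, an interpretation that Figures 15d and 15e would need to confirm.

```python
import numpy as np


def _line_angle_deg(u, v):
    """Angle in degrees between lines with directions u and v (sign ignored), in [0, 90]."""
    u = np.asarray(u, dtype=float)
    v = np.asarray(v, dtype=float)
    u = u / np.linalg.norm(u, axis=-1, keepdims=True)
    v = v / np.linalg.norm(v, axis=-1, keepdims=True)
    c = np.clip(np.abs(np.sum(u * v, axis=-1)), 0.0, 1.0)
    return np.degrees(np.arccos(c))


def influencing_parameters(points_c, normals_c, velocity_c=None,
                           omega_c=None, centre_c=None):
    """Hypothetical helper: influencing parameters for one image.

    points_c  : (N, 3) inlier 3D points in camera frame C (camera at the origin)
    normals_c : (N, 3) normal of the marker plane of each point, in C
    velocity_c: (3,) common translation direction, used for alpha3
    omega_c, centre_c: rotation axis and rotation centre, used for alpha2
    """
    P = np.asarray(points_c, dtype=float)
    rays = P                                   # projection lines from the camera origin
    bary = P.mean(axis=0)                      # barycenter of the 3D points
    params = {
        "beta": float(np.mean(_line_angle_deg(normals_c, [0.0, 0.0, 1.0]))),
        "alpha": float(np.mean(_line_angle_deg(normals_c, rays))),
        "LongZ": float(P[:, 2].max() - P[:, 2].min()),
        "TransvX": float(P[:, 0].max() - P[:, 0].min()),
        "DistBar": float(np.mean(np.linalg.norm(P - bary, axis=1))),
        "Npoints": int(P.shape[0]),
        "Dist_BC": float(np.linalg.norm(bary)),
    }
    if velocity_c is not None:
        params["alpha3"] = float(np.mean(_line_angle_deg(velocity_c, rays)))
    if omega_c is not None and centre_c is not None:
        # tangential velocity omega x (P - centre); undefined for points on the rotation axis
        tangential = np.cross(np.asarray(omega_c, dtype=float), P - np.asarray(centre_c, dtype=float))
        params["alpha2"] = float(np.mean(_line_angle_deg(tangential, rays)))
    return params
```

Inverse parameters such as 1/α or 1/DistBar, used in the correlation analysis, follow directly from the returned values.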

7.1. Parameter a: Mean Angle β

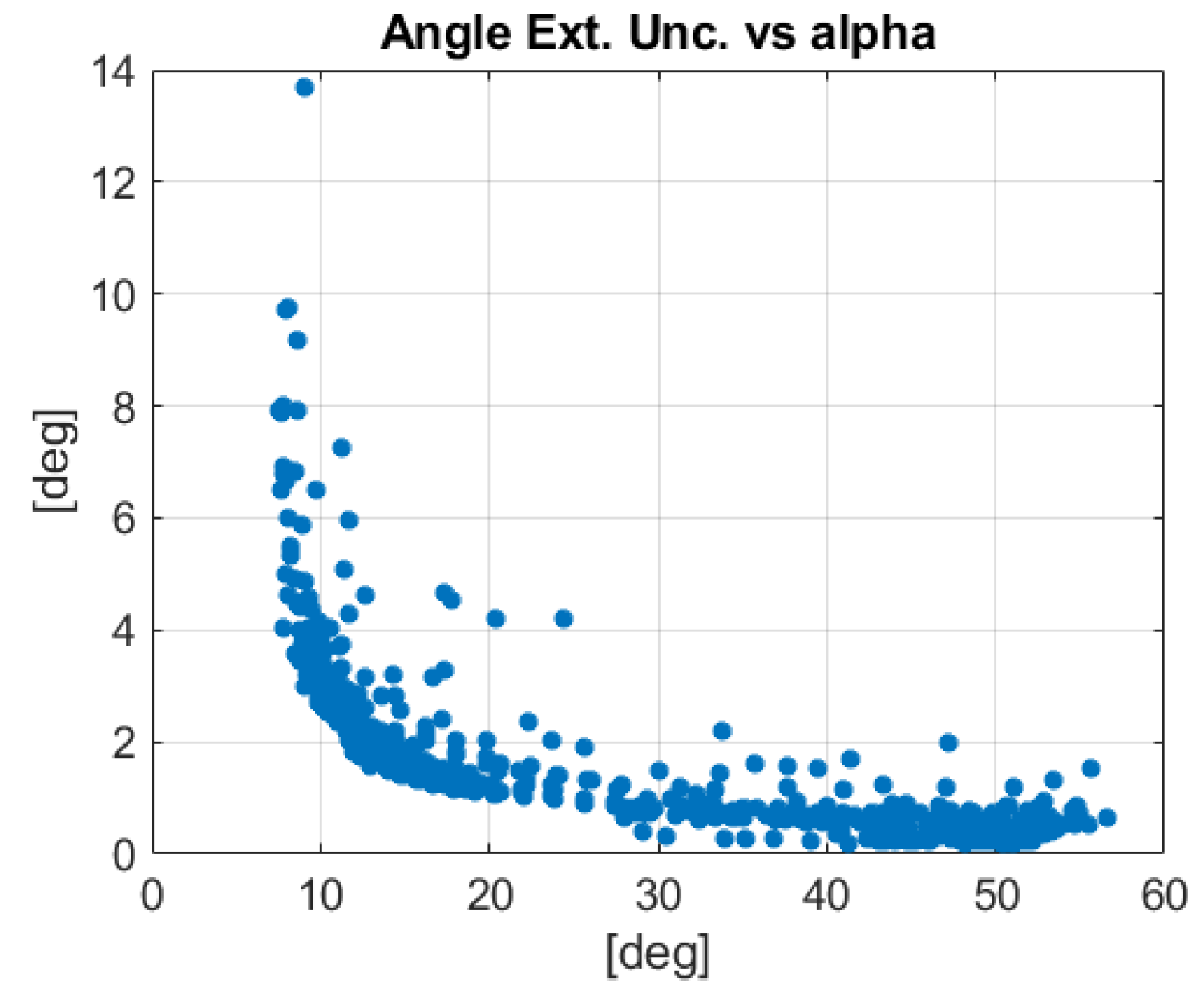

7.2. Parameter b: Mean Angle α

7.3. Parameter c: Mean Angles α2 and α3

7.4. Parameter d: Maximum Longitudinal Distance LongZ

7.5. Parameter e: Maximum Transverse Distance TransvX

7.6. Parameter f: Mean Distance DistBar of the 3D Points from Their Barycenter

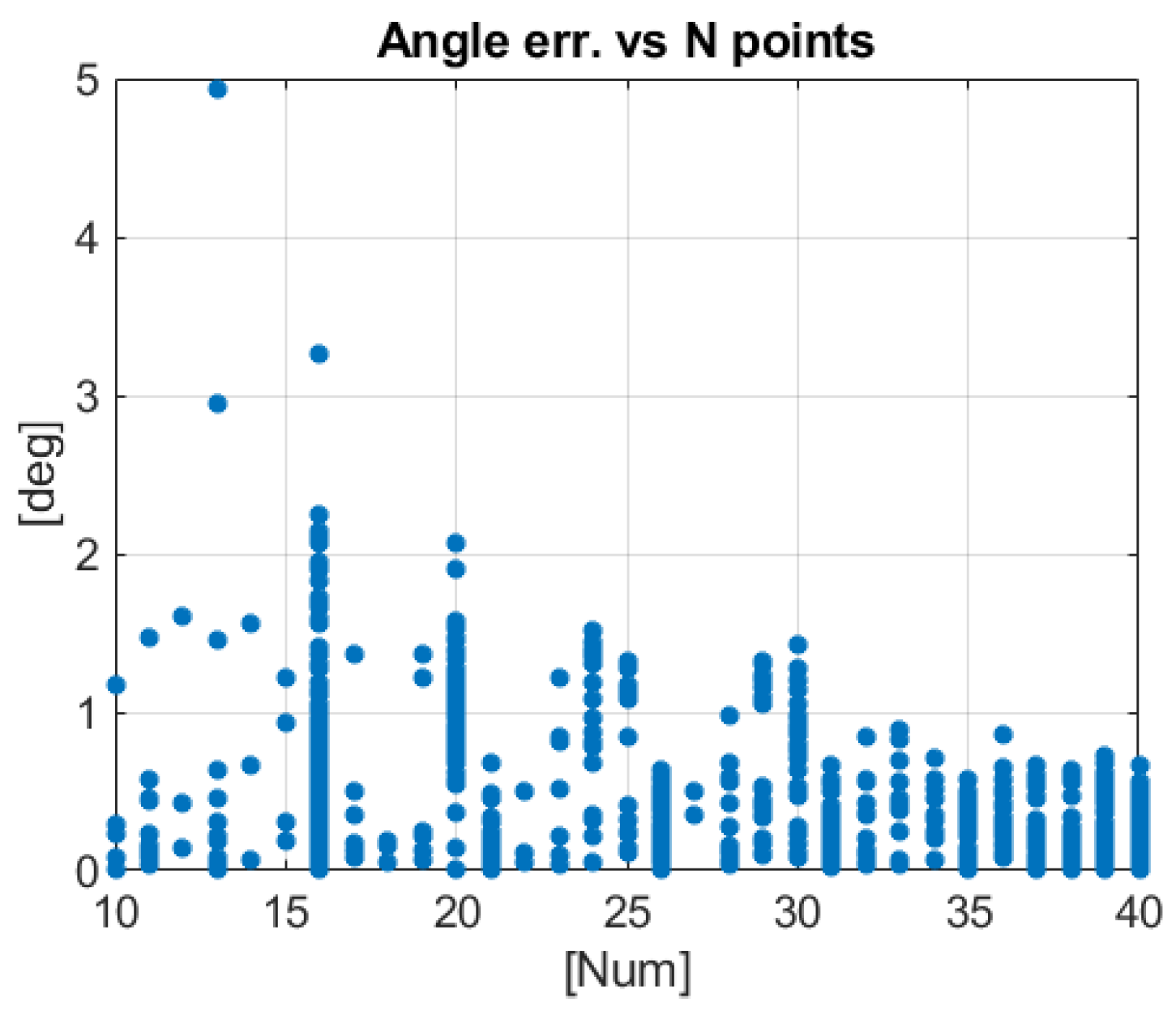

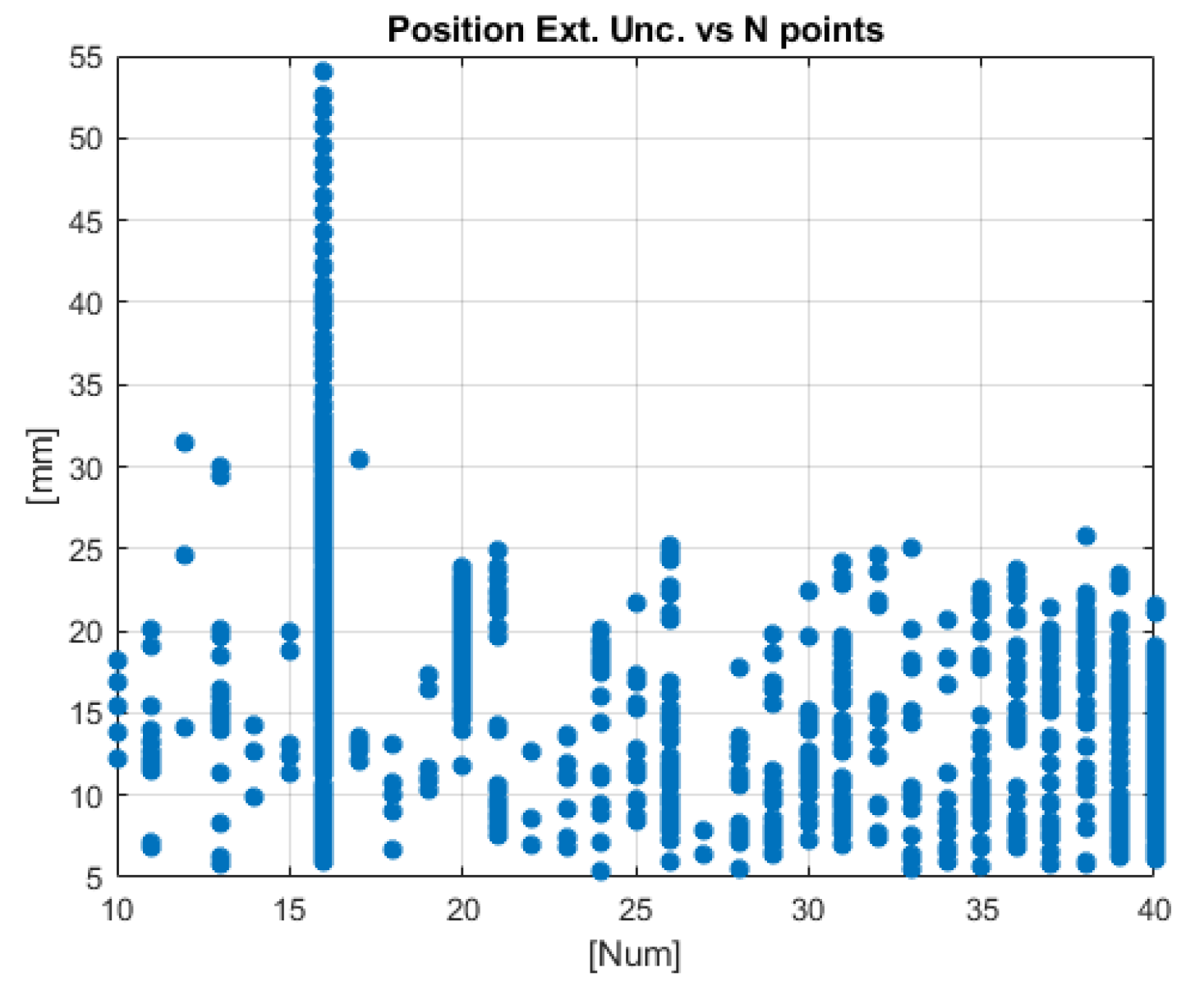

7.7. Parameter g: Number of Points Npoints

7.8. Parameter h: Distance Dist_BC Barycenter—Camera

8. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Mokuno, M.; Kawano, I. In-orbit demonstration of an optical navigation system for autonomous rendezvous docking. AIAA J. Spacecr. Rockets 2011, 48, 1046–1054.

- Howard, R.; Heaton, A.; Pinson, R.; Carrington, C. Orbital Express Advanced Video Guidance Sensor. In Proceedings of the 2008 IEEE Aerospace Conference, Big Sky, MT, USA, 1–8 March 2008.

- Howard, R.T.; Heaton, A.F.; Pinson, R.M.; Carrington, C.L.; Lee, J.E.; Bryan, T.C.; Robertson, B.A.; Spencer, S.H.; Johnson, J.E. The Advanced Video Guidance Sensor: Orbital Express and the next generation. In AIP Conference Proceedings; AIP: College Park, MD, USA, 2008; Volume 969, pp. 717–724.

- Bodin, P.; Noteborn, R.; Larsson, R.; Karlsson, T.; D’Amico, S.; Ardaens, J.S.; Delpech, M.; Berges, J.C. PRISMA formation flying demonstrator: overview and conclusions from the nominal mission. Adv. Astronaut. Sci. 2012, 144, 441–460.

- Tweddle, B.E. Relative Computer Vision Based Navigation for Small Inspection Spacecraft. In Proceedings of the AIAA Guidance, Navigation and Control Conference, Portland, OR, USA, 8–11 August 2011.

- Tweddle, B.E.; Saenz-Otero, A. Relative Computer Vision-Based Navigation for Small Inspection Spacecraft. J. Guid. Control Dyn. 2014, 38, 969–978.

- Sansone, F.; Branz, F.; Francesconi, A. A Relative Navigation Sensor for Cubesats Based on Retro-reflective Markers. In Proceedings of the International Workshop on Metrology for Aerospace, Padua, Italy, 21–23 June 2017.

- Pertile, M.; Mazzucato, M.; Chiodini, S.; Debei, S.; Lorenzini, E. Uncertainty evaluation of a vision system for pose measurement of a spacecraft with fiducial markers. In Proceedings of the International Workshop on Metrology for Aerospace, Benevento, Italy, 4–5 June 2015.

- Lepetit, V.; Moreno-Noguer, F.; Fua, P. EPnP: An Accurate O(n) solution to the PnP problem. Int. J. Comput. Vis. 2009, 81, 155–166.

- Ma, Y.; Soatto, S.; Kosecka, J.; Sastry, S.S. An Invitation to 3-D Vision; Springer Science & Business Media: Berlin, Germany, 2004.

- Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision, 2nd ed.; Cambridge University Press: New York, NY, USA, 2004.

- Haralick, R.; Lee, C.; Ottenberg, K.; Nolle, M. Review and Analysis of Solutions of the Three Point Perspective Pose Estimation Problem. Int. J. Comput. Vis. 1994, 13, 331–356.

- Quan, L.; Lan, Z. Linear N-point camera pose determination. IEEE Trans. Pattern Anal. Mach. Intell. 1999, 21, 774–780.

- Gao, X.; Hou, X.; Tang, J.; Cheng, H. Complete solution classification for the perspective-three-point problem. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 930–943.

- Nister, D.; Stewenius, H. A minimal solution to the generalized 3-point pose problem. J. Math. Imaging Vis. 2006, 27, 67–79.

- Kneip, L.; Scaramuzza, D.; Siegwart, R. A Novel Parametrization of the Perspective-Three-Point Problem for a Direct Computation of Absolute Camera Position and Orientation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011.

- Christian, J.A.; Robinson, S.B.; D’Souza, C.N.; Ruiz, J.P. Cooperative Relative Navigation of Spacecraft Using Flash Light Detection and Ranging Sensors. J. Guid. Control Dyn. 2014, 37, 452–465.

- Opromolla, R.; Fasano, G.; Rufino, G.; Grassi, M. A review of cooperative and uncooperative spacecraft pose determination techniques for close-proximity operations. Prog. Aerosp. Sci. 2017, 93, 53–72.

- Jeong, J.; Kim, S.; Suk, J. Parametric study of sensor placement for vision-based relative navigation system of multiple spacecraft. Acta Astronaut. 2017, 141, 36–49.

- Yousefian, P.; Durali, M.; Jalali, M.A. Optimal design and simulation of sensor arrays for solar motion estimation. IEEE Sens. J. 2017, 17, 1673–1680.

- Jackson, B.; Carpenter, B. Optimal placement of spacecraft sun sensors using stochastic optimization. In Proceedings of the IEEE Aerospace Conference, Big Sky, MT, USA, 6–13 March 2004; Volume 6, pp. 3916–3923.

- Kato, H.; Billinghurst, M. Marker tracking and HMD calibration for a video-based augmented reality conferencing system. In Proceedings of the 2nd IEEE and ACM International Workshop on Augmented Reality, IWAR '99, IEEE Computer Society, Washington, DC, USA, 20–21 October 1999; pp. 85–94.

- Garrido-Jurado, S.; Muñoz-Salinas, R.; Madrid-Cuevas, F.J.; Marín-Jiménez, M.J. Automatic generation and detection of highly reliable fiducial markers under occlusion. Pattern Recognit. 2014, 47, 2280–2292.

- Ahn, S.J.; Warnecke, H.J.; Kotowski, R. Systematic Geometric Image Measurement Errors of Circular Object Targets: Mathematical Formulation and Correction. Photogramm. Rec. 1999, 16, 485–502.

- Zhang, Z. A Flexible New Technique for Camera Calibration. IEEE Trans. PAMI 2000, 22, 1330–1334.

- Gonzalez, R.C.; Woods, R.E.; Eddins, S.L. Digital Image Processing Using MATLAB; Pearson Prentice Hall: Upper Saddle River, NJ, USA, 2004.

- Heckbert, P.S.; Garland, M. Survey of Polygonal Surface Simplification Algorithms; Multiresolution Surface Modeling Course SIGGRAPH 97, Carnegie Mellon University Technical Report; Carnegie Mellon University School of Computer Science: Pittsburgh, PA, USA, 1997.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Moré, J.J. The Levenberg-Marquardt Algorithm: Implementation and Theory. In Numerical Analysis; Lecture Notes in Mathematics 630; Watson, G.A., Ed.; Springer: Berlin, Germany, 1977; pp. 105–116.

- Lourakis, M.I.A.; Argyros, A.A. SBA: A Software Package for Generic Sparse Bundle Adjustment. ACM Trans. Math. Softw. 2009.

- Triggs, B.; McLauchlan, P.; Hartley, R.; Fitzgibbon, A. Bundle Adjustment: A Modern Synthesis. In International Workshop on Vision Algorithms; Springer: Berlin/Heidelberg, Germany, 1999; pp. 298–372.

- BIPM; IEC; IFCC; ILAC; ISO; IUPAC; IUPAP; OIML. Evaluation of Measurement Data—Guide to the Expression of Uncertainty in Measurement; International Organization for Standardization: Geneva, Switzerland, 2008.

- BIPM; IEC; IFCC; ILAC; ISO; IUPAC; IUPAP; OIML. Evaluation of Measurement Data—Supplement 1 to the Guide to the Expression of Uncertainty in Measurement—Propagation of Distributions Using a Monte Carlo Method; International Organization for Standardization: Geneva, Switzerland, 2008.

Influencing parameters for each quantity, with the correlation coefficient in parentheses:

| Quantity | Parameter 1 | Parameter 2 | Parameter 3 | Parameter 4 | Parameter 5 | Parameter 6 | Parameter 7 | Parameter 8 |
|---|---|---|---|---|---|---|---|---|
| RE/dRE | 1/α (0.54) | β (−0.50) | 1/LongZ (0.47) | 1/α2 (0.36) | 1/DistBar (0.36) | 1/Npoints (0.34) | TransvX (−0.29) | DistBC (0.04) |
| RU/dRU | 1/α (0.87) | β (−0.71) | LongZ (−0.65) | 1/Npoints (0.61) | 1/DistBar (0.60) | 1/α2 (0.53) | TransvX (−0.50) | DistBC (−0.02) |
| PE | LongZ (−0.30) | β (−0.26) | α (−0.22) | DistBC (0.20) | 1/DistBar (0.17) | Npoints (−0.17) | TransvX (−0.16) | 1/α3 (0.08) |
| PU | 1/α (0.79) | LongZ (−0.64) | β (−0.64) | 1/α3 (0.48) | 1/DistBar (0.48) | Npoints (−0.44) | TransvX (−0.34) | DistBC (0.26) |
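The coefficients in the table quantify how strongly each error or uncertainty is correlated with each influencing parameter, or with its inverse. Assuming they are Pearson linear correlation coefficients computed over the set of acquired images (the estimator is not stated in this section), a ranking like the one in the table can be produced with a short sketch such as the following; `rank_by_correlation` is a hypothetical helper, and an inverse parameter such as 1/α is simply passed as a separate entry.

```python
import numpy as np


def rank_by_correlation(target, params):
    """Pearson correlation of target with each parameter, sorted by decreasing |r|.

    target: 1-D sequence (e.g. one error value per image)
    params: dict mapping a parameter name to a 1-D sequence of the same length
    """
    y = np.asarray(target, dtype=float)
    ranking = []
    for name, values in params.items():
        x = np.asarray(values, dtype=float)
        r = float(np.corrcoef(x, y)[0, 1])   # off-diagonal entry of the 2x2 matrix
        ranking.append((name, r))
    return sorted(ranking, key=lambda item: abs(item[1]), reverse=True)
```

Sorting by the absolute value keeps strongly negative correlations, such as those of β, near the top of the ranking, as in the table.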

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Pertile, M.; Chiodini, S.; Giubilato, R.; Mazzucato, M.; Valmorbida, A.; Fornaser, A.; Debei, S.; Lorenzini, E.C. Metrological Characterization of a Vision-Based System for Relative Pose Measurements with Fiducial Marker Mapping for Spacecrafts. Robotics 2018, 7, 43. https://doi.org/10.3390/robotics7030043
