Investigating Learning Assistance by Demonstration for Robotic Wheelchairs: A Simulation Approach

Abstract

1. Introduction

2. Background

2.1. Target Population

2.2. Limitations of Commercial Solutions

2.3. Related Works

2.4. Summary

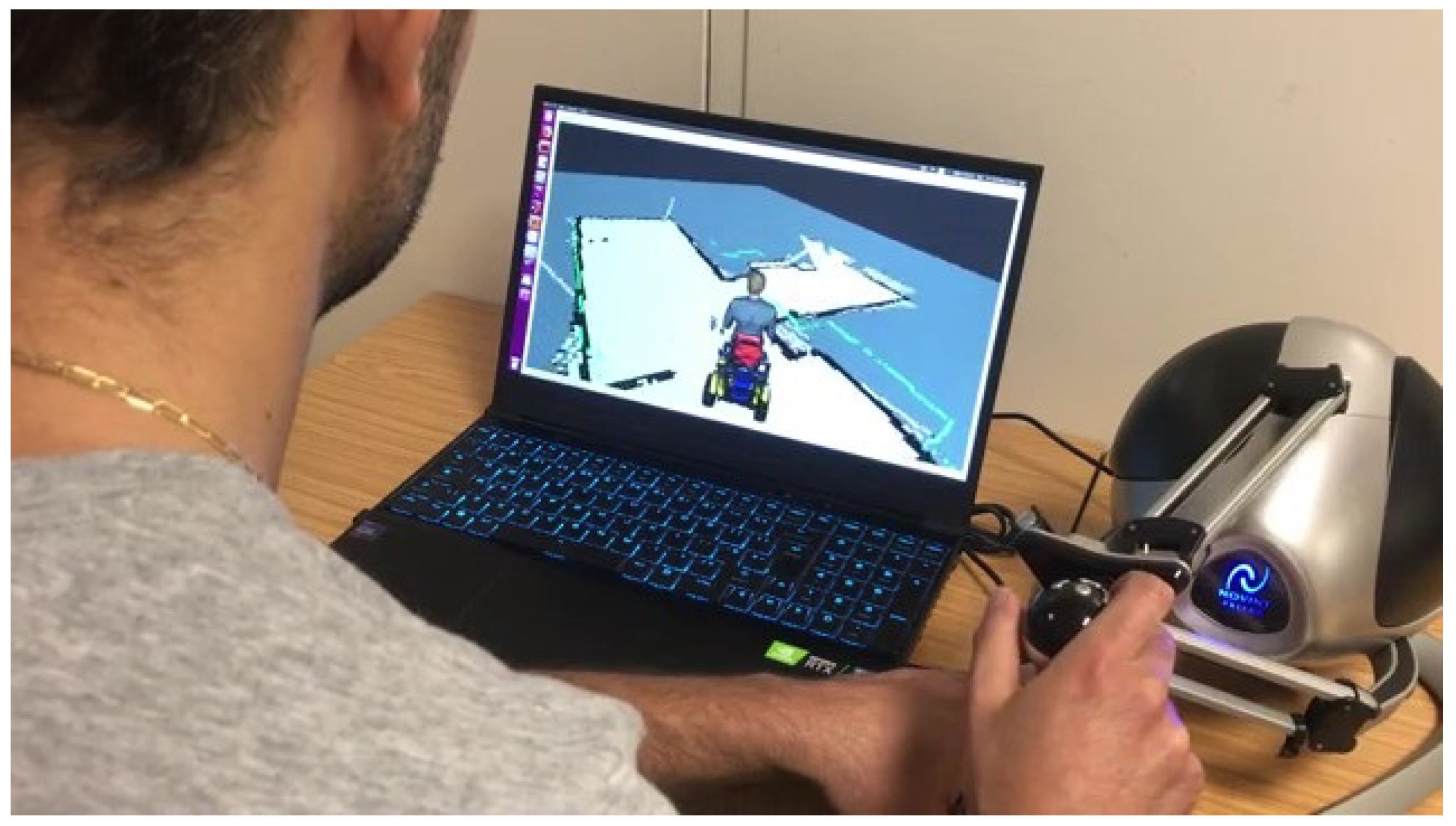

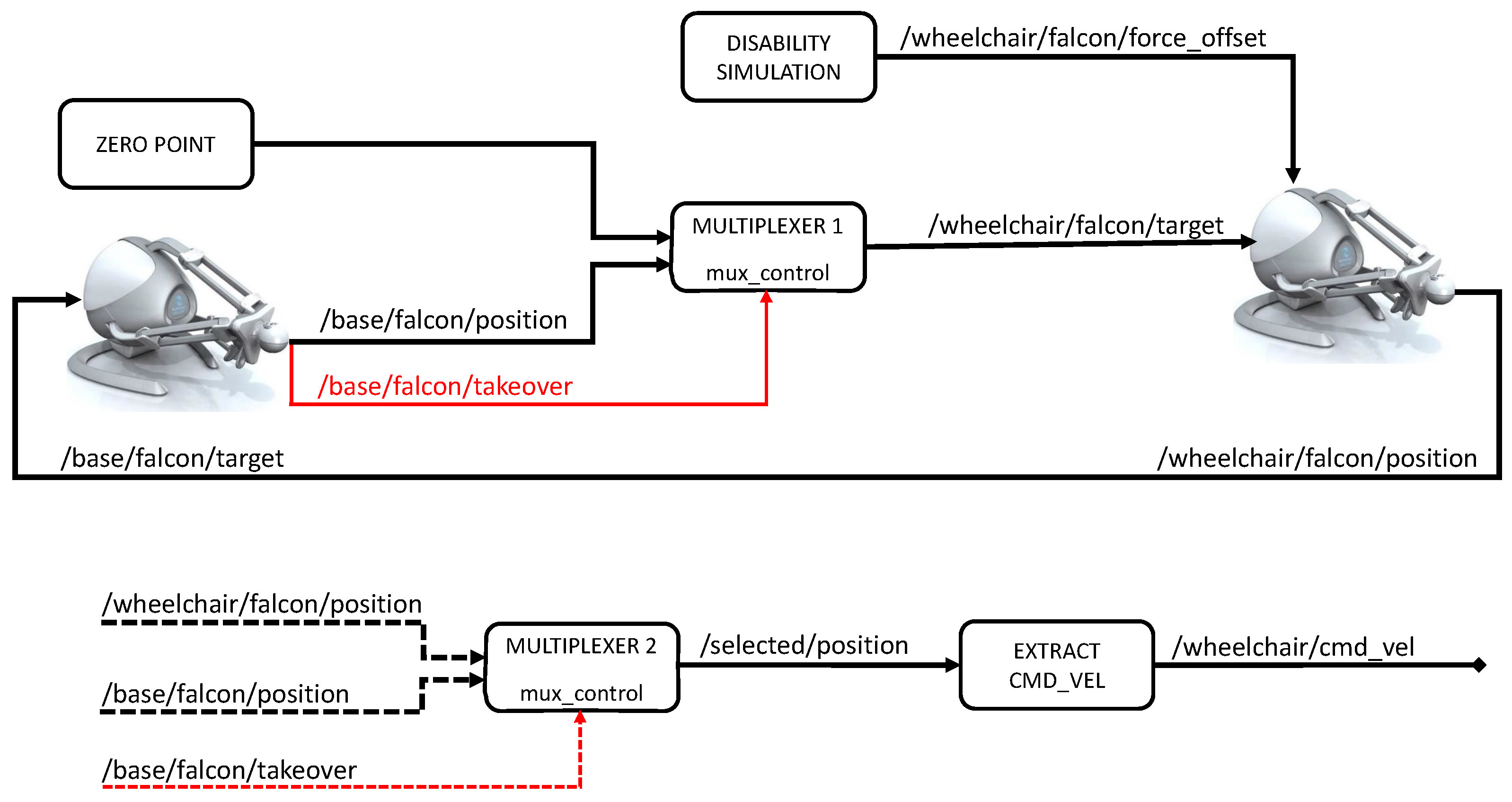

3. Simulator Design and Implementation

3.1. Wheelchair Navigation

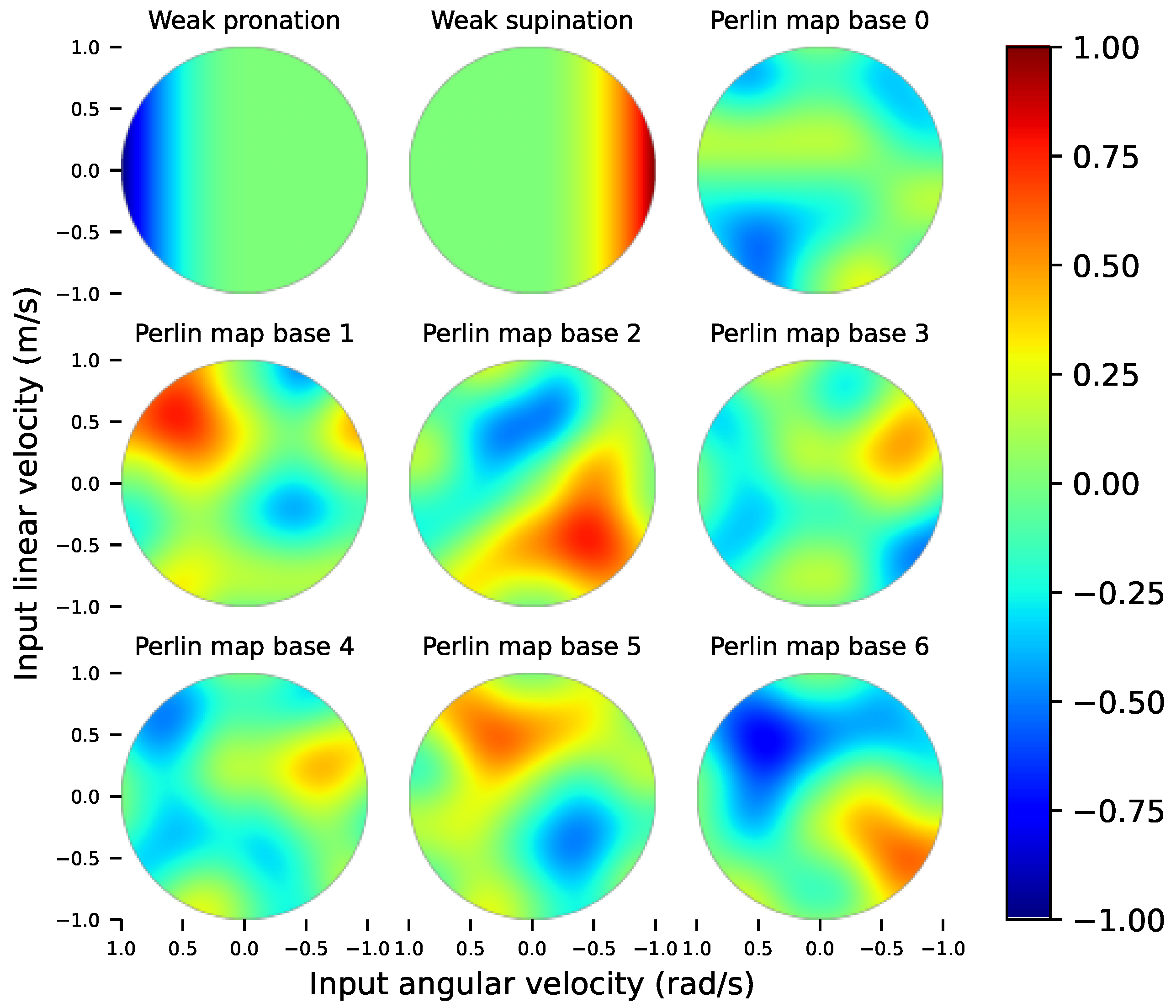

3.2. Hand Control Impairments

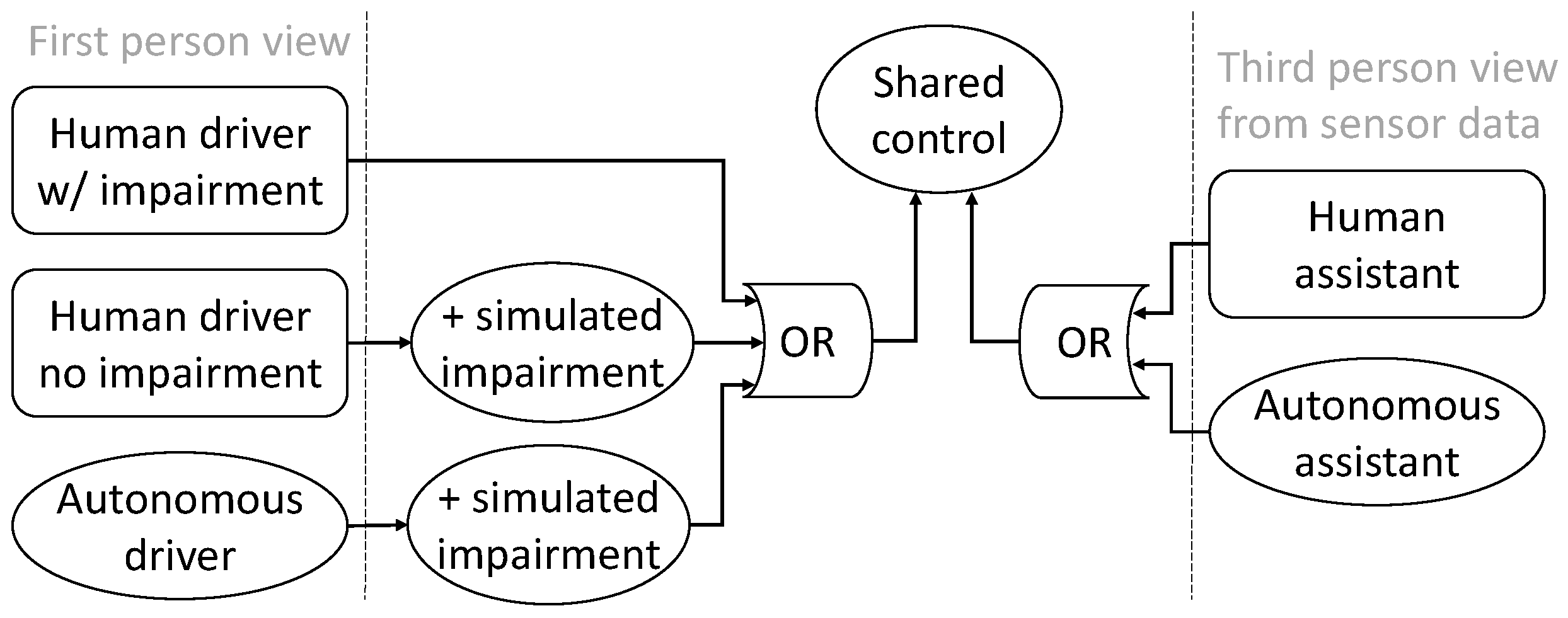

3.3. Triadic Interactions

3.4. Repeatable Interactions

4. Learning to Assist

4.1. Data Preprocessing

4.2. Model Design

Hyperparameter Optimisation

5. Investigating Features of LAD

5.1. Metrics

- Time to complete a lap: the total time required to complete one lap of a dedicated test course;

- Average distance from the planned path: the absolute distance between the wheelchair’s current position and the closest point on the planned path, sampled every 0.1 s and averaged over the lap;

- Fraction of time spent clearing collisions: the ratio of time spent in autonomous collision recovery behaviour to the total lap time;

- Number of instructor interventions: the number of times per lap that a human instructor supervising the test intervenes to guide the wheelchair back to the planned path manually, which happens whenever the autonomous driver remains stuck for more than 10 s.
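The metric definitions above can be sketched in code. This is a minimal illustration, not the simulator's implementation: it assumes a hypothetical log format in which the wheelchair position, a collision-recovery flag and a "stuck" flag are recorded every 0.1 s, and a planned path given as a polyline. The `Sample` class, the `stuck` flag and the rule that each stuck episode longer than 10 s triggers exactly one intervention are assumptions made for illustration.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional, Sequence, Tuple

Point = Tuple[float, float]


@dataclass
class Sample:
    """One log entry, assumed to be recorded every 0.1 s (hypothetical format)."""
    t: float           # time since the start of the lap (s)
    pos: Point         # wheelchair position (m)
    recovering: bool   # True while collision-recovery behaviour is active
    stuck: bool        # True while the driver makes no progress (hypothetical flag)


def point_segment_distance(p: Point, a: Point, b: Point) -> float:
    """Euclidean distance from p to the line segment a-b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    seg_len2 = dx * dx + dy * dy
    if seg_len2 == 0.0:
        return math.hypot(px - ax, py - ay)
    # Project p onto the segment and clamp the projection to its endpoints.
    u = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len2))
    return math.hypot(px - (ax + u * dx), py - (ay + u * dy))


def distance_to_path(p: Point, path: Sequence[Point]) -> float:
    """Distance from p to the closest point on a polyline path."""
    return min(point_segment_distance(p, path[i], path[i + 1])
               for i in range(len(path) - 1))


def lap_metrics(samples: List[Sample], path: Sequence[Point],
                stuck_limit: float = 10.0) -> Dict[str, float]:
    """Compute the four lap metrics from uniformly sampled logs."""
    lap_time = samples[-1].t - samples[0].t
    # With uniform 0.1 s sampling, the sample mean approximates the time average.
    avg_dist = sum(distance_to_path(s.pos, path) for s in samples) / len(samples)
    # With uniform sampling, the fraction of samples equals the fraction of time.
    frac_recovering = sum(s.recovering for s in samples) / len(samples)

    # Count stuck episodes longer than stuck_limit; each is assumed to
    # trigger exactly one instructor intervention.
    interventions = 0
    stuck_since: Optional[float] = None
    counted = False
    for s in samples:
        if not s.stuck:
            stuck_since = None
            continue
        if stuck_since is None:
            stuck_since, counted = s.t, False
        if not counted and s.t - stuck_since > stuck_limit:
            interventions += 1
            counted = True

    return {
        "lap_time_s": lap_time,
        "avg_dist_cm": 100.0 * avg_dist,   # path and positions in metres
        "frac_clearing_collisions": frac_recovering,
        "interventions": float(interventions),
    }
```

Counting episodes rather than individual stuck samples keeps one long stall from being counted as many interventions, which matches the per-lap definition above.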

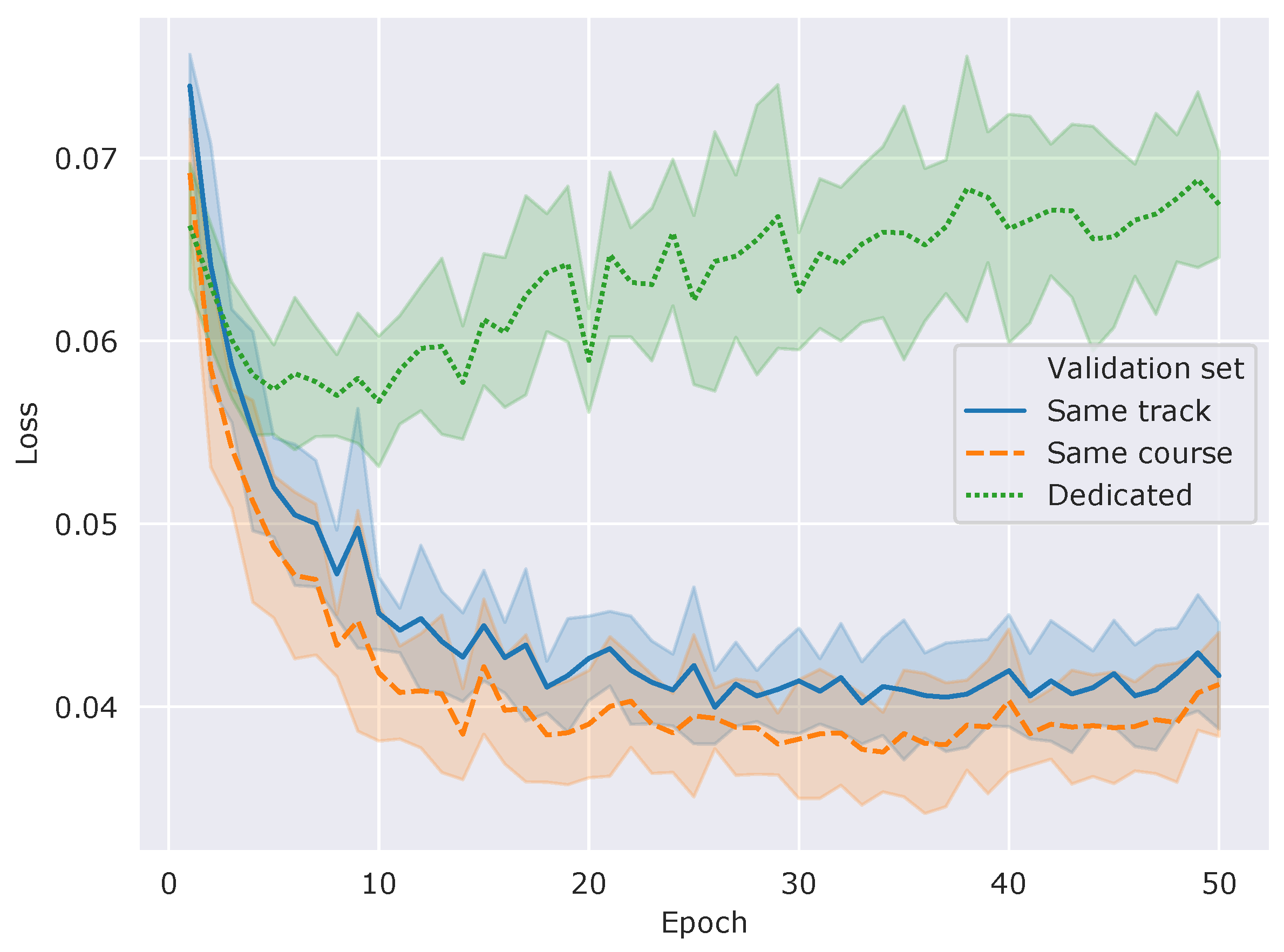

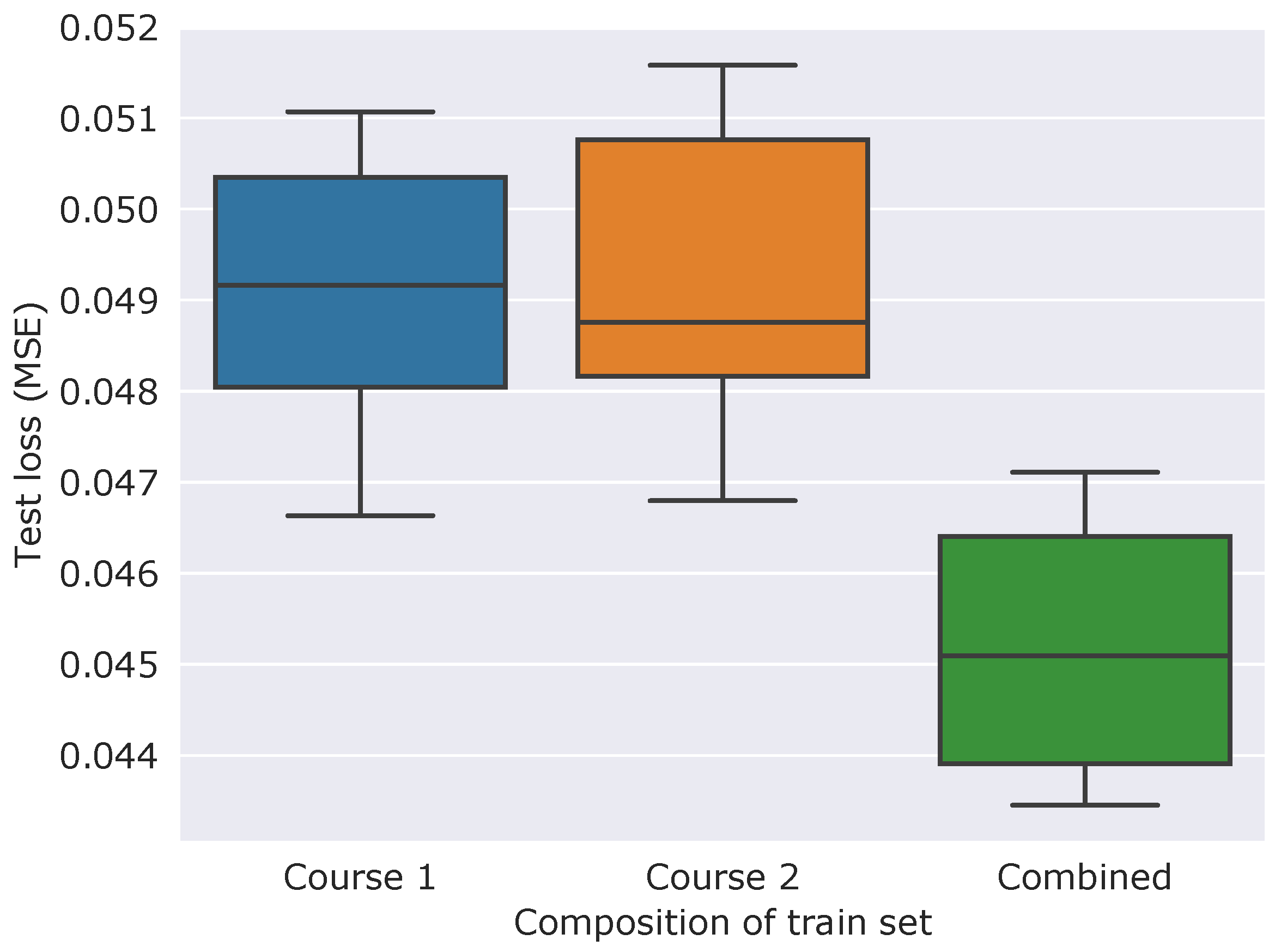

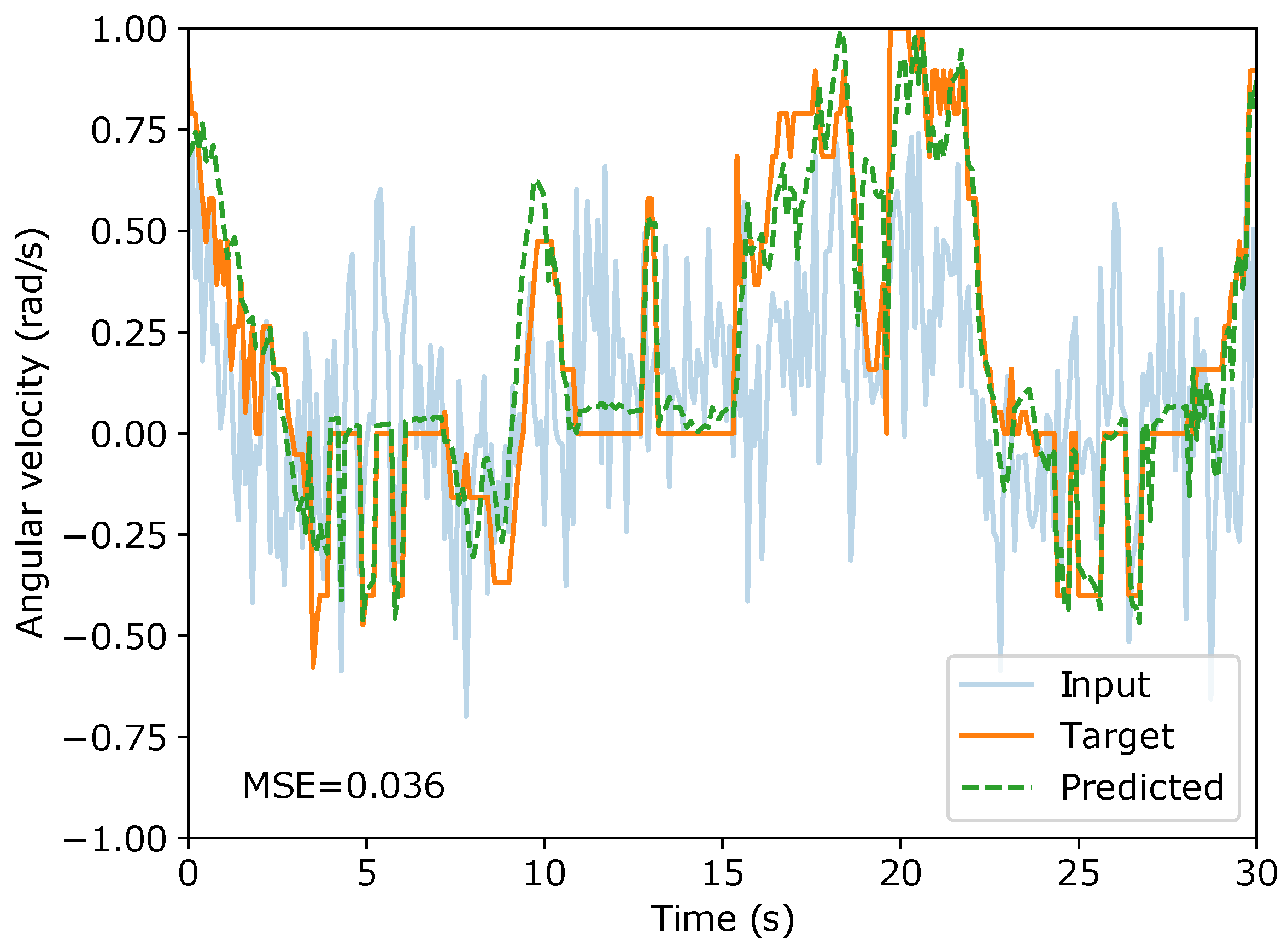

5.2. Generalisation

5.3. Assistive Performance

5.4. Robustness

5.5. Personalisation

6. Discussion

7. Conclusions

Future Works

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Name | Type | Range/Values | Description |
|---|---|---|---|
| conv_blocks | Int | 1–3 | Number of convolutional blocks |
| conv_block_style | Choice | 1, 2, 3, 4 | Conv block type: 1 = Conv, 2 = Conv-Conv, 3 = Conv-Pool, 4 = Conv-Conv-Pool |
| conv_filters1 | Int | 16–48 (step 16) | Filters in 1st convolutional block |
| conv_filters2 | Int | 48–96 (step 16) | Filters in 2nd block (if conv_blocks ≥ 2) |
| conv_filters3 | Int | 64–128 (step 32) | Filters in 3rd block (if conv_blocks = 3) |
| conv_ksize1 | Int | 7–15 (step 2) | Kernel size in 1st block |
| conv_ksize2 | Int | 7–9 (step 2) | Kernel size in 2nd block (if conv_blocks ≥ 2) |
| conv_ksize3 | Int | 3–5 (step 2) | Kernel size in 3rd block (if conv_blocks = 3) |
| conv_batchnorm | Boolean | True/False | BatchNorm after conv block |
| conv_drop | Boolean | True/False | Dropout after conv block |
| conv_drop_rate | Float | 0.1–0.3 (step 0.1) | Dropout rate (if conv_drop is True) |
| rnn_type | Choice | SimpleRNN, LSTM, GRU | Type of recurrent layer |
| rnn_layers | Int | 1–2 | Number of recurrent layers |
| rnn_units_scan | Int | 16–512 (step 248) | RNN units for scan input |
| rnn_units_vel | Int | 48–90 (step 16) | RNN units for velocity input |
| rnn_batchnorm | Boolean | True/False | BatchNorm after RNN layers |
| merge_batchnorm | Boolean | True/False | BatchNorm after merging scan/vel paths |
| dense_layers | Int | 1–2 | Number of dense layers before output |
| dense_units1 | Int | 256–768 (step 256) | Units in first dense layer |
| dense_units2 | Int | 8–32 (step 8) | Units in second dense layer (if dense_layers = 2) |
| dense_batchnorm | Boolean | True/False | BatchNorm after dense layer |
| dense_drop | Boolean | True/False | Dropout after dense layer |
| dense_drop_rate | Float | 0.1–0.3 (step 0.1) | Dropout rate after dense layer |
| learning_rate | Float | 0.001–0.005 (step 0.001) | Learning rate for Adam optimiser |
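The search space in the table is conditional: some hyperparameters only exist when others take particular values. The sketch below draws one configuration by plain random sampling, purely to illustrate that structure; the optimisation itself may have used a more sample-efficient strategy such as Hyperband or Bayesian optimisation. The helper names are hypothetical, ranges are taken literally from the table (so `rnn_units_vel` yields 48, 64 or 80), and making `dense_drop_rate` depend on `dense_drop` is an assumption mirroring `conv_drop_rate`.

```python
import random
from typing import Any, Dict


def pick_int(rng: random.Random, lo: int, hi: int, step: int = 1) -> int:
    """Uniformly pick an integer from lo, lo+step, ... up to hi (inclusive)."""
    return rng.choice(list(range(lo, hi + 1, step)))


def pick_float(rng: random.Random, lo: float, hi: float, step: float) -> float:
    """Uniformly pick a float from lo, lo+step, ... up to hi (inclusive)."""
    n = int(round((hi - lo) / step))
    return round(lo + step * rng.randint(0, n), 10)  # round away float noise


def sample_config(seed: int) -> Dict[str, Any]:
    """Draw one configuration from the search space in the table above."""
    rng = random.Random(seed)
    cfg: Dict[str, Any] = {}

    # Convolutional front end; deeper blocks exist only if conv_blocks allows.
    cfg["conv_blocks"] = pick_int(rng, 1, 3)
    cfg["conv_block_style"] = rng.choice([1, 2, 3, 4])
    cfg["conv_filters1"] = pick_int(rng, 16, 48, 16)
    cfg["conv_ksize1"] = pick_int(rng, 7, 15, 2)
    if cfg["conv_blocks"] >= 2:
        cfg["conv_filters2"] = pick_int(rng, 48, 96, 16)
        cfg["conv_ksize2"] = pick_int(rng, 7, 9, 2)
    if cfg["conv_blocks"] == 3:
        cfg["conv_filters3"] = pick_int(rng, 64, 128, 32)
        cfg["conv_ksize3"] = pick_int(rng, 3, 5, 2)
    cfg["conv_batchnorm"] = rng.choice([True, False])
    cfg["conv_drop"] = rng.choice([True, False])
    if cfg["conv_drop"]:
        cfg["conv_drop_rate"] = pick_float(rng, 0.1, 0.3, 0.1)

    # Recurrent layers for the scan and velocity inputs.
    cfg["rnn_type"] = rng.choice(["SimpleRNN", "LSTM", "GRU"])
    cfg["rnn_layers"] = pick_int(rng, 1, 2)
    cfg["rnn_units_scan"] = pick_int(rng, 16, 512, 248)
    cfg["rnn_units_vel"] = pick_int(rng, 48, 90, 16)
    cfg["rnn_batchnorm"] = rng.choice([True, False])
    cfg["merge_batchnorm"] = rng.choice([True, False])

    # Dense head and optimiser.
    cfg["dense_layers"] = pick_int(rng, 1, 2)
    cfg["dense_units1"] = pick_int(rng, 256, 768, 256)
    if cfg["dense_layers"] == 2:
        cfg["dense_units2"] = pick_int(rng, 8, 32, 8)
    cfg["dense_batchnorm"] = rng.choice([True, False])
    cfg["dense_drop"] = rng.choice([True, False])
    if cfg["dense_drop"]:  # assumed conditional, mirroring conv_drop_rate
        cfg["dense_drop_rate"] = pick_float(rng, 0.1, 0.3, 0.1)
    cfg["learning_rate"] = pick_float(rng, 0.001, 0.005, 0.001)
    return cfg
```

Seeding a dedicated `random.Random` instance per draw makes each sampled configuration reproducible, which helps when re-training a promising candidate.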

| Metric | No Disability | Disability | LAD | Recovery |
|---|---|---|---|---|
| Time to complete a lap (s) | 179.3 (4.8) | 325.7 (23.8) | 247.9 (11.6) | 53.2% |
| Avg. dist. from planned path (cm) | 8.7 (0.3) | 23.0 (1.6) | 16.2 (1.1) | 47.5% |
| Frac. of time clearing collisions (%) | 2.0 (0.8) | 23.3 (1.4) | 3.6 (0.8) | 92.4% |
| Num. of instructor interventions | 0.0 (0.0) | 3.2 (1.2) | 0.2 (0.4) | 93.8% |

| Metric | No Disability | Disability | LAD | Recovery |
|---|---|---|---|---|
| Time to complete a lap (s) | 179.3 (4.8) | 298.7 (26.9) | 252.8 (9.8) | 38.4% |
| Avg. dist. from planned path (cm) | 8.7 (0.3) | 20.8 (0.2) | 15.0 (1.3) | 48.0% |
| Frac. of time clearing collisions (%) | 2.0 (0.8) | 21.8 (5.2) | 5.4 (1.8) | 82.8% |
| Num. of instructor interventions | 0.0 (0.0) | 2.0 (0.6) | 0.8 (0.4) | 60.0% |
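The Recovery column is not defined in this extract. Its values are consistent, within rounding of the reported means, with the share of the disability-induced degradation that LAD (learning assistance by demonstration) removes, Recovery = (Disability − LAD) / (Disability − No Disability). Since this formula is inferred from the numbers rather than stated in the text, the sketch below should be read as a plausible reconstruction.

```python
def recovery(no_disability: float, disability: float, lad: float) -> float:
    """Percentage of the disability-induced degradation removed by LAD.

    Assumes lower values are better, which holds for all four metrics.
    """
    gap = disability - no_disability
    if gap == 0:
        raise ValueError("no degradation to recover from")
    return 100.0 * (disability - lad) / gap


# Means from the first results table: (No Disability, Disability, LAD).
table1 = {
    "Time to complete a lap (s)": (179.3, 325.7, 247.9),
    "Avg. dist. from planned path (cm)": (8.7, 23.0, 16.2),
    "Frac. of time clearing collisions (%)": (2.0, 23.3, 3.6),
    "Num. of instructor interventions": (0.0, 3.2, 0.2),
}
for name, (nd, d, lad) in table1.items():
    print(f"{name}: {recovery(nd, d, lad):.1f}%")
```

Small differences in the last digit (for example 53.1% here against the reported 53.2% for lap time) are expected, because the published means are themselves rounded to one decimal place.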

| Metric | Driver | Model 1 | Model 2 |
|---|---|---|---|
| Time to complete a lap (s) | Driver 1 | 23.9% | −35.5% |
| Time to complete a lap (s) | Driver 2 | −31.2% | 15.3% |
| Avg. dist. from planned path (cm) | Driver 1 | 29.4% | −47.1% |
| Avg. dist. from planned path (cm) | Driver 2 | −42.7% | 27.8% |
| Frac. of time clearing collisions (%) | Driver 1 | 84.6 | 58.9 |
| Frac. of time clearing collisions (%) | Driver 2 | 42.3 | 75.2 |
| Num. of instructor interventions | Driver 1 | 93.8% | −31.2% |
| Num. of instructor interventions | Driver 2 | −10.0% | 60.0% |
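One way to read the cross-evaluation table is to check whether each driver obtains its best result from its "own" model. The sketch below assumes that the entries are recovery percentages (higher is better) and that Model 1 and Model 2 were trained on demonstrations collected for Driver 1 and Driver 2, respectively; both are assumptions drawn from the table layout, not statements from the text. The dictionary and function names are hypothetical.

```python
from typing import Dict, List

# Values from the cross-evaluation table: rows = Driver 1, Driver 2;
# columns = Model 1, Model 2.
PERSONALISATION: Dict[str, List[List[float]]] = {
    "Time to complete a lap": [[23.9, -35.5], [-31.2, 15.3]],
    "Avg. dist. from planned path": [[29.4, -47.1], [-42.7, 27.8]],
    "Frac. of time clearing collisions": [[84.6, 58.9], [42.3, 75.2]],
    "Num. of instructor interventions": [[93.8, -31.2], [-10.0, 60.0]],
}


def own_model_is_best(matrix: List[List[float]]) -> bool:
    """True if every driver (row i) scores highest with model i (column i)."""
    return all(row[i] == max(row) for i, row in enumerate(matrix))


for metric, matrix in PERSONALISATION.items():
    print(metric, own_model_is_best(matrix))
```

Under these assumptions the check holds for all four metrics, and the negative off-diagonal entries indicate that a model tuned to one driver can perform worse than no assistance for the other.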


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Schettino, V.B.; Santos, M.F.d.; Mercorelli, P. Investigating Learning Assistance by Demonstration for Robotic Wheelchairs: A Simulation Approach. Robotics 2025, 14, 136. https://doi.org/10.3390/robotics14100136