1. Introduction

Electric vehicles (EVs) have experienced rapid growth in the last decade due to their environmental friendliness, contribution to energy security, and savings in operating and maintenance costs. However, this growth has also increased the use of lithium-ion batteries (LiBs). The volume of spent LiBs is anticipated to reach 2 million metric tonnes per year by 2030 [1]. Consequently, the need to dispose of these batteries has grown sharply. Although LiBs are a key component of home energy storage systems, their production and disposal processes can lead to various environmental challenges. The sector is therefore increasing its efforts to effectively recycle used household batteries and to develop second-life applications [2], which are prioritized in the circular economy for the monetary savings and other benefits they can provide.

While the rise of EVs highlights their environmental and economic advantages, the recycling of LiBs using robotic technology is an important area for the sustainability of this technology. Robotic battery recycling, which has drawn great attention but is still in the research phase, has the potential to offer an effective solution to the recycling process. Its development has generally been addressed in studies focusing on specific tasks such as screw removal [3,4,5,6,7,8], grasping [9], and cutting [10].

Disassembling batteries is a critical operation within industrial robotics and automation processes, particularly relevant to EV applications. These applications often encompass the maintenance, repair, or recycling of EV battery packs. Central to automating these operations are task planners, which equip robots with the capabilities to identify, manipulate, and accurately sequence the placement of objects. A proficient task planner is essential for the precise, safe, and logical execution of battery removal tasks. Moreover, achieving the timely, efficient, and safe dismantling of batteries is imperative for meeting economic and sustainability objectives.

Choux et al. [11] and Wang et al. [12] previously proposed task planners within a robot-arm framework for EV battery disassembly. However, task planning with a single robot can limit efficiency, especially for complex and large-scale tasks. Existing studies in the literature generally do not consider multi-robot systems, which has limited their time performance, particularly in time-critical applications.

The differences between disassembly methods are summarized in Figure 1. The multi-robot system excels in most categories, notably in process time and workspace adaptability, making it the most effective option despite a marginally increased risk of collision. The single-robot system represents a middle ground: it performs well overall and offers high safety and a low collision risk, but its efficiency is constrained by its operational workspace. The manual method presents mixed outcomes; it is efficient and poses a low collision risk but falls short in safety and adapts only moderately to workspace constraints. Hence, this paper emphasizes the importance of using multiple robots in disassembly settings. Some studies demonstrate that the time difference between a manual process and a robot-automated process is minimal [13], with a robot taking only 1.05 s for every second a human takes for a task. It has also been shown that adding more humans can increase the disassembly speed. Given this human-to-robot time factor, it can be assumed that more robots will perform tasks faster and cover a larger workspace.
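To make the human-to-robot argument concrete, the following minimal Python sketch computes an idealized completion time. It assumes, for illustration only, that a job a human finishes in `human_time_s` seconds can be split perfectly across `n_robots` robots, each working at the 1.05 robot-to-human time ratio reported in [13]. The helper name and the 600 s job are hypothetical, and real systems add coordination, waiting, and collision-avoidance overheads, so the result is an optimistic lower bound rather than a prediction.

```python
# Idealized sketch: assumes perfect parallelism with no coordination,
# waiting, or collision-avoidance overhead (an optimistic lower bound).
ROBOT_TO_HUMAN_TIME_RATIO = 1.05  # robot seconds per human second, as reported in [13]


def estimated_robot_time(human_time_s: float, n_robots: int) -> float:
    """Hypothetical helper: completion time for n robots sharing a job
    that takes one human human_time_s seconds."""
    if n_robots < 1:
        raise ValueError("n_robots must be at least 1")
    return ROBOT_TO_HUMAN_TIME_RATIO * human_time_s / n_robots


for n in (1, 2, 4):
    print(f"{n} robot(s): {estimated_robot_time(600.0, n):.1f} s")
```

Under these assumptions, one robot needs 630 s for a 600 s human job and two robots need 315 s; the gap between such an ideal figure and measured times is one way to quantify multi-robot coordination overhead.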

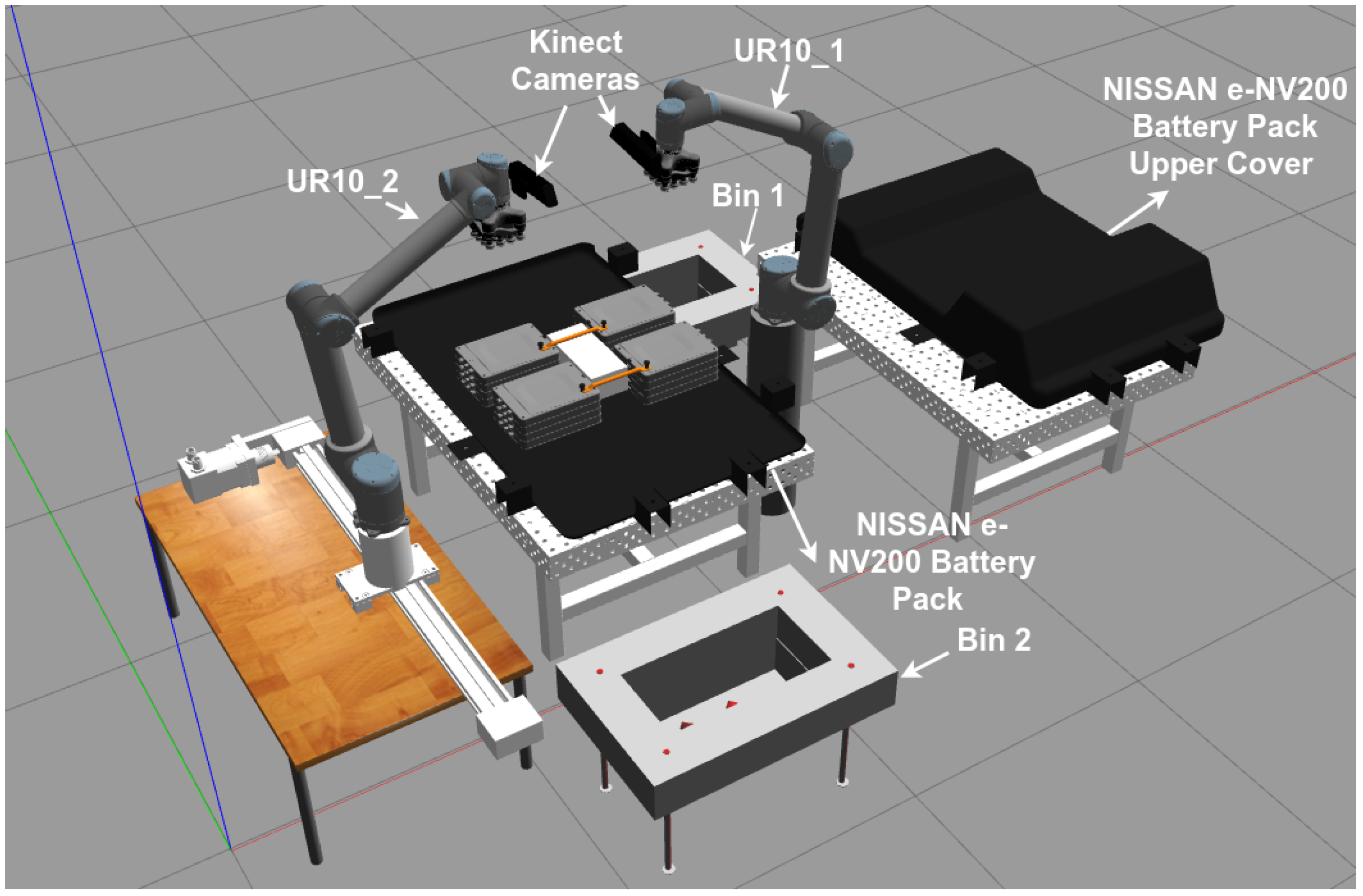

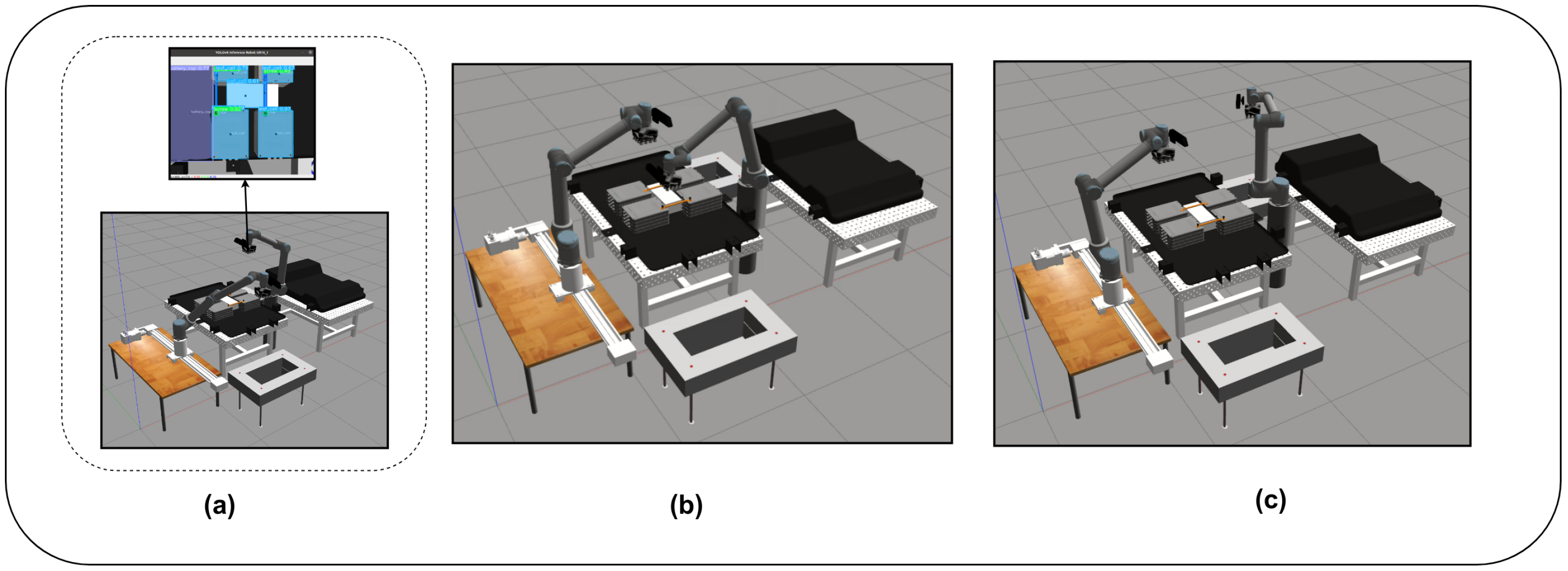

The main aim of this study is to design a task planner (Figure 2) that can disassemble an EV battery pack without prior knowledge of specific product locations, automatically creating disassembly plans and sequences. The key functions of the proposed task planner are determining the LiB components and their locations, calculating the movement cost of each component according to its distance from the robots, logically sorting the objects according to this distance, and finally, picking and placing the objects by moving the robots to the determined disassembly locations. By using more than one robot in the environment, the disassembly and removal tasks, which are the main steps of battery removal, are accomplished without collision. The disassembly steps were simulated in the Gazebo simulation environment [14]. Object detection was performed with YOLOV8 to obtain the object positions and to let the robots dynamically select which task and object to disassemble to complete the primary task. The approach was successfully implemented and validated through experiments using different motion planners in Gazebo. These experiments involved the disassembly of a simplified version of the NISSAN e-NV200 battery pack containing its various components, which served as a proof of concept for our proposed multi-robot hybrid task planner, designed to operate in various scenes.
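The distance-based cost and sorting steps described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes the movement cost is the straight-line (Euclidean) distance between a robot's base and an object's detected centroid in a common world frame, and the robot base poses, object names, and coordinates are hypothetical values chosen for the example.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def distance_cost(robot_base: Point, obj_pos: Point) -> float:
    """Assumed movement cost: straight-line distance from robot base to object."""
    return math.dist(robot_base, obj_pos)


def sorted_queue(robot_base: Point, objects: Dict[str, Point]) -> List[str]:
    """Object names ordered from cheapest (nearest) to most expensive for one robot."""
    return sorted(objects, key=lambda name: distance_cost(robot_base, objects[name]))


# Hypothetical robot bases and detected object centroids (metres, world frame).
robots = {"UR10_1": (0.0, 0.0, 0.0), "UR10_2": (2.0, 0.0, 0.0)}
detections = {
    "screw_a": (0.3, 0.4, 0.0),
    "screw_b": (1.8, 0.2, 0.0),
    "cable_1": (1.0, 0.5, 0.0),
}

for robot, base in robots.items():
    print(robot, sorted_queue(base, detections))
```

Here UR10_1 would visit `screw_a`, then `cable_1`, then `screw_b`, while UR10_2 would start with `screw_b`. Straight-line distance is only a proxy; a planner-reported path length or a joint-space distance could replace `distance_cost` without changing the sorting logic.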

2. Related Works

Many studies have investigated battery disassembly, typically categorized into two groups: semi-autonomous and fully autonomous. Semi-autonomous studies involve robots assisting humans in collaborative tasks, whereas fully autonomous studies entail robots undertaking complex and repetitive tasks independently. Semi-autonomous approaches often raise safety concerns, as humans and robots share the same workspace, potentially complicating human–robot interaction and necessitating the precise management of safety protocols. Conversely, autonomous systems offer significant advantages in terms of speed, reliability, and performance while reducing reliance on human labour.

Wegener et al. [4] and Chen et al. [5] presented semi-autonomous scenarios in which robots perform simpler tasks, such as removing screws and bolts, while humans engage in more complex and diversified tasks. The work by Kay et al. [10] highlighted an approach designed to support human–robot cooperation. It analysed the disassembly process mainly by monitoring human workers, including the times and actions, such as cutting and grasping, involved in each operation. Another study [15] focused on tracking different types of robots combined in a modular configuration using a behaviour-tree-based framework, with battery parts placed in a straight line; however, it did not address a logical disassembly process and dealt only with retrieving previously disassembled components.

In fully autonomous operations, disassembly is typically carried out using a single robot. Wang et al. [12] conducted a study aimed at separating nested objects in a simulated environment. In a study by Choux et al. [11], a single robot performed a hierarchical disassembly process but was limited to a predefined hierarchy. Our framework implements a similar approach but with dynamic hierarchy updates and multiple robots. Furthermore, research on autonomous disassembly seeks to enhance efficiency by enabling robots to plan and execute repetitive activities in unstructured situations while receiving visual and tactile inputs. Haptic devices have been used in the literature to aid robotic disassembly [16,17]. For instance, tactile devices were employed to develop a screwdriving robot that transfers to the robot the sensations felt by humans when removing screws [17].

Continuous developments in robotics have led to significant advances in the design and implementation of robot systems capable of performing complex tasks. Autonomous systems include those developed with a single robot as well as those involving the cooperation of multiple robots. The use of multiple collaborative robots has gained prominence in industry due to its effectiveness in minimizing labour costs and production errors. When robots share the same environment, they can typically cooperate smoothly, with minimal risk of collision, as long as they do not intrude into each other’s workspaces. However, coordinating multiple arms introduces new challenges, including computational difficulties stemming from the increased degrees of freedom and the combinatorial growth in operations that multiple manipulators can perform, such as handoffs between arms. Palleschi et al. [18] addressed the complexity arising when multiple robots share the same environment. Although their approach reduces planning complexity, the solutions found do not allow actions specific to multi-robot systems to be executed simultaneously, which our framework does allow.

Tianyang et al. [19] reported a method to coordinate tasks performed by multiple robots simultaneously. This approach combines a motion planner based on the RRT-Connect algorithm with a time scheduler solved by permutation. While the method could be extended to up to five robots, it timed out with six robots due to computational limitations. Zhu et al. [20] studied the flexible job scheduling of multi-robot manipulators operating in a dynamic environment and the dual-resource flexible job shop scheduling problem (FJSP). The paper used a gate-layer neural network feature to address multitask coordination. However, since this feature was designed to be subjective within their framework, it discriminated poorly between different tasks. To avoid this problem, our proposed task planner limits multi-robot multitasking complexity by calculating the distance cost from each robot to each object and synchronizing the robots’ movements. In this way, robots select objects in the environment based on distance, and the image is updated before each robot begins its targeted task.
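The synchronized, distance-based selection with an image update before each task can be illustrated with the minimal sketch below. It assumes, as a simplification of the paper's synchronization, that the robots take turns, that `detect` is a hypothetical callable returning the currently visible objects and their positions, and that an object claimed by one robot is immediately excluded for the other; motion execution is omitted.

```python
import math
from typing import Callable, Dict, List, Set, Tuple

Point = Tuple[float, float, float]


def allocate_synchronized(
    robots: Dict[str, Point],
    detect: Callable[[], Dict[str, Point]],
) -> List[Tuple[str, str]]:
    """Return the (robot, object) order produced by turn-taking nearest-object selection."""
    if not robots:
        return []
    schedule: List[Tuple[str, str]] = []
    claimed: Set[str] = set()
    while True:
        for robot, base in robots.items():
            # Re-detect before every selection so positions reflect the current scene.
            visible = {name: pos for name, pos in detect().items() if name not in claimed}
            if not visible:
                return schedule
            # Pick the cheapest (nearest) remaining object for this robot.
            target = min(visible, key=lambda name: math.dist(base, visible[name]))
            claimed.add(target)  # a claimed object can no longer be chosen by another robot
            schedule.append((robot, target))


# Hypothetical scene: a static detector that always returns the same objects.
scene = {
    "screw_a": (0.3, 0.4, 0.0),
    "screw_b": (1.8, 0.2, 0.0),
    "cable_1": (1.0, 0.5, 0.0),
    "cable_2": (0.9, -0.5, 0.0),
}
robots = {"UR10_1": (0.0, 0.0, 0.0), "UR10_2": (2.0, 0.0, 0.0)}
print(allocate_synchronized(robots, lambda: dict(scene)))
```

In a real system, `detect` would wrap the vision pipeline, so re-detecting before every choice lets each robot react to objects that were moved or removed by the other robot's previous action.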

Touzani et al. [21] presented an effective iterative algorithm that produces high-quality solutions to the task-sequencing problem that arises when multiple robots are used. Their framework also includes robot–robot and robot–obstacle collision avoidance and plans a collision-free trajectory towards the targeted position. Similarly, our proposed task planner plans collision-free trajectories, so that the risk of collision arising from the use of multiple robots is effectively prevented.

Inspired by human behaviour, another study aimed to assemble wooden blocks using dual arms [22], proposing an assembly planner to determine the optimal assembly sequence. Although the use of multiple robots has become quite common recently, only a limited number of studies have focused on the disassembly process. Fleischer et al. [23] discuss the importance of sustainable processing strategies for end-of-life products, with a particular focus on the disassembly of traction batteries and motors in battery EVs, and propose the Agile Disassembly System. In this concept, two operation-specific six-axis robots are used kinematically: one robot is responsible for handling operations, while the other is used for sorting operations. Similar to that study, our proposed task planner enables multiple robots to perform their tasks effectively by sorting objects according to their distance from the robots and the disassembly logic. In our planner, however, there is no task distinction between robots; instead, each robot can perform all operations based on dynamic logic and hierarchy. This approach allows tasks to be completed more quickly and efficiently while also reducing the risk of collisions. With these features, the proposed planner offers a high-performance solution for managing the complex tasks of multi-robot systems.
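Combining disassembly logic with distance, as described above, can be sketched by letting a hierarchy decide which objects are eligible and letting distance order them. The hierarchy below (screws, then cables, then modules) is a hypothetical example rather than the hierarchy used in the paper, and the Euclidean distance is again an assumed cost.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]

# Hypothetical hierarchy: every lower level must be cleared before the next one.
LEVEL = {"screw": 0, "cable": 1, "module": 2}


def eligible_sorted(base: Point, objects: Dict[str, Tuple[str, Point]]) -> List[str]:
    """Objects the robot may remove now, nearest first.

    `objects` maps name -> (component type, position). Only components at the
    lowest remaining hierarchy level are eligible (logic first), and those are
    ordered by distance from the robot base (distance second).
    """
    if not objects:
        return []
    lowest = min(LEVEL[kind] for kind, _ in objects.values())
    eligible = [name for name, (kind, _) in objects.items() if LEVEL[kind] == lowest]
    return sorted(eligible, key=lambda name: math.dist(base, objects[name][1]))


scene = {
    "module_1": ("module", (0.5, 0.0, 0.1)),
    "cable_1": ("cable", (0.2, 0.1, 0.2)),
    "screw_a": ("screw", (1.6, 0.3, 0.2)),
    "screw_b": ("screw", (0.4, 0.3, 0.2)),
}
print(eligible_sorted((0.0, 0.0, 0.0), scene))
```

Even though `cable_1` and `module_1` are closer to the robot, only the screws are returned, nearest first; once they are removed, the next call exposes the cable. Because every robot evaluates the same rule against the current scene, no robot needs a fixed role.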

Task planners increase flexibility and efficiency in robotic applications by effectively managing multiple components and modules. Recently, much research has focused on behaviour trees to automate robotic manipulation tasks [24,25]; in these studies, behaviour tree libraries generally emerged as the most widely used tool. Behaviour trees (BTs) are models for designing robotic behaviours that emerged in response to real-world challenges. They are tree structures that outline the steps robots must follow to complete certain tasks, thus modelling the robots’ decision-making processes and allowing them to adapt to environmental conditions. In addition, Torres et al. [8], in a study on the effective use of multiple robots in the same working environment, aimed to assign different tasks to each robot at different time intervals. In this decision-tree-based study, the authors distinguished two categories of tasks, common and parallel, and used decision trees to allocate work across robots, reducing disassembly time. Their task planner prevents robot collisions by assigning each robot a specified task at a specific moment, considering each robot’s workspace. However, this scheme does not allow robots to perform the same task concurrently. In general, the literature indicates that automatic disassembly operations for EV batteries are mostly carried out by a single robot with vision systems and some task planning, and that such systems lack the ability to cope with uncertainties arising during disassembly and mostly cannot be generalized to new battery models [11,12]. This study aims to address this lack of multi-robot systems and the need to adapt to changing component positions and task order, thereby improving the efficiency of automated disassembly operations. The proposed task planner can swiftly adapt to changing object locations, which gives it an advantage in flexibility and practical applicability, especially when multiple robots interact with the disassembly components. Consequently, the variations and uncertainties that arise in the process can be effectively addressed, leading to more efficient and reliable operations.
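The behaviour-tree structure described above can be illustrated with a minimal Python sketch of the two classic control-flow nodes, Sequence and Fallback (also called Selector). It is a simplified illustration, not the API of any particular BT library: it omits the RUNNING status that practical libraries use for long-running actions, and the action names in the example are hypothetical steps of a pick-and-place task.

```python
from enum import Enum
from typing import Callable, List


class Status(Enum):
    SUCCESS = 1
    FAILURE = 2


class Action:
    """Leaf node wrapping a callable that returns True on success."""

    def __init__(self, name: str, fn: Callable[[], bool]) -> None:
        self.name = name
        self.fn = fn

    def tick(self) -> Status:
        return Status.SUCCESS if self.fn() else Status.FAILURE


class Sequence:
    """Ticks children in order and fails as soon as one child fails."""

    def __init__(self, children: list) -> None:
        self.children = children

    def tick(self) -> Status:
        for child in self.children:
            if child.tick() is Status.FAILURE:
                return Status.FAILURE
        return Status.SUCCESS


class Fallback:
    """Ticks children in order and succeeds as soon as one child succeeds."""

    def __init__(self, children: list) -> None:
        self.children = children

    def tick(self) -> Status:
        for child in self.children:
            if child.tick() is Status.SUCCESS:
                return Status.SUCCESS
        return Status.FAILURE


log: List[str] = []


def step(name: str, ok: bool = True) -> Action:
    """Hypothetical action that records its name and reports success or failure."""
    def run() -> bool:
        log.append(name)
        return ok
    return Action(name, run)


# If the first vacuum grasp fails, the Fallback tries a regrasp before placing.
tree = Sequence([
    step("detect_object"),
    Fallback([step("grasp_with_vacuum", ok=False), step("regrasp")]),
    step("place_in_bin"),
])
print(tree.tick(), log)
```

The Fallback node is what lets the tree adapt at run time: a failed grasp does not abort the task but triggers an alternative branch, and only if every alternative fails does the enclosing Sequence fail.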

This article is organized as follows. Section 2 discusses in detail how the conducted study relates to the literature. Section 3 presents general information about the created simulation environment and the task-planner-driven algorithms used in it. Section 4 describes the experimental setup in detail, providing information about the hardware, software, and other relevant components used for the experiments. Section 5 presents the results of the conducted study, including a detailed performance evaluation of the proposed task planner using different planners in a simulation environment, and Section 6 concludes the paper.

5. Results and Discussion

In this section, a detailed evaluation of the object segmentation and robot motion-planning processes performed in a simulation environment is presented. The proposed framework is designed to offer flexibility and adjustability to accommodate diverse research requirements. The battery model, robot model, vision algorithm, and planning and control algorithm can be modified as required. Users have the flexibility to tailor components according to their specific needs by following the methodologies outlined in this paper.

5.1. Results of Object Segmentation

After training, various metrics were obtained to evaluate the performance of the model, including the F1 score, precision–recall curve, and class-wise accuracy.

Figure 10a shows how the model’s performance varies with the confidence threshold, with particularly good results at low confidence thresholds. The shape of the curve suggests that it may be advantageous to run the model at lower confidence levels, especially in scenarios requiring high recall (i.e., minimizing missed true positives). However, lower confidence thresholds may increase false positives, meaning that the model may identify objects that are not present.

Figure 10c shows that the recall value is close to 1, whereas the precision value is significantly lower. This indicates that the model identifies nearly all true positives (correct detections) but also generates many false positives (incorrect predictions). Thus, the model reliably detects objects with few misses but tends to raise false alarms through redundant or erroneous predictions. This reflects strong recall performance but highlights a need to improve precision.
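The precision–recall trade-off discussed above follows directly from the standard definitions: precision = TP/(TP + FP), recall = TP/(TP + FN), and F1 is their harmonic mean. The minimal sketch below uses a handful of hypothetical detections (confidence scores and whether each matches a distinct ground-truth object) to show how lowering the confidence threshold raises recall at the cost of precision; the numbers are illustrative and are not the paper's results.

```python
from typing import List, Tuple


def prf1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall, and F1 from true-positive, false-positive, and false-negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def counts_at_threshold(scores: List[float], matched: List[bool],
                        n_ground_truth: int, threshold: float) -> Tuple[int, int, int]:
    """Keep predictions with confidence >= threshold and count TP, FP, and FN.

    `matched[i]` says whether prediction i matches a distinct ground-truth object.
    """
    kept = [m for s, m in zip(scores, matched) if s >= threshold]
    tp = sum(kept)
    fp = len(kept) - tp
    fn = n_ground_truth - tp
    return tp, fp, fn


# Hypothetical detections for a scene with 4 ground-truth objects.
scores = [0.95, 0.90, 0.60, 0.40, 0.30, 0.20]
matched = [True, True, True, False, True, False]

for threshold in (0.1, 0.25, 0.7):
    p, r, f1 = prf1(*counts_at_threshold(scores, matched, 4, threshold))
    print(f"threshold={threshold}: precision={p:.2f} recall={r:.2f} F1={f1:.2f}")
```

At the lowest threshold, recall reaches 1.0 while precision drops to about 0.67, the same qualitative pattern as in Figure 10c, whereas the highest threshold gives perfect precision but misses half of the objects.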

Through training, the model learns to correctly detect and classify objects. The training results are shown in Figure 11. When running object detection, the vision system equipped with YOLOV8 takes a total of 36.02 s to detect and localize all objects in the scene during the initial start-up procedure. Subsequent updates of the detected items occur at an average rate of 8 fps.

5.2. Examining Task Planner Performance

In this section, the performance of the task planner in a simulation environment is evaluated by comparing different planners available in MoveIt. The RRT, RRTConnect, and RRTStar planners were chosen to test the proposed task planner in the simulation environment. Pre-planning algorithms rely on full knowledge of the environment model to predetermine a course of action. This speeds up planning but may fail in practice due to model errors or changing conditions. In contrast, sampling-based algorithms such as RRT explore the environment by drawing random samples at each step and determine the course of action as they go, which allows them to respond adaptively to uncertainties. The scalability of the selected algorithms and their effectiveness in complex, changing environments were key factors in their selection. All three planners have advantages and disadvantages. The RRT planner can be easily applied to different kinematic and dynamic robot models and explores the environment quickly, but it does not guarantee that the paths found are optimal. Only RRTStar is asymptotically optimal with respect to the defined optimization objective, which is why it usually takes the longest planning time. RRTConnect, on the other hand, grows two RRT trees, one from the start and one from the goal, in the robot’s configuration space and tries to connect them, which typically lets it find a solution quickly; like RRT, it is probabilistically complete but not optimal. In this study, battery components were disassembled using these planners, and the robots successfully planned trajectories for their tasks even when the trajectory constraints were complex due to the positions of the other robots. These trajectory planners are among the most widely used and easily accessible in MoveIt. Nevertheless, any other trajectory planner could be used with our task planner, as these planners are merely the means of reaching a position; task completion depends more on our task planner framework. The obtained results are given in Table 1, which also lists the names of the objects used in the experimental environment and the robot carrying out each operation.
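To illustrate how a sampling-based planner explores the space, the sketch below implements a basic RRT in a 2D workspace with circular obstacles. It is a didactic simplification, not MoveIt or OMPL code: real planners work in the robot's joint space with full collision checking, and the workspace bounds, obstacle, step size, goal bias, and seed are hypothetical. RRTConnect and RRTStar build on this same loop, adding a second tree grown from the goal and a rewiring step, respectively.

```python
import math
import random
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]
Circle = Tuple[float, float, float]  # (centre x, centre y, radius)


def segment_free(p: Point, q: Point, obstacles: List[Circle], resolution: float = 0.01) -> bool:
    """Approximate collision check: sample the segment p->q densely against circles."""
    n = max(1, int(math.dist(p, q) / resolution))
    for i in range(n + 1):
        t = i / n
        x = p[0] + t * (q[0] - p[0])
        y = p[1] + t * (q[1] - p[1])
        if any(math.hypot(x - cx, y - cy) <= r for cx, cy, r in obstacles):
            return False
    return True


def rrt(start: Point, goal: Point, obstacles: List[Circle],
        bounds: Tuple[float, float] = (0.0, 10.0), step_size: float = 0.5,
        goal_bias: float = 0.1, max_iters: int = 5000, seed: int = 0) -> Optional[List[Point]]:
    """Basic RRT: returns start-to-goal waypoints, or None if no path is found."""
    rng = random.Random(seed)
    nodes: List[Point] = [start]
    parent: Dict[int, Optional[int]] = {0: None}
    for _ in range(max_iters):
        # 1. Sample a random point (occasionally the goal itself, to bias growth).
        if rng.random() < goal_bias:
            sample = goal
        else:
            sample = (rng.uniform(*bounds), rng.uniform(*bounds))
        # 2. Find the nearest existing node in the tree.
        i_near = min(range(len(nodes)), key=lambda k: math.dist(nodes[k], sample))
        near = nodes[i_near]
        d = math.dist(near, sample)
        if d == 0.0:
            continue
        # 3. Steer at most step_size from the nearest node towards the sample.
        s = min(step_size, d)
        new = (near[0] + s * (sample[0] - near[0]) / d, near[1] + s * (sample[1] - near[1]) / d)
        if not segment_free(near, new, obstacles):
            continue
        nodes.append(new)
        parent[len(nodes) - 1] = i_near
        # 4. Stop once the goal can be reached directly from the new node.
        if math.dist(new, goal) <= step_size and segment_free(new, goal, obstacles):
            path: List[Point] = [goal]
            i: Optional[int] = len(nodes) - 1
            while i is not None:
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None


path = rrt((1.0, 1.0), (9.0, 9.0), obstacles=[(5.0, 5.0, 1.5)])
if path is not None:
    length = sum(math.dist(a, b) for a, b in zip(path, path[1:]))
    print(f"{len(path)} waypoints, length {length:.2f}")
```

Because the samples are random, different seeds generally return different, non-optimal paths between the same start and goal, which is one reason trajectories produced by RRT-family planners can differ slightly from run to run.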

As observed in Table 1, there are no significant differences in the completion times of the disassembly process among the RRT, RRTConnect, and RRTStar planners, although the process using the RRTConnect planner was slightly faster than with the RRT and RRTStar planners. Note that the vision algorithm experienced some slowdowns due to computational limitations in the simulations; therefore, only the disassembly process times were recorded.

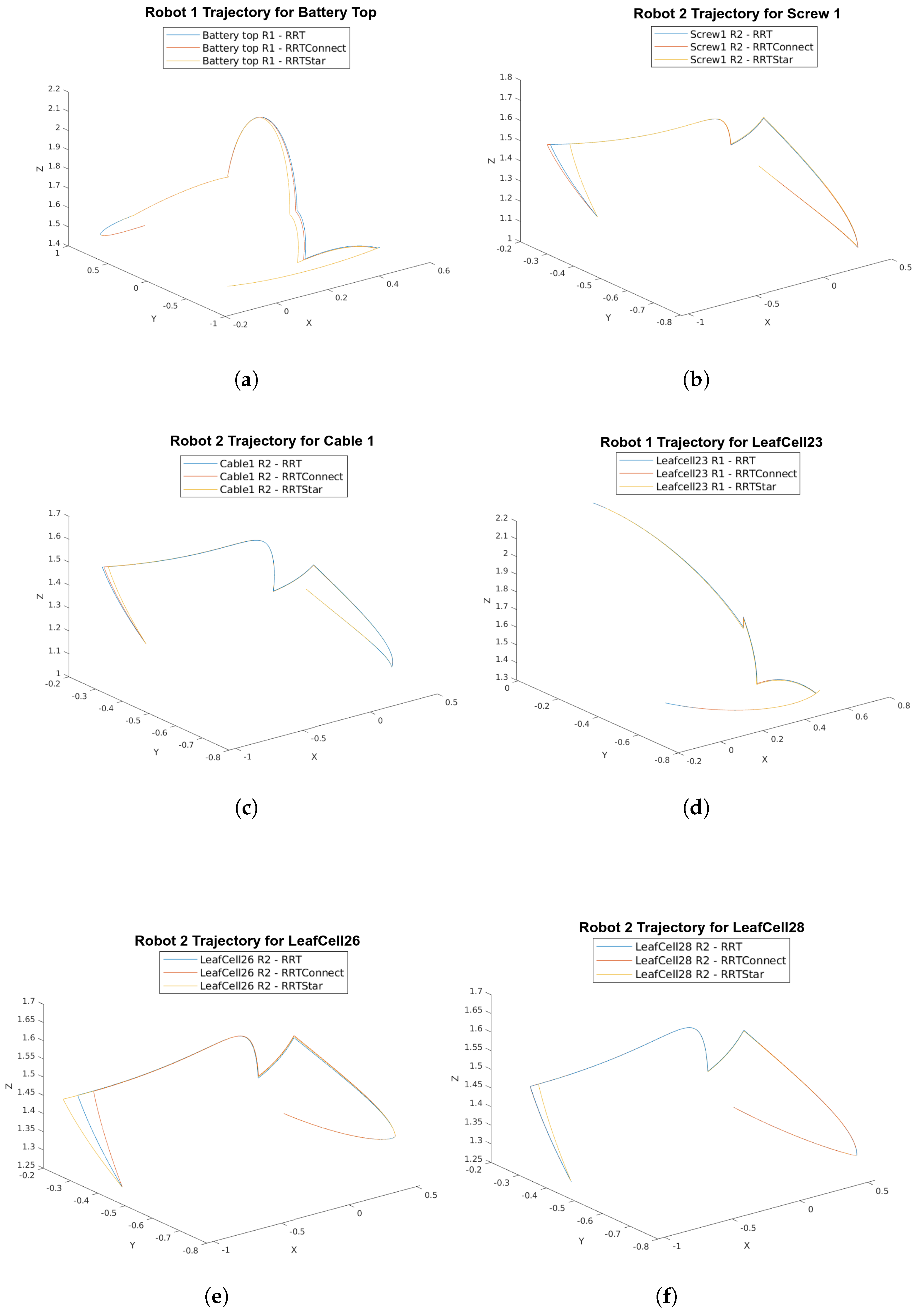

During disassembly, the length of the trajectory followed by each robot was calculated for the different planners. As seen in Table 2, the differences between the path lengths are quite small. In terms of the total distance travelled per robot, Robot 1 completed its tasks over the shortest distance with the RRT planner, while the distance increased from 3528.66 cm to 3532.93 cm when the same process was performed with the RRTStar planner. For the second robot, the opposite was observed: the total distance travelled was 3397.33 cm with the RRT planner and 3384.68 cm with the RRTStar planner. These variations are not significant and may be due to the detection of slightly different object centre positions by the vision algorithm.
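The trajectory lengths compared above can be computed as the sum of straight-line distances between consecutive end-effector positions. The sketch below assumes each trajectory is available as a list of Cartesian waypoints in centimetres (for example, obtained from forward kinematics of the planned joint states); the paper does not state exactly how the length was measured, so the waypoint source, the helper names, and the example values are assumptions.

```python
import math
from typing import List, Sequence, Tuple

Waypoint = Tuple[float, float, float]  # end-effector position (cm)


def path_length(waypoints: Sequence[Waypoint]) -> float:
    """Length of a piecewise-linear path through consecutive waypoints."""
    return sum(math.dist(a, b) for a, b in zip(waypoints, waypoints[1:]))


def total_distance(trajectories: List[List[Waypoint]]) -> float:
    """Total distance travelled by one robot over all of its per-object trajectories."""
    return sum(path_length(t) for t in trajectories)


# Hypothetical trajectories for two objects handled by the same robot.
robot_trajectories = [
    [(0.0, 0.0, 0.0), (30.0, 40.0, 0.0)],      # 50 cm
    [(30.0, 40.0, 0.0), (30.0, 40.0, 120.0)],  # 120 cm
]
print(total_distance(robot_trajectories))
```

Summing per-object trajectory lengths in this way yields a per-robot total comparable to the values in Table 2; the waypoint density matters, since a coarsely sampled curved path underestimates its true length.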

Graphs of the trajectories followed for six randomly selected objects are given in Figure 12. Although the three planners produce similar trajectories during the disassembly of the objects, there are small differences between the paths. These differences stem from the random sampling inherent to the planners and from changes in the pixel coordinates of the objects detected by the robots: because each planner computes a different motion path, the robot observes the scene from a different physical position and therefore obtains a slightly different goal.
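The link between detected pixel coordinates and robot goals can be illustrated with the standard pinhole back-projection used for RGB-D cameras: for a pixel (u, v) with depth Z along the optical axis, X = (u - cx)·Z/fx and Y = (v - cy)·Z/fy in the camera frame. The sketch below assumes that an object's goal is derived from its detected centre pixel in this way; the intrinsic values are hypothetical placeholders rather than calibrated Kinect parameters, and the transform from the camera frame to each robot's base frame is omitted.

```python
from typing import Tuple


def pixel_to_camera(u: float, v: float, depth: float,
                    fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float, float]:
    """Back-project pixel (u, v) with depth along the optical axis into camera coordinates (pinhole model)."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return x, y, depth


# Hypothetical intrinsics (pixels) and depth (metres).
FX = FY = 525.0
CX, CY = 319.5, 239.5
centre = pixel_to_camera(330.0, 240.0, 0.8, FX, FY, CX, CY)
shifted = pixel_to_camera(333.0, 240.0, 0.8, FX, FY, CX, CY)  # same object, detected 3 px to the right
print(f"goal shift along x: {(shifted[0] - centre[0]) * 1000:.1f} mm")
```

Under these assumptions, a 3-pixel change in the detected centre at 0.8 m moves the goal by about 4.6 mm, showing how pixel-level detection differences can translate into small goal differences of the kind discussed above.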

This paper has met its stated goals. It integrates multiple research areas, namely computer vision, robotics, task planning, and battery pack disassembly, and the resulting system was successfully demonstrated and compared across planners. The primary hardware elements, including an industrial robot, a 3D camera, and a vacuum gripper, have been interconnected to carry out fundamental system tasks such as object detection, pose estimation, decision-making, and component sorting. Consequently, the system can recognize the essential components of the objects slated for disassembly, accurately locate them, and guide the robots to their designated positions in a dynamically defined sequence. A key feature of the proposed task planner is the advantage gained from using more than one robot: one robot can access areas that another cannot reach or that lie in its blind spots. Thus, trajectory planning is performed more efficiently, and the capabilities of each robot are maximized. Additionally, using multiple robots speeds up the dismantling process. Simulation experiments were conducted to assess the performance and precision of the developed task planner. While the investigations focused on the NISSAN e-NV200 pack, the approach is adaptable to other EV battery packs. Furthermore, the proposed task planner, combined with the YOLOV8 vision algorithm as demonstrated here, can be effective not only in battery disassembly but also in the dynamic planning and dismantling of various other objects.

6. Conclusions

In this paper, a comprehensive task planner for multi-robot battery recycling is presented, and its vision, planning, and control algorithms are detailed. The performance of the proposed task planner was evaluated in a simulation environment using the RRT, RRTConnect, and RRTStar planners. As the main components of the simulated hardware system, an EV battery pack consisting of 16 leaf cells (grouped into quads) with interconnected screws and cables, and a Kinect RGB-D camera attached to the robot’s end-effector, were integrated into the environment. CAD models of the battery pack and its components were imported into Gazebo for object interactions. In this simulation environment, the two robots started disassembly operations in a synchronized manner according to the information from the camera and completed the disassembly process without collision. Our logical planner has been shown to be planning-algorithm-agnostic, with an average completion time of 544.07 s and mean travelled distances of 3530.89 cm (UR10_1) and 3391.77 cm (UR10_2) across the different trajectory planners. The standard deviation of the completion time across the trajectory planners was only 2.31 s, and the standard deviations of the travelled distance were 1.75 cm and 5.28 cm for UR10_1 and UR10_2, respectively. These minor variations in time and travelled distance do not affect the effectiveness of the logical task planner, which successfully adapted to the task regardless of the trajectory planner. The number of robots and objects used in the simulation environment can be extended, and the proposed task planner can be used not only for battery disassembly but also for the disassembly of other objects. The adaptive structure of the proposed logic task planner allows it to adapt easily to different objects and to avoid collisions in multi-robot use.
Although only two robots were used in this study, the number of robots can easily be increased and adapted to different disassembly processes in future studies. In future work, we aim to obtain more concrete results by reproducing the experimental environment created in Gazebo with real objects in a real environment. We will also address some of the current limitations, including the processing time of the object detection algorithm, by reducing the processed frame rate (FPS) and increasing the training data to improve speed and reliability. Additionally, we will tackle camera coverage limitations by having the robots move through the scene in advance to capture positions that are not normally visible. Environmental collisions will be addressed by integrating the scene’s point cloud.
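The across-planner summary statistics reported above (mean and standard deviation of completion time and travelled distance) can be computed with Python's standard `statistics` module. The paper does not state whether the population or the sample standard deviation was used, so the sketch returns both; the helper name is hypothetical and the per-planner values in the example are placeholders, not the paper's measurements.

```python
import statistics
from typing import Dict, Tuple


def summarize(per_planner: Dict[str, float]) -> Tuple[float, float, float]:
    """Mean, population SD, and sample SD of one metric across planners (needs >= 2 values)."""
    values = list(per_planner.values())
    return statistics.mean(values), statistics.pstdev(values), statistics.stdev(values)


# Placeholder values (not results from this paper).
example = {"RRT": 10.0, "RRTConnect": 12.0, "RRTStar": 14.0}
mean, pop_sd, sample_sd = summarize(example)
print(f"mean={mean:.2f}, population SD={pop_sd:.2f}, sample SD={sample_sd:.2f}")
```

With only three planners, the two forms differ noticeably (here about 1.63 versus 2.00), so stating which one is reported makes such small deviations easier to interpret.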