An MBSE Approach for Development of Resilient Automated Automotive Systems

Abstract

1. Introduction

2. Resilience Contracts

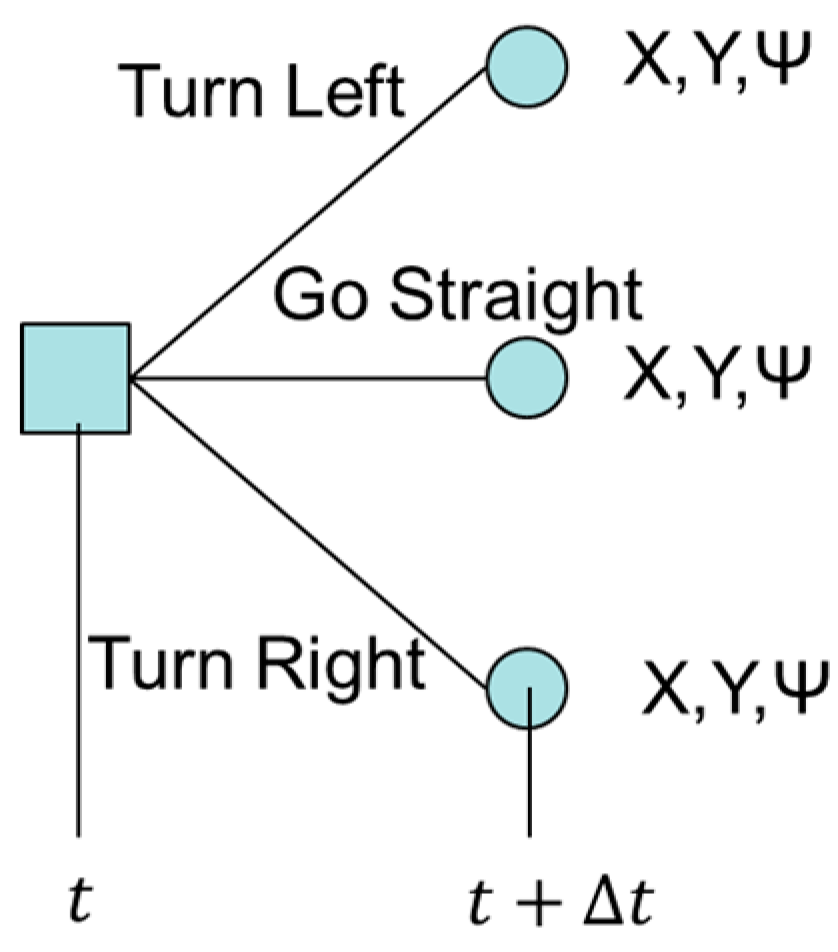

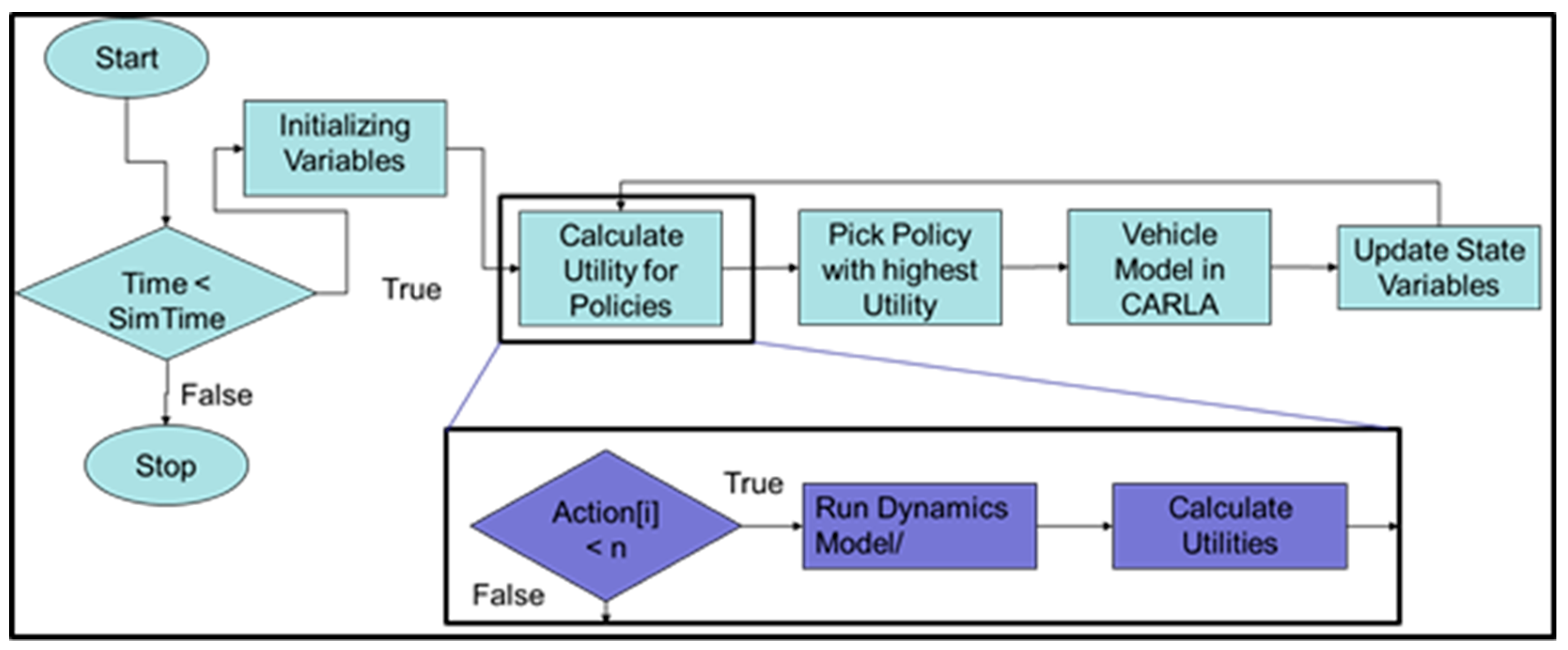

2.1. Developing a Utility Function for Resilient Decision Making

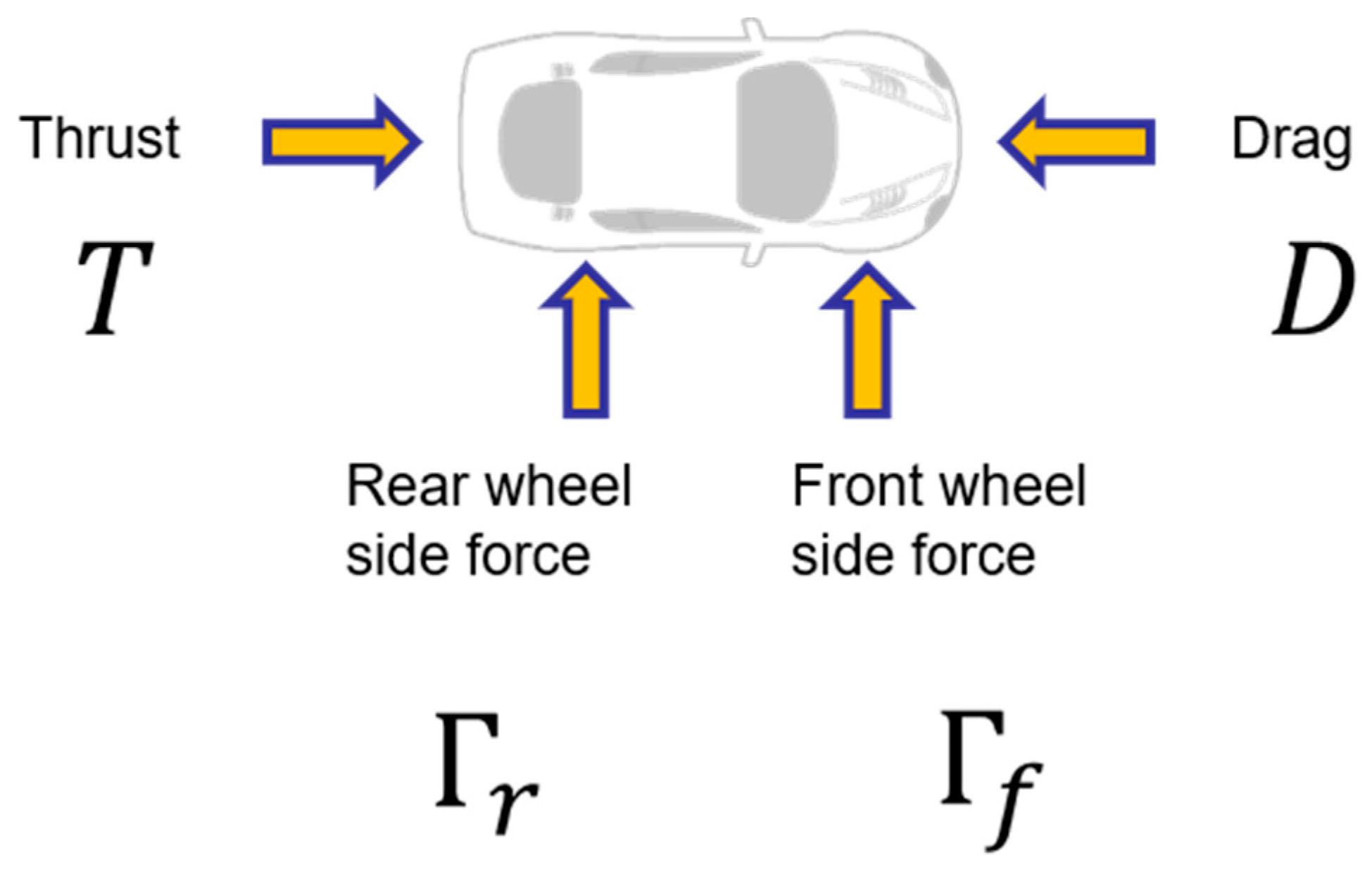

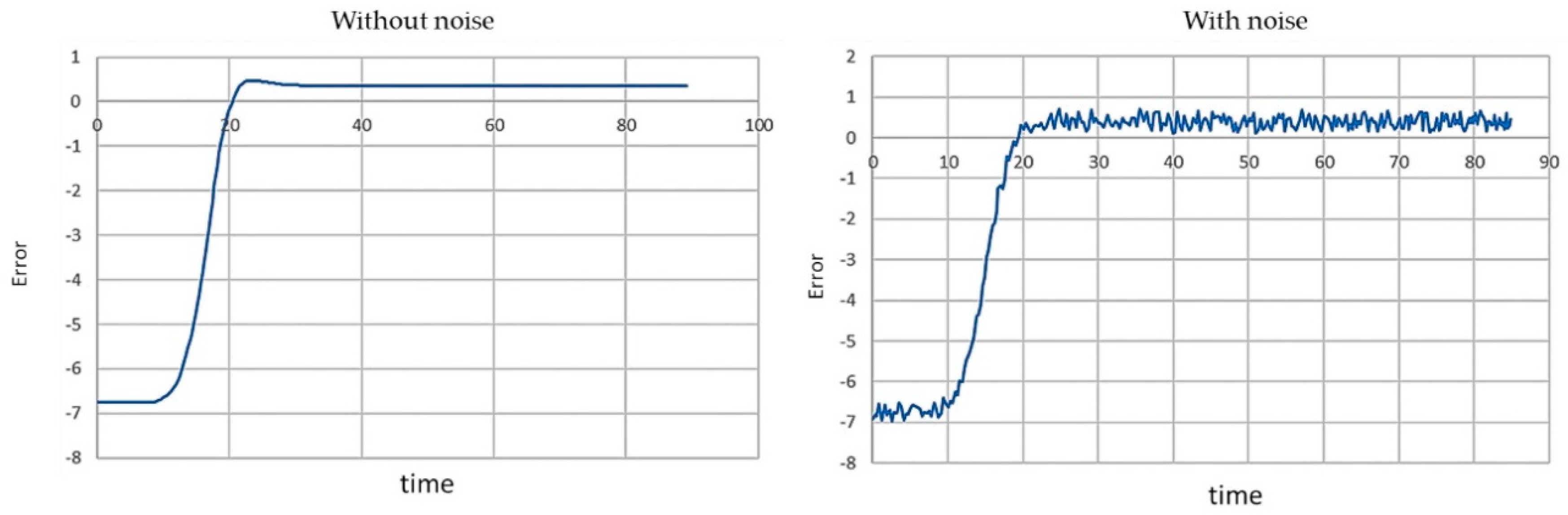

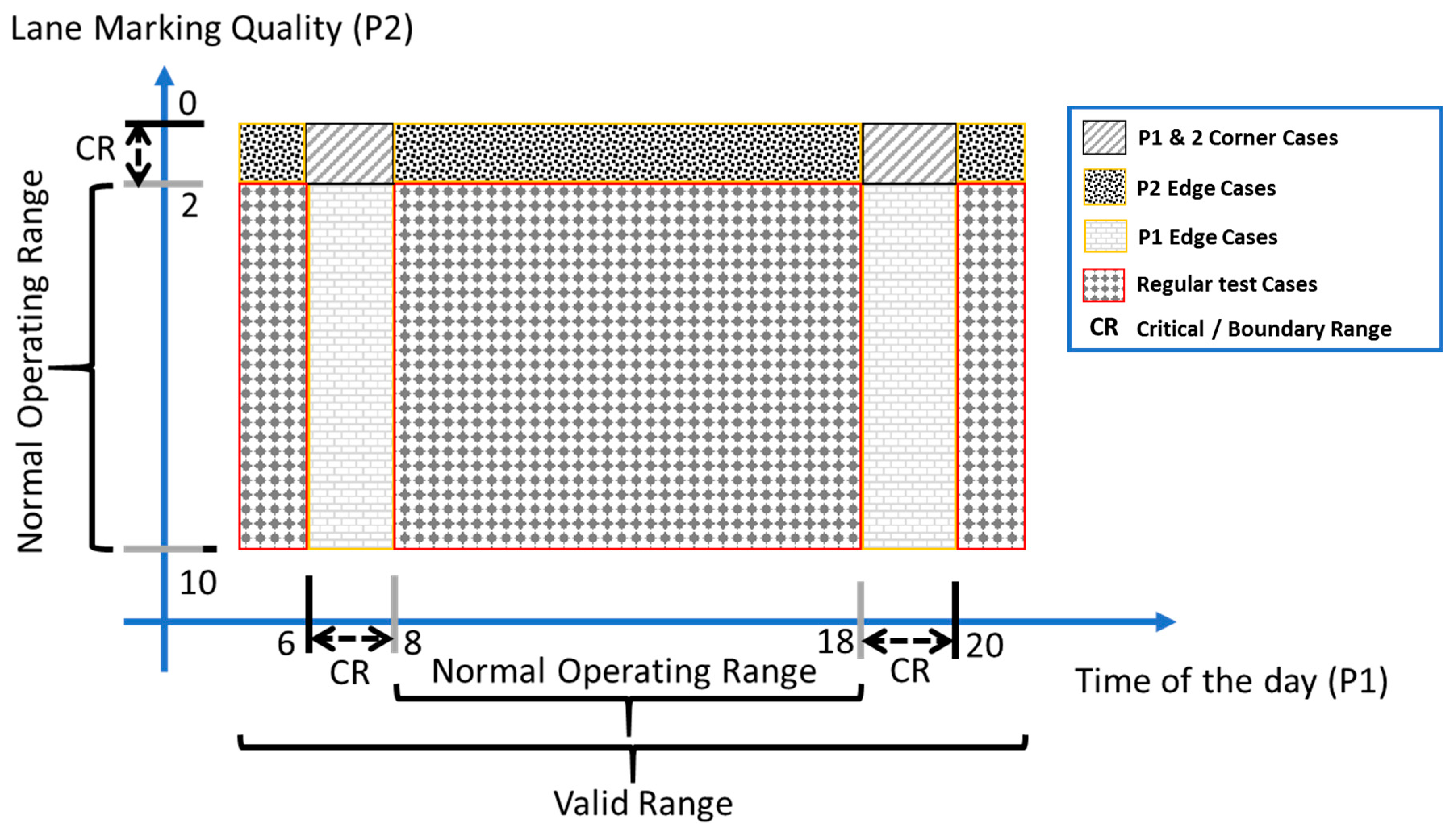

2.1.1. Dynamics Model

- β (slip angle): angle between the car's velocity vector and its centerline

- ψ (body angle): angle of the car's centerline in the global coordinate frame

- ψ′ (angular velocity): time derivative of the body angle

- v (velocity): velocity along the car's centerline

- x (X position): x-position in the global coordinate frame

- y (Y position): y-position in the global coordinate frame
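The equations of the dynamics model appear only as figures here. To show how the listed state variables fit together, the Python sketch below propagates the pose (ψ, x, y) with forward-Euler steps. It assumes that β, ψ′ and v are held constant within each step (their own evolution follows the paper's equations, which are not reproduced), and, because v is defined as the velocity along the centerline, it takes the lateral velocity as v·tan β from the slip-angle definition. `VehicleState`, `step` and `simulate` are hypothetical names, not part of the paper.

```python
import math
from dataclasses import dataclass


@dataclass
class VehicleState:
    """State variables from the dynamics model (angles in radians)."""
    beta: float     # slip angle
    psi: float      # body angle (heading of the centerline)
    psi_dot: float  # angular velocity, i.e. psi'
    v: float        # velocity along the centerline
    x: float        # global x-position
    y: float        # global y-position


def step(s: VehicleState, dt: float) -> VehicleState:
    """Advance the pose by one forward-Euler step.

    Assumption: beta, psi_dot and v stay constant over the step; how they
    evolve is governed by the paper's model and is not reproduced here.
    """
    # Body-frame velocity: v along the centerline, v * tan(beta) sideways.
    v_lat = s.v * math.tan(s.beta)
    # Rotate the body-frame velocity into the global frame by psi.
    x_dot = s.v * math.cos(s.psi) - v_lat * math.sin(s.psi)
    y_dot = s.v * math.sin(s.psi) + v_lat * math.cos(s.psi)
    return VehicleState(
        beta=s.beta,
        psi=s.psi + s.psi_dot * dt,
        psi_dot=s.psi_dot,
        v=s.v,
        x=s.x + x_dot * dt,
        y=s.y + y_dot * dt,
    )


def simulate(s: VehicleState, dt: float, n_steps: int) -> VehicleState:
    """Apply `step` n_steps times and return the final state."""
    for _ in range(n_steps):
        s = step(s, dt)
    return s
```

For example, `simulate(VehicleState(0.0, 0.0, 0.0, 10.0, 0.0, 0.0), 0.1, 10)` drives straight along the x-axis for 1 s and ends near x = 10. Forward Euler is used only for brevity; a higher-order integrator would be more accurate at larger time steps.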

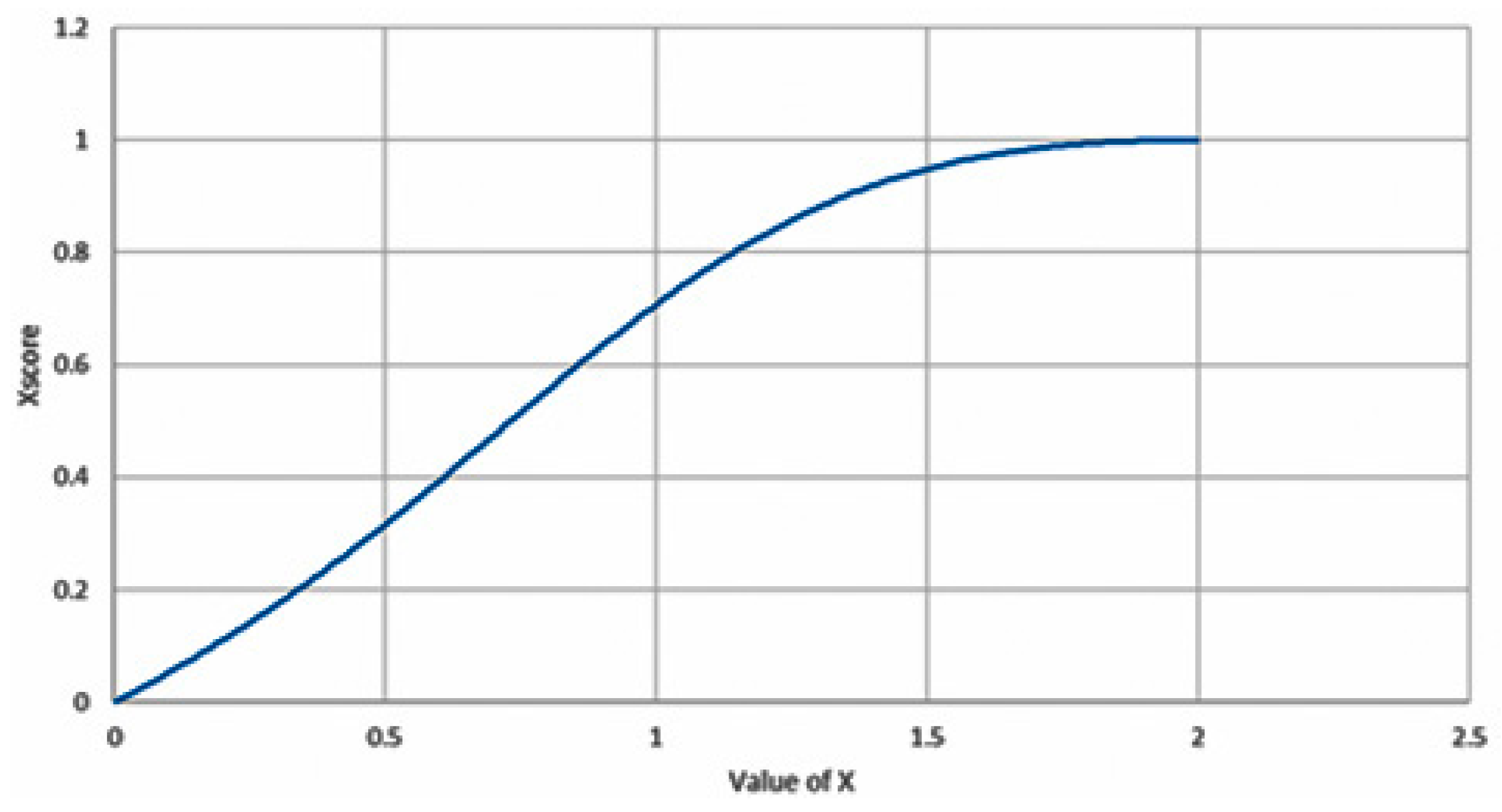

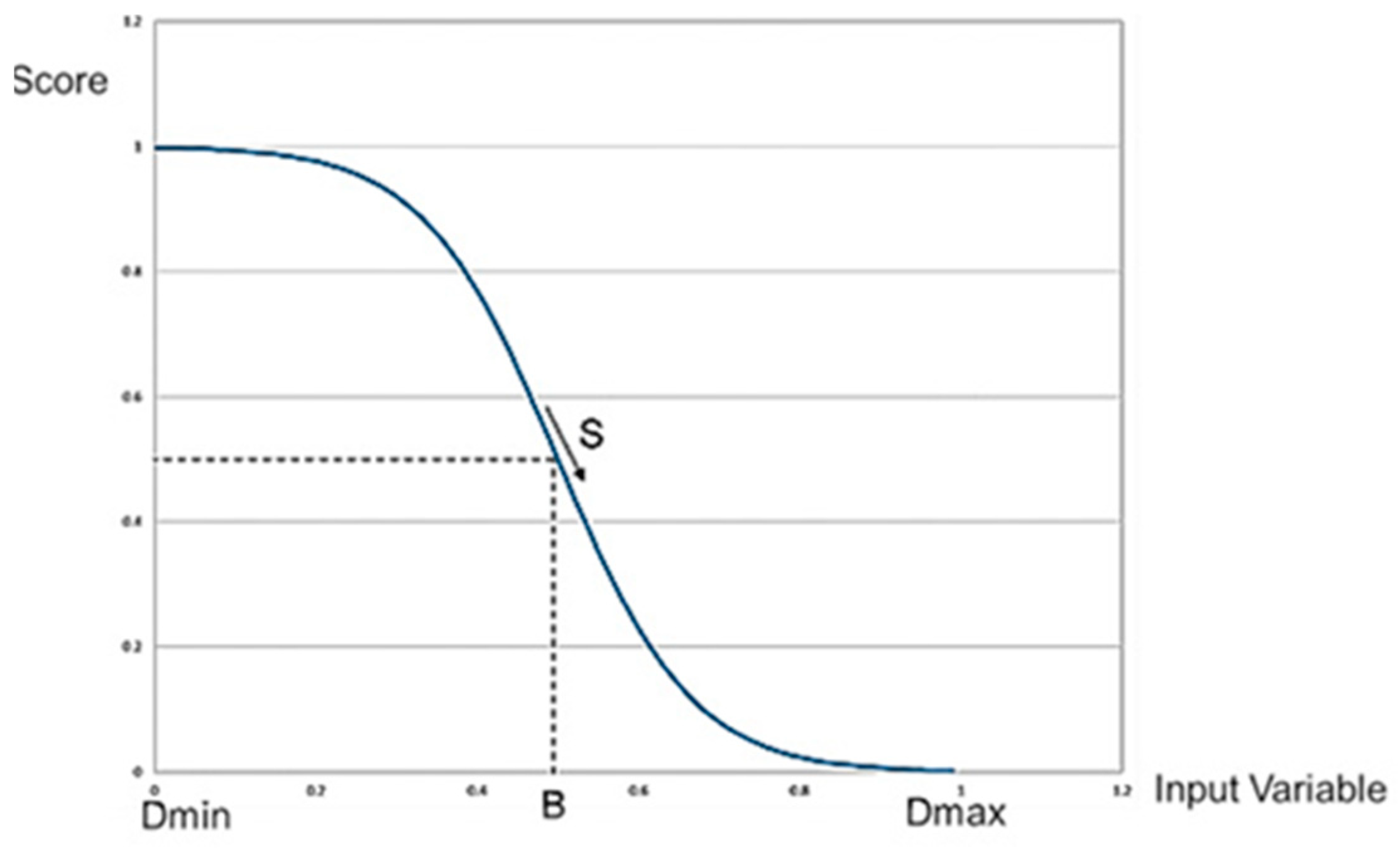

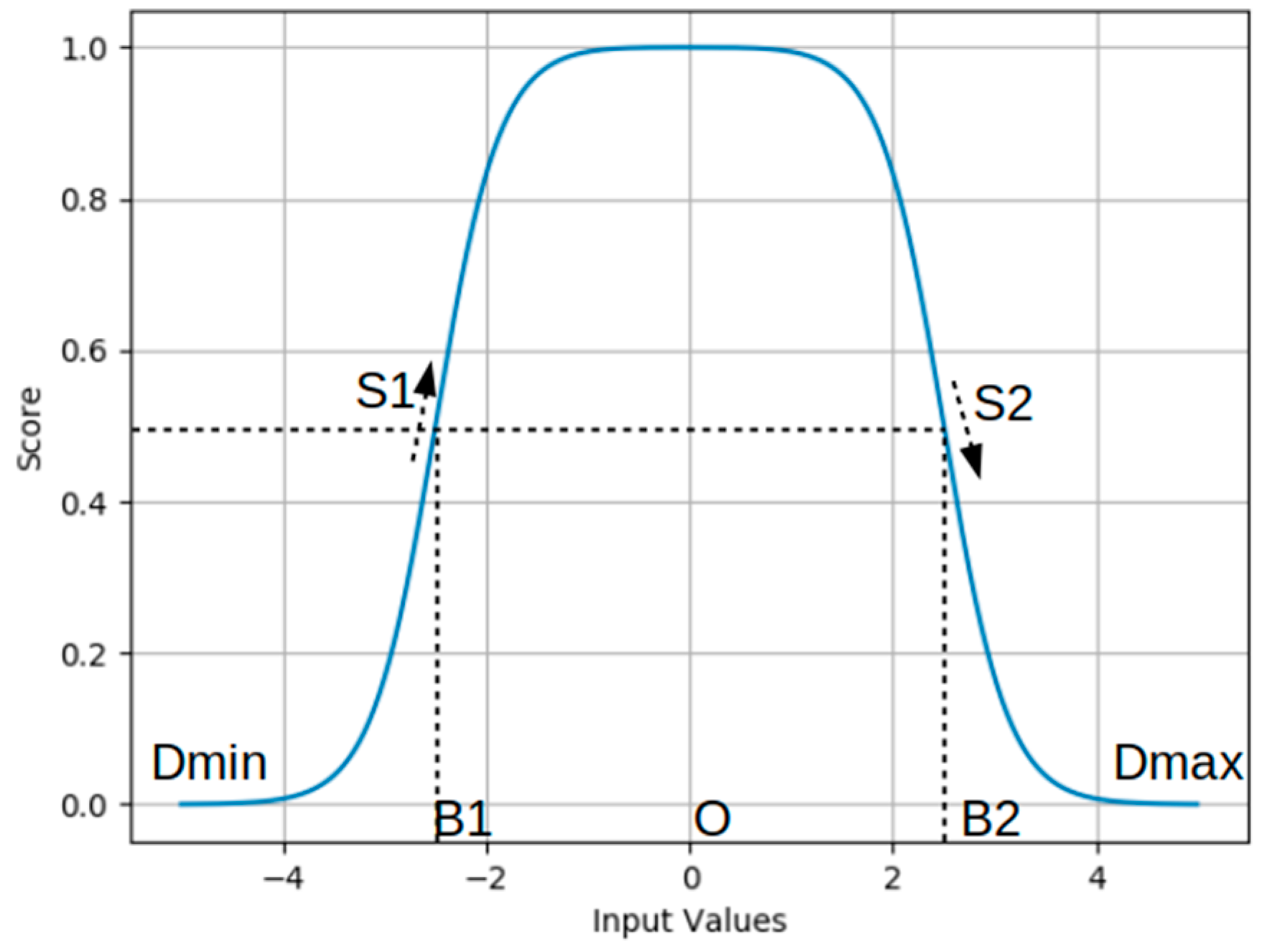

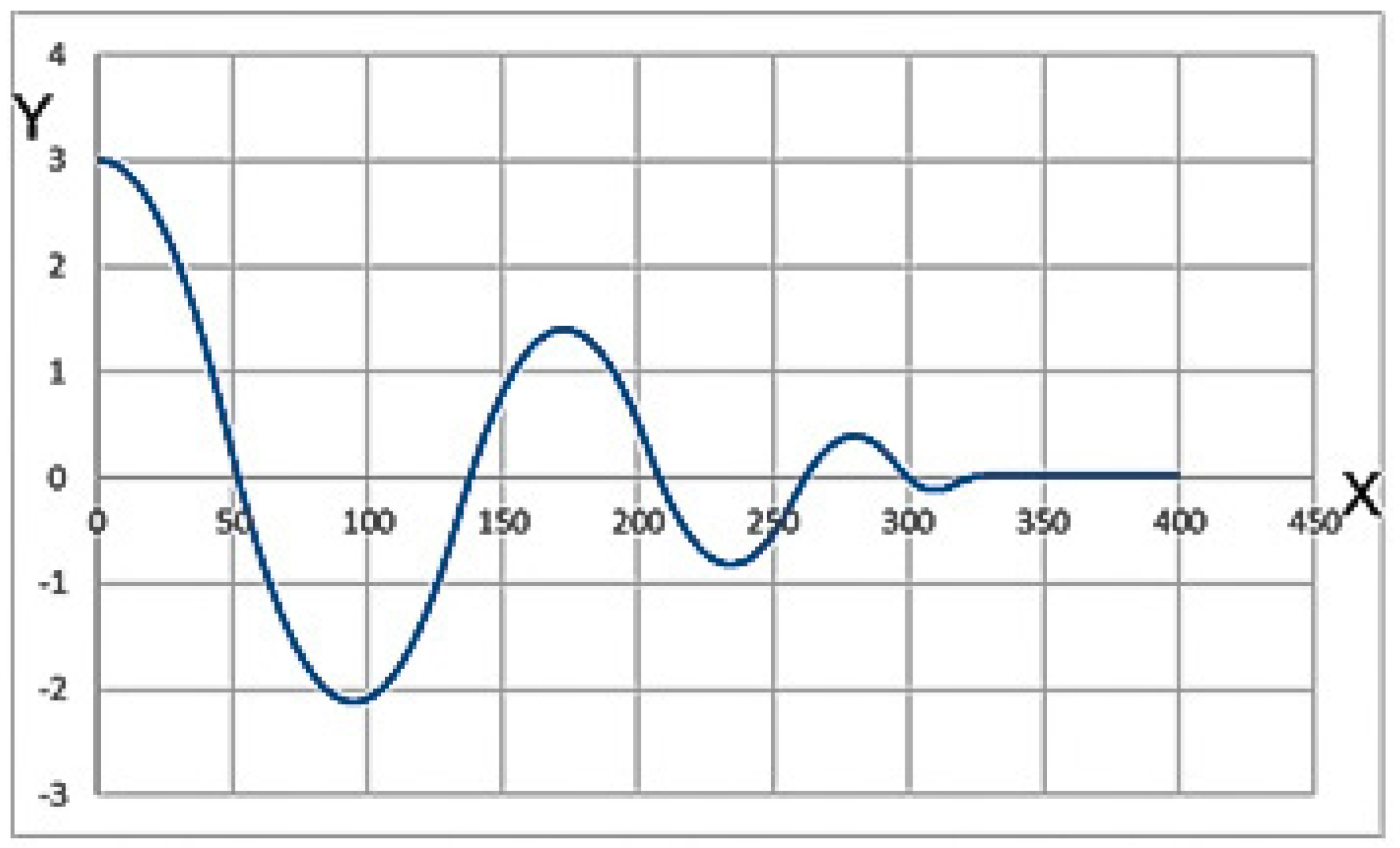

2.1.2. Constructing a Utility Function for Lane Centering

- B is the baseline

- S is the slope of the function at the baseline

- v is the value of the input parameter, i.e., Ψ

- Dmin and Dmax are the minimum and maximum values of the input parameter

- SSF4(B, −S, v) is Wymore's fourth standard scoring function.
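The exact expression for Wymore's SSF4 is given in Wymore's book and in Daniels, Werner and Bahill (both in the reference list) and is not reproduced in this section. The Python sketch below therefore substitutes a logistic curve that only mimics the properties described in the list: it assumes the score is 0.5 at the baseline B, sets the slope at B to S, and clamps the input to [Dmin, Dmax]. Passing −S gives a score that decreases as the input grows, as in SSF4(B, −S, v). `scoring_function` and `lane_centering_utility` are hypothetical names; this is an illustration, not the paper's utility function.

```python
import math


def scoring_function(baseline: float, slope: float, value: float,
                     d_min: float, d_max: float) -> float:
    """Illustrative logistic stand-in, NOT Wymore's exact SSF4 formula.

    Assumed properties: score is 0.5 at `baseline`, the derivative at
    `baseline` equals `slope`, and `value` is clamped to [d_min, d_max].
    """
    if d_min > d_max:
        raise ValueError("d_min must not exceed d_max")
    v = min(max(value, d_min), d_max)
    # For f(v) = 1 / (1 + exp(-k (v - B))), f'(B) = k / 4, so k = 4 * slope.
    z = 4.0 * slope * (v - baseline)
    # Numerically stable logistic evaluation (avoids overflow in exp).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def lane_centering_utility(psi_input: float, baseline: float, slope: float,
                           d_min: float, d_max: float) -> float:
    """Hypothetical lane-centering utility: passes -slope, as in SSF4(B, -S, v)."""
    return scoring_function(baseline, -slope, psi_input, d_min, d_max)
```

With a positive S, `lane_centering_utility(0.1, 0.5, 3.0, 0.0, 1.0)` scores higher than `lane_centering_utility(0.9, 0.5, 3.0, 0.0, 1.0)`, so smaller input values are preferred.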

3. Simulation-Based Verification and Validation of Automotive Features

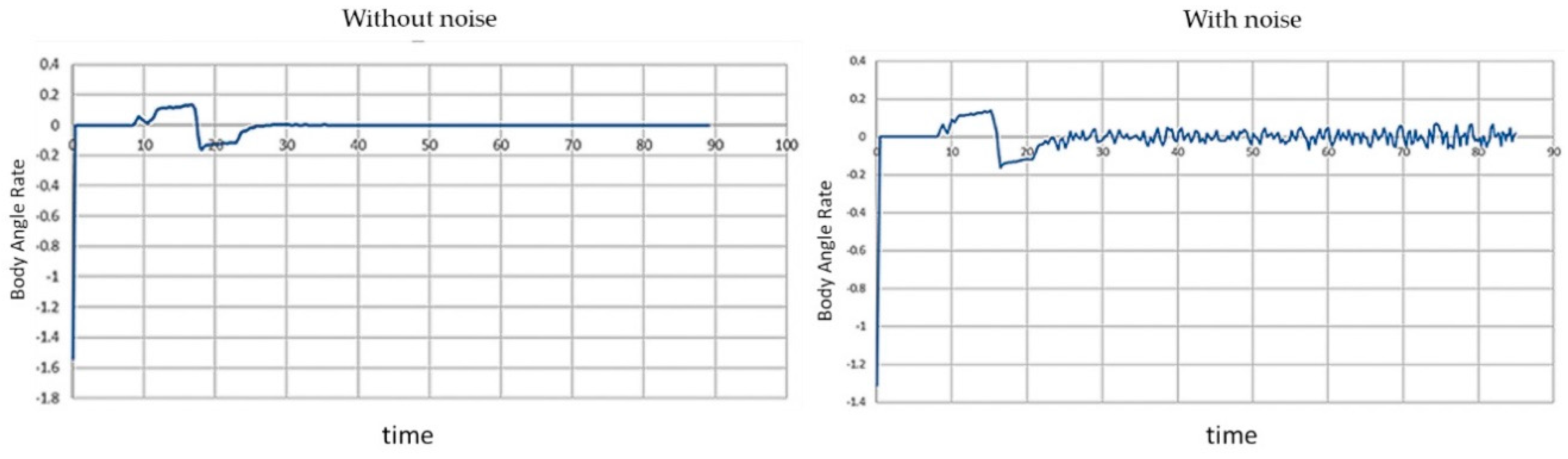

3.1. Simulation Environment Ontology for Automotive Systems

3.2. Definition of Testing Scenarios Classes

3.2.1. Definition of Known Scenarios

3.2.2. Definition of Unknown Scenarios

- A participating vehicle moving towards the Ego Vehicle's lane or cutting across it

- A child running in front of the Ego Vehicle from a hidden area

- A rock or landslide in front of the Ego Vehicle

- Malfunctioning or non-functioning traffic lights

- A participating vehicle that breaks traffic rules

- A procession or convoy that forces the Ego Vehicle to stop in order to follow traffic rules

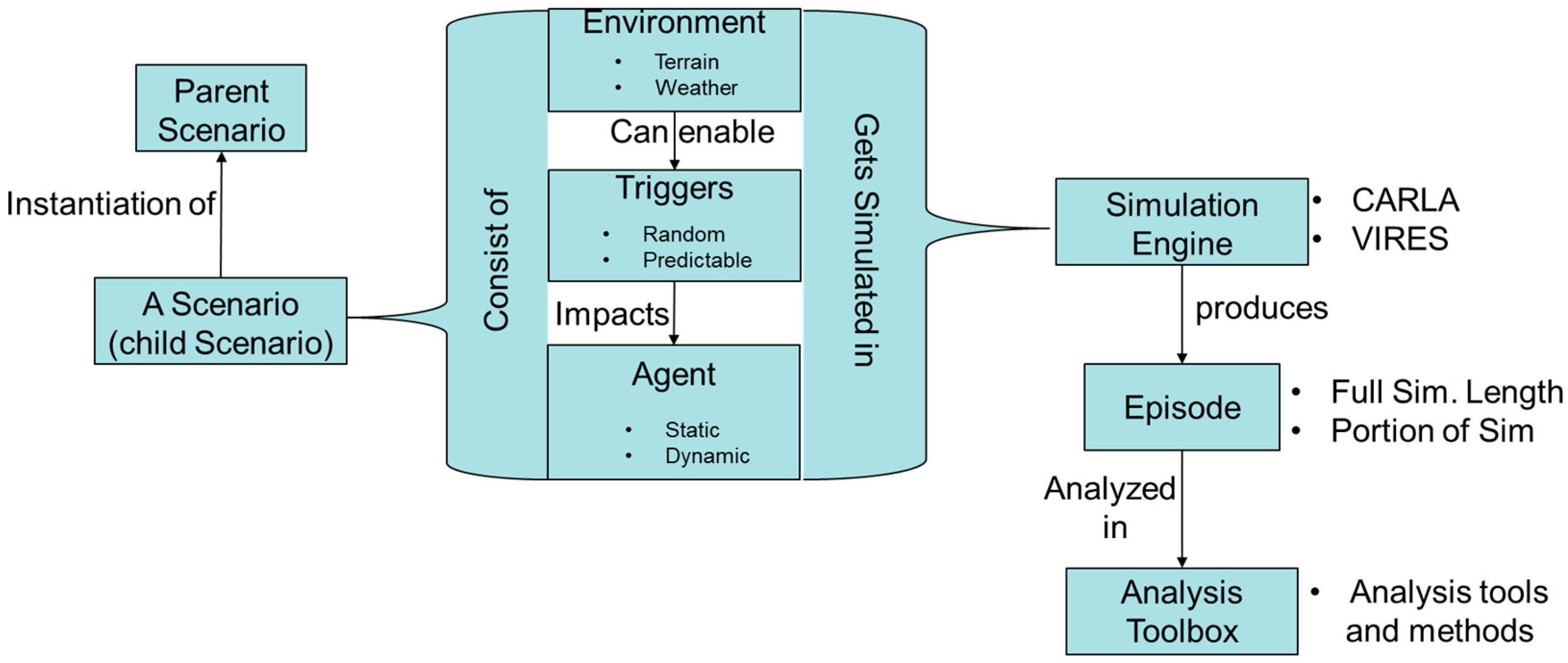

3.3. Test Scenario Generation for Simulation

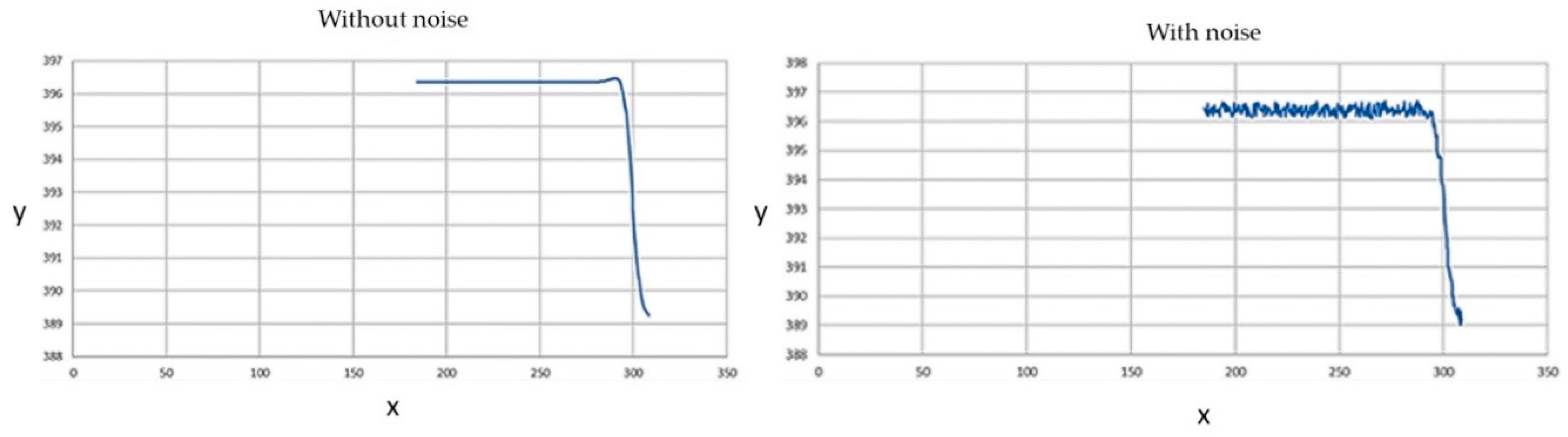

3.4. Monitors for Simulation Episode Analysis

4. Discussion and Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- ISO 26262 Road Vehicles—Functional Safety; International Organization for Standardization: Geneva, Switzerland, 2011.

- ISO/PAS 21448 Safety of the Intended Functionality; International Organization for Standardization: Geneva, Switzerland, 2018.

- Madni, A.M.; Sievers, M.W.; Humann, J.; Ordoukhanian, E.; D’Ambrosio, J.; Sundaram, P. Model-Based Approach for Engineering Resilient System-of-Systems: Application to Autonomous Vehicle Networks. In Disciplinary Convergence in Systems Engineering Research; Madni, A., Boehm, B., Ghanem, R., Erwin, D., Wheaton, M., Eds.; Springer: Cham, Switzerland, 2018.

- Madni, A.M.; Sievers, M.W.; Humann, J.; Ordoukhanian, E.; Boehm, B.; Lucero, S. Formal Methods in Resilient Systems Design: Application to Multi-UAV System-of-Systems Control. In Disciplinary Convergence in Systems Engineering Research; Madni, A., Boehm, B., Ghanem, R., Erwin, D., Wheaton, M., Eds.; Springer: Cham, Switzerland, 2018.

- Wymore, A.W. Model-Based Systems Engineering; CRC Press: Boca Raton, FL, USA, 1993.

- Daniels, J.; Werner, P.W.; Bahill, A.T. Quantitative methods for tradeoff analyses. Syst. Eng. 2001, 4, 190–212.

- Dosovitskiy, A.; Ros, G.; Codevilla, F.; Lopez, A.; Koltun, V. CARLA: An Open Urban Driving Simulator. In Proceedings of the 1st Annual Conference on Robot Learning, Mountain View, CA, USA, 13–15 November 2017; pp. 1–16.

- Duran, J.W.; Ntafos, S.C. An evaluation of random testing. IEEE Trans. Softw. Eng. 1984, 4, 438–444.

- Zhu, H.; Hall, P.A.; May, J.H. Software unit test coverage and adequacy. ACM Comput. Surv. 1997, 29, 366–427.

- Denise, A.; Gaudel, M.C.; Gouraud, S.D. A generic method for statistical testing. In Proceedings of the IEEE International Symposium on Software Reliability Engineering, Bretagne, France, 2–5 November 2004; pp. 25–34.

- Cohen, M.B.; Gibbons, P.B.; Mugridge, W.B.; Colbourn, C.J. Constructing test suites for interaction testing. In Proceedings of the International Conference on Software Engineering, Portland, OR, USA, 3–10 May 2003; IEEE Computer Society: Washington, DC, USA, 2003; pp. 38–48.

- Kuhn, D.R.; Wallace, D.R.; Gallo, A.M. Software fault interactions and implications for software testing. IEEE Trans. Softw. Eng. 2004, 30, 418–421.


| Parameter | Value Range | Non-Critical Range | Critical Range |
|---|---|---|---|
| Time of Day (h) | 0 to 24 | All except 6 to 8 and 18 to 20 | 6 to 8 and 18 to 20 (strong reflection and glare at sunrise and sunset) |
| Vehicle Speed (mi/h) | 0 to 90 | 30 to 60 | 0 to 29 and 61 to 90 |
| Lane Marking Quality (0 = bad, 10 = good) | 0 to 10 | 3 to 10 | 0 to 2 |
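The critical ranges in the table above can be used directly to label test scenarios. The Python sketch below encodes them as inclusive intervals, marks a scenario as critical if any parameter falls in a critical range, and enumerates all combinations of one non-critical and one critical representative per parameter. This full-factorial enumeration is an assumption for illustration, not necessarily the paper's generation procedure; with only three parameters it yields 2³ = 8 scenarios, and for many parameters the interaction-testing (covering array) approaches in the cited literature would reduce that count. The parameter keys, the representative values, and the choice to treat values between the table's integer bounds (e.g., 29.5 mi/h) as non-critical are all hypothetical.

```python
from itertools import product

# Critical ranges transcribed from the table (inclusive bounds).
CRITICAL_RANGES = {
    "time_of_day_h": [(6.0, 8.0), (18.0, 20.0)],
    "vehicle_speed_mph": [(0.0, 29.0), (61.0, 90.0)],
    "lane_marking_quality": [(0.0, 2.0)],
}

# Hypothetical representatives: [non-critical value, critical value].
REPRESENTATIVES = {
    "time_of_day_h": [12.0, 7.0],
    "vehicle_speed_mph": [45.0, 75.0],
    "lane_marking_quality": [8.0, 1.0],
}


def is_critical(param: str, value: float) -> bool:
    """True if `value` lies in any critical interval of `param`."""
    return any(lo <= value <= hi for lo, hi in CRITICAL_RANGES[param])


def is_critical_scenario(scenario: dict) -> bool:
    """A scenario is critical if at least one parameter is critical."""
    return any(is_critical(p, v) for p, v in scenario.items())


def generate_scenarios(reps: dict) -> list:
    """Full-factorial combination of the representative values."""
    names = list(reps)
    return [dict(zip(names, combo)) for combo in product(*(reps[n] for n in names))]
```

Calling `generate_scenarios(REPRESENTATIVES)` returns eight scenarios, seven of which contain at least one critical parameter value.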

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


D’Ambrosio, J.; Adiththan, A.; Ordoukhanian, E.; Peranandam, P.; Ramesh, S.; Madni, A.M.; Sundaram, P. An MBSE Approach for Development of Resilient Automated Automotive Systems. Systems 2019, 7, 1. https://doi.org/10.3390/systems7010001
