1. Introduction

With advances in automation, human cognitive modeling, applied artificial intelligence, and machine learning, human-machine teaming (HMT) can now take many more forms than merely supervisory control by humans, or decision support by machines [1,2]. Furthermore, HMT can be adaptive, with the human having the ability to intervene in machine operations at different levels to redirect resources, re-allocate tasks, modify workflow parameters, or adjust task sequences [3,4]. This added complexity associated with adaptive HMT requires a formal modeling and analysis framework to explore and evaluate HMT options in terms of joint human-machine performance and safety in various simulated operational contexts [5]. The types of questions that such a framework can potentially address include determining the impact of dynamic task re-allocation on human attention, situation awareness, and cognitive load. Answering such questions requires an experimentation testbed that employs a modeling, simulation, and analysis framework for systematic exploration of HMT options [6,7]. Some of the important capabilities that this architectural framework and experimentation testbed need to support include the ability to:

assign human and machine (agents) to specific roles in collaborative tasks [8,9,10];

specify human-machine function and task allocation options for a variety of routine and contingency operational scenarios [6,7];

specify conditions/criteria to re-evaluate and possibly change function and task allocations [1,2];

explore adaptive task allocation schemes to effectively manage human cognitive load [1,2];

explore consequences of what-if injects (e.g., failures, external disruption) on human-machine team performance [1,2];

explore innovative machine roles in HMT by exploiting technological advances such as electrophysiological sensors, social media, selective fidelity human behavior modeling, AI, and machine learning [1,2]; and

incorporate machine learning methods (e.g., supervised, unsupervised, reinforcement learning) to continuously improve human-machine team performance [1,2].

This paper is organized as follows.

Section 2 provides a historical perspective on HMT.

Section 3 discusses key considerations in human-machine teaming (HMT).

Section 4 presents key challenges in adaptive HMT.

Section 5 presents the key elements of an architectural framework for evaluating HMT options.

Section 6 presents machine roles and their interactions in adaptive human-machine teams.

Section 7 presents an illustrative example of adaptive HMT in the context of perimeter security of a landed aircraft.

Section 8 presents conclusions and prospects for the future.

2. Historical Perspective

The rationale for HMT stems from the recognition that as automation has become more complicated, it has also become more brittle and less transparent, necessitating greater human oversight. However, human oversight itself has become more cognitively taxing, which defeats the purpose of automation in the first place. It is this recognition that has spurred the shift to HMT. In the HMT paradigm, the machine is viewed as a teammate, not as a henchman. HMT requires transparency in machine operations, bi-directional human-machine interaction (typically associated with shared initiative decision-making), contextual awareness to understand changes in priorities and performance conditions, and the ability for the human to intervene at different levels in ongoing machine processes to redirect resources, revise goals, and add or delete constraints. These interventions imply more complex human-machine relations.

There are several misperceptions that need to be dispelled before addressing human-machine relations in this new light. The first misperception is that automation will replace or offload humans, thereby making the human role less critical. The reality is that with increasing automation, there is an increasing need for training because the automation invariably does not replace the human; rather, it changes the role of the human from that of an operator to that of a monitor/supervisor. For example, with increasing automation in an aircraft, the role of the human changed from flying the aircraft to managing the automation (e.g., flight deck automation). Importantly, this automation needs to be highly reliable (i.e., failure-proof). Otherwise, the human will have to step in to take over flying the aircraft if the automation malfunctions. Furthermore, it is well-documented in the aviation literature [11] that there is inevitable skill erosion with automation, making human intervention to fly the aircraft a dicey proposition at best. Further compounding the problem are issues of trust and reliance. There is ample evidence in the literature of the dire consequences that can ensue from lack of trust in automation, and from over-reliance and under-reliance on automation. It is the recognition of these unresolved concerns that led to the advent of the new HMT paradigm. In this paradigm, the machine is a teammate, and not a henchman. Furthermore, the human is an asset with innovative abilities that need to be exploited, and not a liability that needs to be shored up and compensated for during task performance.

Most recently, the Indonesian Lion Air crash revealed the dire consequences that can ensue from poor HMT [12]. Data from the jetliner that crashed into the Java Sea in October 2018 reveal the pilots’ struggle to save the plane almost from the moment it took off. The black box data showed that the nose of the Boeing 737 was being repeatedly forced down by an automatic system that was receiving incorrect sensor readings. It quickly became a fatal tug-of-war between man and machine over a painful 11-min time span, during which the nose of the plane was forced down more than two dozen times, creating a palpable threat to the lives of passengers and crew. The pilot valiantly fought the plane’s tendency to nose-dive several times before losing control of the airplane. The plane plummeted toward the ocean at 450 mph, killing all 189 people on board. Such crashes can rarely be blamed on a single, catastrophic malfunction. Invariably, it is a series of missed steps in maintenance, weak government oversight, incomplete flight checks, an inadequate mental model of the unfolding catastrophe, and inappropriate actions arising from that incomplete understanding that conspire to produce the catastrophic loss. However, what cannot be denied in this instance is that the humans found themselves working at cross-purposes with the machine, and not as a team. These and many more such examples in both the commercial and defense sectors have drawn attention to the need for a shift in human-machine relations and system design paradigm. The emergence of HMT is the result of this shift in thinking.

One of the key implications of the shift to the HMT paradigm is that the human no longer has to supervise the machine at all times and in all contexts. This recognition, in turn, implies that the machine has greater autonomy but at the same time needs to earn and keep human trust within the boundary defined by the joint human-machine performance envelope. As the role of the machine changes from automation (that requires human oversight) to autonomy (that does not require human oversight), the concept of full autonomy in both aircraft and cars remains elusive. Therefore, in the foreseeable future, it is imperative that advances are made in effective human teaming with semi-autonomous systems. The specific challenges that will need to be addressed in the meantime include: assuring transparency in machine operations to facilitate human understanding and increase machine predictability; allowing the human to intervene at the right level in ongoing machine processes to successfully address situations that fall outside the human-machine system’s designed performance envelope; and increasing shared awareness. The last of these is key to ensuring that the human stays apprised of the information that the machine uses to perform tasks (thereby circumventing over-trust and under-trust in the machine), and that the machine is aware of the human’s cognitive, physical, and emotional state. Trust in this context is defined as the attitude that an agent will help achieve an individual’s goals in a situation characterized by uncertainty and vulnerability [13]. Unwarranted trust or distrust can potentially lead to over-reliance on the machine, or underuse of the machine. Therefore, to achieve warranted trust and reliance, the machine should exhibit transparency and predictable behavior during execution and be able to explain its line of reasoning if called upon. It is also highly desirable that the machine be capable of offering solution options or recommending key considerations that go into the generation of a viable solution. These characteristics are essential for semi-autonomous systems if they are to effectively team with humans.

In the light of the foregoing discussion, the term “autonomy” needs elaboration [14,15]. The term “autonomy” pertains to the task being performed. In other words, a system is said to be autonomous with respect to a specific task or set of tasks, not all tasks. In fact, there is no such thing as a fully autonomous system that is capable of performing all tasks in all contexts. This recognition is what makes autonomy tractable. Equally important, the different types of human-machine relations also need to be clarified, so their implications are clear. For our purposes, there are three types of autonomous systems: (a) fully autonomous systems, (b) supervised autonomous systems, and (c) semi-autonomous systems. Fully autonomous systems are characterized as “human-out-of-loop” systems that are designed to perform a finite set of tasks. In other words, the human has no means to intervene in the operation of these systems in real-time. Supervised autonomous systems, also known as “human-on-the-loop” systems, are systems in which the human has the ability to intervene in real-time in ongoing system operations. Such systems are being pursued in both the defense and commercial sectors. Semi-autonomous systems are also referred to as “human-in-the-loop” systems. In these systems, the machine awaits human input before taking any action. It is important to recognize that while autonomy is the ability of a machine to perform a task on its own (i.e., without human intervention), autonomy does not imply intelligence. In fact, the intelligence needed to perform a task determines the level of autonomy. Thus, a system can be autonomous but not intelligent. Similarly, a system can be intelligent, but not fully autonomous. It is the latter that is of interest to HMT.

One of the earliest serious attempts in HMT was the Pilot’s Associate (PA) Program, 1983–1992. The PA Program, a United States Air Force (USAF) effort, was sponsored by the Defense Advanced Research Projects Agency (DARPA) under its Strategic Computing Program (SCP) Initiative. Its objective was to demonstrate the feasibility and potential impact of real-time artificial intelligence (AI) in enhancing mission effectiveness of future combat aircraft (Madni et al. [16], 1985; Lizza et al. [17], 1991). The PA Program was executed in three phases: concept definition phase (1983–1985), system development phase (1986–1988), and real-time demonstration phase (1989–1992). Five study contracts were awarded in the concept definition phase, and two design contracts were awarded in the system development phase. One team was led by Lockheed (now Lockheed Martin), and the other by McDonnell Douglas (now part of Boeing). The Lockheed effort focused on air-to-air missions of a generic, low observable fighter aircraft, while the McDonnell Douglas effort focused on air-to-ground missions of an F-18 type platform. Lockheed won the third phase and pursued the goal of demonstrating enhanced functionality in a real-time full mission simulator. While the Pilot’s Associate technology today is primarily associated with the Lockheed version of the PA, Boeing employed the McDonnell Douglas version of the PA in its successful bid for the Army’s Rotorcraft Pilot’s Associate Program (RPA Program).

The original PA construct comprised four cooperating expert systems (i.e., mission planner, tactics planner, situation assessor, system status assessor). Subsequently, two other expert systems (i.e., pilot-vehicle interface, executive manager) were added. Towards the end of 1991, the PA was the most advanced demonstrator of real-time AI. To achieve real-time performance, the Lockheed team re-implemented the PA and incorporated a common vocabulary in the form of a Plan-Goal Graph to support the high-density communication between the various subsystems, and to guide systems integration.

While DARPA and USAF suggested the F-22 as the application vehicle, it was the US Army’s RPA that became the vehicle for implementing and testing PA technology for next generation tactical helicopters. Eventually, the PA technology was incorporated into the Apache helicopter. This event marked an early successful milestone in the history of HMT.

More recently, there have been a few research initiatives in HMT in the area of unmanned vehicles. A well-known example is that of the “autonomous wingman,” which employs individual unmanned aerial vehicles (UAVs) in support of manned aircraft [18]. Other examples include: multi-agent systems for group tasks involving unmanned land systems [19]; and intelligent agents for teams of UAVs [20]. These systems all assume the presence of a “human controller” or a “human team leader.”

3. Adaptive Human-Machine Teaming

Before discussing adaptive HMT, it is worth taking a step back to distinguish between the human and machine in terms of factors that affect their joint performance. To begin with, there are major differences in the way humans and machines observe, reason, and act. Humans have brains, eyes, and ears to make sense of the world and pursue objectives. Machines have digital processors, sensors, and actuators to do the same. While these differences can pose integration challenges, they provide opportunities to exploit potential synergy between the two to achieve goals that cannot be achieved by each individually. Equally important, they offer opportunities to exploit their complementarity in responding to disruptions. Second, inconsistency and variability are two human characteristics that distinguish humans from machines. Humans tend to not perform the same task the same way (a consistency issue) for a variety of reasons (e.g., loss of focus, fatigue, decision to ignore instructions or best practices). However, humans have the ability to adapt to changing circumstances better than machines, and unlike machines, have the ability to improvise and innovate their way out of tough situations. Machines (e.g., cyber-physical systems, CPS), on the other hand, are consistent in task performance (a predictability issue), but they tend to fail without graceful degradation, leaving little time for damage control. Finally, humans on occasion need to be motivated to perform assigned tasks through inducements/incentives (e.g., monetary compensation, recognition) and/or penalties. Clearly, machines require no such incentives or penalties to exhibit consistent performance.

In the light of the foregoing, to facilitate HMT, a human behavior model is needed to facilitate human-machine team design and human-machine integration. The model needs to be aware of human capabilities and limitations, customizable to a particular human assigned to a particular role, and cognizant of that human’s qualifications (training, test scores, authorizations, certifications). The model needs to have a dynamic component that tracks changing context (e.g., cognitive load, fatigue level, emotional state) and human availability. These characteristics will allow the model to contribute to personnel assignments, dynamic function allocation, and task re-allocation. These characteristics also inform our definition of key terms such as human-machine teams and human-machine teaming (HMT).

We define a human-machine team as “a purposeful combination of human and cyber-physical elements that collaboratively pursue goals that are unachievable by either individually.” We define human-machine teaming (HMT) as “the dynamic arrangement of humans and cyber-physical elements into a team structure that capitalizes on the respective strengths of each while circumventing their respective limitations in pursuit of shared goals.” We define adaptive human-machine teaming as “a context-aware re-organization/reconfiguration of human and cyber-physical elements into a fluid team structure that assures manageable cognitive load while exploiting the respective strengths of human and cyber-physical elements.”

Several important factors need to be addressed in adaptive HMT [1,2]. These include: joint human-machine performance; human cognitive workload; shared knowledge between human and machine (including mutual awareness of system and environment states); continued interoperability in the face of dynamic task re-allocation; shared human-machine decision-making that assures elimination of human oversight (slips) and reduction in human error; and human-machine team security before, during, and after adaptation.

Joint Performance. The key concern here is the ability of the human-machine team to satisfy joint performance requirements. Assessing joint performance requires knowing key performance parameters (KPPs) and ensuring that they are observable and measurable during the conduct of operational missions.

Cognitive Workload. The key concern here is to assure a manageable human workload by balancing workload distribution between human and cyber-physical (CP) elements with changing contexts [1,2]. Equally important is understanding the tradeoff between performance and cognitive load [6,7], and the cognitive strategies humans typically employ to reduce workload to manageable levels [3,4].

Shared Knowledge of State. The key concern here is the ability of the human-machine team to maintain a common understanding of the state of the human-machine system and the external environment. The humans in human-machine teams need the right information with appropriate context to make sound decisions. The machine (i.e., the cyber-physical elements) needs knowledge of the environment, its own state, and the human state (e.g., cognitively overloaded, fatigued, cognitively underloaded) to make the right decisions.

Interoperability. The key concerns here are the ability to gracefully introduce the human-machine team into a larger system-of-systems (SoS) context and stay connected during and after adaptive task allocation [3,4]. This capability requires the ability to ensure connectivity and data flow as the system/SoS evolves and expands, and tasks are dynamically re-assigned between the human and machine [21,22].

Shared Decision Making. Effective sharing of tasks is central to HMT performance [1,2,23]. Humans are well-suited to goal setting, creative option generation, and responding to novel situations [24]. The machine (i.e., the cyber-physical elements) is well-suited to intent inferencing, trade-space analysis, evaluation of decision/action alternatives, information aggregation, parallel search, and machine learning. Machine learning is key to adaptive decision-making and achieving greater symbiosis within HMT. In this regard, the focus needs to be on bi-directional human-machine knowledge and a decision support system that minimizes human oversight and human error.

Human-Machine Team Security. This concern stems from the need to protect HMT processes, mechanisms, physical elements, data, and services from unintended/unauthorized access and use, as well as damage and destruction [25,26]. The central security aspects are confidentiality, integrity, and availability. Confidentiality pertains to enforcing and maintaining proper restrictions on access to and disclosure of information contained in the system. Integrity implies guarding against improper modification or destruction of the system and the information it contains, including ensuring non-repudiation and authenticity of content. Availability implies ensuring timely and reliable access to and use of system functions [25,26].

4. Technical Challenges

There are several technical challenges that need to be dealt with in adaptive HMT including: maintaining shared context especially during assignment of new tasks or dynamic task re-allocation; inferring human intent from electrophysiological signals [1,2]; incorporating strong time semantics to assure proper synchronization, sequencing, and adaptation during task performance [27,28,29]; minimizing human oversight (slips) and human error (mistakes) during joint decision-making [1,2]; incorporating learning ability for human and CP elements [1,2]; and assuring generalizability of the approach across domains. Inferring human intent from electrophysiological signals and incorporating strong time semantics are being pursued by the cyber-physical systems (CPS) community. The remaining challenges fall under the purview of cyber-physical-human systems (CPHS).

Maintaining shared context is a challenge because context can change dynamically with the occurrence of systemic faults/failures or external events (e.g., disruptions), or dynamic re-allocation of tasks to enhance performance [30]. Context is defined in terms of the state of the system and the environment, health status of the cyber-physical system (CPS) and the human, and environmental uncertainty. Context can be conveniently defined by the state and status of the variables in the mnemonic, METT-TC, for military missions. Typically associated with commander’s intent, METT-TC stands for mission-enemy-troops-terrain-time-available-civilian considerations. Not every mission will be characterized by all these variables. For example, humanitarian missions will not have an enemy, and possibly no time constraints. Search and rescue missions will mostly not have an enemy or civilian population considerations to worry about. Context changes need to be monitored and context needs to be managed during both nominal and contingency operations. Knowledge of context informs the HMT strategy that best serves the need of the operational missions [30].
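As a minimal sketch of how such a context description could be represented in software, the following Python dataclass holds the METT-TC variables, with optional fields for missions in which a variable does not apply. The class name, field names, units, and the `changed_fields` helper are illustrative assumptions and are not part of the framework described here.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass(frozen=True)
class MettTcContext:
    """Hypothetical snapshot of operational context using METT-TC variables."""
    mission: str
    enemy: Optional[str] = None                 # None when the mission has no adversary
    troops: Optional[str] = None
    terrain: Optional[str] = None
    time_available_min: Optional[float] = None  # None when there is no time constraint
    civil_considerations: Optional[str] = None


def changed_fields(old: MettTcContext, new: MettTcContext) -> list[str]:
    """Return the names of the METT-TC variables that differ between two snapshots."""
    return [f.name for f in fields(old) if getattr(old, f.name) != getattr(new, f.name)]


# Illustrative values only: a search and rescue mission whose time budget shrinks.
before = MettTcContext(mission="search and rescue", terrain="coastal", time_available_min=120.0)
after = MettTcContext(mission="search and rescue", terrain="coastal", time_available_min=45.0)
print(changed_fields(before, after))
```

Comparing successive snapshots in this way is one simple means of detecting the context changes that need to be monitored during nominal and contingency operations.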

Inferring human intent from electrophysiological sensors is a challenge because of sensor noise and other artifacts [1,2]. Therefore, intent inferencing needs other supporting evidence. This evidence can be provided by having access to surrounding context as defined above. Also, minimizing human oversight and error in a shared-initiative human-machine decision system requires common understanding of capabilities and limitations of the human and the machine. This challenge is being pursued by the cyber-physical systems (CPS) community.

Strong time semantics are needed to assure proper task sequencing, synchronized execution, and adaptation during operational missions. Thus, temporal constraints such as “no sooner than” and “no later than” have specific meaning during task execution. Also, in some cases, performance penalties can be associated with the degree to which a constraint is violated [27,28,29]. This challenge is being pursued by the cyber-physical systems (CPS) community.
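To make the idea of graded constraint violation concrete, the sketch below encodes a “no sooner than”/“no later than” window on a task start time and returns a penalty that grows with the size of the violation. The linear penalty form, the class and field names, and the use of seconds are assumptions chosen for illustration; the cited work may define penalties differently.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TimeWindow:
    """Hypothetical temporal constraint on a task start time (in seconds)."""
    no_sooner_than: float
    no_later_than: float
    penalty_per_second: float = 1.0  # assumed linear penalty rate

    def penalty(self, start_time: float) -> float:
        """Penalty proportional to how far start_time falls outside the window."""
        if start_time < self.no_sooner_than:
            return (self.no_sooner_than - start_time) * self.penalty_per_second
        if start_time > self.no_later_than:
            return (start_time - self.no_later_than) * self.penalty_per_second
        return 0.0  # constraint satisfied


window = TimeWindow(no_sooner_than=10.0, no_later_than=30.0, penalty_per_second=2.0)
print(window.penalty(20.0), window.penalty(5.0), window.penalty(33.0))
```

A scheduler could sum such penalties over all tasks to compare candidate task sequences.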

Incorporating learning ability is an essential aspect of adaptive HMTs [1,2]. Machine learning comes into play in learning the priorities and preference structure of the human in a controlled experimentation environment in which the human performs multiple representative simulated missions [31]. The machine also needs to learn the state of the human-machine system and the environment in a partially-observable environment using reinforcement learning techniques built on models such as the partially observable Markov decision process (POMDP).
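In a POMDP, the machine cannot observe the state directly and instead maintains a belief (a probability distribution over hidden states) that it revises after each observation. The sketch below shows only this standard belief-update (Bayes filter) step for a small discrete model; it is not a full POMDP solver or a reinforcement learning algorithm, and the two states and all probabilities are made-up illustrative values.

```python
def update_belief(belief, transition, observation_likelihood):
    """One Bayes-filter step for a discrete POMDP, with the action already fixed.

    belief[s]                  : prior probability of hidden state s
    transition[s][s2]          : P(s2 | s, action)
    observation_likelihood[s2] : P(observation | s2, action)
    """
    n = len(belief)
    # Prediction: push the prior belief through the transition model.
    predicted = [sum(belief[s] * transition[s][s2] for s in range(n)) for s2 in range(n)]
    # Correction: weight each predicted state by how well it explains the observation.
    unnormalized = [observation_likelihood[s2] * predicted[s2] for s2 in range(n)]
    total = sum(unnormalized)
    if total == 0.0:
        raise ValueError("observation has zero probability under the current belief")
    return [p / total for p in unnormalized]


# Hypothetical two-state example: index 0 = "nominal load", index 1 = "overloaded".
prior = [0.5, 0.5]
transition = [[0.9, 0.1], [0.2, 0.8]]
likelihood = [0.2, 0.7]  # a sensor reading assumed to be more likely under overload
posterior = update_belief(prior, transition, likelihood)
print(posterior)
```

With these numbers the posterior shifts toward the "overloaded" state, which is the kind of evidence a task allocation policy could act on.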

Assuring generalizability of the approach means the ability to transfer the approach from one problem domain to another different but related domain. For example, the architecture and models created for a parked aircraft perimeter security mission should be transferable to a search and rescue mission.

5. Architectural Framework for Evaluating Adaptive HMT Options

An architectural framework is an organizing construct for the concepts and relationships needed to model, explore, and evaluate candidate adaptive HMT options. The key elements of this architectural framework are: an HMT ontology that defines the key concepts and relationships that need to be included in the architectural framework; an HMT reference architecture to guide HMT design and integration; a human (cognitive and emotional) state determination algorithm based on electrophysiological signal processing, cognitive workload theories (e.g., multiple resource theory model, spare mental capacity model), and machine learning; and a dynamic context management module that keeps track of human cognitive and emotional state, and heuristics for dynamic function/task allocation between the humans and machine based on specific criteria (Table 1).

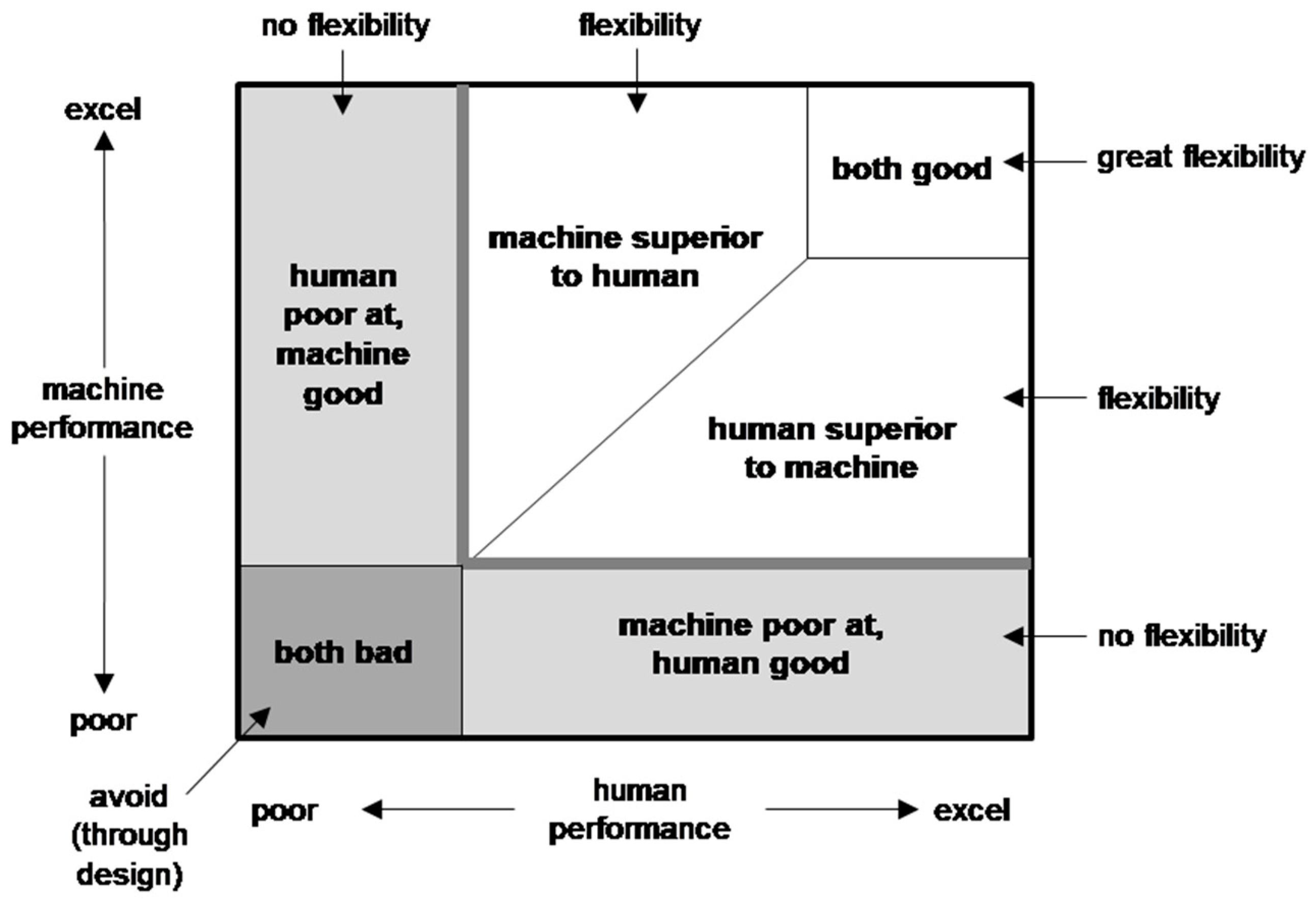

Heuristics operationalize dynamic task allocation based on tasks that: (a) humans perform well, but machines do not; (b) machines perform well, but humans do not; (c) both perform reasonably well; (d) neither performs well; and (e) require participation of both human and machine. It is important to note that (c) depends on factors, such as cognitive load of the human, processing load of the machine, their respective availability, etc., while (d) needs to be avoided at all costs through proper design of the concept of operations (CONOPS) in upfront engineering.
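The five categories (a) to (e) can be sketched as a simple decision rule. The function below is a hypothetical illustration, not the heuristics of Table 1: capability is reduced to boolean flags, load is assumed to be normalized to [0, 1], and category (c) is resolved by giving the task to the less loaded agent.

```python
from enum import Enum


class Performer(Enum):
    HUMAN = "human"
    MACHINE = "machine"
    BOTH = "both"
    REDESIGN = "redesign"  # category (d): should be designed out of the CONOPS


def allocate(human_capable: bool, machine_capable: bool, requires_both: bool,
             human_load: float, machine_load: float) -> Performer:
    """Hypothetical allocation heuristic; loads are assumed normalized to [0, 1]."""
    if requires_both:                           # category (e)
        return Performer.BOTH
    if human_capable and not machine_capable:   # category (a)
        return Performer.HUMAN
    if machine_capable and not human_capable:   # category (b)
        return Performer.MACHINE
    if human_capable and machine_capable:       # category (c): pick the less loaded agent
        return Performer.HUMAN if human_load <= machine_load else Performer.MACHINE
    return Performer.REDESIGN                   # category (d)


print(allocate(True, True, False, human_load=0.9, machine_load=0.3))
```

In practice the category (c) decision would also weigh availability and other context, as noted above.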

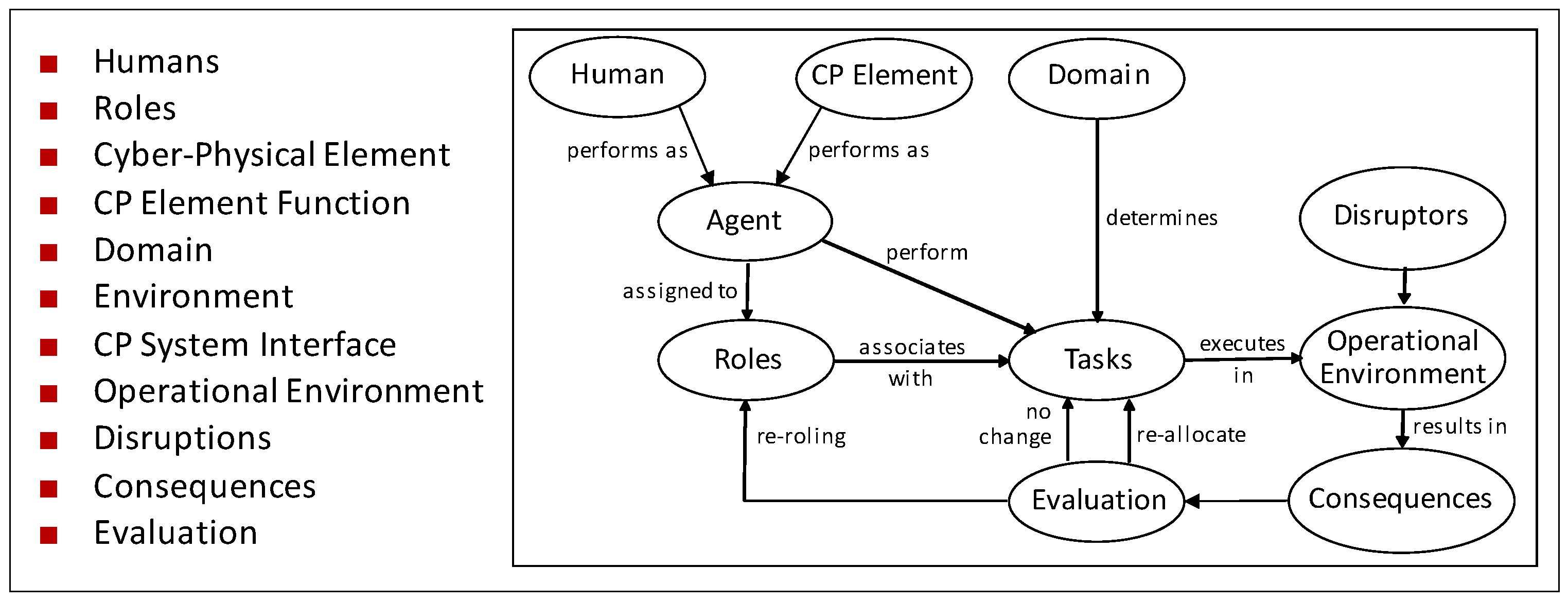

HMT ontology: The starting point for the development of an HMT architectural framework is defining an HMT ontology (information model) that explicitly represents the key concepts and relationships associated with adaptive HMT [32].

In the HMT ontology, the human(s) and CP elements are agents that are assigned to roles associated with tasks that are executed in real or simulated operational environments. Task execution results in performance outcomes that are evaluated with a view to determining whether task reallocation between humans and CP elements is warranted, and whether humans and CP elements need to be assigned different roles in the light of changes in operational context.
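A minimal sketch of these concepts as Python data types is given here, assuming a much-reduced ontology of agents, roles, and tasks. All class, method, and field names are hypothetical, and the score-versus-threshold rule for reallocation is an assumed stand-in for whatever evaluation criteria an actual implementation would use.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Agent:
    name: str
    kind: str  # "human" or "cyber-physical"


@dataclass(frozen=True)
class Task:
    name: str


@dataclass
class Role:
    name: str
    tasks: list[Task] = field(default_factory=list)


@dataclass
class Team:
    assignments: dict[str, Agent] = field(default_factory=dict)  # role name -> agent

    def assign(self, role: Role, agent: Agent) -> None:
        self.assignments[role.name] = agent

    def reallocate_if_warranted(self, role: Role, candidate: Agent,
                                outcome_score: float, threshold: float) -> bool:
        """Reassign the role when the observed outcome falls below the threshold."""
        if outcome_score < threshold:
            self.assign(role, candidate)
            return True
        return False


operator = Agent("operator", "human")
uav = Agent("uav-1", "cyber-physical")
patrol = Role("perimeter patrol", [Task("scan sector"), Task("report intrusions")])
team = Team()
team.assign(patrol, operator)
changed = team.reallocate_if_warranted(patrol, uav, outcome_score=0.4, threshold=0.6)
print(changed, team.assignments["perimeter patrol"].name)
```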

Figure 1 presents the key concepts and their relationships in an HMT ontology.

Specifically, the HMT ontology helps with: answering HMT questions, specifying HMT architecture, and facilitating human-machine team integration [32]. The key elements of the ontology are presented in Table 2.

HMT Reference Architecture: The HMT reference architecture provides the starting point (in the form of a template) for generating domain-specific HMT architectures. Specifically, it provides the required elements and a common vocabulary for guiding HMT implementation in a manner that emphasizes commonality. The reference HMT architecture is based on the HMT ontology. It captures the key elements needed for planning, design, and engineering of adaptive human-machine teams. The reference architecture also informs and guides the definition of HMT use cases and scenarios. It provides consistent semantics for composing human-machine teams for various domains. The reference architecture also provides the starting point for specifying architectural adaptations in human-machine team configurations needed to respond to a fault, failure, or disruption. The reference architecture also serves as a guide for integrating human-machine teams.

Shared Task Execution: In human-machine teams, responsibilities are shared between human(s) and machine (i.e., cyber-physical elements). Task sharing can vary based on whether the human-machine team is engaged in nominal operations or contingency situations such as responding to disruptions [1,2]. Under nominal operations, humans are invariably responsible for high level planning and decision making while machines execute detailed actions. In contingency situations (e.g., responding to disruptions), the nominal allocation may be superseded in the interest of human safety, or over-ridden to avoid potentially hazardous machine task(s) or to suspend faulty machine operation. In the same vein, the machine can take over human task(s) upon human request, after a period of human inactivity exceeds a time threshold, or when machine queries go unanswered by the human during that time interval [1,2].
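The three takeover conditions just described can be written as a single predicate. This is a sketch under stated assumptions: the function and parameter names are hypothetical, times are in seconds, and a machine query is treated as unanswered once it has waited longer than the same inactivity threshold.

```python
from typing import Optional


def machine_should_take_over(
    human_requested: bool,
    seconds_since_human_action: float,
    oldest_unanswered_query_age_s: Optional[float],
    inactivity_threshold_s: float,
) -> bool:
    """Hypothetical takeover rule; all times are in seconds."""
    # Condition 1: the human explicitly hands the task over.
    if human_requested:
        return True
    # Condition 2: the human has been inactive for longer than the threshold.
    if seconds_since_human_action > inactivity_threshold_s:
        return True
    # Condition 3: a machine query has waited longer than the threshold (None = no open query).
    if (oldest_unanswered_query_age_s is not None
            and oldest_unanswered_query_age_s > inactivity_threshold_s):
        return True
    return False


print(machine_should_take_over(False, 12.0, 45.0, inactivity_threshold_s=30.0))
```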

An erroneous assumption that is often made is that machines are good at tasks that humans are poor at, and vice versa. This is an incorrect assumption because there are tasks that both can do well (e.g., option selection) and there are tasks that neither does well (e.g., rapid estimation/assessment of risks). This recognition brings us to define six task performance regimes: (1) machines excel at, but humans are poor at; (2) humans excel at, but machines are poor at; (3) humans and machine (automation) can both perform well; (4) neither does well, and therefore should be avoided through proper system design; (5) machines have an advantage over humans; and (6) humans have an advantage over machines [33].

Figure 2 presents these different regimes in an easy-to-understand, compact representation.

Selective Fidelity Human Behavior Modeling. Modeling human behavior is an important aspect of adaptive HMT. The key questions that need to be answered are: (a) what aspects of humans should be modeled for adaptive human-machine teams? (b) Is there a methodological basis for determining a reduced order human model (i.e., sparse representation) for specific types of tasks performed by adaptive human-machine teams?

A comprehensive human model is one that reflects: perceptual, cognitive, and psychomotor capabilities; kinematic and cognitive constraints; and human cognitive and emotional state. However, for planning and decision-making tasks, there is no need to include kinematic constraints. This is one type of simplification. The abstraction level of the model is another area of potential simplification. The model needs to be sensitive to the influences of changes in environmental conditions and disruptions. Based on the usage context, human behavior models can range from generic to specific and high level to low level. For example, high-level behavior modeling is appropriate for planning and decision-making, while detailed narrow models are needed for specialized control.

For instance, in modeling a smart thermostat, where the goal is to save energy, hidden Markov models (HMM) have been used to model human occupancy and sleep patterns of residents [35,36]. This is a relatively high-level human behavior model that needs to produce the data required to calculate energy consumption. Contrast this model with the model needed to determine when to administer insulin to a diabetic. Impulsive injection of insulin employs mathematical models for diabetes mellitus. Such a model determines the need for insulin injection by monitoring glucose level relative to the threshold level for administering insulin. This is a narrow, low-level model.

When it comes to planning and decision-making tasks, human behavior models need to be sensitive to cognitive load/overload, emotional state, fatigue level, temporal stress, infrequently occurring events and their impact on human vigilance, and high frequency events and their impact on a human’s ability to keep up with the event stream. For most practical HMT applications, human behavioral model elements need to include: task (along with required knowledge and skills); person (along with qualification, availability, location); role (along with defining attributes such as qualification requirement, training requirement, experience requirement, and location); and constraints, including those that are cognitive, attentional, locational, logistical, and spatiotemporal.

Fatigue, emotional state, and work overload are important to measure because they can lead to human performance degradation. Human performance degradation typically occurs when the human begins to sacrifice performance on the secondary task to bring cognitive load to a manageable level. In an extreme overload situation, the human can start shedding secondary tasks. Knowing human cognitive load and emotional state can enable the machine to take over those tasks that the machine is also capable of performing (possibly not as well as the human) and thereby circumvent precipitous human performance degradation.
It is important to note that there are tasks that both the human and machine are capable of performing, in which case the tasks can be assigned to either based on other factors such as availability and existing cognitive load. When a task can be performed only by the human, knowledge of the human cognitive state and fatigue level (from electrophysiological sensors) can enable the machine to help the human with task prioritization decisions. It is important to realize that humans tend to change their cognitive strategies under extreme stress and cognitive overload by initially sacrificing performance on secondary tasks, and eventually shedding secondary task(s). In these situations, the machine can ensure that the human is informed and clear on primary and secondary tasks, i.e., knows the task priorities. In real-time task performance, task “stacks” (i.e., tasks that need to be performed concurrently) and their cognitive content are effective proxy measures of cognitive load [7].
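The idea of using the task stack as a proxy for cognitive load, and of handing machine-capable secondary tasks to the machine before the human starts shedding them, can be sketched as follows. This is a minimal illustration, not the authors' model: the `Task` fields, the 0 to 1 demand ratings, the additive load proxy, and the `limit` threshold are all assumptions introduced here.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    cognitive_demand: float  # hypothetical 0..1 rating of the task's cognitive content
    primary: bool            # True for primary tasks, False for secondary tasks
    machine_capable: bool    # True if the machine can also perform the task


def load_proxy(stack):
    """Proxy for cognitive load: summed demand of concurrently active tasks (assumed additive)."""
    return sum(t.cognitive_demand for t in stack)


def offload_to_machine(stack, limit=1.0):
    """Move machine-capable secondary tasks to the machine until the proxy load fits the limit."""
    human = list(stack)
    machine = []
    # Consider the most demanding machine-capable secondary tasks first.
    candidates = sorted(
        (t for t in stack if not t.primary and t.machine_capable),
        key=lambda t: t.cognitive_demand,
        reverse=True,
    )
    for task in candidates:
        if load_proxy(human) <= limit:
            break
        human.remove(task)
        machine.append(task)
    return human, machine


stack = [
    Task("monitor perimeter feed", 0.6, True, False),
    Task("log sensor status", 0.3, False, True),
    Task("radio check-in", 0.2, False, False),
    Task("route replanning", 0.4, False, True),
]
human, machine = offload_to_machine(stack, limit=1.0)
print([t.name for t in machine])
print(round(load_proxy(human), 2))
```

Primary tasks and tasks that only the human can perform always stay with the human in this sketch; if the load still exceeds the limit after offloading, the machine's remaining contribution would be to make the task priorities clear.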

State Determination: In a human-machine team, it is important to keep track of human cognitive and emotional state with, for example, electrophysiological signals which are acquired and analyzed using signal processing and machine learning algorithms. Cognitive state indicates cognitive load and fatigue level, while emotional state indicates a person’s anxiety level. This knowledge can inform task allocation decisions between humans and CP elements [1,2].

Dynamic Context Management: Knowledge of context is important in choosing the most effective HMT configuration. Context is more than human role and location. For operational missions, METT-TC is an excellent descriptor of context as noted earlier. Dynamic context management can be implemented using the publish-subscribe pattern [30].
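A minimal in-process sketch of publish-subscribe context management is shown below. The `ContextBroker` class, its method names, and the topic names (loosely modeled on METT-TC elements) are hypothetical and chosen for illustration; a fielded system would more likely rely on a networked message broker.

```python
from collections import defaultdict


class ContextBroker:
    """Minimal in-process publish-subscribe broker for context updates (illustrative only)."""

    def __init__(self):
        self._subscribers = defaultdict(list)  # topic -> list of callbacks
        self._latest = {}                      # topic -> most recently published value

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)
        # Replay the latest known value so that late subscribers start in sync.
        if topic in self._latest:
            callback(topic, self._latest[topic])

    def publish(self, topic, value):
        self._latest[topic] = value
        for callback in self._subscribers[topic]:
            callback(topic, value)


received = []
broker = ContextBroker()
broker.publish("terrain", "urban")  # published before anyone subscribes
broker.subscribe("terrain", lambda t, v: received.append((t, v)))
broker.subscribe("time_available", lambda t, v: received.append((t, v)))
broker.publish("time_available", "15 min")
broker.publish("terrain", "open field")
print(received)
```

Publishers of context (sensors, planners, the human) and consumers (task allocators, displays) stay decoupled: each only needs to agree on topic names, which makes it straightforward to add or remove context sources as the HMT configuration changes.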

Dynamic Function/Task Allocation: In a dynamic environment, the allocation of the function/task between humans and cyber-physical elements is a function of context, the availability of human(s) and CP elements, and human cognitive and emotional states. Heuristics can be employed to represent the criteria for function/task allocation between human(s) and CP elements (Table 1).
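To make the notion of allocation heuristics concrete, the sketch below encodes a few plausible rules as a function of availability, capability, and human state. These rules are assumptions made for illustration and are not a transcription of Table 1; the thresholds `load_limit` and `anxiety_limit` and the normalized 0 to 1 state estimates are likewise hypothetical.

```python
from dataclasses import dataclass


@dataclass
class HumanState:
    available: bool
    cognitive_load: float  # hypothetical normalized estimate, 0..1
    anxiety: float         # hypothetical normalized estimate, 0..1


def allocate(human_capable, machine_capable, machine_available, human,
             load_limit=0.7, anxiety_limit=0.8):
    """Return 'human', 'machine', or 'unassigned' for a single task."""
    human_ok = human_capable and human.available
    machine_ok = machine_capable and machine_available
    if not human_ok and not machine_ok:
        return "unassigned"
    if human_ok and not machine_ok:
        return "human"
    if machine_ok and not human_ok:
        return "machine"
    # Both can perform the task: keep it with the human unless the human's
    # estimated cognitive load or anxiety exceeds the assumed thresholds.
    if human.cognitive_load > load_limit or human.anxiety > anxiety_limit:
        return "machine"
    return "human"


calm = HumanState(available=True, cognitive_load=0.4, anxiety=0.2)
overloaded = HumanState(available=True, cognitive_load=0.9, anxiety=0.3)
print(allocate(True, True, True, calm))
print(allocate(True, True, True, overloaded))
```

Re-running `allocate` whenever the context or the human state estimate changes gives a simple form of dynamic re-allocation.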

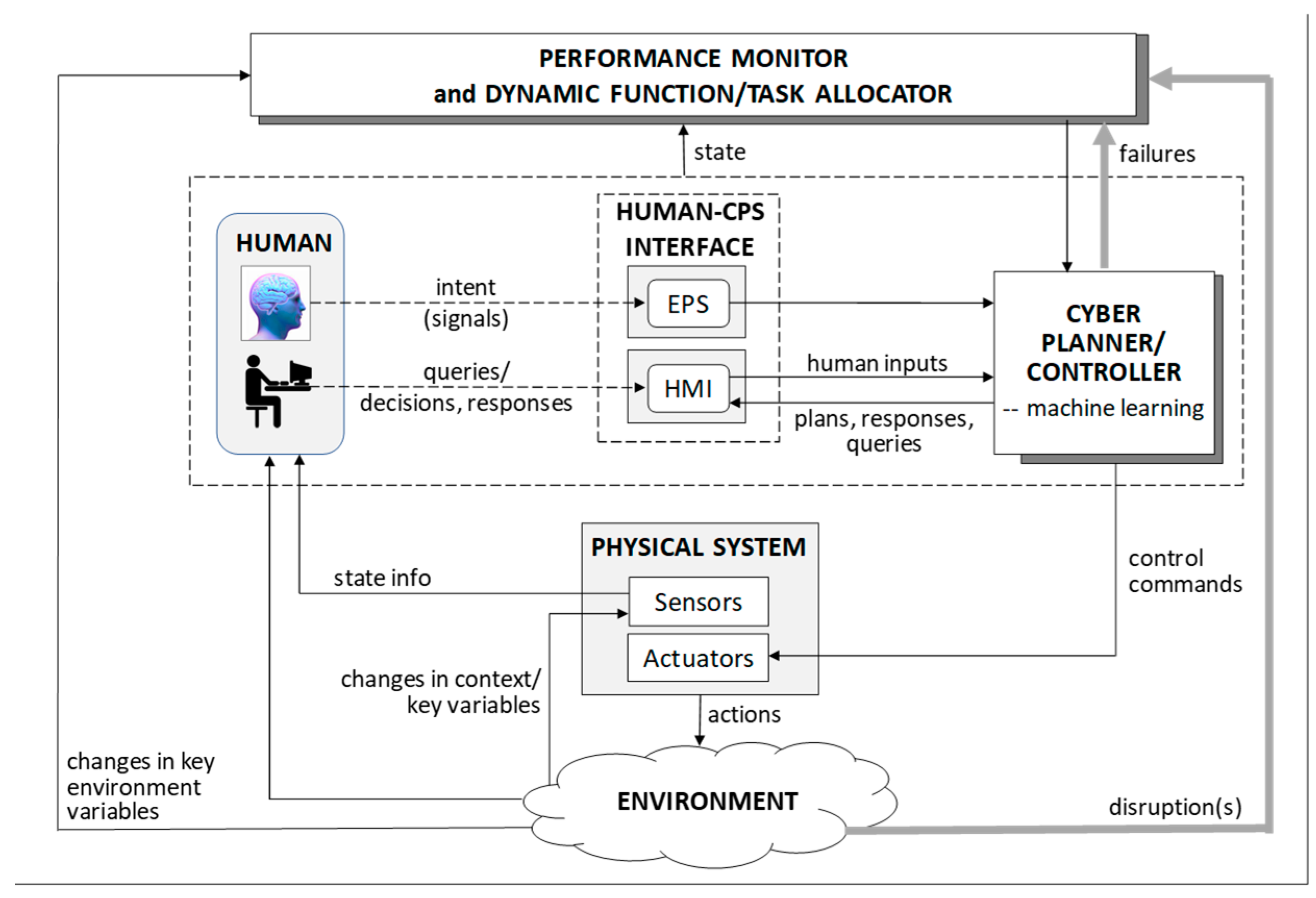

Figure 3 presents an adaptive HMT architectural framework for modeling and analyzing HMT options and for developing the concept of operation of an adaptive human-machine team [33].

As shown in this figure, human electrophysiological signals captured through sensors are analyzed using stochastic modeling techniques (e.g., Bayesian Belief Networks (BBNs)) to infer human intent. This information flows through the network fabric to the cyber planner/controller (hardware and software), which generates control signals that drive the actuators of the physical system, which in turn acts on the operational environment. The state of the physical system is provided as feedback to the human, while environment changes are sensed by the sensors of the physical system and conveyed to the machine controller. This generic architecture reflects the behavior of an adaptive cyber-physical human system (CPHS), which is a type of adaptive human-machine system with tight coupling and stringent temporal constraints.

Machine Learning: There are ample opportunities for machine learning (supervised, unsupervised, reinforcement) in adaptive human-machine teams. During the planning stages, operational scenario simulations can be employed in which humans perform tasks and exhibit their information seeking behaviors. Supervised machine learning algorithms can be employed to learn human information preferences and priorities during simulated task performance [21]. During operational mission execution, reinforcement (machine) learning can be employed in partially observable, uncertain environments. Sensors, networks, and people are all potential sources of learning in operational environments.

6. Machine Roles and Interactions in Adaptive Human-Machine Teams

The machine (i.e., CP elements) can assume a variety of roles in human-machine teams. These include: personal assistant, teamwork facilitator, teammate (or associate), and collective moderator. These innovative roles and their capabilities are presented in Table 3.

A personal assistant is a task-oriented agent that provides personalized support to an individual team member [31]. It can exploit context-awareness (i.e., knowledge of context) to prefetch and retrieve information. It can also respond to human queries in different contexts.

A teamwork facilitator is a coordination-oriented agent that aids communication, facilitates coordination among human teammates, and focuses attention.

An associate (or Teammate) is an agent that is capable of cognitive task performance like a human teammate. It is capable of reasoning and estimation. It needs to be effective in both task work and teamwork. In this case, the team is well-defined with a single mission.

A collective moderator is an agent capable of rapidly setting up ad hoc collectives comprising traditional teammates and temporary, new team members acquired through social media and crowdsourcing to address problems that require rapid and innovative idea generation and problem solving. The collective moderator builds on recent research on crowdsourcing that has developed human-machine moderator devices for use by ad hoc collections specifically assembled to solve complex problems. By bringing together diverse viewpoints and providing moderated facilitation, collaborative innovation becomes possible. For example, there is recent research indicating that collaborative innovation is more likely when validated paradoxes to a situation are made clear and are trusted by the participants (i.e., the paradoxes cannot simply be pronounced by fiat but must emerge from the collective). The machine moderator can monitor the collective discussion, invite appropriate members, determine whether paradoxes are failing to emerge, and suggest to the collective that such paradoxes are needed. Machine learning can be expected to play a key role in data-rich collaboration environments.

Interactions within human-machine teams depend on the respective roles of the human(s) and their machine (i.e., cyber-physical elements) counterparts. Two common human and machine roles are: human in a supervisory role [37]; and machine in an active/passive monitoring role.

A human in a supervisory role directly controls the machine (i.e., CP elements), and can intervene in control algorithms, and adjust set points. The machine (i.e., CP elements) then carries out commands, reports results, and continues operation until it receives a new command or adjustment from the human.

A machine in a monitoring role can perform in an open-loop or closed-loop fashion [27,28,29]. An example of open-loop monitoring is a sleep tracking device that determines sleep quality [35]. The device also monitors sound, light, temperature, and motion sensors to record environmental conditions during sleep (i.e., context). The device presents information on possible causes of sleep disruption to the user on a device such as a smartphone. The human is in-the-loop in this case but does not directly control the device. Also, the machine does not take proactive action to improve sleep quality. In sharp contrast, a smart thermostat is a closed-loop system with humans-in-the-loop [36]. It uses sensors to detect occupancy and sleep patterns in the home. It uses these patterns to proactively turn off the HVAC system to save energy.
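The contrast between open-loop and closed-loop monitoring can be illustrated with a small occupancy estimator. The sketch below uses a two-state hidden Markov model with a forward filter, in the spirit of the HMM-based occupancy modeling mentioned earlier; all probabilities, the observation symbols, and the `empty_threshold` value are made-up assumptions, not parameters from the cited systems.

```python
# Hypothetical two-state HMM: hidden state is home occupancy, observation is motion.
STATES = ("occupied", "empty")
TRANSITION = {
    "occupied": {"occupied": 0.9, "empty": 0.1},
    "empty": {"occupied": 0.2, "empty": 0.8},
}
EMISSION = {
    "occupied": {"motion": 0.7, "still": 0.3},
    "empty": {"motion": 0.05, "still": 0.95},
}


def forward_filter(observations, prior=None):
    """Return P(state | all observations so far) after the last observation."""
    belief = dict(prior or {"occupied": 0.5, "empty": 0.5})
    for obs in observations:
        # Predict step: propagate the belief through the transition model.
        predicted = {s: sum(belief[p] * TRANSITION[p][s] for p in STATES) for s in STATES}
        # Update step: weight by the observation likelihood, then normalize.
        unnorm = {s: predicted[s] * EMISSION[s][obs] for s in STATES}
        total = sum(unnorm.values())
        belief = {s: unnorm[s] / total for s in STATES}
    return belief


def open_loop_monitor(observations):
    """Open loop: report the estimate to the user; the machine takes no action."""
    return {"report": forward_filter(observations)}


def closed_loop_thermostat(observations, empty_threshold=0.85):
    """Closed loop: act on the estimate by switching the HVAC system."""
    belief = forward_filter(observations)
    return "hvac_off" if belief["empty"] >= empty_threshold else "hvac_on"


print(open_loop_monitor(["still", "still"]))
print(closed_loop_thermostat(["still"] * 4))
print(closed_loop_thermostat(["still", "still", "motion"]))
```

Both functions share the same estimator; the only difference is whether the estimate is merely reported to the human or is used to drive an actuator.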

A simple use case for adaptive control is that of a quadcopter autonomously flying a reconnaissance pattern. The field commander’s (observer’s) intent in response to changes in the environment is reflected in the commander’s electrophysiological signals. Electrophysiological sensors (EPS) record these signals and pass them to the intent inferencing software. This module decodes human intent from the EPS signals and contextual knowledge and passes it through the network fabric to the quadcopter controller. The quadcopter autonomously adjusts its reconnaissance pattern in response to the field commander’s intent. This simple adaptation approach consists of: adjustable trajectory; human intent inferencing; and intent-driven surveillance pattern adaptation [1,2].

Adjustable Trajectory: The quadcopter flies a pre-determined pattern with the ability to adjust its trajectory if needed. An underlying stochastic model of the quadcopter maintains health and status information. This model employs an optimal utility function to autonomously correct quadcopter position and attitude when environmental or mechanical disruptions necessitate altering its flight pattern. This is an example of localized control to conduct autonomous reconnaissance patterns.

Human Intent Inferencing: EPS capture intent-related electrophysiological signals that can be decoded using stochastic modeling approaches such as Bayesian Belief Networks (BBNs). This approach is consistent with the concept of sensor fusion and dependence among nodes and states [1,2].
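As a rough illustration of Bayesian intent inferencing, the sketch below uses the simplest possible belief network: a single intent node with two conditionally independent evidence nodes (one EPS-derived feature and one contextual cue). The intent labels, evidence nodes, and every probability are invented for illustration; a real BBN would have a richer structure and parameters learned from data.

```python
INTENTS = ("continue_pattern", "inspect_region", "return_to_base")

# Hypothetical prior over commander intent.
PRIOR = {"continue_pattern": 0.6, "inspect_region": 0.3, "return_to_base": 0.1}

# Hypothetical conditional probabilities P(evidence value | intent).
LIKELIHOOD = {
    "eps_arousal": {
        "continue_pattern": {"low": 0.8, "high": 0.2},
        "inspect_region": {"low": 0.3, "high": 0.7},
        "return_to_base": {"low": 0.4, "high": 0.6},
    },
    "context_alert": {
        "continue_pattern": {"none": 0.9, "intrusion": 0.1},
        "inspect_region": {"none": 0.2, "intrusion": 0.8},
        "return_to_base": {"none": 0.7, "intrusion": 0.3},
    },
}


def infer_intent(evidence):
    """Posterior over intents given observed evidence values (Bayes' rule)."""
    unnorm = {}
    for intent in INTENTS:
        p = PRIOR[intent]
        for node, value in evidence.items():
            p *= LIKELIHOOD[node][intent][value]
        unnorm[intent] = p
    total = sum(unnorm.values())
    return {intent: unnorm[intent] / total for intent in INTENTS}


posterior = infer_intent({"eps_arousal": "high", "context_alert": "intrusion"})
best = max(posterior, key=posterior.get)
print(best, round(posterior[best], 3))
```

Fusing the EPS feature with the contextual cue is what shifts the posterior: with these made-up numbers, neither piece of evidence alone is as decisive as the two together.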

Intent-driven Surveillance Pattern Adaptation: The inferred intent is employed to update the quadcopter surveillance pattern. Quadcopter model parameters are modified as needed before the quadcopter begins to perform its new surveillance pattern.

7. Illustrative Example: Perimeter Security of a Landed Aircraft

Figure 4 presents an example of a military scenario in which a human-machine team needs to collaborate and adapt as needed to counter potential intrusion of a perimeter defense set up to ensure physical security of a landed and currently parked C-130 aircraft [1,2]. The C-130 is a cargo and personnel carrier.

The landing strip is partially protected by unattended ground sensors (UGS). Buildings adjacent to the runway are equipped with video cameras and long wave infrared (LWIR) cameras. Robotic sentries patrol the area while UAVs conduct aerial surveillance. As the deplaning troops head out to pursue their mission, a few stay behind to assure aircraft security. The commander in charge of maintaining aircraft security is equipped with a laptop for monitoring, planning, and decision-making. The laptop is equipped with a smart dashboard and a wireless connection to the robotic sentries, UAVs, UGS, video cameras, and LWIR cameras.

The HMT ontology, a key element of the HMT framework, can now be applied to this problem. We begin by identifying the human roles. In our example, the two main human roles are the commander and the UAV operator. Next, we identify the functions/tasks associated with the different roles as a function of the level of autonomy. The commander can serve as: a supervisor, who issues high level commands to the different agents including the UAV swarm; or a monitor of autonomous agents, who intervenes only when needed to revise goals or redirect assets (i.e., resources) based on available external intelligence. The UAV operator can perform one of three functions: issue high level commands to UAVs; monitor UAVs during task performance and only intervene to specify a new waypoint, a new reconnaissance trajectory pattern, or a new goal; or manually control the UAV.

The role of the commander can change from that of a monitor (who intervenes infrequently to redirect resources to new regions or regions where intrusion is suspected, or revise a goal based on intelligence from higher headquarters) to that of a supervisory controller who issues high level commands that are carried out by machine agents if the commander perceives the agents to be confused or not behaving as expected. Similarly, the role of the UAV operator can change from a high-level supervisory controller to a manual controller if the UAV encounters an unknown situation (e.g., unknown threat) and requests human intervention, in which case the human-in-the-loop can steer the UAV to a safe state by taking control of the trajectory.
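The role changes described above can be viewed as a small state machine. The sketch below is one way to encode it; the event names are hypothetical labels for the triggers named in the text, and the `situation_resolved` transitions back to the original roles are an assumption, since the scenario description does not state when roles revert.

```python
# (actor, current role, event) -> new role. Event names are hypothetical labels.
TRANSITIONS = {
    ("commander", "monitor", "agents_behaving_unexpectedly"): "supervisory_controller",
    ("commander", "supervisory_controller", "situation_resolved"): "monitor",  # assumed
    ("uav_operator", "supervisory_controller", "uav_requests_intervention"): "manual_controller",
    ("uav_operator", "manual_controller", "situation_resolved"): "supervisory_controller",  # assumed
}


class RoleTracker:
    """Tracks the current role of each human and logs every role change."""

    def __init__(self, initial_roles):
        self.roles = dict(initial_roles)
        self.audit = []  # list of (actor, old role, event, new role)

    def handle(self, actor, event):
        current = self.roles[actor]
        # Events with no matching transition leave the role unchanged.
        new = TRANSITIONS.get((actor, current, event), current)
        if new != current:
            self.audit.append((actor, current, event, new))
            self.roles[actor] = new
        return new


tracker = RoleTracker({"commander": "monitor", "uav_operator": "supervisory_controller"})
tracker.handle("uav_operator", "uav_requests_intervention")
tracker.handle("commander", "agents_behaving_unexpectedly")
tracker.handle("uav_operator", "situation_resolved")
print(tracker.roles)
print(len(tracker.audit))
```

Keeping the transitions in a table makes it easy to explore alternative role-change policies in simulation without changing the tracking logic.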

The machine comprises the commander’s laptop with machine learning, planning, execution monitoring, and resource allocation software, the different types of sensors, and UAVs. The key functions of the machine are to: monitor segmented regions for intruders; alert commander of potential intrusion(s); support commander’s planning and execution monitoring along with context-sensitive visualizations; reconfigure perimeter defense based on pre-planned protocols or commander’s direction; adapt UAV surveillance pattern based on commander’s direction; infer commander intent using EPS and probabilistic analysis software; learn commander’s priorities and preferences in different contexts; and learn system and environment state in partially observable environments using reinforcement learning.

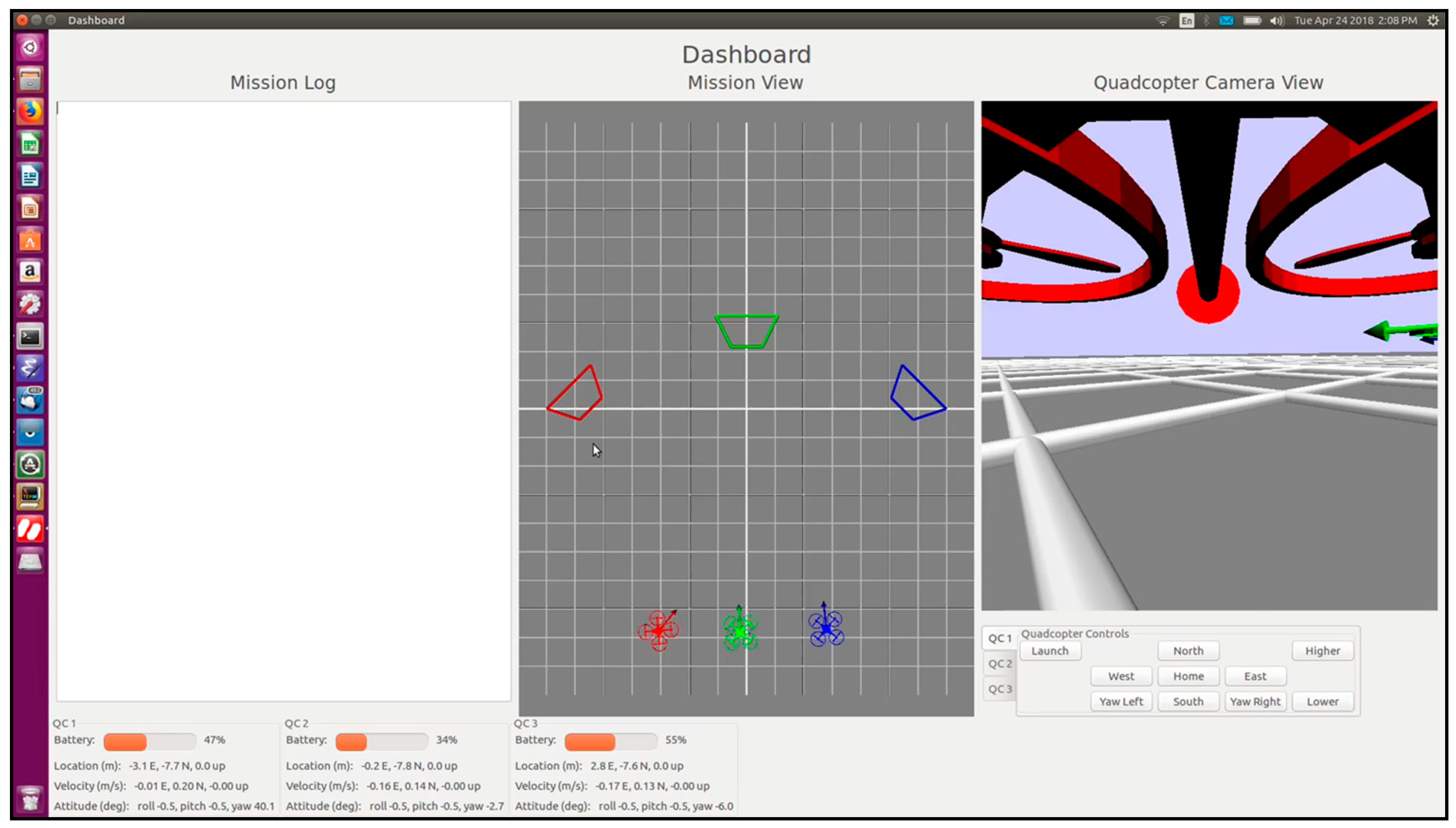

The commander’s user interface comprises two monitors: one for monitoring the status of individual surveillance regions, with real-time views based on updates from the security cameras; and one for monitoring the execution status of actions taken by the commander using the user interface (UI). The monitors provide real-time state and status information in color-coded format (Figure 5).

As shown in this figure, the left monitor is a situation awareness display. It shows the eight different regions that are being surveilled. Color codes such as green or red mean that the region is safe, or a potential intruder has been detected, respectively. Real-time views of the regions reflect data from security cameras. Detailed information on each region is provided based on actions taken by the user.

When a region’s color code turns red, the user clicks on that region’s icon to acquire detailed information. The right monitor provides detailed information on sensors, motion, location, cameras, etc. The actions that the commander (user) can take include calling in a security crew, turning on alarms, reporting the incident to higher headquarters, etc.
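The color-coding and drill-down behavior of the two monitors can be sketched as a small data model. This is an illustrative reconstruction rather than the prototype's code: the `Region` class, the `detail_panel` function, and the detection labels are hypothetical, while the color semantics and the list of commander actions follow the description above.

```python
from dataclasses import dataclass, field


@dataclass
class Region:
    region_id: int
    detections: list = field(default_factory=list)  # e.g. ["motion", "LWIR"]

    @property
    def color(self):
        # Green: region is safe. Red: a potential intruder has been detected.
        return "red" if self.detections else "green"


def detail_panel(region):
    """Content for the right monitor when the user clicks a region icon."""
    if region.color == "green":
        return {"region": region.region_id, "status": "safe", "actions": []}
    return {
        "region": region.region_id,
        "status": "potential intruder",
        "sources": list(region.detections),
        "actions": ["call security crew", "turn on alarms", "report to higher headquarters"],
    }


regions = [Region(i) for i in range(1, 9)]  # eight surveilled regions
regions[2].detections.append("motion")      # region 3 reports motion
print([r.color for r in regions])
print(detail_panel(regions[2]))
```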

Figure 6 shows a prototype dashboard implementation for multi-quadcopter management. The dashboard in this prototype shows three quadcopters, which are tasked with collaboratively pursuing a mission. Each quadcopter can be launched independently. The dashboard provides a plan view showing the progress of the three quadcopters toward their goal. When the user selects a quadcopter icon, the dashboard provides a camera view for the selected quadcopter, along with the status information for that quadcopter in terms of location, battery level, etc. The human (agent) can intervene and redirect one or all quadcopters. The dashboard maintains an audit trail for each quadcopter and the quadcopter team. The dashboard is implemented in Python. It employs the MAVLink protocol to communicate with a simulated or real quadcopter. The communication is identical for both simulated and real quadcopters [1,2].

8. Conclusions and Future Prospects

This paper has presented an architectural framework to conceptualize, design, and evaluate adaptive human-machine teaming (HMT) options under a variety of what-if scenarios depicting alternate futures. While human teams have been extensively studied in the psychological and training literature, and agent teams have been similarly investigated in the artificial intelligence research community, research in human-machine teams tends to be fragmented and relatively sparse. This paper has combined findings from this fragmented literature (e.g., adaptive human-machine function allocation [1,2], adaptable systems [5], human-systems integration [3,4,38,39,40], cognitive neuroscience, behavioral psychology, computer science, machine learning, and system/system-of-systems integration) to define new machine roles and an overall system concept for human-machine teaming (HMT). An exemplar military scenario was used as a backdrop to develop an HMT prototype that illuminates exemplar human and machine roles in adaptive human-machine teams.

Advances in adaptive HMT research can be expected to include multiple applications of machine learning and sparse human behavior representations that can be exploited in developing sophisticated mixed-initiative adaptive human-machine decision systems with dramatically lower incidence of human oversight and human error [1,2,41]. Currently, adaptive HMT is an important research area in the Department of Defense (DoD), with a strong commitment from the Air Force Research Laboratory (AFRL), the Office of Naval Research (ONR), the Space and Naval Warfare Systems Command (SPAWAR), and the Army Research Laboratory (ARL). We can expect this area to advance steadily as government, academia, and industry work side-by-side to advance adaptive HMT technology.