Abstract

Decision management is the systems engineering life cycle process for making program/system decisions. The purpose of the decision management process is: “…to provide a structured, analytical framework for objectively identifying, characterizing and evaluating a set of alternatives for a decision at any point in the life cycle and select the most beneficial course of action”. Systems engineers and systems analysts need to inform decisions in a digital engineering environment. This paper describes a Decision Analysis Data Model (DADM) developed in model-based systems engineering software to provide the process, methods, models, and data to support decision management. DADM can support digital engineering for waterfall, spiral, and agile development processes. This paper describes the decision management processes and provides the definition of the data elements. DADM is based on ISO/IEC/IEEE 15288, the INCOSE SE Handbook, the SE Body of Knowledge, the Data Management Body of Knowledge, systems engineering textbooks, and journal articles. The DADM was developed to establish a decision management process and data definitions that organizations and programs can tailor for their system life cycles and processes. The DADM can also be used to assess organizational processes and decision quality.

1. Introduction

Systems engineers lack a widely available and reusable model-based decision support system. To address this gap, the INCOSE Decision Analysis Working Group developed a Decision Analysis Data Model (DADM) in Model-Based Systems Engineering software. The DADM provides decision management guidance to support multi-factored decisions, such as design comparisons or trade studies, while leveraging a model-based environment to improve how those decisions are analyzed and communicated. This data model was developed using the decision management methodology defined in the INCOSE Systems Engineering Handbook [1]. It defines the steps in the decision management process and identifies the data exchanged between those steps. The model can accelerate trade-off analyses, increase consistency, and support the documentation of decision outcomes in a digital model, enabling collaboration in a digital ecosystem in all life cycle stages.

The importance of this work is amplified by the INCOSE Systems Engineering Vision 2035 [2], which outlines several challenges that must be addressed to achieve the vision for the future state of systems engineering, including the following:

- Enable trusted collaboration and interactions through a digital ecosystem;

- Provide analytical frameworks for managing the lifecycle of complex systems;

- Widely adopt reuse practices.

1.1. Review of Research on Decision Analysis Data in Systems Engineering

The need for systems engineers to obtain a comprehensive set of data to support systems engineering decision making has been documented and reinforced across many decades.

- MIL-STD 499B [3], published in 1994, describes a requirement for a decision data base, which is “a repository of information used and generated by the systems engineering process, at the appropriate level of detail. The intent of the decision data base is that, when properly structured, it provides access to the technical information, decisions, and rationale that describe the current state of system development and its evolution”.

- The NASA Systems Engineering Handbook was initially published as NASA/SP-6105 in 1995. The 2007 revision describes a need to obtain a comprehensive set of data to support decision making while the initial 1995 publication does not. The most recent version of the NASA handbook [4], published in 2019, states, “Once the technical team recommends an alternative to a NASA decision-maker (e.g., a NASA board, forum, or panel), all decision analysis information should be documented. The team should produce a report to document all major recommendations to serve as a backup to any presentation materials used. The important characteristic of the report is the content, which fully documents the decision needed, assessments done, recommendations, and decision finally made.” In addition to prescribing the need to document decision analysis information, the NASA Systems Engineering Handbook prescribes that the process must be risk-informed, which may include both qualitative and quantitative techniques.

- The INCOSE Systems Engineering Handbook was initially published in 1997. Version 4 of the Handbook, published in 2015, was the first version to describe the need to obtain a comprehensive set of data to support decision making. The INCOSE Systems Engineering Handbook [1], published in 2023, states “Decisions should be documented using digital engineering artifacts. Reports that include the analysis, decisions, and rationale are important for historical traceability and future decisions”. The INCOSE Systems Engineering Handbook prescribes that the process must identify uncertainties and conduct probabilistic analysis.

1.2. Review of Research on Architectural Patterns, Reference Models, and Reference Architectures for Engineering Decisions

Bass, Clements, and Kazman [5] provide a framework for developing software that can be applied to develop a Decision Analysis Data Model to capture digital engineering artifacts that document decisions. They define three architectural structures:

- An architectural pattern is a description of element and relation types together with a set of constraints on how they may be used. A pattern can be thought of as a set of constraints on an architecture—on the element types and their patterns of interaction—and these constraints define a set or family of architectures that satisfy them. One of the most useful aspects of patterns is that they exhibit known quality attributes.

- A reference model is a division of functionality together with data flow between the pieces. A reference model is a standard decomposition of a known problem into parts that cooperatively solve the problem. Reference models are a characteristic of mature domains.

- A reference architecture is a reference model mapped into software elements (that cooperatively implement the functionality defined in the reference model) and the data flows between them. Whereas a reference model divides the functionality, a reference architecture is the mapping of that functionality onto a system decomposition.

There are many examples of architectural patterns, reference models, and reference architectures that have contributed to the advancement of digital engineering. Some important ones are shown in Table 1 in historical order.

Table 1.

Patterns, reference models, and reference architectures in engineering.

Early efforts in digital engineering focused on computer-aided manufacturing. The U.S. Air Force Program for Integrated Computer-Aided Manufacturing (ICAM) developed a series of models to increase manufacturing productivity through systematic application of computer technology [6]. Three of the models were (1) IDEF0, a functional model; (2) IDEF1, an information or data model; and (3) IDEF2, a dynamics model. Eventually, the National Institute of Standards and Technology released standards for IDEF0 and IDEF1 that were later withdrawn [7,8].

The Purdue Enterprise Reference Architecture (PERA) was a reference model for enterprise architecture, developed by Theodore J. Williams and members of the Industry-Purdue University Consortium for Computer Integrated Manufacturing [9]. The model allowed end users, integrators, and vendors to share information across five levels in an enterprise:

- Level 0—The physical manufacturing process.

- Level 1—Intelligent devices in the manufacturing process such as process sensors, analyzers, actuators, and related instrumentation.

- Level 2—Control systems for the manufacturing process such as real-time controls and software, human–machine interfaces, and supervisory control and data acquisition (SCADA) software.

- Level 3—Manufacturing workflow management such as batch management; manufacturing execution/operations management systems (MES/MOMS); laboratory, maintenance, and plant performance management systems; and data collection and related middleware.

- Level 4—Business logistics management such as enterprise resource planning (ERP), which establishes the plant production schedule, material use, shipping, and inventory levels.

The PERA was adopted and promulgated globally as a series of industry standards, known as ISA-95, that has had a significant impact on automation in the industrial supply chain worldwide [10,11,12,13,14,15,16,17].

Early efforts in reference models for systems engineering were summarized by Long in 1995 and included the Functional Flow Block Diagram (1989), the Data Flow Diagram (1979), the N2 Chart (1968), the IDEF0 Diagram (1989), and the Behavior Diagram (1968) [18].

The Unified Modeling Language (UML) provides system architects, software engineers, and software developers with tools for analysis, design, and implementation of software-based systems as well as for modeling business and similar processes [19]. UML has three diagram types [26]:

- Structure, which includes Class, Component, Composite Structure, Deployment, Object, and Package diagrams;

- Behavior, which includes Activity, State machine, and Use Case diagrams;

- Interaction, which includes Collaboration—Communication, Interaction Overview, Sequence, and Timing diagrams.

Business Process Model and Notation (BPMN) has become the de facto standard for business process diagrams [19]. It is used for designing, managing, and implementing business processes, and it is precise enough to be translated into executable software systems. It uses a flowchart-like notation that is independent of any implementation environment. The four basic elements of BPMN are flow objects that represent events, activities, and gateways; connecting objects that represent sequence flows, message flows, and associations; swim lanes that are a mechanism for organizing and categorizing activities; and artifacts that represent data objects, groups, and annotations.

Mendonza and Fitch developed the Decision Network Framework to link business, technology, and design choices [21,22]. It provides decision networks that can be used to set both the business vision and product concept for any product, system, or solution. It also has a decision-centric information model of the critical decisions and models the relationships between decision data, the requirements that drive these decisions, and the consequences that flow from them. Risk and opportunity identification and analysis are included in the framework, but the framework does not prescribe quantitative, probability-based methods for risk and opportunity identification and analysis. This framework was implemented in software and marketed as a proprietary product named Decision Driven® Design (US Trademark 75771618) from 2003 to 2024.

Systems Modeling Language (SysML) is a general-purpose modeling language for model-based systems engineering (MBSE) [23]. It provides the capability to represent a system using the three UML diagram types and adding a fourth diagram type [26]:

- Structure, which includes Block (renamed from Class), Package, and Parametric (new) diagrams and eliminates Component, Composite Structure, Deployment, and Object diagrams;

- Behavior, which includes Activity (modified), State Machine, and Use Case diagrams;

- Interaction, which includes Sequence diagrams and eliminates Collaboration—Communication, Interaction Overview, and Timing diagrams; and

- Requirements (new), which includes the Requirement (new) diagram.

SysML has been adopted widely within the systems engineering community—a search for the term “SysML” in the 154 papers that were presented at the 2024 INCOSE International Symposium yielded 34 papers that included the term at least once [27].

Decision Model and Notation (DMN) [24] is a standard approach for describing and modeling repeatable operational decisions within organizations to ensure that decision models are interchangeable across organizations. The standard includes three main elements:

- Decision Requirements Diagrams, which show how the elements of decision-making are linked into a dependency network;

- Decision tables, which represent how each decision in such a network can be made;

- Business context for decisions, such as the roles of organizations or the impact on performance metrics.

DMN is designed to work alongside BPMN and the Case Management Model and Notation (CMMN) [28], providing a mechanism to model the decision-making associated with processes and cases. The decisions that can be modeled in DMN are deterministic decision responses to deterministic inputs, i.e., they are prescriptive models like programming flowcharts. DMN includes examples where risk, affordability, and eligibility are incorporated via qualitative categorizations that serve as deterministic inputs to the decision prescriptions.
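The deterministic character of such decision tables can be illustrated with a minimal Python sketch. This is not DMN notation or its expression language; it is a plain analogue in which rules are checked in order and the first match wins. The input names (`risk`, `affordability`), the category values, and the outcomes are hypothetical and chosen only for illustration.

```python
from typing import Callable, Dict, List, Tuple

# A rule pairs a condition on the qualitative inputs with a prescribed outcome.
Rule = Tuple[Callable[[Dict[str, str]], bool], str]

# Hypothetical rules: qualitative categories act as deterministic inputs.
RULES: List[Rule] = [
    (lambda x: x["risk"] == "high", "reject"),
    (lambda x: x["risk"] == "medium" and x["affordability"] == "low", "refer"),
    (lambda x: True, "approve"),  # default rule when nothing above matches
]


def decide(inputs: Dict[str, str]) -> str:
    """Return the outcome of the first rule whose condition holds."""
    for condition, outcome in RULES:
        if condition(inputs):
            return outcome
    raise ValueError("no rule matched")
```

The same inputs always produce the same outcome, which is the sense in which such models are prescriptive rather than probabilistic.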

The Agile Systems Engineering Life Cycle Model (ASELCM) MBSE Pattern [25] accounts for information that is produced and consumed by systems engineering and other life cycle management processes. The ASELCM MBSE Pattern uses information models, not just process models, and it emphasizes learning in the presence of uncertainty. The information models in the MBSE pattern are intended to be built using composable knowledge. Davis states, “Composability is the capability to select and assemble components in various combinations to satisfy specific user requirements meaningfully. In modeling and simulation, the components in question are themselves models and simulations…. It is also useful to think of composability as a property of the models rather than of the particular programs.” [29].

Specking et al. use MBSE with Set-Based Design for Unmanned Aerial Vehicles [30]. Fang et al. use architecture design space generation with MBSE [31].

1.3. Main Aim of DADM and Principal Conclusions

The main aim of DADM was to develop a model that has the following characteristics:

- Enables trusted collaboration and interactions through a digital ecosystem;

- Is deployable and configurable for multiple decision domains;

- Provides an analytical framework for decision making across the lifecycle stages of complex systems;

- Will be widely adopted;

- Enables traceability and reuse of analysis, decisions, and rationale for decisions;

- Incorporates guidelines for identifying uncertainties and conducting probabilistic analysis and for documenting the rationale and results;

- Provides information models built using composable knowledge and process models that emphasize learning in the presence of uncertainty;

- Can be tailored to agile development for DevOps.

To meet these aims, the DADM was developed using composable SysML activity diagrams for process modeling and block definition diagrams for information modeling. The Magic System of Systems Architect was selected to implement the model, as it is widely used, is designed for trusted digital collaboration, allows for traceability and reuse, allows the capturing of guidelines and documentation, and can be tailored.

2. Methods

The methods used in developing DADM follow the data modeling practices of the International Data Management Association (DAMA) and were informed by the INCOSE Agile Systems Engineering Life Cycle Model (ASELCM).

2.1. Data Modeling

According to DAMA, Data Management is a wide-ranging set of activities, which includes the abilities to make consistent decisions about how to get strategic value using data [32]. These activities are organized into the Data Management Framework, which identifies ten (10) categories of data management activities that interact with an organization’s data governance to inform the data, information, and content lifecycle. These ten (10) data management activities begin with the definition of data architecture and design using data models. “Data modeling is the process of discovering, analyzing, and scoping data requirements, and then representing and communicating these data requirements in a precise form called the data model. This process is iterative and involves conceptual, logical, and physical models” [32].

The Decision Analysis Data Model mapped the inputs and outputs of the Decision Management processes defined in ISO/IEC/IEEE 15288 [33], the INCOSE Systems Engineering Handbook [1], and the SEBoK [34] to identify the high-level ‘decision concepts’ needed to execute the high-level Decision Management process. From there, the conceptual data model was decomposed and traced to an implementation-agnostic logical model, which defines data-driven processes and the data needs connecting them. Finally, implementation-specific physical data models would be tailored from the logical data model on a project-by-project basis.

2.1.1. Conceptual Data Model (CDM)

“A conceptual data model captures the high-level data requirements as a collection of related concepts. It contains only the basic and critical business entities within a given realm and functions.” [32]. In this regard, the conceptual DADM provides an executive-friendly definition of the key concepts that apply to business needs for their decision making. For example, the concept of a decision should include definitions of the decision itself, including the decision-maker(s), the alternatives being considered, and the values against which those alternatives will be evaluated. A summary of the DADM’s conceptual model is provided in Section 3.1.

2.1.2. Logical Data Model (LDM)

A CDM is too high-level to be implemented. The CDM defines needs without mapping those needs to data solutions. “A logical data model (LDM) captures the detailed data requirements within the scope of the CDM.” [32]. For example, whereas our CDM defines the decision concept as including a decision-maker, a set of alternatives, and a set of values, our LDM maps those to specific data points to include a decision authority, courses of action, and objectives. As the LDM was further refined, those data needs also included properties such as data attributes and domains, and the structure of data began to take shape without discussing additional properties of the data structure, such as format and validation rules, which are reserved for the physical data model. A summary of the DADM’s logical data model is captured in Section 3.2.
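The step from concept to logical data elements can be sketched as follows. This is a minimal illustration, not the DADM itself: the class and field names, the `direction` attribute and its domain, and the well-formedness rule are all assumptions introduced here. Only the three logical elements (decision authority, courses of action, objectives) come from the text above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class Objective:
    """A value against which courses of action are evaluated."""
    name: str
    direction: str  # assumed attribute domain: "maximize" or "minimize"


@dataclass(frozen=True)
class CourseOfAction:
    """One alternative under consideration."""
    name: str


@dataclass
class Decision:
    """Logical-level decision: authority, courses of action, objectives."""
    title: str
    decision_authority: str
    courses_of_action: List[CourseOfAction] = field(default_factory=list)
    objectives: List[Objective] = field(default_factory=list)

    def is_well_formed(self) -> bool:
        # Assumed rule for illustration: a choice needs an authority,
        # at least two alternatives, and at least one objective.
        return (
            bool(self.decision_authority)
            and len(self.courses_of_action) >= 2
            and len(self.objectives) >= 1
        )
```

Format and validation rules beyond this, such as storage types or naming constraints, would belong to a physical data model and are deliberately left out.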

2.1.3. Physical Data Model

“Logical data models require modifications and adaptations in order to have the resulting design perform well within storage applications.” [32]. Therefore, the implementation specifics for any given solution are defined in the Physical Data Model for that individual system. Note that the purpose of the Decision Analysis Data Model is to provide a reference for implementation for systems engineers and developers. Therefore, the DADM does not include a Physical Data Model.

2.2. Agile Systems Engineering Life Cycle Model

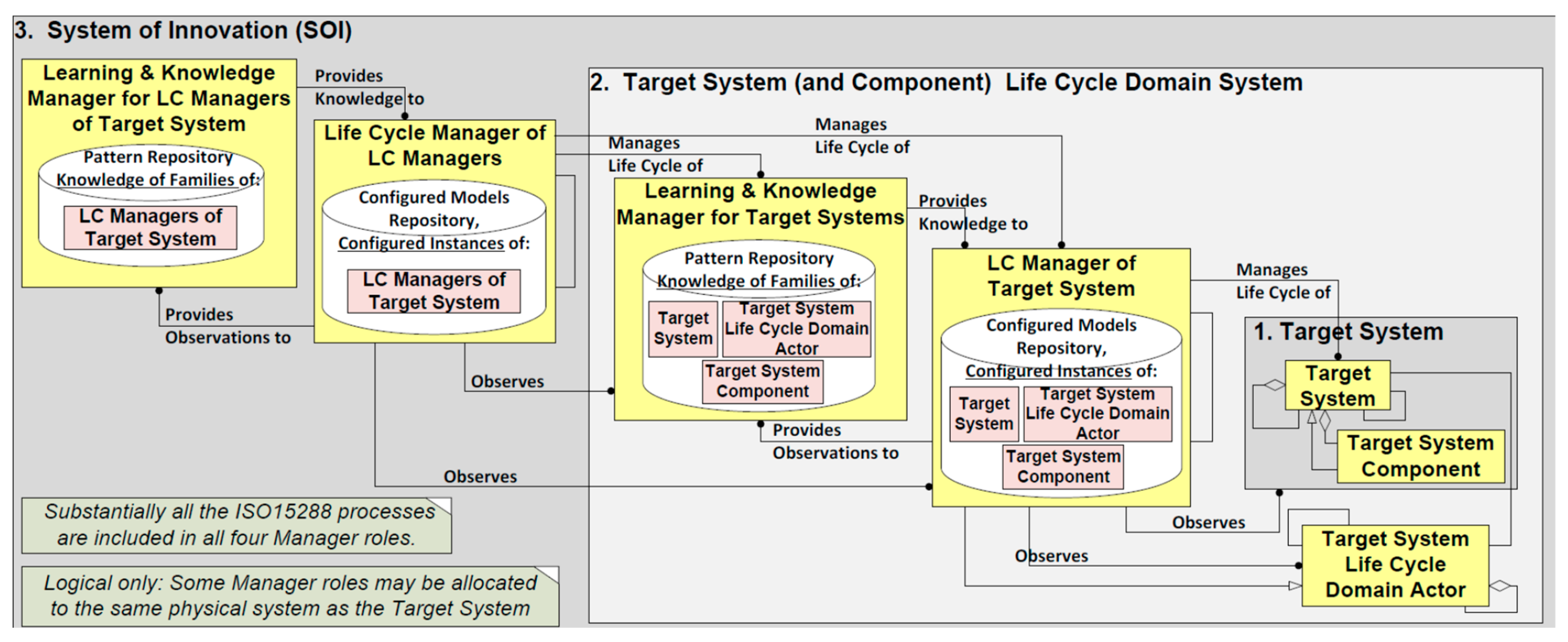

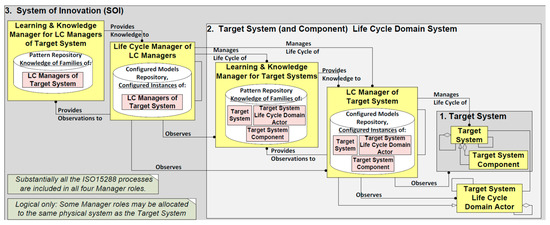

The INCOSE Agile Systems Engineering Life Cycle Model (ASELCM) has three major systems (Figure 1). The INCOSE ASELCM is a model of learning patterns that describes how systems improve through three interconnected levels: (1) the Target System; (2) the Lifecycle Domain System; and (3) the System of Innovation. System 1 of the ASELCM represents an engineered system of interest (and its components) about which development and operations decisions are being made. Note that many different instances of System 1 may be present over time. Examples include aircraft, automobiles, telephones, satellites, software systems, data centers, and health care delivery systems. System 2 is the life cycle domain system, which is the system within which different instantiations of System 1 will exist during their life cycle. This includes any system that directly manages the life cycle of an instance of a target system during its development, production, integration, maintenance, and operations. If System 1 is managed as a program over its life cycle, then System 2 can be thought of as a program office responsible for the life cycle stages of System 1 (and the program’s associated processes). Different instances of System 2 can occur, e.g., one instance could be a program office responsible for development, production, and integration; and another instance could be a program office responsible for maintenance and operations. System 3 is the system of innovation that includes System 1 and System 2, and that is additionally responsible for managing the life cycles of instances of any System 2. System 3 develops, deploys, and manages System 2 work processes and evaluates them for improvements. System 3 can be thought of as the enterprise innovation system, e.g., an organization that develops, produces, and integrates many diverse kinds of systems, or a user that operates, integrates, and maintains many different systems. The System of Innovation produces better lifecycle processes, and those better lifecycle processes are implemented to produce better target systems.

Figure 1.

Top-Level Agile System Engineering Life Cycle Model [25].

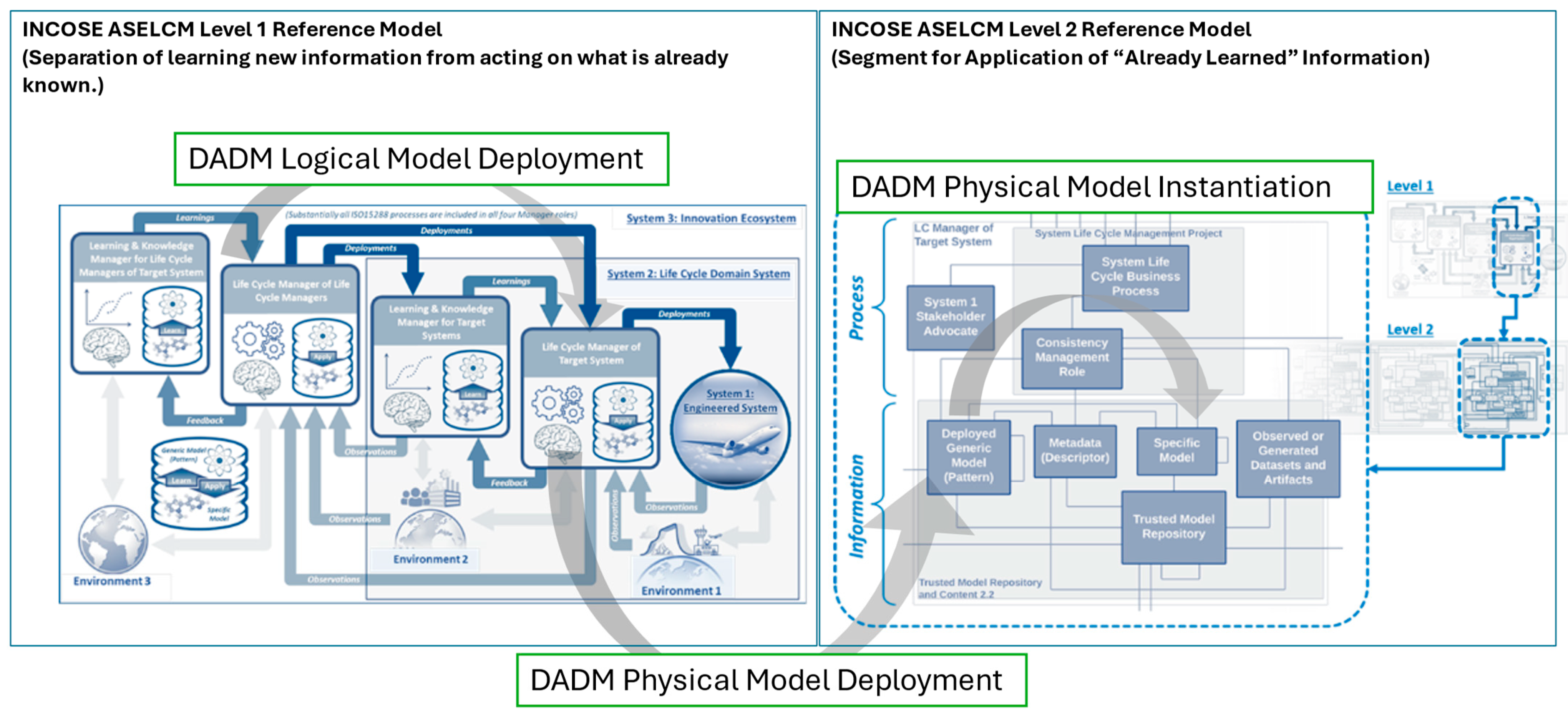

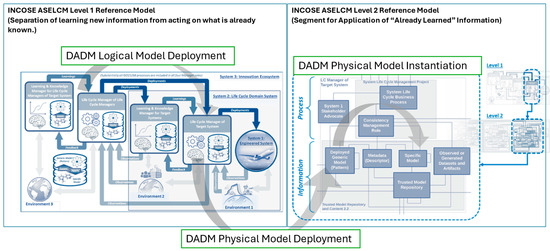

Figure 2 shows how DADM can be embedded in the ASELCM to support digital engineering. The System 3 enterprise-level system of innovation can deploy an instance of the DADM logical model to a System 2 program management entity within the enterprise. The System 2 life cycle manager is responsible for using the logical process model to develop a physical model of the DADM artifacts. Physical models can be implemented using a combination of various methods such as model-based systems engineering software, product lifecycle management software, and relational databases. In theory, even paper-based documentation libraries could be used to implement a physical model; however, the purpose of DADM is to enable a fully digital implementation of the physical model. The physical models can be seen as structured templates that are populated with the actual data for a specific life cycle decision that is being made for a specific System 1 instance.

Figure 2.

DADM is a deployed generic model (pattern) to be applied for making decisions about an engineered system [35].

2.3. Data Model Validation

From a design perspective, data models are validated by mapping inputs and outputs through an organization’s business processes, by assuring traceability across the conceptual, logical, and physical models, by justifying that the models’ activities and data conform to best practices that are documented in the literature, and by independent review of the model by subject matter experts. The business processes for the DADM are the Decision Management processes defined in ISO/IEC/IEEE 15288 [33], the INCOSE Systems Engineering Handbook [1], and the SEBoK [34]. Traceability for the DADM is achieved by decomposing the conceptual data model activities and data in a traceable manner to the logical model. Validating that the models’ activities and data conform to best practices is done by following relevant standards, bodies of knowledge, systems engineering textbooks, and journal articles in developing the models. Conformance to best practices is demonstrated in this article by the extensive citations in Section 3 and Appendix A. DADM has been reviewed by the INCOSE Impactful Products Committee and approved for release in the INCOSE Systems Engineering Laboratory [36].

Validation of the feasibility and effectiveness of the DADM model will be achieved by seeking government and industry users who will deploy the DADM MBSE implementation and provide feedback on its use in their organizations.
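The traceability part of design-time validation lends itself to a simple automated check. The sketch below is an assumption about how such a check could look, not a description of the DADM tooling. It reuses the example mapping from Section 2.1.2 (decision-maker to decision authority, alternatives to courses of action, values to objectives); the function name and data layout are hypothetical.

```python
from typing import Dict, List, Set, Tuple

# Conceptual elements and the logical elements that trace to them,
# taken from the example in Section 2.1.2.
CONCEPTUAL_ELEMENTS: Set[str] = {"decision-maker", "alternatives", "values"}

LOGICAL_TO_CONCEPTUAL: Dict[str, str] = {
    "decision authority": "decision-maker",
    "courses of action": "alternatives",
    "objectives": "values",
}


def traceability_gaps(
    conceptual: Set[str], trace: Dict[str, str]
) -> Tuple[List[str], List[str]]:
    """Return (untraced logical elements, uncovered conceptual elements)."""
    # Logical elements whose parent is not a known conceptual element.
    untraced = sorted(
        logical for logical, parent in trace.items() if parent not in conceptual
    )
    # Conceptual elements that no logical element decomposes.
    uncovered = sorted(conceptual - set(trace.values()))
    return untraced, uncovered
```

Two empty lists indicate that every logical element has a conceptual parent and every conceptual element has been decomposed.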

3. Results—The Decision Analysis Data Model

This section describes the DADM conceptual and logical data model for each of the ten steps in the Decision Management Process. Section 3.1 describes the ten steps in the process model and provides illustrative data artifacts for each step. For each step, we describe the purpose and some of the systems engineering methods used to create decision management data. We also provide tailoring and reuse guidance for each step. Section 3.2 provides the Logical Process Model Activity Diagram and illustrates key concepts for each of the ten steps. The Activity Diagrams provide the activity flow and the information flow to develop the decision data elements. Appendix A has definitions of all the decision data elements, and Appendix B has a Block Definition Diagram (BDD) for each of the ten steps that shows the relationships between the data elements. In addition, we illustrate the major data artifacts and provide references to the systems engineering and decision analysis literature.

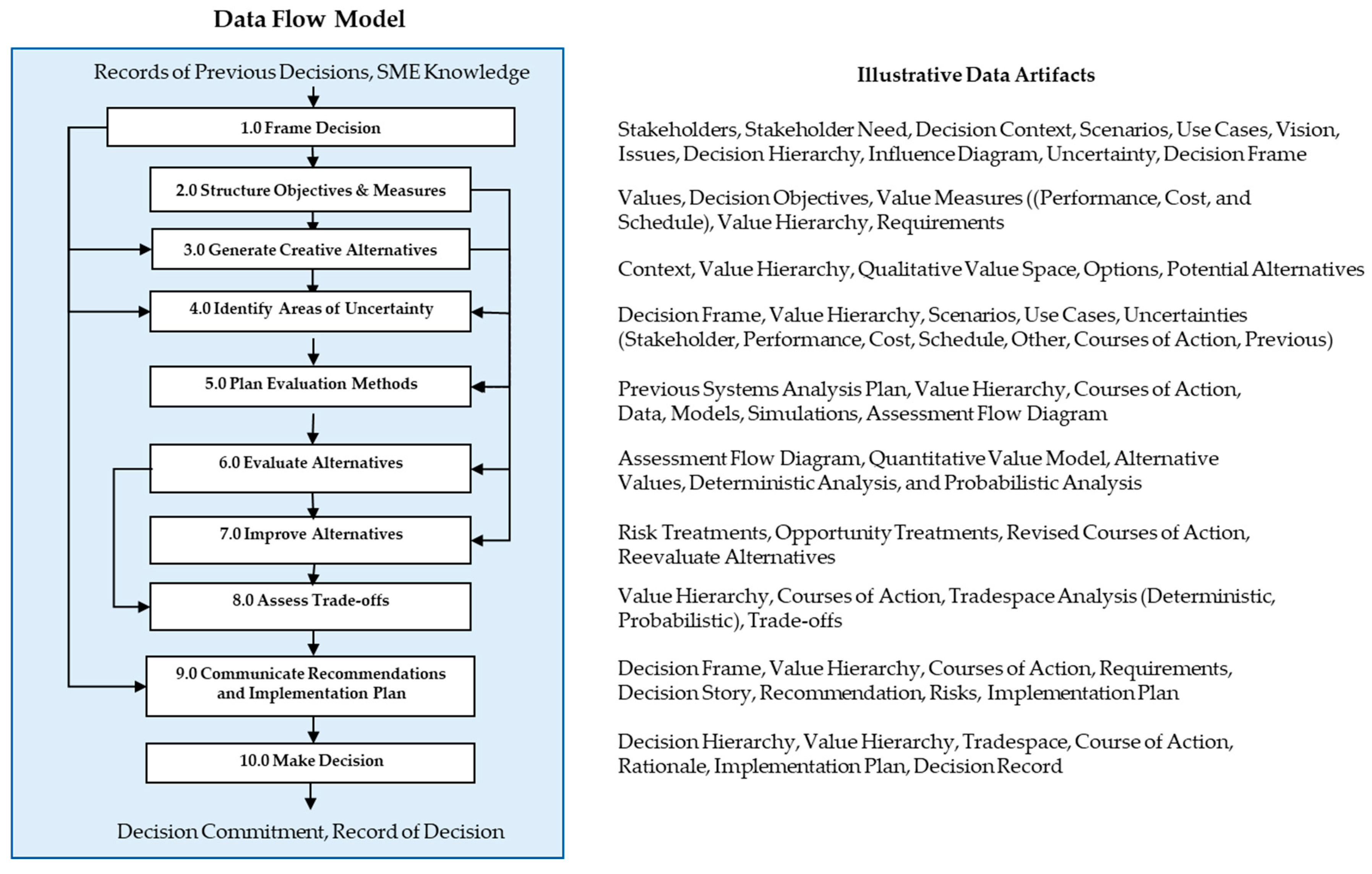

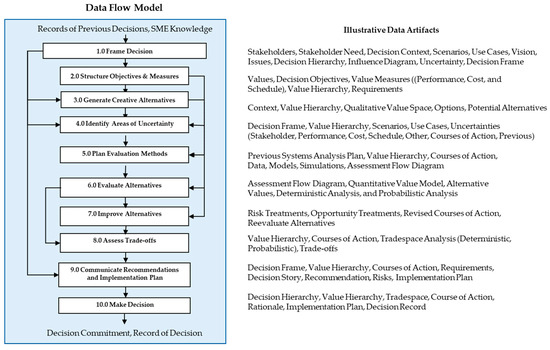

3.1. DADM Conceptual Data Modeling

The foundation of DADM can be summarized via a data flow model, Figure 3, that identifies a sequence of conceptual management steps that are executed sequentially in the numerical order shown without skipping any of the steps. The data flow model shows the inputs to the first step, the outputs from the last step, and has arrows to indicate the general flow of data between the steps. The figure lists the illustrative data artifacts generated for each step in the process. Each step describes an important activity and includes data artifacts used to inform the decision management team. More detailed discussion of the data and conceptual management steps will be provided in the subsequent sections. The data flow and the data artifacts can be reused with appropriate modifications in subsequent system life cycle stages.

Figure 3.

DADM data flow model and illustrative data artifacts. Some artifacts are updated in subsequent steps.

In this section, we define the step name, describe its purpose, list alternative methods in the systems engineering literature, and provide guidance for tailoring each step to your digital engineering implementation.

Table 2 provides the name of each of the ten steps, the purpose of each step, and common methods used in other systems engineering decision models and the system engineering literature.

Table 2.

DADM purpose and alternative methods for each DADM conceptual model process step.

Table 3 provides important guidance for reuse and tailoring for each step to the decision management process for your organization (System 2) and your system (System 1). The quality of each step should be validated with the decision-maker(s) and key stakeholders. We do not recommend skipping any step, but your organization may choose to combine steps. For example, the Systems Decision Process [37] uses Problem Definition (Steps 1 and 2), Solution Design (Steps 3 and 7), Decision Making (Steps 5 and 8) and Solution Implementation (Steps 9 and 10).

Table 3.

DADM tailoring and reuse guidance.

3.2. Logical Data Modeling

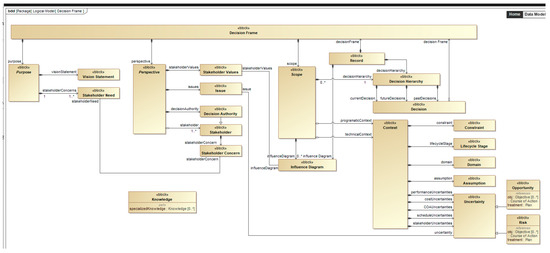

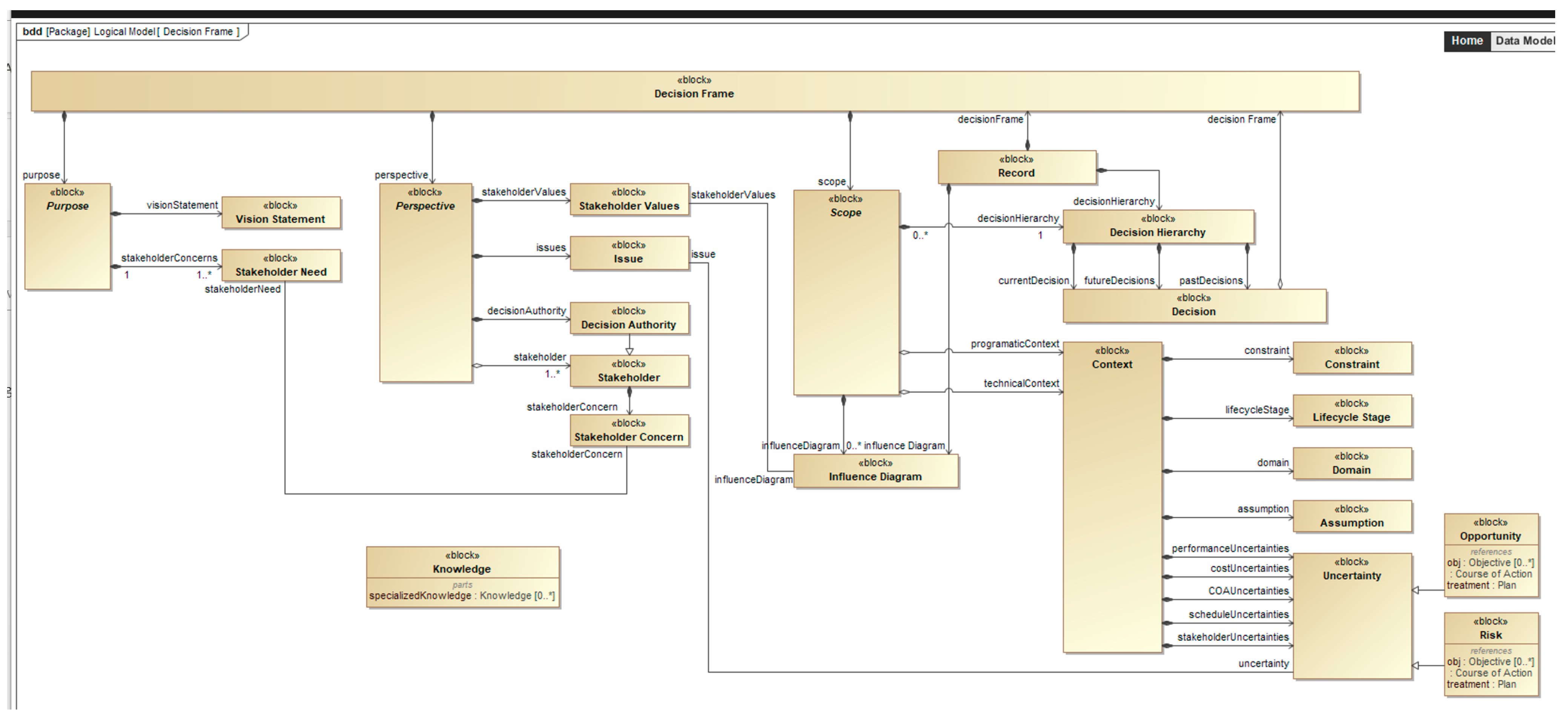

3.2.1. Step 1: Frame Decision

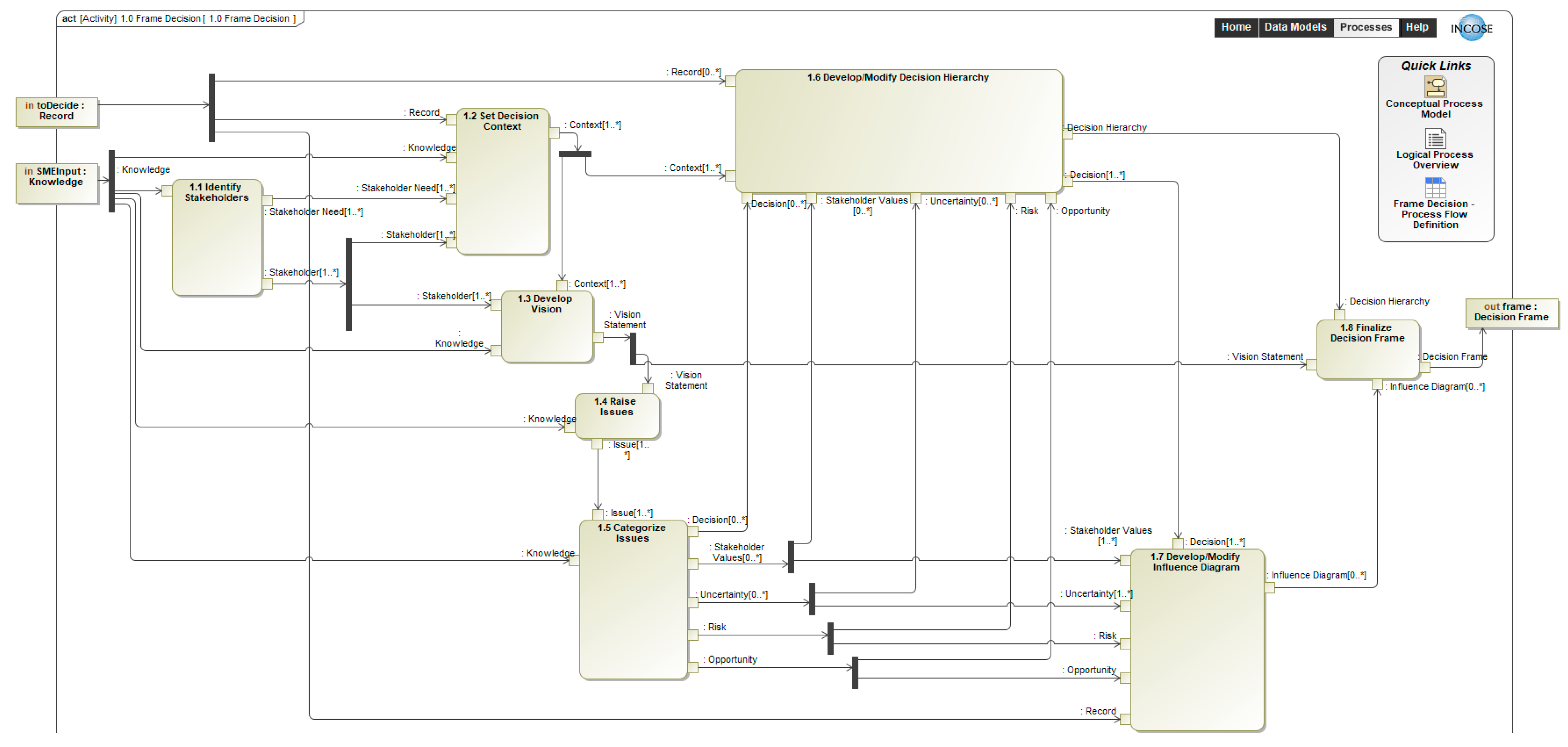

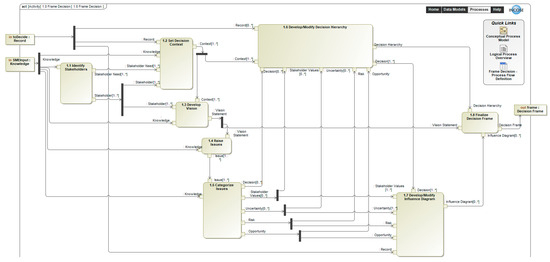

Spetzler, Winter, and Meyer define the decision frame as a collection of artifacts that answer the question: “What problem (or opportunity) are we addressing?” This comprises three components: (1) our purpose in making the decision; (2) the scope, what will be included and excluded; and (3) our perspective, including our point of view, how we want to approach the decision, what conversations will be needed, and with whom [38]. Figure 4 uses an activity diagram to depict the logical process for defining a decision frame.

Figure 4.

Logical process model: activity diagram for Step 1, frame decision.

The frame decision process is described by the eight steps (1.1 to 1.8) shown in Figure 4. The process uses inputs from past decisions and from subject matter experts (SMEs). The process ends with a well-formed decision frame. The first step is to 1.1 identify the stakeholders that need to be included in the decision at hand. Every decision is potentially unique and may require different stakeholders. By using interviews, surveys, and facilitated brainstorming sessions, the stakeholders’ needs will be captured to help 1.2 set the decision context and 1.3 develop the vision that will define the decision purpose and help keep the decision analysis efforts focused. In addition to the context and vision, it is also important to 1.4 raise issues that may have been identified by the stakeholders, SME knowledge, or past decisions and 1.5 categorize issues. The issues, stakeholder needs, context, and vision are used to help identify uncertainty, risk, and/or opportunity. This information is used to 1.6 develop and modify a decision hierarchy. It is also used to 1.7 develop/modify influence diagrams. The context and vision for the decision are combined with the decision hierarchy and influence diagram, resulting in the 1.8 decision frame.
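One way to picture how the artifacts of activities 1.1 to 1.7 feed the 1.8 decision frame is a working record that reports which artifacts are still missing. The following Python sketch is illustrative only; the class name, field names, and the completeness rule are assumptions and are not taken from the DADM block definition diagrams.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class DecisionFrameDraft:
    """Working record for activities 1.1 to 1.7 (hypothetical field names)."""
    stakeholders: List[str] = field(default_factory=list)                    # 1.1
    context: Optional[str] = None                                            # 1.2
    vision: Optional[str] = None                                             # 1.3
    categorized_issues: Dict[str, List[str]] = field(default_factory=dict)   # 1.4, 1.5
    decision_hierarchy: List[str] = field(default_factory=list)              # 1.6
    influence_diagram: Dict[str, List[str]] = field(default_factory=dict)    # 1.7

    def missing_artifacts(self) -> List[str]:
        """Return the names of artifacts that are still empty."""
        return [name for name, value in vars(self).items() if not value]

    def is_ready_for_frame(self) -> bool:
        """Activity 1.8 combines the artifacts, so none may be missing."""
        return not self.missing_artifacts()
```

A team could call `missing_artifacts()` during framing workshops to see which activities still need attention before the frame is presented to the decision authority.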

As a result of performing the activities in the 1. Frame the Decision process, there are several data artifacts to capture and manage. The remainder of this section provides guidance on best practices for how to develop these data artifacts.

For 1.1 identify the stakeholders, a generic list of stakeholders to be considered by subject matter experts when identifying stakeholders for the decision is as follows [37]:

- Decision authority. The stakeholder(s) with ultimate decision gate authority to approve and implement a system solution.

- Client. The person(s) or organization(s) that solicit systems decision support for a project or program.

- Owner. The person(s) or organization(s) responsible for proper and purposeful system operation.

- User. The person(s) or organization(s) accountable for proper and purposeful system operation.

- Consumer. The person(s) or organization(s) that realizes direct or indirect benefits from the products or services provided by the system or its behavior.

- Collaborator. The person(s) or organization(s) external to the system boundary that are virtually or physically connected to the system, its behaviors, its input, or output and who realize benefits, costs, and/or risks resulting from the connection.

According to the Systems Engineering Handbook [1], an external view of a system must introduce elements that specifically do not belong to the system but do interact with the system. This collection of elements is called the system environment and is a result of the 1.2 set the decision context process activity [1]. A generic list of environmental factors to be considered when setting the decision context is as follows [37]:

- Technological. Availability of “off-the-shelf” technology. Technical, cost, and schedule risks of innovative technologies.

- Economic. Economic factors such as a constrained program budget within which to complete a system development and impacts on the economic environment within which the system operates.

- Political. Political factors such as external interest groups impacted by the system and governmental bodies that provide financing and approval.

- Legal. National, regional and community legal requirements such as safety and emissions regulations, codes, and standards.

- Health and Safety. The impact of a system on the health and safety of humans and other living beings.

- Social. The impact on the way that humans interact.

- Security. Securing the system and its products and services from harm by potential threats.

- Ecological. Positive and negative impacts of the system on a wide range of ecological elements.

- Cultural. Considerations that constrain the form and function of the system.

- Historical. Impact on historical landmarks and facilities.

- Moral/ethical. Moral or ethical issues that arise in response to the question: “Are we doing the right thing here?”

- Organizational. Positive and negative impacts on the organization that is charged with making the decision.

- Emotional. Personal preferences or emotional issues of decision makers or key stakeholders regarding the system of interest or potential system solutions.

According to Parnell and Tani, the 1.3 develop the vision process activity results in a vision statement that answers three questions [39]:

- What are we going to do?

- Why are we doing this?

- How will we know that we have succeeded?

The vision statement refers to the process of making the decision, not the consequences of the decision on the cost, performance, and schedule of the system that is being engineered. So, the third question (“How will we know that we have succeeded?”) should be understood to mean success at the time the decision is taken, not when the final impact of the decision on the engineered system is known.

In the 1.4 raise issues process activity, issue-raising brings to light the perspectives held by the stakeholders that can be used to view the decision situation. A well-formed set of issues considers the vision statement and the environmental factors that are related to the decision context. The next step is to categorize the issues into four groups as follows [39]:

- Decisions. Issues suggesting choices that can be made as part of the decision.

- Uncertainties. Issues suggesting uncertainties that should be considered when making the decision.

- Values. Issues that refer to the measures with which decision alternatives should be compared.

- Other. Issues not belonging to the other categories, such as those referring to the decision process itself.

The 1.5 categorize issues process activity and the 1.6 develop and/or modify a decision hierarchy process activity provide the basis for defining the decision hierarchy, the uncertainties to be considered and analyzed, and the measures used in evaluating the decision alternatives.

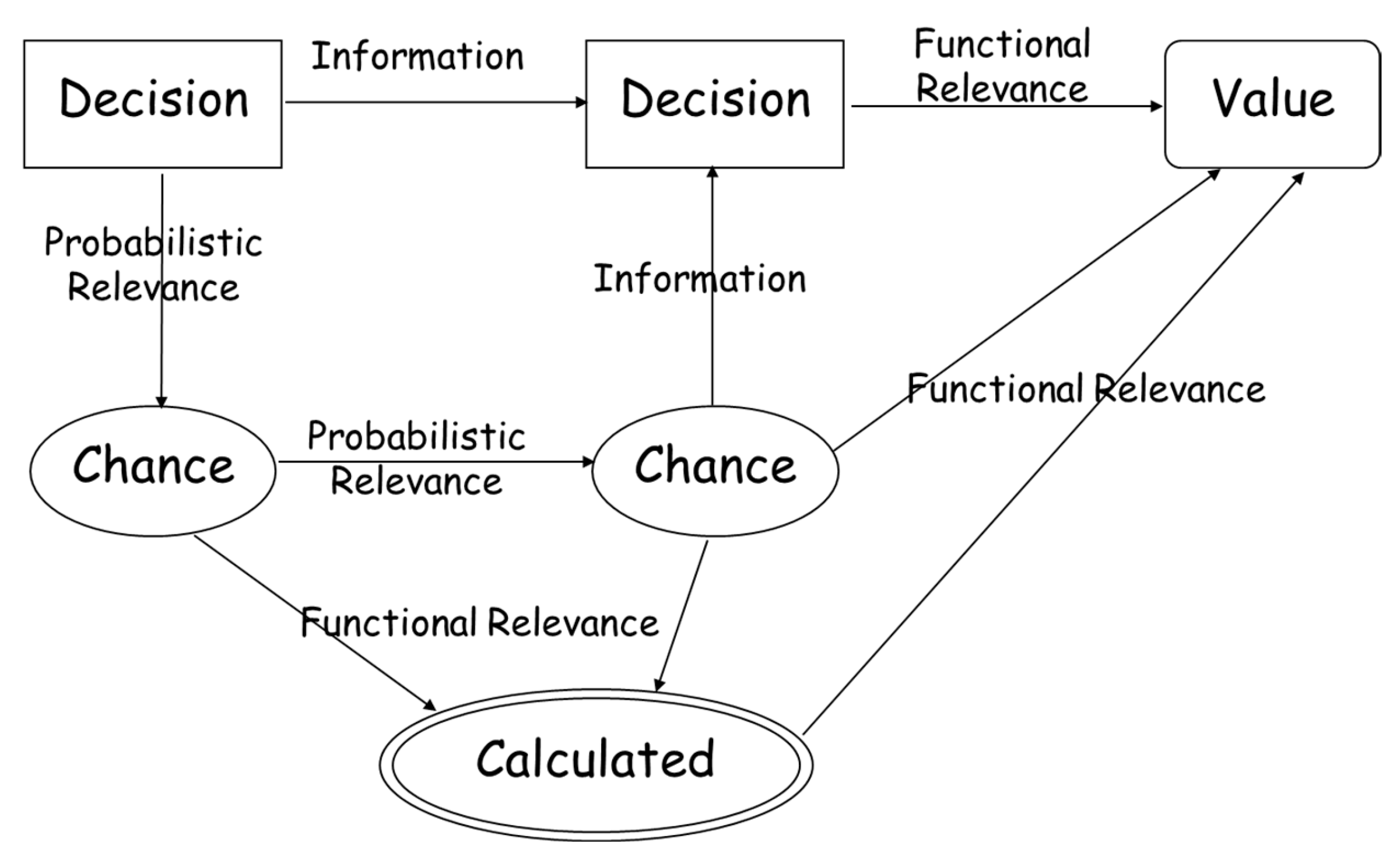

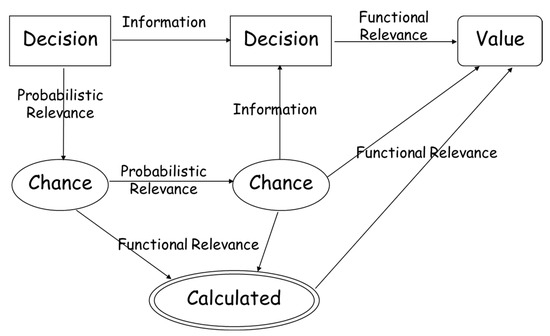

Typically, the categorized issues are interrelated, and their interrelationships can be captured in the 1.7 develop/modify influence diagrams process activity. An influence diagram is a network representation for decision modeling in which the nodes correspond to variables that can be decisions, uncertain quantities, deterministic functions, or values [40]. Figure 5 captures the semantics and syntax of an influence diagram. Semantically, the boxes represent decisions, the ovals represent uncertain quantities known as chance nodes, the double oval represents a calculated quantity, the rounded rectangle represents a value measure, and the arrows represent different influences. The meaning of the arrows is determined syntactically:

Figure 5.

Generic influence diagram (Adapted from Buede and Miller [41]).

- An arrow that enters a decision represents information that is available at the time the decision is taken.

- An arrow leading into a chance node represents a probabilistic dependency (or relevance) on the node from which the arrow is drawn.

- An arrow leading into a calculated or value node represents the functional relevance, to the receiving node, of the node from which the arrow is drawn. In this instance, the receiving node is calculated as a function of all the nodes that have arrows leading into it.

Syntactical rules of influence diagrams are:

- No loops: It must not be possible to return to any node in the diagram by following arrows in the direction that they point.

- One value measure: There must be one final value node that has no arrows leading out of it and that represents the single value measure or objective that is maximized to determine the optimal set of decisions.

- No forgetting: Any information known when a decision is made is assumed to be known when a subsequent decision is made.
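The first two syntactical rules lend themselves to an automated check. The following is a minimal sketch, assuming the diagram is stored as a dictionary of node kinds and a list of arrows; the function name, the node kinds, and the example nodes are hypothetical and are not part of DADM. The "no forgetting" rule concerns the ordering of decisions and is not checked here.

```python
from collections import defaultdict


def check_influence_diagram(nodes, arrows):
    """Check the 'no loops' and 'one value measure' rules.

    nodes:  dict mapping node name -> kind, one of
            "decision", "chance", "calculated", "value"
    arrows: list of (source, target) pairs; both ends must be in `nodes`
    Returns a list of rule violations (empty if none were found).
    """
    successors = defaultdict(list)
    for source, target in arrows:
        successors[source].append(target)

    problems = []

    # No loops: depth-first search. Reaching a node that is still on the
    # current path means the arrows lead back to it.
    UNSEEN, ON_PATH, DONE = 0, 1, 2
    state = {name: UNSEEN for name in nodes}

    def has_cycle(node):
        state[node] = ON_PATH
        for nxt in successors[node]:
            if state[nxt] == ON_PATH:
                return True
            if state[nxt] == UNSEEN and has_cycle(nxt):
                return True
        state[node] = DONE
        return False

    if any(state[n] == UNSEEN and has_cycle(n) for n in nodes):
        problems.append("no loops: the diagram contains a cycle")

    # One value measure: exactly one value node, with no outgoing arrows.
    value_nodes = [n for n, kind in nodes.items() if kind == "value"]
    if len(value_nodes) != 1:
        problems.append("one value measure: expected exactly one value node")
    elif successors[value_nodes[0]]:
        problems.append("one value measure: value node has outgoing arrows")

    return problems


# Hypothetical three-node diagram.
nodes = {
    "Select energy source": "decision",
    "Dwell time": "chance",
    "Mission value": "value",
}
arrows = [
    ("Select energy source", "Dwell time"),
    ("Dwell time", "Mission value"),
]
print(check_influence_diagram(nodes, arrows))  # []
```

A check of this kind could be run whenever the influence diagram is modified, so that syntax errors are caught before the diagram is used for evaluation.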

The decision hierarchy captures three levels of decisions identified by categorizing the issues. The top level includes those choices that are out of scope because they are assumed to have been made already. The middle level of the decision hierarchy contains those choices that are within the scope of the decision under consideration. The lowest level of the decision hierarchy has choices that can be deferred until later or delegated to others.

The 1.8 decision frame process activity combines the context and vision for the decision with the decision hierarchy and influence diagram, resulting in the decision frame. As defined in Figure 5, the decision frame has three components: (1) Purpose: what the decision is trying to accomplish; (2) Perspective: the point of view about this decision, consideration of other ways to approach it, and how others might approach it; and (3) Scope: what to include and exclude in the decision.

3.2.2. Step 2: Structure Objectives and Measures

We believe that it is a systems decision-making best practice to identify the decision objectives and measures used to evaluate attainment of the objectives before determining the alternatives [42]. Parnell et al. [43] provide a detailed discussion of the challenges and best practices for structuring objectives and measures.

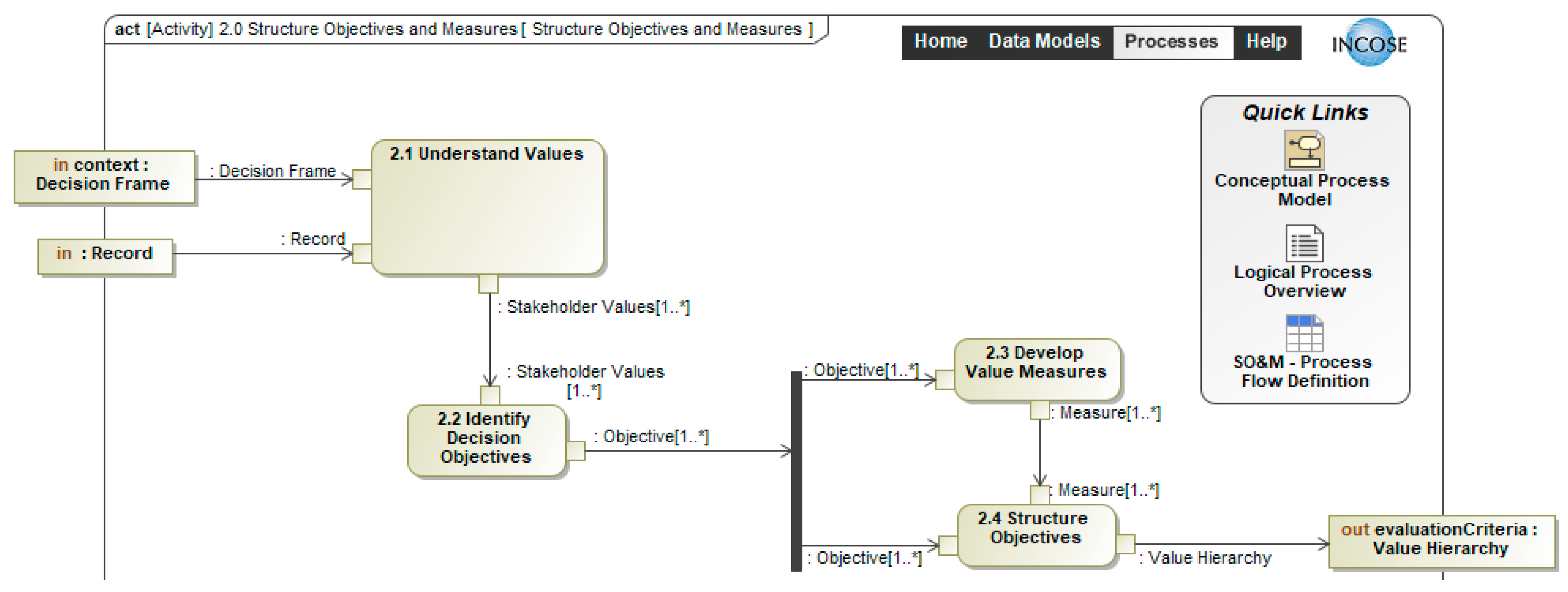

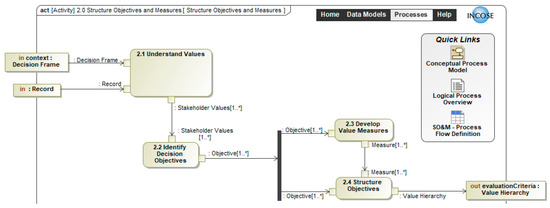

Figure 6 provides the Logical Model activity diagram for Step 2, Structure Objectives and Measures. The decision frame provides important input to 2.1 Understand Values, which guides 2.2 Identify Decision Objectives, 2.3 Develop Value Measures, and 2.4 Structure Objectives. Identifying objectives is more art than science. The major challenges are (1) identifying a full set of objectives and measures, (2) differentiating fundamental (i.e., what we care about achieving) and means (i.e., how we can achieve) objectives, and (3) structuring a comprehensive set of fundamental objectives and measures for validation by the decision maker(s) and stakeholders and use in the evaluation of alternatives [43].

Figure 6.

Logical process model: activity diagram for Step 2, structure objectives and measures.

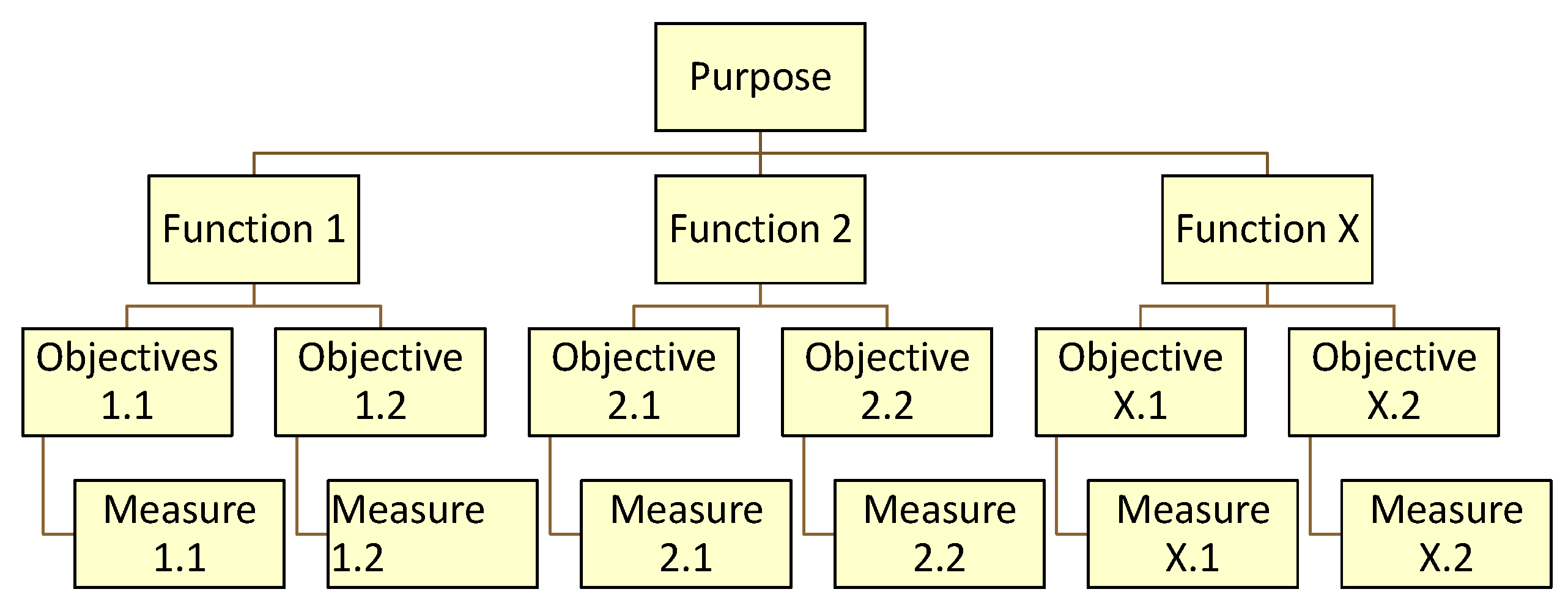

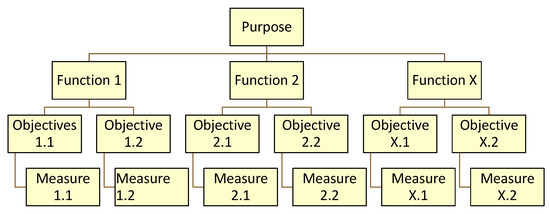

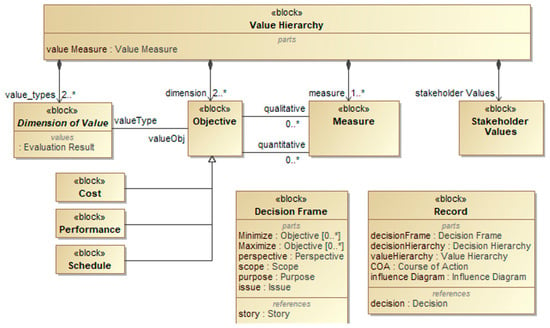

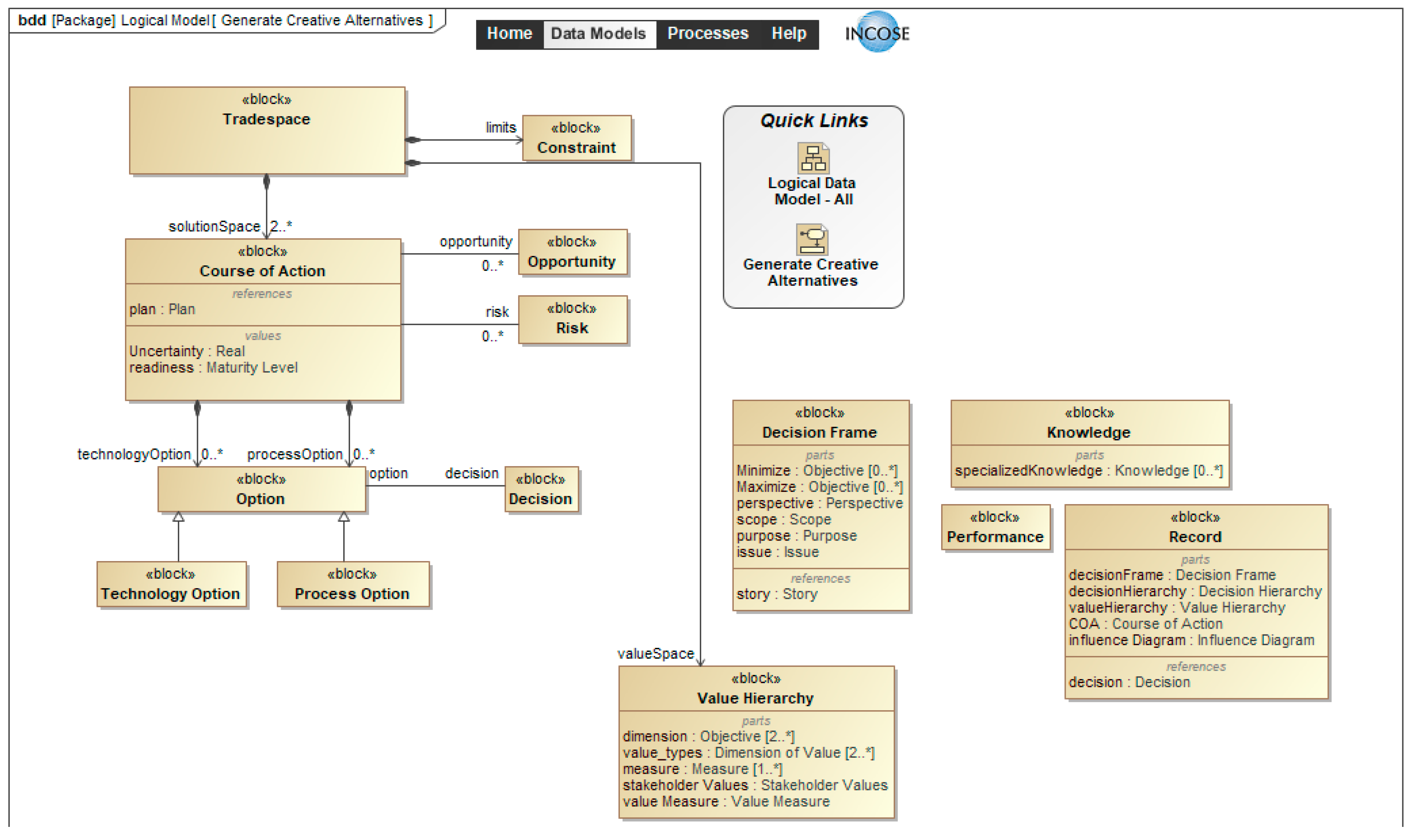

The value measures and structured objectives are represented as a value hierarchy (also called an objectives hierarchy or value tree), which is a graphical tool for structuring objectives and measures [42]. Value hierarchies are easier to understand and validate when they are logically structured [43]. For systems with multiple functions, the objectives can be structured by time-sequenced functions. The value hierarchy is the output of this process step. Figure 7 provides an example of a value hierarchy organized by functions. If functions are not appropriate, the objectives can be structured by criteria.

Figure 7.

Illustrative Value Hierarchy.

Decision makers and stakeholders are interested in four dimensions of value: performance of the system, cost of the system, the system schedule, and external impacts [44]. The best practice is to develop an objectives hierarchy for the performance and external impacts of the system, a financial model or a system life cycle cost model, and a system development schedule to be used to evaluate the alternatives developed in the next step in the logical model. Private companies may also develop a net present value model to assess the potential financial benefits of a new product(s) or service(s) that the system will provide to their customers.

Kirkwood identified two useful dimensions for value measures: alignment with the objective and type of measure [45]. Alignment with the objective can be direct or proxy. A direct measure focuses on the objective, e.g., the percentage of time available for system availability. A proxy measure captures only part of the objective, e.g., reliability is a proxy for availability. The type of measure can be natural or constructed. Natural measures are commonly used, such as dollars. A constructed measure scale must be developed, such as a five-star scale for automobile safety. To be useful, constructed measures require clear definition of the measurement scales.

One measure should be used for each objective. Table 4 provides preferences for the four types of value measures [43]. Priorities 1 (direct and natural) and 4 (proxy and constructed) are obvious. For the middle priorities, we prefer direct and constructed measures to proxy and natural measures for two reasons. First, alignment with the objective is more important than the type of scale. Second, one direct, constructed measure can replace many natural and proxy measures.

Table 4.

Preference for types of value measure [43].

The value hierarchy provides the qualitative value model. Developing the quantitative value model is described in 6.0 Evaluate Alternatives under activity 6.2, Determine Value Function and Weight for each Value Measure.
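The value hierarchy described in this step can be held as a simple tree. The following is a minimal sketch, assuming each lowest-level objective carries at most one value measure; the class, the function names, and the example objectives and measures are hypothetical and are not taken from the DADM model. It flags lowest-level objectives that still lack a measure.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Objective:
    name: str
    measure: Optional[str] = None  # value measure of a lowest-level objective
    children: List["Objective"] = field(default_factory=list)


def leaf_objectives(node):
    """Yield the lowest-level objectives of the hierarchy."""
    if not node.children:
        yield node
    else:
        for child in node.children:
            yield from leaf_objectives(child)


def objectives_missing_measure(root):
    """Names of lowest-level objectives that have no value measure yet."""
    return [leaf.name for leaf in leaf_objectives(root) if leaf.measure is None]


# Hypothetical hierarchy organized by functions.
hierarchy = Objective("Provide surveillance", children=[
    Objective("Transport system", children=[
        Objective("Minimize weight", measure="System weight (kg)"),
    ]),
    Objective("Detect targets", children=[
        Objective("Maximize detection range", measure="Detection distance (km)"),
        Objective("Maximize night capability"),  # measure not yet defined
    ]),
])
print(objectives_missing_measure(hierarchy))  # ['Maximize night capability']
```

Storing a single `measure` per objective reflects the guidance that one measure should be used for each objective.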

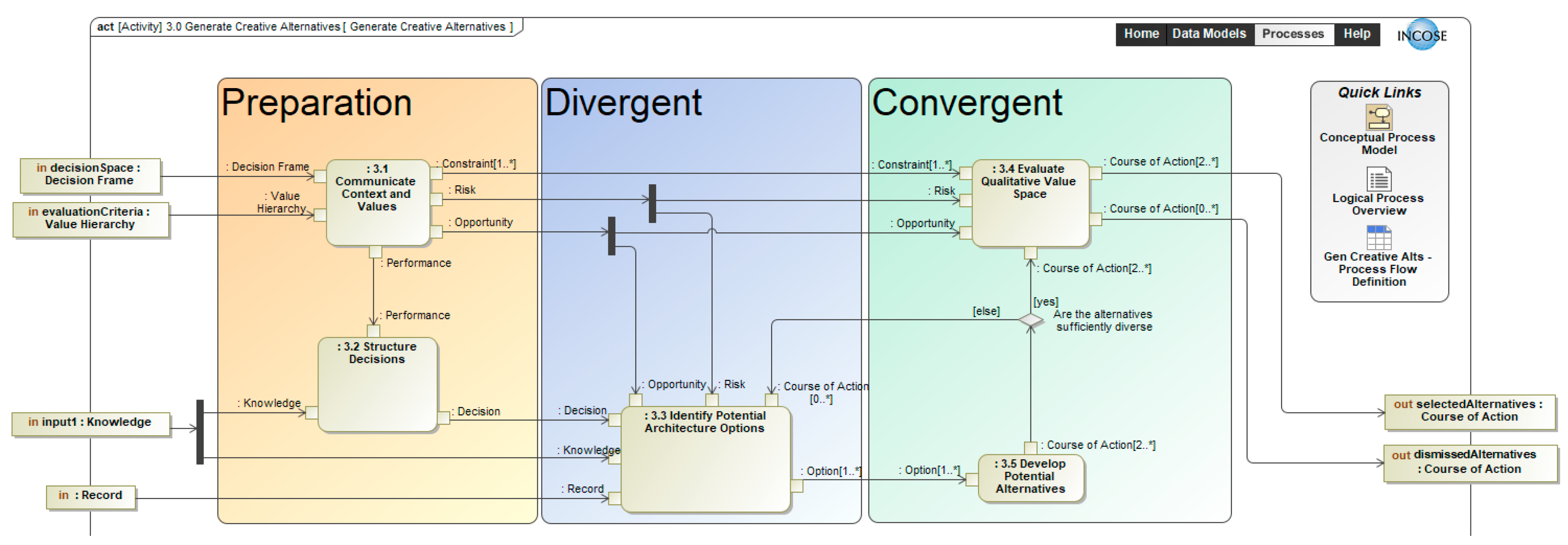

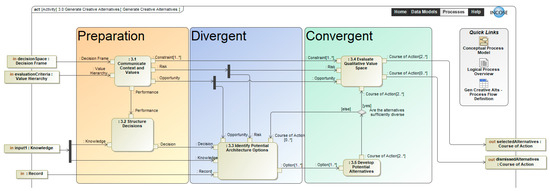

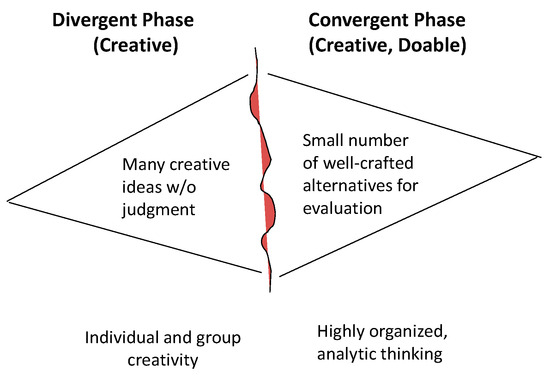

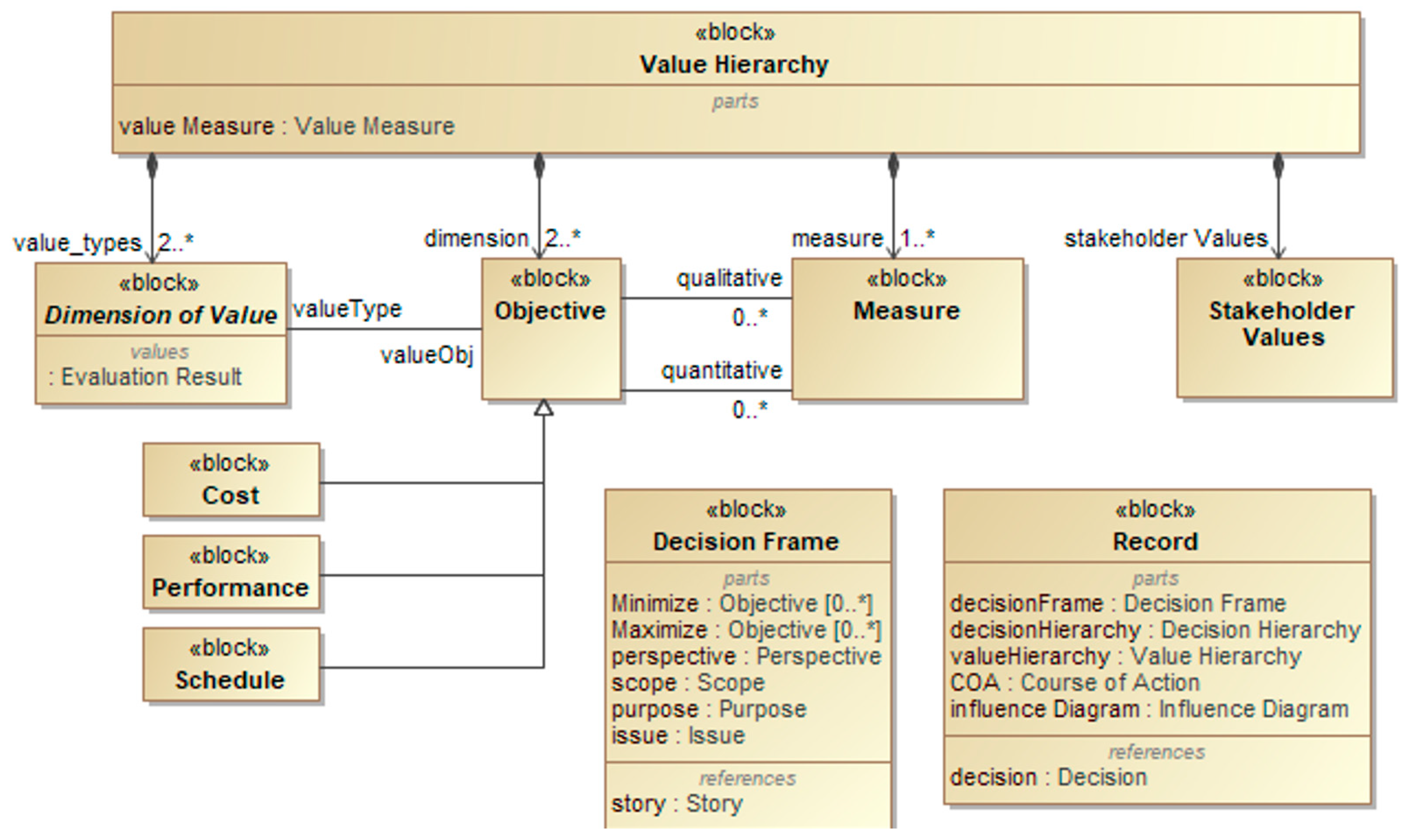

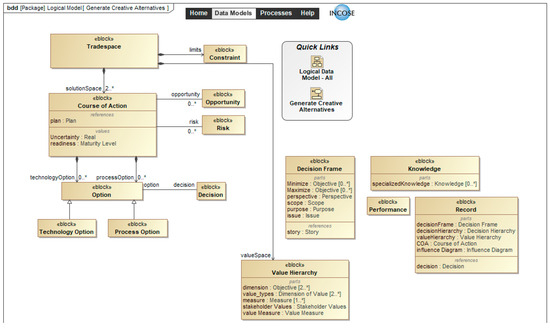

3.2.3. Step 3: Generate Creative Alternatives

Figure 8 uses an activity diagram to depict the logical process for generating creative alternatives. There are five process activities to accomplish Step 3, Generate Creative Alternatives. The five activities are performed across three phases to generate alternatives: a preparation phase, a divergent phase, and a convergent phase. Our decision can only be as good as the best alternative we identify. The divergent phase is important to make sure that we identify and explore the full range of potential solutions. The convergent phase is important since we usually only have the resources to evaluate a limited number of alternatives.

Figure 8.

Logical process model: activity diagram for Step 3, generate creative alternatives.

The Preparation phase uses the decision frame and value hierarchy as inputs to the 3.1 Communicate Context and Values process activity. It also takes SME knowledge as input to support the 3.2 Structure Decision process activity. In the Divergent phase, Risk and Opportunity, along with decision, knowledge, record, and course of action, are used as inputs to the 3.3 Identify Potential Architecture Options process activity, which provides options as output. In the Convergent phase, constraints, risk, opportunity, and course of action are inputs to the 3.4 Evaluate Qualitative Value Space process activity, which provides courses of action as outputs. If the alternatives are not sufficiently diverse, they are modified and updated as new courses of action to consider for further evaluation. The output of this step is a selected alternative and a list of dismissed alternatives.

The preparation phase ensures that the alternatives generated are within the scope of the decision problem described in the decision frame and that they provide the most potential value, following two principles of Value-Focused Thinking [42]:

- Specifying the meaning of the fundamental objective by defining value measures can suggest alternatives.

- Identifying means that contribute to the achievement of the value measures can also indicate potential alternatives.

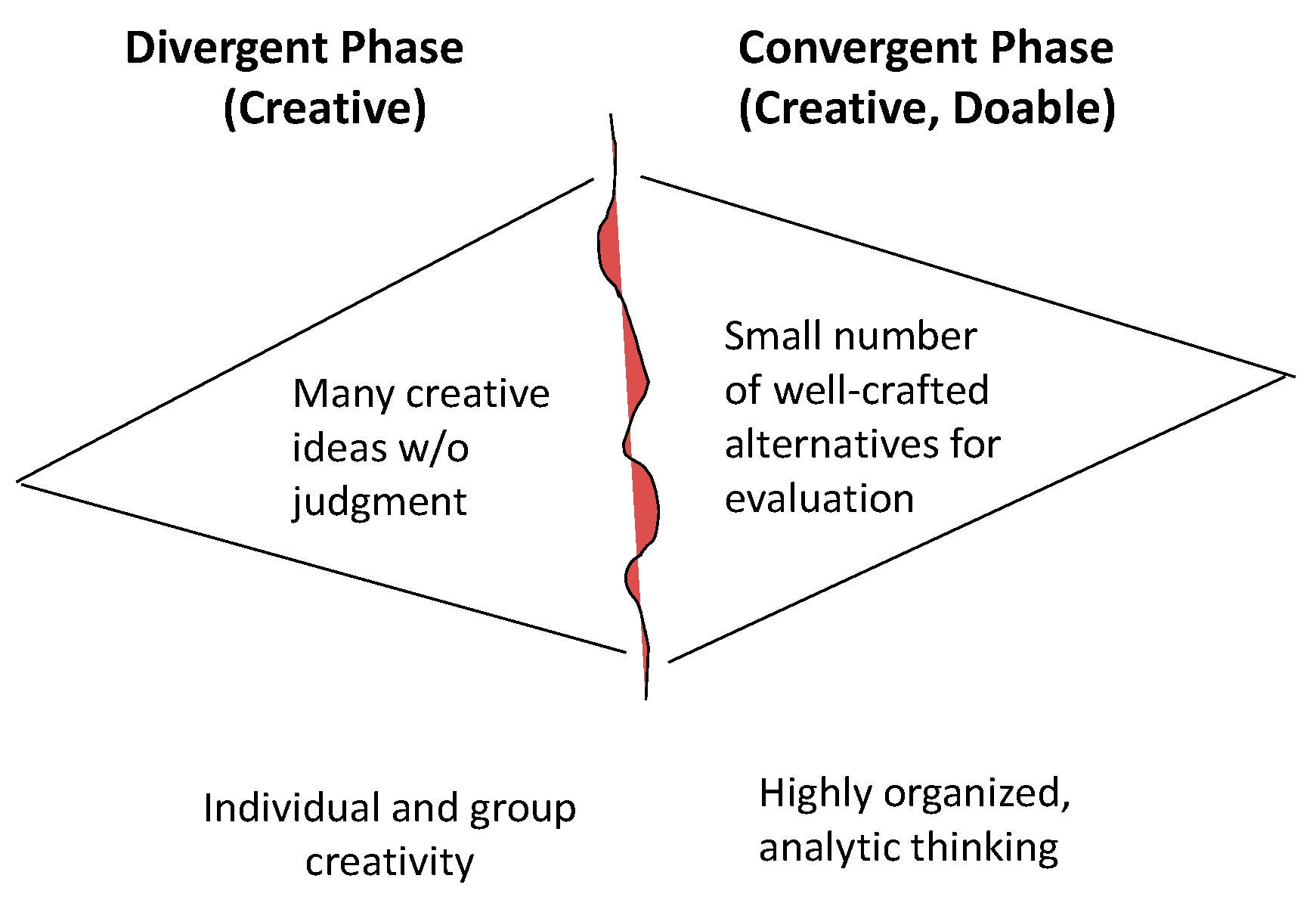

Figure 9 depicts how the divergent phase results in creating many ideas and how the convergent phase requires organized, analytic thinking to identify a smaller number of well-crafted, feasible alternatives that span the decision space.

Figure 9.

Two phases of alternative development (adapted from Bresnick and Parnell [46]).

Table 5 presents the assessment of the alternative generation techniques by identifying the life cycle stage appropriate for their use and their potential contributions in the divergent and convergent phases of alternative generation [44]. Several representative structured methods among the many available are Brainstorming, Reversal, SCAMPER, Six Thinking Hats, Gallery, and Force Field. All the techniques are dependent on the expertise, diversity, and creativity of individual team members, on the effectiveness of the team leadership, and on the processes used.

Table 5.

Assessment of alternative development techniques [44].

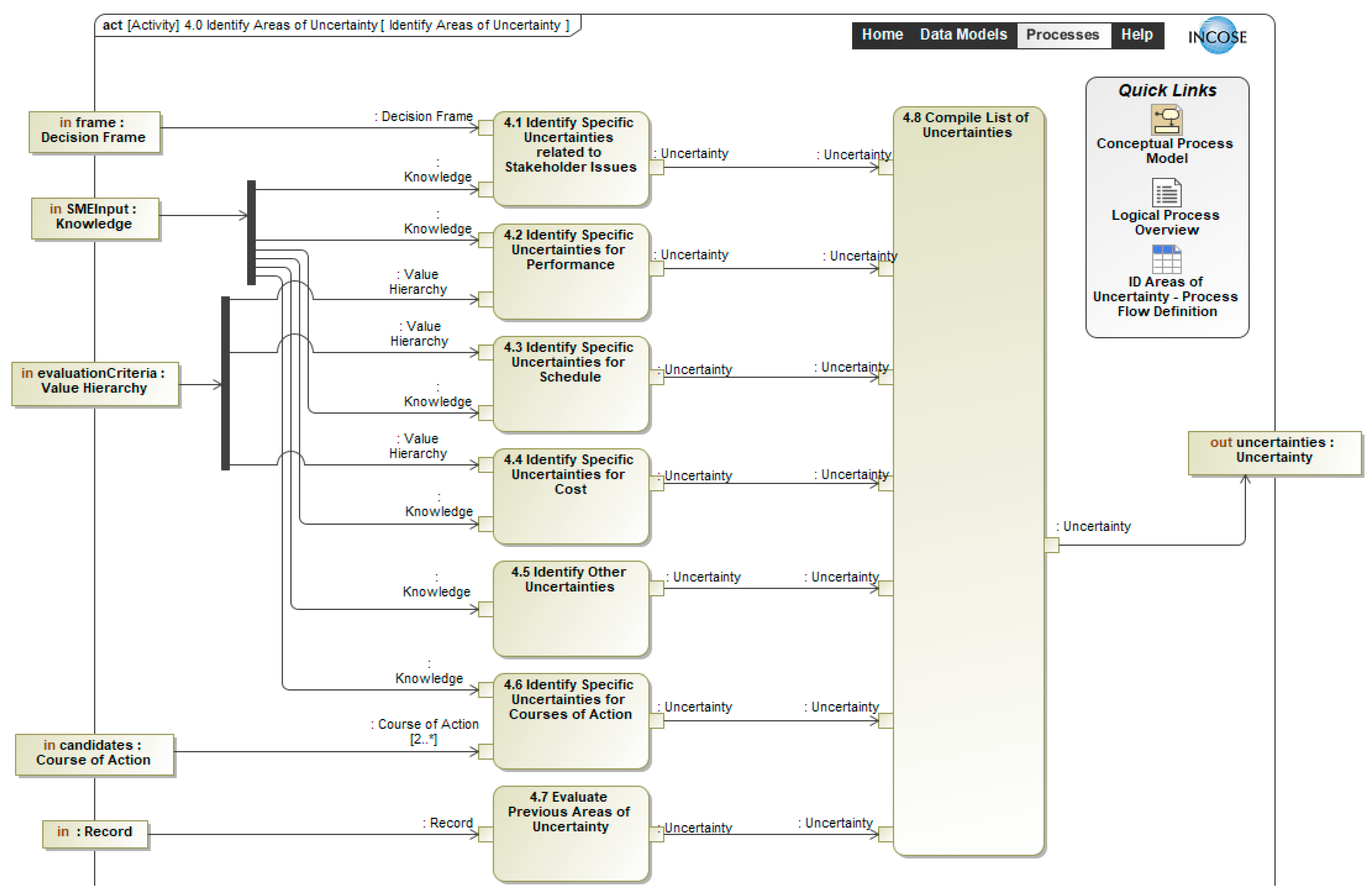

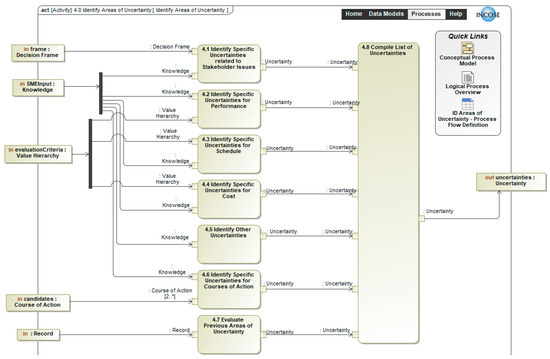

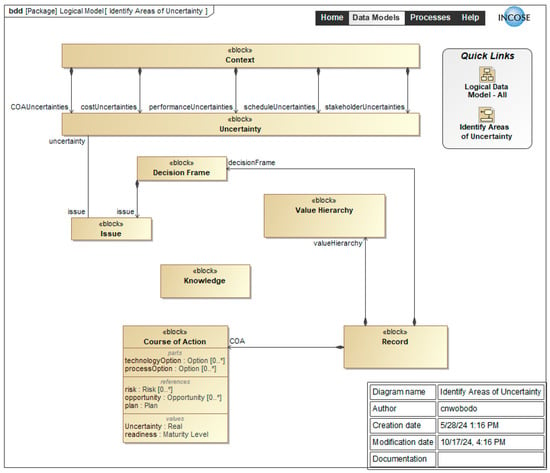

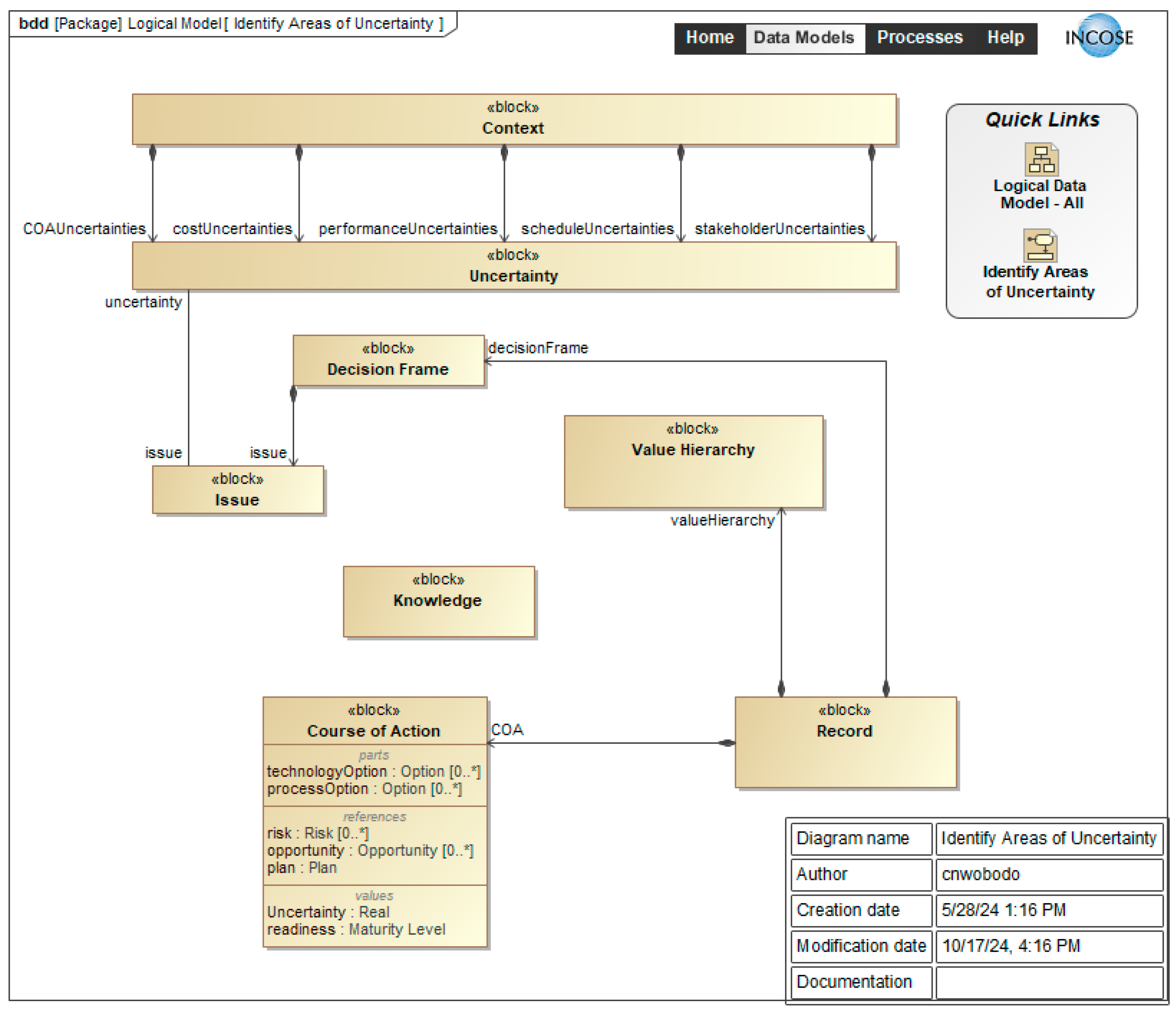

3.2.4. Step 4: Identify Areas of Uncertainty

Figure 10 uses an activity diagram to depict the logical process for identifying areas of uncertainty. The decision frame, SME knowledge, the value hierarchy, the courses of action, and the decision record provide important inputs to 4.1 to 4.6 Identify Uncertainty process activities. There are many sources of uncertainty in system development that impact the risk areas. Table 6 provides a mapping of the major sources of uncertainty to the areas of uncertainty. Table 6 is illustrative and is neither an exhaustive list nor a checklist. Additional sources of uncertainty and issues are provided in the Department of Defense Risk, Issue, and Opportunity (RIO) Management Guide for Defense Acquisition Programs [47] and in ISO/IEC/IEEE 16085:2021 [48]. Any of these sources may have been included in 4.7 Evaluate Previous Areas of Uncertainty process activity. 4.8 Compile List of Uncertainties process activity provides the complete list of uncertainties to be considered for this decision.

Figure 10.

Logical process model: activity diagram for Step 4, identify sources of uncertainty.

Table 6.

Mapping sources of uncertainty to areas of uncertainty.

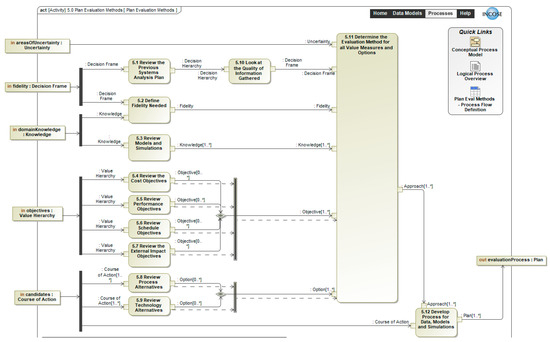

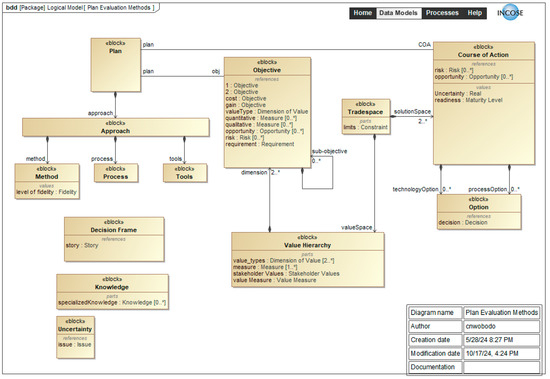

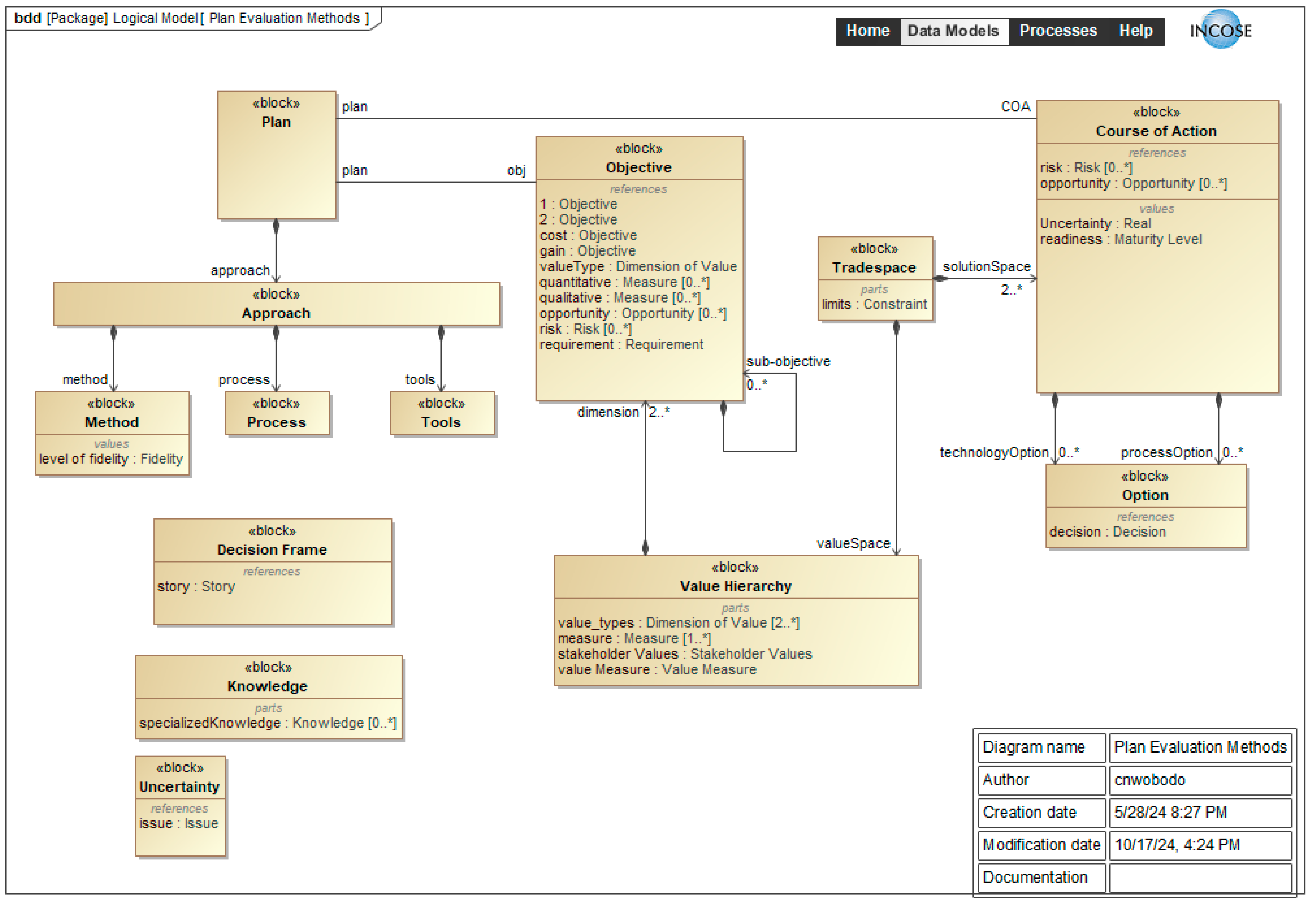

3.2.5. Step 5: Plan Evaluation Methods

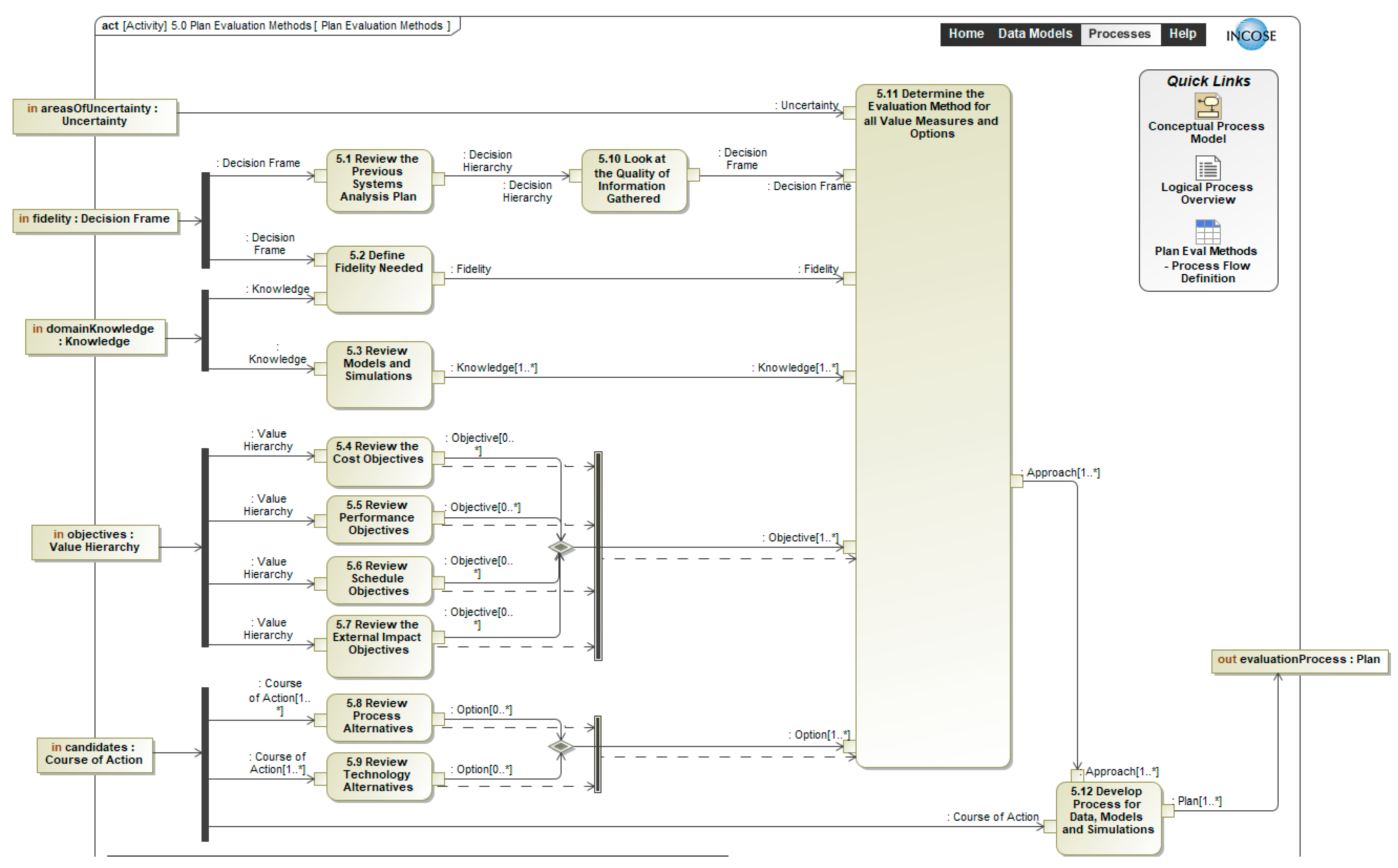

Figure 11 uses an activity diagram to depict the logical process for planning evaluation methods for each measure of each alternative.

Figure 11.

Logical process model: activity diagram for Step 5, plan evaluation methods.

The inputs to the 12 process activities in Step 5, Plan Evaluation Methods, include Uncertainty, Decision Frame, Knowledge, Value Hierarchy, and Course of Action. Uncertainty is considered for each objective and option being evaluated. The Decision Frame is used to support 5.1 Review Previous Systems Analysis Plan and 5.10 Look at the Quality of Information Gathered.

The Decision Frame and Domain Knowledge are used to 5.2 Define Fidelity Needed. Use the domain knowledge to 5.3 Review Models and Simulations and provide knowledge to 5.11 Determine the Evaluation Methods that the models and simulations will need to support.

For each entry in the value hierarchy, and each option, the evaluation method is determined based on 5.4 Review Cost Objectives, 5.5 Review Performance Objectives, 5.6 Review Schedule Objectives, and 5.7 Review External Impact Objectives. There should be measures for each objective in the value hierarchy.

Each course of action in the 5.8 Review Process Alternatives and 5.9 Review Technology Alternatives activities is used in 5.11 Determine the Evaluation Method for Each Option.

We use the inputs of Uncertainty, Decision Frame, Fidelity, Knowledge, Objectives, and Options in 5.11 Determine the Evaluation Method for all Value Measures and Options to establish the evaluation approach for each option. We use the approaches and each course of action in 5.12 Develop Process for Data, Models and Simulations to develop the Evaluation Plan.

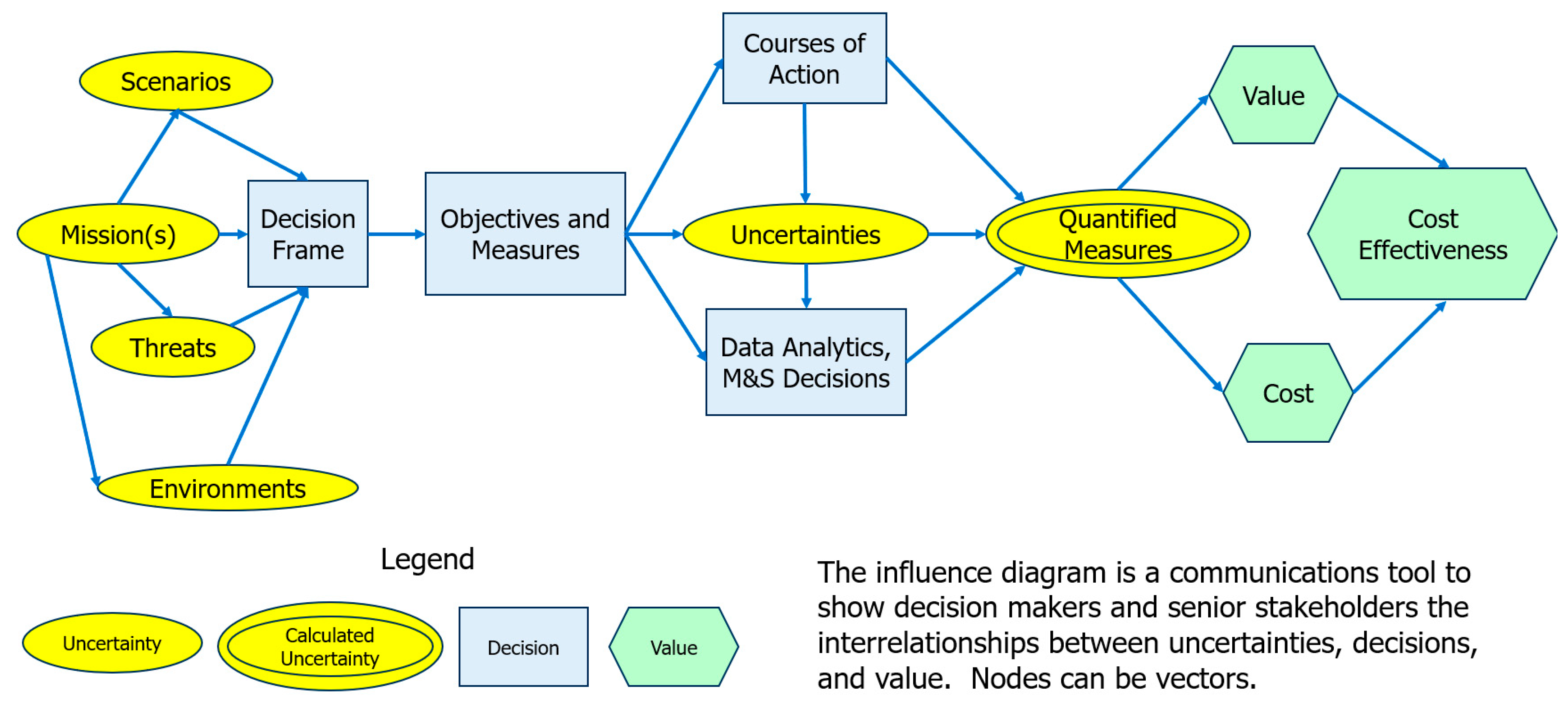

The 5.11 Determine the Evaluation Method for all Value Measures and Options process activity is the goal of Step 5, Plan Evaluation Methods. Determining the evaluation methods for all the value measures, costs, and courses of action can be represented as an influence diagram (Figure 12). The missions, scenarios, threats, and environments used for the evaluation are defined in the Frame the Decision step. Because of the “no forgetting” rule for influence diagrams, the selected items are treated as sources of uncertainty for the downstream decisions of selecting objectives and measures, courses of action, and the data analytics, modeling, simulations, test data, and operational data to be used for evaluation. These choices all lead to calculating quantified measures that are uncertain because they are functions of uncertainties. The quantified measures are used to calculate the value achieved and the cost incurred for each course of action that is being evaluated.

Figure 12.

Influence diagram for plan evaluation methods.

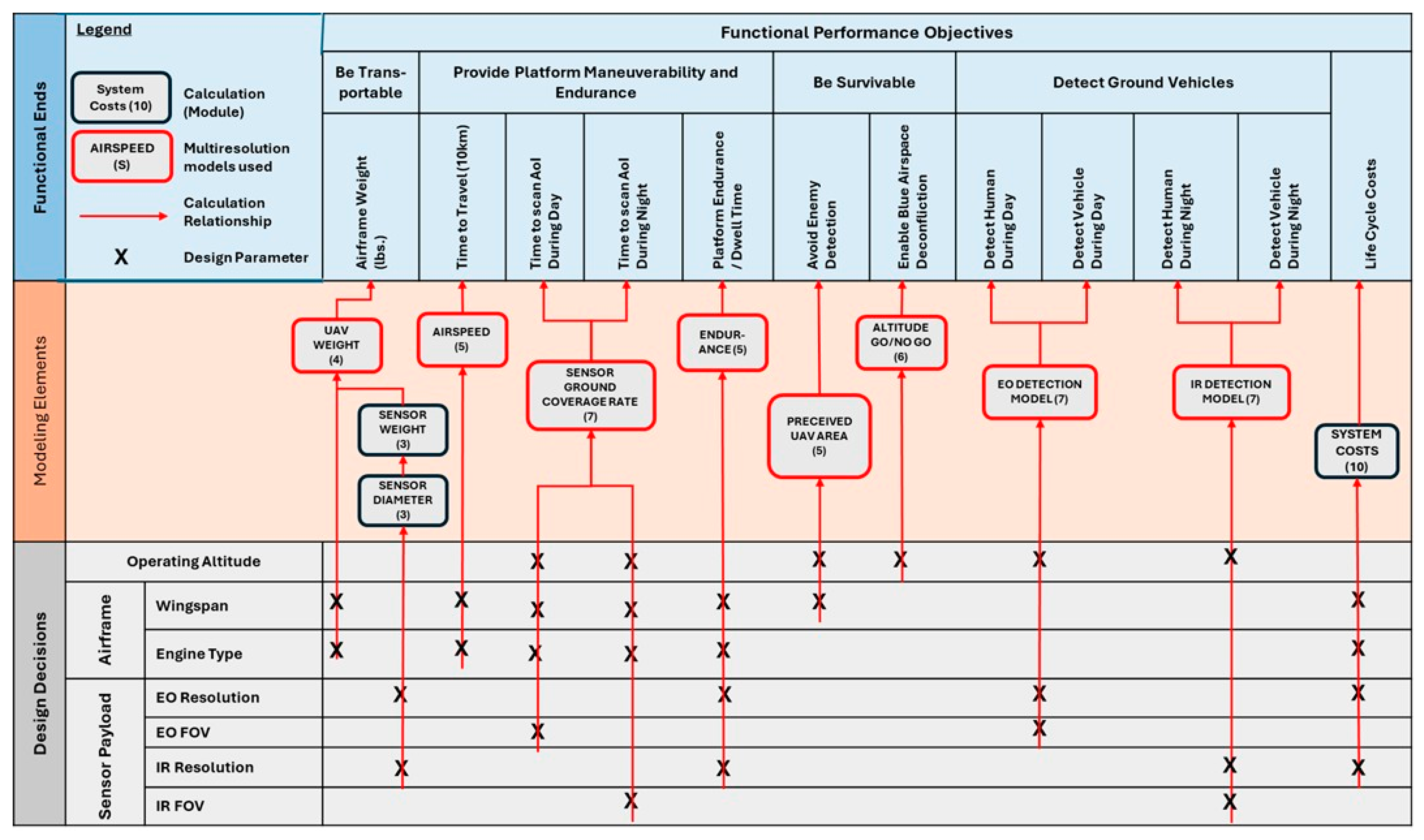

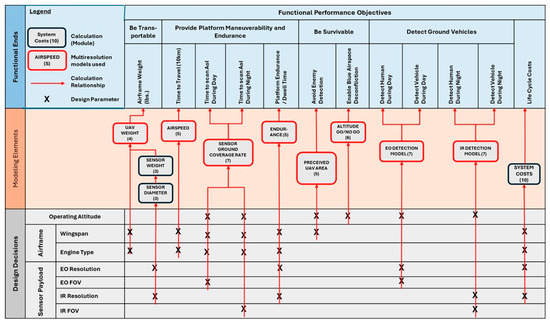

Assessment flow diagrams may be used to trace functional performance objectives to design decisions and modeling elements. Figure 13 is an example of such a diagram, which can help individual subject matter experts understand how their expertise fits into the larger evaluation picture, where inputs to feed their model will be coming from and where their outputs will be used. The lead systems engineer/systems analyst can use these diagrams to organize, manage, and track COA evaluation activities. By showing the pedigree of the data used in the decision support model, these diagrams also give stakeholders confidence that the evaluation rests on a solid quantitative foundation.

Figure 13.

Assessment flow diagram for a hypothetical Unmanned Aeronautical Vehicle [49].

Specifically for the Unmanned Aeronautical Vehicle example in Figure 13, the functional performance objectives outline what the system must achieve, including:

- Mobility & Survivability: Be transportable, maneuverable, and capable of enduring missions while avoiding detection.

- Detection Capabilities: Detect ground vehicles and humans both day and night.

- Efficiency Metrics: Optimize life cycle costs, airframe weight, travel time, scanning time, platform endurance, and blue airspace deconfliction.

Modeling elements and design decisions influence how the objectives are met. They include:

- Design Decisions: Airframe type, wingspan, engine type, sensor payload, EO/IR resolution and field-of-view, and operating altitude.

- Modeling Techniques: Use of multiresolution models and detection models (EO and IR).

Calculation relationships visually map how key parameters contribute to system costs and performance metrics.

Figure 12 indicates that uncertainties are dependent on the physical means (course of action). An example of how uncertainties are dependent on the choice of the course of action is shown in Table 7. The three courses of action have three different energy sources: (1) a Li-Ion Battery, (2) a Li-S Battery, and (3) JP-8 kerosene fuel. The uncertainty in the time that a UAV can dwell at an area of interest is dependent on the choice of energy technology and the models to predict the dwell time would be different. There also are two different payload data links: (1) fixed-antenna VHF and (2) an electronically steered phased array Ka band. Also, the uncertainty in the time that a UAV needs to send ISR data to the command station is dependent on the choice of technology and the models to predict the transmission time would be different.

Table 7.

Three small unmanned aerial vehicle courses of action [50].

Types of models that might be used for evaluation include descriptive models, predictive models, and prescriptive models [51]. Descriptive models explain relationships between previously observed states. Examples of descriptive models are catalog descriptions of COTS items that describe cost and performance as a function of the attributes of the items, e.g., pricing of cloud services as a function of processing throughput, software functionality, and data storage being purchased. Predictive models attempt to forecast future actions and resulting states, frequently employing forecast probabilities, seasonal trends, or even subject matter expert opinions (i.e., educated guesses). Prescriptive models seek COAs that, given an initial state, lead to the best anticipated ending state. Examples of prescriptive models are optimization algorithms used to simulate behavior of threats to the system of interest when evaluating system survivability.

According to Kempf [52], attributes of the analytic tools that come into play when selecting them to be used for evaluation are as follows:

- Analytical Specificity or Breadth;

- Access to Data;

- Execution Performance;

- Visualization Capability;

- Data Scientist Skillset;

- Vendor Pricing;

- Team Budget;

- Sharing and Collaboration.

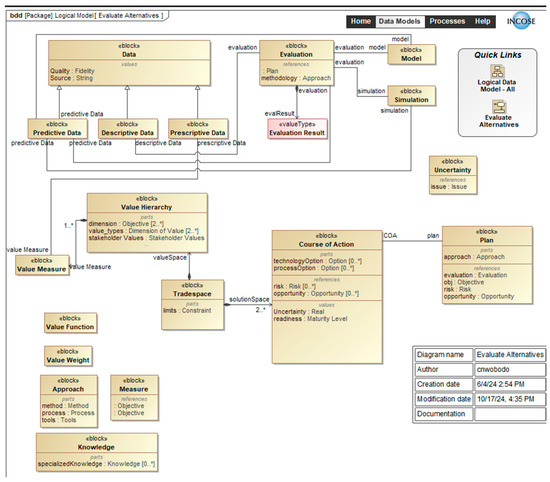

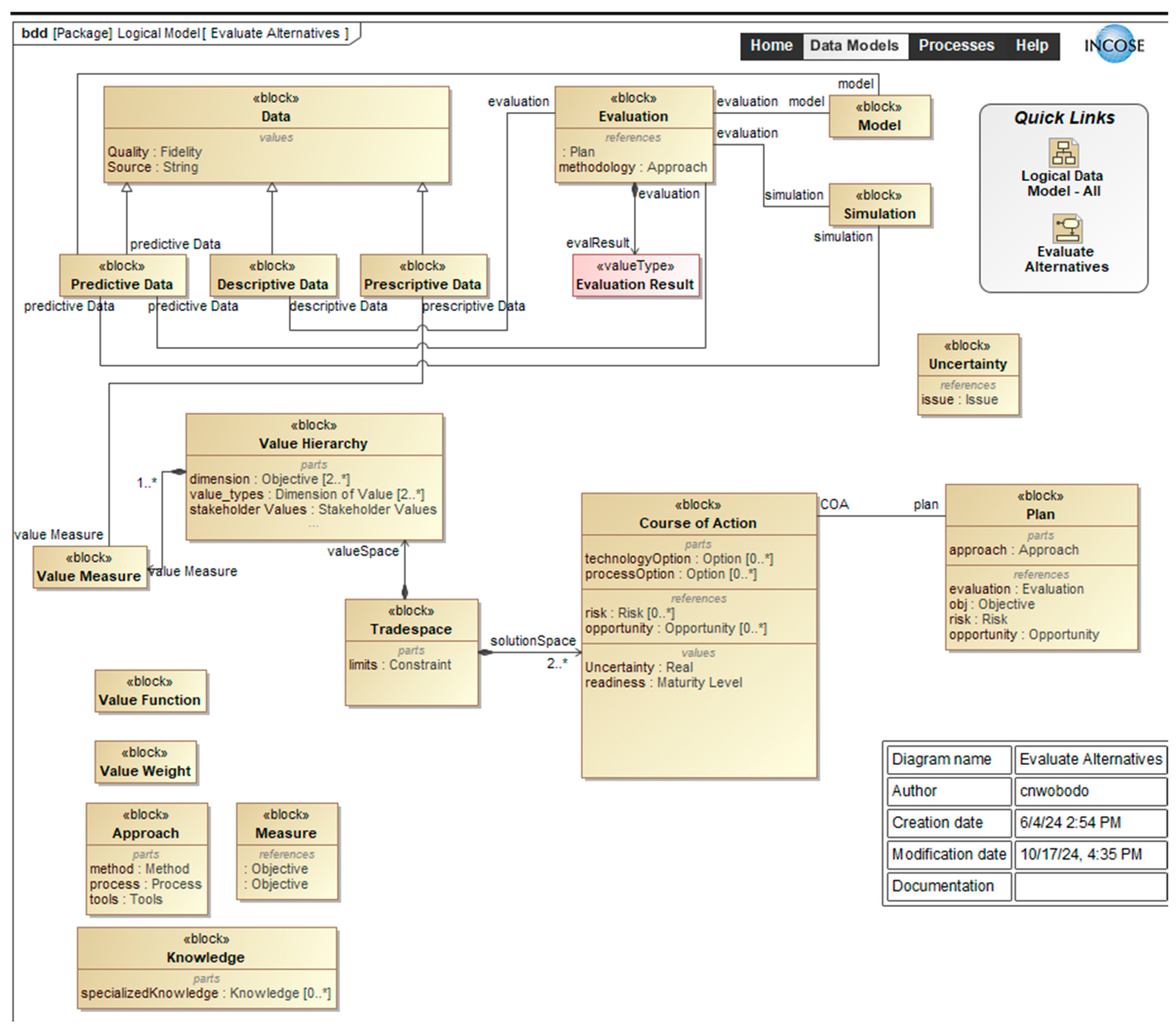

3.2.6. Step 6: Evaluate Alternatives

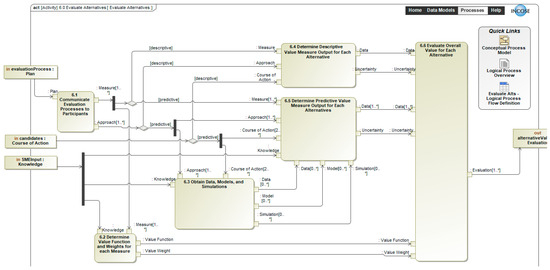

The data from the Assessment Flow Diagram provides the data for the evaluation of alternatives. Figure 14 uses an activity diagram to depict the logical process for the evaluate alternatives activity.

Figure 14.

Logical process model: Step 6, evaluate alternatives.

Activity 6.1 Communicate Evaluation Processes to Participants communicates the assessment flow diagram plan to the SMEs and stakeholders who will perform the analysis to assess each value measure for each alternative. In Activity 6.2, when there are multiple objectives and a value measure for each objective, the most common value model to evaluate the alternatives is the additive value model shown in Equations (1) and (2) [37,42,45,50], which calculates the value of each alternative. The single dimensional value functions convert each alternative’s score on each measure to a dimensionless common value. The swing weights provide the relative value of the swing in the value measure from its lowest level to its highest level. The above references provide methods for assessing the value functions and the swing weights.

$$v(x) = \sum_{i=1}^{n} w_i \, v_i(x_i) \tag{1}$$

where, for a set of value measure scores given by vector $x$,

$i = 1, \ldots, n$ is the index of the value measure

$x_i$ is the alternative’s score on the $i$th value measure (from activity 6.4 or 6.5)

$v_i(x_i)$ is the single dimensional y-axis value of an x-axis score of $x_i$

$w_i$ is the swing weight of the $i$th value measure

$v(x)$ is the alternative’s value of $x$

and the swing weights are normalized:

$$\sum_{i=1}^{n} w_i = 1 \tag{2}$$

Activity 6.2 develops the value functions and swing weights for each value measure. The data from the Assessment Flow Diagram provides the data for the evaluation of alternatives (Activities 6.4 and 6.5). Activity 6.6 uses this and Equation (1) to calculate the overall value for each alternative, v(x). Because value measures are aligned with fundamental objectives (and not means objectives), the single dimensional value functions and the swing weights do not depend on the alternatives (i.e., the means), so there is no index for the alternative in the two equations.
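The additive value model can be illustrated with a short calculation. The following is a minimal sketch, assuming linear single dimensional value functions on a 0 to 100 scale and two hypothetical value measures; the function names, measures, scores, and raw weights are illustrative assumptions, and in practice the value functions and swing weights are assessed with the methods in the cited references.

```python
def linear_value(worst, best):
    """Single dimensional value function: 0 at `worst`, 100 at `best`.

    A linear shape is an assumption made for this sketch. Decreasing
    measures are handled by passing worst > best.
    """
    def v(x):
        fraction = (x - worst) / (best - worst)
        return 100.0 * min(1.0, max(0.0, fraction))
    return v


def normalize(raw_weights):
    """Scale raw swing weights so that they sum to one."""
    total = sum(raw_weights.values())
    return {measure: w / total for measure, w in raw_weights.items()}


def additive_value(scores, value_functions, weights):
    """Weighted sum of single dimensional values over all value measures."""
    return sum(weights[m] * value_functions[m](scores[m]) for m in weights)


# Hypothetical value measures, raw swing weights, and scores.
value_functions = {
    "detection_distance_km": linear_value(worst=0, best=10),
    "system_weight_kg": linear_value(worst=50, best=20),  # lighter is better
}
weights = normalize({"detection_distance_km": 60, "system_weight_kg": 40})
scores = {"detection_distance_km": 5, "system_weight_kg": 35}

print(additive_value(scores, value_functions, weights))  # 50.0
```

The same value functions and weights are applied to every alternative; only `scores` changes from one alternative to the next.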

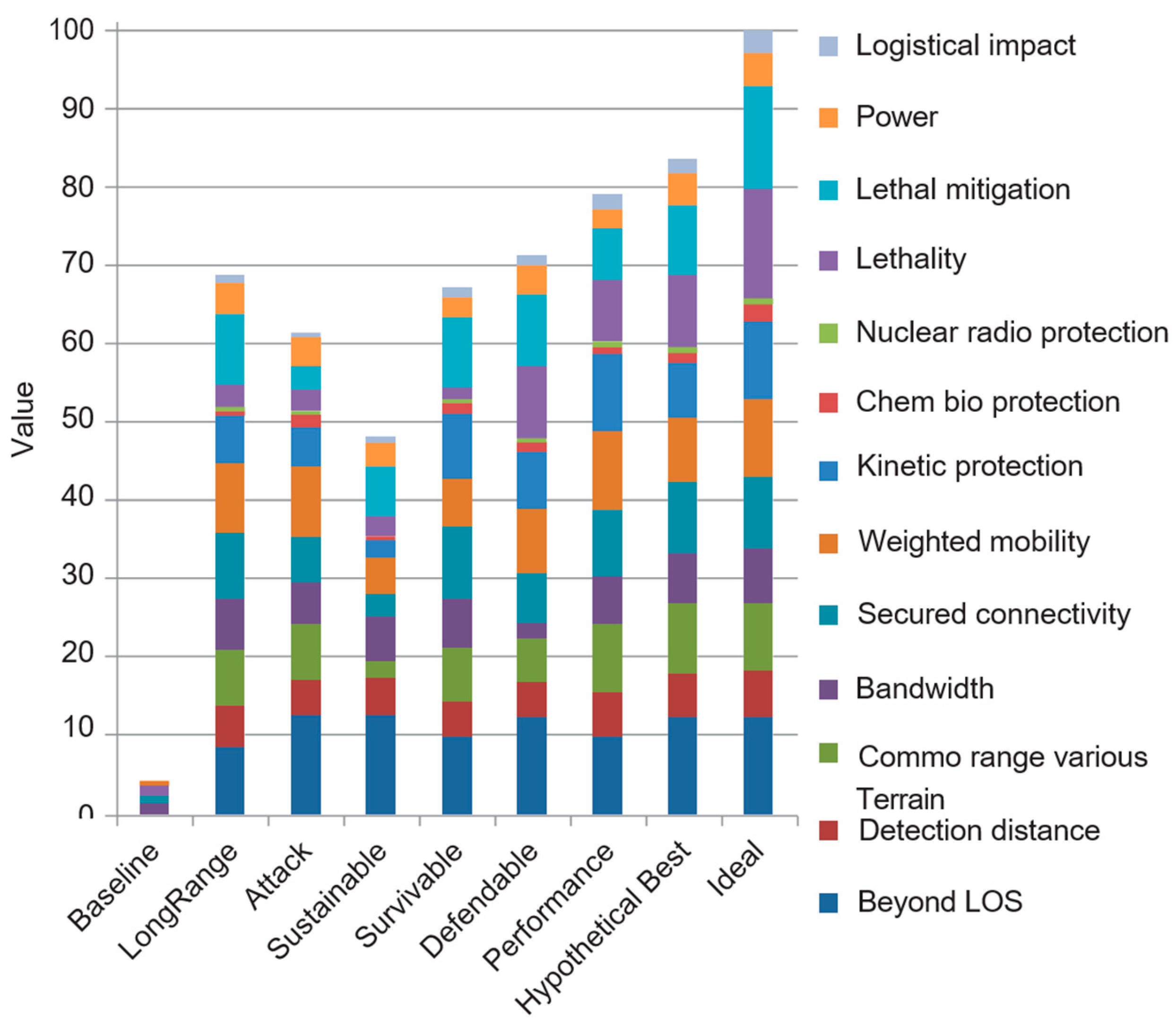

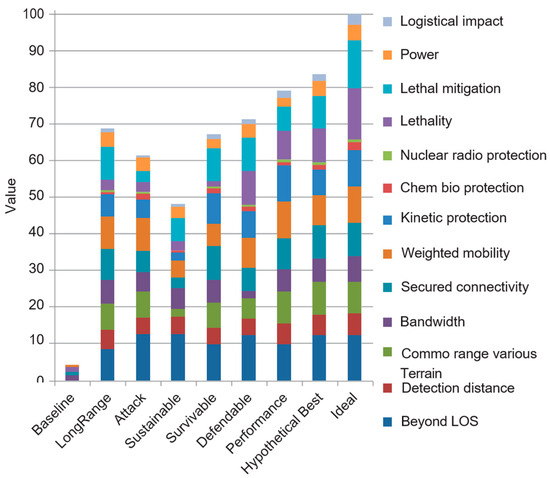

MacCalman et al. [53] analyzed the conceptual design for future Army Infantry squad technologies to enhance Infantry squad effectiveness. An Infantry squad is a nine-person unit that consists of a squad leader and two teams of four. The Infantry squad system is the collection of integrated technologies that consists of soldiers, sensors, weapons, exoskeletons, body armor, radios, unmanned aerial vehicles (UAV), and robots. The analysis used 6 design concepts and 13 value measures. We use this example to illustrate DADM Steps 6, 7, and 8. Figure 15 provides the value component chart [53] for the deterministic value for each alternative. The chart shows the value contribution based on the value model and the estimated value measure performance. The ideal alternative assumes the best value for each value measure, and the size of each value measure’s contribution is determined by the swing weights. The hypothetical best alternative is obtained by taking the best alternative score for each value measure. This chart shows that the alternatives are all improvements over the baseline and that Performance is close to the Hypothetical Best Alternative.

Figure 15.

Value component chart [53].

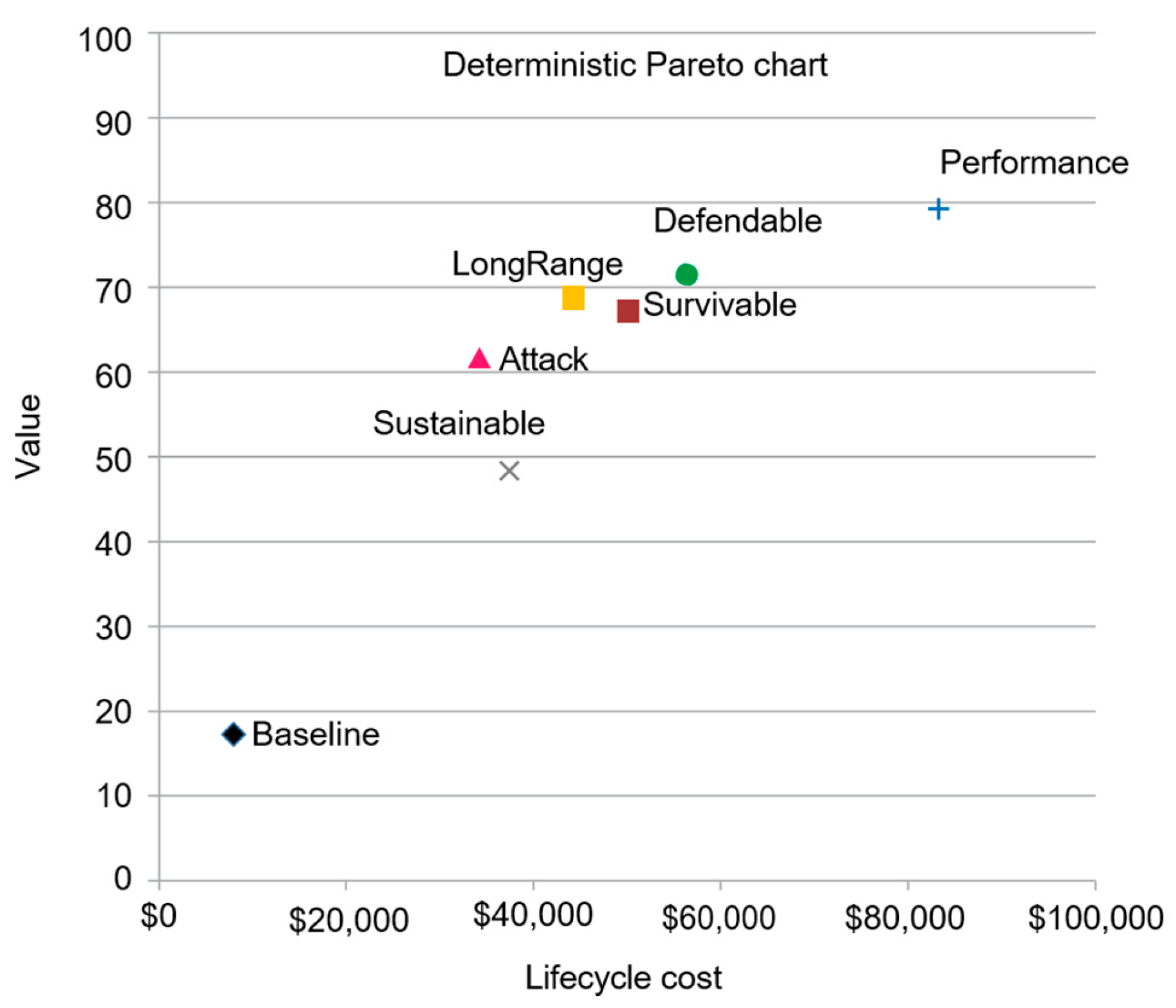

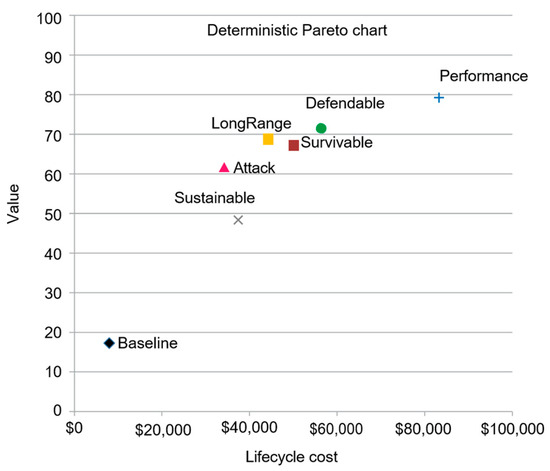

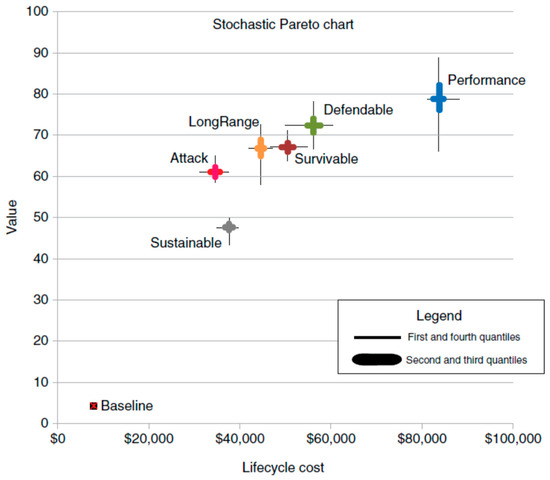

Figure 16 provides the cost vs. value chart [53] for this analysis. This figure shows that Sustainable and Survivable are dominated by Attack and LongRange, respectively. The non-dominated alternatives have a range of value from about 62 to 78 for a life cycle cost of USD 38k to 82k.
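Deterministic dominance on a cost vs. value chart can be checked mechanically. The following is a minimal sketch, assuming one alternative dominates another when it costs no more and provides no less value, and is strictly better on at least one of the two; the alternative names and numbers are hypothetical and are not the data behind Figure 16.

```python
def dominates(a, b):
    """True if `a` costs no more and is worth no less than `b`,
    and is strictly better on at least one of the two."""
    no_worse = a["cost"] <= b["cost"] and a["value"] >= b["value"]
    better = a["cost"] < b["cost"] or a["value"] > b["value"]
    return no_worse and better


def non_dominated(alternatives):
    """Names of the alternatives that no other alternative dominates."""
    return [
        name
        for name, alt in alternatives.items()
        if not any(
            dominates(other, alt)
            for other_name, other in alternatives.items()
            if other_name != name
        )
    ]


# Hypothetical alternatives.
alternatives = {
    "A": {"cost": 40, "value": 60},
    "B": {"cost": 55, "value": 58},  # dominated by A
    "C": {"cost": 70, "value": 75},
    "D": {"cost": 80, "value": 72},  # dominated by C
}
print(non_dominated(alternatives))  # ['A', 'C']
```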

Figure 16.

Cost vs. value chart [53].

Section 3.2.7 provides probabilistic analysis and illustrates how it could be used to improve the alternatives. Section 3.2.8 will use the figures in Section 3.2.6 and Section 3.2.7 to describe the trade-offs between the alternatives.

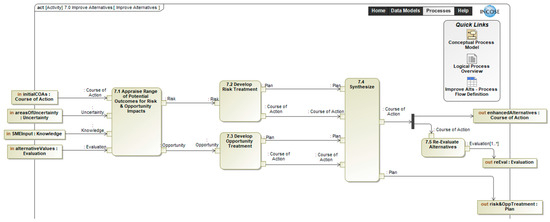

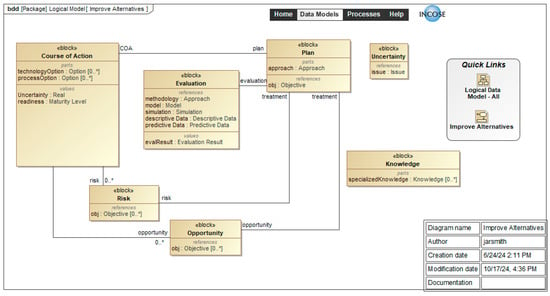

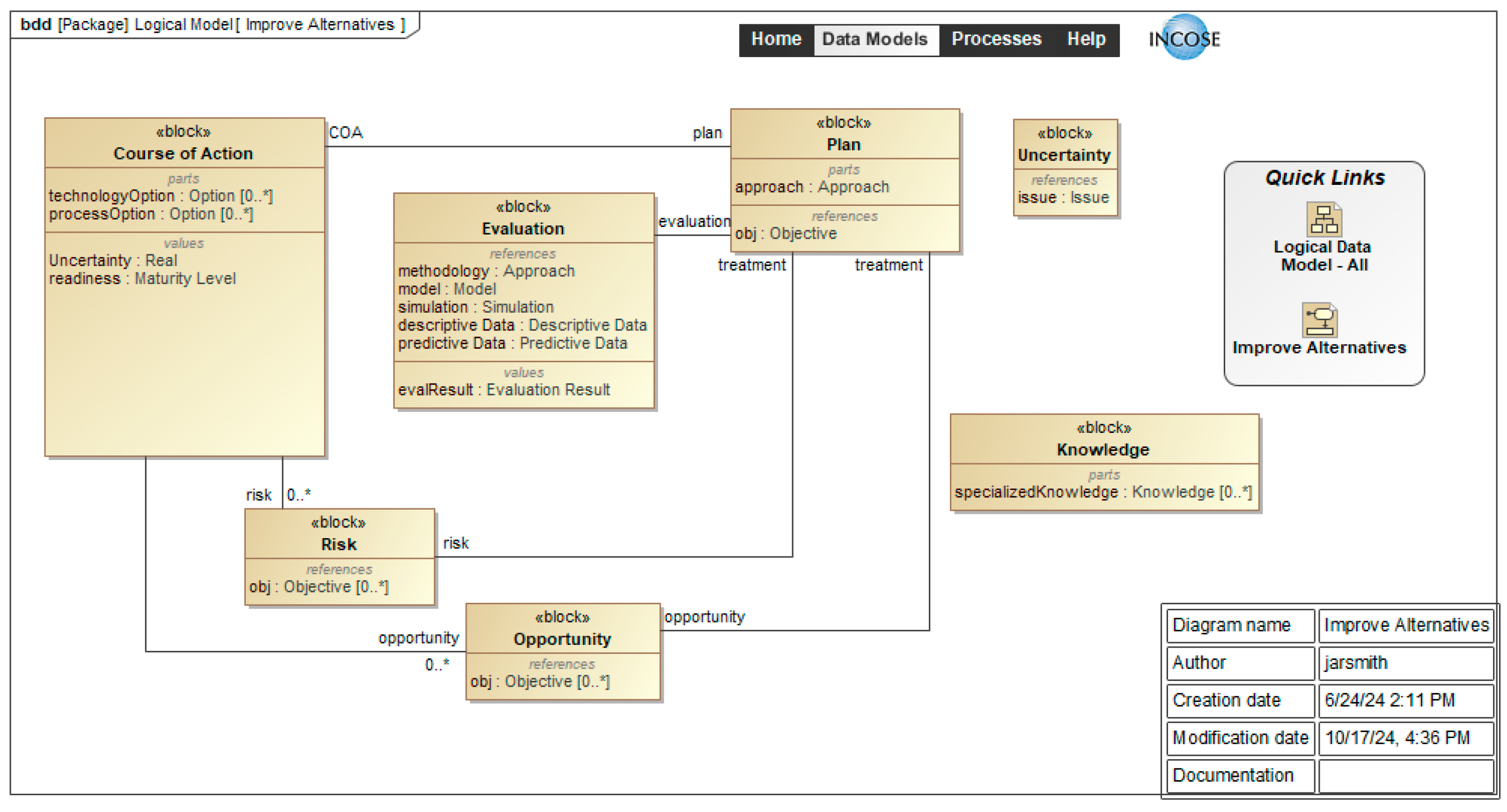

3.2.7. Step 7: Improve Alternatives

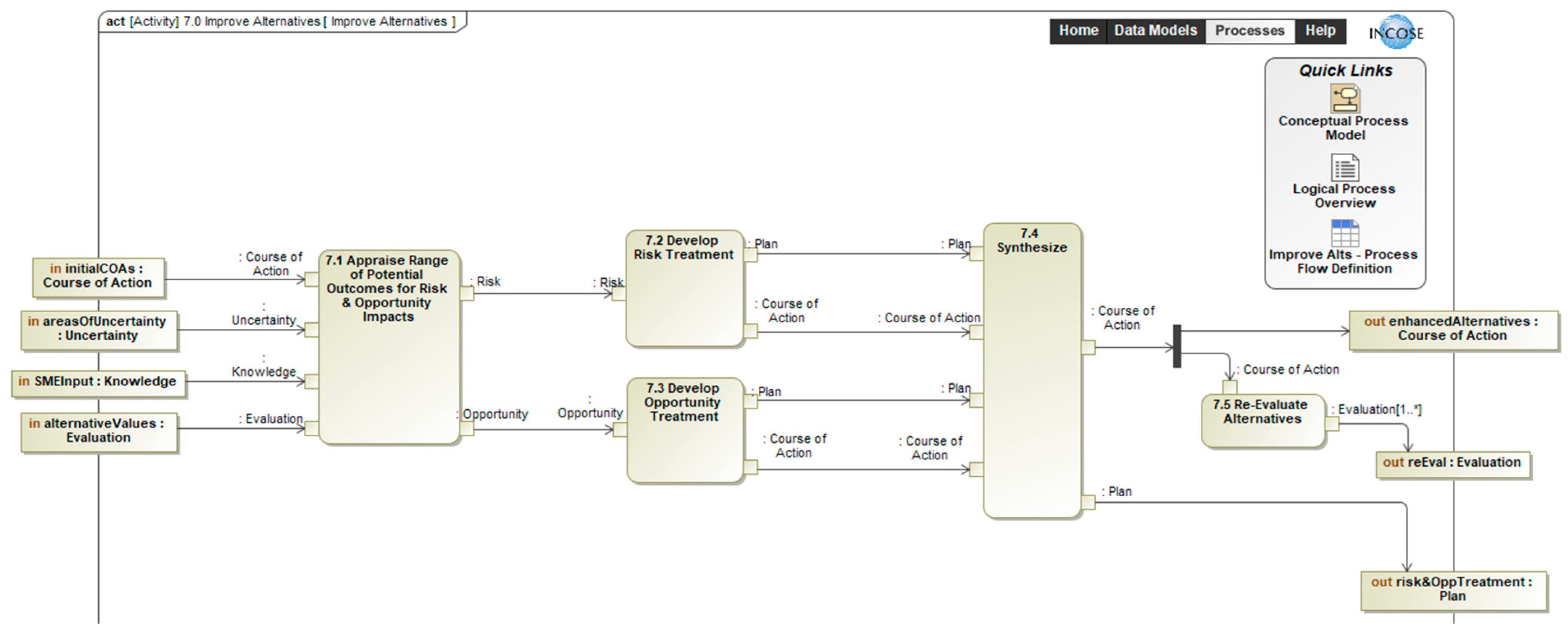

Figure 17 uses an activity diagram to depict the logical process for improving alternatives.

Figure 17.

Logical process model: activity diagram for Step 7, improve alternatives.

According to the Department of Defense Risk, Issue, and Opportunity (RIO) Management Guide for Defense Acquisition Programs [47]:

- A risk is a potential future event or condition that may have a negative effect on achieving program objectives for cost, schedule, and performance. A risk is defined by (1) the likelihood that an undesired event or condition will occur and (2) the consequences, impact, or severity of the undesired event, were it to occur. An issue is an event or condition with negative effect that has occurred (such as a realized risk) or could occur that should be addressed.

- An opportunity offers potential future benefits to the program’s cost, schedule, or performance baseline.

It should be noted that the negative effects of risks and the benefits of opportunities are the result of outcomes that are uncertain events.

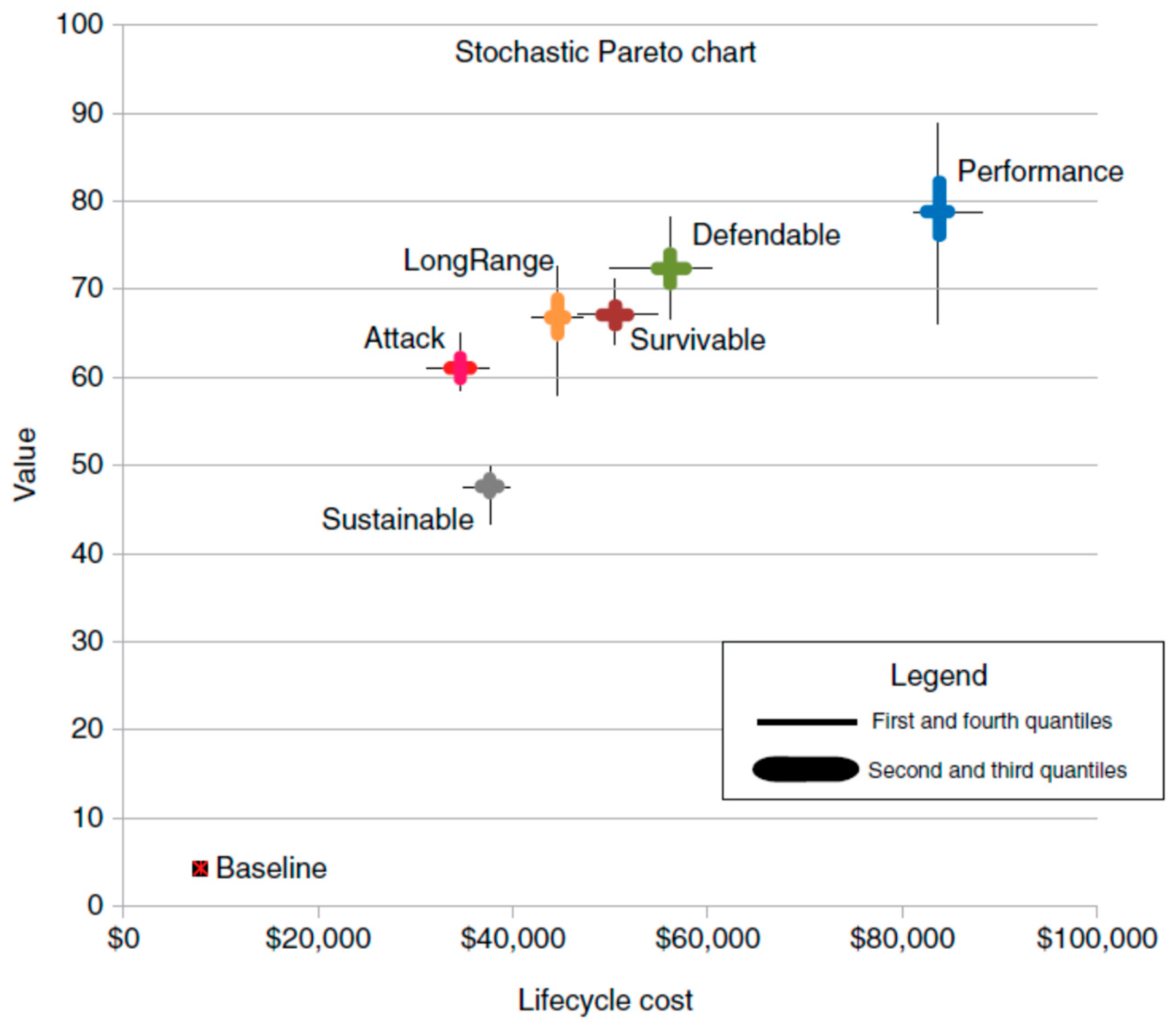

A value-based quantitative approach to identifying risk and opportunities is described by MacCalman, Parnell, and Savage [53] for the system design problem that involves the development of new infantry squad technologies. After evaluating the alternatives, a stochastic Pareto chart of cost versus value can be created as shown in Figure 18, which is a bivariate box-and-whisker plot for each alternative’s cost and value. The boxes (the thick lines) along each axis represent the second and third quartiles of the output data from the cost and value models used for evaluating the courses of action, while the lines (whiskers) represent the first and fourth quartiles. Based on the stochastic Pareto chart, Sustainable is stochastically dominated by all other alternatives and can be eliminated from consideration. The first step in improving the non-dominated alternatives is to identify the risks and opportunities based on the stochastic Pareto chart. The chart shows that LongRange has a large downside risk for low value and that Defendable may have opportunities for cost savings. Pursuing a cost-effective risk treatment for LongRange would improve that alternative. Similarly, investigation of the source of cost uncertainty for Survivable and LongRange may yield an opportunity to reduce the cost uncertainty.
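The box and whisker limits for one alternative can be computed from the model output samples. The following is a minimal sketch using Python's standard `statistics` module, assuming the cost and value models have already produced one output per Monte Carlo trial; the function name and the sample distributions are hypothetical, and the quartile interpolation method is the module's default rather than anything specified in [53].

```python
import random
import statistics


def box_summary(samples):
    """Limits of a box-and-whisker plot along one axis.

    The box spans q1 to q3 (second and third quartiles); the whiskers
    run from min to q1 and from q3 to max (first and fourth quartiles).
    """
    q1, median, q3 = statistics.quantiles(samples, n=4)
    return {"min": min(samples), "q1": q1, "median": median,
            "q3": q3, "max": max(samples)}


# Hypothetical model outputs for one alternative.
rng = random.Random(0)
cost_samples = [rng.gauss(60, 3) for _ in range(1000)]
value_samples = [rng.gauss(70, 8) for _ in range(1000)]

cost_box = box_summary(cost_samples)
value_box = box_summary(value_samples)
print(cost_box)
print(value_box)
```

Plotting `cost_box` along the horizontal axis and `value_box` along the vertical axis, for each alternative, gives the bivariate form of the chart.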

Figure 18.

Stochastic Pareto chart for the assault scenario [53].

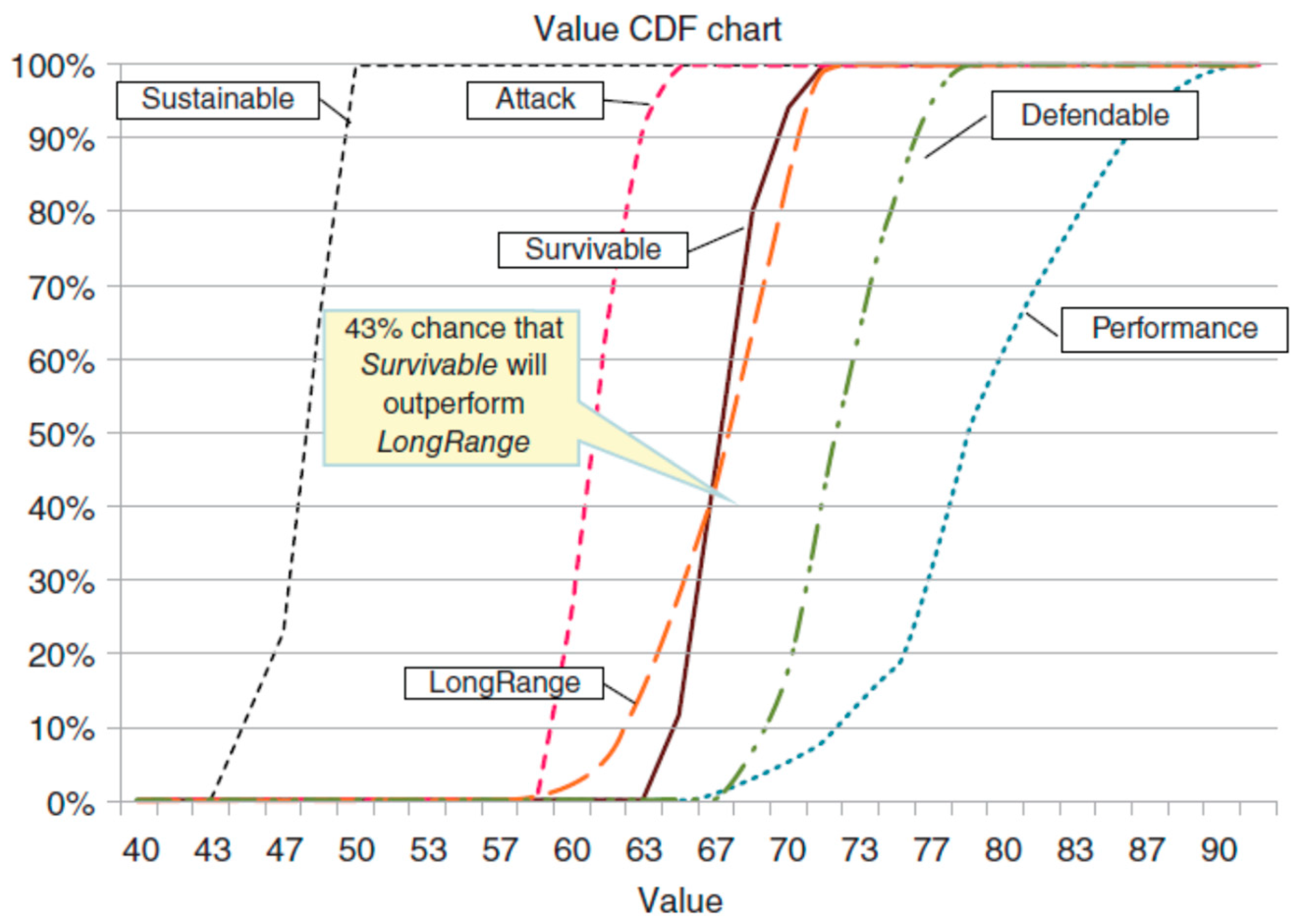

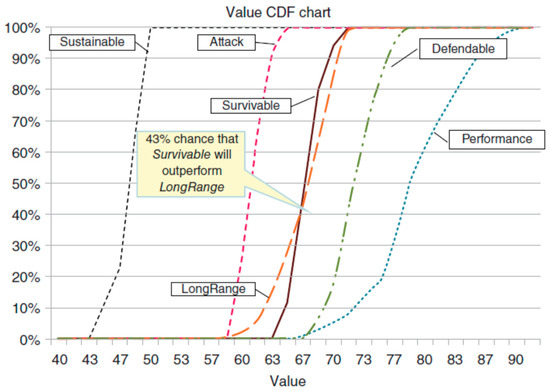

To gain further understanding of the risk and opportunities, cumulative distribution function (CDF) charts can be developed using the output data from the cost and value models used for evaluating the courses of action. Figure 19 shows a CDF chart with six S-curves that represent the uncertain alternative value outcomes. Sustainable is stochastically dominated by the other five alternatives because its CDF is to the left of the others; likewise, Attack is stochastically dominated by Survivable, Defendable, and Performance. The Performance and Defendable alternatives stochastically dominate all others because their S-curves are positioned completely to the right of all others. The value risk profiles of Survivable and LongRange cross, indicating that there is no clear winner between the two; we may want to accept a higher cost by choosing Survivable to mitigate the risk associated with LongRange. The CDF chart tells us that there is a significant chance Survivable will outperform LongRange and that Survivable has less risk due to its steeper risk profile.
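The comparison of S-curves can be expressed as a check on empirical CDFs. The following is a minimal sketch, assuming that "stochastically dominates" is read as first-order stochastic dominance (the dominating alternative's CDF is never to the left of the other and is strictly to the right somewhere) and that higher value is better; the function names and sample values are hypothetical.

```python
from bisect import bisect_right


def ecdf(samples):
    """Empirical CDF: fraction of samples less than or equal to t."""
    ordered = sorted(samples)
    n = len(ordered)
    return lambda t: bisect_right(ordered, t) / n


def stochastically_dominates(a, b):
    """True if value samples `a` first-order stochastically dominate `b`."""
    cdf_a, cdf_b = ecdf(a), ecdf(b)
    points = sorted(set(a) | set(b))  # the CDFs only change at sample points
    never_left = all(cdf_a(t) <= cdf_b(t) for t in points)
    somewhere_right = any(cdf_a(t) < cdf_b(t) for t in points)
    return never_left and somewhere_right


# Hypothetical value samples for three alternatives.
alt_1 = [60, 65, 70, 75]
alt_2 = [50, 55, 62, 68]   # S-curve entirely to the left of alt_1
alt_3 = [40, 66, 72, 90]   # S-curve crosses that of alt_1

print(stochastically_dominates(alt_1, alt_2))  # True
print(stochastically_dominates(alt_1, alt_3))  # False
print(stochastically_dominates(alt_3, alt_1))  # False
```

When neither alternative dominates the other, as with `alt_1` and `alt_3`, the S-curves cross and the choice depends on the decision maker's attitude toward risk.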

Figure 19.

Cumulative distribution of value for the assault scenario [53].

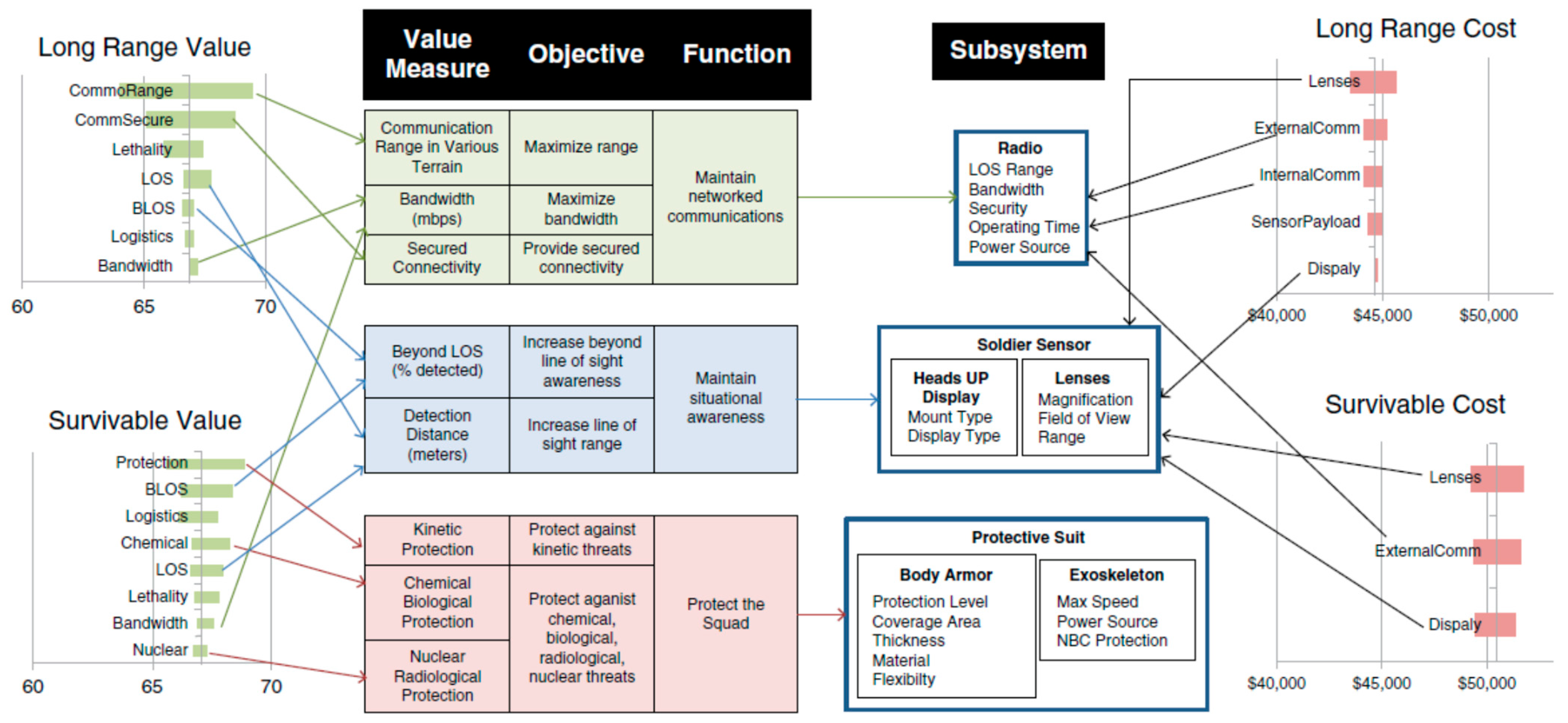

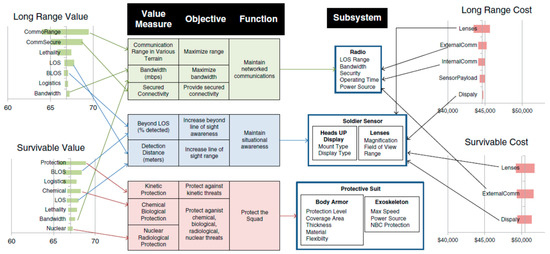

Tornado diagrams allow us to compare the relative importance of each uncertain input variable. Figure 20 shows tornado charts for the stochastic value and cost outputs for the LongRange and Survivable courses of action. The bars are sorted so that the longest bars are at the top; sorting the bars in this way makes the diagram look like a tornado. The left end of each bar is the average of the output from the value or cost model when the input is fixed at its 30th percentile value, and the right end of each bar is the average of the output from the value or cost model when the input is fixed at its 70th percentile. In Figure 20, the input variables for value are the value measures and the input variables for cost are the cost components. In addition to tornado charts, Figure 20 traces the value measures to the systems functions; the functions to the subsystems that perform the functions; and the subsystems to the components that are inputs to the cost model. From the LongRange Value tornado chart, it can be determined that the radio drives the value risk for the LongRange course of action while the soldier sensor drives its cost uncertainty. The protective suit drives the value risk for the Survivable course of action, while the soldier sensor drives its cost uncertainty. These insights provide a clearer understanding of what drives risk and uncertainty in improving the alternatives. For example, the risk of the LongRange course of action might be reduced by investing more resources into improving the radio’s range, security, and bandwidth features. For both courses of action, there may be an opportunity to reduce the cost and its uncertainty by pursuing an off-the-shelf lantern that performs adequately in terms of Beyond Line-of-Sight detection and Detection Distance.
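The bar ends described above can be computed directly from Monte Carlo samples. The following is a minimal sketch, assuming the value or cost model is available as a function of its inputs and that each input has one sampled value per trial; the function names, input names, and the toy model are hypothetical and are not those used in [53].

```python
import random
import statistics


def percentile(samples, p):
    """p-th percentile (p an integer from 1 to 99)."""
    return statistics.quantiles(samples, n=100)[p - 1]


def tornado_bars(model, input_samples, low_pct=30, high_pct=70):
    """Return (input name, low-end mean, high-end mean), longest bar first.

    model:         function taking a dict of input values, returning a number
    input_samples: dict mapping input name -> list of sampled values
                   (all lists the same length, one entry per trial)
    """
    names = list(input_samples)
    trials = len(input_samples[names[0]])
    bars = []
    for name in names:
        ends = []
        for pct in (low_pct, high_pct):
            fixed = percentile(input_samples[name], pct)
            outputs = []
            for t in range(trials):
                inputs = {n: input_samples[n][t] for n in names}
                inputs[name] = fixed  # hold this input at its percentile
                outputs.append(model(inputs))
            ends.append(statistics.mean(outputs))
        bars.append((name, ends[0], ends[1]))
    bars.sort(key=lambda bar: abs(bar[2] - bar[1]), reverse=True)
    return bars


# Hypothetical inputs: one with a wide spread, one with a narrow spread.
rng = random.Random(1)
input_samples = {
    "narrow_input": [rng.uniform(45, 55) for _ in range(500)],
    "wide_input": [rng.uniform(0, 100) for _ in range(500)],
}


def toy_model(x):
    """Toy output model: equal-weight average of the two inputs."""
    return 0.5 * x["narrow_input"] + 0.5 * x["wide_input"]


for name, low_end, high_end in tornado_bars(toy_model, input_samples):
    print(f"{name}: {low_end:.1f} to {high_end:.1f}")
```

The input with the wider spread produces the longer bar and is listed first, which is the ordering that gives the chart its tornado shape.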

Figure 20.

Value and cost tornado charts for the Long Range and Survivable courses of action [53].

Additional information on developing risk and opportunity treatments is provided in the Department of Defense Risk, Issue, and Opportunity (RIO) Management Guide for Defense Acquisition Programs [47] and in ISO/IEC/IEEE 16085:2021 [48].

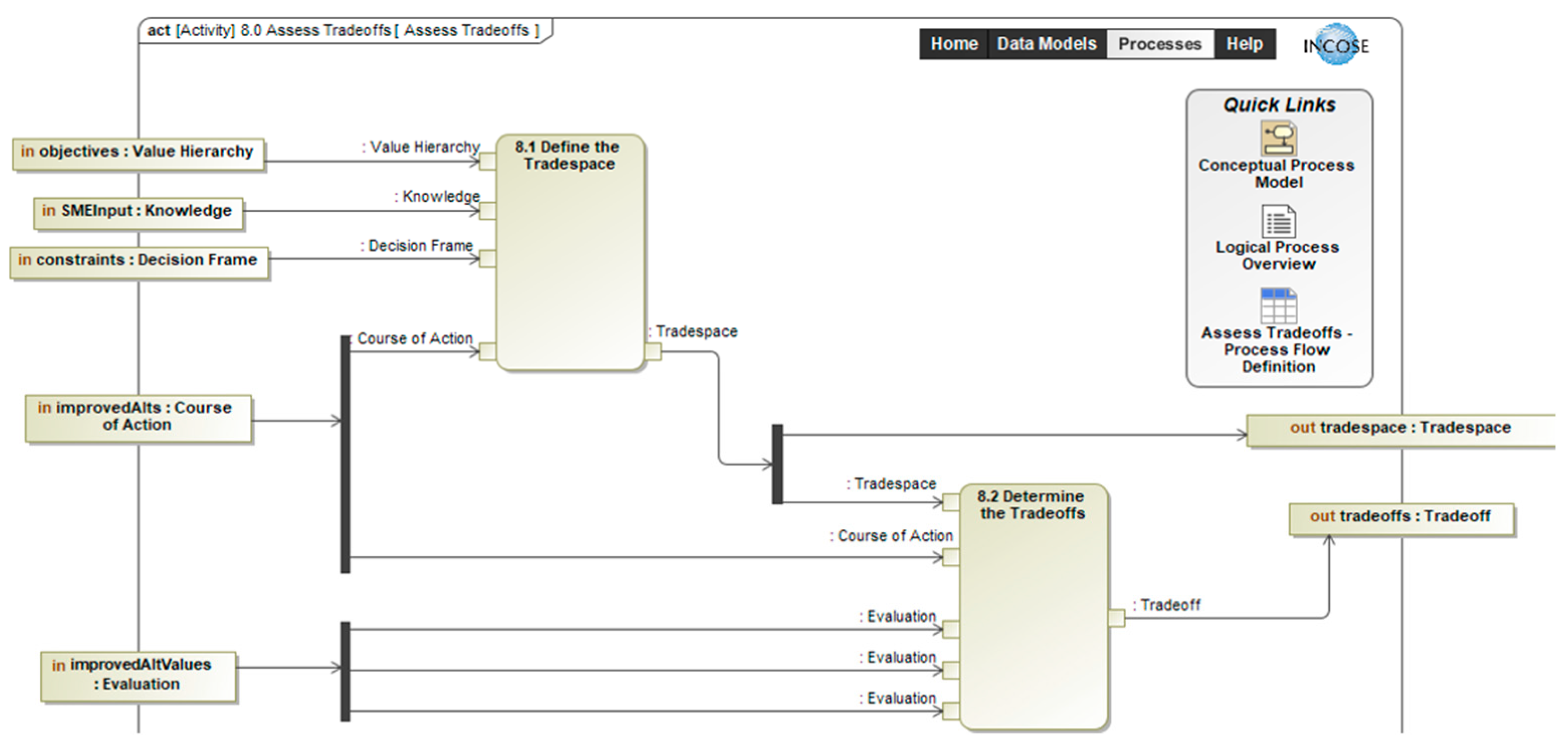

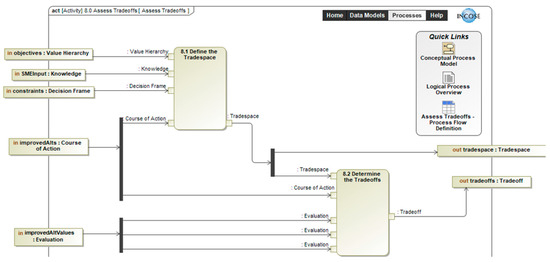

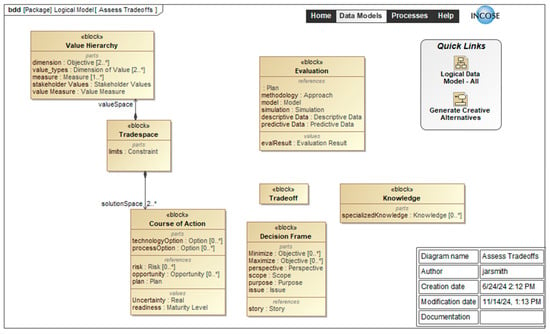

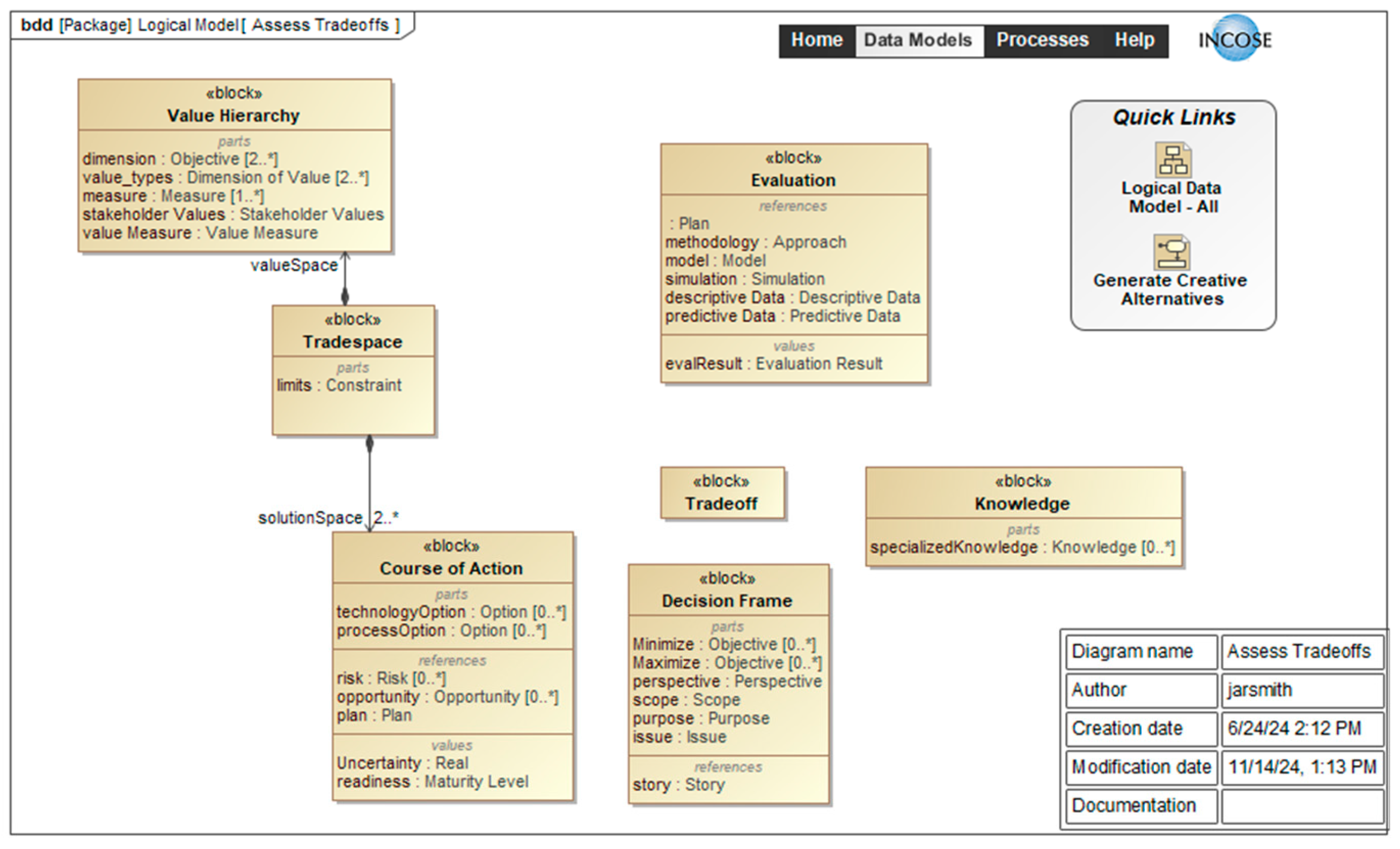

3.2.8. Step 8: Assess Trade-Offs

Figure 21 uses an activity diagram to depict the logical process model for Assess Trade-offs. The step uses the analysis provided in the two previous steps.

Figure 21.

Logical process model: activity diagram for Step 8, Assess Trade-offs.

The deterministic tradespace is provided in the value component chart in Figure 15 and the cost vs. value chart in Figure 16. The cost vs. value chart shows that Sustainable and Survivable are dominated by Attack and LongRange, respectively. The non-dominated alternatives have a range of value from about 62 to 78 for a life cycle cost of USD 38k to 82k.

The probabilistic tradespace is shown in Figure 18 and Figure 19. These figures show that there is more variation in value than in the life cycle cost. In addition, there is significant variation in value of the Performance alternative. Also, LongRange does not dominate Survivable. These insights reinforce the importance of performing probabilistic analysis.

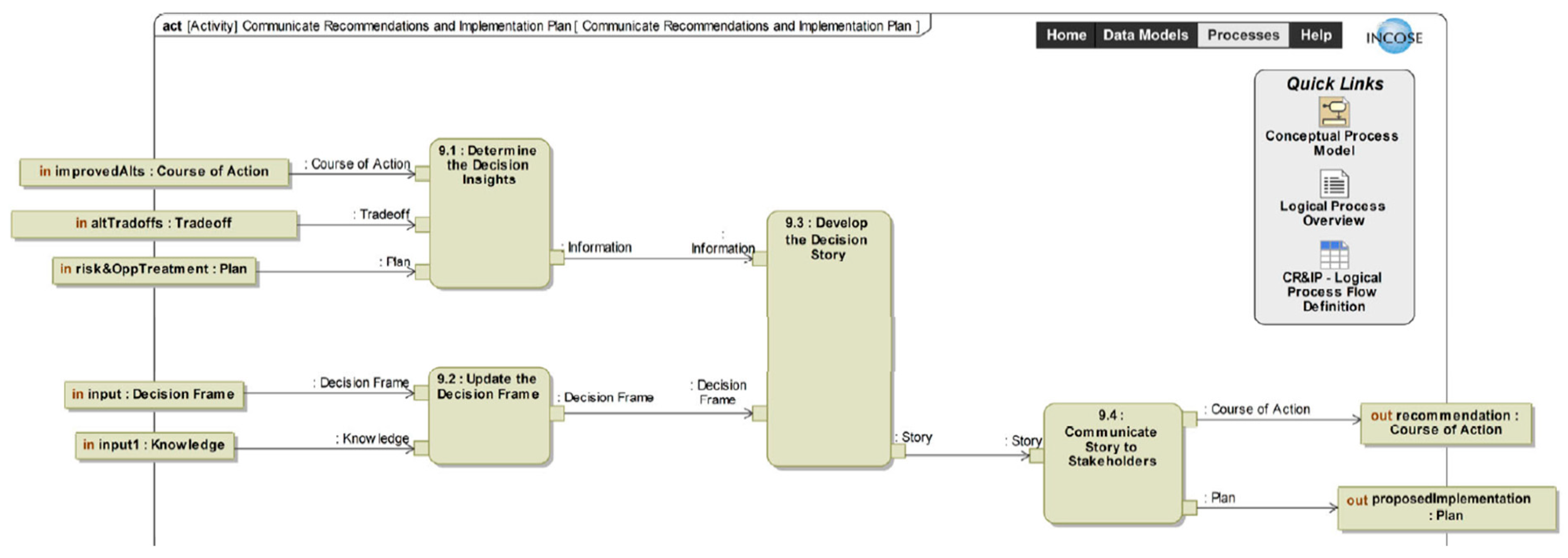

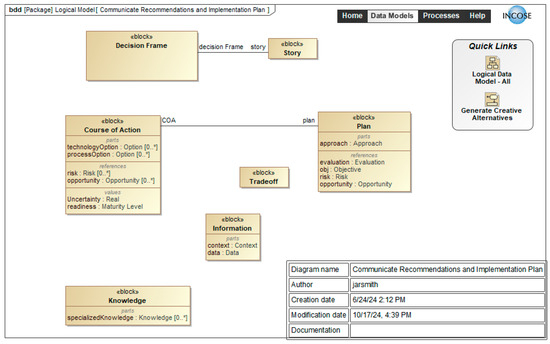

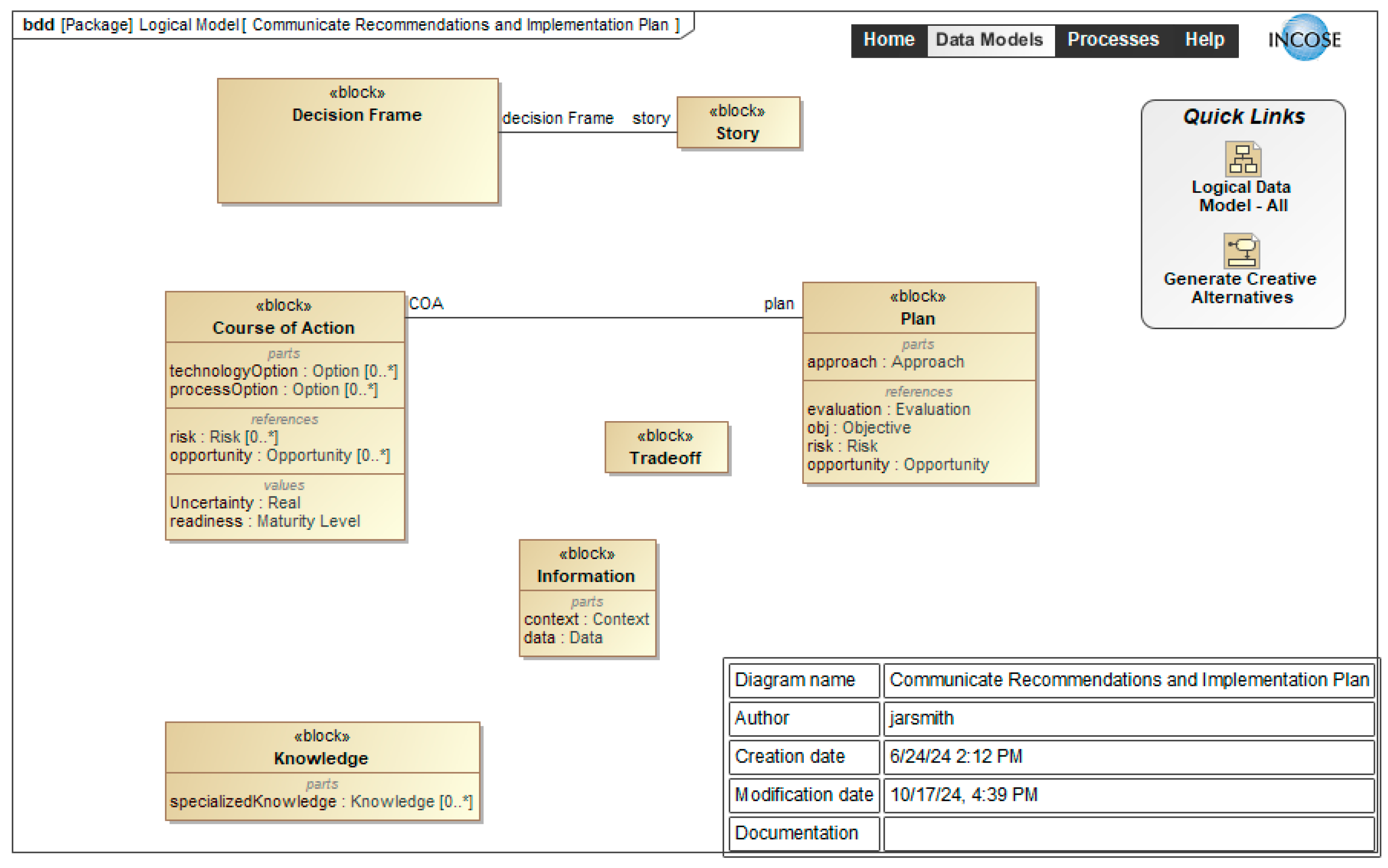

3.2.9. Step 9: Communicate Recommendations and Implementation Plan

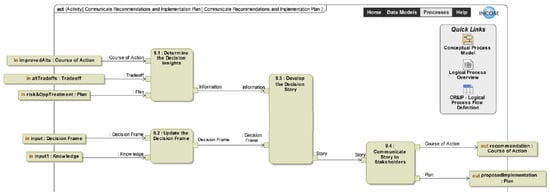

Figure 22 uses an activity diagram to depict the logical process model for Communicate Recommendations and Implementation Plan.

Figure 22.

Logical process model: activity diagram for Step 9, Communicate Recommendations and Implementation Plan.

Table 8 provides the rationale for including each of the data elements in the Make Decision Process. It is important to remember that major stakeholders and decision makers are busy, and project managers/systems analysts/systems engineers must provide clear and concise communications [54]. In addition, organizational guidance and individual leader preferences should be considered in developing decision-making documents and presentations.

Table 8.

Communication recommendations and implementation plan.

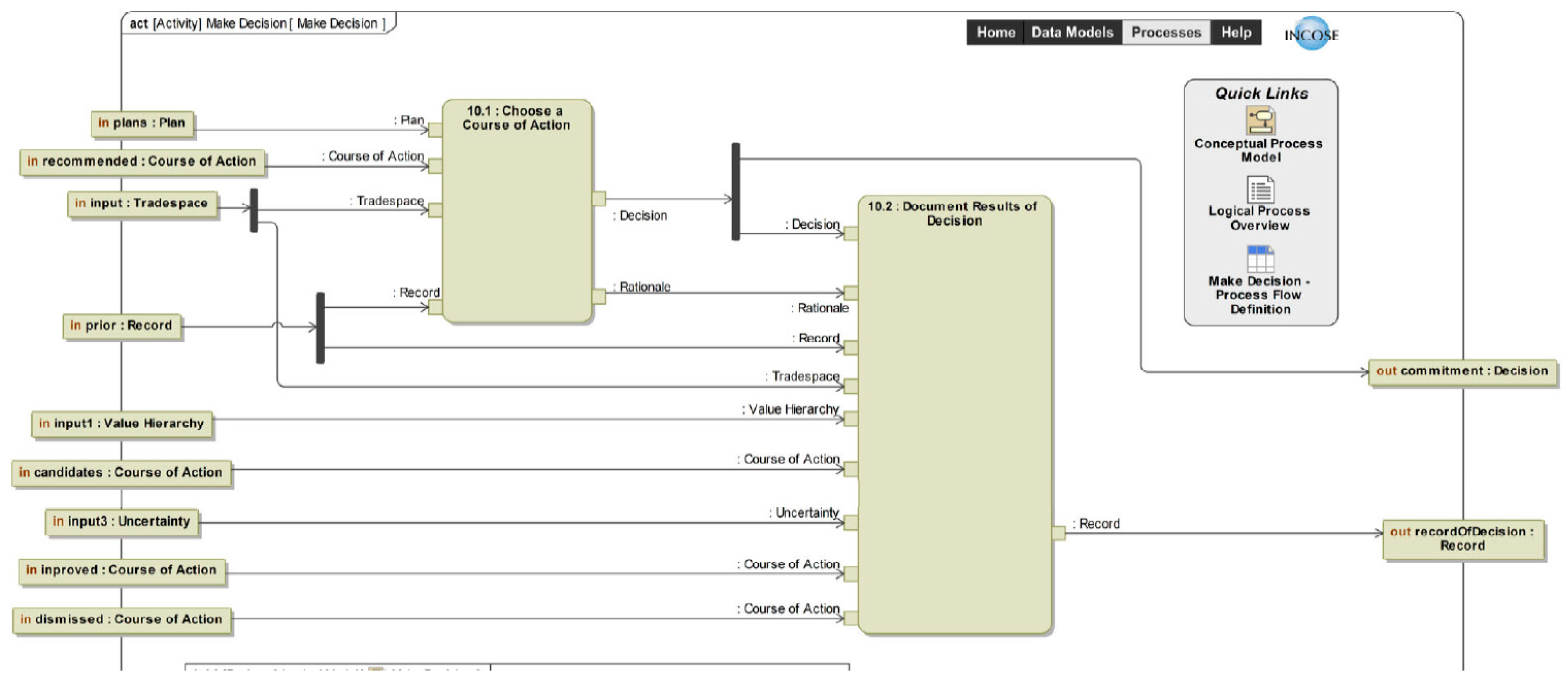

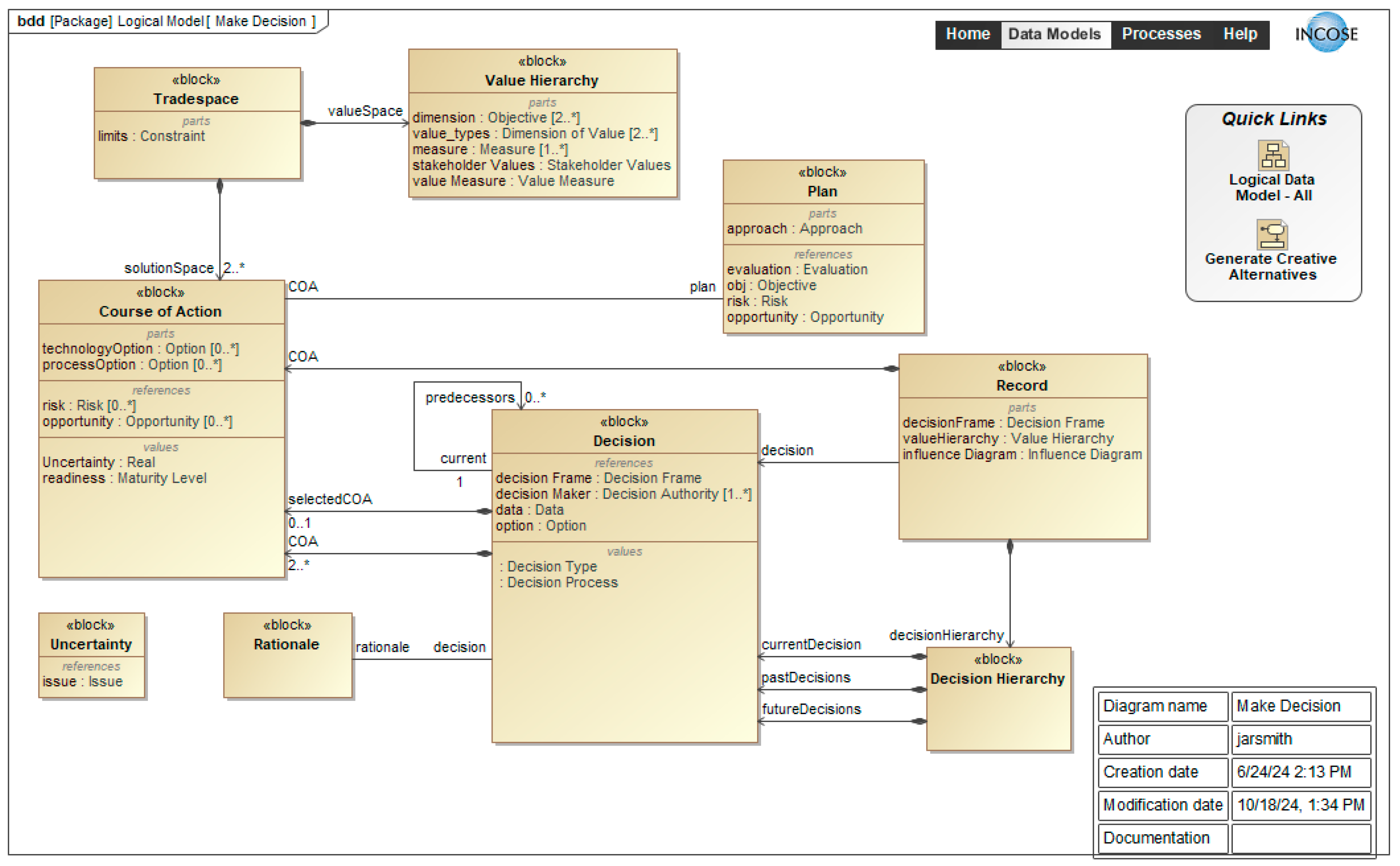

3.2.10. Step 10: Make Decision

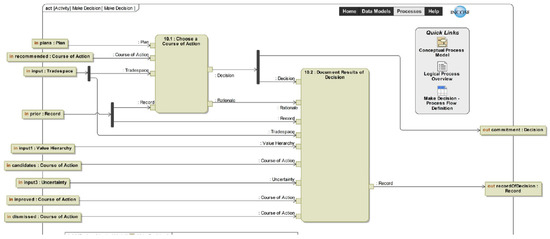

Major decisions usually involve major stakeholders and decision-makers at multiple decision levels. DADM provides several digital artifacts that support the decision-making process. The most common artifacts are shown in Figure 23, the activity diagram for the logical process model for Make Decision. Any other DADM data artifact is also digitally available for use in the decision-making process.

Figure 23.

Logical process model: activity diagram for Step 10, Make Decision.

It is important to remember that major stakeholders and decision-makers are very busy, so project managers, systems analysts, and systems engineers must provide clear and concise communications [53,54]. In addition, organizational guidance and individual leader preferences should be considered in developing decision-making documents and presentations. The data elements in the Make Decision Process that should be communicated to provide the rationale are as follows:

- Decision Hierarchy: Decision-makers are busy. It is important to identify the major decisions that have been made, the decision(s) to be made and major future decisions. It may be necessary to review the decision frame.

- Value Hierarchy: The system objectives include performance, cost, and schedule. The value hierarchy includes the performance objectives and value measures.

- Major Requirements: Decision-makers may want to verify that the recommended COA meets the major system requirements.

- Courses of Action (COAs): The decision-makers and key stakeholders need to understand the COAs that were identified and evaluated. Decision-makers or key stakeholders commonly have preferences for one or more COAs. They may distrust the systems analysis, or decline to decide, if their preferred COA is not included.

- Tradespace: The decision-makers and key stakeholders are usually interested in knowing the COA tradespace, the dominated COAs and the trade-offs between the non-dominated COAs. Decision-makers and key stakeholders typically want to know why their preferred COAs are not recommended.

- Uncertainty: Decision-makers and key stakeholders know that system development involves many uncertainties (see Step 4). They will want to understand the major remaining uncertainties that create future risks and opportunities.

- Decision (Selected COA): The recommended COA, the rationale for the decision, and the estimated cost, schedule, and performance of that COA.

- (Decision) Rationale: A clear and concise summary of the non-dominated COAs and the rationale for the recommended COA.

- (Implementation) Plan: The implementation plan usually includes the key program personnel, responsibilities, milestones, major risks, and risk reduction activities.

- (Decision) Record: The decision record should include the decision made and the rationale for that decision. The record may include other DADM data based on organizational and program requirements.
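
To make the list above concrete, the following sketch shows how these data elements might be gathered into a single record and checked for completeness before a decision briefing. It is a hypothetical illustration only: DADM defines its data elements in MBSE software rather than in Python, and the class name `DecisionRecord`, its field names, the `validate` checks, and the example content (`COA-A`, `COA-B`, `COA-C`) are assumptions made for this example. In particular, flagging a selected COA that is outside the non-dominated set is a design choice of this sketch, not a DADM rule.

```python
from dataclasses import dataclass, field, replace


@dataclass
class DecisionRecord:
    # Decision hierarchy keys assumed here: "made", "current", "future".
    decision_hierarchy: dict[str, list[str]]
    value_measures: list[str]
    major_requirements: list[str]
    courses_of_action: list[str]
    non_dominated_coas: list[str]
    major_uncertainties: list[str]
    selected_coa: str
    rationale: str
    implementation_plan: dict[str, list[str]] = field(default_factory=dict)

    def validate(self) -> list[str]:
        """Return a list of gaps a reviewer would likely ask about."""
        problems = []
        if self.selected_coa not in self.courses_of_action:
            problems.append("selected COA was not among the evaluated COAs")
        elif self.selected_coa not in self.non_dominated_coas:
            problems.append("selected COA is dominated; rationale must explain why")
        if not self.rationale.strip():
            problems.append("rationale is missing")
        return problems


# Entirely hypothetical content, used only to exercise the structure.
record = DecisionRecord(
    decision_hierarchy={
        "made": ["Mission need approved"],
        "current": ["Select system concept"],
        "future": ["Select production supplier"],
    },
    value_measures=["Range", "Payload", "Availability"],
    major_requirements=["Meets minimum range threshold"],
    courses_of_action=["COA-A", "COA-B", "COA-C"],
    non_dominated_coas=["COA-A", "COA-C"],
    major_uncertainties=["Technology maturity"],
    selected_coa="COA-A",
    rationale="Highest value among non-dominated COAs within the cost limit.",
    implementation_plan={"milestones": ["Preliminary design review"]},
)

print(record.validate())  # prints []

incomplete = replace(record, rationale=" ")
print(incomplete.validate())  # prints ['rationale is missing']
```

Keeping the evaluated COAs and the non-dominated subset in the same record means questions such as "why was my preferred COA not recommended?" can be answered directly from the decision record.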

4. Discussion

The DADM can be used in the Concept Stage and reused to inform Life Cycle Decisions throughout the system life cycle. In Table 9, we use the Generic Life Cycle [1,33] to identify decision opportunities to improve system value that are commonly encountered throughout a system’s life cycle. Many of these decisions would benefit from a DADM that integrates the data produced by performance, value, cost, and schedule models that are meaningful to the decision makers and stakeholders. The table also lists the types of data that would be available in DADM for each life cycle stage.

Table 9.

Illustrative decisions and data availability throughout the System Life Cycle (adapted from [1]).

Looking ahead, the DADM has a clear path for incremental development through a series of targeted future activities. The next step is the development of example implementations and case studies. By piloting DADM in real-world contexts—such as system acquisitions, technology selection, or lifecycle planning—we intend to demonstrate its practical value, collect lessons learned, and gather specific feedback to further refine the model. These case studies will help build a stronger evidence base for DADM’s utility and support broader adoption by offering concrete examples and guidance for potential users.

We will continue iterating on the model alongside this effort, aiming for the release of DADM v2.0 in late 2025. This version will include a physical data model, shaped by insights from pilot use cases, and will serve as a detailed reference for organizations evaluating DADM. Maintaining this feedback-driven refinement process will help ensure the model continues to meet the needs of the systems engineering community.

Technical integration is another near-term opportunity. Aligning DADM with SysML v2 and BPMN will promote interoperability with contemporary MBSE tools and strengthen traceability from requirements through decision analytics. Another active area of exploration is the incorporation of artificial intelligence (AI)—especially Large Language Models (LLMs)—to assist with data ingestion, recommendation generation, and automation of alternative evaluation or risk assessment. A longer-term goal is to enable auditable, AI-enabled decision support by capturing rationale and potential bias, thereby supporting regulatory and ethical compliance.

In parallel, pursuing formal standardization remains important. As we validate and improve DADM through pilot projects, workshops, and collaboration with industry, steps toward a standards designation will encourage wider, more consistent adoption across organizations. Through these targeted activities, DADM will continue to advance toward enabling data-driven, transparent, and auditable decision-making in MBSE and digital engineering environments.

Author Contributions

Conceptualization, D.C., G.S.P. and C.R.K.; methodology, D.C., C.R.K., G.S.P. and F.S.; software, J.S. and C.N.; validation, C.R.K.; formal analysis, G.S.P., C.R.K. and D.C.; investigation, G.S.P. and C.R.K.; resources, D.C. and J.S.; data curation, C.N.; writing—original draft preparation, G.S.P., C.R.K. and D.C.; writing—review and editing, F.S. and J.S.; visualization, J.S., C.N. and D.C.; supervision, D.C., G.S.P. and C.R.K.; project administration, J.S., S.D. and F.S.; conference funding acquisition, F.S. All authors have read and agreed to the published version of the manuscript.

Funding

Funding was provided by INCOSE (www.incose.org) Technical Operations for travel funds to present DADM at two professional conferences.

Data Availability Statement

The data presented in this study are available on request from the corresponding author due to membership access restrictions on the INCOSE Teamwork Cloud environment.

Acknowledgments

We would like to acknowledge the advice of several INCOSE members, including William Miller, INCOSE Technical Operations, the INCOSE Impactful Products Committee, the SE Lab managers, and members of the Decision Analysis Working Group who provided important comments on DADM during its development.

Conflicts of Interest

Authors Devon Clark, Jared Smith, Chiemeke Nwobodo and Sheena Davis were employed by Deloitte Consulting LLP. Author Frank Salvatore was employed by SAIC. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following acronyms are used in this manuscript:

| Abbreviation | Description |

|---|---|

| CDF | Cumulative Distribution Function |

| COA | Course of Action |

| DADM | Decision Analysis Data Model |

| DAMA | Data Management Association |

| DAWG | INCOSE Decision Analysis Working Group |

| DMBoK | Data Management Body of Knowledge |

| INCOSE | International Council on Systems Engineering |

| ISO | International Organization for Standardization |

| MBSE | Model-Based Systems Engineering |

| MOE | Measure of Effectiveness |

| MOP | Measure of Performance |

| SEBoK | Systems Engineering Body of Knowledge |

| SH | Stakeholder |

| SME | Subject Matter Expert |

| SysML | Systems Modeling Language |

Appendix A. DADM Definitions

We drew the DADM definitions from the following sources, in priority order: ISO standards, e.g., ISO/IEC/IEEE 15288:2023 [33]; handbooks, e.g., the INCOSE Systems Engineering Handbook [1]; bodies of knowledge and textbooks, e.g., systems engineering textbooks [55], SEBoK [34], and PMI [56]; refereed papers; and the Merriam-Webster Dictionary [57].

Table A1.

DADM Definitions.

| Term | Definition |

|---|---|

| Analysis | A detailed examination of anything complex in order to understand its elements, essential features, or their relationships [57]. |

| Approach | The taking of preliminary steps toward a particular purpose [57]. |

| Assumption | A factor in the planning process that is considered to be true, real, or certain, without proof or demonstration [56]. |

| Baseline | An agreed-to description of the attributes of a product at a point in time, which serves as the basis for defining change [1]. |

| Component | A constituent part [57]. |

| Conceptual | The highest level of data organization that outlines entities and relationships without implementation details [32]. |

| Conceptual Data Model | Provides a framework for understanding data requirements, expressed in terms of entities and relationships to identify necessary representations [32]. |

| Conceptual Process | An abstract representation outlining the high-level steps and functions involved in a given process [34]. |

| Configuration Management | A discipline applying technical and administrative direction and surveillance to: identify and document the functional and physical characteristics of a configuration item, control changes to those characteristics, record and report change processing and implementation status, and verify compliance with specified requirements [34]. |

| Constraint | A limiting factor that affects the execution of a project, program, portfolio, or process [56]. |

| Context | An external view of a system must introduce elements that specifically do not belong to the system but do interact with the system. This collection of elements is called the system environment or context [1]. |

| Cost | Cost is a key system attribute. Life Cycle Cost is defined as the total cost of a system over its entire life [1]. |

| Course of Action | A means available to the decision maker by which the objectives may be attained [58]. |

| Current Condition | A state of physical fitness or readiness for use [57]. |

| Data | System element that can be implemented to fulfill system requirements [1]. |

| Data Element | The smallest units of data that have meaning within a context typically representing a single fact or attribute [32]. |

| Data Model | A conceptual representation of the data structures that are required by a database, defining how data is connected, stored, and retrieved [32]. |

| Decision | An irrevocable allocation of resources [59]. |

| Decision Authority | The stakeholder(s) with ultimate decision gate authority to approve and implement a system solution [37]. |

| Decision Frame | A collection of artifacts that answer the question: “What problem (or opportunity) are we addressing?” It comprises three components: (1) our purpose in making the decision; (2) the scope, i.e., what will be included and excluded; and (3) our perspective, including our point of view, how we want to approach the decision, what conversations will be needed, and with whom [38]. |

| Decision Hierarchy | The specific decisions at hand are divided into three groups: (1) those that have already been made, which are taken as given; (2) those that need to be focused on now, and (3) those that can be made either later or separately [38]. |

| Decision Management | The methods and processes involved in making informed and effective decisions regarding actions and strategies [1]. |

| Descriptive Data | What happened, e.g., historical data [60]. |

| Dimension of Performance | A quantitative measure characterizing a physical or functional attribute relating to the execution of a process, function, or activity [1]. |

| Dimensions of Value | The three most common dimensions of value are performance, financial/cost, and schedule [54]. |

| Evaluation | Determination of the value, nature, character, or quality of something or someone [57]. |

| External Impacts | Influences or effects originating outside a specific system or entity, which can be positive, negative, or neutral, and significantly impact the behavior and outcomes of the system [61]. |

| Function | A function is defined by the transformation of input flows to output flows, with defined performance [34]. |

| Influence Diagram | An influence diagram is a network representation for probabilistic and decision analysis models. The nodes correspond to variables which can be constants, uncertain quantities, decisions, or objectives [40]. |

| Information | Knowledge obtained from investigation, study, or instruction [57]. |

| Integration | A process that combines system elements to form complete or partial system configurations in order to create a product specified in the system requirements [34]. |

| Interface | A shared boundary between two systems or system elements, defined by functional characteristics, common physical characteristics, common physical interconnection characteristics, signal characteristics or other characteristics [1]. |

| Issue | A current condition or situation that may have an impact on the project objectives [56]. |

| Iteration | The action or process of repeating [57]. |

| Knowledge | A mixture of experience, values and beliefs, contextual information, intuition, and insight that people use to make sense of new experiences and information [56]. |

| Life Cycle | The stages through which a system progresses from conception through retirement [33]. |

| Life Cycle Cost | Total cost of a system over its entire life [1]. |

| Lifecycle Stage | A period within the lifecycle of an entity that relates to the state of its description or realization [1]. |

| Logical | A framework detailing how data elements will relate and interact, focusing on attributes and data integrity without physical storage considerations [32]. |

| Logical Data Model | An expanded version of the conceptual model detailing entities, attributes, and relationships while remaining agnostic to physical storage [32]. |

| Logical Process | A detailed specification of the sequence and interactions of activities in a process without concern for its implementation [34]. |

| MBSE | Model-Based Systems Engineering, an approach that uses models to support systems engineering processes [34]. |

| Measure | A variable to which a value is assigned as the result of the process of experimentally obtaining one or more quantity values that can reasonably be attributed to a quantity [62]. |

| Method | A way, technique, or process of or for doing something [57]. |

| Model | A physical, mathematical, or otherwise logical representation of a system, entity, phenomenon, or process [63]. |

| Model-based | An approach that uses models as the primary means of communication and information exchange in systems development [34]. |