Author Contributions

A.T.R.: conceptualization, data curation, formal analysis, investigation, methodology, software, validation, visualization, writing—original draft preparation, and writing—review and editing; B.F.C.: conceptualization and writing—review and editing; O.J.P.F.: conceptualization and writing—review and editing; A.P.B.: data curation, formal analysis, investigation, methodology, software, validation, visualization, and writing—review and editing; R.T.W.: writing—review and editing; D.N.M.: writing—review and editing. All authors have read and agreed to the published version of the manuscript.

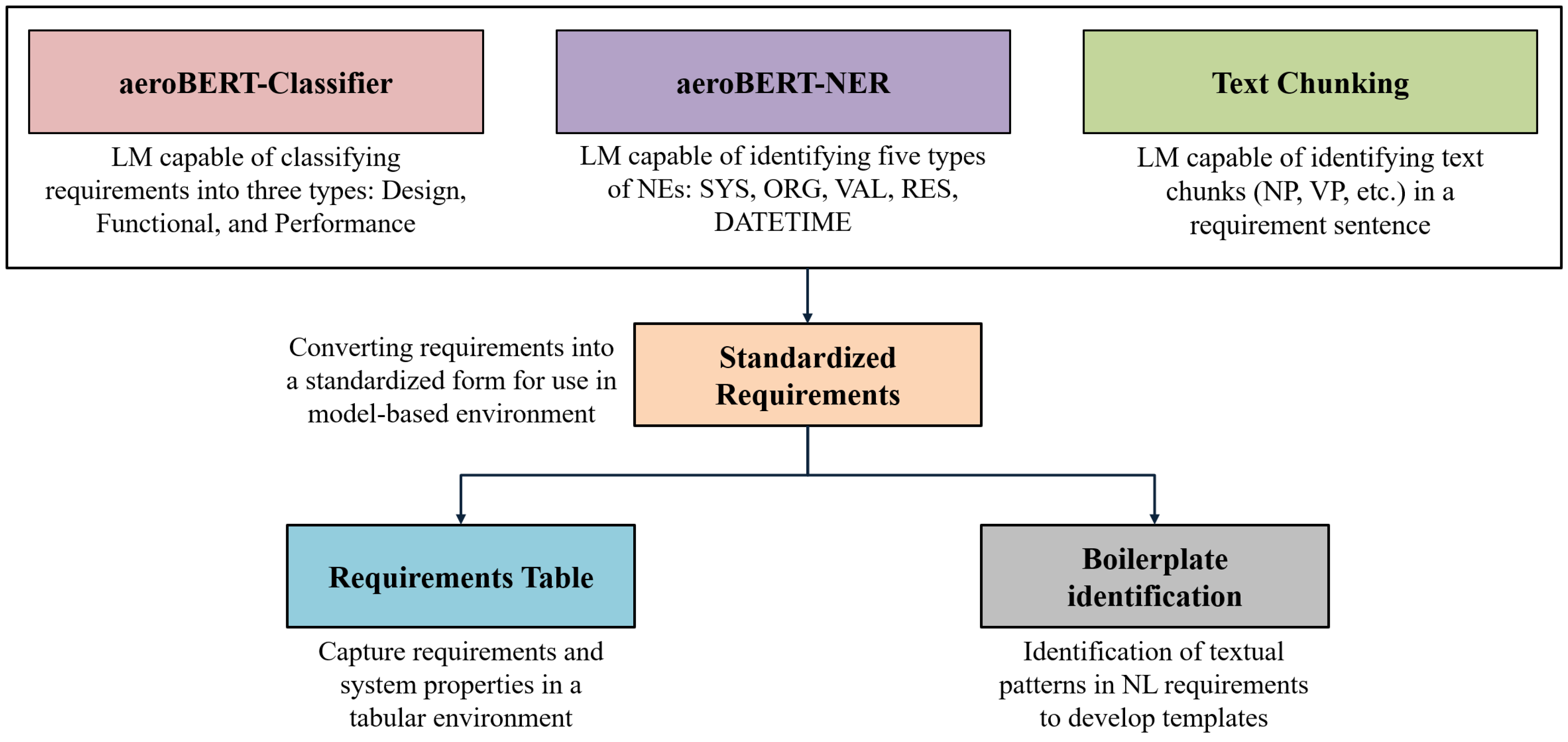

Figure 1.

Pipeline for converting NL requirements to standardized requirements using various LLMs.

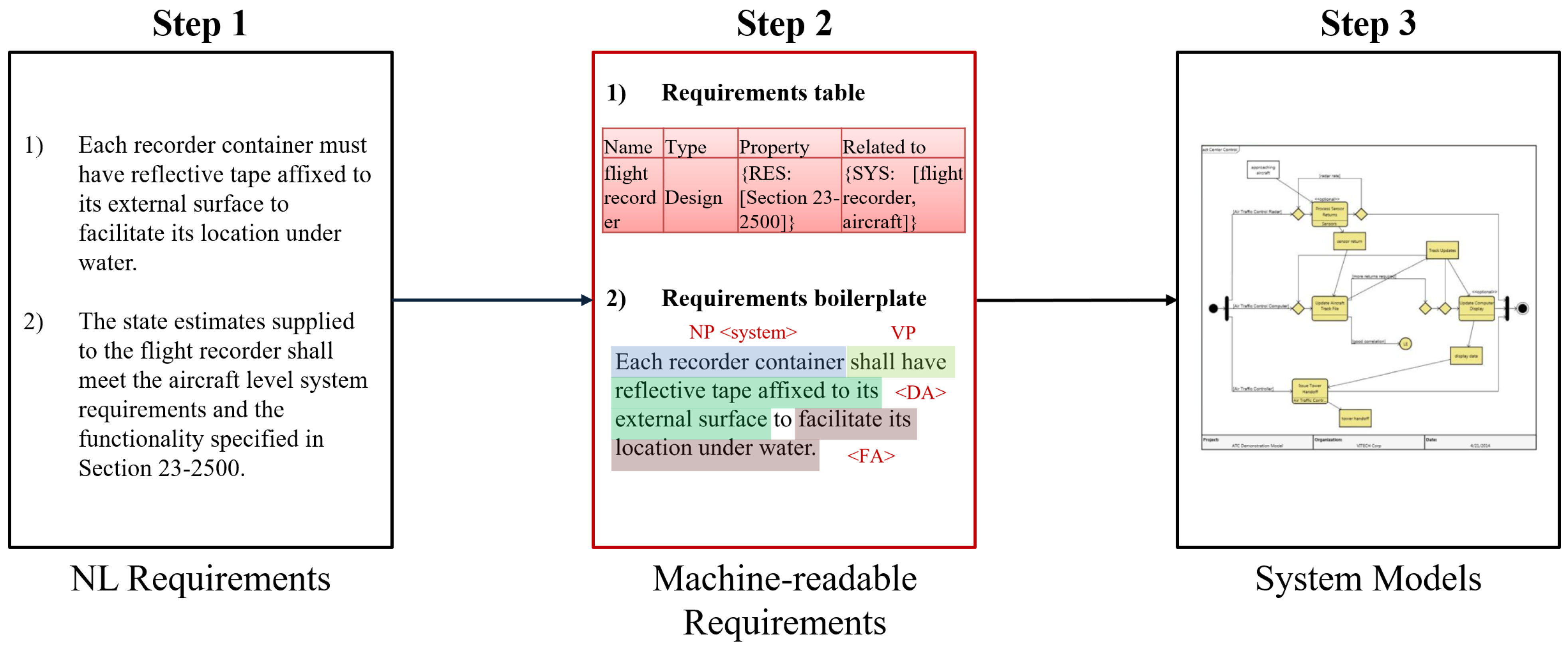

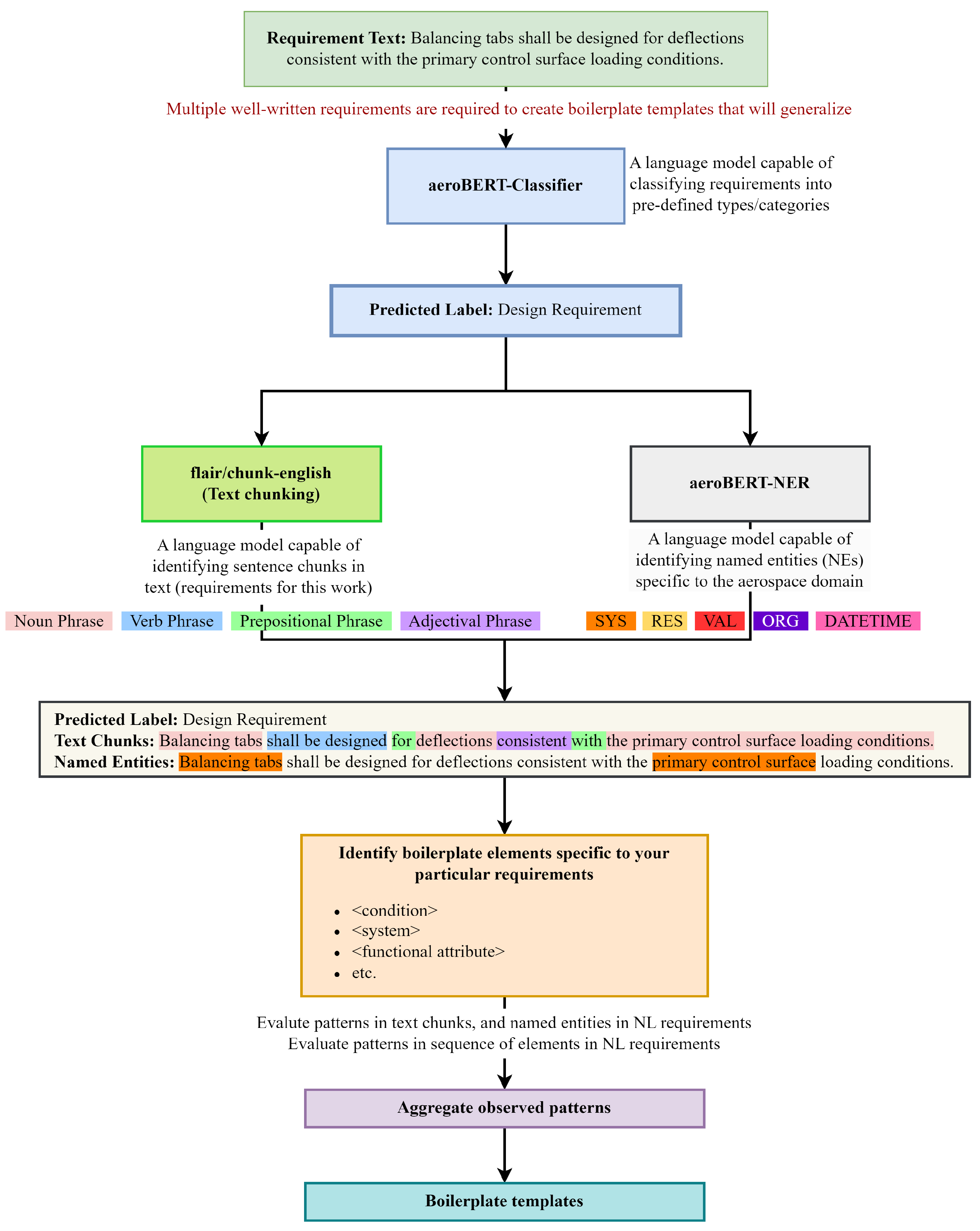

Figure 2.

Steps of requirements engineering, starting with gathering requirements from various stakeholders, followed by using NLP techniques to standardize them, and, lastly, converting the standardized requirements into models. The main focus of this work is to convert NL requirements into machine-readable requirements (where parts of the requirement become data objects) as shown in Step 2.

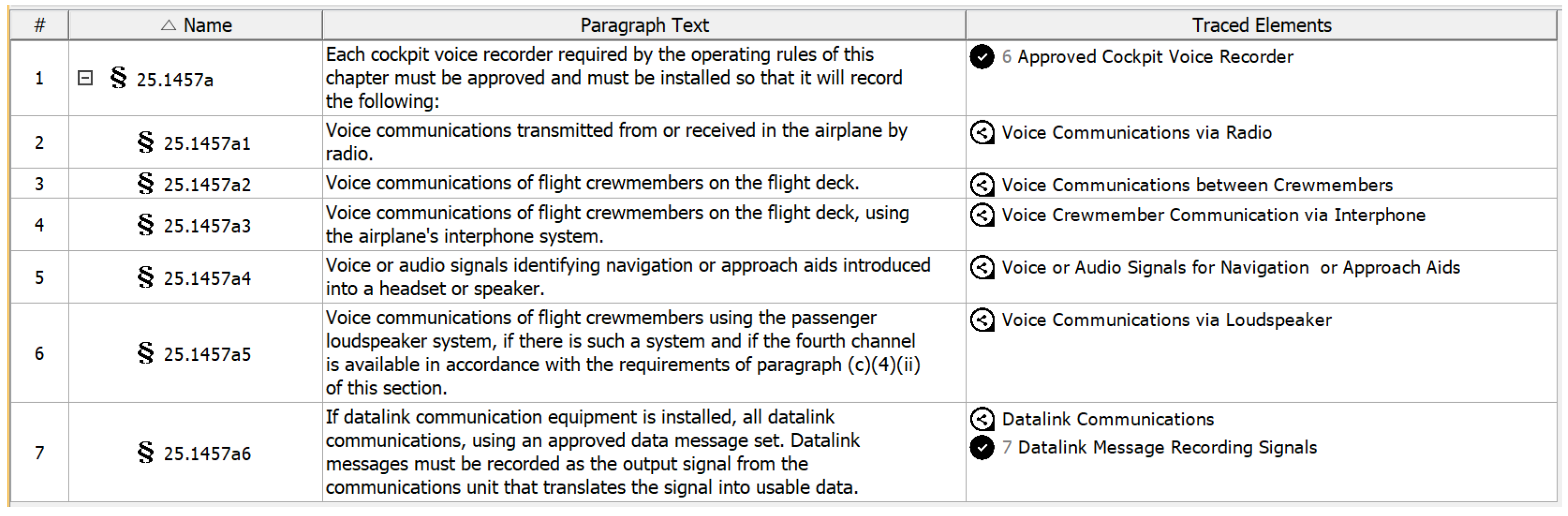

Figure 3.

A SysML requirement table with three columns, namely, Name, Paragraph Text, and Traced Elements. More columns can be added to capture other properties pertaining to the requirements.

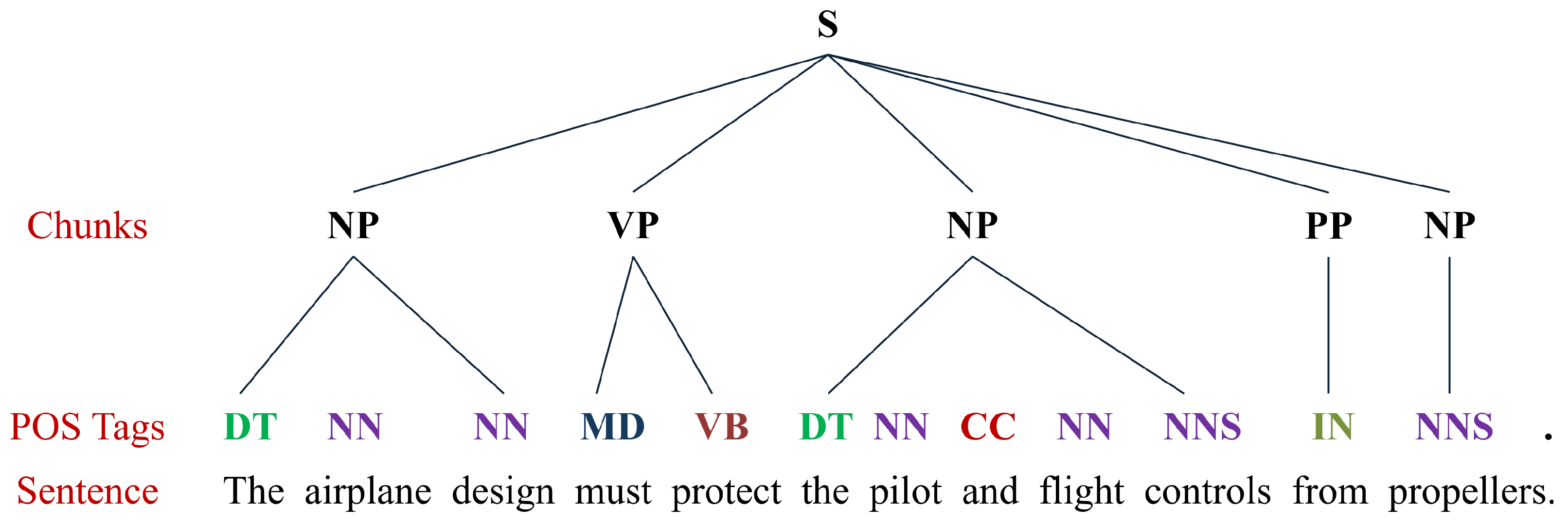

Figure 4.

An aerospace requirement along with its POS tags and sentence chunks. Each word has a POS tag associated with it, which can then be combined together to obtain a higher-level representation called sentence chunks (NP: Noun Phrase; VP: Verb Phrase; PP: Prepositional Phrase).

Figure 5.

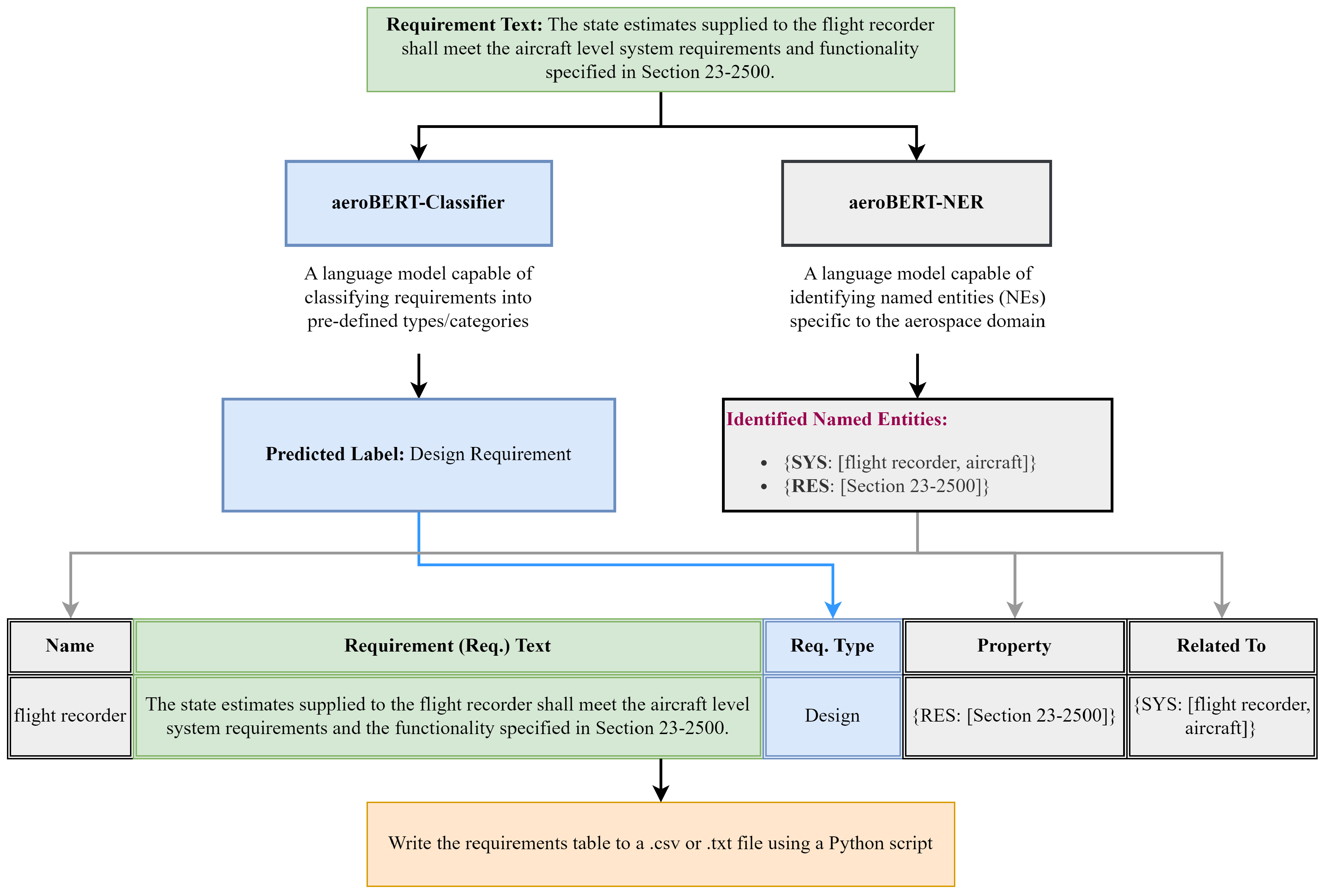

Flowchart showcasing the creation of the requirements table for the requirement “The state estimates supplied to the flight recorder shall meet the aircraft level system requirements and functionality specified in Section 23–2500” using two LMs, namely, aeroBERT-Classifier [9] and aeroBERT-NER [8], to populate various columns of the table. A zoomed-in version of the figure can be found here and more context can be found in [10].

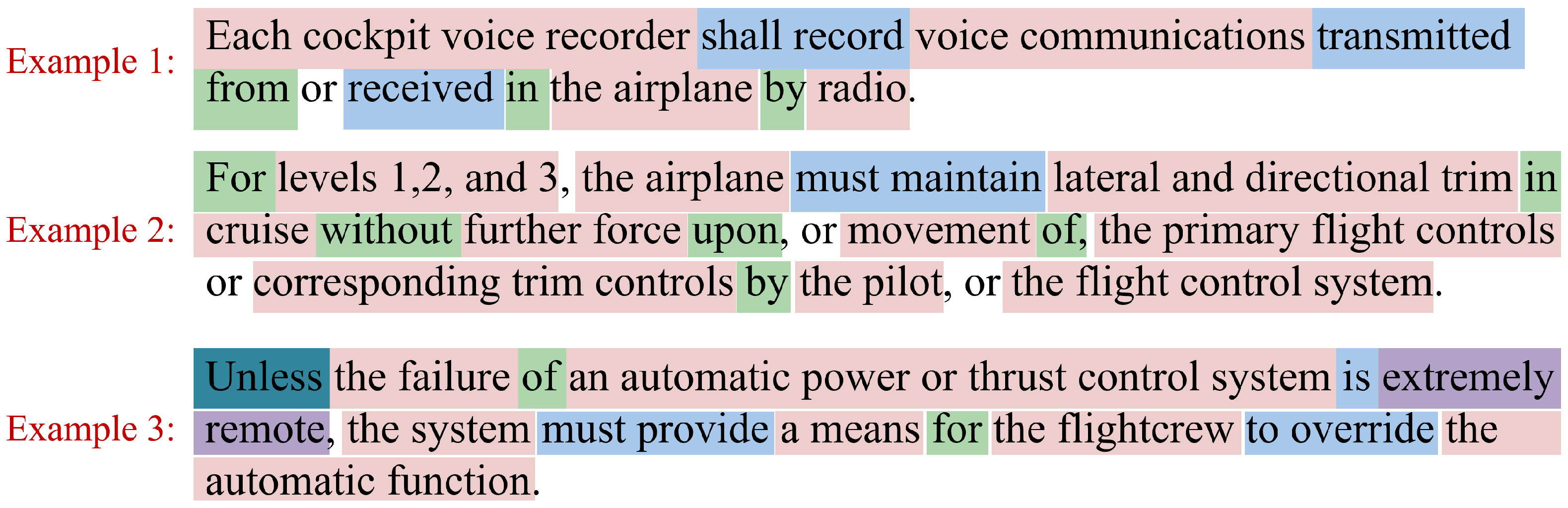

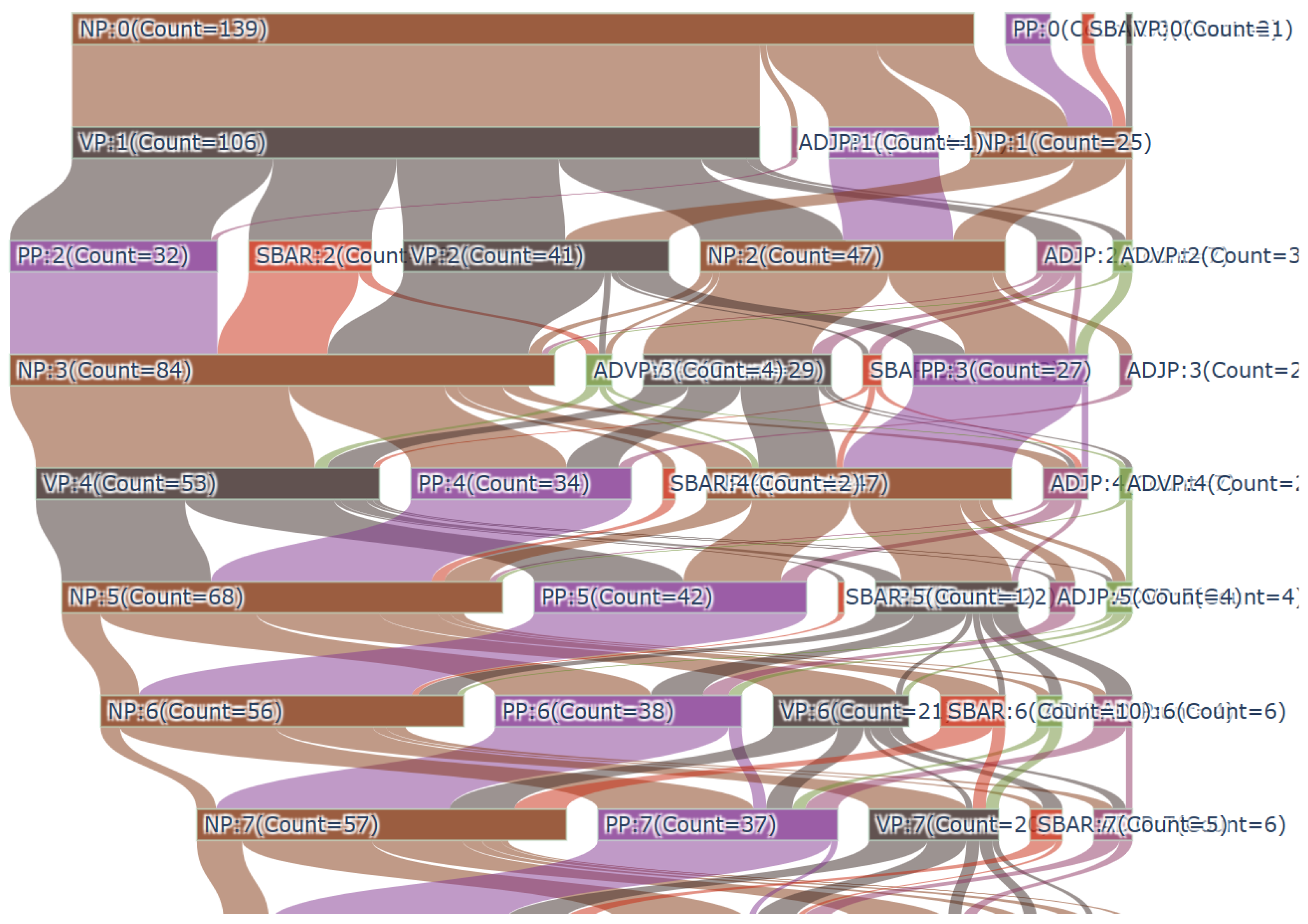

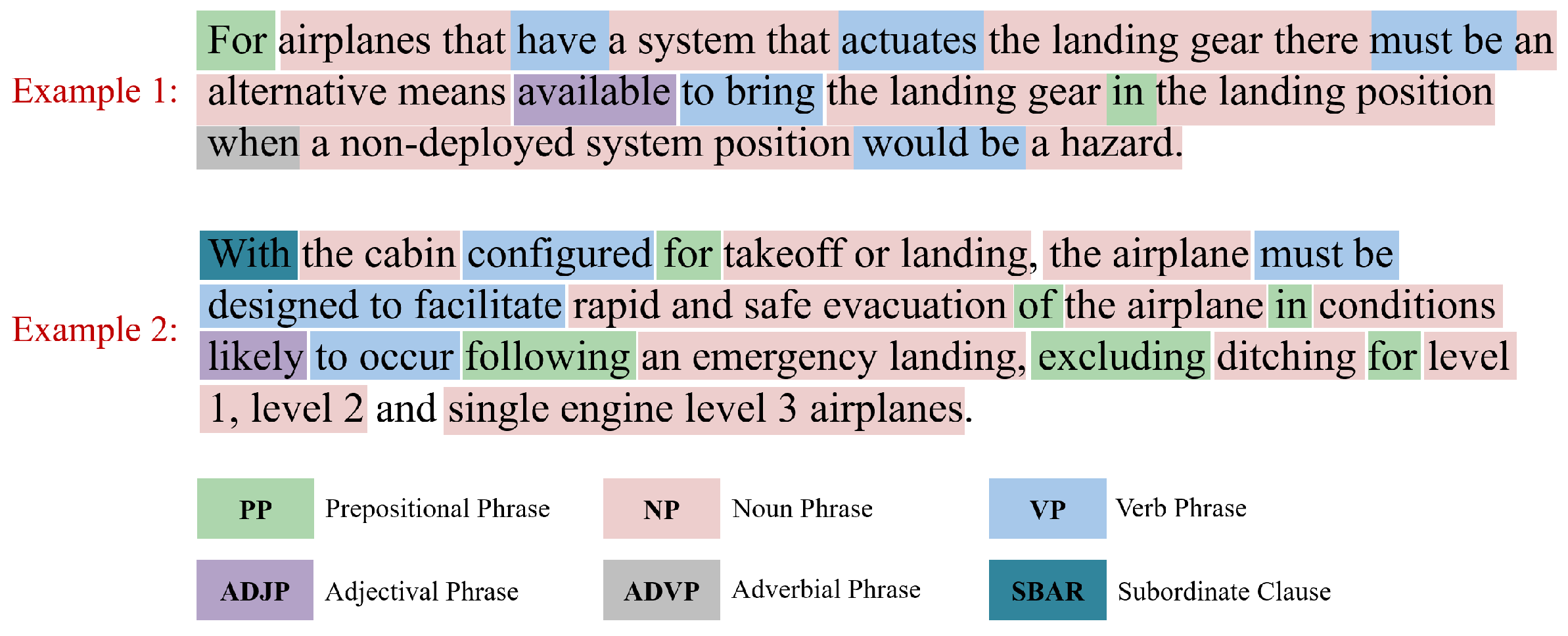

Figure 8.

Examples 1 and 2 show design requirements beginning with a prepositional phrase (PP) and a subordinate clause (SBAR), openings that are uncommon in the requirements dataset used for this work. These uncommon starting sentence chunks (PP, SBAR) are, however, followed by a noun phrase (NP) and a verb phrase (VP). Most of the design requirements start with an NP.

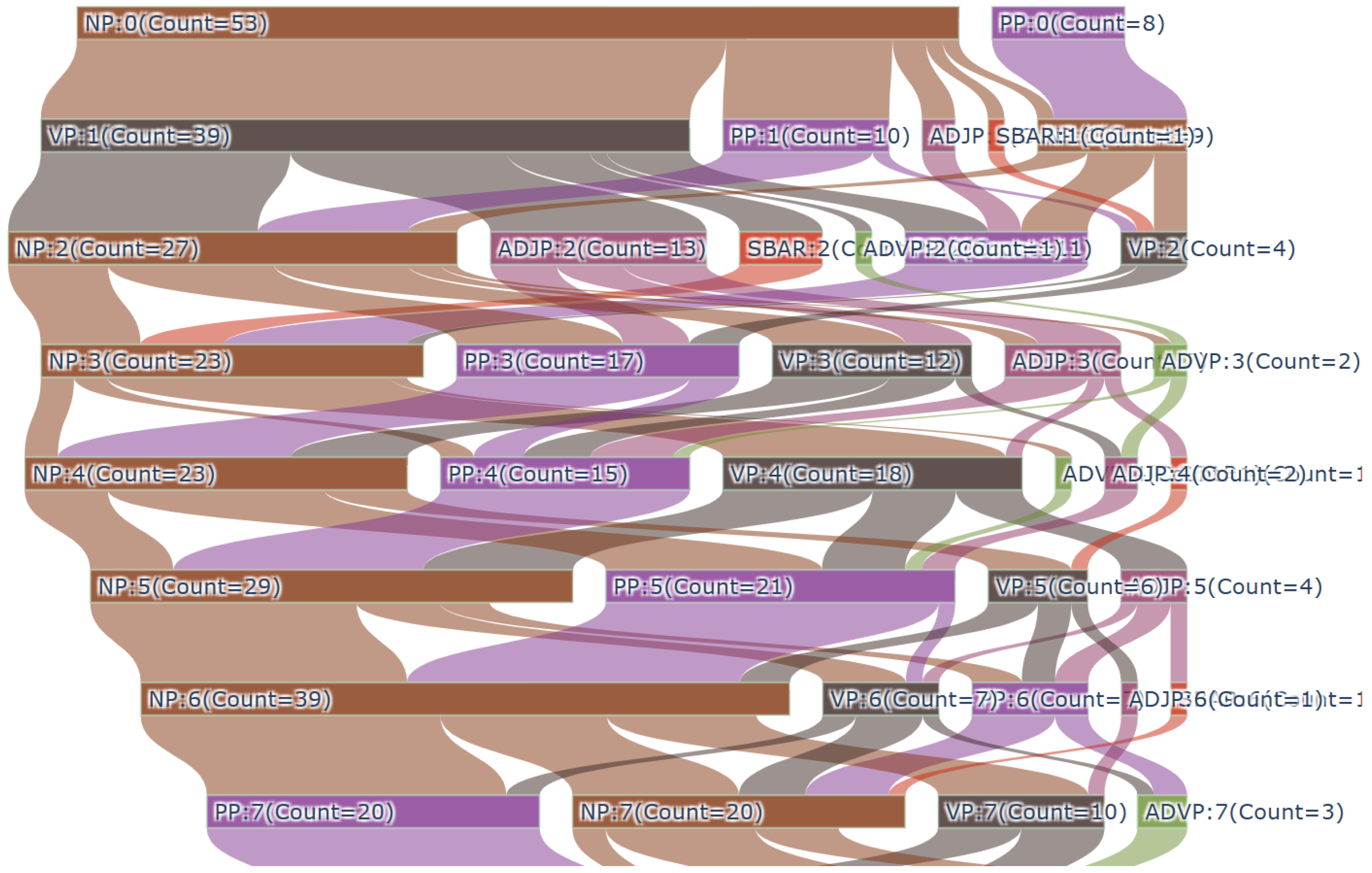

Figure 9.

Example 3 shows a design requirement starting with a verb phrase (VP). Example 4 shows the requirement starting with a noun phrase (NP), which was the most commonly observed pattern.

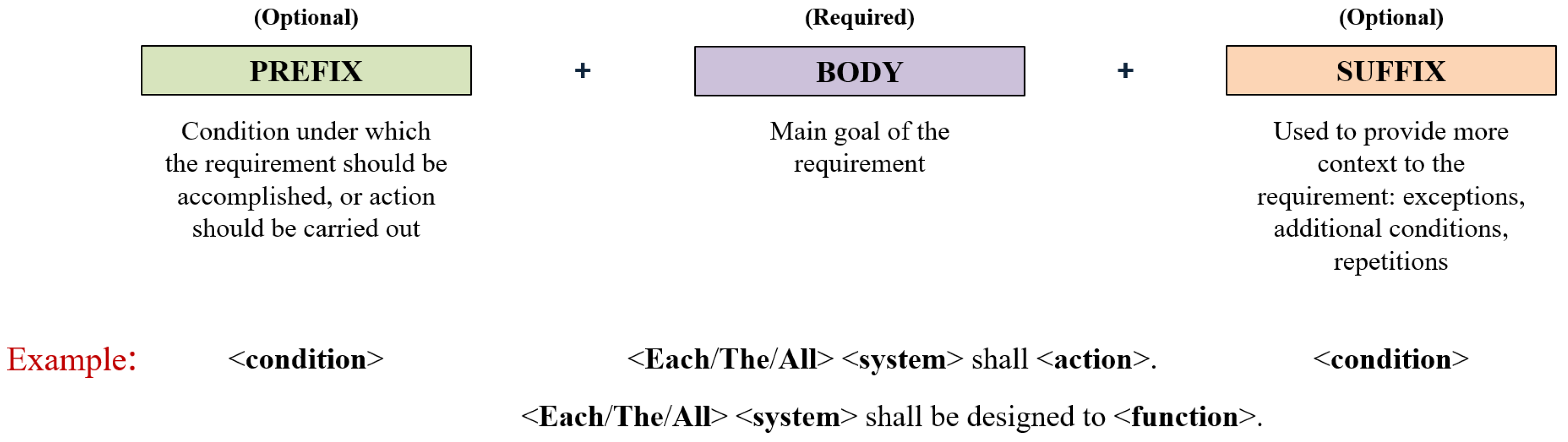

Figure 11.

The general textual pattern observed in requirements was <Prefix> + <Body> + <Suffix>, of which Prefix and Suffix are optional and can be used to provide more context about the requirement. The different variations in the requirement Body, Prefix, and Suffix are shown as well [10].

Figure 12.

The schematics of the first boilerplate for design requirements along with some examples that fit the boilerplate are shown here. This boilerplate accounts for 74 of the 149 design requirements (∼50%) used for this study and is tailored toward requirements that mandate the way a <system> should be designed and/or installed, its location, and whether it should protect another <system/sub-system> given a certain <condition> or <state>. Parts of the NL requirements shown here are matched with their corresponding boilerplate elements via the use of the same color scheme. In addition, the sentence chunks and named entity tags are displayed below and above the boilerplate structure, respectively.

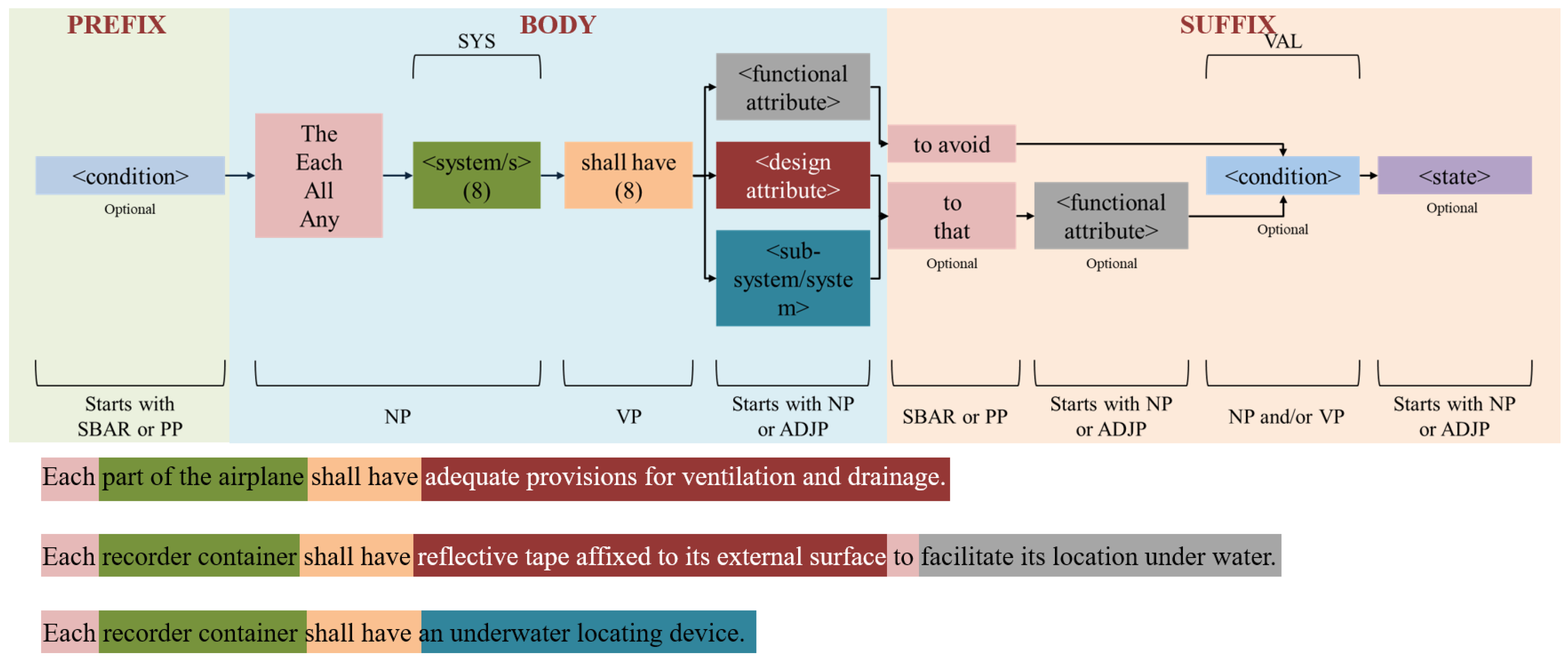

Figure 13.

The schematics of the second boilerplate for design requirements along with some examples that fit the boilerplate are shown here. This boilerplate accounts for 8 of the 149 design requirements (∼5%) used for this study and focuses on requirements that mandate a <functional attribute>, <design attribute>, or the inclusion of a <system/sub-system> by design. Two of the example requirements highlight the <design attribute> element, which emphasizes additional details regarding the system design to facilitate a certain function. The last example shows a requirement where a <sub-system> is to be included in a system by design.

Figure 14.

The schematics of the first boilerplate for functional requirements along with some examples that fit the boilerplate are shown here. This boilerplate accounts for 20 of the 100 functional requirements (20%) used for this study and is tailored toward requirements that describe the capability of a <system> to be in a certain <state> or have a certain <functional attribute>. The first example requirement focuses on the handling characteristics of the system (airplane in this case).

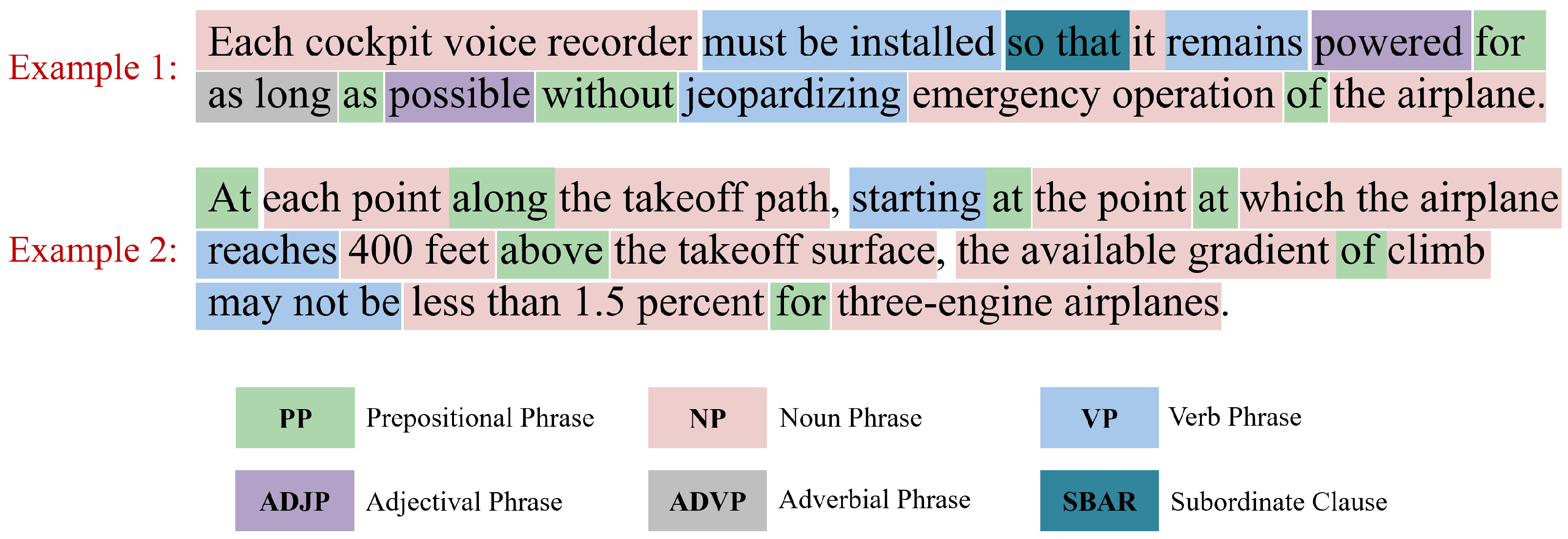

Figure 15.

The schematics of the second boilerplate for functional requirements along with some examples that fit the boilerplate are shown here. This boilerplate accounts for 15 of the 100 functional requirements (15%) used for this study and is tailored toward requirements that require the <system> to have a certain <functional attribute> or maintain a particular <state>.

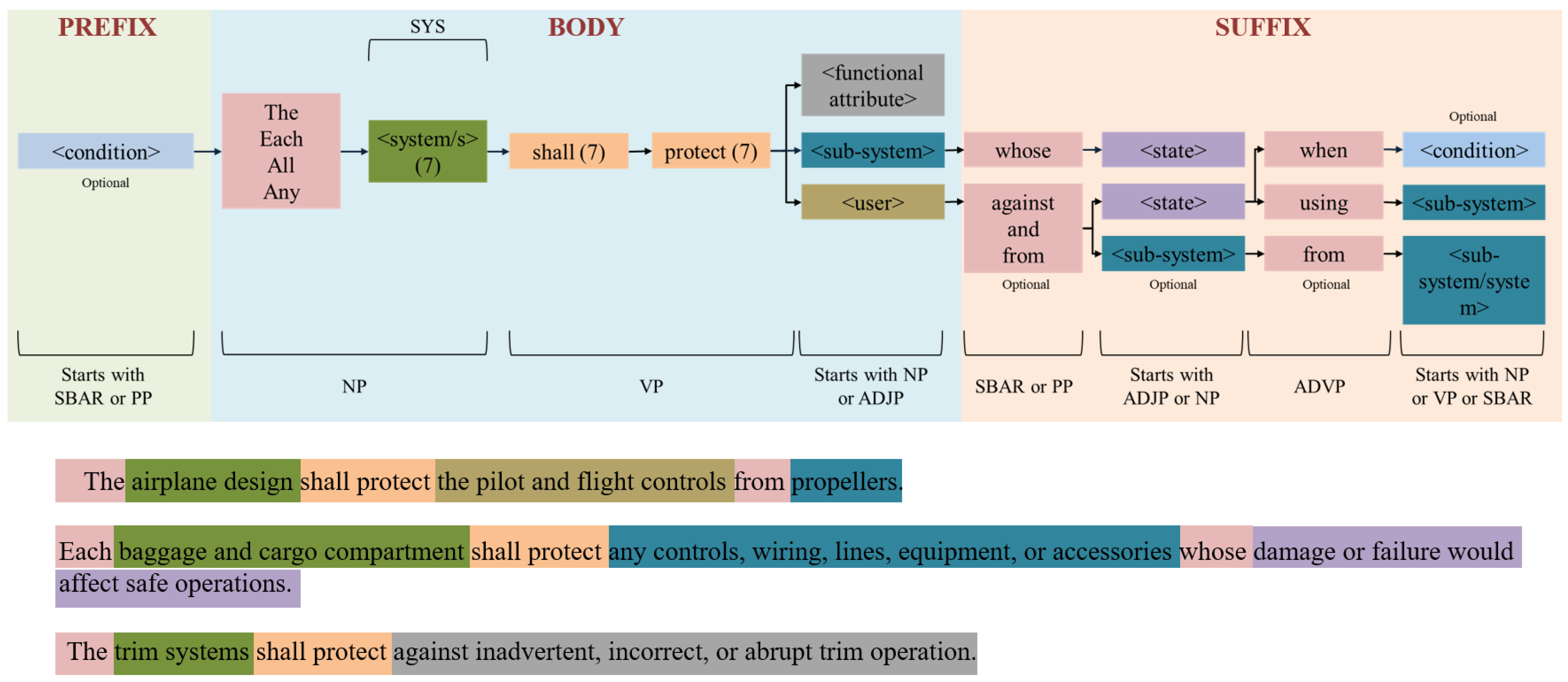

Figure 16.

The schematics of the third boilerplate for functional requirements along with some examples that fit the boilerplate are shown here. This boilerplate accounts for 7 of the 100 functional requirements (7%) used for this study and is tailored toward requirements that require the <system> to protect another <sub-system/system> or <user> against a certain <state> or another <sub-system/system>.

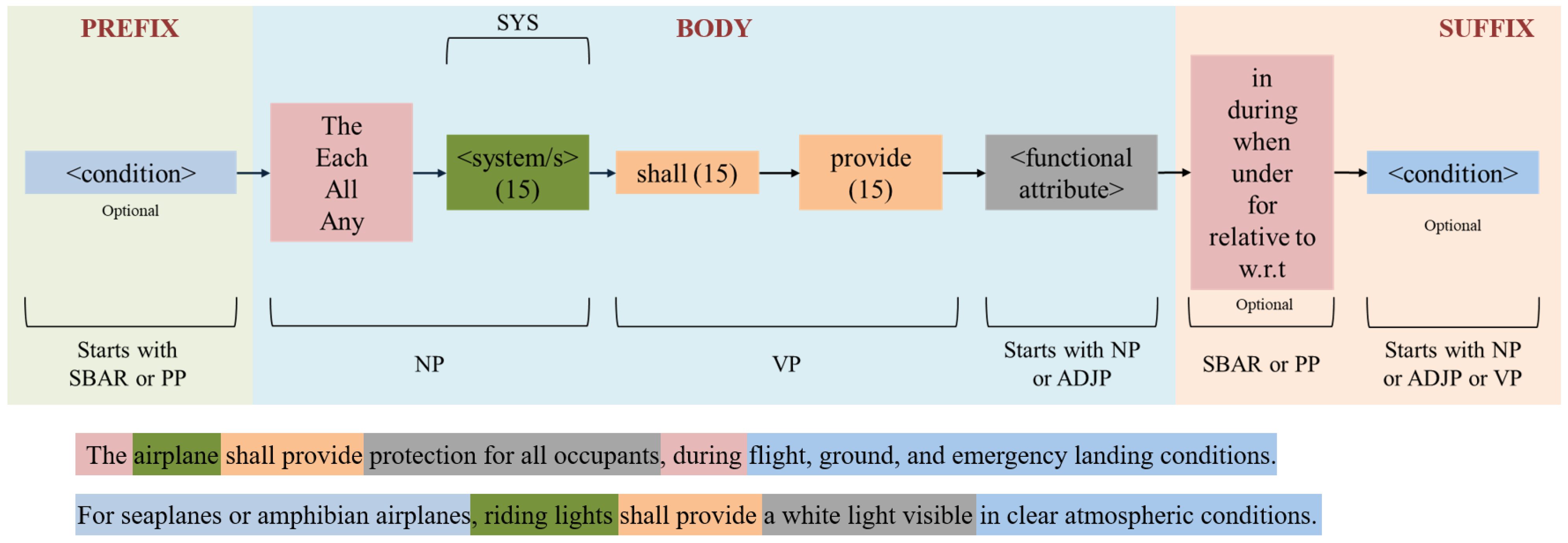

Figure 17.

The schematics of the fourth boilerplate for functional requirements along with some examples that fit the boilerplate are shown here. This boilerplate accounts for 15 of the 100 functional requirements (15%) used for this study and is tailored toward requirements that require the <system> to provide a certain <functional attribute> given a certain <condition>.

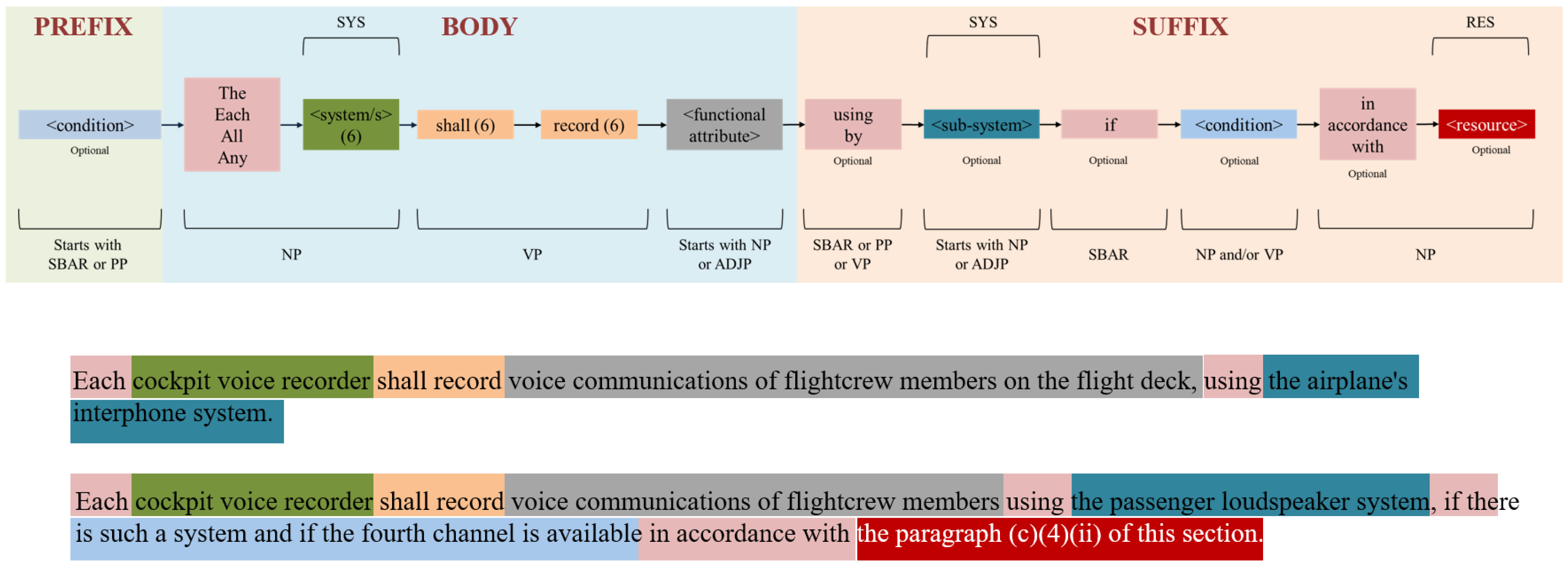

Figure 18.

The schematics of the fifth boilerplate for functional requirements along with some examples that fit the boilerplate are shown here. This boilerplate accounts for 6 of the 100 functional requirements (6%) used for this study and is specifically focused on requirements related to the cockpit voice recorder, since a total of six requirements in the entire dataset were about this particular system and its <functional attribute> given a certain <condition>.

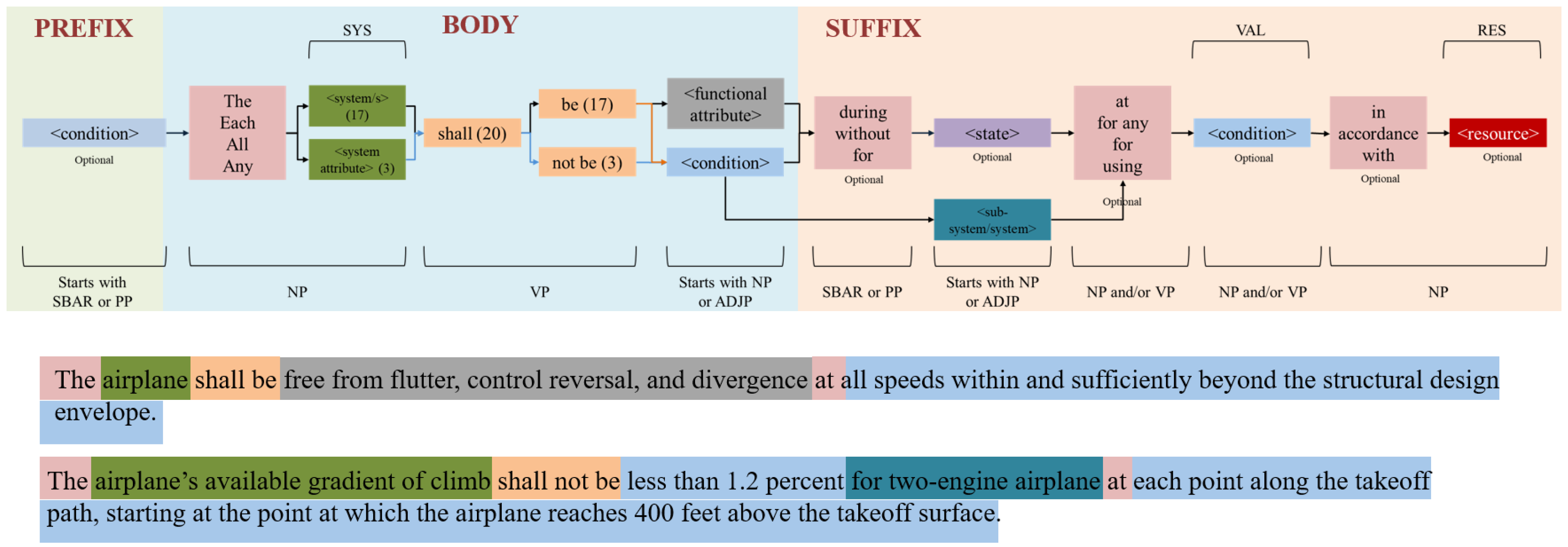

Figure 19.

The schematics of the first boilerplate for performance requirements along with some examples that fit the boilerplate are shown here. This boilerplate accounts for 20 of the 61 performance requirements (∼33%) used for this study. This particular boilerplate has the element <system attribute>, which is unique as compared to the other boilerplate structures. In addition, this boilerplate caters to the performance requirements specifying a <system> or <system attribute> to satisfy a certain <condition> or have a certain <functional attribute>.

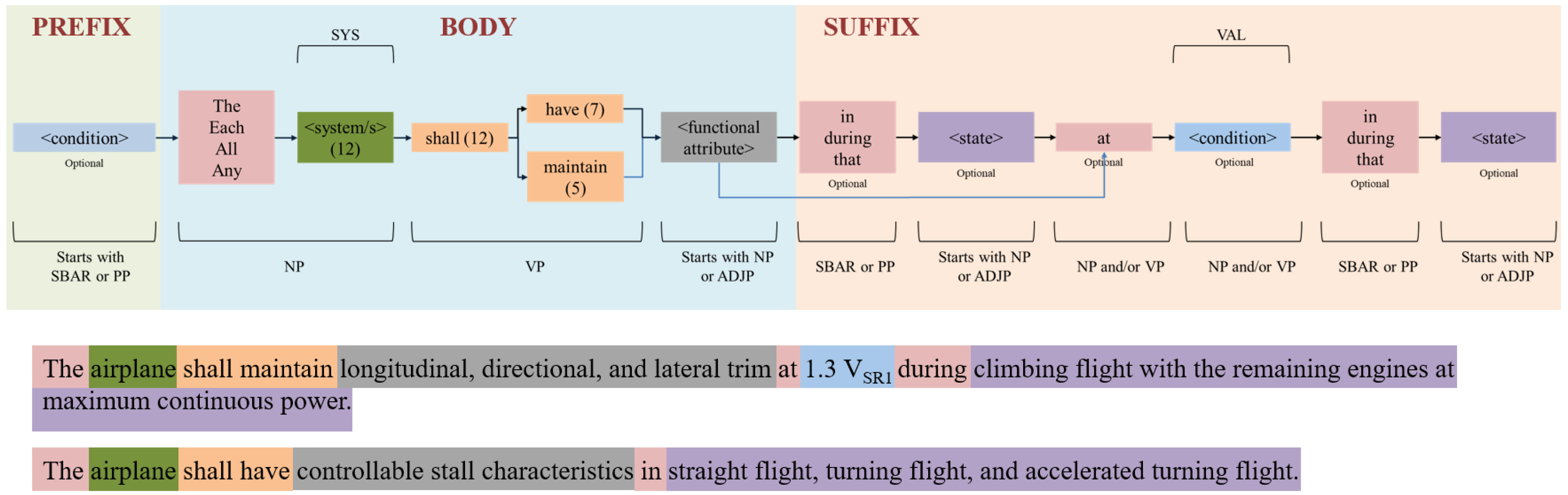

Figure 20.

The schematics of the second boilerplate for performance requirements along with some examples that fit the boilerplate are shown here. This boilerplate accounts for 12 of the 61 performance requirements (∼20%) used for this study. This boilerplate focuses on performance requirements that specify a <functional attribute> that a <system> should have or maintain given a certain <state> or <condition>.

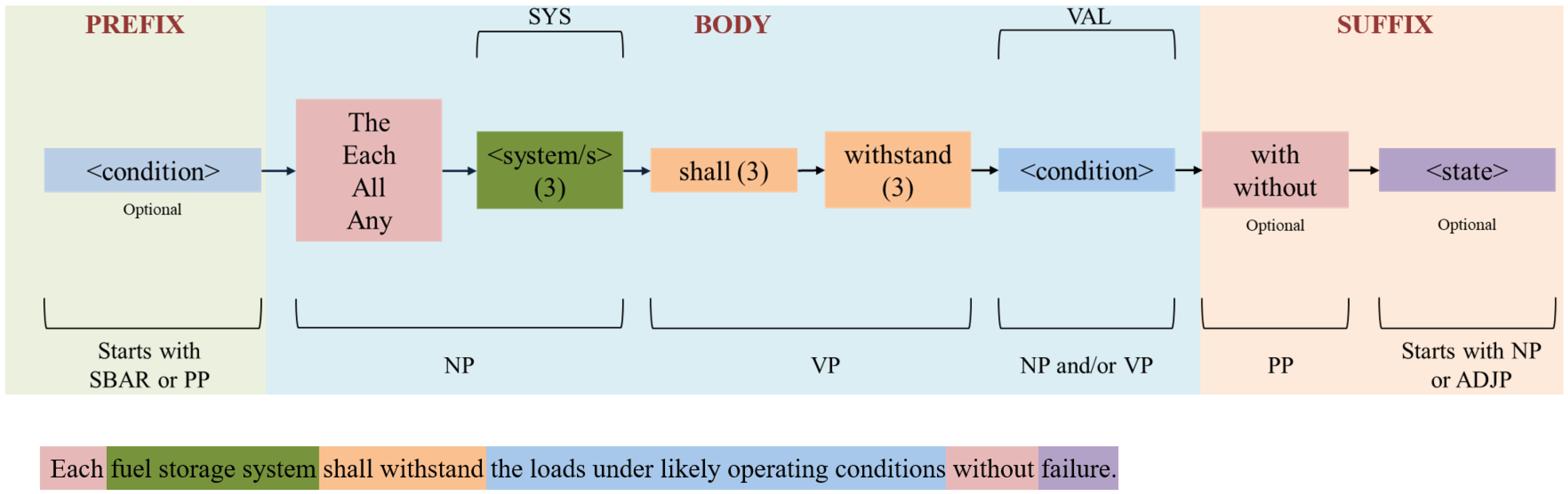

Figure 21.

The schematics of the third boilerplate for performance requirements along with some examples that fit the boilerplate are shown here. This boilerplate accounts for 3 of the 61 performance requirements (∼5%) used for this study and focuses on a <system> being able to withstand a certain <condition> with or without ending up in a certain <state>.

Table 1.

aeroBERT-NER is capable of identifying five types of named entities. The BIO tagging scheme was used for annotating the NER dataset [8].

| Category | NER Tags | Example |
|---|---|---|
| System | B-SYS, I-SYS | nozzle guide vanes, flight recorder, fuel system |
| Value | B-VAL, I-VAL | 5.6 percent, 41,000 feet, 3 s |
| Date time | B-DATETIME, I-DATETIME | 2017, 2014, 19 September 1994 |
| Organization | B-ORG, I-ORG | DOD, NASA, FAA |
| Resource | B-RES, I-RES | Section 25–341, Sections 25–173 through 25–177, Part 25 subpart C |
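
As a rough illustration of how BIO tags of this kind can be turned back into entity spans, the following sketch groups a `B-` tag with the `I-` tags of the same type that follow it. The function name `group_bio_entities` is hypothetical, and the tags in the example were written by hand for illustration; they are not output from aeroBERT-NER.

```python
from typing import List, Tuple


def group_bio_entities(tokens: List[str], tags: List[str]) -> List[Tuple[str, str]]:
    """Merge BIO-tagged tokens into (entity_type, entity_text) pairs."""
    entities: List[Tuple[str, str]] = []
    current_type = None
    current_tokens: List[str] = []

    def flush() -> None:
        # Close the span that is currently open, if any.
        if current_tokens:
            entities.append((current_type, " ".join(current_tokens)))

    for token, tag in zip(tokens, tags):
        if tag.startswith("B-"):
            # A B- tag always starts a new entity.
            flush()
            current_type, current_tokens = tag[2:], [token]
        elif tag.startswith("I-") and current_type == tag[2:]:
            # An I- tag continues the open entity of the same type.
            current_tokens.append(token)
        else:
            # "O" (or an I- tag with no matching open entity) ends the span.
            flush()
            current_type, current_tokens = None, []
    flush()
    return entities


# Hand-tagged example based on a requirement from Table 5 (illustrative tags).
tokens = ["The", "structure", "must", "be", "able", "to", "support", "ultimate",
          "loads", "without", "failure", "for", "at", "least", "3", "s", "."]
tags = ["O", "B-SYS"] + ["O"] * 12 + ["B-VAL", "I-VAL", "O"]

entities = group_bio_entities(tokens, tags)
print(entities)
```

In this sketch an `I-` tag that does not continue an open entity of the same type is simply dropped; a different recovery policy could be chosen.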

Table 2.

A subset of text chunks along with definitions and examples is shown here [11,39]. The blue text highlights the type of text chunk of interest. This is not an exhaustive list.

| Sentence Chunk | Definition and Example |
|---|---|
| Noun Phrase (NP) | Consists of a noun and other words modifying the noun (determiners, adjectives, etc.); |
| | Example: The airplane design must protect the pilot and flight controls from propellers. |
| Verb Phrase (VP) | Consists of a verb and other words modifying the verb (adverbs, auxiliary verbs, prepositional phrases, etc.); |
| | Example: The airplane design must protect the pilot and flight controls from propellers. |
| Subordinate Clause (SBAR) | Provides more context to the main clause and is usually introduced by a subordinating conjunction (because, if, after, as, etc.); |
| | Example: There must be a means to extinguish any fire in the cabin such that the pilot, while seated, can easily access the fire extinguishing means. |
| Adverbial Clause (ADVP) | Modifies the main clause in the manner of an adverb and is typically preceded by a subordinating conjunction; |
| | Example: The airplanes were grounded until the blizzard stopped. |
| Adjective Clause (ADJP) | Modifies a noun phrase and is typically preceded by a relative pronoun (that, which, why, where, when, who, etc.); |
| | Example: I can remember the time when air-taxis didn’t exist. |

Table 3.

List of language models used to populate the columns of the requirement table.

| Column Name | Description | Method Used to Populate |
|---|---|---|
| Name | System (SYS named entity) that the requirement pertains to | aeroBERT-NER [8] |
| Text | Original requirement text | Original requirement text |
| Type of Requirement | Classification of the requirement as design, functional, or performance | aeroBERT-Classifier [9] |
| Property | Identified named entities belonging to RES, VAL, DATETIME, and ORG categories present in a requirement related to a particular system (SYS) | aeroBERT-NER [8] |
| Related to | Identified system named entity (SYS) that the requirement properties are associated with | aeroBERT-NER [8] |
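
The column mapping above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper `build_row` is hypothetical, the entity list and requirement type are passed in by hand rather than produced by aeroBERT-NER or aeroBERT-Classifier, and taking the first SYS entity as the Name is an assumption made for this sketch.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Entity categories that populate the "Property" column.
PROPERTY_TYPES = ("RES", "VAL", "DATETIME", "ORG")


def build_row(text: str, requirement_type: str,
              entities: List[Tuple[str, str]]) -> Dict[str, object]:
    """Assemble one requirement-table row from a label and named entities."""
    grouped: Dict[str, List[str]] = defaultdict(list)
    for entity_type, entity_text in entities:
        grouped[entity_type].append(entity_text)

    systems = grouped.get("SYS", [])
    return {
        # Assumption: the first SYS entity names the requirement.
        "Name": systems[0] if systems else "",
        "Text": text,
        "Type of Requirement": requirement_type,
        "Property": {t: grouped[t] for t in PROPERTY_TYPES if t in grouped},
        "Related to": {"SYS": systems},
    }


# Hand-written inputs standing in for the outputs of the two language models.
text = ("Each fuel system must be arranged so that any air that is introduced "
        "into the system will not result in power interruption for more than "
        "20 s for reciprocating engines.")
entities = [("SYS", "fuel system"), ("VAL", "20 s"), ("SYS", "reciprocating engines")]

row = build_row(text, "Performance", entities)
print(row)
```

Keeping the row as a plain dictionary makes it straightforward to collect rows into a list and export them to whatever tabular format a downstream modeling tool expects.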

Table 4.

Different elements of an aerospace requirement. The blue text highlights a specific element of interest in a requirement [10].

| Requirement Element | Definition and Example |
|---|---|
| <condition> | Specifies details about the external circumstance, system configuration, or system activity currently happening for the system while it performs a certain function, etc.; |
| | Example: with the cabin configured for takeoff or landing, the airplane shall have means of egress, that can be readily located and opened from the inside and outside. |
| <system> | Name of the system that the requirement is about; |
| | Example: all pressurized airplanes shall be capable of continued safe flight and landing following a sudden release of cabin pressure. |
| <functional attribute> | The function to be performed by a system or a description of a function that can be performed under some unstated circumstance; |
| | Example: the insulation on electrical wire and electrical cable shall be self-extinguishing. |
| <state> | Describes the physical configuration of a system while performing a certain function; |
| | Example: the airplane shall maintain longitudinal trim without further force upon, or movement of, the primary flight controls. |
| <design attribute> | Provides additional details regarding a system’s design; |
| | Example: each recorder container shall have reflective tape affixed to its external surface to facilitate its location under water. |
| <sub-system/system> | Specifies any additional system/sub-system that the main system shall include, or shall protect in case of a certain operational condition, etc.; |
| | Example: each recorder container shall have an underwater locating device. |
| <resource> | Specifies any resource (such as another part of the Title 14 CFRs, a certain paragraph in the same part, etc.) that the system shall be compliant with; |
| | Example: the control system shall be designed for pilot forces applied in the same direction, using individual pilot forces not less than 0.75 times those obtained in accordance with Section 25-395. |
| <context> | Provides additional details about the requirement; |
| | Example: with the cabin configured for takeoff or landing, the airplane shall have means of egress that can be readily located and opened from the inside and outside. |
| <user> | Specifies the user of a system, usually a pilot in the case of flight controls, passengers in the case of emergency exits in the cabin, etc.; |
| | Example: the airplane design shall protect the pilot and flight controls from propellers. |
| <system attribute> | Some requirements do not start with a system name but rather with a certain characteristic of a said system; |
| | Example: the airplane’s available gradient of climb shall not be less than 1.2 percent for a two-engine airplane at each point along the takeoff path, starting from the point at which the airplane reaches 400 feet above the takeoff surface. |

Table 5.

Requirement table containing columns extracted from NL requirements using language models. This table can be used to aid the creation of a SysML requirementTable [10].

| Serial No. | Name | Text | Type of Requirement | Property | Related to |
|---|---|---|---|---|---|
| 1 | nozzle guide vanes | All nozzle guide vanes should be weld-repairable without a requirement for strip coating. | Design | | {SYS: [nozzle guide vanes]} |
| 2 | flight recorder | The state estimates supplied to the flight recorder shall meet the aircraft-level system requirements and the functionality specified in Section 23–2500. | Design | {RES: [Section 23–2500]} | {SYS: [flight recorder, aircraft]} |
| 3 | pressurized airplanes | Pressurized airplanes with a maximum operating altitude greater than 41,000 feet must be capable of detecting damage to the pressurized cabin structure before the damage could result in rapid decompression that would result in serious or fatal injuries. | Functional | {VAL: [greater than, 41,000 feet]} | {SYS: [pressurized airplanes, pressurized cabin structure]} |
| 4 | fuel system | Each fuel system must be arranged so that any air that is introduced into the system will not result in power interruption for more than 20 s for reciprocating engines. | Performance | {VAL: [20 s]} | {SYS: [fuel system, reciprocating engines]} |
| 5 | structure | The structure must be able to support ultimate loads without failure for at least 3 s. | Performance | {VAL: [3 s]} | {SYS: [structure]} |

Table 6.

Results of a coverage analysis performed on the proposed boilerplate templates. The analysis revealed that 67 design, 37 functional, and 26 performance requirements did not align with the boilerplate templates developed during this study.

| Requirement Type | Boilerplate Number | Number of Requirements That Fit Boilerplate | Number of Requirements That Do Not Fit Any Boilerplate |
|---|---|---|---|
| Design (149) | 1 | 74 (∼50%) | 67 (∼45%) |
| | 2 | 8 (∼5%) | |
| Functional (100) | 1 | 20 (20%) | 37 (37%) |
| | 2 | 15 (15%) | |
| | 3 | 7 (7%) | |
| | 4 | 15 (15%) | |
| | 5 | 6 (6%) | |
| Performance (61) | 1 | 20 (∼33%) | 26 (∼42%) |
| | 2 | 12 (∼20%) | |
| | 3 | 3 (∼5%) | |
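
The arithmetic behind the coverage analysis can be checked with a short script. The sketch below uses only the counts reported in the table; the function name `coverage_summary` and the dictionary layout are hypothetical choices made for illustration.

```python
from typing import Dict, List

# Total requirements per type and per-boilerplate fit counts, as reported above.
totals: Dict[str, int] = {"Design": 149, "Functional": 100, "Performance": 61}
fits: Dict[str, List[int]] = {
    "Design": [74, 8],
    "Functional": [20, 15, 7, 15, 6],
    "Performance": [20, 12, 3],
}


def coverage_summary(totals: Dict[str, int],
                     fits: Dict[str, List[int]]) -> Dict[str, Dict[str, float]]:
    """Compute covered and uncovered requirement counts for each type."""
    summary: Dict[str, Dict[str, float]] = {}
    for requirement_type, total in totals.items():
        covered = sum(fits[requirement_type])
        summary[requirement_type] = {
            "covered": covered,
            "uncovered": total - covered,
            "covered_pct": 100 * covered / total,
        }
    return summary


summary = coverage_summary(totals, fits)
for requirement_type, stats in summary.items():
    print(requirement_type, stats["covered"], stats["uncovered"],
          f"{stats['covered_pct']:.1f}%")
```

The uncovered counts computed this way (the total minus the sum of fitted requirements) agree with the 67 design, 37 functional, and 26 performance requirements listed in the last column.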