Fluid Ability (Gf) and Complex Problem Solving (CPS)

Abstract

1. Introduction

- Under a narrow definition of CPS, in which tasks are classified as CPS tasks by their common features and by the correlations among performances on them (i.e., reflective measures [26]), fluid ability can be viewed as the more general construct, and complex problem solving (CPS) as a task type or lower-order construct that can largely be accounted for by fluid ability. As with other lower-order constructs, such as quantitative, deductive, or inductive reasoning, this relationship does not preclude CPS from having unique features, such as a dynamic character and time sensitivity, in addition to features that overlap with other fluid ability factors, such as requiring inductive or deductive reasoning. Note that in the differential psychology literature, abilities are typically defined at three orders (or strata) of generality [10,27]: at the top (third) order, a general factor influences performance on any cognitive task [28]; at the second order are broad group factors, such as fluid, crystallized, and spatial ability; and at the first order are narrower factors pertaining to types of cognitive processing, such as deductive or inductive reasoning (the g-VPR model [29] is also based on a hierarchical arrangement of factors varying in generality). It is here that we would place a narrowly defined CPS: at the first order, within the span of fluid ability tasks, alongside inductive reasoning tasks (such as progressive matrices) and deductive reasoning tasks (such as three-term series tasks).

- Under a broader definition of CPS, one that classifies a task as a CPS task because it meets a set of criteria, whether or not the resulting tasks are correlated with one another, the CPS construct may be characterized differently, for example as a formative latent variable construct, that is, one defined by formative or cause indicators [26]. Under this view, fluid ability can be seen as an important and strong predictor of success on CPS tasks, with the strength of the relation varying from one CPS task to another.
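The difference between the two definitions can be illustrated with a small simulation. This is a sketch under assumed parameters, not a model of any real CPS data: in the reflective case, three hypothetical task scores are each caused by one latent ability plus noise, so they correlate with one another; in the formative case, three independent hypothetical indicators are summed into a composite that they define. The noise level, sample size, and function names are illustrative choices.

```python
import random
from statistics import mean, pstdev


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = mean((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / (pstdev(x) * pstdev(y))


def simulate(n=20000, noise_sd=0.6, seed=1):
    """Generate hypothetical reflective and formative indicator sets."""
    rng = random.Random(seed)
    # Reflective: one latent ability causes every observed task score.
    latent = [rng.gauss(0, 1) for _ in range(n)]
    reflective = [[f + rng.gauss(0, noise_sd) for f in latent] for _ in range(3)]
    # Formative: independent indicators jointly define (cause) the composite.
    formative = [[rng.gauss(0, 1) for _ in range(n)] for _ in range(3)]
    composite = [sum(vals) for vals in zip(*formative)]
    return reflective, formative, composite


reflective, formative, composite = simulate()
print("reflective r(task1, task2):", round(pearson(reflective[0], reflective[1]), 2))
print("formative r(ind1, ind2):", round(pearson(formative[0], formative[1]), 2))
print("formative r(ind1, composite):", round(pearson(formative[0], composite), 2))
```

With these settings the reflective inter-task correlation should come out near 1/(1 + 0.6²) ≈ 0.74, the correlation between formative indicators near zero, and each formative indicator's correlation with the composite near 1/√3 ≈ 0.58, up to sampling error. Under a formative definition, then, tasks can belong to the same construct without correlating with one another.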

1.1. Complex Problem Solving (CPS)

1.1.1. General Tradition

1.1.2. German Tradition

- there are many variables;

- which are interconnected;

- there is a dynamic quality in that the variables change as the test taker interacts with the system;

- the structure and dynamics of the variables are not disclosed, so the test taker must discover them; and

- the goals of interacting with the system must be discovered.
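One common way to build tasks with these features is a linear dynamic system in which hidden coefficients link the inputs a test taker controls to outputs that evolve over time. The sketch below assumes that design; the two-by-two coefficient matrices and the names `step` and `discover` are hypothetical. It also shows how the undisclosed structure can be recovered by changing one variable at a time from a known starting state.

```python
# Hypothetical hidden coefficients; update rule: outputs_next = A*outputs + B*inputs.
HIDDEN_A = [[1.0, 0.0],   # output 1 keeps its value and is unaffected by output 2
            [0.2, 0.9]]   # output 2 gains 0.2 x output 1 and retains 90% of itself
HIDDEN_B = [[2.0, 0.0],   # each unit of input 1 raises output 1 by 2
            [0.0, -1.0]]  # each unit of input 2 lowers output 2 by 1


def step(outputs, inputs, A=HIDDEN_A, B=HIDDEN_B):
    """Advance the system by one time step."""
    n = len(outputs)
    return [
        sum(A[i][j] * outputs[j] for j in range(n))
        + sum(B[i][k] * inputs[k] for k in range(len(inputs)))
        for i in range(n)
    ]


def discover(n_outputs, n_inputs, system_step):
    """Recover A and B using only calls to `system_step`, one variable at a time."""
    zero_in = [0.0] * n_inputs
    zero_out = [0.0] * n_outputs
    # From rest, setting only input k to 1 yields column k of B.
    b_cols = [system_step(zero_out, [1.0 if m == k else 0.0 for m in range(n_inputs)])
              for k in range(n_inputs)]
    # With no input, setting only output j to 1 yields column j of A.
    a_cols = [system_step([1.0 if m == j else 0.0 for m in range(n_outputs)], zero_in)
              for j in range(n_outputs)]
    # Transpose the collected columns into row-major matrices.
    A = [[a_cols[j][i] for j in range(n_outputs)] for i in range(n_outputs)]
    B = [[b_cols[k][i] for k in range(n_inputs)] for i in range(n_outputs)]
    return A, B


A_est, B_est = discover(2, 2, step)
print(A_est == HIDDEN_A, B_est == HIDDEN_B)
```

Real tasks are typically harder than this sketch: exploration is limited, and a separate phase usually asks the test taker to steer the outputs toward target values. The code does not model that phase or the discovery of goals.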

1.2. Fluid Ability (Gf)

1.3. Formative vs. Reflective Latent Variable Constructs

1.4. Conceptual Overlap between CPS and Fluid Ability

1.5. Relationship between CPS and Fluid Ability in the World of Work

- (a)

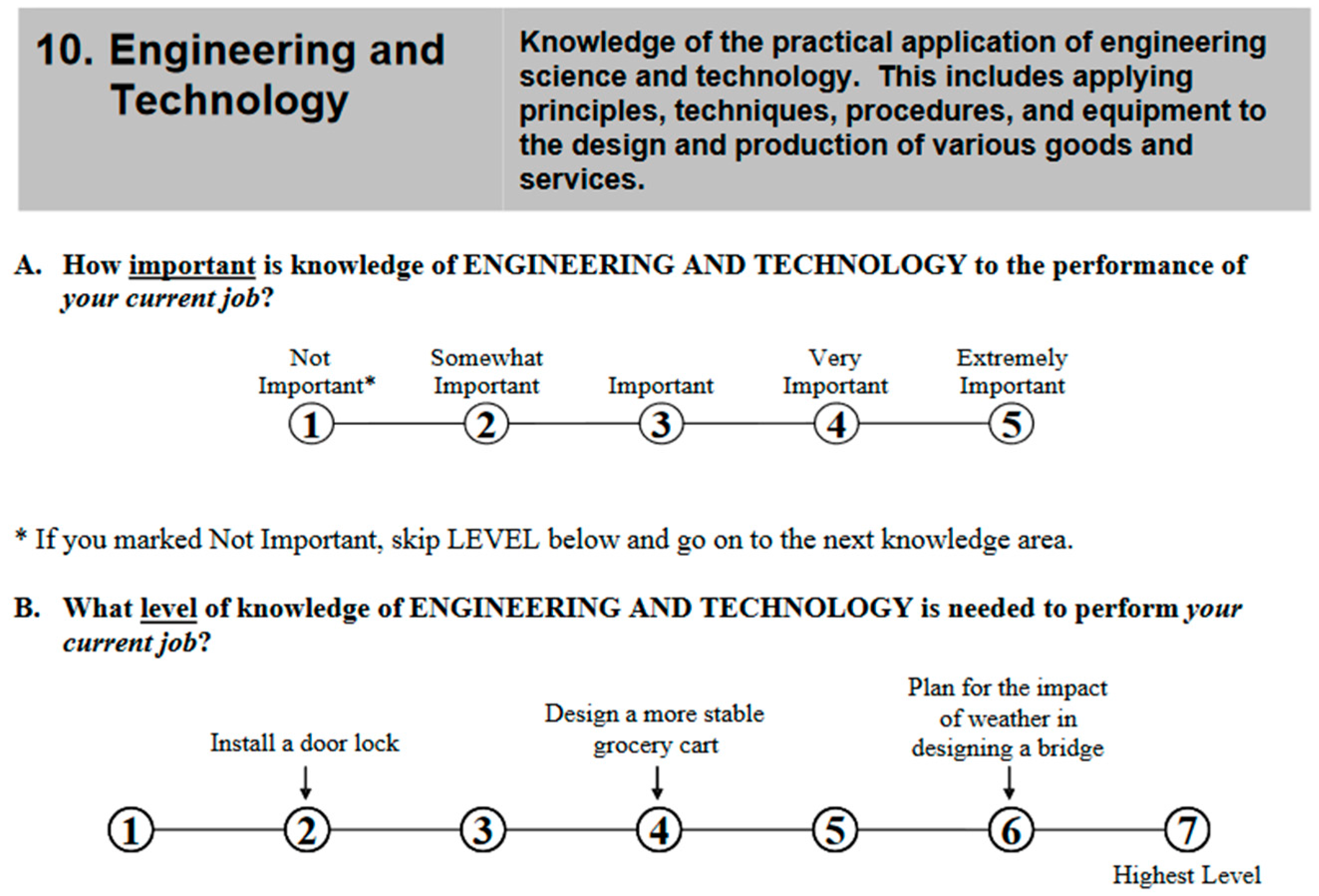

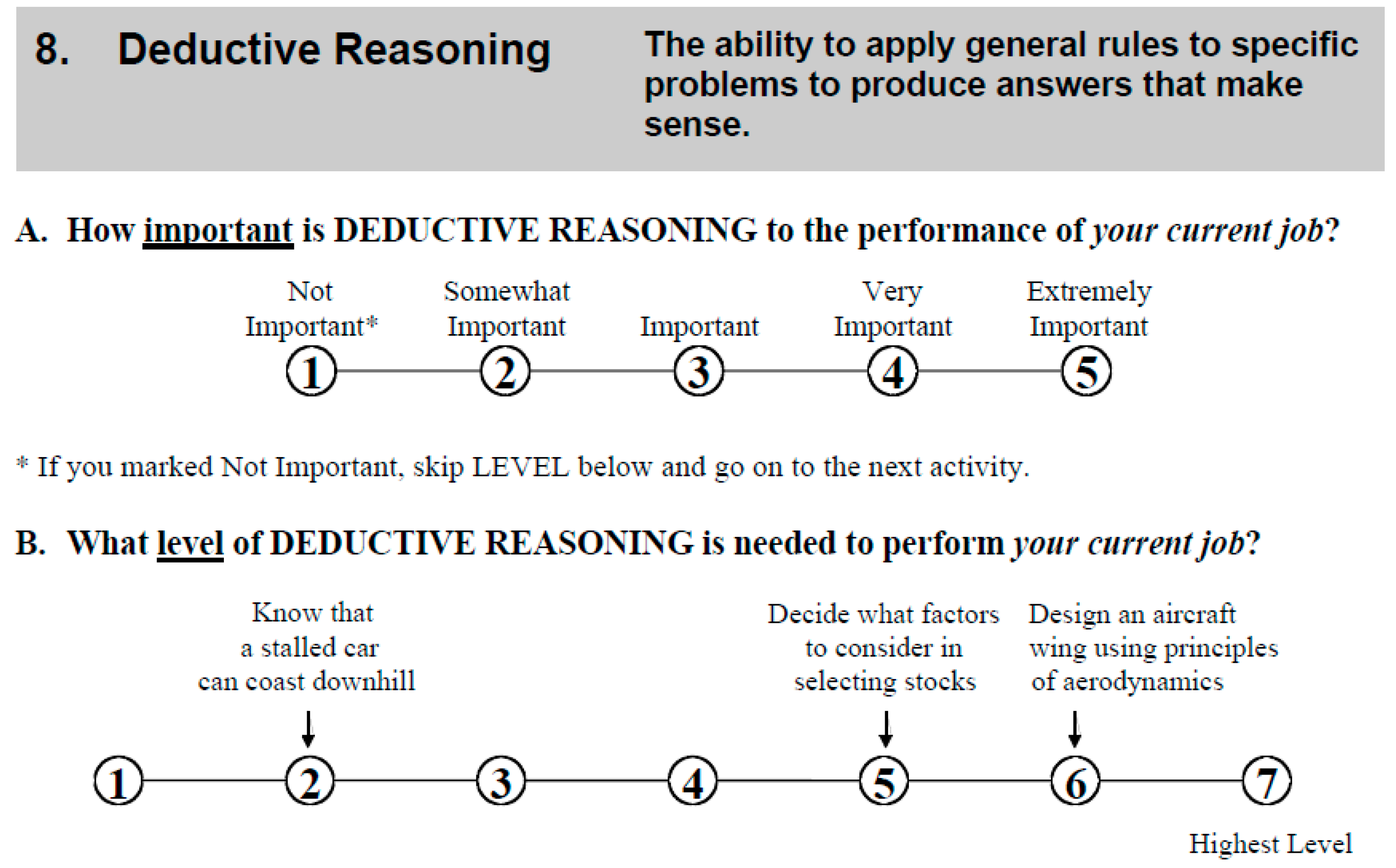

- the 23 cognitive abilities in the worker characteristics domain (items 1 to 23 on the O*NET Abilities Questionnaire) (see Table 1);

- (b)

- the 33 knowledge areas in the worker requirements domain (items 1 to 33 on the O*NET Knowledge Questionnaire); and

- (c)

- a single Complex Problem Solving (CPS) rating in the cross-functional skills set within the worker requirements domain (item 17 on the O*NET Skills Questionnaire).3

2. Materials and Methods

3. Results

3.1. Correlations among Skills

3.2. Overall Regression Analysis of Occupation Median Wages

3.3. Within Job Zone Regression Analyses of Log Median Wages

4. Discussion

Acknowledgments

Author Contributions

Conflicts of Interest

Appendix A


References

- Frensch, P.A.; Funke, J. Complex Problem Solving: The European Perspective; Routledge: Abingdon, UK, 1995.

- Sternberg, R.J.; Frensch, P.A. Complex Problem Solving: Principles and Mechanisms; Routledge: Abingdon, UK, 1991.

- Organisation for Economic Co-Operation and Development (OECD). Field Operations in PISA 2003; OECD Publishing: Paris, France, 2005.

- Organisation for Economic Co-Operation and Development (OECD). Assessing problem-solving skills in PISA 2012. In PISA 2012 Results: Creative Problem Solving; OECD Publishing: Paris, France, 2014; Volume V, pp. 25–46.

- Organisation for Economic Co-Operation and Development (OECD). PISA 2015 Results in Focus; 2226–0919; Organisation for Economic Co-Operation and Development (OECD): Paris, France, 2016.

- National Center for O*NET Development. O*NET Online. Available online: https://www.onetonline.org/ (accessed on 10 July 2017).

- Casner-Lotto, J.; Barrington, L. Are They Really Ready to Work? Employers' Perspectives on the Basic Knowledge and Applied Skills of New Entrants to the 21st Century U.S. Workforce; The Conference Board, Partnership for 21st Century Skills, Corporate Voices for Working Families, Society for Human Resources Management: Washington, DC, USA, 2006.

- Quesada, J.; Kintsch, W.; Gomez, E. Complex problem-solving: A field in search of a definition? Theor. Issues Ergon. Sci. 2005, 6, 5–33.

- Valentin Kvist, A.; Gustafsson, J.-E. The relation between fluid intelligence and the general factor as a function of cultural background: A test of Cattell's investment theory. Intelligence 2008, 36, 422–436.

- Carroll, J.B. Human Cognitive Abilities: A Survey of Factor Analytic Studies; Cambridge University Press: Cambridge, UK, 1993.

- Greiff, S.; Stadler, M.; Sonnleitner, P.; Wolff, C.; Martin, R. Sometimes less is more: Comparing the validity of complex problem solving measures. Intelligence 2015, 50, 100–113.

- Kretzschmar, A.; Neubert, J.C.; Wüstenberg, S.; Greiff, S. Construct validity of complex problem solving: A comprehensive view on different facets of intelligence and school grades. Intelligence 2016, 54, 55–69.

- Kröner, S.; Plass, J.; Leutner, D. Intelligence assessment with computer simulations. Intelligence 2005, 33, 347–368.

- Lotz, C.; Sparfeldt, J.R.; Greiff, S. Complex problem solving in educational contexts—Still something beyond a “good g”? Intelligence 2016, 59, 127–138.

- Stadler, M.; Becker, N.; Gödker, M.; Leutner, D.; Greiff, S. Complex problem solving and intelligence: A meta-analysis. Intelligence 2015, 53, 92–101.

- Wüstenberg, S.; Greiff, S.; Funke, J. Complex problem solving—More than reasoning? Intelligence 2012, 40, 1–14.

- Wüstenberg, S.; Greiff, S.; Vainikainen, M.-P.; Murphy, K. Individual differences in students’ complex problem solving skills: How they evolve and what they imply. J. Educ. Psychol. 2016, 108, 1028–1044.

- Kyllonen, P.C. Is working memory capacity Spearman’s g? In Human Abilities: Their Nature and Measurement; Dennis, I., Tapsfield, P., Eds.; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 1996; pp. 49–76.

- Kyllonen, P.C.; Christal, R.E. Reasoning ability is (little more than) working-memory capacity?! Intelligence 1990, 14, 389–433.

- Ceci, S.J. How much does schooling influence general intelligence and its cognitive components? A reassessment of the evidence. Dev. Psychol. 1991, 27, 703–722.

- Cliffordson, C.; Gustafsson, J.-E. Effects of age and schooling on intellectual performance: Estimates obtained from analysis of continuous variation in age and length of schooling. Intelligence 2008, 36, 143–152.

- Flynn, J.R. Massive IQ gains in 14 nations: What IQ tests really measure. Psychol. Bull. 1987, 101, 171–191.

- Flynn, J.R. What Is Intelligence?: Beyond the Flynn Effect; Cambridge University Press: Cambridge, UK, 2007.

- Trahan, L.H.; Stuebing, K.K.; Fletcher, J.M.; Hiscock, M. The Flynn effect: A meta-analysis. Psychol. Bull. 2014, 140, 1332–1360.

- Organisation for Economic Co-Operation and Development (OECD). Skills Matter: Further Results from the Survey of Adult Skills; OECD Publishing: Paris, France, 2016.

- Edwards, J.R.; Bagozzi, R.P. On the nature and direction of relationships between constructs and measures. Psychol. Methods 2000, 5, 155–174.

- McGrew, K.S. CHC theory and the human cognitive abilities project: Standing on the shoulders of the giants of psychometric intelligence research. Intelligence 2009, 37, 1–10.

- Spearman, C. The Abilities of Man: Their Nature and Measurement; Macmillan and Co., Ltd.: London, UK, 1927.

- Johnson, W.; Bouchard, T.J. The structure of human intelligence: It is verbal, perceptual, and image rotation (VPR), not fluid and crystallized. Intelligence 2005, 33, 393–416.

- Devinney, T.; Coltman, T.; Midgley, D.F.; Venaik, S. Formative versus reflective measurement models: Two applications of formative measurement. J. Bus. Res. 2008, 61, 1250–1262.

- Jarvis, C.B.; MacKenzie, S.B.; Podsakoff, P.M. A critical review of construct indicators and measurement model misspecification in marketing and consumer research. J. Consum. Res. 2003, 30, 199–218.

- Rindermann, H. The g-factor of international cognitive ability comparisons: The homogeneity of results in PISA, TIMSS, PIRLS and IQ-tests across nations. Eur. J. Personal. 2007, 21, 667–706.

- Gustafsson, J.-E. A unifying model for the structure of intellectual abilities. Intelligence 1984, 8, 179–203.

- Gustafsson, J.-E.; Balke, G. General and specific abilities as predictors of school achievement. Multivar. Behav. Res. 1993, 28, 407–434.

- Süß, H.-M.; Beauducel, A. Modeling the construct validity of the Berlin intelligence structure model. Estud. Psicol. (Camp.) 2015, 32, 13–25.

- National Research Council. A Database for a Changing Economy: Review of the Occupational Information Network (O*NET); National Academies Press: Washington, DC, USA, 2010.

- Culpepper, J.C. Merriam-Webster online: The language center. Electr. Resour. Rev. 2000, 4, 9–11.

- Dörner, D.; Kreuzig, H.W.; Reither, F.; Stäudel, T. Lohhausen: Vom Umgang Mit Unbestimmtheit und Komplexität [Dealing with Uncertainty and Complexity]; Huber: Bern, Switzerland, 1983.

- Funke, J. Dynamic systems as tools for analysing human judgement. Think. Reason. 2001, 7, 69–89.

- Organisation for Economic Co-Operation and Development (OECD). Problem-solving framework. In PISA; OECD Publishing: Paris, France, 2013; pp. 119–137.

- National Association of Colleges and Employers. Employers Seek Teamwork, Problem-Solving Skills on Resumes; National Association of Colleges and Employers: Bethlehem, PA, USA, 2017.

- Federal Institute for Vocational Education and Training (BIBB). Employment Survey. 2012. Available online: https://www.bibb.de/en/15182.php (accessed on 10 July 2017).

- Anderson, J.R. Cognitive Psychology and Its Implications, 6th ed.; Worth Publishers: New York, NY, USA, 2005; p. xv.

- Newell, A.; Simon, H.A. Human Problem Solving; Prentice-Hall: Englewood Cliffs, NJ, USA, 1972.

- Duncker, K. On problem-solving. Psychol. Monogr. 1945, 58, i-113.

- Maier, N.R.F. Reasoning in humans. II. The solution of a problem and its appearance in consciousness. J. Comp. Psychol. 1931, 12, 181–194.

- Luchins, A.S. Mechanization in problem solving: The effect of Einstellung. Psychol. Monogr. 1942, 54, i-95.

- Pólya, G. How to Solve It; Princeton University Press: Princeton, NJ, USA, 1945.

- Bransford, J.; Stein, B.S. The Ideal Problem Solver, 2nd ed.; W.H. Freeman: New York, NY, USA, 1993.

- Nickerson, R.S.; Perkins, D.N.; Smith, E.E. The Teaching of Thinking; L. Erlbaum Associates: Hillsdale, NJ, USA, 1985.

- Segal, J.W.; Chipman, S.F.; Glaser, R. Thinking and Learning Skills; Routledge: Abingdon, UK, 1985; p. 1.

- Hedlund, J.; Wilt, J.M.; Nebel, K.L.; Ashford, S.J.; Sternberg, R.J. Assessing practical intelligence in business school admissions: A supplement to the graduate management admissions test. Learn. Individ. Differ. 2006, 16, 101–127.

- McKinsey & Company. McKinsey Problem Solving Test, Practice Test A. Available online: http://www.mckinsey.com/careers/interviewing (accessed on 20 June 2017).

- Funke, J. Complex problem solving: A case for complex cognition? Cogn. Process. 2009, 11, 133–142.

- Engelhart, M.; Funke, J.; Sager, S. A new test-scenario for optimization-based analysis and training of human decision making. In SIAM Conference on Optimization (SIOPT 2011); Darmstadtium Conference Center: Darmstadt, Germany, 2011.

- Wittmann, W.W.; Süß, H.-M. Investigating the paths between working memory, intelligence, knowledge, and complex problem-solving performances via Brunswik symmetry. In Learning and Individual Differences: Process, Trait, and Content Determinants; Ackerman, P.L., Kyllonen, P.C., Roberts, R.D., Eds.; American Psychological Association: Washington, DC, USA, 1999; pp. 77–108.

- Greiff, S.; Fischer, A. Measuring complex problem solving: An educational application of psychological theories. J. Educ. Res. Online 2013, 1, 38–58.

- Elg, F. Leveraging intelligence for high performance in complex dynamic systems requires balanced goals. Theor. Issues Ergon. Sci. 2005, 6, 63–72.

- Sonnleitner, P.; Brunner, M.; Keller, U.; Martin, R.; Latour, T. The Genetics Lab—A new computer-based problem solving scenario to assess intelligence. In Proceedings of the 11th European Conference on Psychological Assessment, Riga, Latvia, 31 August–3 September 2011.

- Gonzalez, C.; Thomas, R.P.; Vanyukov, P. The relationships between cognitive ability and dynamic decision making. Intelligence 2005, 33, 169–186.

- Schoppek, W. Spiel und Wirklichkeit—Reliabilität und Validität von Verhaltensmustern in komplexen Situationen [Play and reality: Reliability and validity of behavior patterns in complex situations]. Sprache Kognit. 1991, 10, 15–27.

- Ackerman, P.L. Predicting individual differences in complex skill acquisition: Dynamics of ability determinants. J. Appl. Psychol. 1992, 77, 598–614.

- Papert, S. Mindstorms: Children, Computers, and Powerful Ideas; Basic Books: New York, NY, USA, 1980.

- Bauer, M.; Wylie, E.C.; Jackson, J.T.; Mislevy, R.J.; John, M.; Hoffman-John, E. Why video games can be a good fit to formative assessment. J. Appl. Test. Technol. in press.

- Mislevy, R.J.; Behrens, J.T.; Dicerbo, K.E.; Frezzo, D.C.; West, P. Three things game designers need to know about assessment. In Assessment in Game-Based Learning; Springer: New York, NY, USA, 2012; pp. 59–81.

- Mislevy, R.J.; Oranje, A.; Bauer, M.I.; von Davier, A.; Hao, J.; Corrigan, S.; Hoffman, E.; DiCerbo, K.; John, M. Psychometric Considerations in Game-Based Assessment; GlassLab Research, Institute of Play: New York, NY, USA, 2014.

- Mané, A.; Donchin, E. The Space Fortress game. Acta Psychol. 1989, 71, 17–22.

- Osman, M. Controlling uncertainty: A review of human behavior in complex dynamic environments. Psychol. Bull. 2010, 136, 65–86.

- Shute, V.J.; Glaser, R.; Raghavan, K. Inference and discovery in an exploratory laboratory. In Learning and Individual Differences; Ackerman, P.L., Sternberg, R.J., Glaser, R., Eds.; W.H. Freeman: New York, NY, USA, 1989; pp. 279–326.

- Brehmer, B. Dynamic decision making: Human control of complex systems. Acta Psychol. 1992, 81, 211–241.

- Funke, J. Dealing with dynamic systems: Research strategy, diagnostic approach and experimental results. Ger. J. Psychol. 1992, 16, 24–43.

- Buchner, A.; Funke, J. Finite-state automata: Dynamic task environments in problem-solving research. Q. J. Exp. Psychol. Sect. A 1993, 46, 83–118.

- Süß, H.-M. Intelligenz, Wissen und Problemlösen: Kognitive Voraussetzungen für Erfolgreiches Handeln bei Computersimulierten Problemen; Hogrefe: Göttingen, Germany, 1996.

- Funke, J. Analysis of minimal complex systems and complex problem solving require different forms of causal cognition. Front. Psychol. 2014, 5.

- Greiff, S.; Martin, R. What you see is what you (don’t) get: A comment on Funke’s (2014) opinion paper. Front. Psychol. 2014, 5.

- Danner, D.; Hagemann, D.; Holt, D.V.; Hager, M.; Schankin, A.; Wüstenberg, S.; Funke, J. Measuring performance in dynamic decision making. J. Individ. Differ. 2011, 32, 225–233.

- Goode, N.; Beckmann, J.F. You need to know: There is a causal relationship between structural knowledge and control performance in complex problem solving tasks. Intelligence 2010, 38, 345–352.

- Greiff, S.; Fischer, A.; Wüstenberg, S.; Sonnleitner, P.; Brunner, M.; Martin, R. A multitrait–multimethod study of assessment instruments for complex problem solving. Intelligence 2013, 41, 579–596.

- Putz-Osterloh, W. Über die Beziehung zwischen Testintelligenz und Problemlöseerfolg [On the relationship between test intelligence and problem solving performance]. Zeitschrift für Psychologie 1981, 189, 79–100.

- Liu, O.L.; Bridgeman, B.; Adler, R.M. Measuring learning outcomes in higher education: Motivation matters. Educ. Res. 2012, 41, 352–362.

- Cattell, R.B. The measurement of adult intelligence. Psychol. Bull. 1943, 40, 153–193.

- Ackerman, P.L.; Beier, M.E.; Boyle, M.O. Working memory and intelligence: The same or different constructs? Psychol. Bull. 2005, 131, 30–60.

- Wilhelm, O. Measuring reasoning ability. In Handbook of Understanding and Measuring Intelligence; Engle, R.W., Wilhelm, O., Eds.; SAGE Publications, Inc.: Thousand Oaks, CA, USA, 2004; pp. 373–392.

- Chuderski, A. The broad factor of working memory is virtually isomorphic to fluid intelligence tested under time pressure. Personal. Individ. Differ. 2015, 85, 98–104.

- Carpenter, P.A.; Just, M.A.; Shell, P. What one intelligence test measures: A theoretical account of the processing in the Raven Progressive Matrices Test. Psychol. Rev. 1990, 97, 404–431.

- Snow, R.E.; Kyllonen, P.C.; Marshalek, B. The topography of ability and learning correlations. In Advances in the Psychology of Human Intelligence; Sternberg, R.J., Ed.; Lawrence Erlbaum Associates Publishers: Mahwah, NJ, USA, 1988; Volume 2, pp. 47–103.

- Gignac, G.E. Raven’s is not a pure measure of general intelligence: Implications for g factor theory and the brief measurement of g. Intelligence 2015, 52, 71–79.

- Holzman, T.G.; Pellegrino, J.W.; Glaser, R. Cognitive variables in series completion. J. Educ. Psychol. 1983, 75, 603–618.

- Kotovsky, K.; Simon, H.A. Empirical tests of a theory of human acquisition of concepts for sequential patterns. Cogn. Psychol. 1973, 4, 399–424.

- Simon, H.A.; Kotovsky, K. Human acquisition of concepts for sequential patterns. Psychol. Rev. 1963, 70, 534–546.

- Hambrick, D.Z.; Altmann, E.M. The role of placekeeping ability in fluid intelligence. Psychon. Bull. Rev. 2014, 22, 1104–1110.

- Stankov, L.; Cregan, A. Quantitative and qualitative properties of an intelligence test: Series completion. Learn. Individ. Differ. 1993, 5, 137–169.

- Diehl, K.A. Algorithmic Item Generation and Problem Solving Strategies in Matrix Completion Problems. Ph.D. Thesis, University of Kansas, Lawrence, KS, USA, 2004.

- Embretson, S.E. A cognitive design system approach to generating valid tests: Application to abstract reasoning. Psychol. Methods 1998, 3, 380–396.

- Embretson, S.E. Generating abstract reasoning items with cognitive theory. In Item Generation for Test Development; Irvine, S.H., Kyllonen, P.C., Eds.; Lawrence Erlbaum Associates Publishers: Mahwah, NJ, USA, 2002; pp. 219–250.

- MacCallum, R.C.; Browne, M.W. The use of causal indicators in covariance structure models: Some practical issues. Psychol. Bull. 1993, 114, 533–541.

- National Center for Education Statistics. Improving the Measurement of Socioeconomic Status for the National Assessment of Educational Progress: A Theoretical Foundation; U.S. Department of Education, Institute of Education Sciences: Washington, DC, USA, 2012.

- Bollen, K.A.; Diamantopoulos, A. In defense of causal-formative indicators: A minority report. Psychol. Methods 2015.

- Holmes, T.H.; Rahe, R.H. The social readjustment rating scale. J. Psychosom. Res. 1967, 11, 213–218.

- Organisation for Economic Co-Operation and Development (OECD). PISA 2012 Assessment and Analytic Framework: Mathematics, Reading, Science, Problem Solving and Financial Literacy; Organisation for Economic Co-Operation and Development (OECD): Paris, France, 2013.

- American Educational Research Association; American Psychological Association; National Council on Measurement in Education; Joint Committee on Standards for Educational and Psychological Testing (U.S.). Standards for Educational and Psychological Testing; American Educational Research Association: Washington, DC, USA, 2014; p. ix.

- Organisation for Economic Co-Operation and Development (OECD). PISA 2012 Results: Excellence through Equity: Giving Every Student the Chance to Succeed; Organisation for Economic Co-Operation and Development (OECD): Paris, France, 2013; Volume II.

- Hauser, R.M.; Goldberger, A.S. The treatment of unobservable variables in path analysis. Sociol. Methodol. 1971, 3, 81.

- Miller, G.A.; Galanter, E.; Pribram, K.H. Plans and the Structure of Behavior; Henry Holt: New York, NY, USA, 1960; Volume 5, pp. 341–342.

- Schoppek, W.; Fischer, A. Complex problem solving—Single ability or complex phenomenon? Front. Psychol. 2015, 6.

- Peterson, N.G.; Mumford, M.D.; Borman, W.C.; Jeanneret, P.R.; Fleishman, E.A. An Occupational Information System for the 21st Century: The Development of O*NET; American Psychological Association: Washington, DC, USA, 1999.

- Rounds, J.; Armstrong, P.I.; Liao, H.-Y.; Lewis, P.; Rivkin, D. Second Generation Occupational Interest Profiles for the O*NET System; National Center for O*NET Development: Raleigh, NC, USA, 2008.

- Fleisher, M.S.; Tsacoumis, S. O*NET Analyst Occupational Abilities Ratings: Analysis Cycle 12 Results; National Center for O*NET Development: Raleigh, NC, USA, 2012.

- Van Iddekinge, C.H.; Tsacoumis, S. A Comparison of Incumbent and Analyst Ratings of O*NET Skills; Human Resources Research Organization: Arlington, VA, USA, 2006.

- Hunt, E.; Madhyastha, T.M. Cognitive demands of the workplace. J. Neurosci. Psychol. Econ. 2012, 5, 18–37.

- Lubinski, D.; Humphreys, L.G. Seeing the forest from the trees: When predicting the behavior or status of groups, correlate means. Psychol. Public Policy Law 1996, 2, 363–376.

- Organisation for Economic Co-Operation and Development (OECD). PISA 2003 Technical Report; Organisation for Economic Co-Operation and Development (OECD): Paris, France, 2004.

- Robinson, W.S. Ecological correlations and the behavior of individuals. Am. Sociol. Rev. 1950, 15, 351–357.

| 1 | Although some authors refer to this as the European tradition, it seems that almost all research comes from Germany, and U.K. research seems more in line with the American tradition. |

| 2 | Each domain is broken down further. For example, worker characteristics include abilities (defined as “Enduring attributes of the individual that influence performance”), interests, values, and styles (i.e., personality). Worker requirements include basic and cross-functional skills, knowledge, and education. Cross-functional skills include Complex Problem Solving, Time Management, and 30 others. Knowledge includes 33 knowledge areas. |

| 3 | In the original O*NET prototype questionnaire, Complex Problem Solving was rated through eight constructs: (a) Problem Identification; (b) Information Gathering; (c) Information Organizing; (d) Synthesis/Reorganization; (e) Idea Generation; (f) Idea Evaluation; (g) Implementation Planning; and (h) Solution Appraisal [83]. In the revised questionnaire, these eight ratings were replaced by a single rating for Complex Problem Solving to reduce rater burden. |

| 4 | Skills ratings were originally provided by job incumbents but more recently have been provided by occupational analysts, both to avoid inflation in incumbent ratings and because analysts understand the constructs being rated [84]. |

| 5 | Our results replicate the findings of Hunt and Madhyastha [110], with some differences. The pattern of loadings on the first principal component was identical across the two analyses, with Spatial Orientation the only negative loading in both studies. Loadings on the earlier study’s first component were consistently larger than those in our study, which is consistent with its first component accounting for 58% of the variance in ability ratings versus 50% in ours. We attribute these differences to the prior study having been conducted more than five years earlier: O*NET ratings are periodically updated, and the mix of rated jobs differed somewhat between our study and Hunt and Madhyastha’s. |

| 6 | Note that going from Job Zone 2 to Job Zone 3, log median annual wages go from 10.42 to 10.70. Because these are natural logs, this difference of 0.28 can be read as roughly a 28% wage increase (more exactly, about 32%); similarly, going from Zone 4 (10.80) to Zone 5 (10.84) suggests roughly a 4% increase (more exactly, about 4.1%). |
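The conversion in footnote 6 relies on the fact that a difference d in natural-log wages corresponds to a wage ratio of e^d, so the exact percentage change is 100(e^d − 1); reading d directly as a proportion is accurate only when d is small. A minimal check with the job-zone values quoted in the footnote (the helper name is our own):

```python
import math


def pct_change_from_log_diff(log_a, log_b):
    """Exact percentage change implied by moving from natural-log value log_a to log_b."""
    return 100 * (math.exp(log_b - log_a) - 1)


for label, a, b in [("Zone 2 -> 3", 10.42, 10.70), ("Zone 4 -> 5", 10.80, 10.84)]:
    approx = 100 * (b - a)  # log-point shortcut
    exact = pct_change_from_log_diff(a, b)
    print(f"{label}: approx {approx:.0f}%, exact {exact:.1f}%")
```

This gives about 32.3% for the Zone 2 to 3 step and about 4.1% for the Zone 4 to 5 step; the gap between shortcut and exact value grows with the size of the log difference.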

| Cognitive Ability | Component 1 (g/Gf) | Component 2 (Spatial) | Component 3 (Number) |
|---|---|---|---|
| Deductive Reasoning | 0.90 | −0.12 | 0.01 |
| Inductive Reasoning | 0.88 | −0.12 | 0.07 |
| Written Comprehension | 0.85 | −0.37 | 0.03 |
| Written Expression | 0.84 | −0.34 | 0.08 |
| Fluency of Ideas | 0.84 | −0.14 | −0.09 |
| Originality | 0.80 | −0.15 | −0.05 |
| Information Ordering | 0.80 | 0.22 | −0.17 |
| Category Flexibility | 0.79 | 0.11 | −0.26 |
| Oral Comprehension | 0.77 | −0.43 | 0.28 |
| Memorization | 0.77 | −0.06 | 0.06 |
| Problem Sensitivity | 0.76 | 0.24 | 0.26 |
| Oral Expression | 0.74 | −0.50 | 0.30 |
| Speed of Closure | 0.71 | 0.42 | 0.10 |
| Math Reasoning | 0.70 | 0.04 | −0.56 |
| Flexibility of Closure | 0.64 | 0.63 | 0.03 |
| Number Facility | 0.65 | 0.10 | −0.57 |
| Selective Attention | 0.43 | 0.53 | 0.32 |
| Time Sharing | 0.49 | 0.38 | 0.55 |
| Perceptual Speed | 0.33 | 0.81 | −0.03 |
| Visualization | 0.17 | 0.72 | −0.24 |
| Spatial Orientation | −0.31 | 0.66 | 0.23 |
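For an unrotated principal component analysis of a correlation matrix, the sum of squared loadings on a component equals its eigenvalue, and dividing by the number of variables gives the proportion of variance the component accounts for. The sketch below applies that rule to the Component 1 loadings listed above, assuming they are unrotated and that the 21 abilities shown are the full set analyzed; because the loadings are rounded, this is a rough consistency check, not a re-analysis of the O*NET data.

```python
# Component 1 (g/Gf) loadings transcribed from the table above (21 abilities).
# Assumption: these are unrotated principal-component loadings.
COMPONENT_1 = {
    "Deductive Reasoning": 0.90, "Inductive Reasoning": 0.88,
    "Written Comprehension": 0.85, "Written Expression": 0.84,
    "Fluency of Ideas": 0.84, "Originality": 0.80,
    "Information Ordering": 0.80, "Category Flexibility": 0.79,
    "Oral Comprehension": 0.77, "Memorization": 0.77,
    "Problem Sensitivity": 0.76, "Oral Expression": 0.74,
    "Speed of Closure": 0.71, "Math Reasoning": 0.70,
    "Flexibility of Closure": 0.64, "Number Facility": 0.65,
    "Selective Attention": 0.43, "Time Sharing": 0.49,
    "Perceptual Speed": 0.33, "Visualization": 0.17,
    "Spatial Orientation": -0.31,
}


def variance_share(loadings):
    """Eigenvalue (sum of squared loadings) divided by the number of variables."""
    eigenvalue = sum(x ** 2 for x in loadings)
    return eigenvalue / len(loadings)


share = variance_share(list(COMPONENT_1.values()))
print(f"Eigenvalue: {share * len(COMPONENT_1):.2f}")
print(f"Proportion of variance: {share:.2f}")
```

Under these assumptions the first component accounts for roughly half of the variance (an eigenvalue of about 10.4 across 21 abilities), in line with the 50% figure given in footnote 5.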

| Predictor Variable | g/Gf | CPS | Knowledge | Log Median Wages |
|---|---|---|---|---|
| g/Gf | 1.00 | 0.86 * | 0.63 * | 0.39 * |
| CPS | - | 1.00 | 0.58 * | 0.42 * |
| Knowledge | - | - | 1.00 | 0.28 * |
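With two predictors, the squared multiple correlation can be computed from the three pairwise correlations alone: R² = (r1y² + r2y² − 2·r1y·r2y·r12) / (1 − r12²), where r1y and r2y are each predictor's correlation with the outcome and r12 is the correlation between the predictors. The sketch below plugs in the g/Gf, CPS, and log median wage correlations from the table above. It is a consistency check on rounded published values, assuming the correlations and the regressions use the same occupations; the function name is our own.

```python
def r_squared_two_predictors(r1y, r2y, r12):
    """Squared multiple correlation of y on two predictors, from pairwise correlations."""
    return (r1y ** 2 + r2y ** 2 - 2 * r1y * r2y * r12) / (1 - r12 ** 2)


# From the correlation table: g/Gf and CPS with log median wages, and g/Gf with CPS.
r_gf_wage, r_cps_wage, r_gf_cps = 0.39, 0.42, 0.86

r2_gf_only = r_gf_wage ** 2
r2_both = r_squared_two_predictors(r_gf_wage, r_cps_wage, r_gf_cps)
print(f"R^2, g/Gf only: {r2_gf_only:.2f}")
print(f"R^2, g/Gf + CPS: {r2_both:.2f}")
print(f"Increment from adding CPS: {r2_both - r2_gf_only:.3f}")
```

The results, about 0.15 for g/Gf alone and about 0.18 with CPS added, agree with the R² values of Models 1 and 2 in the first regression table below. The high g/Gf–CPS correlation (0.86) in the denominator is why CPS adds only a few points of explained variance despite its own correlation of 0.42 with wages.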

| Predictor Variable | M (SD) | Model 1 | Model 2 | Model 3 | Model 4 |
|---|---|---|---|---|---|
| g/Gf | −0.01 (0.99) | 0.19 * (0.02) | 0.06 (0.01) | 0.17 * (0.02) | 0.05 (0.03) |
| CPS | −0.02 (0.99) | - | 0.15 * (0.03) | - | 0.15 * (0.03) |
| Knowledge | 2.53 (1.03) | - | - | 0.04 * (0.02) | 0.03 (0.02) |
| R² | - | 0.15 | 0.18 | 0.16 | 0.18 |
| SSE | - | 139.10 | 135.13 | 138.25 | 134.60 |
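Assuming all four models above were fit to the same set of occupations, the SSE row supports a quick nested-model comparison: (SSE_reduced − SSE_full) / SSE_reduced is the proportion of the reduced model's residual variation removed by the added predictor, that is, its squared partial correlation with log wages given the predictors already in the model. A minimal sketch (the function name and labels are our own):

```python
def partial_r_squared(sse_reduced, sse_full):
    """Share of the reduced model's residual variation removed by the added predictor(s)."""
    return (sse_reduced - sse_full) / sse_reduced


# SSE values from the overall regression table above.
SSE = {"Model 1": 139.10, "Model 2": 135.13, "Model 3": 138.25, "Model 4": 134.60}

comparisons = [
    ("CPS added to g/Gf", "Model 1", "Model 2"),
    ("Knowledge added to g/Gf", "Model 1", "Model 3"),
    ("CPS added to g/Gf + Knowledge", "Model 3", "Model 4"),
]
for label, reduced, full in comparisons:
    print(f"{label}: {partial_r_squared(SSE[reduced], SSE[full]):.3f}")
```

With these values, adding CPS removes roughly 3% of the remaining residual variation whether or not Knowledge is already in the model, whereas adding Knowledge to g/Gf removes less than 1%.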

| Predictor Variable | M (SD) | Model 1 | Model 2 | Model 3 | Model 4 |
|---|---|---|---|---|---|
| g/Gf | −0.74 (0.67) | 0.14 * (0.01) | 0.04 (0.03) | 0.13 * (0.03) | 0.04 (0.03) |
| CPS | −0.71 (0.62) | - | 0.15 * (0.04) | - | 0.15 * (0.04) |
| Knowledge | 2.01 (0.89) | - | - | 0.02 (0.02) | 0.01 (0.02) |
| R² | - | 0.11 | 0.16 | 0.11 | 0.16 |
| SSE | - | 17.78 | 16.67 | 17.71 | 16.65 |

| Predictor Variable | M (SD) | Model 1 | Model 2 | Model 3 | Model 4 |
|---|---|---|---|---|---|
| g/Gf | 0.11 (0.64) | 0.16 * (0.01) | 0.09 (0.02) | 0.15 * (0.04) | 0.08 (0.05) |
| CPS | −0.03 (0.62) | - | 0.10 * (0.05) | - | 0.10 * (0.05) |
| Knowledge | 2.47 (0.76) | - | - | 0.01 (0.03) | 0.02 (0.03) |
| R² | - | 0.11 | 0.12 | 0.10 | 0.13 |
| SSE | - | 14.79 | 14.46 | 14.78 | 14.43 |

| Predictor Variable | M (SD) | Model 1 | Model 2 | Model 3 | Model 4 |
|---|---|---|---|---|---|
| g/Gf | 0.84 (0.60) | 0.11 (0.09) | 0.04 (0.14) | 0.11 (0.09) | 0.04 (0.14) |
| CPS | 0.79 (0.67) | - | 0.09 (0.12) | - | 0.09 (0.12) |
| Knowledge | 3.02 (0.86) | - | - | −0.04 (0.07) | −0.04 (0.07) |
| R² | - | 0.01 | 0.02 | 0.02 | 0.02 |
| SSE | - | 47.55 | 47.33 | 47.41 | 47.21 |

| Predictor Variable | M (SD) | Model 1 | Model 2 | Model 3 | Model 4 |
|---|---|---|---|---|---|
| g/Gf | 0.97 (0.57) | −0.28 * (0.11) | −0.29 * (0.13) | −0.26 * (0.11) | −0.28 * (0.13) |
| CPS | 1.14 (0.56) | - | 0.03 (0.13) | - | 0.04 (0.13) |
| Knowledge | 3.49 (0.95) | - | - | 0.04 (0.07) | 0.04 (0.07) |
| R² | - | 0.06 | 0.06 | 0.06 | 0.06 |
| SSE | - | 44.95 | 44.93 | 44.83 | 44.80 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kyllonen, P.; Anguiano Carrasco, C.; Kell, H.J. Fluid Ability (Gf) and Complex Problem Solving (CPS). J. Intell. 2017, 5, 28. https://doi.org/10.3390/jintelligence5030028