Crossmodal Correspondence Mediates Crossmodal Transfer from Visual to Auditory Stimuli in Category Learning

Abstract

1. Introduction

2. Experiment 1: Adopted Pitch-Elevation Stimuli Varied in Elevation with Constant Size

2.1. Method

2.1.1. Participants

2.1.2. Apparatus and Lab Environment

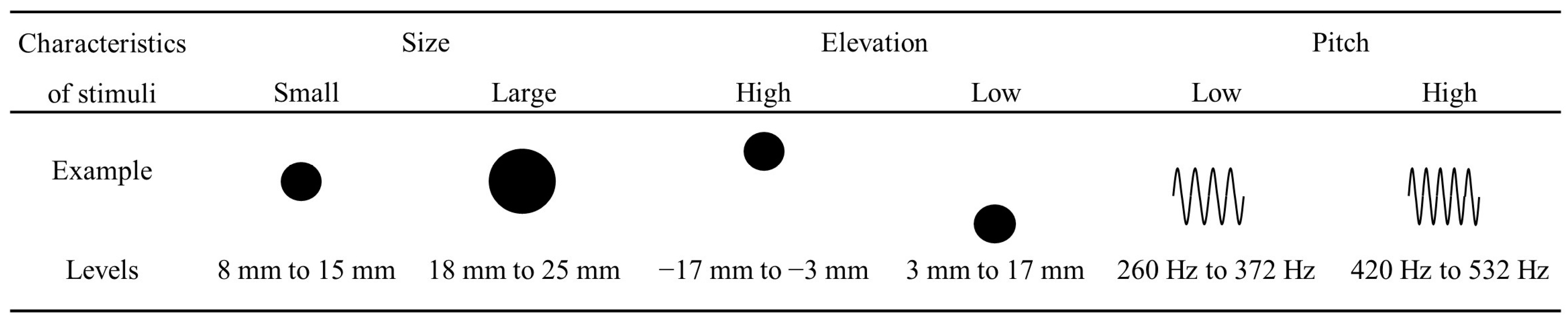

2.1.3. Stimuli

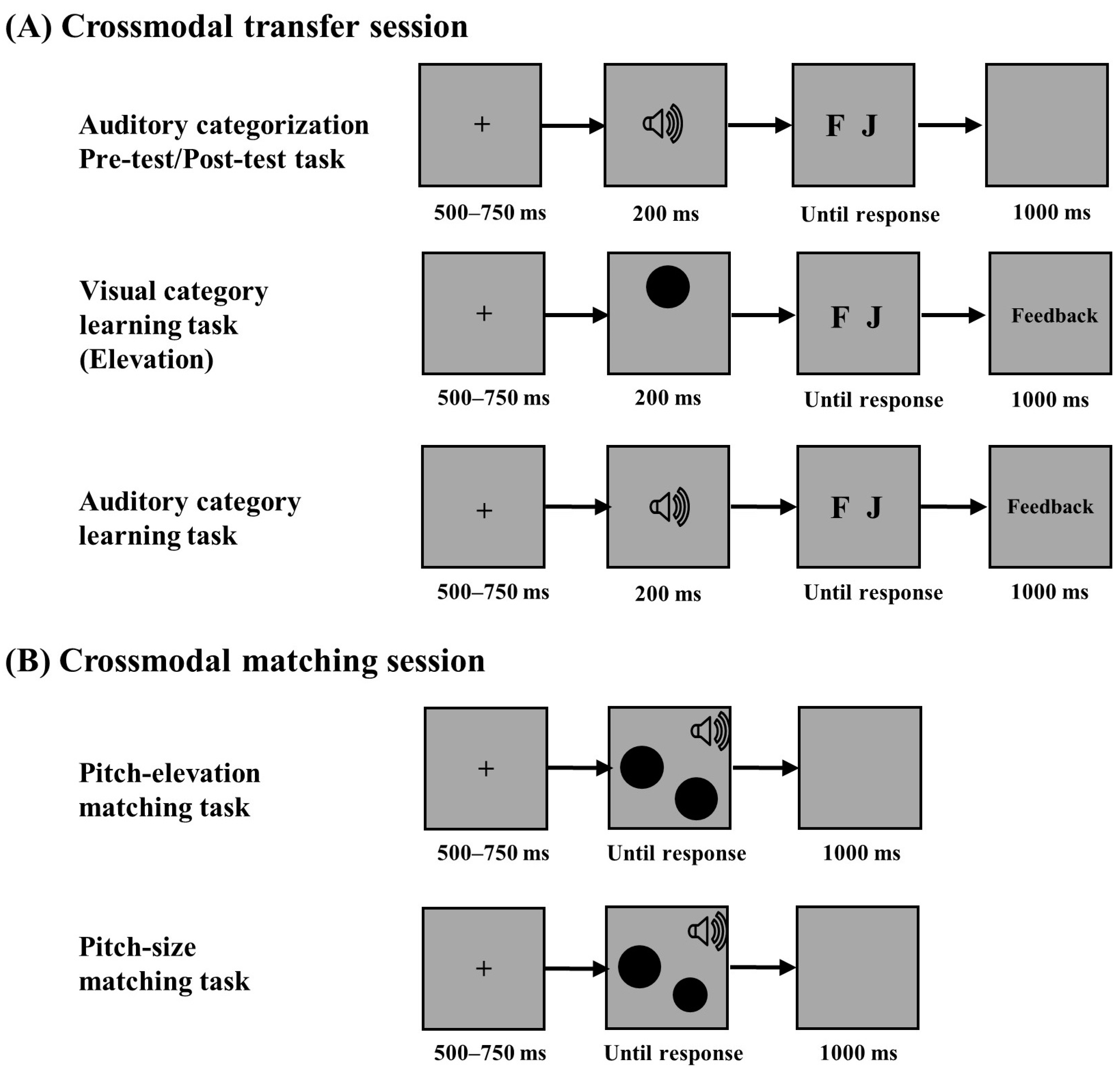

2.1.4. Procedure

2.1.5. Data Analysis

2.2. Results

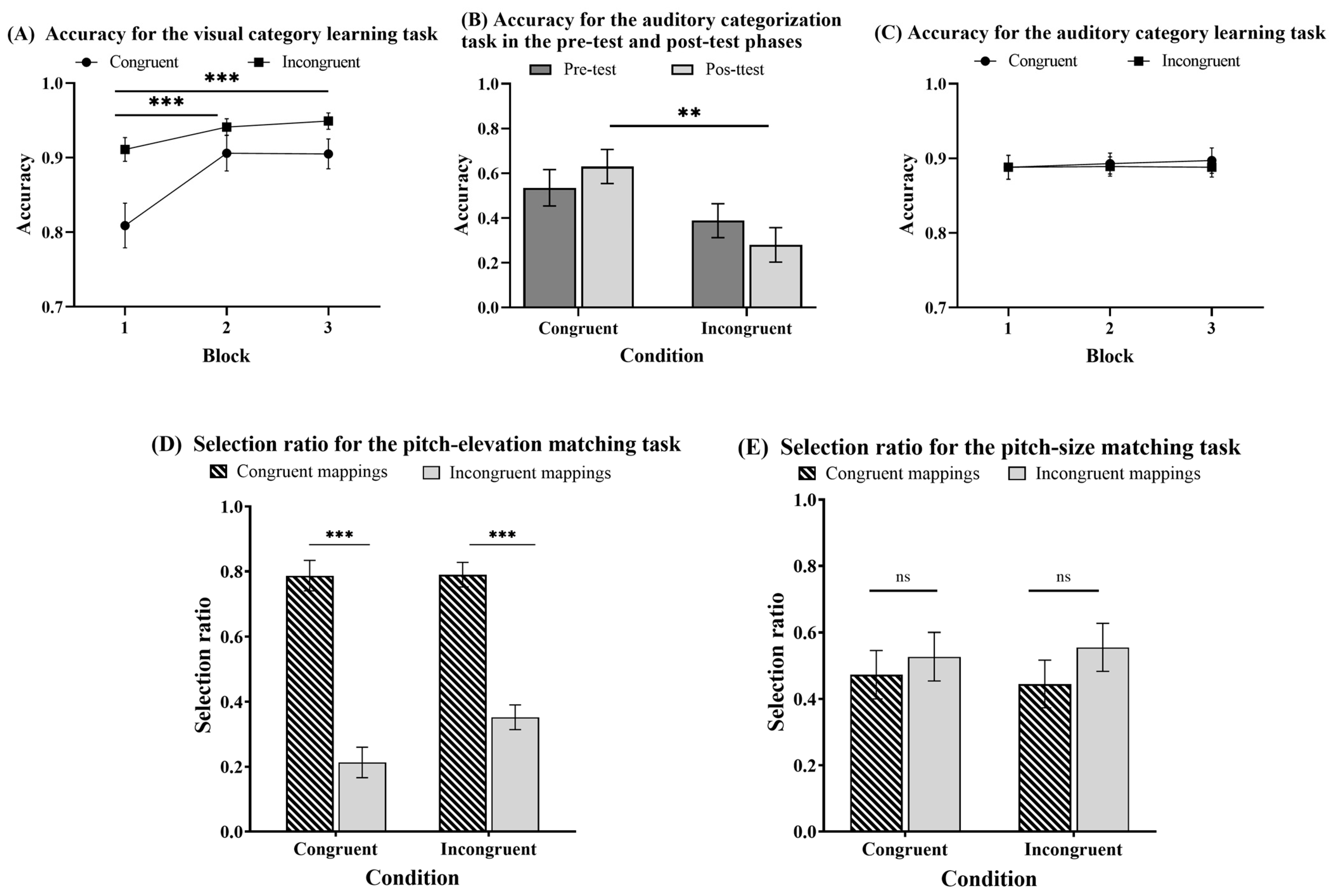

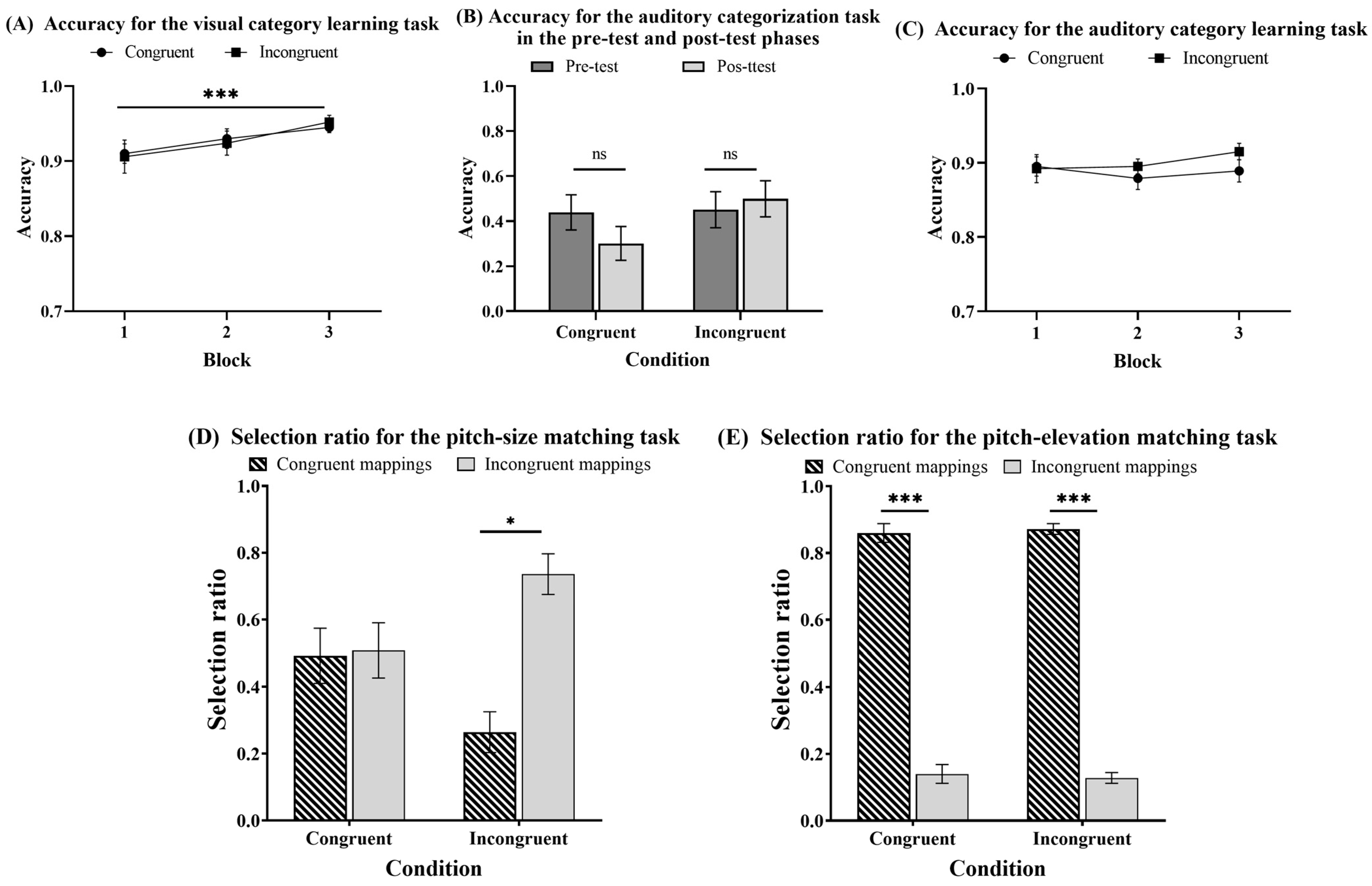

2.2.1. Can People Acquire Category Knowledge in the Elevation Category Learning Task?

2.2.2. Can People Transfer the Knowledge Acquired in Elevation Category Learning to the Categorization of Pitches?

2.2.3. Can People Acquire Category Knowledge in the Pitch Category Learning Task?

2.2.4. Can Multimodal Category Learning Influence Matching Preference in the Pitch-Elevation Matching Task?

2.2.5. Can Multimodal Category Learning Influence Matching Preference in the Pitch-Size Matching Task?

2.3. Discussion

3. Experiment 2: Adopted Pitch-Size Stimuli Varied in Size with Constant Elevation

3.1. Method

3.1.1. Participants

3.1.2. Apparatus and Lab Environment

3.1.3. Stimuli

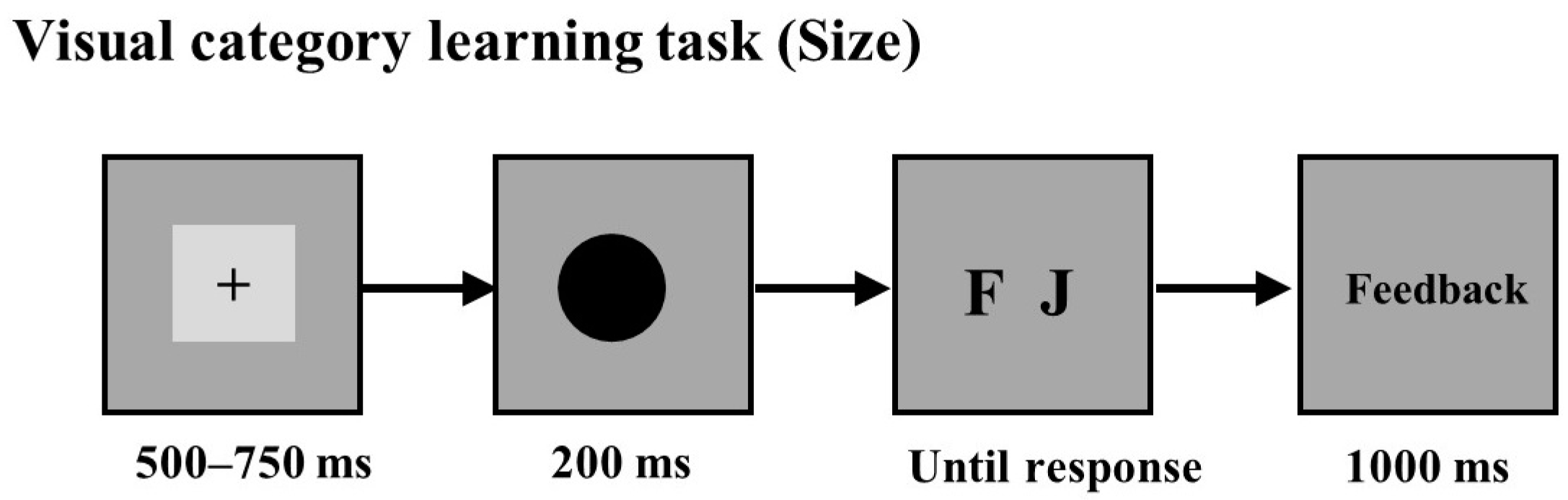

3.1.4. Procedure

3.2. Results

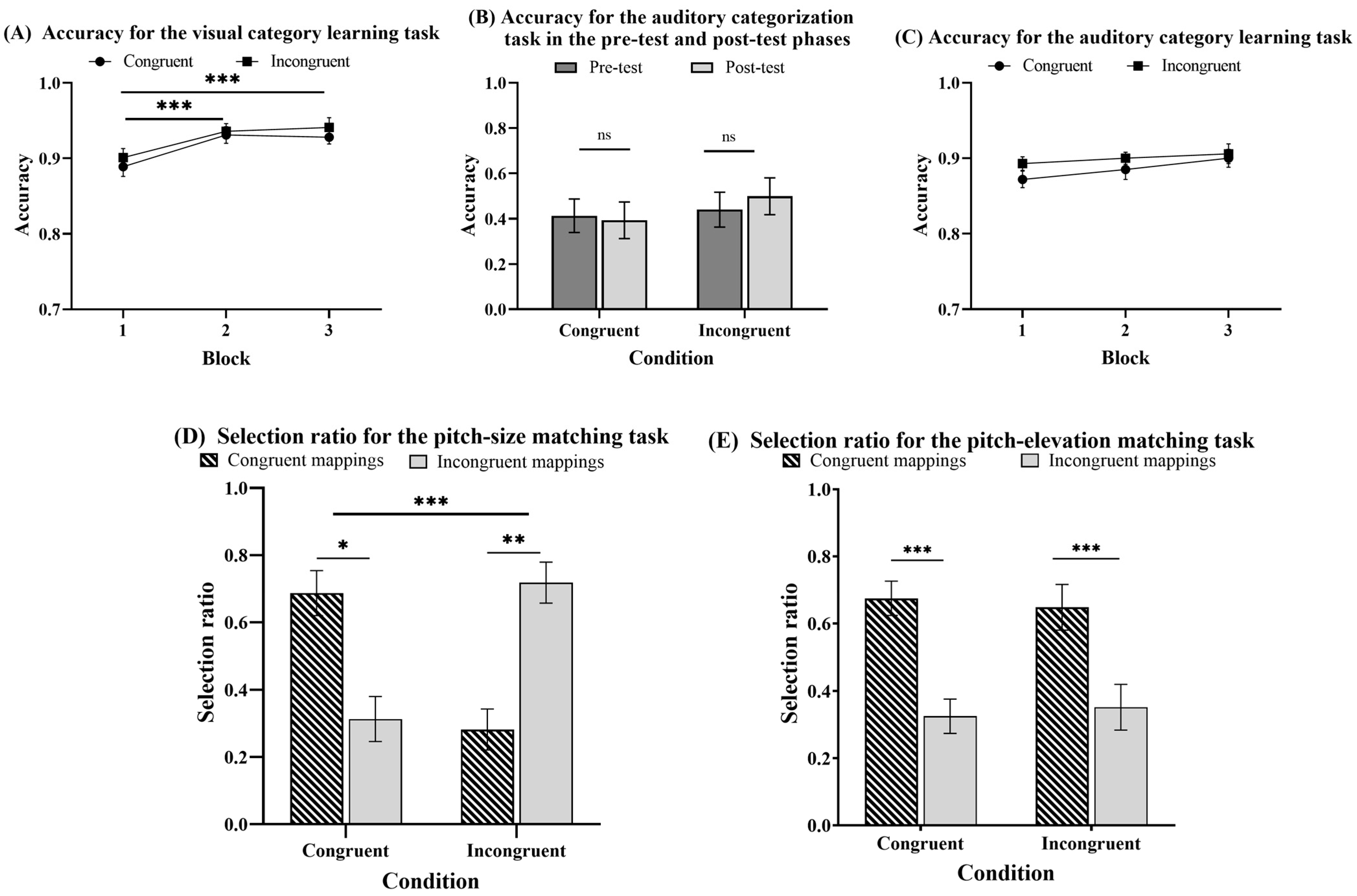

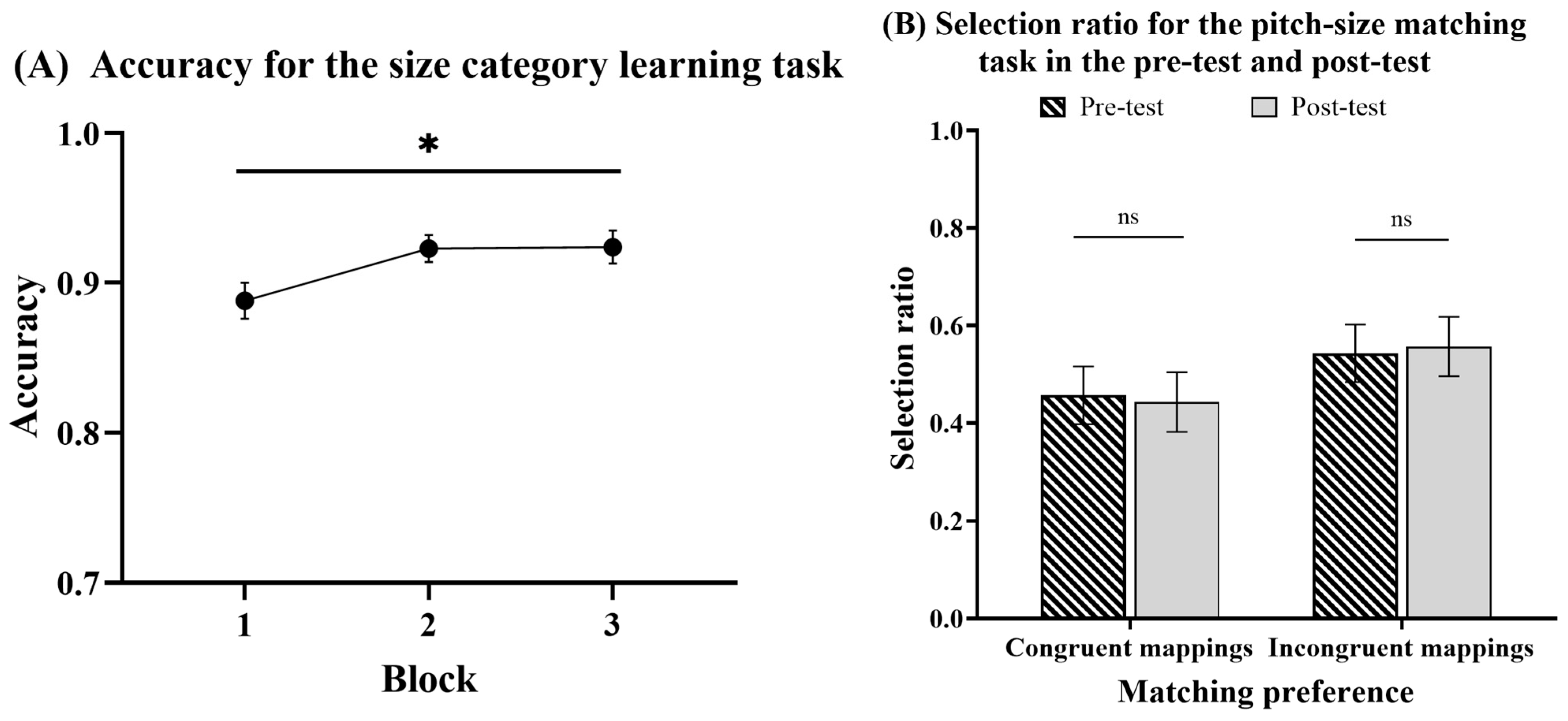

3.2.1. Can People Acquire Category Knowledge in the Size Category Learning Task?

3.2.2. Can People Transfer the Knowledge Acquired in Size Category Learning to the Categorization of Pitches?

3.2.3. Can People Acquire Category Knowledge in the Pitch Category Learning Task?

3.2.4. Can Multimodal Category Learning Influence Matching Preferences in the Pitch-Size Matching Task?

3.2.5. Can Multimodal Category Learning Influence Matching Preference in the Pitch-Elevation Matching Task?

3.3. Discussion

4. Experiment 3: Adopted Pitch-Size Stimuli Varied in Size with Constant Elevation

4.1. Method

4.1.1. Participants

4.1.2. Apparatus and Lab Environment

4.1.3. Stimuli

4.1.4. Procedure

4.2. Results

4.2.1. Can People Acquire Category Knowledge in the Size Category Learning Task?

4.2.2. Can People Transfer the Knowledge Acquired in Size Category Learning to the Categorization of Pitches?

4.2.3. Can People Acquire Category Knowledge in the Pitch Category Learning Task?

4.2.4. Can Multimodal Category Learning Influence Matching Preferences in the Pitch-Size Matching Task?

4.2.5. Can Multimodal Category Learning Influence Matching Preferences in the Pitch-Elevation Matching Task?

4.3. Discussion

5. Experiment 4: Adopted Pitch-Size Stimuli Varied in Size with Constant Elevation

5.1. Method

5.1.1. Participants

5.1.2. Apparatus and Lab Environment

5.1.3. Stimuli

5.1.4. Procedure

5.2. Results

5.2.1. Can People Acquire Category Knowledge in the Size Category Learning Task?

5.2.2. Can Size Category Learning Influence Matching Preference in the Pitch-Size Matching Task?

5.3. Discussion

6. General Discussion

6.1. Crossmodal Correspondence Can Mediate the Occurrence of Crossmodal Transfer

6.2. Multimodal Category Learning Can Shape Crossmodal Correspondence between Visual and Auditory Stimuli

6.3. Limitations

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sun, Y.; Yao, L.; Fu, Q. Crossmodal Correspondence Mediates Crossmodal Transfer from Visual to Auditory Stimuli in Category Learning. J. Intell. 2024, 12, 80. https://doi.org/10.3390/jintelligence12090080
