Exploring the Relationship between Cognitive Ability Tilt and Job Performance

Abstract

1. Introduction

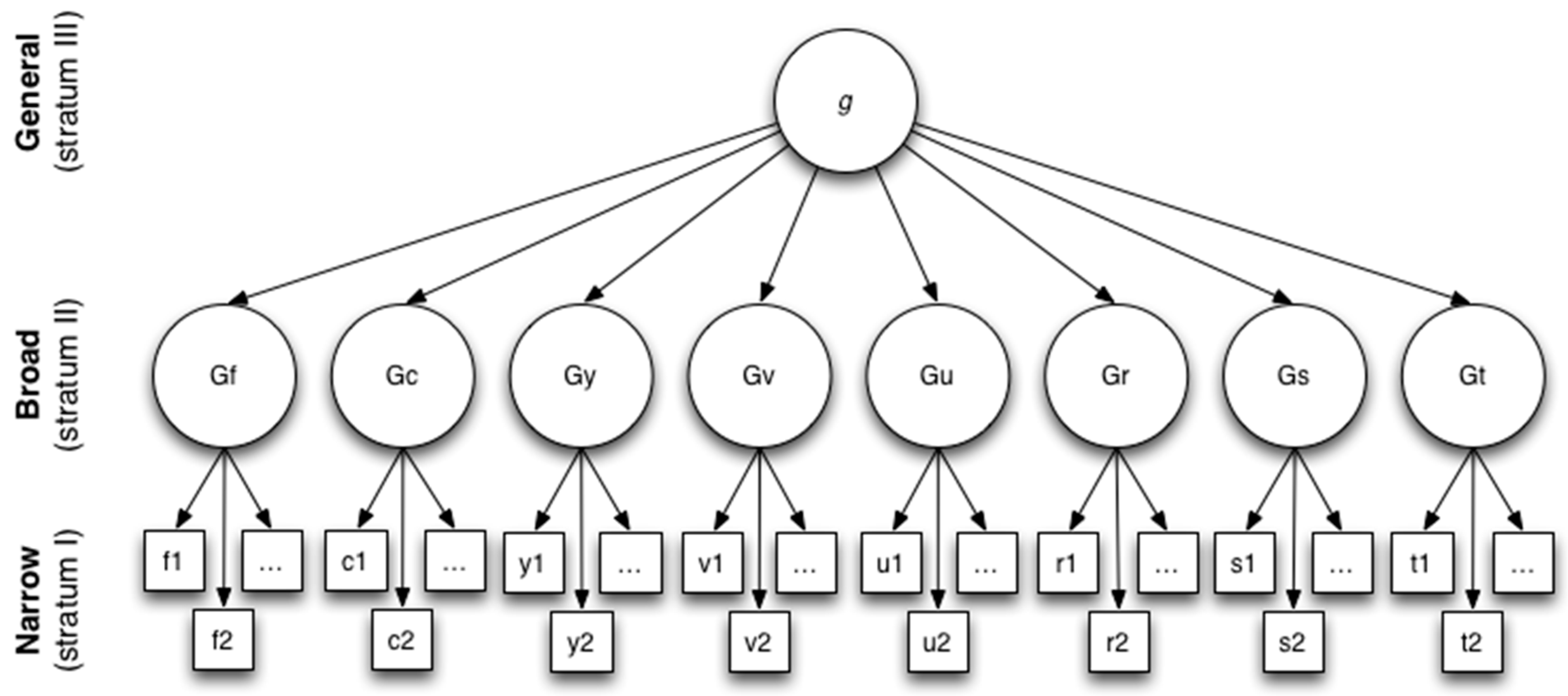

1.1. Conceptualizations of Cognitive Ability

1.2. Cognitive Ability and Job Performance

1.3. Ability Tilt

1.4. Ability Tilt and Job Performance

2. Method

2.1. Sample/Data

2.2. Variables

2.2.1. GATB Scores

2.2.2. g Scores

2.2.3. Ability Tilt Scores

2.2.4. Job Performance Criteria

2.2.5. Job Tilt Scores

2.2.6. Job Groups

3. Results

4. Discussion

4.1. Theoretical Implications

4.2. Practical Implications

4.3. Study Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

References

- Allen, Matthew T., Suzanne Tsacoumis, and Rodney A. McCloy. 2011. Updating Occupational Ability Profiles with O*NET® Content Model Descriptors. Report prepared. Raleigh: National Center for O*NET Development.

- Bobko, Philip, Philip L. Roth, and Denise Potosky. 1999. Derivation and implications of a meta-analytic matrix incorporating cognitive ability, alternative predictors, and job performance. Personnel Psychology 52: 561–89.

- Brody, Nathan. 2000. History of theories and measurements of intelligence. In Handbook of Intelligence. New York: Cambridge University Press, pp. 16–33.

- Carroll, John B. 1993. Human Cognitive Abilities: A Survey of Factor-Analytic Studies. New York: Cambridge University Press, pp. 16–33.

- Cattell, Raymond B. 1957. Personality and Motivation Structure and Measurement. New York: World Book.

- Cattell, Raymond B. 1987. Intelligence: Its Structure, Growth and Action. Amsterdam: North-Holland, (Reprinted and revised from Cattell, Raymond B. 1971. Abilities: Their Structure, Growth and Action. Boston: Houghton Mifflin).

- Cattell, Raymond B., and John L. Horn. 1978. A check on the theory of fluid and crystallized intelligence with description of new subtest designs. Journal of Educational Measurement 15: 139–64.

- Connell, Michael W., Kimberly Sheridan, and Howard Gardner. 2003. On abilities and domains. In The Psychology of Abilities, Competencies, and Expertise. Edited by Robert J. Sternberg and Elena L. Grigorenko. New York: Cambridge University Press, pp. 126–55.

- Coyle, Thomas R. 2016. Ability tilt for whites and blacks: Support for differentiation and investment theories. Intelligence 56: 28–34.

- Coyle, Thomas R. 2019. Tech tilt predicts jobs, college majors, and specific abilities: Support for investment theories. Intelligence 75: 33–40.

- Coyle, Thomas R. 2020. Sex differences in tech tilt: Support for investment theories. Intelligence 80: 101437.

- Coyle, Thomas R., Anissa C. Snyder, and Miranda C. Richmond. 2015. Sex differences in ability tilt: Support for investment theory. Intelligence 50: 209–20.

- Coyle, Thomas R., and David R. Pillow. 2008. SAT and ACT predict college GPA after removing g. Intelligence 36: 719–29.

- Coyle, Thomas R., Jason M. Purcell, Anissa C. Snyder, and Miranda C. Richmond. 2014. Ability tilt on the SAT and ACT predicts specific abilities and college majors. Intelligence 46: 18–24.

- Davison, Mark L., Gilbert B. Jew, and Ernest C. Davenport, Jr. 2014. Patterns of SAT scores, choice of STEM major, and gender. Measurement and Evaluation in Counseling and Development 47: 118–26.

- Edwards, Jeffrey R. 1991. Person-job fit: A conceptual integration, literature review, and methodological critique. In International Review of Industrial and Organizational Psychology. Edited by Cary L. Cooper and Ivan T. Robertson. Oxford: John Wiley & Sons, vol. 6, pp. 283–357.

- Fleishman, Edwin A. 1975. Toward a taxonomy of human performance. American Psychologist 30: 1127–29.

- Fleishman, Edwin A., David P. Costanza, and Joanne Marshall-Mies. 1999. Abilities. In An Occupational Information System for the 21st Century: The Development of O*NET. Edited by Norman G. Peterson, Michael D. Mumford, Walter C. Borman, P. Richard Jeanneret and Edwin A. Fleishman. Washington, DC: American Psychological Association, pp. 175–95.

- Fleishman, Edwin A., Marilyn K. Quaintance, and Laurie A. Broedling. 1984. Taxonomies of Human Performance: The Description of Human Tasks. Orlando: Academic Press.

- Gottfredson, Linda S. 1997. Mainstream science on intelligence: An editorial with 52 signatories, history, and bibliography. Intelligence 24: 13–23.

- Hanges, Paul J., Charles A. Scherbaum, and Charlie L. Reeve. 2015. There are more things in heaven and earth, Horatio, than DGF. Industrial and Organizational Psychology 8: 472–81.

- Hauser, Robert M. 2002. Meritocracy, Cognitive Ability, and the Sources of Occupational Success. Madison: Center for Demography and Ecology, University of Wisconsin.

- Horn, John L., and Nayena Blankson. 2012. Foundations for better understanding of cognitive abilities. In Contemporary Intellectual Assessment: Theories, Tests, and Issues. Edited by Dawn P. Flanagan and Patti L. Harrison. New York: Guilford Press, pp. 73–98.

- Hunter, John E. 1980. Test Validation for 12,000 Jobs: An Application of Synthetic Validity and Validity Generalization to the General Aptitude Test Battery (GATB). Washington, DC: U.S. Employment Service, U.S. Department of Labor.

- Hunter, John E., and Ronda F. Hunter. 1984. Validity and utility of alternative predictors of job performance. Psychological Bulletin 96: 72–98.

- Johnson, Jeff W. 2000. A heuristic method for estimating the relative weight of predictor variables in multiple regression. Multivariate Behavioral Research 35: 1–19.

- Johnson, Jeff W., and James M. LeBreton. 2004. History and use of relative importance indices in organizational research. Organizational Research Methods 7: 238–57.

- Johnson, Jeff W., Piers Steel, Charles A. Scherbaum, Calvin C. Hoffman, P. Richard Jeanneret, and Jeff Foster. 2010. Validation is like motor oil: Synthetic is better. Industrial and Organizational Psychology 3: 305–28.

- Johnson, Wendy, and Thomas J. Bouchard, Jr. 2005. The structure of human intelligence: It is verbal, perceptual, and image rotation (VPR), not fluid and crystallized. Intelligence 33: 393–416.

- Kell, Harrison J., and Jonas W. B. Lang. 2017. Specific abilities in the workplace: More important than g? Journal of Intelligence 5: 13.

- Kell, Harrison J., and Jonas W. B. Lang. 2018. The great debate: General ability and specific abilities in the prediction of important outcomes. Journal of Intelligence 6: 39.

- Kell, Harrison J., David Lubinski, and Camilla P. Benbow. 2013. Who rises to the top? Early indicators. Psychological Science 24: 648–59.

- Kristof-Brown, Amy L., Ryan D. Zimmerman, and Erin C. Johnson. 2005. Consequences of individuals’ fit at work: A meta-analysis of person-job, person-organization, person-group, and person-supervisor fit. Personnel Psychology 58: 281–342.

- Krumm, Stefan, Lothar Schmidt-Atzert, and Anastasiya A. Lipnevich. 2014. Specific cognitive abilities at work. Journal of Personnel Psychology 13: 117–22.

- Kvist, Ann V., and Jan-Eric Gustafsson. 2008. The relation between fluid intelligence and the general factor as a function of cultural background: A test of Cattell’s Investment theory. Intelligence 36: 422–36.

- Lang, Jonas W. B., Martin Kersting, Ute R. Hülsheger, and Jessica Lang. 2010. General mental ability, narrower cognitive abilities, and job performance: The perspective of the nested-factors model of cognitive abilities. Personnel Psychology 63: 595–640.

- Lubinski, David. 2009. Exceptional cognitive ability: The phenotype. Behavior Genetics 39: 350–58.

- Lubinski, David, Rose Mary Webb, Martha J. Morelock, and Camilla P. Benbow. 2001. Top 1 in 10,000: A 10-year follow-up of the profoundly gifted. Journal of Applied Psychology 86: 718–29.

- Makel, Matthew C., Harrison J. Kell, David Lubinski, Martha Putallaz, and Camilla P. Benbow. 2016. When lightning strikes twice: Profoundly gifted, profoundly accomplished. Psychological Science 27: 1004–18.

- McDaniel, Michael A., and Sven Kepes. 2014. An evaluation of Spearman’s Hypothesis by manipulating g saturation. International Journal of Selection and Assessment 22: 333–42.

- McGrew, Kevin S. 2009. CHC theory and the human cognitive abilities project: Standing on the shoulders of the giants of psychometric intelligence research. Intelligence 37: 1–10.

- Mount, Michael K., In-Sue Oh, and Melanie Burns. 2008. Incremental validity of perceptual speed and accuracy over general mental ability. Personnel Psychology 61: 113–39.

- Nye, Christopher D., Jingjing Ma, and Serena Wee. 2022. Cognitive ability and job performance: Meta-analytic evidence for the validity of narrow cognitive abilities. Journal of Business and Psychology 37: 1119–39.

- Nye, Christopher D., Oleksandr S. Chernyshenko, Stephen Stark, Fritz Drasgow, Henry L. Phillips, Jeffrey B. Phillips, and Justin S. Campbell. 2020. More than g: Evidence for the incremental validity of performance-based assessments for predicting training performance. Applied Psychology 69: 302–24.

- Oswald, Frederick L. 2019. Measuring and modeling cognitive ability: Some comments on process overlap theory. Journal of Applied Research in Memory and Cognition 8: 296–300.

- Park, Gregory, David Lubinski, and Camilla P. Benbow. 2008. Ability differences among people who have commensurate degrees matter for scientific creativity. Psychological Science 19: 957–61.

- Peterson, Norman G., Michael D. Mumford, Walter C. Borman, P. Richard Jeanneret, Edwin A. Fleishman, Kerry Y. Levin, Michael A. Campion, Melinda S. Mayfield, Frederick P. Morgeson, Kenneth Pearlman, et al. 2001. Understanding work using the Occupational Information Network (O*NET): Implications for practice and research. Personnel Psychology 54: 451–92.

- Prentice, Deborah A., and Dale T. Miller. 1992. When small effects are impressive. Psychological Bulletin 112: 160–64.

- Ree, Malcolm J., James A. Earles, and Mark S. Teachout. 1994. Predicting job performance: Not much more than g. Journal of Applied Psychology 79: 518–24.

- Ree, Malcolm J., Thomas R. Carretta, and Mark S. Teachout. 2015. Pervasiveness of dominant general factors in organizational measurement. Industrial and Organizational Psychology 8: 409–27.

- Reeve, Charlie L., Charles Scherbaum, and Harold Goldstein. 2015. Manifestations of intelligence: Expanding the measurement space to reconsider specific cognitive abilities. Human Resource Management Review 25: 28–37.

- Sackett, Paul R., Charlene Zhang, Christopher M. Berry, and Filip Lievens. 2022. Revisiting meta-analytic estimates of validity in personnel selection: Addressing systematic overcorrection for restriction of range. Journal of Applied Psychology 107: 2040–68.

- Salgado, Jesús F., Neil Anderson, Silvia Moscoso, Cristina Bertua, Filip de Fruyt, and Jean Pierre Rolland. 2003. A meta-analytic study of general mental ability validity for different occupations in the European community. Journal of Applied Psychology 88: 1068–81.

- Schmidt, Frank L. 2002. The role of general cognitive ability and job performance: Why there cannot be a debate. Human Performance 15: 187–210.

- Schmidt, Frank L., and John E. Hunter. 1998. The validity and utility of selection methods in personnel psychology: Practical and theoretical implications of 85 years of research findings. Psychological Bulletin 124: 262–74.

- Schneider, W. Joel, and Daniel A. Newman. 2015. Intelligence is multidimensional: Theoretical review and implications of specific cognitive abilities. Human Resource Management Review 25: 12–27.

- Schneider, W. Joel, and Kevin S. McGrew. 2018. The Cattell-Horn-Carroll theory of cognitive abilities. In Contemporary Intellectual Assessment: Theories, Tests and Issues. Edited by Dawn P. Flanagan and Erin M. McDonough. New York: Guilford Press, pp. 73–130.

- Schneider, W. Joel, and Kevin S. McGrew. 2019. Process overlap theory is a milestone achievement among intelligence theories. Journal of Applied Research in Memory and Cognition 8: 273–76.

- Sorjonen, Kimmo, Gustav Nilsonne, Michael Ingre, and Bo Melin. 2022. Spurious correlations in research on ability tilt. Personality and Individual Differences 185: 111268.

- Spearman, Charles. 1904. “General intelligence”, objectively determined and measured. The American Journal of Psychology 15: 201–92.

- Steel, Piers, and John Kammeyer-Mueller. 2009. Using a meta-analytic perspective to enhance job component validation. Personnel Psychology 62: 533–52.

- Thurstone, Louis Leon. 1938. Primary Mental Abilities. Chicago: University of Chicago Press.

- U.S. Department of Labor. 1970. Manual for the USES General Aptitude Test Battery. Section III: Development. Washington, DC: U.S. Government Printing Office.

- U.S. Employment Service. 1977. Dictionary of Occupational Titles, 4th ed. Washington, DC: U.S. Government Printing Office.

- Van Der Maas, Han L. J., Conor V. Dolan, Raoul P. P. P. Grasman, Jelte M. Wicherts, Hilde M. Huizenga, and Maartje E. J. Raijmakers. 2006. A dynamical model of general intelligence: The positive manifold of intelligence by mutualism. Psychological Review 113: 842–61.

- Vernon, Philip E. 1950. The Structure of Human Abilities. New York: Wiley.

- Viswesvaran, Chockalingam, and Deniz S. Ones. 2002. Agreements and disagreements on the role of general mental ability (GMA) in industrial, work, and organizational psychology. Human Performance 15: 212–31.

- Wai, Jonathan, Jaret Hodges, and Matthew C. Makel. 2018. Sex differences in ability tilt in the right tail of cognitive abilities: A 35-year examination. Intelligence 67: 76–83.

- Wai, Jonathan, Matthew H. Lee, and Harrison J. Kell. 2022. Distributions of academic math-verbal tilt and overall academic skill of students specializing in different fields: A study of 1.6 million graduate record examination test takers. Intelligence 95: 101701.

- Wai, Jonathan, David Lubinski, and Camilla P. Benbow. 2009. Spatial ability for STEM domains: Aligning over 50 years of cumulative psychological knowledge solidifies its importance. Journal of Educational Psychology 101: 817–35.

- Wee, Serena. 2018. Aligning predictor-criterion bandwidths: Specific abilities as predictors of specific performance. Journal of Intelligence 6: 40.

- Wee, Serena, Daniel A. Newman, and Dana L. Joseph. 2014. More than g: Selection quality and adverse impact implications of considering second-stratum cognitive abilities. Journal of Applied Psychology 99: 547–63.

- Wee, Serena, Daniel A. Newman, and Q. Chelsea Song. 2015. More than g-factors: Second-stratum factors should not be ignored. Industrial and Organizational Psychology 8: 482–88.

- Wiernik, Brenton M., Michael P. Wilmot, and Jack W. Kostal. 2015. How data analysis can dominate interpretations of dominant general factors. Industrial and Organizational Psychology 8: 438–45.

| Aptitude | Definition | Test(s) |
|---|---|---|
| G—General Learning Ability | The ability to “catch on” or understand instructions and underlying principles; the ability to reason and make judgments. Closely related to doing well in school. | Part 3—Three-Dimensional Space; Part 4—Vocabulary; Part 6—Arithmetic Reasoning |
| V—Verbal Aptitude | The ability to understand the meaning of words and to use them effectively. The ability to comprehend language, to understand relationships between words and to understand meaning of whole sentences and paragraphs. | Part 4—Vocabulary |
| N—Numerical Aptitude | Ability to perform arithmetic operations quickly and accurately. | Part 2—Computation; Part 6—Arithmetic Reasoning |
| S—Spatial Aptitude | Ability to think visually of geometric forms and to comprehend the two-dimensional representation of three-dimensional objects. The ability to recognize the relationships resulting from the movement of objects in space. | Part 3—Three-Dimensional Space |
| P—Form Perception | Ability to perceive pertinent detail in objects or in pictorial or graphic material. Ability to make visual comparisons and discriminations and see slight differences in shapes and shadings of figures and widths and lengths of lines. | Part 5—Tool Matching; Part 7—Form Matching |
| Q—Clerical Perception | Ability to perceive pertinent detail in verbal or tabular material. Ability to observe differences in copy, to proofread words and numbers, and to avoid perceptual errors in arithmetic computation. A measure of speed of perception which is required in many industrial jobs even when the job does not have verbal or numerical content. | Part 1—Name Comparison |
| K—Motor Coordination | Ability to coordinate eyes and hands or fingers rapidly and accurately in making precise movements with speed. Ability to make a movement response accurately and swiftly. | Part 8—Mark Making |
| F—Finger Dexterity | Ability to move the fingers, and manipulate small objects with the fingers, rapidly or accurately. | Part 11—Assemble; Part 12—Disassemble |
| M—Manual Dexterity | Ability to move the hands easily and skillfully. Ability to work with the hands in placing and turning motions. | Part 9—Place; Part 10—Turn |

| Job Family | n | Gender: % Female | Gender: % Male | Race: % Caucasian | Race: % African American | Race: % Hispanic/Latino | Race: % Asian American | Race: % Native American | Age (Years): M | Age (Years): SD | Mean Tenure (Years) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Architecture and engineering | 601 | 3 | 97 | 76 | 15 | 5 | 3 | 1 | 36.1 | 10.6 | 10.7 |
| Life, physical, and social science | 95 | 20 | 80 | 92 | - | 6 | 1 | 1 | 31.9 | 9.7 | 7.8 |
| Legal | 594 | 99 | 1 | 77 | 15 | 6 | 2 | <1 | 29.8 | 11.3 | 7.1 |
| Healthcare practitioners and technical | 1389 | 71 | 29 | 63 | 26 | 6 | 5 | <1 | 30.9 | 9.1 | 5.8 |
| Healthcare support | 329 | 73 | 27 | 35 | 57 | 6 | 1 | 1 | 35.8 | 12.3 | 6.3 |
| Protective service | 849 | 20 | 80 | 46 | 46 | 7 | <1 | 2 | 33.3 | 9.5 | 4.7 |
| Food preparation and serving related | 632 | 81 | 19 | 52 | 37 | 7 | 1 | 3 | 34.4 | 13.2 | 6.5 |
| Personal care and service | 528 | 90 | 10 | 67 | 20 | 8 | 2 | 3 | 26.9 | 9.5 | 1.9 |
| Sales and related | 409 | 86 | 14 | 63 | 27 | 6 | 2 | 2 | 35.3 | 14.4 | 6.2 |
| Office and administrative support | 5621 | 82 | 18 | 60 | 31 | 6 | 2 | 1 | 30.4 | 10.9 | 4.7 |
| Construction and extraction | 697 | 1 | 99 | 70 | 20 | 6 | <1 | 4 | 32.1 | 9.9 | 8.2 |
| Installation, maintenance, and repair | 2740 | 1 | 99 | 71 | 17 | 8 | 1 | 2 | 35.5 | 10.5 | 10.7 |
| Production | 8316 | 41 | 59 | 57 | 32 | 8 | 1 | 2 | 32.9 | 10.6 | 6.3 |
| Transportation and material moving | 1194 | 69 | 31 | 62 | 30 | 5 | 1 | 2 | 36.8 | 12.0 | 8.4 |
| Overall | 23,994 | 51 | 49 | 61 | 29 | 7 | 1 | 2 | 32.6 | 11.0 | 6.5 |

| Verbal Tilt | Quantitative Tilt | Spatial Tilt | Form Perception Tilt | Clerical Perception Tilt |
|---|---|---|---|---|
| V > N | N > V | S > V | P > V | Q > V |
| V > S | N > S | S > N | P > N | Q > N |
| V > P | N > P | S > P | P > S | Q > S |
| V > Q | N > Q | S > Q | P > Q | Q > P |
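Each tilt type above pairs two GATB aptitudes and records which one is higher. A minimal sketch of how a single examinee could be sorted into one direction per pair, assuming (this is our assumption, not a definition given in this section) that the tilt score is the simple difference between the two aptitude scores, stored as a positive magnitude under the label of the higher aptitude. The function `tilt_profile` and the example scores are hypothetical.

```python
from itertools import combinations
from typing import Dict

# GATB aptitudes used to form tilt types: Verbal, Numerical, Spatial,
# Form Perception, and Clerical Perception.
APTITUDES = ("V", "N", "S", "P", "Q")


def tilt_profile(scores: Dict[str, float]) -> Dict[str, float]:
    """Assign one tilt type per aptitude pair and return its magnitude.

    Assumption (hypothetical, for illustration): the tilt score is the
    difference between the two aptitude scores, labelled by whichever
    aptitude is higher and stored as a positive number. Exact ties are
    skipped because they fall in neither direction.
    """
    profile: Dict[str, float] = {}
    for a, b in combinations(APTITUDES, 2):
        diff = scores[a] - scores[b]
        if diff > 0:
            profile[f"{a} > {b}"] = diff
        elif diff < 0:
            profile[f"{b} > {a}"] = -diff
    return profile


# Hypothetical aptitude scores for a single examinee.
example = {"V": 112, "N": 104, "S": 95, "P": 120, "Q": 118}
for tilt_type, magnitude in tilt_profile(example).items():
    print(f"{tilt_type}: {magnitude}")
```

Under this reading each examinee contributes to exactly one direction of every pair, which would be consistent with all the tilt-score means reported in this document being positive.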

| GATB Dimension | O*NET Ability |
|---|---|
| Verbal aptitude | Written comprehension |
| Numerical aptitude | Mathematical reasoning, number facility |
| Spatial aptitude | Visualization |
| Form perception | Perceptual speed |
| Clerical perception | Perceptual speed |

| Tilt Type | k | n | Sample Job Titles |
|---|---|---|---|
| WC > QR | 10 | 3375 | Court reporters; file clerks; telephone operators; proofreaders and copy markers |
| QR > WC | 1 | 287 | Tellers |
| WC > Vz | 16 | 5623 | Court reporters; bookkeeping, accounting, and auditing clerks; proofreaders and copy markers |
| Vz > WC | 17 | 5403 | Sheet metal workers; automotive body and related repairers; welders, cutters, and welder fitters |
| WC > PS | 16 | 4482 | Proofreaders and copy markers; telephone operators; interviewers; cargo and freight agents |
| PS > WC | 13 | 3974 | Extruding, forming, pressing, and compacting machine setters, operators, and tenders; surgical technologists |
| QR > Vz | 11 | 3464 | Bookkeeping, accounting, and auditing clerks; tellers; general office clerks |
| Vz > QR | 13 | 5126 | Structural iron and steel workers; electrical and electronic equipment assemblers; general maintenance and repair workers |
| QR > PS | 6 | 2100 | Bookkeeping, accounting, and auditing clerks; tellers; cargo and freight agents |
| PS > QR | 9 | 2847 | Extruding, forming, pressing, and compacting machine setters, operators, and tenders; proofreaders and copy markers; tire builders |
| Vz > PS | 16 | 4626 | Hairdressers, hairstylists, and cosmetologists; structural metal fabricators and fitters; upholsterers |
| PS > Vz | 9 | 3238 | File clerks; proofreaders and copy markers; data entry keyers; court reporters |
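The linkage table sends both form perception and clerical perception to the same O*NET ability (perceptual speed). A short sketch of what that implies when person-level tilt types are matched to job tilt groups: V > P and V > Q both land on the WC > PS group (k = 16, n = 4482 in the correlation table that follows the tilt-score descriptives), and P > Q and Q > P have no job-level counterpart, leaving 18 of the 20 ordered pairs, the same 18 tilt types that appear in the descriptive and correlation tables. We assume QR is this document's label for the numerical composite (mathematical reasoning plus number facility); the dictionary and function names are illustrative, not the authors' code.

```python
from itertools import permutations
from typing import Optional

# GATB aptitude -> O*NET ability abbreviation, following the linkage table.
# Assumption: "QR" is the job-tilt label for the numerical composite
# (mathematical reasoning plus number facility).
GATB_TO_ONET = {"V": "WC", "N": "QR", "S": "Vz", "P": "PS", "Q": "PS"}


def job_tilt_for(person_tilt: str) -> Optional[str]:
    """Translate a person-level tilt type such as 'V > P' into a job tilt label.

    Returns None when both aptitudes link to the same O*NET ability,
    since no job can favor one side of an identical pair.
    """
    high, low = (part.strip() for part in person_tilt.split(">"))
    high_onet, low_onet = GATB_TO_ONET[high], GATB_TO_ONET[low]
    if high_onet == low_onet:
        return None
    return f"{high_onet} > {low_onet}"


all_pairs = [f"{a} > {b}" for a, b in permutations(GATB_TO_ONET, 2)]
testable = {t: job_tilt_for(t) for t in all_pairs if job_tilt_for(t) is not None}

print(len(all_pairs), len(testable))  # 20 ordered pairs, 18 with a job-level match
print(testable["V > P"], testable["V > Q"])  # both WC > PS
```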

| Tilt Type | M | SD |
|---|---|---|
| V > N | 12.84 | 9.08 |
| N > V | 10.89 | 8.14 |
| V > S | 13.28 | 10.28 |
| S > V | 16.18 | 11.80 |
| V > P | 12.87 | 9.82 |
| P > V | 19.93 | 13.82 |
| V > Q | 7.97 | 6.61 |
| Q > V | 19.35 | 12.65 |
| N > S | 14.04 | 10.80 |
| S > N | 17.97 | 12.79 |
| N > P | 11.61 | 8.94 |
| P > N | 21.07 | 14.40 |
| N > Q | 7.83 | 6.51 |
| Q > N | 21.51 | 13.47 |
| S > P | 13.25 | 9.90 |
| P > S | 18.51 | 13.18 |
| S > Q | 12.94 | 9.91 |
| Q > S | 21.99 | 15.36 |

| Tilt Type | k | n | r |
|---|---|---|---|
| V > N | 10 | 3375 | .005 |
| N > V | 1 | 287 | .038 |
| V > S | 16 | 5623 | .106 *** |
| S > V | 17 | 5403 | .019 |
| V > P | 16 | 4482 | .120 *** |
| P > V | 13 | 3974 | −.002 |
| V > Q | 16 | 4482 | .051 *** |
| Q > V | 13 | 3974 | .027 |
| N > S | 11 | 3464 | .090 *** |
| S > N | 13 | 5126 | −.028 * |
| N > P | 6 | 2100 | .046 * |
| P > N | 9 | 2847 | −.020 |
| N > Q | 6 | 2100 | .051 * |
| Q > N | 9 | 2847 | −.020 |
| S > P | 16 | 4626 | .071 *** |
| P > S | 9 | 3238 | .044 * |
| S > Q | 16 | 4626 | .054 *** |
| Q > S | 9 | 3238 | .083 *** |

| Tilt Type | k | n | r |
|---|---|---|---|
| V > N | 1 | 287 | −.038 |
| N > V | 79 | 23,707 | .025 *** |
| V > S | 37 | 10,220 | −.029 ** |
| S > V | 43 | 13,774 | −.057 *** |
| V > P | 24 | 7477 | .015 |
| P > V | 55 | 16,212 | −.056 *** |
| V > Q | 24 | 7477 | −.012 |
| Q > V | 55 | 16,212 | −.019 * |
| N > S | 63 | 18,993 | .045 *** |
| S > N | 16 | 4855 | −.078 *** |
| N > P | 73 | 21,740 | .065 *** |
| P > N | 6 | 2100 | −.046 * |
| N > Q | 73 | 21,740 | .029 *** |
| Q > N | 6 | 2100 | −.051 * |
| S > P | 45 | 14,239 | −.020 * |
| P > S | 33 | 9316 | −.071 *** |
| S > Q | 45 | 14,239 | −.060 *** |
| Q > S | 33 | 9316 | −.049 *** |
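This section does not define the asterisks in the correlation tables. Assuming they follow the usual convention (* p < .05, ** p < .01, *** p < .001) for a two-tailed test of each correlation against zero using the pooled n, the flags can be recomputed from r and n alone. A minimal sketch, using the large-sample normal approximation to the t distribution (reasonable here, since n ranges from roughly 300 to more than 23,000); the helper names are ours, and the example rows are copied from the tables in this document.

```python
import math


def t_statistic(r: float, n: int) -> float:
    """t for testing H0: rho = 0, with n - 2 degrees of freedom."""
    return r * math.sqrt((n - 2) / (1 - r * r))


def two_tailed_p(r: float, n: int) -> float:
    """Two-tailed p value, approximating the t distribution by the standard normal.

    erfc(|z| / sqrt(2)) equals 2 * (1 - Phi(|z|)).
    """
    z = abs(t_statistic(r, n))
    return math.erfc(z / math.sqrt(2))


def stars(r: float, n: int) -> str:
    """Significance flags under the assumed thresholds .05, .01, and .001."""
    p = two_tailed_p(r, n)
    if p < 0.001:
        return "***"
    if p < 0.01:
        return "**"
    if p < 0.05:
        return "*"
    return ""


# (tilt type, r, n) rows copied from the three correlation tables in this document
rows = [("V > S", 0.106, 5623), ("N > V", 0.038, 287),
        ("V > S", -0.029, 10220), ("S > N", -0.028, 5126),
        ("P > V", 0.064, 1682), ("N > Q", -0.100, 327)]
for tilt_type, r, n in rows:
    print(f"{tilt_type}: r = {r:+.3f}, n = {n}, p = {two_tailed_p(r, n):.4f} {stars(r, n)}")
```

For these rows the sketch reproduces the printed flags (***, none, **, *, **, none), which is consistent with, though not proof of, the assumed convention.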

| Tilt Type | n | εj (%): g | εj (%): Specific Ability | εj (%): Ability Tilt | R² (Full Model) | R² (g Only) | ΔR² over g | % Increase in R² over g | R² (g + Specific Ability) | ΔR² over g + Specific Ability | % Increase in R² over g + Specific Ability |
|---|---|---|---|---|---|---|---|---|---|---|---|
| V > N | 3375 | .039 (43.7) | .042 (46.5) | .009 (9.8) | .090 | .077 | .013 | 17% | .083 | .006 | 7% |
| N > V | 287 | .020 (35.6) | .032 (56.9) | .004 (7.5) | .057 | .040 | .017 | 43% | .056 | .000 | 0% |
| V > S | 5623 | .053 (62.3) | .026 (30.2) | .006 (7.6) | .085 | .075 | .010 | 13% | .079 | .006 | 8% |
| S > V | 5403 | .015 (55.2) | .010 (37.3) | .002 (7.5) | .028 | .026 | .002 | 8% | .026 | .002 | 8% |
| V > P | 4482 | .047 (57.8) | .028 (34.9) | .006 (7.3) | .081 | .069 | .012 | 17% | .075 | .006 | 8% |
| P > V | 3974 | .021 (72.6) | .007 (24.7) | .001 (2.8) | .028 | .026 | .002 | 8% | .028 | .001 | 4% |
| V > Q | 4482 | .040 (53.2) | .033 (43.9) | .002 (2.9) | .075 | .069 | .007 | 10% | .075 | .000 | 0% |
| Q > V | 3974 | .017 (61.8) | .009 (33.3) | .001 (4.9) | .027 | .026 | .001 | 4% | .027 | .000 | 0% |
| N > S | 3464 | .036 (51.1) | .026 (36.5) | .009 (12.5) | .071 | .059 | .013 | 22% | .068 | .004 | 6% |
| S > N | 5126 | .014 (46.6) | .011 (35.7) | .005 (17.7) | .031 | .027 | .004 | 15% | .027 | .004 | 15% |
| N > P | 2100 | .022 (46.4) | .023 (48.7) | .002 (4.9) | .047 | .040 | .007 | 18% | .047 | .000 | 0% |
| P > N | 2847 | .019 (61.1) | .011 (34.2) | .001 (4.7) | .032 | .031 | .000 | 0% | .032 | .000 | 0% |
| N > Q | 2100 | .023 (48.4) | .021 (44.3) | .003 (7.3) | .048 | .040 | .008 | 20% | .047 | .000 | 0% |
| Q > N | 2847 | .018 (55.3) | .012 (37.7) | .002 (6.9) | .032 | .031 | .000 | 0% | .032 | .000 | 0% |
| S > P | 4626 | .026 (68.7) | .009 (24.0) | .003 (7.2) | .038 | .032 | .006 | 19% | .033 | .005 | 15% |
| P > S | 3238 | .069 (71.6) | .022 (22.7) | .005 (5.7) | .097 | .087 | .010 | 11% | .089 | .008 | 9% |
| S > Q | 4626 | .023 (69.0) | .009 (26.1) | .002 (4.9) | .034 | .032 | .001 | 3% | .033 | .001 | 3% |
| Q > S | 3238 | .065 (68.1) | .024 (25.5) | .006 (6.4) | .095 | .087 | .008 | 9% | .087 | .007 | 8% |
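The εj columns appear to be relative weights in the sense of Johnson (2000): in every row the three weights sum to the full-model R² (for V > N, .039 + .042 + .009 = .090). The incremental-validity columns are simple arithmetic: ΔR² is the full-model R² minus the R² of the reduced model, and the percentage increase divides that gain by the reduced-model R². A minimal NumPy sketch of both, assuming Johnson's eigendecomposition-based procedure applied to a predictor correlation matrix; the correlations in the first example are made up for illustration and are not the study's data. Because the table entries are rounded to three decimals, recomputing ΔR² from them can be off by one unit in the last place (e.g., the second ΔR² for V > N), so the second example uses the V > S row.

```python
import numpy as np


def relative_weights(r_xx: np.ndarray, r_xy: np.ndarray) -> np.ndarray:
    """Relative weights (Johnson 2000) from correlation matrices.

    r_xx: (p, p) predictor intercorrelations; r_xy: (p,) predictor-criterion
    correlations. The p returned weights sum to the model R^2.
    """
    eigvals, eigvecs = np.linalg.eigh(r_xx)
    # Symmetric square root of r_xx: correlations between each predictor X_j
    # and the orthogonal variables Z_k that best approximate the predictors.
    lam = eigvecs @ np.diag(np.sqrt(eigvals)) @ eigvecs.T
    # Standardized weights from regressing the criterion on the orthogonal Z_k.
    beta = np.linalg.solve(lam, r_xy)
    # Share of predictor j: sum over k of lam[j, k]^2 * beta[k]^2.
    return (lam ** 2) @ (beta ** 2)


def r_squared(r_xx: np.ndarray, r_xy: np.ndarray) -> float:
    """Squared multiple correlation from correlation matrices."""
    return float(r_xy @ np.linalg.solve(r_xx, r_xy))


def incremental_validity(r2_full: float, r2_reduced: float) -> tuple:
    """Delta R^2 and the percentage increase relative to the reduced model."""
    delta = r2_full - r2_reduced
    return delta, 100 * delta / r2_reduced


# Made-up correlations for (g, specific ability, ability tilt); not study data.
r_xx = np.array([[1.0, 0.6, 0.1],
                 [0.6, 1.0, 0.3],
                 [0.1, 0.3, 1.0]])
r_xy = np.array([0.25, 0.20, 0.05])
eps = relative_weights(r_xx, r_xy)
print(np.round(eps, 4), round(r_squared(r_xx, r_xy), 4))
print(np.round(100 * eps / eps.sum(), 1))  # percent of R^2, as in the parentheses

# V > S row: R^2 = .085, R^2 (g only) = .075, R^2 (g + specific ability) = .079
delta, pct = incremental_validity(0.085, 0.075)
print(round(delta, 3), round(pct))  # matches .010 and 13% in the table
delta, pct = incremental_validity(0.085, 0.079)
print(round(delta, 3), round(pct))  # matches .006 and 8% in the table
```

The eigendecomposition route is used because the predictors here (g, a specific ability, and a tilt built from specific abilities) are correlated, so their squared zero-order validities would not partition R².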

| Tilt Type | k | n | Ability Tilt |
|---|---|---|---|
| V > N | 9 | 1449 | .041 |
| N > V | 9 | 1064 | .020 |
| V > S | 9 | 1105 | .063 * |
| S > V | 9 | 1412 | .004 |
| V > P | 9 | 812 | .060 |
| P > V | 9 | 1682 | .064 ** |
| V > Q | 9 | 414 | −.034 |
| Q > V | 9 | 2091 | −.020 |
| N > S | 9 | 904 | −.024 |
| S > N | 9 | 1595 | −.004 |
| N > P | 9 | 665 | .002 |
| P > N | 9 | 1836 | .024 |
| N > Q | 9 | 327 | −.100 |
| Q > N | 9 | 2169 | −.033 |
| S > P | 9 | 953 | .045 |
| P > S | 9 | 1550 | .020 |
| S > Q | 9 | 698 | −.063 |
| Q > S | 9 | 1817 | −.053 * |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kato, A.E.; Scherbaum, C.A. Exploring the Relationship between Cognitive Ability Tilt and Job Performance. J. Intell. 2023, 11, 44. https://doi.org/10.3390/jintelligence11030044
