An Energy-Saving Road-Lighting Control System Based on Improved YOLOv5s

Abstract

1. Introduction

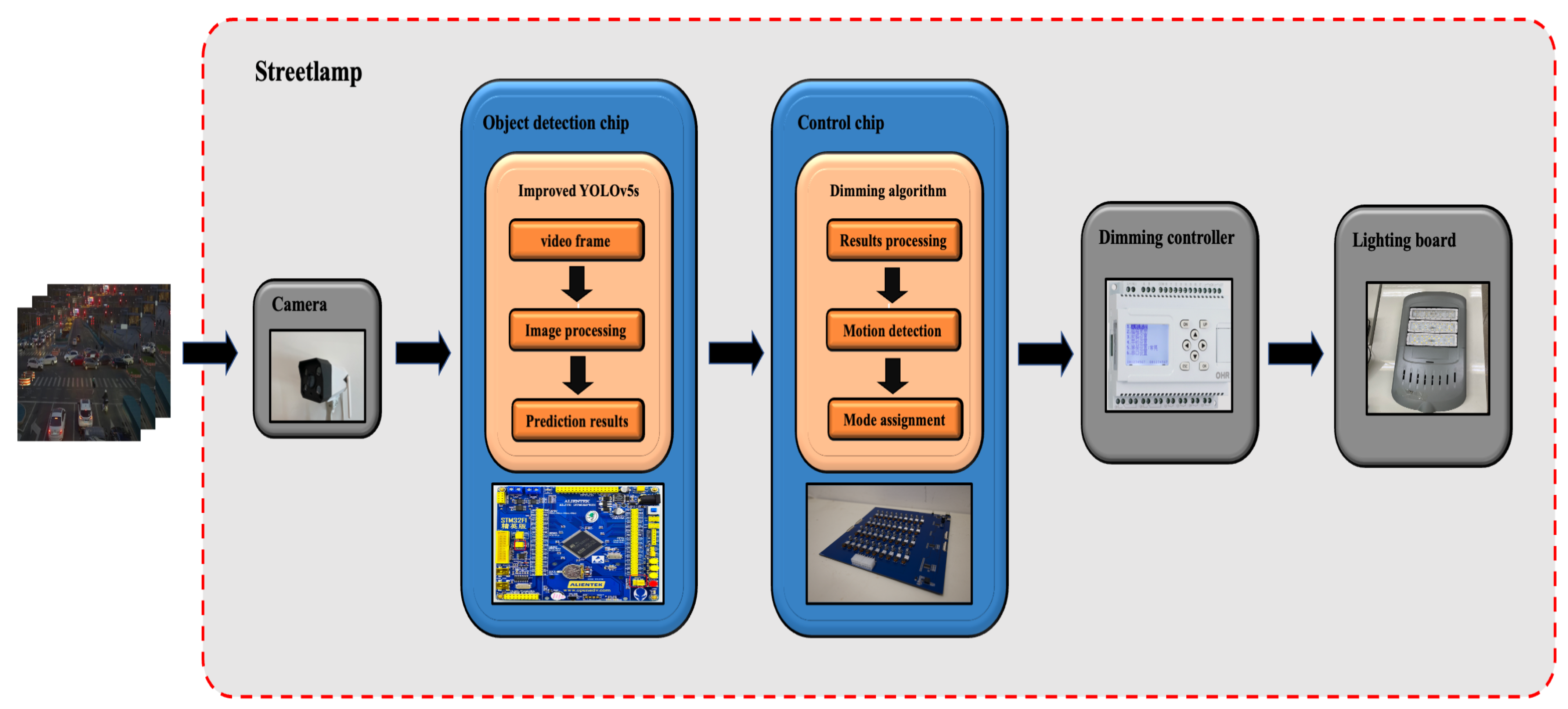

- For the first time, we introduced YOLOv5s into the embedded device of a road-lighting terminal.

- We made targeted improvements to YOLOv5s, and proposed a novel, high-performance energy-saving control system.

- We designed a complete, intelligent dimming method for the dimming controller. At the same experimental site, energy-saving efficiency increased by nearly 14.1% and 35.2% compared with the same street lighting operated without dimming.

2. Related Work

2.1. Energy-Saving Road-Lighting Control System

2.2. YOLOv5s

2.3. YOLOv5s-Based System

3. Methodology

3.1. Proposed System

3.2. Dimming Method

3.3. The Improved YOLOv5 Network Framework

- The mechanism must be able to learn nonlinear interactions between channels.

- Every channel must receive its own output, so a soft label is generated instead of a one-hot vector.
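The two requirements above can be illustrated with a small channel-gating sketch in the style of squeeze-and-excitation attention. This is a minimal illustration, assuming that this is the kind of mechanism the section has in mind; the function name `channel_gate`, the toy weights `w1` and `w2`, and the flattened feature layout are hypothetical and not taken from the paper.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_gate(feature_maps, w1, w2):
    """Hypothetical channel-attention sketch (not the paper's implementation).

    feature_maps: list of C channels, each a flat list of H*W activations.
    w1: r x C weight matrix (bottleneck), w2: C x r weight matrix (expansion).
    """
    # Squeeze: global average pooling yields one descriptor per channel.
    z = [sum(ch) / len(ch) for ch in feature_maps]
    # Excitation, step 1: a bottleneck layer with ReLU mixes all channels,
    # which gives the nonlinear interaction between channels.
    hidden = [max(0.0, sum(w * zc for w, zc in zip(row, z))) for row in w1]
    # Excitation, step 2: one sigmoid gate per channel. The gates are
    # independent soft weights in (0, 1), not a one-hot selection.
    gates = [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w2]
    # Scale: every channel is reweighted by its own gate.
    scaled = [[g * v for v in ch] for g, ch in zip(gates, feature_maps)]
    return gates, scaled


# Toy input: 3 channels with 4 activations each (values are made up).
features = [[1.0, 2.0, 3.0, 4.0], [0.0, 0.0, 1.0, 1.0], [5.0, 5.0, 5.0, 5.0]]
w1 = [[0.5, -0.2, 0.1]]        # reduces 3 channels to 1 hidden unit
w2 = [[1.0], [-1.0], [0.3]]    # expands back to 3 channel gates
gates, scaled = channel_gate(features, w1, w2)
print([round(g, 3) for g in gates])
```

Because each gate comes from its own sigmoid rather than a shared softmax, several channels can be emphasized at the same time, which is the "soft label" behaviour named in the second requirement.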

3.4. The Traffic Dataset

3.5. Experimental Environment

3.6. Evaluation Metrics

4. Results and Discussion

4.1. Detection Performance

4.2. Energy-Saving Experiment

4.2.1. Traffic Flow

4.2.2. Energy-Saving Efficiency

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Road type | Average Surface Luminance | Average Surface Illuminance | Overall Uniformity of Luminance | Uniformity of Illuminance | Glare Threshold Increment |
|---|---|---|---|---|---|
| Major road | 1.5/2.0 | 20/30 | 0.4 | 0.4 | 10 |
| Local road | 0.75/1.0 | 10/15 | 0.4 | 0.35 | 10 |
| Conflict areas of major road and conflict road | / | 30/50 | / | 0.4 | / |

| Night Traffic Flow | Average Surface Illuminance | Minimum Light Surface Illuminance |
|---|---|---|
| High | 10 | 7.5 |
| Middle | 7.5 | 5 |
| Low | 5 | 1 |
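The table of illuminance targets by night traffic flow above can be read as a lookup that a dimming controller applies once the traffic level is known. The sketch below is a minimal illustration under stated assumptions: the count thresholds `low_max` and `middle_max` are hypothetical placeholders (the table does not say how a detection count maps to High, Middle, or Low), and the illuminance values are copied from the table without a unit because the table does not state one.

```python
# Illuminance targets copied from the table: level -> (average, minimum).
ILLUMINANCE_TARGETS = {
    "high": (10.0, 7.5),
    "middle": (7.5, 5.0),
    "low": (5.0, 1.0),
}


def traffic_level(detections_per_interval, low_max=20, middle_max=100):
    """Classify traffic flow from a detection count.

    low_max and middle_max are hypothetical thresholds chosen only for
    illustration; they are not taken from the paper.
    """
    if detections_per_interval <= low_max:
        return "low"
    if detections_per_interval <= middle_max:
        return "middle"
    return "high"


def illuminance_target(level):
    """Return the (average, minimum) surface illuminance for a traffic level."""
    return ILLUMINANCE_TARGETS[level]


for count in (5, 50, 500):
    level = traffic_level(count)
    average, minimum = illuminance_target(level)
    print(f"{count} detections -> {level}: average {average}, minimum {minimum}")
```

Keeping the targets in one dictionary means the thresholds, which depend on the site, can be tuned without touching the values that come from the table.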

| Time | Motor Vehicle | Non-Motor Vehicle | Pedestrian |
|---|---|---|---|
| 7.15–9.00 | 476 | 286 | 926 |
| 9.00–11.00 | 263 | 92 | 456 |
| 11.00–01.00 | 64 | 34 | 49 |
| 01.00–03.00 | 27 | 9 | 49 |
| 03.00–05.00 | 52 | 5 | 62 |
| 05.00–07.00 | 348 | 24 | 265 |
| total | 1516 | 450 | 1765 |

| Lighting | Power Consumption (kWh) | Energy-Saving Efficiency |
|---|---|---|
| 1 | 1.59 | 135.2% |
| 2 | 1.2 | 114.1% |
| Ours | 1.03 | 1 |
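The relationship between the power-consumption column and the reported savings can be checked with a short calculation. The sketch below assumes that energy-saving efficiency means the relative reduction in consumption, (reference − ours) / reference; that definition is an assumption, since the table itself does not state the formula. Under this assumption the result is about 35.2% against lighting 1 and about 14.2% against lighting 2, close to the reported 35.2% and 14.1% (the small difference may come from rounding of the consumption figures).

```python
def energy_saving(reference_kwh, dimmed_kwh):
    """Relative reduction in consumption versus a reference lighting setup.

    This definition is an assumption used for illustration; the source
    table does not spell out how its efficiency column is computed.
    """
    return (reference_kwh - dimmed_kwh) / reference_kwh


# Power consumption values copied from the table (kWh).
consumption = {"1": 1.59, "2": 1.2, "Ours": 1.03}

savings = {
    name: energy_saving(kwh, consumption["Ours"])
    for name, kwh in consumption.items()
    if name != "Ours"
}

for name, saving in savings.items():
    print(f"Saving relative to lighting {name}: {saving:.1%}")
```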


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tang, R.; Zhang, C.; Tang, K.; He, X.; He, Q. An Energy-Saving Road-Lighting Control System Based on Improved YOLOv5s. Computation 2023, 11, 66. https://doi.org/10.3390/computation11030066
