Joint Modulation of Facial Expression Processing by Contextual Congruency and Task Demands

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Stimuli

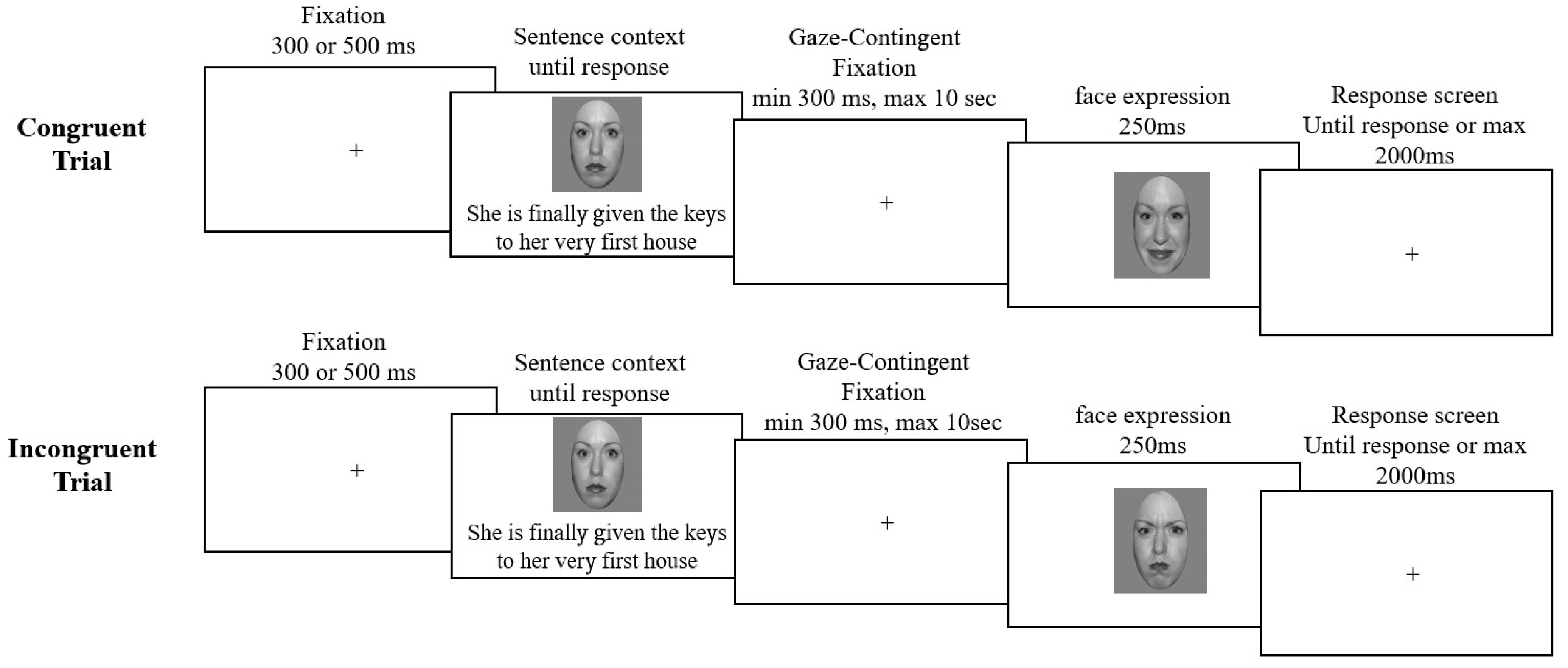

2.3. Procedure

2.4. Eye-Tracking and EEG Recordings

2.5. Data Processing

2.6. Data Analysis

3. Results

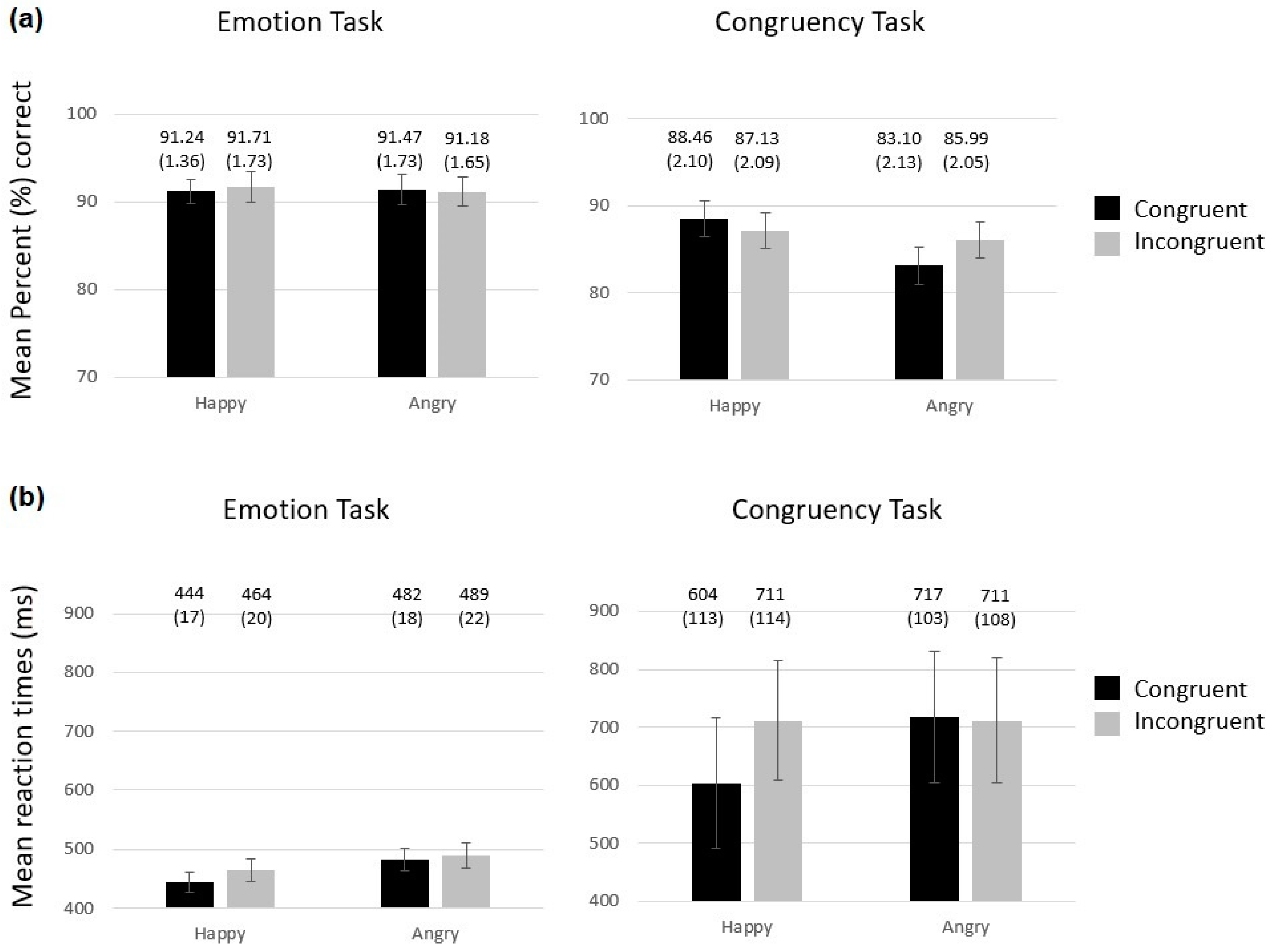

3.1. Behavioural Results

3.1.1. Percentage of Correct Responses

3.1.2. Mean Response Times (ms)

3.2. ERP Results

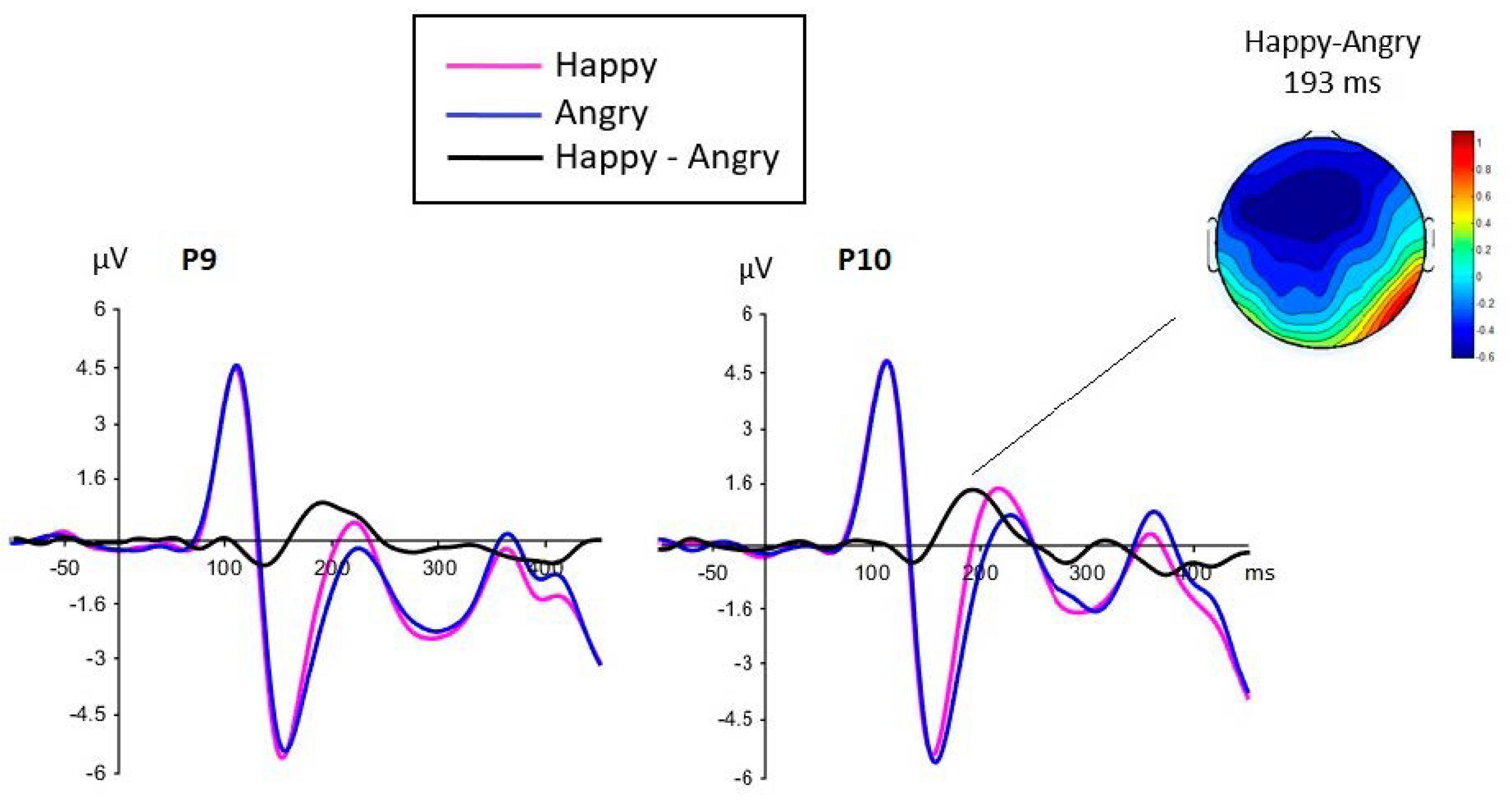

3.2.1. N170 Component

3.2.2. EPN Component

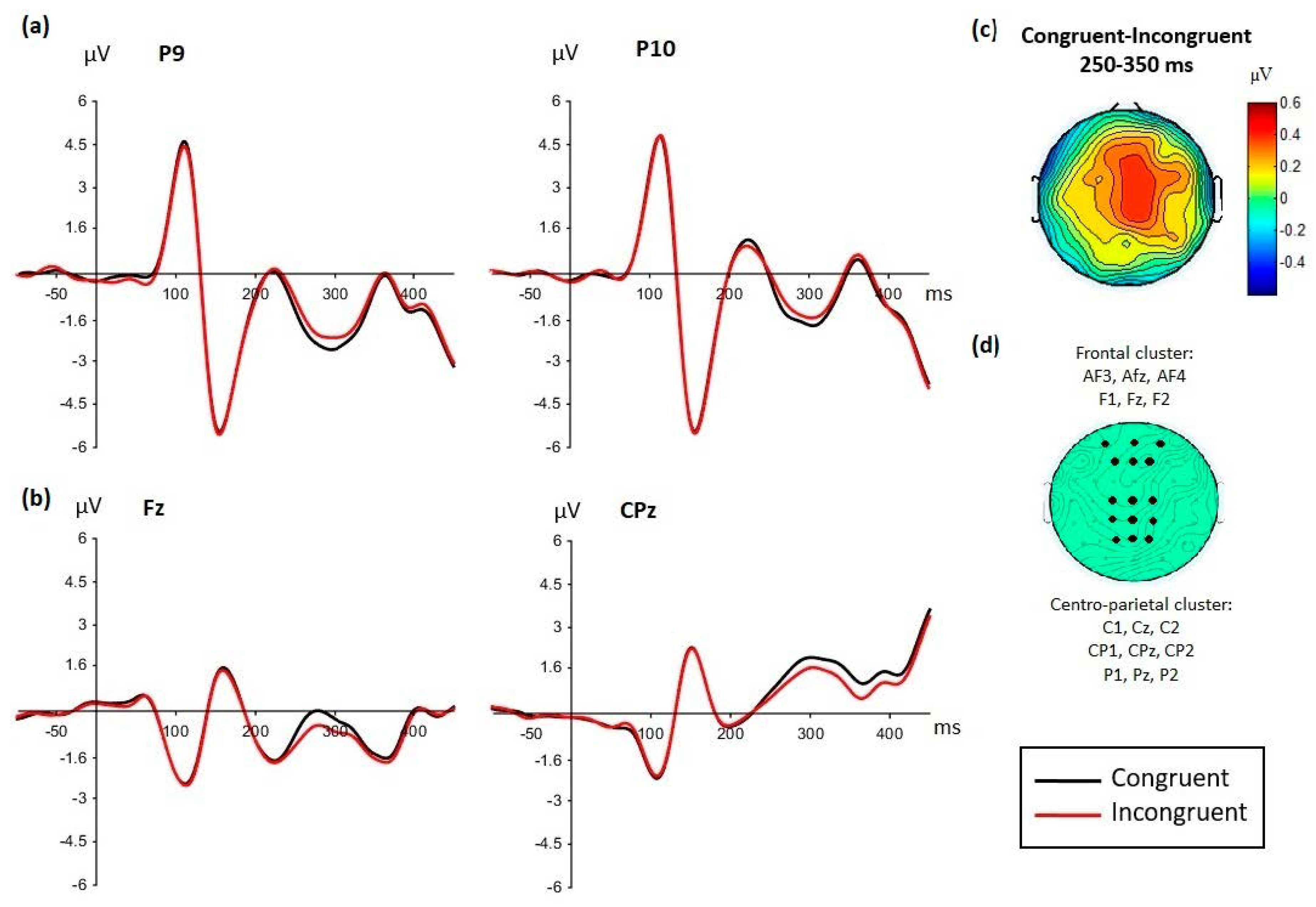

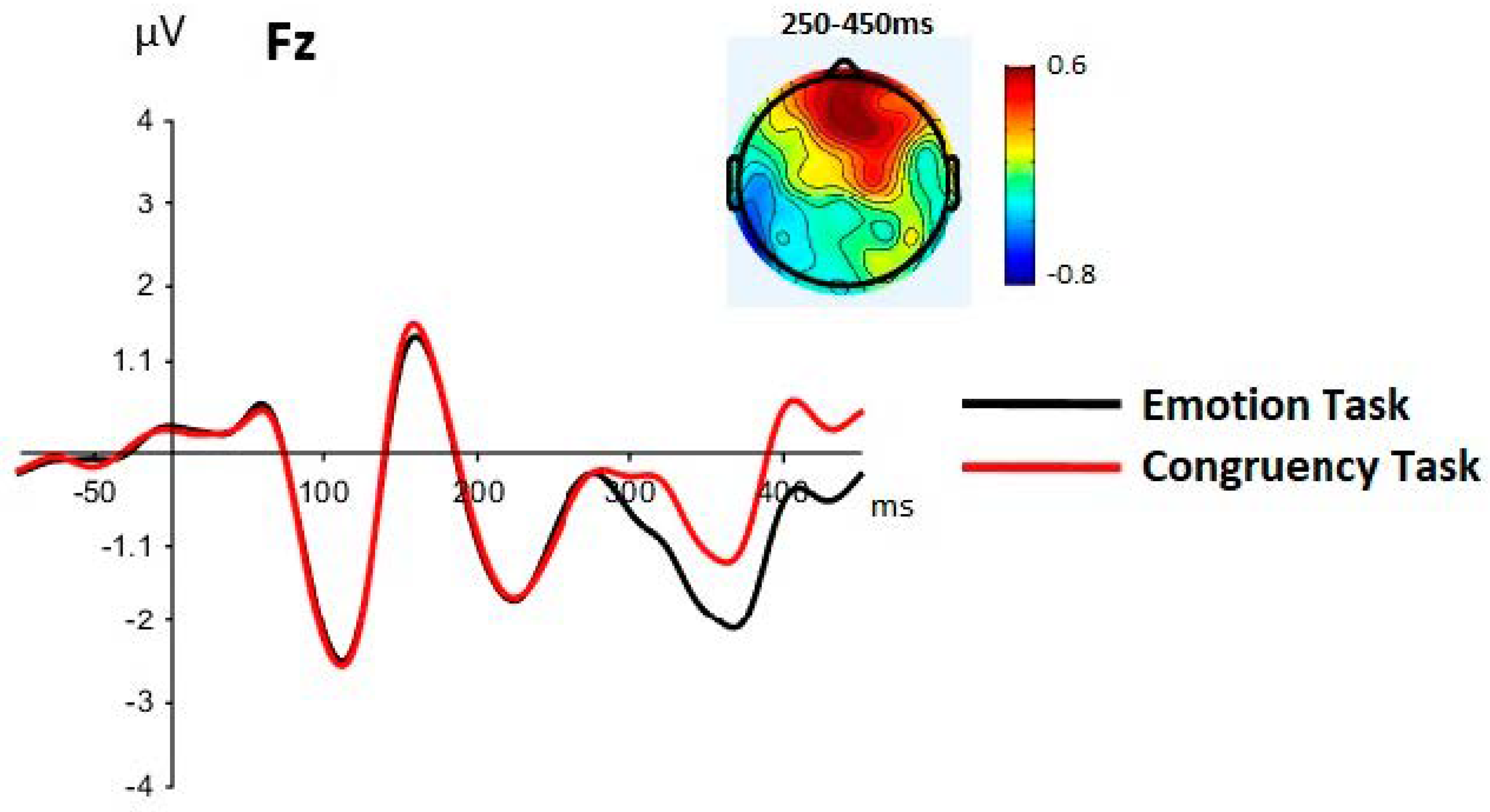

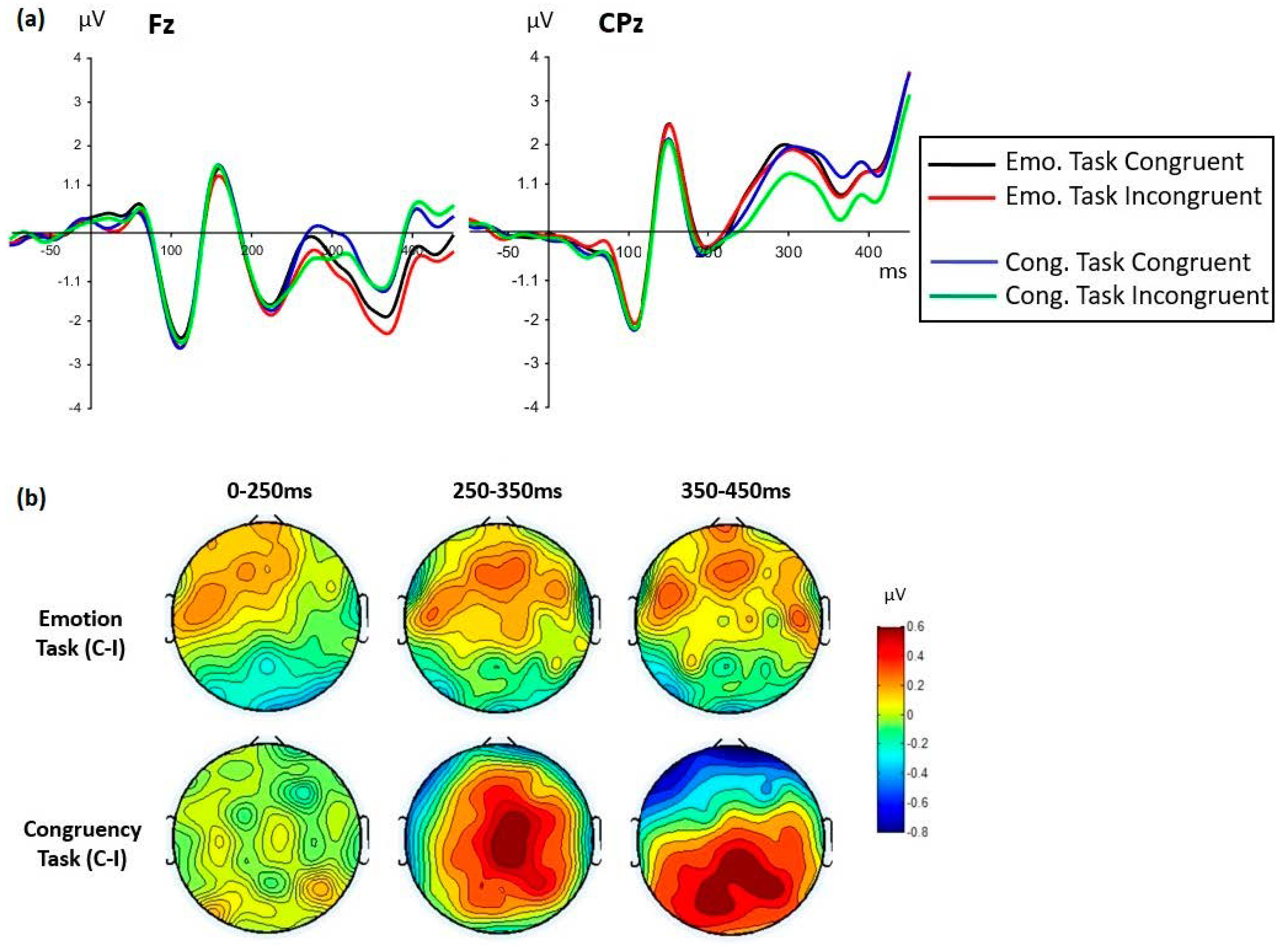

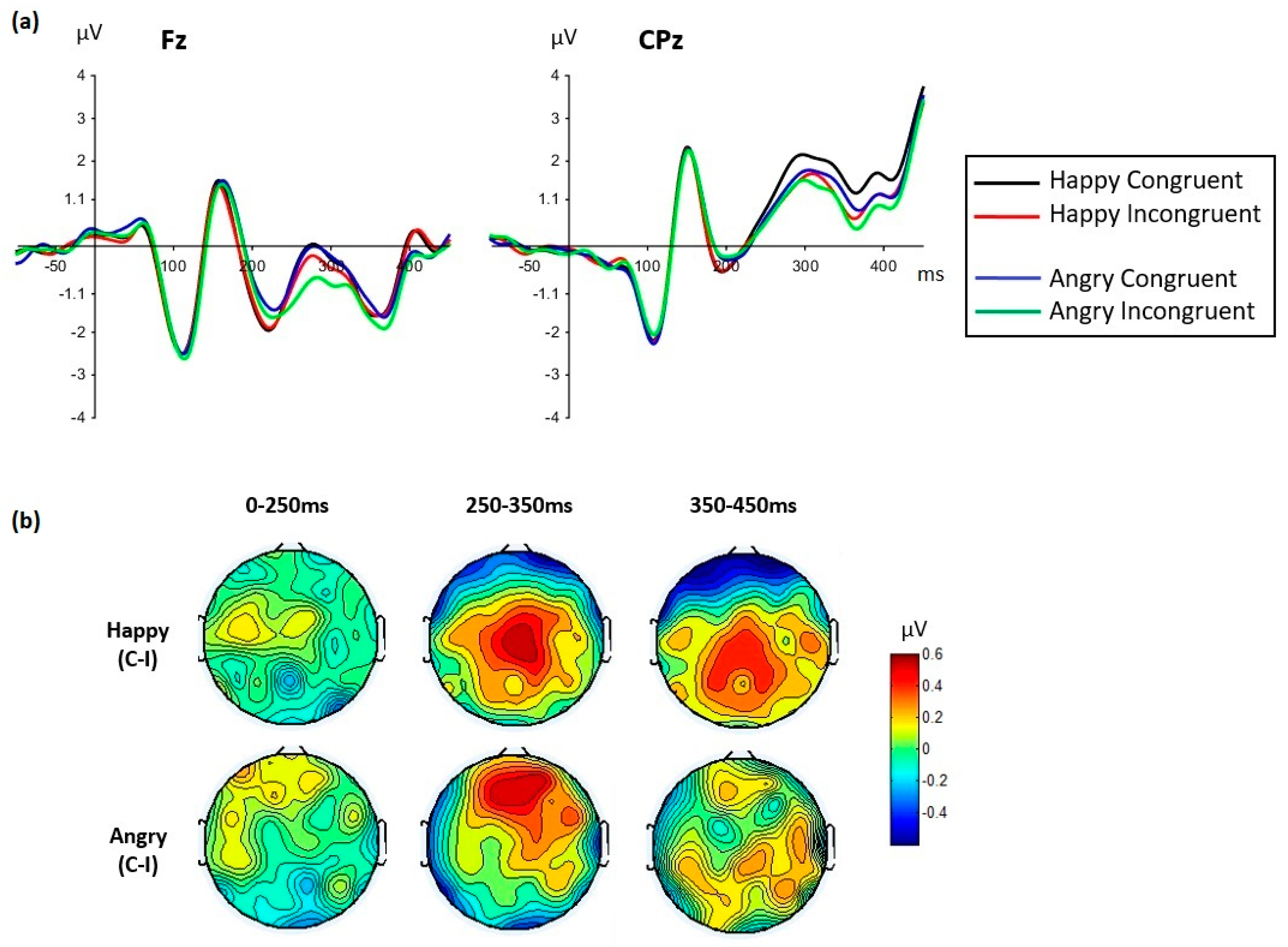

3.2.3. LPP-Like Components

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A. Sentences Used as Context Primes

| Negative sentences (anger inducing) |
|---|
| 1. He/she has been lining up for hours when someone cuts in front of him/her |
| 2. He/she has just realized that his/her new mobile phone was stolen |
| 3. He/she bought an appliance that is broken and they will not refund the money |
| 4. He/she has just noticed someone has vandalized his/her brand new car |
| 5. He/she is denied concert entrance because he/she was sold fake tickets |
| 6. His/her friend borrowed a hundred dollars and never paid him/her back |
| 7. He/she catches his/her partner cheating on him/her with his/her best friend |
| 8. He/she has missed his/her vacation trip because of an airport strike |
| 9. They have been speaking badly of his/her mother to infuriate him/her |
| 10. He/she is at a bus stop and the bus driver refuses to stop |

| Positive sentences (joy inducing) |
|---|
| 1. He/she has won two tickets to see his/her favorite band in concert |
| 2. He/she has received the great promotion that he/she wanted at work |
| 3. He/she is finally given the keys to his/her very first house |
| 4. He/she is enjoying the first day of his/her much-needed holidays |
| 5. He/she has been selected amongst many for a very important casting |
| 6. He/she finally got a date with the person of his/her dreams |
| 7. His/her country’s soccer team has just won the World Cup final |
| 8. He/she has just received a very important scholarship to study abroad |
| 9. He/she has just won the Outstanding-Graduate-of-the-Year Award |
| 10. He/she finds out his/her child received the best grades in his/her class |


| Task | Conditions | Mean Trial Number (SD) | Min/Max Trial Number |
|---|---|---|---|
| Emotion Task | Happy Congruent | 54.06 (11.68) | 30/75 |
| | Happy Incongruent | 54.08 (12.00) | 32/73 |
| | Angry Congruent | 54.58 (12.57) | 31/75 |
| | Angry Incongruent | 54.11 (11.58) | 27/76 |
| Congruency Task | Happy Congruent | 56.36 (12.17) | 36/76 |
| | Happy Incongruent | 54.58 (12.02) | 27/74 |
| | Angry Congruent | 52.39 (11.50) | 23/71 |
| | Angry Incongruent | 53.03 (12.31) | 29/71 |
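
The trial-count table reports, for each task and condition, the mean (SD) and the range of trials per participant. As a minimal sketch of how such a summary could be produced, the snippet below formats a list of per-participant counts. The counts are hypothetical, the function name is illustrative, and the use of the sample SD (n - 1 denominator) is an assumption, since the table does not state which estimator was used.

```python
from statistics import mean, stdev


def summarize_counts(counts: list[int]) -> str:
    """Format per-participant trial counts as 'Mean (SD) | Min/Max'.

    Assumption: SD is the sample standard deviation (n - 1 denominator);
    the source table does not specify the estimator.
    """
    m = mean(counts)
    sd = stdev(counts)  # sample SD, n - 1 in the denominator
    return f"{m:.2f} ({sd:.2f}) | {min(counts)}/{max(counts)}"


# Hypothetical retained-trial counts for one condition (not the study's data).
example = [30, 45, 52, 60, 75]
print(summarize_counts(example))
```

Swapping `stdev` for `statistics.pstdev` would give the population SD instead; with roughly 36 participants the two differ only slightly.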

| Left Hemisphere Electrode | Number of Participants | Right Hemisphere Electrode | Number of Participants |
|---|---|---|---|
| P7 | 1 | | |
| PO7 | 1 | TP10 | 1 |
| PO9 | 4 | PO10 | 6 |
| P9 | 30 | P10 | 29 |
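
The electrode table lists, per hemisphere, how many participants had the N170 scored at each site; the left counts (1 + 1 + 4 + 30) and the right counts (1 + 6 + 29) each sum to 36, consistent with the F(1,35) tests in the statistics table. A common way to arrive at such counts is to take, for each participant and hemisphere, the electrode where the N170 is largest. The sketch below assumes that rule, operationalized as the most negative peak amplitude, and uses made-up amplitudes; the function names are hypothetical, and this is an illustration rather than the authors' pipeline.

```python
from collections import Counter

# Candidate sites taken from the electrode table; the selection rule is an assumption.
LEFT = ("P7", "PO7", "PO9", "P9")
RIGHT = ("TP10", "PO10", "P10")


def pick_n170_site(peaks: dict[str, float], candidates: tuple[str, ...]) -> str:
    """Return the candidate electrode with the most negative N170 peak (in µV).

    Electrodes absent from `peaks` are ignored; raises ValueError if none remain.
    """
    available = [e for e in candidates if e in peaks]
    return min(available, key=lambda e: peaks[e])


def tally_sites(participants: list[dict[str, float]],
                candidates: tuple[str, ...]) -> Counter:
    """Count how many participants had their N170 scored at each electrode."""
    return Counter(pick_n170_site(p, candidates) for p in participants)


# Hypothetical N170 peak amplitudes for three participants (not the study's data).
subjects = [
    {"P7": -3.1, "PO7": -4.0, "PO9": -5.2, "P9": -6.8, "TP10": -2.0, "PO10": -7.1, "P10": -6.9},
    {"P7": -5.5, "PO7": -4.1, "PO9": -4.9, "P9": -5.0, "TP10": -3.3, "PO10": -4.4, "P10": -6.0},
    {"P7": -2.2, "PO7": -3.0, "PO9": -4.7, "P9": -6.1, "TP10": -1.9, "PO10": -3.8, "P10": -5.4},
]
print(tally_sites(subjects, LEFT))
print(tally_sites(subjects, RIGHT))
```

Choosing the site per participant, rather than fixing one electrode for everyone, accommodates individual differences in where the N170 peaks, which is why most participants cluster on P9/P10 while a few fall on neighbouring sites.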

| Statistical Effects | 250–350 ms | 350–450 ms |
|---|---|---|
| (a) Task | F(1,35) = 0.831, MSE = 3.21, p = 0.36, ηp² = 0.023 | F(1,35) = 12.32, MSE = 5.53, p = 0.001, ηp² = 0.260 |
| (b) Emotion | F(1,35) = 3.04, MSE = 1.56, p = 0.09, ηp² = 0.080 | F(1,35) = 7.43, MSE = 2.01, p = 0.010, ηp² = 0.175 |
| (c) Congruency | F(1,35) = 43.27, MSE = 0.75, p < 0.0001, ηp² = 0.553; congruent > incongruent | F(1,35) = 6.07, MSE = 1.16, p = 0.019, ηp² = 0.148; congruent > incongruent |
| (d) Task × cluster | F(1,35) = 4.57, MSE = 5.05, p = 0.04, ηp² = 0.115 | F(1,35) = 8.55, MSE = 5.21, p = 0.006, ηp² = 0.196 |
| Frontal cluster: task effect | F(1,35) = 5.29, MSE = 3.92, p = 0.028, ηp² = 0.131; congruency task > emotion task | F(1,35) = 16.74, MSE = 6.65, p = 0.001, ηp² = 0.324; congruency task > emotion task |
| Centro-parietal cluster: task effect | F(1,35) = 1.15, MSE = 4.35, p = 0.29, ηp² = 0.032 | F(1,35) = 0.31, MSE = 4.08, p = 0.58, ηp² = 0.009 |
| (e) Task × congruency | F(1,35) = 5.49, MSE = 0.945, p = 0.025, ηp² = 0.136 | F(1,35) = 0.00, MSE = 0.981, p = 0.991, ηp² = 0.000 |
| Emotion task: congruency effect | F(1,35) = 9.22, MSE = 0.634, p = 0.005, ηp² = 0.208; congruent > incongruent | |
| Congruency task: congruency effect | F(1,35) = 29.97, MSE = 1.06, p < 0.0001, ηp² = 0.461; congruent > incongruent | |
| (f) Congruency × cluster | F(1,35) = 0.113, MSE = 0.942, p = 0.739, ηp² = 0.003 | F(1,35) = 8.33, MSE = 1.18, p = 0.007, ηp² = 0.192 |
| (g) Task × congruency × cluster | F(1,35) = 2.63, MSE = 1.41, p = 0.113, ηp² = 0.070 | F(1,35) = 12.86, MSE = 2.13, p = 0.001, ηp² = 0.269 |
| Frontal cluster: task × congruency | | F(1,35) = 8.01, MSE = 1.70, p = 0.008, ηp² = 0.186 |
| Frontal cluster, emotion task: congruency effect | | F(1,35) = 4.75, MSE = 1.18, p = 0.036, ηp² = 0.119; congruent > incongruent |
| Frontal cluster, congruency task: congruency effect | | F(1,35) = 4.59, MSE = 1.77, p = 0.039, ηp² = 0.116; incongruent > congruent |
| Centro-parietal cluster: task × congruency | | F(1,35) = 9.77, MSE = 1.41, p = 0.004, ηp² = 0.218 |
| Centro-parietal cluster, emotion task: congruency effect | | No effect of congruency (p = 0.78) |
| Centro-parietal cluster, congruency task: congruency effect | | F(1,35) = 20.42, MSE = 1.49, p < 0.0001, ηp² = 0.368; congruent > incongruent |
| (h) Emotion × congruency | F(1,35) = 4.41, MSE = 0.361, p = 0.043, ηp² = 0.112 | F(1,35) = 0.60, MSE = 0.750, p = 0.444, ηp² = 0.017 |
| (i) Emotion × congruency × cluster | F(1,35) = 15.43, MSE = 1.17, p = 0.001, ηp² = 0.306 | F(1,35) = 6.88, MSE = 1.55, p = 0.013, ηp² = 0.164 |
| Frontal cluster: emotion × congruency | F(1,35) = 13.78, MSE = 1.10, p = 0.001, ηp² = 0.283 | F(1,35) = 4.65, MSE = 1.66, p = 0.038, ηp² = 0.117 |
| Frontal cluster, happy faces: congruency effect | No effect: F(1,35) = 0.008, MSE = 0.677, p = 0.92, ηp² < 0.001 | F(1,35) = 4.29, MSE = 1.13, p = 0.046, ηp² = 0.109; congruent > incongruent |
| Frontal cluster, angry faces: congruency effect | F(1,35) = 28.65, MSE = 1.03, p < 0.0001, ηp² = 0.450; congruent > incongruent | No effect: F(1,35) = 1.68, MSE = 1.77, p = 0.203, ηp² = 0.046 |
| Centro-parietal cluster: emotion × congruency | F(1,35) = 10.42, MSE = 0.431, p = 0.003, ηp² = 0.229 | F(1,35) = 5.29, MSE = 0.637, p = 0.027, ηp² = 0.131 |
| Centro-parietal cluster, happy faces: congruency effect | F(1,35) = 31.88, MSE = 0.638, p < 0.0001, ηp² = 0.477; congruent > incongruent | F(1,35) = 38.14, MSE = 0.462, p < 0.0001, ηp² = 0.522; congruent > incongruent |
| Centro-parietal cluster, angry faces: congruency effect | No effect: F(1,35) = 2.61, MSE = 0.878, p = 0.11, ηp² = 0.069 | No effect: F(1,35) = 2.01, MSE = 1.27, p = 0.16, ηp² = 0.055 |
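
Each effect size in the statistics table can be recovered from its F ratio and degrees of freedom. Since ηp² = SS_effect / (SS_effect + SS_error) and F = (SS_effect / df_effect) / (SS_error / df_error), it follows that ηp² = (F × df_effect) / (F × df_effect + df_error). For example, the congruency main effect at 250–350 ms gives 43.27 / (43.27 + 35) ≈ 0.553. The helper below is a minimal sketch of this check; its name is hypothetical, and the F values are copied from the table.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f * df_effect) / (f * df_effect + df_error)


# (F, reported partial eta squared) pairs from the table; all tests are F(1,35).
reported = {
    "(a) Task, 350-450 ms": (12.32, 0.260),
    "(c) Congruency, 250-350 ms": (43.27, 0.553),
    "(i) Emotion x congruency x cluster, 250-350 ms": (15.43, 0.306),
}
for label, (f, eta_reported) in reported.items():
    eta = partial_eta_squared(f, 1, 35)
    print(f"{label}: computed {eta:.3f}, reported {eta_reported:.3f}")
```

Applied to the (g) row at 250–350 ms, F(1,35) = 2.63 gives ηp² ≈ 0.070, so the same one-line check is a quick way to catch transcription slips in the p or ηp² columns.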

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Aguado, L.; Parkington, K.B.; Dieguez-Risco, T.; Hinojosa, J.A.; Itier, R.J. Joint Modulation of Facial Expression Processing by Contextual Congruency and Task Demands. Brain Sci. 2019, 9, 116. https://doi.org/10.3390/brainsci9050116
