Extensive Visual Training in Adulthood Reduces an Implicit Neural Marker of the Face Inversion Effect

Abstract

1. Introduction

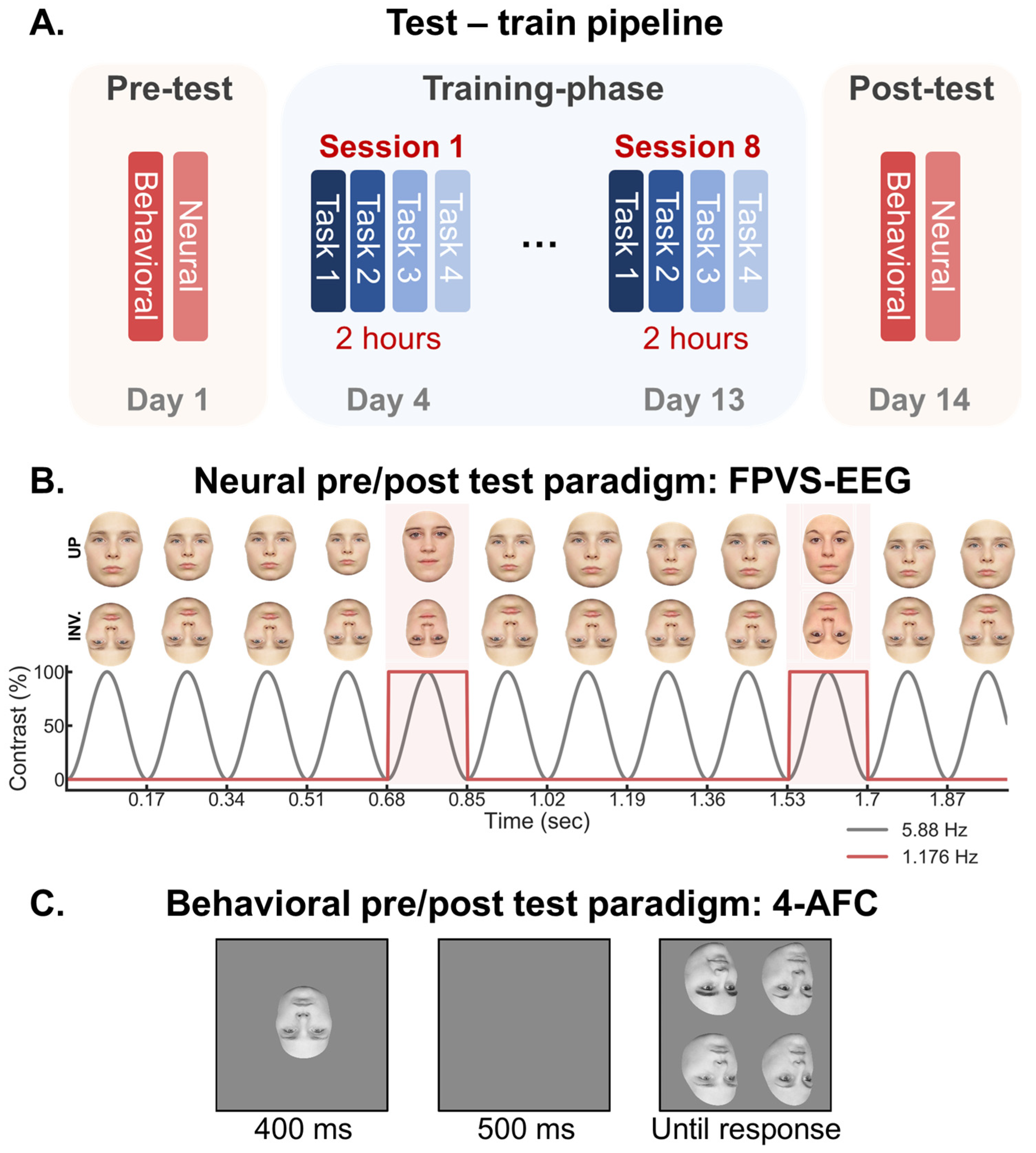

2. Methods

2.1. Training Sessions

2.2. Testing Sessions

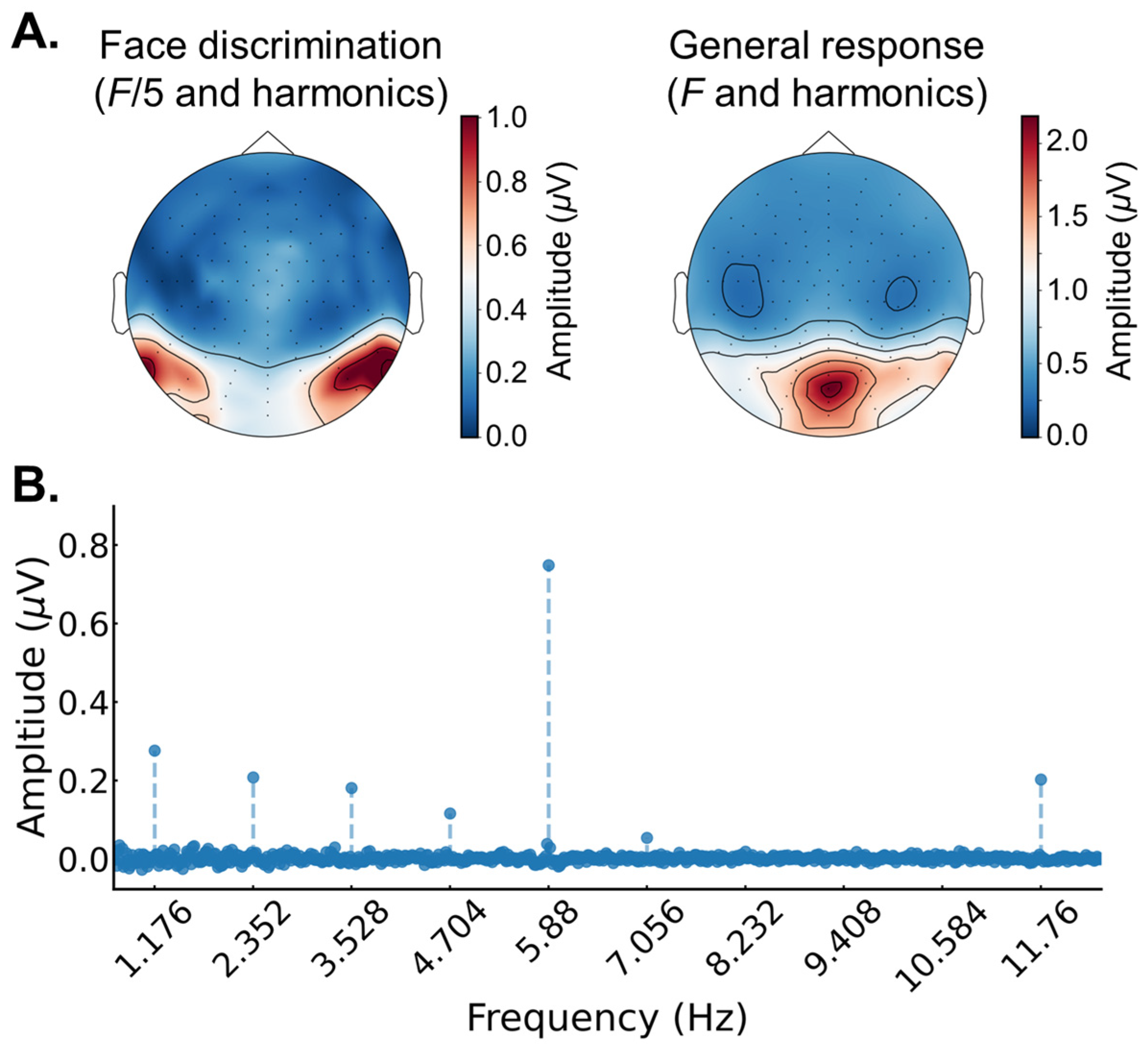

2.3. EEG Analysis

3. Results

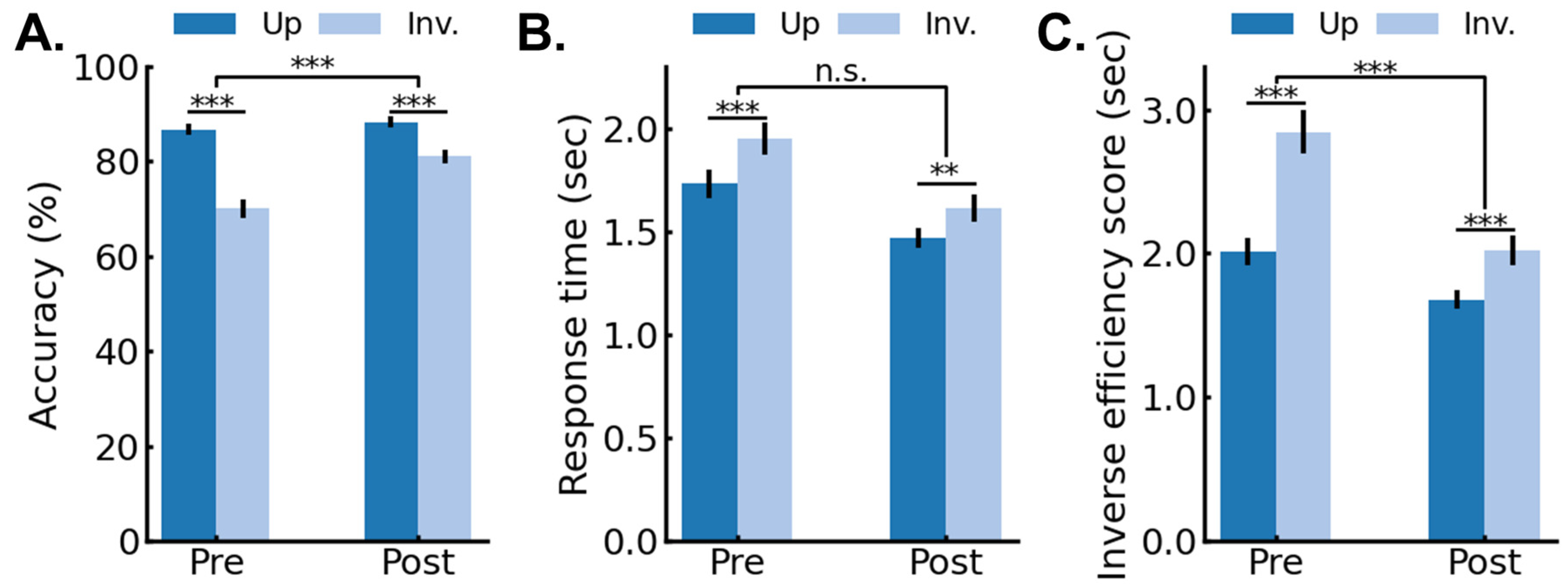

3.1. Behavioral Results: Pre/Post 4AFC Delayed Matching Task

3.2. EEG Results: Pre/Post FPVS-EEG Test

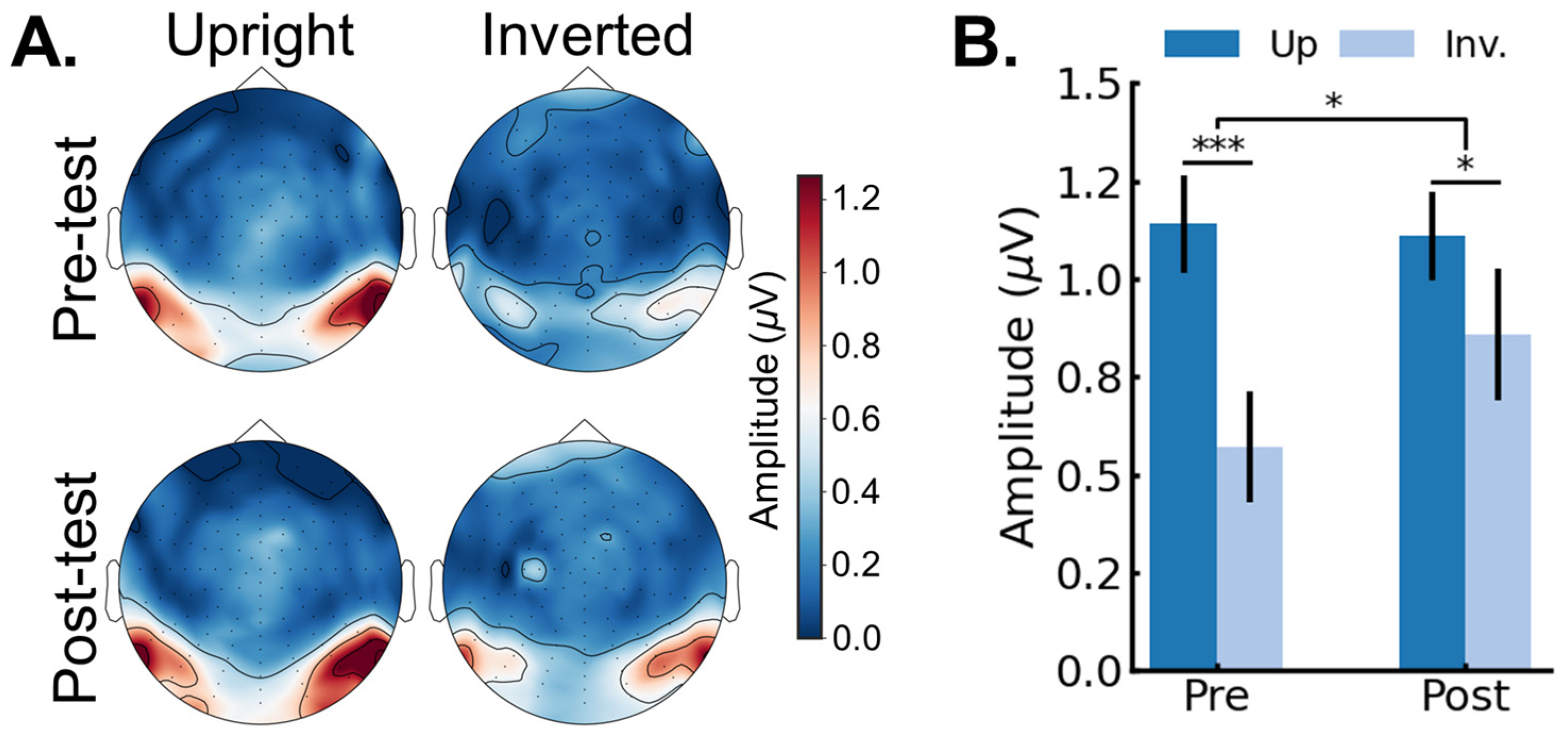

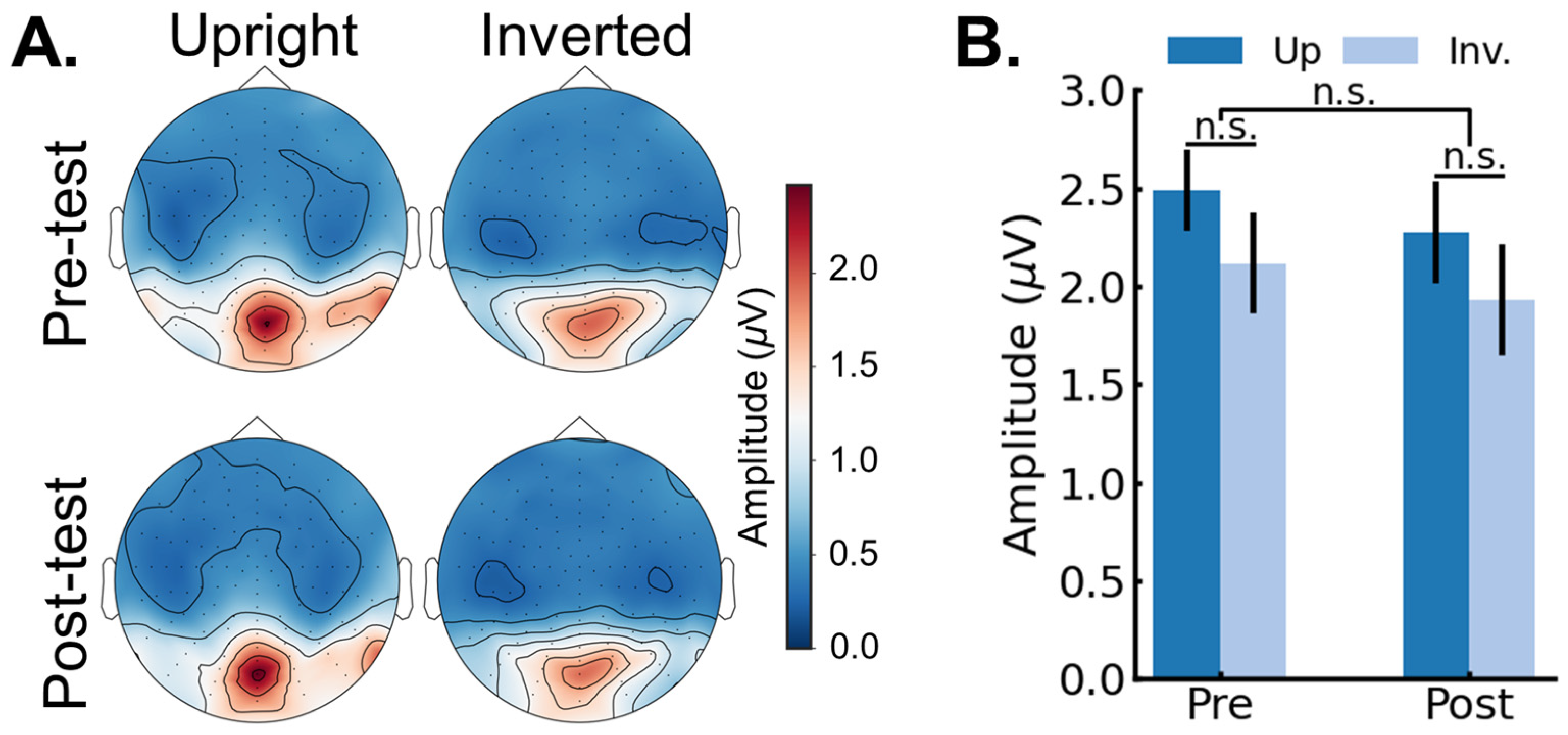

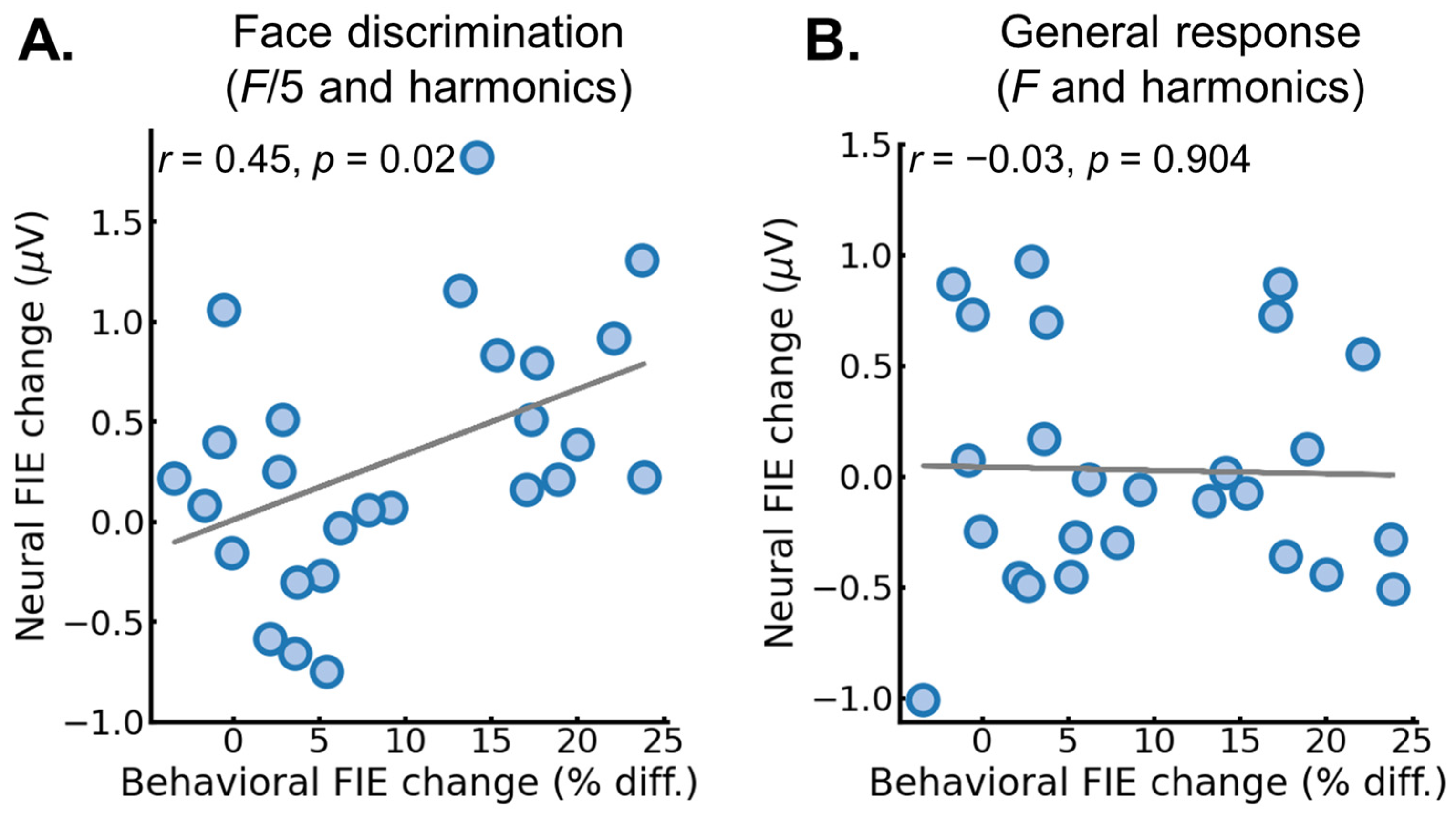

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Session / step | Task | Estimated time |
|---|---|---|
| Pre-test, day 1, week 1 (Friday) | | |
| 1 | Forced-choice matching task Set 0 (32 faces) | |
| Learning sessions | | |
| Session 1, day 4, week 2 (Monday) | | |
| 1 | Exposure/learning task Set 1 (10 faces) | ± 10 min |
| 2 | Naming task Set 1 | ± 75 min |
| 3 | Forced-choice matching task | ± 25 min |
| Session 2, day 5, week 2 (Tuesday) | | |
| 1 | Exposure/learning task Set 1 | ± 10 min |
| 2 | Naming task Set 1 | ± 50 min |
| 3 | Old/New task Set 1 | ± 5 min |
| 4 | Exposure/learning task Set 2 (10 faces) | ± 10 min |
| 5 | Naming task Set 2 | ± 20 min |
| 6 | Naming task Set 1 + Set 2 | ± 15 min |
| Session 3, day 6, week 2 (Wednesday) | | |
| 1 | Exposure/learning task Set 1 | ± 10 min |
| 2 | Exposure/learning task Set 2 | ± 10 min |
| 3 | Naming task Set 1 + Set 2 | ± 60 min |
| 4 | Forced-choice matching task | ± 20 min |
| 5 | Naming task Set 1 + Set 2 | ± 15 min |
| Session 4, day 7, week 2 (Thursday) | | |
| 1 | Naming task Set 1 + Set 2 | ± 60 min |
| 2 | Exposure/learning task Set 1 | ± 10 min |
| 3 | Exposure/learning task Set 2 | ± 10 min |
| 4 | Old/New task Set 1 + Set 2 | ± 10 min |
| 5 | Naming task Set 1 + Set 2 (two successes required) | ± 15 min |
| Session 5, day 8, week 2 (Friday) | | |
| 1 | Old/New task Set 1 + Set 2 | ± 10 min |
| 2 | Naming task Set 1 + Set 2 | ± 30 min |
| 3 | Exposure/learning task Set 3 | ± 10 min |
| 4 | Naming task Set 3 (10 faces) | ± 15 min |
| 5 | Forced-choice matching task | ± 15 min |
| 6 | Naming task Set 3 | ± 25 min |
| 7 | Naming task Set 1 + Set 2 + Set 3 | ± 15 min |
| Session 6, day 11, week 3 (Monday) | | |
| 1 | Exposure/learning task Set 3 | ± 10 min |
| 2 | Naming task Set 1 + Set 2 + Set 3 | ± 40 min |
| 3 | Old/New task Set 1 + Set 2 + Set 3 | ± 15 min |
| 4 | Forced-choice matching task | ± 15 min |
| 5 | Naming task Set 1 + Set 2 + Set 3 (two successes required) | ± 40 min |
| Session 7, day 12, week 3 (Tuesday) | | |
| 1 | Old/New task Set 1 + Set 2 + Set 3 | ± 15 min |
| 2 | Forced-choice matching task | ± 15 min |
| 3 | Naming task Set 1 + Set 2 + Set 3 (two successes required) | ± 40 min |
| 4 | Old/New task Set 1 + Set 2 + Set 3 | ± 15 min |
| 5 | Naming task Set 1 + Set 2 + Set 3 (two successes required) | ± 40 min |
| Session 8, day 13, week 3 (Wednesday) | | |
| 1 | Forced-choice matching task | ± 15 min |
| 2 | Naming task Set 1 + Set 2 + Set 3 (two successes required) | ± 40 min |
| 3 | Old/New task Set 1 + Set 2 + Set 3 | ± 15 min |
| 4 | Naming task Set 1 + Set 2 + Set 3 | ± 40 min |
| Post-test, day 14, week 3 (Thursday) | | |
| 1 | Forced-choice matching task (Set 4) | |
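
Because every learning-session step in the training schedule carries a "±" time estimate, the planned training load can be tallied directly. The following Python sketch sums those estimates per session. The dictionary layout and the function names `session_totals` and `total_hours` are illustrative assumptions, not part of the study's materials, and the numbers are the schedule's approximate values rather than measured durations.

```python
# Approximate task durations (minutes) for each learning session, transcribed
# from the "±" estimates in the training schedule. Pre- and post-tests are
# excluded because the schedule gives no durations for them.
# NOTE: names and data structure here are illustrative, not the authors' code.
SESSION_MINUTES = {
    1: [10, 75, 25],
    2: [10, 50, 5, 10, 20, 15],
    3: [10, 10, 60, 20, 15],
    4: [60, 10, 10, 10, 15],
    5: [10, 30, 10, 15, 15, 25, 15],
    6: [10, 40, 15, 15, 40],
    7: [15, 15, 40, 15, 40],
    8: [15, 40, 15, 40],
}


def session_totals(schedule):
    """Return {session number: summed estimated minutes} for each session."""
    return {session: sum(tasks) for session, tasks in schedule.items()}


def total_hours(schedule):
    """Return the summed estimated training time across sessions, in hours."""
    return sum(session_totals(schedule).values()) / 60


if __name__ == "__main__":
    for session, minutes in session_totals(SESSION_MINUTES).items():
        print(f"Session {session}: ~{minutes} min")
    print(f"Total: ~{total_hours(SESSION_MINUTES):.2f} h")
```

Run as a script, it prints one line per session and a total of roughly 15 h of planned time. Treat that figure only as arithmetic on the listed estimates: naming tasks marked "(two successes required)" may plausibly have taken more or less time than listed, and the pre- and post-test durations are not given.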

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hagen, S.; Laguesse, R.; Rossion, B. Extensive Visual Training in Adulthood Reduces an Implicit Neural Marker of the Face Inversion Effect. Brain Sci. 2024, 14, 146. https://doi.org/10.3390/brainsci14020146