Maintenance of Bodily Expressions Modulates Functional Connectivity Between Prefrontal Cortex and Extrastriate Body Area During Working Memory Processing

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

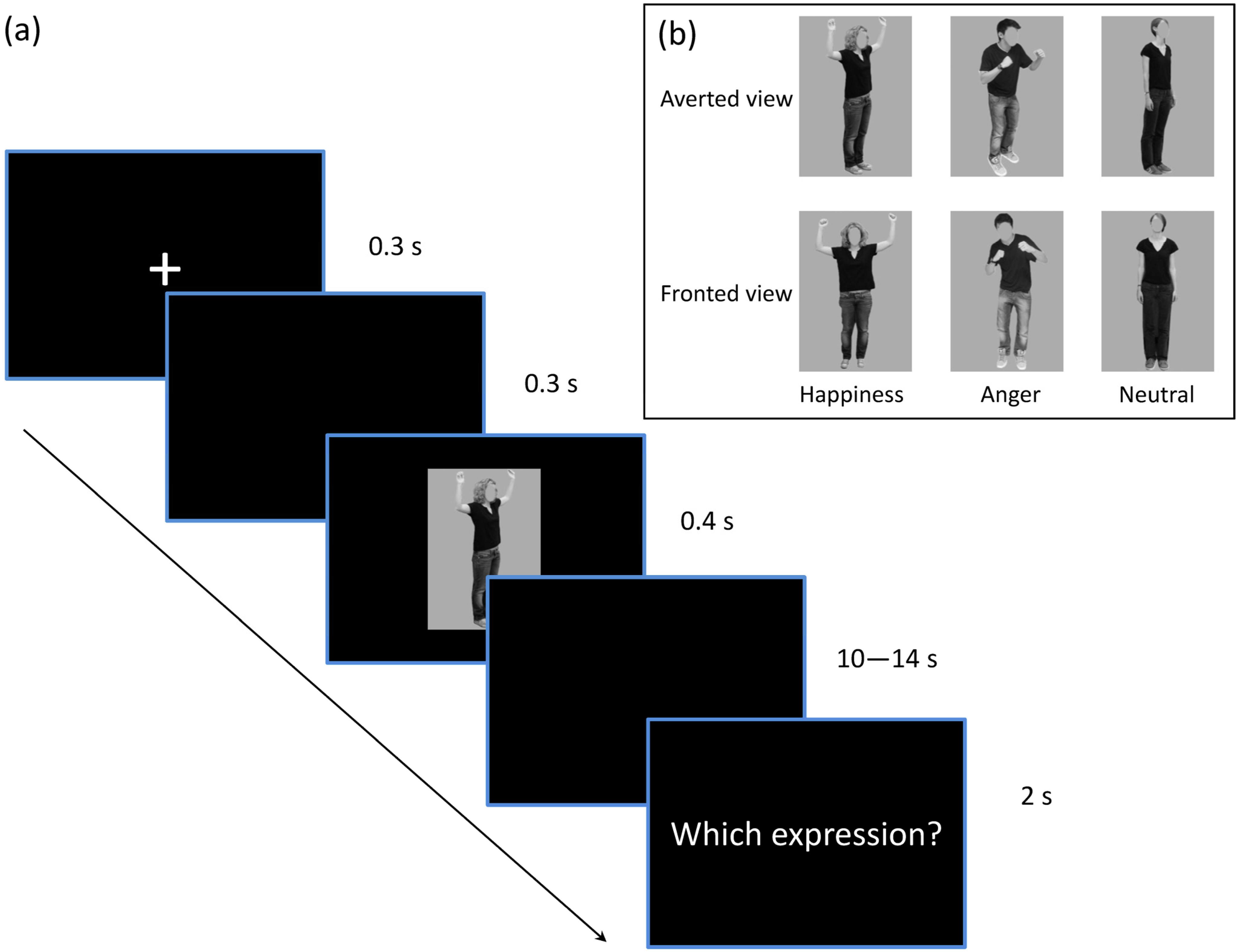

2.2. Stimuli and Experimental Procedure

2.3. fMRI Data Acquisition

2.4. fMRI Data Preprocessing

2.5. Behavioral Analysis

2.6. Univariate Analysis

2.7. Multivariate Analysis

2.8. Functional Connectivity Analysis: gPPI

3. Results

3.1. Behavioral Performance

3.2. Analysis of Condition Effects at Activation Level

3.3. Multivariate Decoding of Emotion-Related Information

3.4. Functional Connectivity

4. Discussion

4.1. Brain Areas for Initial Processing

4.2. Importance of PFC in Information Storage

4.3. Involvement of Functional Connectivity in the Maintenance Period

4.4. Deep Exploration of Abstract Concepts Beyond Simple Repetitions of Information

4.5. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References


| Brain Areas | Peak MNI x | Peak MNI y | Peak MNI z | F or t | Cluster Size |
|---|---|---|---|---|---|
| Main effects of emotion | | | | | |
| PFC (IFG/MFG/AI) | −46 | 32 | 8 | 20.951 | 980 |
| PFC (IFG/AI) | 50 | 24 | 12 | 15.155 | 349 |
| AI | 40 | 30 | −8 | 11.571 | 63 |
| ACC (mPFC) | 6 | 54 | −10 | 11.887 | 344 |
| pre-SMA | −6 | 22 | 42 | 10.954 | 169 |
| IPS | −32 | −42 | 40 | 12.836 | 89 |
| AG | 52 | −52 | 26 | 10.443 | 31 |
| EBA | −50 | −56 | −2 | 23.077 | 649 |
| Inter-emotion decoding | | | | | |
| PFC (IFG/MFG) | −48 | 26 | 28 | 7.198 | 765 |
| PFC (IFG/MFG) | 54 | 18 | 26 | 4.867 | 88 |
| ACC | 6 | 34 | 24 | 4.715 | 54 |
| PreC | −32 | −20 | 64 | 6.285 | 139 |
| IPS | −32 | −60 | 46 | 5.463 | 100 |
| Precu | 2 | −72 | 30 | 6.294 | 223 |
| EBA | −54 | −62 | −2 | 6.493 | 235 |

| Brain Areas | Peak MNI x | Peak MNI y | Peak MNI z | t | Cluster Size |
|---|---|---|---|---|---|
| Univariate analysis: Happiness > Neutral | | | | | |
| PFC (IFG/MFG/AI) | 50 | 24 | 12 | 5.488 | 1451 |
| PFC (IFG/AI) | −46 | 30 | 8 | 5.956 | 1733 |
| pre-SMA | −6 | 22 | 44 | 4.382 | 358 |
| EBA (FBA) | −50 | −56 | −4 | 6.85 | 1078 |
| EBA | 50 | −60 | −10 | 4.61 | 135 |
| IPS | −32 | −42 | 38 | 4.861 | 240 |
| Univariate analysis: Anger > Neutral | | | | | |
| PFC (IFG/AI) | −42 | 24 | 8 | 5.427 | 450 |
| PFC (IFG) | 54 | 32 | 4 | 4.93 | 120 |
| EBA | −48 | −60 | −4 | 4.31 | 68 |
| Multivariate analysis: Happiness > Neutral | | | | | |
| ATL | −50 | −4 | −30 | 4.939 | 66 |
| PFC (MFG/IFG) | −38 | 22 | 20 | 6.732 | 342 |
| PFC (MFG) | −46 | 12 | 42 | 5.16 | 88 |
| PFC (WM) | 44 | 32 | 28 | 5.641 | 62 |
| CS | −34 | −24 | 60 | 6.594 | 340 |
| IPS | −44 | −42 | 40 | 6.184 | 847 |
| IPS | 26 | −70 | 30 | 6.292 | 433 |
| SOG | −32 | −80 | 24 | 5.728 | 76 |
| pre-SMA | −6 | 28 | 46 | 5.197 | 74 |
| MCC | 4 | −30 | 36 | 6.13 | 239 |
| PreC | −36 | −2 | 46 | 4.504 | 57 |
| PreC | 38 | −4 | 44 | 4.721 | 55 |
| EBA (FBA) | −48 | −62 | −4 | 6.265 | 627 |
| EBA | 48 | −56 | −6 | 4.878 | 53 |
| Multivariate analysis: Anger > Neutral | | | | | |
| PFC (IFG/MFG) | 52 | 16 | 28 | 5.925 | 31 |
| PFC (MFG) | 36 | 56 | 16 | 7.207 | 62 |
| EBA | −56 | −66 | −4 | 6.205 | 80 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ren, J.; Zhang, M.; Liu, S.; He, W.; Luo, W. Maintenance of Bodily Expressions Modulates Functional Connectivity Between Prefrontal Cortex and Extrastriate Body Area During Working Memory Processing. Brain Sci. 2024, 14, 1172. https://doi.org/10.3390/brainsci14121172
