Visual Deprivation Alters Functional Connectivity of Neural Networks for Voice Recognition: A Resting-State fMRI Study

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

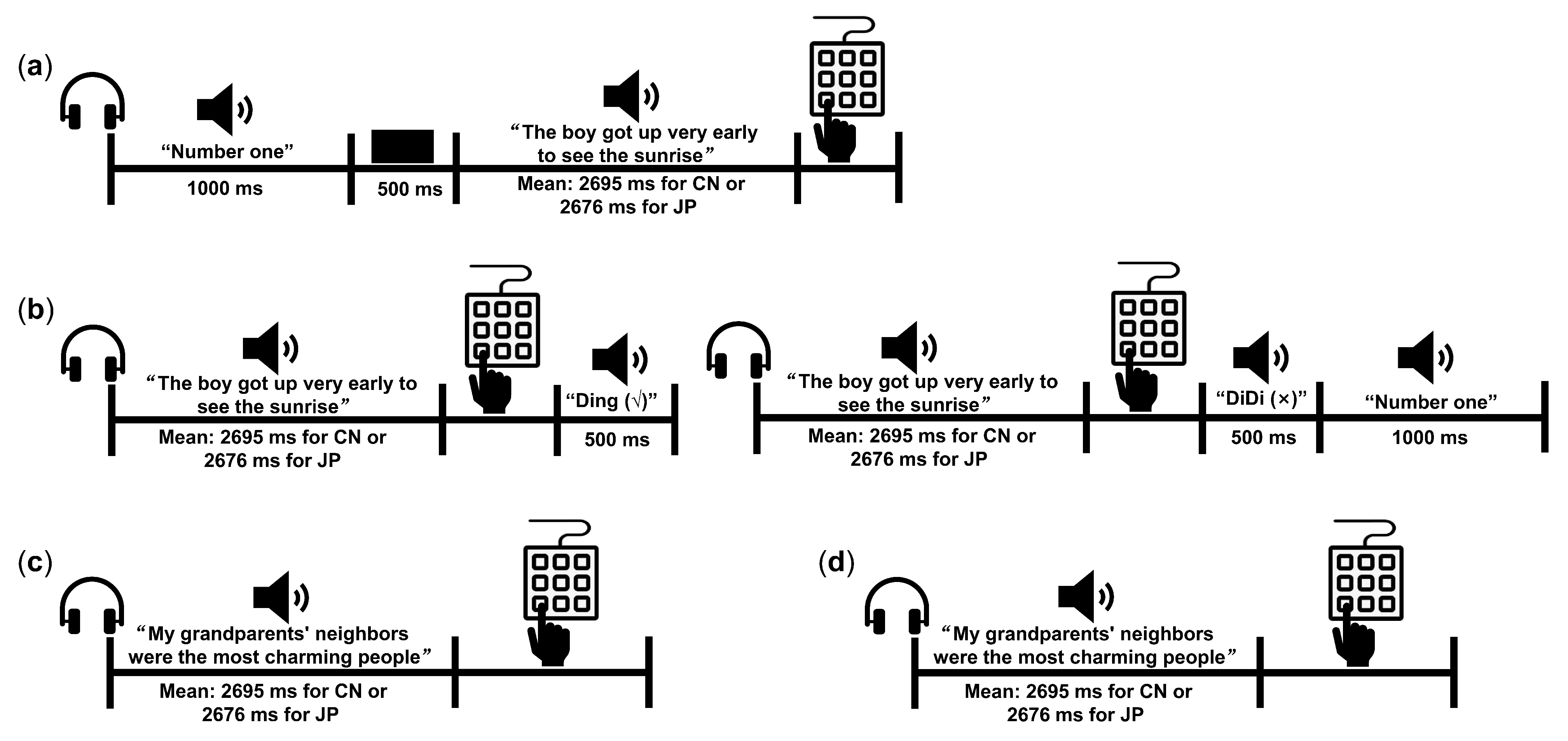

2.2. Stimuli and Procedure of the Behavioral Experiment

- Familiarization phase

- Practice phase

- Generalization phase (GP)

- Delayed memory phase (DP)

2.3. rs-fMRI Data Acquisition and Preprocessing

2.4. Seed-Based FC Analysis

3. Results

3.1. Behavioral Results

3.2. Changes in Functional Connectivity among the Voice- and Face-Sensitive Areas in the Early Blind

3.3. Correlations between Voice-Recognition Ability and the Strengths of FC

4. Discussion

4.1. Enhanced Internal Connections of Voice Perception Network in the Early Blind

4.2. Reorganization of the Internetwork Connections between the Voice- and Face-Sensitive Areas in the Early Blind

4.3. Neuroplastic Changes of the Face-Sensitive Areas in the Early Blind

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Pang, W.; Zhou, W.; Ruan, Y.; Zhang, L.; Shu, H.; Zhang, Y.; Zhang, Y. Visual Deprivation Alters Functional Connectivity of Neural Networks for Voice Recognition: A Resting-State fMRI Study. Brain Sci. 2023, 13, 636. https://doi.org/10.3390/brainsci13040636

Pang W, Zhou W, Ruan Y, Zhang L, Shu H, Zhang Y, Zhang Y. Visual Deprivation Alters Functional Connectivity of Neural Networks for Voice Recognition: A Resting-State fMRI Study. Brain Sciences. 2023; 13(4):636. https://doi.org/10.3390/brainsci13040636

Chicago/Turabian StylePang, Wenbin, Wei Zhou, Yufang Ruan, Linjun Zhang, Hua Shu, Yang Zhang, and Yumei Zhang. 2023. "Visual Deprivation Alters Functional Connectivity of Neural Networks for Voice Recognition: A Resting-State fMRI Study" Brain Sciences 13, no. 4: 636. https://doi.org/10.3390/brainsci13040636

APA StylePang, W., Zhou, W., Ruan, Y., Zhang, L., Shu, H., Zhang, Y., & Zhang, Y. (2023). Visual Deprivation Alters Functional Connectivity of Neural Networks for Voice Recognition: A Resting-State fMRI Study. Brain Sciences, 13(4), 636. https://doi.org/10.3390/brainsci13040636