Features and Extra-Striate Body Area Representations of Diagnostic Body Parts in Anger and Fear Perception

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

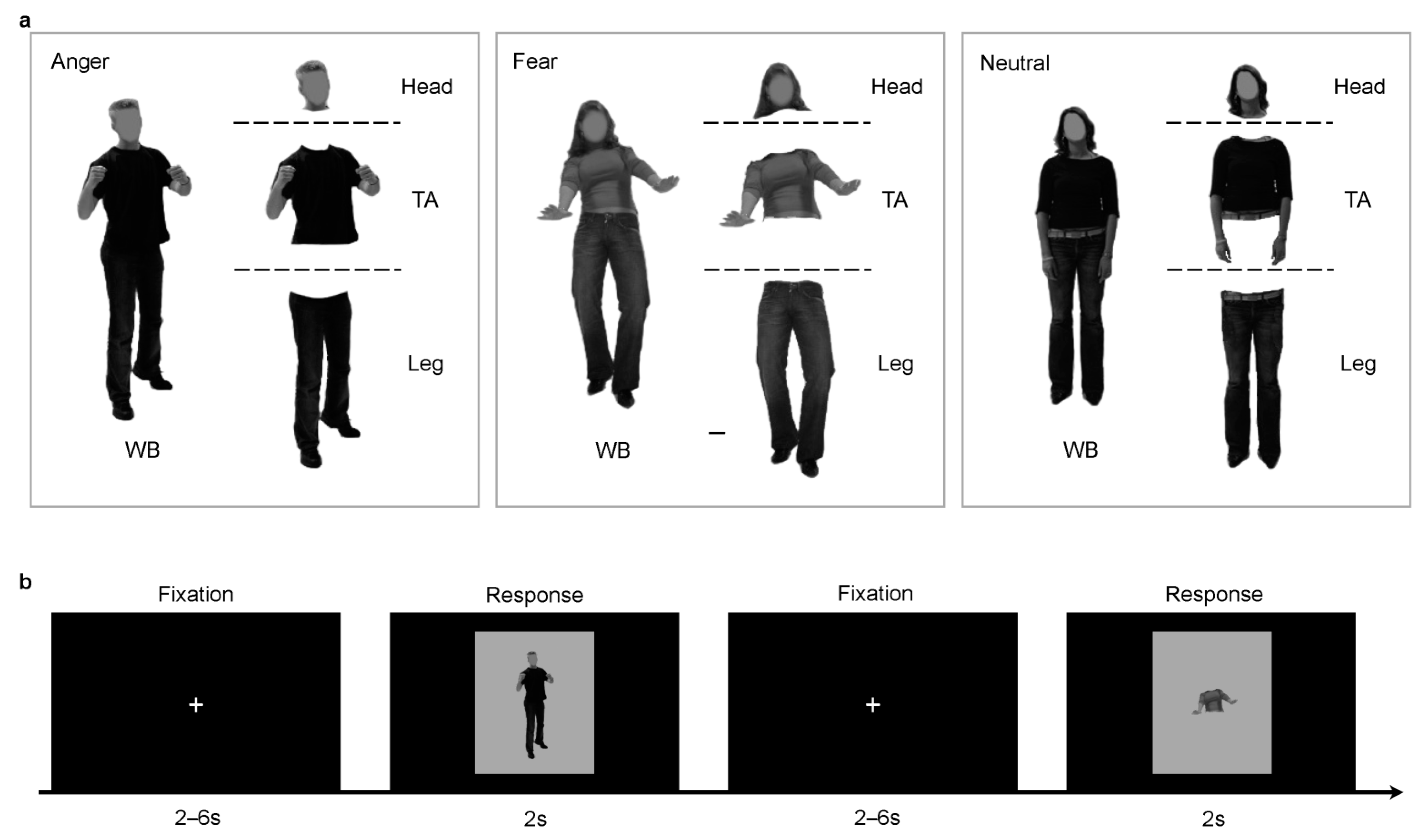

2.2. Stimuli Presentation

2.3. Experimental Tasks

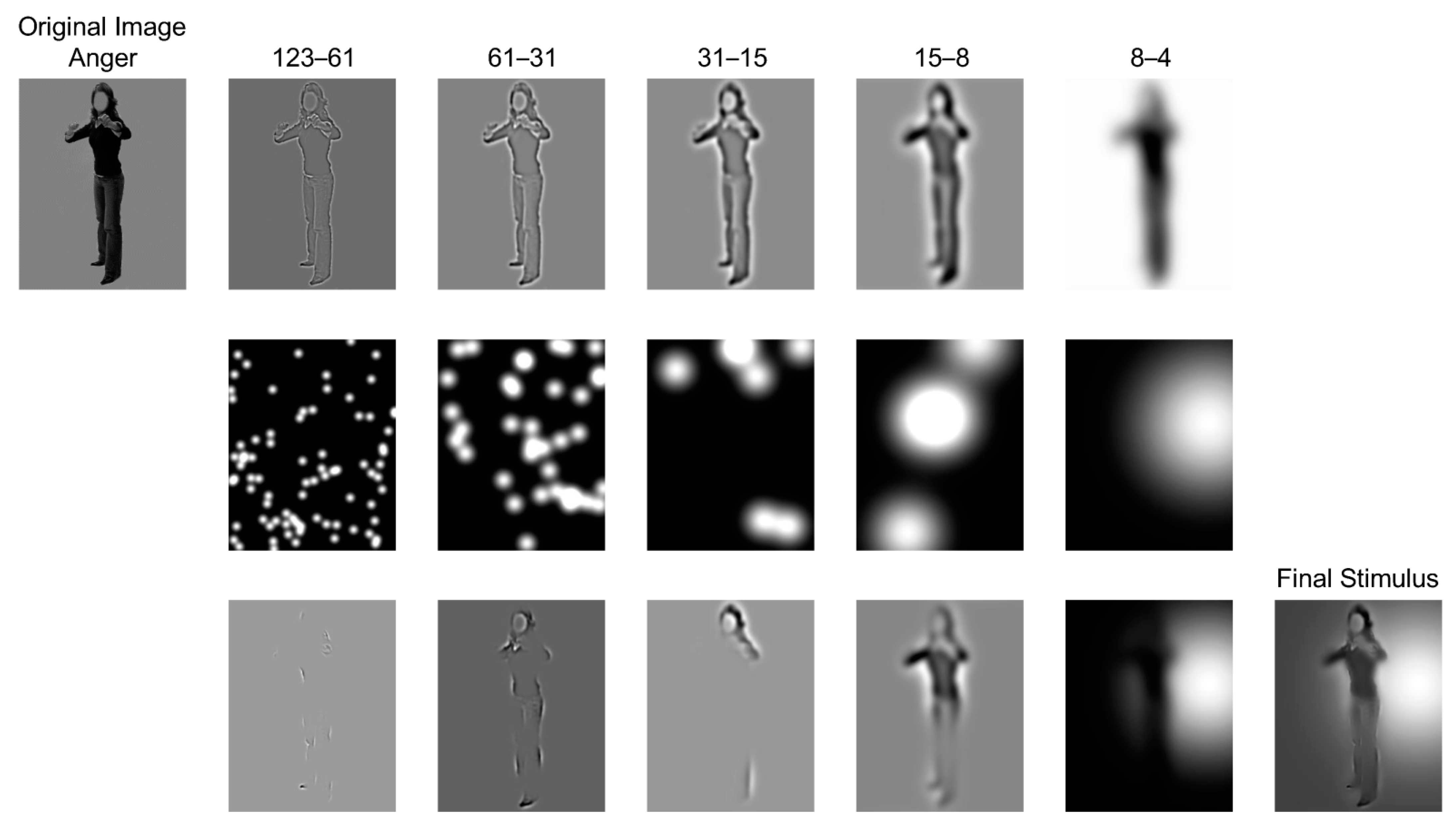

2.3.1. Bubbles Task
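In the Bubbles technique (Gosselin and Schyns, 2001), each trial reveals the stimulus only through a set of randomly placed Gaussian apertures ("bubbles"), so that the parts of the image that support recognition can later be inferred from the trials. The sketch below shows one way to build such a mask with NumPy. The image size, number of bubbles, aperture width, and background value are illustrative assumptions rather than the parameters used in this study, and `bubbles_mask` and `apply_bubbles` are hypothetical helper names.

```python
import numpy as np


def bubbles_mask(height, width, n_bubbles, sigma, rng):
    """Return a mask in [0, 1] made of randomly placed 2-D Gaussian apertures."""
    ys, xs = np.mgrid[0:height, 0:width]
    mask = np.zeros((height, width))
    centres_y = rng.integers(0, height, size=n_bubbles)
    centres_x = rng.integers(0, width, size=n_bubbles)
    for cy, cx in zip(centres_y, centres_x):
        bubble = np.exp(-((ys - cy) ** 2 + (xs - cx) ** 2) / (2.0 * sigma ** 2))
        # Pointwise maximum keeps overlapping bubbles within [0, 1]
        mask = np.maximum(mask, bubble)
    return mask


def apply_bubbles(image, mask, background):
    """Show the image where the mask is 1 and a uniform background where it is 0."""
    return mask * image + (1.0 - mask) * background


# Illustrative values only (hypothetical, not the study's parameters)
rng = np.random.default_rng(0)
mask = bubbles_mask(height=240, width=120, n_bubbles=12, sigma=8.0, rng=rng)
stimulus = np.full((240, 120), 0.8)  # stand-in for a grey-scale body image
trial_image = apply_bubbles(stimulus, mask, background=0.5)
```

Summing the Gaussians and clipping the result at 1 is an equally simple alternative to the pointwise maximum; both keep the mask in [0, 1].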

2.3.2. fMRI Behavioral Task

2.4. Data Acquisition

2.4.1. Bubbles Data

2.4.2. fMRI Data

2.5. Data Analysis

2.5.1. Bubbles Data
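A common first step in analysing Bubbles data is a classification image: the average mask on correct trials minus the average mask on incorrect trials, so that pixels whose visibility predicts accurate recognition stand out. The sketch below computes this difference and standardizes it across pixels. It uses simulated, pixel-wise random masks and a hypothetical "diagnostic" region, and it omits the formal pixel and cluster tests for smooth classification images (e.g., Chauvin et al., 2005) that would be needed to threshold a real map; it is not the authors' analysis code.

```python
import numpy as np


def classification_image(masks, correct):
    """Z-scored difference between mean masks on correct and incorrect trials.

    masks:   array of shape (n_trials, height, width) with values in [0, 1]
    correct: boolean array of shape (n_trials,)
    """
    masks = np.asarray(masks, dtype=float)
    correct = np.asarray(correct, dtype=bool)
    diff = masks[correct].mean(axis=0) - masks[~correct].mean(axis=0)
    # Standardize across pixels so the map can be read in z units
    return (diff - diff.mean()) / diff.std()


# Simulated observer (hypothetical): responds correctly when a central
# "diagnostic" region happens to be more visible than average
rng = np.random.default_rng(42)
masks = rng.random((2000, 32, 32))
region = (slice(8, 16), slice(8, 16))
correct = masks[:, region[0], region[1]].mean(axis=(1, 2)) > 0.5
ci = classification_image(masks, correct)
```

In the simulated map, pixels inside the diagnostic region have clearly positive z values, while the rest of the image hovers around zero.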

2.5.2. fMRI Behavioral Data
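Raw accuracy in a forced-choice emotion judgment can be inflated by response bias, for example a general tendency to answer "fear". Wagner's (1993) unbiased hit rate corrects for this: for each category it is the squared number of hits divided by the product of the number of stimuli in that category and the number of times that response was given. The sketch below computes it from a confusion matrix; the counts are invented for illustration, and whether this study reports the unbiased hit rate rather than raw accuracy is an assumption here.

```python
import numpy as np


def unbiased_hit_rate(confusion):
    """Wagner's (1993) unbiased hit rate per category.

    confusion[i, j] = number of trials with stimulus category i answered as j.
    """
    confusion = np.asarray(confusion, dtype=float)
    hits = np.diag(confusion)
    stimuli_per_category = confusion.sum(axis=1)    # row totals
    responses_per_category = confusion.sum(axis=0)  # column totals
    return hits ** 2 / (stimuli_per_category * responses_per_category)


# Hypothetical counts; rows and columns ordered anger, fear, neutral
confusion = np.array([
    [40, 5, 5],
    [10, 35, 5],
    [0, 5, 45],
])
hu = unbiased_hit_rate(confusion)
raw_accuracy = np.diag(confusion) / confusion.sum(axis=1)
```

With these counts, fear has a raw accuracy of 0.70 but an unbiased hit rate of about 0.54, because the "fear" response was also given to some angry and neutral bodies.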

2.6. fMRI Localizer and ROI Definition

2.7. Image Data Preprocessing

2.8. fMRI Activation Analysis
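The contrasts listed in Appendix A (emotion × body interaction, main effects of expression and body, and emotion > neutral within each body condition) imply a 3 (anger, fear, neutral) × 4 (whole body, torso with arms, legs, head) design. As an illustration, assuming one general-linear-model regressor per cell in emotion-major order, the corresponding contrast weights can be built with Kronecker products. The regressor order and helper names are hypothetical, and this does not reproduce the authors' analysis batch.

```python
import numpy as np
from itertools import product

EMOTIONS = ["anger", "fear", "neutral"]
BODY_PARTS = ["whole body", "torso with arms", "legs", "head"]
# Hypothetical regressor order: emotion-major, one column per cell
CELLS = list(product(EMOTIONS, BODY_PARTS))


def differences(k):
    """(k - 1) x k matrix of successive differences, e.g. [[1, -1, 0], [0, 1, -1]]."""
    return np.eye(k)[:-1] - np.eye(k)[1:]


def average(k):
    """1 x k row that averages over the levels of a factor."""
    return np.ones((1, k)) / k


def simple_effect(emotion_a, emotion_b, body_part):
    """t-contrast for 'emotion_a > emotion_b' within one body-part condition."""
    c = np.zeros(len(CELLS))
    c[CELLS.index((emotion_a, body_part))] = 1.0
    c[CELLS.index((emotion_b, body_part))] = -1.0
    return c


n_e, n_b = len(EMOTIONS), len(BODY_PARTS)
main_expression = np.kron(differences(n_e), average(n_b))  # F-contrast, 2 x 12
main_body = np.kron(average(n_e), differences(n_b))        # F-contrast, 3 x 12
interaction = np.kron(differences(n_e), differences(n_b))  # F-contrast, 6 x 12
legs_anger_vs_neutral = simple_effect("anger", "neutral", "legs")
```

Every row of these contrasts sums to zero, and in this balanced design the interaction rows are orthogonal to both main-effect contrasts, so the three tests can be interpreted separately.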

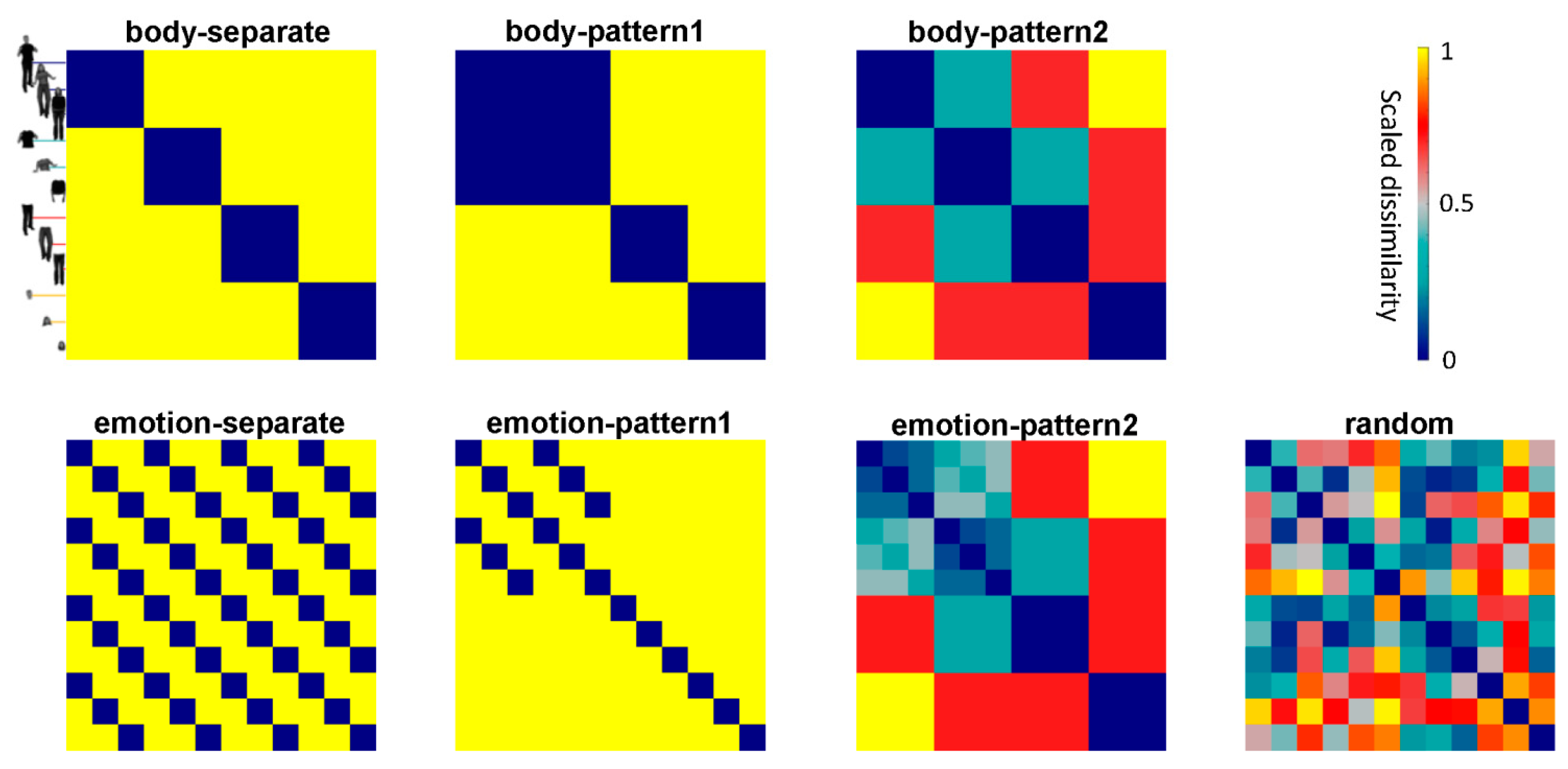

2.9. Constructing Candidate RDMs
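A candidate (model) representational dissimilarity matrix (RDM) encodes a hypothesis about which conditions should evoke similar response patterns. As a sketch, assuming the 12 conditions of a 3 emotion × 4 body-part design, two simple categorical models are shown: an emotion model (two conditions are dissimilar if their emotions differ) and a body-part model (two conditions are dissimilar if their body parts differ). These are generic examples, not necessarily the candidate RDMs constructed in this study, and the condition order is an assumption.

```python
import numpy as np
from itertools import product

EMOTIONS = ["anger", "fear", "neutral"]
BODY_PARTS = ["whole body", "torso with arms", "legs", "head"]
CONDITIONS = list(product(EMOTIONS, BODY_PARTS))  # hypothetical order


def categorical_rdm(labels):
    """Dissimilarity 0 for conditions sharing a label, 1 otherwise."""
    labels = np.asarray(labels)
    return (labels[:, None] != labels[None, :]).astype(float)


emotion_rdm = categorical_rdm([emotion for emotion, _ in CONDITIONS])
body_part_rdm = categorical_rdm([part for _, part in CONDITIONS])
```

Because both models are binary, many of their entries are tied, which matters for the choice of rank correlation when they are compared with neural RDMs.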

2.10. Representational Similarity Analysis
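In representational similarity analysis (Kriegeskorte et al., 2008), a neural RDM is computed from the multi-voxel response patterns of a region of interest (such as EBA or FBA) and compared with each candidate RDM. The sketch below uses correlation distance (1 − Pearson r) between condition patterns and a Spearman correlation between the RDMs' upper triangles, with tie-averaged ranks because categorical models contain many ties. The simulated patterns and the emotion-only model are illustrative assumptions, and the distance and comparison measures used in this study may differ; Nili et al. (2014), for instance, recommend Kendall's τA when candidate RDMs predict tied ranks.

```python
import numpy as np


def neural_rdm(patterns):
    """Correlation-distance RDM (1 - Pearson r) from a conditions x voxels array."""
    return 1.0 - np.corrcoef(patterns)


def upper_triangle(rdm):
    """Off-diagonal upper-triangle entries (an RDM is symmetric with a zero diagonal)."""
    i, j = np.triu_indices(rdm.shape[0], k=1)
    return rdm[i, j]


def average_ranks(x):
    """Ranks starting at 1, with tied values sharing their average rank."""
    _, inverse, counts = np.unique(x, return_inverse=True, return_counts=True)
    last_rank = np.cumsum(counts)
    return (last_rank - (counts - 1) / 2.0)[inverse]


def spearman(a, b):
    """Spearman correlation as the Pearson correlation of tie-averaged ranks."""
    return np.corrcoef(average_ranks(a), average_ranks(b))[0, 1]


def rdm_correlation(patterns, model_rdm):
    return spearman(upper_triangle(neural_rdm(patterns)), upper_triangle(model_rdm))


# Simulated ROI data (hypothetical): 12 conditions x 200 voxels, where conditions
# with the same emotion share a pattern prototype plus independent noise
rng = np.random.default_rng(1)
emotion_of_condition = np.repeat(np.arange(3), 4)
emotion_model = (emotion_of_condition[:, None] != emotion_of_condition[None, :]).astype(float)
prototypes = rng.normal(size=(3, 200))
patterns = prototypes[emotion_of_condition] + 0.8 * rng.normal(size=(12, 200))
rho = rdm_correlation(patterns, emotion_model)
```

With a binary model, even a perfectly separated neural RDM yields a Spearman correlation well below 1 (about 0.77 here), so values should be compared across models or against a null distribution rather than against 1.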

3. Results

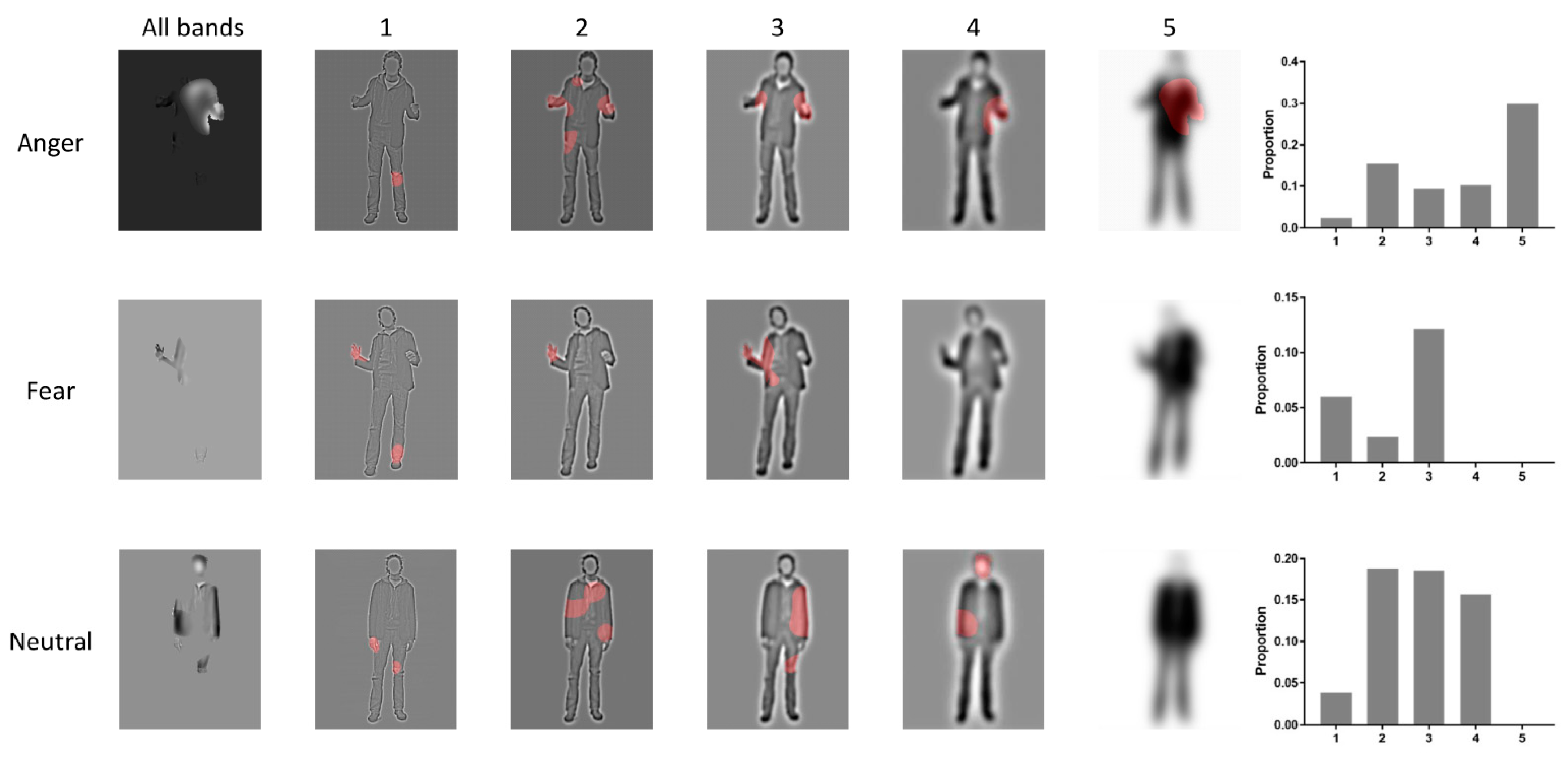

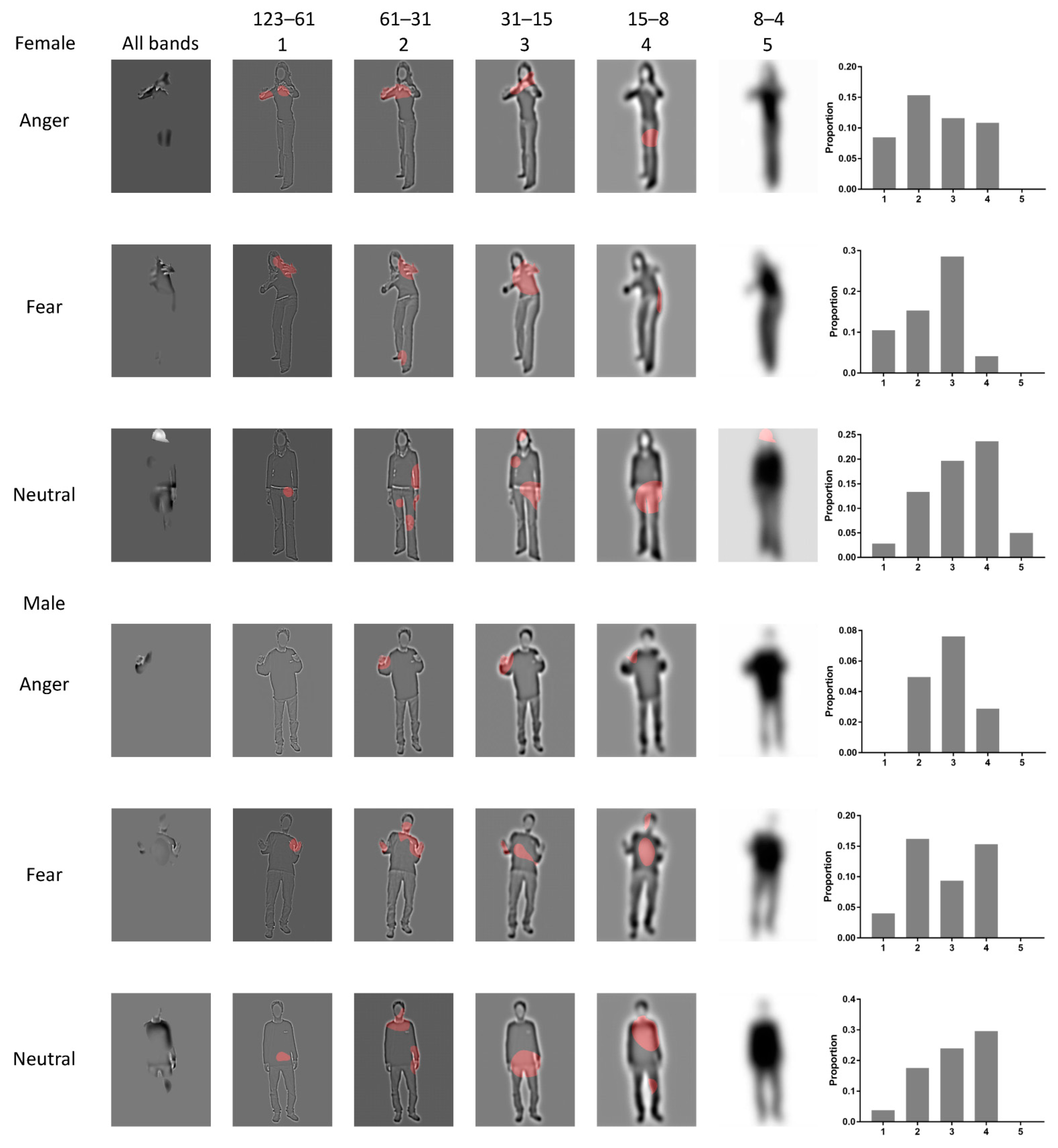

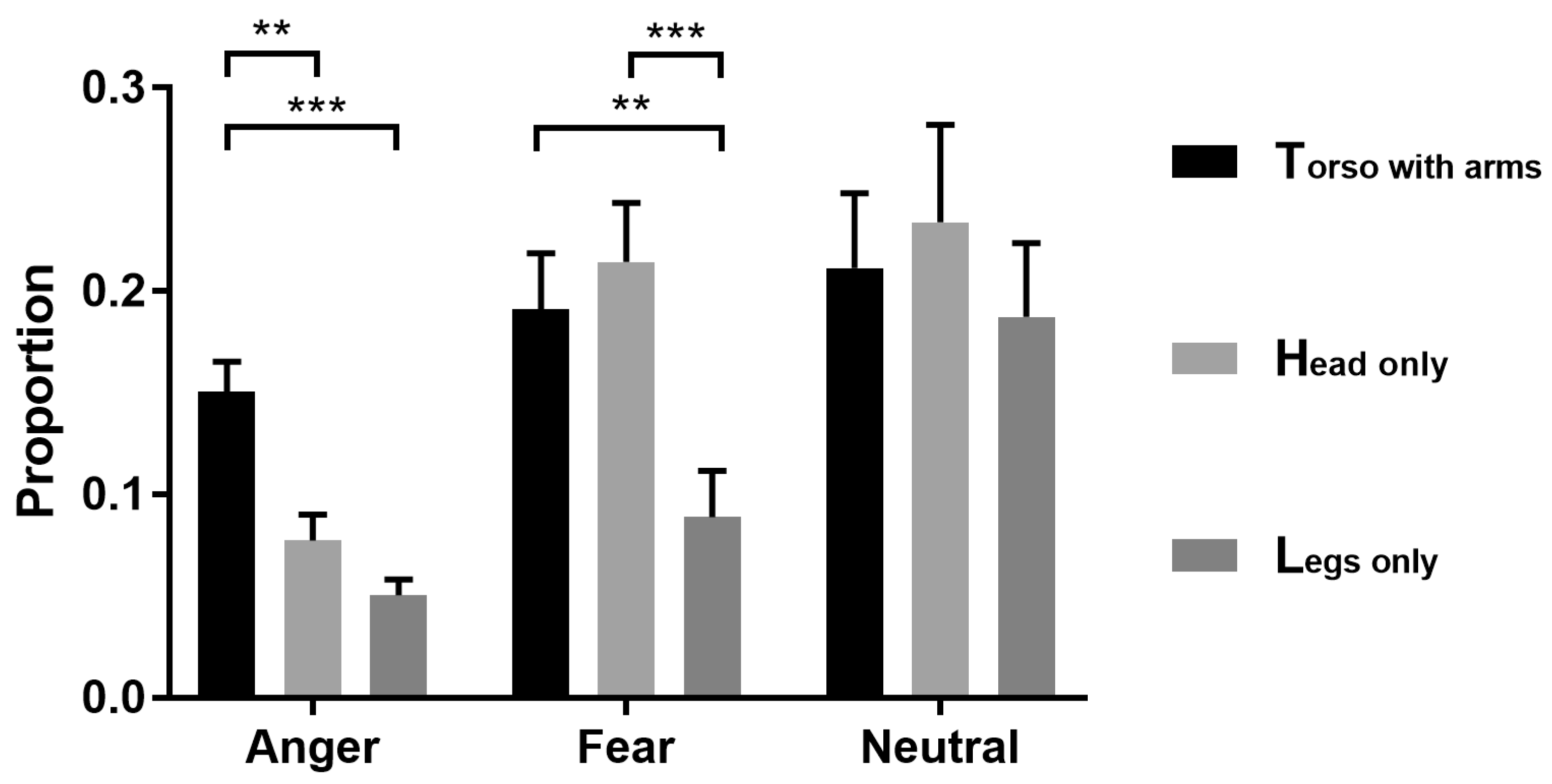

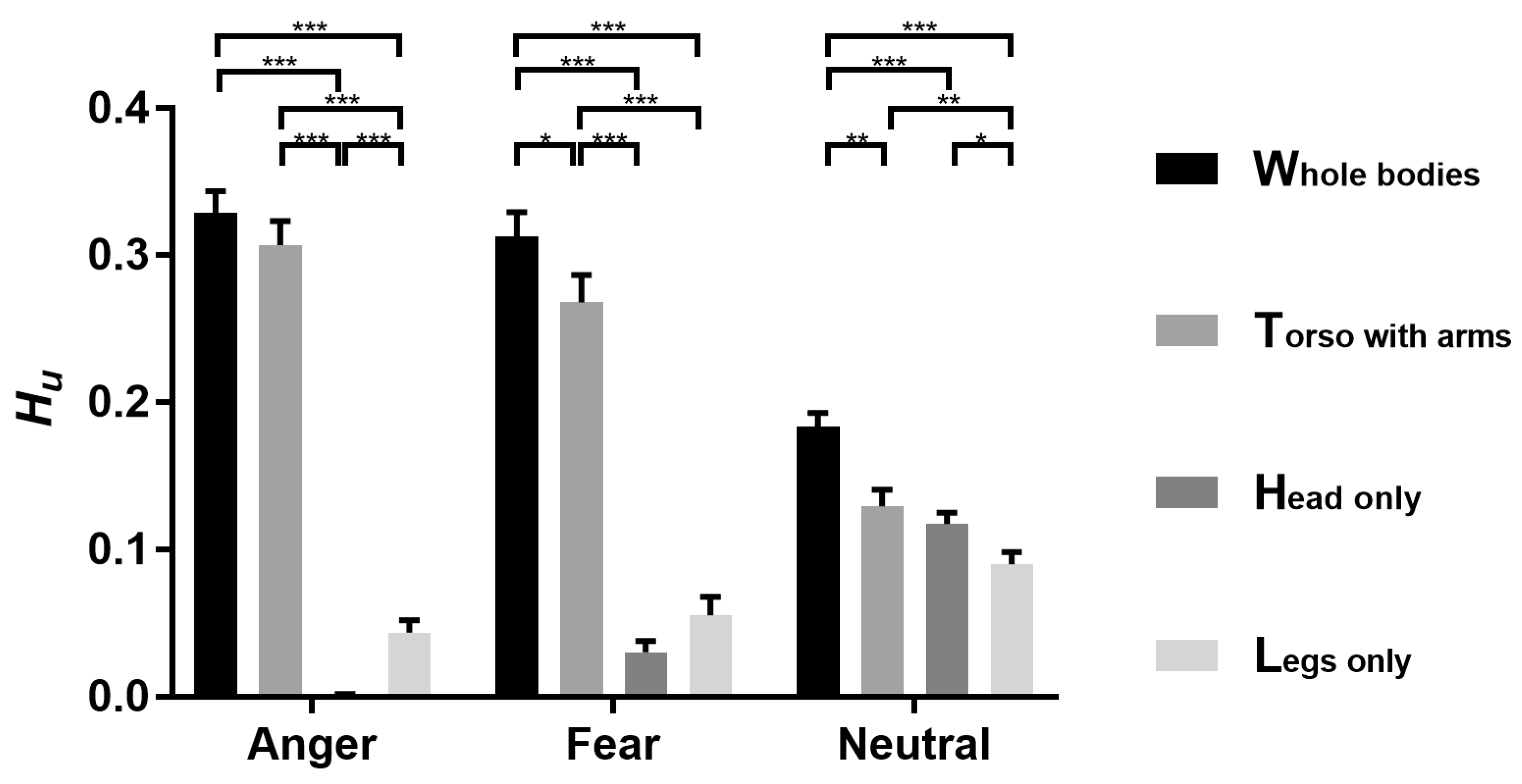

3.1. Bubbles Results

3.2. fMRI Behavioral Performance

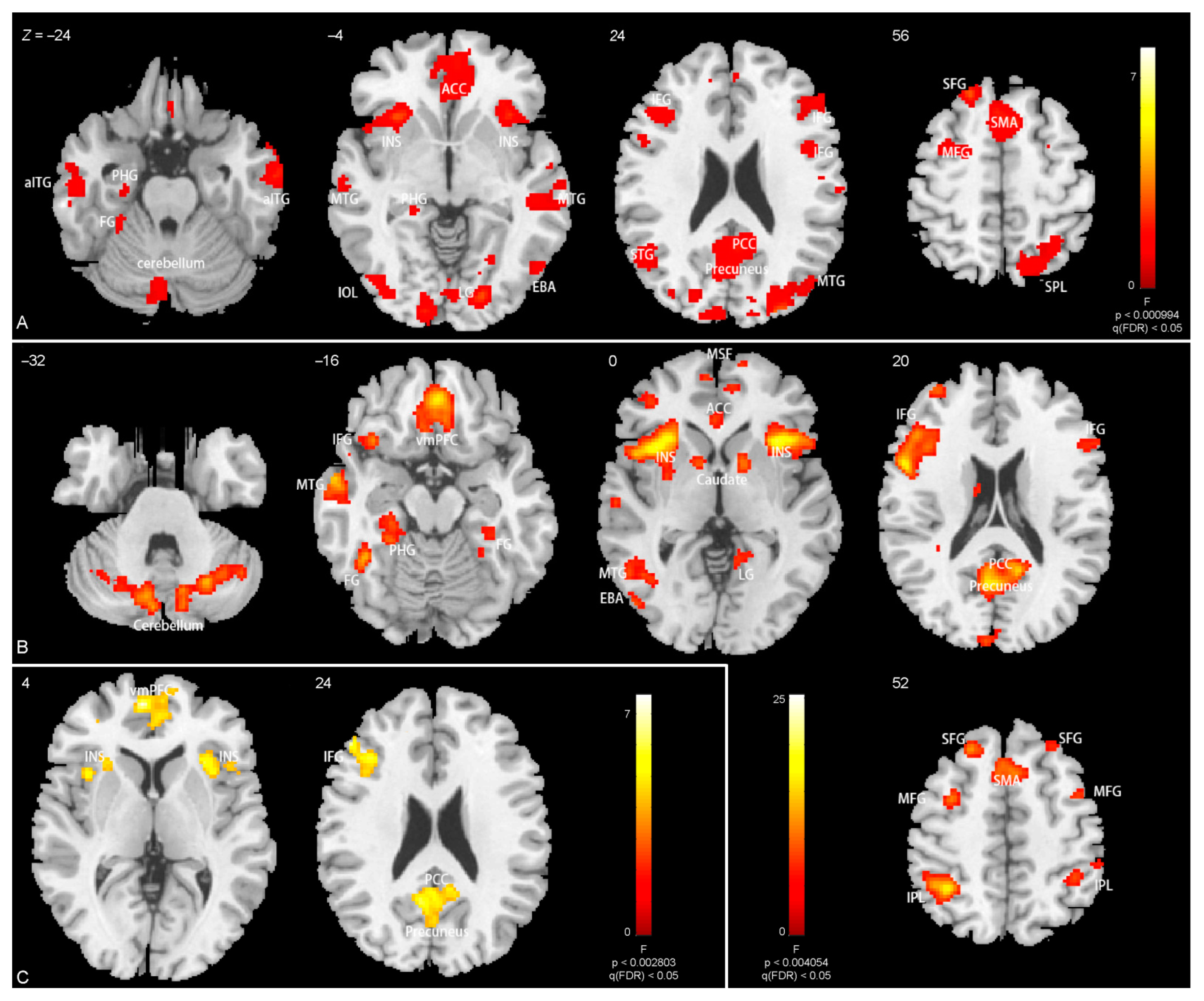

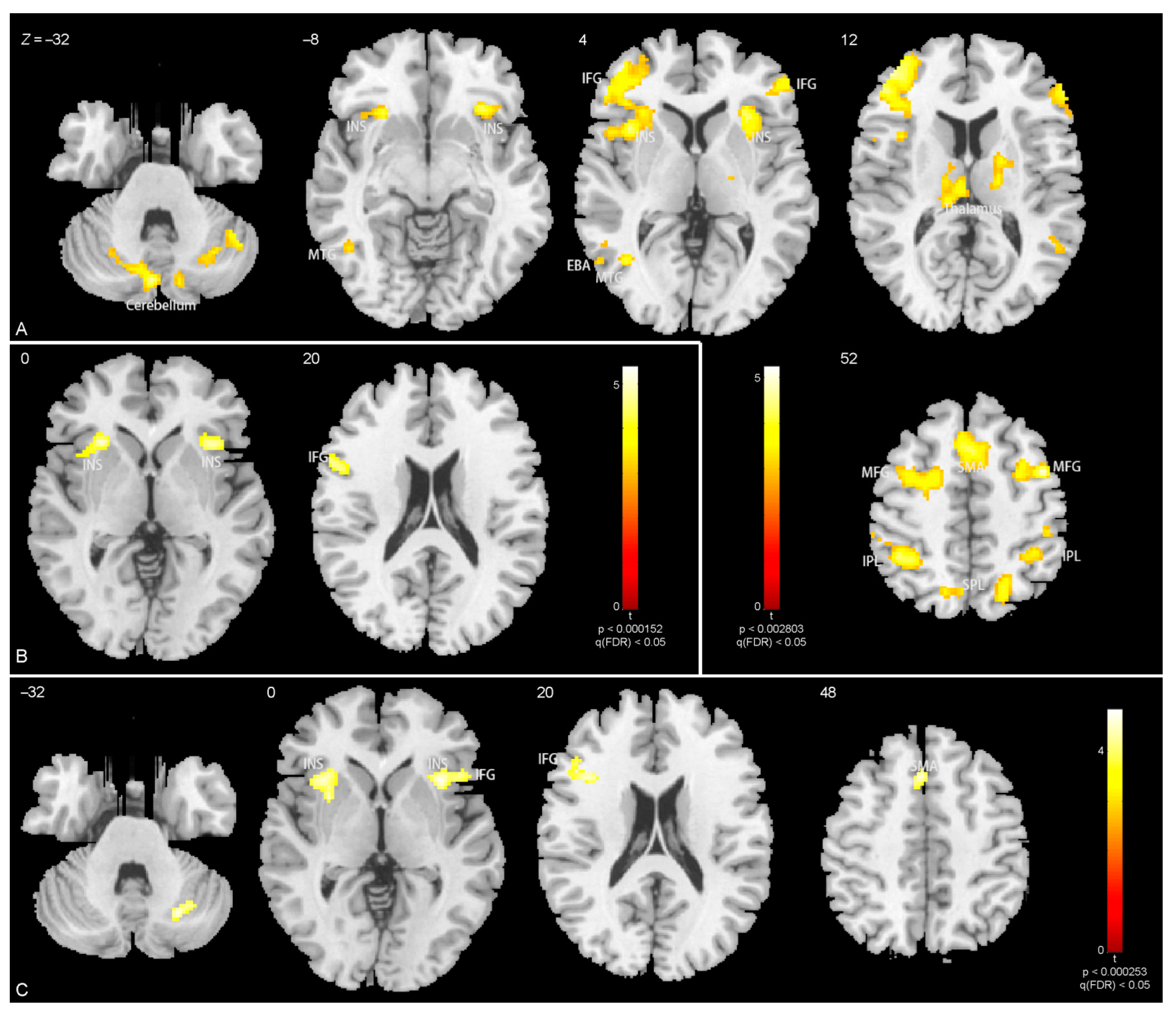

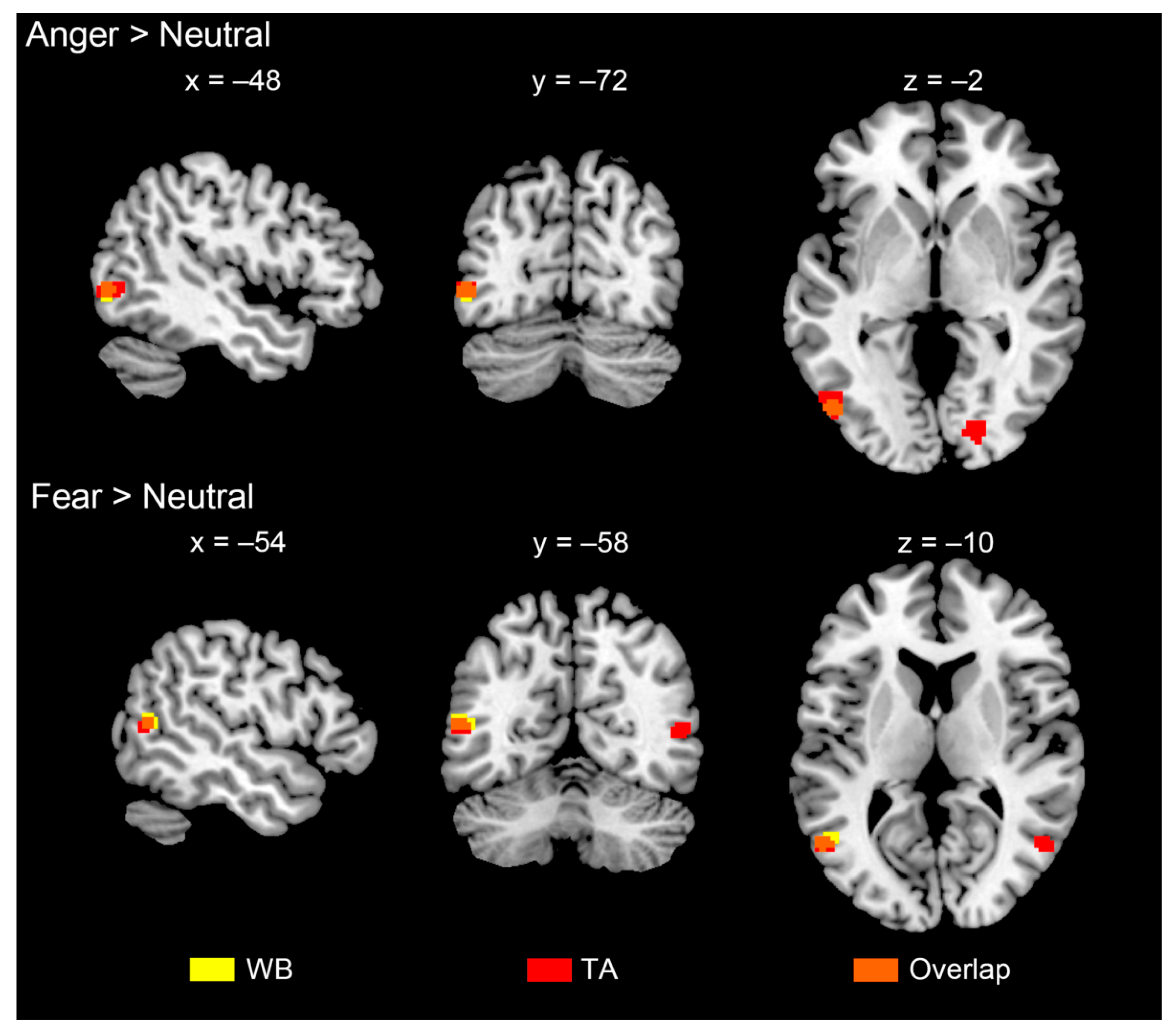

3.3. Brain Activations

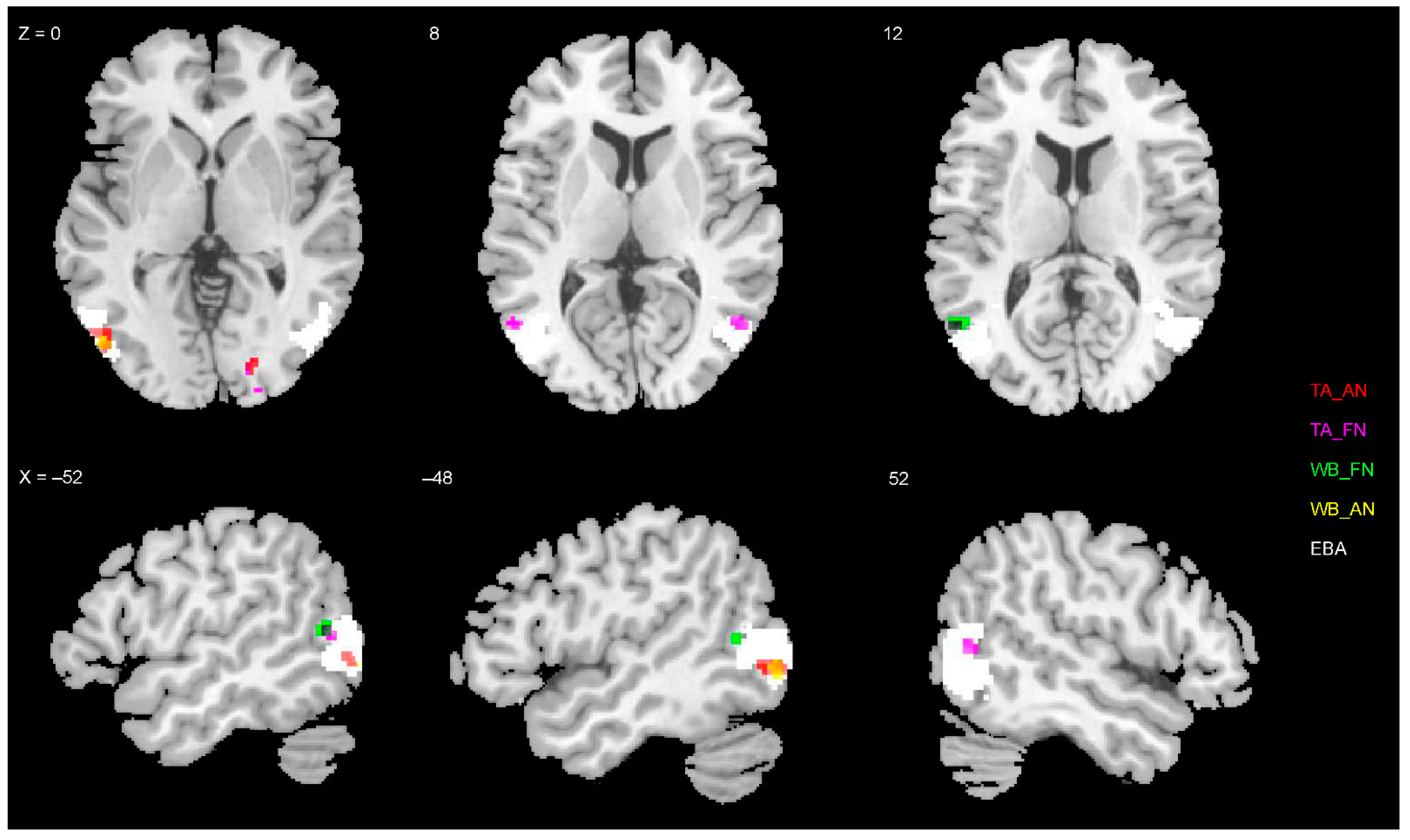

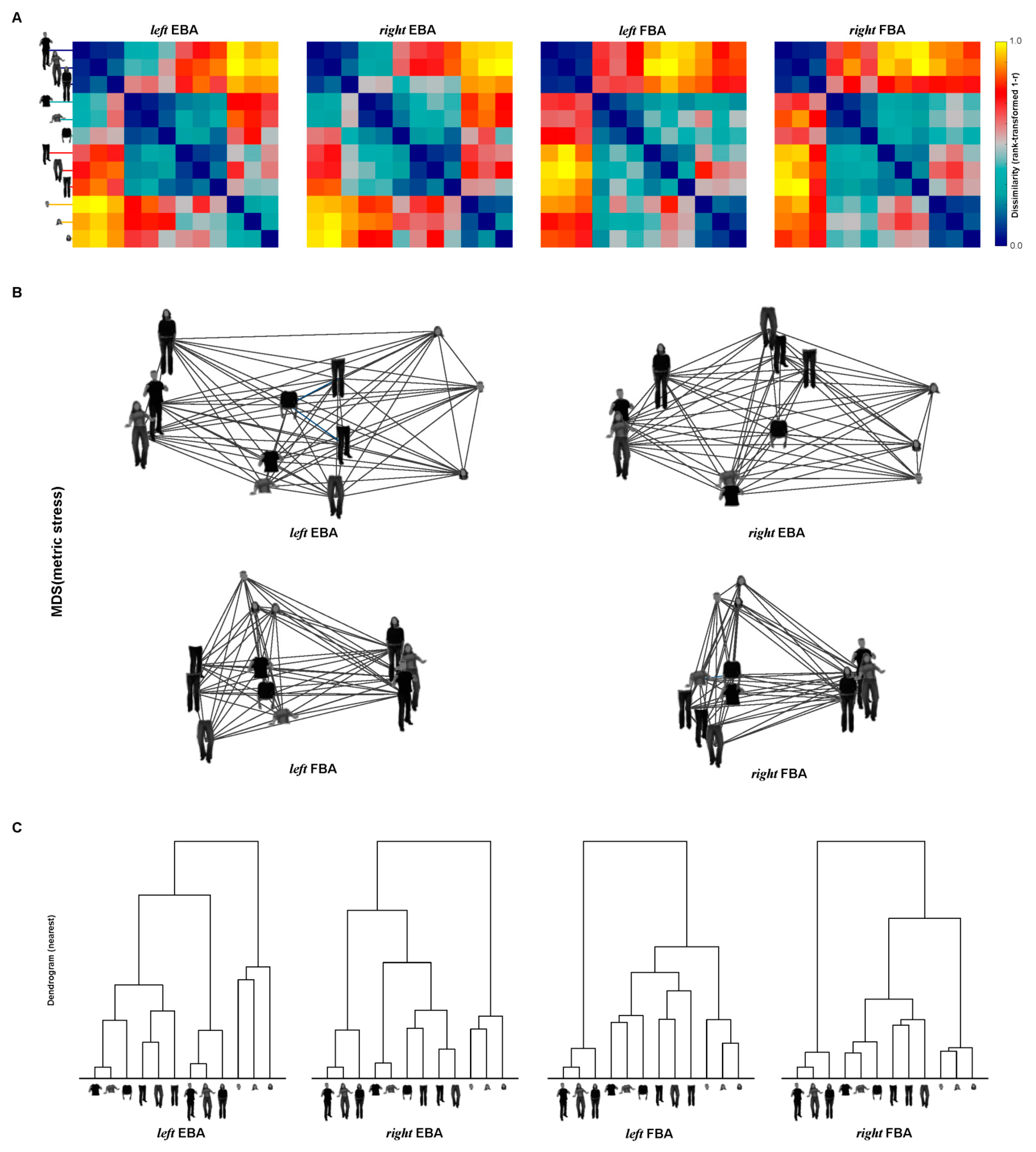

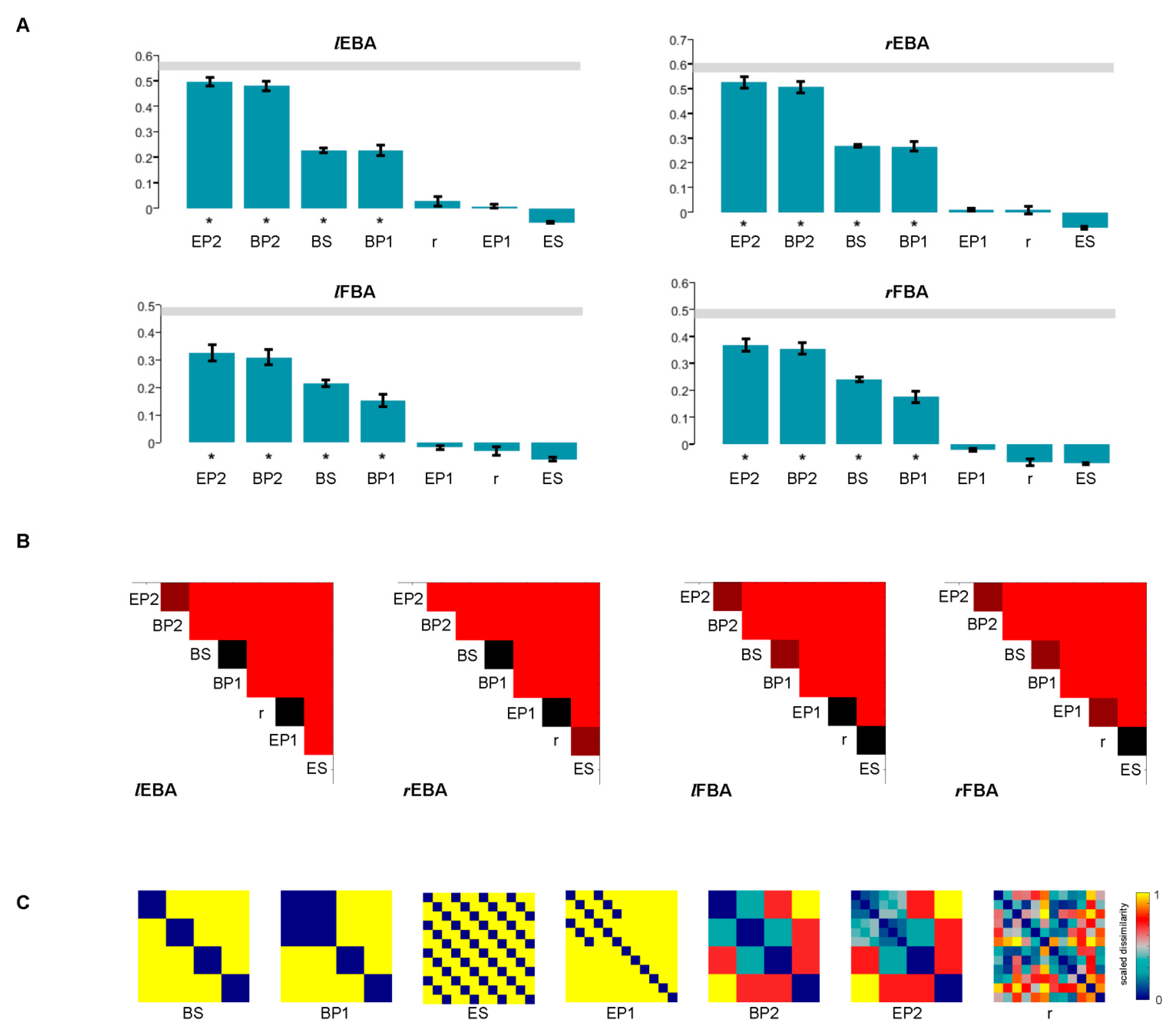

3.4. Representations of EBA and FBA

3.5. Statistical Inference of RSA
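One way to test whether a neural RDM is related to a candidate RDM is condition-label randomization: the rows and columns of the neural RDM are reordered by the same random permutation, the RDM correlation is recomputed, and the observed value is compared with this null distribution. The sketch below assumes a single (e.g., group-averaged) neural RDM, Spearman correlation, and 1000 permutations; these are illustrative choices, not necessarily the inference procedure reported in this study, and the simulated RDM is hypothetical.

```python
import numpy as np


def upper_triangle(rdm):
    i, j = np.triu_indices(rdm.shape[0], k=1)
    return rdm[i, j]


def average_ranks(x):
    _, inverse, counts = np.unique(x, return_inverse=True, return_counts=True)
    last_rank = np.cumsum(counts)
    return (last_rank - (counts - 1) / 2.0)[inverse]


def spearman(a, b):
    return np.corrcoef(average_ranks(a), average_ranks(b))[0, 1]


def condition_label_permutation_test(neural, model, n_perm, rng):
    """Observed RDM correlation and one-sided permutation p-value.

    Rows and columns of the neural RDM are reordered with the same permutation,
    which is equivalent to randomly relabelling the experimental conditions.
    """
    model_vec = upper_triangle(model)
    observed = spearman(upper_triangle(neural), model_vec)
    exceed = 0
    for _ in range(n_perm):
        order = rng.permutation(neural.shape[0])
        shuffled = neural[np.ix_(order, order)]
        if spearman(upper_triangle(shuffled), model_vec) >= observed:
            exceed += 1
    # Counting the observed ordering as one permutation keeps p above zero
    p_value = (exceed + 1) / (n_perm + 1)
    return observed, p_value


# Hypothetical group-average neural RDM: the emotion model plus symmetric noise
rng = np.random.default_rng(7)
emotion_of_condition = np.repeat(np.arange(3), 4)
model = (emotion_of_condition[:, None] != emotion_of_condition[None, :]).astype(float)
noise = rng.normal(scale=0.3, size=(12, 12))
neural = model + (noise + noise.T) / 2.0
np.fill_diagonal(neural, 0.0)
observed, p_value = condition_label_permutation_test(neural, model, n_perm=1000, rng=rng)
```

With 1000 permutations the smallest attainable p-value is 1/1001, which sets a floor on how small a reported permutation p-value can be.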

4. Discussion

4.1. Torso with Arms as Diagnostic Body Parts

4.2. Brain Activations Related to Body Parts

4.3. Neural Representation of Diagnostic Body Parts

4.4. Limitations and Future Expectations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Contrast or region | MNI x | MNI y | MNI z | Peak z or t | Cluster p | Cluster size |
|---|---|---|---|---|---|---|
| Emotion × body interactions | | | | | | |
| Ventromedial prefrontal gyrus | −8 | 56 | 2 | 7.6 | 1.5 × 10⁻⁵ | 143 |
| R anterior insula | 30 | 20 | 0 | 7.5 | 0.0021 | 62 |
| L precuneus | −8 | −56 | 28 | 6.9 | 1.2 × 10⁻⁴ | 103 |
| Inferior frontal gyrus | −50 | 36 | 24 | 6.5 | 6.4 × 10⁻⁴ | 426 |
| Main effect of expression | | | | | | |
| L cerebellum | −12 | −74 | −34 | 14.5 | 0.0033 | 104 |
| R cerebellum | 24 | −66 | −30 | 13.5 | 4.7 × 10⁻⁴ | 150 |
| L fusiform gyrus | −30 | −36 | −20 | 13.4 | 0.031 | 58 |
| L middle temporal gyrus | −60 | −4 | −18 | 18.0 | 0.0019 | 118 |
| Ventromedial prefrontal gyrus | 0 | 44 | −16 | 16.4 | 2.7 × 10⁻⁷ | 380 |
| L inferior frontal gyrus, L insula | −30 | 22 | −4 | 25.2 | 8.3 × 10⁻¹⁵ | 1115 |
| R inferior frontal gyrus, R insula | 34 | 20 | 2 | 19.5 | 1.4 × 10⁻⁸ | 489 |
| R caudate | 12 | 10 | 6 | 11.8 | 0.050 | 50 |
| L(R) posterior cingulate, precuneus | −6 | −60 | 20 | 16.1 | 4.0 × 10⁻¹⁰ | 633 |
| L(R) medial frontal gyrus | 6 | 52 | 10 | 16.6 | 7.5 × 10⁻⁵ | 205 |
| L inferior parietal lobule | −38 | −50 | 54 | 16.5 | 1.3 × 10⁻⁵ | 257 |
| L middle frontal gyrus | −24 | 30 | 48 | 15.0 | 0.0038 | 99 |
| L(R) supplementary motor area | −4 | 22 | 48 | 11.3 | 0.0018 | 122 |
| Main effect of body | | | | | | |
| R anterior inferior temporal gyrus | 60 | −10 | −24 | 11.0 | 1.7 × 10⁻⁴ | 202 |
| L anterior inferior temporal gyrus | −62 | −14 | −18 | 9.0 | 0.0032 | 118 |
| L middle occipital lobe (covering L EBA, L fusiform gyrus (FBA), and cerebellum) | −42 | −82 | 4 | 14.9 | 7.1 × 10⁻²³ | 2311 |
| R middle occipital lobe (covering R EBA, R fusiform gyrus (FBA), and cerebellum) | 14 | −100 | 12 | 40.0 | 5.7 × 10⁻¹⁹ | 1732 |
| L(R) medial frontal gyrus and anterior cingulate | −4 | 44 | −20 | 11.4 | 1.3 × 10⁻¹⁴ | 1160 |
| L insula, L inferior frontal gyrus | −30 | 22 | −2 | 15.9 | 3.3 × 10⁻⁶ | 332 |
| R insula, R inferior frontal gyrus | 32 | 22 | 2 | 16.9 | 4.3 × 10⁻⁵ | 247 |
| Precuneus, posterior cingulate | −2 | −22 | 40 | 13.5 | 1.5 × 10⁻¹⁶ | 1405 |
| L inferior frontal gyrus | −42 | 28 | 26 | 9.2 | 0.0083 | 92 |
| L angular gyrus | −46 | −68 | 46 | 11.3 | 1.7 × 10⁻⁴ | 197 |
| R inferior frontal gyrus | 46 | 4 | 28 | 9.5 | 0.0039 | 112 |
| R superior parietal lobule | 18 | −68 | 50 | 11.5 | 1.7 × 10⁻⁴ | 198 |
| L(R) supplementary motor area | −4 | 20 | 50 | 17.3 | 6.8 × 10⁻¹⁰ | 654 |
| L superior frontal gyrus | −16 | 46 | 40 | 8.3 | 0.0083 | 93 |
| L superior frontal gyrus | −20 | 32 | 54 | 14.5 | 0.0012 | 144 |
| Whole body: anger > neutral | | | | | | |
| L occipito-temporal gyrus (EBA) | −48 | −76 | −2 | 4.3 | 0.015 | 25 |
| Whole body: fear > neutral | | | | | | |
| L occipito-temporal gyrus (EBA) | −54 | −60 | 12 | 5.2 | 0.010 | 35 |
| Torso with arms: anger > neutral | | | | | | |
| R lingual gyrus | 20 | −84 | 6 | 5.4 | 0.032 | 80 |
| L occipito-temporal gyrus (EBA) | −50 | −74 | 0 | 4.6 | 0.048 | 60 |
| Torso with arms: fear > neutral | | | | | | |
| R middle temporal gyrus | 50 | −62 | 8 | 4.6 | 0.045 | 23 |
| L occipito-temporal gyrus (EBA) | −54 | −62 | 10 | 4.6 | 0.012 | 24 |
| Legs: anger > neutral | | | | | | |
| R cerebellum | 44 | −56 | −34 | 4.4 | 0.010 | 212 |
| L cerebellum | −12 | −70 | −36 | 4.7 | 0.0038 | 285 |
| L middle temporal gyrus | −42 | −60 | 4 | 4.0 | 0.0053 | 259 |
| L insula | −30 | 22 | −2 | 5.2 | 2.6 × 10⁻¹⁶ | 3593 |
| R insula | 32 | 20 | 0 | 4.8 | 4.6 × 10⁻⁴ | 435 |
| R middle frontal gyrus | 42 | 4 | 52 | 5.0 | 3.1 × 10⁻⁹ | 1557 |
| R thalamus (including R caudate) | 20 | −4 | 14 | 3.9 | 0.016 | 185 |
| L thalamus (including L caudate) | −6 | −18 | 12 | 3.9 | 0.0091 | 223 |
| R inferior parietal lobule | 68 | −34 | 24 | 3.8 | 0.019 | 171 |
| L inferior parietal lobule | −32 | −42 | 42 | 4.9 | 2.8 × 10⁻⁸ | 1297 |
| R inferior parietal lobule | 32 | −42 | 44 | 4.4 | 5.2 × 10⁻⁵ | 604 |
| L(R) supplementary motor area | −6 | 20 | 50 | 4.5 | 3.3 × 10⁻⁶ | 841 |
| L(R) precuneus | −14 | −68 | 56 | 4.1 | 0.023 | 159 |
| R superior parietal lobule | 18 | −64 | 54 | 4.0 | 0.0028 | 310 |
| Legs: fear > neutral | | | | | | |
| L insula | −30 | 22 | −4 | 5.4 | 5.4 × 10⁻⁵ | 273 |
| R insula | 34 | 20 | 2 | 5.0 | 7.1 × 10⁻⁴ | 173 |
| L inferior frontal gyrus | −56 | 10 | 22 | 4.7 | 0.0090 | 97 |
| Head: anger > neutral | | | | | | |
| No significant clusters | | | | | | |
| Head: fear > neutral | | | | | | |
| R cerebellum | 24 | −66 | −32 | 4.6 | 0.042 | 70 |
| R insula, R inferior frontal gyrus | 32 | 18 | 0 | 4.6 | 1.7 × 10⁻⁴ | 282 |
| L insula | −34 | 20 | −2 | 4.6 | 0.0020 | 177 |
| L inferior frontal gyrus | −42 | 18 | 16 | 4.8 | 0.0032 | 142 |
| L supplementary motor area | −6 | 22 | 44 | 4.7 | 0.0020 | 164 |


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ren, J.; Ding, R.; Li, S.; Zhang, M.; Wei, D.; Feng, C.; Xu, P.; Luo, W. Features and Extra-Striate Body Area Representations of Diagnostic Body Parts in Anger and Fear Perception. Brain Sci. 2022, 12, 466. https://doi.org/10.3390/brainsci12040466
