Abstract

Background: Locked-In Syndrome (LIS) is a rare neurological condition in which patients’ ability to move, interact, and communicate is impaired despite their being conscious and awake. After assessing the patient’s needs, we developed a customized device for an LIS patient, as the commercial augmentative and alternative communication (AAC) devices could not be used. Methods: A 51-year-old woman with incomplete LIS for 15 years came to our laboratory seeking a communication tool. After excluding the available AAC devices, a careful evaluation led to the creation of a customized device (hardware + software). Two years later, we assessed the patient’s satisfaction with the device. Results: A switch-operated voice-scanning communicator, which the patient could control by residual movement of her thumb without seeing the computer screen, was implemented, together with postural strategies. The user and her family were generally satisfied with the customized device, with a top rating for its effectiveness: it fit well the patient’s communication needs. Conclusions: Using customized AAC and strategies provides greater opportunities for patients with LIS to resolve their communication problems. Moreover, listening to the patient’s and family’s needs can help increase the AAC’s potential. The presented switch-operated voice-scanning communicator is available for free on request to the authors.

1. Introduction

Brain stem injuries caused by stroke, trauma, anoxia, or even neurodegenerative diseases, e.g., amyotrophic lateral sclerosis (ALS), can lead to a state of Locked-In Syndrome (LIS) []. The term was first used by Plum and Posner in 1966 [] to identify a condition in which a person is severely paralyzed but has intact cognitive skills []. The syndrome is a consequence of traumatic or vascular damage that bilaterally disconnects the corticospinal and corticobulbar tracts, and it can have different forms. The classification is based on the amount of motor outputs affected. In the total form, no movement can be produced; in the pure form, patients can move their eyes and blink but are unable to produce any other movement; in the incomplete form, some voluntary movements other than with the eyes remain []. LIS rarely shows any improvement. The most common form has a chronic pattern [,,].

The major challenge in LIS is communication, which is essential for the quality of life (QOL) [], emotional state, and sense of existence of the locked-in person. Communication is not only vital for practical daily life activities, but it is also essential in enabling social participation []. People with incomplete LIS can also benefit greatly from communication aids, but the traditional augmentative and alternative communication (AAC) devices are not always a solution, particularly if the residual muscle activity is insufficient to control the device [].

Re-establishing a communication channel for people with LIS has always been a challenge. Starting with low-tech AAC, i.e., non-battery powered methods of communicating that are usually cheaper to make, patients seek to overcome their communication barrier. Some studies have reported the use of Morse code (by eye blinking) or other eye-coded methods by these patients to transmit a message letter by letter to their interlocutor []. Another system is partner-assisted scanning (PAS), in which the caregiver (partner) is in front of the subject and verbally “scans” the sequential items to select []. Alternatively, the partner can point out the letters on a visual scanning device (i.e., stating “this row” and scanning through each letter). The patient is only required to look up or down, the easiest way to indicate the letter chosen [].

In addition, there is high-tech AAC, which includes various technologies and devices to restore the ability to communicate, such as ETCDs (eye-tracking computer devices for communication) [], BCIs (brain-computer interfaces) [], ERP (event-related potentials)-based BCIs [], motor imagery BCIs [], SSVEP (steady-state visual evoked potential) BCIs [,,], hybrid BCIs [] combining several techniques (e.g., eye-tracking and electrooculography), and electromyographic (EMG) switches []. More recently, a new high-tech approach, experimented in a LIS patient, utilized the intracortical signals to select letters and, thus, write words []. Although very exciting, this new approach has so far been used in only one case report where it was invasive, requiring a surgical procedure conducted by an ultra-specialized team with a specific instrumentation.

Communicating with AAC requires strategies for the formulation, storage, and retrieval of words, codes, and messages []. A wide variety of software options exist for generating messages, e.g., spelling words letter-by-letter, use of symbols or sequence icons to represent words/messages, selecting words from a display to compose a message, or use of programmed messages that can be retrieved. Each option suits some individuals more than others. Thus, careful consideration is required to match the individual with the most appropriate system [,,,,,,]. Moreover, the management of the message can be done in different ways. Most AAC technologies use aided symbols with visual displays of pictures, alphabet, pictorial symbols, or codes from which the individual selects []. For people with visual impairments, AAC technologies can present spoken messages or tactile representation [].

All in all, it can be difficult to determine the advantages of one method over another, given the complexity of the individual factors involved (e.g., fatigue, medication, pain, alertness, mood) []. This can also make it difficult to determine when and if it is appropriate to change methods; to date, there is still no software that can provide dynamic recognition of these factors, so clinicians and caregivers play a fundamental role in spotting, evaluating, and reporting changes in a patient’s needs. Given the unique characteristics of each individual, the available technology might not be able to meet their needs and it may be necessary to modify existing commercial devices [].

Our study’s participant was in the locked-in state due to a ponto-cerebellar stroke. At the time of the study, she was using a no-tech AAC [] that allowed her to communicate with her caregivers but in a very energy-expensive way; she had not been using such technology previously. Her family contacted us because she had read about the integrated Laboratory of Assistive Solutions and Translational Research in which physiotherapists study the possibility of using customized AAC methods. Although she could still communicate via eyebrow movements, she wanted to test other methods as an alternative, since she had a slight voluntary control and repeatable movements of the left thumb (that appeared a few months after the stroke). Hence, the aim of our study was to test a high-tech AAC that she could control without the caregiver’s help, as an alternative to her current method. After comparing various AAC interfaces using different modes for signals acquisition, we found that the most effective AAC method for this user was actually the least expensive.

2. Material and Methods

2.1. Participant

At the time of the study, the patient (a 51-year old Italian woman, under the pseudonym of Lisa) had been in the locked-in state for 15 years. Lisa was paralyzed since the birth of her daughter, due to a ponto-cerebellar hemorrhage that occurred shortly after the birth. The diagnosis of incomplete LIS [] was made a few weeks after the stroke, when Lisa showed an ability to answer closed questions by elevating her left eyebrow. A few months later, slight voluntary and repeatable movements of the left thumb appeared. The strength of the thumb was assessed with Kendall’s scale []: both the flexion and extension movements had a strength of 3/10 (i.e., movement through a moderate execution range). She was able to sit in a wheelchair, but trembled, had difficulty controlling her head, and was not able to control her upper body. She could voluntarily move the left eyebrow and the distal phalange of the left thumb. Her eyes moved only vertically with continuous and uncontrolled movements, not functional for communication. She was fully assisted 24 h a day.

2.2. Conventional Communication

Lisa began to communicate entire words and sentences a few months after the vascular injury by slightly twitching her left eyebrow, choosing the desired letters of the alphabet which were spelt out vocally by her caregiver. This acoustic scanning method, though, was highly demanding both for Lisa and the caregiver, without whom this approach would not have been possible. Lisa’s dad began looking for a solution that would improve his daughter’s ability to communicate with others.

From an Internet search, he contacted different professionals—without good feedback—before he found the integrated Laboratory of Assistive Solutions and Translational Research of the Istituti Clinici Scientifici Maugeri and decided to pursue the matter with them immediately. Lisa and her family were invited to the Institute, where they explained the strategy she used for communicating and her desire to find a better method of communication. The laboratory team was enthusiastic about accompanying her and exploring a possible solution; the decision to write this case report was based on Lisa’s satisfaction with the solution found and her written words. Moreover, based on Lisa’s needs, we created a software that today we wish to make available to all free of charge, considering it an intuitive tool, easy to manage, and immediately available, without excessive instrumentation. Therefore, Lisa gave her informed consent to the present report and to give her testimony. All experimental procedures were conducted in accordance with the Declaration of Helsinki, and with ethical approval of the institutional review board.

2.3. Procedure

The researchers began by testing some commercial AAC devices that were available in the Laboratory. Obviously, low technology systems (i.e., non-electronic) were a priori discarded.

High-technology systems include voice output communication aids (called “speech-generating devices”), software on personal computers or laptops, tablet touch-screen devices and mobile phones, which provide multiple access strategies, customizable content, and voice output function []. However, these mainstream aids with a relatively low cost and high accessibility are not useful for people with severe motor impairments unable to use standard interfaces [].

Consequently, for Lisa, the first trial we carried out was with an eye-tracking computer device (Mytobii P10 Eye Tracker1), designed as an aid to communication for individuals with minimal movement []. However, this eye-tracking was immediately discarded because Lisa, like many patients with LIS, had no horizontal eye movements [] and her vertical movements were strongly disturbed by continuous involuntary movements. She could not point or keep her gaze fixed on a target for more than 0.5 s, which made interaction with the eye tracker impossible. Next, a sensorized headset for BCI (EMOTIV EPOC®2) [] was tried, in search of some useful signal for communication. Continuous involuntary head movements and facial and cranial muscle spasms created high levels of electroencephalogram contamination; as a result, the BCI option was also abandoned.

Then, the decision was made to use a method that conceptually came very close to the communication system that Lisa and her caregiver were currently using: an auditory scanning AAC. BCIs using the auditory steady state response (ASSR) or the auditory P300 for interaction with BCI devices [] were excluded for the above-mentioned reasons. The conceptually similar commercially available software required a payment, and we were not sure that they were appropriate for Lisa. Therefore, we decided to develop a custom-designed (both hardware and software) scanning communicator, controlled by a switch that Lisa could move with her left thumb to command a computer screen.

2.4. Assessment

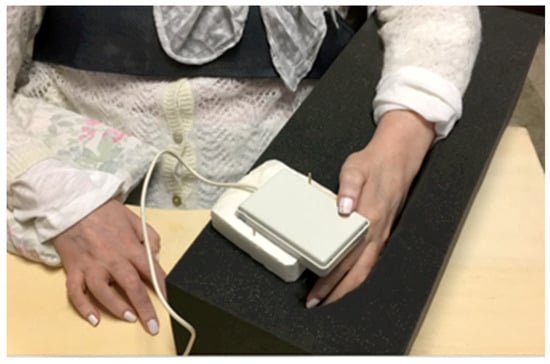

To evaluate Lisa’s effective ability to interact with a switch, a single session of the click-test [] was administered by a physiotherapist with experience in the AAC field. The test required to click (activate/deactivate) the control switch as many times as possible in 30 s, and then count the number of activations. Lisa was able to click the switch 41 times in the given time, well above the minimum required level of 15 clicks. Because Lisa could not control her hand or arm, the instability of the trunk initially caused many dysfunctional movements of the thumb and provoked loss of contact and unintentional activation of the switch. To solve this problem, the limb was stabilized on a foam pad shaped to accommodate the arm and hand, and the switch used for the click test was fixed in the most suitable position with double-sided tape. All devices and aids, including the laptop, were placed on the wheelchair table for the test (Figure 1).

Figure 1.

Finding the most suitable arm position for Lisa. In order to satisfy Lisa’s needs, different upper limb positions were tested. Finally, Lisa’s arm and hand were set in a foam pad with the thumb placed on the control switch. Lisa can voluntarily move only her left thumb; the table and the foam allow her to maintain her arms in a comfortable and stable position.

2.5. Custom-Device Development

2.5.1. Hardware

To allow Lisa to interact with the computer, we opted for a mechanical switch, based on a lever system and previously created for patients with ALS [] (see Appendix A). The switch needed to be both easy to activate voluntarily and at the same time have a certain resistance to unintentional micro-movements that could activate it at the wrong time. This meant positioning the thumb on the lever arm about 80% of the way down distally from the fulcrum.

2.5.2. Software

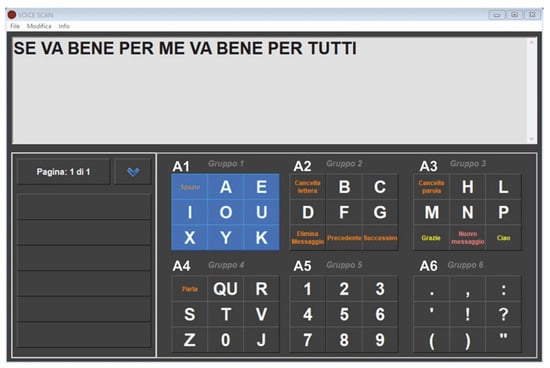

The initial idea was to utilize the same method as a typical scanning grid communicator, in which the user selects letters and commands highlighted on a screen; however, the main obstacle was to provide information about the real time position of the scanning marker without using the heavily compromised visual channel. Thus, we decided to use the auditory channel (the computer’s speech synthesis) but no software or application for this existed. Therefore, we created a new alphabetical virtual keyboard with a vocal scanning-access, starting from a scanning-access keyboard previously designed for patients with severe motor disabilities. First, letters and commands were grouped into six sets, from “A1” to “A6”. The speech synthesis was programmed to sequentially pronounce the names assigned to each set (“A1, A2, [...], A6”) cyclically, and then each item of the group selected by the user. The message the user wants to transmit gradually appears in a dedicated text box (see Appendix A).

Figure 2 shows the dashboard of the software, written in Italian, Lisa’s language. Vowels were positioned in the first set followed by consonants, generally respecting alphabetical order to improve the software’s usability and make it easier for the user to remember the position of each letter. For the same reason, the more uncommon Italian letters were placed at the end of the sets, to avoid spelling them out in vain. Moreover, in accordance with Lisa’s preferences, commands were placed in various sets (i.e., “space” in A1, “speak” in A4, etc.). A practice trial is presented in video-format in Supplementary Materials.

Figure 2.

Lisa writes her opinion on her new AAC. The computer writes in response to Lisa’s commands with her left thumb click. Lisa cannot see the scanning marker, she can only hear the corresponding code: “A1” for group 1 (‘gruppo’ means group in Italian), “A2” for group 2, and so on. After the first click (group selection) by pressing the control switch, she hears the letters of the selected set, spelt out one after another (e.g., A-E-I-O-U-J-X-Y). A second click chooses the desired letter. This screenshot shows one of the first sentences written by Lisa (translated from Italian “If it works for me, it works for everyone”), who motivated this present article.

3. Results

The device was run, and Lisa listened to the synthetic voice describing the content of each group for 2 min, so as to make a mental map of the position of each letter or command. As a first trial, in order to verify that the system as a whole worked properly, we asked her to write her name and those of her children and she did so immediately. Then we asked her what she thought about the device overall. She typed, in about 3 min, without making any typing mistakes: “Se funziona per me funziona per tutti” (If it works for me, it works for everyone) (Figure 2). While her answers were being video-documented, Lisa typed, on her own initiative “Può andare più veloce?” (Can it go any faster?), referring to the advancement speed of the scanning marker. Through a trial-and-error process using different timings, we were able to reduce the scanning pause from 1200 to 800 milliseconds; with this speed, Lisa could comfortably type letters, without too-long a latency time. The new setting did not result in any typing errors, and Lisa confirmed that this was the setting she preferred.

Effectiveness of the Custom Device

Two years after development of the switch-based communicator, Lisa returned to the laboratory for a follow-up regarding use of the device. She reported that she was able to use the device for just over ½ an hour at a time, after which she found it tiring. She used the communicator 2–3 days a week on average, not every day due to problems mostly related to health or assistance. The communicator was usually placed on a table with wheels so it could be easily moved and used both when in bed and in her wheelchair. During her daily routine, the time required for the caregiver to position Lisa’s arm on the foam pad, her thumb on the control switch, and set up the device for use was approximately 2–3 min. The scanning pause Lisa used was 800 milliseconds, and she was able to write over 2 words per min: one character every 4.55 s on average. She generally wrote messages to those who assisted her, especially her family members and children. Even though the time taken was slightly longer than with the previous communication modality, she could write by herself and choose her words without suggestions, i.e., she was more autonomous.

In order to determine the overall usability of the device, including Lisa’s confidence with it for daily living activities, we asked Lisa and her father (the main caregiver) to complete the following questionnaires:

- Global Rating of Change scale (GRC). This single-item scale assesses the perceived improvement in quality of life with use of the new device. The subject grades the perceived improvement (or lack of improvement) on a 15-point scale from −7 (lack of improvement) to +7 (excellent improvement) [].

- Quebec User Evaluation of Satisfaction with Assistive Technology (QUEST 2.0) questionnaire. This 12-item scale assesses user satisfaction with an assistive technology device. The scale investigates two dimensions: satisfaction with the device and satisfaction with the service. Item scores range from 1 (not satisfied at all) to 5 (very satisfied) []. Only the eight items related to satisfaction with the device (QUEST 2.0-Dev) were reported for this study.

In the GCR, Lisa’s rating on how much the communicator had changed her quality of life was 3, while her father rated it 5. Regarding satisfaction with the communicator, Table 1 reports the results on QUEST 2.0. Even if other future improvements might be possible, Lisa and her father were generally satisfied about the characteristics of the customized device. In particular, Lisa gave the top score (5) for the effectiveness of the device; she found it well suited to her communication needs.

Table 1.

Quebec User Evaluation of Satisfaction with Assistive Technology.

4. Discussion

Facilitating communication for individuals with severe motor impairments such as LIS can be extremely difficult either because an access solution is unavailable or it is unreliable, causing frustration and limited use of the technology []. When both vertical and horizontal ocular movements are present (e.g., in many cases of advanced-stage ALS), AAC devices using gaze pointing should be used to recover communication [] and to improve life quality. More often, in traumatic or vascular Locked-In Syndrome, BCIs can provide a muscle-independent communication channel []. In 1988, researchers proposed a BCI based on ERPs in the electroencephalogram (EEG) such as P300 []. Today, many kinds of BCIs exist, such as motor imagery BCIs [], SSVEP BCIs [,,,], or Hybrid BCIs [] that conjugate several techniques (such as eye tracking and electrooculography) with the aim of improving reliability and speed in human-machine interaction. BCI techniques first need to acquire EEG signals from the user’s brain. The most non-invasive methods of EEG detection use helmets or a headset with dry or wet surface electrodes placed on the scalp [,]. The acquisition of EEG signals is a critical operation that can be affected by disturbances and artefacts []. EEG contamination from the cranial muscles is often present in the early stages of BCI training but it gradually wanes []. Some users never acquire EEG control. In these cases, electromyography (EMG) may be used to move the cursor toward the target []; for example, the EMG signal can be used as a trigger to control a scanning communicator with an alphabetical onscreen keyboard [] or to select virtual directional arrows that move the cursor across the screen []. However, dystonic movements, eye blinking, clenching of teeth, or continuous facial or cranial muscle spasms lead to a high rate of EMG contamination [], which represents the main cause of failure []. This situation occurred in our case: due to continuous artefacts, Lisa would not have been able to use either a BCI-based communication system or the EMG signal acquired from the scalp as a trigger to control a scanning communication system.

Some studies [] have reported use of the Morse code or other eye-coded methods by LIS patients to transmit a message letter by letter to the interlocutor, even if the patient’s eye movements may be inconsistent, very small, and easily exhausted []. In 1997, Jean-Dominique Bauby wrote an entire (and successful) book, “The diving bell and the butterfly”, dictating it to a typist only by eye blink []. A study conducted in 2012 described a patient with LIS who was able to communicate, after 3 weeks of training, through a virtual scanning keyboard thanks to detection of the eye blink by an infrared sensor mounted on a pair of glasses []. This method requires the ability to observe the screen, follow the progress of the scanning cursor over the items, and wink with precision at the right time—skills that are not always available in patients with LIS. In another recent study, an electrooculogram-based auditory communication system was used by a small group of patients with incomplete LIS []. They had no gait fixation but were able to partially control the eye movements. Nevertheless, only 1 patient had sufficiently good oculomotor control, as reflected in electrooculogram signals, to use this AAC aid. Therefore, the biggest issue is the fact that each patient has a unique clinical picture, with distinct abilities and disabilities; Lisa could in no way use her eyes to communicate.

The multiplicity of clinical manifestations makes it difficult to find an easy, standard solution to help these patients interact with their environment and to lay the foundations for further research to find ways to suit their varied needs. This is what we accomplished for Lisa by creating a customized device specifically for her, but which could also serve other patients with LIS with similar characteristics.

First, we created a software program with a specific application describing the progress of the scanning marker with speech feedback. Then, we discussed and defined some issues for the practical implementation of the scanning-based communicator. We considered whether it was better to adopt an automatic scanning engine (requiring only one user movement to select the desired item) or a manual one (faster but requiring more than one switch and many clicks for one selection). Then, we had to choose the hardware, in particular the control switch. There are many switches on the market with different activation modes: mechanical, capacitive, electromyographic, optical, sound, blowing, or suctioning. It was necessary to identify which part of the body to use to interact with the switch, which kind of button to use, how to stabilize it, and how to posture the patient to ensure comfort and minimize fatigue.

Furthermore, in order to use an alphabetical communication device with scanning access, the patient must have adequate cognitive skills, together with very good mnemonic abilities. In addition, the capacity to interact with the command switch with a certain reactivity and reliability is required; otherwise, the user might press the switch when the scanning marker has already moved on to the next item. In our intervention, the click test confirmed that Lisa was able to control the switch. Moreover, among the first written sentences Lisa declared “If it works for me, it works for everyone” (translated from Italian); this remark appeared as an invitation to spread her story to give other people in the same condition a new possibility for communicating.

5. Conclusions

The intervention described in this case study was successful for several reasons. First, it is thanks to Lisa’s excellent intact cognitive skills that she is able to write and communicate with the world around her. Indeed, she has no difficulty in understanding; thanks to her 10-year long experience in vocal scanning-writing with the caregiver, she was able to easily start using the computer vocal scanning. Moreover, Lisa has a good memory and learnt the letter distribution in each box without problems.

Second, it is thanks to her father, who did not give up the search for a solution, as well as to her family and friends, who organized for Lisa the most suitable conditions in which to use the communicator. Finally, the fact of being able to modify the whole device—both its hardware and software components—enabled us to create a solution that was well suited to Lisa’s needs.

Another reason for the overall success was the close involvement of Lisa and her father in the development of the device. We believe that it is very important to involve users themselves in the development process in order to customize the device to their needs. For example, Lisa asked us to speed up the scanning marker and then to insert recurring courtesy phrases in the communicator interface (“Thanks”, “Please”, “I love you”, and others). The laboratory team would not have been able to conceive this sort of detail; only a real user can suggest such improvements to optimize the result personally. Moreover, after using the device for about 1 month, Lisa requested that Arabic numerals be added and that the layout of the keyboard be modified, moving some letters and commands from one position to another so as to speed up the writing process and make use easier. The fact that she is still using the device after over 2 years is a sign that it is technically reliable and sufficiently functional and effective to meet her communication needs, an assumption confirmed by our follow-up assessment.

The limitation of our device appears to lie in the cognitive load it requires and produces: Lisa can use the communicator for only about ½ an hour at a time, after which she gets tired and has to stop. However, practice and habit might improve ability in using the device and so reduce fatigue, decreasing the cognitive load. It seems clear that a good cognitive level is crucial to access this communicative channel, as it is demanding on time, attention, and concentration. In fact, patients who have significant linguistic and cognitive limitations (e.g., persons with dementia) often have difficulty learning to use traditional AAC technologies []. Moreover, there is no validated evaluation to assess the psycho-physiological states of patients with LIS [].

In our case study, the tests that we carried out with the most common communication systems and facilitated access methods (eye tracker, BCIs, scanning with visual feedback) failed. This implies that, in the field of AAC, one cannot rely on standard solutions despite the advantages that each AAC method may offer. For instance, Lisa’s complex needs (the LIS condition coupled with her specific involuntary eye movements, and trembling) ruled out interaction with BCI or eye-tracking computer devices. We should point out that we did not test all AAC devices on the market, for budget reasons (both ours and Lisa’s): the more sophisticated the instrument, the higher the cost. In our clinical setting, we did not have at our disposal the full range of commercial devices available on the market. However, even if it were possible for our Institute to purchase a specific tool, the same would not be true for a “normal” family. Thus, we preferred to develop a custom-made tool, which was very cheap and fitted well Lisa’s needs.

We agree that there is need for continuing research to develop new alternative or facilitated access methods and provide more customized AAC devices. The design process we followed was person-centered, in line with theoretical frameworks for medical device and assistive technology development []. The challenge is to ensure that all individuals have access to effective means of communication and can participate in social relations to the fullest extent possible.

Finally, if the reader is interested in testing and using our AAC tool, please contact the authors. We will be pleased to share free of charge this software, as our contribution to the field.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/brainsci12111523/s1.

Author Contributions

M.C. and M.G. (Marco Guenzi) designed the work, M.C. collected the data, M.C. and M.G. (Marica Giardini) carried out the data analysis, M.G. (Marica Giardini) and M.G. (Marco Guenzi) drafted the manuscript and interpreted the results. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Ethics Committee of Istituti Clinici Scientifici Maugeri.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study, along with the consent to publish this paper.

Acknowledgments

This work was supported by the ‘Ricerca Corrente’ funding scheme of the Ministry of Health, Italy. We would like to express our sincere gratitude to Lisa and her family.

Conflicts of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

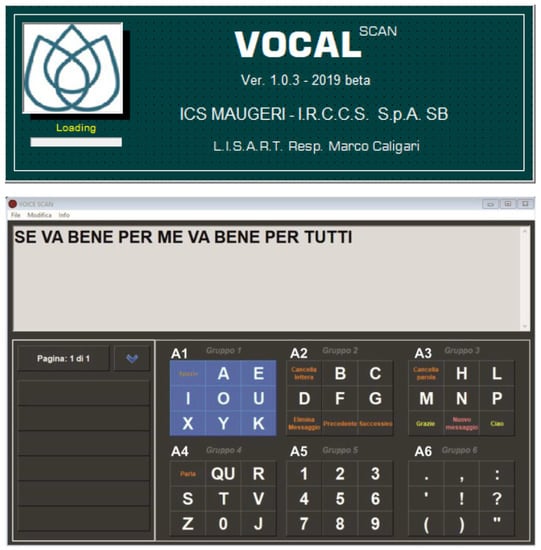

Appendix A. Vocal-Scan Software and Hardware Details

Appendix A.1. Software

VOCAL-Scan (M-VOCAL.exe, version 1.1, Italy) is a AAC application for Windows, developed in VB6, designed for writing by a switch-operated voice-scanning communicator—it is intended for users suffering from severe motor disabilities who have visual problems. The interaction between user and communicator takes place through a switch command. The user clicks it to validate the scanned items, and so is able to compose messages letter by letter without having to see them on the screen.

The app has several options that can be adapted to the user’s needs: (1) adjustable scan progress speed from 400 to 4000 ms; (2) speech synthesis in various languages (Microsoft localized according to the user’s country is the default, but others can be selected); (3) volume and speed of pronunciation of the elements scanned; (4) speed and volume of speech synthesis that reads messages. It is also possible to turn word prediction on/off or automatically enter a space after typing a punctuation character.

Acoustic feedback: As the scan advances, a synthetic voice verbalizes the process. It initially calls the groups with a progressive and cyclic number (1, 2, 3...); after the user has selected the desired group with a click, it verbalizes in succession the elements in the chosen group. The user, on hearing the desired letter (or command), clicks a second time. The voice repeats the item to inform the user of the choice. When the user has written an entire word and typed in a space, the voice repeats it so that the user can verify the correctness of what is written. In the case of error, the user can correct it through the appropriate commands (e.g., delete last letter, delete last word).

Appendix A.2. Hardware

The app runs on multimedia PCs from Windows 7 onwards, requires a USB 2.1 port or later to power a sensor interface to connect the control sensor, such as the one we use (Gradual).

The software is very lightweight (only 844 Kb) so it does not require any special specifications, although we recommend a PC with a good audio section to provide adequate acoustic feedback to the user.

Figure A1.

Screenshot showing the text composed by the user. Mytobii© P10 Eye Tracker, Tobii Technology, Danderyd, Sweden.

Figure A2.

Screen with the program settings. EMOTIV EPOC® 14 channels EEG headset. EMOTIV® San Francisco, U.S.A. https://www.emotiv.com/epoc/.

References

- Käthner, I.; Kübler, A.; Halder, S. Comparison of eye tracking, electrooculography and an auditory braincomputer interface for binary communication: A case study with a participant in the locked-in state. JNER 2015, 12, 1–11. [Google Scholar] [CrossRef] [PubMed]

- Posner, J.B.; Saper, C.B.; Schiff, N. Plum and Posner’s Diagnosis of Stupor and Coma, 4th ed.; Oxford University Press: New York, NY, USA, 2007. [Google Scholar]

- Bauer, G.; Gerstenbrand, F.; Rumpl, E. Varieties of the locked-in syndrome. J. Neurol. 1979, 221, 77–91. [Google Scholar] [CrossRef] [PubMed]

- Feldman, M.H. Physiological observations in a chronic case of “locked-in” syndrome. Neurology 1971, 21, 459. [Google Scholar] [CrossRef] [PubMed]

- Nordgren, R.E.; Markesbery, W.R.; Fukuda, K.; Reeves, A.G. Seven cases of cerebromedullospinal disconnection: The “locked-in” syndrome. Neurology 1971, 21, 1140. [Google Scholar] [CrossRef]

- Markand, O.N.; Dyken, M.L. Sleep abnormalities in patients with brain stem lesions. Neurology 1976, 26, 769. [Google Scholar] [CrossRef]

- Vansteensel, M.J.; Jarosiewicz, B. Brain-computer interfaces for communication. In Handbook of Clinical Neurology; Elsevier: Amsterdam, The Netherlands, 2020; Volume 168, pp. 67–85. [Google Scholar]

- Vidal, F. Phenomenology of the locked-in syndrome: An overview and some suggestions. Neuroethics 2020, 13, 119–143. [Google Scholar] [CrossRef]

- Kim, D.Y.; Han, C.H.; Im, C.H. Development of an electrooculogram-based human-computer interface using involuntary eye movement by spatially rotating sound for communication of locked-in patients. Sci. Rep. 2018, 8, 9505. [Google Scholar] [CrossRef]

- Kuzma-Kozakiewicz, M.; Andersen, P.M.; Ciecwierska, K.; Vázquez, C.; Helczyk, O.; Loose, M.; Uttner, I.; Ludolph, A.C.; Lulé, D. An observational study on quality of life and preferences to sustain life in locked-in state. Neurology 2019, 93, e938–e945. [Google Scholar] [CrossRef]

- Beukelman, D.R.; Light, J.C. Augmentative & Alternative Communication: Supporting Children and Adults with Complex Communication Needs, 5th ed.; Paul H. Brookes Pub.: Baltimore, MD, USA, 2020. [Google Scholar]

- Davis, T.J. Auditory Motion Perception: Investigation of Benefit in Multi-Talker Environments. Ph.D. Thesis, Vanderbilt University, Nashville, TN, USA, 2020. [Google Scholar]

- Caligari, M.; Godi, M.; Guglielmetti, S.; Franchignoni, F.; Nardone. A. Eye tracking communication devices in amyotrophic lateral sclerosis: Impact on disability and quality of life. Amyotroph. Lateral Scler. Front. Lobar Degener. 2013, 14, 546–552. [Google Scholar] [CrossRef]

- McFarland, D.J.; Sarnacki, W.A.; Vaughan, T.M.; Wolpaw, J.R. Brain-computer interface (BCI) operation: Signal and noise during early training sessions. Clin. Neurophysiol. Pract. 2005, 116, 56–62. [Google Scholar] [CrossRef]

- Maggi, L.; Parini, S.; Piccini, L.; Panfili, G.; Andreoni, G. A four command BCI system based on the SSVEP protocol. In Proceedings of the2006 International Conference of the IEEE Engineering in Medicine and Biology Society, New York, NY, USA, 30 August–3 September 2006; pp. 1264–1267. [Google Scholar]

- Wierzgała, P.; Zapała, D.; Wojcik, G.M.; Masiak, J. Most popular signal processing methods in motor-imagery BCI: A review and meta-analysis. Front. Neuroinform. 2018, 12, 78. [Google Scholar] [CrossRef] [PubMed]

- Parini, S.; Maggi, L.; Turconi, A.C.; Andreoni, G. A robust and self-paced BCI system based on a four class SSVEP paradigm: Algorithms and protocols for a high-transfer-rate direct brain communication. Comput. Intell. Neurosci. 2009, 2009, 864564. [Google Scholar] [CrossRef] [PubMed]

- Jalilpour, S.; Sardouie, S.H.; Mijani, A. A novel hybrid BCI speller based on RSVP and SSVEP paradigm. Comput. Methods Programs Biomed. 2020, 187, 105326. [Google Scholar] [CrossRef] [PubMed]

- Chaudhary, U.; Vlachos, I.; Zimmermann, J.B.; Espinosa, A.; Tonin, A.; Jaramillo-Gonzalez, A.; Khalili-Ardali, M.; Topka, H.; Lehmberg, J.; Friehs, G.M.; et al. Spelling interface using intracortical signals in a completely locked-in patient enabled via auditory neurofeedback training. Nat. Commun. 2022, 13, 1–9. [Google Scholar] [CrossRef] [PubMed]

- Beukelman, D.R.; Mirenda, P. Augmentative & Alternative Communication: Supporting Children and Adults with Complex Communication Needs; Paul H Brookes Publishing Co. Inc.: Baltimore, MD, USA, 2013. [Google Scholar]

- Ratcliff, A. Comparison of relative demands implicated in direct selection and scanning: Considerations from normal children. AAC 1994, 10, 67–74. [Google Scholar] [CrossRef]

- Mizuko, M.; Reichle, J.; Ratcliff, A.; Esser, J. Effects of selection techniques and array sizes on short-term visual memory. AAC 1994, 10, 237–244. [Google Scholar] [CrossRef]

- Rowland, C.; Schweigert, P.D.; Light, J.C. Cognitive skills and AAC. In Communicative Competence for Individuals who Use AAC: From Research to Effective Practice; Light, J.C., Beukelman, D.R., Reichle, J., Eds.; Paul H Brookes Publishing Co. Inc.: Baltimore, MD, USA, 2003; pp. 241–275. [Google Scholar]

- Wagner, B.; Jackson, H.M. Developmental memory capacity resources of typical children retrieving picture communication symbols using direct selection and visual linear scanning with fixed communication displays. J. Speech Lang. Hear. Res. 2006, 49, 113–126. [Google Scholar] [CrossRef]

- Higginbotham, D.J.; Shane, H.; Russell, S.; Caves, K. Access to AAC: Present, past, and future. AAC 2007, 23, 243–257. [Google Scholar] [CrossRef]

- Light, J.C.; McNaughton, D. Putting people first: Re-thinking the role of technology in augmentative and alternative communication intervention. AAC 2013, 29, 299–309. [Google Scholar] [CrossRef]

- Thistle, J.J.; Wilkinson, K.M. Working memory demands of aided augmentative and alternative communication for individuals with developmental disabilities. AAC 2013, 29, 235–245. [Google Scholar] [CrossRef]

- Flaubert, J.L.; Spicer, C.M.; Jette, A.M.; National Academies of Sciences, Engineering, and Medicine. Augmentative and Alternative Communication and Voice Products and Technologies. In The Promise of Assistive Technology to Enhance Activity and Work Participation; National Academies Press: Washington, DC, USA, 2017. [Google Scholar]

- McLaughlin, D.; Peters, B.; McInturf, K.; Eddy, B.; Kinsella, M.; Mooney, A.; Deibert, T.; Montgomery, K.; Fried-Oken, M. Decision-Making for Access to AAC Technologies in Late Stage ALS. Augment. Altern. Commun. 2021, 169, 169–199. [Google Scholar]

- Villalobos, A.E.L.; Giusiano, S.; Musso, L.; de’Sperati, C.; Riberi, A.; Spalek, P.; Calvo, A.; Moglia, C.; Roatta, S. When assistive eye tracking fails: Communicating with a brainstem-stroke patient through the pupillary accommodative response—A case study. Biomed. Signal Processing Control. 2021, 67, 102515. [Google Scholar] [CrossRef]

- Elsahar, Y.; Hu, S.; Bouazza-Marouf, K.; Kerr, D.; Mansor, A. Augmentative and alternative communication (AAC) advances: A review of configurations for individuals with a speech disability. Sensors 2019, 19, 1911. [Google Scholar] [CrossRef] [PubMed]

- Kendall, F.P.; Provance, P.G.; McCreary, E.K.; Crosby, R.W. I Muscoli: Funzioni e Test Con Postura e Dolore; Verduci Editore: Rome, Italy, 2000. [Google Scholar]

- Ganz, J.B.; Morin, K.L.; Foster, M.J.; Vannest, K.J.; Genç Tosun, D.; Gregori, E.V.; Gerow, S.L. High-technology augmentative and alternative communication for individuals with intellectual and developmental disabilities and complex communication needs: A meta-analysis. AAC 2017, 33, 224–238. [Google Scholar] [CrossRef] [PubMed]

- Mandak, K.; Light, J.; Brittlebank-Douglas, S. Exploration of multimodal alternative access for individuals with severe motor impairments: Proof of concept. Assist. Technol. 2021, 3, 1–10. [Google Scholar] [CrossRef]

- Ball, L.J.; Nordness, A.S.; Fager, S.K.; Kersch, K.; Mohr, B.; Pattee, G.L.; Beukelman, D.R. Eye gaze access of AAC technology for people with amyotrophic lateral sclerosis. J. Med. Speech-Lang. Pathol. 2010, 18, 11. [Google Scholar]

- Brumberg, J.S.; Pitt, K.M.; Mantie-Kozlowski, A.; Burnison, J.D. Brain-Computer Interfaces for Augmentative and Alternative Communication: A Tutorial. Am. J. Speech-Lang. Pathol. 2018, 27, 1–12. [Google Scholar] [CrossRef]

- Caligari, M.; Godi, M.; Giardini, M.; Colombo, R. Development of a new high sensitivity mechanical switch for augmentative and alternative communication access in people with amyotrophic lateral sclerosis. JNER 2019, 16, 1–13. [Google Scholar] [CrossRef]

- Kamper, S.J.; Maher, C.G.; Mackay, G. Global rating of change scales: A review of strengths and weaknesses and considerations for design. J. Man. Manip. Ther. 2009, 17, 163–170. [Google Scholar] [CrossRef]

- Demers, L.; Weiss-Lambrou, R.; Ska, B. The Quebec User Evaluation of Satisfaction with Assistive Technology (QUEST 2.0): An overview and recent progress. Technol. Disabil. 2002, 14, 101–105. [Google Scholar] [CrossRef]

- Koch Fager, S.; Fried-Oken, M.; Jakobs, T.; Beukelman, D.R. New and emerging access technologies for adults with complex communication needs and severe motor impairments: State of the science. AAC 2019, 35, 13–25. [Google Scholar] [CrossRef] [PubMed]

- Fager, S.; Beukelman, D.R.; Fried-Oken, M.; Jakobs, T.; Baker, J. Access interface strategies. Assist. Technol. 2012, 24, 25–33. [Google Scholar] [CrossRef]

- Farwell, L.A.; Donchin, E. Talking off the top of your head: Toward a mental prosthesis utilizing event-related brain potentials. Electroencephalogr. Clin. Neurophysiol. 1988, 70, 510–523. [Google Scholar] [CrossRef]

- Kapgate, D.; Kalbande, D.; Shrawankar, U. An optimized facial stimuli paradigm for hybrid SSVEP+ P300 brain computer interface. Cogn. Syst. Res. 2020, 59, 114–122. [Google Scholar] [CrossRef]

- Kim, J.; Lee, J.; Han, C.; Park, K. An instant donning multi-channel EEG headset (with comb-shaped dry electrodes) and BCI applications. Sensors 2019, 19, 1537. [Google Scholar] [CrossRef] [PubMed]

- Ko, L.W.; Chang, Y.; Wu, P.L.; Tzou, H.A.; Chen, S.F.; Tang, S.C.; Yeh, C.-L.; Chen, Y.-J. Development of a smart helmet for strategical BCI applications. Sensors 2019, 19, 1867. [Google Scholar] [CrossRef] [PubMed]

- Usakli, A.B. Improvement of EEG signal acquisition: An electrical aspect for state of the art of front end. Comput. Intell. Neurosci. 2010, 2010, 630649. [Google Scholar] [CrossRef]

- Cler, M.J.; Nieto-Castañón, A.; Guenther, F.H.; Fager, S.K.; Stepp, C.E. Surface electromyographic control of a novel phonemic interface for speech synthesis. AAC 2016, 32, 120–130. [Google Scholar] [CrossRef]

- Lee, D.; Lee, S.; Lee, K.J.; Lee, G. Biological surface electromyographic switch and necklace-type button switch control as an augmentative and alternative communication input device: A feasibility study. Phys. Eng. Sci. Med. 2019, 42, 839–851. [Google Scholar] [CrossRef]

- Laureys, S.; Pellas, F.; Van Eeckhout, P.; Ghorbel, S.; Schnakers, C.; Perrin, F.; Berré, J.; Faymonville, M.-E.; Pantke, K.-H.; Dama, F.; et al. The locked-in syndrome: What is it like to be conscious but paralyzed and voiceless? Prog. Brain Res. 2005, 150, 495–511. [Google Scholar]

- Bauby, J.D. The Diving Bell and the Butterfly [Le Scaphandre et le Papillon], 4th ed.; Leggatt, T.J., Ed.; Random House Value Publis: London, UK, 1997. [Google Scholar]

- Park, S.W.; Yim, Y.L.; Yi, S.H.; Kim, H.Y.; Jung, S.M. Augmentative and alternative communication training using eye blink switch for locked-in syndrome patient. Ann. Rehabil. Med. 2012, 36, 268. [Google Scholar] [CrossRef] [PubMed]

- Tonin, A.; Jaramillo-Gonzalez, A.; Rana, A.; Khalili-Ardali, M.; Birbaumer, N.; Chaudhary, U. Auditory electrooculogram-based communication system for ALS patients in transition from locked-in to complete locked-in state. Sci. Rep. 2020, 10, 1–10. [Google Scholar] [CrossRef] [PubMed]

- Light, J.; McNaughton, D.; Beukelman, D.; Fager, S.K.; Fried-Oken, M.; Jakobs, T.; Jakobs, E. Challenges and opportunities in augmentative and alternative communication: Research and technology development to enhance communication and participation for individuals with complex communication needs. AAC 2019, 35, 1–12. [Google Scholar] [CrossRef]

- Khalili-Ardali, M.; Wu, S.; Tonin, A.; Birbaumer, N.; Chaudhary, U. Neurophysiological aspects of the completely locked-in syndrome in patients with advanced amyotrophic lateral sclerosis. Clin. Neurophysiol. 2021, 132, 1064–1076. [Google Scholar] [CrossRef] [PubMed]

- Shah, S.G.S.; Robinson, I.; AlShawi, S. Developing medical device technologies from users’ perspectives: A theoretical framework for involving users in the development process. Int. J. Technol. Assess. Health Care 2009, 25, 514–521. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).