Improved Transfer-Learning-Based Facial Recognition Framework to Detect Autistic Children at an Early Stage

Abstract

1. Introduction

- We propose an improved transfer-learning-based facial recognition framework that can potentially identify autistic children with high accuracy.

- We explore an improved MobileNet-V1 model that outperforms various standard machine learning, deep learning and pre-trained models.

- We focus on a less explored area of image processing and recognition, i.e., identifying children affected by autism spectrum disorder (ASD) from facial images of normal and autistic children.

- We implement and validate a range of different machine learning and deep learning models.

- We identify relevant clusters of autistic children.

2. Materials and Methods

2.1. Dataset

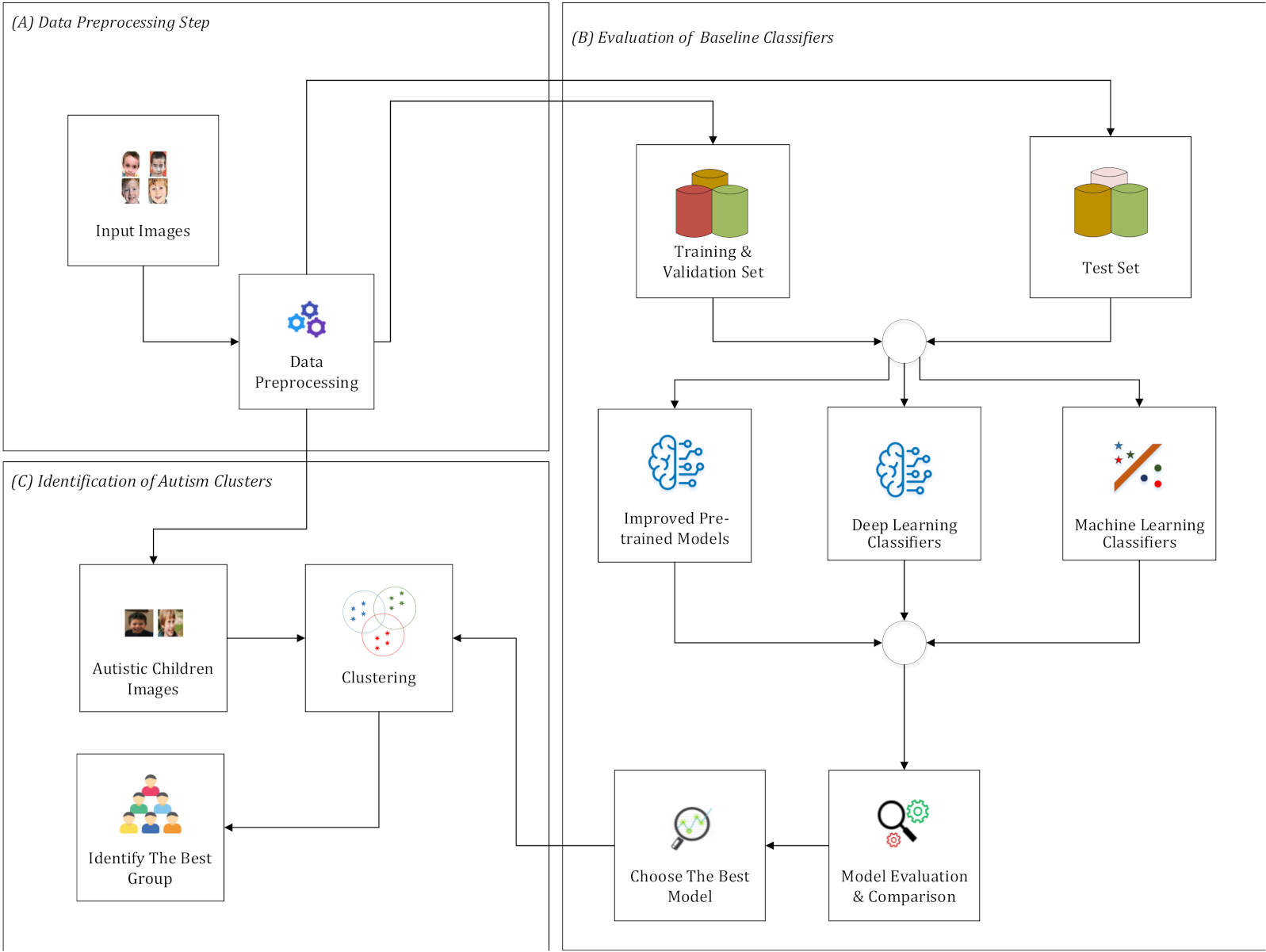

2.2. Improved Transfer-Learning-Based Autism Facial Recognition Framework

- Data Acquisition: First, the primary facial image dataset was gathered and cleaned. It is divided into training, validation and test sets for further analysis.

- Training Stage: Next, the facial training set was used to train a CNN, improved pre-trained CNN models and other machine learning classifiers. These classifiers are referred to as baseline classifiers.

- Performance of Validation and Test Set: During training, the classifiers were applied to the validation set to assess training performance. The baseline classifiers were then applied to the test set to evaluate their performance. Notably, we considered the distribution of face scanning coordinates as a discriminating feature for autism classification.

- Evaluation Metrics: Several metrics, namely accuracy, area under the curve (AUC), F-measure, G-mean, sensitivity, specificity, fall-out and miss rate, were used to verify the results of the classifiers.

- Comparison and Evaluation: After the performance of the individual classifiers on the validation and test sets was evaluated, the improved MobileNet-V1 showed the best results among all classifiers.

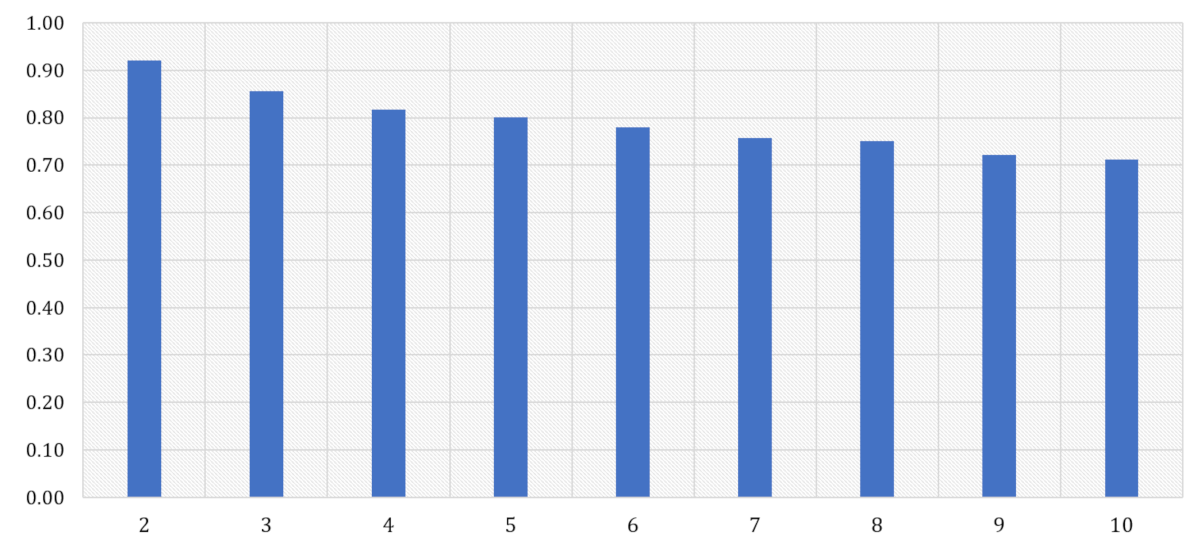

- Clustering-based Autism Sub-typing: Previous studies [16,17,18] have derived several sub-types from patients with autism for further medical and neurological analysis. We clustered only the autistic faces in order to explore autism sub-types through quantitative analysis, which helps to extract and investigate distinguishing features of autism. This approach reduces data dimensionality and captures strong, significant features that can appear in multiple clusters [19]. Therefore, this procedure can reduce false-positive and false-negative autism predictions in subsequent supervised learning tasks. To generate the clusters from the working dataset, we used the k-means algorithm (see details in Section 2.5), which has been widely employed in different fields of machine learning [16,20,21,22]. In this work, the algorithm was applied only to the autistic facial images (i.e., all autism images pooled from the training, validation and test sets), and a separate set of autism sub-types was generated for each value of k from 2 to 10. These sub-types were then used as class labels to further demonstrate the predictive ability of our selected improved MobileNet-V1 (i.e., the best-performing model for classifying autistic/normal faces in our first experiment). The problem therefore became a multi-class classification problem whose number of classes equals k (e.g., k = 3 gives a 3-class problem), rather than a binary classification of autistic versus normal faces. This multi-class task uses only the autistic faces (i.e., no controls) for training, validating and testing the improved MobileNet-V1. The classification followed 10-fold cross-validation, and the best sub-typing was selected based on the classifier's accuracy.
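The sub-typing procedure described above (cluster the autistic samples for each k from 2 to 10, treat the cluster indices as class labels, and keep the k whose labels are best predicted under 10-fold cross-validation) can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' code: it assumes each face has already been reduced to a numeric feature vector, it uses deterministic farthest-first seeding for k-means, and the hypothetical `one_nn_accuracy` scorer (a 1-nearest-neighbour classifier) only stands in for fine-tuning the improved MobileNet-V1 on each fold.

```python
import random
from statistics import mean


def sq_dist(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, iters=100):
    """Lloyd's k-means with deterministic farthest-first seeding.

    Returns one cluster label (0..k-1) per point; each label is treated
    as an autism sub-type class.
    """
    centroids = [list(points[0])]
    while len(centroids) < k:
        # Next seed: the point farthest from every centroid chosen so far.
        far = max(points, key=lambda p: min(sq_dist(p, c) for c in centroids))
        centroids.append(list(far))
    labels = None
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [min(range(k), key=lambda c: sq_dist(p, centroids[c])) for p in points]
        if new_labels == labels:  # converged: assignments stopped changing
            break
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the previous centroid if a cluster empties
                centroids[c] = [mean(dim) for dim in zip(*members)]
    return labels


def k_fold(n, folds=10, seed=0):
    """Yield (train_idx, test_idx) pairs for shuffled k-fold cross-validation.

    Assumes n >= folds so that no test fold is empty.
    """
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    for f in range(folds):
        test = idx[f::folds]
        held_out = set(test)
        yield [i for i in idx if i not in held_out], test


def one_nn_accuracy(points, labels, train, test):
    """HYPOTHETICAL stand-in for training MobileNet-V1 on one fold (1-NN)."""
    hits = 0
    for i in test:
        nearest = min(train, key=lambda t: sq_dist(points[i], points[t]))
        hits += labels[nearest] == labels[i]
    return hits / len(test)


def select_subtypes(points, k_values=range(2, 11), folds=10, score_fold=one_nn_accuracy):
    """Cluster for every k, score each labelling by mean CV accuracy, keep the best k."""
    scores = {}
    for k in k_values:
        labels = kmeans(points, k)
        scores[k] = mean(score_fold(points, labels, tr, te) for tr, te in k_fold(len(points), folds))
    best_k = max(scores, key=scores.get)
    return best_k, scores
```

A real image classifier would plug in through the `score_fold` argument. Farthest-first seeding was chosen here only because it is deterministic; it is sensitive to outliers, and library implementations such as scikit-learn's `KMeans` default to k-means++ seeding instead.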

2.3. Baseline Classifiers

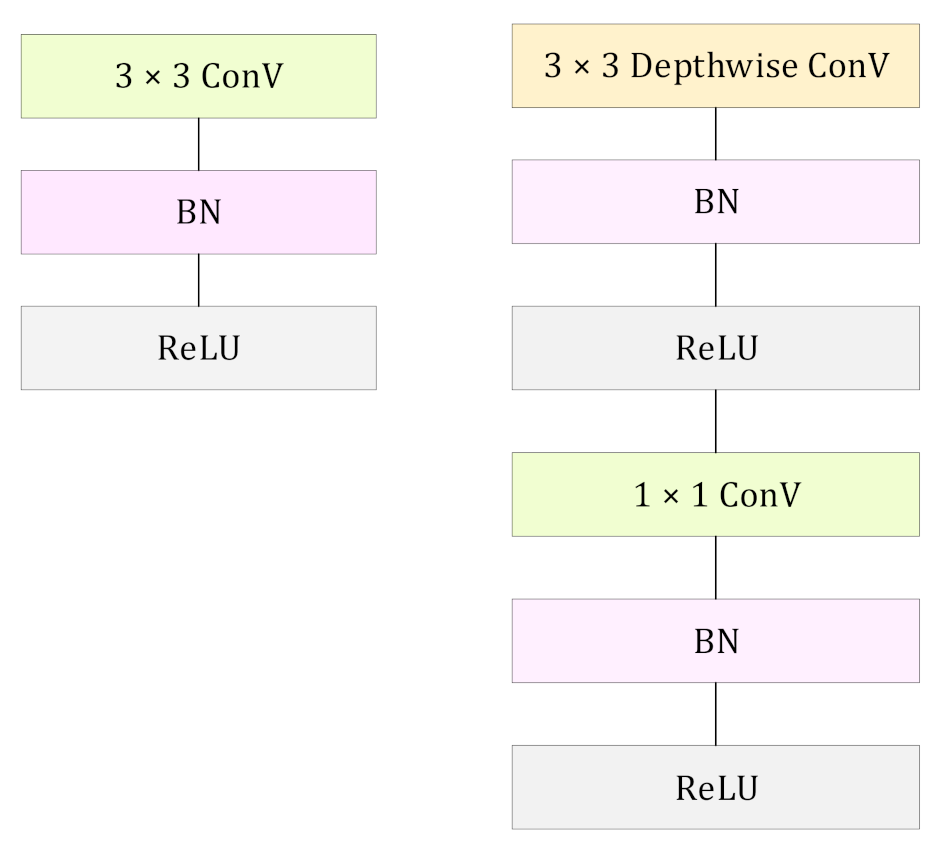

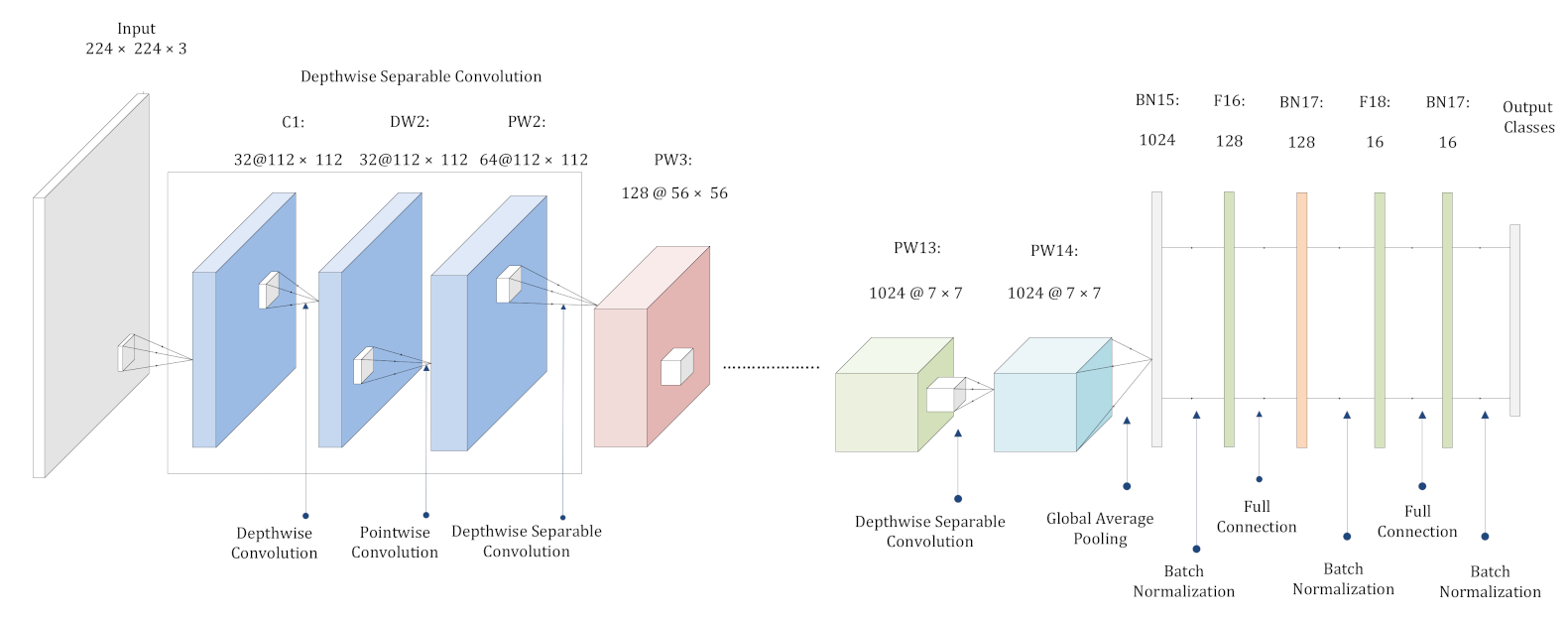

2.4. Improved MobileNet-V1

2.5. K-Means Clustering

3. Experimental Results

3.1. Evaluation of the Validation Set Results

3.2. Evaluation of the Test Set Results

4. Discussion

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix A.1. Traditional MobileNet-V1

Appendix A.2. Performance Evaluation

- Accuracy is the rate of correct classification. It is computed as the sum of TP and TN divided by the total number of samples.

- Area Under Curve (AUC) is the area under the receiver operating characteristic (ROC) curve and measures how well the positive class is separated from the negative class; it equals the probability that a randomly chosen positive instance is ranked above a randomly chosen negative one.

- F-Measure computes the harmonic mean of precision and recall.

- Geometric Mean (G-mean) is the root of the product of the class-wise sensitivities; for a binary task it is the square root of the product of sensitivity and specificity.

- Sensitivity (true-positive rate, or recall) is the proportion of actual positive cases that are correctly predicted as positive, i.e., TP divided by the sum of TP and FN.

- Specificity (true-negative rate) is the proportion of actual negative cases that are correctly predicted as negative, i.e., TN divided by the sum of TN and FP.

- Fall-Out (false-positive rate) is calculated as the ratio of FP to the sum of FP and TN.

- Miss Rate (false-negative rate) measures the ratio of FN to the sum of FN and TP.
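The definitions above can be checked with a short Python function that derives every metric from the four confusion-matrix counts. This is an illustrative sketch, assuming a binary task with autistic faces as the positive class and non-zero denominators; the counts used below are hypothetical and are not results from this study. The AUC entry is valid only for hard 0/1 predictions, where the ROC curve has a single operating point; an AUC computed from predicted probabilities needs the full ranking of scores.

```python
from math import sqrt


def binary_metrics(tp, fp, tn, fn):
    """Appendix A.2 metrics from binary confusion-matrix counts (autistic = positive)."""
    sensitivity = tp / (tp + fn)  # true-positive rate (recall)
    specificity = tn / (tn + fp)  # true-negative rate
    precision = tp / (tp + fp)
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        # With hard labels the ROC curve has one operating point, so its
        # area reduces to the mean of sensitivity and specificity.
        "auc_hard_labels": (sensitivity + specificity) / 2,
        "f_measure": 2 * precision * sensitivity / (precision + sensitivity),
        "g_mean": sqrt(sensitivity * specificity),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "fall_out": fp / (fp + tn),   # false-positive rate = 1 - specificity
        "miss_rate": fn / (fn + tp),  # false-negative rate = 1 - sensitivity
    }
```

For hypothetical counts TP = 40, FP = 5, TN = 45 and FN = 10, the function gives an accuracy of 0.85, a sensitivity of 0.8, a specificity of 0.9, a fall-out of 0.1 and a miss rate of 0.2. Because fall-out and miss rate are the complements of specificity and sensitivity, they carry no information beyond those two values.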

References

- Goh, K.L.; Morris, S.; Rosalie, S.; Foster, C.; Falkmer, T.; Tan, T. Typically developed adults and adults with autism spectrum disorder classification using centre of pressure measurements. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 844–848, ISSN 2379-190X.

- Autism Speaks. What Is Autism? Retrieved 17 November 2011.

- Satu, M.S.; Farida Sathi, F.; Arifen, M.S.; Hanif Ali, M.; Moni, M.A. Early Detection of Autism by Extracting Features: A Case Study in Bangladesh. In Proceedings of the 2019 International Conference on Robotics, Electrical and Signal Processing Techniques (ICREST), Dhaka, Bangladesh, 10–12 January 2019; pp. 400–405.

- Guillon, Q.; Hadjikhani, N.; Baduel, S.; Rogé, B. Visual social attention in autism spectrum disorder: Insights from eye tracking studies. Neurosci. Biobehav. Rev. 2014, 42, 279–297.

- Haque, M.I.U.; Valles, D. A Facial Expression Recognition Approach Using DCNN for Autistic Children to Identify Emotions. In Proceedings of the 2018 IEEE 9th Annual Information Technology, Electronics and Mobile Communication Conference (IEMCON), Vancouver, BC, Canada, 1–3 November 2018; pp. 546–551.

- Rudovic, O.; Utsumi, Y.; Lee, J.; Hernandez, J.; Ferrer, E.C.; Schuller, B.; Picard, R.W. CultureNet: A Deep Learning Approach for Engagement Intensity Estimation from Face Images of Children with Autism. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 339–346, ISSN 2153-0866.

- Yolcu, G.; Oztel, I.; Kazan, S.; Oz, C.; Palaniappan, K.; Lever, T.E.; Bunyak, F. Facial expression recognition for monitoring neurological disorders based on convolutional neural network. Multimed. Tools Appl. 2019, 78, 31581–31603.

- Akter, T.; Satu, M.S.; Barua, L.; Sathi, F.F.; Ali, M.H. Statistical Analysis of the Activation Area of Fusiform Gyrus of Human Brain to Explore Autism. Int. J. Comput. Sci. Inf. Secur. (IJCSIS) 2017, 15, 331–337.

- Satu, M.S.; Azad, M.S.; Haque, M.F.; Imtiaz, S.K.; Akter, T.; Barua, L.; Rashid, M.; Soron, T.R.; Al Mamun, K.A. Prottoy: A Smart Phone Based Mobile Application to Detect Autism of Children in Bangladesh. In Proceedings of the 2019 4th International Conference on Electrical Information and Communication Technology (EICT), Khulna, Bangladesh, 20–22 December 2019; pp. 1–6.

- Schelinski, S.; Borowiak, K.; von Kriegstein, K. Temporal voice areas exist in autism spectrum disorder but are dysfunctional for voice identity recognition. Soc. Cogn. Affect. Neurosci. 2016, 11, 1812–1822.

- Jiang, X.; Chen, Y.F. Facial Image Processing. In Applied Pattern Recognition; Bunke, H., Kandel, A., Last, M., Eds.; Studies in Computational Intelligence; Springer: Berlin/Heidelberg, Germany, 2008; pp. 29–48.

- Garcia, C.; Ostermann, J.; Cootes, T. Facial Image Processing. Eurasip J. Image Video Process. 2008, 2007, 1–2.

- Yolcu, G.; Oztel, I.; Kazan, S.; Oz, C.; Palaniappan, K.; Lever, T.E.; Bunyak, F. Deep learning-based facial expression recognition for monitoring neurological disorders. In Proceedings of the 2017 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Kansas City, MO, USA, 13–16 November 2017; pp. 1652–1657.

- Joseph, L.; Pramod, S.; Nair, L.S. Emotion recognition in a social robot for robot-assisted therapy to autistic treatment using deep learning. In Proceedings of the 2017 International Conference on Technological Advancements in Power and Energy (TAP Energy), Kollam, India, 21–23 December 2017; pp. 1–6.

- Piosenka, G. Detect Autism from a Facial Image. Available online: https://cutt.ly/ibIXt5a (accessed on 26 December 2020).

- Hu, V.W.; Steinberg, M.E. Novel clustering of items from the Autism Diagnostic Interview-Revised to define phenotypes within autism spectrum disorders. Autism Res. Off. J. Int. Soc. Autism Res. 2009, 2, 67–77.

- Ellegood, J.; Anagnostou, E.; Babineau, B.; Crawley, J.; Lin, L.; Genestine, M.; DiCicco-Bloom, E.; Lai, J.; Foster, J.A.; Peñagarikano, O.; et al. Clustering autism-using neuroanatomical differences in 26 mouse models to gain insight into the heterogeneity. Mol. Psychiatry 2015, 20, 118–125.

- Vargason, T.; Frye, R.E.; McGuinness, D.L.; Hahn, J. Clustering of co-occurring conditions in autism spectrum disorder during early childhood: A retrospective analysis of medical claims data. Autism Res. 2019, 12, 1272–1285.

- Baadel, S.; Thabtah, F.; Lu, J. A clustering approach for autistic trait classification. Inform. Health Soc. Care 2020, 45, 309–326.

- Satu, M.S.; Khan, M.I.; Mahmud, M.; Uddin, S.; Summers, M.A.; Quinn, J.M.W.; Moni, M.A. TClustVID: A novel machine learning classification model to investigate topics and sentiment in COVID-19 tweets. Knowl. Based Syst. 2021, 226, 107126.

- Ruzich, E.; Allison, C.; Smith, P.; Watson, P.; Auyeung, B.; Ring, H.; Baron-Cohen, S. Subgrouping siblings of people with autism: Identifying the broader autism phenotype. Autism Res. 2016, 9, 658–665.

- Stevens, E.; Atchison, A.; Stevens, L.; Hong, E.; Granpeesheh, D.; Dixon, D.; Linstead, E. A Cluster Analysis of Challenging Behaviors in Autism Spectrum Disorder. In Proceedings of the 2017 16th IEEE International Conference on Machine Learning and Applications (ICMLA), Cancun, Mexico, 18–21 December 2017; pp. 661–666.

- Akter, T.; Khan, M.I.; Ali, M.H.; Satu, M.S.; Uddin, M.J.; Moni, M.A. Improved Machine Learning based Classification Model for Early Autism Detection. In Proceedings of the 2021 2nd International Conference on Robotics, Electrical and Signal Processing Techniques (ICREST), Online, 5–7 January 2021; pp. 742–747.

- Akter, T.; Ali, M.H.; Khan, M.I.; Satu, M.S.; Moni, M.A. Machine Learning Model To Predict Autism Investigating Eye-Tracking Dataset. In Proceedings of the 2021 2nd International Conference on Robotics, Electrical and Signal Processing Techniques (ICREST), Online, 5–7 January 2021; pp. 383–387.

- Gudipati, V.K.; Barman, O.R.; Gaffoor, M.; Abuzneid, A. Efficient facial expression recognition using adaboost and haar cascade classifiers. In Proceedings of the 2016 Annual Connecticut Conference on Industrial Electronics, Technology Automation (CT-IETA), Bridgeport, CT, USA, 14–15 October 2016; pp. 1–4.

- Salmam, F.Z.; Madani, A.; Kissi, M. Facial Expression Recognition Using Decision Trees. In Proceedings of the 2016 13th International Conference on Computer Graphics, Imaging and Visualization (CGiV), Beni Mellal, Morocco, 29 March–1 April 2016; pp. 125–130.

- Howlader, K.C.; Satu, M.S.; Barua, A.; Moni, M.A. Mining Significant Features of Diabetes Mellitus Applying Decision Trees: A Case Study In Bangladesh. bioRxiv 2018, 481994.

- Huynh, X.P.; Park, S.M.; Kim, Y.G. Detection of Driver Drowsiness Using 3D Deep Neural Network and Semi-Supervised Gradient Boosting Machine. In Computer Vision–ACCV 2016 Workshops; Lecture Notes in Computer Science; Chen, C.S., Lu, J., Ma, K.K., Eds.; Springer: Cham, Switzerland, 2017; pp. 134–145.

- Zennifa, F.; Ageno, S.; Hatano, S.; Iramina, K. Hybrid System for Engagement Recognition During Cognitive Tasks Using a CFS + KNN Algorithm. Sensors 2018, 18, 3691.

- Liu, T.L.; Wang, P.W.; Yang, Y.H.C.; Shyi, G.C.W.; Yen, C.F. Association between Facial Emotion Recognition and Bullying Involvement among Adolescents with High-Functioning Autism Spectrum Disorder. Int. J. Environ. Res. Public Health 2019, 16, 5125.

- Thepade, S.D.; Abin, D. Face Gender Recognition Using Multi Layer Perceptron with OTSU Segmentation. In Proceedings of the 2018 Fourth International Conference on Computing Communication Control and Automation (ICCUBEA), Pune, India, 16–18 August 2018; pp. 1–5.

- Chen, Y.P.; Liu, C.H.; Chou, K.Y.; Wang, S.Y. Real-time and low-memory multi-face detection system design based on naive Bayes classifier using FPGA. In Proceedings of the 2016 International Automatic Control Conference (CACS), Taichung, Taiwan, 9–11 November 2016; pp. 7–12.

- Jarraya, S.K.; Masmoudi, M.; Hammami, M. A comparative study of Autistic Children Emotion recognition based on Spatio-Temporal and Deep analysis of facial expressions features during a Meltdown Crisis. Multimed. Tools Appl. 2021, 80, 83–125.

- Mostafa, S.; Yin, W.; Wu, F.X. Autoencoder Based Methods for Diagnosis of Autism Spectrum Disorder. In Computational Advances in Bio and Medical Sciences; Lecture Notes in Computer Science; Măndoiu, I., Murali, T.M., Narasimhan, G., Rajasekaran, S., Skums, P., Zelikovsky, A., Eds.; Springer: Cham, Switzerland, 2020; pp. 39–51.

- Vinay, A.; Gupta, A.; Kamath, V.R.; Bharadwaj, A.; Srinivas, A.; Murthy, K.N.B.; Natarajan, S. Facial Analysis Using Jacobians and Gradient Boosting. In Mathematical Modelling and Scientific Computing with Applications; Springer Proceedings in Mathematics & Statistics; Manna, S., Datta, B.N., Ahmad, S.S., Eds.; Springer: Singapore, 2020; pp. 393–404.

- Tong, X.; Sun, S.; Fu, M. Data Augmentation and Second-Order Pooling for Facial Expression Recognition. IEEE Access 2019, 7, 86821–86828.

- Yang, B.; Cao, J.; Ni, R.; Zhang, Y. Facial Expression Recognition Using Weighted Mixture Deep Neural Network Based on Double-Channel Facial Images. IEEE Access 2018, 6, 4630–4640.

- Raghavendra, R.; Raja, K.B.; Venkatesh, S.; Busch, C. Transferable Deep-CNN Features for Detecting Digital and Print-Scanned Morphed Face Images. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–26 July 2017; pp. 1822–1830, ISSN 2160-7516.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. arXiv 2019, arXiv:1801.04381.

- Chollet, F. Keras: The Python Deep Learning Library; Astrophysics Source Code Library, 2018; p. ascl:1806.022. Available online: https://en.wikipedia.org/wiki/Astrophysics_Source_Code_Library (accessed on 1 April 2021).

- Satu, M.S.; Rahman, S.; Khan, M.I.; Abedin, M.Z.; Kaiser, M.S.; Mahmud, M. Towards Improved Detection of Cognitive Performance Using Bidirectional Multilayer Long-Short Term Memory Neural Network. In Brain Informatics; Lecture Notes in Computer Science; Mahmud, M., Vassanelli, S., Kaiser, M.S., Zhong, N., Eds.; Springer: Cham, Switzerland, 2020; pp. 297–306.

- Bisong, E. Google Colaboratory. In Building Machine Learning and Deep Learning Models on Google Cloud Platform: A Comprehensive Guide for Beginners; Bisong, E., Ed.; Apress: Berkeley, CA, USA, 2019; pp. 59–64.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255, ISSN 1063-6919.

- Jain, N.; Kumar, S.; Kumar, A.; Shamsolmoali, P.; Zareapoor, M. Hybrid deep neural networks for face emotion recognition. Pattern Recognit. Lett. 2018, 115, 101–106.

- Akter, T.; Shahriare Satu, M.; Khan, M.I.; Ali, M.H.; Uddin, S.; Lió, P.; Quinn, J.M.W.; Moni, M.A. Machine Learning-Based Models for Early Stage Detection of Autism Spectrum Disorders. IEEE Access 2019, 7, 166509–166527.

- Aldridge, K.; George, I.D.; Cole, K.K.; Austin, J.R.; Takahashi, T.N.; Duan, Y.; Miles, J.H. Facial phenotypes in subgroups of prepubertal boys with autism spectrum disorders are correlated with clinical phenotypes. Mol. Autism 2011, 2, 15.

Performance of all classifiers on the validation set.

| Classifier | Accuracy | AUC | F-Measure | G-Mean | Sensitivity | Specificity | Fall-Out | Miss Rate |
|---|---|---|---|---|---|---|---|---|
| AdaBoost | 0.6200 | 0.6200 | 0.6198 | 0.6200 | 0.6200 | 0.6200 | 0.3800 | 0.3800 |
| DT | 0.6000 | 0.6000 | 0.5998 | 0.6000 | 0.6000 | 0.6000 | 0.4000 | 0.4000 |
| GB | 0.7100 | 0.7100 | 0.7097 | 0.7100 | 0.7100 | 0.7100 | 0.2900 | 0.2900 |
| KNN | 0.6200 | 0.6200 | 0.5824 | 0.6200 | 0.6200 | 0.6200 | 0.3800 | 0.3800 |
| LR | 0.7000 | 0.7000 | 0.6981 | 0.7000 | 0.7000 | 0.7000 | 0.3000 | 0.3000 |
| MLP | 0.6400 | 0.6400 | 0.6279 | 0.6400 | 0.6400 | 0.6400 | 0.3600 | 0.3600 |
| NB | 0.6600 | 0.6600 | 0.6578 | 0.6600 | 0.6600 | 0.6600 | 0.3400 | 0.3400 |
| RF | 0.7600 | 0.7600 | 0.7600 | 0.7600 | 0.7600 | 0.7600 | 0.2400 | 0.2400 |
| SVM | 0.6700 | 0.6700 | 0.6692 | 0.6700 | 0.6700 | 0.6700 | 0.3300 | 0.3300 |
| XGB | 0.7300 | 0.7300 | 0.7300 | 0.7300 | 0.7300 | 0.7300 | 0.2700 | 0.2700 |
| CNN | 0.7200 | 0.7200 | 0.7190 | 0.7200 | 0.7200 | 0.7200 | 0.2800 | 0.2800 |
| DenseNet121 | 0.7800 | 0.7800 | 0.7786 | 0.7800 | 0.7800 | 0.7800 | 0.2200 | 0.2200 |
| ResNet50 | 0.8000 | 0.8000 | 0.8000 | 0.8000 | 0.8000 | 0.8000 | 0.2000 | 0.2000 |
| VGG16 | 0.7100 | 0.7100 | 0.7014 | 0.7100 | 0.7100 | 0.7100 | 0.2900 | 0.2900 |
| VGG19 | 0.7600 | 0.7600 | 0.7478 | 0.7600 | 0.7600 | 0.7600 | 0.2400 | 0.2400 |
| MobileNet-V1 | 0.8300 | 0.8300 | 0.8296 | 0.8300 | 0.8300 | 0.8300 | 0.1700 | 0.1700 |
| MobileNet-V2 | 0.6200 | 0.6200 | 0.6176 | 0.6200 | 0.6200 | 0.6200 | 0.3800 | 0.3800 |

Performance of all classifiers on the test set.

| Classifier | Accuracy | AUC | F-Measure | G-Mean | Sensitivity | Specificity | Fall-Out | Miss Rate |
|---|---|---|---|---|---|---|---|---|
| AdaBoost | 0.6633 | 0.6633 | 0.6625 | 0.6633 | 0.6633 | 0.6633 | 0.3367 | 0.3367 |
| DT | 0.6633 | 0.6633 | 0.6631 | 0.6633 | 0.6633 | 0.6633 | 0.3367 | 0.3367 |
| GB | 0.7333 | 0.7333 | 0.7331 | 0.7333 | 0.7333 | 0.7333 | 0.2667 | 0.2667 |
| KNN | 0.6867 | 0.6867 | 0.6627 | 0.6867 | 0.6867 | 0.6867 | 0.3133 | 0.3133 |
| LR | 0.6933 | 0.6933 | 0.6920 | 0.6933 | 0.6933 | 0.6933 | 0.3067 | 0.3067 |
| MLP | 0.6767 | 0.6767 | 0.6646 | 0.6767 | 0.6767 | 0.6767 | 0.3233 | 0.3233 |
| NB | 0.6833 | 0.6833 | 0.6825 | 0.6833 | 0.6833 | 0.6833 | 0.3167 | 0.3167 |
| RF | 0.7600 | 0.7600 | 0.7599 | 0.7600 | 0.7600 | 0.7600 | 0.2400 | 0.2400 |
| SVM | 0.7400 | 0.7400 | 0.7399 | 0.7400 | 0.7400 | 0.7400 | 0.2600 | 0.2600 |
| XGB | 0.7400 | 0.7400 | 0.7400 | 0.7400 | 0.7400 | 0.7400 | 0.2600 | 0.2600 |
| CNN | 0.7000 | 0.7000 | 0.6998 | 0.7000 | 0.7000 | 0.7000 | 0.3000 | 0.3000 |
| DenseNet121 | 0.8367 | 0.8367 | 0.8365 | 0.8367 | 0.8367 | 0.8367 | 0.1633 | 0.1633 |
| ResNet50 | 0.8100 | 0.8100 | 0.8082 | 0.8100 | 0.8100 | 0.8100 | 0.1900 | 0.1900 |
| VGG16 | 0.7667 | 0.7667 | 0.7615 | 0.7667 | 0.7667 | 0.7667 | 0.2333 | 0.2333 |
| VGG19 | 0.7133 | 0.7133 | 0.6948 | 0.7133 | 0.7133 | 0.7133 | 0.2867 | 0.2867 |
| MobileNet-V1 | 0.9067 | 0.9067 | 0.9067 | 0.9067 | 0.9067 | 0.9067 | 0.0933 | 0.0933 |
| MobileNet-V2 | 0.6467 | 0.6467 | 0.6463 | 0.6467 | 0.6467 | 0.6467 | 0.3533 | 0.3533 |

ImageNet accuracy of the base pre-trained models and accuracy of the improved models on the autism dataset.

| Pre-Trained Model | ImageNet Top-1 Accuracy (Base) | ImageNet Top-5 Accuracy (Base) | Autism Dataset Validation Accuracy (Improved) | Autism Dataset Test Accuracy (Improved) |
|---|---|---|---|---|
| DenseNet121 | 0.7500 | 0.9230 | 0.7800 | 0.8367 |
| ResNet50 | 0.7490 | 0.9210 | 0.8000 | 0.8100 |
| VGG16 | 0.7130 | 0.9010 | 0.7100 | 0.7667 |
| VGG19 | 0.7130 | 0.9000 | 0.7600 | 0.7133 |
| MobileNet-V1 | 0.7040 | 0.8950 | 0.8300 | 0.9067 |
| MobileNet-V2 | 0.7130 | 0.9010 | 0.6200 | 0.6467 |

Performance of the base and improved MobileNet-V1 on the validation and test sets.

| Set | Classifier | Accuracy | AUC | F-Measure | G-Mean | Sensitivity | Specificity | Fall-Out | Miss Rate |
|---|---|---|---|---|---|---|---|---|---|
| Validation | Base MobileNet-V1 | 0.7800 | 0.7800 | 0.7778 | 0.7800 | 0.7800 | 0.7800 | 0.2200 | 0.2200 |
| Validation | Improved MobileNet-V1 | 0.8300 | 0.8300 | 0.8296 | 0.8300 | 0.8300 | 0.8300 | 0.1700 | 0.1700 |
| Test | Base MobileNet-V1 | 0.8300 | 0.8300 | 0.8298 | 0.8300 | 0.8300 | 0.8300 | 0.1700 | 0.1700 |
| Test | Improved MobileNet-V1 | 0.9067 | 0.9067 | 0.9067 | 0.9067 | 0.9067 | 0.9067 | 0.0933 | 0.0933 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Akter, T.; Ali, M.H.; Khan, M.I.; Satu, M.S.; Uddin, M.J.; Alyami, S.A.; Ali, S.; Azad, A.; Moni, M.A. Improved Transfer-Learning-Based Facial Recognition Framework to Detect Autistic Children at an Early Stage. Brain Sci. 2021, 11, 734. https://doi.org/10.3390/brainsci11060734
