TSMG: A Deep Learning Framework for Recognizing Human Learning Style Using EEG Signals

Abstract

1. Introduction

1.1. Field Overview

1.1.1. Learning Style

1.1.2. Existing Methods to Recognize Learning Style

1.1.3. Relationship between Learning Style and EEG

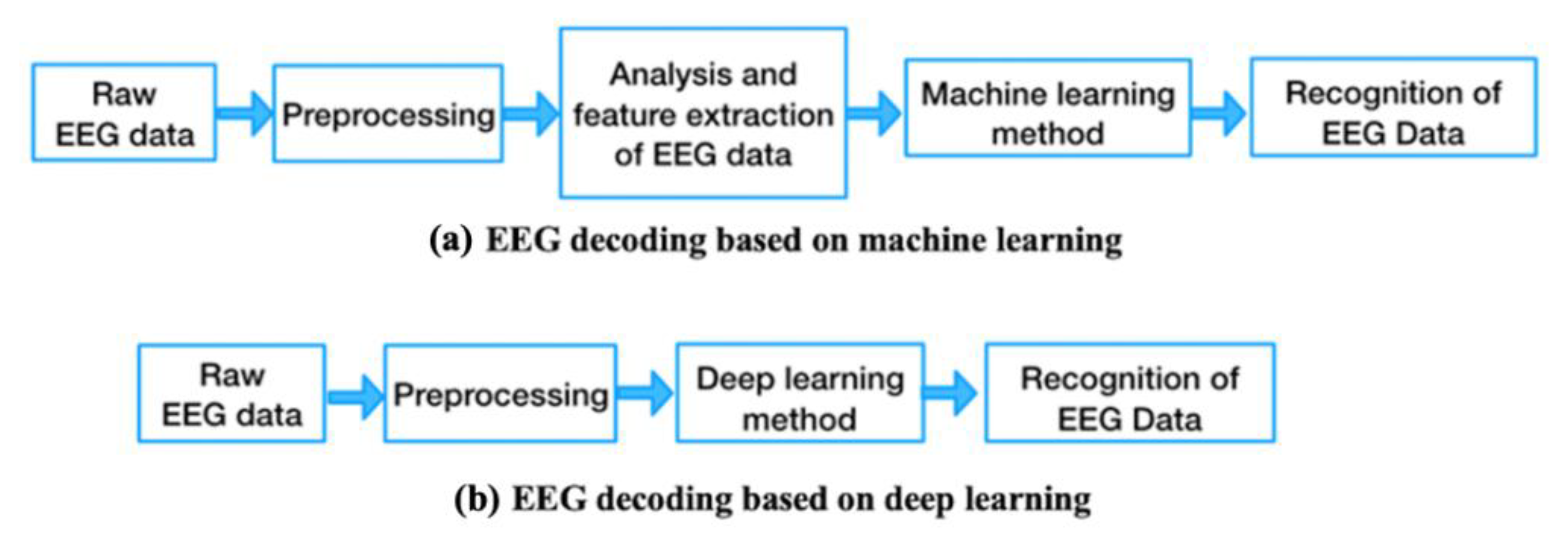

1.1.4. Basic Process of EEG Data Processing

1.1.5. Experiment to Recognize Learning Style Using EEG Features

- (1) Labeling subjects’ learning styles: We first asked the subjects whether they were willing to fill out the Index of Learning Styles (ILS) questionnaire, and selected only those who were. We translated each ILS item in a straightforward, detailed manner and explained its meaning to the subjects before they filled it out. The subjects were asked to answer based on careful consideration of their own actual situation. The resulting ILS scores provided a reliable basis for labeling the subjects’ learning styles.

- (2) Evoking EEG signals related to learning style: Raven’s Advanced Progressive Matrices (RAPM) was selected as the stimulus. RAPM asks subjects to reason logically about the rules governing the symbols in a matrix diagram; sample RAPM test questions are shown in [28]. RAPM can effectively evoke the differences in the subjects’ learning styles in the processing dimension, while ensuring that the stimulus generates as few invalid signals as possible. Because RAPM prompts subjects to think logically, it stimulates brain processing.

- (3) Collecting the EEG data: The subjects wore a brain–computer interface device so that their EEG data could be recorded. The Emotiv Epoc+ was used because it is lightweight and easy to use, which can reduce the subjects’ stress or nervousness during the study while still delivering reliable results [29]. One computer presented the stimuli to the subject, and a second computer simultaneously recorded the EEG signals.

- (4) Processing EEG data and building the recognition model: The raw EEG data are preprocessed (including removing unused frequency ranges and EOG and EMG artifacts), and the preprocessed data are then input into a recognition model (e.g., a machine learning or deep learning method) to recognize the subjects’ learning styles.
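The frequency-range step of the preprocessing in item (4) can be illustrated with a minimal sketch. It is not the authors' pipeline: the 128 Hz sampling rate, the 4–45 Hz pass band, the synthetic signal, and the function name `dft_bandpass` are all assumptions made for illustration, and a real pipeline would use a proper filter design plus dedicated EOG/EMG artifact removal, which is not shown here.

```python
import cmath
import math

def dft_bandpass(signal, fs, low_hz, high_hz):
    """Zero every DFT bin outside [low_hz, high_hz] and transform back."""
    n = len(signal)
    # Forward DFT (O(n^2), acceptable for a short illustrative signal).
    spectrum = [
        sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]
    for k in range(n):
        # Bins k and n - k carry the same physical frequency.
        freq = min(k, n - k) * fs / n
        if not (low_hz <= freq <= high_hz):
            spectrum[k] = 0
    # Inverse DFT; for a real input the imaginary part is numerical noise.
    return [
        (sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n).real
        for t in range(n)
    ]

fs = 128  # assumed sampling rate in Hz
n = 128   # one second of data
slow_drift = [math.sin(2 * math.pi * 1 * t / fs) for t in range(n)]   # 1 Hz, outside the band
alpha_wave = [math.sin(2 * math.pi * 10 * t / fs) for t in range(n)]  # 10 Hz, inside the band
raw = [d + a for d, a in zip(slow_drift, alpha_wave)]

# Assumed pass band of 4-45 Hz (theta through gamma).
filtered = dft_bandpass(raw, fs, low_hz=4, high_hz=45)
```

In this toy case the 1 Hz drift is removed and the 10 Hz component passes through essentially unchanged, which is the behavior expected of the "removing the unused frequency range" step.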

1.2. Literature Review

1.2.1. Recognition of Learning Style

1.2.2. Application of EEG

1.2.3. Processing Variable-Length EEG Data

1.2.4. Methods to Recognize EEG Data

1.3. Problem Focus and Solution

- (1) How do we deal with variable-length data more efficiently?

- (2) How do we reduce the cost of calculation while increasing accuracy?

- (3) How do we address the absence of an EEG dataset on learning styles?

1.4. Highlights

- (1) We design a deep learning model (TSMG) that combines a non-overlapping sliding window, 1D spatio-temporal convolutions, multi-scale feature extraction, global average pooling, and a group voting mechanism to recognize the features of EEG signals and to solve the problem of processing variable-length EEG data. The proposed model improves recognition accuracy by nearly 5% compared with prevalent methods, while reducing the amount of computation by 41.93%. The model can also be applied to variable-length data in other fields.

- (2) We develop an EEG dataset (the LSEEG dataset) containing features of learning styles in the processing dimension. It can be used to test and compare models for recognizing learning styles, and can support the application and further development of EEG technology for identifying learning styles.
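To make the handling of variable-length data in highlight (1) concrete, the following minimal sketch cuts a recording into non-overlapping windows, classifies each window, and takes a majority vote. It is a sketch under stated assumptions, not the TSMG implementation: `toy_classifier` is a hypothetical stand-in for the trained network, the labels "active" and "reflective" are illustrative names for the two poles of the processing dimension, and dropping the incomplete last window is an assumption.

```python
from collections import Counter

def split_non_overlapping(samples, window_len):
    """Cut a recording into consecutive windows; the incomplete tail is dropped (assumption)."""
    n_windows = len(samples) // window_len
    return [samples[i * window_len:(i + 1) * window_len] for i in range(n_windows)]

def group_vote(window_labels):
    """Return the most frequent per-window label (ties go to the label seen first)."""
    return Counter(window_labels).most_common(1)[0][0]

def toy_classifier(window):
    # Hypothetical stand-in for the trained network: thresholds the window mean.
    return "active" if sum(window) / len(window) > 0 else "reflective"

# A recording of arbitrary length (11 samples) is reduced to fixed-length inputs.
recording = [0.4, 0.1, -0.2, 0.3, 0.5, 0.2, -0.6, -0.1, -0.3, 0.7, 0.2]
windows = split_non_overlapping(recording, window_len=3)
labels = [toy_classifier(w) for w in windows]
prediction = group_vote(labels)
```

Because every window has the same length, the classifier never sees a variable-length input; the recording length only changes how many votes are cast.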

2. Methodology

2.1. Review of Basic Knowledge

2.2. General Structure of Proposed TSMG Model

2.3. Non-Overlapping Sliding Window

2.4. 1D Spatio-Temporal Convolutional Layer

- (1) Temporal convolution: As shown in Figure 4a, the 1D convolution is computed along the time axis on each channel of the original EEG signals. Its output is a set of temporal features of the EEG signals covering different bandpass frequencies, which suits frequency recognition over a short time scale.

- (2) Spatial convolution: As shown in Figure 4b, the spatial convolution is a convolutional filter that acts across the channels and extracts the characteristics of the spatial distribution over them. Spatial convolution is also often used to decompose convolution operations, which reduces the number of training parameters and the time needed to train the model.
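The two operations can be sketched in plain Python on a tiny channels-by-time array. This is an illustration only: the kernel values, channel weights, and array sizes are made up, a single filter is shown rather than a full layer, and, as in most deep learning libraries, "convolution" here means sliding a kernel without flipping it.

```python
def temporal_conv(eeg, kernel):
    """Slide one 1D kernel along the time axis of every channel (no padding)."""
    k = len(kernel)
    return [
        [sum(row[t + i] * kernel[i] for i in range(k)) for t in range(len(row) - k + 1)]
        for row in eeg
    ]

def spatial_conv(eeg, channel_weights):
    """Mix all channels at each time step using one weight per channel."""
    n_times = len(eeg[0])
    return [
        sum(w * row[t] for w, row in zip(channel_weights, eeg))
        for t in range(n_times)
    ]

# Toy input: 3 channels x 5 time samples.
eeg = [
    [1.0, 2.0, 3.0, 4.0, 5.0],
    [0.0, 1.0, 0.0, 1.0, 0.0],
    [2.0, 2.0, 2.0, 2.0, 2.0],
]
temporal = temporal_conv(eeg, kernel=[0.5, 0.5])                       # 3 x 4
spatial = spatial_conv(temporal, channel_weights=[1.0, -1.0, 0.0])    # length 4

# Parameter count for one filter over 14 channels and 7 time steps:
# a full 2D kernel versus a temporal kernel followed by a spatial kernel.
full_kernel_params = 14 * 7
decomposed_params = 14 + 7
```

The last two lines show why the decomposition saves parameters: for a single filter, 98 weights shrink to 21 when the 2D kernel is replaced by a temporal kernel followed by a spatial one.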

2.5. Multi-Scale Feature Extraction Module

2.6. Global Average Pooling

2.7. Group Voting Mechanism

3. Proposed EEG Dataset—LSEEG Dataset

3.1. Details of LSEEG Dataset

3.2. Visualization of EEG Responses of LSEEG Dataset

3.3. Two-Tailed Paired t-Test on EEG Responses of LSEEG Dataset

4. Parameter Setting and Model Training

4.1. Parameter Setting of TSMG Model

- (1) Learning rate: The learning rate affects the convergence of the model [62]. In this paper, a learning rate decay method was used, in which the learning rate gradually decays as training proceeds. The algorithm is as follows:

- (2) Loss function: The loss function measures the performance of the model [62]. Cross-entropy loss was used in this paper. It is the most commonly used loss function for classification tasks, and is defined as follows:

- (3) Optimizer: The optimizer minimizes the loss in such a way that parameter updates are not affected by changes in the scale of the gradient. Its formula is as follows:
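The formulas for these three settings do not appear in this text, so the sketch below should be read as one plausible instantiation rather than the authors' exact choices. Cross-entropy is named in the text; the exponential form of the decay, the use of Adam (suggested only by the scale-invariance property described in item (3)), and all numeric values are assumptions.

```python
import math

def exponential_decay(initial_lr, decay_rate, epoch):
    """Assumed decay schedule: lr_t = lr_0 * decay_rate ** epoch."""
    return initial_lr * decay_rate ** epoch

def cross_entropy(probs, true_index):
    """Cross-entropy for one sample with a one-hot target: -log p(true class)."""
    return -math.log(probs[true_index])

def adam_step(param, grad, m, v, step, lr, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter (Adam itself is an assumption here)."""
    m = beta1 * m + (1 - beta1) * grad          # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad * grad   # running mean of squared gradients
    m_hat = m / (1 - beta1 ** step)             # bias correction
    v_hat = v / (1 - beta2 ** step)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v

lr_epoch_2 = exponential_decay(initial_lr=0.01, decay_rate=0.9, epoch=2)
loss = cross_entropy([0.7, 0.3], true_index=0)

# The first update has nearly the same size whether the gradient is 1 or 100,
# which is the scale-invariance property mentioned in item (3).
p_small, _, _ = adam_step(param=0.0, grad=1.0, m=0.0, v=0.0, step=1, lr=0.01)
p_large, _, _ = adam_step(param=0.0, grad=100.0, m=0.0, v=0.0, step=1, lr=0.01)
```

Dividing the gradient mean by the square root of the squared-gradient mean is what cancels the gradient's scale, so rescaling the loss barely changes the step size.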

4.2. Training Process of TSMG Model

4.3. Parameter Setting and Training of Models for Comparison

4.3.1. Feature Extraction of the Compared Models

4.3.2. Parameter Setting of the Compared Models

5. Evaluation

5.1. Analysis of Effectiveness of Multi-Scale Convolution

5.2. Analyzing Effectiveness of 1D Convolution

5.3. Analysis of Overall Accuracy

5.4. Visualizing Intermediate Results

5.5. Analyzing Contribution of EEG Leads

5.6. Statistical Hypothesis Test of Accuracy

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Debello, T.C. Comparison of eleven major learning styles models: Variables; appropriate populations; validity of instrumentation and the research behind them. Read. Writ. Learn. Disabil. 1990, 6, 203–222.

- Bernard, J.; Chang, T.W.; Popescu, E.; Graf, S. Learning style identifier: Improving the precision of learning style identification through computational intelligence algorithms. Expert Syst. Appl. 2017, 75, 94–108.

- Kirschner, P.A. Stop propagating the learning styles myth. Comput. Educ. 2017, 106, 166–171.

- Felder, R.M. Learning and teaching styles in engineering education. Eng. Educ. 1988, 78, 674–681.

- Kolb, A.Y.; Kolb, D.A. Learning styles and learning spaces: Enhancing experiential learning in higher education. Acad. Manag. Learn. Educ. 2005, 4, 193–212.

- Fleming, N.D.; Mills, C. Not another inventory, rather a catalyst for reflection. Improv. Acad. 1992, 11, 137–143.

- Jiang, Q.; Zhao, W.; Du, X. Study on the users learning style model of correction under felder-silverman questionnaire. Mod. Distance Educ. 2010, 1, 62–66.

- Surjono, H.D. The evaluation of a moodle based adaptive e-learning system. Int. J. Inf. Educ. Technol. 2014, 4, 89–92.

- Yang, T.C.; Hwang, G.J.; Yang, S.J.H. Development of an adaptive learning system with multiple perspectives based on students’ learning styles and cognitive styles. J. Educ. Technol. Soc. 2013, 16, 185–200.

- Cha, H.J.; Kim, Y.S.; Park, S.H.; Yoon, T.B.; Jung, Y.M.; Lee, J.H. Learning style diagnosis based on user interface behavior for the customization of learning interfaces in an intelligent tutoring system. In Proceedings of the 8th International Conference on Intelligent Tutoring Systems, Jhongli, Taiwan, 26 June 2006; Springer: Berlin/Heidelberg, Germany, 2006.

- Villaverde, J.E.; Godoy, D.; Amandi, A. Learning styles’ recognition in e-learning environments with feed-forward neural networks. J. Comput. Assist. Learn. 2006, 22, 197–206.

- Song, T.; Lu, G.; Yan, J. Emotion recognition based on physiological signals using convolution neural networks. In Proceedings of the 2020 12th International Conference on Machine Learning and Computing, Shenzhen, China, 19 June 2020; pp. 161–165.

- Garofalo, S.; Timmermann, C.; Battaglia, S.; Maier, M.E.; Di Pellegrino, G. Mediofrontal negativity signals unexpected timing of salient outcomes. J. Cogn. Neurosci. 2017, 29, 718–727.

- Sambrook, T.D.; Goslin, J. Principal components analysis of reward prediction errors in a reinforcement learning task. Neuroimage 2016, 124, 276–286.

- Garofalo, S.; Battaglia, S.; di Pellegrino, G. Individual differences in working memory capacity and cue-guided behavior in humans. Sci. Rep. 2019, 9, 1–14.

- Bouchard, A.E.; Garofalo, S.; Rouillard, C.; Fecteau, S. Cognitive functions in substance-related and addictive disorders. In Transcranial Direct Current Stimulation in Neuropsychiatric Disorders; Springer: Cham, Switzerland, 2021; pp. 519–531.

- Dag, F.; Gecer, A. Relations between online learning and learning styles. Procedia-Soc. Behav. Sci. 2009, 1, 862–871.

- Kim, K.H.; Bang, S.W.; Kim, S.R. Emotion recognition system using short-term monitoring of physiological signals. Med. Biol. Eng. Comput. 2004, 42, 419–427.

- Black, M.H.; Chen, N.T.; Iyer, K.K.; Lipp, O.V.; Bölte, S.; Falkmer, M.; Tan, T.; Girdler, S. Mechanisms of facial emotion recognition in autism spectrum disorders: Insights from eye tracking and electroencephalography. Neurosci. Biobehav. Rev. 2017, 80, 488–515.

- Alarcao, S.M.; Fonseca, M.J. Emotions recognition using EEG signals: A survey. IEEE Trans. Affect. Comput. 2019, 10, 374–393.

- Zheng, W.L.; Zhu, J.Y.; Lu, B.L. Identifying stable patterns over time for emotion recognition from EEG. IEEE Trans. Affect. Comput. 2019, 10, 417–429.

- Read, G.L.; Innis, I.J. Electroencephalography. In The International Encyclopedia of Communication Research Methods; John Wiley & Sons: Hoboken, NJ, USA, 2017; pp. 1–18.

- Rubin, S.J.; Marquardt, J.D.; Gottlieb, R.H.; Meyers, S.P.; Totterman, S.M.S.; O’Mara, R.E. Magnetic resonance imaging: A cost-effective alternative to bone scintigraphy in the evaluation of patients with suspected hip fractures. Skelet. Radiol. 1998, 27, 199–204.

- DeYoe, E.A.; Bandettini, P.; Neitz, J.; Miller, D.; Winans, P. Functional magnetic resonance imaging (FMRI) of the human brain. J. Neurosci. Methods 1994, 54, 171–187.

- Bryn, F. EEG vs. MRI vs. fMRI – What Are the Differences. Available online: https://imotions.com/blog/eeg-vs-mri-vs-fmri-differences/ (accessed on 18 October 2021).

- Craik, A.; He, Y.; Contreras-Vidal, J.L. Deep learning for electroencephalogram (EEG) classification tasks: A review. J. Neural Eng. 2019, 16, 1–28.

- Zhang, B.; Chai, C.; Yin, Z.; Shi, Y. Design and implementation of an EEG-based learning-style recognition mechanism. Brain Sci. 2021, 11, 613.

- Raven’s Advanced Progressive Matrices Practice. Available online: https://www.jobtestprep.com/raven-matrices-assessment-test?campaignid=13450189103&adgroupid=118530635690&network=g&device=m&gclid=EAIaIQobChMIw__46d3w8gIV024qCh0dcwhzEAAYASAAEgJUqfD_BwE (accessed on 18 October 2021).

- Sawangjai, P.; Hompoonsup, S.; Leelaarporn, P.; Kongwudhikunakorn, S.; Wilaiprasitporn, T. Consumer Grade EEG measuring sensors as research tools: A review. IEEE Sens. J. 2020, 20, 3996–4024.

- Index of Learning Styles Questionnaire. Available online: http://www.engr.ncsu.edu/learningstyles/ilsweb.html (accessed on 18 October 2021).

- D’Amore, A.; James, S.; Eleanor, K.; Mitchell, E.K.L. Learning styles of first-year undergraduate nursing and midwifery students: A cross-sectional survey utilising the Kolb Learning Style Inventory. Nurse Educ. Today 2012, 32, 506–515.

- Wang, F.Q. The Research of Distance Learner Learning Style Analysis System. Ph.D. Thesis, The ShanDong Normal University, Jinan, China, 2009.

- Garg, D.; Verma, G.K. Emotion recognition in valence-arousal space from multi-channel EEG data and wavelet based deep learning framework. Procedia Comput. Sci. 2020, 171, 857–867.

- Buvaneswari, B.; Reddy, T.K. A review of EEG based human facial expression recognition systems in cognitive sciences. In Proceedings of the International Conference on Energy, Communication, Data Analytics and Soft Computing, Chennai, India, 1 August 2017.

- Katona, J.; Kovari, A. The evaluation of BCI and PEBL-based Attention Tests. Acta Polytech. Hung. 2018, 15, 225–249.

- Zarjam, P.; Epps, J.; Lovell, N.H. Beyond subjective self-rating: EEG signal classification of cognitive workload. IEEE Trans. Auton. Ment. Dev. 2015, 7, 301–310.

- Magorzata, P.W.; Borys, M.; Tokovarov, M.; Kaczorowska, M. Measuring cognitive workload in arithmetic tasks based on response time and EEG features. In Proceedings of the 38th International Conference on Information Systems Architecture and Technology (ISAT), Szklarska, Poland, 17 September 2017; Springer: Berlin/Heidelberg, Germany, 2017.

- Xu, T.; Zhou, Y.; Zhang, W. Decode brain system: A dynamic adaptive convolutional quorum voting approach for variable-length EEG data. Complexity 2020, 2020, 6929546.

- Davelaar, E.J.; Barnby, J.M.; Almasi, S.; Eatough, V. Differential subjective experiences in learners and non-learners in frontal alpha neurofeedback: Piloting a mixed-method approach. Front. Hum. Neurosci. 2018, 12, 402.

- Liao, C.Y.; Chen, R.C.; Tai, S.K. Emotion stress detection using EEG signal and deep learning technologies. In Proceedings of the IEEE International Conference on Applied System Innovation (ICASI), Chiba, Japan, 13–17 April 2018.

- Arns, M.; Etkin, A.; Hegerl, U.; Williams, L.M.; De Battista, C.; Palmer, D.M.; Fitzgerald, P.B.; Harris, A.; DeBeuss, R.; Gordon, E. Frontal and rostral anterior cingulate (rACC) theta EEG in depression: Implications for treatment outcome? Eur. Neuropsychopharmacol. 2015, 25, 1190–1200.

- Knyazeva, M.G.; Carmeli, C.; Khadivi, A.; Ghika, J.; Meuli, R.; Frackowiak, R.S. Evolution of source EEG synchronization in early Alzheimer’s disease. Neurobiol. Aging 2013, 34, 694–705.

- Chiarelli, A.M.; Croce, P.; Merla, A.; Zappasodi, F. Deep learning for hybrid EEG-fNIRS brain–computer interface: Application to motor imagery classification. J. Neural Eng. 2018, 15, 036028.

- Al-Saegh, A.; Dawwd, S.A.; Abdul-Jabbar, J.M. Deep learning for motor imagery EEG-based classification: A review. Biomed. Signal Process. Control 2021, 63, 102172.

- Sangnark, S.; Autthasan, P.; Ponglertnapakorn, P.; Chalekarn, P.; Sudhawiyangkul, T.; Trakulruangroj, M.; Songsermsawad, S.; Assabumrungrat, R.; Amplod, S.; Ounjai, K.; et al. Revealing preference in popular music through familiarity and brain response. IEEE Sens. J. 2021, 21, 14931–14940.

- Yin, Z.; Zhang, J. Task-generic mental fatigue recognition based on neurophysiological signals and dynamical deep extreme learning machine. Neurocomputing 2018, 283, 266–281.

- Li, Y.; Zhou, G.; Graham, D.; Holtzhauer, A. Towards an EEG-based brain-computer interface for online robot control. Multimed. Tools Appl. 2016, 75, 7999–8017.

- Xu, T.; Zhou, Y.; Wang, Z.; Peng, Y. Learning emotions EEG-based recognition and brain activity: A survey study on BCI for intelligent tutoring system. Procedia Comput. Sci. 2018, 130, 376–382.

- Xu, Y.; Haykin, S.; Racine, R.J. Multiple window time-frequency distribution and coherence of EEG using Slepian sequences and Hermite functions. IEEE Trans. Biomed. Eng. 1999, 46, 861–866.

- Li, X.; Song, D.; Zhang, P.; Zhang, Y.; Hou, Y.; Hu, B. Exploring EEG features in cross-subject emotion recognition. Front. Neurosci. 2018, 12, 162.

- Fazli, S.; Grozea, C.; Danoczy, M.; Blankertz, B.; Popescu, F.; Muller, K.R. Subject independent EEG-based BCI decoding. Adv. Neural Inf. Process. Syst. 2009, 22, 513–521.

- Atkinson, J.; Campos, D. Improving BCI-based emotion recognition by combining EEG feature selection and kernel classifiers. Expert Syst. Appl. 2016, 47, 35–41.

- Tsoi, A.C.; So, D.S.C.; Sergejew, A. Classification of Electroencephalogram using artificial neural networks. Adv. Neural Inf. Process. Syst. 1994, 6, 1151–1158.

- Schirrmeister, R.T.; Springenberg, J.T.; Fiederer, L.D.J.; Glasstetter, M.; Eggensperger, K.; Tangermann, M.; Hutter, F.; Burgard, W.; Ball, T. Deep learning with convolutional neural networks for EEG decoding and visualization. Hum. Brain Mapp. 2017, 38, 5391–5420.

- Li, J.; Zhang, Z.; He, H. Hierarchical convolutional neural networks for EEG-based emotion recognition. Cogn. Comput. 2018, 10, 368–380.

- Lawhern, V.J.; Solon, A.J.; Waytowich, N.R.; Gordon, S.M.; Hung, C.P.; Lance, B.J. EEGNet: A compact convolutional neural network for EEG-based brain-computer interfaces. J. Neural Eng. 2018, 15, 056013.

- León, J.; Escobar, J.J.; Ortiz, A.; Ortega, J.; González, J.; Martín-Smith, P.; Gan, J.Q.; Damas, M. Deep learning for EEG-based motor imagery classification: Accuracy-cost trade-off. PLoS ONE 2020, 15, e0234178.

- Yang, Y.; Wu, Q.M.J.; Zheng, W.L.; Lu, B.L. EEG-based emotion recognition using hierarchical network with subnetwork nodes. IEEE Trans. Cogn. Dev. Syst. 2018, 10, 408–419.

- Li, Y.; Chen, W. A comparative performance assessment of ensemble learning for credit scoring. Mathematics 2020, 8, 1756.

- Emotiv Epoc+. Available online: https://www.emotiv.com/EPOC/ (accessed on 20 October 2021).

- Kim, T.K. T test as a parametric statistic. Korean J. Anesthesiol. 2015, 68, 540.

- Bengio, Y.; Ian, G.; Aaron, C. Deep networks: Modern practices. In Deep Learning; MIT Press: Cambridge, MA, USA, 2017; pp. 156–423.

- Noble, W.S. What is a support vector machine? Nat. Biotechnol. 2006, 24, 1565–1567.

- Saini, I.; Singh, D.; Khosla, A. QRS detection using K-Nearest Neighbor algorithm (KNN) and evaluation on standard ECG databases. J. Adv. Res. 2013, 4, 331–344.

- Buscema, M. Supervised artificial neural networks: Backpropagation neural networks. In Intelligent Data Mining in Law Enforcement Analytics; Springer: Dordrecht, The Netherlands, 2013; pp. 119–135.

- Kaur, T.; Gandhi, T.K. Automated brain image classification based on VGG-16 and transfer learning. In Proceedings of the 2019 International Conference on Information Technology (ICIT), Shanghai, China, 20 December 2019.

- Ou, X.F.; Yan, P.C.; Zhang, Y.; Tu, B.; Zhang, G.; Wu, J.; Li, W. Moving object detection method via ResNet-18 with encoder–decoder structure in complex scenes. IEEE Access 2019, 7, 108152–108160.

- Wong, T.T. Performance evaluation of classification algorithms by k-fold and leave-one-out cross validation. Pattern Recognit. 2015, 48, 2839–2846.

- Montavon, G.; Samek, W.; Müller, K.R. Methods for interpreting and understanding deep neural networks. Digit. Signal Process. 2018, 73, 1–15.

- Taheri, S.M.; Hesamian, G. A generalization of the Wilcoxon signed-rank test and its applications. Stat. Pap. 2013, 54, 457–470.

| Model Configuration | | | |
|---|---|---|---|
| A | B | C | D |
| Input | | | |
| 1 × 1 Conv. (10) | 1 × 1 Conv. (10) | 1 × 3 Conv. (20) | 1 × 1 Conv. (40) |
| Batch Normalization | | | |
| ReLU | | | |
| 1 × 7 Conv. (20) | 1 × 5 Conv. (20) | 3 × 1 Conv. (40) | |
| Batch Normalization | | | |
| ReLU | | | |
| 7 × 1 Conv. (40) | 3 × 1 Conv. (40) | 5 × 1 Conv. (40) | 3 × 1 Conv. (40) |
| Batch Normalization | | | |
| ReLU | | | |
| Concatenation | | | |

| EEG Feature Type | Feature Index | Notation of the Extracted Feature |
|---|---|---|
| Frequency-domain features | No. 1–56, power-related features | Mean power for all EEG channels (F3, F4, AF3, AF4, F7, F8, P7, P8, FC5, FC6, T7, T8, O1, and O2) in the theta (4–8 Hz), alpha (8–12 Hz), beta (12–30 Hz), and gamma (30–45 Hz) bands (14 channels × 4 power features = 56 features). |
| Frequency-domain features | No. 57–76, power-difference-related features | Differences in mean power in 5 EEG channel pairs between the right and left scalps (F4-F3, AF4-AF3, T8-T7, P8-P7, and O2-O1) in the theta, alpha, beta, and gamma bands (5 channel pairs × 4 power differences = 20 features). |
| Time-domain features | No. 77–174, time-domain-related features | Mean, variance, zero crossing rate, Shannon entropy, spectral entropy, kurtosis, and skewness of 14 EEG channels (7 features × 14 channels = 98 features). |
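As an illustration of the time-domain rows in the table above, the following sketch computes five of the seven listed statistics for one channel and checks the feature count. The conventions are assumptions, since the text does not state them: population variance, non-excess kurtosis, and a zero crossing rate defined as sign changes per adjacent sample pair. The two entropy features are omitted, and `time_domain_features` is a hypothetical helper name.

```python
import math

def time_domain_features(x):
    """Mean, variance, zero crossing rate, skewness, and kurtosis of one channel."""
    n = len(x)
    mean = sum(x) / n
    centered = [v - mean for v in x]
    variance = sum(c * c for c in centered) / n          # population variance (assumption)
    std = math.sqrt(variance)
    skewness = sum(c ** 3 for c in centered) / (n * std ** 3)
    kurtosis = sum(c ** 4 for c in centered) / (n * std ** 4)  # non-excess kurtosis (assumption)
    sign_changes = sum(1 for a, b in zip(x, x[1:]) if a * b < 0)
    return {
        "mean": mean,
        "variance": variance,
        "zero_crossing_rate": sign_changes / (n - 1),
        "skewness": skewness,
        "kurtosis": kurtosis,
    }

features = time_domain_features([1.0, -1.0, 1.0, -1.0])

# Feature count from the table: power, power-difference, and time-domain features.
total_features = 14 * 4 + 5 * 4 + 7 * 14
```

The total of 174 agrees with the feature indices No. 1–174 in the table.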

| Classification Method | Hyper-Parameter Settings |
|---|---|
| SVM | Regularization and number of kernel parameters: 16, 128. |
| BP | Numbers of hidden nodes, layers, and training epochs: 144, 4, 60. |
| KNN | k value: 26. |
| VGGNet | Number of convolution layers, batch size, optimizer, and number of training epochs: 3, 30, Adam, 100. |
| ResNet | Number of convolution layers, fully connected layers, basic block size, batch size, optimizer, learning rate, and number of training epochs: 17, 1, 3 × 3, 30, SGD, 0.1, 100. |

Leave-One-Out Cross-Validation Accuracy (%)

| Classifier | F-1 | F-2 | F-3 | F-4 | F-5 | F-6 | F-7 | F-8 | F-9 | F-10 | F-11 | F-12 | F-13 | F-14 | LOO Average (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| TSMG | 78.56 | 76.21 | 72.35 | 75.68 | 73.24 | 71.62 | 72.69 | 70.81 | 69.72 | 73.54 | 67.28 | 68.34 | 73.61 | 73.50 | 72.65 |
| SVM | 64.23 | 65.37 | 61.20 | 65.37 | 63.28 | 64.78 | 60.43 | 61.57 | 62.78 | 63.12 | 66.31 | 60.39 | 61.24 | 64.57 | 63.18 |
| BP (3 h-layers) | 61.56 | 60.37 | 59.24 | 58.30 | 57.56 | 60.58 | 58.36 | 57.98 | 58.36 | 61.58 | 61.25 | 57.58 | 59.42 | 58.38 | 59.32 |
| BP (5 h-layers) | 58.64 | 60.31 | 57.12 | 56.36 | 58.64 | 56.84 | 56.36 | 61.47 | 58.71 | 57.62 | 56.55 | 58.12 | 57.23 | 56.89 | 57.91 |
| KNN | 53.64 | 52.57 | 55.61 | 50.47 | 51.84 | 50.67 | 54.87 | 51.37 | 55.67 | 54.32 | 51.64 | 50.47 | 52.78 | 52.31 | 52.73 |
| VGGNet | 68.54 | 65.71 | 66.80 | 67.52 | 67.91 | 64.33 | 62.41 | 64.56 | 64.81 | 62.72 | 66.23 | 65.34 | 62.65 | 64.40 | 65.28 |
| ResNet | 71.31 | 68.43 | 65.67 | 70.54 | 68.40 | 72.62 | 67.32 | 66.31 | 67.42 | 65.14 | 68.47 | 67.24 | 70.21 | 67.30 | 68.31 |

| Compared Model | W Statistic | p-Value |
|---|---|---|
| SVM | 105.0 | 6.10352 × 10⁻⁵ |
| BP (three-layer) | 105.0 | 6.10352 × 10⁻⁵ |
| BP (five-layer) | 105.0 | 6.10352 × 10⁻⁵ |
| KNN | 105.0 | 6.10352 × 10⁻⁵ |
| VGGNet | 105.0 | 6.10352 × 10⁻⁵ |
| ResNet | 101.0 | 0.00043 |
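The p-values in this table can be reproduced with a short exact calculation. The sketch assumes a one-sided Wilcoxon signed-rank test on 14 paired fold accuracies with no ties or zero differences, which is inferred from the numbers rather than stated in the text; `exact_upper_tail` is a hypothetical helper name.

```python
def exact_upper_tail(w_observed, n):
    """P(W+ >= w_observed) under the null hypothesis for n untied, non-zero differences."""
    max_w = n * (n + 1) // 2
    # counts[s] = number of sign assignments whose positive ranks sum to s
    counts = [0] * (max_w + 1)
    counts[0] = 1
    for rank in range(1, n + 1):
        # Iterate downwards so that each rank is used at most once.
        for s in range(max_w, rank - 1, -1):
            counts[s] += counts[s - rank]
    return sum(counts[w_observed:]) / 2 ** n

# W = 105 is the maximum for n = 14: TSMG is ahead in every one of the 14 folds.
p_all_folds = exact_upper_tail(105, 14)

# W = 101 is the value reported for the comparison with ResNet.
p_resnet = exact_upper_tail(101, 14)
```

Under these assumptions, `p_all_folds` equals 1/16384, about 6.10352 × 10⁻⁵, and `p_resnet` equals 7/16384, about 0.00043, matching the table.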


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, B.; Shi, Y.; Hou, L.; Yin, Z.; Chai, C. TSMG: A Deep Learning Framework for Recognizing Human Learning Style Using EEG Signals. Brain Sci. 2021, 11, 1397. https://doi.org/10.3390/brainsci11111397
