Abstract

In the last two decades, both the amount and quality of geoinformation in the geosciences field have improved substantially due to the increasingly widespread use of techniques such as Laser Scanning (LiDAR), digital photogrammetry, unmanned aerial vehicles, geophysical reconnaissance (seismic, electrical, geomagnetic), and ground-penetrating radar (GPR), among others. Furthermore, the advances in computing, storage and visualization resources allow the acquisition of 3D terrain models (surface and underground) with unprecedented ease and versatility. However, despite these scientific and technical developments, it is still common practice to reduce the 3D data to static 2D images, losing part of its communicative potential. The objective of this paper is to demonstrate the possibilities of extended reality (XR) for communicating and sharing 3D geoinformation in the field of geosciences. A brief review of the different variants within XR is followed by the presentation of the design and functionalities of headset-type mixed reality (MR) devices, which allow the 3D models to be investigated collaboratively by several users in the office environment. The specific focus is on the functionalities of Microsoft’s HoloLens 2 untethered holographic head-mounted display (HMD), and the ADA Platform App by Clirio, which is used to manage model viewing with the HMD. We demonstrate the capabilities of MR for the visualization and dissemination of complex 3D information in geosciences in a data-rich, self-directed immersive environment, through selected 3D models (most of them of the Montserrat massif). Finally, we highlight the educational possibilities of MR technology. Today, the use of MR is still incipient and limited; we hope that it will gain popularity as the barriers to entry become lower.

1. Introduction

Approximately since the beginning of the century, techniques such as Laser Scanning (LiDAR) and digital photogrammetry have made it possible to obtain 3D models of sites of interest (e.g., slopes, open pits, tunnels and dams) with increasing speed, ease and versatility. Improvements in high resolution point cloud acquisition can be followed, for instance, in [1,2,3,4,5,6,7,8,9,10,11].

The improvement in the resolution and quality of 3D models has also been favored by the emergence of unmanned aerial vehicles (UAVs), also known as drones. They greatly simplify the acquisition of large sets of photographs (and, more recently, of LiDAR data), and can cover wider areas from convenient viewpoints and at close range. This is a true advantage in steep terrains such as slopes and areas of difficult access [12].

Until 2016, the increase in the number of publications using 3D models obtained with LiDAR and digital photogrammetry was significantly higher than the general increase in publications in geosciences [8]. A more recent study [13] confirms the growing use of 3D models during field investigation and research, but notes that 3D geoinformation is not used as much in the dissemination of results or in the final publication.

On the other hand, geomechanical subsoil reconnaissance techniques continue to provide crucial information: in boreholes, in reconnaissance trenches and galleries, through geophysics (seismic, electrical, geomagnetic) and Ground Penetrating Radar (GPR), among others. In structural geology, traditional mapping methods have evolved from the geological compass, tape and paper field book to smart phones for the dip/dip direction logging, and the use of tablets for sketching photographic images. Additionally, Terrestrial Laser Scanning (TLS) and digital photogrammetry are now available to assist logging in wider areas, with an improvement in the completeness and representativeness of the results [14].

All these sources of information on the geometry and properties of the ground are of a very different nature and format, making them quite complementary. Thus, in any engineering geology/ mining project or investigation (slopes, excavations, open pits, dams, tunnels and caverns), it is convenient to have global and simultaneous access to this data, all together and in 3D.

However, despite these scientific and technical advances, it is still a common practice to simplify the 3D data in 2D static images, losing part of its communicative potential [14,15]. Because of technical limitations, the collected 3D data is usually displayed in 2D on monitors and in PDF or paper documents (static plan and elevation views, with some sections), leading to a notably different experience when compared to fieldwork. The sense of proportion and 3D perception are lost, and the comprehension of the geometry is reduced, which may result in a biased interpretation of the scene. In some cases, these 2D static views have been selected by a single professional, perhaps influenced by their previous experience and background, and may not be as useful to others, including rights-holders and stakeholders whose input may be critical for the success of a project.

Today, engineers and geoscientists can overcome this simplification (3D real world to 2D plan and section views) to improve the communication of the geological, geotechnical and geoenvironmental information to stakeholders using technologies that liberate users from paper or screen-bound experiences by offering natural and intuitive interactions with the data being presented. According to [16,17], this communication today faces the following challenges:

- we must consult many data sources and inspect them simultaneously (multisource);
- the tools to visualize the different sources must be as simple or as complex as needed, but not more so, allowing for the interrogation of data with the required level of sophistication for the project;
- the assessment of the data and the design process must be collaborative, taking into account the stakeholders’ points of view and feedback (multiple “engaged users” interrogating many data sources and looking at them simultaneously to reach a shared understanding, in contrast with the current reality of a presenter “driving” the experience and multiple “passengers” listening);
- different brains process data in different ways, so it is better to ‘show’ data rather than ‘tell’ the audience what they must see or interpret from it;
- the presentation of the information should be immersive, because people understand data better when it is presented in 3D and they can walk through it in a natural way;
- the scale of the scene must be as large as it takes to have enough resolution detail (large size, filling the room with the model if needed).

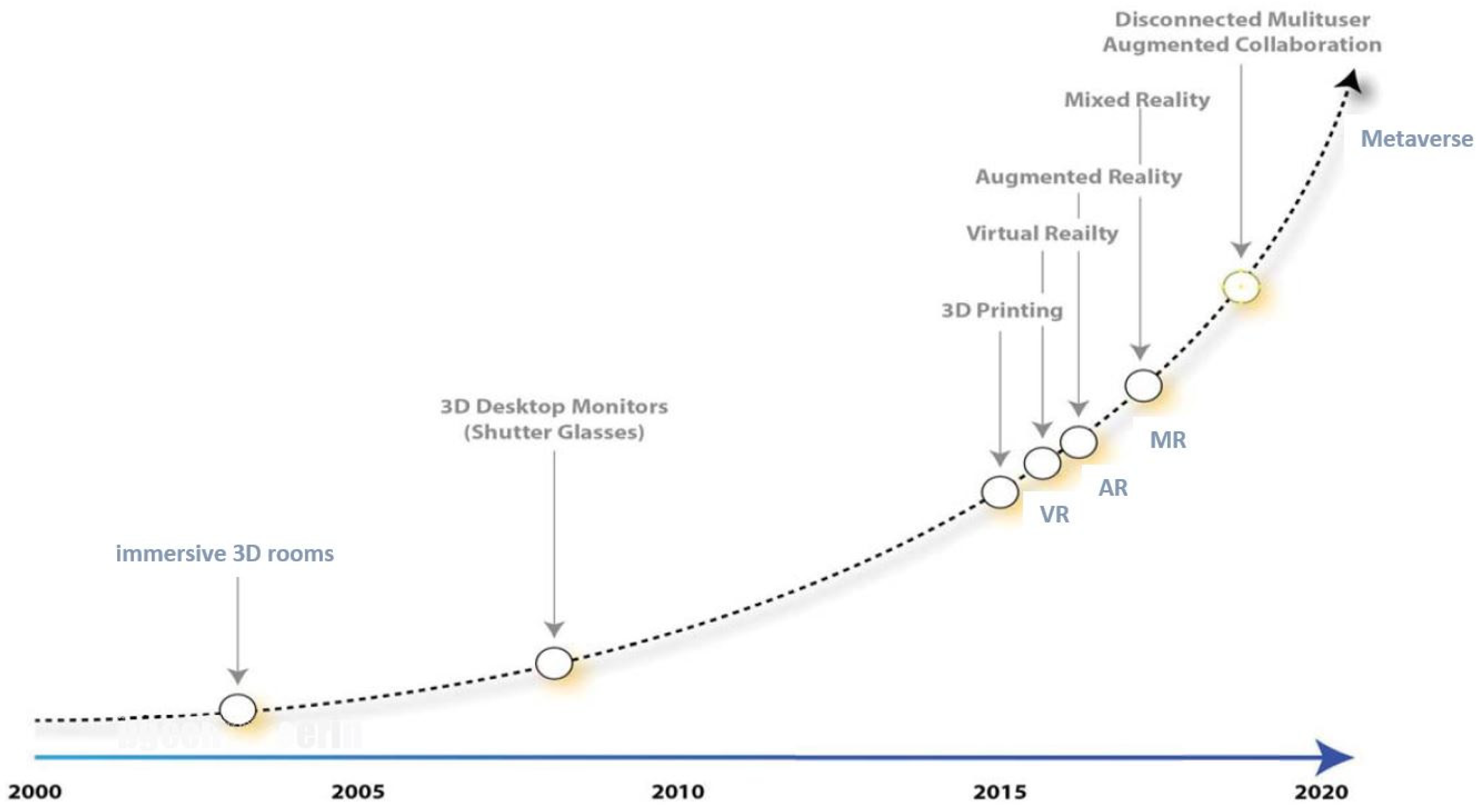

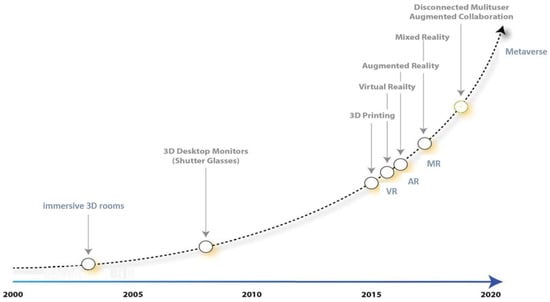

Fortunately, the advances in computing, storage and visualization resources are driving the dissemination of geoinformation dynamically and in 3D [15,18], both through web viewers and through headset-type devices (extended reality head mounted displays) (Figure 1).

Figure 1.

Technical evolution of Understanding and Visualization in the last 20 years, modified from [18].

In the authors’ experience, traditional 2D representations are still valid and work in many cases. They are clear in their format and easy to publish (e.g., for the submission of official project documentation). For more complex situations, viewing the information in 3D using a web viewer is necessary, even though it means seeing a 3D model through a 2D “window” (a screen or a portable device), perhaps with some added information to enhance the scene (augmented reality). When there is a large amount of information, especially underground, and the model is very complex, it is convenient to experience it as realistically as possible, walking inside the model as if you were in a ‘see-through’ world. This is necessary to properly understand the relative situation of the data, and is easily achieved with virtual reality (VR) or mixed reality (MR).

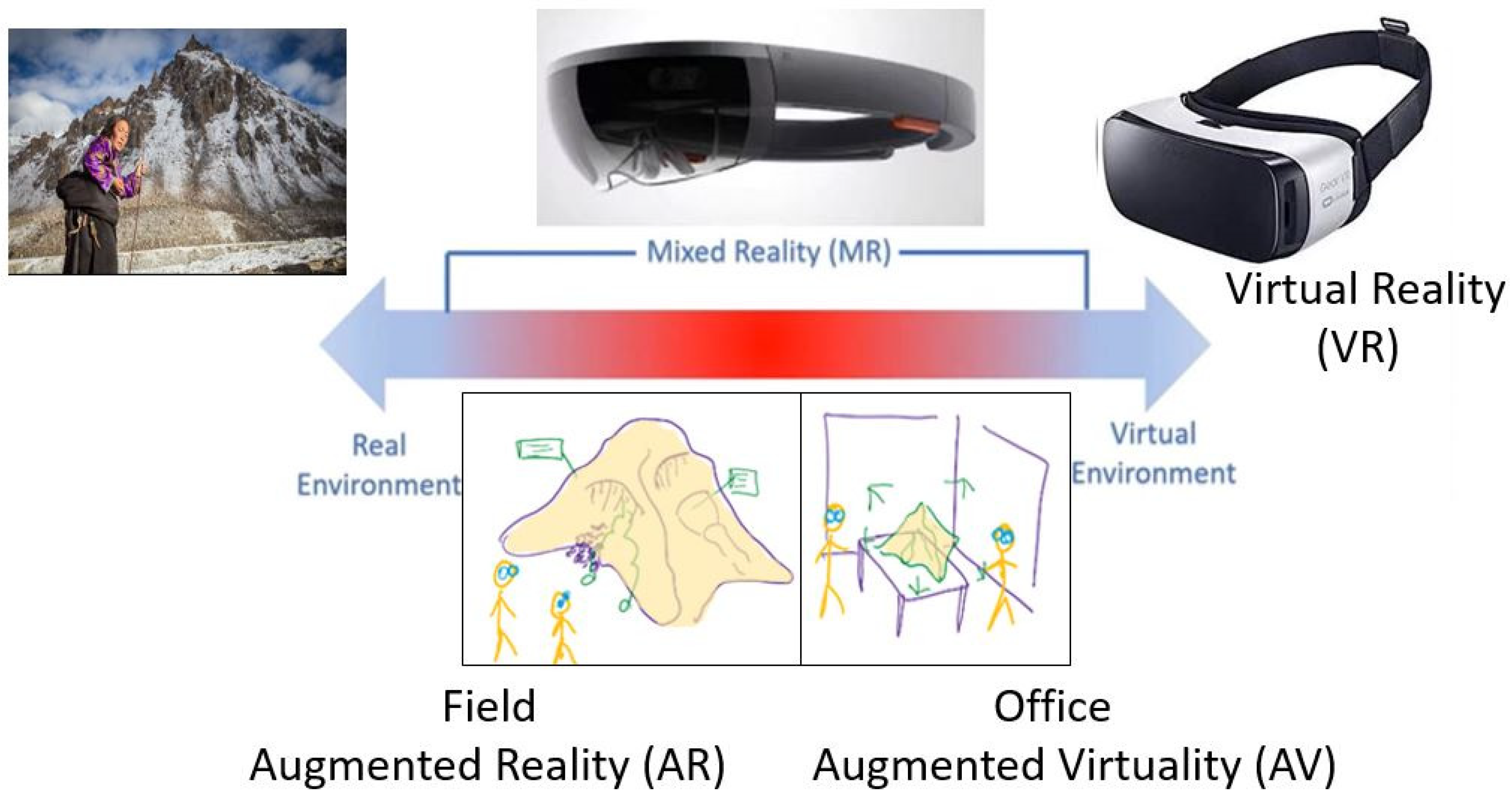

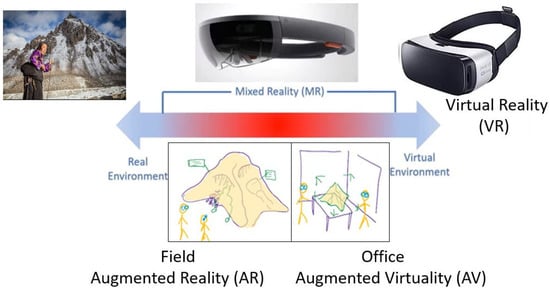

In Figure 2, the reality–virtuality possibilities are presented, following the ideas of [19,20]. Starting in the Real Environment, we find the so-called Extended Reality (XR) environments. XR is a term that is increasingly used in computer vision (see [21,22,23], for instance) and refers to computer-generated graphics presented to the user, usually through some kind of wearable headset. This solution enables the creation of Mixed and Virtual Environments with which the users can interact. XR therefore encompasses several technologies, such as mixed reality (MR), augmented reality (AR) and virtual reality (VR).

Figure 2.

The reality–virtuality continuum [19,20] (adapted from [15,23]).

The studies referenced in [24,25] highlight the following characteristics of the “virtualization” (XR): (hyper)realistic and therefore three-dimensional scenarios with textures, lighting and perspectives; immersion of the user (360°) in such a scenario; real-time response, equivalent to field practices and experimental tests; optional incorporation of different layers of data/hypertext that may enhance the objects in view (such as in video games).

Over time, XR technology is being applied in an increasing variety of fields. From the videogames sector (the big market) there are now solutions for a wide range of needs, such as assisted manufacturing and maintenance [22]; training of workers (for instance, in risk prevention); robot and vehicle remote guidance; architectural designs, real estate promotions and refurbishments [21,26]; construction [25,27,28,29]; assisted surgery (even remotely, [30,31]); and many others. It is worth mentioning the application of XR for the preservation and visualization of cultural heritage sites. Refs. [32,33] show how VR can help extend the 3D and immersive experience to support historic heritage and redeveloped post-industrial sites.

Within the geosciences field, with XR immersive options, several of the 2D limitations and some of the challenges listed before [16] can be overcome. In particular, it is possible to share information, walk around or inside the 3D models, and work on the geoinformation collaboratively, in a more natural and intuitive manner.

Between the two options (VR and MR), in this paper we develop the possibilities of MR. It is worth mentioning the following advantages of MR: the glasses are transparent, so users looking at the same 3D holographic model can communicate and interact with each other and with their physical environment as if they were not wearing an HMD. It is possible to move and walk around and inside the model, in some cases below the surface of the terrain. It is the authors’ understanding that this enhances communication and trust. From a communication perspective, any VR ‘connected session’ relies on digital avatars. For some users, being able to speak face to face and look someone in the eye is easier to adopt than avatars and is important in building trust.

Certainly, VR is more powerful since the HMDs are usually tethered to a computer, allowing for more complex visualizations with more data at the same time. However, it is mostly an isolated, personal experience since the user cannot generally see their surroundings, unless they are using VR headsets that bring the real world into the device via passthrough cameras and add it to a pixel-dominant experience. This technology continues to advance and is heading towards overcoming the challenges of bulky VR headsets and near-eye focal dynamics for illuminated pixels on the device. VR is also known to cause nausea and vertigo in some users, making communication difficult, whereas this occurs less frequently in MR as the users’ peripheral vision of their surroundings is preserved. One could say that VR allows the user to jump into the virtual environment and interact with the data, whereas with MR it is possible to release the virtual 3D model and place it in the middle of the real world, allowing interaction with the data and with other users more naturally. Both worlds (MR and VR) have their applications. Moreover, both worlds offer advantages over a 2D static display.

In recent years, important advances in MR have already been made in the field of geosciences. So far, this technology has been mainly used in North America by large private consulting firms such as SRK Consulting or BGC Engineering (Canada), public institutions such as the Crown-Indigenous Relations and Northern Affairs (CIRNAC, Gatineau, QC, Canada) or some Departments of Transportation (DoT’s, Washington, DC, USA), R&D university departments such as the Simon Fraser University (SFU, Burnaby, BC, Canada) or the Case Western Reserve University (Cleveland, OH, USA), among others. In these organizations, MR has enabled fast and effective communication of complex 3D challenges.

It is beyond any doubt that MR/VR has major present and future applications in rock engineering in terms of data collection, interpretation and communication. Prof. D. Stead (SFU, Burnaby, BC, Canada) spoke about “Geovisualization of mixed reality holographic models” in the final part of his ISRM lecture [23], giving a set of inspiring examples. At SFU, the Engineering Geology and Resource Geotechnics Research Group has used MR technology in numerous engineering projects including open pits and landslide investigation, developing several applications based on MR HMD, such as “Virtual Core Logging”, “Virtual rock outcrop”, or “EasyMap/EasyMine” [14,34,35]. For instance, in [14], the developers used state-of-the-art new VR/MR technology to provide in the field the virtual tools to be used for real outcrop mapping (virtual compass, virtual tape and so on). They also developed holographic mapping procedures for office-based mapping of virtual rock outcrops acquired with ground- and UAV-based LiDAR and with digital photogrammetry.

On the other hand, BGC Engineering (Vancouver, BC, Canada) has developed some MR tools to demonstrate and communicate the results of company projects around the world. Some details of the developments can be found in [18,36]. More information is available as video material in [16,37,38,39]. Section 3 in this paper presents some examples which use the MR platform developed (initially) by this company.

To finish this introductory section, a special mention is devoted to the application of XR to teaching and learning in geosciences. The new visualization techniques may boost the teaching methods, in particular in engineering [25,28,29]. Some experiences are reported at university level [40,41], whereas other initiatives have been introduced as early as secondary and high school [42,43,44].

Refs. [41,45] highlight the advantage of immersive XR to show students geological sites and outcrops that are remote and difficult to visit, even more so during the pandemic period.

Close to the geosciences field there have been some MR teaching and learning initiatives at, among others: Simon Fraser University (SFU, Burnaby, BC, Canada), Case Western Reserve University (Cleveland, OH, USA), Duke University (Durham, NC, USA), Washington University (Washington, DC, USA), Aalto University (Espoo, Finland), Rome University-NHAZCA (Rome, Italy), Polytechnique Montréal (Montreal, QC, Canada), and Technical University of Catalonia (UPC, Barcelona, Spain). In the latter, the MR is used in geohazard and risk communication to end-users in two R&D projects [46,47].

Section 2 describes the MR HMD in use (hardware and software). Then, different 3D models of interest will be presented to highlight the possibilities of MR in geomechanics, followed by a section of conclusions.

2. Materials and Methods

In this section, after a brief market overview, we describe the MR HMD (Microsoft HoloLens 2) used for the visualization of 3D models of interest in the geosciences field, which will in turn be presented in detail in the results section. This part also includes a description of the ADA Platform application, software by Clirio Inc. that runs on the holographic device to manage the 3D data and ensure the correct visualization of the models.

The first XR headsets were available as concept tools around 2010. Since 2015, several working MR solutions have managed to reach enterprise users and general public on a routine and massive scale. According to [25], the main improvements over the years have been the miniaturization and portability, and the increase in the memory, calculation and data transfer capacity of both graphics cards (GPU) and processors (CPU). Currently, the software inside the HMD has a better capacity to handle 3D scenes, and to move them in real time, at highly realistic levels. This has been achieved thanks to the evolution in human–computer interaction technologies (HCI).

The main driving force in XR’s fast evolution is the entertainment industry, including videogames. Secondly, there is the contribution of multimedia companies, general tech providers for film and television. Finally, we have the CAD and animation solutions developed specifically for engineering and architecture. According to [48], professional headset sales grew almost tenfold between 2020 and 2021, when 10 million devices were delivered.

Some key models released in recent years are, among others: Oculus Rift; HTC Vive; Google Tango and Google Glass; Magic Leap; Azure Kinect DK; and Microsoft HoloLens. The total number of HoloLens sold through the end of 2021 is estimated to be around half a million devices [49]. Apple is also reported to be working to release its own new XR solution. Some of these products will evolve, whereas others will disappear, given that this business is nowadays interlinked with the eventual success of the brand-new concept of the “metaverse” and the evolution of HCI.

From now on, we describe the MR HMD we use as a basic tool for the visualization of 3D models in this paper, i.e., Microsoft HoloLens [50]. The first model, HoloLens 1, was released in 2015, and HoloLens 2 was released region by region from 2019.

The Microsoft HoloLens 2 (Figure 3) is a cutting-edge self-contained wearable computer, with a double semi-transparent holographic screen positioned in front of the eyes, with several built-in sensors to map the surrounding environment in real time. Thanks to its see-through lenses, the user is aware of objects and people around them, a feature that constitutes the base of MR.

Figure 3.

Microsoft’s HoloLens MR see-through holographic HMD. (Left) HoloLens 1. (Center) HoloLens 2. (Right) detail of some built-in sensors: (a) MEMS projector; (b) two visible-light cameras for head tracking; (c) IR camera for eye tracking; (d) depth sensor.

In the center of the front part (Figure 3, right), the HoloLens has a 3D camera (1-MP time-of-flight depth sensor). Four 8-MP cameras are located to the right and to the left, two on each side. The cameras continuously scan the space around the user, and the holographic processing unit (HPU) builds a triangular mesh of the user’s environment in real time. This 3D information is used to place virtual objects in the real world in a stable way, anchoring the digital projections to the user’s space. An Inertial Measurement Unit (IMU), with MEMS accelerometer, gyroscope and magnetometer, complements the other sensors in tracking the position and attitude of the user’s head. Two IR cameras track the position of the user’s eyes. The system projects a slightly different view of the digital 3D model in front of each eye, and the user’s stereoscopic vision fuses the two views into a 3D perception.
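The stereoscopic principle mentioned at the end of this paragraph can be illustrated with a minimal sketch. It assumes an idealized pinhole projection, an interpupillary distance of 63 mm and an arbitrary focal length in pixels; these values and the function names are illustrative assumptions, not HoloLens 2 specifications or part of its API.

```python
def project_point(point, eye_x, focal_px=1000.0):
    """Pinhole projection of a 3D point (x, y, z), in metres, for an eye
    located at (eye_x, 0, 0) and looking along +z. Returns pixel offsets
    (u, v) from the image centre. focal_px is an assumed value."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the viewer (z > 0)")
    u = focal_px * (x - eye_x) / z
    v = focal_px * y / z
    return u, v


def stereo_disparity(point, ipd_m=0.063, focal_px=1000.0):
    """Horizontal shift, in pixels, between the left-eye and right-eye
    images of the same point. ipd_m is an assumed interpupillary distance."""
    half = ipd_m / 2.0
    u_left, _ = project_point(point, -half, focal_px)
    u_right, _ = project_point(point, +half, focal_px)
    return u_left - u_right


# A point 1 m away is shifted twice as much between the two eyes as a
# point 2 m away; this difference is what the brain reads as depth.
near = stereo_disparity((0.0, 0.0, 1.0))
far = stereo_disparity((0.0, 0.0, 2.0))
```

Under these assumptions the disparity equals `focal_px * ipd_m / z`, so it decreases as the hologram is placed farther from the user.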

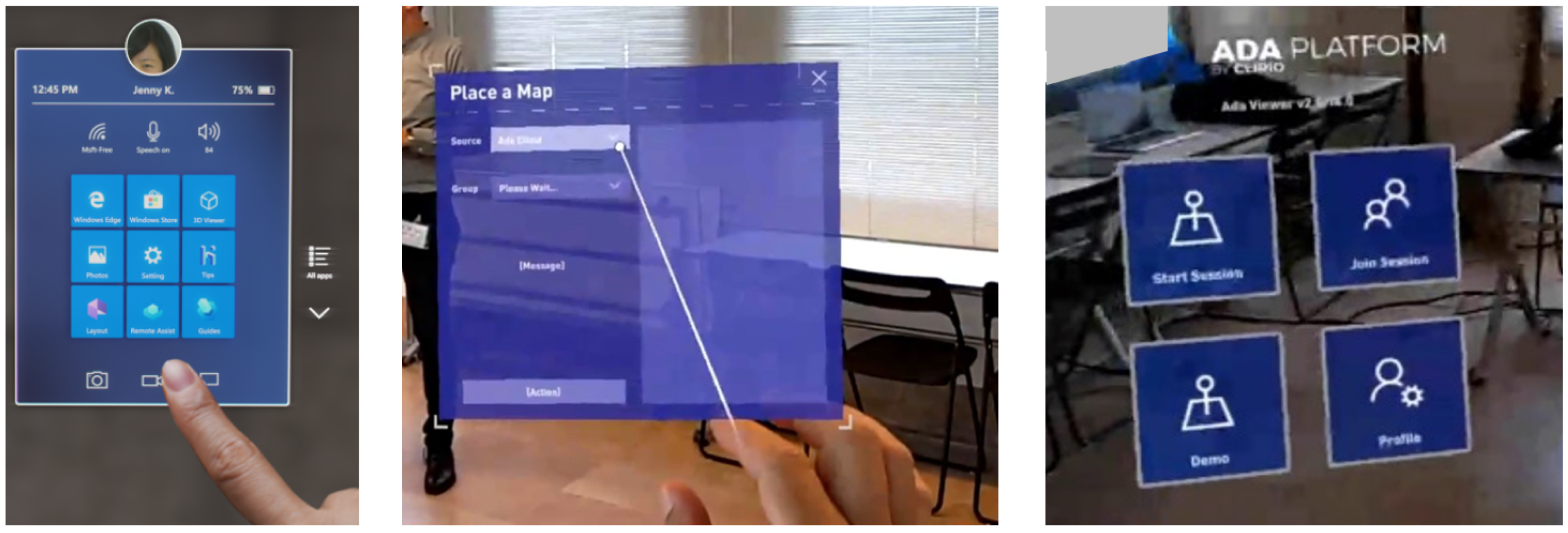

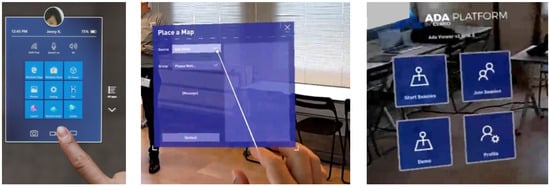

Each HoloLens has an internal computer (Qualcomm Snapdragon 850 compute platform; 4 GB DRAM LPDDR4x memory; second-generation custom-built holographic processing unit (HPU); 64 GB internal Storage UFS 2.1). The operating system is Windows Holographic (a version of Windows 10 designed for HoloLens 2), where the user can execute Universal Windows Platform (UWP) applications (Figure 4). Therefore, each HoloLens can work in a stand-alone mode (untethered), although a Wi-Fi connection is needed to download the 3D models and to communicate with other users.

Figure 4.

(Left) HoloLens 2 Home menu. (Center) The user must tap the desired app/option with their finger, or “air-tap” from distance with the pointer, as in the image in the centre. (Right) Main menu of the viewer of the ADA Platform by Clirio.

A hand-tracking system for gesture recognition allows the user to command the system and/or interact with the holograms as if they were real objects (Figure 4). Optionally, the system can be controlled by voice, through the 5 built-in microphones and 2 speakers.

In [14] the HoloLens 2 UWP management is described. The applications for the HoloLens are created by using Unity game engine software [51] and the Mixed Reality Toolkit, an open-source project supported by Microsoft that provides primary scripts to accelerate the development of MR applications. Unity supports 3D mesh files and bitmap image files.

In the present work we act as users of ADA Platform in order to manage the 3D models and holograms. Our main goal is to investigate the capabilities of MR for the visualization and dissemination of complex 3D models in geosciences rather than focus on new software development. Therefore, although we use the aforementioned app, similar results should be obtained with other UWP applications.

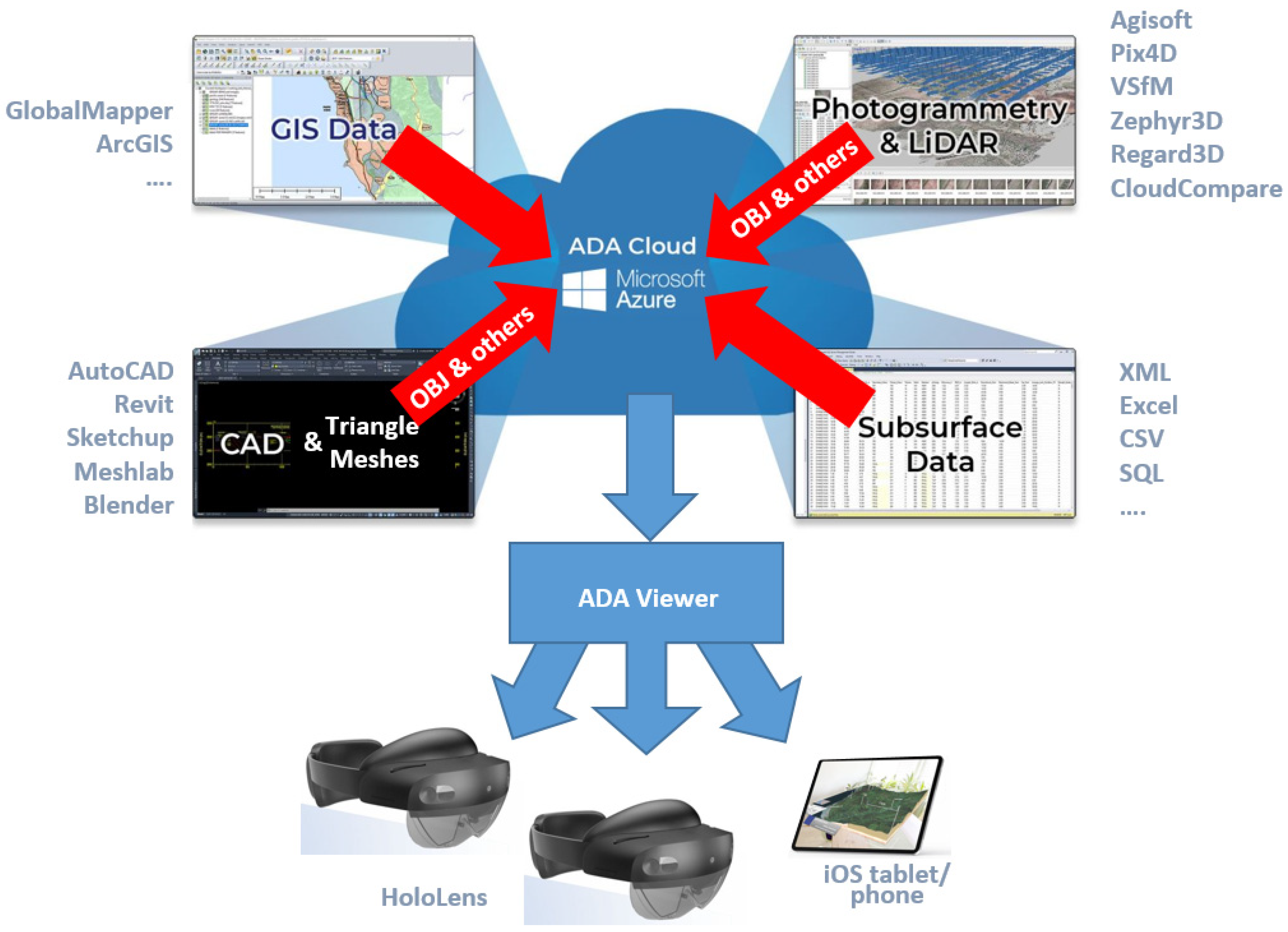

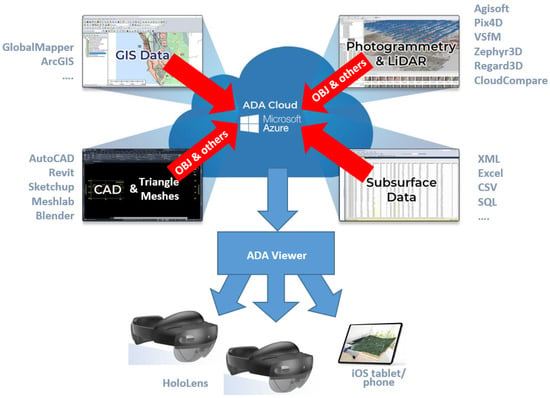

ADA Platform [52], developed by Clirio Inc. in collaboration with BGC Engineering Inc., is a UWP application for HoloLens 2. It is programmed in Unity3D, based in the cloud, and allows the conversion of 3D digital models (already available) to 3D holographic models for HoloLens. It is worth noting that 3D models are created outside HoloLens, inside a desktop or laptop computer, before the MR visualization. In Figure 5 we sketch the workflow with ADA Platform. The original datasets may be collected, for example, using acquisition techniques such as TLS, Aerial Laser Scanning (ALS), digital photogrammetry (terrestrial, drone or aerial), or other remote sensing techniques. The processing of the raw data may be carried out on a computer with specific programs, some of which appear on the sides of Figure 5. Nowadays, the resulting “cloud” can contain tens or hundreds of millions of points, which can be used to create a “mesh” file (OBJ in Figure 5). This corresponds to a surface formed by a massive number of triangles linking real points (nodes) of the object. This surface can be endowed with textures (visible color, IR, multispectral, or any scalar property) that can be overlaid onto the 3D mesh, wrapping the object. The texture information may be stored, for instance, in MTL and PNG/JPG files.
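To make the notion of a textured triangle mesh more concrete, the following minimal sketch reads a tiny OBJ text and computes the surface area of the resulting triangles. The sample geometry, the file name `square.mtl` and the function names are hypothetical; the parser handles only `v` and `f` records with positive indices and is not the reader used by ADA Platform.

```python
import math

SAMPLE_OBJ = """\
# unit square described as one four-sided face (hypothetical example)
mtllib square.mtl
v 0 0 0
v 1 0 0
v 1 1 0
v 0 1 0
usemtl ground
f 1 2 3 4
"""


def parse_obj(text):
    """Return (vertices, triangles) from OBJ text.

    Only 'v' and 'f' records are read; texture and material records are
    skipped. Faces with more than three vertices are fan-triangulated.
    Negative (relative) indices are not supported in this sketch."""
    vertices, triangles = [], []
    for line in text.splitlines():
        parts = line.split()
        if not parts or parts[0].startswith("#"):
            continue
        if parts[0] == "v":
            vertices.append(tuple(float(c) for c in parts[1:4]))
        elif parts[0] == "f":
            # "f 1/1/1 2/2/2 ...": keep the vertex index, convert to 0-based
            idx = [int(p.split("/")[0]) - 1 for p in parts[1:]]
            for i in range(1, len(idx) - 1):
                triangles.append((idx[0], idx[i], idx[i + 1]))
    return vertices, triangles


def triangle_area(a, b, c):
    """Area of a 3D triangle: half the norm of the edge cross product."""
    ab = [b[i] - a[i] for i in range(3)]
    ac = [c[i] - a[i] for i in range(3)]
    cx = ab[1] * ac[2] - ab[2] * ac[1]
    cy = ab[2] * ac[0] - ab[0] * ac[2]
    cz = ab[0] * ac[1] - ab[1] * ac[0]
    return 0.5 * math.sqrt(cx * cx + cy * cy + cz * cz)


def mesh_area(vertices, triangles):
    """Total surface area of the mesh."""
    return sum(triangle_area(vertices[i], vertices[j], vertices[k])
               for i, j, k in triangles)


vertices, triangles = parse_obj(SAMPLE_OBJ)
area = mesh_area(vertices, triangles)
```

The `mtllib` and `usemtl` records are the links to the MTL material file, which in turn names the PNG/JPG texture images; the sketch ignores them because only the geometry is needed here.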

Figure 5.

General workflow with the ADA Platform by Clirio: from the data acquisition to the geovisualization in HoloLens or on an iOS tablet/phone (modified from [52]).

Due to current HoloLens limitations (processing power, memory and resolution), an optimization of the datasets is required to increase the performance of visualizations. This optimization is carried out after uploading the files from the user computer program to the ADA cloud, when the original model (files OBJ + MTL + PNG, for instance) is converted to a format suitable for the visualization in HoloLens 2. This conversion is automatic and quick (10–60 min depending on the mesh size).
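The paper does not state which simplification method the ADA cloud applies during this conversion. As an illustration of what such an optimization can involve, the sketch below implements vertex clustering, one of the simplest mesh decimation techniques: vertices falling in the same grid cell are merged into their centroid, and triangles that collapse are discarded. The function names and the test grid are hypothetical.

```python
import math


def decimate_by_clustering(vertices, faces, cell):
    """Merge all vertices that fall in the same cubic cell of side `cell`
    and drop the triangles that become degenerate or duplicated."""
    cell_to_cluster = {}
    sums = []    # per cluster: [sum_x, sum_y, sum_z, count]
    remap = []   # original vertex index -> cluster index
    for x, y, z in vertices:
        key = (math.floor(x / cell), math.floor(y / cell), math.floor(z / cell))
        cluster = cell_to_cluster.setdefault(key, len(sums))
        if cluster == len(sums):          # first vertex seen in this cell
            sums.append([0.0, 0.0, 0.0, 0])
        s = sums[cluster]
        s[0] += x
        s[1] += y
        s[2] += z
        s[3] += 1
        remap.append(cluster)
    new_vertices = [(sx / n, sy / n, sz / n) for sx, sy, sz, n in sums]

    new_faces, seen = [], set()
    for a, b, c in faces:
        a, b, c = remap[a], remap[b], remap[c]
        if a == b or b == c or a == c:    # triangle collapsed to a line/point
            continue
        key = frozenset((a, b, c))
        if key in seen:                   # same triangle produced twice
            continue
        seen.add(key)
        new_faces.append((a, b, c))
    return new_vertices, new_faces


def grid_mesh(n):
    """Flat n x n grid of unit squares, two triangles each (test data)."""
    vertices = [(float(i), float(j), 0.0)
                for j in range(n + 1) for i in range(n + 1)]
    faces = []
    for j in range(n):
        for i in range(n):
            v0 = j * (n + 1) + i
            v1, v2, v3 = v0 + 1, v0 + n + 1, v0 + n + 2
            faces.append((v0, v1, v3))
            faces.append((v0, v3, v2))
    return vertices, faces


dense_v, dense_f = grid_mesh(4)                       # 25 vertices, 32 triangles
light_v, light_f = decimate_by_clustering(dense_v, dense_f, cell=2.0)
```

A larger `cell` gives a lighter model at the cost of geometric detail, which is the trade-off any optimization for a device with limited memory and processing power has to make.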

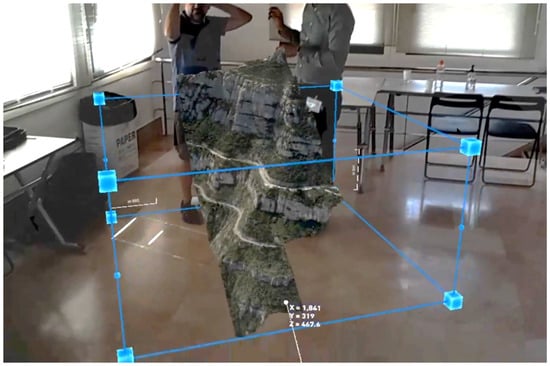

Once converted, the 3D holographic models are downloaded to the HoloLens via the “ADA viewer” app from anywhere with an internet connection. The version of ADA Viewer used in the present work is v2.8.14.0 (Figure 4, right), although Clirio has recently released a new software platform that provides additional features and workflows (Clirio view, [53]). After downloading, the user can open the 3D model and experience the MR viewing in the HoloLens (Figure 6). As can be seen in Figure 5, it is also possible to visualize the holographic model on an iOS phone or tablet (iPhone or iPad), but in 2D.

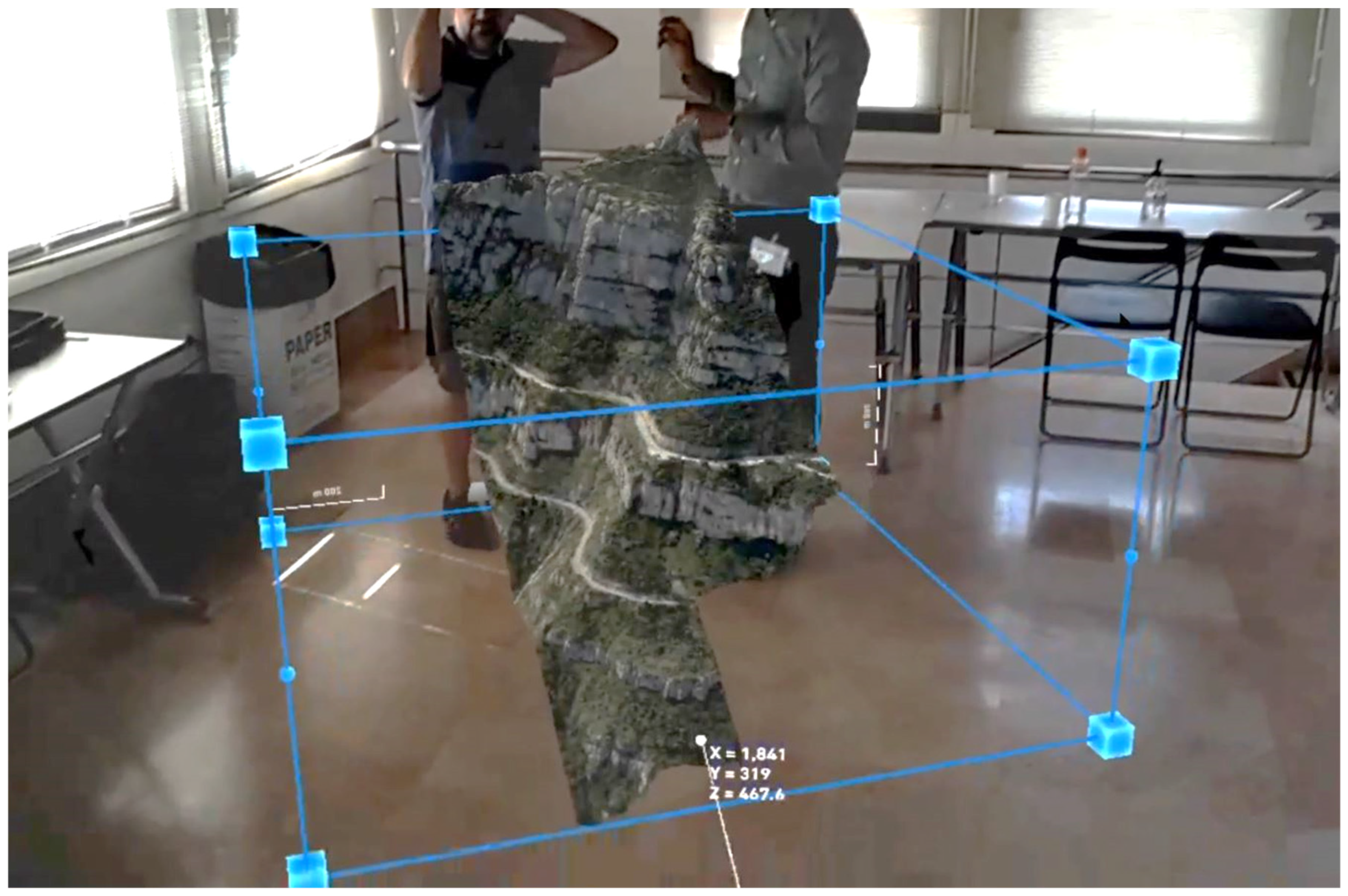

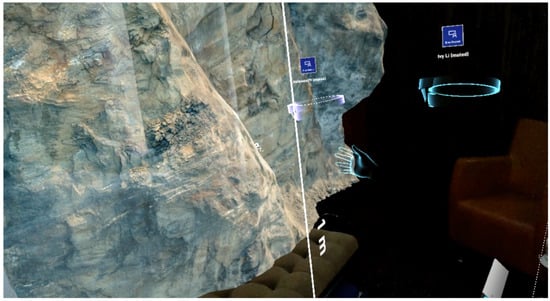

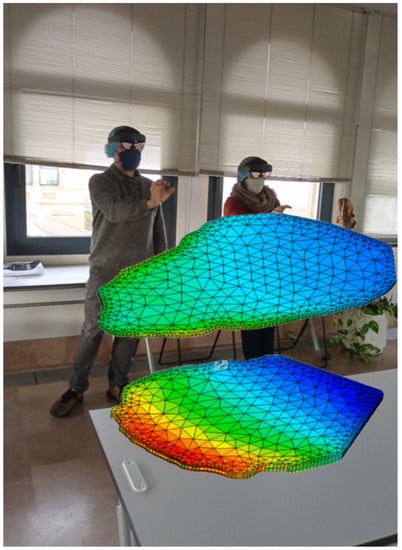

Figure 6.

Example of MR as seen in the office through the HoloLens 2 (3D terrain model in Montserrat massif, Degotalls area).

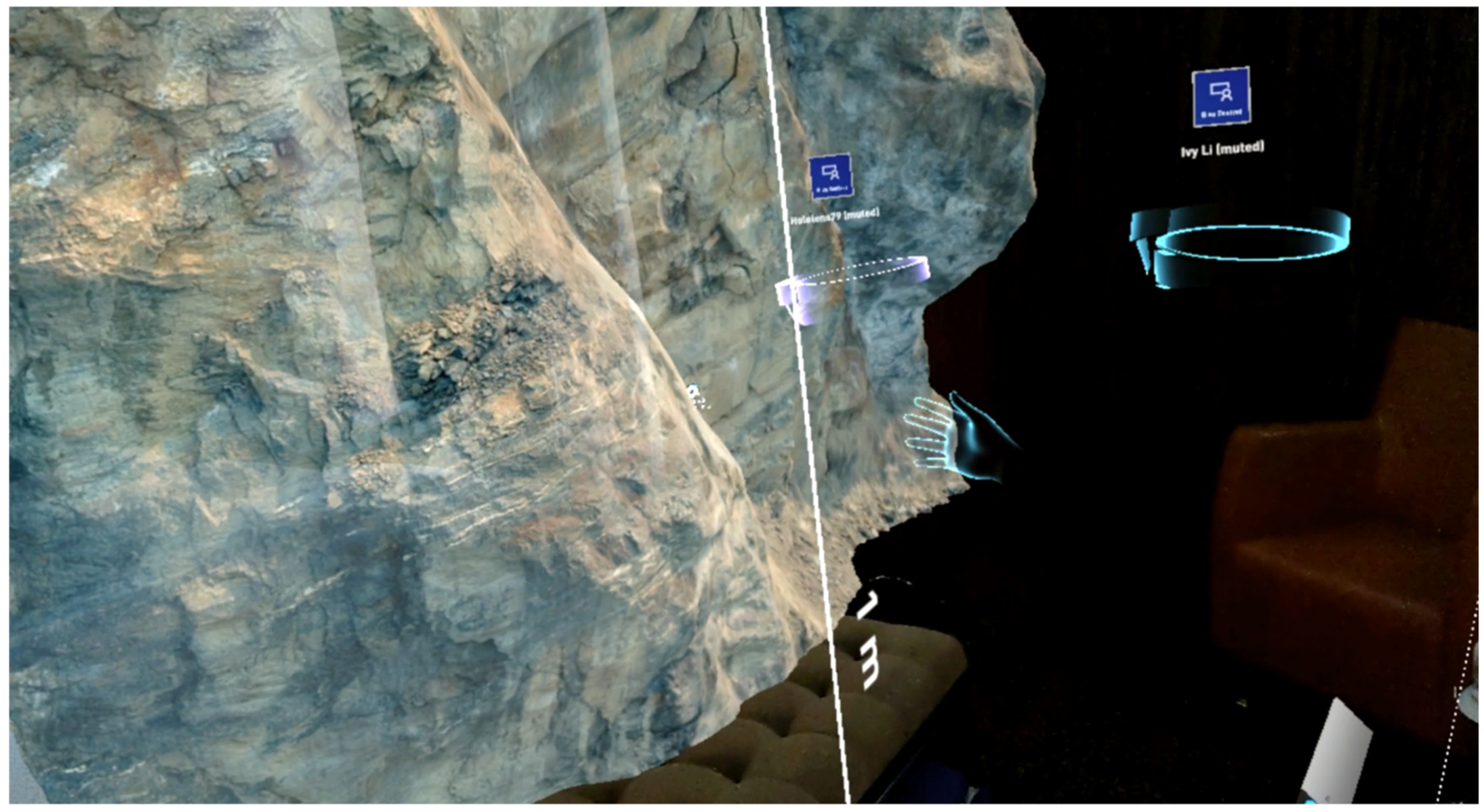

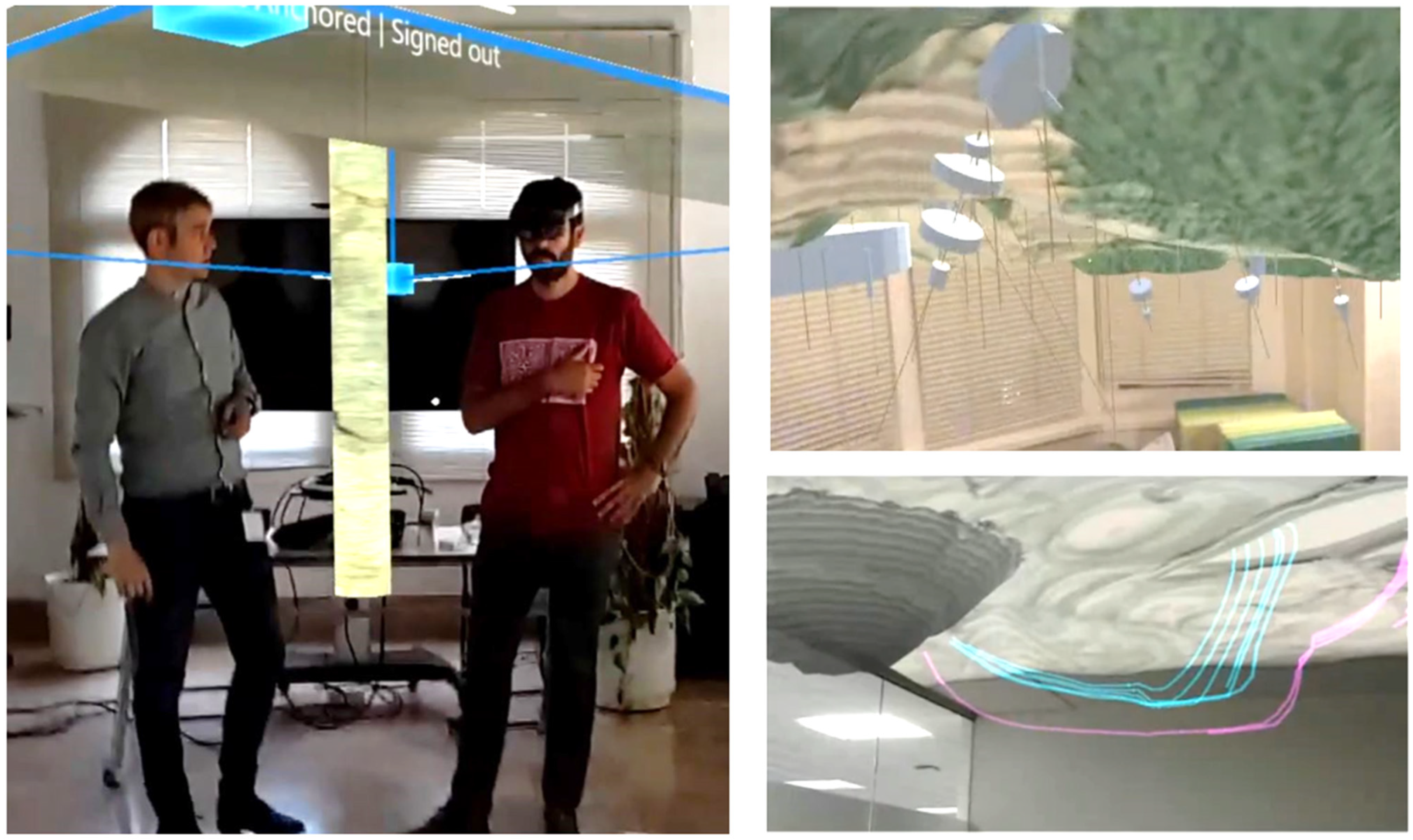

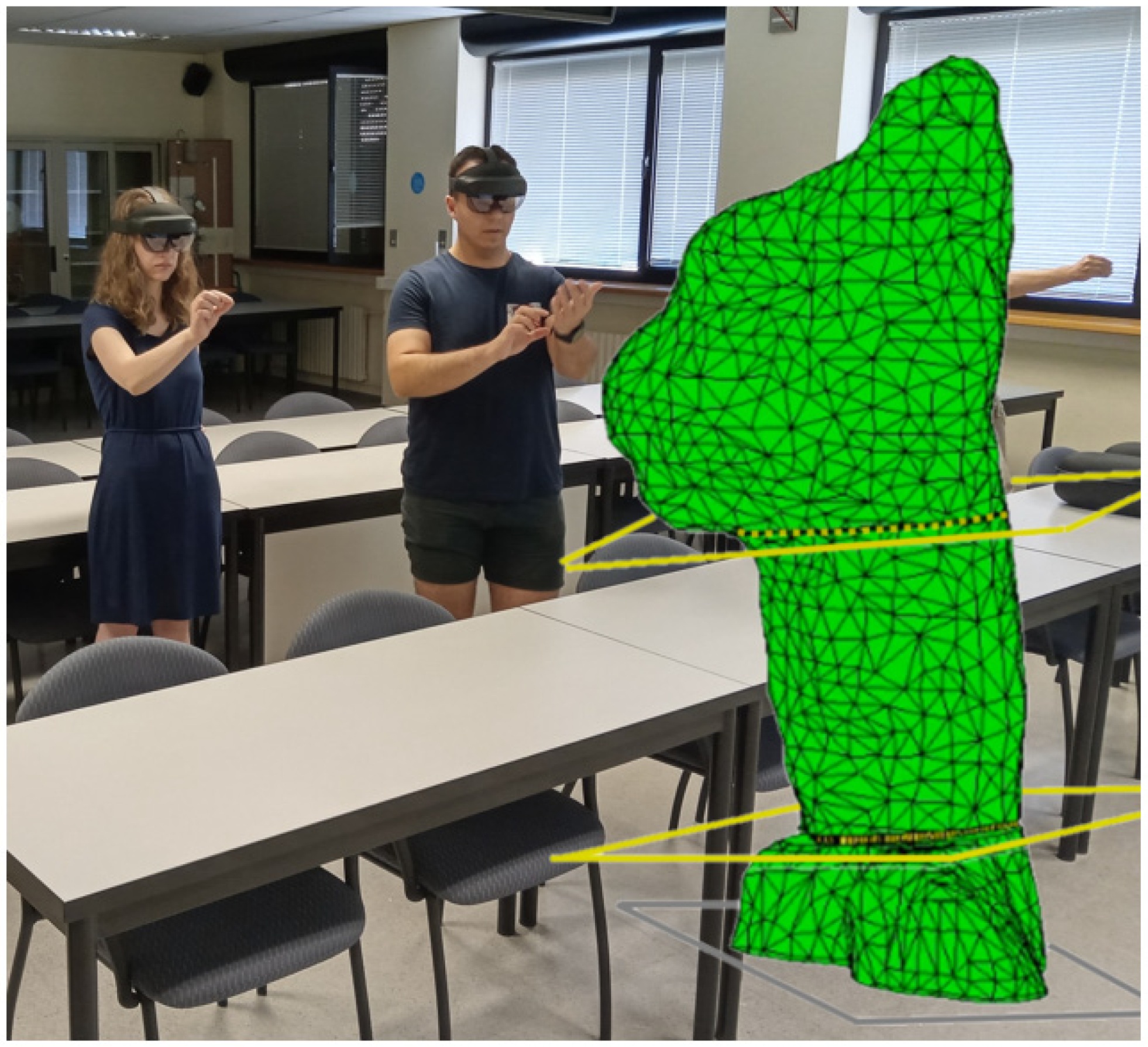

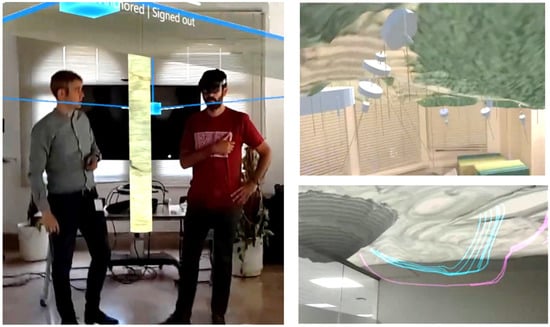

An interesting feature of the ADA Platform is that the visualization can be shared by different people equipped with an HMD and connected to a local or remote session (see Figure 7). Once downloaded, the 3D holographic model is anchored in the real space using the ADA Viewer in the “master” or “host” HoloLens unit. Then, the session may be shared with up to 10 users, who connect using other HoloLens (“guests”) to see the same 3D holographic model.

Figure 7.

Shared session within ADA Platform, from the point of view of one of the 3 HoloLens devices connected to a remote session. In this example, two users are remote, thus they appear only as avatars (headset hologram with their nickname). One virtual hand is also visible to highlight where the pointer is aiming (picture courtesy of BGC/Clirio).

During a visualization session, the ADA Platform allows the user to manipulate the 3D holographic model, adjusting its rotation, location, elevation and dimensions, from a reduced scale to full-scale (1:1). Users can walk around the 3D geoinformation as if they were in the field. Using the ADA Viewer user interfaces, users can measure, pinpoint, and manipulate the information presented (hide/unhide data layers, for instance) to enhance the experience.
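The manipulations listed above (scale, rotation and location) amount to a similarity transform applied to the model coordinates. The sketch below is a minimal illustration under stated assumptions: coordinates are (east, north, up) in metres, rotation is about the vertical axis only, and all names are hypothetical rather than part of the ADA Viewer.

```python
import math


def scale_denominator(model_extent_m, room_extent_m):
    """Denominator N of the largest scale 1:N at which a model of the given
    horizontal extent fits in the available room. Never below 1, so the
    model is not enlarged beyond full scale (1:1)."""
    return max(1.0, model_extent_m / room_extent_m)


def place_point(point, scale_den, yaw_deg, anchor):
    """Map a model point to room coordinates: scale by 1/scale_den, rotate
    counterclockwise by yaw_deg about the vertical axis, then translate to
    the anchor position (the model origin in the room)."""
    x, y, z = point
    s = 1.0 / scale_den
    a = math.radians(yaw_deg)
    xr = x * math.cos(a) - y * math.sin(a)
    yr = x * math.sin(a) + y * math.cos(a)
    return (anchor[0] + s * xr, anchor[1] + s * yr, anchor[2] + s * z)


# A 1200 m wide site shown on a 4 m wide floor needs a scale of 1:300.
den = scale_denominator(1200.0, 4.0)
# A point 300 m east of the model origin and 30 m above it, with the model
# turned 90 degrees and its origin anchored 0.8 m above the floor.
p_room = place_point((300.0, 0.0, 30.0), den, 90.0, (0.0, 0.0, 0.8))
```

At 1:300 the point lands about 1 m from the anchor and 0.1 m above it, whereas at 1:1 the same call would place it at its true field distance.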

As stated earlier, seeing the model simultaneously and identically to real life makes it easy to interrogate the data presented and communicate ideas to reach a common understanding quickly. Technicians, academics and researchers can show and share data or study results effectively within the company or institution, as well as show the information to stakeholders. All these possibilities are illustrated with examples within the field of geosciences in Section 3.

3. Results and Discussion

A set of different 3D models are presented here to demonstrate the possibilities of MR in showing data and results in the field of geosciences. Instead of fully developing one or two case studies, we have opted to present, first, several simple and self-explanatory outputs of selected 3D models. Later, we will show how MR is being used for 3D geovisualization during research on the stability of large conglomerate blocks in the Montserrat massif (Spain).

The first challenge is to convey the advantages of 3D visualization without using the MR HMD, that is, through 2D images. Two videos are available in the electronic Supplementary Materials in order to partially overcome this limitation. Undoubtedly, the only way to fully comprehend the power of the tool is to try an MR HMD in person.

3.1. MR Applied to Mining, Geotechnical and Geological Engineering

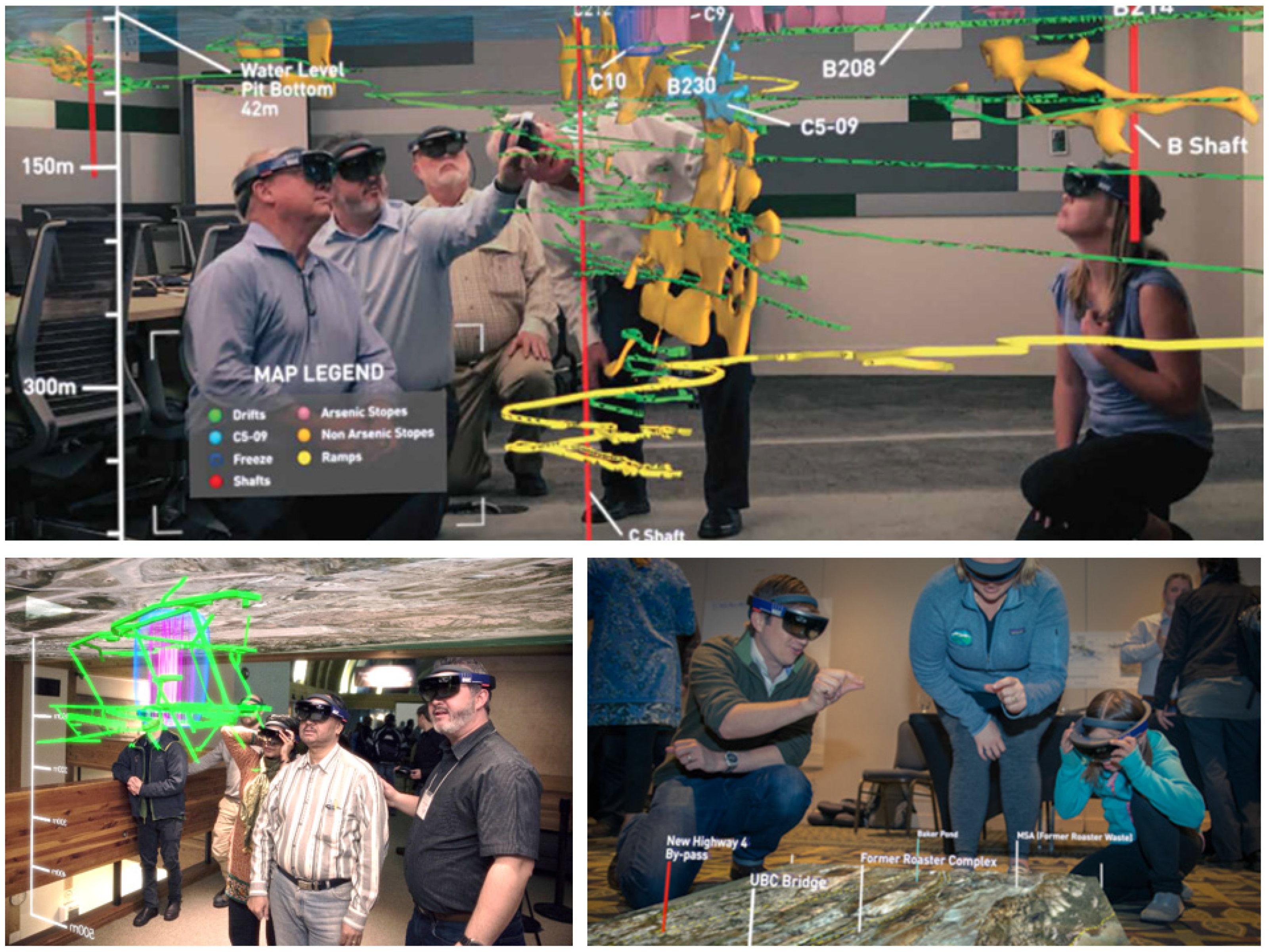

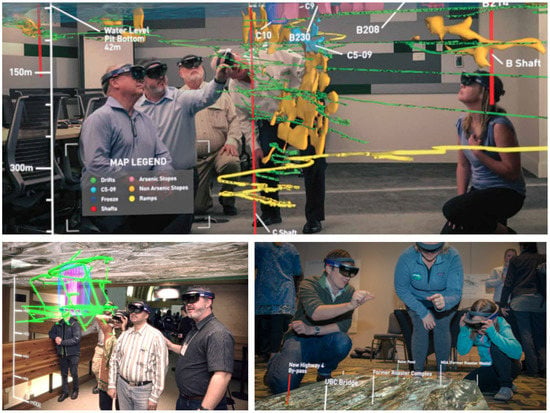

The visualization and dissemination of complex information in geosciences is extremely challenging. In Figure 8, we present an activity from the community engagement sessions of a real mining remediation project in northwestern Canada [17,54,55]. Sometimes the consultancy engineers, experts and academics (Figure 8, Top) “explain” a biased part of the data, focusing on a fraction of the whole picture and “telling” the stakeholders their conclusions directly, instead of “showing” them the fundamental data and letting the other party see it from another point of view, at their own pace. This is done for the sake of efficiency, but also because there is too much data, complex in nature and in its spatial dimension. It is difficult to share the global view in order to help others reach their own conclusions. For this reason, we tend to simplify, and the collaboration between different groups is hampered, making it difficult to reach a “common understanding” of the situation, let alone common conclusions and decisions. The situation worsens when experts and non-experts mix: representatives of local communities, politicians, end users, clients, managers and so on (Figure 8, Left). “Non-expert” may also refer to engineers or scientists working in a situation outside their field of expertise. However, “non-experts” are key in finding new points of view, alternative solutions or hidden flaws in the design assumptions (Figure 8, Right).

Figure 8.

Example of sharing information for a mining remediation project using Microsoft’s HoloLens HMD. Giant Mine, Canada [17,54,55]. (Top) Five experts examining the complex 3D data at the office. The Arsenic stopes, ramps, shafts, tunnels and remedial measures are considered from all points of view. (Left) Visualizing the underground Arsenic chambers and the locations of remedial measures with community members. (Right) An engineer training two members of the community in the basic gestures when working with the HoloLens. The holographic view near the floor is a 3D orthophoto of the surface of Giant Mine, with some labels pinpointing points of interest (pictures courtesy of BGC).

When the topography is abrupt and/or complex, 3D viewing is a great way to manage changes or new projects. In Figure 9 a limestone open pit is under examination, taking advantage of the “bird’s eye” point of view. The 3D model was obtained with a drone flight over the quarry; the pixel size is approximately 0.2 m. The photograms were processed with standard digital photogrammetry software. The high-resolution model favors a detailed overview of the slopes, benches, ramps, and stockpiles. Lengths, widths, dips and changes in elevation can all be measured directly in the model. Several scales of visualization allow the model to be adapted to the available space or to the field.
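As an illustration of the kind of measurement mentioned above, the dip and dip direction of a planar surface can be derived from three points picked on it. The sketch below assumes (east, north, up) coordinates in metres and uses a hypothetical function name; it is not the measuring tool of the ADA Viewer.

```python
import math


def dip_and_dip_direction(a, b, c):
    """Return (dip, dip_direction) in degrees for the plane through the
    three points a, b, c, each given as (east, north, up).

    Dip is the angle between the plane and the horizontal. Dip direction is
    the azimuth, clockwise from north, of the steepest descent; it is
    undefined for a horizontal plane (this sketch then returns an arbitrary
    value)."""
    ab = (b[0] - a[0], b[1] - a[1], b[2] - a[2])
    ac = (c[0] - a[0], c[1] - a[1], c[2] - a[2])
    # Plane normal from the cross product of two edges
    nx = ab[1] * ac[2] - ab[2] * ac[1]
    ny = ab[2] * ac[0] - ab[0] * ac[2]
    nz = ab[0] * ac[1] - ab[1] * ac[0]
    norm = math.sqrt(nx * nx + ny * ny + nz * nz)
    if norm == 0.0:
        raise ValueError("points are collinear or coincident")
    if nz < 0:
        # Use the upward-pointing normal; its horizontal part points downdip
        nx, ny, nz = -nx, -ny, -nz
    dip = math.degrees(math.acos(min(1.0, nz / norm)))
    dip_direction = math.degrees(math.atan2(nx, ny)) % 360.0
    return dip, dip_direction


# Three points on a bench face that loses 1 m of elevation per metre
# travelled towards the east: expected dip 45 degrees, dip direction 090.
dip, dip_dir = dip_and_dip_direction((0.0, 0.0, 0.0),
                                     (0.0, 1.0, 0.0),
                                     (1.0, 0.0, -1.0))
```

The three points should be well spread over the surface; nearly collinear picks make the normal, and therefore the orientation, very sensitive to small positioning errors.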

Figure 9.

An open pit mine 3D hologram in MR. (Left) sharing the model in the classroom at the university. (Right) the model opened in the field for inspection and direct comparison with the real environment (pictures courtesy of UPC and BGC).

In the authors’ experience, MR geovisualization truly adds value over direct viewing when there is something to show beyond the bare ground or the topography. Some examples are presented and discussed below in Case (a) to Case (d).

- Case (a)

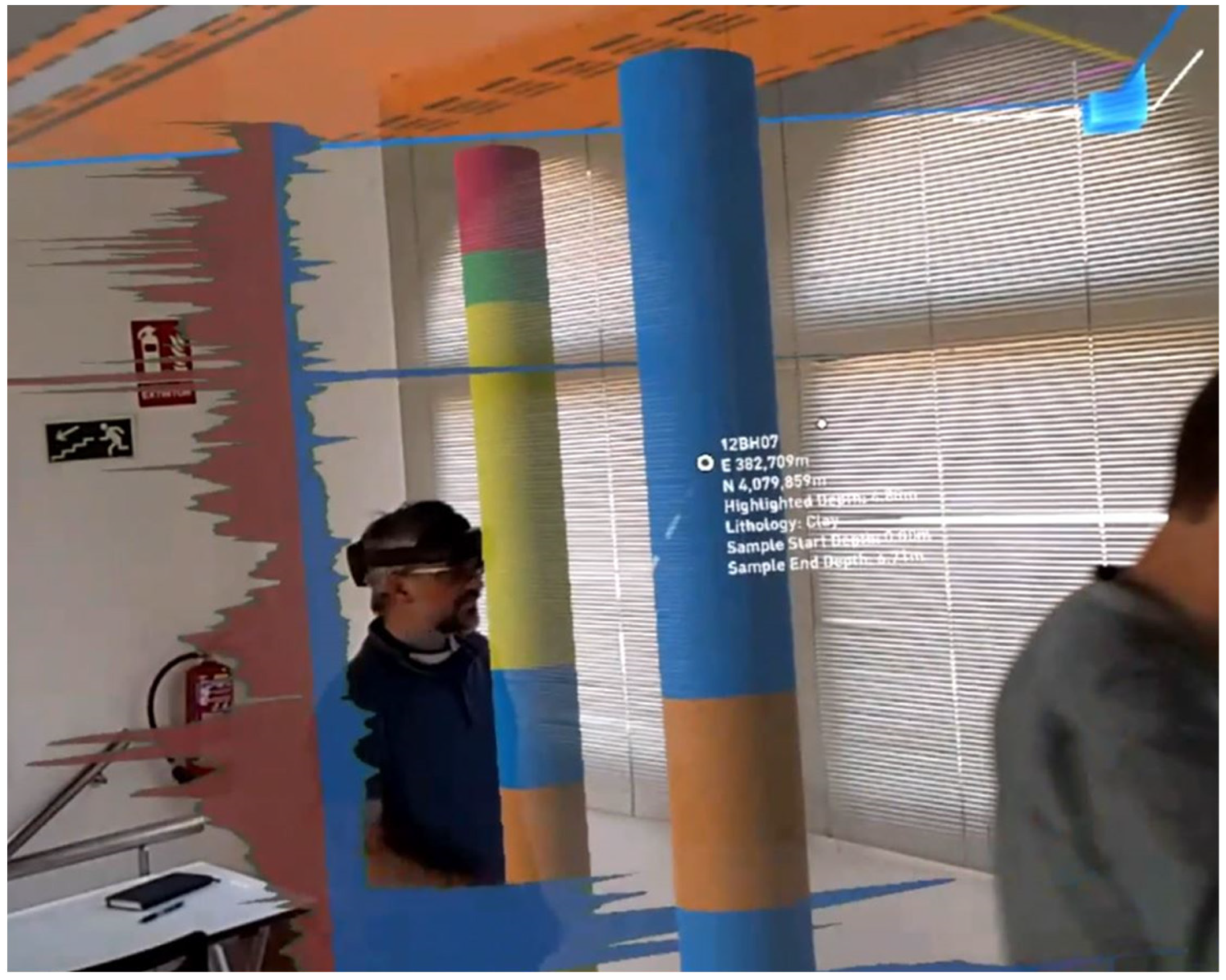

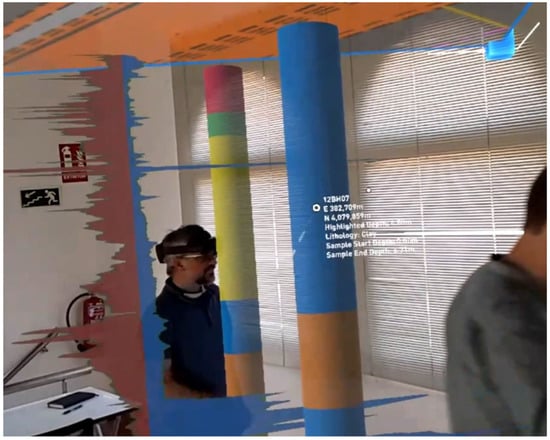

A significant part of the information consists of material properties below the topographic surface, such as borehole information (lithology, soil/rock properties, CPT values, permeability results, RQD), geophysical profiles, GPR profiles, etc. (Figure 10, Figure 11, Figure 12 and Figure 13). Another “below-the-surface” visualization, not detailed here, is bathymetry: the representation of the bottom of a sea, lake or river. In many cases, direct underwater viewing is very difficult due to the turbidity of the water.

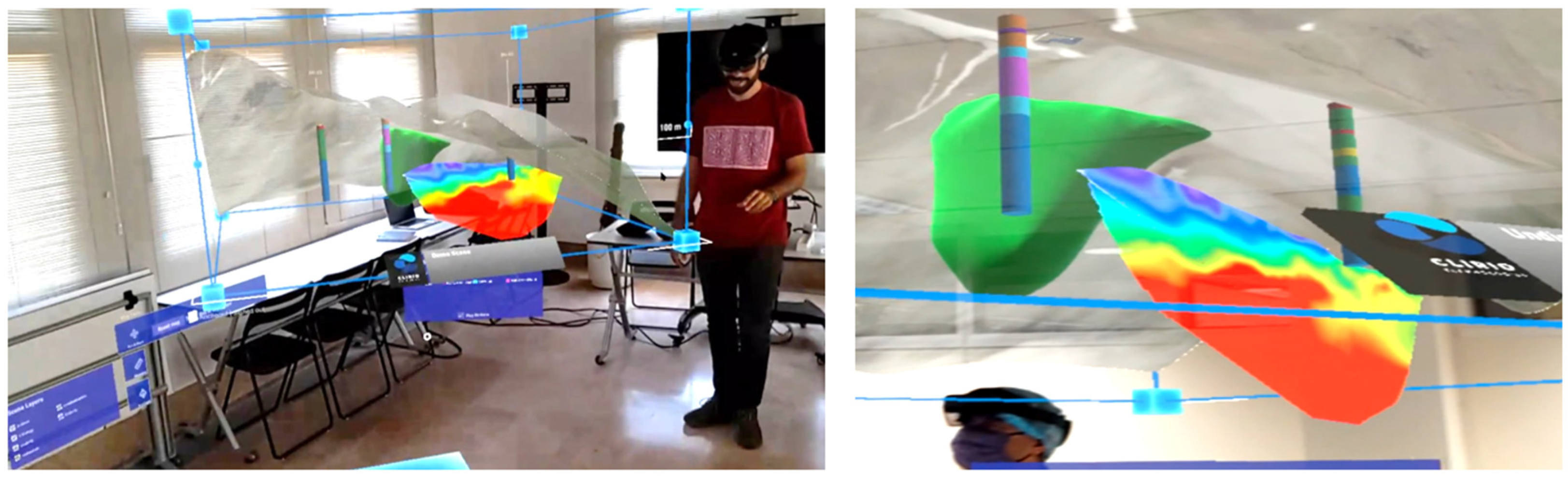

Figure 10.

MR underground visualization (as seen in the office through the HoloLens HMD) of two boreholes (center and right) and a CPT profile (left). Pointing to the borehole, the properties of the sample are obtained at the given depth. The ground surface can be appreciated at the top (picture courtesy of BGC/Clirio).

Figure 11.

Idealized subsoil scene with several boreholes, a geophysical profile (colored according to Electrical Resistivity Value) and an ore body (green polygon), as depicted in an underground visualization with HoloLens 2. A stepped ground surface can be appreciated in grey. Some contextual menus are located to the left of the model. Right: a close-up view of the holograms (pictures courtesy of BGC/Clirio).

Figure 12.

Examples of other underground data that can be shown as 3D holograms with the HoloLens 2. (Left) the light column in the image is a representation of the borehole wall as registered by an optical “televiewer” camera array. (Upper right) each dark line is a representation of a borehole, and each light gray disc is sized proportionally to the permeability measured at a given segment of the borehole (Lugeon test). (Bottom right) flowlines and particle velocity through the ground for an open pit (pictures courtesy of BGC/Clirio).

Figure 13.

Sometimes the proper presentation of the model requires a large space, such as the hall of a building (left) or a parking lot passageway (right). Data shown include boreholes and geophysical horizontal and vertical Electrical Resistivity Value profiles (pictures courtesy of BGC/Clirio).

- Case (b)

The models are geometrically intricate and labyrinthine, with a number of caverns, ramps, corridors, stopes, shafts, tunnels and conduits. This might be the situation in old mining developments, as in Figure 8.

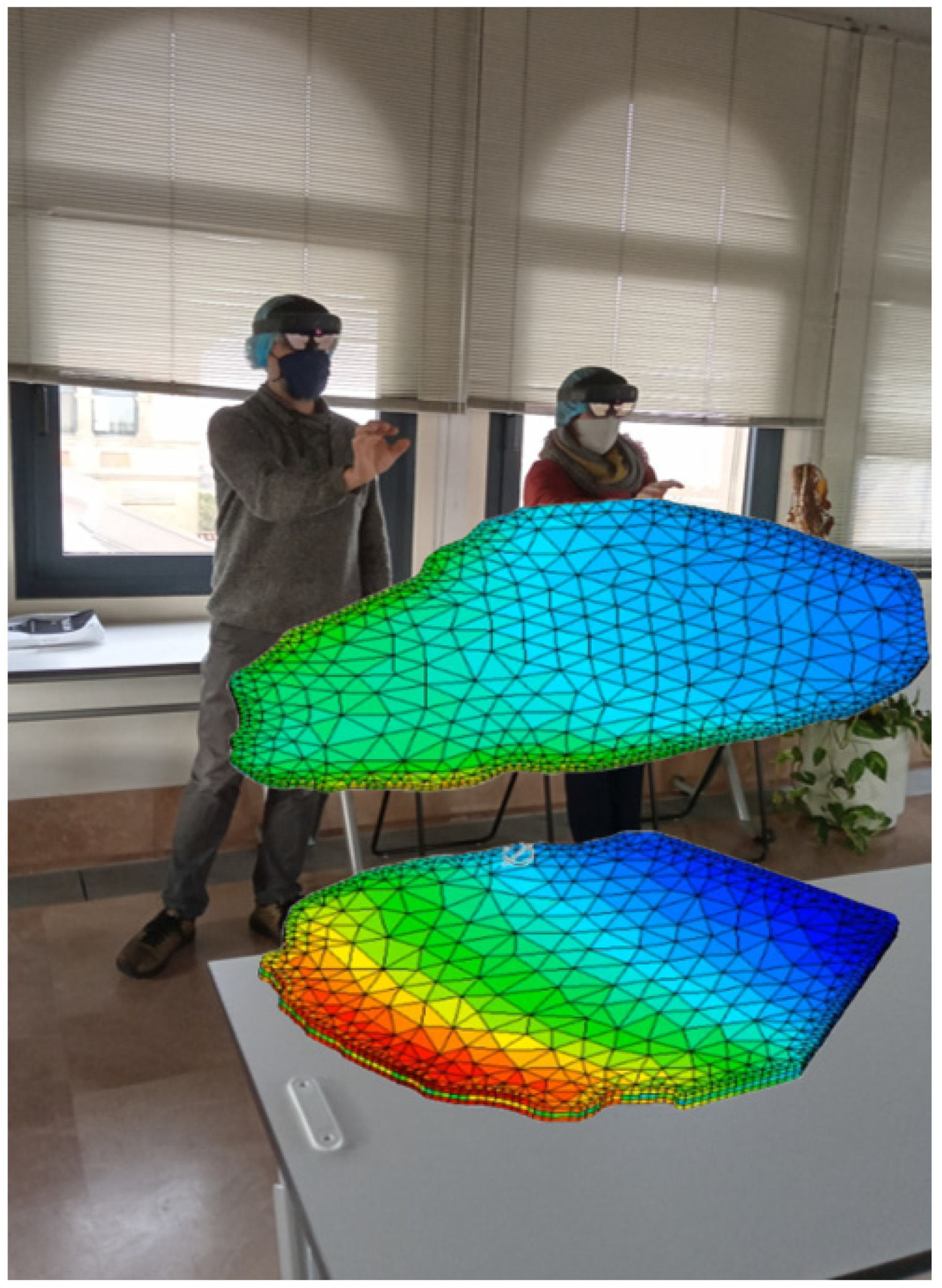

- Case (c)

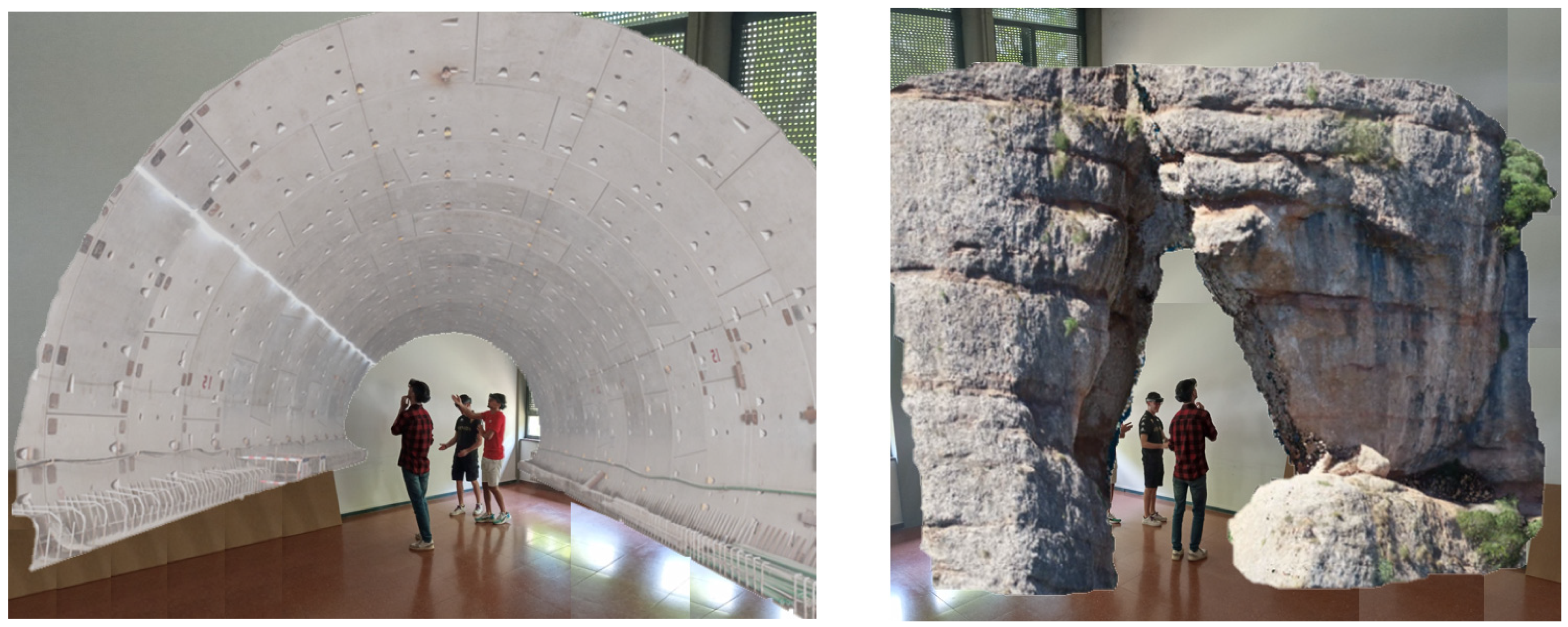

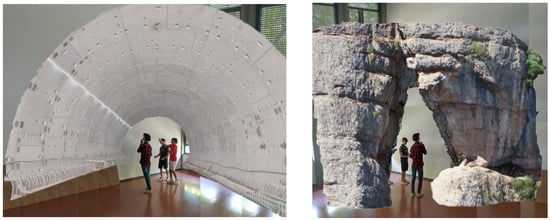

The objects are genuinely 360°, enclosing the user, such as caves, cavities and tunnels (Figure 14). Although beyond the scope of this paper, it is worth mentioning some applications in archaeology, heritage and/or architecture (excavations, interior spaces, cathedrals, chapels, monuments). The immersive capability of MR can be fully experienced in these situations, as the user can walk into the model and examine the walls from within.

Figure 14.

Examples of MR visualization when the model encloses the user: inside a tunnel section (left) and inside a cavern (right). The latter model corresponds to “La Foradada” site (Montserrat).

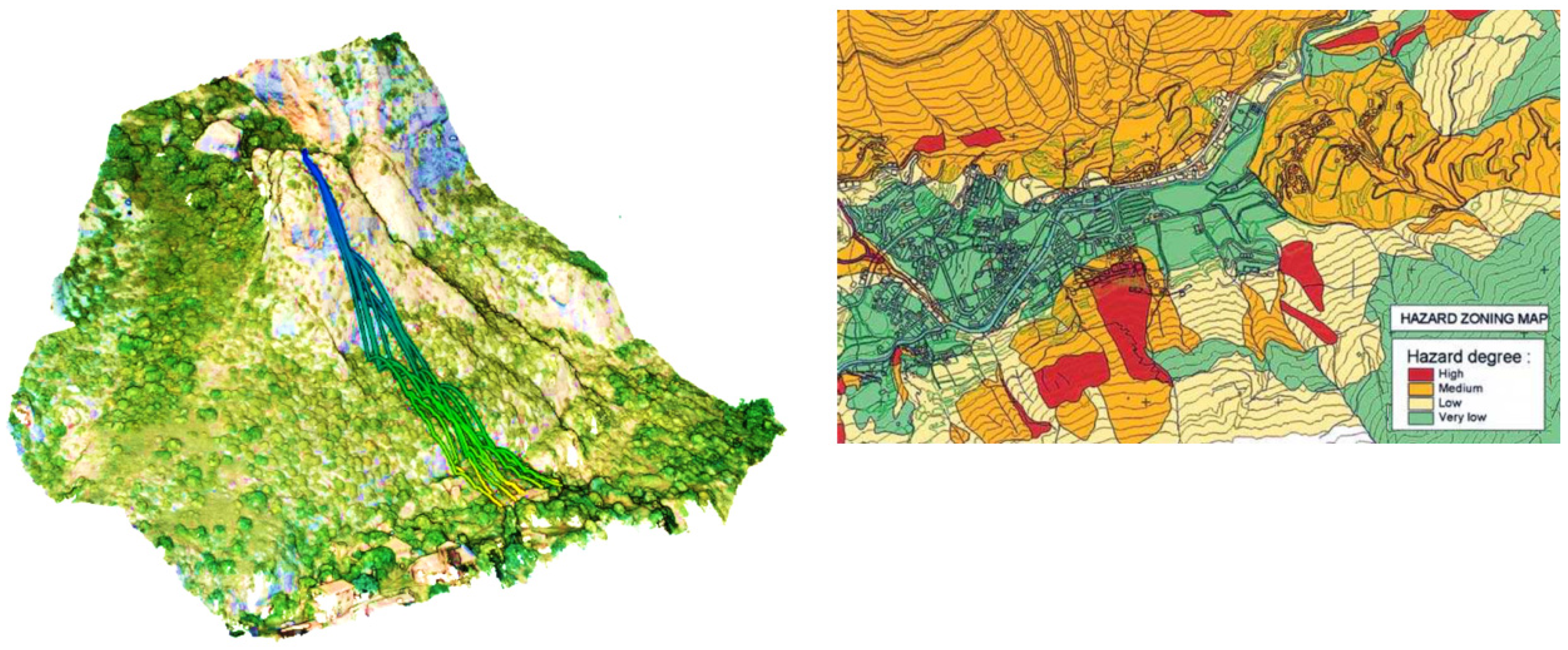

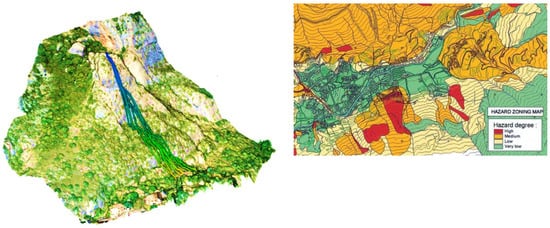

The 360° “sphericity” of the object can also be observed from the outside, as with the rock block in Figure 15. This block was released onto the slope in Figure 16 during a set of real-scale tests designed for the study and quantification of rock fragmentation during rockfalls [46,56]. Block 29 was completely destroyed during the drop. However, we can still study the block (measurements, internal structure and joint filling) thanks to the 3D HR digital model. The eventual geovisualization of the rockfall simulation results in 3D MR (Figure 16 and Figure 17) facilitates a complete and shared understanding of the phenomena. In these two figures, the trajectories followed by the rock blocks are presented statically. A further step can be found in [23], where the blocks falling down the slope are animated and presented in MR.

Figure 15.

In the classroom, inspecting a 1:1 scale hologram of the limestone block number 29 used in the R&D project GeoRisk [46]. The model was built by means of a circular terrestrial photogrammetry survey [10,56] (pictures courtesy of GeoRisk project).

Figure 16.

MR visualization concept of a limestone site used in the GeoRisk project to drop blocks, as shown in Figure 15. The 3D slope model (scale 1:20) was built by means of a drone flight. The result of the rockfall simulation with the RockGIS code [57] is presented over the slope (pictures courtesy of GeoRisk project).

Figure 17.

Comparison between the geovisualization of rockfall simulation results in 3D with MR (left) and a 2D hazard map of a typical risk assessment study (right). The first one is optimal for the full and effective communication of the risk to the community, especially to non-expert members (picture courtesy of ICGC).

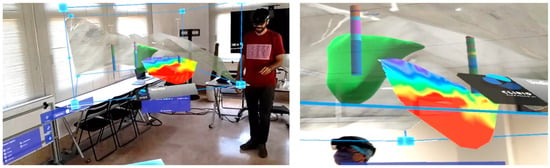

- Case (d)

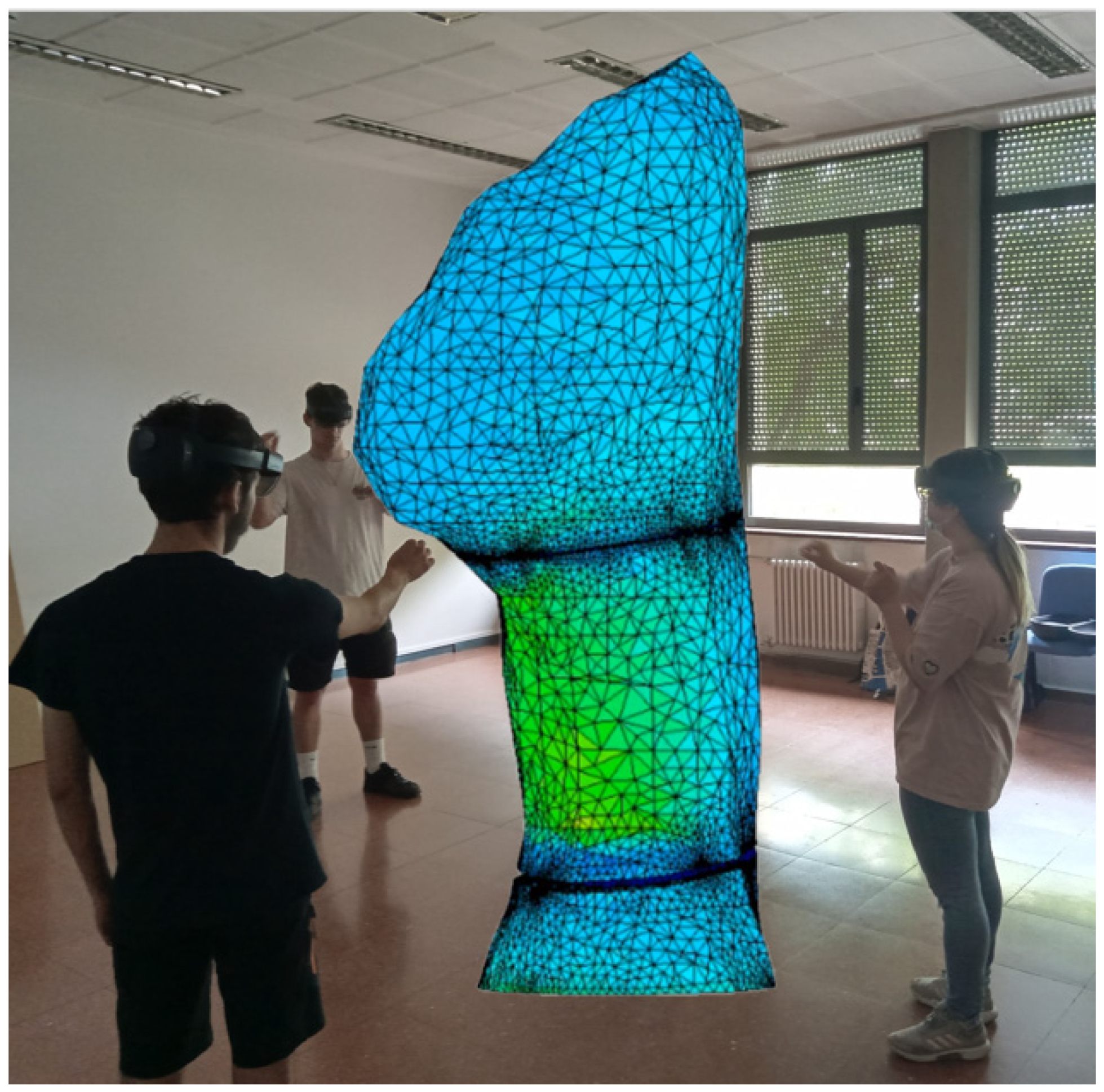

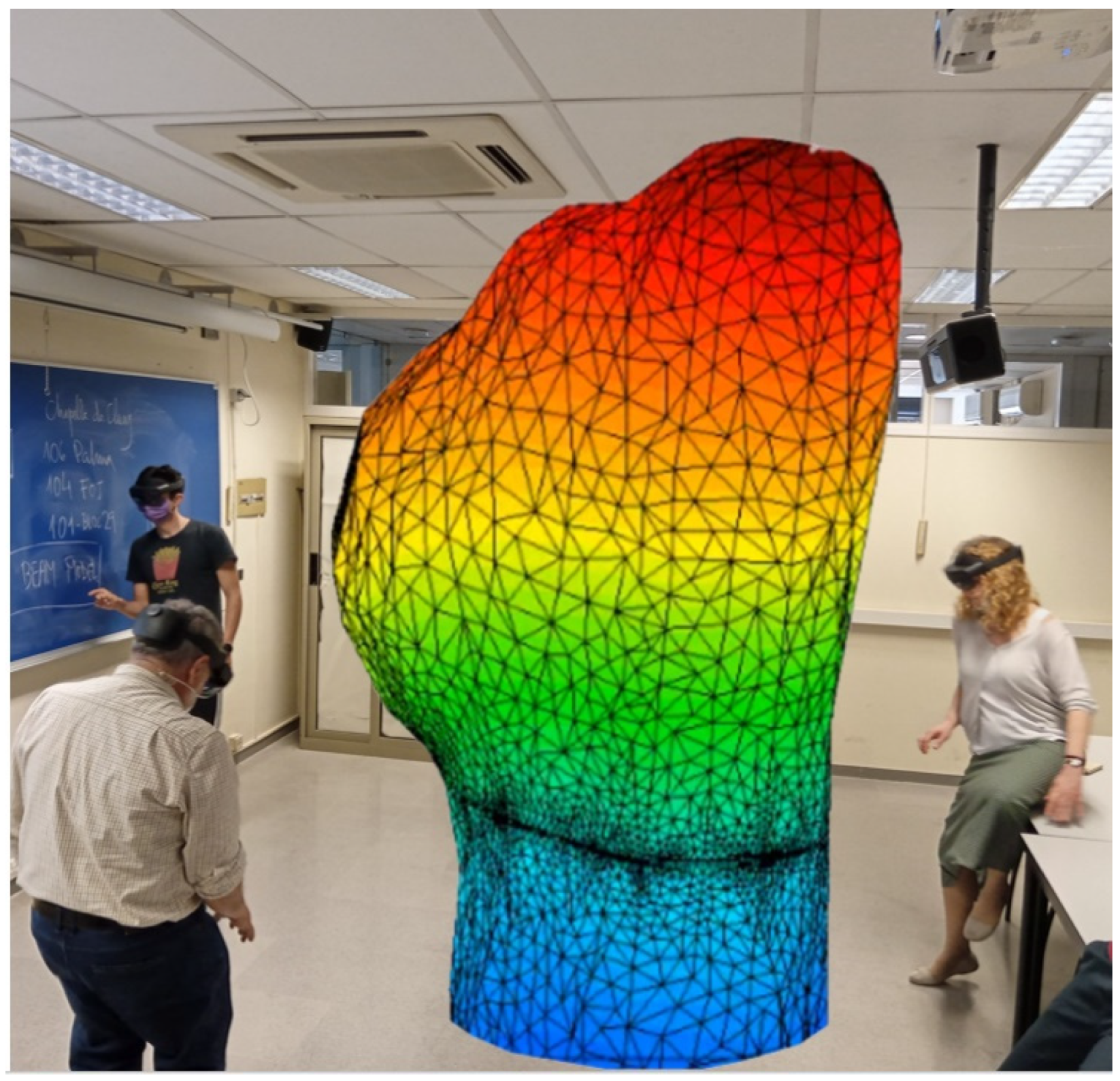

Apart from the physical appearance of the object, we may show some values over it, namely computational results such as stresses or deformations, which are typical outputs of geotechnical numerical programs (e.g., Finite Element Method (FEM) codes). In this field, again, the simplification from 3D to 2D is common, given the difficulty in presenting and sharing the results globally. Examples of Case (d) are presented in the next section.

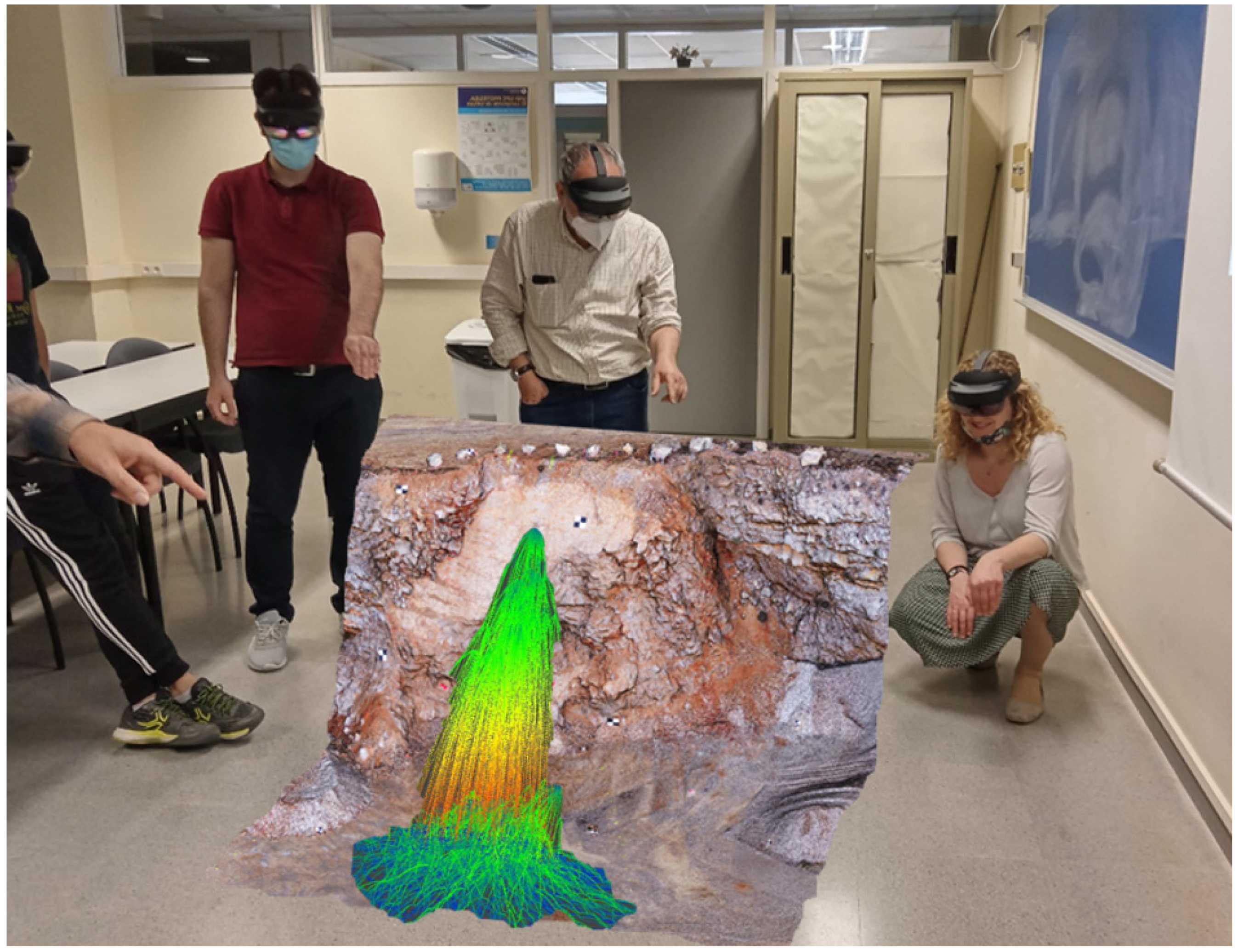

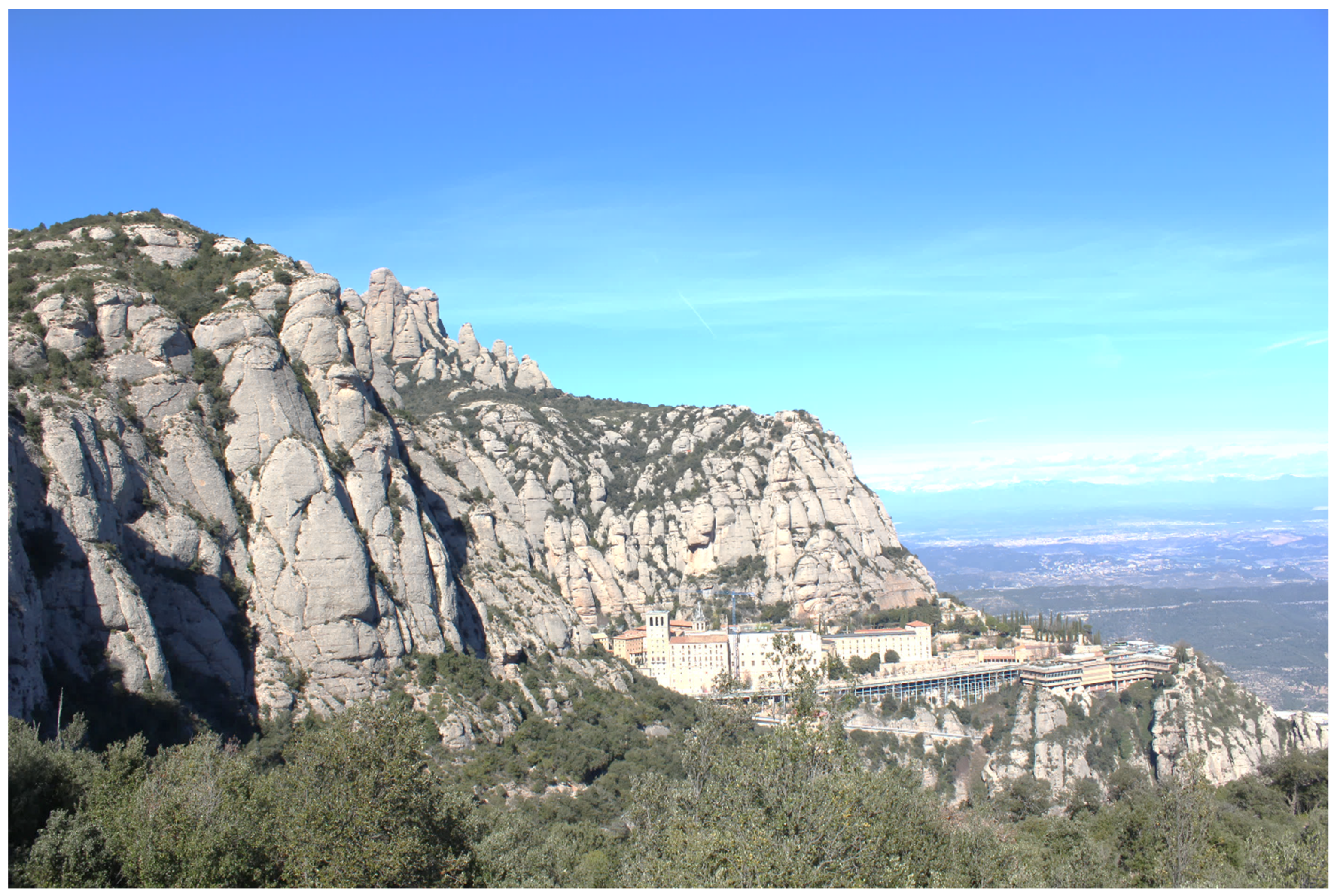

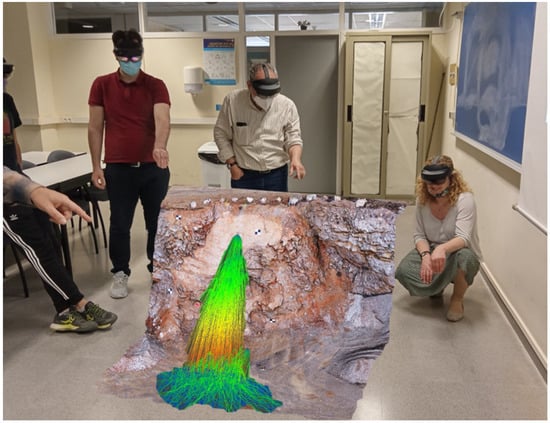

3.2. MR Geovisualization during Stability Assessment on Montserrat Rocky Slopes

Some results of ongoing investigations in the Montserrat massif serve to further develop Case (d), introduced in the previous section. This mountain is located 50 km northwest of the city of Barcelona in Catalonia (Spain). This isolated massif (Figure 18), formed by thick conglomerate layers interbedded with siltstone/sandstone, has an elevation change of 1000 m (from 200 to 1200 m a.s.l.). The geology and structure of Montserrat are described in [58]. The massif has stepped slopes where vertical cliffs alternate with steep slopes. The failure processes and mechanisms are described in [59]. This geometry, combined with the large number of visitors (around 3.5 million per year), creates a high-risk scenario.

Figure 18.

General view of the Montserrat massif (50 km northwest of the city of Barcelona, Spain) near the monastery.

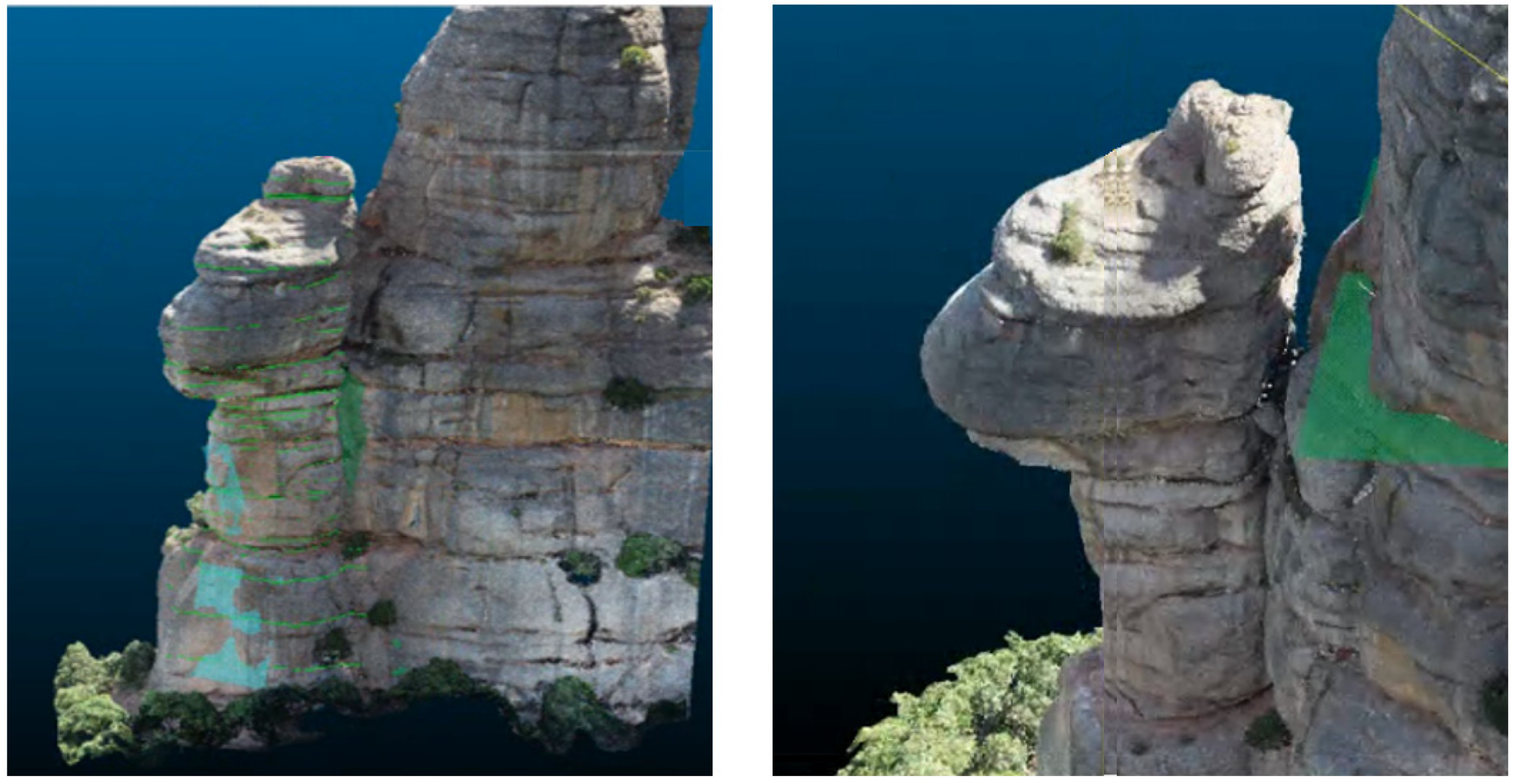

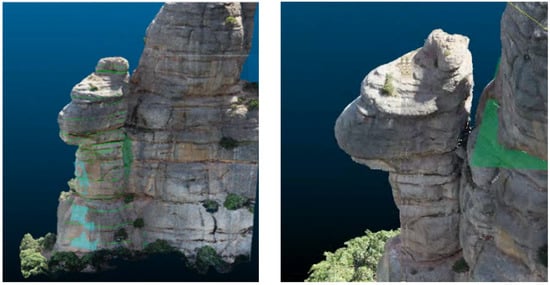

We have already seen visualizations of Montserrat in Figure 6 (the topography of the “Degotalls” area, 500 m from the monastery) and in Figure 14 (“La Foradada” cavern). The data that follow correspond to the area known as “Agulles” (meaning “rock columns” or “rock needles”), approximately 5 km from the monastery. This is the site of an investigation into the stability of a massive block of conglomerate rock (known as “Cadireta”), approximately 25,000 m³ in volume, resting on a thin rock column (Figure 19). This column is crossed by two sub-horizontal siltstone layers, which compromise the overall stability.

Figure 19.

Two perspectives of the “Cadireta” conglomerate block (Montserrat, Agulles area). The block under study is on the left, separated from the massif by a vertical penetrating joint. Some planes in light green were added to the geometry prior to the FEM mesh generation. The 3D model was built using a drone and digital photogrammetry software, and is viewed with CloudCompare [60].

The “Cadireta” block has been isolated from the rest of the massif (Figure 20). In order to close the volume or body of rock, several planes have been added on the flanks of the base of the column. The 3D visualization is of great aid in this operation. From there, it has been possible to generate the FEM mesh (Figure 21). About 70,000 tetrahedral elements discretize the conglomerates, whereas about 5000 prismatic elements discretize the two siltstone layers, with a grand total of 20,000 nodes.
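The need to close the volume before meshing can be illustrated with a simple check. The following is a minimal sketch, not the authors' workflow: it assumes the surface is available as a triangle mesh given as triples of vertex indices, and the function names `open_edges` and `is_closed` are hypothetical. It tests a necessary condition for a closed surface, namely that every edge is shared by exactly two triangles.

```python
from collections import Counter
from typing import Iterable, List, Tuple

Triangle = Tuple[int, int, int]
Edge = Tuple[int, int]


def open_edges(triangles: Iterable[Triangle]) -> List[Edge]:
    """Return the edges that are NOT shared by exactly two triangles.

    An empty result is a necessary condition for the surface to enclose
    a volume (it does not detect self-intersections).
    """
    counts: Counter = Counter()
    for a, b, c in triangles:
        for u, v in ((a, b), (b, c), (c, a)):
            # Store each edge with sorted indices so (u, v) == (v, u).
            counts[(min(u, v), max(u, v))] += 1
    return sorted(edge for edge, n in counts.items() if n != 2)


def is_closed(triangles: Iterable[Triangle]) -> bool:
    """True if the mesh is non-empty and has no open edges."""
    tris = list(triangles)
    return bool(tris) and not open_edges(tris)


# Illustrative data only: the four faces of a single tetrahedron.
tetra = [(0, 1, 2), (0, 3, 1), (1, 3, 2), (2, 3, 0)]
print(is_closed(tetra))       # all six edges are shared by two faces
print(open_edges(tetra[:3]))  # removing one face leaves its edges open
```

In a photogrammetric model of a rock column, the open edges would typically lie where the block was cut from the massif, which is where closing planes such as those described above are needed.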

Figure 20.

Montserrat: West, North, East and South 2D views of the “Cadireta” 3D model, prior to the FEM mesh generation. Note in the picture on the right (partially hidden side) the planes added to the surface of the base of the column to close the volume.

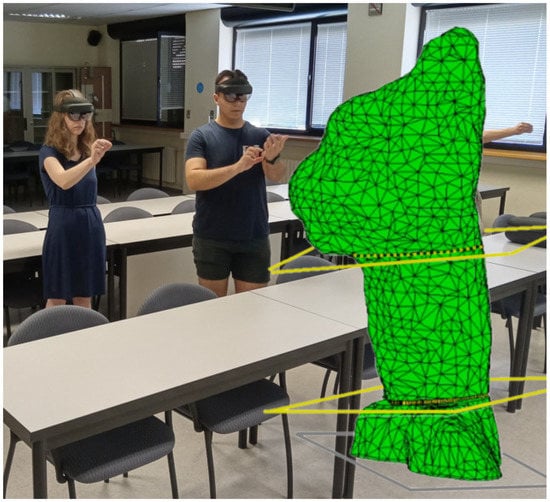

Figure 21.

“Cadireta” (Montserrat): MR visualization concept for inspection of the FEM mesh. To highlight the two siltstone layers (in yellow), several planes have been added to the scene.

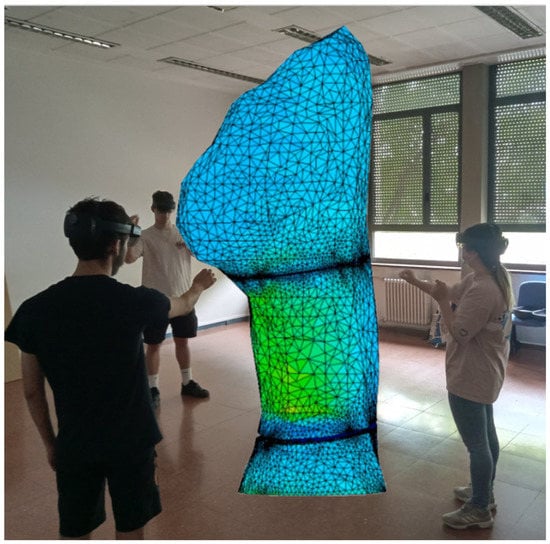

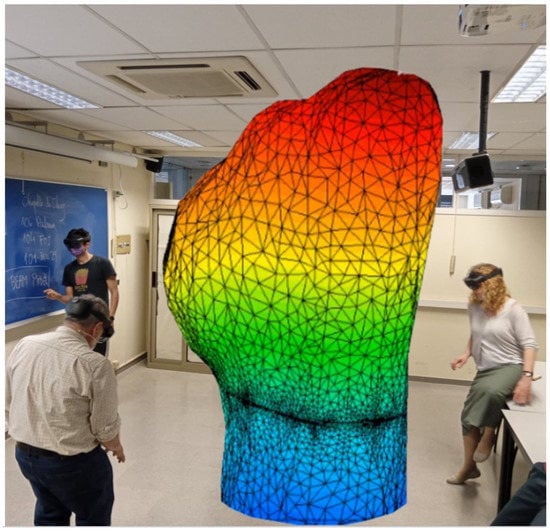

After cleaning and debugging the FEM mesh, it has been possible to run the program (on a computer/laptop). The FEM code in use is Code_Bright [61], which delivers results in terms of stresses and displacements. Some selected results are presented in Figure 22, Figure 23 and Figure 24, firstly for the conglomerates (rock material), and secondly for the siltstones (in the two thin layers).

Figure 22.

“Cadireta” (Montserrat): visualization concept of FEM results in MR. The conglomerate elements are colored according to the value of the first stress invariant (P), ranging from light blue (near zero) to yellow (close to 2 MPa).

Figure 23.

“Cadireta” (Montserrat): visualization concept of FEM results in MR. The elements are colored according to the value of the displacement, ranging from blue (zero) to red (close to 12 mm).

Figure 24.

“Cadireta” (Montserrat): visualization concept of FEM results in MR in the two siltstone layers. The elements are colored according to the value of the first stress invariant (P), ranging from light blue (near zero) to red (close to 4.8 MPa).
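The color-coded fields in Figures 22 to 24 can be outlined in code. This is a minimal sketch under stated assumptions, not Code_Bright's post-processing or the ADA Platform's rendering: the stress tensor is assumed to be a 3×3 nested list in MPa, the ramp is a plain linear interpolation between two RGB colors, and the names `first_invariant`, `mean_stress` and `color_ramp` are hypothetical. The source does not state whether the plotted quantity (P) is the trace of the stress tensor or the mean stress (one third of the trace), so both are shown.

```python
from typing import Sequence, Tuple

Tensor = Sequence[Sequence[float]]
RGB = Tuple[int, int, int]

BLUE: RGB = (0, 0, 255)
RED: RGB = (255, 0, 0)


def first_invariant(sigma: Tensor) -> float:
    """First invariant of a 3x3 stress tensor: its trace."""
    return sigma[0][0] + sigma[1][1] + sigma[2][2]


def mean_stress(sigma: Tensor) -> float:
    """Mean stress, i.e. one third of the first invariant."""
    return first_invariant(sigma) / 3.0


def color_ramp(value: float, vmin: float, vmax: float,
               low: RGB, high: RGB) -> RGB:
    """Map a scalar to a color by linear interpolation, clamped to [vmin, vmax]."""
    if vmax <= vmin:
        raise ValueError("vmax must be greater than vmin")
    t = (value - vmin) / (vmax - vmin)
    t = min(1.0, max(0.0, t))
    r, g, b = (round(a + t * (c - a)) for a, c in zip(low, high))
    return (r, g, b)


# Illustrative values only (not results from the Cadireta model).
sigma = [[1.0, 0.2, 0.0],
         [0.2, 2.0, 0.0],
         [0.0, 0.0, 3.0]]
print(first_invariant(sigma), mean_stress(sigma))

# Displacement ramp with the 0 to 12 mm range quoted for Figure 23.
print(color_ramp(3.0, 0.0, 12.0, BLUE, RED))
```

Clamping keeps out-of-range values at the end colors, so a single outlier element does not wrap around or distort the legend.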

To summarize, the 3D models of the different cliffs of Montserrat have proved to be a fundamental aid in understanding the relationship between the geometry, the properties of the massif and its state of equilibrium. This is of paramount importance for managing the rockfall hazard to the vulnerable elements at risk (monastery, road, car park, rack railway and trails, visible in Figure 18), as a first step towards carrying out corrective measures to mitigate the risk.

4. Conclusions

A number of conclusions can be derived from this paper. In this concluding section, it is worth highlighting the complementary vision of the authors, who come from three different worlds (consultancy, administration and academia).

Geotechnical and geological data, like nature itself, are complex, multilayered, and 3D. The global picture suffers a great loss if we compress it into 2D drawings and onto flat screens.

XR (MR, VR or AR) facilitates the understanding and communication of this complexity, especially in underground projects, in a more natural way. XR technology is developed enough to be used in geosciences for data collection, interpretation and communication.

Regardless of the functionalities of the different HMDs, any of these solutions allows the investigation of 3D models by several users, collaboratively, in the office environment or in the field, together or remotely.

Most of the results presented here are based on Microsoft’s HoloLens 2 holographic glasses and Clirio’s software platform; thus, we can state that this solution is working properly for the aforementioned geovisualization goal. On the other hand, there is plenty of room for new solutions with other apps, or to develop specific solutions through the Unity3D programming environment.

The last point links with the educational possibilities of MR technology (Figure 8, Figure 9, Figure 15 and Figure 16). Students have the opportunity to navigate, in immersive mode and/or from a “bird’s eye” point of view, through virtual geological landscapes, such as volcanic areas, remote geosites in the jungle or on glaciers, underground caverns, inaccessible cliffs and others. They can also inspect virtual rock samples from around the world, reconstructed using state-of-the-art photogrammetry techniques [41]. Ref. [45], after nine immersive virtual reality-based events and 459 questionnaires, reports that the majority of geoeducation subjects, both academics and students, confirmed the usefulness of the XR approach for geovisualization in geoscience education. At the UPC, after 76 pilot HoloLens sessions involving more than 300 students and 30 academics, we can confirm this general, very positive feedback from the attendees, as well as the broad range of possibilities of MR in education.

The future is open to an increase in applications in this field. However, the price of the HMD can preclude the adoption of this technology. Other societal changes, such as the metaverse concept, the evolution in the human–computer interaction technologies, or the adoption of the BIM paradigm, may help, eventually, in the more general introduction of some XR tools in the learning process.

Through a broad set of selected 3D models (most of them of the Montserrat massif), we have demonstrated the capabilities of MR for the geovisualization and dissemination of complex 3D information. It is worth noting the suitability of MR for risk communication to the final users and the community (Figure 8, Figure 16 and Figure 17).

A glimpse into the future can be found in [14,29]. We will experience improvements in MR HMDs, which will be lighter, smaller and cheaper, with more power and resolution. The apps will permit advanced data processing on site. The process between the acquisition and the final visualization will be progressively automated. Changes in the workflow in engineering geology practice are envisaged, both in field and office work, which will grow closer and merge. A goal for the future is to make it easy for non-specialists to visualize complex 3D information, allowing them to show it to other stakeholders.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/geosciences12100370/s1: Video S1, title: “Several 3D models viewed as holograms inside HoloLens with ADA Platform by Clirio (mixed reality)”. Video S2, title: “Inspecting the 3D terrain model of Montserrat massif (Spain), Degotalls area, using HoloLens and ADA Platform by Clirio (mixed reality)”.

Author Contributions

The individual contributions of the co-authors have been as follows: Conceptualization and methodology, M.J., J.R., J.A.G., G.M. and K.F.; Investigation and validation, M.J., J.R., J.A.G. and M.A.N.-A.; Resources, J.R., J.A.G., G.M., M.A.N.-A. and K.F.; Writing – original draft, M.J. and J.A.G.; Writing – review and editing, M.J., J.R., J.A.G., O.P., G.M., M.A.N.-A. and K.F.; Supervision, M.J., J.R. and J.A.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by MCIN/AEI/10.13039/501100011033: PID2019-103974RB-I00 and by Interreg V-A, POCTEFA: EFA364/19.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

Part of this work is being carried out in the framework of the research project “Advances in rockfall quantitative risk analysis (QRA), incorporating developments in geomatics (GeoRisk)” with reference code PID2019-103974RB-I00, funded by MCIN/AEI/10.13039/501100011033. Additionally, we acknowledge the contribution of the “PyrMove” project, “Prevention and cross-border management of risk associated with landslides” (Ref.EFA364/19), which is funded by Interreg V-A, POCTEFA, and by the European Regional Development Fund, ERDF. The first, third, fourth and sixth authors would like to thank BGC Engineering and Clirio Inc. for the technical support (software resources and know-how) provided during the realization of the models presented in this work. Finally, we acknowledge the work of two anonymous reviewers, who helped improve the original manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Janeras, M.; Navarro, M.; Arnó, G.; Ruiz, A.; Kornus, W.; Talaya, J.; Barberà, M.; López, F. LiDAR applications to rock fall hazard assesment in Vall de Núria. In Proceedings of the 4th Mountain Cartography Workshop, Catalonia, Spain, 30 September–2 October 2004; International Cartographic Association, ICA: Bern, Switzerland, 2004.

- Buckley, S.J.; Howell, J.A.; Enge, H.D.; Kurz, T.H. Terrestrial laser scanning in geology: Data acquisition, processing and accuracy considerations. J. Geol. Soc. 2008, 165, 625–638.

- Derron, M.H.; Jaboyedoff, M. LIDAR and DEM techniques for landslides monitoring and characterization. Nat. Hazards Earth Syst. Sci. 2010, 10, 1877–1879.

- Jaboyedoff, M.; Oppikofer, T.; Abellán, A.; Derron, M.-H.; Loye, A.; Metzger, R.; Pedrazzini, A. Use of LIDAR in landslide investigations: A review. Nat. Hazards 2012, 61, 5–28.

- Santana, D.; Corominas, J.; Mavrouli, O.; Garcia-Sellés, D. Magnitude-frequency relation for rockfall scars using a Terrestrial Laser Scanner. Eng. Geol. 2012, 145, 50–64.

- Bemis, S.P.; Micklethwaite, S.; Turner, D.; James, M.R.; Akciz, S.; Thiele, S.T.; Bangash, H.A. Ground-based and UAV-based photogrammetry: A multi-scale, high-resolution mapping tool for structural geology and paleoseismology. J. Struct. Geol. 2014, 69, 163–178.

- Riquelme, A.; Abellán, A.; Tomás, R. Discontinuity spacing analysis in rock masses using 3D point clouds. Eng. Geol. 2015, 195, 185–195.

- Abellan, A.; Derron, M.H.; Jaboyedoff, M. “Use of 3D point clouds in geohazards” special issue: Current challenges and future trends. Remote Sens. 2016, 8, 130.

- Williams, J.; Rosser, N.; Hardy, R.; Brain, M.; Afana, A. Optimising 4-D surface change detection: An approach for capturing rockfall magnitude-frequency. Earth Surf. Dyn. 2018, 6, 101–119.

- Ruiz-Carulla, R.; Corominas, J.; Gili, J.A.; Matas, G.; Lantada, N.; Moya, J.; Prades, A.; Núñez-Andrés, M.A.; Buill, F.; Puig, C. Analysis of fragmentation of rock blocks from real-scale tests. Geosciences 2020, 10, 308.

- Pugsley, J.; Howell, J.; Hartley, A.; Buckley, S.; Brackenridge, R.; Schofield, N.; Maxwell, G.; Chmielewska, M.; Ringdal, K.; Naumann, N.; et al. Virtual Fieldtrips: Construction, delivery, and implications for future geological fieldtrips. Geosci. Commun. 2021.

- Westoby, M.J.; Brasington, J.; Glasser, N.F.; Hambrey, M.J.; Reynolds, J.M. “Structure-from-motion” photogrammetry: A low-cost, effective tool for geoscience applications. Geomorphology 2012, 179, 300–314.

- Briones-Bitar, J.; Carrión-Mero, P.; Montalván-Burbano, N.; Morante-Carballo, F. Rockfall research: A bibliometric analysis and future trends. Geosciences 2020, 10, 403.

- Onsel, E.I.; Stead, D.; Barnett, W.; Zorzi, L.; Shaban, A. Innovative mixed reality approach to rock mass mapping in underground mining. In MassMin 2020: Proceedings of the Eighth International Conference & Exhibition on Mass Mining; Castro, R., Báez, F., Suzuki, K., Eds.; University of Chile: Santiago, Chile, 2020; pp. 1375–1383.

- Pedraza, O.; Janeras, M.; Gili, J.A.; Struth, L.; Buill, F.; Guinau, M.; Ferré, A.; Roca, J. Comunicación de la Geoinformación 3D Mediante Visores Web y Entornos Inmersivos de Realidad Mixta en Problemas de Taludes Y Laderas. In X Simposio Nacional sobre Taludes y Laderas Inestables Granada; Hürlimann, M., Pinyol, N., Eds.; CIMNE: Barcelona, Spain, 2022.

- Roca, J. Webinar-Mixed Reality Tools for Visualization of Complex Spatial Data in Geomechanics. Video Material, Duration 45:14. 2022. Available online: https://www.youtube.com/channel/UC-WCRiWoes7q-J0_nMqPmUg/featured (accessed on 31 July 2022).

- Franklin, K.A.; MacInnis, C.R.; Roca, J.; Magnusson, G.R.; Burton, B.T.J.; Enos, R.N. Holographic models of closure landscapes for stakeholder engagement—When you need more than words and pictures. In Proceedings of the 15th International Conference on Mine Closure ‘Mine Closure 2022’, Brisbane, Australia, 4–6 October 2022; p. 12.

- Li, I.; Lato, M.; Magnusson, G.; Roca, J.; Reid, E. Augmented Reality and Applied Augmented Reality and Applied Earth Science: A New Tool for Site Characterization. Vancouver Geotechnical Society Symposium, 2019. Available online: http://v-g-s.ca/2019-proceedings (accessed on 31 July 2022).

- Milgram, P.; Takemura, H.; Utsumi, A.; Kishino, F. Augmented Reality: A class of displays on the reality-virtuality continuum. In Proceedings of Telemanipulator and Telepresence Technologies; International Society for Optics and Photonics: Bellingham, WA, USA, 1994; Volume 2351, pp. 282–292.

- Milgram, P.; Colquhoun, H., Jr. A Taxonomy of Real and Virtual World Display Integration. In Mixed Reality: Merging Real and Virtual Worlds; Springer: New York, NY, USA; Berlin/Heidelberg, Germany, 1999; pp. 5–30.

- Carrasco, M.D.O.; Chen, P.H. Application of mixed reality for improving architectural design comprehension effectiveness. Autom. Constr. 2021, 126, 103677.

- Fast-Berglund, Å.; Liang, G.; Li, D. Testing and validating Extended Reality (xR) technologies in Manufacturing. Procedia Manuf. 2018, 25, 31–38.

- Stead, D. Rock Slope Engineering: A combined Remote Sensing-Numerical Modelling Approach. In Proceedings of the 34th ISRM, online Lecture, 24 June 2021; Available online: https://isrm.net/isrm/page/show/1588 (accessed on 31 July 2022).

- Li, X.; Yi, W.; Chi, H.; Wang, X.; Chan, A. A critical review of virtual and augmented reality (VR/AR) applications in construction safety. Autom. Constr. 2018, 86, 150–162.

- Mora-Serrano, J.; Muñoz-La Rivera, F.; Valero, I. Factors for the Automation of the Creation of Virtual Reality Experiences to Raise Awareness of Occupational Hazards on Construction Sites. Electronics 2021, 10, 1355.

- Janusz, J. Toward the New Mixed Reality Environment for Interior Design. In Proceedings of the IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2019; Volume 471, p. 102065.

- Zhang, Y.; Liu, H.; Kang, S.C.; Al-Hussein, M. Virtual reality applications for the built environment: Research trends and opportunities. Autom. Constr. 2020, 118, 103311.

- La Rivera, F.M.; Mora-Serrano, J.; Oñate, E. Virtual reality stories for construction training scenarios: The case of social distancing at the construction site. WIT Trans. Built Environ. 2021, 205, 37–47.

- Bopp, M.J.; La Rivera, F.M.; Sierra-Martí, C.; Mora-Serrano, J. Automating the Creation of VR Experiences as Learning Pills for the Construction Sector. In Proceedings of the 6th International Conference of Educational Innovation in Building, CINIE, Surabaya, Indonesia, 10 September 2022; p. 13.

- Frederiksen, J.G.; Sørensen, S.M.D.; Konge, L.; Svendsen, M.B.S.; Nobel-Jørgensen, M.; Bjerrum, F.; Andersen, S.A.W. Cognitive load and performance in immersive virtual reality versus conventional virtual reality simulation training of laparoscopic surgery: A randomized trial. Surg. Endosc. 2020, 34, 1244–1252.

- Vasudevan, M.K.; Isaac, J.H.R.; Sadanand, V.; Muniyandi, M. Novel virtual reality based training system for fine motor skills: Towards developing a robotic surgery training system. Int. J. Med. Robot. 2020, 16, 1–14.

- Tschirschwitz, F.; Richerzhagen, C.; Przybilla, H.J.; Kersten, T.P. Duisburg 1566: Transferring a Historic 3D City Model from Google Earth into a Virtual Reality Application. PFG J. Photogramm. Remote Sens. Geoinf. Sci. 2019, 87, 47–56.

- Edler, D.; Keil, J.; Wiedenlübbert, T.; Sossna, M.; Kühne, O.; Dickmann, F. Immersive VR Experience of Redeveloped Post-industrial Sites: The Example of “Zeche Holland” in Bochum-Wattenscheid. KN J. Cartogr. Geogr. Inf. 2019, 69, 267–284.

- Onsel, I.E.; Chang, O.; Mysiorek, J.; Donati, D.; Stead, D.; Barnett, W.; Zorzi, L. Applications of mixed and virtual reality techniques in site characterization. In Proceedings of the 26th Vancouver Geotechnical Society Symposium, Vancouver, BC, Canada, 31 May 2019.

- Mysiorek, J.; Onsel, I.E.; Stead, D.; Rosser, N. Engineering geological characterization of the 2014 Jure Nepal landslide: An integrated field, remote sensing-Virtual/Mixed Reality approach. In Proceedings of the 53rd US Rock Mechanics/Geomechanics Symposium, New York, NY, USA, 23–26 June 2019; OnePetro: Richardson, TX, USA, 2019.

- Lato, M. Canadian Geotechnical Colloquium: Three-dimensional remote sensing, four-dimensional analysis and visualization in geotechnical engineering—State of the art and outlook. Can. Geotech. J. 2021, 58, 1065–1076.

- Anderson, S. We All Saw It the Same Way, Video Material, Duration 14:23. 2019. Available online: https://youtu.be/lkY-B_weRaA (accessed on 31 July 2022).

- BGC. BGC Engineering Inc Brings Giant Mine Project to Life in 3D with the ADA Platform. Video Material, Duration 1:35. 2018. Available online: https://youtu.be/6yNj3U105Oo (accessed on 31 July 2022).

- BGC. Bring Your Field to the Office. Video Material, Duration 2:14. 2021. Available online: https://youtu.be/hiN8mnvWl7s (accessed on 31 July 2022).

- Janiszewski, M.; Uotinen, L.; Merkel, J.; Leveinen, J.; Rinne, M. Virtual Reality learning environments for rock engineering, geology and mining education. In Proceedings of the 54th US Rock Mechanics/Geomechanics Symposium, Golden, CO, USA, 28 June–1 July 2020; OnePetro: Richardson, TX, USA, 2020.

- Pratt, M.J.; Skemer, P.A.; Arvidson, R.E. Developing an Augmented Reality Environment for Earth Science Education. In Proceedings of the 2017 AGU Fall Meeting Abstracts, New Orleans, LA, USA, 11–15 December 2017; Volume 2017, p. ED11C-0133. Available online: https://ui.adsabs.harvard.edu/abs/2017AGUFMED11C0133P/abstract (accessed on 31 July 2022).

- Rienow, A.; Lindner, C.; Dedring, T.; Hodam, H.; Ortwein, A.; Schultz, J.; Selg, F.; Staar, K.; Jürgens, C. Augmented reality and virtual reality applications based on satellite-borne and ISS-borne remote sensing data for school lessons. PFG J. Photogramm. Remote Sens. Geoinformation Sci. 2020, 88, 187–198.

- Lindner, C.; Rienow, A.; Otto, K.-H.; Juergens, C. Development of an App and Teaching Concept for Implementation of Hyperspectral Remote Sensing Data into School Lessons Using Augmented Reality. Remote Sens. 2022, 14, 791.

- Leonard, S.N.; Fitzgerald, R.N. Holographic learning: A mixed reality trial of Microsoft HoloLens in an Australian secondary school. Res. Learn. Technol. 2018, 26, 2160.

- Bonali, F.L.; Russo, E.; Vitello, F.; Antoniou, V.; Marchese, F.; Fallati, L.; Bracchi, V.; Corti, N.; Savini, A.; Whitworth, M.; et al. How Academics and the Public Experienced Immersive Virtual Reality for Geo-Education. Geosciences 2022, 12, 9.

- GeoRisk R&D Project. Available online: https://georisk.upc.edu/en (accessed on 31 July 2022).

- Pyrmove R&D Project. Available online: https://pyrmove.eu/ (accessed on 31 July 2022).

- Anderson, S.A.; Klopfer, R. Going Everywhere with Your Digital Twin. Shared Vision Through Portable, Immersive, 3D Visualizations. Geostrata Magazine. August/September 2022. Available online: www.geoinstitute.org (accessed on 24 September 2022).

- Build Wagon. What Happened to the Microsoft HoloLens? 2022. Available online: https://www.buildwagon.com/What-happened-to-the-Hololens.html (accessed on 31 July 2022).

- Microsoft. “HoloLens 2: Get to know the New Features and Technical Specs”. 6 April 2020. Available online: https://www.microsoft.com/en-us/HoloLens/hardware (accessed on 31 July 2022).

- Unity Technologies. Unity-Real-Time Development Platform |3D, 2D VR & AR. Unity Technologies, 2022. Available online: https://unity.com/ (accessed on 31 July 2022).

- ADA Platform. Available online: https://www.adaplatform.io/products/ (accessed on 31 July 2022).

- Clirio Inc. Available online: https://clir.io/product (accessed on 31 July 2022).

- Giant Mine. Available online: https://en.wikipedia.org/wiki/Giant_Mine (accessed on 31 July 2022).

- St-Pierre, S. Bringing Remediation to the Public. A New App Provides 3D Engagement for the Giant Mine Remediation Project. Can. Inst. Min. Metall. Pet. 2022, 17. Available online: https://magazine.cim.org/en/news/2022/bringing-remediation-to-the-public-en/ (accessed on 31 July 2022).

- Gili, J.A.; Ruiz-Carulla, R.; Matas, G.; Moya, J.; Prades, A.; Corominas, J.; Lantada, N.; Núñez-Andrés, M.A.; Buill, F.; Puig, C.; et al. Rockfalls: Analysis of the block fragmentation through field experiments. Landslides 2022, 19, 1009–1029.

- Matas, G.; Lantada, N.; Corominas, J.; Gili, J.; Ruiz-Carulla, R.; Prades, A. Simulation of full-scale rockfall tests with a fragmentation model. Geosciences 2020, 10, 168.

- Alsaker, E.; Gabrielsen, R.H.; Roca, E. The significance of the fracture patterns of Late-Eocene Montserrat fan-delta, Catalan Coastal Ranges (NE Spain). Tectonophysics 1996, 266, 465–491.

- Janeras, M.; Jara, J.A.; Royán, M.J.; Vilaplana, J.M.; Aguasca, A.; Fàbregas, X.; Gili, J.A.; Buxó, P. Multitechnique approach to rockfall monitoring in the Montserrat massif (Catalonia, NE Spain). Eng. Geol. 2017, 219, 4–20.

- Cloud Compare. Available online: http://www.cloudcompare.org/ (accessed on 31 July 2022).

- CODE_BRIGHT. Available online: https://deca.upc.edu/en/projects/code_bright (accessed on 31 July 2022).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).