Development of a Tool to Manipulate Flexible Pieces in the Industry: Hardware and Software

Abstract

1. Background

1.1. Industry Automatization

1.2. Handling of Flexible Pieces in the Industry

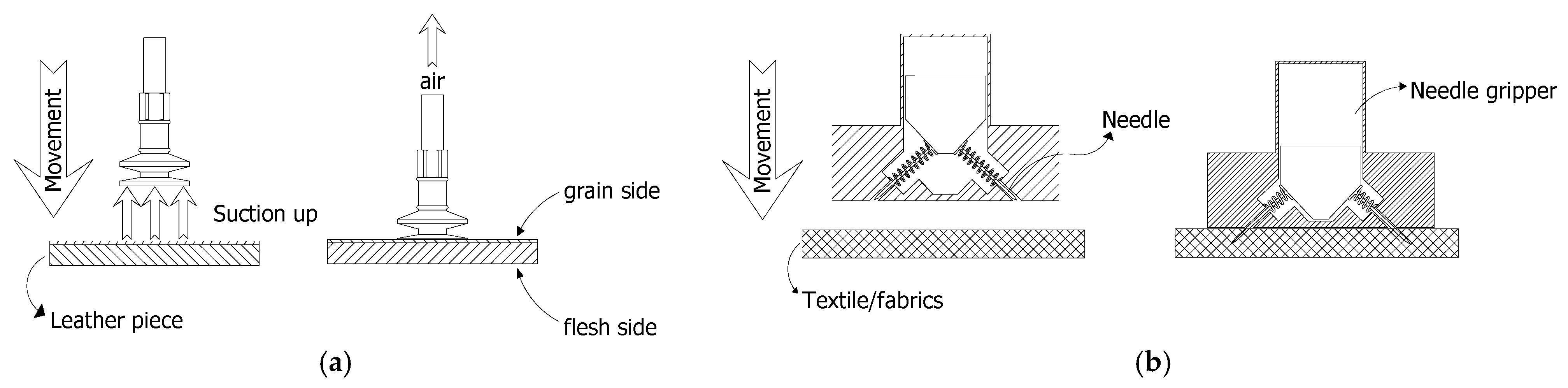

1.3. Actuators in the Leather and Textile Fashion Industry

2. Design and Prototyping of the Suction Cups Array Actuator

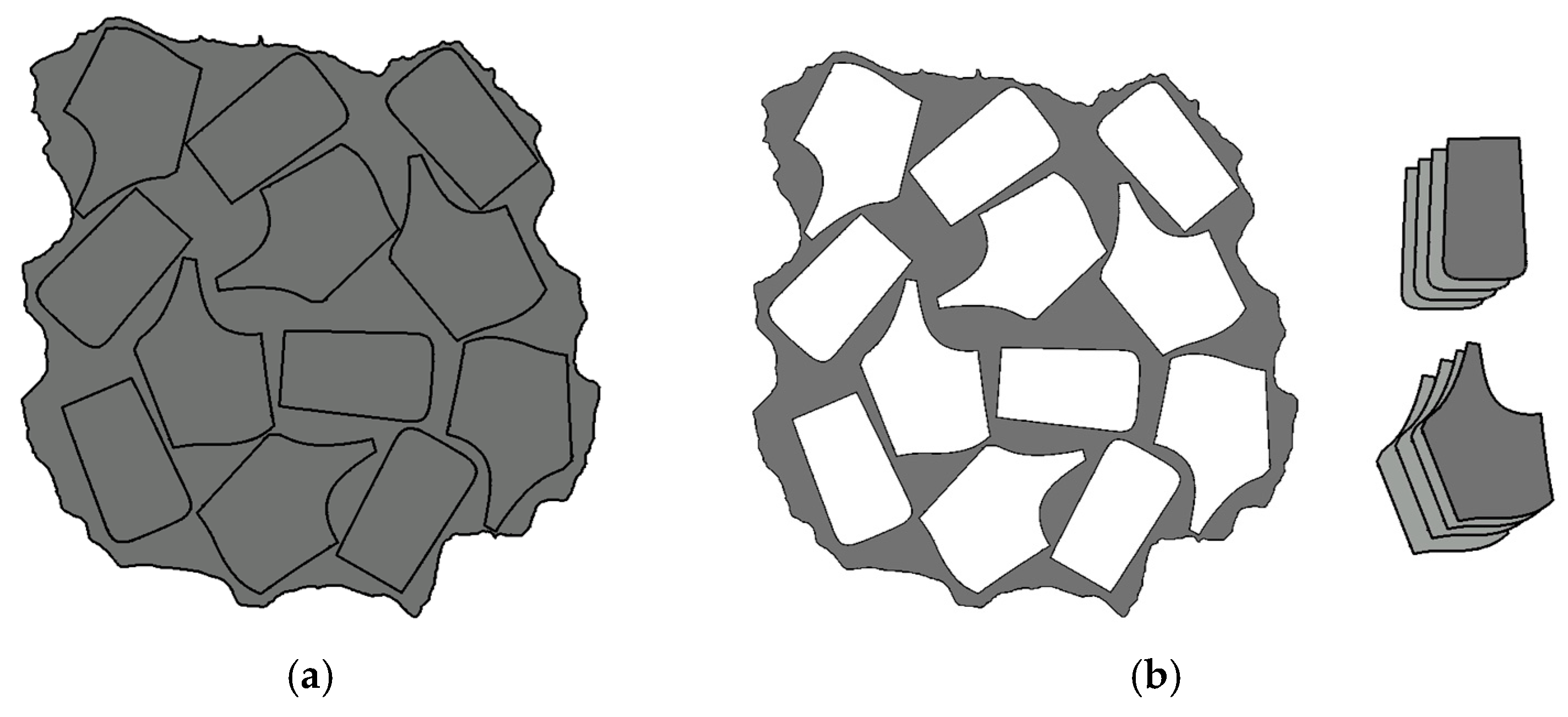

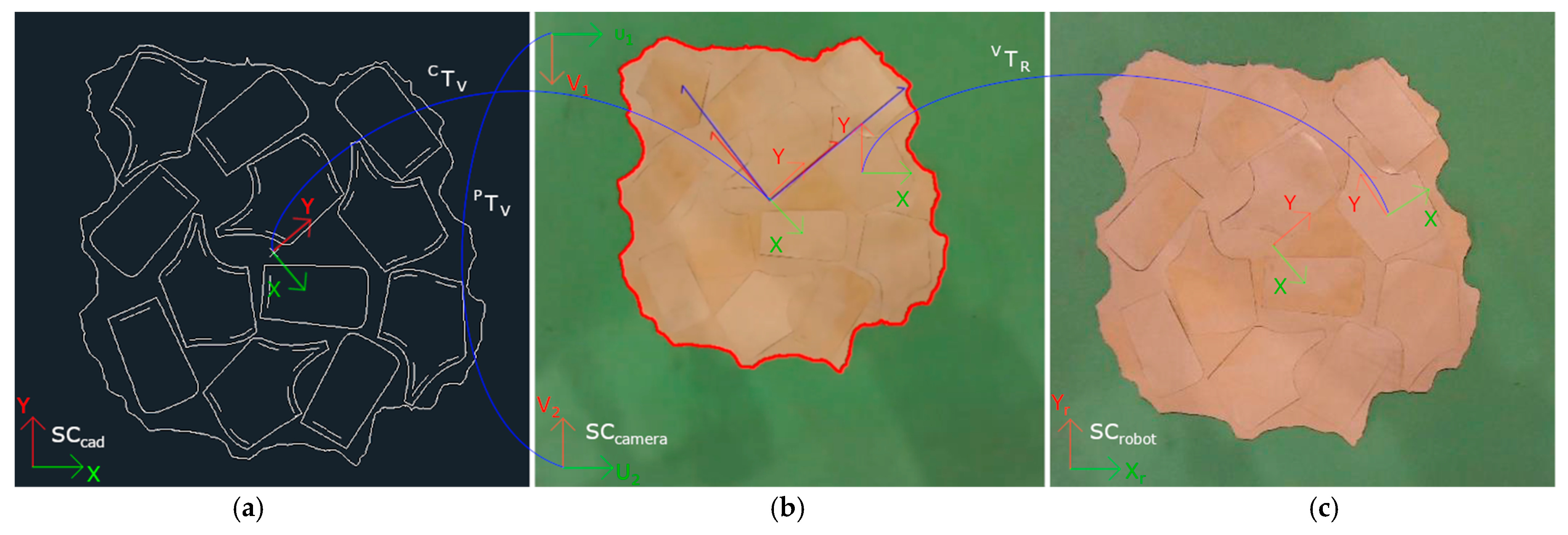

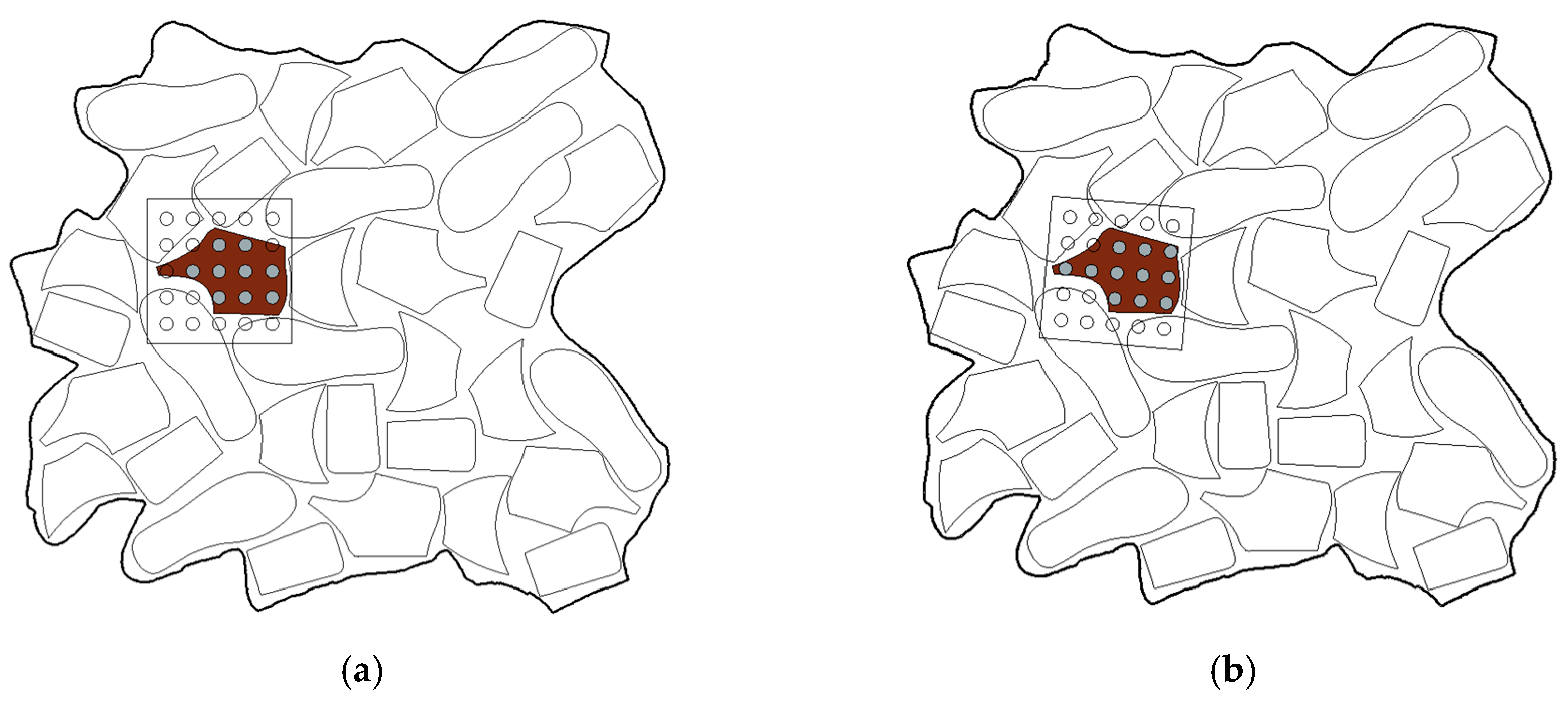

2.1. The Nesting Process and Pieces Location

2.2. The Actuator: Suction Cups Array Actuator

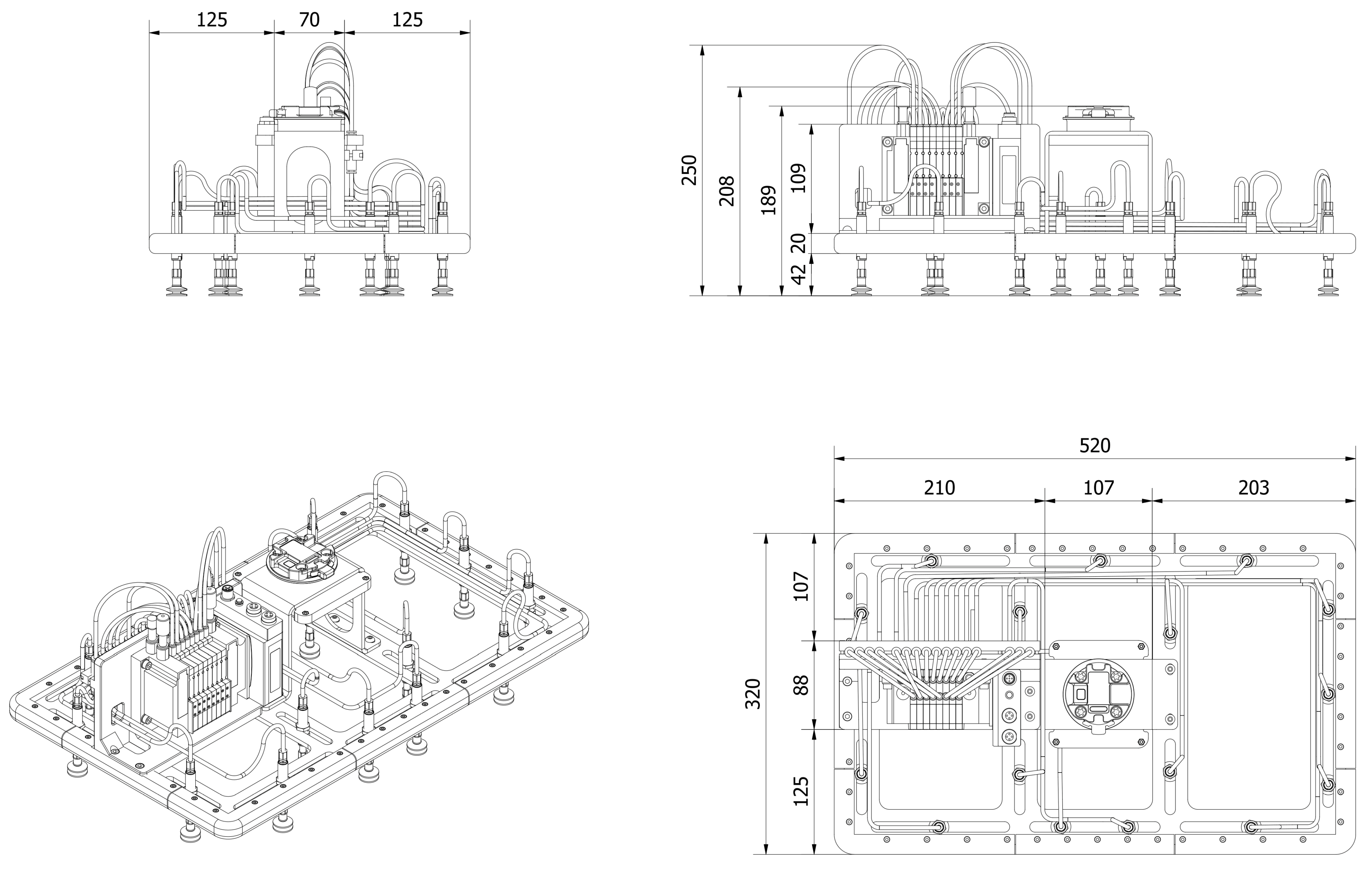

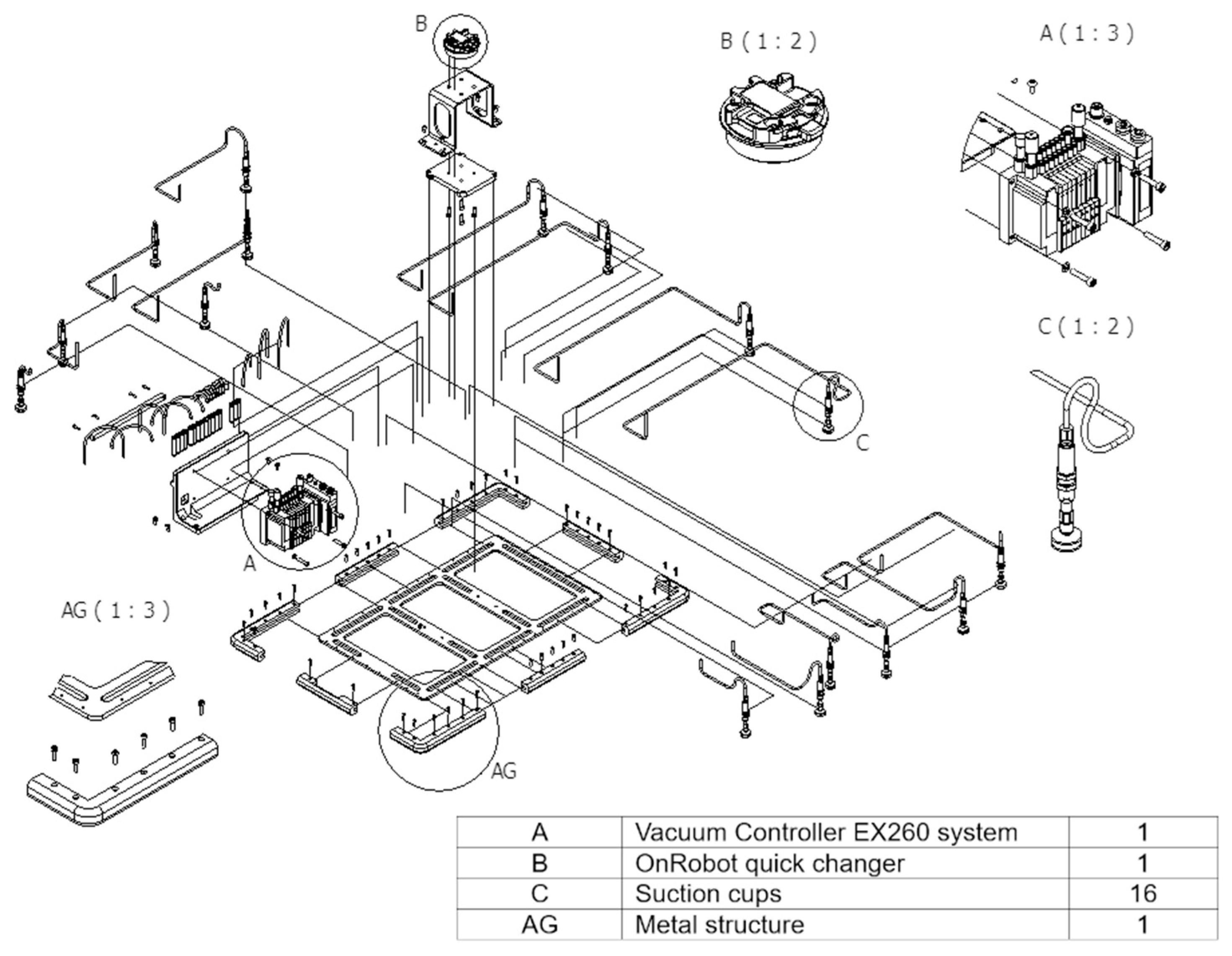

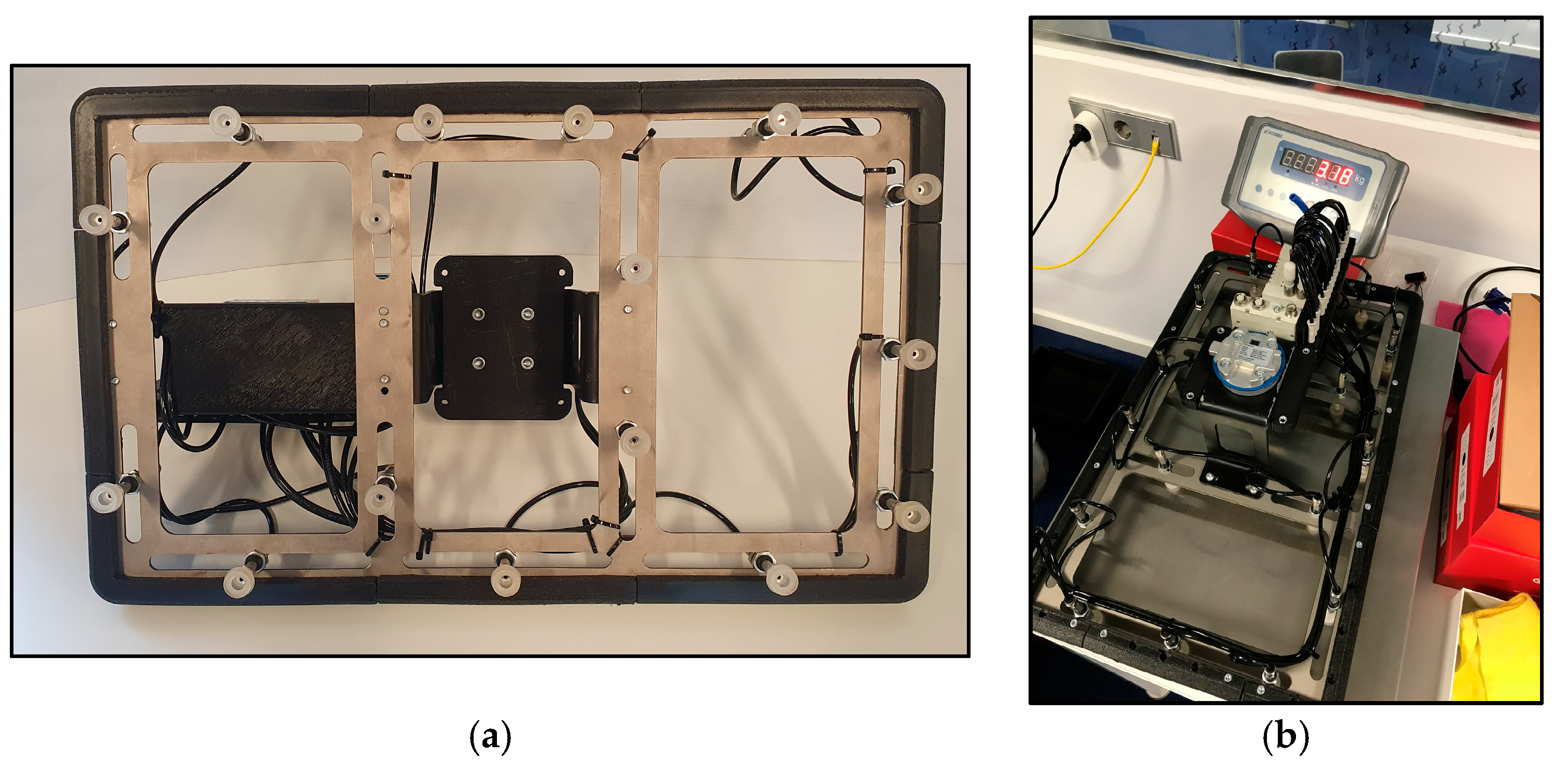

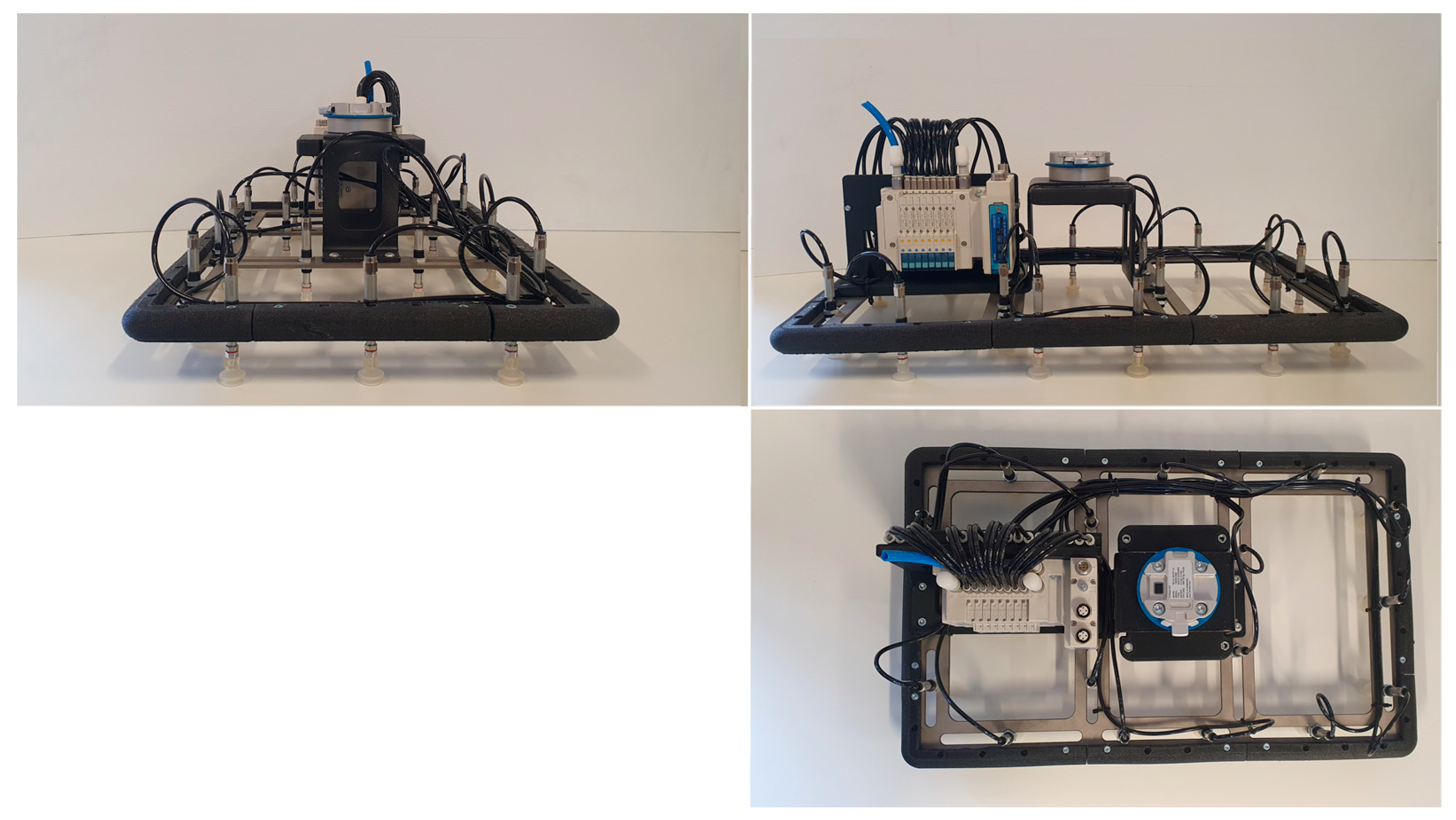

2.2.1. Implementation of a Suction Cups Array Actuator: Hardware

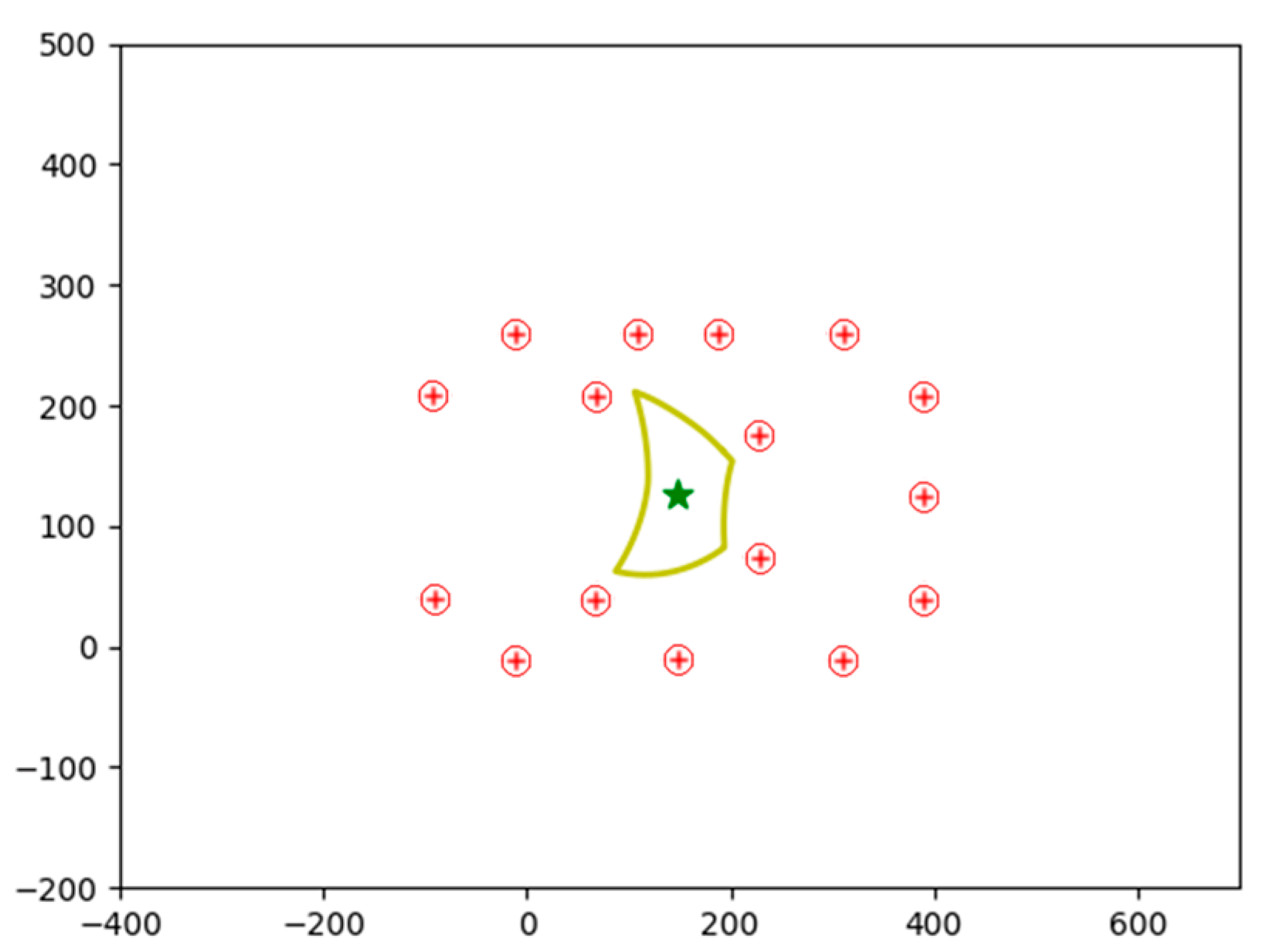

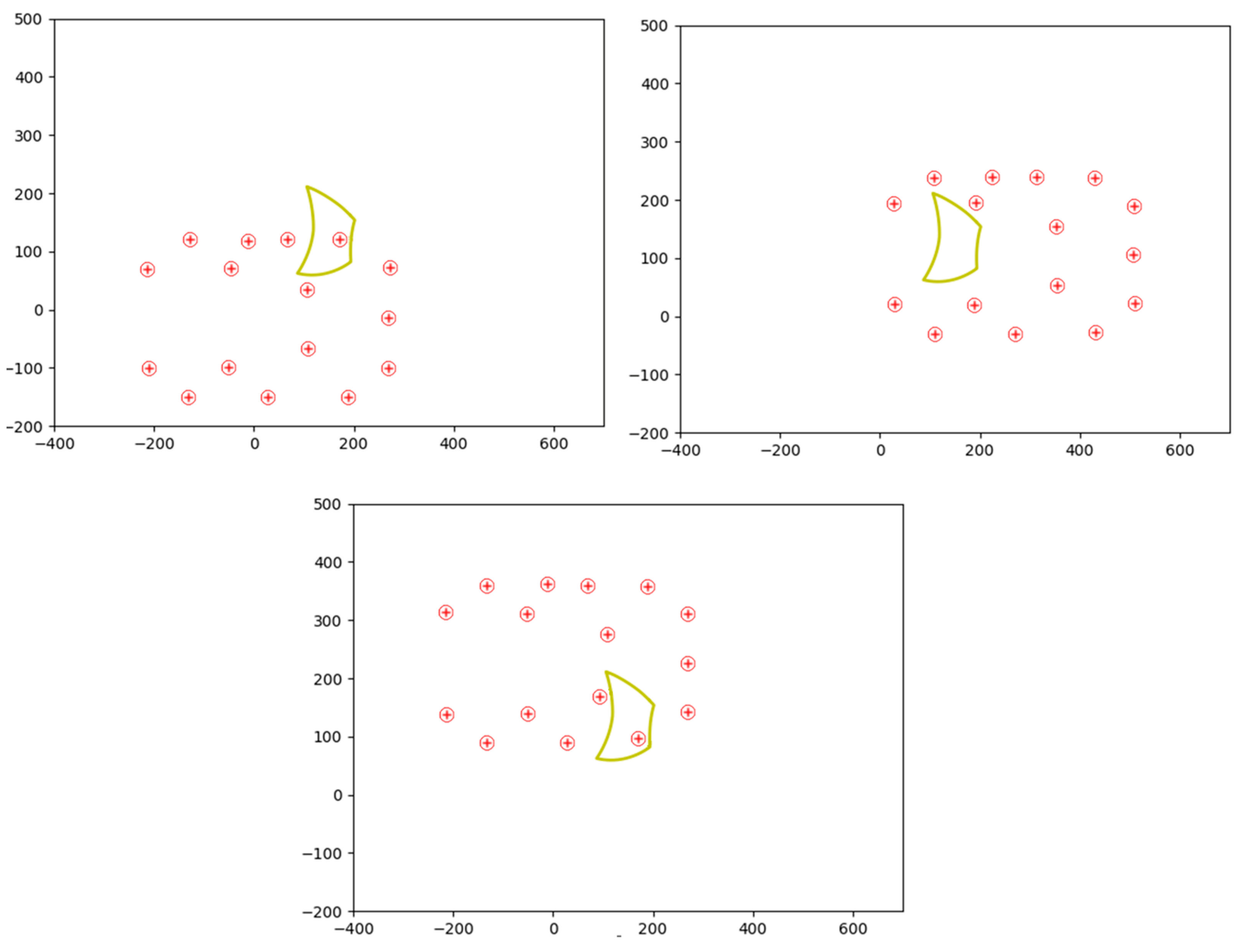

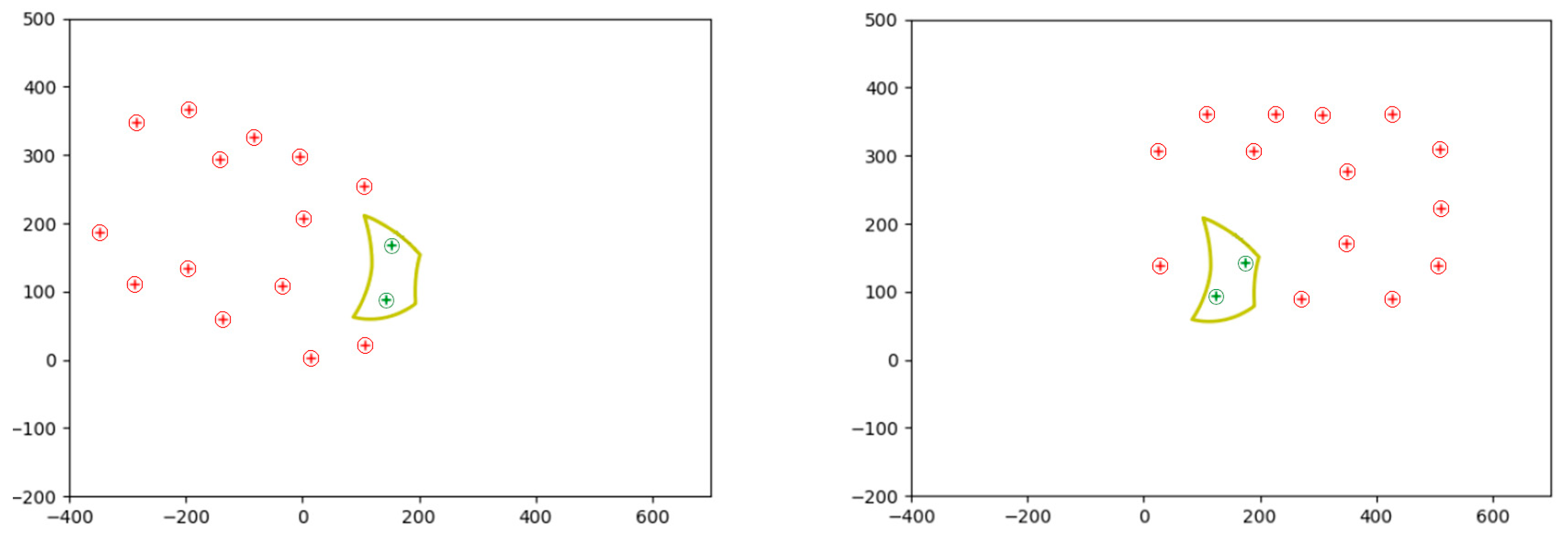

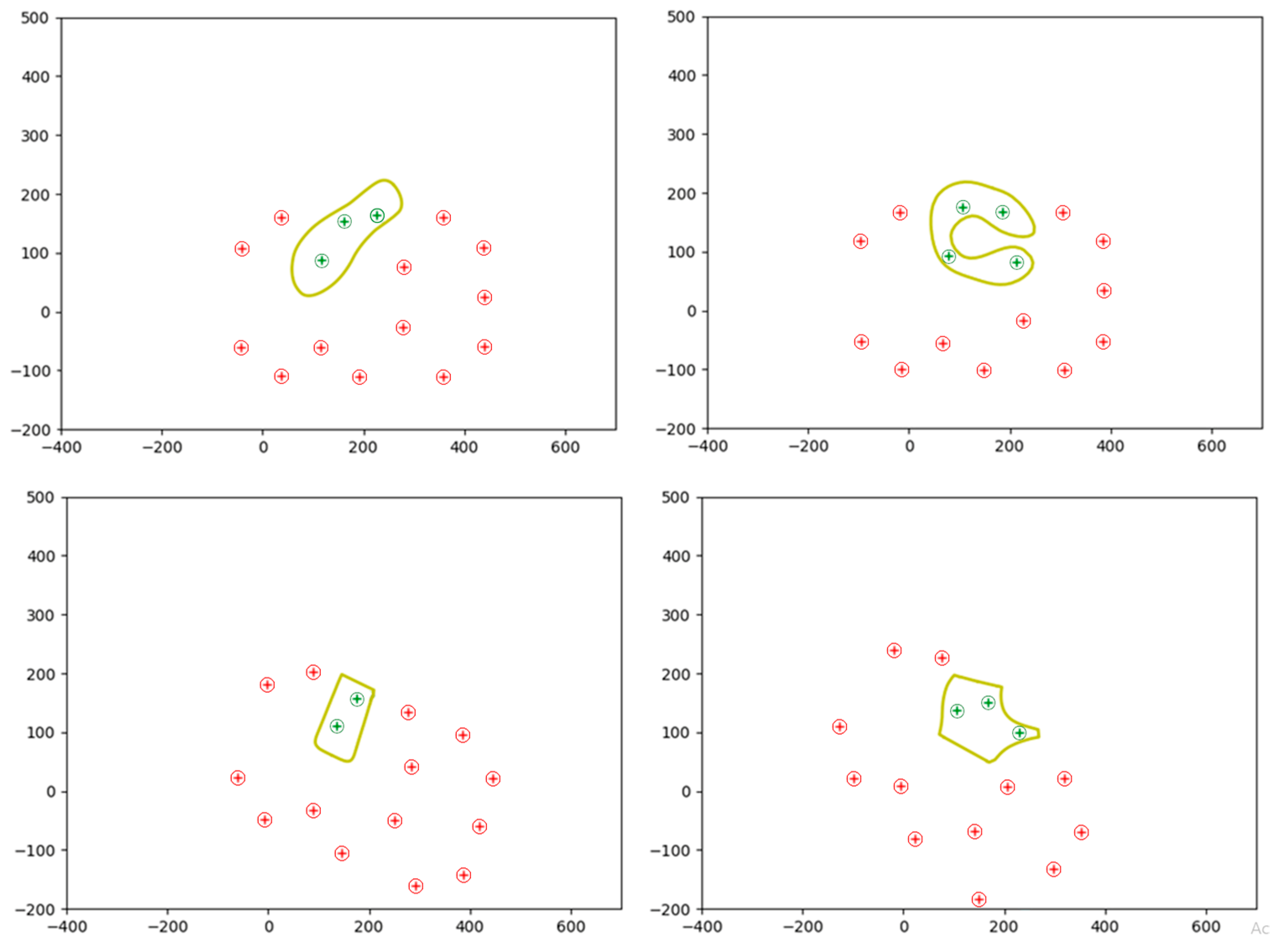

2.2.2. Optimized Use of the Tool: Software 1.0

- ezdxf 1.0.3: A Python interface to DXF, the drawing exchange format developed by Autodesk. It allows developers to read and modify existing DXF documents or to create new ones [31].

- Math 3.2: A module that provides access to the mathematical functions defined by the C standard. These include number-theoretic and representation functions, power and logarithmic functions, trigonometric functions, hyperbolic functions, special functions, and mathematical constants [32].

- Matplotlib 3.8.1: A comprehensive library designed for generating static, animated, and interactive visualizations in Python [33].

- NumPy 1.26.1: A library that defines a data type for multidimensional arrays, together with basic functions for operating on them. It is a stable and fast library [34].

- Shapely 2.0.1: A Python package for set-theoretic analysis and manipulation of planar features, built on the GEOS library [35].
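
As a rough illustration of the kind of planar-geometry computation these libraries support, the sketch below decides which cups of a rectangular suction-cup array lie fully over a piece contour. It is not the code of the tool: the grid layout, pitch, cup radius, example contour, and all function names are assumptions made for illustration. To stay free of dependencies it uses only the standard `math` module and a ray-casting test; with Shapely, a comparable check could be expressed as `Polygon(contour).contains(Point(x, y).buffer(radius))`, and the contour itself would typically be read from a DXF file rather than hard-coded.

```python
import math


def point_in_polygon(x, y, contour):
    """Ray-casting test: True if (x, y) lies inside the closed contour.

    Points exactly on the boundary are not handled specially.
    """
    inside = False
    n = len(contour)
    for i in range(n):
        x1, y1 = contour[i]
        x2, y2 = contour[(i + 1) % n]
        # Does a horizontal ray going right from (x, y) cross this edge?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def distance_to_segment(x, y, p, q):
    """Shortest distance from (x, y) to the segment p-q."""
    (x1, y1), (x2, y2) = p, q
    dx, dy = x2 - x1, y2 - y1
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return math.hypot(x - x1, y - y1)
    # Projection parameter of the point onto the segment, clamped to [0, 1].
    t = max(0.0, min(1.0, ((x - x1) * dx + (y - y1) * dy) / length_sq))
    return math.hypot(x - (x1 + t * dx), y - (y1 + t * dy))


def cup_fits(x, y, radius, contour):
    """A cup fits if its centre is inside and its rim stays within the contour."""
    if not point_in_polygon(x, y, contour):
        return False
    n = len(contour)
    return all(
        distance_to_segment(x, y, contour[i], contour[(i + 1) % n]) >= radius
        for i in range(n)
    )


def active_cups(contour, rows, cols, pitch, radius):
    """Return (row, col) indices of the cups lying fully over the piece."""
    return [
        (r, c)
        for r in range(rows)
        for c in range(cols)
        if cup_fits(c * pitch, r * pitch, radius, contour)
    ]


# Hypothetical 100 mm x 60 mm rectangular piece and a 4 x 6 array at 20 mm pitch.
piece = [(0.0, 0.0), (100.0, 0.0), (100.0, 60.0), (0.0, 60.0)]
cups = active_cups(piece, rows=4, cols=6, pitch=20.0, radius=5.0)
print(cups)
```

In this example only the cups whose full footprint lies inside the rectangle are selected; cups centred on the edge of the piece are rejected because their rim would overhang the contour. The ray-casting routine is a stand-in chosen to keep the sketch self-contained, not a statement about how the tool performs this check.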

2.3. Contour Scanning

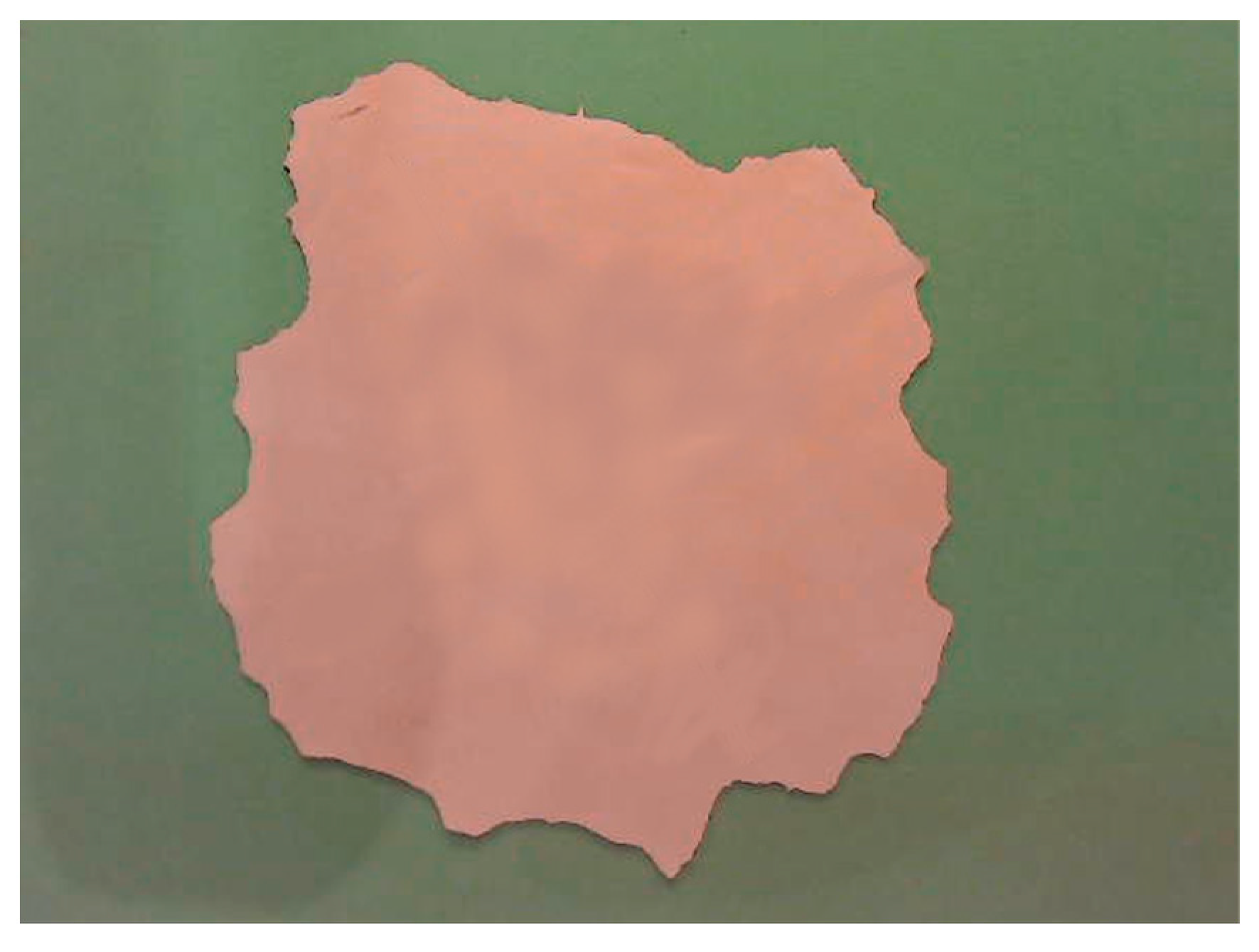

2.4. Computer Vision System

3. Results and Discussion

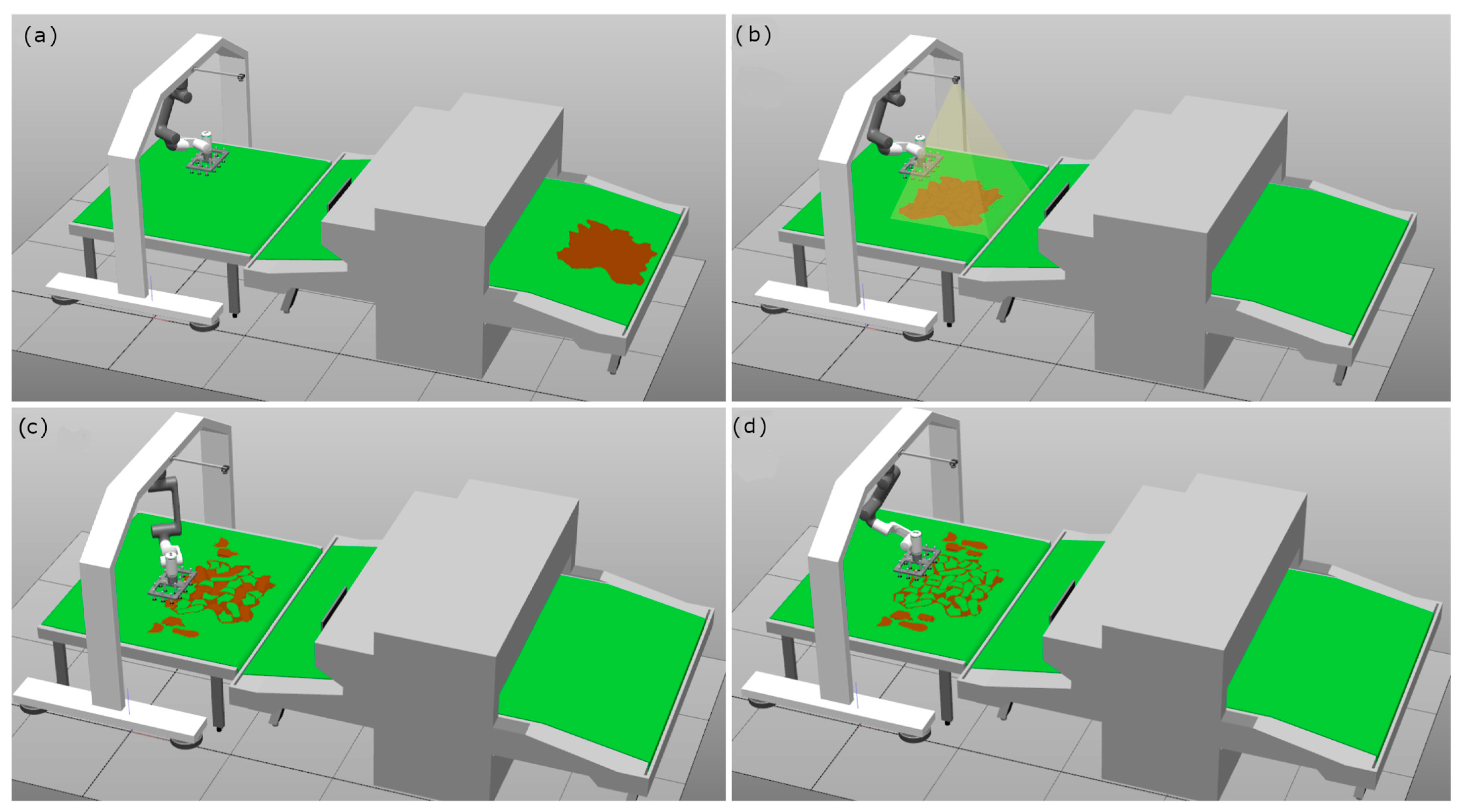

3.1. Simulation Results

3.2. Real Tests

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Brynzér, H.; Johansson, M. Design and performance of kitting and order picking systems. Int. J. Prod. Econ. 1995, 41, 115–125.

- Hanson, R.; Medbo, L. Kitting and time efficiency in manual assembly. Int. J. Prod. Res. 2012, 50, 1115–1125.

- Hanson, R.; Brolin, A. A comparison of kitting and continuous supply in in-plant materials supply. Int. J. Prod. Res. 2013, 51, 979–992.

- Méndez, J.B.; Cremades, D.; Nicolas, F.; Perez-Vidal, C.; Segura-Heras, J.V. Conceptual and Preliminary Design of a Shoe Manufacturing Plant. Appl. Sci. 2021, 11, 11055.

- Borrell, J.; González, A.; Perez-Vidal, C.; Gracia, L.; Solanes, J.E. Cooperative human–robot polishing for the task of patina growing on high-quality leather shoes. Int. J. Adv. Manuf. Technol. 2023, 125, 2467–2484.

- Smith, A.; Johnson, B.; Brown, C. Robotic Automation in Textile Industry: Advancements and Benefits. J. Text. Eng. Fash. Technol. 2020, 6, 110–112.

- Lee, S.; Kim, H. Precision Sewing with Computer-Aided Robotic Systems in Textile Manufacturing. Int. J. Robot. Autom. 2019, 34, 209–218.

- Wang, Y.; Liu, H.; Zhang, Q. IoT-Based Inventory Management in Textile Manufacturing: A Case Study. J. Ind. Eng. Manag. 2021, 14, 865–878.

- García, L.; Pérez, M.; Rodríguez, J. Sustainability Benefits of Automation in the Textile Industry. J. Sustain. Text. 2018, 5, 94–105.

- Chen, X.; Wu, Z.; Li, J. Robotic Pick-and-Place Systems in Textile Manufacturing: A Review of Efficiency and Quality Improvements. Robot. Autom. Rev. 2022, 18, 45–56.

- Mendez, J.B.; Perez-Vidal, C.; Heras, J.V.S.; Perez-Hernandez, J.J. Robotic Pick-and-Place Time Optimization: Application to Footwear Production. IEEE Access 2020, 8, 209428–209440.

- Borrell, J.; Perez-Vidal, C.; Segura, J.V. Optimization of the pick-and-place sequence of a bimanual collaborative robot in an industrial production line. Int. J. Adv. Manuf. Technol. 2024, 130, 4221–4234.

- Mateu-Gomez, D.; Martínez-Peral, F.J.; Perez-Vidal, C. Multi-Arm Trajectory Planning for Optimal Collision-Free Pick-and-Place Operations. Technologies 2024, 12, 12.

- Körber, M.; Glück, R. A Toolchain for Automated Control and Simulation of Robot Teams in Carbon-Fiber-Reinforced Polymers Production. Appl. Sci. 2024, 14, 2475.

- Jørgensen, T.B.; Jensen, S.H.N.; Aanæs, H.; Hansen, N.W.; Krüger, N. An Adaptive Robotic System for Doing Pick and Place Operations with Deformable Objects. J. Intell. Robot. Syst. 2019, 94, 81–100.

- Morino, K.; Kikuchi, S.; Chikagawa, S.; Izumi, M.; Watanabe, T. Sheet-Based Gripper Featuring Passive Pull-In Functionality for Bin Picking and for Picking Up Thin Flexible Objects. IEEE Robot. Autom. Lett. 2020, 5, 2007–2014.

- Björnsson, A.; Jonsson, M.; Johansen, K. Automated material handling in composite manufacturing using pick-and-place system—A review. Robot. Comput. Manuf. 2018, 51, 222–229.

- Samadikhoshkho, Z.; Zareinia, K.; Janabi-Sharifi, F. A Brief Review on Robotic Grippers Classifications. In Proceedings of the 2019 IEEE Canadian Conference of Electrical and Computer Engineering (CCECE), Edmonton, AB, Canada, 5–8 May 2019; pp. 1–4.

- Zhang, B.; Xie, Y.; Zhou, J.; Wang, K.; Zhang, Z. State-of-the-art robotic grippers, grasping and control strategies, as well as their applications in agricultural robots: A review. Comput. Electron. Agric. 2020, 177, 105694.

- Ye, Y.; Cheng, P.; Yan, B.; Lu, Y.; Wu, C. Design of a Novel Soft Pneumatic Gripper with Variable Gripping Size and Mode. J. Intell. Robot. Syst. 2022, 106, 5.

- Zhang, X.; Yu, S.; Dai, J.; Oseyemi, A.E.; Liu, L.; Du, N.; Lv, F. A Modular Soft Gripper with Combined Pneu-Net Actuators. Actuators 2023, 12, 172.

- Qi, J.; Li, X.; Tao, Z.; Feng, H.; Fu, Y. Design and Control of a Hydraulic Driven Robotic Gripper. In Proceedings of the 2021 IEEE International Conference on Robotics and Biomimetics (ROBIO), Sanya, China, 27–31 December 2021; pp. 398–404.

- Gabriel, F.; Fahning, M.; Meiners, J.; Dietrich, F.; Dröder, K. Modeling of vacuum grippers for the design of energy efficient vacuum-based handling processes. Prod. Eng. 2020, 14, 545–554.

- Maggi, M.; Mantriota, G.; Reina, G. Introducing POLYPUS: A novel adaptive vacuum gripper. Mech. Mach. Theory 2022, 167, 104483.

- Wacker, C.; Dierks, N.; Kwade, A.; Dröder, K. Analytic and Data-Driven Force Prediction for Vacuum-Based Granular Grippers. Machines 2024, 12, 57.

- Heckmann, R.; Lengauer, T. A simulated annealing approach to the nesting problem in the textile manufacturing industry. Ann. Oper. Res. 1995, 57, 103–133.

- Chryssolouris, G.; Papakostas, N.; Mourtzis, D. A decision-making approach for nesting scheduling: A textile case. Int. J. Prod. Res. 2000, 38, 4555–4564.

- Alves, C.; Brás, P.; de Carvalho, J.V.; Pinto, T. New constructive algorithms for leather nesting in the automotive industry. Comput. Oper. Res. 2012, 39, 1487–1505.

- OnRobot: Quick Changer. Available online: https://onrobot.com/es/productos/quick-changer (accessed on 11 December 2023).

- Electric Vacuum: JSY100. Available online: https://www.smcworld.com/catalog/New-products-en/mpv/es11-113-jsy-np/data/es11-113-jsy-np.pdf (accessed on 11 December 2023).

- Introduction—Ezdxf 1.0.3 Documentation. (n.d.). Available online: https://ezdxf.readthedocs.io/en/stable/introduction.html (accessed on 11 December 2023).

- Math—Mathematical Functions. (n.d.). Python Documentation. Available online: https://docs.python.org/3/library/math.html (accessed on 11 December 2023).

- Matplotlib—Visualization with Python. Available online: https://matplotlib.org (accessed on 11 December 2023).

- NumPy—Bioinformatics at COMAV 0.1 Documentation. (n.d.). Available online: https://bioinf.comav.upv.es/courses/linux/python/scipy.html (accessed on 11 December 2023).

- The Shapely User Manual—Shapely 2.0.1 Documentation. (n.d.). Available online: https://shapely.readthedocs.io/en/stable/manual.html (accessed on 11 December 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Martínez-Peral, F.J.; Borrell Méndez, J.; Segura Heras, J.V.; Perez-Vidal, C. Development of a Tool to Manipulate Flexible Pieces in the Industry: Hardware and Software. Actuators 2024, 13, 149. https://doi.org/10.3390/act13040149
