Abstract

Obstacle detection is a primary condition for the safe driving of intelligent vehicles. LiDAR and cameras are the main sensors with which intelligent vehicles obtain information about their surroundings, and each has its own strengths in object detection: LiDAR captures the position and geometric structure of an object, whereas a camera is well suited to object recognition. However, environmental perception that relies on a single type of sensor can no longer meet the detection requirements of complex traffic scenes. Therefore, this paper proposes an improved AVOD fusion algorithm for LiDAR and machine vision sensors. The traditional NMS (non-maximum suppression) algorithm is optimized using a Gaussian weighting method, and a 3D-IoU pose estimation loss function is introduced into the object bounding box screening module, which upgrades the 2D loss function to 3D and yields a 3D-IoU criterion. A comparison with traditional methods shows that the improved AVOD fusion algorithm significantly improves detection efficiency, and its detection accuracy reaches 96.3%. The proposed algorithm provides a new approach to object detection for intelligent driving vehicles.

1. Introduction

An intelligent driving system normally includes perception, prediction, planning, and control modules. Among them, the perception module, the foundation of unmanned driving systems [1,2,3,4], is the key component through which an intelligent driving vehicle interacts with the external environment. The accuracy of perceived information has become one of the research hotspots in the field of unmanned driving. To realize accurate perception, it is first necessary to solve the problem of object detection while the vehicle is moving. At present, common vehicle detection methods mainly include 2D image object detection, 3D point cloud object detection, and others. The traditional methods used in 2D image object detection chiefly involve the histogram of oriented gradients (HOG), the support vector machine (SVM), and improvements and optimizations of these methods, but they have been found to generalize poorly and to have low recognition accuracy [5,6]. As deep learning technology has progressed, image object detection methods based on it have become widely used [7,8]; representative ones include R-CNN [9], Fast R-CNN [10], Faster R-CNN [11], YOLO [12], and SSD [13]. However, machine vision-based 2D object detection has certain limitations when unmanned vehicles need to obtain accurate environmental information. Machine vision, as a passive sensor, provides high-resolution images but is sensitive to light intensity and images poorly in obscured and dimly lit environments [14]. Meanwhile, because the imaging principle of machine vision is based on perspective projection, distance information is absent from the images, which makes it difficult for machine vision to achieve accurate 3D positioning and distance measurement [15].

As an active sensor, LiDAR demonstrates superior performance in object detection by measuring the reflected light energy and converting the surrounding environment into 3D point cloud data. Current methods for 3D object detection based on LiDAR point clouds fall into two main types: one voxelizes the point cloud, as in VoxelNet [16] and OcNet [17], and the other projects the point cloud onto a 2D plane, as in PIXOR [18] and BirdNet+ [19]. Among them, VoxelNet, based on prior knowledge, divides the point cloud space into equally spaced voxels along its length, width, and height and encodes them, and then realizes object detection through a region proposal network. PIXOR obtains from the point cloud a 2D bird’s eye view feature map with height and reflectance as channels, and then uses a fine-tuned RetinaNet [20] structure for object identification and localization. However, due to the sparseness of the LiDAR point cloud, networks based on 3D voxels show poor real-time performance, while projecting a point cloud onto a 2D plane causes a partial loss of information in one dimension, which affects the detection accuracy.

In recent years, many scholars have exploited the complementary information of machine vision and LiDAR to explore new object detection methods. For example, Premebida et al. [21] combined LiDAR and an RGB camera for pedestrian detection in 2014, using a deformable parts model (DPM) to detect pedestrians on a dense LiDAR depth map and on RGB images to realize fusion detection. However, the method relied on a single source of guidance information, which resulted in complementary values with large errors. Kang et al. [22] designed a convolutional neural network composed of an independent unary classifier and a fused CNN. The network fused the LiDAR 3D point cloud and the RGB image to achieve object detection but failed to guarantee real-time performance because of the intensive computation involved. In 2017, Chen et al. [23] proposed the MV3D (multi-view 3D object detection network) method to fuse LiDAR point cloud data with RGB image information. MV3D streamlined the VGG16 network, but on the KITTI dataset it still took a long time to recognize objects, making it difficult to meet the real-time requirements of unmanned driving. Building on this, in 2018, Ku et al. [24] proposed the aggregate view object detection network (AVOD), which improved the detection rate and was able to meet the real-time requirement of unmanned driving systems, but at the cost of lower detection accuracy. To sum up, current methods for fusing LiDAR and machine vision information for detection still have limitations in two main respects: (1) the network structure of the fusion algorithm is complex, so it is difficult to achieve real-time performance or high detection accuracy, resulting in poor practical effect; and (2) under special working conditions such as overlapping and severe occlusion, it is difficult to meet the accuracy requirement for object detection.

To address the above problems, this paper proposes an improved AVOD fusion algorithm for machine vision and LiDAR sensors. The AVOD sensor fusion network is selected as the basic framework and is divided into a feature extraction module and an object screening module for analysis and optimization. For the object bounding box screening module of the AVOD sensor fusion network, the 2D GIoU (generalized intersection over union) loss function is upgraded to 3D and a 3D-IoU criterion is designed, from which the pose estimation loss function is obtained. The NMS algorithm in the original network is replaced with a Gaussian-weighted NMS algorithm into which 3D-IoU is introduced. The method is robust even for scenes with occlusion and performs well for small targets at a distance.

The remainder of the paper is structured as follows: Section 2 presents related work, including a brief description of the deep learning-based image detection algorithm and the LiDAR point cloud detection algorithm; Section 3 proposes the improved AVOD algorithm and details the improvements to the network; Section 4 verifies and analyzes the algorithm on the KITTI dataset through comparative trials with single-sensor object detection networks under the same working conditions; and Section 5 presents a summary of the study.

2. Methods and Principles

2.1. Overall Structure

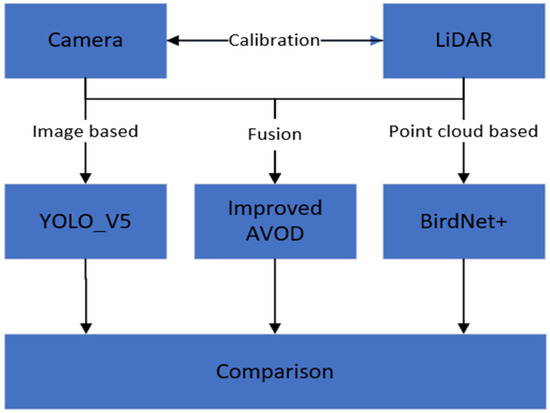

The overall structure of the paper is shown in Figure 1, which mainly includes the following parts:

Figure 1. Overall structure.

- Deep learning algorithms are used for object detection on the machine vision images and the LiDAR point cloud, respectively. The YOLOv5 algorithm is employed to acquire information such as the detection bounding boxes and categories in the image, and the BirdNet+ algorithm is applied to the point cloud data to obtain the detection bounding boxes in the LiDAR point cloud bird’s eye view.

- The AVOD sensor fusion algorithm is improved by introducing the 3D-IoU pose estimation loss function into the object bounding box screening module of the network, and the result is validated on the image and point cloud data of the KITTI dataset.

- Based on the improved AVOD fusion algorithm, the 3D bounding box detected is analyzed and compared with the image detection bounding box and the point cloud bird’s eye view detection bounding box.

2.2. Machine Vision Image Detection Algorithm

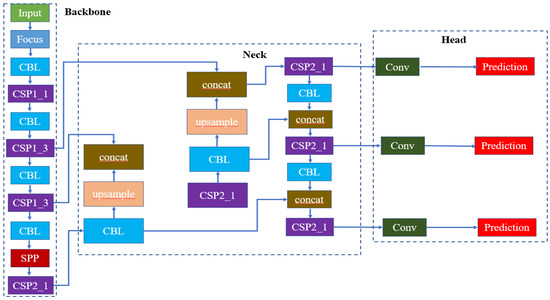

The YOLOv5 detection algorithm is a one-stage algorithm and, compared with two-stage algorithms, has the advantages of less computation and faster inference [25]. YOLOv5s, the lightweight model of the YOLOv5 family, has the fastest speed and the fewest parameters while retaining high detection accuracy, and can better meet the real-time requirements of object detection in traffic scenes. Therefore, the YOLOv5s model is selected as the image object detection algorithm, as shown in Figure 2.

Figure 2. YOLOv5s network structure.

Figure 2 shows the network structure of the YOLOv5s algorithm. The object detection network is mainly composed of three parts: a backbone, a neck, and a head. The backbone is mainly responsible for extracting the necessary features from the input images. The neck, located after the backbone, is the key link in the object detection network; its role is to reprocess and make rational use of the features extracted by the backbone so as to improve network performance. The head is chiefly used to realize the final object classification and localization.

2.3. LiDAR Point Cloud Detection Algorithm

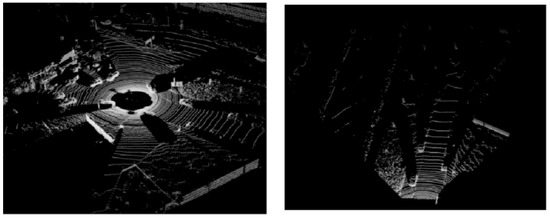

Vehicle-mounted 3D object detection usually relies on the geometric information captured by LiDAR equipment. The LiDAR point cloud data has about 1.3 million points per frame, and this large amount of data supports the environment perception system of unmanned vehicles. The perception algorithm can effectively extract the type and distance of obstacles around a driverless vehicle, but it must first process this large volume of point cloud data. To address this difficulty, this paper converts the point cloud data into a bird’s eye view (BEV), which can effectively solve the problem of object occlusion and overlapping while retaining vehicle features. The BirdNet+ algorithm is an improved version of BirdNet [26] based on the object detection framework. By projecting point cloud data into a BEV representation, the 3D detection task is transformed into a 2D image detection task, and the two-stage Faster R-CNN model is then adopted to realize the detection function.

The surrounding environment is represented by constructing a bird’s eye view as follows.

- The points within the length, width, and height (L × W × H) of the detection area are discretized, and the reflection intensity is quantified as a real value in [0, 1].

- The occupied feature resolution is defined as ,, respectively, and refers to a dimension of a unit grid of the bird’s eye view and the final resolution of the bird’s eye view is obtained as .

Taking the LiDAR center position as the center point, the plane range of the point cloud data of the KITTI dataset is defined as ×, the height being . After the bird’s eye view is obtained, the object features are extracted by the pyramid module in the BirdNet+ network. In the process of generating the view, due to the limitations in network input and memory, the final BEV size is 416 × 416. The bird’s eye view point cloud is shown in Figure 3.

Figure 3. Comparison of point cloud data before and after preprocessing.
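The bird’s eye view construction described above can be illustrated with a minimal Python sketch. It is not the BirdNet+ implementation: the function name `point_cloud_to_bev`, the default ranges, the cell size, and the choice of channels (maximum normalized height, mean intensity, and point count per cell) are all assumptions made for illustration, since the actual values are not given here.

```python
def point_cloud_to_bev(points, x_range=(0.0, 40.0), y_range=(-20.0, 20.0),
                       z_range=(-2.0, 1.0), cell=0.1):
    """Discretize (x, y, z, intensity) points into a bird's eye view grid.

    All ranges and the cell size are illustrative assumptions.
    Returns three grids (lists of rows): max normalized height,
    mean intensity, and point count per cell.
    """
    rows = int(round((x_range[1] - x_range[0]) / cell))
    cols = int(round((y_range[1] - y_range[0]) / cell))
    height = [[0.0] * cols for _ in range(rows)]
    intensity_sum = [[0.0] * cols for _ in range(rows)]
    count = [[0] * cols for _ in range(rows)]

    for x, y, z, r in points:
        inside = (x_range[0] <= x < x_range[1]
                  and y_range[0] <= y < y_range[1]
                  and z_range[0] <= z < z_range[1])
        if not inside:
            continue  # drop points outside the detection area
        i = min(int((x - x_range[0]) / cell), rows - 1)
        j = min(int((y - y_range[0]) / cell), cols - 1)
        # Height normalized to [0, 1) inside the vertical range.
        h = (z - z_range[0]) / (z_range[1] - z_range[0])
        height[i][j] = max(height[i][j], h)
        # Reflection intensity clamped to [0, 1] before averaging.
        intensity_sum[i][j] += min(max(r, 0.0), 1.0)
        count[i][j] += 1

    mean_intensity = [
        [intensity_sum[i][j] / count[i][j] if count[i][j] else 0.0
         for j in range(cols)]
        for i in range(rows)
    ]
    return height, mean_intensity, count
```

A real pipeline would typically use array operations rather than Python loops; the loop form is kept here only to make each discretization step explicit.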

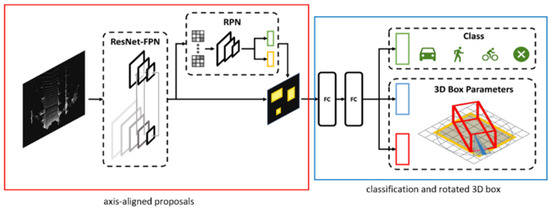

BirdNet+, depicted in Figure 4, uses BEV images as input and can perform 3D object detection in a two-stage procedure. In the first stage, a set of axis-aligned proposals is obtained, and in the second stage, the 3D box corresponding to each proposal is estimated.

Figure 4. Overview of BirdNet+.

3. Improvement of AVOD Sensor Fusion Network

To improve the detection accuracy of machine vision and LiDAR point clouds for small objects, as well as their ability to complement each other in specific cases, the point cloud and image need to be fused at the pixel level by projecting the point cloud onto the image after joint calibration. The AVOD sensor fusion network can be divided into a feature extraction module and an object bounding box screening module. AVOD is weak at extracting small object features from point cloud information, which leads to poor pedestrian detection, and its object screening scheme is hard to adapt to complex situations [27]; therefore, 3D-IoU is introduced to improve sensor fusion detection.
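As a rough illustration of projecting a point onto the image after joint calibration, the sketch below applies a 3×4 projection matrix to one 3D point. The function name `project_to_image` and the idea of folding the camera intrinsics and the LiDAR-to-camera extrinsics into a single matrix `proj` are assumptions for illustration; the calibration procedure used in this paper is not reproduced here.

```python
def project_to_image(point_xyz, proj):
    """Project one 3D point to pixel coordinates (u, v).

    `proj` is a hypothetical 3x4 matrix (three rows of four numbers) that
    is assumed to combine the camera intrinsics with the LiDAR-to-camera
    extrinsics obtained from joint calibration.
    Returns None when the point lies at or behind the camera plane.
    """
    x, y, z = point_xyz
    hom = (x, y, z, 1.0)  # homogeneous coordinates
    u_h, v_h, w = (sum(a * b for a, b in zip(row, hom)) for row in proj)
    if w <= 0.0:
        return None
    return (u_h / w, v_h / w)
```

Points that return `None`, or whose pixel falls outside the image bounds, would simply be skipped during pixel-level fusion.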

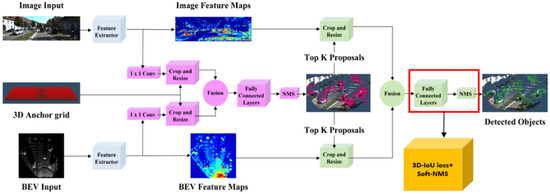

The input data of the AVOD algorithm consists of two parts: the first is an RGB image, and the second is the BEV of the LiDAR point cloud corresponding to that image. The input data is processed with an FPN to obtain full-resolution feature maps. The regions of the RGB image that correspond to the BEV feature maps are fused to extract 3D candidate bounding boxes, which are then used for 3D object detection. The improved AVOD algorithm proposed in this paper replaces the part marked by the red box in Figure 5 with 3D-IoU and introduces Gaussian-weighted Soft-NMS to improve the performance of the algorithm.

Figure 5. Architecture of the AVOD sensor fusion network.

3.1. Gaussian Weighting NMS Algorithm

The number of candidate boxes is an issue that common proposal-generation schemes (e.g., the region proposal network (RPN) and pre-division) cannot ignore, because redundant computation on irrelevant regions drastically reduces their speed. Building on Soft-NMS, this paper introduces a Gaussian weighting algorithm for non-maximum suppression screening: the confidence of each box is weighted with a Gaussian function, and the boxes are then re-ranked. In each iteration, the candidate box with the highest confidence is taken as the successfully detected box, and the distribution parameter of the Gaussian weighting coefficient is replaced by the intersection area between this box and each other detection box. This introduces the degree of intersection between detection boxes into the confidence, so that the detection accuracy for occluded objects can be significantly improved [28]. Algorithm 1 shows the pseudocode for the Gaussian weighting NMS algorithm.

where B is the initial set of recognition boxes, S is the confidence corresponding to the recognition box, and is the NMS threshold.

| Algorithm 1: Pseudocode for the Gaussian weighting NMS algorithm |

Conventional NMS can be described by the following score reset function:
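For reference, the score reset rule of conventional NMS and the Gaussian penalty used by Soft-NMS are commonly written as below. The symbols follow the usual Soft-NMS notation and are assumptions rather than the notation of this paper: $s_i$ is the score of box $b_i$, $M$ is the box with the current highest score, $N_t$ is the NMS threshold, and $\sigma$ is the Gaussian parameter.

```latex
% Conventional (hard) NMS: overlapping boxes are discarded outright.
s_i =
\begin{cases}
  s_i, & \mathrm{IoU}(M, b_i) < N_t \\
  0,   & \mathrm{IoU}(M, b_i) \ge N_t
\end{cases}

% Gaussian Soft-NMS: scores decay smoothly with the overlap.
s_i = s_i \, \exp\!\left( -\frac{\mathrm{IoU}(M, b_i)^2}{\sigma} \right)
```

The hard rule removes a heavily occluded object whenever its box overlaps a stronger one beyond $N_t$, whereas the Gaussian rule only lowers its score, which is why it tends to help in occluded scenes.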

Compared with the NMS algorithm [29], the intersection degree of detection bounding boxes is introduced into the confidence level through Gaussian weighting, and the scores of the predicted bounding boxes are weighted as well. All detection bounding boxes obtained are combined to form a new set, which is traversed from the highest score to the lowest to obtain each box’s coincidence degree with the other detection boxes in the set. The main improvements are as follows.

Step 1: The position of the vanishing point of the intelligent driving vehicle in the corrected visual field and the center of the detection result B are used as the basis for the calculation of the predicted bounding boxes.

Step 2: is the storage mode of in the set B, and the area of each detection bounding box can be expressed as:

Effective image edge completion is achieved by adding 1 to the numerator and the denominator respectively.

Step 3: The intersection degree of the detection bounding boxes is introduced into the confidence level through Gaussian weighting, and the scores of the predicted bounding boxes are weighted accordingly. All the detection bounding boxes obtained are combined to form a new set, and the elements of the new set are sorted by score S from lowest to highest:

Step 4: All detection bounding boxes are traversed from the highest score to the lowest to obtain their coincidence degree with the other detection bounding boxes in the new set:

The Gaussian parameters of the weighting coefficient of the detection bounding box score are replaced by the coincidence degrees. The calculated score is then compared with the previously set detection threshold, and the detection bounding boxes that meet the requirement are output as the result [30].
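The steps above can be approximated by the following Python sketch of Gaussian-weighted Soft-NMS on axis-aligned 2D boxes. It is a generic illustration, not the pseudocode of Algorithm 1: the names `iou_2d` and `gaussian_soft_nms`, the parameter `sigma`, and the cutoff `score_thresh` are assumptions, and the vanishing-point correction of Step 1 and the +1 edge term of Step 2 are omitted.

```python
import math


def iou_2d(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    iw = min(a[2], b[2]) - max(a[0], b[0])
    ih = min(a[3], b[3]) - max(a[1], b[1])
    if iw <= 0 or ih <= 0:
        return 0.0
    inter = iw * ih
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union


def gaussian_soft_nms(boxes, scores, sigma=0.5, score_thresh=0.001):
    """Return (kept indices, decayed scores), highest score first.

    sigma and score_thresh are illustrative defaults, not paper values.
    """
    scores = list(scores)          # work on a copy
    remaining = list(range(len(boxes)))
    keep = []
    while remaining:
        # Pick the remaining box with the highest current score.
        best = max(remaining, key=lambda i: scores[i])
        remaining.remove(best)
        if scores[best] < score_thresh:
            break                  # every other box scores even lower
        keep.append(best)
        # Decay the scores of the others according to their overlap.
        for i in remaining:
            overlap = iou_2d(boxes[best], boxes[i])
            scores[i] *= math.exp(-(overlap ** 2) / sigma)
    return keep, [scores[i] for i in keep]
```

Unlike hard NMS, a box that overlaps the selected box is not removed here; its score is reduced, so it survives only if it still exceeds the detection threshold applied afterwards.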

3.2. The Loss of Bounding Box Regression

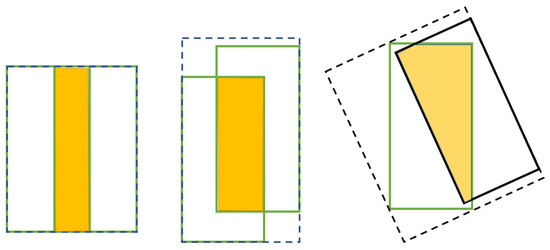

The result of object detection is described with a bounding box, which contains important information such as the location, size, and category of the object, so an accurate bounding box is essential for object detection. Intersection over Union (IoU) is an indispensable basis for judging and selecting among multiple 2D bounding boxes, as it reflects how well the detection bounding box matches the ground truth bounding box. Figure 6 shows the various cases of overlapping bounding boxes. The traditional IoU [31] can be expressed as:

Figure 6. Multiple cases of bounding box intersection.

In the formula, is the loss function; is the detection bounding box; and is the ground truth bounding box. If the value of is 0, the algorithm makes no response to the distance between the ground truth bounding box and the object one, and the training and gradient conduction cannot be performed either; in the meantime, the evaluation standard is too singular and may have the same value due to the angle problem, leading to nonoverlapping between two bounding box and low detection accuracy.
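For reference, the standard definition of IoU and the corresponding loss can be written as follows. The symbols $A$ (detection bounding box), $B$ (ground truth bounding box), and $L_{\mathrm{IoU}}$ are assumed notation chosen for this illustration.

```latex
\mathrm{IoU}(A, B) = \frac{|A \cap B|}{|A \cup B|},
\qquad
L_{\mathrm{IoU}} = 1 - \mathrm{IoU}(A, B)
```

Whenever $A \cap B = \emptyset$, the IoU is 0 and the loss is 1 no matter how far apart the two boxes are, so the loss carries no gradient that could pull the prediction toward the ground truth. This is the limitation that motivates the generalized form discussed next.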

To reduce the problem that the object bounding boxes do not intersect in 2D object detection to a certain extent, the loss function is used in this paper. The main steps involve (1) to assume that and of the smallest rectangular box of the object bounding box C containing and must exist; (2) to calculate the smallest rectangular box of the object bounding box C containing and ; (3) to estimate the ratio of the area of C not covered by and to the total area of C; and (4) to subtract the ratio from the of and to obtain:

In which the coordinates of the ground truth bounding boxes and predicted bounding boxes are defined as:

The area of the ground truth bounding boxes and that of of the predicted bounding boxes are defined as:

Then, the intersection area of and can be calculated as:

Therefore, the coordinates of the smallest object bounding box containing and can be calculated as follows:

So, the area of the minimum bounding box can be calculated as:

Finally, based on , the final loss can be calculated as:
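The derivation above follows the structure of the standard GIoU construction, which can be sketched in Python as follows. The function name `giou_loss_2d` and the `(x1, y1, x2, y2)` box layout are assumptions for illustration, and the boxes are assumed to be well formed (`x2 > x1`, `y2 > y1`).

```python
def giou_loss_2d(pred, gt):
    """GIoU loss (1 - GIoU) for two axis-aligned boxes (x1, y1, x2, y2)."""
    x1p, y1p, x2p, y2p = pred
    x1g, y1g, x2g, y2g = gt

    # Areas of the predicted and ground truth boxes.
    area_p = (x2p - x1p) * (y2p - y1p)
    area_g = (x2g - x1g) * (y2g - y1g)

    # Intersection area (zero when the boxes do not overlap).
    iw = max(0.0, min(x2p, x2g) - max(x1p, x1g))
    ih = max(0.0, min(y2p, y2g) - max(y1p, y1g))
    inter = iw * ih
    union = area_p + area_g - inter
    iou = inter / union

    # Area of the smallest box C enclosing both boxes.
    area_c = ((max(x2p, x2g) - min(x1p, x1g))
              * (max(y2p, y2g) - min(y1p, y1g)))

    # GIoU subtracts the share of C covered by neither box.
    giou = iou - (area_c - union) / area_c
    return 1.0 - giou
```

For two disjoint unit squares, the loss grows as the squares move apart, so the loss still provides a training signal when the boxes do not intersect, unlike the plain IoU loss.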

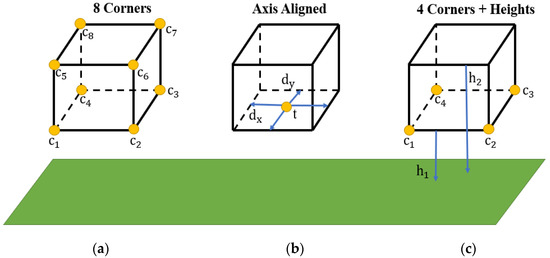

3.3. 3D Bounding Box Coding Mode

A 3D bounding box has more regression points than a 2D one, and the definitions of the regression parameters in the loss function depend directly on how the 3D bounding box is encoded and how its angle is determined. By analogy with the 2D case, a cuboid 3D bounding box is traditionally represented by its spatial centroid together with its length, width, and height, but the regression of the spatial centroid is very inaccurate and the direction angle cannot be obtained. As shown in Figure 7, three main encodings are in common use. The encoding in Figure 7a regresses the 3D positions of the 8 vertices of the cuboid and takes the direction of the long-side vector as the vehicle heading angle, but it does not take the geometric constraints of the cuboid into account and therefore outputs too much redundant information. The encoding in Figure 7b minimizes redundant parameters by representing the cuboid with the center point of its bottom face, the axial distances, and the intersection angle, but it easily leads to drift of the bounding box. As shown in Figure 7c, considering that a 3D box is a cuboid whose top surface is parallel to the road surface and that accurate angle regression relies on vertex information, the 3D-IoU AVOD algorithm proposed in this paper uses the four vertex coordinates of the bottom surface together with the heights of the bottom and top surfaces to represent a 3D bounding box. Therefore, the encoding method shown in Figure 7c is selected.

Figure 7. 3D bounding box encoding method. (a) 8 corner box encoding. (b) Axis aligned box encoding. (c) 4 corner encoding.
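To make the 4 corner encoding of Figure 7c concrete, the sketch below converts a center-based box description into four bottom-face corners plus the bottom and top heights. The function name `encode_four_corners`, the input parameterization (center, length, width, yaw, bottom height, box height), and the convention that the ground plane is spanned by x and y are assumptions for illustration only.

```python
import math


def encode_four_corners(cx, cy, length, width, yaw, z_bottom, height):
    """Encode a box as 4 bottom-face corners plus bottom/top heights.

    (cx, cy) is the box center on the ground plane and yaw is the
    heading angle in radians; all names here are hypothetical.
    Returns ([(x, y) * 4], h_bottom, h_top): 10 numbers in total.
    """
    c, s = math.cos(yaw), math.sin(yaw)
    # Corner offsets in the box frame, listed in a fixed order.
    offsets = [(length / 2, width / 2), (length / 2, -width / 2),
               (-length / 2, -width / 2), (-length / 2, width / 2)]
    # Rotate each offset by yaw and translate to the box center.
    corners = [(cx + dx * c - dy * s, cy + dx * s + dy * c)
               for dx, dy in offsets]
    return corners, z_bottom, z_bottom + height
```

Compared with regressing all 8 vertices (24 numbers), this representation needs 10 numbers while still exposing the vertex positions from which the heading can be recovered.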

Based on the encoding method of the 3D bounding box shown in Figure 7c and the derivation process of the 2D object loss function, it is assumed that the ground truth of 3D bounding boxes is , so the coordinates of the predicted values of the 3D detection box can be expressed as:

In Formula (23), the volumes and of the predicted 3D bounding box can be expressed as:

Further, the intersection volume of and can be calculated as:

The volume of the minimum object bounding box containing and can be calculated as:

Then, the loss function of the 3D bounding box detection algorithm based on sensor fusion can be calculated as:
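The extension from areas to volumes can be illustrated with the simplified Python sketch below. It assumes axis-aligned 3D boxes given as `(x1, y1, z1, x2, y2, z2)`, which is a deliberate simplification: boxes in the 4 corner encoding may be rotated about the vertical axis, in which case the intersection of the bottom faces would require polygon clipping. The function name `giou_loss_3d` is hypothetical.

```python
def giou_loss_3d(pred, gt):
    """GIoU-style loss for two axis-aligned 3D boxes.

    Boxes are (x1, y1, z1, x2, y2, z2) with x2 > x1, y2 > y1, z2 > z1.
    Rotation is ignored in this sketch (assumption).
    """
    def volume(b):
        return (b[3] - b[0]) * (b[4] - b[1]) * (b[5] - b[2])

    # Intersection volume: product of the overlaps along each axis.
    inter = 1.0
    for axis in range(3):
        lo = max(pred[axis], gt[axis])
        hi = min(pred[axis + 3], gt[axis + 3])
        inter *= max(0.0, hi - lo)

    union = volume(pred) + volume(gt) - inter
    iou_3d = inter / union

    # Volume of the smallest axis-aligned box enclosing both boxes.
    enclosing = 1.0
    for axis in range(3):
        lo = min(pred[axis], gt[axis])
        hi = max(pred[axis + 3], gt[axis + 3])
        enclosing *= hi - lo

    giou_3d = iou_3d - (enclosing - union) / enclosing
    return 1.0 - giou_3d
```

Because the vertical extent enters the volume directly, two boxes with identical bird’s eye view footprints but different heights no longer receive a perfect score, which a purely 2D criterion cannot distinguish.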

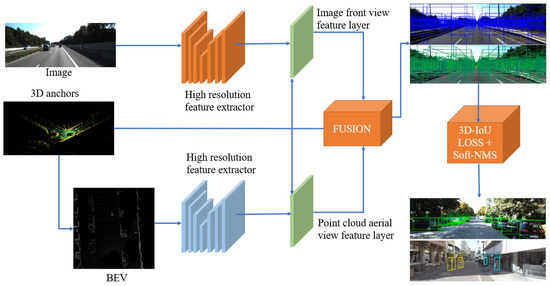

The IoU criterion in the traditional object bounding box screening algorithm is simple, and it cannot easily determine the direction, height, and length of the object contained in a 3D bounding box. This paper therefore designs a screening algorithm for 3D bounding boxes. In the object bounding box screening stage, the 3D-IoU evaluation standard is designed with reference to the idea of the GIoU algorithm: the IoU in Formula (6) is replaced by the 3D-IoU proposed in Formulas (24)–(27) of this section, and a new pose estimation loss function is obtained, which improves the screening accuracy and efficiency for 3D bounding boxes and reduces the occurrence of intersections. Finally, based on the proposed 3D-AP detection criteria, the object detection results are analyzed and compared on the KITTI dataset. The network framework is shown in Figure 8.

Figure 8. 3D-IoU AVOD sensor fusion network framework.

4. Experimental Validation

4.1. Test Environment and Parameter Configuration

To make a fair comparison with existing methods, the 7481 frames of the KITTI dataset used for training were divided into training and validation sets at a ratio of 1:1. Following the easy, medium, and hard classifications defined by the KITTI dataset, the dataset was set up in the same way as for the original AVOD network, and the detection objects included vehicles and pedestrians. The experiments were run on Ubuntu 18.04 in a virtual environment built with Anaconda. The network was trained with mini-batches containing one image with 512 and 1024 ROIs, for 120k iterations with an initial learning rate of 0.0001.

The experiments in this paper used the PyTorch deep learning framework, with Python and C++ as the main languages. The hardware configuration is shown in Table 1.

Table 1. Parameters of the test platform.

4.2. Evaluation Criteria of the Detection Algorithm

Under the KITTI criterion, all objects are classified into three difficulty levels by size, truncation, and occlusion, namely easy (E), medium (M), and hard (H). The evaluation indexes of object detection algorithms mainly include precision, recall, and average precision (AP). The main task of 3D object detection is to estimate the position and direction of the 3D bounding box of the object. General detection and evaluation indicators on the KITTI dataset include the 3D average precision (AP3D), the average precision of the bird’s eye view (APbev), and the average orientation similarity (AOS) [31]. When the average precision is calculated, whether a bounding box is detected correctly is determined by the 3D IoU between the predicted 3D bounding box and the ground truth bounding box. The IoU threshold is set to 0.7 for vehicles and 0.5 for pedestrians. The data to be detected are input into the improved AVOD fusion network, where the point cloud and image data pass through the feature extraction module and the object bounding box screening module; by counting the correct, missed, and false detections, the precision, recall, and mean average precision (mAP) can be calculated using the formulas below:

In the formulas, TP denotes true positives, that is, the number of vehicles and pedestrians detected correctly; FP denotes false positives, that is, the number of objects incorrectly detected as vehicles or pedestrians; and FN denotes false negatives, that is, the number of vehicles or pedestrians that were not detected as such. Precision indicates the proportion of correct predictions among all predictions for a category, and recall indicates the proportion of correct predictions among all ground truth objects of that category. Since the training set in this paper contains only two classes, mAP is calculated as shown in Equation (34).
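The standard definitions behind these quantities are Precision = TP / (TP + FP) and Recall = TP / (TP + FN), with mAP taken as the mean of the per-class AP values. A minimal Python sketch is given below; the function names and the numbers in the usage note are hypothetical and are not results from this paper.

```python
def precision_recall(tp, fp, fn):
    """Return (precision, recall) from detection counts.

    precision = TP / (TP + FP): share of detections that are correct.
    recall    = TP / (TP + FN): share of real objects that were found.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def mean_average_precision(ap_per_class):
    """Mean of per-class AP values, e.g. vehicle and pedestrian."""
    return sum(ap_per_class.values()) / len(ap_per_class)
```

For example, with made-up counts of 90 correct detections, 10 false detections, and 30 missed objects, `precision_recall(90, 10, 30)` gives a precision of 0.9 and a recall of 0.75.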

4.3. Comparison and Visualization of Experimental Results

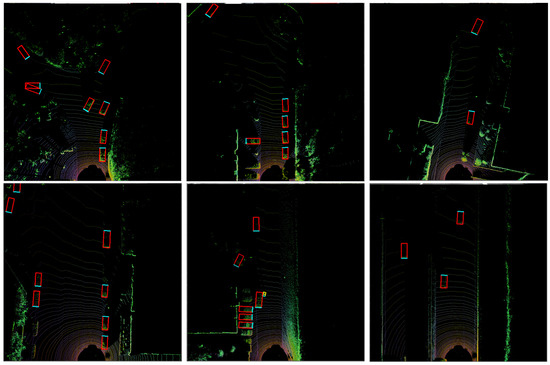

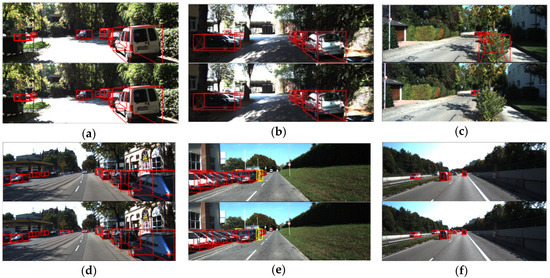

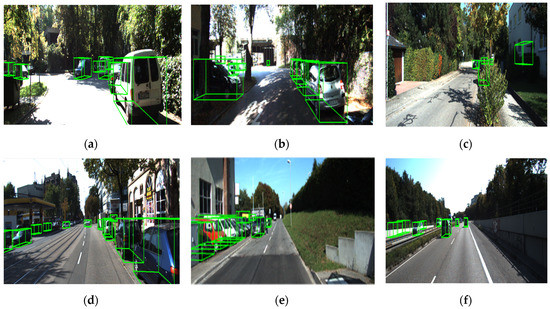

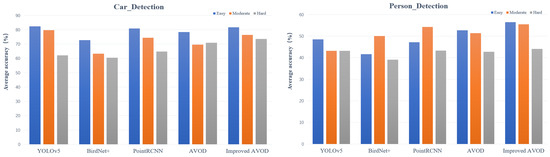

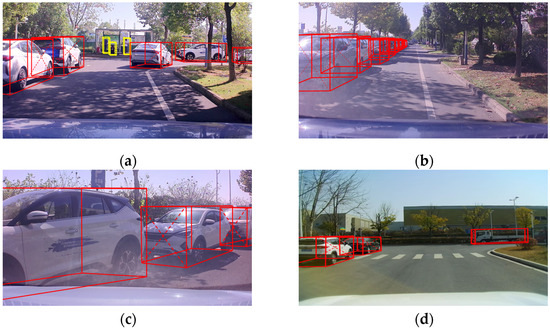

The test results on the KITTI dataset are shown in Figure 9 below. For the same vehicle and pedestrian training set, image object detection algorithm YOLOv5, point cloud bird’s eye view object detection algorithm BirdNet+, pre-improved AVOD fusion algorithm, improved AVOD fusion algorithm proposed in this paper, and 3D object detection PointRCNN algorithm are used to test each frame data of the dataset, with image detection results shown in Figure 10. In RGB images, the red box represents a detected object bounding box and confidence level. In point cloud bird’s eye view, the red box stands for a detected vehicle, and the yellow box for a detected pedestrian, as shown in Figure 11 below. Figure 12 shows the results of the PointRCNN detection.

Figure 9. YOLOv5 detection results.

Figure 10. BirdNet+ algorithm detection results.

Figure 11. Comparison of detection results before and after the improvement of the AVOD fusion algorithm. (a–c) are the detection results before the improvement and (d–f) are the results of the improved algorithm.

Figure 12. Comparison of detection results of PointRCNN algorithm. (a–f) show the detection of the same scenario with the PointRCNN algorithm.

It can be observed from the above detection results that both image detection and point cloud bird’s eye view detection suffer from missed and false detections to different degrees. Missed and false detections in images occur mostly when a vehicle is occluded. False detections in point clouds are mostly caused by shrubs, leaves, and other roadside objects whose point clouds resemble those of vehicles and pedestrians and are therefore detected as vehicles.

4.4. Further Discussion

From the above results, it can be seen that the improved AVOD algorithm proposed in this paper achieved significantly better detection accuracy than the YOLOv5 image object detection algorithm, the BirdNet+ point cloud bird’s eye view object detection algorithm, and the PointRCNN algorithm, each applied alone, and than the original AVOD fusion algorithm without the optimized object screening module. Compared with the YOLOv5 algorithm, the improved AVOD fusion algorithm improved the accuracy by 6.38% and reduced the number of false and missed objects by 13.3%, with the overall mAP going up by 5.57%. Compared with the BirdNet+ algorithm, the accuracy was improved by 8.01%, the number of false and missed objects was reduced by 16.62%, and the overall mAP was enhanced by 3.06%. Compared with the PointRCNN algorithm, the accuracy was improved by 6.72%, the number of false and missed objects was reduced by 14.37%, and the overall mAP rose by 4.54%. In addition, compared with the AVOD fusion algorithm before improvement, the accuracy was improved by about 5.10%, the number of false and missed objects was cut down by 7.9%, and the overall mAP was raised by 1.35%.

The comparison of vehicle and pedestrian detection results of the AVOD fusion algorithm before and after the optimization of the object bounding box screening module is shown in Figure 13. It can be seen that introducing the 3D-IoU pose estimation loss function into the object bounding box screening module of the AVOD network ensures accuracy while reducing the ratio of false detections. Moreover, the improved AVOD framework, which fuses point cloud and RGB image information, scores the highest mAP values in Figure 13 on object categories E and M, indicating that the proposed optimization of the object bounding box screening module can improve the detection accuracy for certain small and medium objects, reduce missed detections, and offer a degree of robustness in actual unmanned driving.

Figure 13. Comparison of performance indexes of detection accuracy of each algorithm.

5. Real Vehicle Verification

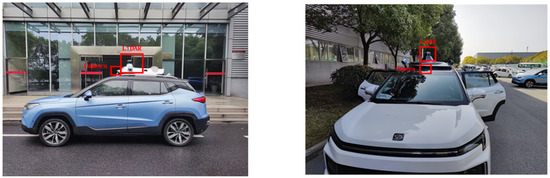

To test the accuracy and effectiveness of the algorithm in this paper, a real-vehicle experiment was carried out with a 32-line LiDAR and a camera as the core sensors. The setup also included two new energy vehicles, a laptop computer, a LiDAR adaptor box with an RJ45 port for a network cable, a dSPACE domain controller, battery power supplies and an inverter, and other auxiliary equipment. The sensor data acquisition system on the upper computer acquires information in real time; it can also time-stamp, record, synchronize, and play back the collected data, and it supports data acquisition from millimeter-wave radars, BeiDou, and other sensors. The data acquisition system receives LiDAR data through the network port and camera data through the USB interface, so that real-time, synchronous data acquisition from the LiDAR and camera can be realized, as shown in Figure 14.

Figure 14. Environment perception system of the intelligent vehicle. The left image shows the position of each sensor from the side, and the right image shows the position from the front.

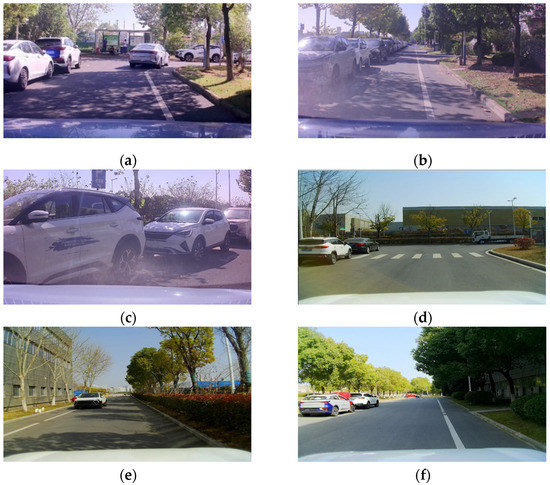

Before the test, the topics of the two sensors were started, and rosbag was then used to record the sensor data published on those topics. The data was collected in the following scenarios: the vehicle was driven around the main road of JAC Technology Center Park, recording as many cars, pedestrians, dense occlusion scenes, and small distant objects as possible. The actual test scenarios are shown in Figure 15.

Figure 15. Parts of the test scenario. (a–f) include road scenes with different shading conditions and different lighting conditions.

Next, the LiDAR point cloud data parsed by Rosbag were preprocessed, and since the image data and the corresponding LiDAR point cloud bird’s eye view data were used as the inputs for the AVOD sensor fusion network, the image data obtained from the camera and the point cloud data acquired by the LiDAR were modified according to the KITTI dataset format. Figure 16 below shows the detection results based on the improved AVOD sensor fusion algorithm.

Figure 16. Detection results in different scenarios. (a–f) are the results of the improved algorithm for different occlusion situations and different lighting conditions.
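One part of adapting recorded data to the KITTI format is storing each LiDAR frame in the layout of the KITTI Velodyne files, which hold consecutive 32-bit floats in the order x, y, z, reflectance. The Python sketch below illustrates only this step under stated assumptions: the function names are hypothetical, little-endian byte order is assumed, and the extraction of points from the rosbag recording, the image export, and the calibration files are not covered.

```python
import struct


def write_kitti_bin(path, points):
    """Write (x, y, z, intensity) tuples as little-endian float32 values.

    The intensity is assumed to be already scaled to [0, 1].
    """
    with open(path, "wb") as f:
        for x, y, z, r in points:
            f.write(struct.pack("<4f", x, y, z, r))


def read_kitti_bin(path):
    """Read a file written by write_kitti_bin back into a list of tuples."""
    with open(path, "rb") as f:
        data = f.read()
    usable = len(data) - len(data) % 16   # 4 floats x 4 bytes per point
    return [struct.unpack_from("<4f", data, off)
            for off in range(0, usable, 16)]
```

Reading a file back with `read_kitti_bin` is a simple way to check that a converted frame contains the expected number of points before it is passed to the fusion network.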

Figure 16a,e shows road scenes with relatively sparse vehicles and pedestrians. It can be seen that the improved AVOD sensor fusion detection algorithm was still able to accurately detect occluded and distant pedestrians, even in scenes with strong illumination or severe occlusion. Figure 16b,c shows complex road scenes with dense vehicle occlusion and strong light, in which light, shadows, and occlusion by vehicles in front had a great impact on the image information. It can be seen that most vehicles on the left side of the road in Figure 16b were accurately detected, while the vehicles on the structured road and even small objects at a relatively long distance in Figure 16d,f were detected with a certain accuracy, which demonstrates that the improved object box screening module of the AVOD sensor fusion network has better detection capability for densely occluded objects as well as small objects at long distances. However, for vehicles that were densely occluded in the distance, with only a small part of the roof visible in the image, the corresponding point cloud information in the LiDAR data was also partially lost, resulting in a small number of missed objects. The average detection accuracy of the improved AVOD algorithm was calculated to be 96.3% in the real vehicle test. The tests showed that missed and false detections may occur in the following two cases: (1) when vehicles were densely parked and far away, the point cloud data was sparse and the image data contained less feature information about the objects; and (2) trees on both sides of the road were incorrectly detected as pedestrians.

6. Conclusions

In this paper, a fusion algorithm for LiDAR and machine vision sensors based on an improved AVOD is proposed. With the AVOD fusion algorithm taken as the baseline framework, the object bounding box screening module is optimized by adopting Gaussian function weighting. Borrowing the idea of the 2D-IoU algorithm, the 2D pose estimation loss function is upgraded to 3D, which improves the screening accuracy and efficiency for 3D targets. The KITTI dataset is used to conduct four sets of experiments under the same working conditions. The results show that the improved AVOD fusion algorithm improves detection accuracy and speed over the original AVOD fusion algorithm, with higher 3D-AP values for both car and pedestrian objects in urban road scenarios. In the future, we will verify the detection performance of our algorithm in other scenarios, and we plan to incorporate a control module based on this method.
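As an illustration of the Gaussian-weighted box screening summarized above, the following is a minimal sketch rather than the implementation used in this paper. It assumes the standard Gaussian score decay of Soft-NMS and, for simplicity, axis-aligned 3D boxes given as `(x1, y1, z1, x2, y2, z2)`, whereas AVOD predicts oriented boxes. The function names `iou_3d_axis_aligned` and `gaussian_soft_nms` and the parameters `sigma` and `score_thresh` are hypothetical and chosen only for this example.

```python
import math


def iou_3d_axis_aligned(a, b):
    """3D IoU of two axis-aligned boxes (x1, y1, z1, x2, y2, z2).

    Assumption: boxes are axis-aligned; oriented boxes would need a
    polygon intersection in the ground plane instead.
    """
    inter = 1.0
    for lo_a, lo_b, hi_a, hi_b in zip(a[:3], b[:3], a[3:], b[3:]):
        overlap = min(hi_a, hi_b) - max(lo_a, lo_b)
        if overlap <= 0:
            return 0.0  # no overlap along this axis, so no intersection
        inter *= overlap
    vol_a = (a[3] - a[0]) * (a[4] - a[1]) * (a[5] - a[2])
    vol_b = (b[3] - b[0]) * (b[4] - b[1]) * (b[5] - b[2])
    return inter / (vol_a + vol_b - inter)


def gaussian_soft_nms(boxes, scores, sigma=0.5, score_thresh=0.001):
    """Gaussian-weighted NMS: decay overlapping scores instead of deleting boxes.

    Returns a list of (box_index, final_score) in selection order.
    sigma and score_thresh are illustrative defaults, not values from the paper.
    """
    remaining = [(s, i) for i, s in enumerate(scores)]
    kept = []
    while remaining:
        best = max(remaining)  # candidate with the highest current score
        remaining.remove(best)
        best_score, best_idx = best
        kept.append((best_idx, best_score))
        decayed = []
        for s, i in remaining:
            iou = iou_3d_axis_aligned(boxes[best_idx], boxes[i])
            s *= math.exp(-(iou ** 2) / sigma)  # Gaussian penalty grows with IoU
            if s >= score_thresh:  # drop boxes whose score has become negligible
                decayed.append((s, i))
        remaining = decayed
    return kept
```

Unlike hard NMS, which discards every box whose IoU with the selected box exceeds a fixed threshold, this decay only lowers the score of an overlapping box in proportion to its overlap, so a partially occluded neighbouring object can still survive the screening step.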

Author Contributions

Conceptualization, Z.B.; methodology, Z.B.; software, Z.B.; validation, D.B.; formal analysis, J.W.; investigation, M.W.; resources, D.B.; data curation, J.W.; writing—original draft preparation, Z.B.; writing—review and editing, L.C. and Z.B.; visualization, Q.Z.; supervision, Q.Z.; project administration, L.C.; funding acquisition, L.C. All authors have read and agreed to the published version of the manuscript.

Funding

This study was funded by the Anhui Natural Science Foundation Project (Grant No. 1908085ME174).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used in this study were collected and tested by the authors.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| NMS | Non-maximum suppression |
| HOG | Histogram of oriented gradients |
| SVM | Support vector machine |
| DPM | Deformable parts model |
| MV3D | Multi-view 3D object detection network |
| AVOD | Aggregate view object detection network |
| GIoU | Generalized intersection over union |
| BEV | Bird’s eye view |
| RPN | Region proposal network |
| IoU | Intersection over union |
| AP | Average precision |
| mAP | Mean average precision |
| AOS | Average orientation similarity |

References

- Pendleton, S.D.; Andersen, H.; Du, X.; Shen, X.; Meghjani, M.; Eng, Y.H.; Rus, D.; Ang, M.H., Jr. Perception, planning, control, and coordination for autonomous vehicles. Machines 2017, 5, 6.

- Badue, C.; Guidolini, R.; Carneiro, R.V.; Azevedo, P.; Cardoso, V.B.; Forechi, A.; Jesus, L.; Berriel, R.; Paixao, T.M.; Mutz, F.; et al. Self-driving cars: A survey. Expert Syst. Appl. 2021, 165, 113816.

- Van Brummelen, J.; O’Brien, M.; Gruyer, D.; Najjaran, H. Autonomous vehicle perception: The technology of today and tomorrow. Transp. Res. Part C Emerg. Technol. 2018, 89, 384–406.

- Zhang, Z.; Jia, X.; Yang, T.; Gu, Y.; Wang, W.; Chen, L. Multi-objective optimization of lubricant volume in an ELSD considering thermal effects. Int. J. Therm. Sci. 2021, 164, 106884.

- Singh, G.; Chhabra, I. Effective and fast face recognition system using complementary OC-LBP and HOG feature descriptors with SVM classifier. J. Inf. Technol. Res. (JITR) 2018, 11, 91–110.

- Jian, L.; Lin, C. Pure FPGA Implementation of an HOG Based Real-Time Pedestrian Detection System. Sensors 2018, 18, 1174.

- Gu, Y.; Li, Z.; Zhang, Z.; Li, J.; Chen, L. Path tracking control of field information-collecting robot based on improved convolutional neural network algorithm. Sensors 2020, 20, 797.

- Quan, L.; Jiang, W.; Li, H.; Li, H.; Wang, Q.; Chen, L. Intelligent intra-row robotic weeding system combining deep learning technology with a targeted weeding mode. Biosyst. Eng. 2022, 216, 13–31.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Zhao, Z.Q.; Bian, H.; Hu, D.; Cheng, W.; Glotin, H. Pedestrian Detection Based on Fast R-CNN and Batch Normalization. In International Conference on Intelligent Computing; Springer: Cham, Switzerland, 2017.

- Liu, B.; Zhao, W.; Sun, Q. Study of object detection based on Faster R-CNN. In Proceedings of the 2017 Chinese Automation Congress (CAC), Jinan, China, 20–22 October 2017; pp. 6233–6236.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector; Springer: Cham, Switzerland, 2016.

- Chu, J.; Guo, Z.; Leng, L. Object detection based on multi-layer convolution feature fusion and online hard example mining. IEEE Access 2018, 6, 19959–19967.

- Chen, Y.; Tai, L.; Sun, K.; Li, M.M. Monocular 3d object detection using pairwise spatial relationships. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 14–19.

- Zhou, Y.; Tuzel, O. Voxelnet: End-to-end learning for point cloud based 3d object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4490–4499.

- Yuan, Y.; Huang, L.; Guo, J.; Zhang, C.; Chen, X.; Wang, J. Ocnet: Object context network for scene parsing. arXiv 2018, arXiv:1809.00916.

- Yang, B.; Luo, W.; Urtasun, R. Pixor: Real-time 3d object detection from point clouds. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7652–7660.

- Barrera, A.; Guindel, C.; Beltrán, J.; García, F. Birdnet+: End-to-end 3d object detection in lidar bird’s eye view. In Proceedings of the 2020 IEEE 23rd International Conference on Intelligent Transportation Systems (ITSC), Rhodes, Greece, 20–23 September 2020; pp. 1–6.

- Wang, Y.; Wang, C.; Zhang, H.; Dong, Y.; Wei, S. Automatic ship detection based on RetinaNet using multi-resolution Gaofen-3 imagery. Remote Sens. 2019, 11, 531.

- Premebida, C.; Carreira, J.; Batista, J.; Nunes, U. Pedestrian detection combining RGB and dense LIDAR data. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2014), Chicago, IL, USA, 14–18 September 2014.

- Oh, S.I.; Kang, H.B. Object detection and classification by decision-level fusion for intelligent vehicle systems. Sensors 2017, 17, 207.

- Chen, X.; Ma, H.; Wan, J.; Li, B.; Xia, T. Multi-view 3d object detection network for autonomous driving. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1907–1915.

- Ku, J.; Mozifian, M.; Lee, J.; Harakeh, A.; Waslander, S.L. Joint 3d proposal generation and object detection from view aggregation. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1–8.

- Fang, J.; Liu, Q.; Li, J. A Deployment Scheme of YOLOv5 with Inference Optimizations Based on the Triton Inference Server. In Proceedings of the 2021 IEEE 6th International Conference on Cloud Computing and Big Data Analytics (ICCCBDA), Chengdu, China, 24–26 April 2021.

- Beltrán, J.; Guindel, C.; Moreno, F.M.; Cruzado, D.; Garcia, F.; De La Escalera, A. Birdnet: A 3d object detection framework from lidar information. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 3517–3523.

- Li, J.; Luo, S.; Zhu, Z.; Dai, H.; Krylov, A.S.; Ding, Y.; Shao, L. 3D IoU-Net: IoU guided 3D object detector for point clouds. arXiv 2020, arXiv:2004.04962.

- Bodla, N.; Singh, B.; Chellappa, R.; Davis, L.S. Improving object detection with one line of code. arXiv 2017, arXiv:1704.04503.

- Neubeck, A.; Van Gool, L. Efficient non-maximum suppression. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; Volume 3, pp. 850–855.

- Solovyev, R.; Wang, W.; Gabruseva, T. Weighted boxes fusion: Ensembling boxes from different object detection models. Image Vis. Comput. 2021, 107, 104117.

- Jiang, B.; Luo, R.; Mao, J.; Xiao, T.; Jiang, Y. Acquisition of localization confidence for accurate object detection. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 784–799.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).