Art in the Age of Machine Intelligence

Art has always existed in a complex, symbiotic and continually evolving relationship with the technological capabilities of a culture. Those capabilities constrain the art that is produced, and inform the way art is perceived and understood by its audience.

We believe machine intelligence is an innovation that will profoundly affect art, as the invention of applied pigments, the printing press, photography, and computers did before it. As with these earlier innovations, it will ultimately transform society in ways that are hard to imagine from today’s vantage point; in the nearer term, it will expand our understanding of both external reality and our perceptual and cognitive processes.

As with earlier technologies (Figure 1), some artists will embrace machine intelligence as a new medium or a partner, while others will continue using today’s media and modes of production. In the future, even the act of rejecting it may be a conscious statement, just as photorealistic painting is a statement today. Any artistic gesture toward machine intelligence—whether negative, positive, both, or neither—seems likelier to withstand the test of time if it is historically grounded and technically well informed.

Walter Benjamin illustrated this point mordantly in his 1931 essay, “Little History of Photography” (Benjamin 1999), citing an 1839 critique of the newly announced French daguerreotype technology in the Leipziger Stadtanzeiger (a “chauvinist rag”):

“To try to capture fleeting mirror images,” it said, “is not just an impossible undertaking, as has been established after thorough German investigation; the very wish to do such a thing is blasphemous. Man is made in the image of God, and God’s image cannot be captured by any machine of human devising. The utmost the artist may venture, borne on the wings of divine inspiration, is to reproduce man’s God-given features without the help of any machine, in the moment of highest dedication, at the higher bidding of his genius.”

This sense of affront over the impingement of technology on what had been considered a defining human faculty has obvious parallels with much of today’s commentary on machine intelligence. It is a reminder that what Rosi Braidotti has called “moral panic about the disruption of centuries-old beliefs about human ‘nature’” (Braidotti 2013, p. 2) is nothing new.

Benjamin (1999) goes on to comment:

Here we have the philistine notion of “art” in all its overweening obtuseness, a stranger to all technical considerations, which feels that its end is nigh with the alarming appearance of the new technology. Nevertheless, it was this fetishistic and fundamentally antitechnological concept of art with which the theoreticians of photography sought to grapple for almost a hundred years, naturally without the smallest success.

While these “theoreticians” remained stuck in their thinking, practitioners were not standing still. Many professionals who had been making their living painting miniature portraits enacted a very successful shift to studio photography; with those who brought together technical mastery and a good eye, art photography was born, over the following decades unfolding a range of artistic possibilities latent in the new technology that had been inaccessible to painters: micro-, macro- and telephotography, frozen moments of gesture and microexpression, slow motion, time lapse, negatives and other manipulations of the film, and so on.

Artists who stuck to their paintbrushes also began to realize new possibilities in their work, arguably in direct response to photography. David Hockney interprets cubism from this perspective:

cubism was about the real world. It was an attempt to reclaim a territory for figuration, for depiction. Faced with the claim that photography had made figurative painting obsolete, the cubists performed an exquisite critique of photography; they showed that there were certain aspects of looking — basically the human reality of perception — that photography couldn’t convey, and that you still needed the painter’s hand and eye to convey them.

Of course, the ongoing relationship between painting and photography is by no means mutually exclusive; the language of wholesale embrace on the one hand versus response or critique on the other is inadequate. Hockney’s “joiners” explored rich artistic possibilities in the combination of photography with “a painter’s hand and eye” via collage in the 1980s, and his more recent video pieces from Woldgate Woods do something similar with montage.

Hockney was also responsible, in his 2001 collaboration with physicist Charles Falco, for reigniting interest in the role optical instruments—mirrors, lenses, and perhaps something like a camera lucida—played in the sudden emergence of visual realism in early Renaissance art.

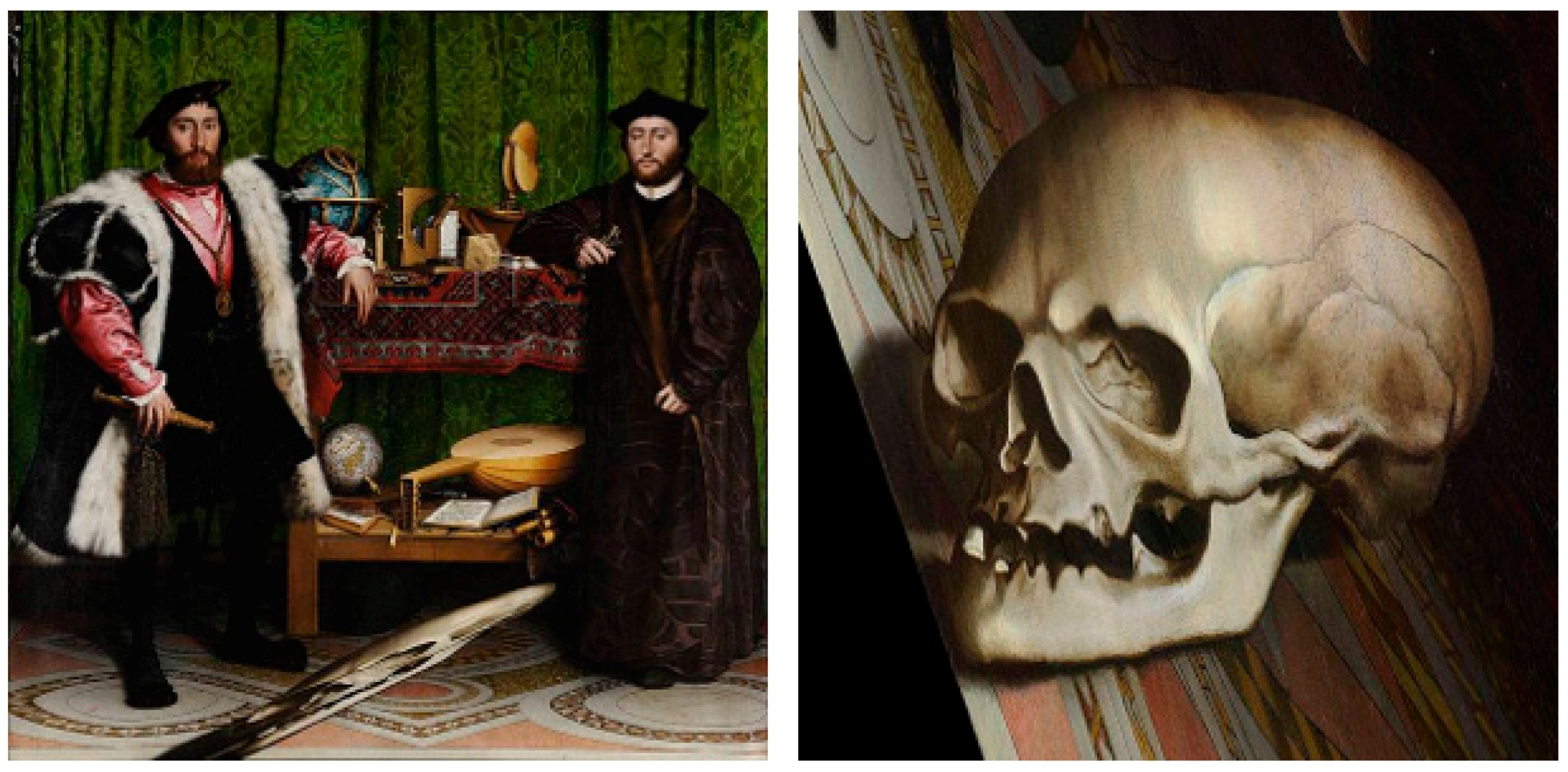

It has been clear for a long time that visual effects like the anamorphic skull across the bottom of Hans Holbein’s 1533 painting The Ambassadors (Figure 2) could not have been rendered without clever optical tricks involving tracing from mirrors or lenses—effectively, paintbrush-assisted photography. Had something like the Daguerre-Niépce photochemical process existed in their time, it seems likely that artists like van Eyck and Holbein would have experimented with it, either in addition to, in combination with, or even instead of paint.

So, the old European masters fetishized by the Leipziger Stadtanzeiger were not reproducing “man’s God-given features without the help of any machine”, but were in fact using the state of the art. They were playing with the same new optical technologies that allowed Galileo to discover the moons of Jupiter, and van Leeuwenhoek to make the first observations of microorganisms.

Understanding the ingenuity of the Renaissance artists as users and developers of technology should only increase our regard for them and our appreciation of their work. It should not come as a surprise, as in their own time they were not “Old Masters” canonized in the historical wings of national art museums, but intellectual and cultural innovators. To imagine that optics somehow constituted “cheating” in Renaissance painting is both a failure of the imagination and the application of a historically inappropriate value system. Yet even today, some commentators and theoreticians — typically not themselves working artists — remain wedded to what Benjamin called “the philistine notion of ‘art’”, as pointed out in an article in The Observer from 2000 in response to the Hockney-Falco thesis:

Is [the use of optics] so qualitatively different from using grids, plumb-lines and maulsticks? Yes — for those who regard these painters as a pantheon of mysterious demigods, more than men if less than angels, anything which smacks of technical aid is blasphemy. It is akin to giving scientific explanations for the miracles of saints.

There is a pungent irony here. Scientific inquiry has, step by step, revealed to us a universe much more vast and complex than the mythologies of our ancestors, while the parallel development of technology has extended our creative potential to allow us to make works (whether we call them “art”, “design”, “technology”, “entertainment”, or something else) that would indeed appear miraculous to a previous generation. Where we encounter the word “blasphemy”, we may often read “progress”, and can expect miracles around the corner.

One would like to believe that, after being discredited so many times and over so many centuries, the “antitechnological concept of art” would be relegated to a fundamentalist fringe. However, if history has anything to teach us in this regard, it is that this particular debate is always ready to resurface. Perhaps this is because it impinges, consciously or not, on much larger issues of human identity, status and authority. We resist epistemological shock. Faced with a new technical development in art, it is easier for us to quietly move the goalposts after a suitable period of outrage, re-inscribing what it means for something to be called fine art, what counts as skill or creativity, what is natural and what is artifice, and what it means for us to be privileged as uniquely human, all while keeping our categorical value system—and our human apartness from the technology—fixed.

More radical thinking that questions the categories and the value systems themselves comes from writers like Donna Haraway and Joanna Zylinska. Haraway, originally a primatologist, has done a great deal to blur the conceptual border between humans and other animals; the same line of thinking led her to question human exceptionalism with respect to machines and human-machine hybrids. This may seem like speculative philosophy best left to science fiction, but in many respects, it already applies. Zylinska, in her 2002 edited collection The Cyborg Experiments: The Extensions of the Body in the Media Age, interviewed the Australian performance artist Stelarc, whose views on the relationship between humanity and technology set a useful frame of reference:

The body has always been a prosthetic body. Ever since we evolved as hominids and developed bipedal locomotion, two limbs became manipulators. We have become creatures that construct tools, artefacts and machines. We’ve always been augmented by our instruments, our technologies. Technology is what constructs our humanity; the trajectory of technology is what has propelled human developments. I’ve never seen the body as purely biological, so to consider technology as a kind of alien other that happens upon us at the end of the millennium is rather simplistic.

As Zylinska and her coauthor Sarah Kember elaborate in their book Life after New Media (2012), one should not conclude that anything goes, that the direction of our development is predetermined, or that technology is somehow inherently utopian. Many of us working actively on machine intelligence are, for example, co-signatories of an open letter calling for a worldwide ban on autonomous machine intelligence-enabled weapons systems (Future of Life Institute 2015), which do pose very real dangers.

Sherry Turkle (2011) has written convincingly about the subtler, but in their way equally disturbing, failures of empathy, self-control and communication that can arise when we project emotion onto machines that have none, or use our technology to mediate our interpersonal relationships to the exclusion of direct human contact. It is clear that, as individuals and as a society, we do not always make good choices; so far we have muddled through, with plenty of (hopefully instructive, so far survivable) missteps along the way. However, as Zylinska and Kember (2012) point out,

If we do accept that we have always been cyborgs…it will be easier for us to let go of paranoid narratives…that see technology as an external other that threatens the human and needs to be stopped at all costs before a new mutant species—of replicants, robots, aliens—emerges to compete with humans and eventually to win the battle…[S]eeing ourselves as always already connected, as being part of the system — rather than as masters of the universe to which all beings are inferior — is an important step to developing a more critical and a more responsible relationship to the world, to what we call “man,” “nature” and “technology”.

Perhaps it is unsurprising that these perspectives have often been explored by feminist philosophers, while replicants and terminators come from the decidedly more masculine (and speculative) universes of Philip K. Dick, Ridley Scott and James Cameron. On the most banal level, the masculine narratives tend to emphasize hierarchy, competition, and winner-takes-all domination, while these feminist narratives tend to point out the collaborative, interconnected and non-zero sum; more tellingly, they point out that we are already far into and part of the cyborg future, deeply entangled with technology in every way, not organic innocents subject to a technological onslaught from without at some future date.

This point of view invites us to rethink art as something generated by (and consumed by) hybrid beings; the technologies involved in artistic production are not so much “other” as they are “part of”. As the media philosopher Vilém Flusser put it, “tools…are extensions of human organs: extended teeth, fingers, hands, arms, legs” (Flusser 1983, p. 23). Preindustrial tools, like paintbrushes or pickaxes, extend the biomechanics of the human body, while more sophisticated machines extend prosthetically into the realms of information and thought. Hence, “All apparatuses (not just computers) are… ‘artificial intelligences’, the camera included” (ibid., pp. 30–31).

That the camera extends and is modeled after the eye is self-evident. Does this make the eye a tool, or the camera an organ — and is the distinction meaningful?

Flusser’s (1983) characterization of the camera as a form of intelligence might have raised eyebrows in the 20th century, since, surrounded by cameras, many people had long since reinscribed the boundaries of intelligence more narrowly around the brain — perhaps, as we have seen, in order to safeguard the category of the uniquely human. Calling the brain the seat of intelligence, and the eye therefore a mere peripheral, is a flawed strategy, though. We are not brains in biological vats. Even if we were to adopt a neurocentric attitude, modern neuroscientists typically refer to the retina as an “outpost of the brain” (Tosini et al. 2014), as it is largely made out of neurons and performs a great deal of information processing before sending encoded visual signals along the optic nerve.

Do cameras also process information nontrivially? It is remarkable that Flusser was so explicit in describing the camera as having a “program” and “software” when he was writing his philosophy of photography in 1983, given that the first real digital camera was not made until 1988 (Wikipedia 2017a). Maybe it took a philosopher’s squint to notice the “programming” inherent in the grinding and configuration of lenses, the creation of a frame and field of view, the timing of the shutter, the details of chemical emulsions and film processing. Maybe, also, Flusser was writing about programming in a wider, more sociological sense.

Be this as it may, for today’s cameras, this is no longer a metaphor. The camera in your phone is indeed powered by software, amounting at a minimum to millions of lines of code (Information is Beautiful 2017). Much of this code performs support functions peripheral to the actual imaging, but some of it makes explicit the nonlinear summing-up of photons into color components that used to be physically computed by the film emulsion. Other code does things like removing noise in near-constant areas, sharpening edges, and filling in defective pixels with plausible surrounding color, not unlike the way our retinas hallucinate away the blood vessels at the back of the eye that would otherwise mar our visual field (Summers 2011). The images we see can only be “beautiful” or “real-looking” because they have been heavily processed, either by neural machinery or by code (in which case, both), operating below our threshold of consciousness. In the case of the software, this processing relies on norms and aesthetic judgments on the part of software engineers, so they are also unacknowledged collaborators in the image-making. There is no such thing as a natural image; perhaps, too, there is nothing especially artificial about the camera.
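To make the kind of processing described above a little more concrete, here is a toy sketch in Python of three such steps: filling in defective pixels, smoothing near-constant areas, and sharpening edges. It is only an illustration under simplifying assumptions (a small grayscale image stored as a list of rows, 3×3 neighborhoods, a known list of bad pixels). The function names, the threshold, and the sharpening amount are hypothetical choices made for this example, and no real phone camera pipeline is claimed to work this way.

```python
from statistics import median


def neighbor_coords(img, r, c):
    """Coordinates of the (up to eight) pixels surrounding (r, c)."""
    h, w = len(img), len(img[0])
    return [(i, j)
            for i in range(max(0, r - 1), min(h, r + 2))
            for j in range(max(0, c - 1), min(w, c + 2))
            if (i, j) != (r, c)]


def fill_defective(img, defective):
    """Replace each known-bad pixel with the median of its good neighbors."""
    bad = set(defective)
    out = [row[:] for row in img]
    for r, c in bad:
        good = [img[i][j] for i, j in neighbor_coords(img, r, c)
                if (i, j) not in bad]
        if good:  # leave the pixel alone if every neighbor is also bad
            out[r][c] = median(good)
    return out


def denoise_flat(img, threshold=4):
    """Average a pixel with its neighbors, but only where the 3x3 patch
    is nearly constant (value range <= threshold), so edges are kept."""
    out = [row[:] for row in img]
    for r in range(len(img)):
        for c in range(len(img[0])):
            patch = [img[r][c]] + [img[i][j]
                                   for i, j in neighbor_coords(img, r, c)]
            if max(patch) - min(patch) <= threshold:
                out[r][c] = sum(patch) / len(patch)
    return out


def sharpen(img, amount=1.0):
    """Unsharp mask: push each pixel away from its local 3x3 mean,
    then clamp the result to the 0..255 range."""
    out = [row[:] for row in img]
    for r in range(len(img)):
        for c in range(len(img[0])):
            patch = [img[r][c]] + [img[i][j]
                                   for i, j in neighbor_coords(img, r, c)]
            blur = sum(patch) / len(patch)
            value = img[r][c] + amount * (img[r][c] - blur)
            out[r][c] = min(255, max(0, value))
    return out


# Usage: a flat dark region with one stuck pixel, next to a bright edge.
raw = [
    [10, 10, 10, 200],
    [10, 255, 10, 200],
    [10, 10, 10, 200],
]
processed = sharpen(denoise_flat(fill_defective(raw, [(1, 1)])))
```

Every number in this sketch (the neighborhood size, the threshold of 4, the amount of sharpening) is a judgment about what should count as noise and what should count as detail, which is the sense in which the engineers who choose such values take part in the image-making.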

The flexibility of code allows us to make cameras that do much more than produce images that can pass for natural. Researchers like those at the Massachusetts Institute of Technology (MIT) Media Lab’s Camera Culture group have developed software-enabled nontraditional cameras (many of which still use ordinary hardware) that can sense depth, see around corners, or see through skin (http://cameraculture.media.mit.edu/); Abe Davis and collaborators have even developed a computational camera that can “see” sound, by decoding the tiny vibrations of houseplant leaves and potato chip bags (Davis et al. 2014). So, Flusser (1983) was perhaps even more right than he realized in asserting that cameras follow programs, and that their software has progressively become more important than their hardware. Cameras are “thinking machines”.

It follows that when a photographer is at work nowadays, she does so as a hybrid artist, thinking, manipulating and encoding information with neurons in both the brain and the retina, working with muscles, motors, transistors, and millions of lines of code. Photographers are cyborgs.

What new kinds of art become possible when we begin to play with technology analogous not only to the eye, but also to the brain? This is the question that launched the Artists and Machine Intelligence (AMI) program (https://ami.withgoogle.com/). The timing is not accidental. Over the past several years, approaches to machine intelligence based on approximating the brain’s architecture have started to yield impressive practical results — this is the explosion in so-called “deep learning” or, more accurately, the renaissance of artificial neural networks. In the summer of 2015, we also began to see some surprising experiments hinting at the creative and artistic possibilities latent in these models.

Understanding the lineage of this body of work will involve going back to the origins of computing, neuroscience, machine learning and artificial intelligence. For now, we will briefly introduce the two specific technologies used in our first gallery event, Deep Dream (in partnership with Gray Area Foundation for the Arts in San Francisco, http://grayarea.org/event/deepdream-the-art-of-neural-networks). These are “Inceptionism” or “Deep Dreaming”, first developed by Alex Mordvintsev at Google’s Zurich office (Mordvintsev et al. 2015), and “style transfer”, first developed by Leon Gatys and collaborators in the Bethge Lab at the Centre for Integrative Neuroscience in Tübingen (Gatys et al. 2016). It is fitting and likely a sign of things to come that one of these developments came from a computer scientist working on a neurally inspired algorithm for image classification, while the other came from a grad student in neuroscience working on computational models of the brain. We are witnessing a time of convergences: not just across disciplines, but between brains and computers; between scientists trying to understand and technologists trying to make; and between academia and industry. We do not believe the convergence will yield a monoculture, but a vibrant hybridity.

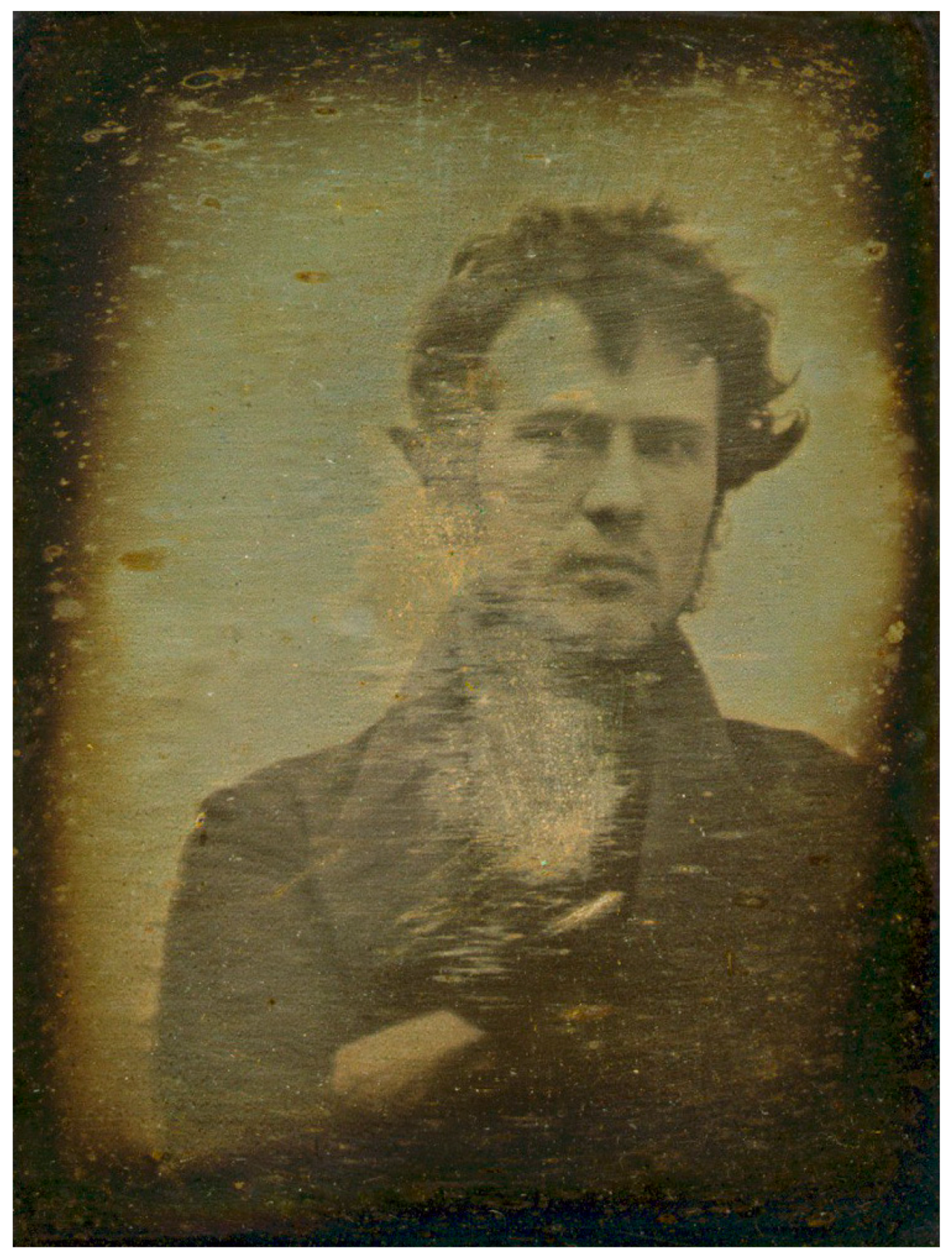

These are early days. The art realizable with the current generation of machine intelligence might generously be called a kind of neural daguerreotype. More varied and higher-order artistic possibilities will emerge not only through further development of the technology, but through longer-term collaborations involving a wider range of artists and intents. This first show at the Gray Area is small in scale and narrow in scope; it stays close to the early image-making processes that first inspired our Artists and Machine Intelligence (AMI) program. We believe the magic in the pieces is something akin to that of Robert Cornelius’s tentative self-portrait in 1839.

As machine intelligence develops, we imagine that some artists who work with it will draw the same critique leveled at early photographers. An unsubtle critic might accuse them of “cheating”, or claim that the art produced with these technologies is not “real art”. A subtler (but still antitechnological) critic might dismiss machine intelligence art wholesale as kitsch. As with art in any medium, some of it undoubtedly will be kitsch — we have already seen examples — but some will be beautiful, provocative, frightening, enthralling, unsettling, revelatory, and everything else that good art can be.

Discoveries will be made. If previous cycles of new technology in art are any guide, then early works have a relatively high likelihood of enduring and being significant in retrospect, since they are by definition exploring new ground, not retreading the familiar. Systematically experimenting with what neural-like systems can generate gives us a new tool to investigate nature, culture, ideas, perception, and the workings of our own minds.

Our interest in exploring the possibilities of machine intelligence in art could easily be justified on these grounds alone. However, we feel that the stakes are much higher, for several reasons. One is that machine intelligence is such a profoundly transformational technology; it is about creating the very stuff of thought and mind. The questions of authenticity, reproducibility, legitimacy, purpose and identity that Walter Benjamin, Vilém Flusser, Donna Haraway and others have raised in the context of earlier technologies shift from metaphorical to literal; they become increasingly consequential. In an era when so many of us have become “information workers” (just as I am, in writing this piece), the issues raised by machine intelligence are not mere “theory” to be endlessly rehearsed by critics and journalists. We need to make decisions, personally and societally. A feedback loop needs to be closed at places like Google, where our work as engineers and researchers will have a real effect on how the technology is developed and deployed.

This requires that we apply ourselves rigorously and imaginatively across disciplines. The work cannot be done by technophobic humanists, any more than it can be done by inhuman technologists. Luckily, we are neither of the above. Both categories are stereotypes, if occasionally self-fulfilling ones, perpetuated by an unhelpful cultural narrative: the philistines again, claiming that artists are elves, and technical people dwarves, when of course the reality is that we are all (at least) human. There is no shortage today of artists and intellectuals who, like Alberti, Holbein or Hockney, are eager to work with and influence the development of new technologies. There is also no shortage of engineers and scientists who are thoughtful and eager to engage with artists and other humanists. And of course, the binary is false; there are people who are simultaneously serious artists and scientists or engineers. We are lucky to have several such people among our group of collaborators.