Behavioral Immunity in Insects

Abstract

1. Introduction

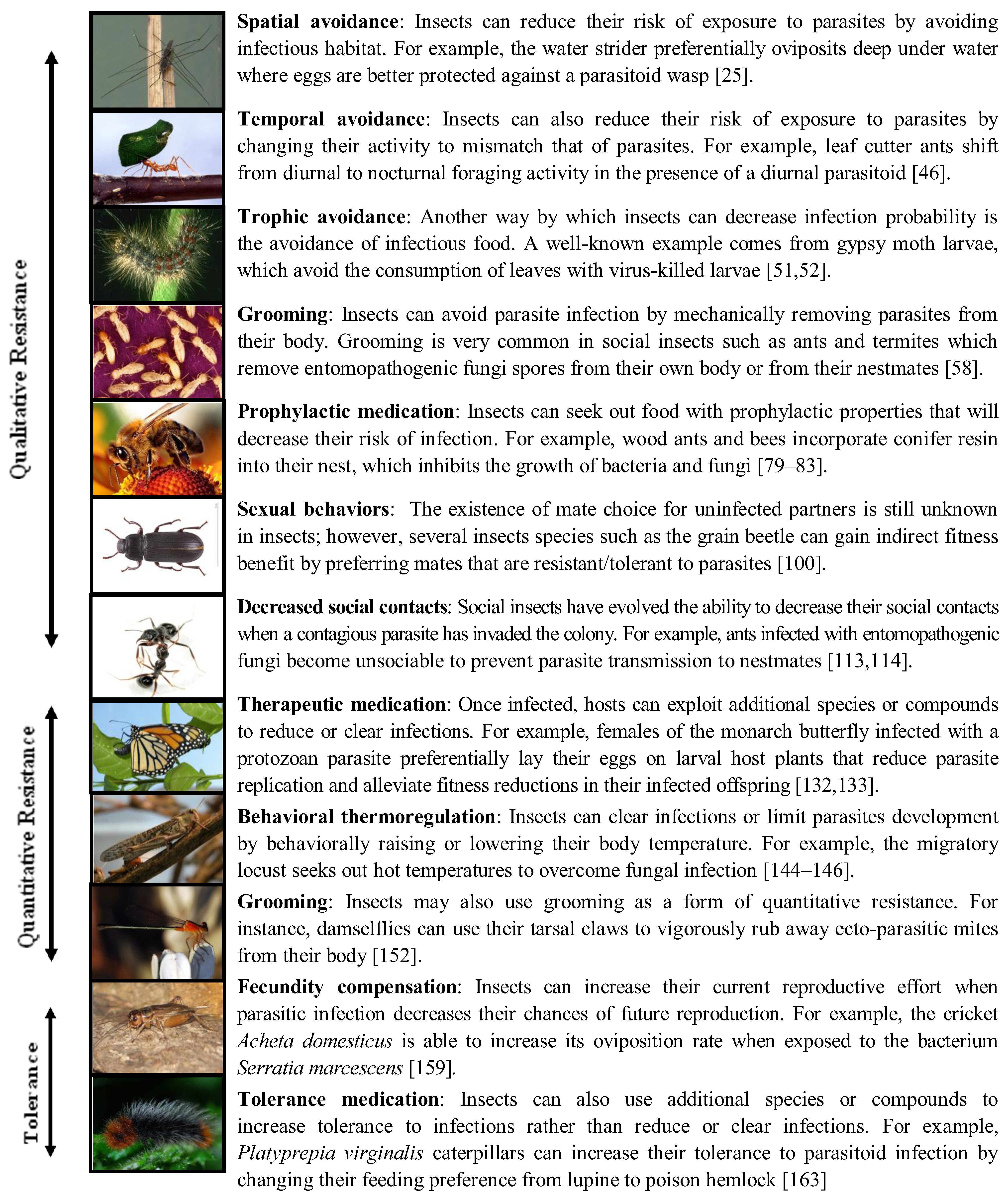

2. Qualitative Resistance: Avoiding Infection

2.1. Spatial Avoidance

2.2. Temporal Avoidance

2.3. Trophic Avoidance

2.4. Grooming

2.5. Prophylactic Medication

2.6. Sexual Behaviors

2.7. Decreased Contact with Conspecifics

3. Quantitative Resistance: Reducing Parasite Growth and Clearance

3.1. Therapeutic Medication

3.2. Behavioral Thermo-Regulation

3.3. Grooming

4. Tolerance

4.1. Fecundity Compensation

4.2. Tolerance Medication

5. Ecological and Evolutionary Consequences

5.1. Costs and Trade-Offs

5.2. Loss or Reduction of Non-Behavioral Immunity

5.3. Parasite Risk and Behavior: Plastic and Fixed Behaviors

5.4. Local Adaptation

5.5. Resistance vs. Tolerance

5.6. Virulence Evolution

5.7. Parasites Strike Back: Manipulation

6. Lessons for Vertebrates

7. Conclusions

Acknowledgements

References

- Gillespie, J.P.; Kanost, M.R.; Trenczek, T. Biological mediators of insect immunity. Annu. Rev. Entomol. 1997, 42, 611–643. [Google Scholar] [CrossRef]

- Rolff, J.; Reynolds, K.T. Insect Infection and Immunity: Evolution, Ecology, and Mechanisms; Oxford University Press: Oxford, UK, 2009. [Google Scholar]

- St Leger, R.J. Integument as a barrier to microbial infections. In The Physiology of Insect Epidermis; Retnakaran, A., Binnington, K., Eds.; CSIRO: Clayto, Australia, 1991; pp. 286–308. [Google Scholar]

- Moore, J. Parasites and the Behavior of Animals; Oxford University Press: Oxford, UK, 2002. [Google Scholar]

- Michalakis, Y. Parasitism and the Evolution of Life-History Traits. In Ecology and Evolution of Parasitism; Thomas, F., Guegan, J.-F., Renaud, F., Eds.; Oxford University Press: Oxford, UK, 2009; pp. 19–30. [Google Scholar]

- Oliver, K.M.; Degnan, P.H.; Burke, G.R.; Moran, N.A. Facultative symbionts in aphids and the horizontal transfer of ecologically important traits. Annu. Rev. Entomol. 2010, 55, 247–266. [Google Scholar] [CrossRef]

- Parker, B.J.; Barribeau, S.M.; Laughton, A.M.; De Roode, J.C.; Gerardo, N.M. Non-immunological defense in an evolutionary framework. Trends Ecol. Evol. 2011, 26, 242–248. [Google Scholar] [CrossRef]

- Schaller, M.; Park, J.H. The behavioral immune system (and why it matters). Curr. Dir. Psychol. Sci. 2011, 20, 99–103. [Google Scholar] [CrossRef]

- Hart, B.L. Behavioural defences in animals against pathogens and parasites: Parallels with the pillars of medicine in humans. Philos. Trans. R. Soc. B Biol. Sci. 2011, 366, 3406–3417. [Google Scholar] [CrossRef]

- Schaller, M. The behavioural immune system and the psychology of human sociality. Philos. Trans. R. Soc. B Biol. Sci. 2011, 366, 3418–3426. [Google Scholar] [CrossRef]

- Perrot-Minnot, M.-J.; Cézilly, F. Parasites and Behaviour. In Ecology and Evolution of Parasitism; Thomas, F., Guegan, J.-F., Renaud, F., Eds.; Oxford University Press: Oxford, UK, 2009; pp. 49–68. [Google Scholar]

- Roy, B.A.; Kirchner, J.W. Evolutionary dynamics of pathogen resistance and tolerance. Evolution 2000, 54, 51–63. [Google Scholar]

- Rausher, M.D. Co-evolution and plant resistance to natural enemies. Nature 2001, 411, 857–864. [Google Scholar] [CrossRef]

- Restif, O.; Koella, J.C. Concurrent evolution of resistance and tolerance to pathogens. Am. Nat. 2004, 164, E90–E102. [Google Scholar] [CrossRef]

- Miller, M.R.; White, A.; Boots, M. The evolution of host resistance: Tolerance and control as distinct strategies. J. Theor. Biol. 2005, 236, 198–207. [Google Scholar] [CrossRef]

- Best, A.; White, A.; Boots, M. Maintenance of host variation in tolerance to pathogens and parasites. Proc. Natl. Acad. Sci. USA 2008, 105, 20786–20791. [Google Scholar]

- Hart, B.L. Behavioral adaptations to pathogens and parasites-5 strategies. Neurosci. Biobehav. Rev. 1990, 14, 273–294. [Google Scholar] [CrossRef]

- Schmid-Hempel, P. Parasites in Social Insects; Princeton University Press: Princeton, NJ, USA, 1998; Volume 26, pp. 255–271. [Google Scholar]

- Cremer, S.; Armitage, S.A.O.; Schmid-Hempel, P. Social immunity. Curr. Biol. 2007, 17, R693–R702. [Google Scholar] [CrossRef]

- Wilson-Rich, N.; Spivak, M.; Fefferman, N.H.; Starks, P.T. Genetic, individual, and group facilitation of disease resistance in insect societies. Annu. Rev. Entomol. 2009, 54, 405–423. [Google Scholar] [CrossRef]

- Cremer, S.; Sixt, M. Analogies in the evolution of individual and social immunity. Philos. Trans. R. Soc. B Biol. Sci. 2009, 364, 129–142. [Google Scholar] [CrossRef]

- Cotter, S.C.; Kilner, R.M. Personal immunity versus social immunity. Behav. Ecol. 2010, 21, 663–668. [Google Scholar] [CrossRef]

- Kilpatrick, A.M.; Kramer, L.D.; Jones, M.J.; Marra, P.P.; Daszak, P. West Nile virus epidemics in North America are driven by shifts in mosquito feeding behavior. PLoS Biol. 2006, 4, e82. [Google Scholar] [CrossRef]

- Loehle, C. Social barrier to pathogen transmission in wild animal populations. Ecology 1995, 76, 326–335. [Google Scholar] [CrossRef]

- Amano, H.; Hayashi, K.; Kasuya, E. Avoidance of egg parasitism through submerged oviposition by tandem pairs in the water strider, Aquarius paludum insularis (Heteroptera: Gerridae). Ecol. Entomol. 2008, 33, 560–563. [Google Scholar] [CrossRef]

- Altizer, S.; Bartel, R.A.; Han, B.A. Animal migration and infectious disease risk. Science 2011, 331, 296–302. [Google Scholar]

- Bartel, R.A.; Oberhauser, K.S.; de Roode, J.C.; Altizer, S. Monarch butterfly migration and parasite transmission in eastern North America. Ecology 2011, 92, 342–351. [Google Scholar] [CrossRef]

- Bradley, C.A.; Altizer, S. Parasites hinder monarch butterfly flight: Implications for disease spread in migratory hosts. Ecol. Lett. 2005, 8, 290–300. [Google Scholar] [CrossRef]

- Jeffries, M.J.; Lawton, J.H. Enemy free space and the structure of ecological communities. Biol. J. Linn. Soc. Lond. 1984, 23, 269–286. [Google Scholar] [CrossRef]

- Thompson, J.N. Evolutionary ecology of the relationship between oviposition preference and performance of offspring in phytophagous insects. Entomol. Exp. Appl. 1988, 47, 3–14. [Google Scholar] [CrossRef]

- Lowenberger, C.A.; Rau, M.E. Selective oviposition by Aedes aegypti (Diptera: Culicidae) in response to a larval parasite, Plagiorchis elegans (Trematoda: Plagiorchiidae). Environ. Entomol. 1994, 23, 920–923. [Google Scholar]

- Zahiri, N.; Rau, M.E.; Lewis, D.J.; Khanizadeh, S. Intensity and site of Plagiorchis elegans (Trematoda; Plagiorchiidae) infection in Aedes aegypti (Diptera: Culicidae) larvae affect the attractiveness of their waters to ovipositing, conspecific females. Environ. Entomol. 1997, 26, 920–923. [Google Scholar]

- Zahiri, N.; Rau, M.E.; Lewis, D.J. Oviposition responses of Aedes aegypti and Ae. atropalpus (Diptera: Culicidae) females to waters from conspecific and heterospecific normal larvae and from larvae infected with Plagiorchis elegans (Trematoda: Plagiorchiidae). J. Med. Entomol. 1997, 34, 565–568. [Google Scholar]

- Zahiri, N.; Rau, M.E. Oviposition attraction and repellency of Aedes aegypti (Diptera: Culicidae) to waters from conspecific larvae subjected to crowding, confinement, starvation, or infection. J. Med. Entomol. 1998, 35, 782–787. [Google Scholar]

- Feder, J.L. The effects of parasitoids on sympatric host races of Rhagoletis pomonella (Diptera, Tephritidae). Ecology 1995, 76, 801–813. [Google Scholar] [CrossRef]

- Videla, M.; Valladares, G.; Salvo, A. A tritrophic analysis of host preference and performance in a polyphagous leafminer. Entomol. Exp. Appl. 2006, 121, 105–114. [Google Scholar] [CrossRef]

- Chen, Y.H.; Welter, S.C. Crop domestication creates a refuge from parasitism for a native moth. J. Appl. Ecol. 2007, 44, 238–245. [Google Scholar]

- Sadek, M.M.; Hansson, B.S.; Anderson, P. Does risk of egg parasitism affect choice of oviposition sites by a moth? A field and laboratory study. Basic Appl. Ecol. 2010, 11, 135–143. [Google Scholar] [CrossRef]

- Vet, L.E.M.; Dicke, M. Ecology of infochemical use by natural enemies in a tritrophic context. Annu. Rev. Entomol. 1992, 37, 141–172. [Google Scholar] [CrossRef]

- Deas, J.B.; Hunter, M.S. Mothers modify eggs into shields to protect offspring from parasitism. Proc. R. Soc. Lond. B Biol. Sci. 2011, 279, 847–853. [Google Scholar]

- Weiss, M.R. Defecation behavior and ecology of insects. Annu. Rev. Entomol. 2006, 51, 635–661. [Google Scholar] [CrossRef]

- Carrasco, D.; Kaitala, A. Egg-laying tactic in Phyllomorpha laciniata in the presence of parasitoids. Entomol. Exp. Appl. 2009, 131, 300–307. [Google Scholar] [CrossRef]

- Carrasco, D.; Kaitala, A. Active protection of unrelated offspring against parasitoids. A byproduct of self defense? Behav. Ecol. Soc. 2010, 64, 1291–1298. [Google Scholar] [CrossRef]

- Feener, D.H. Effects of parasites on foraging and defense behavior of a termitophagous ant, Pheidole titanis Wheeler (Hymenoptera: Formicidae). Behav. Ecol. Soc. 1988, 22, 421–427. [Google Scholar] [CrossRef]

- Feener, D.H.; Brown, B.V. Reduced foraging of Solenopsis geminata (Hymenoptera: Formicidae) in the presence of parasitic Pseudacteon spp. (Diptera: Phoridae). Ann. Entomol. Soc. Am. 1992, 85, 80–84. [Google Scholar]

- Orr, M.R. Parasitic flies (Diptera: Phoridae) influence foraging rhythms and caste division of labor in the leaf-cutter ant, Atta cephalotes (Hymenoptera: Formicidae). Behav. Ecol. Soc. 1992, 30, 395–402. [Google Scholar]

- Porter, S.D.; Vander Meer, R.K.; Pesquero, M.A.; Campiolo, S.; Fowler, H.G. Solenopsis (Hymenoptera: Formicidae) fire ant reactions to attacks of Pseudacteon flies (Diptera: Phoridae) in Southeastern Brazil. Ann. Entomol. Soc. Am. 1995, 88, 570–575. [Google Scholar]

- Folgarait, P.J.; Gilbert, L.E. Phorid parasitoids affect foraging activity of Solenopsis richteri under different availability of food in Argentina. Ecol. Entomol. 1999, 24, 163–173. [Google Scholar] [CrossRef]

- Lozano, G.A. Optimal foraging theory: A possible role for parasites. Oikos 1991, 60, 391–395. [Google Scholar] [CrossRef]

- Hutchings, M.R.; Athanasiadou, S.; Kyriazakis, I.; Gordon, I.J. Can animals use foraging behaviour to combat parasites? Proc. Nutr. Soc. 2003, 62, 361–370. [Google Scholar] [CrossRef]

- Capinera, J.L.; Kirouac, S.P.; Barbosa, P. Phagodeterrency of cadaver components to gypsy moth larvae, Lymantria dispar. J. Invertebr. Pathol. 1976, 28, 277–279. [Google Scholar] [CrossRef]

- Parker, B.J.; Elderd, B.D.; Dwyer, G. Host behaviour and exposure risk in an insect-pathogen interaction. J. Anim. Ecol. 2010, 79, 863–870. [Google Scholar]

- Hutchings, M.R.; Gordon, I.J.; Kyriazakis, I.; Jackson, F. Sheep avoidance of faeces-contaminated patches leads to a trade-off between intake rate of forage and parasitism in subsequent foraging decisions. Anim. Behav. 2001, 62, 955–964. [Google Scholar] [CrossRef]

- Hajek, A.E.; St Leger, R.J. Interactions between fungal pathogens and insect hosts. Annu. Rev. Entomol. 1994, 39, 293–322. [Google Scholar] [CrossRef]

- Gaugler, R.; Wang, Y.; Campbell, J.F. Aggressive and evasive behaviors in Popillia japonica (Coleoptera, Scarabaeidae) larvae-defenses against entomopathogenic nematode attack. J. Invertebr. Pathol. 1994, 64, 193–199. [Google Scholar] [CrossRef]

- Rosengaus, R.B.; Maxmen, A.B.; Coates, L.E.; Traniello, J.F.A. Disease resistance: A benefit of sociality in the dampwood termite Zootermopsis angusticollis (Isoptera: Termopsidae). Behav. Ecol. Soc. 1998, 44, 125–134. [Google Scholar] [CrossRef]

- Hughes, W.O.H.; Eilenberg, J.; Boomsma, J.J. Trade-offs in group living: Transmission and disease resistance in leaf-cutting ants. Proc. R. Soc. Lond. B Biol. Sci. 2002, 269, 1811–1819. [Google Scholar] [CrossRef]

- Yanagawa, A.; Shimizu, S. Resistance of the termite, Coptotermes formosanus Shiraki to Metarhizium anisopliae due to grooming. BioControl 2007, 52, 75–85. [Google Scholar] [CrossRef]

- Walker, T.N.; Hughes, W.O.H. Adaptive social immunity in leaf-cutting ants. Biol. Lett. 2009, 5, 446–448. [Google Scholar] [CrossRef]

- Traniello, J.F.A.; Rosengaus, R.B.; Savoie, K. The development of immunity in a social insect: Evidence for the group facilitation of disease resistance. Proc. Natl. Acad. Sci. USA 2002, 99, 6838–6842. [Google Scholar] [CrossRef]

- Ugelvig, L.V.; Cremer, S. Social prophylaxis: Group interaction promotes collective immunity in ant colonies. Curr. Biol. 2007, 17, 1967–1971. [Google Scholar] [CrossRef]

- Yanagawa, A.; Yokohari, F.; Shimizu, S. The role of antennae in removing entomopathogenic fungi from cuticle of the termite, Coptotermes formosanus. J. Insect Sci. 2009, 9, 1–9. [Google Scholar]

- Yanagawa, A.; Yokohari, F.; Shimizu, S. Influence of fungal odor on grooming behavior of the termite, Coptotermes formosanus. J. Insect Sci. 2010, 10. [Google Scholar] [CrossRef]

- Yanagawa, A.; Fujiwara-Tsujii, N.; Akino, T.; Yoshimura, T.; Yanagawa, T.; Shimizu, S. Musty odor of entomopathogens enhances disease-prevention behaviors in the termite Coptotermes formosanus. J. Invertebr. Pathol. 2011, 108, 1–6. [Google Scholar] [CrossRef]

- Oxley, P.R.; Spivak, M.; Oldroyd, B.P. Six quantitative trait loci influence task thresholds for hygienic behaviour in honeybees (Apis mellifera). Mol. Ecol. 2010, 19, 1452–1461. [Google Scholar] [CrossRef]

- Spivak, M.; Masterman, R.; Ross, R.; Mesce, K.A. Hygienic behavior in the honey bee (Apis mellifera L.) and the modulatory role of octopamine. J. Neurobiol. 2003, 55, 341–354. [Google Scholar] [CrossRef]

- Swanson, J.A.I.; Torto, B.; Kells, S.A.; Mesce, K.A.; Tumlinson, J.H.; Spivak, M. Odorants that induce hygienic behavior in honeybees: Identification of volatile compounds in chalkbrood-infected honeybee larvae. J. Chem. Ecol. 2009, 35, 1108–1116. [Google Scholar] [CrossRef]

- Woodrow, A.W.; Holst, E.C. The mechanism of colony resistance to American foulbrood. J. Econ. Entomol. 1942, 35, 327–330. [Google Scholar]

- Gilliam, M.; Taber, S.; Richardson, G.V. Hygienic behavior of honey bees in relation to chalkbrood disease. Apidologie 1983, 14, 29–39. [Google Scholar] [CrossRef]

- Rothenbuhler, W. Behaviour genetics of nest cleaning in honey bees. I. Responses of four inbred lines to disease-killed brood. Anim. Behav. 1964, 12, 578–583. [Google Scholar] [CrossRef]

- Boecking, O.; Drescher, W. The removal response of Apis mellifera L. colonies to brood in wax and plastic cells after artificial and natural infestation with Varroa jacobsoni Oud. and to freeze-killed brood. Exp. Appl. Acarol. 1992, 16, 321–329. [Google Scholar] [CrossRef]

- Raubenheimer, D.; Simpson, S.J. Nutritional PharmEcology: Doses, nutrients, toxins, and medicines. Integr. Comp. Biol. 2009, 49, 329–337. [Google Scholar] [CrossRef]

- Ponton, F.; Wilson, K.; Cotter, S.C.; Raubenheimer, D.; Simpson, S.J. Nutritional immunology: A multi-dimensional approach. PLoS Pathog. 2011, 7, e1002223. [Google Scholar] [CrossRef]

- Janzen, D.H. Complications in interpreting the chemical defenses of trees against tropical arboreal plant-eating vertebrates. In Ecology of Arboreal Folivores; Montgomery, G.G., Ed.; Smithsonian Institution Press: Washington, DC, USA, 1978; pp. 73–84. [Google Scholar]

- Nishida, R. Sequestration of defensive substances from plants by Lepidoptera. Annu. Rev. Entomol. 2002, 47, 57–92. [Google Scholar] [CrossRef]

- Singer, M.S.; Stireman, J.O. Does anti-parasitoid defense explain host-plant selection by a polyphagous caterpillar? Oikos 2003, 100, 554–562. [Google Scholar] [CrossRef]

- Singer, M.S.; Rodrigues, D.; Stireman, J.O.; Carrière, Y. Roles of food quality and enemy-free space in host use by a generalist insect herbivore. Ecology 2004, 85, 2747–2753. [Google Scholar] [CrossRef]

- Singer, M.S.; Carrière, Y.; Theuring, C.; Hartmann, T. Disentangling food quality from resistance against parasitoids: Diet choice by a generalist caterpillar. Am. Nat. 2004, 164, 423–429. [Google Scholar] [CrossRef]

- Christe, P.; Oppliger, A.; Bancal, F.; Castella, G.; Chapuisat, M. Evidence for collective medication in ants. Ecol. Lett. 2003, 6, 19–22. [Google Scholar]

- Chapuisat, M.; Oppliger, A.; Magliano, P.; Christe, P. Wood ants use resin to protect themselves against pathogens. Proc. R. Soc. Lond. B Biol. Sci. 2007, 274, 2013–2017. [Google Scholar] [CrossRef]

- Castella, G.; Chapuisat, M.; Christe, P. Prophylaxis with resin in wood ants. Anim. Behav. 2008, 75, 1591–1596. [Google Scholar] [CrossRef]

- Simone, M.; Evans, J.D.; Spivak, M. Resin collection and social immunity in honey bees. Evolution 2009, 63, 3016–3022. [Google Scholar] [CrossRef]

- Simone-Finstrom, M.; Spivak, M. Propolis and bee health: The natural history and significance of resin use by honey bees. Apidologie 2010, 41, 295–311. [Google Scholar] [CrossRef]

- Simone-Finstrom, M.D.; Spivak, M. Increased resin collection after parasite challenge: A case of self-medication in honey bees? PLoS One 2012, 7, e34601. [Google Scholar] [CrossRef]

- Loehle, C. The pathogen transmission avoidance theory of sexual selection. Ecol. Model. 1997, 103, 231–250. [Google Scholar] [CrossRef]

- Hamilton, W.D.; Zuk, M. Heritable true fitness and bright birds: A role for parasites? Science 1982, 218, 384–387. [Google Scholar]

- Lockhart, A.B.; Thrall, P.H.; Antonovics, J. Sexually transmitted diseases in animals: Ecological and evolutionary implications. Biol. Rev. Camb. Philos. Soc. 1996, 71, 415–471. [Google Scholar] [CrossRef]

- Penn, D.; Potts, W.K. Chemical signals and parasite-mediated sexual selection. Trends Ecol. Evol. 1998, 13, 391–396. [Google Scholar] [CrossRef]

- Nunn, C. Behavioural defences against sexually transmitted diseases in primates. Anim. Behav. 2003, 66, 37–48. [Google Scholar] [CrossRef]

- Knell, R.J.; Webberley, K.M. Sexually transmitted diseases of insects: Distribution, evolution, ecology and host behaviour. Biol. Rev. Camb. Philos. Soc. 2004, 79, 557–581. [Google Scholar] [CrossRef]

- Abbot, P.; Dill, L.M. Sexually transmitted parasites and sexual selection in the milkweed leaf beetle, Labidomera clivicollis. Oikos 2001, 92, 91–100. [Google Scholar]

- Webberley, K.M.; Hurst, G.D.D.; Buszko, J.; Majerus, M.E.N. Lack of parasite-mediated sexual selection in a ladybird/sexually transmitted disease system. Anim. Behav. 2002, 63, 131–141. [Google Scholar] [CrossRef]

- Luong, L.T.; Kaya, H.K. Sexually transmitted parasites and host mating behavior in the decorated cricket. Behav. Ecol. 2005, 16, 794–799. [Google Scholar] [CrossRef]

- Rosengaus, R.B.; James, L.-T.; Hartke, T.R.; Brent, C.S. Mate preference and disease risk in Zootermopsis angusticollis (Isoptera: Termopsidae). Environ. Entomol. 2011, 40, 1554–1565. [Google Scholar] [CrossRef]

- Knell, R.J. Sexually transmitted disease and parasite-mediated sexual selection. Evolution 1999, 53, 957–961. [Google Scholar] [CrossRef]

- Rantala, M.J.; Koskimäki, J.; Taskinen, J.; Tynkkynen, K.; Suhonen, J. Immunocompetence, developmental stability and wingspot size in the damselfly Calopteryx splendens L. Proc. R. Soc. Lond. B Biol. Sci. 2000, 267, 2453–2457. [Google Scholar] [CrossRef]

- Siva-Jothy, M.T. A mechanistic link between parasite resistance and expression of a sexually selected trait in a damselfly. Proc. R. Soc. Lond. B Biol. Sci. 2000, 267, 2523–2527. [Google Scholar] [CrossRef]

- Siva-Jothy, M.T. Male wing pigmentation may affect reproductive success via female choice in a calopterygid damselfly (Zygoptera). Behaviour 1999, 136, 1365–1377. [Google Scholar] [CrossRef]

- Rantala, M.J.; Kortet, R. Courtship song and immune function in the field cricket Gryllus bimaculatus. Biol. J. Linn. Soc. Lond. 2003, 79, 503–510. [Google Scholar] [CrossRef]

- Rantala, M.J.; Kortet, R.; Kotiaho, J.S.; Vainikka, A.; Suhonen, J. Condition dependence of pheromones and immune function in the grain beetle Tenebrio molitor. Funct. Ecol. 2003, 17, 534–540. [Google Scholar] [CrossRef]

- Ryder, J.J.; Siva-Jothy, M.T. Male calling song provides a reliable signal of immune function in a cricket. Proc. R. Soc. Lond. B Biol. Sci. 2000, 267, 1171–1175. [Google Scholar] [CrossRef]

- Tregenza, T.; Simmons, L.W.; Wedell, N.; Zuk, M. Female preference for male courtship song and its role as a signal of immune function and condition. Anim. Behav. 2006, 72, 809–818. [Google Scholar] [CrossRef]

- Cade, W.H. Alternative male strategies: Genetic differences in crickets. Science 1981, 212, 563–564. [Google Scholar]

- Zuk, M.; Simmons, L.W.; Cupp, L. Calling characteristics of parasitized and unparasitized populations of the field cricket Teleogryllus oceanicus. Behav. Ecol. Soc. 1993, 33, 339–343. [Google Scholar]

- Zuk, M.; Simmons, L.W.; Rotenberry, J.T. Acoustically-orienting parasitoids in calling and silent males of the field cricket Teleogryllus oceanicus. Ecol. Entomol. 1995, 20, 380–383. [Google Scholar] [CrossRef]

- Zuk, M.; Rotenberry, J.T.; Simmons, L.W. Calling songs of field crickets (Teleogryllus oceanicus) with and without phonotactic parasitoid infection. Evolution 1998, 52, 166–171. [Google Scholar] [CrossRef]

- Zuk, M.; Rotenberry, J.T.; Simmons, L.W. Geographical variation in calling song of the field cricket Teleogryllus oceanicus: The importance of spatial scale. J. Evol. Biol. 2001, 14, 731–741. [Google Scholar]

- Zuk, M.; Rotenberry, J.T.; Tinghitella, R.M. Silent night: Adaptive disappearance of a sexual signal in a parasitized population of field crickets. Biol. Lett. 2006, 2, 521–524. [Google Scholar] [CrossRef]

- Rotenberry, J.T.; Zuk, M.; Simmons, L.W.; Hayes, C. Phonotactic parasitoids and cricket song structure: An evaluation of alternative hypotheses. Evol. Ecol. 1996, 10, 233–243. [Google Scholar] [CrossRef]

- Lewkiewicz, D.A.; Zuk, M. Latency to resume calling after disturbance in the field cricket, Teleogryllus oceanicus, corresponds to population-level differences in parasitism ris. Behav. Ecol. Soc. 2004, 55, 569–573. [Google Scholar] [CrossRef]

- Marikovsky, P.I. On some features of behavior of the ants Formica rufa L. infected with fungus disease. Insect Soc. 1962, 9, 173–179. [Google Scholar] [CrossRef]

- Hamilton, W.D. The genetical evolution of social behaviour. I. J. Theor. Biol. 1964, 7, 1–16. [Google Scholar] [CrossRef]

- Heinze, J.; Walter, B. Moribund ants leave their nests to die in social isolation. Curr. Biol. 2010, 20, 249–252. [Google Scholar] [CrossRef]

- Bos, N.; Lefèvre, T.; Jensen, A.B.; D’Ettorre, P. Sick ants become unsociable. J. Evol. Biol. 2011, 25, 1–10. [Google Scholar]

- Rueppell, O.; Hayworth, M.K.; Ross, N.P. Altruistic self-removal of health-compromised honey bee workers from their hive. J. Evol. Biol. 2010, 23, 1538–1546. [Google Scholar]

- Clayton, D.H.; Wolfe, N.D. The adaptive significance of self-medication. Trends Ecol. Evol. 1993, 8, 60–63. [Google Scholar] [CrossRef]

- Lozano, G.A. Parasitic stress and self-medication in wild animals. Adv. Study Behav. 1998, 27, 291–317. [Google Scholar] [CrossRef]

- Sapolsky, R.M. Fallible instinct- a dose of skepticism about the medicinal “knowledge” of animals. Sciences 1994, 34, 13–15. [Google Scholar]

- Bernays, E.A.; Singer, M.S. Taste alteration and endoparasites. Nature 2005, 436. [Google Scholar] [CrossRef]

- Singer, M.S.; Mace, K.C.; Bernays, E.A. Self-medication as adaptive plasticity: Increased ingestion of plant toxins by parasitized caterpillars. PLoS One 2009, 4, e4796. [Google Scholar]

- Smilanich, A.M.; Mason, P.A.; Sprung, L.; Chase, T.R.; Singer, M.S. Complex effects of parasitoids on pharmacophagy and diet choice of a polyphagous caterpillar. Oecologia 2011, 165, 995–1005. [Google Scholar] [CrossRef]

- Milan, N.F.; Kacsoh, B.Z.; Schlenke, T.A. Alcohol consumption as self-medication against blood-borne parasites in the fruit fly. Curr. Biol. 2012, 22, 488–493. [Google Scholar] [CrossRef]

- Lee, K.P.; Cory, J.S.; Wilson, K.; Raubenheimer, D.; Simpson, S.J. Flexible diet choice offsets protein costs of pathogen resistance in a caterpillar. Proc. R. Soc. Lond. B Biol. Sci. 2006, 273, 823–829. [Google Scholar] [CrossRef]

- Povey, S.; Cotter, S.C.; Simpson, S.J.; Lee, K.P.; Wilson, K. Can the protein costs of bacterial resistance be offset by altered feeding behaviour? J. Anim. Ecol. 2009, 78, 437–46. [Google Scholar] [CrossRef]

- Anagnostou, C.; LeGrand, E.A.; Rohlfs, M. Friendly food for fitter flies? -Influence of dietary microbial species on food choice and parasitoid resistance in Drosophila. Oikos 2010, 119, 533–541. [Google Scholar] [CrossRef]

- De Roode, J.C.; Gold, L.R.; Altizer, S. Virulence determinants in a natural butterfly-parasite system. Parasitol. 2007, 134, 657–668. [Google Scholar] [CrossRef]

- De Roode, J.C.; Yates, A.J.; Altizer, S. Virulence-transmission trade-offs and population divergence in virulence in a naturally occurring butterfly parasite. Proc. Natl. Acad. Sci. USA 2008, 105, 7489–7494. [Google Scholar]

- De Roode, J.C.; Chi, J.; Rarick, R.M.; Altizer, S. Strength in numbers: High parasite burdens increase transmission of a protozoan parasite of monarch butterflies (Danaus plexippus). Oecologia 2009, 161, 67–75. [Google Scholar] [CrossRef]

- De Roode, J.C.; Pedersen, A.B.; Hunter, M.D.; Altizer, S. Host plant species affects virulence in monarch butterfly parasites. J. Anim. Ecol. 2008, 77, 120–126. [Google Scholar] [CrossRef]

- De Roode, J.C.; Lopez Fernandez de Castillejo, C.; Faits, T.; Alizon, S. Virulence evolution in response to anti-infection resistance: Toxic food plants can select for virulent parasites of monarch butterflies. J. Evol. Biol. 2011, 24, 712–722. [Google Scholar]

- De Roode, J.C.; Rarick, R.M.; Mongue, A.; Gerardo, N.M.; Hunter, M.D. Aphids indirectly increase virulence and transmission potential of a monarch butterfly parasite by reducing defensive chemistry of a shared food plant. Ecol. Lett. 2011, 14, 453–461. [Google Scholar] [CrossRef]

- Lefèvre, T.; Oliver, L.; Hunter, M.D.; de Roode, J.C. Evidence for trans-generational medication in nature. Ecol. Lett. 2010, 13, 1485–1493. [Google Scholar] [CrossRef]

- Lefèvre, T.; Chiang, A.; Kelavkar, M.; Li, H.; Li, J.; Lopez Fernandez de Castillejo, C.; Oliver, L.; Potini, Y.; Hunter, M.D.; De Roode, J.C. Behavioural resistance against a protozoan parasite in the monarch butterfly. J. Anim. Ecol. 2011, 81, 70–79. [Google Scholar]

- Kluger, M.J. Fever in ectotherms: Evolutionary implications. Am. Zool. 1979, 19, 295–304. [Google Scholar]

- Thomas, M.B.; Blanford, S. Thermal biology in insect-parasite interactions. Trends Ecol. Evol. 2003, 18, 344–350. [Google Scholar]

- Roy, H.E.; Steinkraus, D.C.; Eilenberg, J.; Hajek, A.E.; Pell, J.K. Bizarre interactions and endgames: Entomopathogenic fungi and their arthropod hosts. Annu. Rev. Entomol. 2006, 51, 331–357. [Google Scholar] [CrossRef]

- Kalsbeek, V. Field studies of Entomophthora (Zygomycetes: Entomophthorales)—induced behavioral fever in Musca domestica (Diptera: Muscidae) in Denmark. Biol. Contr. 2001, 21, 264–273. [Google Scholar] [CrossRef]

- Watson, D.W.; Mullens, B.A.; Petersen, J.J. Behavioral fever response of Musca domestica (Diptera: Muscidae) to infection by Entomophthora muscae (Zygomycetes: Entomophthorales). J. Invertebr. Pathol. 1993, 61, 10–16. [Google Scholar] [CrossRef]

- Boorstein, S.M.; Ewald, P.W. Costs and benefits of behavioural fever in Melanoplus sanguinipes infected by Nosema acridophagus. Physiol. Zool. 1987, 60, 586–595. [Google Scholar]

- Carruthers, R.I.; Larkin, T.S.; Firstencel, H.; Feng, Z. Influence of thermal ecology on the mycosis of a rangeland grasshopper. Ecology 1992, 73, 190–204. [Google Scholar] [CrossRef]

- Inglis, G.D.; Johnson, D.L.; Goettel, M.S. Effects of temperature and sunlight on mycosis (Beauveria bassiana) (Hyphomycetes: Symopdulosporae) of grasshoppers under field conditions. Environ. Entomol. 1997, 26, 400–409. [Google Scholar]

- Inglis, G.D.; Johnson, D.L.; Goettel, M.S. Effects of temperature and thermoregulation on mycosis by Beauveria bassiana in grasshoppers. Biol. Control 1996, 7, 131–139. [Google Scholar] [CrossRef]

- Blanford, S.; Thomas, M.B.; Langewald, J. Behavioural fever in the Senegalese grasshopper, Oedaleus senegalensis, and its implications for biological control using pathogens. Ecol. Entomol. 1998, 23, 9–14. [Google Scholar] [CrossRef]

- Elliot, S.L.; Blanford, S.; Thomas, M.B. Host-pathogen interactions in a varying environment: Temperature, behavioural fever and fitness. Proc. R. Soc. Lond. B Biol. Sci. 2002, 269, 1599–1607. [Google Scholar] [CrossRef]

- Ouedraogo, R.M.; Cusson, M.; Goettel, M.S.; Brodeur, J. Inhibition of fungal growth in thermoregulating locusts, Locusta migratoria, infected by the fungus Metarhizium anisopliae var acridum. J. Invertebr. Pathol. 2003, 82, 103–109. [Google Scholar] [CrossRef]

- Ouedraogo, R.M.; Goettel, M.S.; Brodeur, J. Behavioral thermoregulation in the migratory locust: A therapy to overcome fungal infection. Oecologia 2004, 138, 312–319. [Google Scholar] [CrossRef]

- Louis, C.; Jourdan, M.; Cabanac, M. Behavioral fever and therapy in a rickettsia-infected Orthoptera. Am. J. Physiol. 1986, 250, R991–R995. [Google Scholar]

- Adamo, S.A. The specificity of behavioral fever in the cricket Acheta domesticus. J. Parasitol. 1998, 84, 529–533. [Google Scholar] [CrossRef]

- Starks, P.T.; Blackie, C.A.; Seeley, T.D. Fever in honeybee colonies. Naturwissenschaften 2000, 87, 229–231. [Google Scholar] [CrossRef]

- Müller, C.B.; Schmid-Hempel, P. Exploitation of cold temperature as defence against parasitoids in bumblebees. Nature 1993, 363, 65–67. [Google Scholar]

- Moore, J.; Freehling, M. Cockroach hosts in thermal gradients suppress parasite development. Oecologia 2002, 133, 261–266. [Google Scholar] [CrossRef]

- Leung, B.; Forbes, M.R.; Baker, R.L. Nutritional stress and behavioural immunity of damselflies. Anim. Behav. 2001, 61, 1093–1099. [Google Scholar] [CrossRef]

- Williams, G.C. Natural selection, the costs of reproduction, and a refinement of Lack’s principle. Am. Nat. 1966, 100, 687–690. [Google Scholar]

- Clutton-Brock, T.H. Reproductive effort and terminal investment in iteroparous animals. Am. Nat. 1984, 123, 212–229. [Google Scholar]

- Minchella, D.J. Host life-history variation in response to parasitism. Parasitology 1985, 90, 205–216. [Google Scholar] [CrossRef]

- Minchella, D.J.; Loverde, P.T. A cost of increased early reproductive effort in the snail Biomphalaria glabrata. Am. Nat. 1981, 118, 876–881. [Google Scholar]

- Agnew, P.; C Koella, J.; Michalakis, Y. Host life history responses to parasitism. Microb. Inf. 2000, 2, 891–896. [Google Scholar] [CrossRef]

- Polak, M.; Starmer, W.T. Parasite-induced risk of mortality elevates reproductive effort in male Drosophila. Proc. R. Soc. Lond. B Biol. Sci. 1998, 265, 2197–2201. [Google Scholar] [CrossRef]

- Adamo, S.A. Evidence for adaptive changes in egg laying in crickets exposed to bacteria and parasites. Anim. Behav. 1999, 57, 117–124. [Google Scholar] [CrossRef]

- Barribeau, S.M.; Sok, D.; Gerardo, N.M. Aphid reproductive investment in response to mortality risks. BMC Evol. Biol. 2010, 10. [Google Scholar] [CrossRef]

- Reynolds, D.G. Laboratory studies of the microsporidian Plistophora culicis (Weiser) infecting Culex pipiens fatigans Wied. Bull. Entomol. Res. 1970, 60. [Google Scholar] [CrossRef]

- Sternberg, E.D.; Lefèvre, T.; Li, J.; Lopez Fernandez de Castillejo, C.; Li, H.; Hunter, M.D.; de Roode, J.C. Food plant-derived disease tolerance and resistance in a natural butterfly-plant-parasite interaction. Evolution 2012. [Google Scholar] [CrossRef]

- Karban, R.; English-Loeb, G. Tachinid parasitoids affect host plant choice by caterpillars to increase caterpillar survival. Ecology 1997, 78, 603–611. [Google Scholar] [CrossRef]

- Rolff, J.; Siva-Jothy, M.T. Invertebrate ecological immunology. Science 2003, 301, 472–475. [Google Scholar]

- Sadd, B.M.; Schmid-Hempel, P. Principles of ecological immunology. Evol. Appl. 2008, 2, 113–121. [Google Scholar] [CrossRef]

- Schulenburg, H.; Kurtz, J.; Moret, Y.; Siva-Jothy, M.T. Introduction. Ecological immunology. Philos. Trans. R. Soc. B Biol. Sci. 2009, 364, 3–14. [Google Scholar] [CrossRef]

- Sheldon, B.C.; Verhulst, S. Ecological immunology: Costly parasite defences and trade-offs in evolutionary ecology. Trends Ecol. Evol. 1996, 11, 317–321. [Google Scholar] [CrossRef]

- Boots, M.; Begon, M. Trade-offs with resistance to a granulosis virus in the Indian meal moth, examined by a laboratory evolution experiment. Funct. Ecol. 1993, 7, 528–534. [Google Scholar] [CrossRef]

- Moret, Y.; Schmid-Hempel, P. Survival for immunity: The price of immune system activation for bumblebee workers. Science 2000, 290, 1166–1168. [Google Scholar]

- Kraaijeveld, A.R.; Godfray, H.C. Trade-off between parasitoid resistance and larval competitive ability in Drosophila melanogaster. Nature 1997, 389, 278–280. [Google Scholar]

- Simmons, L.W.; Roberts, B. Bacterial immunity traded for sperm viability in male crickets. Science 2005, 309. [Google Scholar] [CrossRef]

- McKean, K.A.; Nunney, L. Sexual selection and immune function in Drosophila melanogaster. Evolution 2008, 62, 386–400. [Google Scholar] [CrossRef]

- Voordouw, M.J.; Koella, J.C.; Hurd, H. Comparison of male reproductive success in malaria-refractory and susceptible strains of Anopheles gambiae. Malar. J. 2008, 7. [Google Scholar] [CrossRef]

- Shoemaker, K.L.; Adamo, S.A. Adult female crickets, Gryllus texensis, maintain reproductive output after repeated immune challenges. Physiol. Entomol. 2007, 32, 113–120. [Google Scholar] [CrossRef]

- Elliot, S.L.; Hart, A.G. Density-dependent prophylactic immunity reconsidered in the light of host group living and social behavior. Ecology 2010, 91, 65–72. [Google Scholar] [CrossRef]

- Hughes, D.P.; Cremer, S. Plasticity in antiparasite behaviours and its suggested role in invasion biology. Anim. Behav. 2007, 74, 1593–1599. [Google Scholar] [CrossRef]

- Hirayama, H.; Kasuya, E. Cost of oviposition site selection in a water strider Aquarius paludum insularis: Egg mortality increases with oviposition depth. J. Insect Physiol. 2010, 56, 646–649. [Google Scholar] [CrossRef]

- Evans, J.D.; Aronstein, K.; Chen, Y.P.; Hetru, C.; Imler, J.-L.; Jiang, H.; Kanost, M.; Thompson, G.J.; Zou, Z.; Hultmark, D. Immune pathways and defence mechanisms in honey bees Apis mellifera. Insect Mol. Biol. 2006, 15, 645–656. [Google Scholar] [CrossRef]

- Castella, G.; Chapuisat, M.; Moret, Y.; Christe, P. The presence of conifer resin decreases the use of the immune system in wood ants. Ecol. Entomol. 2008, 33, 408–412. [Google Scholar] [CrossRef]

- Lefèvre, T.; de Roode, J.C.; Kacsoh, B.Z.; Schlenke, T.A. Defence strategies against a parasitoid wasp in Drosophila: Fight or flight? Biol. Lett. 2011, 8, 230–233. [Google Scholar]

- Carrai, V.; Borgognini-Tarli, S.M.; Huffman, M.A.; Bardi, M. Increase in tannin consumption by sifaka (Propithecus verreauxi verreauxi) females during the birth season: A case for self-medication in prosimians? Primates 2003, 44, 61–66. [Google Scholar]

- Hart, B.L. The evolution of herbal medicine: Behavioural perspectives. Anim. Behav. 2005, 70, 975–989. [Google Scholar] [CrossRef]

- Agrawal, A.A. Phenotypic plasticity in the interactions and evolution of species. Science 2001, 294, 321–326. [Google Scholar] [CrossRef]

- DeWitt, T.J.; Sih, A.; Wilson, D.S. Costs and limits of phenotypic plasticity. Trends Ecol. Evol. 1998, 13, 77–81. [Google Scholar] [CrossRef]

- Hirayama, H.; Kasuya, E. Oviposition depth in response to egg parasitism in the water strider: High-risk experience promotes deeper oviposition. Anim. Behav. 2009, 78, 935–941. [Google Scholar] [CrossRef]

- Jaenike, J. Parasite pressure and the evolution of amanitin tolerance in Drosophila. Evolution 1985, 39, 1295–1301. [Google Scholar] [CrossRef]

- Spicer, G.S.; Jaenike, J. Phylogenetic analysis of breeding site use and amanitin tolerance within the Drosophila quinaria Species Group. Evolution 1996, 50, 2328–2337. [Google Scholar] [CrossRef]

- Karban, R. Fine-scale adaptation of herbivorous thrips to individual host plants. Nature 1989, 340, 60–61. [Google Scholar] [CrossRef]

- Kawecki, T.J.; Ebert, D. Conceptual issues in local adaptation. Ecol. Lett. 2004, 7, 1225–1241. [Google Scholar] [CrossRef]

- Thompson, J.N. The Coevolutionary Process; University of Chicago Press: Chicago, IL, USA, 1994; p. 376. [Google Scholar]

- Thompson, J.N. The Geographic Mosaic of Coevolution; University of Chicago Press: Chicago, IL, USA, 2005.

- Greischar, M.A.; Koskella, B. A synthesis of experimental work on parasite local adaptation. Ecol. Lett. 2007, 10, 418–434. [Google Scholar] [CrossRef]

- Hoeksema, J.D.; Forde, S.E. A meta-analysis of factors affecting local adaptation between interacting species. Am. Nat. 2008, 171, 275–290. [Google Scholar] [CrossRef]

- Decaestecker, E.; Gaba, S.; Raeymaekers, J.A.M.; Stoks, R.; van Kerckhoven, L.; Ebert, D.; de Meester, L. Host-parasite “Red Queen” dynamics archived in pond sediment. Nature 2007, 450, 870–873. [Google Scholar]

- Kaltz, O.; Shykoff, J.A. Local adaptation in host-parasite systems. Heredity 1998, 81, 361–370. [Google Scholar] [CrossRef]

- Lively, C.M. Adaptation by a parasitic trematode to local populations of its snail host. Evolution 1989, 43, 1663–1671. [Google Scholar] [CrossRef]

- Lively, C.M. Migration, virulence, and the geographic mosaic of adaptation by parasites. Am. Nat. 1999, 153, S34–S47. [Google Scholar] [CrossRef]

- Lively, C.M.; Dybdahl, M.F. Parasite adaptation to locally common host genotypes. Nature 2000, 405, 679–681. [Google Scholar]

- Manning, S.D.; Woolhouse, M.E.; Ndamba, J. Geographic compatibility of the freshwater snail Bulinus globosus and schistosomes from the Zimbabwe highveld. Int. J. Parasitol. 1995, 25, 37–42. [Google Scholar] [CrossRef]

- McCoy, K.D.; Boulinier, T.; Schjørring, S. Local adaptation of the ectoparasite Ixodes uriae to its seabird host. Evol. Ecol. Res. 2002, 4, 441–456. [Google Scholar]

- Parker, M.A. Local population differentiation for compatibility in an annual legume and its host-specific fungal pathogen. Evolution 1985, 39, 713–723. [Google Scholar] [CrossRef]

- Thrall, P.H.; Burdon, J.J. Evolution of virulence in a plant host pathogen metapopulation. Science 2003, 299, 1735–1737. [Google Scholar]

- Thrall, P.H.; Burdon, J.J.; Bever, J.D. Local adaptation in the Linum marginale-Melampsora lini host-pathogen interaction. Evolution 2002, 56, 1340–1351. [Google Scholar]

- Imhoof, B.; Schmid-Hempel, P. Patterns of local adaptation of a protozoan parasite to its bumblebee host. Oikos 1998, 82, 59–65. [Google Scholar] [CrossRef]

- Kaltz, O.; Gandon, S.; Michalakis, Y.; Shykoff, J.A. Local maladaptation in the anther-smut fungus Microbotryum violaceum to its host plant Silene latifolia: Evidence from a cross-inoculation experiment. Evolution 1999, 53, 395–407. [Google Scholar] [CrossRef]

- Oppliger, A.; Vernet, R.; Baez, M. Parasite local maladaptation in the Canarian lizard Gallotia galloti (Reptilia: Lacertidae) parasitized by haemogregarian blood parasite. J. Evol. Biol. 1999, 12, 951–955. [Google Scholar]

- Burdon, J.J.; Thrall, P.H. Spatial and temporal patterns in coevolving plant and pathogen associations. Am. Nat. 1999, 153, S15–S33. [Google Scholar] [CrossRef]

- Dybdahl, M.F.; Storfer, A. Parasite local adaptation: Red queen versus suicide king. Trends Ecol. Evol. 2003, 18, 523–530. [Google Scholar] [CrossRef]

- Gandon, S. Local adaptation and the geometry of host-parasite coevolution. Ecol. Lett. 2002, 5, 246–256. [Google Scholar] [CrossRef]

- Boots, M.; Bowers, R.G. Three mechanisms of host resistance to microparasites-avoidance, recovery and tolerance-show different evolutionary dynamics. J. Theor. Biol. 1999, 201, 13–23. [Google Scholar] [CrossRef]

- Gandon, S.; Michalakis, Y. Evolution of parasite virulence against qualitative or quantitative host resistance. Proc. R. Soc. Lond. B Biol. Sci. 2000, 267, 985–990. [Google Scholar] [CrossRef]

- Van Baalen, M. Coevolution of recovery ability and virulence. Proc. R. Soc. Lond. B Biol. Sci. 1998, 265, 317–325. [Google Scholar] [CrossRef]

- Gandon, S.; Jansen, V.A.A.; van Baalen, M. Host life history and the evolution of parasite virulence. Evolution 2001, 55, 1056–1062. [Google Scholar] [CrossRef]

- Levin, S.; Pimentel, D. Selection of intermediate rates of increase in parasite-host systems. Am. Nat. 1981, 117, 308–315. [Google Scholar]

- Anderson, R.M.; May, R.M. Coevolution of hosts and parasites. Parasitology 1982, 85 (Pt. 2), 411–426. [Google Scholar]

- Frank, S.A. Models of parasite virulence. Q. Rev. Biol. 1996, 71, 37–78. [Google Scholar]

- Alizon, S.; Hurford, A.; Mideo, N.; van Baalen, M. Virulence evolution and the trade-off hypothesis: History, current state of affairs and the future. J. Evol. Biol. 2009, 22, 245–259. [Google Scholar]

- Bremermann, H.J.; Pickering, J. A game-theoretical model of parasite virulence. J. Theor. Biol. 1983, 100, 411–426. [Google Scholar] [CrossRef]

- Nowak, M.A.; May, R.M. Superinfection and the evolution of parasite virulence. Proc. R. Soc. Lond. B Biol. Sci. 1994, 255, 81–89. [Google Scholar] [CrossRef]

- Van Baalen, M.; Sabelis, M.W. The dynamics of multiple infection and the evolution of virulence. Am. Nat. 1995, 146, 881–910. [Google Scholar]

- De Roode, J.C.; Pansini, R.; Cheesman, S.J.; Helinski, M.E.H.; Huijben, S.; Wargo, A.R.; Bell, A.S.; Chan, B.H.K.; Walliker, D.; Read, A.F. Virulence and competitive ability in genetically diverse malaria infections. Proc. Natl. Acad. Sci. USA 2005, 102, 7624–7628. [Google Scholar]

- Alizon, S.; van Baalen, M. Multiple infections, immune dynamics, and the evolution of virulence. Am. Nat. 2008, 172, E150–E168. [Google Scholar] [CrossRef]

- Choisy, M.; de Roode, J.C. Mixed infections and the evolution of virulence: Effects of resource competition, parasite plasticity, and impaired host immunity. Am. Nat. 2010, 175, E105–E118. [Google Scholar] [CrossRef]

- Little, T.J.; Shuker, D.M.; Colegrave, N.; Day, T.; Graham, A.L. The coevolution of virulence: Tolerance in perspective. PLoS Pathog. 2010, 6, e1001006. [Google Scholar] [CrossRef]

- Miller, M.R.; White, A.; Boots, M. The evolution of parasites in response to tolerance in their hosts: The good, the bad, and apparent commensalism. Evolution 2006, 60, 945–956. [Google Scholar]

- Fraser, C.; Hollingsworth, T.D.; Chapman, R.; de Wolf, F.; Hanage, W.P. Variation in HIV-1 set-point viral load: Epidemiological analysis and an evolutionary hypothesis. Proc. Natl. Acad. Sci. USA 2007, 104, 17441–17446. [Google Scholar]

- Mackinnon, M.J.; Read, A.F. Virulence in malaria: An evolutionary viewpoint. Philos. Trans. R. Soc. B Biol. Sci. 2004, 359, 965–986. [Google Scholar] [CrossRef]

- Restif, O.; Koella, J.C. Shared control of epidemiological traits in a coevolutionary model of host-parasite interactions. Am. Nat. 2003, 161, 827–836. [Google Scholar] [CrossRef]

- Poulin, R. “Adaptive” changes in the behaviour of parasitized animals: A critical review. Int. J. Parasitol. 1995, 25, 1371–1383. [Google Scholar] [CrossRef]

- Thomas, F.; Adamo, S.A.; Moore, J. Parasitic manipulation: Where are we and where should we go? Behav. Process. 2005, 68, 185–199. [Google Scholar] [CrossRef]

- Lefèvre, T.; Roche, B.; Poulin, R.; Hurd, H.; Renaud, F.; Thomas, F. Exploitation of host compensatory responses: The “must” of manipulation? Trends Parasitol. 2008, 24, 435–439. [Google Scholar] [CrossRef]

- Lefèvre, T.; Adamo, S.A.; Biron, D.G.; Missé, D.; Hughes, D.P.; Thomas, F. Invasion of the body snatchers: The diversity and evolution of manipulative strategies in host-parasite interactions. Adv. Parasitol. 2009, 68, 45–83. [Google Scholar] [CrossRef]

- Poulin, R. Parasite manipulation of host behavior: An update and frequently asked questions. Adv. Study Behav. 2010, 41, 151–186. [Google Scholar] [CrossRef]

- Ebert, D. Virulence and local adaptation of a horizontally transmitted parasite. Science 1994, 265, 1084–1086. [Google Scholar]

- Dawkins, R.; Krebs, J.R. Arms races between and within species. Proc. R. Soc. Lond. B Biol. Sci. 1979, 205, 489–511. [Google Scholar] [CrossRef]

- Poulin, R.; Brodeur, J.; Moore, J. Parasite manipulation of host behaviour: Should hosts always lose? Oikos 1994, 70, 479–484. [Google Scholar] [CrossRef]

- Gandon, S.; Michalakis, Y. Local adaptation, evolutionary potential and host-parasite coevolution: Interactions between migration, mutation, population size and generation time. J. Evol. Biol. 2002, 15, 451–462. [Google Scholar] [CrossRef]

- Brockhurst, M.A.; Buckling, A.; Poullain, V.; Hochberg, M.E. The impact of migration from parasite-free patches on antagonistic host-parasite coevolution. Evolution 2007, 61, 1238–1243. [Google Scholar] [CrossRef]

© 2012 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

De Roode, J.C.; Lefèvre, T. Behavioral Immunity in Insects. Insects 2012, 3, 789-820. https://doi.org/10.3390/insects3030789
