Simple Summary

Honey bees are essential for food production and biodiversity, but extreme weather events and harsh winters can severely affect colonies and reduce honey yields. This study investigated whether winter weather could be used to predict honey production in the following harvest season. Environmental data from the winter and the harvest season, covering temperature, humidity, precipitation, pressure, wind, and vegetation, were used to train three machine learning (ML) models to predict honey yields. The models were trained on data collected from 2015 to 2019 in five Italian apiaries belonging to a breeding population. Model performance was evaluated using accuracy, sensitivity, specificity, precision, and area under the ROC curve (AUC). The results indicated that winter weather conditions are strongly associated with honey production and can serve as predictors. Understanding and forecasting these patterns can help beekeepers make informed decisions to protect their colonies and optimize honey yields.

Abstract

Bees are crucial for food production and biodiversity. However, extreme weather variation and harsh winters are among the leading causes of colony losses and low honey yields. This study aimed to identify the most important features and predict Total Honey Harvest (THH) by combining machine learning (ML) methods with climatic and environmental data recorded during the winter before the harvest season and during the harvest season itself. The initial dataset included 598 THH records collected from five apiaries in Lombardy (Italy) during spring and summer from 2015 to 2019. Colonies were classified as medium-low or high producers using the 75th percentile as a threshold. A total of 38 features related to temperature, humidity, precipitation, pressure, wind, and the enhanced vegetation index (EVI) were used. Three ML models were trained: Decision Tree, Random Forest, and Extreme Gradient Boosting (XGBoost). Model performance was evaluated using accuracy, sensitivity, specificity, precision, and area under the ROC curve (AUC). All models reached a prediction accuracy greater than 0.75 in both the training and testing sets. The results indicate that winter climatic conditions are important predictors of THH. Understanding the impact of climate can help beekeepers develop strategies to prevent colony decline and low production.

1. Introduction

There is a close relationship between honey production and climate. Honey production results from a complex social organisation whose external and internal activities depend on local environmental and geographical conditions. Honey bee flight activity, waggle-run frequency, honey stores, queen provisioning, egg-laying rate, and development are influenced by environmental factors [1,2,3,4]. In addition, latitude and altitude shape the biology, behaviour, and distribution of honey bees and flowering plants.

Temperature, solar radiation, wind, precipitation, and humidity are the most important factors influencing honey harvest [5,6,7,8]. Among these, temperature is a key determinant of colony development. Brood development depends on hive temperature, which must remain between 33 and 36 °C, together with high relative humidity. Thermoregulation is triggered when the temperature rises above 25 °C; nevertheless, prolonged high (>40 °C) or low (<6 °C) temperatures can cause severe colony losses [9]. Similarly, foraging activity peaks when the temperature is between 12 and 25 °C and ceases below −7 °C or above 43 °C [9,10]. In tropical countries, precipitation strongly affects honey production, as pollen foraging is highly influenced by summer rainfall and cloud cover [11]. In the Mediterranean islands, high winter rainfall has been associated with reduced food stores and increased colony mortality [9]. Furthermore, because relative humidity within the hive influences egg hatching, precipitation is also thought to influence honey productivity and bee survival. In the Mediterranean islands, wind speed can also significantly affect flight activity [9], which is related to the time spent foraging [12]. Winter climatic conditions have also been associated with health problems, lower reproductive rates, irregular egg laying, condensation, starvation, and mortality of honey bees [13]. Therefore, estimating and minimising the impact of winter climatic conditions on the following harvest season is a key challenge for beekeepers.

Machine Learning (ML) is a branch of artificial intelligence (AI) concerned with developing and evaluating algorithms that perform pattern recognition, classification, and prediction by fitting models to existing data [14]. Learning can follow three approaches: supervised, unsupervised, and reinforcement learning [15]. Supervised learning algorithms identify meaningful relationships between a set of input variables (features or predictors) and the label (target or outcome) to be predicted, use this information to train prediction models, and evaluate their predictive performance on new, unseen data. This approach generally includes a training step and a testing step. In the training step, the algorithm learns the relationship between features and label from a larger subset of the data (the training set) to build the model. In the testing step, the trained model makes predictions on data that were not used for training, and its error is assessed [16].

Among the many supervised learning methods that can handle both classification and regression problems, linear models are the simplest and most commonly used. Most linear models are used for regression, although linear models for classification do exist, e.g., logistic regression [15]. Decision trees (DT), non-parametric methods that recursively split the data from a root node down to leaf nodes, are also widely used. These algorithms can provide accurate estimates for both large and small datasets and offer several advantages over linear methods: they require no assumptions about the distribution of the explanatory variables and are relatively robust to high correlation among independent variables, outliers, and missing values [17,18]. Unlike a single DT, Random Forest (RF) aggregates the predictions of many trees rather than relying on a single decision; combining many trees generally increases accuracy and reduces overfitting [19]. More recent approaches belong to the family of gradient boosting algorithms, e.g., eXtreme Gradient Boosting (XGBoost), which is widely used because of its computational efficiency (speed and low memory use), high accuracy, and use of decision trees as base learners [20,21]. The idea behind boosting is to build models sequentially: each new tree is fitted to correct the errors that the ensemble built so far still makes, so performance improves as trees are added.
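The sequential "correct the previous errors" idea can be illustrated with a deliberately simplified sketch: squared-error gradient boosting on a single feature, using depth-1 trees (stumps) fitted to the current residuals. This is not XGBoost itself, which adds regularisation, second-order gradient information, and many engineering optimisations; the function names and toy data are hypothetical.

```python
from statistics import mean


def fit_stump(x, r):
    """Fit a depth-1 regression tree (stump) to residuals r on one feature x."""
    thresholds = sorted(set(x))[:-1]  # candidate splits between distinct values
    if not thresholds:
        raise ValueError("x needs at least two distinct values")
    best = None
    for t in thresholds:
        left = [ri for xi, ri in zip(x, r) if xi <= t]
        right = [ri for xi, ri in zip(x, r) if xi > t]
        lv, rv = mean(left), mean(right)
        sse = sum((ri - lv) ** 2 for ri in left) + sum((ri - rv) ** 2 for ri in right)
        if best is None or sse < best[0]:
            best = (sse, t, lv, rv)
    _, t, lv, rv = best
    return lambda xi: lv if xi <= t else rv


def boost(x, y, n_trees=50, learn_rate=0.1):
    """Squared-error boosting: each new stump is fitted to the current residuals."""
    base = mean(y)  # initial prediction: the mean of the target
    trees = []

    def predict(xi):
        # Ensemble prediction = initial guess + shrunken sum of all stumps so far.
        return base + learn_rate * sum(tree(xi) for tree in trees)

    for _ in range(n_trees):
        residuals = [yi - predict(xi) for xi, yi in zip(x, y)]
        trees.append(fit_stump(x, residuals))
    return predict


model = boost([1, 2, 3, 4, 5, 6], [1, 1, 1, 5, 5, 5])
print(round(model(1), 2), round(model(6), 2))
```

Each stump removes a fraction (the learning rate) of the remaining error, so predictions approach the targets as trees are added; a smaller learning rate needs more trees but usually generalises more smoothly.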

ML methods have been applied successfully to complex biological data in ecology [22,23], environmental monitoring [17], plant species identification [24,25], flowering time prediction [26,27], and animal science [18,28,29]. ML models have also been used to predict crop yields from honey bee census data together with climatic and satellite-derived variables [30]. In honey bees, ML methods have been applied to predicting demographic alterations [31], toxicological assessment [32], beehive monitoring [33], varroa infestation [34], behaviour [31], genetics [35,36], queen body mass [37], bee product quality assessment [38,39,40], colony health monitoring [41,42], and climate change impact and honey production [43,44,45,46,47]. Predictors used for honey yield forecasting have included management factors [37], historical weather variables (e.g., rainfall, temperature, and relative humidity) excluding data from the year being forecast [45], and climatic and satellite-derived vegetation data covering both the preceding 11 months and the main honey flow period [48].

In Italy, where the honey harvest season starts in March, predicting whether the coming season will be good or poor from climatic information for the preceding few months could help beekeepers design appropriate management strategies. This is particularly important in a country where migratory beekeeping, in which colonies are transported to follow blooming patterns, is widespread: it accounts for 39% of all beekeeping operations and 58% when hobbyists are excluded [49]. In this study, winter climatic information from before the harvest, combined with the enhanced vegetation index (EVI), a satellite-derived measure of vegetation greenness during the harvest season, was therefore used to identify the most important features and predict Total Honey Harvest (THH) using ML methods.

2. Materials and Methods

2.1. Dataset Construction and Variables Pre-Processing

A total of 598 THH records were collected from spring 2015 to summer 2019 in five commercial apiaries located in the province of Monza and Brianza, in the Lombardy region of Italy. The bees belonged to an Italian breeding population composed of a mixture of Apis mellifera ligustica, Apis mellifera carnica, and Buckfast bees. Over a four-year period, these bees were selected in a controlled mating system for honey production, gentleness, and hygienic behaviour. Further details on the selection scheme can be found in De Iorio et al. [50]. The dataset also included the geographic coordinates of the apiaries and the year of production. Latitude and longitude were used to retrieve monthly climatic data for the winter before each harvest (December–February) from the NASA Prediction of Worldwide Energy Resources (POWER) Data Access Viewer [51]. In total, 33 variables related to temperature (12), humidity (6), precipitation (3), pressure (3), and wind (9) for the winter months preceding the harvest seasons from 2014 to 2019 were added and used for further analysis (Table 1).
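The one subtle step in building these winter features is that December belongs to the previous calendar year. A minimal sketch, assuming a hypothetical input layout of (year, month, parameter, value) tuples per apiary (not the actual POWER export format) and the PARAMETER_MONTH naming used in Table 1 (e.g. T2M_12, T2M_1, T2M_2):

```python
def winter_features(records, harvest_year):
    """Collect December-February values preceding a given harvest year.

    records: iterable of (year, month, parameter, value) tuples for one apiary
    (hypothetical layout). Returns a dict keyed as PARAMETER_MONTH.
    """
    # December comes from the previous calendar year; January and February
    # come from the harvest year itself.
    wanted = {(harvest_year - 1, 12), (harvest_year, 1), (harvest_year, 2)}
    features = {}
    for year, month, param, value in records:
        if (year, month) in wanted:
            features[f"{param}_{month}"] = value
    return features


# Hypothetical example values, for illustration only.
records = [(2014, 12, "T2M", 4.5), (2015, 1, "T2M", 3.0), (2015, 2, "T2M", 5.1),
           (2015, 3, "T2M", 9.0)]
print(winter_features(records, 2015))
```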

Table 1.

Features describing monthly climatic conditions in the winter before harvest and environmental conditions during the harvest season.

Neither the data distribution nor the residual distribution was analysed, because the ML methods used here do not assume a specific distributional form and focus on prediction [18].

The continuous THH outcome was dichotomised at the 75th percentile of the THH distribution: values above 31.2 kg were classified as high production (coded 1) and values less than or equal to 31.2 kg as medium-low production (coded 0). Records with missing climatic or EVI values and records with THH lower than 5 kg were removed, leaving 394 THH records for the analysis.
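The labelling and filtering rules can be sketched as follows. This is an illustration under stated assumptions: the record layout (dicts with a `thh` key in kg, `None` marking a missing value) is hypothetical, and the percentile interpolation rule (`statistics.quantiles` with `method="inclusive"`) is an assumed choice, since the text does not state which one was used. The threshold is passed in explicitly, e.g. the reported 31.2 kg.

```python
from statistics import quantiles


def p75(values):
    """75th percentile with linear ('inclusive') interpolation (assumed rule)."""
    return quantiles(values, n=4, method="inclusive")[2]


def label_records(records, threshold, min_thh=5.0):
    """Drop incomplete or very low records, then binarise THH.

    Returns (record, label) pairs: 1 = high (THH > threshold),
    0 = medium-low (THH <= threshold).
    """
    labelled = []
    for rec in records:
        if any(value is None for value in rec.values()):
            continue  # missing climatic or EVI value
        if rec["thh"] < min_thh:
            continue  # THH lower than 5 kg
        labelled.append((rec, 1 if rec["thh"] > threshold else 0))
    return labelled
```

Note that a record exactly at the threshold (31.2 kg) falls into the medium-low class, matching the "less than or equal to" rule.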

2.2. Machine Learning Procedures and Model Evaluation

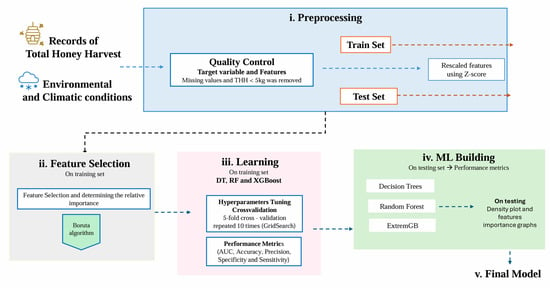

Prediction models were developed using three ML algorithms: DT, RF, and XGBoost. The dataset of 394 records was split into two subsets: approximately 75% of the data (294 records) was used to train the models, while the remaining 100 records (approximately 25%) were excluded from model building and held out as an external testing set, simulating new, unseen data for evaluating model performance. Figure 1 summarises the ML workflow used to identify the most important features and predict total honey harvest from climatic and environmental conditions before and during the harvest.
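A hold-out split of this kind can be sketched in a few lines. The seed is an arbitrary, hypothetical value, and the sketch uses a plain random split; whether the original split was stratified by production class is not stated (in R, `rsample::initial_split()` offers a `strata` argument for that purpose).

```python
import random


def train_test_split(n_records, n_train, seed=2015):
    """Shuffle record indices and split them into training and hold-out test sets.

    The seed is arbitrary (hypothetical); fixing it makes the split reproducible.
    """
    indices = list(range(n_records))
    random.Random(seed).shuffle(indices)
    return sorted(indices[:n_train]), sorted(indices[n_train:])


train_idx, test_idx = train_test_split(394, 294)
print(len(train_idx), len(test_idx))  # 294 100
```

The test indices are set aside before any scaling, feature selection, or tuning is evaluated on them, so they remain a fair proxy for unseen data.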

Figure 1.

Flowchart illustrating the steps in the full ML pipeline, including pre-processing, feature selection, and model building.

Before model building and training, features were standardised using z-scores, and automatic feature selection was performed with the Boruta algorithm in R [52], which evaluates the relevance of the features and ranks them by importance [53,54]. Boruta is a wrapper around an RF classifier: at each iteration it creates shadow features by randomly shuffling copies of the original features, compares the importance of each real feature with that of the best shadow feature, and, over many iterations, removes the features that are statistically less relevant than these random probes. This yields an unbiased and stable classification of variables as important or unimportant. Model training and hyperparameter optimisation were performed using 5-fold cross-validation repeated 10 times. In each repetition, the training data were divided into five mutually exclusive subsets (folds); in each iteration, one fold was reserved for validation while the remaining four folds were used for model training.
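The z-score rescaling and the repeated 5-fold cross-validation scheme can be sketched as below. This is only an illustration of the resampling logic (the study used tidymodels in R); it assumes the sample standard deviation for the z-score, and the seed is hypothetical.

```python
import random
from statistics import mean, stdev


def zscore(column):
    """Standardise one feature: subtract its mean, divide by its sample SD."""
    m, s = mean(column), stdev(column)
    return [(v - m) / s for v in column]


def repeated_kfold(n, k=5, repeats=10, seed=1):
    """Yield (repeat, fold, train_idx, val_idx) for repeated k-fold CV.

    Each repeat reshuffles the indices and splits them into k mutually
    exclusive folds; every fold serves exactly once as the validation set.
    """
    rng = random.Random(seed)
    for r in range(repeats):
        idx = list(range(n))
        rng.shuffle(idx)
        folds = [idx[i::k] for i in range(k)]  # near-equal fold sizes
        for f in range(k):
            val = sorted(folds[f])
            train = sorted(i for g in range(k) if g != f for i in folds[g])
            yield r, f, train, val


splits = list(repeated_kfold(294))
print(len(splits))  # 50 train/validation splits (10 repeats x 5 folds)
```

A model's cross-validated score is then the average of its validation scores over all 50 splits.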

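The GridSearch technique was used to choose the optimal combination of hyperparameters [55]. The predictive ability of each model on the training and testing sets was then evaluated using the following performance metrics, where TP, TN, FP, and FN denote true positives, true negatives, false positives, and false negatives:

- Accuracy = (TP + TN)/(TP + TN + FP + FN);
- Sensitivity (recall) = TP/(TP + FN);
- Specificity = TN/(TN + FP);
- Precision = TP/(TP + FP).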

In addition to these metrics, the area under the Receiver Operating Characteristic (ROC) curve (AUC) was computed with the R package yardstick [56]. An AUC of 1 indicates that the classifier perfectly separates positive from negative cases, an AUC of 0.5 corresponds to random guessing, and an AUC of 0 indicates that the classifier systematically ranks all negatives above all positives. In the present study, AUC was used as the main metric for model selection. In addition, the contribution of each variable to the best model (i.e., feature importance) was computed using the ranger package [57]. All analyses were performed in R version 4.1.3, and the ML analysis used the tidymodels ecosystem of packages [58]. Supplementary File SA provides pseudocode outlining the key methodological steps.
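For readers who want to check the metric definitions by hand, the sketch below computes the standard confusion-matrix metrics and a rank-based AUC (the probability that a randomly chosen positive receives a higher score than a randomly chosen negative, with ties counted as one half). It is a plain-Python illustration, not the yardstick implementation; when reproducing the analysis in R, note that yardstick treats the first factor level as the event by default (`event_level = "first"`), so the level order of the 0/1 outcome matters.

```python
def _ratio(num, den):
    return num / den if den else float("nan")


def confusion_metrics(y_true, y_pred, positive=1):
    """Accuracy, sensitivity, specificity and precision for a binary outcome."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    return {
        "accuracy": _ratio(tp + tn, len(pairs)),
        "sensitivity": _ratio(tp, tp + fn),
        "specificity": _ratio(tn, tn + fp),
        "precision": _ratio(tp, tp + fp),
    }


def roc_auc(y_true, scores, positive=1):
    """Rank-based AUC: P(random positive outscores random negative), ties = 1/2."""
    pos = [s for t, s in zip(y_true, scores) if t == positive]
    neg = [s for t, s in zip(y_true, scores) if t != positive]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return _ratio(wins, len(pos) * len(neg))


print(roc_auc([1, 1, 0, 0], [0.9, 0.8, 0.3, 0.1]))  # 1.0: perfect separation
```

Because AUC depends only on how the predicted probabilities rank the cases, it is unaffected by the 0.5 cut-off used to produce the binary predictions behind accuracy, sensitivity, specificity, and precision.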

3. Results

3.1. Descriptive Statistics

An exploratory data analysis, including descriptive statistics (mean, standard deviation, and interquartile range), was carried out on the entire dataset (Table 2). The average honey harvest was 23.4 kg, with substantial variability (SD = 11.4 kg). The wide range between the minimum (5.2 kg) and maximum (63.2 kg) harvests is consistent with a potential influence of environmental factors. Temperature, humidity, precipitation, and wind speed varied across the winter months. Mean temperature in December (T2M_12) was around 4.5 °C, while January (T2M_1) was slightly cooler and February (T2M_2) slightly warmer. Precipitation was highest in February. EVI, a measure of vegetation greenness, generally increased from March to July, indicating growing vegetation during the honey harvest season, with the highest median values in April and June. Together, these patterns suggest a possible link between late-winter precipitation and forage availability during the honey harvest season. Other factors for which no data were available, such as colony health during this period, may also have played an important role.

Table 2.

Descriptive statistics of total honey harvest (THH), climatic features of the winter before harvest, and enhanced vegetation index (EVI) during the harvest season.

3.2. Features Selection

Training a model with redundant or uninformative features can be ineffective and inefficient, because non-informative features cannot improve the performance of a predictive model. The Boruta algorithm identified the irrelevant features, which were then removed from the original dataset. The 18 remaining variables included in the training and testing sets were: QV2M_1, T2MDEW_1, RH2M_1, WS2M_MIN_2, RH2M_2, WS2M_MAX_12, WS2M_1, PS_1, T2M_2, T2M_MAX_2, EVI3, PS_2, WS2M_MAX_1, T2M_MAX_1, WS2M_MAX_2, T2M_MIN_1, T2M_1, and PRECTOTCORR_1. To check the consistency of the performance metrics, we compared three model configurations (see Figure S1, Supplementary Material SC): (i) a full model using all available variables, (ii) a model using only the features retained by Boruta, and (iii) a reduced model using only the six most influential variables based on Shapley importance scores (see Figure 2). The Boruta-selected subset achieved comparable AUC while preserving interpretability, indicating that excluding low-importance features did not compromise predictive performance. Reducing the feature set can also lower the risk of overfitting and the computational cost, and the retained predictors therefore appear both informative and practically relevant for forecasting honey production.
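The shadow-feature logic behind Boruta can be illustrated with a simplified sketch. Two substitutions are made for brevity and are assumptions, not Boruta's actual procedure: importance is the absolute Pearson correlation with the 0/1 label instead of Random Forest importance, and a feature is kept when it beats the best shadow feature in more than `keep_frac` of the iterations instead of passing Boruta's statistical test on these hit counts. The function names and parameters are hypothetical.

```python
import random
from statistics import mean


def abs_corr(a, b):
    """Absolute Pearson correlation, used here as a stand-in importance score."""
    ma, mb = mean(a), mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        return 0.0  # a constant column carries no information
    return abs(cov) / (va * vb) ** 0.5


def shadow_select(features, y, n_iter=50, keep_frac=0.9, seed=0):
    """Keep features that beat the best shuffled 'shadow' copy in most iterations.

    features: dict mapping feature name -> list of values; y: list of 0/1 labels.
    """
    rng = random.Random(seed)
    real_importance = {name: abs_corr(col, y) for name, col in features.items()}
    hits = {name: 0 for name in features}
    for _ in range(n_iter):
        # Shadow features keep each feature's values but break any link to y.
        shadow_best = 0.0
        for col in features.values():
            shadow = col[:]
            rng.shuffle(shadow)
            shadow_best = max(shadow_best, abs_corr(shadow, y))
        for name, imp in real_importance.items():
            if imp > shadow_best:
                hits[name] += 1
    return [name for name, h in hits.items() if h / n_iter > keep_frac]
```

The shadows act as an empirical "noise ceiling": a real feature is only worth keeping if it is consistently more informative than randomly permuted copies of the data.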

Figure 2.

Feature importance based on Random Forest. The top 10 features for total honey harvest prediction are reported. PS_2: Mean surface pressure (kPa) in February; WS2M_1: Mean wind speed (m/s) at 2 m in January; T2M_2: Mean temperature (°C) at 2 m in February; WS2M_MAX_12: Maximum wind speed (m/s) at 2 m in December; PS_1: Mean surface pressure (kPa) in January; T2M_MAX_2: Maximum temperature (°C) at 2 m in February; WS2M_MIN_2: Minimum wind speed (m/s) at 2 m in February; RH2M_2: Mean relative humidity (%) at 2 m in February; EVI3: Enhanced Vegetation Index in March; QV2M_1: Mean specific humidity (g/kg) at 2 m in January.

3.3. Model Training and Evaluation on Training and Test Sets

Three algorithms (DT, RF, and XGBoost) were applied to the dataset, and their predictive performance on the training and testing sets was compared using several metrics. The hyperparameters of each algorithm (Table 3) were optimised with GridSearch, which systematically evaluates a predefined set of parameter combinations by cross-validation and retains the best-performing configuration. For RF, the number of variables randomly sampled as candidates at each split (mtry) was 7, and the minimum number of data points in a node required for a further split (min_n) was 22, which helps prevent very small, overfitted leaves. For DT, the optimal model had a cost_complexity of 0.00000353, controlling tree pruning, and a min_n of 19. For XGBoost, the tuned model used a larger set of hyperparameters: trees (682), min_n (4), tree_depth (5), learn_rate (0.0424), loss_reduction (0.0000000464), and sample_size (0.958).
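The grid search itself amounts to an exhaustive loop over all combinations of candidate values, keeping the one with the best cross-validated score. A minimal sketch, in which the candidate grid and the scoring function are hypothetical stand-ins (in the study, the score would be the mean AUC over the cross-validation folds):

```python
from itertools import product


def grid_search(grid, cv_score):
    """Return the hyperparameter combination with the highest CV score.

    grid: dict mapping hyperparameter name -> list of candidate values.
    cv_score: callable taking a dict of hyperparameters and returning a
    cross-validated score (higher is better), e.g. mean AUC over folds.
    """
    names = list(grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = cv_score(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_score(params):
    # Hypothetical stand-in for "mean AUC over the cross-validation folds".
    return -abs(params["mtry"] - 7) - abs(params["min_n"] - 22) / 10


best, score = grid_search({"mtry": [3, 5, 7, 9], "min_n": [10, 22, 30]}, toy_score)
print(best)  # {'mtry': 7, 'min_n': 22}
```

The cost grows multiplicatively with the number of candidate values per hyperparameter, which is why a model with many hyperparameters, such as XGBoost, is more expensive to tune this way than RF or DT.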

Table 3.

Optimised hyperparameters of the Random Forest, Decision Tree, and XGBoost models for honey harvest prediction.

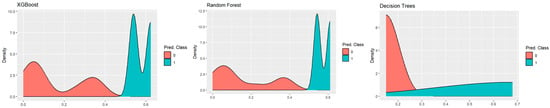

Accuracy, specificity, sensitivity, and precision were similar for all three algorithms. Accuracy was 0.82 on the training set and 0.76 on the testing set. Specificity was greater than 0.92 on the training set and greater than 0.85 on the testing set. Sensitivity was only slightly lower on the testing set than on the training set (0.48 vs. 0.50). Similarly, precision was 0.85 on the training set and 0.83 on the testing set for all models. For each algorithm, the AUC differed by about 5% on average between the training and testing sets. On the testing set, AUC varied by less than 20% across algorithms (0.66 for DT vs. 0.82 for RF): RF performed best (AUC: 0.82), followed by XGBoost (AUC: 0.77), while DT performed worst (AUC: 0.66). Figure 3 presents density plots of the test-set predicted probabilities for the three algorithms. These probabilities are continuous values representing the likelihood that an observation belongs to class 1 (high honey production) rather than class 0 (medium-low honey production). Although the final classification is binary, the probabilities give a more detailed picture of how confident each prediction is. The distributions for RF and XGBoost are visually similar and show a clear separation between the probability densities of the two classes. In contrast, the DT model shows greater overlap between the densities, consistent with its lower AUC. These results highlight the stronger discriminative ability of RF and XGBoost, which separate high from medium-low production more effectively and thus yield more reliable predictions on the test data.

Figure 3.

Prediction density plots for the test set showing the distribution of predicted probabilities for high and medium-low honey production across the three algorithms: Decision Tree, Random Forest, and Extreme Gradient Boosting.

3.4. Feature Importance and Performance

Under the specified computational conditions (MacBook Pro [M1, 2020, 16 GB RAM]), we measured the execution time of the three algorithms: RF, XGBoost, and DT. RF showed a 28% reduction in execution time compared to XGBoost and a 55% reduction compared to DT, and XGBoost was 37% faster than DT. Detailed execution times are presented in Supplementary SB, Table S1. Although DT is the simplest of the three models, its predictive performance was the lowest. XGBoost showed good predictive capacity, but RF performed more robustly, combining a higher AUC with faster processing, and thus offered a more favourable balance between accuracy and computational cost. For this reason, RF was adopted as the primary tool for interpreting the results and guiding variable selection. The feature importance analysis of the best-performing RF model identified the 10 most influential predictors of THH (Figure 2). Among the 18 features retained after feature selection, the mean temperature and surface pressure in February (T2M_2 and PS_2) and the mean wind speed in January (WS2M_1) were the most important predictors.
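Reading each percentage as a reduction relative to the slower model's execution time (an assumption about how the figures were computed), the three reported reductions can be checked against each other with a short calculation, which also shows the ordering of execution times they imply.

```python
# Relative execution times implied by the reported reductions (DT set to 1.0).
# Assumption: "X% reduction compared to M" means time = (1 - X) * time_of_M.
t_dt = 1.0
t_rf = (1 - 0.55) * t_dt       # RF: 55% reduction compared to DT
t_xgb = t_rf / (1 - 0.28)      # RF: 28% reduction compared to XGBoost
xgb_vs_dt = 1 - t_xgb / t_dt   # implied XGBoost reduction relative to DT

print(f"RF {t_rf:.3f}, XGBoost {t_xgb:.3f}, DT {t_dt:.3f}")
print(f"Implied XGBoost reduction vs DT: {xgb_vs_dt:.1%}")  # 37.5%
```

With DT normalised to 1.0, RF takes 0.45 and XGBoost about 0.625 of DT's time, so the implied XGBoost reduction of 37.5% agrees with the reported 37%.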

We also evaluated the effect of feature selection on model performance. Figure S1 (Supplementary Material SC) shows how the number of selected features influenced the predictive ability of the best-performing algorithm. Feature selection with the Boruta algorithm yielded the highest AUC, outperforming models trained with either all features or only a small subset of top-ranked features. This finding underlines the importance of an effective feature selection strategy for optimising ML models and balancing model complexity against predictive accuracy.

4. Discussion

The application of machine learning algorithms has gained increasing attention in recent years because they can capture complex relationships between variables without prior assumptions about their nature. Although studies on honey bees remain relatively few, the variety of inputs and outputs used in beehive-monitoring models suggests that research has focused on separating data collection from analysis, on deciding which data to collect, and on how to extract valuable insights from them. Most studies compare different approaches and algorithms to identify those best suited to specific applications. However, they often lack data from multiple beehives, apiaries, and geographical locations, so their results are specific to particular conditions and may change when these limitations are addressed [59]. External validation is often recommended to assess the generalisability of ML models and detect overfitting. In the present study, the original dataset was split into two subsets: one was used to build and train the models, while the other was excluded from model building and used as an external testing set to evaluate performance on unseen data. Nevertheless, future studies should evaluate the models on other data (e.g., bees experiencing different climatic conditions) before our findings can be generalised to other contexts or bee populations. Our results suggest that climatic conditions during the coldest months before harvest may signal changes in hive conditions that reduce production and colony survival. In particular, atmospheric pressure, wind, temperature, and humidity during the coldest months, together with EVI at the beginning of the harvest season, were good predictors of high or medium-low honey production.
These findings align with recent studies highlighting the critical role of winter weather conditions in forecasting agricultural yields, such as vegetative production [60,61,62]. Higher temperatures and pressure in February may signal the onset of favourable growing conditions for nectar-producing plants, while wind patterns in January could affect the foraging behaviour and productivity of honey bees.

To our knowledge, this is the first study to predict honey harvest using climatic and environmental information recorded prior to harvest, which makes comparison with previous research difficult. Our findings may partly reflect the climate of Lombardy, one of the coldest regions of Italy, which largely corresponds to Central European conditions. The region is cold and wet from November to March, with most rainfall occurring from April to May. Between 2014 and 2019, atmospheric pressure was higher from November to February than during the rest of the year, which may have affected nectar secretion and foraging activity. Additionally, mean monthly temperatures were below 6 °C in winter, with variations ranging from 10 °C in 2017 to 15 °C during the same season for all years. In contrast, relative humidity was higher in the winter months from 2014 to 2019. Temperature and humidity have a significant impact on thermoregulation [3,63,64], brood rearing [65,66], drone rearing [67], food storage and brood-rearing activity [68], and mating flights [69,70], all of which are directly related to honey yield. In addition, low temperatures have been linked to a reduction in the number of adult workers [71], parasite infection in summer [72], reduced flight activity [73], and starvation [74]. Other studies have identified the effects of biotic and abiotic factors on bee foraging [75,76,77], reproduction [78], health status [79], genetics [80], behaviour [81], and yield [43,46,47] during the honey flow period. In particular, Campbell et al. [43] used regression trees to estimate honey harvest in South West Australia from weather and satellite-derived vegetation datasets.
The authors concluded that, of the 115 input features collected both before and during the harvest period, data from the month immediately preceding the honey flow were sufficient for accurate predictions with minimal loss of model performance. Karadas et al. [48] also included anthropogenic features to predict honey production.

Therefore, monitoring winter weather patterns and leveraging advanced ML methods can help beekeepers more accurately anticipate critical hive conditions, such as changes in temperature, humidity, and atmospheric pressure. These insights enable the implementation of targeted strategies, such as adjusting feeding regimes, introducing supplementary heating, or adopting other climate-adaptive measures based on forecasted conditions. This study underscores the potential of ML in apiculture, serving as a foundational step toward future research aimed at developing practical tools for beekeepers. While the current findings demonstrate that ML can effectively predict honey production based on climatic and environmental factors, they also highlight the need for further refinement to enhance model accuracy and applicability. Incorporating additional variables, such as floral diversity, pest prevalence, and management practices, could lead to more robust predictions and facilitate the development of user-friendly decision-support systems. Similar approaches, as evidenced by Campbell et al. [43], Switanek et al. [13], and Karadas et al. [48], demonstrate how predictive analytics can bridge the gap between theoretical models and practical applications, paving the way for more precise and adaptive beekeeping strategies.

Scalability and Generalizability

Our study provides new insights into how winter climate and environment affect honey production, but its findings are specific to one region and need to be tested elsewhere. To enhance generalisability and predictive capacity, future research should apply our approach to a database covering diverse geographic regions and incorporate additional factors, such as floral diversity (species richness, bloom timing, etc.), the prevalence of pests and diseases (e.g., Varroa mites, specific viral or bacterial infections), and beekeeping management practices (hive management techniques, supplemental feeding, migratory patterns). This broader approach will enable the development of more robust and comprehensive predictive models, ultimately improving honey production management in various environmental contexts, including those affected by climate change.

5. Conclusions

Honeybee populations are increasingly threatened by climate change, experiencing escalating stressors that have reduced the productivity of managed colonies. These stressors affect bee survival by influencing the availability and quality of floral resources, directly impacting bee behaviour and physiology, and affecting colony development and honey production. Furthermore, shifting climate conditions alter honeybee distribution, creating new competitive interactions among species, ecotypes, parasites, and pathogens. Precision beekeeping offers a vital strategy to support honeybees in this changing environment. By leveraging ML and innovative technologies, precision beekeeping enables data-driven decision-making. Beekeepers can use pre-harvest weather parameters, such as winter temperature, humidity, atmospheric pressure, and wind speed, to inform management strategies for mitigating adverse conditions. Insights into optimal hive relocation sites, a key consideration in migratory beekeeping, can further improve these decisions. Moreover, integrating additional factors into predictive models, such as flower species, management practices, pesticide/herbicide exposure, and the beekeepers' technological capabilities, can improve prediction accuracy. Machine learning algorithms, particularly Random Forest, show promise for future research on honey harvest prediction. These data-driven approaches enable more resilient management strategies, allowing beekeepers to minimise losses and maintain productivity despite the challenges of a changing climate. Continued research integrating these insights with landscape genomics will further refine our understanding of bee adaptation and inform conservation efforts, supporting the long-term health of bee populations, ecosystem services, and honey production.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/insects16030278/s1. Supplementary File SA: Pseudocode; Supplementary SB Table S1: Computational Resources and Processing Time; Supplementary Material SC Figure S1: Trade-off between Area Under the ROC Curve (AUC) and Number of Features for Random Forest.

Author Contributions

Conceptualization, J.R.-D., A.M., A.S. and G.M.; methodology, T.B. and T.A.d.O.; software, J.R.-D. and T.A.d.O.; validation, T.B. and T.A.d.O.; formal analysis, T.A.d.O.; investigation, J.R.-D., A.M., T.B., F.N. and M.B.; resources: J.R.-D., M.G.D.I. and F.N.; data curation, J.R.-D., T.A.d.O. and F.N.; writing—original draft preparation, J.R.-D. and A.M.; writing—review and editing, J.R.-D., A.M., T.A.d.O., T.B., M.G.D.I., G.P., F.N., M.B., M.P., G.M. and A.S.; visualization, A.M. and T.B.; supervision, G.M. and A.S.; project administration, G.M. and A.S.; funding acquisition, G.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the BEENOMIX 2.0 and BEENOMIX 3.0 projects, supported by the Lombardy Region (FEASR program), PSR (grant number 201801057971—G44I19001910002) and grant number 202202375596—E87F23000000009.

Data Availability Statement

The phenotypes are part of a reference population used for selection by a commercial breeder and have commercial value. Therefore, restrictions apply to the availability of these data, which are not publicly available. The authors can be contacted for specific requests.

Conflicts of Interest

The authors declare no conflicts of interest.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).