Aphid Recognition and Counting Based on an Improved YOLOv5 Algorithm in a Climate Chamber Environment

Simple Summary

Abstract

1. Introduction

- (1)

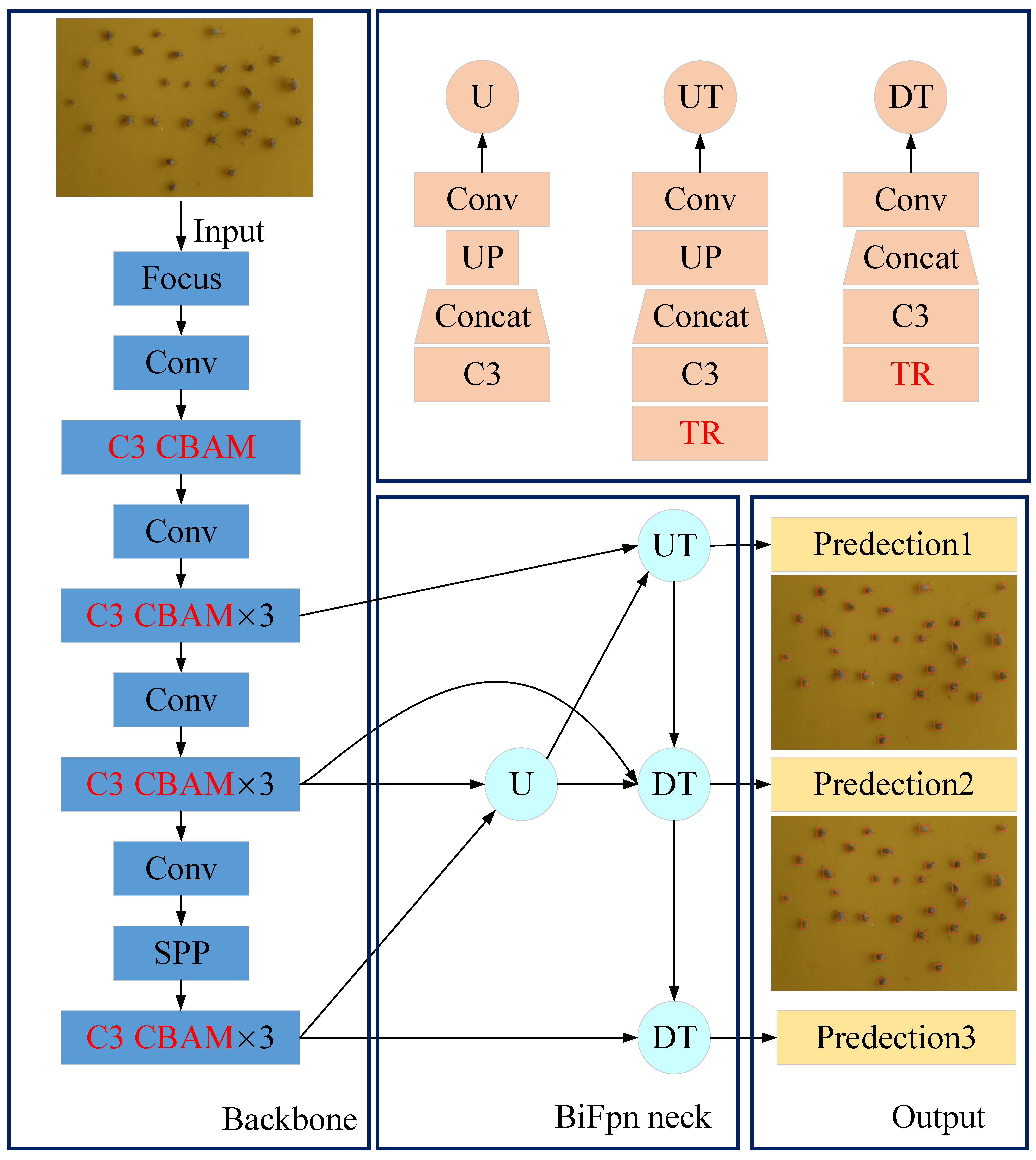

- To distinguish the characteristic information of aphids from other pests or impurities, a convolutional block attention mechanism (CBAM) is introduced in the YOLOv5 backbone layer. It can enhance the model’s feature extraction ability for targets, making it more focused on aphid target information.

- (2)

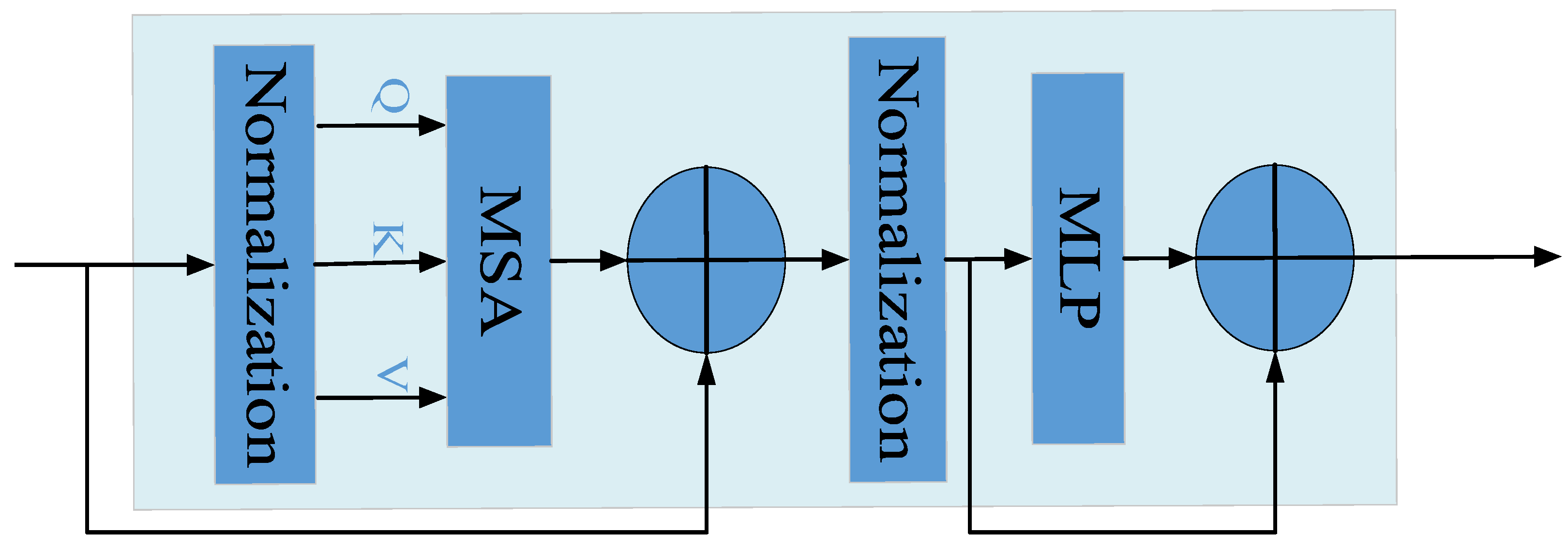

- To further improve the recognition performance of the model for different degrees of aphid aggregation, a Transformer structure is introduced in front of each detection head. This can improve the recognition ability of the model for small aphid targets under different light sensitivity and aggregation levels.

- (3)

- To highlight the overall detection accuracy of the model in different aphid detection scenarios (aphid recognition counting on leaves and recognition counting on lure boards), the idea of a bi-directional feature pyramid network (BiFPN) is employed. Changing the neck PANet to a BiFPN structure reduces the loss of aphid feature information caused by the fusion of contextual information, thereby improving model accuracy.
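The channel-then-spatial reweighting that contribution (1) relies on can be illustrated with a minimal NumPy sketch of a CBAM forward pass on a single feature map of shape (C, H, W). It follows the general CBAM design: average- and max-pooled channel descriptors pass through a shared two-layer MLP for channel attention, then a 7×7 convolution over the channel-wise average and max maps produces spatial attention. The function names, the reduction ratio `r` (4 here only because the toy map has 16 channels) and the random, untrained weights are assumptions for illustration; this is not the authors' trained backbone module.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention(x, w1, w2):
    """Channel weights in (0, 1) for x of shape (C, H, W).

    w1: (C // r, C) and w2: (C, C // r) form the shared two-layer MLP.
    """
    avg = x.mean(axis=(1, 2))  # (C,) global average pooling
    mx = x.max(axis=(1, 2))    # (C,) global max pooling

    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)  # ReLU hidden layer

    return sigmoid(mlp(avg) + mlp(mx))


def conv2d_same(x, k):
    """Single-output-channel 'same' convolution (cross-correlation), zero padding.

    x: (2, H, W), k: (2, K, K) -> (H, W)
    """
    size = k.shape[-1]
    pad = size // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    h, w = x.shape[1:]
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(xp[:, i:i + size, j:j + size] * k)
    return out


def spatial_attention(x, k):
    """Spatial weights in (0, 1) of shape (H, W)."""
    pooled = np.stack([x.mean(axis=0), x.max(axis=0)])  # (2, H, W)
    return sigmoid(conv2d_same(pooled, k))


def cbam(x, w1, w2, k):
    x = x * channel_attention(x, w1, w2)[:, None, None]  # reweight channels
    return x * spatial_attention(x, k)[None, :, :]       # reweight positions


# Illustrative usage with random, untrained weights (hypothetical values).
rng = np.random.default_rng(0)
C, r = 16, 4
x = rng.standard_normal((C, 8, 8))
w1 = rng.standard_normal((C // r, C)) * 0.1
w2 = rng.standard_normal((C, C // r)) * 0.1
k = rng.standard_normal((2, 7, 7)) * 0.1
y = cbam(x, w1, w2, k)
print(y.shape)  # (16, 8, 8)
```

Because the module only rescales the input, the output keeps the input shape, which is what allows an attention block of this kind to be inserted into a backbone without changing the layers around it.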

2. Materials and Methods

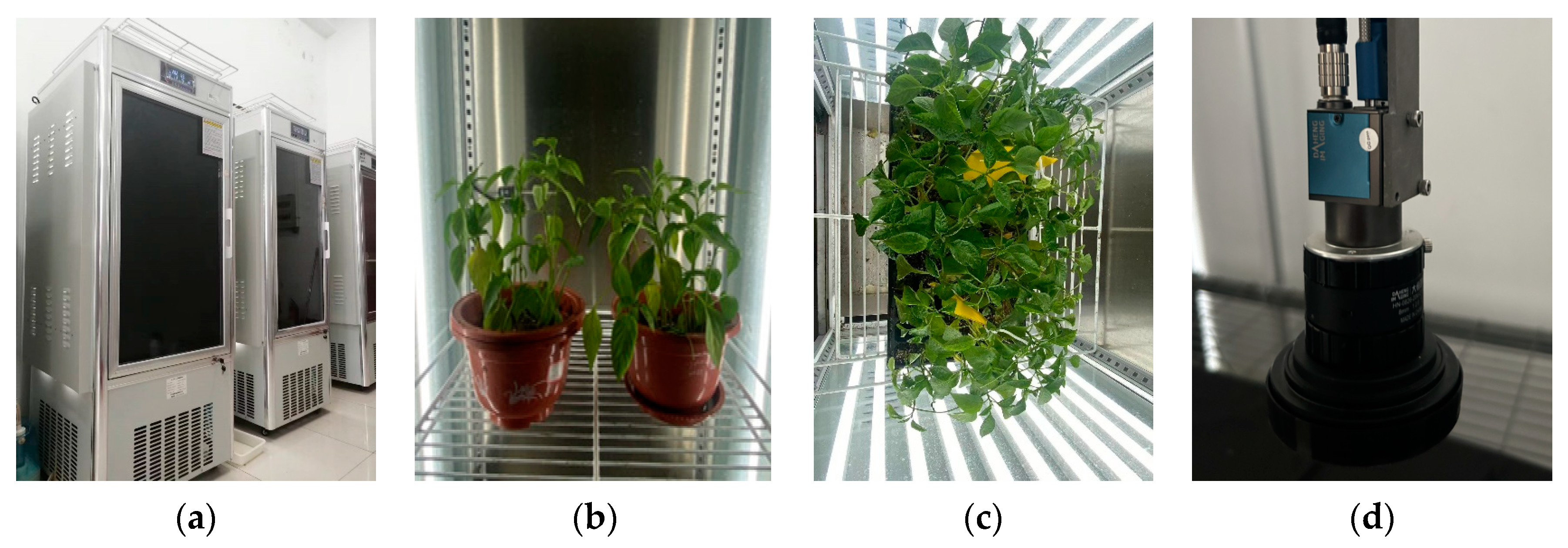

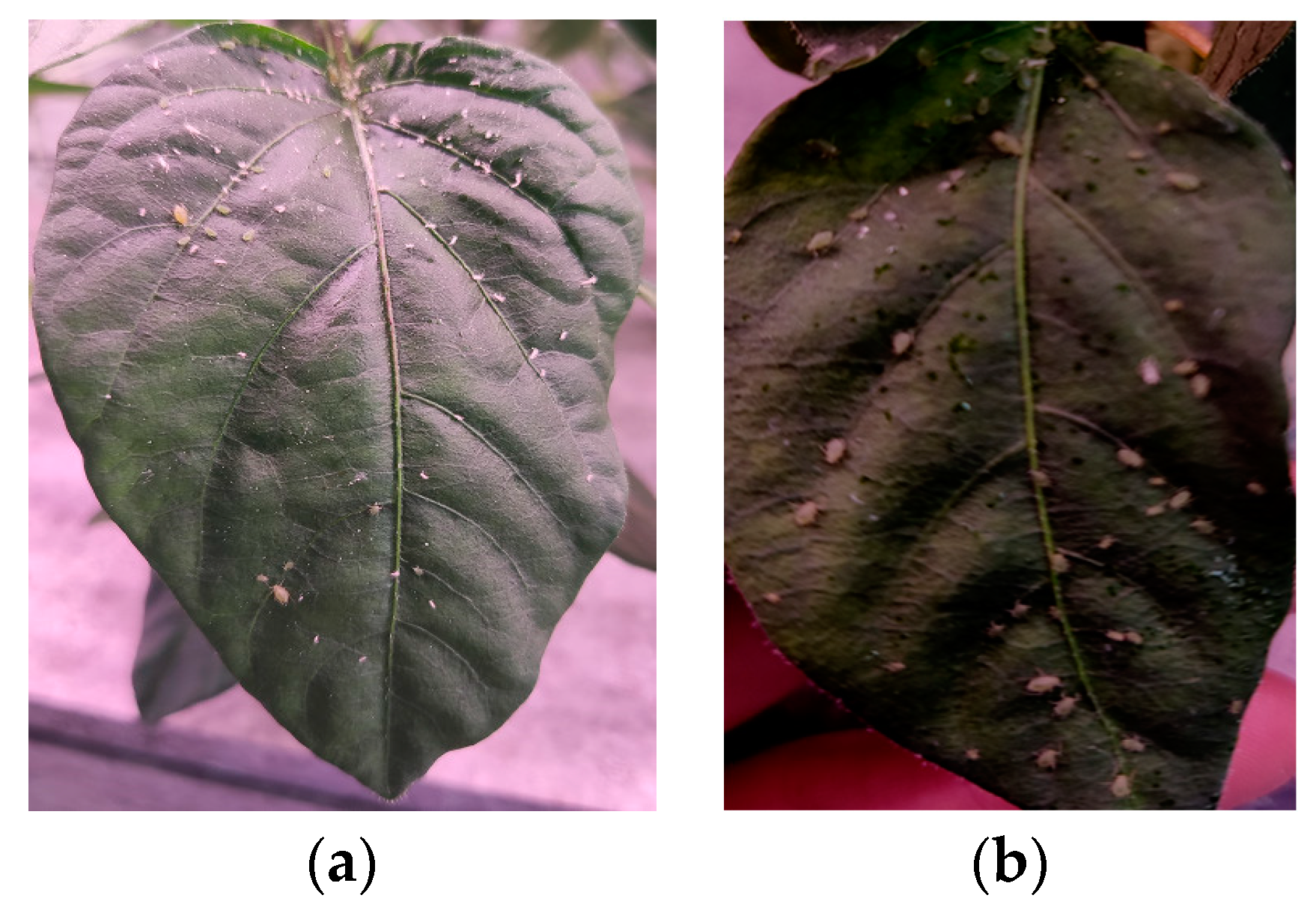

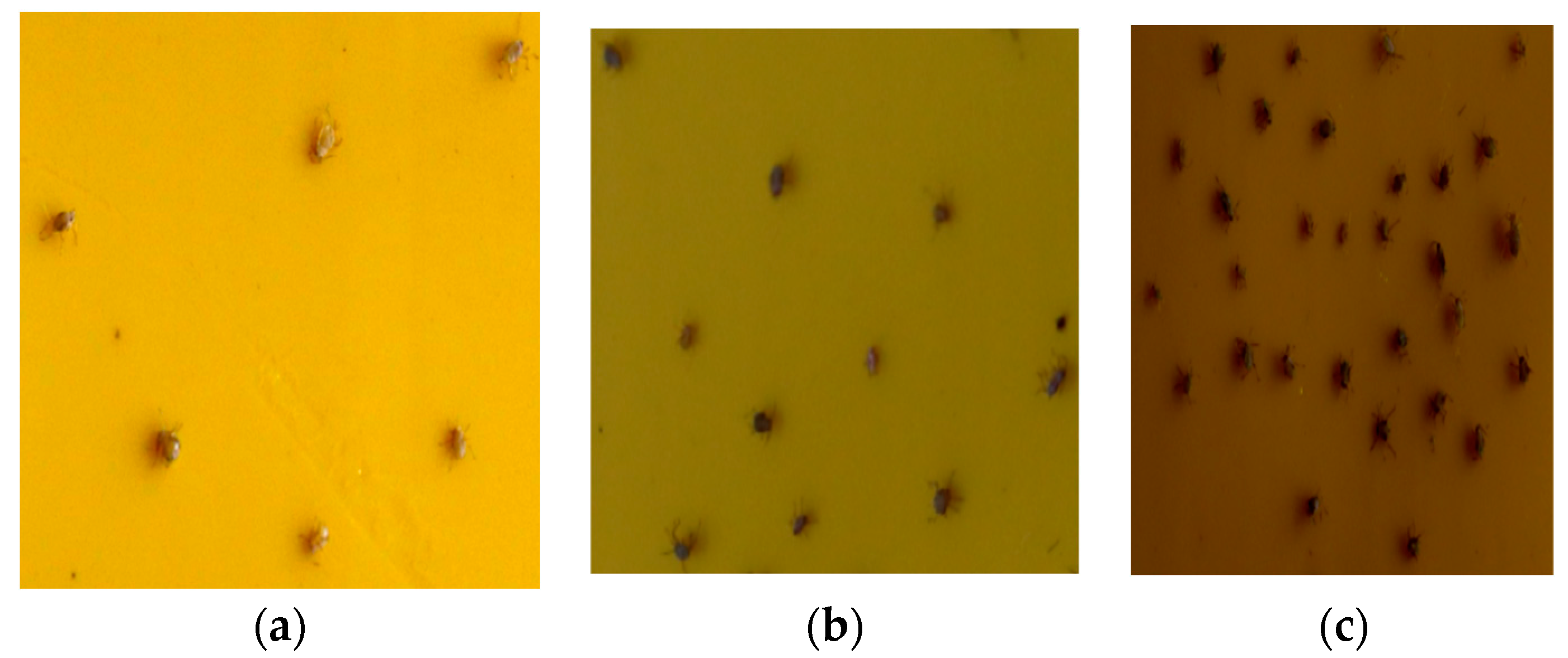

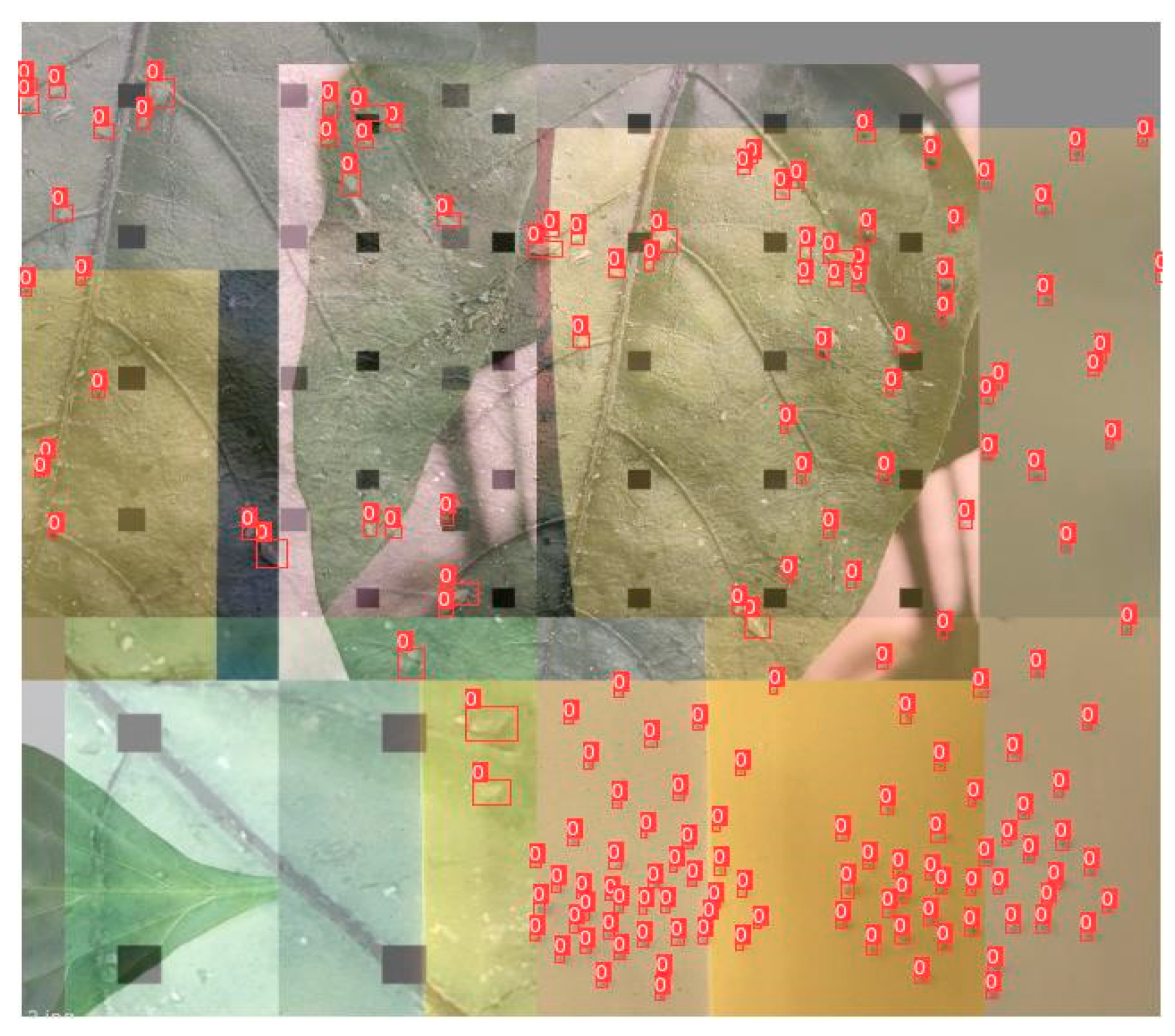

2.1. Aphid Dataset

2.2. Methods

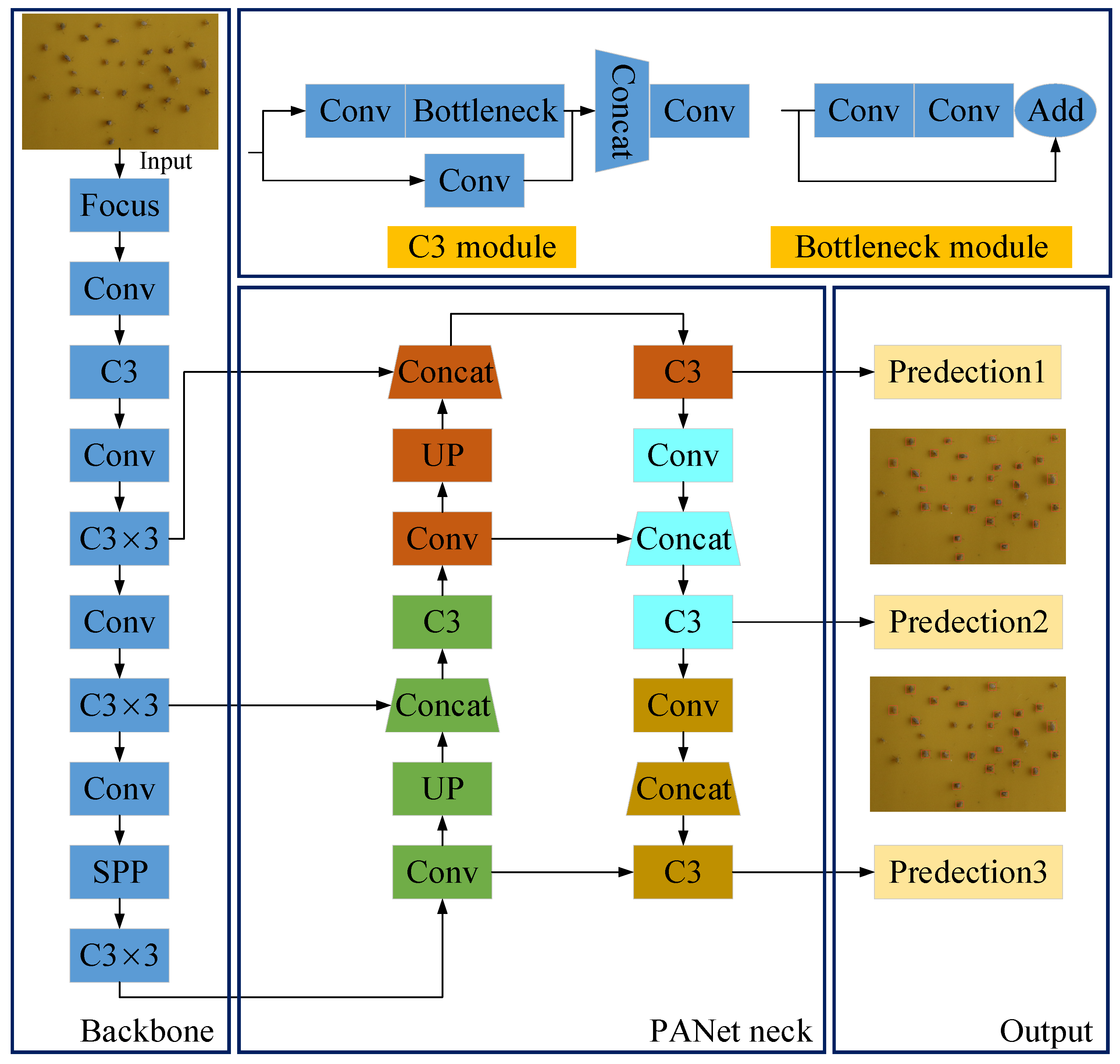

2.2.1. Original YOLOv5 Model

2.2.2. Proposed YOLOv5 Architecture

- (1)

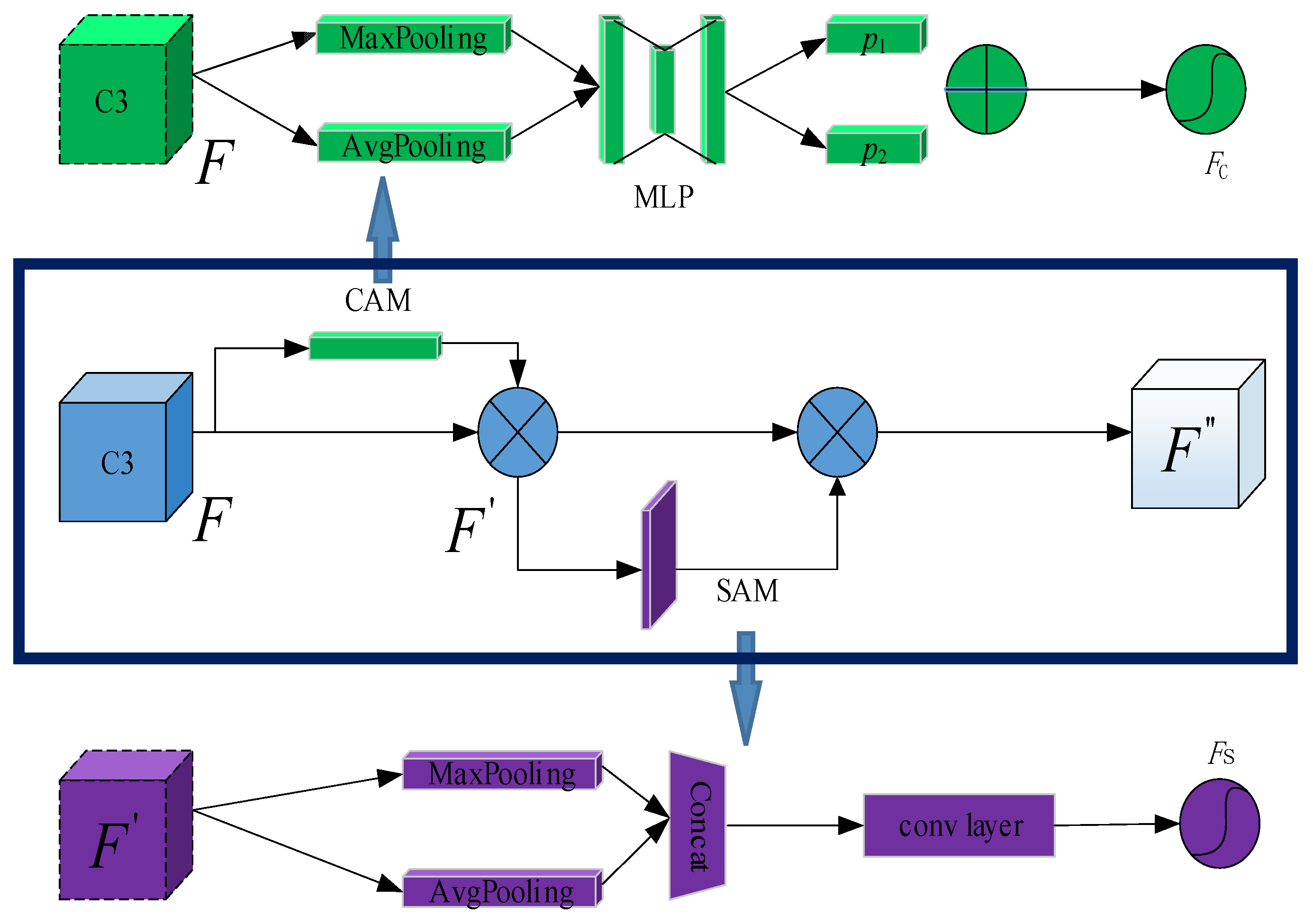

- CBAM attention mechanism:

- (2)

- Transformer module:

- (3)

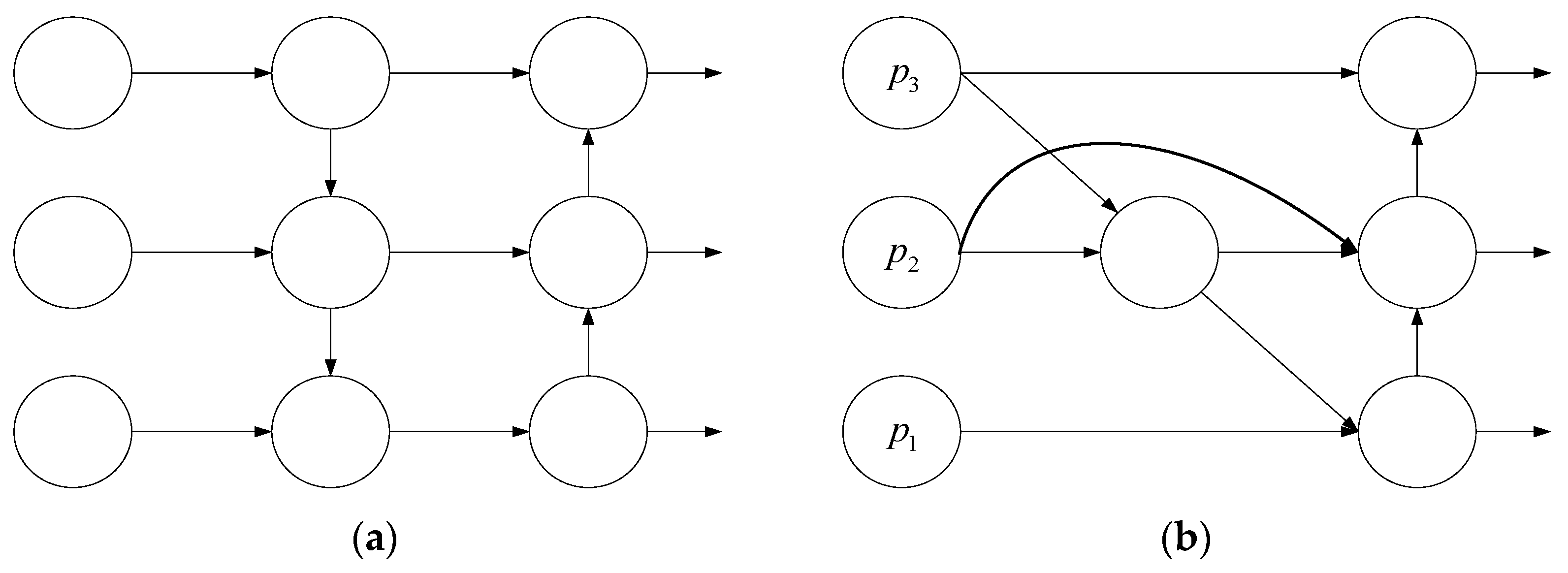

- BiFPN structure:
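The Transformer module and the BiFPN neck listed above can be sketched together in NumPy. A BiFPN-style node fuses same-shaped feature maps with fast normalized weights w_i / (ε + Σ_j w_j), as introduced for EfficientDet, using a top-down path and a bottom-up path with an extra skip edge from the input level; a generic encoder layer (multi-head self-attention and an MLP, each with a residual connection) is then applied to the fused map, treating every spatial position as a token. Assumptions: three levels P3 to P5 with equal channel counts, nearest-neighbour upsampling and 2×2 max-pool downsampling, fixed fusion weights instead of learned ones, no convolution after fusion, ReLU instead of GELU in the MLP, and random weights. All names are hypothetical, and this is not the authors' exact neck or detection-head code.

```python
import numpy as np


def fuse(features, weights, eps=1e-4):
    """BiFPN-style fast normalized fusion of same-shaped feature maps."""
    w = np.maximum(np.asarray(weights, dtype=float), 0.0)  # ReLU keeps weights >= 0
    w = w / (eps + w.sum())
    return sum(wi * f for wi, f in zip(w, features))


def up2(f):
    """Nearest-neighbour 2x upsampling of a (C, H, W) map."""
    return f.repeat(2, axis=1).repeat(2, axis=2)


def down2(f):
    """2x2 max-pool downsampling of a (C, H, W) map with even H and W."""
    c, h, w = f.shape
    return f.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))


def layer_norm(t, eps=1e-5):
    mu = t.mean(axis=-1, keepdims=True)
    return (t - mu) / np.sqrt(t.var(axis=-1, keepdims=True) + eps)


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def self_attention(t, params, heads):
    """Multi-head self-attention over tokens t of shape (N, D)."""
    n, d = t.shape
    dh = d // heads

    def split(weight):
        return (t @ weight).reshape(n, heads, dh).transpose(1, 0, 2)  # (heads, N, dh)

    q, k, v = split(params["wq"]), split(params["wk"]), split(params["wv"])
    att = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(dh))  # (heads, N, N)
    return (att @ v).transpose(1, 0, 2).reshape(n, d) @ params["wo"]


def transformer_layer(x, params, heads=4):
    """Encoder layer on a (C, H, W) map: each of the H*W positions is a token."""
    c, h, w = x.shape
    t = x.reshape(c, h * w).T                                       # (H*W, C)
    t = t + self_attention(layer_norm(t), params, heads)            # MSA + residual
    t = t + np.maximum(layer_norm(t) @ params["w1"], 0.0) @ params["w2"]  # MLP + residual
    return t.T.reshape(c, h, w)


rng = np.random.default_rng(0)
C = 8
params = {name: rng.standard_normal((C, C)) * 0.1 for name in ("wq", "wk", "wv", "wo")}
params["w1"] = rng.standard_normal((C, 2 * C)) * 0.1
params["w2"] = rng.standard_normal((2 * C, C)) * 0.1
p3, p4, p5 = (rng.standard_normal((C, s, s)) for s in (16, 8, 4))

p4_td = fuse([p4, up2(p5)], [1.0, 1.0])                     # top-down path
p3_out = fuse([p3, up2(p4_td)], [1.0, 1.0])
p4_out = fuse([p4, p4_td, down2(p3_out)], [1.0, 1.0, 1.0])  # bottom-up path + skip edge
head_in = transformer_layer(p4_out, params, heads=2)
print(head_in.shape)  # (8, 8, 8)
```

The normalized weights keep each fused map on the same scale as its inputs, while the extra edge from `p4` into `p4_out` is what distinguishes a BiFPN node from a plain top-down/bottom-up path aggregation.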

2.3. Experimental Settings

2.4. Evaluating Indicators

3. Experimental Results and Analyses

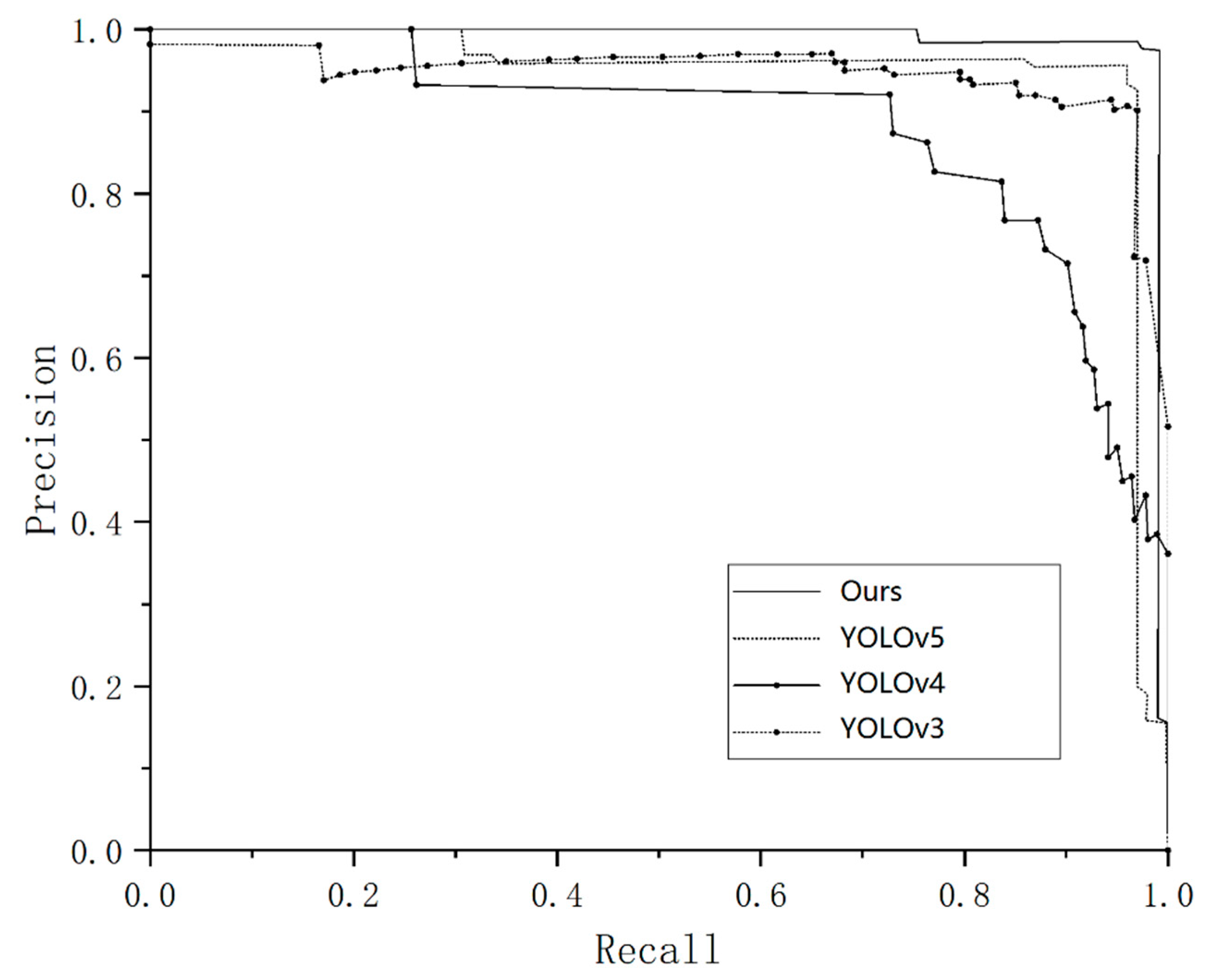

3.1. Experimental Results of the Performance Indicators

3.2. Ablation Experiment Analysis

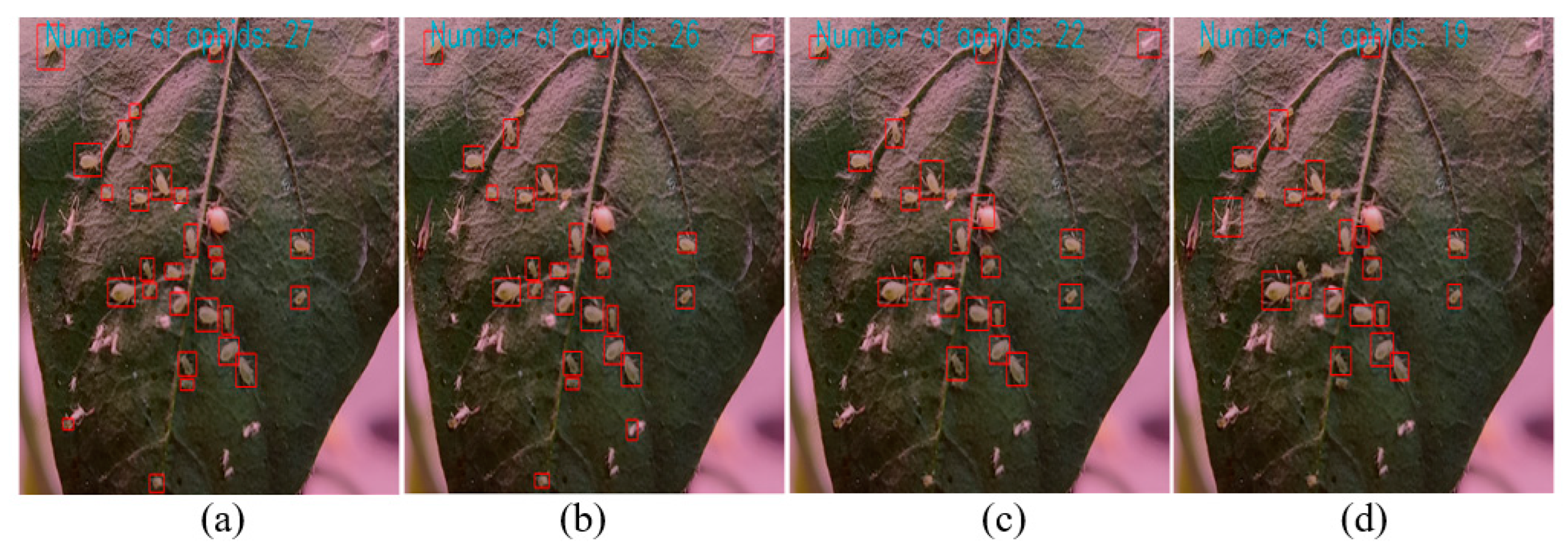

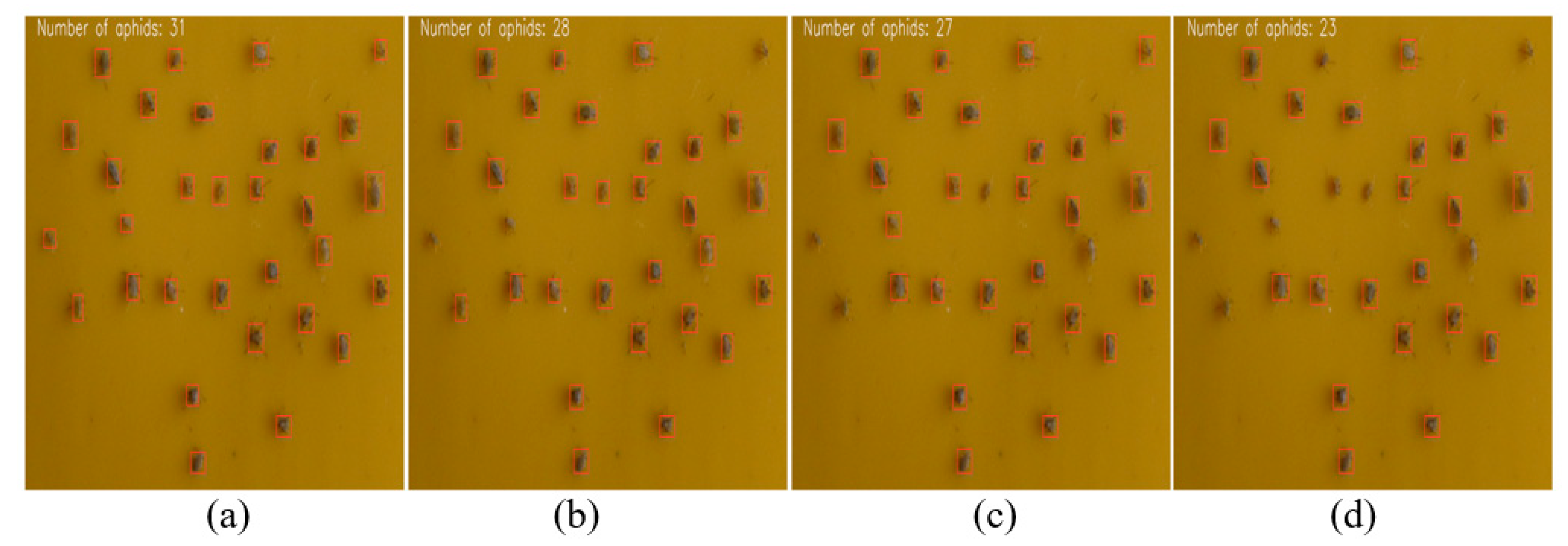

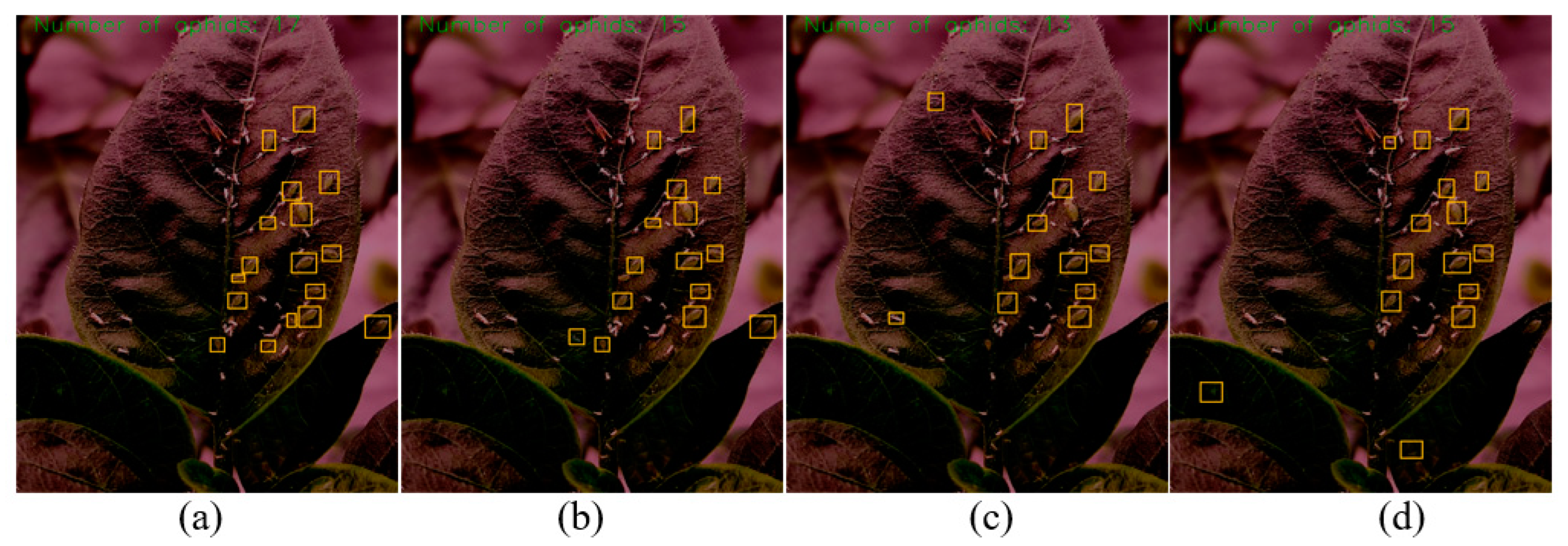

3.3. Experimental Results of Aphid Recognition and Counting

3.4. Discussion

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Abbreviations | Full Name |
|---|---|
| YOLO | You only look once |
| CNN | Convolutional neural network |
| Mask R-CNN | Mask region-based convolutional neural network |
| SSD | Single shot multibox detector |
| R-CNN | Region-based convolutional neural network |
| CBAM | Convolutional block attention module |
| CAM | Channel attention module |
| SAM | Spatial attention module |
| MLP | Multilayer perceptron |
| MSA | Multi-head self-attention mechanism |
| BiFPN | Bi-directional feature pyramid network |
| PANet | Path aggregation network |
| SiLU | Sigmoid-weighted linear unit |
| mAP | Mean average precision |
| AP | Average precision |
| P | Precision |
| R | Recall |
| IoU | Intersection over union |

| Model | P | R | mAP@0.5 | Inference Time/ms |
|---|---|---|---|---|
| Ours | 0.991 | 0.991 | 0.993 | 9.4 |
| YOLOv5 | 0.857 | 0.895 | 0.873 | 7.5 |
| YOLOv4 | 0.829 | 0.872 | 0.829 | 17.3 |
| YOLOv3 | 0.815 | 0.833 | 0.762 | 12.9 |
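The P, R and mAP@0.5 columns can be made concrete with a small pure-Python sketch for a single class: detections are greedily matched, in descending score order, to still-unmatched ground-truth boxes at IoU ≥ 0.5; precision is TP/(TP + FP), recall is TP divided by the number of ground-truth boxes, and AP is the area under the interpolated precision-recall curve. With a single class, mAP@0.5 equals AP@0.5; with several classes it is the mean of the per-class AP values. The box format (x1, y1, x2, y2), the greedy matching rule, the all-point interpolation and the function names are assumptions; evaluation tools such as the YOLOv5 validation script may differ in details, for example in which confidence threshold the reported P and R correspond to.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(dets, gts, thr=0.5):
    """dets: list of (score, box). Returns (score, is_tp) pairs, highest score first."""
    used = set()
    matched = []
    for score, box in sorted(dets, key=lambda d: d[0], reverse=True):
        best, best_j = 0.0, -1
        for j, gt in enumerate(gts):
            if j not in used and iou(box, gt) > best:
                best, best_j = iou(box, gt), j
        if best >= thr:
            used.add(best_j)  # each ground-truth box can be matched only once
            matched.append((score, True))
        else:
            matched.append((score, False))
    return matched


def precision_recall_ap(matched, n_gt):
    """Precision and recall over all given detections, plus all-point AP."""
    if not matched:
        return 0.0, 0.0, 0.0
    tp = fp = 0
    precisions, recalls = [], []
    for _, is_tp in matched:
        if is_tp:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / n_gt if n_gt else 0.0)
    ap, prev_r = 0.0, 0.0
    for i, rec in enumerate(recalls):
        # Interpolated precision: best precision at this recall level or beyond.
        ap += (rec - prev_r) * max(precisions[i:])
        prev_r = rec
    return precisions[-1], recalls[-1], ap


# Toy example: two ground-truth aphids, two correct detections, one false alarm.
gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
dets = [(0.9, (0, 0, 10, 10)), (0.8, (21, 21, 31, 31)), (0.3, (50, 50, 60, 60))]
p, r, ap = precision_recall_ap(match_detections(dets, gts), len(gts))
print(p, r, ap)
```

In the toy example the false alarm has the lowest score, so it lowers the final precision to 2/3 without reducing AP, which stays at 1.0; this is why P/R and mAP@0.5 can move differently between models.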

| Model | CBAM | BiFPN | Transformer | P | R | mAP@0.5 |
|---|---|---|---|---|---|---|
| Ours | ✓ | ✓ | ✓ | 0.991 | 0.991 | 0.993 |
| YOLOv5 | | | | 0.857 | 0.895 | 0.873 |

| YOLOv5-A | ✓ | 0.937 | 0.925 | 0.912 | ||

| YOLOv5-B | ✓ | 0.880 | 0.933 | 0.902 | ||

| YOLOv5-C | ✓ | 0.926 | 0.951 | 0.922 |
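The size of each improvement in the ablation table can be checked with a few lines of arithmetic: subtracting the baseline YOLOv5 row from every other row gives the absolute gains in P, R and mAP@0.5. The metric values below are copied from the table; the dictionary and function names are illustrative only.

```python
# (P, R, mAP@0.5) per model, copied from the ablation table.
ABLATION = {
    "YOLOv5": (0.857, 0.895, 0.873),
    "YOLOv5-A": (0.937, 0.925, 0.912),
    "YOLOv5-B": (0.880, 0.933, 0.902),
    "YOLOv5-C": (0.926, 0.951, 0.922),
    "Ours": (0.991, 0.991, 0.993),
}


def gains_over_baseline(table, baseline="YOLOv5"):
    """Absolute metric gain of every non-baseline row, rounded to 3 decimals."""
    base = table[baseline]
    return {
        name: tuple(round(v - b, 3) for v, b in zip(vals, base))
        for name, vals in table.items()
        if name != baseline
    }


for name, (dp, dr, dmap) in gains_over_baseline(ABLATION).items():
    print(f"{name}: dP={dp:+.3f}  dR={dr:+.3f}  dmAP@0.5={dmap:+.3f}")
```

For the full model this gives gains of 0.134 in P, 0.096 in R and 0.120 in mAP@0.5, i.e. 12.0 percentage points of mAP@0.5 over the baseline.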

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, X.; Wang, L.; Miao, H.; Zhang, S. Aphid Recognition and Counting Based on an Improved YOLOv5 Algorithm in a Climate Chamber Environment. Insects 2023, 14, 839. https://doi.org/10.3390/insects14110839
