Abstract

Occupational ergonomics aims to optimize the work environment and to enhance both productivity and worker well-being. The assessment of work-related exposures, such as load lifting, is a crucial aspect of this discipline, as it involves evaluating physical stressors and their impact on workers’ health and safety in order to prevent the development of musculoskeletal pathologies. In this study, we explore the feasibility of machine learning (ML) algorithms, fed with time- and frequency-domain features extracted from inertial signals (linear acceleration and angular velocity), to automatically and accurately discriminate safe and unsafe postures during weight lifting tasks. The signals were acquired by means of one inertial measurement unit (IMU) placed on the sternum of each of 15 subjects, and were subsequently segmented to extract several time- and frequency-domain features. A supervised dataset, including the extracted features, was used to feed several ML models and to assess their predictive power. For the binary safe/unsafe posture classification, the logistic regression algorithm outperformed the others, with accuracy and area under the receiver operating characteristic curve values of up to 96% and 99%, respectively. This result indicates the feasibility of the proposed methodology, based on a single inertial sensor and artificial intelligence, to discriminate safe/unsafe postures associated with load lifting activities. Future investigation in a wider study population and using additional lifting scenarios could confirm the potential of the proposed methodology, supporting its applicability in the occupational ergonomics field.

1. Introduction

Work-related musculoskeletal disorders (WMSDs) represent a significant health concern that affects millions of workers worldwide. These disorders encompass a broad range of painful and debilitating conditions that impact musculoskeletal structures. The risk of developing WMSDs is primarily associated with occupational tasks, and it is often the result of biomechanical overload, as has been reported in several studies [1,2,3,4].

In recent years, the prevalence of jobs involving repetitive movements, heavy lifting, and awkward postures has risen substantially; indeed, intensity, repetition, and duration are the three elements that have the most impact on biomechanical risk during manual tasks [5]. Therefore, several quantitative and semi-quantitative methods have been proposed and implemented in occupational ergonomics to assess biomechanical risk exposure [6,7,8,9,10]. Currently, wearable sensors are spreading in the field of occupational ergonomics as a valid tool to complement established methodologies, revolutionizing the way we monitor work activities and assess biomechanical risk [11,12,13,14,15,16,17]. Among these devices, inertial wearable sensors, which allow for the acquisition of linear acceleration and angular velocity, as well as wearable sensors for surface electromyography (sEMG) and pressure insoles, have proven to be useful for monitoring workers’ activities and assessing biomechanical risk [18,19,20,21].

Additionally, the integration of wearable sensors and artificial intelligence (AI) algorithms is becoming increasingly established in the field of occupational ergonomics, as has been reported in several scientific works. For instance, Donisi et al. [22] proposed a methodology, based on machine learning (ML) models fed with time- and frequency-domain features extracted from inertial signals acquired from the sternum, to classify biomechanical risk during lifting tasks according to the Revised NIOSH (National Institute for Occupational Safety and Health) Lifting Equation. They employed a logistic regression (LR) model, reaching a classification accuracy equal to 82.8%. Conforti et al. [23] used a support vector machine (SVM) fed with time-domain features extracted from inertial signals to discriminate safe and unsafe postures, reaching an accuracy level equal to 99.4%, while Prisco et al. [24] studied the feasibility of several tree-based ML algorithms fed with time-domain features extracted from inertial signals using a single sensor placed on the sternum. Aiello et al. [25] analyzed the discrimination power of ML algorithms to classify low-duty and high-duty activities using information related to the exposure to vibration, which was captured by means of two accelerometers placed on the wrists; the k nearest neighbors (kNN) algorithm reached a classification accuracy equal to 94%. Zhao and Obonyo [26] proposed a model for recognizing construction workers’ postures based on the combination of five IMUs and deep learning (DL) algorithms (i.e., convolutional long short-term memory). Antwi-Afari et al. [27] proposed a methodology to recognize workers’ activities associated with overexertion from data acquired by means of a wearable insole pressure system using ML and DL algorithms; they found that the best algorithm was random forest (RaF), with an accuracy of over 97%. Fridolfsson et al. [28] studied the feasibility of ML models, which were fed with features extracted from acceleration signals using shoe-based sensors, to classify work-specific activities; RaF was once again the best algorithm, reaching an accuracy of up to 71%. Mudiyanselage et al. [29] analyzed the detection of the risk level of harmful lifting activities, characterized by the Revised NIOSH Lifting Equation, using ML and DL algorithms fed with features extracted from thoracic and multifidus sEMG signals, while Donisi et al. [30] studied the feasibility of ML algorithms fed with frequency-domain features extracted from sEMG signals of the erector spinae and multifidus muscles to discriminate the biomechanical risk associated with manual material lifting, highlighting that the best algorithm was SVM, with an accuracy equal to 96.1%.

Considering the increasing integration of wearable sensors and AI in the field of occupational ergonomics, the aim of this paper was to study the feasibility of several ML algorithms—fed with time- and frequency-domain features extracted from inertial signals (linear acceleration and angular velocity) acquired from a single inertial measurement unit (IMU) placed on the sternum—to classify safe and unsafe postures during load lifting tasks.

Thus, the proposed methodology could offer an improvement or a valid integration of the procedures already established in the occupational ergonomics field to recognize bad postures, limiting the potential biomechanical risk associated with them in workers.

Moreover, the use of a single sensor (placed on the sternum) and the type of sensor (inertial sensor) make this procedure applicable to the workplace, and not confined to the laboratory like the other methodologies proposed in the scientific literature that are based on optoelectronic systems.

2. Materials and Methods

2.1. The Mobility Lab System (APDM)

The Mobility Lab System (APDM wearable technologies Inc., Portland, OR, USA) is a technically advanced platform for the analysis of human movement, and is used in both clinical and research settings. In clinical practice, it is useful for treatment planning and monitoring patients. In research, it provides valuable data for scientific studies focused on mobility disorders [31,32,33]. This system is composed of both hardware and software components. The hardware is composed of an access point, a docking station, and inertial sensors (OPAL sensors), while the software is based on a dedicated application, namely, Mobility Lab software version 2 (Figure 1). The OPAL sensors are IMUs, which include tri-axial accelerometers (14-bit resolution, bandwidth of 50 Hz, and range of ±16 g), tri-axial gyroscopes (16-bit resolution, bandwidth of 50 Hz, and range of ±2000 deg/s), and tri-axial magnetometers (12-bit resolution, bandwidth of 32.5 Hz, and range of ±8 Gauss). These sensors allow for linear acceleration and angular velocity signals to be acquired with a sampling frequency of up to 200 Hz. Moreover, the sensors are charged and configured by means of the docking station. The access point provides the system with wireless communication capability by means of the Bluetooth 3.0 protocol, allowing for real-time data transmission from the OPAL sensors to a host computer. Finally, the Mobility Lab software produces detailed reports based on objective metrics related to gait, balance, and movement patterns. In the present work, a single OPAL sensor placed on the sternum was used (Figure 2). The Mobility Lab System has been proven to be repeatable and accurate [34,35].

Figure 1.

Mobility Lab System: access point, docking station, OPAL sensors, Mobility Lab software version 2.

Figure 2.

OPAL sensor placed on the sternum.

2.2. Study Population

In this study, 15 healthy subjects (9 men and 6 women) between the ages of 22 and 55 years were enrolled. Subjects affected by musculoskeletal disorders or other occupational pathologies were excluded. The anthropometric characteristics of the study population are shown in Table 1.

Table 1.

Anthropometric characteristics of the study population, reported as mean ± standard deviation.

2.3. Experimental Study Protocol

Each subject participated in a session divided into two trials. The first trial consisted of 20 consecutive liftings according to the squat technique (namely, with back extended, legs flexed, rigid arms, and trunk flexed at the hip joints), associated with a safe posture. The load had to be gripped while keeping the legs apart, with a distance between the feet of 20–30 cm, in order to ensure balance during lifting (Figure 3A). The second trial consisted of 20 consecutive liftings associated with an unsafe posture; the liftings were performed with a curved back and non-flexed legs, i.e., the stoop technique (Figure 3B). Each lifting task was carried out using a plastic container (56 × 35 × 31 cm³) with weights equally distributed inside. Squat and stoop techniques are widely regarded as the “correct” and “incorrect” techniques for lifting activities, as has been reported in several articles in the scientific literature [36,37]. The details regarding the execution of the study protocol are reported in Table 2.

Figure 3.

Phases of load lifting execution associated with safe posture (A) and unsafe posture (B).

Table 2.

Combination of weight, frequency, duration, and vertical displacement variables for lifting activities corresponding to safe and unsafe postures.

2.4. Digital Signal Processing and Feature Extraction

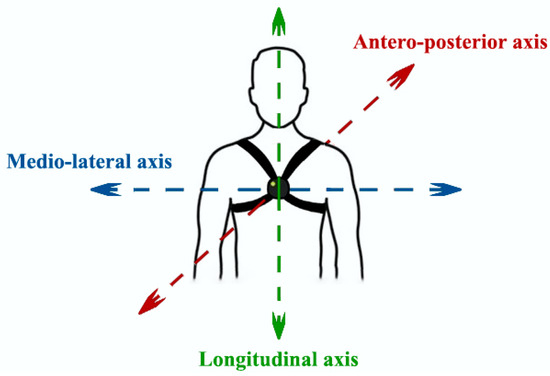

The linear acceleration and angular velocity signals were acquired for each subject during the lifting tasks. The inertial signals were segmented in order to extract the portions of the signals in the time windows corresponding to the lifting actions. All signals were segmented using the start and stop points obtained from the segmentation carried out on the acceleration signal along the x-axis, or longitudinal axis (see Figure 2). This signal was chosen because the acceleration component along the longitudinal axis, i.e., the axis along which the load is lifted, had a more pronounced waveform in terms of amplitude, which facilitated segmentation.

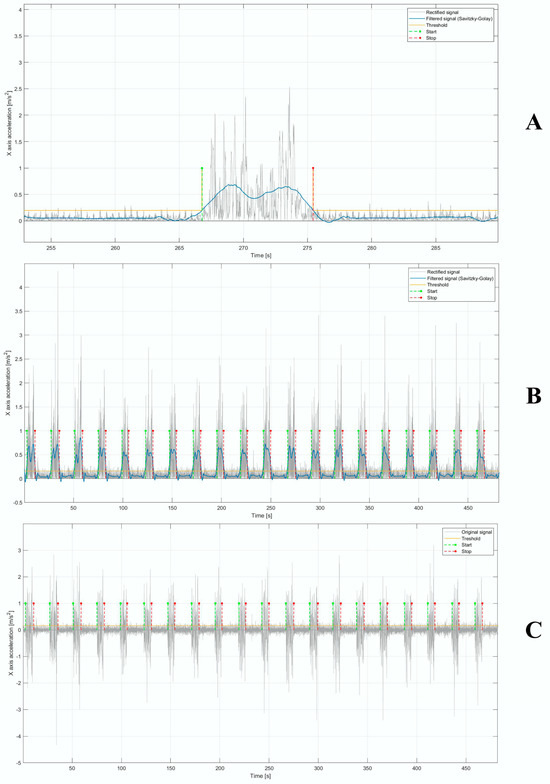

We performed three steps to segment the signals. Firstly, the original signal was filtered using a 4th-order Butterworth band-pass filter with a pass band ranging from 1 to 50 Hz, mainly in order to remove the continuous (DC) component. Secondly, the signal was rectified and then smoothed by means of a Savitzky–Golay filter [38], with a polynomial order and frame length equal to 4 and 1101, respectively. Finally, an empirical threshold was set for each subject; the intersections between the threshold and the final filtered signal gave the start and stop points needed to segment the signal into the individual regions of interest (ROIs) corresponding to the lifting tasks (Figure 4A,B). Using these start and stop points, we extracted the ROIs from the original signal (Figure 4C).
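The three segmentation steps can be sketched as follows. This is a minimal illustration rather than the code used in the study: the function name `segment_lifts`, the 200 Hz sampling rate, the zero-phase filtering, and the default threshold (half of the envelope maximum) are all assumptions, whereas in the study the threshold was set empirically for each subject. In SciPy, a design order of 2 for a band-pass filter yields a 4th-order filter overall, which is how "4th-order" is interpreted here.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt, savgol_filter


def segment_lifts(acc_x, fs=200.0, threshold=None, sg_window=1101, sg_order=4):
    """Return (start, stop) sample-index pairs, stop exclusive, one per detected lift."""
    acc_x = np.asarray(acc_x, dtype=float)

    # Step 1: Butterworth band-pass, 1-50 Hz, to remove the DC component.
    # Assumption: zero-phase (forward-backward) filtering, not stated in the text.
    sos = butter(2, [1.0, 50.0], btype="bandpass", fs=fs, output="sos")
    filtered = sosfiltfilt(sos, acc_x)

    # Step 2: rectify, then smooth with a Savitzky-Golay filter
    # (polynomial order 4, frame length 1101, as reported in the text).
    envelope = savgol_filter(np.abs(filtered), sg_window, sg_order)

    # Step 3: threshold the envelope. The default is a hypothetical placeholder
    # for the subject-specific empirical threshold.
    if threshold is None:
        threshold = 0.5 * envelope.max()
    above = envelope > threshold

    # Rising edges mark start points, falling edges mark stop points.
    edges = np.diff(above.astype(int))
    starts = np.flatnonzero(edges == 1) + 1
    stops = np.flatnonzero(edges == -1) + 1
    if above[0]:
        starts = np.r_[0, starts]
    if above[-1]:
        stops = np.r_[stops, above.size]
    return list(zip(starts.tolist(), stops.tolist()))
```

The returned index pairs would then be applied to the original (unfiltered) signals of all axes, e.g. `rois = [signal[s:e] for s, e in segment_lifts(acc_x)]`.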

Figure 4.

(A) Rectified original signal (in grey); rectified and filtered signal using Savitzky–Golay filter (in blue) and threshold (in yellow) to detect the start and stop points (in green and red, respectively). A single lifting is shown. (B) Rectified original signal (in grey); rectified and filtered signal using Savitzky–Golay filter (in blue) and threshold (in yellow) to determine the start and stop points (in green and red, respectively). All the liftings of a single trial are shown. (C) Original acceleration signal and start and stop points detected to identify the ROIs.

For each ROI, several time- and frequency-domain features were extracted. The following time-domain features were extracted:

- Standard deviation (STD) (m/s² for acceleration, deg/s for angular velocity);

- Mean absolute value (MAV) (m/s² for acceleration, deg/s for angular velocity);

- Peak to peak amplitude (PP) (m/s² for acceleration, deg/s for angular velocity);

- Zero crossing rate (ZCR) (adim);

- Slope sign changes (SSC) (adim).

Concerning frequency-domain features, the total power spectrum (TPS), computed using the fast Fourier transform (FFT) algorithm, was considered to extract the related features. The following features were extracted:

- Total power (P) (m/s² for acceleration, deg/s for angular velocity);

- Spectral entropy (SE) (adim);

- Kurtosis (Kurt) (adim);

- Skewness (Skew) (adim).

The definitions of these features use the following notation:

- $x_i$: i-th sample of the signal;

- $N$: number of samples of the signal;

- $X_i$: i-th sample of the Fourier transformation of the signal;

- $P_i$: i-th sample of the TPS of the signal;

- $\mu$: mean of the TPS of the signal;

- $\sigma$: STD of the TPS of the signal.
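For reference, the listed features can be written in their common textbook form. The expressions below are a sketch under stated assumptions, not necessarily the exact formulas used in the study: $x_i$ denotes the i-th of $N$ signal samples, $X_i$ the i-th sample of its Fourier transform, $P_i$ the i-th sample of the TPS, and $\mu$ and $\sigma$ the mean and STD of the TPS. The normalization constants ($N$ versus $N-1$), the absence of amplitude thresholds in ZCR and SSC, and the base of the logarithm are assumptions.

```latex
\begin{align*}
\mathrm{STD}  &= \sqrt{\frac{1}{N-1}\sum_{i=1}^{N}\left(x_i-\bar{x}\right)^2},
               \qquad \bar{x}=\frac{1}{N}\sum_{i=1}^{N}x_i \\
\mathrm{MAV}  &= \frac{1}{N}\sum_{i=1}^{N}\lvert x_i\rvert \\
\mathrm{PP}   &= \max_i x_i-\min_i x_i \\
\mathrm{ZCR}  &= \frac{1}{N-1}\sum_{i=1}^{N-1}\mathbf{1}\!\left[x_i\,x_{i+1}<0\right] \\
\mathrm{SSC}  &= \sum_{i=2}^{N-1}\mathbf{1}\!\left[(x_i-x_{i-1})(x_i-x_{i+1})>0\right] \\
P_i           &= \lvert X_i\rvert^2, \qquad P=\sum_{i=1}^{N}P_i \\
\mathrm{SE}   &= -\sum_{i=1}^{N}p_i\log_2 p_i, \qquad p_i=\frac{P_i}{\sum_{j=1}^{N}P_j} \\
\mathrm{Kurt} &= \frac{1}{N}\sum_{i=1}^{N}\left(\frac{P_i-\mu}{\sigma}\right)^4 \\
\mathrm{Skew} &= \frac{1}{N}\sum_{i=1}^{N}\left(\frac{P_i-\mu}{\sigma}\right)^3
\end{align*}
```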

The aforementioned features were implemented according to the following references: mean absolute value [39], standard deviation [40], peak to peak amplitude [41], zero crossing rate [39], slope sign changes [39], total power [40], spectral entropy [42], kurtosis [40], and skewness [40].
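As an illustration of how the nine features could be computed for one ROI, the following NumPy sketch uses common textbook definitions. The function name `extract_features`, the one-sided FFT, the sample standard deviation (`ddof=1`), the ZCR normalization, and the base-2 logarithm are assumptions and may differ from the cited implementations.

```python
import numpy as np


def extract_features(x):
    """Compute time- and frequency-domain features for one ROI (1-D array)."""
    x = np.asarray(x, dtype=float)
    n = x.size
    feats = {}

    # Time-domain features.
    feats["STD"] = x.std(ddof=1)
    feats["MAV"] = np.mean(np.abs(x))
    feats["PP"] = x.max() - x.min()
    # Fraction of consecutive sample pairs whose signs differ.
    feats["ZCR"] = np.count_nonzero(x[:-1] * x[1:] < 0) / (n - 1)
    # A slope sign change occurs where consecutive differences have opposite signs.
    d = np.diff(x)
    feats["SSC"] = int(np.count_nonzero(d[:-1] * d[1:] < 0))

    # Frequency-domain features from the power spectrum (one-sided FFT assumed).
    tps = np.abs(np.fft.rfft(x)) ** 2
    feats["P"] = tps.sum()
    p = tps / tps.sum()
    p = p[p > 0]  # avoid log(0)
    feats["SE"] = -np.sum(p * np.log2(p))
    z = (tps - tps.mean()) / tps.std()
    feats["Kurt"] = np.mean(z ** 4)
    feats["Skew"] = np.mean(z ** 3)
    return feats
```

Applying this function to each of the six signal components (three acceleration axes and three angular velocity axes) of a ROI gives 9 × 6 = 54 values per lifting.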

2.5. Statistical Analysis

A statistical analysis was carried out to identify which features differed significantly between safe and unsafe postures during weight lifting. A Shapiro–Wilk normality test was performed to evaluate the normality of each feature in order to choose the appropriate two-tailed paired test: parametric (t-test) or non-parametric (Wilcoxon test). For all the statistical tests, a confidence level equal to 95% was chosen (definition of statistical significance: p-value < 0.05).
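The test-selection logic can be sketched with SciPy, although the study itself used JASP. In this sketch, normality is checked on the paired differences, which is one common convention and an assumption here; the function name `paired_comparison` and the way safe/unsafe values are paired are likewise hypothetical.

```python
import numpy as np
from scipy import stats


def paired_comparison(safe, unsafe, alpha=0.05):
    """Pick a two-tailed paired test from a Shapiro-Wilk check and run it."""
    safe = np.asarray(safe, dtype=float)
    unsafe = np.asarray(unsafe, dtype=float)

    # Shapiro-Wilk on the paired differences (assumed convention).
    _, p_normal = stats.shapiro(safe - unsafe)

    if p_normal > alpha:
        test_name = "paired t-test"
        _, p_value = stats.ttest_rel(safe, unsafe)
    else:
        test_name = "Wilcoxon signed-rank test"
        _, p_value = stats.wilcoxon(safe, unsafe)

    return test_name, float(p_value), bool(p_value < alpha)
```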

Statistical analysis was performed using JASP 0.17.1 (University of Amsterdam, Amsterdam, The Netherlands).

2.6. Machine Learning Analysis

ML is a field in which predictive models are created to learn or improve their performance based on input data observation [43]. In this study, the features extracted for each ROI of the inertial signals were used to build a supervised dataset to feed ML algorithms with the goal of performing a binary classification (safe and unsafe posture classification).

Supervised learning is an important branch of ML which creates models based on labeled data training [44]. In the present study, the following 8 supervised ML algorithms were implemented to assess their classification accuracy in order to discriminate safe and unsafe postures: support vector machine (SVM) [45]; decision tree (DT) [46]; gradient boosted tree (GB) [47]; random forest (RaF) [48]; logistic regression (LR) [49]; k nearest neighbor (kNN) [50]; multilayer perceptron (MLP) [51]; and probabilistic neural network (PNN) [52].

For all the ML algorithms, hyperparameter optimization was performed to maximize the classification accuracy. Regarding SVM, a polynomial kernel with bias, power, and gamma equal to 1.141, 1.734, and 1.489, respectively, was set. For kNN, k was set equal to 7. Concerning LR, a step size, maximum number of epochs, and epsilon equal to 0.516, 111, and 0.004, respectively, were chosen. Regarding DT, the minimum number of records per node was set equal to 5, the number of records to store per view was set equal to 6939, and the maximum nominal value was set equal to 4; pruning was not implemented. Concerning GB, the maximum number of levels was set equal to 2, the number of models was set equal to 149, and the learning rate was set equal to 0.333. For RaF, the maximum number of levels, number of models, and minimum node size were set equal to 5, 52, and 4, respectively. For MLP, the maximum number of iterations, hidden layer, and number of hidden layers were chosen to be equal to 60, 1, and 5, respectively. Finally, for PNN, the theta minus was set equal to 0.109, and the theta plus was set equal to 0.928.

Moreover, for the LR, kNN, and SVM algorithms, min-max (MM) normalization was performed so that all feature values were rescaled to the range [0, 1]. MM normalization was applied because some models are sensitive to the scale of input features, while other models, such as tree-based models, are less sensitive [53].
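A minimal sketch of min-max normalization is given below. Fitting the scaling parameters on the training data only and reusing them on the test data is an assumption made here to avoid information leakage; the text does not state how the scaling was fitted. The function names are hypothetical.

```python
import numpy as np


def fit_min_max(train):
    """Learn per-feature minimum and range from the training matrix."""
    train = np.asarray(train, dtype=float)
    lo = train.min(axis=0)
    hi = train.max(axis=0)
    # Guard against constant features (zero range).
    span = np.where(hi > lo, hi - lo, 1.0)
    return lo, span


def apply_min_max(X, lo, span):
    """Rescale X with previously fitted parameters."""
    return (np.asarray(X, dtype=float) - lo) / span
```

With this choice, training features fall exactly in [0, 1], while features of the held-out subject can fall slightly outside that range.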

As a validation strategy, leave-one-subject-out cross-validation (CV) was adopted. It uses each individual subject in turn as the test set and the remaining subjects as the training set. In this study, 14 subjects were used to train and 1 subject was used to test the predictive models; this procedure was repeated 15 times so as to test the ML models on each subject.
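The splitting scheme can be illustrated with a short generator in plain Python. The name `leave_one_subject_out` and the representation of the dataset as a list of per-instance subject identifiers are assumptions made for the example.

```python
def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) for each subject."""
    for held_out in sorted(set(subject_ids)):
        test_idx = [i for i, s in enumerate(subject_ids) if s == held_out]
        train_idx = [i for i, s in enumerate(subject_ids) if s != held_out]
        yield held_out, train_idx, test_idx
```

For 15 subjects with 40 liftings each, every fold contains 560 training instances and 40 test instances, and no subject appears on both sides of a split.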

Accuracy, F-measure, specificity, sensitivity, precision, recall, and area under the receiver operating characteristic curve (AUCROC) were used as evaluation metrics to assess the classification power of the proposed ML algorithms fed with the extracted features.
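Most of these metrics follow directly from the four confusion-matrix counts, as the sketch below shows. Which class is treated as positive is not stated in the text, so the `positive` argument is an assumption; note that sensitivity and recall are the same quantity.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Compute standard binary classification metrics from label lists."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p != positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)

    def ratio(num, den):
        # Undefined ratios (empty denominator) are reported as NaN.
        return num / den if den else float("nan")

    sensitivity = ratio(tp, tp + fn)  # identical to recall
    precision = ratio(tp, tp + fp)
    return {
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "sensitivity": sensitivity,
        "recall": sensitivity,
        "specificity": ratio(tn, tn + fp),
        "precision": precision,
        "f_measure": ratio(2 * precision * sensitivity, precision + sensitivity),
    }
```

In a leave-one-subject-out setting, these values would be computed per fold and then summarized as mean ± standard deviation across the 15 folds.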

Moreover, feature importance was computed according to the information gain (IG) method. The IG approach, which is based on entropy, indicates the importance of each feature with respect to the target class [54].
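To make the entropy-based idea concrete, the sketch below computes the information gain of a single continuous feature after discretizing it into equal-width bins. The discretization scheme and the number of bins are assumptions, since the text does not specify how continuous features were handled; the function names are hypothetical.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(values, labels, bins=4):
    """IG = H(class) - H(class | binned feature), with equal-width bins."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 for a constant feature
    binned = [min(int((v - lo) / width), bins - 1) for v in values]

    n = len(labels)
    conditional = 0.0
    for b in set(binned):
        subset = [y for k, y in zip(binned, labels) if k == b]
        conditional += len(subset) / n * entropy(subset)
    return entropy(labels) - conditional
```

A feature that separates the two classes perfectly reaches the maximum gain of 1 bit for balanced classes, whereas a feature unrelated to the class yields a gain of 0.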

The ML analysis was performed using the Knime Analytics Platform (version 4.1.3), a platform widely used in the biomedical engineering field [55,56,57].

3. Results

Firstly, a statistical analysis based on two-tailed paired tests—the parametric test (t-test) for features with a normal distribution and the non-parametric test (Wilcoxon test) for features with non-normal distribution—was performed to evaluate which features were statistically significant in order to discriminate the two target classes, namely, safe and unsafe postures. This analysis was carried out separately for acceleration and angular velocity, considering all axes (x, y, z). In Table 3 and Table 4, the results of the statistical analysis for linear acceleration and angular velocity, respectively, are shown.

Table 3.

Paired test between safe and unsafe postures for each feature extracted from acceleration signal (acc).

Table 4.

Paired test between safe and unsafe postures for each feature extracted from angular velocity signal (vel).

Secondly, the feasibility of the eight ML algorithms (fed with time- and frequency-domain features extracted from inertial signals acquired by means of a single IMU placed on the sternum) to classify safe and unsafe postures was assessed. The supervised dataset consisted of 600 instances (15 subjects × 40 lifting instances), 54 features (9 extracted features × 2 signals (acceleration and angular velocity) × 3 axes (x, y, z) × 1 body position (sternum)), and 2 classes (safe posture, unsafe posture). The evaluation metric scores reached by the ML classifiers using the leave-one-subject-out CV strategy and hyperparameter optimization are reported in Table 5.

Table 5.

Evaluation metric scores reported as mean ± standard deviation using features extracted from inertial signals, leave-one-subject-out CV strategy, and hyperparameter optimization for each classification algorithm.

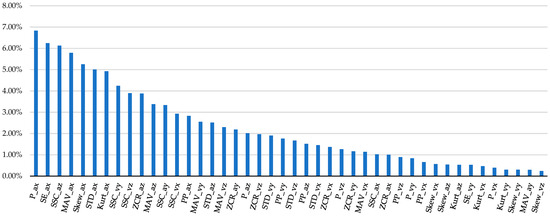

Finally, the feature importance—according to the IG method—is shown in Figure 5. The only features with non-zero ranking values are reported.

Figure 5.

Ranking of the features extracted from acceleration and angular velocity signals along x, y, and z-axes (ax, ay, az, vx, vy, vz) according to the IG method. Spectral entropy (SE); kurtosis (Kurt); skewness (Skew); power (P); peak to peak amplitude (PP); standard deviation (STD); mean absolute value (MAV); zero crossing rate (ZCR); slope sign changes (SSC).

4. Discussion

The purpose of this work was to study the feasibility of ML models fed with time- and frequency-domain features extracted from inertial signals acquired by a single IMU placed on the sternum in order to automatically discriminate safe and unsafe postures during weight lifting.

Table 3 and Table 4 report the results of the statistical analysis based on two-tailed paired tests, which show that almost all the features, for both acceleration and angular velocity, differed significantly between safe and unsafe postures. All features extracted from the angular velocity signal exhibited statistically significant differences between the two classes (p-value lower than 0.001), while for the acceleration signal, PP_acc and MAV_acc did not exhibit statistically significant differences, with p-values equal to 0.105 and 0.127, respectively. This result suggests that, in this context, the angular velocity has a slightly higher discriminant power than the acceleration signal.

Considering the correlation existing among the instances (liftings) of the same subject, and to avoid training and testing the algorithms on instances of the same subject, an ML analysis using the leave-one-subject-out CV strategy was performed in order to obtain more robust results. Table 5 shows the evaluation metric scores for each ML model fed with specific time- and frequency-domain features to classify safe and unsafe postures. Almost all of the ML algorithms reached a classification accuracy of more than 0.9, except for PNN and DT (accuracy equal to 0.88 ± 0.17 and 0.79 ± 0.16, respectively). The low accuracy value of the PNN algorithm could be due to the correlation existing between the features, since probabilistic classifiers make a basic assumption of independence among features, which is not always verified. The best ML algorithm was LR, with accuracy, F-measure, specificity, sensitivity, precision, recall, and AUCROC equal to 0.96 ± 0.11, 0.97 ± 0.08, 0.92 ± 0.21, 0.99 ± 0.01, 0.95 ± 0.12, 0.99 ± 0.01, and 0.99 ± 0.01, respectively. AUCROC values provide information about the ability of ML algorithms to discriminate between classes. Conventionally, AUCROC values are divided into three ranges: moderate discrimination power (values of 0.70–0.80), good discrimination power (values of 0.80–0.90), and excellent discrimination power (values greater than 0.90). Regarding LR, the AUCROC value was equal to 0.99 ± 0.01, demonstrating its excellent ability to discriminate safe and unsafe classes.
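The AUCROC can be read as the probability that a randomly chosen positive instance receives a higher classifier score than a randomly chosen negative one. The sketch below computes it in this pairwise form and maps the value onto the three ranges quoted above; the function names, the choice of positive class, and the handling of the boundary values 0.80 and 0.90 are assumptions.

```python
def auc_roc(scores, labels, positive=1):
    """AUC as the fraction of positive/negative pairs ranked correctly (ties count 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == positive]
    neg = [s for s, y in zip(scores, labels) if y != positive]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))


def discrimination_level(auc):
    """Map an AUC value onto the conventional ranges quoted in the text."""
    if auc > 0.90:
        return "excellent"
    if auc >= 0.80:
        return "good"
    if auc >= 0.70:
        return "moderate"
    return "below the quoted ranges"
```

The pairwise form is quadratic in the number of instances, which is acceptable for a fold of 40 test liftings but would call for a rank-based computation on larger datasets.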

Figure 5 shows the feature importance results based on the IG method, and it emerged that 44 features out of 54 (81.5%) had non-zero values.

Considering all inertial signals, it emerged that the x, y, and z axes showed ranking values equal to 47.87%, 19.40%, and 32.72%, respectively. Therefore, the x-axis (i.e., vertical axis) was more representative than the y and z axes (i.e., medio-lateral and antero-posterior axes, respectively) in classifying the target classes. We expected this result, since the weight lifting was carried out along the x axis, namely, the vertical direction.

Considering all axes (x, y, z) and inertial signals, we highlight that SSC, MAV, STD, ZCR, P, PP, Skew, SE, and Kurt presented the following ranking values, respectively: 21.55%, 15.44%, 12.55%, 11.56%, 11.33%, 7.66%, 6.90%, 6.77%, and 6.22%. This result suggests that the features extracted from the time-domain were more predictive than frequency-domain features in classifying safe and unsafe postures during load liftings, with a ranking value equal to 61.11%. The use of time-domain features alone is relevant, since it could reduce the computational effort by allowing for a real-time analysis. However, it needs to be understood how much it affects the predictive ability of the ML models.

Different studies presented in the scientific literature have attempted to classify safe and unsafe postures during lifting actions using ML algorithms. Hung et al. [58] used a DL model fed with kinematic features extracted from a three-dimensional motion tracking system to classify three posture classes (stoop, stand, squat), reaching a classification accuracy of up to 94%. The limitation of the methodology described above, as highlighted by the authors themselves, was that the effect of holding the load while performing a lifting task was not considered, which determined a change in the classification accuracy. Another limitation is the very small study population, since they considered only two subjects, and therefore, the results cannot be considered robust.

In the study of Greene et al. [59], the authors evaluated the feasibility of a DT model using a depth camera located in front of the sagittal plane of the subject to extract kinematic features in order to classify three posture classes (stoop, stand, squat), reaching a classification accuracy of up to 100%. The same authors [59] developed a methodology to automatically classify lifting postures (stoop, squat, and stand) using features obtained by drawing a rectangular bounding box tightly around the body on the sagittal plane in video recordings. A CART classification algorithm was used in this work, reaching an accuracy of up to 100%.

Chae et al. [60] proposed a methodology based on ML algorithms (SVM and RaF) and DL algorithms (artificial neural network (ANN)) fed with kinetic and kinematic features obtained from six cameras and two ground reaction forces for the binary classification of stoop and squat postures, reaching an accuracy equal to 94%.

Conforti et al. [23] studied the feasibility of an SVM algorithm with linear, polynomial (quadratic and cubic), and Gaussian kernels fed with time-domain features, extracted from inertial signals using eight IMUs applied on the upper and lower body segments of the subject, to classify correct and incorrect postures during load lifting, obtaining a classification accuracy greater than 90%. The limitation, as reported by the same authors, was that the high number of wearable sensors used (eight IMUs) confined the load lifting assessment to the laboratory. On the other hand, contrary to our study, the extraction of only time-domain features lessened the computational burden, potentially allowing for the analysis of posture in real time.

Furthermore, Ryu et al. [61] assessed the action recognition of masonry workers by means of three ML classification algorithms—SVM, kNN, and MLP—fed with time- and frequency-domain features extracted from acceleration signals acquired from the wrists, reaching a classification accuracy between 80% and 100%.

O’Reilly et al. [62] explored the feasibility of a back-propagation neural network classifier fed with time-domain features, which were extracted from inertial signals using one IMU applied on the lumbar region, to classify correct and incorrect postures during squat lifting. The classifier was trained and tested using leave-one-subject-out CV, obtaining an accuracy equal to 80.45%.

Finally, Youssef et al. [63] assessed the feasibility of the ANN algorithm fed with kinematic features obtained from nine IMUs and one camera for binary classification between good and bad squat postures. The classifier was trained and tested using 10-fold CV, reaching an accuracy equal to 96%.

On the basis of these results, and considering the number and the type of wearable sensors used in the previously proposed methodologies, the proposed approach could address the problem of poor applicability in the workplace by using a single inertial sensor placed on the sternum. Moreover, the results demonstrated the good discrimination power of the proposed methodology, as reported in Table 5, even though we used a simple technology (IMU) and a simple configuration (a single inertial sensor placed on the sternum), which make the procedure applicable in real-world settings (e.g., the workplace). Finally, from a cost-effectiveness point of view, the proposed methodology is more economical than the other technologies proposed in the scientific literature, since it is based on accelerometers and gyroscopes, which are cheaper than cameras or optoelectronic systems.

Therefore, the proposed methodology—which combines ML algorithms and time- and frequency-domain features extracted from inertial signals acquired from a single IMU placed on the sternum—proved to be able to discriminate safe and unsafe postures during weight lifting.

5. Conclusions

The combination of specific features extracted from inertial signals acquired by means of a single IMU placed on the sternum and ML algorithms allowed us to distinguish safe and unsafe postures. In particular, the LR classifier reached high scores in the evaluation metrics. The proposed methodology was able to discriminate safe and unsafe postures, making the procedure of posture assessment automatic, economical, non-time-consuming, non-invasive, and not operator-dependent. Therefore, the proposed methodology could be of direct practical relevance for occupational ergonomics. Moreover, the use of a single IMU sensor allows this procedure to be applicable in the workplace, and not confined to the laboratory like the other methodologies proposed in the scientific literature that are based on optoelectronic systems. Although the use of several types of sensors can provide further information (i.e., the contribution of lower limb or trunk kinematics), the use of a single type of sensor, as is the case in this study, can make the procedure simpler and more applicable in the workplace. To reduce biomechanical overload and to prevent the occurrence of WMSDs, the design of a real-time posture-monitoring platform based on our methodology could be a powerful solution in the workplace, although the analysis in the frequency domain could be limiting.

However, there are some limitations in this study that make the work preliminary. Firstly, only 15 subjects were enrolled in this experiment. Secondly, we did not consider older people with comorbidities and/or bone fragility, conditions that can trigger or worsen WMSDs and that could affect both the accuracy of ML classification and, if the lifting tasks are not conducted under medical supervision and with previously determined bone mineral, cardiorespiratory, postural, and fitness statuses, the participants’ health. Future investigation in a larger study population, in terms of both sample size and age range, could confirm the potential of this methodology to classify safe and unsafe postures during weight lifting in order to offer a valid integration of the procedures already established in the occupational ergonomics field. As a future development, DL algorithms could also be investigated in order to explore their feasibility in discriminating safe and unsafe postures using the same dataset, as well as to understand whether they would improve the evaluation metrics. Moreover, it could be interesting to validate the proposed methodology by comparing it with methodologies already established in occupational ergonomics (e.g., PoseNet [64]).

Finally, it would be interesting to explore the long-term effectiveness and reliability of using machine learning algorithms and IMUs for ergonomic assessment, even if it is premature, considering the recent interest in the application of wearable technologies coupled with artificial intelligence in the occupational ergonomics field.

Author Contributions

Conceptualization, G.P. and L.D.; methodology, G.P. and L.D.; software, G.P. and L.D.; validation, G.P., M.R., F.E. and L.D.; formal analysis, G.P. and L.D.; investigation, G.P. and L.D.; resources, M.C. and F.A.; data curation, G.P. and L.D.; writing—original draft preparation, G.P. and L.D.; writing—review and editing, M.R., F.E., M.C., A.S., F.A. and L.D.; visualization, G.P. and L.D.; supervision, M.C., A.S., F.A. and L.D.; project administration, F.A. and L.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This article does not require ethics committee or institutional review board approval, as it deals with the analysis of datasets acquired from healthy human volunteers through non-invasive instrumentation; the data were properly anonymized and informed consent was obtained at the time of the original data collection.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The datasets generated and analyzed in this study are not publicly available due to the privacy policy, but are available from the corresponding author upon reasonable request.

Acknowledgments

Work by L.D. and F.E. was supported by #NEXTGENERATIONEU (NGEU) and funded by the Ministry of University and Research (MUR), National Recovery and Resilience Plan (NRRP), project MNESYS (PE0000006)—a multiscale integrated approach to the study of the nervous system in health and disease (DN. 1553 11.10.2022).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Grieco, A.; Molteni, G.; Vito, G.D.; Sias, N. Epidemiology of musculoskeletal disorders due to biomechanical overload. Ergonomics 1998, 41, 1253–1260.

- Radwin, R.G.; Marras, W.S.; Lavender, S.A. Biomechanical aspects of work-related musculoskeletal disorders. Theor. Issues Ergon. Sci. 2001, 2, 153–217.

- Marras, W.S.; Lavender, S.A.; Leurgans, S.E.; Fathallah, F.A.; Ferguson, S.A.; Gary Allread, W.; Rajulu, S.L. Biomechanical risk factors for occupationally related low back disorders. Ergonomics 1995, 38, 377–410.

- Hales, T.R.; Bernard, B.P. Epidemiology of work-related musculoskeletal disorders. Orthop. Clin. N. Am. 1996, 27, 679–709.

- Trask, C.; Mathiassen, S.E.; Wahlström, J.; Heiden, M.; Rezagholi, M. Modeling costs of exposure assessment methods in industrial environments. Work 2012, 41 (Suppl. S1), 6079–6086.

- Waters, T.R.; Putz-Anderson, V.; Garg, A. Applications manual for the revised NIOSH lifting equation. Ergonomics 1993, 36, 749–776.

- Karhu, O.; Härkönen, R.; Sorvali, P.; Vepsäläinen, P. Observing working postures in industry: Examples of OWAS application. Appl. Ergon. 1981, 12, 13–17.

- Battevi, N.; Menoni, O.; Ricci, M.G.; Cairoli, S. MAPO index for risk assessment of patient manual handling in hospital wards: A validation study. Ergonomics 2006, 49, 671–687.

- Hignett, S.; McAtamney, L. Rapid entire body assessment (REBA). Appl. Ergon. 2000, 31, 201–205.

- McAtamney, L.; Corlett, E.N. RULA: A survey method for the investigation of work-related upper limb disorders. Appl. Ergon. 1993, 24, 91–99.

- Donisi, L.; Cesarelli, G.; Pisani, N.; Ponsiglione, A.M.; Ricciardi, C.; Capodaglio, E. Wearable Sensors and Artificial Intelligence for Physical Ergonomics: A Systematic Review of Literature. Diagnostics 2022, 12, 3048.

- D’Addio, G.; Donisi, L.; Mercogliano, L.; Cesarelli, G.; Bifulco, P.; Cesarelli, M. Potential biomechanical overload on skeletal muscle structures in students during walk with backpack. In Proceedings of the XV Mediterranean Conference on Medical and Biological Engineering and Computing—MEDICON 2019, Coimbra, Portugal, 26–28 September 2019.

- Donisi, L.; Cesarelli, G.; Coccia, A.; Panigazzi, M.; Capodaglio, E.M.; D’Addio, G. Work-related risk assessment according to the revised NIOSH lifting equation: A preliminary study using a wearable inertial sensor and machine learning. Sensors 2021, 21, 2593.

- Ranavolo, A.; Draicchio, F.; Varrecchia, T.; Silvetti, A.; Iavicoli, S. Wearable monitoring devices for biomechanical risk assessment at work: Current status and future challenges—A systematic review. Int. J. Environ. Res. Public Health 2018, 15, 2001.

- Greco, A.; Muoio, M.; Lamberti, M.; Gerbino, S.; Caputo, F.; Miraglia, N. Integrated wearable devices for evaluating the biomechanical overload in manufacturing. In Proceedings of the 2019 II Workshop on Metrology for Industry 4.0 and IoT (MetroInd4.0&IoT), Naples, Italy, 4–6 June 2019.

- Stefana, E.; Marciano, F.; Rossi, D.; Cocca, P.; Tomasoni, G. Wearable devices for ergonomics: A systematic literature review. Sensors 2021, 21, 777.

- Peppoloni, L.; Filippeschi, A.; Ruffaldi, E.; Avizzano, C.A. A novel wearable system for the online assessment of risk for biomechanical load in repetitive efforts. Int. J. Ind. Ergon. 2016, 52, 1–11.

- Nath, N.D.; Chaspari, T.; Behzadan, A.H. Automated Ergonomic Risk Monitoring Using Body-Mounted Sensors and Machine Learning. Adv. Eng. Inform. 2018, 38, 514–526.

- Yu, Y.; Li, H.; Yang, X.; Umer, W. Estimating Construction Workers’ Physical Workload by Fusing Computer Vision and Smart Insole Technologies. In Proceedings of the 35th International Symposium on Automation and Robotics in Construction (ISARC 2018), Berlin, Germany, 20–25 July 2018.

- Ranavolo, A.; Varrecchia, T.; Iavicoli, S.; Marchesi, A.; Rinaldi, M.; Serrao, M.; Conforti, L.; Cesarelli, M.; Draicchio, F. Surface electromyography for risk assessment in work activities designed using the “revised NIOSH lifting equation”. Int. J. Ind. Ergon. 2018, 68, 34–45.

- Raso, R.; Emrich, A.; Burghardt, T.; Schlenker, M.; Gudehus, T.; Sträter, O.; Fettke, P.; Loos, P. Activity Monitoring Using Wearable Sensors in Manual Production Processes—An Application of CPS for Automated Ergonomic Assessments. In Proceedings of the Multikonferenz Wirtschaftsinformatik 2018 (MKWI 2018), Lüneburg, Germany, 6–9 March 2018.

- Donisi, L.; Cesarelli, G.; Capodaglio, E.; Panigazzi, M.; D’Addio, G.; Cesarelli, M.; Amato, F. A Logistic Regression Model for Biomechanical Risk Classification in Lifting Tasks. Diagnostics 2022, 12, 2624.

- Conforti, I.; Mileti, I.; Del Prete, Z.; Palermo, E. Measuring biomechanical risk in lifting load tasks through wearable system and machine-learning approach. Sensors 2020, 20, 1557.

- Prisco, G.; Romano, M.; Esposito, F.; Cesarelli, M.; Santone, A.; Donisi, L. Feasibility of tree-based Machine Learning models to discriminate safe and unsafe postures during weight lifting. In Proceedings of the 2023 IEEE International Conference on Metrology for eXtended Reality, Artificial Intelligence and Neural Engineering (IEEE MetroXRAINE 2023), Milan, Italy, 25–27 October 2023.

- Aiello, G.; Certa, A.; Abusohyon, I.; Longo, F.; Padovano, A. Machine Learning approach towards real time assessment of hand-arm vibration risk. IFAC-Pap. 2021, 54, 1187–1192.

- Zhao, J.; Obonyo, E. Applying incremental Deep Neural Networks-based posture recognition model for ergonomics risk assessment in construction. Adv. Eng. Inform. 2021, 50, 101374.

- Antwi-Afari, M.F.; Li, H.; Umer, W.; Yu, Y.; Xing, X. Construction activity recognition and ergonomic risk assessment using a wearable insole pressure system. J. Constr. Eng. Manag. 2020, 146, 04020077.

- Fridolfsson, J.; Arvidsson, D.; Doerks, F.; Kreidler, T.J.; Grau, S. Workplace activity classification from shoe-based movement sensors. BMC Biomed. Eng. 2020, 2, 8.

- Mudiyanselage, S.E.; Nguyen, P.H.D.; Rajabi, M.S.; Akhavian, R. Automated workers’ ergonomic risk assessment in manual material handling using sEMG wearable sensors and machine learning. Electronics 2021, 10, 2558.

- Donisi, L.; Jacob, D.; Guerrini, L.; Prisco, G.; Esposito, F.; Cesarelli, M.; Amato, F.; Gargiulo, P. sEMG Spectral Analysis and Machine Learning Algorithms Are Able to Discriminate Biomechanical Risk Classes Associated with Manual Material Liftings. Bioengineering 2023, 10, 1103.

- Mancini, M.; King, L.; Salarian, A.; Holmstrom, L.; McNames, J.; Horak, F.B. Mobility lab to assess balance and gait with synchronized body-worn sensors. J. Bioeng. Biomed. Sci. 2011, 007.

- Mancini, M.; Horak, F.B. Potential of APDM mobility lab for the monitoring of the progression of Parkinson’s disease. Expert Rev. Med. Devices 2016, 13, 455–462.

- Morris, R.; Stuart, S.; McBarron, G.; Fino, P.C.; Mancini, M.; Curtze, C. Validity of Mobility Lab (version 2) for gait assessment in young adults, older adults and Parkinson’s disease. Physiol. Meas. 2019, 40, 095003.

- Donisi, L.; Pagano, G.; Cesarelli, G.; Coccia, A.; Amitrano, F.; D’Addio, G. Benchmarking between two wearable inertial systems for gait analysis based on a different sensor placement using several statistical approaches. Measurement 2021, 173, 108642.

- Schmitz-Hübsch, T.; Brandt, A.U.; Pfueller, C.; Zange, L.; Seidel, A.; Kühn, A.A.; Minnerop, M.; Doss, S. Accuracy and repeatability of two methods of gait analysis–GaitRite™ und mobility lab™–in subjects with cerebellar ataxia. Gait Posture 2016, 48, 194–201.

- Straker, L. Evidence to support using squat, semi-squat and stoop techniques to lift low-lying objects. Int. J. Ind. Ergon. 2003, 31, 149–160.

- Bazrgari, B.; Shirazi-Adl, A.; Arjmand, N. Analysis of squat and stoop dynamic liftings: Muscle forces and internal spinal loads. Eur. Spine J. 2007, 16, 687–699.

- Schafer, R.W. What is a Savitzky-Golay filter? [lecture notes]. IEEE Signal Process. Mag. 2011, 28, 111–117.

- Geethanjali, P.; Mohan, Y.K.; Sen, J. Time domain feature extraction and classification of EEG data for brain computer interface. In Proceedings of the 2012 9th International Conference on Fuzzy Systems and Knowledge Discovery, Sichuan, China, 29–31 May 2012.

- Fan, S.; Jia, Y.; Jia, C. A feature selection and classification method for activity recognition based on an inertial sensing unit. Information 2019, 10, 290.

- Barandas, M.; Folgado, D.; Fernandes, L.; Santos, S.; Abreu, M.; Bota, P.; Liu, H.; Schultz, T.; Gamboa, H. TSFEL: Time series feature extraction library. SoftwareX 2020, 11, 100456.

- Pan, Y.N.; Chen, J.; Li, X.L. Spectral entropy: A complementary index for rolling element bearing performance degradation assessment. Proc. Inst. Mech. Eng. Part C J. Mech. Eng. Sci. 2009, 223, 1223–1231.

- Zhou, Z.H. Machine Learning, 1st ed.; Springer Nature: Nanjing, China, 2021.

- Cunningham, P.; Cord, M.; Delany, S.J. Supervised learning. In Machine Learning Techniques for Multimedia: Case Studies on Organization and Retrieval; Springer: Berlin/Heidelberg, Germany, 2008; pp. 21–49.

- Noble, W.S. What is a support vector machine? Nat. Biotechnol. 2006, 24, 1565–1567.

- Kotsiantis, S.B. Decision trees: A recent overview. Artif. Intell. Rev. 2013, 39, 261–283.

- Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 2001, 29, 1189–1232.

- Biau, G.; Scornet, E. A random forest guided tour. Test 2016, 25, 197–227.

- Christodoulou, E.; Ma, J.; Collins, G.S.; Steyerberg, E.W.; Verbakel, J.Y.; Van Calster, B. A systematic review shows no performance benefit of machine learning over logistic regression for clinical prediction models. J. Clin. Epidemiol. 2019, 110, 12–22.

- Peterson, L.E. K-nearest neighbor. Scholarpedia 2009, 4, 1883.

- Pal, S.K.; Mitra, S. Multilayer perceptron, fuzzy sets, classification. IEEE Trans. Neural Netw. 1992, 3, 683–697.

- Specht, D.F. Probabilistic neural networks. Neural Netw. 1990, 3, 109–118.

- Zheng, A.; Casari, A. Feature Engineering for Machine Learning: Principles and Techniques for Data Scientists; O’Reilly Media, Inc: Sebastopol, CA, USA, 2018.

- Azhagusundari, B.; Thanamani, A.S. Feature selection based on information gain. Int. J. Innov. Technol. Explor. Eng. 2013, 2, 18–21.

- Jacob, D.; Unnsteinsdóttir Kristensen, I.S.; Aubonnet, R.; Recenti, M.; Donisi, L.; Ricciardi, C.; Svansson, H.A.R.; Agnarsdottir, S.; Colacino, A.; Jonsdottir, M.K.; et al. Towards defining biomarkers to evaluate concussions using virtual reality and a moving platform (BioVRSea). Sci. Rep. 2022, 12, 8996.

- An, J.Y.; Seo, H.; Kim, Y.G.; Lee, K.E.; Kim, S.; Kong, H.J. Codeless Deep Learning of COVID-19 Chest X-Ray Image Dataset with KNIME Analytics Platform. Healthc. Inform. Res. 2021, 27, 82–91.

- Ricciardi, C.; Ponsiglione, A.M.; Scala, A.; Borrelli, A.; Misasi, M.; Romano, G.; Russo, G.; Triassi, M.; Improta, G. Machine learning and regression analysis to model the length of hospital stay in patients with femur fracture. Bioengineering 2022, 9, 172.

- Hung, J.S.; Liu, P.L.; Chang, C.C. A deep learning-based approach for human posture classification. In Proceedings of the 2020 2nd International Conference on Management Science and Industrial Engineering, Osaka, Japan, 7–9 April 2020.

- Greene, R.L.; Hu, Y.H.; Difranco, N.; Wang, X.; Lu, M.L.; Bao, S.; Lin, J.; Radwin, R.G. Predicting sagittal plane lifting postures from image bounding box dimensions. Hum. Factors 2019, 61, 64–77.

- Chae, S.; Choi, A.; Jung, H.; Kim, T.H.; Kim, K.; Mun, J.H. Machine learning model to estimate net joint moments during lifting task using wearable sensors: A preliminary study for design of exoskeleton control system. Appl. Sci. 2021, 11, 11735.

- Ryu, J.; Seo, J.; Liu, M.; Lee, S.; Haas, C.T. Action recognition using a wristband-type activity tracker: Case study of masonry work. In Proceedings of the 2016 Construction Research Congress, San Juan, Puerto Rico, 31 May–2 June 2016.

- O’Reilly, M.; Whelan, D.; Chanialidis, C.; Friel, N.; Delahunt, E.; Ward, T.; Caulfield, B. Evaluating squat performance with a single inertial measurement unit. In Proceedings of the 2015 IEEE 12th International Conference on Wearable and Implantable Body Sensor Networks (BSN), Cambridge, MA, USA, 9–12 June 2015.

- Youssef, F.; Zaki, A.B.; Gomaa, W. Analysis of the Squat Exercise from Visual Data. In Proceedings of the 19th International Conference on Informatics in Control, Automation and Robotics (ICINCO), Lisbon, Portugal, 14–16 July 2022.

- Chen, Y.; Shen, C.; Wei, X.S.; Liu, L.; Yang, J. Adversarial posenet: A structure-aware convolutional network for human pose estimation. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).