1. Introduction

Epilepsy is one of the most common neurological disorders worldwide. According to the World Health Organization, approximately 50 million people worldwide suffer from this disorder [

1]. Epilepsy occurs due to brain neurons’ abnormal and sudden secretions [

2]. Epilepsy is typically not a direct cause of death in most cases. However, it is important to note that epilepsy can increase the risk of certain potentially life-threatening complications. These complications include sudden unexpected death in epilepsy (SUDEP); SUDEP is a rare but significant risk associated with epilepsy. It refers to cases where a person with epilepsy dies suddenly and unexpectedly, and no clear cause of death is identified during autopsy. The exact mechanisms of SUDEP are not fully understood, but it is believed to be related to a combination of factors, including seizures affecting the respiratory or cardiovascular systems. They also include accidents and injuries; seizures can cause loss of consciousness, convulsions, or altered awareness, which can lead to accidents and injuries. Another complication is status epilepticus: a prolonged seizure or a series of seizures where the person does not regain consciousness between seizures. Status epilepticus is a medical emergency and requires immediate treatment. If not treated promptly, it can lead to severe brain damage or even death. It is worth mentioning that the overall mortality rate for epilepsy is generally low, and most people with epilepsy can lead full and productive lives with appropriate management and treatment. However, it is crucial for individuals with epilepsy to work closely with healthcare professionals to minimize the risks associated with the condition and manage it effectively [

3]. The inability of brain neurons to regulate electric signals in the brain results in seizures, a condition that has been of interest to many researchers because of its complexity and seriousness. Seizures are usually accompanied by disorders in sensation, movement or mental functions [

4]. Over 30% of patients with epilepsy still suffer from uncontrolled seizures despite treatment with antiepileptic drugs. Based on the areas of the brain that are activated during seizures, seizures are classified into two types: partial and generalized. A partial seizure originates from one region of the brain and remains in one hemisphere, whereas a generalized seizure affects the whole brain [

5]. The electrical activity of the brain is represented by EEG signal waves originating from the brain’s neurons. EEG signal waves are recorded by placing non-invasive electrodes on the scalp. These signals display information on mental defects and neurological conditions [

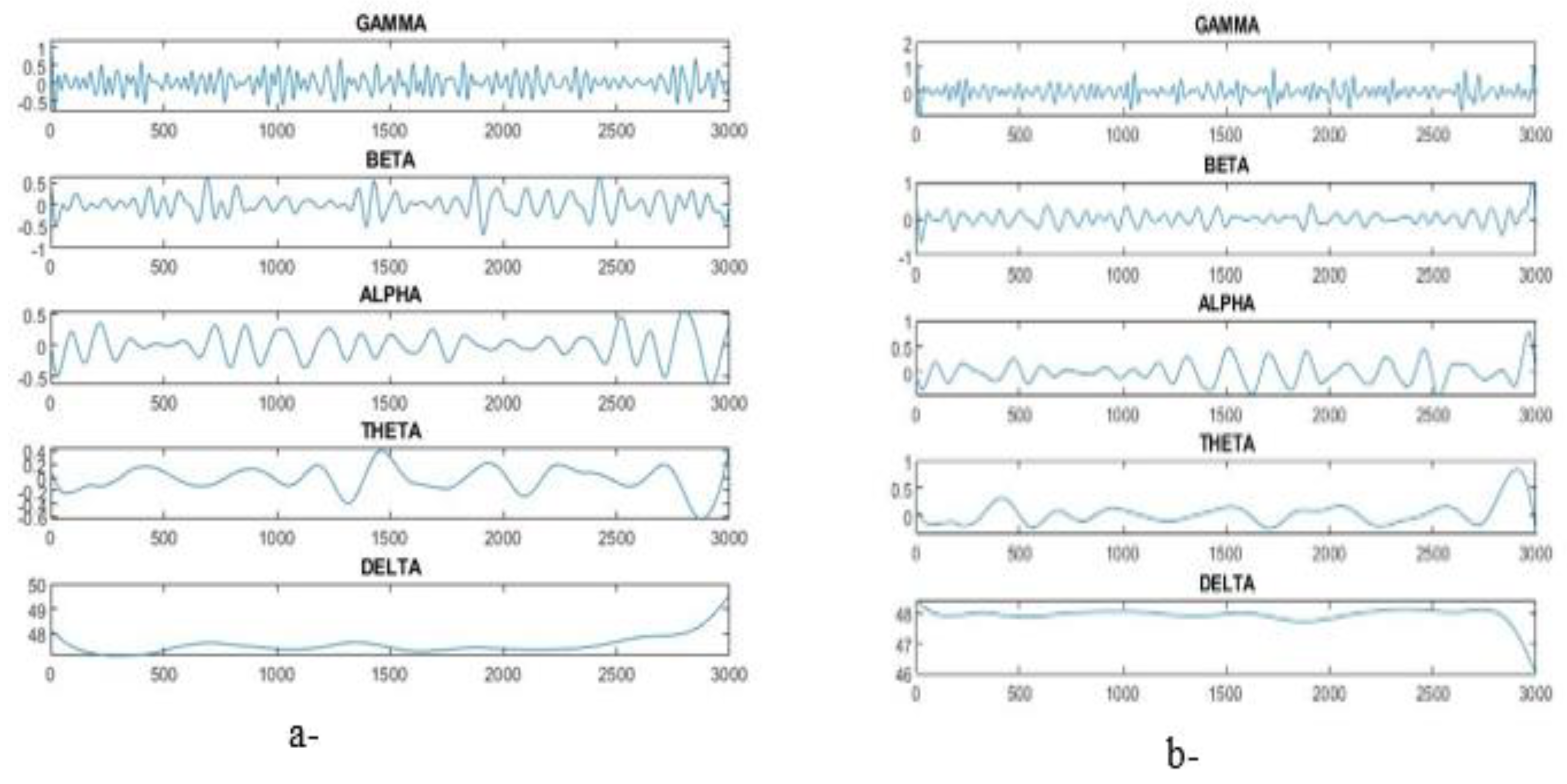

6]. The brain’s electrical signals can be analyzed through the five frequency bands produced by the EEG [

7]: delta, alpha, theta, gamma and beta. Excessive discharge of electrical signals in brain cells produces abnormal seizures, which are one of the signs of epilepsy. Radiologists distinguish EEG signals caused by seizures from other factors via special discriminatory patterns, such as high-amplitude repetitive activities with a combination of slow and spike waves [

8]. Hence, discovering these features is a difficult task, and following each EEG signal is troublesome and time consuming [

9]. Therefore, an automated approach for detecting seizures to diagnose epilepsy in a timely manner needs to be developed [

10]. EEG is an effective tool for detecting areas of differences in neuronal activity correlated with epilepsy. Accordingly, seizures can be detected by analyzing EEG signals. The key point in analyzing EEG signals is to extract the most important and most effective features that represent the characteristics of the signal [

11]. Given that the characteristics of EEG signals have not been established yet, several methods for identifying EEG on the basis of time–frequency (TF) algorithms have been proposed for classification [

12]. The present study established TF algorithms as one of the most important algorithms for discovering the behavior of different signals with different frequencies and times [

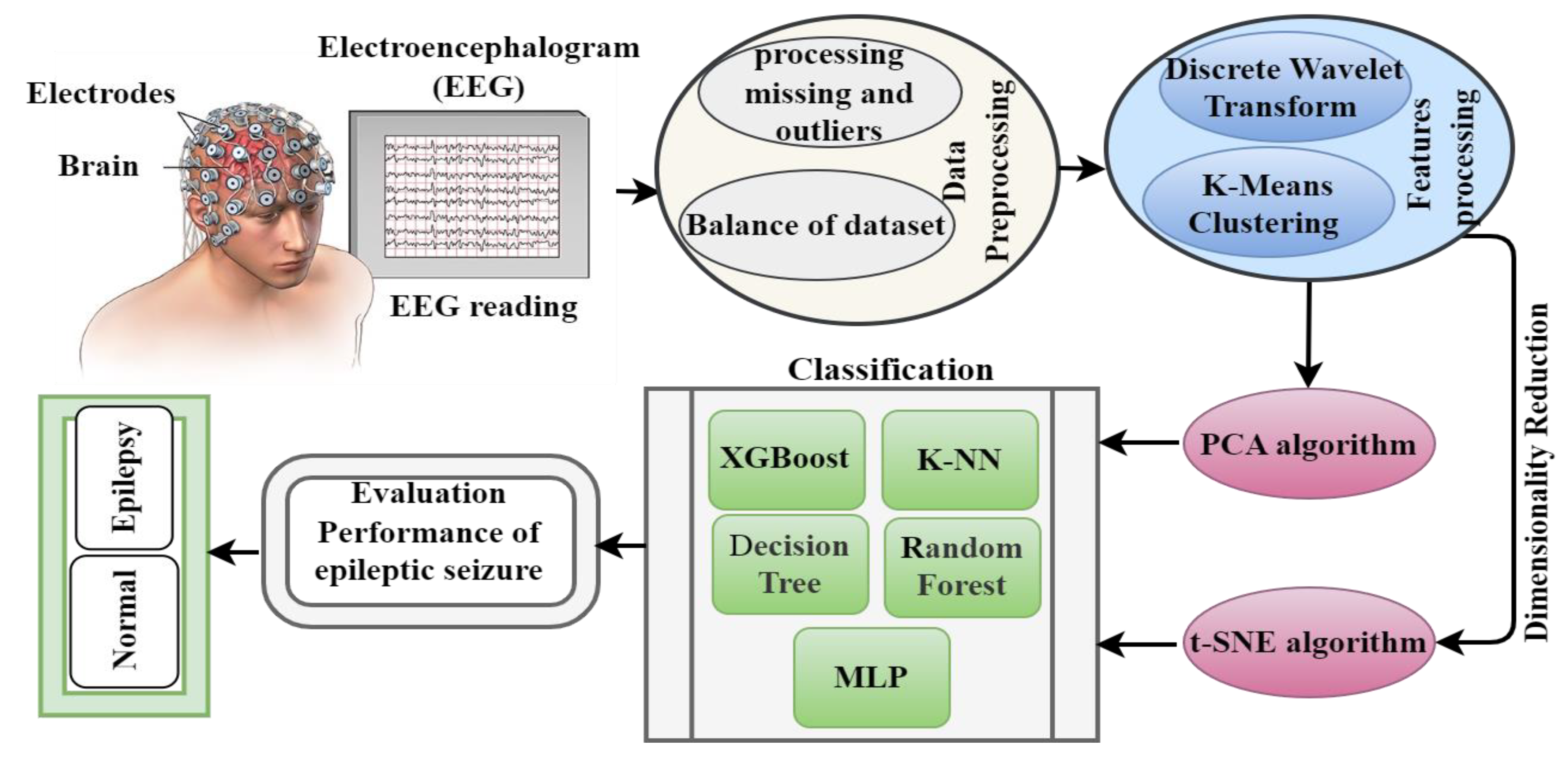

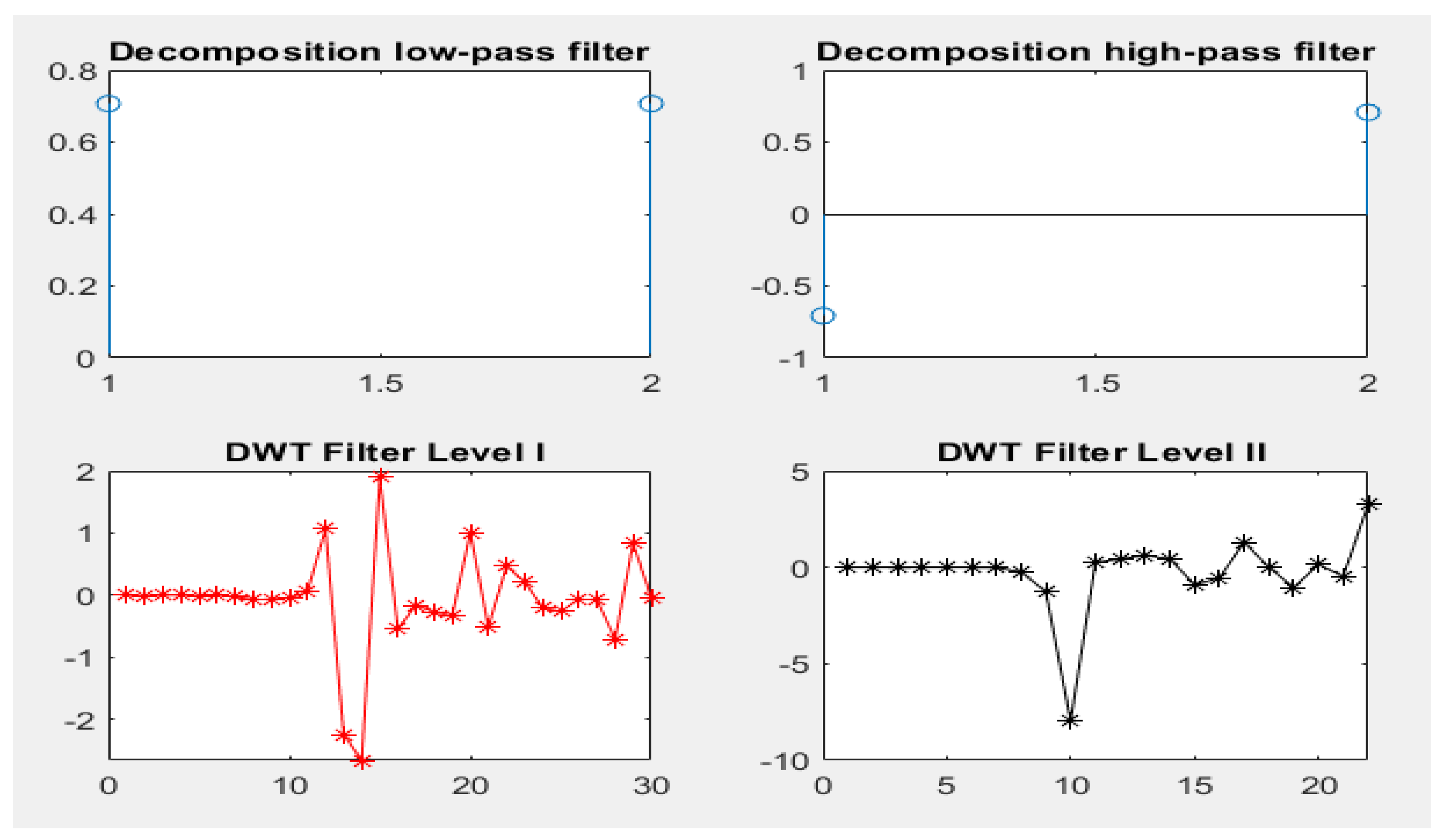

13]. In particular, the DWT algorithm was applied to analyze nonstationary signals, such as EEG, to extract the most effective features. The PCA and t-SNE algorithms were employed to represent high-dimensional data in a low-dimensional spaces. Two models were built by applying the K-means clustering algorithm and PCA (K-means + PCA) and then with t-SNE (K-means + t-SNE). The important features were fed into five types of classification algorithms, which trained the dataset and tested the performance of the classifiers on a part of the dataset.
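The DWT feature extraction step can be sketched as follows. This is a minimal illustration, not the pipeline used in this study: the Haar wavelet, the four decomposition levels, the three statistics computed per sub-band, and the names `haar_wavedec` and `subband_features` are all assumptions made for the example, and the synthetic segment merely stands in for an EEG recording.

```python
import math
import statistics


def haar_dwt_step(signal):
    """One level of the Haar DWT: returns (approximation, detail) coefficients."""
    if len(signal) % 2:
        signal = signal + [signal[-1]]  # pad odd-length input by repeating the last sample
    scale = 1 / math.sqrt(2)
    approx = [(signal[i] + signal[i + 1]) * scale for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) * scale for i in range(0, len(signal), 2)]
    return approx, detail


def haar_wavedec(signal, levels):
    """Multi-level decomposition, returned as [cA_n, cD_n, ..., cD_1]."""
    approx = list(signal)
    details = []
    for _ in range(levels):
        approx, detail = haar_dwt_step(approx)
        details.append(detail)
    return [approx] + details[::-1]


def subband_features(signal, levels=4):
    """Mean absolute value, standard deviation, and energy of every sub-band."""
    features = []
    for band in haar_wavedec(signal, levels):
        features.append(statistics.fmean([abs(c) for c in band]))
        features.append(statistics.pstdev(band))
        features.append(sum(c * c for c in band))
    return features


# Synthetic stand-in for an EEG segment: a slow and a fast sinusoid (hypothetical data).
fs = 128  # assumed sampling rate in Hz
segment = [
    math.sin(2 * math.pi * 3 * t / fs) + 0.5 * math.sin(2 * math.pi * 20 * t / fs)
    for t in range(256)
]
features = subband_features(segment, levels=4)
print(len(features))  # 5 sub-bands x 3 statistics
```

A feature vector of this kind is what a dimensionality reduction method such as PCA or t-SNE would then compress before classification.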

The main contributions of this paper can be summarized as follows:

Features are extracted by decomposing signal components via the DWT algorithm and by applying PCA and the t-SNE algorithm to reduce data dimensions to obtain a feature vector in two ways, namely, DWT + PCA and DWT + t-SNE.

The data set is divided into subgroups by using K-means clustering, with each group containing similar points. PCA and the t-SNE algorithm are then applied to reduce data dimensions to obtain feature vectors in two ways, namely, K-means + PCA and K-means + t-SNE.

The hyperparameters of all classifiers are adjusted to attain the best results in diagnosing epilepsy.

These highly efficient algorithms are generalized to help physicians obtain a highly accurate diagnosis while saving time and effort.
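The grouping step in the second contribution can be illustrated with a small K-means sketch. It is written under stated assumptions: the two-dimensional toy points, the farthest-first initialization, and the function names are hypothetical and chosen only to keep the example deterministic; the study's actual features, number of clusters, and initialization are not specified here. The subsequent PCA or t-SNE step is not shown.

```python
import math


def farthest_first_init(points, k):
    """Deterministic initialization (an assumption): start at the first point,
    then repeatedly add the point farthest from the centroids chosen so far."""
    centroids = [list(points[0])]
    while len(centroids) < k:
        farthest = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(list(farthest))
    return centroids


def kmeans(points, k, max_iterations=100):
    """Lloyd's algorithm: alternate assignment and centroid update until stable."""
    centroids = farthest_first_init(points, k)
    labels = None
    for _ in range(max_iterations):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        if new_labels == labels:
            break  # assignments no longer change
        labels = new_labels
        # Update step: each centroid moves to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids


# Hypothetical toy feature vectors forming two well-separated groups.
points = [
    (0.0, 0.1), (0.2, -0.1), (-0.1, 0.0), (0.1, 0.2),
    (5.0, 5.1), (5.2, 4.9), (4.9, 5.0), (5.1, 5.2),
]
labels, centroids = kmeans(points, k=2)
print(labels)
```

Each resulting group contains points that are close to one another in feature space, which is the property the contribution relies on.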

The rest of the paper is ordered as follows:

Section 2 discusses techniques used in the relevant literature.

Section 3 describes the materials and methods with subsections for pre-processing data, feature extraction, and methods for reducing data dimensions.

Section 4 provides and discusses the experimental results. Finally, the conclusions are provided in Section 5.

4. Discussion and Comparison of Systems Performance

In this section, the techniques and tools applied to the diagnosis of epileptic seizures in relevant studies are discussed, and their results are compared with those of the proposed systems.

The studies reviewed here use different methods for analyzing EEG signals and diagnosing epilepsy. Tzallas et al. [36] used a Fourier transform algorithm to analyze EEG signals and extracted important features associated with fractional energy. An artificial neural network (ANN) was then applied for classification, and the authors reported that their method was effective at classifying epilepsy. Peker et al. [37] employed a dual-tree complex wavelet transform to analyze EEG signals and derived five statistical features to distinguish epileptic patients. Classification using complex-valued neural networks showed that their wavelet transformation was effective at classifying epilepsy. Alcin et al. [38] combined the GLCM texture descriptor algorithm with Fisher vector (FV) encoding to extract representative features from time–frequency (TF) images. Their method achieved superior results in diagnosing epilepsy. Islamet et al. [39] developed a stationary wavelet transform algorithm for analyzing EEG signals and detecting seizures. Their algorithm achieved good results in diagnosing epilepsy. Sharmila [40] presented a framework based on the analysis of EEG signals using the DWT method with linear and nonlinear classifiers and reported successful detection of seizures in EEG recordings from normal subjects and epilepsy patients. Wang et al. [41] conducted coherence analysis to extract features and determine the trend and density of information flow from EEG signals. The information flow was used as input to a classifier for seizure detection. Hassan et al. [42] presented a system for diagnosing epilepsy that applies the tunable wavelet transform and bagging to EEG signals. Their system showed promising results in epilepsy diagnosis.

Yuan et al. [43] proposed a weighted extreme learning machine (ELM) method for detecting seizures using weighted EEG signals. They applied wavelet packet analysis and determined the time series complexity of EEG signals. Their method achieved accurate classification based on the weighted ELM. Jaiswal et al. [44] proposed two methods for extracting features from EEG signals, namely, sub-pattern of PCA and sub-pattern correlation of PCA. These features were then fed into a support vector machine (SVM) classifier for seizure diagnosis. Li et al. [45] established a multiscale radial basis function method for obtaining high-resolution TF images from EEG signals. Features were extracted using the GLCM algorithm with FV encoding based on the frequency sub-bands of the TF images. Subasi et al. [46] developed a hybrid method using a genetic algorithm and particle swarm optimization to determine the best parameters for an SVM classifier. Their system achieved promising accuracy in diagnosing epilepsy. Raghu et al. [47] applied the DWT method and extracted features from the wavelet coefficients of EEG segments. These features were then used with a random forest classifier for epileptic classification. Chen et al. [48] used the autoregressive average method to describe the dynamic behavior of EEG signals. Their approach focused on the time series characteristics of the EEG data. Yavuz et al. [49] calculated mel frequency cepstral coefficients (MFCCs) by analyzing frequency according to frequency bandwidth. The MFCCs were used as features for epilepsy diagnosis. Mursalin et al. [50] applied improved correlation feature selection to extract crucial features from the time, frequency, and entropy domains of EEG signals. These features were then classified using a random forest classifier for diagnosing epilepsy. Each study thus employed different techniques for analyzing EEG signals and diagnosing epilepsy, with the analysis methods ranging from the Fourier transform to several wavelet transforms.

The literature reveals deficiencies in the techniques and methodologies applied to diagnose epileptic seizures. Hence, this study focused on extracting features, refining and improving them, selecting the most representative features that distinguish epileptic seizures, and classifying them using several algorithms to achieve better performance.

Patient-independent seizure detection refers to the ability to detect seizures in individuals without the need for prior knowledge or training specific to that individual. While there have been significant advancements in seizure detection technologies, patient-independent seizure detection remains a challenging problem. Here are some key concerns associated with this setting:

Heterogeneity of seizure patterns: Seizure activity can vary significantly between individuals, making it difficult to develop a universal seizure detection algorithm that works for everyone. Seizure types, duration, and associated physiological changes can differ, making it challenging to establish a single approach that accurately detects seizures across diverse populations.

Lack of personalized data: Patient-independent seizure detection implies the absence of any specific data from the individual being monitored. This lack of personalization makes it harder to tailor detection algorithms to the unique characteristics of an individual’s seizures. Without personalized data, it is challenging to account for individual variations, including pre-seizure patterns, which could potentially improve detection accuracy.

Limited generalizability: Seizure detection models often require training data from individual patients to capture their specific seizure patterns, which limits their generalizability to new patients. Patient-independent seizure detection aims to overcome this limitation but faces the challenge of developing models that can accurately detect seizures in diverse populations without individual-specific training.

Interpatient variability: The electrical activity of the brain during seizures can differ significantly between patients. This interpatient variability in seizure manifestation poses a challenge when developing patient-independent detection methods. Algorithms trained on one population may not perform as effectively on another, leading to reduced detection accuracy and reliability.

Real-world complexity: Seizure detection in real-world scenarios involves dealing with various factors such as artifacts, noise, and non-seizure events that can interfere with accurate detection. Without personalization or patient-specific training, patient-independent seizure detection algorithms must be robust enough to handle these challenges across a wide range of scenarios.

Addressing the often-neglected problem of patient-independent seizure detection requires extensive research and innovation in machine learning, data analysis, and signal processing. Advanced techniques such as transfer learning, ensemble models, and feature engineering may play a crucial role in improving the performance of patient-independent seizure detection algorithms. Additionally, collecting large-scale, diverse datasets that encompass a wide range of seizure types and characteristics can help in developing more robust and generalized models for patient-independent seizure detection.
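One common way to measure patient-independent performance is to hold out all recordings of one patient at a time, so that no data from the test patient are seen during training. The sketch below illustrates such a leave-one-patient-out split; it is not described as the evaluation protocol of this study, and the patient identifiers and the function name `leave_one_patient_out` are hypothetical.

```python
from collections import defaultdict


def leave_one_patient_out(patient_ids):
    """Yield (held_out_patient, train_indices, test_indices) for every patient.

    patient_ids[i] is the patient that sample i was recorded from, so each
    fold tests on one patient and trains on all the others.
    """
    by_patient = defaultdict(list)
    for index, patient in enumerate(patient_ids):
        by_patient[patient].append(index)
    for held_out in sorted(by_patient):
        test_indices = by_patient[held_out]
        train_indices = sorted(
            index
            for patient, indices in by_patient.items()
            if patient != held_out
            for index in indices
        )
        yield held_out, train_indices, test_indices


# Hypothetical example: six EEG segments recorded from three patients.
patient_ids = ["p1", "p1", "p2", "p3", "p3", "p2"]
folds = list(leave_one_patient_out(patient_ids))
for held_out, train_indices, test_indices in folds:
    print(held_out, train_indices, test_indices)
```

A random split of segments, by contrast, can place segments from the same patient in both the training and test sets, which tends to overstate how well a model generalizes to new patients.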

These challenges are reflected in the cited studies, which report that seizure activity and the electrical activity of the brain during seizures vary significantly across individuals, that the absence of data from the monitored individual makes it harder to tailor detection algorithms to that individual’s seizures, that models trained on patient-specific data generalize poorly to new patients, and that real-world recordings contain artifacts, noise, and non-seizure events that interfere with accurate detection. The studies suggest that much work remains to be conducted in the area of patient-independent seizure detection. However, the techniques developed so far offer promise for improving the accuracy and reliability of seizure detection in real-world scenarios.

The performances of the proposed systems were evaluated and compared with those in relevant studies.

Table 7 compares the accuracy and sensitivity of the proposed systems with those reported in previous studies. The proposed systems outperformed the previous ones. In terms of accuracy, the system using DWT and PCA with the random forest (RF) classifier achieved 97.96%, the system using DWT and t-SNE with RF attained 98.09%, and the system using K-means and PCA with MLP obtained 98.98%, whereas the accuracy of previous systems was between 82% and 97.17%. In terms of sensitivity (recall), the system using DWT and PCA with RF reached 94.41%, the system using DWT and t-SNE with RF achieved 93.9%, and the system using K-means and PCA with MLP attained 95.69%, whereas the sensitivity of previous systems ranged from 68% to 93.11%. Thus, the best proposed system exceeds the highest accuracy and sensitivity reported in the literature.
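For reference, accuracy and sensitivity are computed from the confusion counts of a binary classifier as accuracy = (TP + TN) / (TP + TN + FP + FN) and sensitivity = TP / (TP + FN). The short sketch below applies these standard definitions to a made-up set of labels; the labels and function names are hypothetical and unrelated to the results in Table 7.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, fp, tn, fn) for a binary problem with the given positive label."""
    tp = fp = tn = fn = 0
    for truth, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if truth == positive:
                tp += 1
            else:
                fp += 1
        else:
            if truth == positive:
                fn += 1
            else:
                tn += 1
    return tp, fp, tn, fn


def accuracy(tp, fp, tn, fn):
    """Fraction of all samples that were classified correctly."""
    return (tp + tn) / (tp + fp + tn + fn)


def sensitivity(tp, fn):
    """Fraction of true seizure samples that were detected (recall)."""
    return tp / (tp + fn)


# Hypothetical labels: 1 = seizure, 0 = non-seizure.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 0, 1]
tp, fp, tn, fn = confusion_counts(y_true, y_pred)
print(accuracy(tp, fp, tn, fn))  # 0.8
print(sensitivity(tp, fn))       # 0.75
```

Sensitivity is reported alongside accuracy because a missed seizure (a false negative) lowers sensitivity directly, whereas accuracy can remain high when non-seizure samples dominate the data.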

This paper adds value to the reader by presenting a study focused on the early diagnosis of epileptic seizures using artificial intelligence systems and classification algorithms. The following are some key points that highlight the value of the paper.

Importance of early diagnosis: The paper emphasizes the significance of early diagnosis for epileptic seizures, considering the high prevalence of this health problem worldwide. Early diagnosis allows for the timely intervention and management of seizures, leading to improved patient outcomes.

Integration of artificial intelligence: The paper highlights the role of artificial intelligence systems in assisting doctors with accurate diagnosis. By leveraging machine learning algorithms, the study aims to provide reliable and automated methods for seizure detection and classification.

Feature extraction and dimensionality reduction: The paper proposes the use of the DWT as a feature extraction algorithm. Additionally, dimensionality reduction techniques such as principal component analysis (PCA) and t-SNE are applied to reduce the complexity of the feature space and improve classification accuracy.

Evaluation of multiple classification algorithms: The study evaluates five classification algorithms, namely XGBoost, K-nearest neighbors (KNN), decision tree (DT), random forest (RF), and multilayer perceptron (MLP). This provides a comprehensive analysis of the performance of different algorithms in diagnosing epilepsy.

Comparative analysis of feature extraction methods: The paper compares the performance of different feature extraction methods, including DWT + PCA, DWT + t-SNE, K-means + PCA, and K-means + t-SNE. This analysis provides insights into the effectiveness of various feature extraction techniques in seizure diagnosis.

Promising results: The study reports high accuracy rates achieved by the proposed algorithms. During the testing phase, the random forest classifier achieved an accuracy of 97.96% with DWT + PCA and 98.09% with DWT + t-SNE. The MLP classifier with K-means + PCA achieved an accuracy of 98.98%. These results indicate the potential of the proposed methods for accurate and reliable diagnosis of epileptic seizures.

By presenting these findings, the paper contributes to the field of seizure detection and diagnosis, offering insights into the effectiveness of specific algorithms and techniques. The results and methodology described in the paper can guide further research and development of AI-based systems for early detection of epileptic seizures.